Abstract

In this paper, we study the algebraic, rational and formal Puiseux series solutions of a certain type of systems of autonomous ordinary differential equations. More precisely, we deal with systems whose associated algebraic set is of dimension one. We establish a relationship between the solutions of the system and the solutions of an associated first order autonomous ordinary differential equation, which we call the reduced differential equation. Using results on such equations, we prove the convergence of the formal Puiseux series solutions of the system, expanded around a finite point or at infinity, and we present an algorithm to describe them. In addition, we bound the degree of the possible algebraic and rational solutions, and we provide an algorithm to decide their existence and to compute such solutions if they exist. Moreover, if the reduced differential equation is nontrivial, for every given point \((x_0,y_0) \in \mathbb {C}^2\), we prove the existence of a convergent Puiseux series solution y(x) of the original system such that \(y(x_0)=y_0\).


1 Introduction

In [2], we studied local solutions of first order autonomous algebraic ordinary differential equations. In this paper, we generalize the results obtained there to systems of higher order autonomous ordinary differential equations in one unknown function whose associated algebraic set is of dimension one, i.e. the algebraic set is a finite union of curves and, possibly, points. In particular, we prove that every fractional power series solution of such systems is convergent, and we provide an algorithm for computing these solutions. Note that in [4] it is shown that, for general systems of algebraic ordinary differential equations, the existence of non-constant formal power series solutions cannot be decided algorithmically. Nevertheless, for systems as above, the problem is decidable.

Finding rational general solutions of such systems has been studied in [8]. There, a necessary condition on the degree of the associated algebraic curve is provided. If the condition is fulfilled, the solutions are constructed from a rational parametrization of a birational planar projection of the associated space curve. Here, we provide an algorithm which decides the existence of not only rational but also algebraic solutions of such systems. Unlike the method described in [8], the current approach does not require a rational parametrization of the associated curve. Instead, we triangularize the given system and derive from it a single autonomous ordinary differential equation of first order with the same non-constant formal Puiseux series solutions. We call it the reduced differential equation of the system. Since rational and algebraic functions are determined by their Puiseux series expansions, the reduced differential equation also has the same algebraic solutions as the original system. Furthermore, since the reduced equation is autonomous and of order one, we use the results from [1] to bound the degree of its possible algebraic solutions, and hence of those of the original system. Once the degree of the solutions is bounded, one may use the algorithm from Section 4.3 of [1] to decide their existence and to compute such solutions.

We derive the existence and convergence of formal Puiseux series solutions of such systems (see Theorems 5 and 4) from the corresponding results (see [2]) applied to the obtained reduced differential equation. With respect to the convergence of formal solutions, a related result is given by Gerasimova and Razmyslov in [6]. They show the convergence of formal power series solutions of a system of ordinary differential equations under some additional conditions, such as the absence of zero-divisors in the differential algebra induced by the system and the system having transcendence degree one. Their method is based on the fact that the induced differential algebra is finitely generated as an algebra over its base field, which allows them to reduce the problem to the Cauchy-Kowalevski theorem. Their method does not deal with fractional power series solutions.

In the literature there are several methods to triangularize differential systems and to obtain resolvent representations of them; see for instance [3, 9] and the references therein. The description of these methods is quite involved because they apply to general differential systems. This paper addresses only ordinary differential systems whose associated algebraic set has dimension one, so we can split the process into an algebraic triangularization part followed by a straightforward differential elimination process. For the algebraic part we use regular chains as described in [7, 11]. This simple description of the process allows us to establish a precise relation between the formal Puiseux series solutions of the original system and those of the reduced differential equation.

The structure of the paper is as follows. In Sect. 2 we recall some necessary concepts such as regular chains and regular zeros. In Sect. 3, starting from a system of autonomous ordinary differential equations of dimension one in one unknown function, we derive a finite union of such regular chains. From them we derive a single autonomous ordinary differential equation of order one with the same non-constant formal Puiseux series solutions as the original system. Using this reduction, the main results in [2] can be generalized to these particular systems (see Theorems 4 and 5). In Sect. 4 we present an algorithm for this reduction and, using the algorithms in [1, 2], we illustrate by examples how all formal Puiseux series and algebraic solutions of the original system can be found.

2 Preliminaries

We recall the notion of regular chains and regular zeros; for further details we refer to [7, 11]. For \(f,g \in \mathbb {C}[y_0,\ldots ,y_m]\), let us denote by \({{\,\mathrm{lv}\,}}(f)\) the leading variable, by \({{\,\mathrm{lc}\,}}(f)\) the leading coefficient and by \({{\,\mathrm{init}\,}}(f)\) the initial of f with respect to the ordering \(y_0<\cdots <y_m\). In addition, we denote by \(\mathrm {Res}_{y_i}(f,g)\) the resultant of f and g with respect to \(y_i\). Let \(\mathcal {S}=\{F_1,\ldots ,F_M\} \subset \mathbb {C}[y_0,\ldots ,y_m]\) be a finite system of polynomials in triangular form, i.e. \({{\,\mathrm{lv}\,}}(F_i)<{{\,\mathrm{lv}\,}}(F_j)\) for any \(1 \le i<j \le M\). Then we define \(\mathrm {Res}(f,\mathcal {S})\) as the resultant of f and consecutively \(F_M,\ldots ,F_1\) with respect to their leading variables, i.e.

\[\mathrm {Res}(f,\mathcal {S}) = \mathrm {Res}_{{{\,\mathrm{lv}\,}}(F_1)}\Bigl(\cdots \mathrm {Res}_{{{\,\mathrm{lv}\,}}(F_{M-1})}\bigl(\mathrm {Res}_{{{\,\mathrm{lv}\,}}(F_M)}(f,F_M),F_{M-1}\bigr)\cdots ,F_1\Bigr).\]

Moreover, we define \({{\,\mathrm{init}\,}}(\mathcal {S})=\{{{\,\mathrm{init}\,}}(F_j)~|~1 \le j \le M\}\) and \({{\,\mathrm{pinit}\,}}(\mathcal {S})=\prod _{j=1}^M {{\,\mathrm{init}\,}}(F_j)\). A regular chain is a system of algebraic equations \(\mathcal {S}\) in triangular form with the additional property that \(\mathrm {Res}(f,\mathcal {S}) \ne 0\) for any \(f \in {{\,\mathrm{init}\,}}(\mathcal {S})\).
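The definition of \(\mathrm {Res}(f,\mathcal {S})\) and the resulting regular chain test can be made concrete with a few lines of code. The following Python sketch uses only the standard library and is our own illustration, not the implementation of [7, 11] or of any computer algebra system: polynomials are stored as dictionaries from exponent tuples to rational coefficients, resultants are computed as Sylvester determinants by cofactor expansion (adequate only for very small inputs), the input is assumed to be in triangular form already, and all helper names (`chain_res`, `is_regular_chain`, ...) are hypothetical.

```python
from fractions import Fraction

# Polynomials in y_0, ..., y_m: dict {exponent tuple: Fraction}; {} is the zero polynomial.

def add(p, q):
    r = dict(p)
    for e, c in q.items():
        r[e] = r.get(e, 0) + c
        if r[e] == 0:
            del r[e]
    return r

def mul(p, q):
    r = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            r[e] = r.get(e, 0) + c1 * c2
    return {e: c for e, c in r.items() if c != 0}

def coeffs(p, v):
    """Coefficients of p as a polynomial in y_v, lowest degree first."""
    d = max((e[v] for e in p), default=0)
    out = [{} for _ in range(d + 1)]
    for e, c in p.items():
        out[e[v]][e[:v] + (0,) + e[v + 1:]] = c
    return out

def lv(p):
    """Index of the leading (largest) variable occurring in p."""
    return max(i for e in p for i, k in enumerate(e) if k > 0)

def init(p):
    """Initial of p: its leading coefficient with respect to lv(p)."""
    return coeffs(p, lv(p))[-1]

def det(mat, one):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if not mat:
        return one
    total = {}
    for col, entry in enumerate(mat[0]):
        if not entry:
            continue
        minor = [row[:col] + row[col + 1:] for row in mat[1:]]
        term = mul(entry, det(minor, one))
        if col % 2:
            term = {e: -c for e, c in term.items()}
        total = add(total, term)
    return total

def res(f, g, v, one):
    """Res_{y_v}(f, g) as the determinant of the Sylvester matrix."""
    a, b = coeffs(f, v)[::-1], coeffs(g, v)[::-1]  # highest degree first
    m, n = len(a) - 1, len(b) - 1
    size = m + n
    rows = [[{}] * r + a + [{}] * (size - r - m - 1) for r in range(n)]
    rows += [[{}] * r + b + [{}] * (size - r - n - 1) for r in range(m)]
    return det(rows, one)

def chain_res(f, chain, one):
    """Res(f, S): eliminate lv(F_M), ..., lv(F_1) in this order."""
    r = f
    for F in reversed(chain):
        r = res(r, F, lv(F), one)
    return r

def is_regular_chain(chain, one):
    """Triangular input assumed; checks Res(init(F), S) != 0 for every F in S."""
    return all(chain_res(init(F), chain, one) for F in chain)

def mono(c, *exps):
    return {tuple(exps): Fraction(c)}

ONE = mono(1, 0, 0, 0)                                      # variables y0 < y1 < y2
F1 = add(mono(1, 0, 2, 0), mono(-1, 1, 0, 0))               # y1^2 - y0
F2 = add(mono(1, 0, 1, 1), mono(-1, 0, 0, 0))               # y1*y2 - 1
F2_bad = add(mul(F1, mono(1, 0, 0, 1)), mono(-1, 0, 0, 0))  # (y1^2 - y0)*y2 - 1

print(is_regular_chain([F1, F2], ONE))      # True
print(is_regular_chain([F1, F2_bad], ONE))  # False
```

In the first chain the initial \(y_1\) of \(F_2\) gives \(\mathrm {Res}(y_1,\mathcal {S})=-y_0\ne 0\); in the second, the initial of the last polynomial is \(F_1\) itself, so the iterated resultant vanishes and the system is not a regular chain.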

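Let \(K \supseteq \mathbb {C}\) be a field and \(\mathcal {S}\subset \mathbb {C}[y_0,\ldots ,y_m]\). Then let us denote

\[\mathbb {V}_{K}(\mathcal {S}) = \{a \in K^{m+1} \mid f(a)=0 \text{ for all } f \in \mathcal {S}\}.\]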

For a regular chain \(\mathcal {S}\), we define a regular zero of \(\mathcal {S}\) as an element \(a=(a_0,\ldots ,a_m) \in \mathbb {V}_{K}(\mathcal {S})\) such that the component \(a_0\) is transcendental over \(\mathbb {C}\) whenever \((\mathcal {S}\cap \mathbb {C}[y_0]) \backslash \mathbb {C}= \emptyset \), and, for every \(1 \le k \le m\) with \((\mathcal {S}\cap \mathbb {C}[y_0,\ldots ,y_k]) \backslash \mathbb {C}[y_0,\ldots ,y_{k-1}] = \emptyset \), the component \(a_k\) is transcendental over \(\mathbb {C}(a_0,\ldots ,a_{k-1})\).

We recall a well-known theorem for the relation between regular chains and regular zeros, see [11, Proposition 5.1.5, Corollary 5.1.6].

Theorem 1

Let \(\mathcal {S}=\{F_1,\ldots ,F_M\} \subset \mathbb {C}[y_0,\ldots ,y_m]\) be a finite system of polynomials in triangular form and denote by \(\mathcal {S}_k\) the first k polynomials of \(\mathcal {S}\). Then the following are equivalent:

1. \(\mathcal {S}\) is a regular chain.
2. \(|\mathcal {S}|=1\) or for any \(k=2,\ldots ,M\) the subsystem \(\mathcal {S}_{k-1}\) is a regular chain and for any regular zero a of \(\mathcal {S}_{k-1}\) and \(f \in \mathcal {S}_{k}\) it holds that \({{\,\mathrm{init}\,}}(f)(a) \ne 0.\)

In fact, statement (2) above is used in [7] as the definition of regular chains. Note that in [12] regular chains are called “proper ascending chains”, but they are defined exactly as in this paper.

Regular chains are useful for representing algebraic sets, as Theorem 5.2.2 in [11] shows.

Theorem 2

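Let \(\mathcal {S}\subseteq \mathbb {C}[y_0,\ldots ,y_m]\) be a polynomial system. Then there exists a finite set of regular chains \(\mathcal {S}_1,\ldots ,\mathcal {S}_N \subseteq \mathbb {C}[y_0,\ldots ,y_m]\) such that

\[\mathbb {V}_{K}(\mathcal {S}) = \bigcup _{j=1}^{N} \bigl(\mathbb {V}_{K}(\mathcal {S}_j) \backslash \mathbb {V}_{K}({{\,\mathrm{pinit}\,}}(\mathcal {S}_j))\bigr). \qquad (1)\]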

We note that, in the notation of [11], \(\mathbb {V}_{K}(\mathcal {S}_j / {{\,\mathrm{init}\,}}(\mathcal {S}_j))=\mathbb {V}_{K}(\mathcal {S}_j) \backslash \mathbb {V}_{K}({{\,\mathrm{pinit}\,}}(\mathcal {S}_j))\), as is also mentioned in Chapter 1.5 therein. Let us recall that \(\mathbb {V}_{\mathbb {C}}(\mathcal {S}_j) \backslash \mathbb {V}_{\mathbb {C}}({{\,\mathrm{pinit}\,}}(\mathcal {S}_j))\) is of dimension \(m+1-|\mathcal {S}_j|\), since the ambient space has the \(m+1\) coordinates \(y_0,\ldots ,y_m\).

Several implementations are available for computing with regular chains, and in particular for computing regular chain decompositions as in (1); one example is the Maple package RegularChains.

Let \(\mathcal {K}\) be the field of formal Puiseux series expanded around any \(x_0 \in \mathbb {C}_{\infty }\), where \(\mathbb {C}_{\infty }=\mathbb {C}\cup \{\infty \}\). We are interested in non-constant formal Puiseux series solutions \(y(x) \in \mathcal {K}\) such that \(y(x_0)=y_0 \in \mathbb {C}_{\infty }\). Since the systems we are dealing with, see (2) below, are invariant under translations of the independent variable, we can assume without loss of generality, as in [2], that the formal Puiseux series are expanded around zero or at infinity. For any subset \(\widetilde{\mathcal {S}} \subseteq \mathbb {C}[y,y',\ldots ,y^{(m)}]\) let us denote

3 Systems of Algebro-Geometric Dimension One

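Let us consider systems of differential equations of the form

\[\widetilde{\mathcal {S}} = \bigl\{F_1(y,y',\ldots ,y^{(m)})=0,\ \ldots ,\ F_M(y,y',\ldots ,y^{(m)})=0\bigr\}, \qquad (2)\]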

where \(F_1, \ldots , F_M \in \mathbb {C}[y,y',\ldots ,y^{(m)}]\) with \(m>0\). For a field \(K \supseteq \mathbb {C}\), by considering y and its derivatives as independent variables, we write \(\mathbb {V}_{K}(\widetilde{\mathcal {S}})\) for the algebraic set defined by \(\widetilde{\mathcal {S}}\). We assume the dimension of \(\mathbb {V}_{\mathbb {C}}(\widetilde{\mathcal {S}})\) to be one, i.e. \(\mathbb {V}_{\mathbb {C}}(\widetilde{\mathcal {S}})\) is a finite union of curves and, possibly, finitely many points. Note that a single autonomous algebraic ordinary differential equation (AODE) of order one can be seen as a system of the type (2) with \(M=m=1\), and it is of dimension one.

Lemma 1

For every \(\widetilde{\mathcal {S}}\) as in (2) we can compute a finite union of regular chains \(\mathcal {S}\) as in (3) with the same non-constant formal Puiseux series solutions.

Proof

Let us choose the ordering \(y<y'<\cdots <y^{(m)}\). By Theorem 2 there is a regular chain decomposition \(\mathcal {S}_1,\ldots ,\mathcal {S}_N\) such that every regular chain has a zero set of dimension zero or one. We omit the regular chains containing an algebraic equation in y alone, since they only lead to constant solutions. Thus, the remaining systems are of dimension one and of the form

\[\mathcal {S} = \bigl\{G_1(y,y')=0,\ G_2(y,y',y'')=0,\ \ldots ,\ G_m(y,y',\ldots ,y^{(m)})=0\bigr\}, \qquad G_j = \sum _{k=0}^{r_j} G_{j,k}(y,\ldots ,y^{(j-1)})\,\bigl(y^{(j)}\bigr)^k, \qquad (3)\]

with \(r_j \ge 1\) and \({{\,\mathrm{init}\,}}(G_j)=G_{j,r_j} \ne 0\) for every \(1 \le j \le m\).

Let us now study which solutions of a regular chain in (1) may fail to be solutions of the original system, i.e. the common solutions of \(\mathcal {S}\) and \({{\,\mathrm{pinit}\,}}(\mathcal {S})={{\,\mathrm{init}\,}}(G_1) \cdots {{\,\mathrm{init}\,}}(G_m)=0\). If y(x) is a non-constant formal Puiseux series solution of some \(\mathcal {S}_j\), then \((y(x),y'(x),\ldots ,y^{(m)}(x))\) is a regular zero of \(\mathcal {S}_j\), because y(x) is transcendental over \(\mathbb {C}\) and for every \(1 \le k \le m\) we have

\[(\mathcal {S}_j \cap \mathbb {C}[y,\ldots ,y^{(k)}]) \backslash \mathbb {C}[y,\ldots ,y^{(k-1)}] = \{G_k\} \ne \emptyset .\]

Then, by Theorem 1,

\[{{\,\mathrm{init}\,}}(G_k)\bigl(y(x),\ldots ,y^{(k-1)}(x)\bigr) \ne 0, \qquad 2 \le k \le m.\]

Since \({{\,\mathrm{init}\,}}(G_1)(y)=0\) is an algebraic equation in y, there can only be constant common zeros of \(\mathcal {S}_j\) and \({{\,\mathrm{pinit}\,}}(\mathcal {S}_j)\). \(\square \)

System (3) could be further decomposed into systems with the factors of \(G_1\) as initial equations. However, for our purposes it suffices that \(G_1\) and its separant \(\frac{\partial \, G_1}{\partial u_1}\) have no common non-constant solutions, i.e. if \(G_1(y(x),y'(x))=0\) for a non-constant \(y(x) \in \mathcal {K}\), then \(\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x)) \ne 0\); here and in the following we regard \(G_j\) as a polynomial in \(\mathbb {C}[u_0,\ldots ,u_j]\), where \(u_i\) stands for \(y^{(i)}\). To ensure this, we assume \(G_1 \in \mathbb {C}[u_0,u_1]\) to be square-free and without factors in \(\mathbb {C}[u_0]\) or \(\mathbb {C}[u_1]\); compare with the hypotheses in [2].

Moreover, we can assume without loss of generality, for every solution \(y(x) \in \mathcal {K}\) of a system \(\mathcal {S}\) as in (3), that \(y(0)=y_0\in \mathbb {C}\). Otherwise, if \(y_0=\infty \), consider the change of variable \(y=1/\tilde{y}\). Let \(G_j^*(\tilde{y},\tilde{y}',\ldots ,\tilde{y}^{(j)})\) be the numerator of \(G_j(1/\tilde{y},(1/\tilde{y})',\ldots ,(1/\tilde{y})^{(j)})\), and let \(\mathcal {S}^*\) be the system \(\{ G_j^*=0\}_{1\le j \le m}\). In this situation, if \(y(x) \in \mathcal {K}\) is a solution of \(\mathcal {S}\) such that \(y(x_0)=\infty \), then \(\tilde{y}(x)=1/y(x)\) is a formal Puiseux series solution of \(\mathcal {S}^*\) with \(\tilde{y}(x_0)\in \mathbb {C}\). Moreover, for \(j>0\), the jth derivative of \(\tilde{y}\) can be written as

\[\tilde{y}^{(j)} = \frac{P_j(y,y',\ldots ,y^{(j-1)}) - y^{j-1}\,y^{(j)}}{y^{j+1}},\]

where \(P_j\in \mathbb {C}[u_0,\ldots ,u_{j-1}]\). In this situation, we consider the rational map

\[\Phi :\ \mathbb {C}^{m+1} \to \mathbb {C}^{m+1}, \qquad (u_0,\ldots ,u_m) \longmapsto (w_0,\ldots ,w_m),\]

where \(w_0=1/u_0\) and

\[w_j = \frac{P_j(u_0,\ldots ,u_{j-1}) - u_0^{j-1}\,u_j}{u_0^{j+1}}, \qquad 1 \le j \le m.\]

Since the equality above is linear in \(u_j\), \(\Phi \) is birational. In addition, taking into account that \(u_0\) is not a factor of \(G_1(u_0,u_1)\), one has that the Zariski closure of \(\Phi (\mathbb {V}_{\mathbb {C}}(\mathcal {S}))\) is \(\mathbb {V}_{\mathbb {C}}(\mathcal {S}^*)\). Since \(\dim (\mathbb {V}_{\mathbb {C}}(\mathcal {S}))=1\), also \(\dim (\mathbb {V}_{\mathbb {C}}(\mathcal {S}^*))=1\) and one may proceed with \(\mathcal {S}^*\) instead of \(\mathcal {S}\).
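The structure of the derivatives of \(\tilde{y}=1/y\) used above, namely that \(\tilde{y}^{(j)}\) has denominator \(y^{j+1}\) and is linear in \(y^{(j)}\), can be checked symbolically. The Python sketch below is our own illustration with hypothetical helper names. It stores polynomials in \(u_0,\ldots ,u_m\) (with \(u_i\) standing for \(y^{(i)}\)) as dictionaries and computes numerators \(N_j\) with \((1/u_0)^{(j)}=N_j/u_0^{j+1}\) through the quotient rule recursion \(N_{j+1}=u_0N_j'-(j+1)u_1N_j\), where \(u_i'=u_{i+1}\).

```python
from fractions import Fraction

# Polynomials in u_0, ..., u_{n-1}: dict {exponent tuple: Fraction}; u_i stands for y^(i).

def add(p, q):
    r = dict(p)
    for e, c in q.items():
        r[e] = r.get(e, 0) + c
        if r[e] == 0:
            del r[e]
    return r

def mul(p, q):
    r = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            r[e] = r.get(e, 0) + c1 * c2
    return {e: c for e, c in r.items() if c != 0}

def scale(p, c):
    return {e: c * k for e, k in p.items() if c * k != 0}

def var(i, n):
    return {tuple(1 if k == i else 0 for k in range(n)): Fraction(1)}

def diff(p, i):
    """Partial derivative with respect to u_i."""
    r = {}
    for e, c in p.items():
        if e[i]:
            r[e[:i] + (e[i] - 1,) + e[i + 1:]] = c * e[i]
    return r

def total_derivative(p, n):
    """d/dx of p using u_i' = u_{i+1}; p must not involve u_{n-1}."""
    assert all(e[n - 1] == 0 for e in p), "not enough variables"
    r = {}
    for i in range(n - 1):
        r = add(r, mul(diff(p, i), var(i + 1, n)))
    return r

def inverse_numerators(m):
    """N_0, ..., N_m with (1/u_0)^(j) = N_j / u_0^(j+1)."""
    n = m + 1
    N = [{(0,) * n: Fraction(1)}]
    for j in range(m):
        # (N_j / u_0^(j+1))' = (u_0 N_j' - (j+1) u_1 N_j) / u_0^(j+2)
        N.append(add(mul(var(0, n), total_derivative(N[j], n)),
                     scale(mul(var(1, n), N[j]), -(j + 1))))
    return N

def linear_part(N, j):
    """Terms of N_j that contain u_j."""
    return {e: c for e, c in N[j].items() if e[j] > 0}

N = inverse_numerators(3)
for j in range(1, 4):
    # the only term containing u_j is -u_0^(j-1) * u_j; the rest is P_j(u_0, ..., u_{j-1})
    expected = {tuple(j - 1 if k == 0 else int(k == j) for k in range(4)): -1}
    assert linear_part(N, j) == expected
```

For \(m=3\) it yields, for instance, \(N_3=-u_0^2u_3+6u_0u_1u_2-6u_1^3\), so the only term involving \(u_3\) is \(-u_0^{2}u_3\) and the remaining part plays the role of \(P_3\).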

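For a given system \(\mathcal {S}\) as in (3) we now associate a finite set of bivariate polynomials \(\mathcal {H}(\mathcal {S})=\{H_1,\ldots ,H_m\} \subset \mathbb {C}[u_0,u_1]\). According to [10, p. 6], for every \(j \ge 2\) there exists a differential polynomial \(R_{j-1}\) of order \(j-1\) such that

\[G_1^{(j-1)} = \frac{\partial \, G_1}{\partial u_1}(u_0,u_1)\, u_j + R_{j-1}(u_0,\ldots ,u_{j-1}), \qquad (4)\]

where \(G_1^{(j-1)}\) denotes the \((j-1)\)th derivative of \(G_1\), computed with the rule \(u_i'=u_{i+1}\).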

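Then, for \(2 \le j \le m\), we introduce the rational functions

\[A_j(u_0,\ldots ,u_{j-1}) = -\frac{R_{j-1}(u_0,\ldots ,u_{j-1})}{\frac{\partial \, G_1}{\partial u_1}(u_0,u_1)}. \qquad (5)\]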

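Now we recursively substitute in (5) the variables \(u_2,\ldots ,u_m\) by \(A_2(u_0,u_1),\ldots ,A_m(u_0,\ldots ,u_{m-1})\) to obtain the new rational functions \(B_2,\ldots ,B_m \in \mathbb {C}(u_0,u_1)\):

\[B_2 = A_2(u_0,u_1), \qquad B_j = A_j\bigl(u_0,u_1,B_2(u_0,u_1),\ldots ,B_{j-1}(u_0,u_1)\bigr), \quad 3 \le j \le m. \qquad (6)\]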

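Observe that the denominator of each \(A_j\) is the separant \(\frac{\partial \, G_1}{\partial u_1}\), so the denominators of the rational functions \(B_j\) are powers of the separant and depend only on \(u_0\) and \(u_1\). Finally, we set

\[H_1 = G_1, \qquad H_j = {{\,\mathrm{num}\,}}\bigl(G_j(u_0,u_1,B_2(u_0,u_1),\ldots ,B_j(u_0,u_1))\bigr), \quad 2 \le j \le m, \qquad (7)\]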

where \({{\,\mathrm{num}\,}}(f)\) denotes the numerator of the rational function f.

In this situation, we introduce a new autonomous first order algebraic differential equation, namely

\[H(y,y') = \gcd (H_1,\ldots ,H_m)(y,y') = 0,\]

and call it the reduced differential equation (of \(\mathcal {S}\)). Note that, by construction, H divides \(G_1\). Moreover, if \(\mathcal {S}\) contains only the single equation \(G_1\), then the reduced differential equation of \(\mathcal {S}\) is equal to \(G_1\).
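Formula (4) and the rational functions \(A_j\) can be obtained mechanically: differentiating \(G_1(y,y')=0\) \(j-1\) times gives an expression that is linear in \(y^{(j)}\) with the separant as coefficient, so \(A_j=-R_{j-1}/\frac{\partial \, G_1}{\partial u_1}\). The following Python sketch is our own illustration, not the authors' implementation. It assumes polynomials stored as dictionaries of exponent tuples, uses hypothetical helper names, and takes the hypothetical starting equation \(G_1=u_0u_1-1\), i.e. \(yy'=1\), which is solved for instance by \(y=\sqrt{2x}\). It checks that the coefficient of \(u_j\) in \(G_1^{(j-1)}\) equals the separant and evaluates \(A_2\) and \(A_3\) at a point of this solution.

```python
from fractions import Fraction

# Polynomials in u_0, ..., u_m (u_i stands for y^(i)): dict {exponent tuple: Fraction}.

def add(p, q):
    r = dict(p)
    for e, c in q.items():
        r[e] = r.get(e, 0) + c
        if r[e] == 0:
            del r[e]
    return r

def mul(p, q):
    r = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            r[e] = r.get(e, 0) + c1 * c2
    return {e: c for e, c in r.items() if c != 0}

def var(i, n):
    return {tuple(1 if k == i else 0 for k in range(n)): Fraction(1)}

def diff(p, i):
    """Partial derivative with respect to u_i."""
    r = {}
    for e, c in p.items():
        if e[i]:
            r[e[:i] + (e[i] - 1,) + e[i + 1:]] = c * e[i]
    return r

def total_derivative(p, n):
    """d/dx of p using u_i' = u_{i+1}; p must not involve u_{n-1}."""
    assert all(e[n - 1] == 0 for e in p), "not enough variables"
    r = {}
    for i in range(n - 1):
        r = add(r, mul(diff(p, i), var(i + 1, n)))
    return r

def split_linear(p, j):
    """Write p = c*u_j + r with c, r free of u_j (p must be linear in u_j)."""
    assert all(e[j] <= 1 for e in p), "not linear in u_j"
    c = {e[:j] + (0,) + e[j + 1:]: k for e, k in p.items() if e[j] == 1}
    r = {e: k for e, k in p.items() if e[j] == 0}
    return c, r

def reduction_data(G1, m):
    """Separant S = dG1/du1 and R_1, ..., R_{m-1} with G1^(j-1) = S*u_j + R_{j-1}."""
    n = m + 1
    S = diff(G1, 1)
    R, Gk = {}, G1
    for j in range(2, m + 1):
        Gk = total_derivative(Gk, n)            # G1^(j-1)
        coef, R[j - 1] = split_linear(Gk, j)
        assert coef == S, "coefficient of u_j should be the separant"
    return S, R

def evaluate(p, point):
    total = Fraction(0)
    for e, c in p.items():
        term = Fraction(c)
        for x, k in zip(point, e):
            term *= Fraction(x) ** k
        total += term
    return total

def A_value(j, S, R, point):
    """A_j = -R_{j-1} / S evaluated at point = (u_0, ..., u_m)."""
    return -evaluate(R[j - 1], point) / evaluate(S, point)

# Hypothetical starting equation G1 = u0*u1 - 1 (i.e. y*y' = 1), with m = 3.
m = 3
G1 = {(1, 1, 0, 0): Fraction(1), (0, 0, 0, 0): Fraction(-1)}
S, R = reduction_data(G1, m)   # S = u0, R_1 = u1^2, R_2 = 3*u1*u2

# (y, y', y'', y''') for y = sqrt(2x) at x = 2; the last entry is not used by A_2, A_3.
point = (2, Fraction(1, 2), Fraction(-1, 8), Fraction(3, 32))
print(A_value(2, S, R, point))  # -1/8
print(A_value(3, S, R, point))  # 3/32
```

The values \(-1/8\) and \(3/32\) agree with \(y''(2)\) and \(y'''(2)\) for \(y=\sqrt{2x}\), in accordance with \(y^{(j)}=A_j(y,\ldots ,y^{(j-1)})\) along solutions.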

Theorem 3

Let \(\mathcal {S}\) be as in (3). Then \(\mathcal {S}\) and its reduced differential equation have the same non-constant formal Puiseux series solutions.

Proof

Let \(G_1\) be the square-free starting equation of \(\mathcal {S}\). First of all, we observe that \(\gcd (G_1,\frac{\partial \, G_1}{\partial u_1})=1\) (see, e.g., the proof of Theorem 4.4 in [5]). Therefore, if \(y(x)\in \mathcal {K}\) is non-constant and \(G_1(y(x),y'(x))=0\), then \(\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\ne 0\).

Let \(y(x)\in \mathcal {K}\) be a non-constant formal Puiseux solution of \(\mathcal {S}\). Then

\(G_1(y(x),y'(x))=0\), and hence \(G_1^{(j-1)}(y(x),\ldots ,y^{(j)}(x))=0\). Applying formula (4) and since \(\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\ne 0\), we get that

and we obtain that

Therefore,

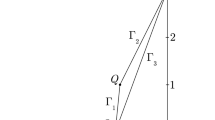

for every \(2 \le j \le m\). By Bézout’s identity, there exists \(Q \in \mathbb {C}[y]\) such that the polynomial \(Q \cdot \gcd (H_1,\ldots ,H_m)\) is an algebraic combination of the \(H_j\). Since the equation \(Q(y)=0\) has only constant solutions, y(x) is a solution of \(H(y,y')=0\).

Conversely, let \(y(x) \in \mathcal {K}\) be a non-constant solution of the reduced differential equation \(H(y,y')=\gcd (H_1,\ldots ,H_m)(y,y')=0\). Then \(G_1(y(x),y'(x))=H_1(y(x),y'(x))=0\) and, as observed above, \(\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\ne 0\). Thus, for every \(1 \le j \le m\), the denominator of \(G_j(u_0,u_1,B_2(u_0,u_1),\ldots ,B_j(u_0,u_1))\) does not vanish at \((y(x),y'(x))\). Since H divides every \(H_j\), taking into account (7) it follows that

\[G_j\bigl(y(x),y'(x),B_2(y(x),y'(x)),\ldots ,B_j(y(x),y'(x))\bigr)=0, \qquad 1 \le j \le m.\]

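Now, let us recursively show that \(B_j(y(x),y'(x))=y^{(j)}(x)\) for \(2 \le j \le m\); together with the previous identity, this proves the theorem. Since \(B_2(y(x),y'(x))=A_2(y(x),y'(x))\), by (5) we have

\[\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\,B_2(y(x),y'(x)) + R_1(y(x),y'(x)) = 0.\]

Then, by (4) and since \(G_1'(y(x),y'(x),y''(x))=0\),

\[\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\,\bigl(B_2(y(x),y'(x)) - y''(x)\bigr) = 0.\]

Using that \(\frac{\partial G_1}{\partial u_1}(y(x),y'(x))\ne 0\), we obtain \(B_2(y(x),y'(x))=y''(x)\). Now, let us assume that \(B_i(y(x),y'(x))=y^{(i)}(x)\) for \(2 \le i \le j\). By (6), \(B_{j+1}(y(x),y'(x))=A_{j+1}(y(x),\ldots ,y^{(j)}(x))\). Then, reasoning as above with \(G_1^{(j)}(y(x),\ldots ,y^{(j+1)}(x))=0\), we obtain

\[\frac{\partial \, G_1}{\partial u_1}(y(x),y'(x))\,\bigl(B_{j+1}(y(x),y'(x)) - y^{(j+1)}(x)\bigr) = 0,\]

and hence \(B_{j+1}(y(x),y'(x))=y^{(j+1)}(x).\) \(\square \)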

Corollary 1

Let \(\mathcal {S}\) be as in (3) and let its reduced differential equation H be a product of factors in \(\mathbb {C}[y]\) and \(\mathbb {C}[y']\). Then \(\mathcal {S}\) has only linear formal Puiseux series solutions, i.e. solutions of the form \(\alpha \,x+\beta \) for some \(\alpha ,\beta \in \mathbb {C}\).

Proof

From the construction of the reduced differential equation and the assumption that \(G_1,\ldots ,G_m \ne 0\), we know that \(H \ne 0\). For every factor of H in \(\mathbb {C}[y]\) there are only constant solutions, and for every factor in \(\mathbb {C}[y']\) there are only linear solutions of \(H(y,y')=0\). The statement then follows from Theorem 3.

Let \(\widetilde{\mathcal {S}}\) be as in (2). Then, by Lemma 1, it can be written as the union of systems \(\mathcal {S}_1,\ldots ,\mathcal {S}_K\) of the form (3). Let \(H_1,\ldots ,H_K\) be the reduced differential equations of these systems \(\mathcal {S}_1,\ldots ,\mathcal {S}_K\). Then, as a consequence of Theorem 3, \(\widetilde{\mathcal {S}}\) and

\[H_1(y,y') \cdots H_K(y,y') = 0 \qquad (9)\]

have the same non-constant formal Puiseux series solutions. Equation (9) is called a reduced differential equation of \(\widetilde{\mathcal {S}}\). Now we are in a position to generalize the theoretical results obtained in [2], in particular the two main theorems in Sect. 3 therein, to systems of dimension one.

Theorem 4

All formal Puiseux series solutions of the system of differential equations (2), expanded around a finite point or at infinity, are convergent.

Proof

By Lemma 1 and Theorem 3, the system (2) and its reduced differential equation (9) have the same non-constant formal Puiseux series solutions. By [2, Theorem 10], all formal Puiseux series solutions of \(H=0\) are convergent. Since constant solutions are convergent as well, the statement follows.

Theorem 5

Let \(\widetilde{\mathcal {S}}\), as in (2), have a non-linear solution. Then for any point \((x_0,y_0) \in \mathbb {C}^2\) there exists an analytic solution y(x) of (2) such that \(y(x_0)=y_0\).

Proof

By Corollary 1, the reduced differential equation of \(\widetilde{\mathcal {S}}\) has at least one irreducible factor depending on y and \(y'\). Then by [2, Theorem 11] the statement follows.

Theorem 6

Let y(x) be a non-constant formal Puiseux series solution of \(\widetilde{\mathcal {S}}\) as in (2) that is algebraic over \(\mathbb {C}(x)\), and let H be the reduced differential equation of \(\widetilde{\mathcal {S}}\). Then the minimal polynomial G(x, Y) of y(x) fulfills the degree bounds

\[\deg _x G \le \deg _{y'}(H), \qquad \deg _Y G \le \deg _{y}(H) + \deg _{y'}(H). \qquad (10)\]

In particular, if y(x) is a rational solution of \(\widetilde{\mathcal {S}}\), then its degree, i.e. the maximum of the degrees of the numerator and the denominator, is less than or equal to \(\deg _{y'}(H)\).

Proof

By Theorem 3, y(x) is a solution of the autonomous first order differential equation \(H(y,y')=0\). Then by Theorems 3.4 and 3.8 in [1] the degree bounds (10) follow.
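The bounds depend only on \(\deg _y(H)\) and \(\deg _{y'}(H)\), namely \(\deg _xG\le \deg _{y'}(H)\) and \(\deg _YG\le \deg _y(H)+\deg _{y'}(H)\) (as used in Example 2), so they are immediate to compute once H is known. A minimal Python sketch, assuming (our own convention) that H is given as a dictionary mapping exponent pairs \((i,k)\) of the monomials \(y^i(y')^k\) to coefficients; the function name is hypothetical:

```python
def degree_bounds(H):
    """Degree bounds for the minimal polynomial G(x, Y) of an algebraic solution of H(y, y') = 0.

    H maps exponent pairs (i, k) of the monomials y**i * (y')**k to coefficients.
    """
    monomials = [e for e, c in H.items() if c != 0]
    deg_y = max(i for i, _ in monomials)
    deg_yp = max(k for _, k in monomials)
    return {"deg_x": deg_yp, "deg_Y": deg_y + deg_yp, "rational_degree": deg_yp}

# Usage with H = y*y' - 1:
print(degree_bounds({(1, 1): 1, (0, 0): -1}))
# {'deg_x': 1, 'deg_Y': 2, 'rational_degree': 1}
```

The bounds only restrict the search space; deciding whether an algebraic solution of that size actually exists still requires the algorithm from [1].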

4 Algorithms and Examples

In this section we outline an algorithm based on the results in Sect. 3 to derive the reduced differential equation from a given system (2). By Triangularize\((\widetilde{\mathcal {S}})\) we refer to the computation of a regular chain decomposition of a given system \(\widetilde{\mathcal {S}}\) as in Theorem 2. Then, using the algorithms in [2], it is possible to algorithmically describe all formal Puiseux series solutions of the given system. We illustrate this in the subsequent examples.

Example 1

Let us consider the system of differential equations given by

The system \(\widetilde{\mathcal {S}}\) can be decomposed into the system of regular chains

For the system \(\mathcal {S}_1\) the starting equation \(G_1\) is already square-free with no factor in \(\mathbb {C}[y]\) or \(\mathbb {C}[y']\) and we set \(H_1=G_1\). By computing \(\frac{d\,G_1}{dx}(y,y',y'')\) and setting it to zero we obtain \(y''=\frac{-y'^2}{y}.\) Hence,

Then the reduced differential equation of \(\mathcal {S}_1\) is

\[H(y,y') = y\,y' - 1 = 0.\]

For the system \(\mathcal {S}_2\) we obtain \(H_1(y,y')=y'-1\) and \(H_2(y,y')=2-y'\), which are coprime. Hence, the reduced differential equation of \(\mathcal {S}_2\) is trivial (\(H=1\)) and, therefore, the reduced differential equation of \(\widetilde{\mathcal {S}}\) is \(H(y,y')=yy'-1\).
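For \(\mathcal {S}_2\), both \(H_1=y'-1\) and \(H_2=2-y'\) depend only on \(y'\), so the gcd defining the reduced differential equation is a univariate gcd over \(\mathbb {Q}\) and the Euclidean algorithm suffices. The Python sketch below covers only this special case and is our own illustration: coefficient lists are stored lowest degree first, and the function names are hypothetical. The general bivariate gcd \(\gcd (H_1,\ldots ,H_m)\) would require a computer algebra system.

```python
from fractions import Fraction

def trim(p):
    """Drop trailing zero coefficients (lists are lowest degree first)."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p

def poly_rem(a, b):
    """Remainder of a modulo a nonzero polynomial b."""
    a, b = [Fraction(c) for c in trim(a)], trim(b)
    while len(a) >= len(b):
        factor = a[-1] / b[-1]
        shift = len(a) - len(b)
        for i, c in enumerate(b):
            a[i + shift] -= factor * c
        a = trim(a)
    return a

def poly_gcd(a, b):
    """Monic gcd by the Euclidean algorithm; a and b must not both be zero."""
    a, b = trim(a), trim(b)
    while b:
        a, b = b, poly_rem(a, b)
    return [Fraction(c) / a[-1] for c in a]

# H_1 = y' - 1 and H_2 = 2 - y' as coefficient lists in the variable y'.
print(poly_gcd([-1, 1], [2, -1]))  # [Fraction(1, 1)]: coprime, so H = 1
```

A nonconstant result, for example `poly_gcd([-1, 0, 1], [-1, 1])` returning the coefficients of \(y'-1\), would instead give a nontrivial reduced equation in \(y'\) alone, which by Corollary 1 only admits linear solutions.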

We remark that the reduced differential equation \(H(y,y')=0\) of \(\widetilde{\mathcal {S}}\) can also be obtained with the Maple command RosenfeldGroebner, which uses regular differential chains as described in [3].

The next algorithm describes all formal Puiseux series solutions of a system \(\widetilde{\mathcal {S}}\) whose associated algebraic set is of dimension one. It uses algorithm PuiseuxSolve, described in [2], whose input is an autonomous ordinary differential equation of order one, and algorithm ReduceSystem from above. The output is a finite set of truncations in one-to-one correspondence with all Puiseux series solutions.

A similar algorithm computes a set of truncations of the Puiseux series solutions expanded at infinity: it suffices to replace, in the above algorithm, the algorithm PuiseuxSolve by the algorithm PuiseuxSolveInfinity from [2]. However, the uniqueness of the extension cannot be ensured in general.

The next algorithm decides whether a system \(\widetilde{\mathcal {S}}\) as in (2) has an algebraic solution and, in the affirmative case, computes some of them. Its correctness is based on the proof of Theorem 3, where it is shown that the non-constant algebraic solutions of \(\widetilde{\mathcal {S}}\) are exactly the non-constant algebraic solutions of its reduced equation. Section 4 of [1] describes an algorithm that decides whether an autonomous differential equation \(H_i(y,y')=0\) has algebraic solutions and computes them in the affirmative case. This algorithm requires the polynomials \(H_i(y,y')\) to be irreducible; hence, the next algorithm is not factorization-free. Let us denote the output of this algorithm by AlgSol\((H_i)\).

Example 2

Let us consider system (11) of Example 1. By Theorem 3, its non-constant solutions are those of the reduced differential equation

\[y\,y' - 1 = 0.\]

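We obtain all the non-constant formal Puiseux series solutions, expanded around zero, by the one-parameter family of solutions

\[y(x) = y_0\sqrt{1+\frac{2x}{y_0^2}} = y_0 + \frac{x}{y_0} - \frac{x^2}{2y_0^3} + \cdots \]

with \(y_0 \in \mathbb {C}\backslash \{0\}\), and the particular solutions

\[y(x) = \pm \sqrt{2}\,x^{1/2}.\]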

There is no formal Puiseux series solution with the initial value \(y(0)=\infty \). The only linear solutions of \(\widetilde{\mathcal {S}}\) are \(y(x)=\pm 1\). The possible algebraic solutions y(x) have a minimal polynomial G(x, Y) satisfying the degree bounds \(\deg _xG\le \deg _{y'}(H)=1\) and \(\deg _YG\le \deg _{y'}(H)+\deg _{y}(H)=2\). They are given by the zeros of

\[G(x,Y) = Y^2 - 2x - c, \qquad c \in \mathbb {C}.\]
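The power series part of this description can be reproduced by a direct coefficient recursion on \(yy'=1\). The following Python sketch is our own illustration, not algorithm PuiseuxSolve from [2], and its function names are hypothetical. It computes, with exact rational arithmetic, the power series solution with \(y(0)=y_0\neq 0\), and checks that its square equals \(y_0^2+2x\) up to the truncation order, i.e. that y(x) is a root of \(Y^2-2x-y_0^2\).

```python
from fractions import Fraction

def series_solution(y0, order):
    """Coefficients c_0, ..., c_order of the power series solution of y*y' = 1 with y(0) = y0 != 0."""
    c = [Fraction(y0)]
    for n in range(order):
        # coefficient of x^n in y*y' is sum_{k=0}^{n} c_k (n-k+1) c_{n-k+1};
        # the k = 0 term contains the unknown c_{n+1}, the other terms are known
        known = sum((c[k] * (n - k + 1) * c[n - k + 1] for k in range(1, n + 1)), Fraction(0))
        target = 1 if n == 0 else 0
        c.append((target - known) / ((n + 1) * c[0]))
    return c

def square(c):
    """Coefficients of y^2 up to the truncation order of c."""
    return [sum(c[k] * c[i - k] for k in range(i + 1)) for i in range(len(c))]

c = series_solution(3, 6)
print([str(v) for v in c[:3]])       # ['3', '1/3', '-1/54']
print([str(v) for v in square(c)])   # ['9', '2', '0', '0', '0', '0', '0']
```

The recursion divides by \(c_0\), which is why the case \(y(0)=0\) has to be treated separately; there the solutions satisfy \(y^2=2x\) and are ramified Puiseux series in \(x^{1/2}\).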

The assumption on the dimension of the given system is crucial in our work. Otherwise, Theorem 4, for instance, no longer holds, as the following example shows.

Example 3

Let us consider \(F(x,y,y')=x^2\,y'-y+x\). The non-convergent formal power series

\[y(x) = \sum _{n \ge 0} n!\, x^{n+1}\]

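is a solution of \(F=0\). Hence, y(x) is also a zero of \(\frac{d\,F}{dx}(x,y,y',y'') = x^2y'' + (2x-1)\,y' + 1\) and consequently of the resultant of F and \(\frac{d\,F}{dx}\) with respect to x, namely

\[\mathrm {Res}_x\left( F,\frac{d\,F}{dx}\right) (y,y',y'') = \bigl(y' - y'^2 + y\,y''\bigr)^2 - \bigl(2y'^2 - y''\bigr)\bigl(1 - y' + 2y\,y'\bigr).\]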

Note that \(\{ \mathrm {Res}_x\left( F,\frac{d\,F}{dx}\right) (y,y',y'')=0 \}\) defines a system of autonomous ordinary algebraic differential equations of algebro-geometric dimension two.
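The claims of this example can be checked on truncations. The Python sketch below is our own illustration; the truncation order `N` is an arbitrary choice. It takes the coefficients \(c_{n+1}=n!\) of \(y(x)=\sum _{n\ge 0} n!\,x^{n+1}\), checks \(x^2y'-y+x=0\) up to order \(x^N\), and checks that \((y'-y'^2+yy'')^2-(2y'^2-y'')(1-y'+2yy')\), which is \(\mathrm {Res}_x(F,\frac{dF}{dx})\) written out with the classical formula for the resultant of two quadratics in x, also vanishes along the series.

```python
from math import factorial

N = 12  # truncation order (arbitrary choice)

def mul(a, b):
    """Product of truncated power series, truncated to the shorter length."""
    n = min(len(a), len(b))
    return [sum(a[k] * b[i - k] for k in range(i + 1)) for i in range(n)]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def scale(a, s):
    return [s * x for x in a]

def deriv(a):
    return [k * a[k] for k in range(1, len(a))]

y = [0] + [factorial(n) for n in range(N)]   # c_{n+1} = n!, exact up to x^N
yp, ypp = deriv(y), deriv(deriv(y))          # exact up to x^(N-1) and x^(N-2)

# F = x^2 y' - y + x vanishes coefficientwise up to x^N
x2yp = [0, 0] + yp
F = [x2yp[i] - y[i] + (1 if i == 1 else 0) for i in range(N + 1)]
print(all(f == 0 for f in F))  # True

# Res_x(F, dF/dx) = (y' - y'^2 + y y'')^2 - (2y'^2 - y'')(1 - y' + 2 y y')
L = N - 1                                    # all factors are exact up to x^(L-1)
Y, YP, YPP = y[:L], yp[:L], ypp[:L]
one = [1] + [0] * (L - 1)
A = add(add(YP, scale(mul(YP, YP), -1)), mul(Y, YPP))
B = add(scale(mul(YP, YP), 2), scale(YPP, -1))
C = add(add(one, scale(YP, -1)), scale(mul(Y, YP), 2))
R = add(mul(A, A), scale(mul(B, C), -1))
print(all(r == 0 for r in R))  # True

# Divergence: c_{n+1} / c_n = n, so the radius of convergence is zero
print([y[n + 1] // y[n] for n in range(1, 8)])  # [1, 2, 3, 4, 5, 6, 7]
```

Since every truncated factor has nonnegative valuation, each computed coefficient up to \(x^{L-1}\) is exact, so the vanishing checks are not artifacts of the truncation.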

References

[1] Aroca, J., Cano, J., Feng, R., Gao, X.-S.: Algebraic general solutions of algebraic ordinary differential equations. In: Proceedings of the 2005 International Symposium on Symbolic and Algebraic Computation, ACM, pp. 29–36 (2005)

[2] Cano, J., Falkensteiner, S., Sendra, J.R.: Existence and convergence of Puiseux series solutions for first order autonomous differential equations. Pre-print arXiv:1908.09196 (2019)

[3] Cluzeau, T., Hubert, E.: Resolvent representation for regular differential ideals. Appl. Algebra Eng. Commun. Comput. 13(5), 395–425 (2003)

[4] Denef, J., Lipshitz, L.: Power series solutions of algebraic differential equations. Math. Ann. 267, 213–238 (1984)

[5] Falkensteiner, S., Sendra, J.R.: Formal power series solutions of first order autonomous algebraic ordinary differential equations. Math. Comput. Sci. (2019). https://doi.org/10.1007/s11786-019-00431-6

[6] Gerasimova, O.V., Razmyslov, Y.P.: Nonaffine differential-algebraic curves do not exist. Moscow Univ. Math. Bull. 72(3), 89–93 (2017)

[7] Kalkbrener, M.: A generalized euclidean algorithm for computing triangular representations of algebraic varieties. J. Symb. Comput. 15, 143–167 (1993)

[8] Lastra, A., Sendra, J.R., Ngô, L.X.C., Winkler, F.: Rational general solutions of systems of autonomous ordinary differential equations of algebro-geometric dimension one. Publ. Math. Debrecen 86(1–2), 49–69 (2015)

[9] Ovchinnikov, A., Pogudin, G., Thieu Vo, N.: Bounds for elimination of unknowns in systems of differential-algebraic equations. Pre-print arXiv:1610.04022 (2016)

[10] Ritt, J.: Differential Algebra, vol. 33. American Mathematical Society, Providence (1950)

[11] Wang, D.: Elimination Methods. Springer, Heidelberg (2012)

[12] Yang, L., Zhang, J.: Searching dependency between algebraic equations: an algorithm applied to automated reasoning. Tech. rep., International Centre for Theoretical Physics (1990)

Acknowledgements

Open access funding provided by Johannes Kepler University Linz. First author partially supported by MTM2016-77642-C2-1-P (AEI/FEDER, UE). Second and third authors partially supported by FEDER/Ministerio de Ciencia, Innovación y Universidades Agencia Estatal de Investigación/MTM2017-88796-P (Symbolic Computation: new challenges in Algebra and Geometry together with its applications). Second author also supported by the Austrian Science Fund (FWF): P 31327-N32. Third author is a member of the Research Group ASYNACS (Ref.CT-CE2019/683).


Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cano, J., Falkensteiner, S. & Sendra, J.R. Algebraic, Rational and Puiseux Series Solutions of Systems of Autonomous Algebraic ODEs of Dimension One. Math.Comput.Sci. 15, 189–198 (2021). https://doi.org/10.1007/s11786-020-00478-w


DOI: https://doi.org/10.1007/s11786-020-00478-w

Keywords

- Algebraic autonomous ordinary differential equation

- Formal Puiseux series solution

- Algebraic solutions

- Rational solutions

- Convergent solution

- Algebraic space curve