Abstract

Image interpolation is an important topic in the field of image processing. It is defined as the process of transforming low-resolution images into high-resolution ones using image processing methods. Recent interpolation studies focus on techniques that preserve edge information, so the edge detection phase plays a vital role. However, these approaches typically rely on gradient-based linear computations for edge detection, whereas non-linear structures that effectively simulate the human visual system have recently gained attention. In this study, a non-linear method was developed to detect edge information using a pixel similarity approach. This pixel similarity-based edge detection offers both lower computational complexity and better interpolation results than gradient-based approaches. 1D cubic interpolation was applied to the pixels identified as edges based on pixel similarity, while bicubic interpolation was applied to the remaining pixels. The algorithm was tested on 12 commonly used images and compared with various interpolation techniques. The results were evaluated using SSIM and PSNR as well as visual assessment. The experimental findings demonstrated that the proposed method outperformed the compared approaches. Additionally, the method requires no parameters and has a competitive computational cost.


1 Introduction

With the rapid development of networking and communication technologies, multimedia applications such as image and video have become increasingly widespread. As a result, techniques developed for these environments have become the focus of researchers. While high-resolution images can be obtained with hardware, doing so is costly. Additionally, image sources are often unavailable in the desired dimensions. Therefore, software solutions for image interpolation are indispensable [1]. Image interpolation, which generates a high-resolution image from a low-resolution one, is a fundamental research topic in image processing. It finds applications in fields such as digital photography, biomedical imaging, remote sensing, and forensic analysis [2,3,4]. The literature contains many image interpolation methods, which can be categorized into two groups: learning-based and traditional (reconstruction-based) methods [5]. Learning-based methods [6,7,8,9,10,11] use training data, extracting information from training examples to generate new pixels. Traditional methods [12,13,14,15,16,17], on the other hand, rely on the original image to formulate interpolation functions and perform the interpolation through mathematical formulas without any training step. The best-known mathematical approaches are nearest neighbor, bilinear, and bicubic interpolation [12,13,14]. These methods are simple to implement and have low computational costs. However, they tend to introduce blurring and distortions at edges, primarily because they consider no information other than the intensity values [15,16,17]. Consequently, edge-based image interpolation approaches have gained attention in recent years [18, 19]. 
These methods are designed to adapt to the human visual system and prioritize edge information in the image [20,21,22,23,24].

IEDI [25], CGI [26], CED [27], PCI [28], WTCGI [29], and GEI [30] are some of the edge-based interpolation techniques proposed in recent years. In the IEDI [25] technique, the edge pixels of the images are detected using an adaptive edge filter; its main drawback is high computation time. Contrast-Guided Interpolation (CGI) [26], proposed in 2013, is another well-known approach. Its authors used the contrast feature, which is important for the human visual system, and performed the interpolation with guided decision maps and edge filtering algorithms. However, although CGI produces successful results, its iterative nature leads to a high computational cost. Another effective approach is CED [27], an improved version of CGI that maintains the performance of CGI while reducing the time and computational cost of the diffusion processes. The PCI [28] approach, proposed in 2019, uses another variant of CGI in which a prediction-validation model is employed. The WTCGI [29] technique combines the wavelet transform with the CGI structure and is applied to the interpolation of images used in the robotics industry. The GEI [30] technique, developed in 2022, performs regional gradient estimation and has shown successful results. Finally, an approach proposed by İncetaş in 2023 is a significant study in which edges are determined non-linearly [31]. The author used a spiking neural network (SNN) architecture, which successfully simulates the human visual system; in that study, edge pixels were computed non-linearly with the presented SNN model instead of a linear measure such as the gradient.

The general motivation behind the aforementioned interpolation approaches is to detect edges with gradient-based linear methods. However, it is well known that the human visual system is more effectively simulated by non-linear models [32, 33]. In 2007, Demirci developed a similarity-based non-linear edge detection approach for color images, in which a similarity relation matrix mapped the 3D color space to a one-dimensional space. The approach served as an alternative to gradient and statistical models, and its fast and efficient operation is an important feature [34]. Pixel similarity has since been used in many studies, such as edge detection [35], segmentation [36, 37], and noise filtering [38]. In a study conducted in 2017, pixel similarity was applied to 200 images from the BSDS database, and threshold values were computed from the similarity images to discuss the effect of pixel similarity. In another study, the method was used for automatic segmentation of color images [36], where the researchers determined the number of regions without any initial value. Similarly, various studies demonstrate the effectiveness of pixel similarity in image segmentation [37]. Aydın et al. applied the pixel similarity approach to medical images, using image processing instead of blood samples and clinical tests for the early diagnosis of jaundice, and proposed a prediction model with high accuracy [39]. Another significant study proposed using pixel similarity as a noise filter [38]. In [35], the distance values of pixels to their neighbors were used as the diffusion coefficient, making the traditional user-dependent diffusion filter adaptive. 
Although pixel similarity performs well in edge detection, it is based on a linear calculation of the difference between pixels. On the other hand, the SNN-based model proposed in 2023, which detects edges in accordance with the HVS, appears to be quite successful [31]. However, that study presented a complex model in which calculations had to be made on all 9 pixels of the 3 × 3 window at each stage when determining the edges. In this study, a novel approach is proposed that improves interpolation accuracy and edge preservation and reduces computation time by using an SNN-based similarity transform. In the proposed interpolation approach, the interpolation type is first selected according to the edge information obtained non-linearly from the SNN-based pixel similarity of the image. 2D bicubic interpolation is performed on non-edge pixels, while 1D interpolation is performed on edge pixels. In this way, edge losses are minimized and interpolation accuracy is increased, because edge information is detected more accurately with the SNN-based pixel similarity approach. The main contribution of this work over previous approaches is an SNN-based pixel similarity model compatible with the HVS: when determining edge pixels, the gray-level differences of the pixels are calculated non-linearly, unlike gradient-based linear approaches. In addition, the presented SNN model is simpler and faster than existing SNN models.

The rest of the article is organized as follows: Section 2 covers image interpolation, Section 3 SNN-based pixel similarity, Section 4 the proposed method, Section 5 the experimental findings and discussion, and Section 6 the conclusion.

2 Image interpolation

Image interpolation refers to the process of generating a high-resolution (HR) image from a low-resolution (LR) image and is an important topic in image processing. Figure 1 illustrates the creation of a 2M × 2N image from an original M × N image. In the resulting image, the unknown pixels (white dots with red lines) are estimated from the known pixels (blue dots), and this estimation is the critical operation of the interpolation process. In Fig. 1, the low-resolution image is denoted ILR(m, n), and the high-resolution image obtained through 2 × 2 interpolation is IHR. The relationship between ILR and IHR is given in Eq. 1, which assigns the known pixel values of ILR to IHR, that is, \(I_{HR}(2m-1, 2n-1) = I_{LR}(m, n)\). Next, edges and their orientations are detected. The estimation of unknown pixel values starts with the pixels on the diagonal positions IHR(2m, 2n). If the pixel at ILR(m, n) is an edge, its value is calculated from the known neighboring pixel values. The next step is to compute the unknown pixels in the horizontal and vertical positions, IHR(2m, 2n + 1) and IHR(2m + 1, 2n) respectively, which are also calculated from the known neighboring pixels. Finally, all the unknown pixels in IHR are computed as:
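The index bookkeeping described in this section can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' code: it assumes 0-based array indexing, so the 1-based relation \(I_{HR}(2m-1, 2n-1) = I_{LR}(m, n)\) becomes `hr[2m, 2n] = lr[m, n]`, and the function name `place_known_pixels` is hypothetical. It only copies the known samples and marks the three classes of unknown positions that the later steps estimate.

```python
import numpy as np


def place_known_pixels(lr: np.ndarray):
    """Place LR pixels on a 2x grid and mark the unknown positions.

    0-based indexing: lr[m, n] -> hr[2m, 2n], i.e. the 1-based relation
    I_HR(2m-1, 2n-1) = I_LR(m, n). Unknown pixels are left as NaN.
    """
    M, N = lr.shape
    hr = np.full((2 * M, 2 * N), np.nan)
    hr[0::2, 0::2] = lr  # known pixels copied from the LR image

    known = np.zeros(hr.shape, dtype=bool)
    known[0::2, 0::2] = True
    diagonal = np.zeros_like(known)
    diagonal[1::2, 1::2] = True  # centre of 4 known pixels (diagonal case)
    row_gap = np.zeros_like(known)
    row_gap[0::2, 1::2] = True   # between two known pixels in the same row
    col_gap = np.zeros_like(known)
    col_gap[1::2, 0::2] = True   # between two known pixels in the same column
    return hr, known, diagonal, row_gap, col_gap
```

The four masks partition the HR grid, and each contains exactly M × N positions, which is why the diagonal pixels can be filled first and the two axis-aligned classes afterwards.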

3 Spike neural network based pixel similarity

The pixel similarity approach, a similarity-based edge detection technique, was proposed by Demirci [34]. Its notable aspect is the absence of complex steps: it is essentially based on expressing the similarity between two pixels mathematically. Figure 2 illustrates a 3 × 3 pixel block, where p0 is the center pixel and p1, p2, …, p8 are its neighbors. The similarity of the center pixel (p0) to any other pixel (pi) can be expressed as:

In Eq. 2, \(\left\| p_{0} - p_{i} \right\|\) is the normalized Euclidean distance between the two pixels, and Dn is a user-defined normalization coefficient. The average similarity (Asim) over a 3 × 3 mask is formulated as:
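To make the averaging over the 3 × 3 mask concrete, the sketch below computes a per-pixel mean similarity over the 8 neighbors. Eq. 2 itself is not reproduced in this text, so the pairwise similarity used here is an assumed, illustrative Gaussian kernel \(\exp(-(d/D_n)^2)\) of the absolute gray-level difference \(d\); the real similarity function should be substituted when applying it. Border handling by edge replication and the function name `average_similarity` are also assumptions.

```python
import numpy as np


def average_similarity(img: np.ndarray, dn: float = 30.0) -> np.ndarray:
    """Mean similarity of each pixel to its 8 neighbours (3x3 mask).

    ASSUMPTION: the pairwise similarity is an illustrative Gaussian kernel
    exp(-(d / dn)**2) with d = |p0 - pi|; it stands in for Eq. 2.
    Returns values in [0, 1]; multiply by 255 for a similarity image.
    """
    img = img.astype(float)
    padded = np.pad(img, 1, mode="edge")  # replicate border pixels
    H, W = img.shape
    total = np.zeros_like(img)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue  # skip the centre pixel itself
            neighbour = padded[1 + dy:1 + dy + H, 1 + dx:1 + dx + W]
            d = np.abs(img - neighbour)
            total += np.exp(-(d / dn) ** 2)
    return total / 8.0
```

Because any kernel of this kind increases toward 1 as `dn` grows, larger normalization values push the whole similarity image toward 255.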

Figure 3 shows how the similarity changes for different user-selected values of Dn, and Fig. 4 shows the pixel similarity outputs of a randomly selected image for the same Dn values.

Fig. 3 Pixel similarity graph according to Eq. (2)

The non-linear shape of the similarity function in Fig. 3 is notable. Figure 4 shows that the pixel values approach 255 as Dn increases; therefore, the detection of correct edge pixels depends on an appropriate choice of the normalization coefficient Dn. In 2019, İncetaş et al. made this coefficient independent of the user and thereby developed an automatic edge detection model. Edge pixels detected with the automatically determined Dn were similar to those of the conventional method [40], with the important advantage that the coefficient no longer depends on the user. Equations 6 and 7 show how Dn is calculated automatically.

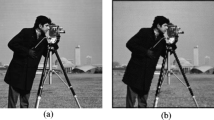

where da is the average distance between the center pixel and its neighbors and d0,j is the distance between the center pixel and its j-th neighbor. Figure 5 shows the pixel similarity output of the Cameraman image with an automatically determined Dn.

While pixel similarity produces effective edge detection results, it relies on simple calculations between two pixels and therefore produces results similar to gradient-based approaches. This is because the pixel similarity technique is driven by the absolute (gradient-like) difference between two pixel values. In other words, although pixel similarity appears non-linear, its difference computation is essentially linear, since it is based on absolute differences between pixels. The SNN approach, by contrast, is a network that effectively simulates the human visual system. In the SNN approach, the difference between two pixels is computed from the firing rates of the spikes generated by the membrane voltage of neurons modeled with the conductance-based integrate-and-fire model.

In the early 1950s, Hodgkin and Huxley (HH) proposed a model describing the characteristics of neurons, in which neuron behavior was simulated with a set of differential equations [41]. The complex mathematics of this successful approach increases its computational cost. For this reason, other neuron models such as integrate-and-fire (IF), FitzHugh–Nagumo (FHN), and Izhikevich have been presented [42,43,44]. The IF neuron model attracts attention because of its simpler architecture and lower computational complexity in large neural systems. In the IF neuron model, the change of the membrane potential v(t) over time [45] is formulated as follows:

Here cm, gl, and El are the membrane capacitance, membrane conductance, and membrane reversal potential, respectively. Eex and Eih are the reversal potentials of excitatory and inhibitory synapses, wex and wih are the synaptic weights, and Aex and Aih are the membrane surface areas. gex(t) and gih(t) are the time-varying conductances of the excitatory and inhibitory synapses. When the excitatory input to a neuron outweighs its inhibitory input, the membrane potential can rise above the threshold voltage vth at time t, and the neuron generates an action potential. Starting at time t + 1, the membrane potential then stays at its reset value vreset for a period τref called the refractory time. Because τref affects how simply the model can be computed, it is set to 0 in this study, as in similar studies [46,47,48]. Figure 6 shows a basic IF neuron model.
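For reference, the conductance-based IF dynamics commonly used in SNN edge detectors, written with the symbols defined above, take the form

\[ c_m \frac{dv(t)}{dt} = g_l\,(E_l - v(t)) + \frac{w_{ex}\, g_{ex}(t)}{A_{ex}}\,(E_{ex} - v(t)) + \frac{w_{ih}\, g_{ih}(t)}{A_{ih}}\,(E_{ih} - v(t)) \]

The sketch below assumes Eq. 8 follows this standard form and integrates it with the forward Euler method and \(\tau_{ref} = 0\). All parameter values (mV, ms, conductances normalized to \(g_l\)) are illustrative assumptions rather than the paper's settings, and `simulate_if` is a hypothetical name.

```python
import numpy as np


def simulate_if(g_ex, g_ih, T=100.0, dt=0.1, c_m=10.0, g_l=1.0,
                E_l=-70.0, E_ex=0.0, E_ih=-75.0, v_th=-60.0, v_reset=-70.0,
                w_ex=1.0, w_ih=1.0, A_ex=1.0, A_ih=1.0):
    """Forward-Euler simulation of a conductance-based IF neuron.

    g_ex, g_ih: scalars or arrays with one conductance value per time step
    (illustrative units). Refractory time is 0: v is reset immediately.
    Returns the list of spike times in ms.
    """
    n = int(round(T / dt))
    g_ex = np.broadcast_to(np.asarray(g_ex, dtype=float), (n,))
    g_ih = np.broadcast_to(np.asarray(g_ih, dtype=float), (n,))
    v = E_l
    spikes = []
    for k in range(n):
        dv = (g_l * (E_l - v)
              + w_ex * g_ex[k] / A_ex * (E_ex - v)
              + w_ih * g_ih[k] / A_ih * (E_ih - v)) / c_m
        v += dt * dv
        if v >= v_th:           # threshold crossed: emit a spike
            spikes.append((k + 1) * dt)
            v = v_reset         # tau_ref = 0, as in the text
    return spikes
```

With these assumed values, a constant excitatory conductance of 1 makes the neuron fire repeatedly, adding an equal inhibitory conductance lowers the firing count, and a weak input of 0.1 keeps the membrane below threshold.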

The proposed study introduces an edge detection method based on a Spiking Neural Network (SNN) architecture that emulates the Human Visual System (HVS). Unlike traditional linear edge detection models based on pixel similarity, this approach uses the multi-layer SNN structure depicted in Fig. 7, which comprises three layers: a receptor layer, an intermediate layer, and an output layer. In the receptor layer, each pixel of the image is represented, forming a 3 × 3 receptor field (RF). The relationships between the central pixel and its neighbors are analyzed through synaptic connections to the intermediate layer, which consists of 16 neurons that perform computations in 8 directions. These neurons measure the difference between the central pixel and its neighboring pixels using excitatory and inhibitory synapses, represented by the synaptic matrices X and Δ, respectively. The intermediate-layer neurons are connected to 8 output neurons that express the similarities between the central pixel and its neighbors; the firing frequency of each output neuron indicates the dissimilarity between the central pixel and the corresponding neighbor. For instance, the synaptic connections from the RF to the intermediate-layer neuron M5A carry out the computation between the central pixel and its bottom neighbor. Excitatory synapses from the neighbor to M5A and inhibitory synapses from the central pixel to M5A modulate the membrane potential of M5A. An increase in the membrane potential of M5A indicates that the neighbor has a higher gray level than the central pixel, which leads to the formation of spikes. This process is repeated for all directions and neuron pairs in the intermediate layer. 
The output-layer responses are then assigned to the central pixel, so that edge detection benefits from the dynamic processing of the SNN architecture, which mimics the complexity of the HVS. The differences between the center pixel and its neighbors are given by the output-layer values Q1 … Q8. Thus, the gray-level differences between pixels are obtained in accordance with the HVS rather than on a gradient basis, and they are used for pixel-similarity-based edge detection.

Unlike gradient- or similarity-based approaches, the proposed method computes differences from the firing counts of neurons with inhibitory and excitatory synaptic connections. Therefore, two different synaptic matrices in the same direction are used to determine the difference between neighboring pixels. In the model, when either of the M5A and M5B neurons produces spikes, these spikes are transmitted to the Q5 neuron in the output layer. In the proposed network, every intermediate-layer neuron has only an excitatory synaptic connection to its corresponding output neuron. Depending on the number of excitatory spikes from M5A and M5B, the membrane potential of Q5 rises and Q5 also fires at certain intervals. Computing this for all neighboring pixels yields 8 different values. The proposed method uses the IF neuron model [49], implemented according to Eqs. 9–15 [31, 45, 50].

The peak conductance values qex and qih of the pixels in the RF are calculated as:

where Gxy is the gray level of the pixel at coordinates (x, y), and \(\alpha\) and \(\beta\) are normalization coefficients, both set to 255. The following equations are used for the neurons in the intermediate layer.

In Eq. 10, \(\tau_{ex}\) and \(\tau_{ih}\) are the time constants of the excitatory and inhibitory synapses. The synaptic current Isyn produced by the excitatory and inhibitory connections is formulated as:

The membrane potential v(t) of any neuron is calculated as:

These equations are evaluated in the same way for all 16 intermediate-layer neurons. When the membrane potential of a neuron reaches the threshold voltage, the neuron fires and returns to its initial state. The resulting spikes form a separate spike train for each neuron, denoted SN.

Each receptor field has 8 output-layer neurons \(\left( Q_{1}, \ldots, Q_{8} \right)\). These neurons have synaptic connections with the neuron pairs in the intermediate layer and are computed by the following equations.

In Eq. 14, gout and τout are the time-varying conductance and the time constant, respectively; Iout is the total synaptic current of a QN neuron, and \(v_{QN}\) is the membrane potential of the QN neuron. In the output layer, the firing rate FQN (spikes per second) of the spikes produced by neuron QN during a period of T ms is determined as in Eq. 15.
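The network described in this section can be sketched for a single receptive field as below. This is a simplified illustration under stated assumptions, not the authors' implementation: neurons are integrated with forward Euler; each pixel is mapped to a conductance proportional to its normalized gray level (standing in for Eq. 9); the inhibitory weight is set to 4 times the excitatory one so that equal inputs stay below threshold with these parameters; and the output conductance decays exponentially with time constant `tau_out` and jumps on every incoming spike (standing in for Eq. 14). All parameter values and names (`rf_differences`, `gain`, `W_IH`, `w_out`, `NEIGHBOURS`) are hypothetical. The returned value per direction is the output neuron's firing rate in spikes per second, in the spirit of Eq. 15.

```python
import numpy as np

# Illustrative parameters (assumed): mV, ms, conductances normalised to G_L.
C_M, G_L, E_L, E_EX, E_IH, V_TH = 10.0, 1.0, -70.0, 0.0, -75.0, -60.0
W_IH = 4.0          # inhibitory weight relative to excitatory (assumed)
DT, T = 0.1, 100.0  # time step and simulation period in ms
# Neighbour offsets (dy, dx) for directions 1..8, rows increasing downwards.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def _if_step(v, g_ex, g_ih):
    """One forward-Euler step of a conductance-based IF neuron (tau_ref = 0)."""
    dv = (G_L * (E_L - v) + g_ex * (E_EX - v) + g_ih * (E_IH - v)) / C_M
    v = v + DT * dv
    spiked = v >= V_TH
    return np.where(spiked, E_L, v), spiked  # reset to E_L after a spike


def rf_differences(rf, gain=5.0, w_out=1.0, tau_out=4.0):
    """Firing rates (spikes/s) of output neurons Q1..Q8 for one 3x3 field."""
    rf = np.asarray(rf, dtype=float) / 255.0
    centre = np.full(8, rf[1, 1])
    nb = np.array([rf[1 + dy, 1 + dx] for dy, dx in NEIGHBOURS])
    # Neuron A: excited by the neighbour, inhibited by the centre; B: reverse.
    a_ex, a_ih = gain * nb, W_IH * gain * centre
    b_ex, b_ih = gain * centre, W_IH * gain * nb
    v_a, v_b, v_q = (np.full(8, E_L) for _ in range(3))
    g_out = np.zeros(8)
    q_count = np.zeros(8)
    decay = np.exp(-DT / tau_out)
    for _ in range(int(round(T / DT))):
        v_a, s_a = _if_step(v_a, a_ex, a_ih)
        v_b, s_b = _if_step(v_b, b_ex, b_ih)
        # Output conductance decays and jumps by w_out per incoming spike.
        g_out = g_out * decay + w_out * (s_a.astype(float) + s_b)
        v_q, s_q = _if_step(v_q, g_out, 0.0)
        q_count += s_q
    return q_count * 1000.0 / T
```

For a flat patch all eight rates are zero; a single bright bottom neighbor activates only the corresponding output neuron (index 6 in `NEIGHBOURS`), and larger gray-level differences generally produce higher rates.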

4 Image interpolation by using SNN based pixel similarity

Recent interpolation studies determine edges in the vertical, horizontal, and diagonal (45° and 135°) directions. Most of them detect edges with linear gradient values, whereas the SNN-based approach recently developed by İncetaş [31] detects them non-linearly. In this study, an SNN-based similarity transform is put forward for the non-linear detection of edges in these four directions.

For example, to determine horizontal edges, the similarities between the center pixel and its left neighbor and between the center pixel and its right neighbor are calculated in a 3 × 3 neighborhood, and their average is taken. Figure 8 shows the mask used to calculate horizontal edges. For vertical edges, the similarities between the center pixel and the pixels above and below it are calculated and averaged. Similarly, for 45° and 135° edges, the similarities between the center pixel and its two neighbors along the corresponding diagonal are averaged. In this way, edges in 4 different directions are determined non-linearly. Figure 9 shows the edge outputs in the different directions.
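The four directional maps described above can be sketched as follows. In the paper the pairwise similarities come from the SNN output; here, to keep the example self-contained, the default pairwise similarity is an assumed stand-in, 1 − |a − b|/255, and any other function (for example one derived from SNN firing rates) can be passed as `pair_sim`. The 45°/135° neighbor convention (rows increasing downward) and all names are assumptions.

```python
import numpy as np

# Neighbour offsets (dy, dx), rows increasing downwards (assumed convention).
PAIRS = {
    "horizontal": [(0, -1), (0, 1)],   # left and right neighbours
    "vertical": [(-1, 0), (1, 0)],     # upper and lower neighbours
    "45": [(-1, 1), (1, -1)],          # up-right and down-left
    "135": [(-1, -1), (1, 1)],         # up-left and down-right
}


def directional_similarity(img, pair_sim=None):
    """Average centre-to-neighbour similarity along each of 4 directions."""
    if pair_sim is None:
        # Stand-in similarity; the paper derives these values from the SNN.
        pair_sim = lambda a, b: 1.0 - np.abs(a - b) / 255.0
    img = np.asarray(img, dtype=float)
    H, W = img.shape
    padded = np.pad(img, 1, mode="edge")
    maps = {}
    for name, offsets in PAIRS.items():
        sims = [pair_sim(img, padded[1 + dy:1 + dy + H, 1 + dx:1 + dx + W])
                for dy, dx in offsets]
        maps[name] = (sims[0] + sims[1]) / 2.0  # mean of the two neighbours
    return maps
```

On an image with a vertical step, the map built from left/right neighbors drops to 0.5 next to the step while the map built from upper/lower neighbors stays at 1, so low values flag intensity changes across the corresponding pair of neighbors.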

Figure 10 shows the block diagram of the proposed interpolation technique. The low-resolution input image ILR is first converted to a gray-level image. The similarity transform, notable for its non-linear character, is then used to detect edge pixels in 4 directions. Because the most important weakness of interpolation methods is the loss of information at edge pixels, determining the edge pixels and their orientation in the low-resolution image is vital. When determining the edges, the pixels on the diagonal axes are checked first. A difference between pixels along the diagonal, horizontal, or vertical axis indicates that the corresponding pixel is an edge pixel. On the diagonals, if there is a difference between two pixels, the corresponding pixel is marked as an edge and its direction is assigned as 45° or 135°. The same process is carried out for the horizontal and vertical neighborhoods. The edge direction on the diagonal axes is calculated with Eq. 16, and the edge direction on the horizontal and vertical axes with Eq. 17.
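Eqs. 16 and 17 are not reproduced in this text, so the following is only a hypothetical decision rule in the spirit of the description: two directional similarity maps are compared per pixel, and the direction whose neighbors are clearly more similar (by an assumed ratio of 1.15) is taken as the edge orientation; otherwise the pixel is treated as non-edge (marked -1) and left to bicubic interpolation. The function name, the ratio, and the -1 marker are assumptions.

```python
import numpy as np

NON_EDGE = -1  # marker for pixels handled by bicubic interpolation


def edge_orientation(sim_a, sim_b, angle_a, angle_b, ratio=1.15):
    """Label each pixel with angle_a, angle_b or NON_EDGE.

    HYPOTHETICAL rule: a direction is chosen when the similarity of the
    neighbours along it exceeds the other direction's by more than `ratio`,
    i.e. the pixels along that line agree, so the edge is taken to run along it.
    """
    sim_a = np.asarray(sim_a, dtype=float)
    sim_b = np.asarray(sim_b, dtype=float)
    out = np.full(np.broadcast(sim_a, sim_b).shape, NON_EDGE, dtype=int)
    out[sim_a > ratio * sim_b] = angle_a
    out[sim_b > ratio * sim_a] = angle_b
    return out
```

The same helper covers both cases in the text: diagonal orientation with `edge_orientation(s45, s135, 45, 135)` and axis orientation with `edge_orientation(s_h, s_v, 0, 90)`.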

First, the pixel values at IHR(2m, 2n) are calculated according to the pixel value at ILR(m, n). The values at IHR(2m - 1, 2n) and IHR(2m, 2n - 1) are then determined similarly. Finally, bicubic interpolation is applied to every non-edge pixel, while for edge pixels the directional calculations used in many studies [26,27,28, 40] are adopted. The related equations are given in Eqs. 18–22.

\(\omega\) is the interpolation coefficient, set to 0.575. I is the gray level of the pixel to be interpolated, and Ix, Iy, Iz, and It are the 4 known pixels around the unknown pixel. The corresponding values in the high-resolution image IHR are, if \(O(r,s) = 45^{\circ}\):

if \(O(r,s) = 135^{ \circ }\);

if \(O(r,s) = 0^{ \circ }\);

if \(O(r,s) = 90^{ \circ }\);
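Since the directional equations for these four cases are not reproduced in this text, the sketch below assumes a common four-tap form for 1D cubic interpolation at the midpoint of two known pixels that uses a single coefficient like \(\omega\): \(I = \omega (I_{near,1} + I_{near,2}) + (0.5 - \omega)(I_{far,1} + I_{far,2})\). Its weights always sum to 1, and with \(\omega = 9/16\) it coincides with Keys' cubic convolution (a = -0.5) evaluated at the midpoint. The text does not say how Ix, Iy, Iz, and It map onto the near and far taps, so the names here are hypothetical. For a 45° or 135° edge the four taps would be taken along that diagonal; the 1D helper shows the same rule along a single row.

```python
import numpy as np

OMEGA = 0.575  # interpolation coefficient given in the text


def directional_cubic(near1, near2, far1, far2, omega=OMEGA):
    """Four-tap 1D cubic estimate at the midpoint of near1 and near2.

    ASSUMED form: omega*(near1 + near2) + (0.5 - omega)*(far1 + far2);
    the weights sum to 1, and omega = 9/16 gives Keys' cubic (a = -0.5).
    """
    near = np.asarray(near1, dtype=float) + np.asarray(near2, dtype=float)
    far = np.asarray(far1, dtype=float) + np.asarray(far2, dtype=float)
    return omega * near + (0.5 - omega) * far


def upsample_1d(signal, omega=OMEGA):
    """Double a 1D signal, filling each midpoint with directional_cubic."""
    s = np.asarray(signal, dtype=float)
    padded = np.pad(s, (1, 2), mode="edge")  # replicate ends for outer taps
    out = np.empty(2 * len(s))
    out[0::2] = s  # known samples
    # Midpoint between s[k] and s[k+1] uses s[k-1] and s[k+2] as outer taps.
    out[1::2] = directional_cubic(padded[1:-2], padded[2:-1],
                                  padded[:-3], padded[3:], omega)
    return out
```

Because the weights sum to 1 and are symmetric, linear ramps are reproduced exactly away from the borders; the negative outer weight (-0.075 for \(\omega = 0.575\)) is what gives the cubic kernel its sharper response than linear averaging.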

5 Experimental results and discussion

The experimental findings were obtained on a computer with an Intel Core i7-12700K processor and 32 GB of RAM, and the average computation time of the proposed approach was measured in MATLAB. To evaluate the performance of the proposed approach, 12 frequently used images were used: Bike, Wheel, Boats, Butterfly, House, Cameraman, Baboon, Peppers, Fence, Airplane, Barbara, and Star. The developed technique was compared with 7 approaches, namely SNN [31], GEI [30], WTCGI [29], PCI [28], CED [27], CGI [26], and IEDI [25]. The same images were used in the compared studies, which makes the comparison consistent. As in recent studies, the 12 images were sub-sampled by 1/2 × 1/2. Common sub-sampling methods include nearest neighbor, linear, and bilinear approaches; in this study, however, the method called direct inference was employed [27]. Image interpolation with 2 × 2 upsampling was then applied to the resulting ILR image to generate the IHR image, and the result was compared with the original image using the PSNR and SSIM metrics. Table 1 shows the comparative performance of all methods in terms of PSNR.
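The evaluation protocol can be sketched as below, under two assumptions: "direct" sub-sampling is taken to mean keeping every second pixel in each direction, and a simple nearest-neighbor upsampler stands in for the interpolation method under test. PSNR uses the standard definition \(10\log_{10}(255^2/\mathrm{MSE})\). For SSIM, an existing implementation such as `skimage.metrics.structural_similarity` from scikit-image can be used; it is not reimplemented here. The function names are hypothetical.

```python
import numpy as np


def direct_downsample(img):
    """1/2 x 1/2 sub-sampling by keeping every second pixel (assumed meaning)."""
    return np.asarray(img)[0::2, 0::2]


def nearest_upsample(lr):
    """Baseline 2x upsampler used only to exercise the pipeline."""
    return np.repeat(np.repeat(lr, 2, axis=0), 2, axis=1)


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(64, 64)).astype(float)
    hr = nearest_upsample(direct_downsample(img))
    print(f"PSNR of nearest-neighbour baseline: {psnr(img, hr):.2f} dB")
```

Keeping the samples at even 0-based positions means the LR pixels land back on their original locations when placed at `hr[0::2, 0::2]`, so only the estimated pixels contribute to the error.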

The results in Table 1 show that the proposed approach achieves high accuracy. Among the compared methods, the recent GEI and SNN approaches are particularly strong, yet the proposed model yields better results than both. This indicates that edge detection, the critical stage of interpolation, is performed more successfully by the proposed method. SSIM results are given in Table 2 and are consistent with Table 1: the developed technique performed best on all images. In other words, using the similarity transform for edge detection improved interpolation accuracy. Output images of GEI, SNN, and the proposed method, the three best-performing methods, are shown in Fig. 11. Although all three methods preserve edge pixels well, Tables 1 and 2 confirm the advantage of the proposed method. In addition, the similarity-based interpolation approach ran faster than several of the compared methods.

Recent studies generally rely on gradient-based linear structures, although non-linear structures are known to represent the HVS more successfully; the non-linear structure of the proposed method is therefore one of its important advantages. İncetaş's model [31] used the inter-pixel differences obtained with the SNN directly, whereas in the proposed study these non-linear differences are used in a similarity-based non-linear edge detection approach. GEI, developed in 2022, is a successful interpolation technique, but its need for user-dependent parameters is an important drawback. GEI also requires calculating regional gradients for each pixel; noise in the input image degrades this gradient estimation, and the resulting errors can become more apparent after interpolation, producing noise and artifacts and reducing image quality. The SNN-based pixel similarity, in contrast, is completely user-independent and needs no parameters. The CGI, WTCGI, and CED approaches often involve complex algorithms with a high computational cost, so processing times inevitably increase, especially for high-resolution images. Noise in the input images also affects the results of these approaches: the performance of contrast-guided interpolation depends strongly on the quality of the input images, and it is also user dependent. Unlike existing gradient-based linear techniques, the proposed method determines the difference between pixels non-linearly, so edges are determined in accordance with the HVS. In addition, the proposed model computes faster than previously presented SNN models. 
Finally, the interpolation accuracy of the proposed method is high compared with state-of-the-art methods.

In the similarity image, the closer a pixel's gray level is to those of its neighbors, the closer its similarity value is to 255 (or 1). A region of mutually similar pixels is easy to interpolate because their gray levels are also very similar. However, when the entire image is interpolated in the same way, distortions occur, especially at edges. Techniques developed to address this generally rely on linear structures such as gradients, whereas the proposed architecture produces results closer to the non-linear behavior of the human visual system. Moreover, its computational cost is competitive with various approaches that simulate the human visual system. The computation times of the methods are given in Table 3, which shows that CED achieves the shortest times, while the proposed method is clearly faster than methods such as CGI and IEDI.

Although the proposed SNN model and interpolation approach give successful results, a limitation of the method is that it does not include a learning process. Higher accuracy may be achievable by adding a learning process, which can easily be included in SNN models, and training on larger datasets.

6 Conclusion

In disciplines such as defense, forensics, and medicine, where image quality is of vital importance, enlarging images while preserving edges is very important. Many recent studies have emphasized the importance of preserving edge information during interpolation; for this reason, they typically consist of two basic stages: edge detection and applying an appropriate interpolation technique. Current studies detect edge information with linear approaches such as gradients, whereas algorithms that represent the non-linear behavior of the human visual system give more successful results. In this study, a novel non-linear interpolation method based on pixel similarity with an SNN architecture is proposed. The focus of the study is edge detection, the first phase of the interpolation process; in the second stage, the same procedures as in current studies were applied. Numerical evaluations with the SSIM and PSNR criteria, together with visual evaluations, showed that the proposed method is more effective and gives promising interpolation results, and the proposed SNN-based pixel similarity approach achieved competitive computation times. In future work, a learning phase will be added to pixel-similarity-based edge detection, and the detected edges will be used in other image processing areas such as image interpolation, content-based image retrieval, and diffusion filtering.

Data availability

All data are included in this paper.

References

Jiang, C., et al.: Image interpolation model based on packet losing network. Multimed. Tools Appl. 79, 25785–25800 (2020)

Karwowska, K., Wierzbicki, D.: Using super-resolution algorithms for small satellite imagery: a systematic review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 15, 3292–3312 (2022)

Zheng, J., et al.: Image interpolation with adaptive k-nearest neighbours search and random non-linear regression. IET Image Proc. 14(8), 1539–1548 (2020)

Guo, A., et al.: An energy-efficient image filtering interpolation algorithm using domain-specific dynamic reconfigurable array processor. Integration 96, 102167 (2024)

Hung, K.-W., Wang, K., Jiang, J.: Image interpolation using convolutional neural networks with deep recursive residual learning. Multimed. Tools Appl. 78, 22813–22831 (2019)

Liu, X., et al.: Image interpolation via graph-based Bayesian label propagation. IEEE Trans. Image Process. 23(3), 1084–1096 (2013)

Baghaie, A., Yu, Z.: Structure tensor based image interpolation method. AEU Int. J. Electron. Commun. 69(2), 515–522 (2015)

Thévenaz, P., Blu, T., Unser, M.: Interpolation revisited [medical images application]. IEEE Trans. Med. Imaging 19(7), 739–758 (2000)

Xin, J., et al.: Wavelet-based dual recursive network for image super-resolution. IEEE Trans. Neural Netw. Learn. Syst. 33(2), 707–720 (2020)

Diana Earshia, V., Sumathi, M.: A guided optimized recursive least square adaptive filtering based multi-variate dense fusion network model for image interpolation. Signal Image Video Process. 18(2), 991–1005 (2024)

Kong, L., et al.: FISRCN: a single small-sized image super-resolution convolutional neural network by using edge detection. Multimed. Tools Appl. 83(7), 19609–19627 (2024)

Amidror, I.: Scattered data interpolation methods for electronic imaging systems: a survey. J. Electron. Imaging 11(2), 157–176 (2002)

Zhang, L., Wu, X.: An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 15(8), 2226–2238 (2006)

Sánchez-García, E., et al.: A new adaptive image interpolation method to define the shoreline at sub-pixel level. Remote Sens. 11(16), 1880 (2019)

Liu, Y., Huang, Q., Sato, K.: Differential filtering algorithm for robot welding seam image enhancement. In: Journal of Physics: Conference Series. IOP Publishing (2020)

Chao, Y., et al.: Adaptive directional cubic convolution for integrated circuit chip defect image interpolation. Int. J. Circuits 15, 1084–1090 (2021)

Gupta, S., Sharma, D.K., Ranta, S.: A new hybrid image enlargement method using singular value decomposition and cubic spline interpolation. Multimed. Tools Appl. 81(3), 4241–4254 (2022)

Guan, H., et al.: Using correction parameters to improve real-time video interpolation in low-cost VLSI implementation. Microelectron. J. 117, 105254 (2021)

Benseddik, M.L., et al.: Interpolation-based reversible data hiding in the transform domain for fingerprint images. Multimed. Tools Appl. 81(14), 20329–20356 (2022)

Deeba, F., et al.: Wavelet integrated residual dictionary training for single image super-resolution. Multimed. Tools Appl. 78, 27683–27701 (2019)

Shivagunde, S., Biswas, M.: Single image super-resolution based on modified interpolation method using MLP and DWT. In: 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI). IEEE (2019)

Dharejo, F.A., et al.: TWIST-GAN: towards wavelet transform and transferred GAN for spatio-temporal single image super resolution. ACM Trans. Intell. Syst. Technol. (TIST) 12(6), 1–20 (2021)

Xue, S., et al.: Wavelet-based residual attention network for image super-resolution. Neurocomputing 382, 116–126 (2020)

Sahito, F., et al.: Wavelet-integrated deep networks for single image super-resolution. Electronics 8(5), 553 (2019)

Hossain, M.S., et al.: Image resolution enhancement using improved edge directed interpolation algorithm. In: 2019 9th IEEE International Conference on Control System, Computing and Engineering (ICCSCE). IEEE (2019)

Wei, Z., Ma, K.-K.: Contrast-guided image interpolation. IEEE Trans. Image Process. 22(11), 4271–4285 (2013)

Ye, W., Ma, K.-K.: Convolutional edge diffusion for fast contrast-guided image interpolation. IEEE Signal Process. Lett. 23(9), 1260–1264 (2016)

Zhong, B., Ma, K.-K., Lu, Z.: Predictor-corrector image interpolation. J. Vis. Commun. Image Represent. 61, 50–60 (2019)

Zhao, Y., Huang, Q.: Image enhancement of robot welding seam based on wavelet transform and contrast guidance. Int. J. Innov. Comput. Inf. Control 18, 149–159 (2022)

Jia, Z., Huang, Q.: Image interpolation with regional gradient estimation. Appl. Sci. 12(15), 7359 (2022)

İncetaş, M.O.: Image interpolation based on spiking neural network model. Appl. Sci. 13(4), 2438 (2023)

Bhateja, V., Misra, M., Urooj, S.: Non-linear polynomial filters for edge enhancement of mammogram lesions. Comput. Methods Progr. Biomed. 129, 125–134 (2016)

Fang, Y., et al.: Stereoscopic image quality assessment by deep convolutional neural network. J. Vis. Commun. Image Represent. 58, 400–406 (2019)

Demirci, R.: Similarity relation matrix-based color edge detection. AEU Int. J. Electron. Commun. 61(7), 469–477 (2007)

Incetaş, M., et al.: Eşik Seçiminin Benzerliğe Dayalı Kenar Belirlemeye Etkisi [The effect of threshold selection on similarity-based edge detection] (2017)

Guvenc, U., Elmas, C., Demirci, R.: Automatic segmentation of color images. J. Polytech. Politeknik Dergisi 11(1), 9–12 (2008)

Incetas, M.O., Demirci, R., Yavuzcan, H.G.: Automatic segmentation of color images with transitive closure. AEU Int. J. Electron. Commun. 68(3), 260–269 (2014)

Tanyeri, U., İncetaş, M.O., Demirci, R.: Similarity based anisotropic diffusion filter. In: 2016 24th Signal Processing and Communication Application Conference (SIU). IEEE (2016)

Aydın, M., et al.: Neonatal jaundice detection system. J. Med. Syst. 40, 1–11 (2016)

Incetas, M.O., Demirci, R., Yavuzcan, H.G.: Automatic color edge detection with similarity transformation. Gazi Univ. J. Sci. 32(2), 458–469 (2019)

Lama, R.K., et al.: Interpolation using wavelet transform and discrete cosine transform for high resolution display. In: 2016 IEEE International Conference on Consumer Electronics (ICCE). IEEE (2016)

FitzHugh, R.: Mathematical models of excitation and propagation in nerve. Biol. Eng. 9, 1–85 (1969)

Nagumo, J., Arimoto, S., Yoshizawa, S.: An active pulse transmission line simulating nerve axon. Proc. IRE 50(10), 2061–2070 (1962)

Izhikevich, E.M.: Simple model of spiking neurons. IEEE Trans. Neural Netw. 14(6), 1569–1572 (2003)

Wu, Q., et al.: A visual attention model based on hierarchical spiking neural networks. Neurocomputing 116, 3–12 (2013)

Kerr, D., et al.: A biologically inspired spiking model of visual processing for image feature detection. Neurocomputing 158, 268–280 (2015)

Kerr, D., et al.: Biologically inspired edge detection. In: 2011 11th International Conference on Intelligent Systems Design and Applications. IEEE (2011)

Kerr, D., et al.: Biologically inspired intensity and range image feature extraction. In: The 2013 International Joint Conference on Neural Networks (IJCNN). IEEE (2013)

Wu, Q., et al.: Edge detection based on spiking neural network model. In: Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence: Third International Conference on Intelligent Computing, ICIC 2007, Qingdao, China, August 21–24, 2007. Proceedings 3. Springer (2007)

İncetaş, M.O.: Anisotropic diffusion filter based on spiking neural network model. Arab. J. Sci. Eng. 47(8), 9849–9860 (2022)

Acknowledgements

The authors would like to thank the anonymous reviewers and the editor for their insightful comments.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK). The research reported in this article received no other funding.

Author information

Contributions

MK conceived the idea, implemented the algorithm, performed the analysis, and wrote the draft of the paper.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent for publication

All authors listed in this paper agreed to its publication in this journal.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kılıçaslan, M. Image interpolation with spiking neural network based pixel similarity. SIViP (2024). https://doi.org/10.1007/s11760-024-03362-3

DOI: https://doi.org/10.1007/s11760-024-03362-3