Abstract

In this paper we present a novel data-driven subsampling method that can be seamlessly integrated into any neural network architecture to identify the most informative subset of samples within the original acquisition domain for a variety of tasks that rely on deep learning inference from sampled signals. In contrast to existing methods that require signal transformation into a sparse basis, expensive signal reconstruction as an intermediate step, and that can support a single predefined sampling rate only, our approach allows the sampling inference pipeline to adapt to multiple sampling rates directly in the original signal domain. The key innovations enabling such operation are a custom subsampling layer and a novel training mechanism. Through extensive experiments with four data sets and four different network architectures, our method demonstrates a simple yet powerful sampling strategy that allows the given network to be efficiently utilized at any given sampling rate, while the inference accuracy degrades smoothly and gradually as the sampling rate is reduced. Experimental comparison with state-of-the-art sparse sensing and learning techniques demonstrates competitive inference accuracy at different sampling rates, coupled with a significant improvement in computational efficiency, and the crucial ability to operate at arbitrary sampling rates without the need for retraining.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Advances in machine learning (ML) and miniaturization of electronics have created favorable conditions for rapid proliferation of data sensing and processing. It is expected that 30 billion sensor-enabled Internet of Things (IoT) devices will be deployed worldwide by 2030, providing a basis for novel applications in areas ranging from precision agriculture, over personal healthcare, to industrial automatization. However, sensor sampling is one of the most energy-hungry aspect of ubiquitous computing [1], while on-device ML can incur further energy burden on battery-powered devices. Consequently, there is an immediate need for improving the efficiency of the sampling–learning pipeline by reducing the amount of sensed and process data to the minimum necessary for accurate ML inference.

According to the Nyquist theorem, to be reconstructed, a signal needs to be sampled at rates at least twice as high as the highest frequency contained in it. More recently, however, reconstruction of sparse real-world signals sampled at sub-Nyquist rates was demonstrated through the concept termed compressive sensing (CS) [2, 3]. Yet, the benefits of CS do not come for free, as CS remains challenging to implement. First, CS requires that the sparsifying basis of the signal (i.e. the projection space within which the signal is fully represented with a small number of non-zero values) is known in advance. Second, reconstructing the signal from sparse measurements implies solving an NP hard problem usually through iterative solutions that are computationally demanding.

Realizing that high-level ML inference, not signal reconstruction, is the primary reason for sensing, recent work proposes the merging of CS with deep learning (DL) models to deliver a complete sensing–learning pipeline and jointly learn the appropriate sparse sampling patterns and the downstream inference model [4]. While such short-circuiting of CS and DL inference provides clear benefits by avoiding the costly intermediate reconstruction of the signal, the existing solutions are still plagued with practical problems. First, most of the CS–DL sampling strategies are only applicable to signals that are already transformed to their sparsifying basis and not to the original signal samples. Since such transformation often requires that full resolution samples are available, the actual amount of physical sampling is not reduced. Second, the existing CS–DL approaches support a fixed sparse sampling rate specified before model training. On the other hand, sensing and ML are increasingly deployed in highly dynamic contexts where the properties of the sensed phenomena and, thus, the amount of sensing that is necessary for successful ML inference, vary.

In this work, we address the above limitations and, building on the CS theory and the actual real-world applications’ requirements, introduce new DL functionalities. We propose a data-driven subsampling scheme that provides efficient sparse sampling for various data domains and downstream DL network architecture. In addition, we develop a CS–DL training procedure that enables on-the-fly adaptation of the signal sampling rate. Our approach achieves this without the need for storing multiple DL models in memory and incurs graceful degradation of DL inference accuracy, thus opens opportunities for balancing inference accuracy and resource usage, which is highly beneficial for lightweight IoT sensor-enabled devices.

To summarize, the contributions of our work include:

-

We design a novel subsampling layer whose weights are trained to uncover the most task-informative subset of samples in the original signal acquisition domain;

-

We develop a training method that results in a rate-flexible CS–DL pipeline;

-

We evaluate our approach on four different datasets with four different neural network architectures and demonstrate that it affords graceful monotonic reduction in DL inference with reduced sampling rates;

-

We show that our solution delivers performance comparable to that of a state-of-the-art fixed-rate CS solution, while also enabling sensing rate adaptability.

2 Related work

Compressive sensing (CS), often using sampling rates a few orders of magnitude lower than the Nyquist rate, is poised to have significant impact in areas where the rate reduction implies reduced resource usage and accelerated decision making. Theoretically, CS is founded in the restricted isometry property of the sampling matrix, i.e., its ability to preserve information when sampling sparse vectors. Consequently, research has focused on identifying suitable sampling matrices and reconstructing original signals from sparse measurements. Deep learning (DL) naturally complements CS for both of the above tasks, while also enabling direct learning from sparse samples [5]. Thus, in the rest of the section we focus on research that successfully merges CS and DL.

CS–DL integration allows a joint search for the sampling matrix and the appropriate DL-based reconstruction pipeline. Bahadir et al. [6] jointly trained a U-net architecture with a Bernoulli matrix that defines the sample selection pattern in the magnetic resonance imaging (MRI). The training of the binary elements of the sampling matrix was performed through a sigmoid based thresholding, while the sampling procedure was performed in the discrete Fourier domain, and not directly in the original pixel domain. In practice, this requires additional steps such as transforming the data into Fourier space and back to the original domain. These transformations, but especially the derivation of the correct sparse vector of Fourier coefficients, are computationally prohibitive and are worthwhile in areas such as MRI where the reduction in the number of measurements is significant [7]. Huijben et al. [8] also tackled the CS–DL pipeline optimization from a probabilistic point of view. The authors used a relaxation of the Gumbel-max re-parametrization trick, that enabled them to sample from a categorical probability distribution inside a neural network. Unlike the previous mentioned work, this can be applied to both reconstruction and classification tasks, and was validated in both the pixel domain and the spatial frequency domain. However, this method too was only assessed on image data, and only aimed for a single, reduced subsampling rate, predefined before the training.

On-the-fly adaptation of the acquisition pattern is important in applications where the sensed data, the quality of inference expectations, or the available energy/computational resources vary over time. This is often the case in mobile computing, where, for instance, for human activity recognition from accelerometer samples we can use fewer samples if a person is conducting a simple activity (e.g. sitting), then if a person is conducting a more complex activity (e.g. walking up the stairs). Pineda et al. proposed sequential acquisition of samples guided by reinforcement learning [9]. Although in this case the sampling procedure can be adapted to the data and thus lead to better task performance for the same rate compared to static, non-adaptive sampling schemes, the utility is limited by the iterative nature of the solution—only one sample is acquired at a time, used for DL inference and for deciding the next sample to be acquired, and the whole process is iterated until the desired sampling rate is reached.

Non-iterative CS–DL pipeline that operates over multiple sampling rates was first proposed by Lohit et al. [10]. It was based on a three-steps training process: first, for the highest desired sampling rate, second, for the lowest sampling rate (all other parameters frozen) and, finally, for an a additional sampling rate between the highest and the lowest (with the rest of parameters frozen). In another work [11], more closely related to our research, the authors also address the rate adaptivity aspect in the CS–DL context. Their solution is to create measurement vectors of various lengths, simulating samples acquired at different measurement rates, and use them to re-train a neural network with a fixed input layer size. Unlike [11], where the sensing matrix is a fixed binary one, our approach is based on a data-driven, learned sampling scheme. In addition, the structure of the measurement vectors also differs: in [11] these vectors are zero-padded at the end to compensate for the size mismatch between their variable length and the fixed input size of the inference network, while in our case, the measurement vectors have zero values in the locations corresponding to the skipped samples.

Our previous work [12] tackles joint sampling and inference training of the CS–DL pipeline. Yet, different from the approach presented in this paper, the sampling was implemented with a fully connected layer with a kernel constraint limiting the training to diagonal matrix values with the remaining elements set to zero. Matching the input size and the subsampling layer dimensionality, as we do in this work, drastically reduces the computation complexity, especially for large 1-dimensional inputs. In addition, in this paper we improved the learning of a sparse sample selection during the training by adding the \(l_1\)-norm constraint on the weights associated with each input sample. Finally, in this paper we experimentally confirm general applicability of variable rate CS–DL, while the analysis in [12] remains confined to the activity recognition domain.

3 Rate-adaptable sensing-learning pipeline

In this section we present our approach for enabling controlled reduction in signal sampling rate in situations where such signal is further used for DL-based inference. The key novelties of our approach are the subsampling technique that relies on a lightweight subsampling layer for a soft selection of the most important points in a given signal and a two-step training method ensuring that the downstream DL model can indeed provide high-quality inference on the sub-sampled signal.

Subsampling scheme and inference network exemplified on an EEG signal. Each data sample from a 30 s window frame sampled at 100 MHz is encoded by the subsampling layer, which was trained to “learn” the contribution each sample brings to the classification task. The output of the subsampling layer is connected to the inference subnetwork, here implemented as a convolutional neural network, yet any kind of architecture can be used

3.1 Subsampling technique

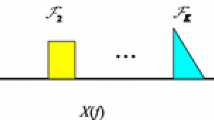

For a given inference task, we jointly train the DL inference model and the subsampling layer that enforces the optimal subsampling scheme \(A \in \mathcal {R}^N\), where N is the total number of samples acquired (Fig. 1). To achieve this, the subsampling layer maps, one-on-one, each of the input’s \(x \in \mathcal {R}^N\) samples to the corresponding layer weights, where the layer weights are trained with the \(l_1\)-norm constraint. The \(l_1\)-norm is known to enforce sparsity by nullifying less important features or, in this case, suppressing certain input samples, and is often used to alleviate over-fitting and/or to perform feature selection. The novelty of our approach comes from applying the \(l_1\)-norm constraint at the input, as a mean of “sample selection”, without explicitly specifying the number of samples to be retained. In this manner, A enables a generic sparse selection of input samples that are optimal for the given DL inference task. The process of acquiring the optimal sparse subset of samples y from the original input x can be written as:

where the function f can be any neural network, parameterized with the weights \(\theta \); \(\mathcal {L}\) defines the loss function of the network, \(\hat{z}\) are the network outputs, z the actual labels, and A the subsampling layer’s weights. The sparsity enforcing coefficient \(\lambda \) is a penalty term to the loss function. The magnitude of this coefficient is determined by the desired baseline sub-sampling sparsity. In practice, a very large value of \(\lambda \) constrains the sampling coefficients towards zero, which may prevent training convergence.

The \(l_1\)-norm constrained training naturally supports non-uniform sub-sampling beyond the original baseline sparsity, as it emphasizes the difference among the impact of individual input samples on the final inference task. From the learned sampling scheme, we can now distill sampling patterns corresponding to various percentages of selected input samples. The percentage of retained samples (M) from all the original input samples (N) is termed the sampling rate \(r = M/N \cdot 100\%\) and various sampling rates can be achieved by pruning the elements of A according to the increasing weights’ magnitude.

3.2 Two-step training algorithm

One of the major downsides of most DL-based approaches for CS [13, 14] comes from the fact that once trained, a network is only valid for a single sampling rate, while many applications benefit from adjusting the sampling rates during runtime, according to the context’s requirements. For instance, the optimal sampling rate for data coming from a sensor monitoring the vibrations of a bridge significantly fluctuates with time, according to the rate of passing cars. Similarly, certain human activities can be inferred at very low sampling rates, while other, more complex require higher sampling rates. As ML increasingly gets deployed on low-resource edge devices, the deployment of multiple DL models, each trained for a particular sampling rate, becomes impractical. To overcome this, we devise a training method that results in a single sampling–learning pipeline that is able to seamlessly handle data sampled at different sampling rates.

Our two-step training technique consists of a pre-training step, where the subsampling pattern is learned, which is followed by the fine-tuning step, where the inference model is adapted to various sampling rates (Algorithm 1). To achieve different sampling rates, we set different percentages of elements from the previously learned subsampling pattern to zero, leaving all the other elements unchanged. The upper and lower limits of the percentages of zeroed elements are given by the specifications of each particular application (i.e. which sub-sampling rates it should support), but also on the inherent sparsity of the data (some data sets allow very low subsampling rates, while others support only higher sampling rates).

The training algorithm fine tunes the model for the given subsampling rates, where the number of these rates is defined at the training time. Since the training complexity correlates with the number of rates used for fine tuning, using a few intermediate sampling rates between the highest and the lowest rate is suggested. Furthermore, our experiments demonstrated that fine-tuning over a handful of rates is often sufficient for ensuring high accuracy over the entire range of desired subsampling rates.

4 Experimental results

We assess the effectiveness of our approach across various architectures and data domains that align with our primary objective of reducing input samples to enhance resource efficiency. However, it is important to acknowledge that certain architectures, such as large language models (LLMs), may present challenges due to their complex sequential processing (that makes input subsampling difficult as it might drastically change the meaning of the input) and their highly sophisticated training, and careful consideration of hyperparameters. All these challenges hinder their integration with other network architectures. Conversely, LLMs typically do not need input data reduction and are less prevalent in resource-constrained environments, falling beyond the primary focus of our approach.

4.1 Spoken keyword recognition

Spoken keyword recognition (SKR) is a critical affordance enabling interaction with ubiquitous computing devices. The unpredictability of interaction requires that microphone sensing is on at almost all times, yet, the density of samples needed for successful SKR varies with different contextual factors. We therefore assess whether our solution can provide adaptable sensing upon which context-aware rate adaptation for SKR would be built.

We focus on the 8 keywords (“up”, “down”,“left”, “right”, “go”, “stop”, “yes” and “no”) subset of the Google Speech Commands dataset [15], consisting of 8000 audio clips of 1 s or less sampled at 16 kHz. For the inference task we used a convolutional recurrent network with attention mechanism [16]. This network at the input expects pre-computed spectrograms of the audio waveforms and has a dedicated layer that computes the Mel spectrograms, before feeding them to the downstream model. At the ingress of this network, before the computation of the spectrograms, we append the subsampling layer. Unlike common CS approaches that target sub-sampling from a sparsifying domain (e.g. the Fourier domain), we identify the most task-informative samples in the original sampling domain. Such a strategy is much more efficient, since it can early on reduce the amount of data that flows through the pipeline (example in Fig. 2).

We trained the entire pipeline (consisting of the subsampling layer, the Mel spectrogram layer and the inference model), for a maximum of 100 epochs, using the Adam optimizer, with the initial learning rate of 0.001 and 0.4 decay every 10 epoch. The training converged after 28 epochs.

In case no additional subsampling is performed, the inference accuracy of our pipeline is 93% on previously unseen utterances, which is very close to the 94% accuracy obtained using the inference model only (without the subsampling layer) on the same dataset. Thus, the addition of the subsampling layer does not incur a substantial performance penalty.

After the initial training phase, the accuracy of the model ranges from 93% when using all the samples (100% sampling rate) to 70% when using half the samples, to just 32% when using only 10% of the samples (Fig. 3 orange points). Once the novel fine-tuning training step is conducted for ten different sampling rates between 10 and 100%, the slope of the accuracy-sampling rates trade-off is much smoother, and the model might be used for any desired sampling rate, with an accuracy between 91 and 65% (Fig. 3 blue points).

4.2 Environmental sound classification

We then evaluate our solution on a related domain of environmental sound classification. We use the ESC-10 dataset [17], an audio annotated collection of 400 5-s-long recordings of various sound events, sampled at 44.1 kHz and labeled as belonging to one of the 10 equally sized classes: “dog barking”, “rain”, “sea waves”, “baby crying”, “clock ticking”, “person sneezing”, “helicopter”, “chainsaw”, “rooster” or “fire crackling”. The dataset is arranged into 5 uniformly sized cross-validation folds ensuring that clips originating from the same initial source are always contained in a single fold. We used the first three folds for training, the fourth fold for validation, and the fifth for testing. In addition, to reduce the risk of over-fitting due to the limited size of this dataset, we augmented the data by adding Gaussian white noise and time stretching.

For the inference we construct a convolutional network consisting of a sequence of three blocks of CNNs with ReLU activations and MaxPooling, followed by two dense layers and a Softmax activation. As in the case of keyword recognition, this model also requires pre-computed features (log Mel spectrograms) of the audio waveforms, thus, after the subsampling layer and before the inference model, we introduce a dedicated layer for computing the Mel spectrograms.

We train the entire pipeline for a maximum of 100 epochs, with an Adam optimizer and the initial learning rate of 0.001 that is reduced each time the validation accuracy plateaus. The training is stopped if no improvement is observed over 10 consecutive epochs. After training we refined just the inference sub-model for sampling rates of 20%, 40%, 60%, 80% and 100%. As discussed in Sect. 3.2 our approach does not mandate refinement on all the rates that will actually be used during inference, which we experimentally validate here.

Figure 4 illustrates that the accuracy of the model ranges from 74% when using all the samples to 60% for half the samples and 50% when using just 10% of the samples. For comparison, the accuracy of the original inference model is 76%. Notably, the accuracy gracefully degrades with reduced sampling rates, even for rates that were not explicitly used during the model refinement (e.g. 10%, 30%, etc.).

4.3 EEG classification

Wireless electroencephalogram (EEG)-enabled wearables could revolutionize a range of fields related to human-computer interaction, healthcare, and accessibility, to name a few. Yet, as untethered functioning of these devices requires them to be battery powered, it is crucial that the sampling and processing are kept at a minimum. We now examine the potential of our approach for enabling rate-adaptive sampling and learning for EEG signal processing.

We use Sleep EDF [18], a publicly available EEG dataset containing recordings of 20 healthy subjects, obtained using 2 scalp-EEG signals channels sampled at 100Hz. The inputs are labeled with one of the 5 sleep cycles. We split the data into train and test subsets so that no study subject is in both at the same time. For sleep classification on this data, we use the DeepSleepNet architecture [19] that consists of two CNNs (each having four convolutional layers and two max-pooling layers) applied independently on the same input signal to extract temporal and frequency information, respectively. These CNNs are later combined and fed to the residual learning part of the network comprising two bidirectional-LSTMs and a shortcut connection from the CNNs to the output of the LSTMs. After training this model on the Sleep EDF (on the Fpz-Cz channels) dataset with an Adam optimizer and a learning rate of 0.0001, with a stopping criteria if no improvement was made over 10 consecutive epochs, we achieve an overall inference accuracy of 85%.

To this architecture we add the subsampling layer that takes as an input a sequence of raw EEG recordings (30 s each) and learns the optimal subsampling scheme. The whole pipeline is trained in an end-to-end manner using the Adam optimizer with an initial learning rate of 0.0001 that is reduced each time the validation accuracy plateaus. After training, the accuracy of the subsampling–inference pipeline is 84%.

After the initial training, we fine-tuned the inference model for subsampling rates of 20%, 40%, 60% and 80%. At the end of the training and fine-tuning the accuracy of the model varies between 83% for full scale sampling, to 81% at half-scale sampling and up to 57% for 10% sampling rate (Fig. 5). The minimal drop in accuracy between the highest and the half-scale sampling rate is particularly promising for wearable applications.

4.4 Image classification

We argue that current camera technology renders image classification unsuitable for CS-based optimization, if resource savings on the sensing front are desired. Namely, camera sensors are not designed to record a subset of pixels—they either take the full frame or none. Consequently, any post-acquisition CS-based processing has at most very limited impact on the efficiency of the overall sensing-learning pipeline. Nevertheless, most of the sub-sampling approaches presented in the literature were validated exclusively on image datasets, thus, for the sake of direct comparison we also evaluate our approach on the image classification task.

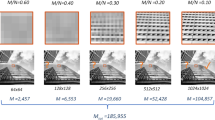

We use the MNIST dataset [20] consisting of 70,000 grayscale images of handwritten digits of size 28 \(\times \) 28 pixels. We split the dataset into 60,000 training, 5000 validation, and 5000 test images, and train a general ConvNet architecture comprising three convolutional layers, followed by BatchNormalizations and ReLUs, a Pooling layer, and a fully connected layer. The model was trained for 20 epochs using the Adam optimizer with the initial learning rate of 0.0002 that is reduced each time the validation accuracy plateaus. An example of the learned sampling scheme versus a sample input is shown in Fig. 6.

We now compare our approach to deep probabilistic subsampling (DPS), a recently proposed data-driven method for subsampling and inference [8]. Unlike alternatives, DPS is among the very few methods designed to support DL-based inference, not only sparse signal reconstruction, and was successfully validated in the original signal sampling domain (in this case—on raw pixels). Thus, unlike the majority of alternatives, it does not require for data to be transformed to another sparsifying domain (e.g. Fourier). In addition, DPS exhibits the highest inference accuracy among alternatives (e.g. [6]). A major difference between DPS and our method, however, is that our approach enables operation over dynamically switchable sampling rates. With DPS multi-rate adaptivity cannot be achieved since the sampling rate defines the size of the first layer of the DL model used for the inference, thus it must be specified before the training. While this precludes direct comparison between DPS and our approach, we nevertheless compare multiple DPS models, each trained at different rate, with our approach that allows rate adaptation (termed “Multirate model” in Fig. 7). In addition, to single out the impact of rate-specific training, we also re-train the DL model in our approach separately for each sampling rate (termed “Individual models” in Fig. 7). Note that these individual models still allow variable rate sampling, since the subsampling scheme remains the same. Yet, at each point in the graph we evaluate the model trained for that particular sampling rate.

Figure 7 demonstrates that our approach enables multi-rate operation with the minimal accuracy penalty (green line). In addition, once “focused” to a particular sampling rate, our approach outperforms DPS for all rates between 10 and 100%. The only sampling rates for which DPS performs better are those under 10%. We suspect that the threshold for which the trend reverses and the more aggressive strategy outperforms the more relaxed one probably depends on the sparsity of the data—the sparser the data, the lower the threshold.

The reason behind DPS’s superior performance at very low sub-sampling rates comes from the fact that DPS implements a more aggressive sparsity enforcing strategy, by defining the desired sampling rate in advance, before training, and re-enforcing it throughout the training process. Our approach, on the other hand relies on a more relaxed sparsity promoting strategy based on the \(l_1\)-norm regularization and learns a more generic sparse sampling mask starting from the original dimensionality of the input data vector, only to zero-out a given percent of the least important samples for the inference task after the training.

We further investigate the learned sampling schemes for both DPS and our approach on the MNIST dataset, at a sampling rate of 3%. Both approaches learn a scatter distribution of pixels (Fig. 8), considered to be the most informative for classifying the MNIST images. Yet, the perspective from which these pixels are considered important differs between the two approaches. While DPS focuses on pixels in the center of the image, our approach focuses on pixels placed at the edges of the numbers, and thus produces a slightly more scattered sampling pattern. The reason behind the different subsampling patterns could be connected to the nature of the subsampling strategy that revolves around learning the most informative pixels for classification, though the inference subnet could also play a role—we opted for a convolutional architecture, while DPS uses a fully connected one, and each architecture produces different representations of the objects they are trained on.

Finally, our approach also brings advantages such as its simplicity, its general-applicability, and increased computational efficiency. The training of a single DPS model able to perform inference at a sampling rate of 75% took over 24 h on a Intel(R) Core(TM) i9 workstation running at 5.1 GHz and having 64GB DDR4 memory at 3600 MHz, while training of the corresponding model with our approach took less than 1 h.

5 Conclusions

In this work we propose a simple, yet powerful subsampling strategy that can be jointly trained with many kinds of DL architectures to uncover the most informative subset of samples in the original acquisition domain. We also introduce a novel two-step training mechanism to enable rate-flexible sensing and inference. Extensive evaluation of our approach on four different domains and datasets and a comparison with a state-of-the art sensing-inference technique demonstrates that our approach can achieve performance comparable to some of the most aggressive subsampling strategies, while also bringing advantages such as reduced computation overhead and runtime adaptability of the sampling rate. To facilitate further research in this domain, we make our solution programming code (and additional experimental results) available at https://gitlab.fri.uni-lj.si/lrk/add-subsampling.

References

Pramanik, P.K.D., et al.: Power consumption analysis, measurement, management, and issues: a state-of-the-art review of smartphone battery and energy usage. IEEE Access 7, 182113–182172 (2019)

Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Adler, A., Elad, M., Zibulevsky, M.: Compressed learning: a deep neural network approach (2016). arXiv preprint arXiv:1610.09615

Machidon, A.L., Pejović, V.: Deep learning for compressive sensing: a ubiquitous systems perspective. Artif. Intell. Rev. 56, 3619–3658 (2023)

Bahadir, C.D., Dalca, A.V., Sabuncu, M.R.: Learning-based optimization of the under-sampling pattern in MRI. In: Paper Presented at IPMI 2019, Hong Kong, China, 2–7 (2019)

Brunton, S.L., Kutz, J.N.: Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control. Cambridge University Press, Cambridge (2022)

Huijben, I., Veeling, B.S., van Sloun, R.J.: Deep probabilistic subsampling for task-adaptive compressed sensing. In: Paper Presented at the 8th International Conference on Learning Representations, ICLR 2020 (2020)

Pineda, L., Basu, S., Romero, A., Calandra, R., Drozdzal, M.: Active MR k-space sampling with reinforcement learning. In: Paper Presented at the 23rd International Conference on Medical Image Computing and Computer Assisted Intervention, Lima, Peru (2020)

Lohit, S., Singh, R., Kulkarni, K., Turaga, P.: Rate-adaptive neural networks for spatial multiplexers (2018). arXiv preprint arXiv:1809.02850

Xu, Y., Liu, W., Kelly, K.F.: Compressed domain image classification using a dynamic-rate neural network. IEEE Access 8, 217711–217722 (2020)

Machidon, A., Pejović, V.: Enabling resource-efficient edge intelligence with compressive sensing-based deep learning. In: Paper Presented at ACM Computing Frontiers, 17, 2022. Italy, Turin (2022)

Ouchi, S., Ito, S.: Reconstruction of compressed-sensing MR imaging using deep residual learning in the image domain. Magn. Reson. Med. Sci. 20, 190 (2021)

Yang, Y., Sun, J., Li, H., Xu, Z.: ADMM-CSNET: a deep learning approach for image compressive sensing. IEEE Trans. Pattern Anal. Mach. Intell. 42, 521–538 (2018)

Warden, P.: Speech commands: a dataset for limited-vocabulary speech recognition (2018). arXiv preprint arXiv:1804.03209

De Andrade, D.C., Leo, S., Viana, M.L.D.S., Bernkopf, C.: A neural attention model for speech command recognition (2018). arXiv preprint arXiv:1808.08929

Piczak, K.J.: ESC: Dataset for environmental sound classification. In: Paper Presented at ACM Multimedia, October 13, 2015. Brisbane, Australia (2015)

Goldberger, A.L., et al.: Physiobank, physiotoolkit, and physionet: components of a new research resource for complex physiologic signals. Circulation 101, e215–e220 (2000)

Supratak, A., Dong, H., Wu, C., Guo, Y.: DeepSleepNet: a model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 1998–2008 (2017)

Deng, L.: The MNIST database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 29, 141–142 (2012)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Machidon, A.L., Pejović, V. Adaptive data-driven subsampling for efficient neural network inference. SIViP (2024). https://doi.org/10.1007/s11760-024-03223-z

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11760-024-03223-z