Abstract

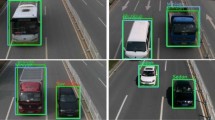

Accurate, fast vehicle detection is important for building intelligent transportation systems in the era of big data. This paper proposes an improved lightweight YOLOX algorithm for real-time vehicle detection. Compared with the original network, the new algorithm improves both detection speed and accuracy while using fewer parameters. First, following GhostNet, we redesign the backbone feature-extraction network to be lightweight, which significantly reduces the number of network parameters, the training cost, and the inference time. Second, we introduce the α-CIoU loss function, which improves bounding box (bbox) regression accuracy and accelerates model convergence. Experimental results show that the improved algorithm reaches a mAP of up to 99.21% on the BIT-Vehicle dataset with 41.2% fewer network parameters and 12.7% higher FPS than the original network, demonstrating the effectiveness of the proposed method.
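To make the α-CIoU term concrete, the following is a minimal sketch for a single pair of boxes. It follows the general form of the α-IoU family (He et al., 2021), in which the IoU term, the center-distance penalty, and the aspect-ratio penalty of CIoU are each raised to a power α. The function name `alpha_ciou_loss`, the `(x1, y1, x2, y2)` box format, the default `alpha=3.0`, and the small `eps` stabilizer are assumptions made for illustration; they are not taken from this article's implementation.

```python
import math


def alpha_ciou_loss(box, gt, alpha=3.0, eps=1e-9):
    """Illustrative alpha-CIoU loss for one predicted box and one ground-truth box.

    Boxes are (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (assumed format).
    """
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    union = w * h + gw * gh - inter + eps
    iou = inter / union

    # Squared center distance, normalized by the squared diagonal
    # of the smallest box enclosing both boxes.
    enclose_w = max(x2, gx2) - min(x1, gx1)
    enclose_h = max(y2, gy2) - min(y1, gy1)
    c2 = enclose_w ** 2 + enclose_h ** 2 + eps
    rho2 = ((x1 + x2 - gx1 - gx2) ** 2 + (y1 + y2 - gy1 - gy2) ** 2) / 4.0

    # Aspect-ratio consistency term v and its trade-off weight beta (as in CIoU).
    v = (4.0 / math.pi ** 2) * (
        math.atan(gw / (gh + eps)) - math.atan(w / (h + eps))
    ) ** 2
    beta = v / (1.0 - iou + v + eps)

    # Each CIoU component is raised to the power alpha; alpha = 1 gives plain CIoU.
    return 1.0 - iou ** alpha + (rho2 / c2) ** alpha + (beta * v) ** alpha


if __name__ == "__main__":
    gt_box = (0.0, 0.0, 10.0, 10.0)
    for pred in [(0.0, 0.0, 10.0, 10.0), (2.0, 2.0, 12.0, 12.0), (20.0, 20.0, 30.0, 30.0)]:
        print(pred, round(alpha_ciou_loss(pred, gt_box), 4))
```

In this sketch a perfect match gives a loss close to zero, and the loss grows as the predicted box moves away from the ground truth. Setting `alpha=1.0` reduces the expression to the standard CIoU loss, which is a convenient check when comparing the two.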

Availability of data and materials

This article uses a public dataset, the BIT-Vehicle dataset.

Funding

This study was funded by the Chongqing Municipal Education Commission Science and Technology Research Project (KJQN202001523), the 2021 Cooperation Project between Chongqing Municipal Undergraduate Universities and Chinese Academy of Sciences (HZ2021015), and the Chongqing University of Science and Technology Youth Science Fund Project (CKRC2019042).

Author information

Contributions

Yu Anning provided the experimental platform and reviewed the paper; Xiong Cong wrote the paper and carried out the experiments; Senhao Yuan drew Figs. 1, 3, 4, 5, 6 and 7; Xinghua Gao produced all tables.

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable.

About this article

Cite this article

Xiong, C., Yu, A., Yuan, S. et al. Vehicle detection algorithm based on lightweight YOLOX. SIViP 17, 1793–1800 (2023). https://doi.org/10.1007/s11760-022-02390-1
