Abstract

The coarse-to-fine strategy has been widely used in the architecture design of single-image defogging networks. Conventional methods stack sub-networks that take multi-scale input images, so that image sharpness improves gradually from the bottom sub-network to the top one; this stacking inevitably loses image detail. Toward a fast and accurate defogging network design, we revisit the coarse-to-fine strategy and present a multi-input and multi-scale U-Net (MIMS-UNet). The MIMS-UNet has two distinct features. First, its single encoder takes multi-scale images as multiple inputs, which increases the amount of computation but greatly improves network performance. Second, encoder-decoder structures with context blocks are used to capture context information and recover more details. Experimental results show that the proposed method performs well in both quantitative and visual evaluations. Compared with existing methods, the proposed network achieves good defogging results and effectively avoids color distortion in the defogged image.
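As a rough illustration of what "multi-input and multi-scale" can mean in practice, the sketch below builds a pyramid of progressively downsampled copies of one foggy image, which a single encoder could then consume at its successive levels. This is not the authors' implementation: the number of scales (3), the factor-of-2 downsampling by 2x2 averaging, and the function names `downsample_2x` and `build_input_pyramid` are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np


def downsample_2x(image: np.ndarray) -> np.ndarray:
    """Halve height and width by averaging each 2x2 block (H, W, C layout)."""
    h, w, c = image.shape
    h2, w2 = h // 2, w // 2
    # Crop odd trailing rows/columns so the image splits evenly into 2x2 blocks.
    cropped = image[: h2 * 2, : w2 * 2]
    return cropped.reshape(h2, 2, w2, 2, c).mean(axis=(1, 3))


def build_input_pyramid(image: np.ndarray, num_scales: int = 3) -> list:
    """Return [full, 1/2, 1/4, ...] resolution copies of the input image.

    num_scales=3 is an assumed value, not taken from the paper.
    """
    pyramid = [image]
    for _ in range(num_scales - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


# Hypothetical foggy input: random values standing in for an RGB image.
hazy = np.random.default_rng(0).random((64, 48, 3))
pyramid = build_input_pyramid(hazy)
shapes = [level.shape for level in pyramid]
```

In a multi-input design of this kind, the full-resolution image would typically feed the first encoder level and each coarser copy would be merged into the matching deeper level; how MIMS-UNet performs that merge is not stated in the abstract.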

References

Mccartney, E.J., Hall, F.F.: Optics of the atmosphere: scattering by molecules and particles. Phys. Today 30, 76–77 (1976). https://doi.org/10.1063/1.3037551

Narasimhan, S.G., Nayar, S.K.: Vision and the atmosphere. Int. J. Comput. Vision 48, 233–254 (2002). https://doi.org/10.1023/A:1016328200723

Li, Y., You, S., Brown, M.S., Tan, R.T.: Haze visibility enhancement: a survey and quantitative benchmarking. Comput. Vis. Image Underst. 165, 1–16 (2017). https://doi.org/10.1016/j.cviu.2017.09.003

Berman, D., Treibitz, T., Avidan, S.: Non-local image dehazing. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1674–1682 (2016). https://doi.org/10.1109/CVPR.2016.185

Zhu, Q., Mai, J., Shao, L.: A fast single image haze removal algorithm using color attenuation prior. In: IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3522–3533 (2015). https://doi.org/10.1109/TIP.2015.2446191

Xu, H., Zhai, G., Wu, X., Yang, X.: Generalized equalization model for image enhancement. In: IEEE Transactions on Multimedia, vol. 16, no. 1, pp. 68–82 (2014). https://doi.org/10.1109/TMM.2013.2283453

Jiang, B., Woodell, G.A., Jobson, D.J.: Novel multi-scale retinex with color restoration on graphics processing unit. J. Real-Time Image Proc. 10, 239–253 (2015). https://doi.org/10.1007/s11554-014-0399-9

Xu, H.T., Zhai, G.T., Wu, X.L., et al.: Generalized equalization model for image enhancement. IEEE Trans. Multimed. 16(1), 68–82 (2014)

Liu, H.B., Yang, J., Wu, Z.P., et al.: A fast single image dehazing method based on dark channel prior and Retinex theory. Acta Automatica Sinica 41(7), 1264–1273 (2015). https://doi.org/10.16383/j.aas.2015.c140748

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011). https://doi.org/10.1109/TPAMI.2010.168

Tan, R.T.: Visibility in bad weather from a single image. In: 2008 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2008).https://doi.org/10.1109/CVPR.2008.4587643

Fattal, R.: Dehazing using color-lines. ACM Trans. Graph. (TOG) 34, 1–14 (2014). https://doi.org/10.1145/2651362

Tarel, J., Hautière, N.: Fast visibility restoration from a single color or gray level image. In: 2009 IEEE 12th International Conference on Computer Vision, pp. 2201–2208 (2009). https://doi.org/10.1109/ICCV.2009.5459251

Meng, G., Wang, Y., Duan, J., Xiang, S., Pan, C.: Efficient image dehazing with boundary constraint and contextual regularization. In: 2013 IEEE International Conference on Computer Vision, pp. 617–624 (2013).https://doi.org/10.1109/ICCV.2013.82

Li, Y., Tan, R.T., Brown, M.S.: Nighttime Haze removal with glow and multiple light colors. In: 2015 IEEE International Conference on Computer Vision (ICCV), pp. 226–234 (2015). https://doi.org/10.1109/ICCV.2015.34

Nishino, K., Kratz, L., Lombardi, S.: Bayesian defogging. Int. J. Comput. Vis. 98, 263–278 (2012). https://doi.org/10.1007/s11263-011-0508-1

Yang, D., Sun, J.: Proximal Dehaze-Net: a prior learning-based deep network for single image dehazing. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision—ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol. 11211. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_43

Zhu, Q., Mai, J., Shao, L.: A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 24(11), 3522–3533 (2015). https://doi.org/10.1109/TIP.2015.2446191

Cai, B., Xu, X., Jia, K., Qing, C., Tao, D.: DehazeNet: an end-to-end system for single image haze removal. IEEE Trans. Image Process. 25(11), 5187–5198 (2016). https://doi.org/10.1109/TIP.2016.2598681

Ren, W. et al.: Gated fusion network for single image dehazing. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3253–3261 (2018).https://doi.org/10.1109/CVPR.2018.00343

Ren, W., Liu, S., Zhang, H., Pan, J., Cao, X., Yang, M.H. (2016). Single image dehazing via multi-scale convolutional neural networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) Computer Vision—ECCV 2016. ECCV 2016. Lecture Notes in Computer Science, vol. 9906. Springer, Cham. https://doi.org/10.1007/978-3-319-46475-6_10

Zhang, H., Patel, V.M.: Densely connected pyramid dehazing network. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3194–3203 (2018). https://doi.org/10.1109/CVPR.2018.00337

Qu, Y., Chen, Y., Huang, J., Xie, Y.: Enhanced Pix2pix dehazing network. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8152–8160 (2019). https://doi.org/10.1109/CVPR.2019.00835

Li, B., Peng, X., Wang, Z., Xu, J., Feng, D.: AOD-Net: all-in-one dehazing network. In: 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, pp. 4780–4788 (2017). https://doi.org/10.1109/ICCV.2017.511

Dong, H. et al.: Multi-Scale boosted dehazing network with dense feature fusion. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2154–2164 (2020). https://doi.org/10.1109/CVPR42600.2020.00223

Cho, S.-J., Ji, S.-W., Hong, J.-P., Jung, S.-W., Ko, S.-J. (2021). Rethinking coarse-to-fine approach in single image deblurring. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 4641–4650. https://doi.org/10.48550/arXiv.2108.05054

Chang, M., Li, Q., Feng, H., Xu, Z.: Spatial-adaptive network for single image denoising. In: European Conference on Computer Vision (2020). https://doi.org/10.1007/978-3-030-58577-8_11

Chen, L.C., Papandreou, G., Schroff, F., Adam, H.: Rethinking Atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587 (2017)

Zhou, S., Zhang, J., Zuo, W., Xie, H., Pan, J., Ren, J.S.: DAVANet: stereo deblurring with view aggregation. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 10988–10997 (2019). https://doi.org/10.1109/CVPR.2019.01125

Li, B., Ren, W., Fu, D., Tao, D., Feng, D., Zeng, W., Wang, Z.: RESIDE: a benchmark for single image dehazing. arXiv:1712.04143 (2017)

Ancuti, C., Ancuti, C.O., Timofte, R., De Vleeschouwer, C. (2018). I-HAZE: a dehazing benchmark with real hazy and haze-free indoor images. In: Blanc-Talon, J., Helbert, D., Philips, W., Popescu, D., Scheunders, P. (eds.) Advanced Concepts for Intelligent Vision Systems. ACIVS 2018. Lecture Notes in Computer Science, vol. 11182. Springer, Cham. https://doi.org/10.1007/978-3-030-01449-0_52

Shao, Y., Li, L., Ren, W., Gao, C., Sang, N.: Domain adaptation for image dehazing. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2805–2814 (2020).https://doi.org/10.1109/CVPR42600.2020.00288

Mei, K., Jiang, A., Li, J., Wang, M.: Progressive feature fusion network for realistic image dehazing. In: Asian Conference on Computer Vision, pp. 203–215 (2018). https://doi.org/10.1007/978-3-030-20887-5_13

Liu, X., Ma, Y., Shi, Z., Chen, J.: GridDehazeNet: attention-based multi-scale network for image dehazing. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 7313–7322 (2019). https://doi.org/10.1109/ICCV.2019.00741

Liu, X., Suganuma, M., Sun, Z., Okatani, T.: Dual residual networks leveraging the potential of paired operations for image restoration. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 7000–7009 (2019). https://doi.org/10.1109/CVPR.2019.00717

Dong, J., Pan, J.: Physics-based feature de-hazing networks. In: European Conference on Computer Vision, pp. 188–204. https://doi.org/10.1007/978-3-030-58577-8_12

Zhang, H., Sindagi, V., Patel, V.M.: Multi-scale single image dehazing using perceptual pyramid deep network. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 1015–101509 (2018). https://doi.org/10.1109/CVPRW.2018.00135

Li, R., Pan, J., Li, Z., Tang, J.: Single image dehazing via conditional generative adversarial network. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8202–8211 (2018). https://doi.org/10.1109/CVPR.2018.00856

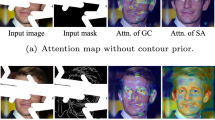

Chen, D. et al.: Gated context aggregation network for image dehazing and deraining. In: 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 1375–1383 (2019). https://doi.org/10.1109/WACV.2019.00151.

Author information

Contributions

Zhengchun Lin: Writing - Review & Editing, Supervision, Funding acquisition, Formal analysis. Qingxing Luo: Writing - Original Draft, Data Curation, Software, Methodology, Visualization. Yunzhi Jiang & Jing Wang: Supervision. Siyuan Li: Software. Gongwen Cheng & Zheng Genrang: Funding acquisition. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

We declare that we have no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lin, Z., Luo, Q., Jiang, Y. et al. Image defogging based on multi-input and multi-scale UNet. SIViP 17, 1143–1151 (2023). https://doi.org/10.1007/s11760-022-02321-0

DOI: https://doi.org/10.1007/s11760-022-02321-0