Abstract

Experienced surgeons commonly mentor trainees as they move through their initial learning curves. During robot-assisted minimally invasive surgery, several tools exist to facilitate proctored cases, such as two-dimensional telestration and a dual surgeon console. The purpose of this study was to evaluate the utility and efficiency of three novel proctoring tools for robot-assisted minimally invasive surgery, and to compare them to existing proctoring tools. Twenty-six proctor-trainee pairs completed validated, dry-lab training exercises using standard two-dimensional telestration and three new three-dimensional proctoring tools called ghost tools. During each exercise, proctors mentored trainees by correcting trainee technical errors. Proctors and trainees completed post-study questionnaires to compare the effectiveness of the proctoring tools. Proctors and trainees consistently rated the ghost tools as effective proctoring tools. Both proctors and trainees preferred 3DInstruments and 3DHands over standard two-dimensional telestration (proctors p < 0.001 and p = 0.03, respectively, and trainees p < 0.001 and p = 0.002, respectively). In addition, proctors preferred three-dimensional vision of the operative field (used with ghost tools) over two-dimensional vision (p < 0.001). Total mentoring time and number of instructions provided by the proctor were comparable between all proctoring tools (p > 0.05). In summary, ghost tools and three-dimensional vision were preferred over standard two-dimensional telestration and two-dimensional vision, respectively, by both proctors and trainees. Proctoring tools such as ghost tools have the potential to improve surgeon training by enabling new interactions between a proctor and trainee.


Introduction

As robot-assisted minimally invasive surgery (RAMIS) continues to expand into new surgical specialties, it is important to efficiently guide new surgeons through their learning curves to maximize patient safety [1–3]. One common element of new surgeon training pathways is proctored cases, where an experienced surgeon mentors a trainee [4, 5]. In RAMIS, this typically occurs during the first series of cases undertaken by a surgeon or during complex cases where experienced surgeon input could be helpful.

The current standard of RAMIS proctoring is in-person proctoring using two-dimensional (2D) telestration on the vision cart touchscreen. A more expensive alternative is a dual surgeon console that also allows a proctor to use three-dimensional (3D) pointers to provide instruction to a training surgeon. Given the time and geographic constraints of proctors, researchers have explored a remote proctoring technology for da Vinci® Surgical Systems (Intuitive Surgical, Inc., Sunnyvale, CA, USA) called da Vinci Connect™ [6–9]. da Vinci Connect enables a proctor to remotely view a surgeon’s operative field on a laptop, provide verbal instruction, and telestrate using a mouse or the laptop’s touchscreen. Researchers have found that remote proctoring using da Vinci Connect was feasible and effective [6, 9].

Whether local or remote, the types of interactions between proctors and trainees can be extended beyond 2D telestration and a dual surgeon console given the architecture of RAMIS systems. For example, the proctor might be able to better visualize the operative field using a 3D view [10–17], similar to the surgeon console but using a low-cost, remote setup. Furthermore, given a 3D display, a proctor could interact with the trainee in 3D in new ways. For example, a proctor can explicitly demonstrate how to position the instruments in the operative field and ask the trainee to match postures rather than trying to verbally explain the configuration or draw the configuration in 2D. As with any advanced interaction, these tools need to help with instruction for the trainee, yet not be cumbersome or frustrating for the proctor. Therefore, they must be extensively studied from both the proctor’s and trainee’s perspectives to ensure the appropriate interactions are delivered.

In this study, we examined the utility and efficiency of three novel 3D proctoring tools, in the form of semi-transparent ghost tools overlaid on the surgeon’s field of view, and compared them to standard 2D telestration. We hypothesized that the 3D ghost tools would enable proctors to more effectively mentor trainees and enable trainees to more effectively extract meaning from proctor input.

Methods

Ghost tools setup

Conventional 2D telestration (2DTele) and three different types of 3D ghost tools, namely 3D pointers (3DPointers), 3D cartoon hands (3DHands), and 3D instruments (3DInstruments), were compared using the da Vinci Si™ Surgical System (Fig. 1). Custom software was written and run on an external PC to render the ghost tools as semi-transparent overlays on the stereoscopic, endoscopic image captured from the video output channels using DeckLink Quad frame grabbers (Blackmagic Design Pty. Ltd., Fremont, CA, USA). The stereoscopic image with the ghost tools overlay was output from the PC and displayed to the trainee at the surgeon console in a sub-window using the 3D TilePro™ Display video inputs and to the proctor using a polarized 3D display (Sony, Inc., Fig. 1d). Importantly, setups similar to the one used in this study, which relied on readily available video input and output channels, can be replicated by other academic researchers to explore advanced proctoring tools on clinical da Vinci Surgical Systems.

The 3DPointers enabled proctors to point and draw in 3D. The 3DHands enabled proctors to position and orient a cartoon hand in 3D space as well as open and close their index fingers and thumbs to illustrate grasping objects. Finally, the 3DInstruments behaved similarly to real da Vinci Endowrist® Large Needle Driver instruments: they could be positioned and oriented in 3D space, and their jaws could be opened and closed to illustrate grasping objects. All three variations of ghost tools were controlled using Razer™ Hydra motion controllers (Sixense Entertainment, Inc., Los Gatos, CA, USA).

User study

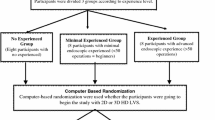

The effectiveness of the four proctoring tools (the three types of ghost tools and standard 2DTele) was examined during four validated dry-lab exercises. The four dry-lab exercises were previously shown to have construct validity and included Ring Rollercoaster 4, Big Dipper, Around-the-World, and Figure-of-Eight Knot Tying on a luminal closure model (see Fig. 1 in [18] for task images) [18–20]. One proctor was randomly paired with one trainee to evaluate the four proctoring tools across the four exercises. Proctors were experienced surgeons (>50 cases) or experienced RAMIS trainers (>100 surgeons trained). Trainees included surgeons in training with RAMIS technology and new RAMIS trainers with limited exposure to RAMIS. The dry-lab exercises targeted technical skills related to using the da Vinci Surgical System, as opposed to cognitive skills requiring surgical judgment, in order to standardize across surgical and non-surgical proctors and trainees (see Table 1 in [18] for each exercise’s technical skills).

Each proctor-trainee pair completed the four exercises in random order with a proctoring tool randomly assigned to each exercise. Randomization was achieved by performing two paired random permutations for each subject: one for the four exercises and one for the four proctoring tools.
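The randomization described above can be illustrated with a minimal sketch. It assumes that the two permutations are drawn independently and then paired position by position; the function name `assign_session` and the seed value are hypothetical and are not taken from the study software.

```python
import random

EXERCISES = [
    "Ring Rollercoaster 4",
    "Big Dipper",
    "Around-the-World",
    "Figure-of-Eight Knot Tying",
]
TOOLS = ["2DTele", "3DPointers", "3DHands", "3DInstruments"]


def assign_session(rng):
    """Return the ordered (exercise, tool) schedule for one proctor-trainee pair.

    Assumption: one random permutation of the exercises and one of the
    proctoring tools are drawn, then matched position by position.
    """
    exercise_order = rng.sample(EXERCISES, k=len(EXERCISES))
    tool_order = rng.sample(TOOLS, k=len(TOOLS))
    return list(zip(exercise_order, tool_order))


# Fixed seed only so that the sketch is reproducible (hypothetical value).
schedule = assign_session(random.Random(2016))
for exercise, tool in schedule:
    print(f"{exercise}: {tool}")
```

Under this scheme every pair sees each exercise once and each proctoring tool once, while the exercise-tool pairing varies from pair to pair.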

Informed consent was obtained from all individual participants included in the study (Western IRB, Puyallup, WA). Before beginning the exercise, both proctors and trainees received instructions on how to complete the exercise. Also, proctors were given instructions on how to use the proctoring tool. The use of each proctoring tool was first demonstrated to the proctor by the researcher. Then, the proctor was given up to 2 min to adapt to the new tool. All proctors received the same training for each tool. Finally, proctors were instructed to provide counseling to the trainee on technique, and to correct any technical errors committed. Each dry-lab exercise targeted specific technical skills (e.g., Endowrist manipulation, needle driving, knot tying) that the proctor reinforced when appropriate.

As a trainee performed an exercise, the proctor announced “mentoring moment” whenever the proctor determined that mentoring was warranted. This was an indication for the trainee to pause and receive verbal instruction or instruction using one of the proctoring tools. The type of instruction and time in seconds were recorded for each mentoring moment.

After each exercise, proctors and trainees completed a standardized questionnaire to evaluate the proctoring tool used (referred to as the Exercise Questionnaire) [9]. Eight questions regarding the proctoring tool were delivered on a 5-point scale and addressed the ability of the proctoring tool to (1) help delineate anatomic structures, (2) improve surgical/technical skills, (3) improve confidence, (4) allow for safe completion of task, (5) work smoothly, (6) be easy to use, (7) be helpful, and (8) be more helpful than 2D telestration. The 5-point scale was defined with 1 = “Strongly Disagree”, 2 = “Moderately Disagree”, 3 = “Undecided”, 4 = “Moderately Agree” and 5 = “Strongly Agree”. The Exercise Questionnaire has been used in previous research studies as an effective tool to differentiate preferences for various proctoring modalities [9]. At the end of the study, proctors and trainees completed a standardized post-questionnaire rating the overall effectiveness of each proctoring tool as well as the 3D video quality for the proctor when using ghost tools (all on a 5-point scale; referred to as the Post-Questionnaire). The Post-Questionnaire 5-point scale was defined as 1 = “Least Effective”, 2 = “Moderately Ineffective”, 3 = “Neutral”, 4 = “Moderately Effective”, and 5 = “Most Effective”.

Analysis

The median and range of the proctor and trainee responses were reported. In addition, the cumulative mentor time, number of instructions provided by the proctor, and average mentor time per instruction were examined and compared across proctoring tools. Some types of instructions were universal across all exercises (e.g., ineffective use of two hands, excessive force, ineffective visualization) while others were exercise-specific (e.g., dropped ring, missed target, inefficient knot tying technique). Mann–Whitney U tests were used for all pair-wise comparisons of the proctoring tools. Kruskal–Wallis tests were used for group comparisons across all proctoring tools, followed by Dunn’s test to identify which groups, if any, were responsible for the difference.
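To make the pair-wise comparison concrete, the following sketch computes the Mann–Whitney U statistic for two sets of 5-point ratings by direct pair counting, with ties counted as one half. The ratings are hypothetical and are not study data, and the p value, which would normally come from a statistics package such as SciPy's `scipy.stats.mannwhitneyu`, is deliberately not computed here.

```python
def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a: number of (a, b) pairs with a > b, ties count 0.5."""
    u = 0.0
    for a in sample_a:
        for b in sample_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


# Hypothetical 5-point ratings for two proctoring tools (not study data).
ratings_2dtele = [3, 4, 2, 3, 4, 3]
ratings_3dinstruments = [5, 4, 4, 5, 3, 5]

u_3d = mann_whitney_u(ratings_3dinstruments, ratings_2dtele)
u_2d = mann_whitney_u(ratings_2dtele, ratings_3dinstruments)
print(u_3d, u_2d)
```

The two U values always sum to the product of the sample sizes, so a value of U close to that product for one tool indicates that its ratings tend to rank above those of the other tool.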

Chi-square tests were used to evaluate responses to individual questions from the Exercise Questionnaire and Post-Questionnaire. Two categories were created: “agree” and “disagree”. The “agree” category contained responses with values of 4 or 5 (out of 5), and the “disagree” category contained responses with values 1, 2, or 3 (out of 5). Tests of significance compared the categorized proctor and trainee responses to an expected response of 50 % “agree” and 50 % “disagree”.
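The dichotomized test can be sketched as a chi-square goodness-of-fit test with one degree of freedom against an expected 50/50 split. The responses below are hypothetical, the function name `agree_disagree_test` is an illustrative choice, and the sketch assumes that no continuity correction was applied, which the text does not specify.

```python
import math


def agree_disagree_test(responses):
    """Chi-square goodness-of-fit test of agree/disagree counts against 50/50.

    Responses of 4 or 5 count as "agree"; responses of 1, 2, or 3 count as
    "disagree". Assumes no continuity correction.
    """
    agree = sum(1 for r in responses if r >= 4)
    disagree = len(responses) - agree
    expected = len(responses) / 2
    chi2 = ((agree - expected) ** 2 + (disagree - expected) ** 2) / expected
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return agree, disagree, chi2, p_value


# Hypothetical responses to one questionnaire item (not study data).
responses = [5, 4, 4, 5, 3, 4, 5, 4, 2, 5]
agree, disagree, chi2, p_value = agree_disagree_test(responses)
print(agree, disagree, round(chi2, 2), round(p_value, 3))
```

Note that grouping the "Undecided" response (3) with "disagree" makes the test conservative with respect to detecting agreement.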

Finally, Mann–Whitney U tests were used to examine the inter-rater reliability of surgeon and non-surgeon proctors and trainees for all proctoring tools on both the Exercise Questionnaire and Post-Questionnaire. A p value less than 0.05 was used to determine significance for all statistical tests.

Results

A total of 26 proctors and 26 trainees participated in the study at Keck Medical Center of the University of Southern California (Los Angeles, CA, USA) and Intuitive Surgical (Sunnyvale, CA, USA). Twelve proctors were experienced surgeons and 14 were experienced trainers. Twelve trainees were surgeons in training and 14 trainees were non-surgical subjects inexperienced in robotic surgery. Seven proctor-trainee pairs were unable to complete all four training exercises due to time constraints. The total number of proctored exercises for each proctoring tool was 23 (2DTele), 20 (3DPointers), 26 (3DHands), and 23 (3DInstruments).

Proctors evaluated all four types of proctoring tools favorably (median responses were ≥3 across all categories from the Exercise Questionnaire; see Table 1). The median proctor response indicated 3DHands and 3DInstruments were more effective than 2DTele (column “Vs2DTele” in Table 1); however, only 3DInstruments showed a significant difference compared to 2DTele (p = 0.02). 2DTele was the only proctoring modality that achieved significance for ease of use by proctors (“Easy” in Table 1). The “Easy” score for 3DPointers was particularly low, which was also reported anecdotally by proctors during the study.

Trainees also evaluated all four types of proctoring tools favorably (median response ≥3 across all categories from the Exercise Questionnaire; see Table 2). In general, trainee median evaluations were higher than proctor evaluations, but this difference was not significant (p > 0.05). In particular, trainees evaluated 3DPointers, 3DHands, and 3DInstruments as more effective than 2DTele; however, only 3DHands (p < 0.001) and 3DInstruments (p < 0.001) were significantly different. Unlike with proctors, ease of use (“Easy”) reached significance for all three ghost tools (3DPointers p = 0.03, 3DHands p = 0.006, and 3DInstruments p < 0.001).

From the Post-Questionnaire, proctors’ overall evaluations of the three types of ghost tools were positive (median responses were ≥3; see first row of Table 3). The overall evaluation of 3DInstruments was significant (p = 0.01). In addition, proctors rated 3DInstruments as significantly more effective than 2DTele and 3DPointers (p < 0.001, p = 0.05, respectively). Similarly, proctors rated 3DHands as significantly more effective than 2DTele (p = 0.03). Finally, proctors rated the ability to see the operative field in 3D as more effective than 2D (p < 0.001).

Similar to the proctors, trainees’ overall evaluation of the three types of ghost tools from the Post-Questionnaire was positive (median responses were ≥4; see first row of Table 4). The overall evaluation for both 3DHands and 3DInstruments achieved significance (p = 0.01, p < 0.001, respectively). In addition, trainees rated 3DInstruments as significantly more effective than 2DTele and 3DPointers (p < 0.001 for both). Furthermore, trainees rated 3DHands as significantly more effective than 2DTele (p = 0.002) and 3DPointers (p = 0.01). Based on a comparison of trainee and proctor responses to the Post-Questionnaire, trainees evaluated 3DInstruments and 3DHands as more effective than proctors did (p = 0.03, p = 0.04, respectively).

Additionally, there was a significant difference across proctoring tools for proctors (p = 0.003) and trainees (p < 0.001) using a group comparison of Post-Questionnaire responses. For proctors, the mean rank of 3DInstruments was significantly greater than that of 2DTele (p < 0.05). For trainees, the mean ranks of 3DInstruments and 3DHands were significantly greater than those of 3DPointers and 2DTele (p < 0.05).

The cumulative mentor time, number of instructions, and average mentor time per instruction were not significantly different across proctoring tools (p = 0.49, p = 0.83 and p = 0.26, respectively) but trended toward longer mentor times, more instructions, and longer mentor time per instruction for 3DHands and 3DInstruments compared to 2DTele and 3DPointers (Fig. 2).

Fig. 2 Metrics quantifying proctor-trainee interactions for each proctoring tool (mean with standard error bar). Total instruction time (left) was the cumulative time a proctor provided instruction to a trainee. Number of instructions (middle) was the number of times a proctor provided instructions. Time per instruction (right) was the total instruction time normalized by the number of instructions.
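The three interaction metrics can be derived from the per-moment records (instruction type and time in seconds) as in the sketch below. The `MentoringMoment` record, the function name, and the example values are hypothetical and serve only to illustrate the definitions.

```python
from dataclasses import dataclass


@dataclass
class MentoringMoment:
    instruction_type: str  # e.g., "excessive force" or "dropped ring"
    seconds: float         # duration of the instruction


def interaction_metrics(moments):
    """Return (total instruction time, number of instructions, time per instruction)."""
    total_time = sum(m.seconds for m in moments)
    count = len(moments)
    # Time per instruction is the total time normalized by the number of instructions.
    time_per_instruction = total_time / count if count else 0.0
    return total_time, count, time_per_instruction


# Hypothetical record of one proctored exercise (not study data).
moments = [
    MentoringMoment("excessive force", 12.0),
    MentoringMoment("dropped ring", 8.0),
    MentoringMoment("ineffective visualization", 10.0),
]
total_time, count, time_per_instruction = interaction_metrics(moments)
print(total_time, count, time_per_instruction)
```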

Finally, we compared how surgeon proctors and trainees evaluated ghost tools relative to non-surgeon proctors and trainees given the heterogeneity of the proctor and trainee populations. The only significant difference was that surgeon proctors and surgeon trainees evaluated 2DTele as more effective than non-surgeon proctors and non-surgeon trainees (p = 0.03 and p = 0.008, respectively) in the Post-Questionnaire.

Discussion

Proctored cases by an experienced surgeon remain a fundamental component of a new surgeon’s training pathway. During RAMIS, proctors can interact with trainees in novel ways compared to other forms of surgery [21–23]. In this work, we extend these RAMIS proctor-trainee interactions by studying three novel types of proctoring tools called ghost tools (Fig. 1). Ghost tools have two general advantages over conventional proctoring methods: they enable proctors to see in 3D and to move in 3D with enriched interactions. Indeed, proctors preferred using ghost tools over conventional telestration at the patient side touchscreen (Tables 1, 3), as well as having a 3D view of the operative field.

However, proctoring technologies impact both the trainee and the proctor and, therefore, careful consideration of both user groups must be made throughout the development process. We illustrate the preferences of these two groups in this study, in particular by the fact that trainees evaluated instruction via all of the ghost tools as easy to accept, whereas proctors evaluated 2D telestration as easier to use than the ghost tools. This could have been mitigated if proctors had more time to acclimate to the ghost tools setup, especially given their familiarity with the da Vinci Surgical System controls (and existing 2D telestration).

Although in-person proctoring will remain essential, there exists a tremendous opportunity for remote proctoring to alleviate geographic and time constraints placed on experienced surgeons serving as proctors [24–28]. In this way, remote proctoring might increase both the number of surgeons proctored and extend the number of cases over which a new surgeon receives some form of expert guidance. The end goal is perhaps better-trained surgeons performing safer surgeries. Future research studies examining how ghost tools may impact the remote proctoring process and the necessary technical specifications (e.g., latency limits [29]) will be needed in order to deliver the most effective interactions between proctors and trainees.

Nevertheless, this study served as an important step to refine the types of interactions between proctors and trainees before moving to a more complex and unstructured environment such as porcine tasks, cadavers, or clinical settings or a remote setup. Although the results of this study are compelling, the utility and performance of ghost tools should be further evaluated on realistic surgical tasks (i.e., tissue dissection, tissue retraction, and anatomy identification). If ghost tools are demonstrated to be efficient and safe in these wet-lab scenarios, clinical testing could be done to determine efficacy in live surgery. Even so, given the results of this study, ghost tools seem to offer advantages during training scenarios as simple as dry-lab tasks that target basic technical skills. Since these sorts of training tasks are commonly performed by new RAMIS surgeons, proctored interactions using ghost tools during similar exercises may help improve the efficiency and effectiveness of surgeon training prior to their first clinical procedures.

A potential limitation of this study was the heterogeneity of the proctor and trainee groups. That, along with the small cohorts, could have affected how the ghost tools were evaluated, both from the proctor’s and trainee’s perspectives. However, the only significant difference between groups for both proctors and trainees was how they evaluated 2DTele: surgeons viewed the technology more favorably than non-surgeons. One reason might be their familiarity with and reliance on two-dimensional telestration for clinical procedures, which non-surgeon proctors and non-surgeon trainees have not experienced.

Another limitation with this study may be that although proctor and trainee preferences of the proctoring tools were elicited, whether their preferences actually translated to practical improvement in proctorship remains unclear. Total mentor time and number of instructions may represent the practical advantage ghost tools have over 2D telestration. However, we did not see a significant difference across proctoring tools with these metrics.

In summary, ghost tools offer compelling improvements over current proctoring tools during RAMIS. They may enable surgeons to move through their learning curves more quickly by providing more effective instruction, and improve patient safety by enabling proctors to more effectively mentor surgeons during clinical procedures. Furthermore, it would be compelling to explore the impact of ghost tools during remote tele-mentored clinical cases and to compare them to existing technologies such as the da Vinci Connect Proctoring System. In the end, additional research is required to continue to understand optimal proctor-trainee interactions.

References

Davis JW, Kreaden US, Gabbert J, Thomas R (2014) Learning curve assessment of robot-assisted radical prostatectomy compared with open-surgery controls from the premier perspective database. J Endourol 28(5):560–566

Schreuder H, Wolswijk R, Zweemer R, Schijven M, Verheijen R (2012) Training and learning robotic surgery, time for a more structured approach: a systematic review. BJOG 119(2):137–149

Lee JY, Mucksavage P, Sundaram CP, McDougall EM (2011) Best practices for robotic surgery training and credentialing. J Urol 185(4):1191–1197

Zorn KC, Gautam G, Shalhav AL, Clayman RV, Ahlering TE, Albala DM, Lee DI, Sundaram CP, Matin SF, Castle EP (2009) Training, credentialing, proctoring and medicolegal risks of robotic urological surgery: recommendations of the society of urologic robotic surgeons. J Urol 182(3):1126–1132

Visco AG, Advincula AP (2008) Robotic gynecologic surgery. Obstet Gynecol 112(6):1369–1384

Shin DH, Dalag L, Gill IS, Hung AJ (2014) Connect™–a pilot study for the remote proctoring of robotic surgery. J Urol 191(4):e956

Lenihan J Jr, Brower M (2012) Web-connected surgery: using the internet for teaching and proctoring of live robotic surgeries. J Robot Surg 6(1):47–52

Collins J, Dasgupta P, Kirby R, Gill I (2012) Globalization of surgical expertise without losing the human touch: utilising the network, old and new. BJU Int 109(8):1129

Shin DH, Dalag L, Azhar RA, Santomauro M, Satkunasivam R, Metcalfe C, Dunn M, Berger A, Djaladat H, Nguyen M (2015) A novel interface for the telementoring of robotic surgery. BJU Int 116(2):302–8

Wagner O, Hagen M, Kurmann A, Horgan S, Candinas D, Vorburger S (2012) Three-dimensional vision enhances task performance independently of the surgical method. Surg Endosc 26(10):2961–2968

Smith R, Day A, Rockall T, Ballard K, Bailey M, Jourdan I (2012) Advanced stereoscopic projection technology significantly improves novice performance of minimally invasive surgical skills. Surg Endosc 26(6):1522–1527

Hinata N, Miyake H, Kurahashi T, Ando M, Furukawa J, Ishimura T, Tanaka K, Fujisawa M (2014) Novel telementoring system for robot-assisted radical prostatectomy: impact on the learning curve. Urology 83(5):1088–1092

Blavier A, Gaudissart Q, Cadière G-B, Nyssen A-S (2006) Impact of 2D and 3D vision on performance of novice subjects using da Vinci robotic system. Acta Chir Belg 106(6):662–664

Blavier A, Gaudissart Q, Cadière G-B, Nyssen A-S (2007) Comparison of learning curves and skill transfer between classical and robotic laparoscopy according to the viewing conditions: implications for training. Am J Surg 194(1):115–121

Munz Y, Moorthy K, Dosis A, Hernandez J, Bann S, Bello F, Martin S, Darzi A, Rockall T (2004) The benefits of stereoscopic vision in robotic-assisted performance on bench models. Surg Endosc 18(4):611–616

Jourdan I, Dutson E, Garcia A, Vleugels T, Leroy J, Mutter D, Marescaux J (2004) Stereoscopic vision provides a significant advantage for precision robotic laparoscopy. Br J Surg 91(7):879–885

Byrn JC, Schluender S, Divino CM, Conrad J, Gurland B, Shlasko E, Szold A (2007) Three-dimensional imaging improves surgical performance for both novice and experienced operators using the da Vinci Robot System. Am J Surg 193(4):519–522

Jarc AM, Curet M (2014) Construct validity of nine new inanimate exercises for robotic surgeon training using a standardized setup. Surg Endosc 28(2):648–656

Jarc AM, Curet M (2014) Face, content, and construct validity of four, inanimate training exercises using the da Vinci® Si surgical system configured with Single-Site™ instrumentation. Surg Endosc:1–7

Siddiqui NY, Galloway ML, Geller EJ, Green IC, Hur H-C, Langston K, Pitter MC, Tarr ME, Martino MA (2014) Validity and reliability of the robotic objective structured assessment of technical skills. Obstet Gynecol 123(6):1193–1199

Crusco S, Jackson T, Advincula A (2014) Comparing the da Vinci Si Single console and dual console in teaching novice surgeons suturing techniques. JSLS 18 (3)

Tan GY, Goel RK, Kaouk JH, Tewari AK (2009) Technological advances in robotic-assisted laparoscopic surgery. Urol Clin North Am 36(2):237–249

Matu FO, Thøgersen M, Galsgaard B, Jensen MM, Kraus M (2014) Stereoscopic augmented reality system for supervised training on minimal invasive surgery robots. In: Proceedings of the 2014 Virtual Reality International Conference. ACM, p 33

Lendvay TS, Hannaford B, Satava RM (2013) Future of robotic surgery. Cancer J 19(2):109–119

Augestad KM, Bellika JG, Budrionis A, Chomutare T, Lindsetmo R-O, Patel H, Delaney C, Lindsetmo RO, Hartvigsen G, Hasvold P (2013) Surgical telementoring in knowledge translation—clinical outcomes and educational benefits a comprehensive review. Surg Innov 20(3):273–281

Gambadauro P, Torrejón R (2013) The “tele” factor in surgery today and tomorrow: implications for surgical training and education. Surg Today 43(2):115–122

Aron M (2014) Robotic surgery beyond the prostate. Indian J Urol 30(3):273

Ballantyne GH (2002) Robotic surgery, telerobotic surgery, telepresence, and telementoring. Surg Endosc 16(10):1389–1402

Xu S, Perez M, Yang K, Perrenot C, Felblinger J, Hubert J (2014) Determination of the latency effects on surgical performance and the acceptable latency levels in telesurgery using the dV-Trainer® simulator. Surg Endosc 28(9):2569–2576

Ethics declarations

Conflict of interest

Dr. Jarc is a researcher in the Medical Research group at Intuitive Surgical, Inc. Dr. Gill reports personal fees from Mimic Technologies, Inc., personal fees from EDAP TMS, and stock ownership in Hansen Medical, Inc. Dr. Hung reports personal fees from Mimic Technologies, Inc. and research grants from Intuitive Surgical, Inc. Dr. Shah, Mr. Adebar, Mr. Hwang, and Dr. Aron have no conflicts of interest or financial ties to disclose.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Jarc, A., Shah, S.H., Adebar, T. et al. Beyond 2D telestration: an evaluation of novel proctoring tools for robot-assisted minimally invasive surgery. J Robotic Surg 10, 103–109 (2016). https://doi.org/10.1007/s11701-016-0564-1

DOI: https://doi.org/10.1007/s11701-016-0564-1