Abstract

Continuous cooling transformation (CCT) diagrams can be constructed by empirical methods, which are expensive and time-consuming, or by fitting a model to available experimental data. Examples of data-driven models implemented so far include regression models, artificial neural networks, k-Nearest Neighbours and Random Forest. The gradient boosting machine (GBM) has been successfully used in many machine learning applications, but has not previously been used to model CCT-diagrams. This article presents a novel way of predicting ferrite start temperatures for low alloyed steels using gradient boosting. First, transformation onset temperatures are predicted over a grid of values with a trained GBM-model, after which a physically based model is fitted to the piecewise constant curve obtained as output from the model. The predictive accuracy of the GBM-model is tested with two sets of CCT-diagrams and compared to Random Forest and the JMatPro software. GBM outperforms its competitors under all tested performance metrics: e.g., R² for the test data is 0.92, 0.87 and 0.70 for GBM, Random Forest and JMatPro, respectively. The output from the GBM-model is used for fitting a physically based model, which enables the estimation of the transformation start for any linear or nonlinear cooling path. This can be further converted to a Time-Temperature-Transformation (TTT) diagram.


Introduction

The continuous cooling transformation (CCT) diagram is a useful representation of the time and temperature regions in which austenite decomposes to different microstructures (polygonal ferrite, pearlite, bainite and martensite) during cooling at an approximately constant rate. Such diagrams can be applied to design suitable cooling paths in order to achieve the desired microstructure and resulting mechanical properties at room temperature.1,2,3,4,5,6,7,8,9,10 Since austenite decomposition, and hence the CCT diagram, depends on the chemical composition of the steel, knowledge of how different alloying elements affect the transformation temperatures and times provides the necessary tool for choosing a suitable steel chemistry.

CCT diagrams can be constructed by empirical methods, which are expensive and time-consuming, or by means of mathematical modelling. Data obtained from experiments or statistical models can be used to fit physically based models, which can then predict the transformation behavior for arbitrary cooling paths. Several studies have previously focused on the effect of steel alloying on the phase transformations. In Reference 5, isothermal time-temperature-transformation (TTT) diagrams of steels were analysed and a regression model was constructed that could be used for calculating the austenite decomposition. A similar but enhanced approach has been followed in Reference 6 and in the commercial JMatPro software.7 Regression models have also been fitted directly to experimental CCT diagrams.1,2,3,8,9,10,11

These kinds of models are useful in assessing the effect of composition on the transformation onset temperature, but they lack the ability to account for complex high-order interaction effects between alloying elements and the austenitization parameter, which combines austenitization time and temperature. Pairwise interactions and second-order effects of single variables are considered in References 1, 2 and 8, while in References 3, 5, 9, 10 and 11 only first-order effects are taken into account. With regression trees there is no theoretical upper limit to the order of interactions considered. In this article, the model is allowed to consider interactions up to the tenth order.

When a statistical model is applied to CCT diagram prediction, the model is fitted to previously available experimental data and can then be used to predict the CCT diagram for any composition within the range of the data used in the fitting. Examples of the mathematical models implemented include the previously mentioned regression models, artificial neural networks,12 k-Nearest Neighbours and Random Forests.13 We share the view that conventionally trained deep neural networks commonly perform worse on small datasets than traditional machine learning methods such as the gradient boosting machine.14,15 Also, a recent comparison study13 found Random Forest (i.e., ensembles of regression trees) predictions to be competitive with many alternatives.

In the current study we experiment with a new approach that uses gradient boosted regression trees and further refines the outputs given by gradient boosting with a physically based model. Boosting in general refers to a class of methods for combining weak learners or predictors to form a strong “committee” of predictors. These weak learners are usually functions belonging to some chosen parametric family and are in this context sometimes referred to as base learners.16 In this article the chosen family of functions is regression trees. The word gradient refers to the manner in which the base learners are combined. The model is first initialized by fitting a base learner to the data, and at each subsequent boosting iteration a new base learner is fitted to the negative gradient of a predefined loss criterion, evaluated at the current model, and added to the model. This is a form of constrained gradient descent in function space: at each iteration the base learner to be added is the one most parallel to the negative gradient of the loss criterion. As a result, a sum of base learners is obtained, as shown in Figure 1. The predictive power of this committee model is considerably greater than that of a single base learner. In the remainder of this article, gradient boosting with regression trees as base learners is referred to as gradient boosting machine (GBM).

Regression trees recursively split the data into disjoint rectangular regions and fit a constant in each one. They can be implemented quickly and are conceptually simple and interpretable, yet they can capture nonlinear effects and higher-order interactions. The major disadvantages of regression trees are their inaccuracy and instability: small perturbations in the data can result in significant differences in the resulting tree model. One remedy is to grow a “forest” of regression trees on bootstrap samples of the training data and to average the predictions over all trees. This method is known as Random Forest.17 In gradient boosting, by contrast, new trees are added sequentially, each shifting the model in the direction that reduces the loss.

GBM retains many favourable characteristics of regression trees while mitigating the unwanted ones. Both are invariant under strictly monotone transformations of the predictors and, as a consequence, insensitive to long-tailed distributions and outliers in the predictor variables.18 Regression trees also carry out internal feature selection, and this property is passed on to GBM.18 Furthermore, gradient boosting remedies to some extent the most obvious problem with single tree models, namely their inaccuracy.18 This is further improved by taking a subsample of the original data at each iteration, as this results in less correlated trees and smaller variance in the total model.19

The rest of this article is structured as follows. First, the data used in the data-driven models are discussed. This is followed by a description of gradient boosted regression trees and the procedure used to train the models, including hyperparameter selection. Next, the physically based model is described, together with the methodology for deriving the TTT-diagram. After this, the performance metrics of the GBM-model are presented and compared to those of Random Forest and JMatPro. The article closes with concluding remarks.

Data

The main part of the CCT data for this study was taken from extensive experimental studies conducted in Germany,20,21 Britain,22 the USA23 and Slovenia.24 Additional data were collected from many individual publications, including in-house data measured at the University of Oulu with Gleeble equipment. The focus was on low alloyed steels. No data were collected for high-carbon steels, which may contain undissolved carbides in the austenite.

Only the dissolved elements control austenite decomposition, and the dissolved composition can be altered if carbides form. To avoid data affected by carbide formation, steels in which carbides were known to be present after holding (i.e., the austenite was not homogeneous but contained carbides before cooling started, as indicated in the original source) were excluded from the data collection. If carbides or other precipitates are still present in the austenite after austenitization, the sample is non-homogeneous and the results cannot be compared with homogeneous cases.

Because the dissolved composition controls austenite decomposition, the dissolved rather than the nominal composition should in principle be used in model development. However, dissolved compositions are generally not known; only nominal compositions are given with the CCT diagrams. Therefore, nominal compositions are used in the present study. Highly nonlinear statistical (machine learning) methods applied to nominal compositions might implicitly capture the correlations between nominal and dissolved compositions. This might also be the case for high-carbon steels if the sample is not fully homogeneous. For these reasons, too, highly nonlinear machine learning methods could be useful.

The following chemical elements are considered in the present study: C, Si, Mn, Cr, Mo, Ni, Al, Cu and B. In addition to the chemical composition, an austenitization parameter \(P_{\text{A}}\) was used as a variable in the model. It is defined as \(P_{\text{A}} = \left[1/T_{\text{A}} - (R/Q)\ln(t_{\text{A}}/60)\right]^{-1}\), where \(T_{\text{A}}\) (K) is the austenitization (holding) temperature, \(t_{\text{A}}\) (min) is the austenitization (holding) time, \(R = 1.987\;\text{cal/(mol K)}\) is the gas constant and \(Q = 110000\;\text{cal/mol}\) is the activation energy.1,25 \(P_{\text{A}}\) increases with both holding time and austenitization temperature. In this way, \(P_{\text{A}}\) accounts for the effects of austenite grain size, as well as other phenomena taking place during holding, on the austenite decomposition. As grain boundaries act as nucleation sites for ferrite, grain size has a significant effect on the ferrite nucleation rate.26 On the other hand, \(P_{\text{A}}\) ignores the effects of inclusions or precipitates on austenite grain growth during holding. Therefore, it might be better to use the holding time and temperature directly, together with the steel composition, as inputs instead of the \(P_{\text{A}}\) term.
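As a worked illustration, the sketch below evaluates \(P_{\text{A}}\) with the constants given above (R = 1.987 cal/(mol K), Q = 110000 cal/mol). The function name and the example holding conditions (900 °C for 5, 10 and 30 min) are hypothetical; temperatures are in kelvin and times in minutes.

```python
import math

R_CAL = 1.987     # gas constant, cal/(mol K)
Q_CAL = 110000.0  # activation energy, cal/mol


def austenitization_parameter(T_A, t_A):
    """P_A = [1/T_A - (R/Q) ln(t_A/60)]^-1 with T_A in K and t_A in min."""
    return 1.0 / (1.0 / T_A - (R_CAL / Q_CAL) * math.log(t_A / 60.0))


# Hypothetical holding at 900 °C for different times
T_A = 900.0 + 273.15
for t_A in (5.0, 10.0, 30.0):
    print(f"t_A = {t_A:4.0f} min -> P_A = {austenitization_parameter(T_A, t_A):.1f} K")
```

For \(t_{\text{A}}\) = 60 min the logarithm vanishes and \(P_{\text{A}} = T_{\text{A}}\); shorter holding times give \(P_{\text{A}} < T_{\text{A}}\) and longer ones \(P_{\text{A}} > T_{\text{A}}\).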

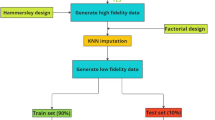

The total number of observations in the training set is 1543. The number of unique combinations of the variables C, Si, Mn, Cr, Mo, Ni, Al, Cu, B and \(P_{\text{A}}\) (denoted Pa in the figures) is 442. This means that there are on average about 3.5 (cooling rate, temperature) points per diagram. Table I summarizes essential descriptive statistics of the training data. The distributions of values of each variable in the training data are illustrated with frequency histograms in Figure 2. Figures 3 and 4 compare the training and test data for each variable.

Distributions of values for each variable in the training data, illustrated as frequency histograms. The y-axis is the number of observations. The x-axis is the weight percent for C, Si, Mn, Cr, Mo, Ni, Al and Cu. For ppmB, the x-axis is the weight percent multiplied by \(10^4\) (i.e., ppm). For Pa, the x-axis is the \(P_{\text{A}}\) value (in K). For LogRc, the x-axis is the base 10 logarithm of the cooling rate in °C/s. The light green vertical dashed line marks the lower quartile; the red, blue and dark green dashed vertical lines mark the median, the mean and the upper quartile, respectively (Color figure online)

Note also that the measured experimental data points are usually not presented in published CCT diagrams. Instead, the CCT curves are fitted to the measurements because of the scatter in the experimental data; for instance, it can be very difficult to detect accurately the point at which austenite starts to decompose. The fitted curves are believed to be more accurate. Nevertheless, the data digitized from CCT curves carry some uncertainty, which is evidently highest near the critical cooling rate, i.e., near the nose of the CCT curves. It can therefore be assumed that the data for critical (nose) cooling rates have the largest scatter.

Another source of uncertainty is the steel composition, because typically only the main chemical elements are reported, not the full composition.

As for the holding parameters at high temperature, holding times were not given for all the CCT diagrams used in the present study. For these cases we used \(t_{\text{A}} = 5\;\text{min}\), because for holding temperatures between 800 °C and 1200 °C the resulting \(P_{\text{A}}\) deviates by only about 3 to 4 pct from the \(P_{\text{A}}\) values obtained with \(t_{\text{A}}\) = 1 min and 30 min, which bound the typical range of holding times in most experiments.
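The 5-minute default can be checked numerically. The sketch below, assuming the same constants as in the definition of \(P_{\text{A}}\), compares \(P_{\text{A}}\) for \(t_{\text{A}}\) = 5 min with the values for 1 min and 30 min at holding temperatures from 800 °C to 1200 °C, measuring the deviation relative to the 1 min and 30 min values. The deviations it reports range roughly from 3 pct (at 800 °C) to 4.5 pct (at 1200 °C), i.e., of the order stated above.

```python
import math

R_CAL, Q_CAL = 1.987, 110000.0  # cal/(mol K), cal/mol


def p_a(T_A, t_A):
    """Austenitization parameter; T_A in K, t_A in min."""
    return 1.0 / (1.0 / T_A - (R_CAL / Q_CAL) * math.log(t_A / 60.0))


deviations = []
for T_C in range(800, 1201, 50):       # holding temperature, °C
    T_A = T_C + 273.15
    p5 = p_a(T_A, 5.0)                 # default holding time
    for t_ref in (1.0, 30.0):          # bounds of the typical holding-time range
        p_ref = p_a(T_A, t_ref)
        deviations.append(abs(p5 - p_ref) / p_ref)

print(f"relative deviation: {min(deviations):.1%} to {max(deviations):.1%}")
```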

Because the main aim of this publication was to study the feasibility of selected modern machine learning methods for predicting CCT curves, only ferrite start curves and ferrite critical cooling rates (nose cooling rates) were modelled as case examples. For validation, 19 new CCT diagrams were digitized (see Appendix B) using freely available digitization software.27 These validation data were not used in fitting the GBM-model; hence, comparison with these experimental curves gives a realistic view of the predictive capabilities of the model.

Illustration of how close the compositions of test set 1 are to the training compositions. The vertical positions of letters A–K represent the values of the variables for the test steels in test set 1. Boxplots represent the distributions of values in the training data. The boxes span the range between the 25th percentile (Q1) and the 75th percentile (Q3) of the data. Solid line segments (whiskers) outside the boxes extend either to \(1.5 \times (Q3 - Q1)\) from the box edge or to the observation farthest from the box edge, whichever is closer. Small circles denote single training observations that lie farther than \(1.5 \times (Q3-Q1)\) from the box edge

Illustration of how close compositions of test set 2 are to the training compositions. Interpretation is equivalent to the one in Fig. 3

Method

In this article we propose a new method for estimating the onset of the austenite-to-ferrite transformation during cooling for an arbitrary alloy composition. We take a hybrid approach that exploits both purely data-driven models and a physically based model, which connects the numerical fitting methods to an elementary description of nucleation theory through the widely used rule of Scheil.28 First, two models are fitted with a gradient boosting machine18,29 with regression trees30 as base learners: one predicts the critical cooling rate from the alloy composition, and the other predicts the transformation onset temperature from the alloy composition and cooling rate. The CCT data predicted by the GBM-models are then used as inputs for the physically based model. This model also requires the Ae3 equilibrium temperature of the alloy, i.e., the highest temperature at which austenite can start to decompose to proeutectoid ferrite, which is calculated with the InterDendritic Solidification software (IDS).31 The final model gives both CCT- and TTT-diagrams for the alloy composition of interest (Figure 5), and it can be used to estimate the transformation start for any linear or nonlinear cooling path.

Modelling flow. Two predictive GBM-models are trained: one that predicts the ferrite onset temperature (\((\mathcal{T}_i)_{i=1}^N\)), and one that predicts the critical cooling rate (\(CCR_0\)). Both models take the steel composition (\(C_0, \, Si_0, \ldots\)) as input. The model that predicts the ferrite onset temperature also takes a grid of cooling rate values (\((Rc_i)_{i=1}^N\)) as input, while the model for the critical cooling rate is trained on the respective critical cooling rate of each steel. The predicted ferrite onset temperatures and critical cooling rates are then fed to a physically based model, which also takes the equilibrium temperature (\(Ae3_0\)), calculated with the software IDS, as input. The physically based model then outputs the final CCT-diagram, from which a TTT-diagram can also be estimated

Gradient Boosting with Regression Trees

Statistical learning problems can often be formulated as the minimization of the expected loss over an unknown joint distribution of input values and labels \((\mathbf{x}, y)\), where \(\mathbf{x} \in \mathbb{R}^m\) and \(y \in \mathbb{R}\), with respect to a predictor function F:18,32

\[ F^* = \arg\min_{F}\, \mathbb{E}_{\mathbf{x}, y}\left[ L(y, F(\mathbf{x})) \right] \tag{1} \]

Gradient boosting is an algorithm that seeks an approximate solution to (1) by repeatedly approximating the steepest descent direction of the empirical loss \(\sum_{i=1}^{N} L(y_i, F(\mathbf{x}_i))\) in function space, where the approximation to the steepest descent direction belongs to a chosen parametric family of functions. In this case, the predictor vector \(\mathbf{x}\) contains the cooling rate and the relative proportions of the different alloying elements, the response y is the corresponding transformation onset temperature, and the parametric family of functions is regression trees.

Regression trees. A regression tree can be viewed as a linear combination of indicator functions:16

\[ f(\mathbf{x}) = \sum_{j=1}^{J} c_j\, I(\mathbf{x} \in R_j) \tag{2} \]

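where the indicator function determines whether \(\mathbf{x}\) belongs to a set or not:

\[ I(\mathbf{x} \in R_j) = \begin{cases} 1, & \mathbf{x} \in R_j \\ 0, & \text{otherwise} \end{cases} \tag{3} \]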

Therefore, a regression tree fits a constant to each of the regions \(R_j\), \(j = 1, \ldots, J\). These regions, also known as nodes, are formed by repeatedly splitting the predictor space with respect to one variable at a time, each time choosing the split that minimizes the squared loss of the resulting tree. That is, in each node the split variable \(\mathbf{x}^{(j)}\) and split point \(s_j\) of the next split are chosen to minimize

\[ \min_{c_1} \sum_{\mathbf{x}_i \in R_1(j, s_j)} (y_i - c_1)^2 + \min_{c_2} \sum_{\mathbf{x}_i \in R_2(j, s_j)} (y_i - c_2)^2 \tag{4} \]

with \(R_1(j, s_j) = \{\mathbf{x} : x^{(j)} < s_j\}\) and \(R_2(j, s_j) = \{\mathbf{x} : x^{(j)} \ge s_j\}\),

provided that the resulting decrease in squared loss from making a further split is large enough and the depth of the branch has not reached a prespecified maximum. Regression trees can be thought of as decision rules, for example as follows:

If

1. \(x_i^{(1)} < s_1\), and
2. \(x_i^{(2)} \ge s_2\), and
3. \(x_i^{(3)} < s_3\),

where \(x_i^{(1)}\) and \(x_i^{(3)}\) could be, e.g., the relative proportions of carbon and silicon, respectively, and \(x_i^{(2)}\) the cooling rate, then the observation \(\mathbf{x}_i\) belongs to the terminal node \(R_k := (-\infty, s_1) \times [s_2, \infty) \times (-\infty, s_3) \times \mathbb{R} \times \cdots \times \mathbb{R}\), and, based on (2), the transformation onset temperature predicted by the model for the steel in question is \(c_k\). The prediction in each terminal node is the arithmetic mean of the training temperatures in that node.
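To make the split criterion concrete (minimizing the summed squared loss of the two child nodes), the following sketch searches a single node by brute force over mid-points between consecutive distinct values of each predictor, and then computes the leaf means that the tree would predict. The toy data (carbon content in wt pct and \(\log_{10}\) cooling rate versus ferrite start temperature in °C) are invented for illustration only.

```python
def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(X, y):
    """Return (feature index j, split point s, loss) minimising the child-node squared loss."""
    best = (None, None, sse(y))  # start from "no split"
    for j in range(len(X[0])):
        levels = sorted({row[j] for row in X})
        for a, b in zip(levels, levels[1:]):
            s = 0.5 * (a + b)  # candidate split point between two observed values
            left = [yi for row, yi in zip(X, y) if row[j] < s]
            right = [yi for row, yi in zip(X, y) if row[j] >= s]
            loss = sse(left) + sse(right)
            if loss < best[2]:
                best = (j, s, loss)
    return best


# Hypothetical data: (C wt pct, log10 cooling rate) -> ferrite start temperature (°C)
X = [[0.1, 0.0], [0.1, 1.0], [0.1, 2.0], [0.4, 0.0], [0.4, 1.0], [0.4, 2.0]]
y = [820.0, 790.0, 740.0, 760.0, 720.0, 660.0]

j, s, loss = best_split(X, y)
left = [yi for row, yi in zip(X, y) if row[j] < s]
right = [yi for row, yi in zip(X, y) if row[j] >= s]
c_left, c_right = sum(left) / len(left), sum(right) / len(right)  # leaf predictions
print(j, s, round(c_left, 1), round(c_right, 1))
```

On these toy data the first split is on carbon content (j = 0) at 0.25 wt pct, giving leaf predictions of about 783 °C and 713 °C; a deeper tree would continue splitting each child node in the same way.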

Gradient boosting. Gradient boosting16,18,19 approximates the solution to (1) as an additive model in a stage-wise fashion. In the original formulation, the model is initialized with a constant \(F_0(\mathbf{x}) = \arg\min_\gamma \sum_{i=1}^N L(y_i, \gamma)\), and at iteration m the regression tree \(f_m(\cdot)\) most parallel to the negative gradient \(-g_i = -\frac{\partial L(y_i, F(\mathbf{x}_i))}{\partial F(\mathbf{x}_i)}\bigg|_{F=F_{m-1}}\) of the loss at the current model is added to the total model so as to minimize a loss criterion L:

where the regression tree \(f_m\) is determined as the solution to

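For computational convenience, a second-order approximation to the loss criterion in (5) is used:29

\[ \tilde{\mathcal{L}}^{(m)}(f) = \sum_{i=1}^{N} \left[ L(y_i, F_{m-1}(\mathbf{x}_i)) + g_i f(\mathbf{x}_i) + \tfrac{1}{2} h_i f(\mathbf{x}_i)^2 \right] + \Omega(f) \tag{7} \]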

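where \(g_i\) is as before and \(h_i\) is the second-order derivative:

\[ h_i = \frac{\partial^2 L(y_i, F(\mathbf{x}_i))}{\partial F(\mathbf{x}_i)^2}\bigg|_{F=F_{m-1}} \tag{8} \]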

Also included is a regularization term

\[ \Omega(f) = \gamma T + \tfrac{1}{2} \lambda \sum_{j=1}^{T} w_j^2 \tag{9} \]

that penalizes the complexity of the candidate tree \(f(\cdot) = w_{q(\cdot)}\), where \(q : \mathbb{R}^p \rightarrow \{1, \ldots, T\}\) maps the observations to terminal nodes, also called leaves. That is, in (9) T is the number of leaves and \(w = (w_1, \ldots, w_T)\) is the vector of leaf weights, i.e., the values the tree adds to the current prediction of the transformation onset temperature for observations falling in the corresponding leaves. \(\gamma\) and \(\lambda\) are non-negative adjustable hyperparameters that control the amount and type of regularization imposed on the objective (7). Details concerning the splitting of nodes are given in Appendix A.
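For the squared loss used here, written as \(L = \tfrac{1}{2}(y - F)^2\) as in XGBoost, \(g_i = F_{m-1}(\mathbf{x}_i) - y_i\) and \(h_i = 1\). The sketch below applies the closed-form results of the XGBoost paper29 for a fixed tree structure: the optimal leaf weight \(w_j^* = -G_j/(H_j + \lambda)\), where \(G_j\) and \(H_j\) are the sums of \(g_i\) and \(h_i\) over leaf j, the corresponding split gain, and the penalty (9). It shows how \(\lambda\) shrinks leaf weights towards zero and how \(\gamma\) acts as the minimum loss reduction required for a split. The function names and numbers are illustrative assumptions.

```python
def grad_hess_squared(y, F):
    """Gradient and Hessian of 0.5*(y - F)^2 with respect to the prediction F."""
    return F - y, 1.0


def leaf_weight(G, H, lam):
    """Optimal leaf weight w* = -G / (H + lambda) for a fixed tree structure."""
    return -G / (H + lam)


def split_gain(GL, HL, GR, HR, lam, gamma):
    """Loss reduction of a split; it is worth making only if the result is positive."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


def omega(weights, lam, gamma):
    """Regularization term (9): gamma*T + 0.5*lambda*sum(w_j^2)."""
    return gamma * len(weights) + 0.5 * lam * sum(w * w for w in weights)


# Hypothetical leaf: observed temperatures vs current model predictions (°C)
y = [810.0, 820.0, 830.0]
F = [800.0, 800.0, 800.0]
g_h = [grad_hess_squared(yi, Fi) for yi, Fi in zip(y, F)]
G = sum(g for g, _ in g_h)
H = sum(h for _, h in g_h)
print(leaf_weight(G, H, lam=0.0), leaf_weight(G, H, lam=3.0))
```

With \(\lambda = 0\) the leaf weight equals the mean residual (20 °C here); \(\lambda = 3\) halves it, which is the shrinkage that (9) imposes.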

Training the model. The resulting model can be written as

Because the chosen loss criterion in this case is squared loss, minimizing the objective (7) is equivalent to minimizing a regularized version of (6),29 and the method can thus be viewed as regularized steepest descent. This raises the question of step length. At iteration m, the negative gradient of the loss functional is approximated with the tree \(f_m\), and the total model is shifted in the approximate direction of steepest descent by a step scaled by a factor \(\eta \in (0, 1)\):

\[ F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \eta\, f_m(\mathbf{x}) \tag{11} \]
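The stage-wise procedure can be sketched in a few lines for squared loss: the model is initialized with the mean (the constant minimizing squared loss), each new tree is fitted to the residuals (the negative gradients of \(\tfrac{1}{2}(y - F)^2\)), and the model is updated with a step scaled by \(\eta\). This is a minimal illustration using depth-1 trees (stumps) on invented toy data, not the XGBoost implementation, which additionally uses the second-order objective and the regularization described above.

```python
def fit_stump(X, r):
    """Depth-1 regression tree fitted to targets r by minimising squared loss."""
    best = None
    for j in range(len(X[0])):
        levels = sorted({row[j] for row in X})
        for a, b in zip(levels, levels[1:]):
            s = 0.5 * (a + b)
            left = [ri for row, ri in zip(X, r) if row[j] < s]
            right = [ri for row, ri in zip(X, r) if row[j] >= s]
            cl, cr = sum(left) / len(left), sum(right) / len(right)
            loss = sum((v - cl) ** 2 for v in left) + sum((v - cr) ** 2 for v in right)
            if best is None or loss < best[0]:
                best = (loss, j, s, cl, cr)
    _, j, s, cl, cr = best
    return lambda x: cl if x[j] < s else cr


def gradient_boost(X, y, eta=0.1, n_trees=300):
    """Squared-loss gradient boosting with step length (shrinkage) eta."""
    f0 = sum(y) / len(y)                     # F_0: constant minimising squared loss
    F = [f0] * len(y)
    trees = []
    for _ in range(n_trees):
        r = [yi - Fi for yi, Fi in zip(y, F)]  # negative gradient of 0.5*(y - F)^2
        tree = fit_stump(X, r)
        trees.append(tree)
        F = [Fi + eta * tree(x) for Fi, x in zip(F, X)]  # F_m = F_{m-1} + eta*f_m
    return lambda x: f0 + eta * sum(t(x) for t in trees)


# Hypothetical data: (C wt pct, log10 cooling rate) -> ferrite start temperature (°C)
X = [[0.1, 0.0], [0.1, 1.0], [0.1, 2.0], [0.4, 0.0], [0.4, 1.0], [0.4, 2.0]]
y = [820.0, 790.0, 740.0, 760.0, 720.0, 660.0]
model = gradient_boost(X, y)
mse = sum((yi - model(x)) ** 2 for x, yi in zip(X, y)) / len(y)
print(round(mse, 1))
```

Because a sum of stumps is additive in the predictors, the remaining training error here reflects the carbon by cooling-rate interaction in the toy data; deeper trees would be needed to capture it.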

Empirically, smaller step lengths have generally been found to give better results,18 but the downside is a longer training time and a larger number of trees in the final model, which increases memory consumption. Setting the step length is thus a trade-off between precision and required computational resources. In this study, a step length of 0.005 was found to achieve a reasonable compromise between the two.

Another adjustment known to improve the model is to use only a random subsample of fraction \(\mu \in (0, 1)\) of the training instances for constructing each new tree.19 This means that at each iteration a sample \((\mathbf{x}_{j_1}, y_{j_1}), \ldots, (\mathbf{x}_{j_S}, y_{j_S})\) of size \(S = \lfloor \mu N \rfloor\) is drawn from the training data without replacement, and the tree is grown on that sample as described above. This kind of subsampling not only makes the model more precise but also reduces computation time, since the number of possible split points is decreased.
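The subsampling step amounts to drawing a fresh set of row indices without replacement at every boosting iteration and growing that iteration's tree only on those rows. A minimal sketch, using the training-set size N = 1543 from the Data section and a hypothetical \(\mu = 0.5\) (the tuned value is listed in Table II):

```python
import math
import random


def subsample_indices(N, mu, rng):
    """Row indices of a subsample of size S = floor(mu*N), drawn without replacement."""
    S = math.floor(mu * N)
    return rng.sample(range(N), S)


rng = random.Random(42)
N, mu = 1543, 0.5  # mu is a hypothetical value
for m in range(3):  # one new subsample per boosting iteration
    idx = subsample_indices(N, mu, rng)
    print(m, len(idx), sorted(idx)[:5])
```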

The model was trained with the R package XGBoost.29 Besides the step size \(\eta\), the minimum loss reduction required to make a further split \(\gamma\), the L2-penalty parameter \(\lambda\) and the subsample ratio \(\mu\), XGBoost allows one to define the maximum tree depth \(\rm{d}\), the minimum number of observations required in a node \(\rm{n}_{\rm{min}}\), the L1-penalty parameter \(\alpha\), and a number of other hyperparameters that were not considered in this study. As the step size was fixed at 0.005, six hyperparameters remain undetermined. Define the optimal hyperparameter vector \(\theta^* = (\gamma^*, \lambda^*, \mu^*, \rm{d}^*, \rm{n}_{\text{min}}^*, \alpha^*)\) to be the one that satisfies

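The optimal hyperparameter vector was approximated by maximizing the reciprocal of the mean loss over 10 repeated rounds of 10-fold cross-validation, defined as

\[ f_{\rm{CV}}(\gamma, \lambda, \mu, \rm{d}, \rm{n}_{\text{min}}, \alpha) = \left[ \frac{1}{10} \sum_{i=1}^{10} \rm{CV}_i(\gamma, \lambda, \mu, \rm{d}, \rm{n}_{\text{min}}, \alpha) \right]^{-1} \tag{13} \]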

where \( \rm{CV}_i(\gamma , \lambda , \mu , \rm{d}, \rm{n}_{\text {min}}, \alpha )\) is the mean absolute loss of one 10-fold cross-validation round with hyperparameters \(\gamma , \lambda , \mu , \rm{d}, \rm{n}_{\text {min}}, \alpha \). That is, for each i, \(\rm{CV}_i\) partitions the training data randomly into 10 disjoint subsets and calculates the mean absolute cross-validation loss over this partition using each of the 10 subsets as a test set in turn. \(f_{\rm{CV}}\) was approximately maximized by the following steps:

1. Draw a random sample of size 100 from the 6-dimensional hyperparameter space.
2. Calculate \(f_{\rm{CV}}\) at all the sampled points.
3. Fit a Gaussian process to the results.
4. Identify 8 potential local maxima, and calculate \(f_{\rm{CV}}\) at these points.
5. Repeat steps 3 and 4 twice.
6. Choose the hyperparameter vector that produces the highest value of \(f_{\rm{CV}}\).

The hyperparameters that were found to produce the highest value for \(f_{\rm{CV}}\) are listed in Table II.

Some improvement could probably have been gained by using a larger starting sample and repeating steps 3 and 4 more than twice, but this would require more CPU time and memory. The kriging (Gaussian process) procedure described in the above steps was implemented using the R package ParBayesianOptimization.33,34
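The cross-validation objective \(f_{\rm{CV}}\) defined above can be sketched as follows: each round shuffles the data into k disjoint folds, every fold serves once as the test set, the mean absolute loss is taken over all held-out points, and \(f_{\rm{CV}}\) is the reciprocal of the average over the repeated rounds. Here `fit` is a hypothetical callable standing in for training a model with one hyperparameter vector; `fit_mean` is a deliberately trivial baseline, and the Gaussian-process search of steps 3 to 5 is not reproduced.

```python
import random


def cv_mean_abs_loss(X, y, fit, k, rng):
    """One round of k-fold CV: mean absolute error over all held-out observations."""
    idx = list(range(len(y)))
    rng.shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k disjoint subsets
    total = 0.0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = fit([X[i] for i in train], [y[i] for i in train])
        total += sum(abs(y[i] - model(X[i])) for i in fold)
    return total / len(y)


def f_cv(X, y, fit, k=10, repeats=10, seed=0):
    """Reciprocal of the mean loss over `repeats` rounds of k-fold cross-validation."""
    rng = random.Random(seed)
    losses = [cv_mean_abs_loss(X, y, fit, k, rng) for _ in range(repeats)]
    return 1.0 / (sum(losses) / len(losses))


def fit_mean(X, y):
    """Hypothetical baseline learner: always predicts the training mean."""
    c = sum(y) / len(y)
    return lambda x: c


X = [[float(i)] for i in range(40)]
y = [700.0 + 2.0 * i for i in range(40)]
print(f_cv(X, y, fit_mean))
```

Maximizing this reciprocal is the same as minimizing the mean absolute cross-validation loss; the reciprocal form simply turns the search into a maximization problem.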

XGBoost also offers the option of forcing the response to be monotonic with respect to one or several predictors. As the transformation start temperature is known to decrease with increasing cooling rate, this restriction was imposed on the model during training.
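In XGBoost, such a restriction is expressed through the `monotone_constraints` parameter, with one entry per feature in column order: -1 for non-increasing, 1 for non-decreasing and 0 for unconstrained. The sketch below builds that parameter in the string form accepted by XGBoost's Python interface; the feature names and their order are assumptions, and the study itself used the R package, which exposes the same parameter. A constraint on \(\log_{10}\) of the cooling rate is equivalent to one on the cooling rate, since the logarithm is monotone.

```python
# Hypothetical feature order; it must match the column order of the training matrix.
FEATURES = ["C", "Si", "Mn", "Cr", "Mo", "Ni", "Al", "Cu", "B", "P_A", "log10_Rc"]


def monotone_constraints(features, decreasing=(), increasing=()):
    """Build XGBoost's monotone_constraints string, e.g. "(0,1,-1)"."""
    signs = []
    for name in features:
        if name in decreasing:
            signs.append("-1")  # prediction non-increasing in this feature
        elif name in increasing:
            signs.append("1")   # prediction non-decreasing in this feature
        else:
            signs.append("0")   # unconstrained
    return "(" + ",".join(signs) + ")"


params = {
    "objective": "reg:squarederror",
    "eta": 0.005,  # step length used in the study
    "monotone_constraints": monotone_constraints(FEATURES, decreasing=("log10_Rc",)),
}
print(params["monotone_constraints"])
```

Such a dictionary would then be passed to `xgboost.train` together with a `DMatrix` built from the same columns.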

Physically Based Model for Fitting CCT Start Curve and Estimation of Transformation Start for Arbitrary Cooling Paths

To construct a smooth, physically based function describing the transformation start during cooling (i.e., the CCT start curve), we applied a suitable equation for the start curve during isothermal holding (i.e., the TTT start curve) and used it to calculate the CCT curve with the rule of Scheil. In addition to describing a smooth CCT curve, this approach has the benefit that, once the function parameters are obtained by fitting to the data, an estimate for the transformation start can be calculated for any linear or nonlinear cooling path. Although the aim of the current study is to calculate the transformation behaviour during cooling, the TTT curve can also be calculated and compared to experimental results available in the literature.

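The TTT start curve is closely linked to the nucleation rate N, described by Eq. [14]:

\[ N = \omega C \exp\left(-\frac{\Delta G_m}{RT}\right) \exp\left(-\frac{\Delta G^*}{RT}\right) \tag{14} \]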

where \(\omega\) is a factor that includes the vibration frequency of the atoms and the area of the critical nucleus, \(\Delta G^*\) is the energy barrier for the formation of a critical-sized nucleus, C is the concentration of nucleation sites, and \(\Delta G_m\) is the activation energy for atomic migration between the nucleus and the matrix.35 The quantity \(\Delta G^*\) is strongly temperature dependent, and the type of nucleation site that contributes most to the nucleation rate also depends on temperature (at low undercooling, nucleation on grain corners can be dominant, whereas at higher undercooling nucleation on flat grain interfaces can contribute more35). However, \(\Delta G_m\) can be considered independent of temperature. Although some growth probably occurs before the transformation start is observed, the temperature dependence of the transformation start time \(\tau\) can be expected to be approximately proportional to \(1/N\), the growth rate being less important. As the undercooling increases, \(\Delta G^*\) decreases.

In the current study, we take the pragmatic approach that the factor \(\omega C \exp(-\frac{\Delta G^*}{RT})\) in the nucleation rate, Eq. [14], is described by a fitting function that approaches zero as the temperature approaches the limiting transformation start temperature from below and increases strongly as the undercooling increases. On this basis, a simple functional form for the temperature-dependent transformation start time (and kinetics) during isothermal holding, which has been successfully applied earlier,5 was also used in this study:

\[ \tau = \frac{K \exp\left(\frac{\Delta G_m}{RT}\right)}{(T_\infty - T)^m} \tag{15} \]

where T is the absolute temperature, \(\Delta G_m\) the activation energy, R the ideal gas constant, \(T_\infty\) a limiting temperature for the transformation, and K and m are fitting parameters. Because the transformation is energetically favourable only below the limiting temperature, T must be below \(T_\infty\) for the transformation to occur. For ferrite, \(T_\infty\) is the equilibrium Ae3 temperature, and for bainite it is the so-called bainite start temperature \(B_S\). The curve described by Eq. [15] is called the TTT (Time Temperature Transformation), or equivalently IT (Isothermal Transformation), curve.

The so-called nose temperature of the TTT diagram, i.e., the temperature at which the time required for the transformation to start during isothermal holding is shortest, can be derived from Eq. [15]. At the nose temperature \(T_N\) the derivative \(d\tau/dT\) changes sign, so \(T_N\) follows from the condition \(d\tau/dT = 0\). Differentiating \(\ln\tau\) from Eq. [15] gives \(m/(T_\infty - T) - \Delta G_m/(RT^2) = 0\), i.e., \(mRT^2 + \Delta G_m T - \Delta G_m T_\infty = 0\), whose positive root is \(T_N = \frac{-\Delta G_m + \sqrt{\Delta G_m^2 + 4mRT_\infty \Delta G_m}}{2mR}\).
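The closed-form nose temperature can be checked against a brute-force search over the TTT curve of Eq. [15]. The parameter values below are hypothetical: \(\Delta G_m\) = 240 kJ/mol (the reference value for the activation energy quoted later in this section), m = 3, \(T_\infty\) = 1100 K standing in for Ae3, and \(K = 3 \times 10^{-6}\) chosen so that \(\tau\) comes out in seconds; K does not affect \(T_N\).

```python
import math

R = 8.314  # gas constant, J/(mol K)


def tau(T, K, dGm, m, T_inf):
    """Isothermal transformation start time from Eq. [15]; requires T < T_inf."""
    return K * math.exp(dGm / (R * T)) / (T_inf - T) ** m


def nose_temperature(dGm, m, T_inf):
    """Positive root of m*R*T^2 + dGm*T - dGm*T_inf = 0."""
    return (-dGm + math.sqrt(dGm ** 2 + 4 * m * R * T_inf * dGm)) / (2 * m * R)


K, dGm, m, T_inf = 3e-6, 240e3, 3.0, 1100.0  # hypothetical parameters
T_N = nose_temperature(dGm, m, T_inf)

# Brute-force minimum of tau on a 0.01 K grid from 700 K to T_inf - 1 K
grid = [700.0 + 0.01 * i for i in range(39900)]
T_min = min(grid, key=lambda T: tau(T, K, dGm, m, T_inf))
print(f"T_N = {T_N:.2f} K, grid minimum at {T_min:.2f} K, "
      f"tau(T_N) = {tau(T_N, K, dGm, m, T_inf):.1f} s")
```

With these values the nose lies at about 997 K. Rearranged, the condition reads \(T_\infty - T_N = mRT_N^2/\Delta G_m\), so a larger m or a smaller \(\Delta G_m\) increases the undercooling at the nose.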

To use the TTT/IT curve given by Eq. [15] for calculating the transformation start during cooling, the additivity rule (i.e., the rule of Scheil)28 is usually applied: the transformation is estimated to start at the time \(t_s\) at which the integral over time t in Eq. [16] reaches unity, i.e.,

\[ \int_0^{t_s} \frac{dt}{\tau(T(t))} = 1 \tag{16} \]

where the lower bound, \(t = 0\), is the time instant at which the material temperature is \(T_\infty\). As discussed by Kirkaldy,36 the rule described by Eq. [16] can be expected to have general validity in describing the transformation onset. Furthermore, according to Reference 36, for a constant cooling rate \(\dot{\theta}(T_{\text{CCT}})\) that produces the transformation start at temperature \(T_{\text{CCT}}\), the condition given by Eq. [16] is equivalent to (17)

From Eq. [17], \(\dot{\theta }(T_{CCT})\) can be obtained by integration so that

where \(T^*_{\text{CCT}}\) denotes the integration variable. Finally, when \(\dot{\theta}(T_{\text{CCT}})\) is known, the time coordinate in the usual (time, temperature) CCT diagram can be calculated as

\[ t_{\text{CCT}} = \frac{T_0 - T_{\text{CCT}}}{\dot{\theta}(T_{\text{CCT}})} \tag{19} \]

where \(T_0\) is the temperature corresponding to the cooling start time \(t=0\).
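The additivity rule is what allows the fitted TTT curve to be applied to nonlinear cooling. The sketch below integrates Eq. [16] numerically (trapezoid rule in time) along an arbitrary cooling path T(t) that starts from \(T_\infty\) at t = 0, and returns the first time at which the integral reaches unity. The parameter values, the paths (linear cooling clamped at 300 K, and Newtonian exponential cooling towards 300 K) and the function names are hypothetical; a return value of None corresponds to a path that never satisfies the unity condition within `t_max`, as happens when cooling faster than the critical cooling rate.

```python
import math

R = 8.314  # gas constant, J/(mol K)


def inv_tau(T, K, dGm, m, T_inf):
    """1/tau from Eq. [15]; zero at or above T_inf, where no transformation occurs."""
    if T >= T_inf:
        return 0.0
    return (T_inf - T) ** m / (K * math.exp(dGm / (R * T)))


def scheil_start(T_of_t, params, dt=0.01, t_max=1e4):
    """Accumulate Eq. [16] with the trapezoid rule; return (t_s, T(t_s)) or None."""
    t, total = 0.0, 0.0
    prev = inv_tau(T_of_t(t), *params)
    while t < t_max:
        t_next = t + dt
        cur = inv_tau(T_of_t(t_next), *params)
        total += 0.5 * (prev + cur) * dt
        if total >= 1.0:
            return t_next, T_of_t(t_next)
        t, prev = t_next, cur
    return None


params = (3e-6, 240e3, 3.0, 1100.0)  # hypothetical K, dGm (J/mol), m, T_inf (K)
T_inf = params[3]


def linear(rate):
    """Constant cooling rate (K/s) from T_inf, held at 300 K once reached."""
    return lambda t: max(T_inf - rate * t, 300.0)


def newtonian(T_env, time_const):
    """Nonlinear path: exponential approach from T_inf to T_env."""
    return lambda t: T_env + (T_inf - T_env) * math.exp(-t / time_const)


for name, path in [("1 K/s", linear(1.0)), ("10 K/s", linear(10.0)),
                   ("Newtonian", newtonian(300.0, 120.0))]:
    print(name, scheil_start(path, params))
```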

While the TTT/IT curve is conveniently described by the analytical Eq. [15], the integral in Eq. [18], which is needed for constructing the CCT curve, cannot easily be obtained in closed form, since the parameter m can be any positive real number (not necessarily an integer). For this reason, the CCT curve is evaluated by numerical integration of Eqs. [15], [18] and [19] using the trapezoid rule, once the parameters K, \(\Delta G_m\), m and \(T_\infty\) are defined.
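A minimal sketch of this numerical evaluation, assuming hypothetical parameter values (\(K = 3 \times 10^{-6}\), \(\Delta G_m\) = 240 kJ/mol, m = 3, \(T_\infty\) = 1100 K) and a hypothetical cooling start temperature \(T_0\) = 1173.15 K. Writing the integrand as \(1/\tau = (T_\infty - T)^m / [K \exp(\Delta G_m/RT)]\) keeps it finite (zero) at the upper limit \(T_\infty\), so the trapezoid rule can be applied directly.

```python
import math

R = 8.314  # gas constant, J/(mol K)


def inv_tau(T, K, dGm, m, T_inf):
    """1/tau from Eq. [15]; zero at T_inf, so the integrand is finite there."""
    if T >= T_inf:
        return 0.0
    return (T_inf - T) ** m / (K * math.exp(dGm / (R * T)))


def cooling_rate(T_cct, params, n=2000):
    """Eq. [18] by the trapezoid rule: constant rate giving transformation start at T_cct."""
    T_inf = params[3]
    h = (T_inf - T_cct) / n
    s = 0.5 * (inv_tau(T_cct, *params) + inv_tau(T_inf, *params))
    s += sum(inv_tau(T_cct + i * h, *params) for i in range(1, n))
    return s * h


def cct_time(T_cct, T0, params):
    """Eq. [19]: time from cooling start at T0 to transformation start at T_cct."""
    return (T0 - T_cct) / cooling_rate(T_cct, params)


params = (3e-6, 240e3, 3.0, 1100.0)  # hypothetical K, dGm (J/mol), m, T_inf (K)
T0 = 1173.15                         # hypothetical cooling start temperature, K
for T_cct in (900.0, 950.0, 1000.0, 1050.0):
    print(f"T = {T_cct:.0f} K: rate = {cooling_rate(T_cct, params):.3f} K/s, "
          f"t = {cct_time(T_cct, T0, params):.1f} s")
```

Each \(T_{\text{CCT}}\) yields one point \((t_{\text{CCT}}, T_{\text{CCT}})\) of the CCT start curve; in a least-squares fit these points would be compared with the GBM output.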

Since we wish to calculate the transformation start during cooling for any steel composition and any cooling path, the physically based model was fitted to the data obtained from the gradient boosting model described in Section III–A. As described in Section III, GBM provides estimates both for the critical cooling rate and for the transformation start temperature as a function of cooling rate when the cooling rate is lower than the critical value. To find the parameters of the physically based model, we used least-squares curve fitting with the MATLAB function lsqcurvefit37,38 to fit the numerical function \(t_{\text{CCT}}(T)\) defined by Eqs. [15], [18] and [19]. The physically based model (blue dashed line in Figure 6) was fitted to the values obtained by GBM (green solid line) for cooling rates slower than the critical cooling path (black dash-dot line).

Fitting of the physically based model (CCT fitted to output from GBM-model) to the data obtained from GBM-model for transformation onset temperatures (CCT predicted by GBM) for cooling rates slower than the critical cooling rate (GBM critical cooling path). The experimental CCT data is shown with blue circles. The predicted TTT diagram is shown with the red dotted line and compared to the experimental TTT data (Color figure online)

Since the gradient boosting algorithm yields values that are not evenly distributed on the temperature axis, we first divided the temperature range used in the fitting into equally spaced intervals and then calculated the average time coordinate for each interval. The mid-point of each temperature interval and the corresponding averaged time coordinate were then used in fitting the parameters of the numerical function \(t_{\text{CCT}}(T)\). The curve was fitted in (\(\log_{10}\)(time), temperature) coordinates. The fitting limits were set as follows. According to Reference 39, the typical value of 240 kJ/mol, equal to the activation energy for self-diffusion of iron, would be a suitable value for the activation energy for atomic migration between the nucleus and the matrix, \(\Delta G_m\). Since the chemical free energy change due to the transformation affects the activation energy,39 \(\Delta G_m\) was allowed to vary between 70 and 105 pct of this value (i.e., 168 to 252 kJ/mol) in the fitting. The parameter m was allowed to vary from 2 to 5.5. No fitting limits were set for the parameter K.
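The pre-processing described above can be sketched as follows: equally spaced temperature bins, the mean time per non-empty bin placed at the bin mid-point, and the stated bounds for \(\Delta G_m\) and m. The subsequent least-squares fit in \(\log_{10}\)(time) coordinates, done in the study with MATLAB's lsqcurvefit, is not reproduced; the helper name and the example points are hypothetical.

```python
def bin_average(points, T_low, T_high, n_bins):
    """Average (time, temperature) points within equally spaced temperature bins.

    Returns (bin mid-point temperature, mean time) for every non-empty bin.
    """
    width = (T_high - T_low) / n_bins
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for t, T in points:
        if T_low <= T <= T_high:
            k = min(int((T - T_low) / width), n_bins - 1)  # T_high goes to the last bin
            sums[k] += t
            counts[k] += 1
    return [(T_low + (k + 0.5) * width, sums[k] / counts[k])
            for k in range(n_bins) if counts[k]]


# Parameter bounds stated in the text
dGm_ref = 240.0                                # kJ/mol
dGm_bounds = (0.70 * dGm_ref, 1.05 * dGm_ref)  # about (168, 252) kJ/mol
m_bounds = (2.0, 5.5)

# Hypothetical GBM output as (time in s, temperature in K) pairs
points = [(10.0, 1001.0), (20.0, 1004.0), (30.0, 1012.0)]
print(bin_average(points, 1000.0, 1020.0, 2), dGm_bounds, m_bounds)
```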

After the parameter values have been obtained from the least squares fitting, the TTT curve can be predicted using Eq. [15]. The result for the example case is shown as a red dotted line in Figure 6, where the predicted curve is compared to the experimental data points (red markers) and the experimental start curve (red solid line).

Results and Discussion

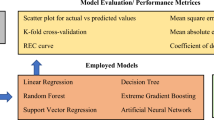

Testing the Accuracy of Predictions on Digitized CCT-Diagrams

Two GBM-models were fitted with the R library XGBoost. The first describes the effect of cooling rate on the ferrite transformation start temperature for a given composition; its data set contained ferrite transformation onset temperatures for varying nominal compositions over a range of cooling rates. The second GBM-model was fitted for predicting the critical cooling rate for ferrite transformation. Its training examples were essentially the same as for the first model, but the response was the critical cooling rate and the covariates were the nominal compositions of the steel.

For the sake of comparison, a Random Forest model was also fitted to the ferrite transformation onset data using the R package randomForest.40 Since the Random Forest model had only one adjustable hyperparameter, and it was integer-valued, the optimal Random Forest model could easily be found by experimentation alone.

Ferrite start curves were also predicted with the proprietary software JMatPro. The performance of the GBM-model predicting ferrite start temperature was tested on digitized CCT-diagrams from Reference 23 and compared to the predictions given by the Random Forest model and JMatPro. The objective function chosen for the two GBM-models was squared error, since in XGBoost it consistently led to better performance than pseudo-Huber error, the only other reasonable alternative available at the time. Squared error was also the only possible objective function in the Random Forest model.
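To illustrate how the two XGBoost objectives differ (XGBoost exposes them as `reg:squarederror` and `reg:pseudohubererror`, the latter with a slope parameter `huber_slope` that plays the role of δ below), the sketch compares the half squared error with the pseudo-Huber loss \(\delta^2(\sqrt{1 + (r/\delta)^2} - 1)\) for a residual r. The value δ = 1 is an arbitrary assumption. For small residuals the two losses coincide, while for large residuals the pseudo-Huber loss grows only linearly, so large deviations in the training data are penalized less.

```python
import math


def half_squared_error(residual):
    """0.5 * r**2, the form L(y, F) = (y - F)**2 / 2 used in Appendix A."""
    return 0.5 * residual ** 2


def pseudo_huber(residual, delta=1.0):
    """Pseudo-Huber loss delta**2 * (sqrt(1 + (r / delta)**2) - 1)."""
    return delta ** 2 * (math.sqrt(1.0 + (residual / delta) ** 2) - 1.0)


if __name__ == "__main__":
    # Residuals in °C; delta = 1 is an illustrative choice only.
    for r in (0.1, 5.0, 50.0):
        print(f"r = {r:5.1f}: squared {half_squared_error(r):8.3f}, "
              f"pseudo-Huber {pseudo_huber(r):8.3f}")
```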

Since the JMatPro temperatures were given over a grid of cooling rates that did not coincide with any of the cooling rates in the digitized diagrams, the reported JMatPro prediction for each cooling rate is a value linearly interpolated between the temperatures at the two nearest cooling rates of the JMatPro grid.
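The interpolation of the JMatPro predictions can be sketched as below. The grid values are hypothetical, and since the text does not state whether the interpolation was linear in the cooling rate itself or in its logarithm, both variants are offered through a `log_rate` flag (a hypothetical parameter); the default follows the literal reading of linear interpolation in the cooling rate.

```python
import math
from bisect import bisect_left


def interpolate_on_grid(rate, grid_rates, grid_temps, log_rate=False):
    """Start temperature at `rate`, interpolated between the two nearest grid rates.

    grid_rates must be sorted in increasing order. With log_rate=True the
    interpolation weight is computed on log10(cooling rate) instead.
    """
    if not grid_rates[0] <= rate <= grid_rates[-1]:
        raise ValueError("cooling rate lies outside the tabulated grid")
    j = bisect_left(grid_rates, rate)  # index of the first grid rate >= rate
    if grid_rates[j] == rate:
        return grid_temps[j]
    x, x0, x1 = rate, grid_rates[j - 1], grid_rates[j]
    if log_rate:
        x, x0, x1 = math.log10(x), math.log10(x0), math.log10(x1)
    w = (x - x0) / (x1 - x0)
    return grid_temps[j - 1] + w * (grid_temps[j] - grid_temps[j - 1])


if __name__ == "__main__":
    # Hypothetical grid of cooling rates (°C/s) and start temperatures (°C).
    rates = [1.0, 10.0, 100.0]
    temps = [800.0, 750.0, 650.0]
    print(interpolate_on_grid(5.5, rates, temps))
    print(interpolate_on_grid(5.5, rates, temps, log_rate=True))
```

On a grid that is roughly evenly spaced in log10 of cooling rate, the two variants can differ noticeably between grid points, so the choice matters most where the grid is coarse.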

Because gradient boosting is adaptive, the training error can in practice be made as small as desired. At some point in the training process, however, the model starts to overfit, i.e., it starts to adjust to random fluctuations that are specific to the particular training data set but not generally characteristic of the observations of interest. The goodness of fit of the different models is therefore illustrated only for test data in Figure 9.

Figures 7 and 8 contain predictions of ferrite start temperatures as a function of cooling rate, plotted against test data for 19 different compositions. The x-coordinate of a point corresponds to \(\log _{10}\) of the cooling rate (°C/s) and the y-coordinate to the temperature in degrees Celsius. Red circles correspond to digitized observations, green triangles to predictions given by JMatPro, purple crosses to Random Forest predictions, and turquoise squares to predictions of the gradient boosting model. Dashed black vertical lines mark the critical cooling rates predicted by the GBM-model. As critical cooling rates cannot be accurately digitized, the corresponding measured values could not be provided. The goodness of fit of the predicted critical cooling rates can, however, be approximately evaluated from the locations of the points that form the experimental curve. For example, for Steel-2B the shape of the experimental curve suggests that the true critical cooling rate might be quite close to the predicted one, whereas for Steel-2C the predicted critical cooling rate is clearly too small. The labels denote diagrams in Reference 23. Compositions together with values of the \({P}_{\text {A}}\)-parameter for the test steels are given in Appendix B. The compositions for test set 2 are not complete, as some of them also contain small amounts of one or more of the following elements: phosphorus, sulfur and vanadium. This is addressed in the second paragraph of Section IV–D.

Tables III and IV contain the mean absolute prediction errors for each test steel and each method, as well as the averages over all steels in each test set. Gradient boosting has the smallest average mean absolute prediction error in both test sets. For reference, the mean absolute errors of the chosen gradient boosting model in the 10-fold cross-validation were 10.91, 12.84, 12.06, 11.60, 12.28, 12.17, 11.94, 13.03, 11.61 and 14.45, with an average of 12.29. Frequency histograms for each of these cross-validation cases can be found in Appendix C. Tables V and VI contain the corresponding relative errors. Note that the reported average for each model is not the average of the per-steel errors, since each per-steel error is already an average over the errors of predictions at individual cooling rates; instead, the reported average for each model is taken over all cooling rates in all diagrams of the test set. Values of R², also known as the coefficient of determination, calculated from predictions on test data for the different models are listed in Table VII.
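The difference between averaging the per-steel errors and pooling all cooling rates, as well as the R² definition, can be checked with a short sketch. The error values for steels "A" and "B" are hypothetical; only the ten cross-validation fold errors are taken from the text. Because steels contribute different numbers of cooling rates, a steel with few but large errors pulls the mean of per-steel averages further than it pulls the pooled average.

```python
def mean_absolute_errors(errors_by_steel):
    """Per-steel MAE and the MAE pooled over all cooling rates of all steels."""
    per_steel = {
        steel: sum(abs(e) for e in errors) / len(errors)
        for steel, errors in errors_by_steel.items()
    }
    pooled = [abs(e) for errors in errors_by_steel.values() for e in errors]
    return per_steel, sum(pooled) / len(pooled)


def r_squared(observed, predicted):
    """Coefficient of determination 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical errors (°C): steel "A" has 2 cooling rates, steel "B" has 6.
    errors = {"A": [10.0, -10.0], "B": [2.0, -2.0, 2.0, -2.0, 2.0, 2.0]}
    per_steel, pooled = mean_absolute_errors(errors)
    mean_of_means = sum(per_steel.values()) / len(per_steel)
    print(per_steel, mean_of_means, pooled)  # 6.0 vs 4.0: the two averages differ

    # Fold MAEs of the 10-fold cross-validation reported in the text.
    cv_mae = [10.91, 12.84, 12.06, 11.60, 12.28, 12.17, 11.94, 13.03, 11.61, 14.45]
    print(round(sum(cv_mae) / len(cv_mae), 2))  # 12.29
```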

Figure 10 illustrates the relative importance of the variables in the fitted GBM-model. For each variable, the relative importance is the relative average decrease in squared loss caused by splits on that variable across all nodes in all trees of the model. For a mathematical derivation, see Reference 18. Note the great importance of \({P}_{\text {A}}\) in building the trees. This may be partly because \({P}_{\text {A}}\) and C are the only variables that are nonzero in every observation. The lengths of the bars do not, however, translate directly into first-order effects.
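A normalized importance measure of this kind can be sketched as follows, assuming a list of (variable, loss reduction) records with one record per split node across all trees. The split records are hypothetical. Since it is not certain from the text whether "relative average decrease" refers to the mean or to the total loss reduction per variable, the hypothetical `average` flag offers both; in either case the values are scaled to sum to one, which is why they indicate relative usefulness for splitting rather than the size or sign of a first-order effect.

```python
from collections import defaultdict


def relative_importance(splits, average=True):
    """Normalized importance from (variable, loss_reduction) pairs.

    splits: one pair for every internal node of every tree in the ensemble.
    average=True uses the mean loss reduction per split on each variable,
    average=False the total. Results are scaled to sum to 1.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for variable, loss_reduction in splits:
        totals[variable] += loss_reduction
        counts[variable] += 1
    raw = {v: (totals[v] / counts[v] if average else totals[v]) for v in totals}
    scale = sum(raw.values())
    return {v: r / scale for v, r in raw.items()}


if __name__ == "__main__":
    # Hypothetical split records; the numbers are illustrative only.
    splits = [("P_A", 6.0), ("P_A", 2.0), ("C", 3.0), ("Mn", 1.0)]
    print(relative_importance(splits))                 # averaged per split
    print(relative_importance(splits, average=False))  # summed over splits
```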

The discrepancy between observed and predicted temperatures seems most notable for Steel-1A. This could be due to a lack of similar compositions in the training set. The reported composition of Steel-1A is 0.1 wt pct C, 0.29 wt pct Si and 0.74 wt pct Mn. The training set contains data for only four compositions that contain only C, Si and Mn with less than 0.2 wt pct C. These compositions are:

1. 0.15 wt pct C, 0.25 wt pct Si, 1.40 wt pct Mn,
2. 0.18 wt pct C, 0.20 wt pct Si, 0.45 wt pct Mn,
3. 0.19 wt pct C, 0.20 wt pct Si, 1.50 wt pct Mn,
4. 0.19 wt pct C, 0.20 wt pct Si, 1.20 wt pct Mn.

Of these, only the second composition comes at all close to the composition of Steel-1A. Two other compositions in the training set are significantly closer to the composition of Steel-1A in terms of Euclidean distance than any of these four. These are:

1. 0.25 wt pct C, 0.20 wt pct Si, 0.70 wt pct Mn,
2. 0.30 wt pct C, 0.20 wt pct Si, 0.70 wt pct Mn.

However, as carbon is clearly the single most significant element (see Figure 10), neither of these can be regarded as being close to the composition of Steel-1A.
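The distance argument above can be checked with the compositions listed in the text. In this sketch, elements not mentioned are assumed to be zero, the labels A1 to A4 and B1 to B2 are hypothetical names for the two lists in the order given, and the carbon weight of 5 is an arbitrary illustration of giving carbon more influence, not a value from this study. Unweighted, B1 (0.25 wt pct C) is the nearest neighbour of Steel-1A; once carbon differences are weighted, A2 (0.18 wt pct C) becomes the nearest.

```python
import math

STEEL_1A = {"C": 0.10, "Si": 0.29, "Mn": 0.74}  # wt pct

# Training compositions quoted in the text; labels A1-A4 and B1-B2 are hypothetical.
TRAINING = {
    "A1": {"C": 0.15, "Si": 0.25, "Mn": 1.40},
    "A2": {"C": 0.18, "Si": 0.20, "Mn": 0.45},
    "A3": {"C": 0.19, "Si": 0.20, "Mn": 1.50},
    "A4": {"C": 0.19, "Si": 0.20, "Mn": 1.20},
    "B1": {"C": 0.25, "Si": 0.20, "Mn": 0.70},
    "B2": {"C": 0.30, "Si": 0.20, "Mn": 0.70},
}


def composition_distance(a, b, weights=None):
    """(Optionally weighted) Euclidean distance in wt pct; missing elements count as 0."""
    weights = weights or {}
    elements = set(a) | set(b)
    return math.sqrt(sum(
        (weights.get(el, 1.0) * (a.get(el, 0.0) - b.get(el, 0.0))) ** 2
        for el in elements
    ))


def nearest(target, candidates, weights=None):
    """Label of the candidate composition closest to target."""
    return min(candidates,
               key=lambda name: composition_distance(target, candidates[name], weights))


if __name__ == "__main__":
    for name, comp in TRAINING.items():
        print(name, round(composition_distance(STEEL_1A, comp), 3))
    print("nearest, unweighted:", nearest(STEEL_1A, TRAINING))
    # Hypothetical weight of 5 on carbon, standing in for its dominant influence.
    print("nearest, carbon weighted:", nearest(STEEL_1A, TRAINING, {"C": 5.0}))
```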

Fitting of Physically Based Model on Either Experimental Data or Virtual Data Generated by Gradient Boosting Method

While the gradient boosting method can estimate the transformation start for approximately linear cooling paths (constant cooling rates), the physically based model makes it possible to calculate the transformation start for any cooling path. In this subsection we first show the results of fitting the physically based model directly to the experimental CCT data and then compare them with the results of fitting it to the virtual data generated by the gradient boosting method. The aim is to show that the virtual data can be used to fit the physically based model. To see how well the physically based model described in Section III–B can reproduce the experimentally observed CCT and TTT curves, we first fitted the function \( t_{{{\text{CCT}}}} (T) \) defined by Eqs. [15], [18] and [19] to the experimental CCT start data given in Reference 41 for four steels in which the ferrite start curve was clearly distinguishable from the bainite start curve. These steels were chosen from the second test set. Their compositions, austenization temperatures and times are shown in Table VIII.

These steels contain trace amounts of elements not present in the training steels. However, the model seems to work reasonably well without taking them into account, which suggests that their effect is not significant. Also, in earlier models3,42 the dependence of ferrite start temperature on vanadium was negligible, and the effect of sulfur and phosphorus was not considered.

The following procedure was applied to fit the physically based model directly to experimental data. For each case, the model was fitted to the six fastest cooling rates at which the transformation start was experimentally observed, so that the CCT data described the shape of the CCT “nose” as accurately as possible. The nose region is important from the application point of view, since it determines the lowest cooling rate that avoids ferrite formation. After fitting the model, we used it to predict the TTT start curve by applying Eq. [15]. The results were compared to the experimental TTT start curve and to the measured TTT start points, which are indicated by the measured hardness values in the figures presented in Reference 41. Two measures were calculated to quantify the difference between the TTT data: \({\Delta }_t\) is the average time difference between the measured TTT points (red star markers) and the simulated values, and \({\Delta }_d\) is the average minimum distance in (temperature/°C, time/s) coordinates between the digitized TTT curve (continuous red line) and the simulated curve, obtained by calculating the minimum distance for each digitized point and averaging over the points. These comparisons are shown in Figure 11. For all of the test cases the CCT data are reasonably well fitted by the function, but the TTT start time is overestimated in cases (a) and (b). In cases (c) and (d) the TTT “nose” times are well predicted, but in case (d) the top part of the predicted TTT curve is slightly higher than the experimental measurements.
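The two measures can be sketched as below under stated assumptions: \({\Delta }_t\) is taken as the mean absolute difference between each measured start time and the simulated start time at the same temperature, and \({\Delta }_d\) uses the plain Euclidean distance in (temperature/°C, time/s) coordinates, as in the text, to a simulated curve sampled at discrete points. The function names and data are hypothetical.

```python
import math


def delta_t(measured_points, tau):
    """Mean absolute time difference between measured TTT start points and the model.

    measured_points: (temperature in °C, time in s) pairs
    tau: simulated TTT start time (s) as a function of temperature (°C)
    """
    return sum(abs(t - tau(T)) for T, t in measured_points) / len(measured_points)


def delta_d(digitized_points, simulated_points):
    """Mean over digitized points of the distance to the nearest simulated point."""
    return sum(
        min(math.dist(p, q) for q in simulated_points) for p in digitized_points
    ) / len(digitized_points)


if __name__ == "__main__":
    # Hypothetical data in (°C, s) coordinates.
    measured = [(600.0, 12.0), (650.0, 7.0)]
    digitized = [(600.0, 10.0), (650.0, 20.0)]
    simulated = [(600.0, 13.0), (640.0, 20.0)]
    print(delta_t(measured, lambda T: 10.0))
    print(delta_d(digitized, simulated))
```

Because temperature and time enter the distance unscaled, \({\Delta }_d\) is dominated by whichever coordinate has the larger numerical spread; sampling the simulated curve more densely brings the point-to-point distance closer to the distance from the continuous curve.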

Physically based model (blue dashed line) fitted to the experimental CCT data (blue circles) for the four test steels chosen for comparing the fit of the physically based model directly to experimental data with the fit to predictions given by the GBM-model. The predicted TTT curve (red dotted line) is compared to experimental TTT data (red star markers and red solid curve). Compositions, austenization temperatures and holding times for steels (a)–(d) are given in Table VIII (Color figure online)

To see how well the virtual data obtained from the GBM-model can be used for fitting the physically based model, we fitted the model to the virtual data using the procedure described in Section III–B and compared the result to the experimental CCT and TTT data for the same four steels that were used in the direct fit to experimental CCT data. The result of the fitting is shown in Figure 12. The same measures (\({\Delta }_t\) and \({\Delta }_d\)) as for the experimental data fit were calculated for the difference between the simulated and experimental TTT data. The experimental CCT data are well reproduced by the fit to the virtual GBM data, except in case (c), where the physically based model gives slightly lower start temperatures for intermediate cooling rates. Compared with the results of the direct fit to the experimental data (Figure 11), the fit to the virtual data given by the GBM-model yields similar accuracy. The fitting therefore provides a useful approximation of the function \(\tau (T)\) described by Eq. [15]. The values of \(\tau \) can then be used to calculate the transformation start for arbitrary cooling paths using the rule of Scheil, Eq. [16].
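In its usual form, Scheil's additivity rule states that the transformation starts when the sum \(\sum_i \Delta t_i / \tau(T_i)\) along the cooling path reaches 1. A minimal sketch is given below. The incubation-time function `hypothetical_tau` is a placeholder with a constant incubation time below 850 °C and no nucleation above; it is not Eq. [15], whose fitted values would be substituted in practice. The time step and the midpoint rule are choices made for this illustration.

```python
import math


def scheil_start(temperature_at, tau, dt=0.01, t_max=1.0e4):
    """Transformation start on a cooling path by Scheil's additivity rule.

    temperature_at: cooling path T(t) in °C as a function of time in s.
    tau: incubation time tau(T) in s at constant temperature T (math.inf
         where no transformation can start).
    Returns (time, temperature) at which sum(dt / tau(T)) first reaches 1,
    or None if it does not do so within t_max.
    """
    scheil_sum = 0.0
    for k in range(int(t_max / dt)):
        t_mid = (k + 0.5) * dt  # midpoint rule for each time step
        scheil_sum += dt / tau(temperature_at(t_mid))
        if scheil_sum >= 1.0:
            t_start = (k + 1) * dt
            return t_start, temperature_at(t_start)
    return None


def hypothetical_tau(temp_c):
    """Placeholder incubation curve, NOT Eq. [15]: no nucleation above 850 °C."""
    return math.inf if temp_c > 850.0 else 5.0


if __name__ == "__main__":
    linear = lambda t: 900.0 - 10.0 * t                    # constant 10 °C/s
    newton = lambda t: 20.0 + 880.0 * math.exp(-t / 30.0)  # nonlinear path
    print(scheil_start(linear, hypothetical_tau))
    print(scheil_start(newton, hypothetical_tau))
```

For an isothermal hold at temperature T the sum reaches 1 at t = τ(T), which is why the TTT curve appears as the limiting case of the same calculation.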

Physically based model (blue dashed line) fitted to the CCT data obtained from the GBM-model (green solid line) for the four test steels chosen for comparing the fit of the physically based model directly to experimental data with the fit to predictions given by the GBM-model. The predicted TTT curve (red dotted line) is compared to experimental TTT data (red star markers and red solid curve). Compositions, austenization temperatures and holding times for steels (a)–(d) are given in Table VIII (Color figure online)

Conclusions and Outlook

In this article three different methods for predicting ferrite start temperature were tested: a modification of gradient boosted regression trees called XGBoost,29 Random Forest17,40 and JMatPro.7 Gradient boosting performed best under all tested model performance metrics.

Despite the enforced monotonicity with respect to cooling rate, the piecewise constant nature of regression trees makes the resulting curve non-smooth. The physically based model was therefore fitted to the predictions, which yields a smooth final curve. The fitted physically based model then enables estimation of the transformation start for any linear or nonlinear cooling path by applying the rule of Scheil, and even prediction of the isothermal transformation diagram (i.e., the TTT curve) as an extreme case. Calculation of the transformation for arbitrary cooling paths has been used in several modelling works related to actual processing.44,45,46 The TTT curve calculated by the physically based model was compared to experimentally measured curves for four different steels. Using the gradient boosting CCT data to fit the physically based model yielded a predicted TTT diagram of similar accuracy to that obtained when experimental CCT data were used for fitting.

As mentioned earlier, it might be better to use the austenization temperature \(\rm{T}_{\text {A}}\) and holding time \(\rm{t}_{\text {A}}\) directly instead of the austenization parameter \({P}_{\text {A}}\). There are two reasons for this. First, the mapping \((\rm{T}_{\text {A}}, \rm{t}_{\text {A}}) \mapsto {P}_{\text {A}}\) is not injective, which inherently results in some loss of information. Second, some of the \(\rm{t}_{\text {A}}\)-values are not known holding times but educated guesses, so using \(\rm{t}_{\text {A}}\) at all may seem somewhat questionable. With \(\rm{T}_{\text {A}}\) and \(\rm{t}_{\text {A}}\) as separate inputs, however, the model could largely disregard \(\rm{t}_{\text {A}}\) if creating splits with respect to \(\rm{t}_{\text {A}}\) during tree-building did not improve the goodness of fit much.

In future work, the gradient boosting method will be extended to calculate other phase transformation curves as well, such as bainite start and martensite start temperatures. Furthermore, by fitting to the corresponding data, the model could be used for calculating curves representing the fractions of austenite decomposed at different temperatures during cooling. The effect of prior deformation of austenite on the CCT curves could also be taken into account in future studies. Another application of this method could be sensitivity analysis for different types of steel.

References

J. Miettinen, S. Koskenniska, M. Somani, S. Louhenkilpi, A. Pohjonen, J. Larkiola, and J. Kömi: Metall. Mater. Trans. B, 2019, vol. 50(6), pp. 2853–66.

J. Miettinen, S. Koskenniska, M. Somani, S. Louhenkilpi, A. Pohjonen, J. Larkiola, and J. Kömi: Metall. Mater. Trans. B, 2021, vol. 52(3), pp. 1640–63.

A. Pohjonen, M. Somani, and D. Porter: Metals, 2018, vol. 8(7), p. 540.

A. Pohjonen, M. Somani, and D. Porter: Comput. Mater. Sci., 2018, vol. 150, pp. 244–51.

J. Kirkaldy and D. Venugopalan: Proc. Int. Conf. Phase Transform. Ferr. Alloys, 1983, vol. 8, pp. 125–48.

M.V. Li, D.V. Niebuhr, L.L. Meekisho, and D.G. Atteridge: Metall. Mater. Trans. B, 1998, vol. 29(3), pp. 661–72.

N. Saunders, Z. Guo, X. Li, A.P. Miodownik, and J.P. Schille: JMatPro Softw. Lit., 2004, vol. 12, pp. 1–12.

H. Martin, P. Amoako-Yirenkyi, A. Pohjonen, N.K. Frempong, J. Kömi, and M. Somani: Metall. Mater. Trans. B, 2021, vol. 52(1), pp. 223–35.

U. Lotter: Aufstellung von Regressionsgleichungen zur Beschreibung des Umwandlungsverhaltens beim thermomechanischen Walzen. Forschungsvertrag Nr. 7210EA/123, Kommission der Europäischen Gemeinschaften, Thyssen Stahl AG, Thyssen Forschung, Duisburg, 1991.

A. Pohjonen, M. Somani, J. Pyykkönen, J. Paananen, and D.A. Porter: Key Eng. Mater., 2016, vol. 716, pp. 368–75.

J. Trzaska and L.A. Dobrzański: J. Mater. Process. Technol., 2007, vol. 192, pp. 504–10.

S. Chakraborty, P.P. Chattopadhyay, S.K. Ghosh, and S. Datta: Appl. Soft Comput., 2017, vol. 58, pp. 297–306.

X. Geng, H. Wang, W. Xue, S. Xiang, H. Huang, L. Meng, and G. Ma: Comput. Mater. Sci., 2020, vol. 171, p. 109235.

J. Jiang, R. Wang, M. Wang, K. Gao, D.D. Nguyen, and G.-W. Wei: J. Chem. Inf. Model., 2020, vol. 60(3), pp. 1235–44.

S. Feng, H. Zhou, and H. Dong: Mater. Des., 2019, vol. 162, pp. 300–10.

T. Hastie, R. Tibshirani, J. Friedman, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd edn. (Springer, New York, 2009).

L. Breiman: Mach. Learn., 2001, vol. 45(1), pp. 5–32.

J.H. Friedman: Ann. Stat., 2001, vol. 29(5), pp. 1189–232.

J.H. Friedman: Comput. Stat. Data Anal., 2002, vol. 38(4), pp. 367–78.

F. Wever, A. Rose, W. Peter, W. Strassburg, and L. Rademacher: Atlas zur Wärmebehandlung der Stähle (Verlag Stahleisen m.b.H, Düsseldorf, 1961).

A. Rose and H. Hougardy: Atlas zur Wärmebehandlung der Stähle, vol. 2 (Verlag Stahleisen m.b.H, Düsseldorf, 1972).

M. Atkins, Atlas of Continuous Cooling Transformation Diagrams for Engineering Steels (British Steel Corporation, Sheffield, 1977).

W.W. Cias, Austenite Transformation Kinetics of Ferrous Alloys (Climax Molybdenum Company, Greenwich, 1977).

H. Kaker, Database of Steel Transformation Diagrams (SEMS-EDS and XED Laboratory, Metals Ravne Company, 2007).

P. Maynier, J. Dollet, and P. Bastien: in Hardenability Concepts with Application to Steel, D.V. Doane and J.S. Kirkaldy, eds., TMS-AIME, Warrendale, PA, 1978, p. 163.

S.S. Li, Y.H. Liu, Y.L. Song, L.N. Kong, T.J. Li, and R.H. Zhang: Steel Res. Int., 2016, vol. 87(11), pp. 1450–60.

A. Rohatgi: WebPlotDigitizer, Version 4.5, 2021, https://automeris.io/WebPlotDigitizer. Accessed 20 Jan 2023.

E. Scheil: Archiv für das Eisenhüttenwesen, 1935, vol. 8(12), pp. 565–67.

T. Chen and C. Guestrin: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2016, pp. 785–94.

L. Breiman, J. Friedman, C.J. Stone, R.A. Olshen, Classification and Regression Trees (Chapman & Hall/CRC, New York, 1984).

J. Miettinen, S. Louhenkilpi, H. Kytönen, and J. Laine: Math. Comput. Simul., 2010, vol. 80(7), pp. 1536–50.

V.N. Vapnik, Statistical Learning Theory (Wiley-Interscience, New York, 1998).

S. Wilson, ParBayesianOptimization: Parallel Bayesian Optimization of Hyperparameters. R package version 1.2.4. (2021). https://CRAN.R-project.org/package=ParBayesianOptimization. Accessed 22 Jan 2023

J. Snoek, H. Larochelle, and R.P. Adams: Adv. Neural Inform. Process. Syst., 2012, vol. 25, pp. 2960–68.

D.A. Porter, K.E. Easterling, Phase Transformations in Metals and Alloys, 2nd edn. (Chapman & Hall, London, 1992).

J.S. Kirkaldy and R.C. Sharma: Scr. Metall., 1982, vol. 16(10), pp. 1193–98.

MathWorks: MATLAB documentation, https://se.mathworks.com/help/matlab/. Accessed 10 May 2021.

J.C. Lagarias, J.A. Reeds, M.H. Wright, and P.E. Wright: SIAM J. Optim., 1998, vol. 9(1), pp. 112–47.

C. Capdevila, F.G. Caballero, and C.G. de Andrés: Mater. Trans., 2003, vol. 44(6), pp. 1087–95.

A. Liaw and M. Wiener: R News, 2002, vol. 2(3), pp. 18–22.

G.F. van der Voort, Atlas of Time-Temperature Diagrams for Irons and Steels (ASM International, Cleveland, 1991).

U. Lotter, J. Herman, B. Thomas, Computer Assisted Modelling of Metallurgical Aspects of Hot Deformation and Transformation of Steels (Publications Office for the European Union, Luxembourg, 1997).

F. Wever, A. Rose, Atlas zur Wärmebehandlung der Stähle (Verlag Stahleisen m.b.H, Düsseldorf, 1954).

V. Javaheri, A. Pohjonen, J.I. Asperheim, D. Ivanov, and D. Porter: Mater. Des., 2019, vol. 182, p. 108047.

A. Pohjonen, P. Kaikkonen, O. Seppälä, J. Ilmola, V. Javaheri, T. Manninen and M. Somani: Materialia, 2021, vol. 18, p. 101150.

J. Ilmola, A. Pohjonen, O. Seppälä, O. Leinonen, J. Larkiola, J. Jokisaari, E. Putaansuu, and P. Lehtikangas: Procedia Manuf., 2018, vol. 15, pp. 65–71.

Acknowledgments

The authors wish to acknowledge CSC - IT Center for Science, Finland, for computational resources. This work was supported by the Academy of Finland Profi5/HiDyn funding for mathematics and AI: data insight for high-dimensional dynamics (Grant 326291).

Funding

Open Access funding provided by University of Oulu including Oulu University Hospital.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information


Seppo Louhenkilpi is a professor (retired) and Jyrki Miettinen is a senior scientist (retired).

Appendices

Appendix A: XGBoost Algorithm Description

This section is based entirely on Reference 29.

As the goal is to find the tree \(f_m\) that minimizes (7), terms not dependent on \(f_m\) can be omitted, and the objective becomes

For a fixed tree structure \(f(\,\cdot \,)\), the optimal predicted temperature \(w_j^*\) in leaf j is given by

where \(I_j = \{ i :{\bf{x}}_i \in q^{-1}(j) \}\) is the index set of leaf j.

Note that if \(L(y, F) = \frac{1}{2}(y - F)^2\), and \(\lambda = 0\), then (7) becomes \(L(y_i, F_{m-1}({\bf{x}}_i) + f_m({\bf{x}}_i)) + \gamma T\), and if furthermore the fixed tree structure in (A2) is the same as in the solution to (6), the temperatures \(w_j^*\) are the fitted values in the terminal nodes of the tree resulting as a solution to (6).

Substituting the optimal temperatures (A2) into the effective regularized objective (A1), the optimal value becomes

which can be used to assess the quality of a given candidate tree. From (A3) one obtains a formula for loss reduction for a given split as

where I is the index set of the node to be split, and \(I_L\) and \(I_R\) are index sets for the left and right child nodes respectively given the candidate split. Formula (A4) is used for evaluating the split candidates. When choosing a split point in a given node of a tree, for every variable, the set of instances present in the node is sorted with respect to that variable, and for every value in the sorted list the score

is calculated. The split candidate with the highest score is then chosen as the split point if the split criterion (A4) is positive.
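Assuming (A2) and (A4) take the standard form of Reference 29, with \(g_i\) and \(h_i\) the first and second derivatives of \(L(y_i, F)\) with respect to F at \(F = F_{m-1}({\bf{x}}_i)\), and \(G\), \(H\) their sums over an index set, the leaf value is \(w_j^* = -G_j/(H_j + \lambda)\) and the loss reduction of a split is \(\frac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{G^2}{H+\lambda}\right] - \gamma\). The sketch below implements these formulas and the exact greedy scan over one variable described above; placing the threshold midway between consecutive distinct values is an illustrative choice, and the function names are hypothetical. With squared loss and \(\lambda = 0\), the leaf value reduces to the mean residual, in line with the note on (A2).

```python
def leaf_weight(g, h, lam):
    """Optimal leaf value w* = -G / (H + lambda) for the instances in one leaf."""
    return -sum(g) / (sum(h) + lam)


def best_split(x, g, h, lam=1.0, gamma=0.0):
    """Exact greedy split search on one variable.

    x: values of the variable for the instances in the node
    g, h: first and second derivatives of the loss at the current prediction
    Returns (loss_reduction, threshold) of the best split, or None when no
    split has a positive loss reduction.
    """
    order = sorted(range(len(x)), key=lambda i: x[i])
    G, H = sum(g), sum(h)
    parent = G * G / (H + lam)
    GL = HL = 0.0
    best = None
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        GL += g[i]
        HL += h[i]
        if x[i] == x[nxt]:
            continue  # no threshold separates equal values
        GR, HR = G - GL, H - HL
        score = GL * GL / (HL + lam) + GR * GR / (HR + lam)
        gain = 0.5 * (score - parent) - gamma
        if best is None or gain > best[0]:
            best = (gain, 0.5 * (x[i] + x[nxt]))
    return best if best is not None and best[0] > 0.0 else None


if __name__ == "__main__":
    # Squared loss L = (y - F)**2 / 2 with F = 0: g_i = F - y_i, h_i = 1.
    y = [1.0, 2.0, 10.0, 11.0]
    g = [0.0 - yi for yi in y]
    h = [1.0] * len(y)
    print(leaf_weight(g, h, lam=0.0))  # mean residual 6.0 when lambda = 0
    print(best_split([1.0, 2.0, 3.0, 4.0], g, h, lam=0.0))
```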

Appendix B: Compositions of Test Steels

Appendix C: Cross-Validation Results

See Fig. A1

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Luukkonen, J., Pohjonen, A., Louhenkilpi, S. et al. Gradient Boosted Regression Trees for Modelling Onset of Austenite Decomposition During Cooling of Steels. Metall Mater Trans B 54, 1705–1724 (2023). https://doi.org/10.1007/s11663-023-02782-9


DOI: https://doi.org/10.1007/s11663-023-02782-9