Abstract

This article in the journal Gruppe. Interaktion. Organisation. (GIO) introduces sociodigital self-comparisons (SDSC) as individual evaluations of own abilities in comparison to the knowledge and skills of a cooperating digital actor in a group. SDSC provide a complementary perspective on the acceptance and evaluation of human-robot interaction (HRI). As social robots enter the workplace, in addition to human-human comparisons, digital actors also become objects of comparison (i.e., I vs. robot). To date, SDSC have not been systematically reflected in HRI. Therefore, we introduce SDSC from a theoretical perspective and reflect on their significance for social robot applications. First, we conceptualize SDSC based on psychological theories and research on social comparison. Second, we illustrate the concept of SDSC for HRI using empirical data from 80 hybrid teams (two human actors and one autonomous agent) who worked together in an interdependent computer-simulated team task. SDSC in favor of the autonomous agent corresponded to functional (e.g., robot trust or team efficacy) and dysfunctional (e.g., job threat) team-relevant variables, highlighting the two-sidedness of SDSC in hybrid teams. Third, we outline the (practical) potential of SDSC for social robots in the field and the lab.

Zusammenfassung (translated from German)

This article in the journal Gruppe. Interaktion. Organisation. (GIO) presents sociodigital self-comparisons (SDSC) as an individual’s evaluation of his or her own abilities in comparison to the knowledge and skills of a cooperating digital actor in a group. SDSC offer a complementary perspective on the acceptance and evaluation of human-robot interaction (HRI). With the arrival of social robots in the world of work, autonomous actors, in addition to human-human comparisons, also become objects of comparison in work teams (i.e., I vs. robot). So far, however, SDSC have not been systematically considered in HRI research. We therefore introduce SDSC from a theoretical perspective and reflect on their significance for practical applications of social robots. First, SDSC are conceptualized on the basis of psychological theories and research on social comparisons. In the second part, we illustrate the concept of SDSC for HRI using empirical data from 80 hybrid teams (two human actors and one autonomous agent) working together in an interdependent, computer-simulated team task. SDSC in favor of the autonomous agent correlated with functional (e.g., robot trust or team efficacy) and dysfunctional (e.g., job threat) team-relevant variables, which illustrates the two-sidedness of SDSC in hybrid teams. In the third part, the (practical) potential of SDSC for social robots in the field and the lab is outlined.

Humans have a natural drive to compare their own abilities with those of others in social settings such as work teams, with positive and negative implications for team effectiveness and satisfaction (Festinger 1954; Margolis and Dust 2016). As autonomous technologies are implemented in the field, all-human teams turn into hybrid teams including sociodigital team members (i.e., humans, software agents based on artificial intelligence [AI], and robots), working interdependently toward a shared goal. Apart from humans, digital actors also become objects of comparison, especially in the context of social robots that are designed to interact with humans in a social setting (Koolwaay 2018) and to fulfill complex, formerly human-specific tasks (e.g., conversation with a customer at the hotel reception) autonomously. Thus, it is necessary to examine how the individual sociodigital self-comparisons (SDSC) of a human within a hybrid team, which are either in favor of the human self (I > robot) or the nonhuman team member (I < robot), impact affective, cognitive-motivational, and behavioral core variables of (human-robot) teaming.

Evidence on SDSC in the field of human-robot interaction (HRI) is very limited (Granulo et al. 2019). However, decades of psychological research on social comparisons in traditional all-human work teams have uncovered a complex pattern of relations between social comparisons and team-relevant outcomes such as satisfaction, efficacy, or trust (see Margolis and Dust 2016) and the importance of social comparisons for self-perceptions of competence (Trautwein and Möller 2016) or threat (Granulo et al. 2019).

For decades, automation research has classified task-overarching abilities at which humans or machines are better (e.g., Fitts 1951) or compared humans and robots regarding basic psychological states (e.g., emotions or thoughts, Haslam et al. 2008). Where the focus was on a specific work task, HRI researchers have compared humans’ and robots’ objective performance from an external point of view (e.g., Bridgeman et al. 2012). Subjective SDSC from a self-perspective have not been systematically assessed in HRI (e.g., You and Robert 2017). Especially when humans cooperate with social robots, the proximity to human emotions and behaviors should stimulate SDSC, which should in turn serve as a powerful predictor of HRI. However, SDSC are not limited to interactions with social robots. The concept is transferable to other interdependent tasks in which autonomous digital actors (e.g., industrial robots, AI-based software agents) interact with humans.

With this article, we present SDSC as a new explanatory variable for the evaluation, acceptance, and rejection of social robots in the field, especially in settings with human-like robotic communication and gestures. In the first section, we conceptualize SDSC theoretically based on psychological research (i.e., work, team, social, and educational psychology as well as HRI). The existence of SDSC especially, but not only, in the context of social robots is reflected along with the key characteristics of social robots that reinforce SDSC as predicted by social comparison theory (Festinger 1954). Second, we illustrate the relevance of SDSC in a computer-simulated hybrid team task to point out correlates with relevant variables of HRI. Reflecting on SDSC correlates with functional and dysfunctional variables of HRI (i.e., robots as opportunity and threat), the relevance of careful and guided human-robot comparisons for robot implementation becomes obvious. Most importantly, we discuss the potential of SDSC for scientific research and field applications in social robots in the third part of this paper. Social robots are designed to support human-like interactions (Koolwaay 2018). Due to the high similarity of robots to human interaction (and appearance), people’s natural drive to compare themselves (Festinger 1954) may be of special importance in the field application of social robots.

1 Theoretical conceptualization of SDSC and related concepts: Relevance for HRI

In his social comparison theory, Festinger (1954) posits that people have a natural drive to accurately assess their own abilities and that comparisons between the self and others deliver information for such accurate self-evaluations, especially in the absence of objective standards. Extensive research has continuously extended and transferred Festinger’s (1954) theory to various settings, including the work context (see Buunk and Gibbons 2007). Within work teams, research supports that social comparisons are likely and show complex relations to team-relevant outcomes, depending on the frame of reference (e.g., upward vs. downward comparisons with an individual or a group) or the interpretation of social comparisons (i.e., assimilation vs. contrasting, see Margolis and Dust 2016).

In the field of HRI, research on SDSC is nearly nonexistent (e.g., Granulo et al. 2019). This situation is probably due to one of Festinger’s (1954) axioms stating that “[t]he tendency to compare oneself with some other specific person decreases as the difference between his opinion or ability and one’s own increases” (p. 120). Thus, people are less likely to compare themselves with traditional robots when thinking about robots as automatized or teleoperated tools fulfilling repetitive tasks. However, as social robots enter the workplace, SDSC in specific work tasks are likely to exist for reasons of the robot type, work task, and teaming aspect.

First, social robots are explicitly designed to support human-like interactions. Robots in general are “embodied machines equipped with sensors and actuators and some (potentially limited) degree of artificial intelligence” (Smids et al. 2019, p. 505). In the case of social robots, the level of autonomy (Endsley 2017; O’Neill et al. 2020) is high, enabling autonomous robotic behavior following human expectations and roles. Future research in AI even aims to mirror cognitive and emotional human processes in AI-based software agents and robots (e.g., Ellwart and Kluge 2019). Social robots have a social interface that expresses to the user that the robot is social (Hegel et al. 2009). Social robots “mimic the behavior, appearance, or movement of a living being” (Naneva et al. 2020, p. 1179). Thus, regarding social robots, similarity to a living being is explicitly integrated into robot design. Second, social robots perform complex, interactive, cooperative, and formerly human-specific work tasks in diverse application fields (e.g., health care or education, Lambert et al. 2020). Third, social robots are often introduced as new teammates instead of technological tools (You and Robert 2017). Following a technology-as-teammate approach (e.g., Seeber et al. 2020), social robots work autonomously but interdependently with humans toward a team goal (i.e., joint task accomplishment).

Consequently, as social robots enter the workplace, it becomes necessary to include digital teammates in task-specific comparison processes. Especially in hybrid teams that include human-like social robots and in which humans and robots cooperate, SDSC should be particularly powerful because the similarity in appearance and work tasks is most salient. Conceptual similarities of SDSC to existing psychological concepts allow us to suggest correlates with affective (e.g., job threat), cognitive-motivational (e.g., trust), and behavioral team-relevant variables (e.g., future task allocation).

Definition and characteristics of SDSC

We define SDSC as an individual’s evaluations of his or her own abilities in comparison (i.e., </=/>) to the knowledge or skills of another digital actor in a group. SDSC are task-specific, meaning individuals compare their own abilities in a specific work task or demand. Furthermore, SDSC focus on the individual level, not on comparing the self with a whole group. Additionally, SDSC reflect subjective self-perceptions, which may not be accurate, and which may differ from objective external judgments and between individuals.

SDSC, robot trust, and team efficacy

In well-established models of (human-robot) teaming (e.g., Ilgen et al. 2005; You and Robert 2017), transactive memory systems (TMS) and team mental models (TMM) of expertise location (i.e., who knows what in a team) are important cognitive team constructs. Similar to SDSC, TMS and TMM include subjective competence attributions and require subjective and individual evaluations of the other members’ abilities (e.g., social reference, Ellwart et al. 2014). However, TMS and TMM of expertise location may not reflect task-specific abilities but knowledge of expertise within the team from a global meta-perspective (see Austin 2003). Moreover, TMS and TMM represent team-level concepts that are either shared or unshared within the team (see Ellwart et al. 2014). Empirical data show that if team members recognize others as experts, they develop trust in their abilities (i.e., credibility) and have high confidence that the team will accomplish a given work task (i.e., team efficacy, Ellwart et al. 2014; Lewis 2003; McKnight et al. 2011). Consequently, transferring this evidence to SDSC, it seems reasonable that SDSC in favor of the digital teammate (i.e., I < robot) should correspond to high robot trust and team efficacy.

SDSC and task allocation

Furthermore, TMS research shows that the perception of team member expertise leads to further team specialization because team members divide labor according to individuals’ competences and thus further specialize in different domains (Lewis 2003). Task assignment to the perceived expert by TMS is also highlighted in adaptation research (Zajac et al. 2014). In human factors, a variety of strategies exists to allocate a work task to human or nonhuman team members that are based on competence comparisons, allocating the task to the team member with the most appropriate competences (Rauterberg et al. 1993; see Note 1). Unlike SDSC, these task allocation strategies focus on an external work design perspective (humane work design) rather than the subjective self-perceptions of unique actors. Nevertheless, SDSC might also impact the subjective preferences of future task allocation. If human team members perceive a robot team member as superior in specific work tasks, future tasks may be allocated to a robot instead of a human.

SDSC and performance satisfaction

Comparing individual abilities with self-referent goals or the performance of others is a research topic in work (e.g., feedback processes, Belschak and den Hartog 2009) and educational psychology. Here, research shows that being subjectively worse than a colleague can have a downgrading effect on self-perceptions (e.g., the big fish little pond effect, see Trautwein and Möller 2016). It can also have an upgrading effect if the focus is at the team level (e.g., basking in reflected glory) because the self feels like a part of the team and if people assimilate (not contrast) with the object of comparison (Margolis and Dust 2016). However, unlike SDSC, competence comparisons often refer to quantifiable achievements (e.g., school grades) or whole reference groups (e.g., school classes). Nevertheless, the results suggest that SDSC might correspond to subjective satisfaction with one’s own performance. SDSC in favor of the robot might correspond to lower satisfaction with one’s own performance but higher satisfaction with agent or team performance.

SDSC and job threat

On an individual level, threat perception at work is an evolving topic of HRI and lasting technology acceptance (Smids et al. 2019). Recent studies show that social robots are perceived as a threat to human distinctiveness (Ferrari et al. 2016) and that comparing oneself with robots in the context of job replacement corresponds to the perception of threat (Granulo et al. 2019; McClure 2018). SDSC could therefore be a crucial underlying mechanism regarding job threats. SDSC in favor of the nonhuman teammate may correspond to higher levels of job-related threat perceptions (e.g., fear of losing the job) because humans feel they performed worse than the robot did in core tasks of their occupations.

In summary, SDSC represent an independent concept in HRI. However, SDSC have close links to team research, human factors, work psychology, and educational research.

2 Laboratory illustration of SDSC in hybrid teams

The correlates of SDSC proposed above (robot trust, team efficacy, task allocation, performance satisfaction, and job threat) indicate the potential of SDSC for HRI. Since empirical evidence on the relevance of SDSC for HRI is lacking, we aim to illustrate the relationships between SDSC and such functional (e.g., team efficacy) and dysfunctional (e.g., job threat) team-relevant variables.

2.1 Method

2.1.1 Design and sample

We present data collected in a computer-simulated team setting, based on Network Fire Chief software (NFC, Omodei and Wearing 1995). Hybrid teams of three (two humans and one software agent) collaborated to minimize damage from evolving fires presented on a graphical user interface by detecting fire, navigating fire trucks, applying fire extinguishing rules, and refueling fire trucks. Each team member worked with a certain number of (color-coded) vehicles. In testing phases with evolving complexity (i.e., increasing number and speed of fires), the participants became familiar with the NFC simulation (German instructions), followed by the interaction phase. The hybrid team interaction itself lasted three minutes. Afterward, participants completed a survey. In total, participation lasted no longer than 45 min, was voluntary, and was rewarded with credits or money. Withdrawal was possible at any given time. A positive ethics vote confirmed compliance with ethical and data protection standards. Data collection took place from November 2019 until October 2020 in the experimental laboratory of a German university. The final sample consisted of 80 hybrid teams (N = 160; see Note 2). Participants were 18 to 38 years old (M = 23.43, SD = 3.42). A total of 118 participants were female (41 male, 1 not specified).

2.1.2 Measures and data analysis

Measures

Because the study was part of a large survey series, the measures reported are those relevant in the context of this article. Full items are documented in the Appendix (Table A1). The survey was administered in German. SDSC were operationalized with four items referring to the main competences needed in the team task: detecting fire, navigating fire trucks, applying fire extinguishing rules, and refueling fire trucks (α = .86). For each competence, the participants were asked to assess their performance in comparison to the autonomous digital agent on a scale ranging from a competence advantage in favor of the human (1) to a competence advantage in favor of the digital agent (5). Robot trust was measured with three adapted items (α = .91) based on Thielsch et al. (2018). The response format ranged from strongly disagree (1) to strongly agree (6). Task allocation was operationalized with a vignette followed by a single item, measuring the preference for future task allocation ranging from I clearly prefer a human team member similar to me (1) to I clearly prefer the digital agent as a team member (4). Satisfaction with one’s own, the agent’s, and team performance was measured with one item each ranging from not satisfied (1) to satisfied (6). Team efficacy was measured with four adapted items (α = .92) based on Salanova et al. (2003). The response format ranged from strongly disagree (1) to strongly agree (6). Job threat was operationalized with a single item. Participants were asked to assess the likelihood of their replacement by a digital agent for performance optimization from very unlikely (1) to very likely (6).
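To make the scoring of a multi-item scale such as the four-item SDSC measure more concrete, the following minimal sketch computes a per-participant scale mean and Cronbach’s α. It is an illustration only: the function names are hypothetical, the responses are made-up placeholder values rather than study data, and the authors report their own analyses with SPSS 26, not with this code.

```python
from statistics import mean, variance


def scale_scores(responses):
    """Return the mean across items for each participant (rows = participants)."""
    return [mean(row) for row in responses]


def cronbach_alpha(responses):
    """Cronbach's alpha = k/(k-1) * (1 - sum of item variances / variance of sum score)."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical answers of five participants to four comparison items
# (1 = competence advantage for the human, 5 = advantage for the digital agent).
# These values are invented for illustration and are NOT the study data.
example = [
    [4, 4, 5, 4],
    [3, 3, 3, 2],
    [5, 4, 5, 5],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
]

if __name__ == "__main__":
    print("Scale means:", scale_scores(example))
    print("Cronbach's alpha:", round(cronbach_alpha(example), 2))
```

On a 1 to 5 response format like the one described above, a scale mean above the midpoint of 3 would indicate a perceived competence advantage for the digital agent.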

Data analysis

First, we examined item and scale characteristics (e.g., M, SD, rit). Then, we used bivariate correlation analyses in SPSS 26 (small: |r| ≥ .10, medium: |r| ≥ .30, large: |r| ≥ .50, Cohen 1988) to anchor SDSC in a nomological network. In this way, we illustrate the relations of SDSC with functional and dysfunctional team-relevant constructs. Following Cronbach and Meehl (1955) and Preckel and Brunner (2017), embedding a construct in a nomological network of related constructs is an important aspect of construct validation.
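The bivariate analysis described here can be sketched in a few lines. The example below computes a Pearson correlation from two lists of scores and labels its size with the Cohen (1988) thresholds quoted above. The function names and the label "negligible" for |r| below .10 are assumptions made for this illustration; the original analysis was run in SPSS 26.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


def cohen_label(r):
    """Classify |r| with Cohen's (1988) conventions: .10 small, .30 medium, .50 large."""
    size = abs(r)
    if size >= 0.50:
        return "large"
    if size >= 0.30:
        return "medium"
    if size >= 0.10:
        return "small"
    return "negligible"  # label below .10 is an assumption, not part of the source


if __name__ == "__main__":
    # Invented scores for illustration only (NOT study data).
    sdsc = [3.5, 4.0, 2.5, 4.5, 3.0, 5.0]
    trust = [4.0, 4.5, 3.0, 5.0, 4.0, 5.5]
    r = pearson_r(sdsc, trust)
    print(round(r, 2), cohen_label(r))
```

Applied to coefficients of the size reported in Sect. 2.2, `cohen_label(0.48)` returns "medium" and `cohen_label(0.23)` returns "small".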

2.2 Results

Item and scale characteristics are documented in the Appendix (Table A2). On average, SDSC (M = 3.91, SD = 0.86) and task allocation (M = 2.73, SD = 0.93) were rated in favor of the digital agent. Robot trust (M = 4.00, SD = 1.37), team efficacy (M = 4.70, SD = 0.93), but also job threat (M = 4.13, SD = 1.35) were rated rather high. Descriptively, satisfaction with one’s own performance was rated lower (M = 4.27, SD = 1.21) than satisfaction with robot (M = 4.76, SD = 1.22) or team (M = 4.96, SD = 0.94) performance, but still rather high. Anchoring SDSC in a nomological network of team-relevant outcomes, SDSC in favor of the nonhuman agent corresponded to higher robot trust (r = .48, p < .001), preference for future task allocation to a nonhuman agent (r = .37, p < .001), and higher job threat (r = .36, p < .001). Furthermore, SDSC correlated positively with satisfaction with agent performance (r = .46, p < .001), team performance (r = .23, p = .003), and team efficacy (r = .31, p < .001). SDSC did not correlate significantly with satisfaction with one’s own performance (r = −.11, p = .188), although the relation was descriptively negative (Table 1).
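As a rough plausibility check on significance levels of this kind, the standard t-test for a Pearson correlation can be worked through by hand. The sketch below assumes that all N = 160 participants contributed to the coefficient and that the test is two-sided; neither detail is stated for each individual correlation, so the numbers are illustrative only.

```latex
\[
t = \frac{r\sqrt{N-2}}{\sqrt{1-r^{2}}}, \qquad df = N-2
\]
% Example: r = .23 (SDSC and satisfaction with team performance), N = 160 (assumed)
\[
t = \frac{.23\sqrt{158}}{\sqrt{1-.23^{2}}}
  \approx \frac{.23 \times 12.57}{0.973}
  \approx 2.97, \qquad df = 158
\]
```

A t value of about 2.97 with 158 degrees of freedom corresponds to a two-sided p in the region of .003, which agrees with the value reported for this correlation.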

2.3 Discussion

Given the theoretical and empirical evidence on self-comparisons in traditional all-human teams, extended knowledge on the topic of SDSC in hybrid teams is vital when implementing social robots in the field. This laboratory study provides initial support that SDSC relate to diverse affective (e.g., job threat), cognitive-motivational (e.g., team efficacy), and behavioral (i.e., task allocation) team-related outcomes. This finding highlights the importance of SDSC at multiple levels (i.e., individual and team) and the two-sidedness of the functional and dysfunctional effects of nonhuman team members. For the context of performing collaborative tasks with social robots, the results offer the cautious indication that social robots may be simultaneously experienced as an opportunity regarding team efficacy and as a threat, indicated by the substantial correlation of SDSC with job threat.

However, the practical impact and generalizability of the study results to real-life applications of social robots in work teams must be critically reflected on due to our time-limited laboratory setting, team composition, and methodological shortcomings (i.e., cross-sectional data, correlative design, exploratory research character).

The concept of SDSC as an explanatory mechanism linking the complex (positive and negative) consequences of nonhuman team members is directly transferable to the context of social robots at work. However, the level and individual impact of SDSC on team-relevant outcomes must be considered depending on the application context. It can be assumed that the given empirical results are likely to underestimate the real-world impact of social robots. Whereas we used a simulation experiment within a university sample, field settings are characterized by a higher degree of self-relevance (i.e., a real job and tangible consequences). High self-relevance fosters upward comparison processes (Dijkstra et al. 2008). Furthermore, the NFC simulation was a control and monitoring task requiring mainly high situational awareness and manageable digital competence (i.e., drag and drop). The real-world applications of social robots (e.g., education and nursing) are more complex, including verbal communication and learning (Shahid et al. 2014). That is why the consequences of SDSC in favor of a digital agent could be more severe regarding job threat or future task allocation in such real-life applications, in which multiple identity-related, social, and economic concerns intertwine (Granulo et al. 2019). Because higher similarity between objects of comparison fosters comparison processes (Festinger 1954), it is also likely that our hybrid team setting, in which humans collaborated with a software agent without physical embodiment, was a more conservative condition for SDSC than, for example, a setting with a human-like social robot with larger spatial presence. However, Chen et al. (2018) point out the similarity between physical robots and software agents regarding autonomous task execution, either in motion (i.e., social robots) or at rest (i.e., software agents), making both systems comparable for SDSC. Software agents are already used in practice in many work areas such as flight safety (e.g., Rieth and Hagemann 2021), highlighting the practical relevance of the study context. Of note, our hybrid team interaction was limited in time. More extended and regular HRI might lead to habituation effects (Shiwa et al. 2009). The cross-sectional data structure does not allow an evaluation of the long-term relations of SDSC, which are highly relevant for the field application of social robots. However, the results are a first empirical basis for an informed discussion on SDSC concerning social robots in the field and the lab.

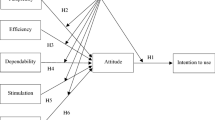

3 Potential of SDSC for research and practice in social robotics

With the introduction of SDSC, this paper provides insight into an as yet overlooked psychological barrier toward social robots’ acceptance in work teams. Considering that humans, within the next decades, will be outperformed by robots in many tasks (Grace et al. 2018), it is essential to consider under which conditions the negative impact of upward SDSC on human well-being (e.g., threat perception) is minimal and functional relations with robot trust or team efficacy are powerful. This calls for a systematic evaluation of the concept of SDSC and of SDSC antecedents, consequences, and temporal dynamics, especially in the context of social robots that are designed to interact with humans. We highlight these four fields of action in terms of their relevance for research and practice (see Fig. 1).

[1] Explore the concept of SDSC and specify the validity and reliability of SDSC measurement

The present study shows that people compare themselves with autonomous digital teammates if explicitly asked to do so. This is an important first step toward a comprehensive understanding of SDSC in hybrid teams. However, social comparisons are often “relatively spontaneous, effortless, and unintentional” (Gilbert et al. 1995, p. 227). Therefore, future research should also study whether people unintentionally compare themselves with social robots. Furthermore, the accuracy of SDSC within different settings (e.g., varying levels of self-relevance, motives, and task types) compared to an objective standard of evaluation is worth examining, as is the development of validated SDSC measures. For practitioners confronted with social robots in the field, more extended knowledge on the intentionality and the accuracy of SDSC offers implications for HRI reflection and intervention (e.g., reduction of misperceptions).

[2] Consider the antecedents of SDSC within the task, human, robot, and team

Comparison processes are impacted by numerous variables found in different subsystems of hybrid teams (i.e., task, human, robot, and team) that should be systematically examined in future research. Apart from the mentioned self-relevance, the task type (e.g., food serving vs. drug administration of social robots in nursing) may also impact SDSC. Furthermore, research shows that people differ in their tendency to compare themselves with others (i.e., social comparison orientation) and their motives for comparison (i.e., self-evaluation, self-enhancement, and self-improvement, cf., Gibbons and Buunk 1999). Future research should contrast SDSC with different types of social robots regarding appearance (i.e., human-like, animal-like, and machine-like), the level of autonomy, and AI because the similarity between a human and a robot should impact comparison processes (Festinger 1954).

In practice, this variety of SDSC antecedents requires a systemic HRI perspective, in line with a sociotechnical system view on work design (Ulich 2013). SDSC in favor of a human or robot might represent a complex interplay of human- and robot-related features in a social work setting. Because social comparisons are more likely to have detrimental effects in competitive than in cooperative settings (Margolis and Dust 2016), social robots should be introduced as cooperative, helpful, and supportive teammates (i.e., cooperative team norms) rather than as competing rivals to minimize the “dark sides” of SDSC.

[3] Explore the functional and dysfunctional consequences of SDSC

This study illustrated selected relations between SDSC and team-relevant outcomes. Future research should replicate existing findings in real-life scenarios and extend the outcome variables by objective measures of task quality and success (e.g., error rate), further team-relevant variables (e.g., coordination, communication, and fairness), as well as well-established technology acceptance (e.g., Venkatesh et al. 2016) and usability metrics (e.g., isometrics, Figl 2009). However, the results suggest that social robots in practice might be a double-edged sword. Social robots in the workplace evoke additional objects of comparison. Personnel developers need to consider this factor regarding employees’ fears and expectations.

[4] Be aware of the temporal dynamics of SDSC

Teamwork is a dynamic topic, not a static one (e.g., Input-mediator-output-input [IMOI] models of teamwork, Ilgen et al. 2005; You and Robert 2017). The outcomes of a given task in cooperation with a social robot will influence individual SDSC and subsequent task performance. Hence, SDSC may change over time. Future research should systematically test long-term changes and causalities regarding SDSC. Given the temporal dynamics, SDSC become a valuable variable for change management (i.e., modifiable construct). Critical reflection on SDSC may help to control their consequences. Research shows that people can compensate for robot threats by valuing alternative comparison dimensions in favor of the self (Cha et al. 2020).

4 Conclusion

The acceptance of social robots in the field often falls short of expectations. In this study, we presented SDSC as a complementary perspective on the acceptance and evaluation of social robots, especially in field settings with human-like robotic communication and gestures. SDSC are rooted in psychological research on social comparisons. Positive and negative relations of SDSC with team-relevant outcomes highlight the two-sided nature of hybrid teamwork and the necessity for extended SDSC attention in research and practice. Concrete research topics and practical implications provide starting points for this SDSC attention.

Notes

1. Comparison allocation describes a task allocation strategy that assigns tasks in accordance with general competence advantages. Here, standardized lists systematize abilities at which humans or machines are better, such as the HABA-MABA (humans-are-better-at/machines-are-better-at) list (Fitts 1951). Following a humanized task approach, task allocation to a robot serves as human support and compensates for human competence deficits. Flexible task allocation gives the human team member the possibility to allocate and reallocate tasks within HRI, based on subjective judgments (Rauterberg et al. 1993).

2. In this article, we refer to the screened data only. To avoid biases, six participants were excluded prior to the analysis due to the incompleteness of hybrid teams and technical problems.

References

Austin, J. R. (2003). Transactive memory in organizational groups: the effects of content, consensus, specialization, and accuracy on group performance. The Journal of Applied Psychology, 88(5), 866–878. https://doi.org/10.1037/0021-9010.88.5.866.

Belschak, F. D., & den Hartog, D. N. (2009). Consequences of positive and negative feedback: the impact on emotions and extra-role behaviors. Applied Psychology, 58(2), 274–303. https://doi.org/10.1111/j.1464-0597.2008.00336.x.

Bridgeman, B., Trapani, C., & Attali, Y. (2012). Comparison of human and machine scoring of essays: differences by gender, ethnicity, and country. Applied Measurement in Education, 25(1), 27–40. https://doi.org/10.1080/08957347.2012.635502.

Buunk, A. P., & Gibbons, F. X. (2007). Social comparison: the end of a theory and the emergence of a field. Organizational Behavior and Human Decision Processes, 102(1), 3–21. https://doi.org/10.1016/j.obhdp.2006.09.007.

Cha, Y. J., Baek, S., Ahn, G., Lee, H., Lee, B., Shin, J., & Jang, D. (2020). Compensating for the loss of human distinctiveness: the use of social creativity under human-machine comparisons. Computers in Human Behavior, 103(7), 80–90. https://doi.org/10.1016/j.chb.2019.08.027.

Chen, J. Y. C., Lakhmani, S. G., Stowers, K., Selkowitz, A. R., Wright, J. L., & Barnes, M. (2018). Situation awareness-based agent transparency and human-autonomy teaming effectiveness. Theoretical Issues in Ergonomics Science, 19(3), 259–282. https://doi.org/10.1080/1463922X.2017.1315750.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd edn.). Lawrence Erlbaum.

Cronbach, L. J., & Meehl, P. E. (1955). Construct validity in psychological tests. Psychological Bulletin, 52(4), 281–302. https://doi.org/10.1037/h0040957.

Dijkstra, P., Kuyper, H., van der Werf, G., Buunk, A. P., & van der Zee, Y. G. (2008). Social comparison in the classroom: A review. Review of Educational Research, 78(4), 828–879. https://doi.org/10.3102/0034654308321210.

Ellwart, T., & Kluge, A. (2019). Psychological perspectives on intentional forgetting: an overview of concepts and literature. KI – Künstliche Intelligenz, 33(1), 79–84. https://doi.org/10.1007/s13218-018-00571-0.

Ellwart, T., Konradt, U., & Rack, O. (2014). Team mental models of expertise location. Small Group Research, 45(2), 119–153. https://doi.org/10.1177/1046496414521303.

Endsley, M. R. (2017). From here to autonomy: lessons learned from human-automation research. Human Factors, 59(1), 5–27. https://doi.org/10.1177/0018720816681350.

Ferrari, F., Paladino, M. P., & Jetten, J. (2016). Blurring human-machine distinctions: anthropomorphic appearance in social robots as a threat to human distinctiveness. International Journal of Social Robotics, 8(2), 287–302. https://doi.org/10.1007/s12369-016-0338-y.

Festinger, L. (1954). A theory of social comparison processes. Human Relations, 7(2), 117–140. https://doi.org/10.1177/001872675400700202.

Figl, K. (2009). Isonorm 9241/10 und Isometrics: Usability-Fragebögen im Vergleich. In H. Wandke & S. Kain (Eds.), Mensch & Computer 2009: 9. Fachübergreifende Konferenz für interaktive und kooperative Medien; grenzenlos frei!? (pp. 143–152). Oldenbourg.

Fitts, P. M. (Ed.). (1951). Human engineering for an effective air-navigation and traffic-control system. National Research Council.

Gibbons, F. X., & Buunk, B. P. (1999). Individual differences in social comparison: development of a scale of social comparison orientation. Journal of Personality and Social Psychology, 76(1), 129–142. https://doi.org/10.1037//0022-3514.76.1.129.

Gilbert, D. T., Giesler, R. B., & Morris, K. A. (1995). When comparisons arise. Journal of Personality and Social Psychology, 69(2), 227–236. https://doi.org/10.1037/0022-3514.69.2.227.

Grace, K., Salvatier, J., Dafoe, A., Zhang, B., & Evans, O. (2018). Viewpoint: when will AI exceed human performance? Evidence from AI experts. Journal of Artificial Intelligence Research, 62, 729–754. https://doi.org/10.1613/jair.1.11222.

Granulo, A., Fuchs, C., & Puntoni, S. (2019). Psychological reactions to human versus robotic job replacement. Nature Human Behaviour, 3(10), 1062–1069. https://doi.org/10.1038/s41562-019-0670-y.

Haslam, N., Kashima, Y., Loughnan, S., Shi, J., & Suitner, C. (2008). Subhuman, inhuman, and superhuman: contrasting humans with nonhumans in three cultures. Social Cognition, 26(2), 248–258. https://doi.org/10.1521/soco.2008.26.2.248.

Hegel, F., Muhl, C., Wrede, B., Hielscher-Fastabend, M., & Sagerer, G. (2009). Understanding social robots. In IEEE Computer Society (Ed.), The second international conference on advances in computer-human interactions (ACHI) (pp. 169–174). https://doi.org/10.1109/ACHI.2009.51.

Ilgen, D. R., Hollenbeck, J. R., Johnson, M., & Jundt, D. (2005). Teams in organizations: from input-process-output models to IMOI models. Annual Review of Psychology, 56, 517–543. https://doi.org/10.1146/annurev.psych.56.091103.070250.

Koolwaay, J. (2018). Die soziale Welt der Roboter: Interaktive Maschinen und ihre Verbindung zum Menschen. Science Studies. transcript. https://doi.org/10.14361/9783839441671.

Lambert, A., Norouzi, N., Bruder, G., & Welch, G. (2020). A systematic review of ten years of research on human interaction with social robots. International Journal of Human-Computer Interaction, 36(19), 1804–1817. https://doi.org/10.1080/10447318.2020.1801172.

Lewis, K. (2003). Measuring transactive memory systems in the field: scale development and validation. The Journal of Applied Psychology, 88(4), 587–604. https://doi.org/10.1037/0021-9010.88.4.587.

Margolis, J. A., & Dust, S. B. (2016). It’s all relative: a team-based social comparison model for self-evaluations of effectiveness. Group & Organization Management, 44(2), 361–395. https://doi.org/10.1177/1059601116682901.

McClure, P. K. (2018). “You’re fired,” says the robot: The rise of automation in the workplace, technophobes, and fears of unemployment. Social Science Computer Review, 36(2), 139–156. https://doi.org/10.1177/0894439317698637.

McKnight, D. H., Carter, M., Thatcher, J. B., & Clay, P. F. (2011). Trust in a specific technology: an investigation of its components and measures. ACM Transactions on Management Information Systems, 2(2), 1–25. https://doi.org/10.1145/1985347.1985353.

Naneva, S., Sarda Gou, M., Webb, T. L., & Prescott, T. J. (2020). A systematic review of attitudes, anxiety, acceptance, and trust towards social robots. International Journal of Social Robotics, 12(6), 1179–1201. https://doi.org/10.1007/s12369-020-00659-4.

Omodei, M. M., & Wearing, A. J. (1995). The fire chief microworld generating program: an illustration of computer-simulated microworlds as an experimental paradigm for studying complex decision-making behavior. Behavior Research Methods, Instruments, & Computers, 27(3), 303–316. https://doi.org/10.3758/BF03200423.

O’Neill, T., McNeese, N., Barron, A., & Schelble, B. (2020). Human-autonomy teaming: a review and analysis of the empirical literature. Human Factors, 0(1), 1–35. https://doi.org/10.1177/0018720820960865.

Preckel, F., & Brunner, M. (2017). Nomological nets. In V. Zeigler-Hill & T. K. Shackelford (Eds.), Encyclopedia of personality and individual differences (pp. 1–4). Springer. https://doi.org/10.1007/978-3-319-28099-8_1334-1.

Rauterberg, M., Strohm, O., & Ulich, E. (1993). Arbeitsorientiertes Vorgehen zur Gestaltung menschengerechter Software. Ergonomie & Information, 20, 7–21.

Rieth, M., & Hagemann, V. (2021). Veränderte Kompetenzanforderungen an Mitarbeitende infolge zunehmender Automatisierung – Eine Arbeitsfeldbetrachtung. Gruppe. Interaktion. Organisation. Zeitschrift Für Angewandte Organisationspsychologie (GIO), 52(1), 37–49. https://doi.org/10.1007/s11612-021-00561-1.

Salanova, M., Llorens, S., Cifre, E., Martínez, I. M., & Schaufeli, W. B. (2003). Perceived collective efficacy, subjective well-being and task performance among electronic work groups. Small Group Research, 34(1), 43–73. https://doi.org/10.1177/1046496402239577.

Seeber, I., Bittner, E., Briggs, R. O., de Vreede, T., de Vreede, G.-J., Elkins, A., Maier, R., Merz, A. B., Oeste-Reiß, S., Randrup, N., Schwabe, G., & Söllner, M. (2020). Machines as teammates: a research agenda on AI in team collaboration. Information & Management, 57(2), 103174. https://doi.org/10.1016/j.im.2019.103174.

Shahid, S., Krahmer, E., & Swerts, M. (2014). Child-robot interaction across cultures: how does playing a game with a social robot compare to playing a game alone or with a friend? Computers in Human Behavior, 40, 86–100. https://doi.org/10.1016/j.chb.2014.07.043.

Shiwa, T., Kanda, T., Imai, M., Ishiguro, H., & Hagita, N. (2009). How quickly should a communication robot respond? Delaying strategies and habituation effects. International Journal of Social Robotics, 1(2), 141–155. https://doi.org/10.1007/s12369-009-0012-8.

Smids, J., Nyholm, S., & Berkers, H. (2019). Robots in the workplace: a threat to—or opportunity for—meaningful work? Philosophy & Technology, 33, 503–522. https://doi.org/10.1007/s13347-019-00377-4.

Thielsch, M. T., Meeßen, S. M., & Hertel, G. (2018). Trust and distrust in information systems at the workplace. PeerJ, 6, e5483. https://doi.org/10.7717/peerj.5483.

Trautwein, U., & Möller, J. (2016). Self-concept: Determinants and consequences of academic self-concept in school contexts. In A. A. Lipnevich, F. Preckel & R. D. Roberts (Eds.), The Springer Series on Human Exceptionality. Psychosocial skills and school systems in the 21st century: Theory, research, and practice (Vol. 84, pp. 187–214). Springer. https://doi.org/10.1007/978-3-319-28606-8_8.

Ulich, E. (2013). Arbeitssysteme als soziotechnische Systeme – eine Erinnerung. Journal Psychologie des Alltagshandelns, 6(1), 4–12.

Venkatesh, V., Thong, J., & Xu, X. (2016). Unified theory of acceptance and use of technology: a synthesis and the road ahead. Journal of the Association for Information Systems, 17(5), 328–376. https://doi.org/10.17705/1jais.00428.

You, S., & Robert, L. P. (2017). Teaming up with robots: an IMOI (inputs-mediators-outputs-inputs) framework of human-robot teamwork. International Journal of Robotic Engineering, 2(1), 1–7. https://doi.org/10.35840/2631-5106/4103.

Zajac, S., Gregory, M. E., Bedwell, W. L., Kramer, W. S., & Salas, E. (2014). The cognitive underpinnings of adaptive team performance in ill-defined task situations. Organizational Psychology Review, 4(1), 49–73. https://doi.org/10.1177/2041386613492787.

Funding

This project was partially funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 871260 (BUGWRIGHT2) and by a research grant from the German Research Foundation (DFG, EL 269/9-1).

Open Access funding enabled and organized by Projekt DEAL.

Additional information

The authors wish it to be known that, in their opinion, the first two authors should be regarded as joint first authors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ellwart, T., Schauffel, N., Antoni, C.H. et al. I vs. robot: Sociodigital self-comparisons in hybrid teams from a theoretical, empirical, and practical perspective. Gr Interakt Org 53, 273–284 (2022). https://doi.org/10.1007/s11612-022-00638-5

DOI: https://doi.org/10.1007/s11612-022-00638-5

Keywords

- Sociodigital self-comparisons

- Social robots

- Human-robot interaction

- Hybrid team

- Social comparison

- Human-autonomy teaming