ABSTRACT

Background

The Protocol-guided Rapid Evaluation of Veterans Experiencing New Transient Neurologic Symptoms (PREVENT) program was designed to address systemic barriers to providing timely guideline-concordant care for patients with transient ischemic attack (TIA).

Objective

We evaluated an implementation bundle used to promote local adaptation and adoption of a multi-component, complex quality improvement (QI) intervention to improve the quality of TIA care (Bravata et al., BMC Neurology 19:294, 2019).

Design

A stepped-wedge implementation trial with six geographically diverse sites.

Participants

The six facility QI teams comprised multidisciplinary clinical staff.

Interventions

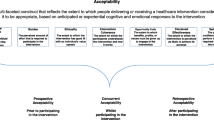

PREVENT employed a bundle of key implementation strategies: team activation; external facilitation; and a community of practice. This strategy bundle had direct ties to four constructs from the Consolidated Framework for Implementation Research (CFIR): Champions, Reflecting & Evaluating, Planning, and Goals & Feedback.

Main Measures

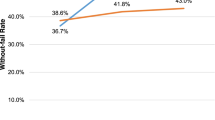

Using a mixed-methods approach guided by the CFIR and data matrix analyses, we evaluated the degree to which implementation success and clinical improvement were associated with implementation strategies. The primary outcomes were the number of completed implementation activities, the level of team organization, and a ≥ 15-point improvement in the Without Fail Rate (WFR) over 1 year.

Key Results

Facility QI teams actively engaged in the implementation strategies with high utilization. Facilities with the greatest implementation success were those with central champions whose teams engaged in planning and goal setting, and regularly reflected upon their quality data and evaluated their progress against their QI plan. The strong presence of effective champions acted as a pre-condition for the strong presence of Reflecting & Evaluating, Goals & Feedback, and Planning (rather than the other way around), helping to explain how champions at the +2 level influenced ongoing implementation.

Conclusions

The CFIR-guided bundle of implementation strategies facilitated the local implementation of the PREVENT QI program and was associated with clinical improvement in the national VA healthcare system.

Trial registration: clinicaltrials.gov: NCT02769338

BACKGROUND

Approximately 3300 veterans with transient ischemic attack (TIA) are cared for in a United States Department of Veterans Affairs (VA) Emergency Department (ED) or inpatient ward annually.1 TIA patients are at high risk of recurrent vascular events2,3,4; however, interventions that deliver timely TIA care can reduce that risk by up to 70%.5,6,7,8 Despite the known benefits of timely TIA care, gaps in care quality exist in both private-sector US hospitals9 and the VA healthcare system.10 A formative evaluation of acute TIA care in VA indicated that most facilities lacked a TIA-specific protocol and that clinicians struggled with uncertainty regarding the decision to admit TIA patients for timely care.11, 12

The objective of the PREVENT trial13 was to evaluate the effectiveness of an implementation strategy bundle to promote local adaptation and adoption of a multi-component QI intervention to improve TIA care quality. The bundle was based upon several implementation frameworks and our previous success employing external facilitation with VA clinical teams.14 First, we used the integrated Promoting Action on Research Implementation in Health Services (iPARIHS) framework15 to guide external facilitation that assisted local champions in cultivating clinical teams, disseminating professional education materials related to acute TIA care, and facilitating local QI efforts using performance data. Second, based on prior studies that identified CFIR constructs distinguishing high- from low-effectiveness implementation,16 we operationalized key constructs (Planning; Goals & Feedback; and Reflecting & Evaluating) from the CFIR inner setting and implementation process domains17 a priori. Participating teams were trained on these concepts and received reinforcement through external facilitation.

The specific aim of this evaluation was to examine the effect of the implementation strategy bundle on implementation success. We hypothesized that clinical teams which engaged in the implementation strategies and locally adapted the PREVENT program components would realize the greatest implementation success.

METHODS

Setting

VA facilities were rank-ordered on the quality of TIA care, based on seven guideline-concordant processes of care, and invitations to participate were sent to the VA facilities with the greatest opportunity for improvement. Recruitment, stratified by region, continued until six facilities agreed to participate. PREVENT sites were pragmatically allocated to the three waves of the stepped-wedge trial based on the ability to schedule baseline and kickoff meetings.

Quality Improvement Intervention

The rationale and methods used for the development of the PREVENT intervention have been described elsewhere.13 The provider-facing QI intervention was based on a prior systematic assessment of TIA care performance at VA facilities nationwide as well as an evaluation of barriers and facilitators of TIA care performance using four sources of information: baseline quality of care data,10 staff interviews,11 existing literature,18,19,20,21,22 and validated electronic quality measures.10 The PREVENT QI intervention included five components (see Appendix 1): quality of care reporting system (see Appendix 2), clinical programs, professional education, electronic health record tools, and QI support including a virtual collaborative.

Implementation Strategies

PREVENT employed a bundle of three primary implementation strategies: (1) team activation via audit and feedback,23, 24 reflecting and evaluating, planning, and goal setting17; (2) external facilitation (EF)23,24,25; and (3) building a community of practice (CoP).26 In addition, PREVENT allowed local adaptation of its intervention components, and the coordinating site provided EF to the site champion and team.

Active implementation of PREVENT at each site began with a full-day kickoff meeting facilitated by the coordinating site, which included multidisciplinary staff members from the participating site who were involved in TIA clinical care. The site team used the PREVENT Hub, a Web-based audit and feedback platform (see Appendix 2), to explore their facility-specific quality of care data and identify gaps. Using approaches from systems redesign,27, 28 site team members brainstormed barriers to providing the highest quality of care, identified solutions to address those barriers, ranked the solutions on an impact-effort matrix, and developed a site-specific action plan that placed high-impact/low-effort activities in the short-term plan and high-impact/high-effort activities in the long-term plan. Local QI plans were entered into the PREVENT Hub, and metrics were tracked on the Hub, allowing teams to monitor performance over time. By using the Hub to observe other participating sites’ QI activities and performance, facility teams could learn which QI activities did or did not improve metrics at peer sites. In addition, the coordinating team introduced strategies for activating teams (team planning, goal setting and feedback, and reflecting and evaluating) using examples and data from past stroke QI teams and a video of a VA stroke QI team in practice.
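The impact-effort ranking step can be illustrated as a quadrant classification. This Python sketch assumes a hypothetical 1 to 5 rating for impact and for effort, with 3 or above counted as "high"; PREVENT teams ranked solutions in facilitated discussion, so the scale, cutoff, and example solutions are ours. The text specifies only the two high-impact quadrants; setting low-impact ideas aside is an added assumption.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical cutoff on an assumed 1-5 rating scale: ratings >= 3 count as "high".
HIGH = 3


@dataclass
class Solution:
    name: str
    impact: int  # assumed 1-5 rating of expected effect on TIA care quality
    effort: int  # assumed 1-5 rating of time and resources required


def plan_bucket(s: Solution) -> str:
    """Place one solution in a quadrant of the impact-effort matrix."""
    high_impact = s.impact >= HIGH
    high_effort = s.effort >= HIGH
    if high_impact and not high_effort:
        return "short-term plan"  # high impact, low effort
    if high_impact:
        return "long-term plan"  # high impact, high effort
    return "not planned"  # low impact (assumption: set aside)


def build_action_plan(solutions: List[Solution]) -> Dict[str, List[str]]:
    """Group solution names by the plan they would enter."""
    plan: Dict[str, List[str]] = {"short-term plan": [], "long-term plan": [], "not planned": []}
    for s in solutions:
        plan[plan_bucket(s)].append(s.name)
    return plan


if __name__ == "__main__":
    # Illustrative ratings only; not data from PREVENT.
    ideas = [
        Solution("Teach house staff about TIA care", impact=4, effort=2),
        Solution("Prospective patient identification system", impact=5, effort=4),
        Solution("Low-value paperwork change", impact=1, effort=1),
    ]
    print(build_action_plan(ideas))
```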

During the 1-year active implementation period, the site team members joined monthly PREVENT virtual collaborative conferences which served as a forum for sites to share progress on action plans, articulate goals for the next month, and review new evidence or tools. EF was provided by the PREVENT nurse trained in Lean Six Sigma methodology28 and quality management.

Evaluation Approach

The stepped-wedge29, 30 implementation trial included six participating sites where active implementation was initiated in three waves, with two facilities per wave. The unit of analysis was the VA facility.
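The stepped-wedge rollout can be pictured as an exposure matrix in which each site switches from control to active implementation when its wave starts and stays active thereafter. The Python sketch below builds that matrix for three waves of two sites; it assumes equal-length periods and a single all-control baseline period, which simplifies the actual PREVENT calendar, and the function name is hypothetical.

```python
from typing import List


def stepped_wedge_schedule(n_waves: int, sites_per_wave: int) -> List[List[int]]:
    """Return a 0/1 exposure matrix (rows = sites, columns = periods).

    Assumes one baseline period in which every site is in the control
    condition (0); in each later period one more wave crosses over to active
    implementation (1), and a site that has crossed over stays active.
    """
    n_periods = n_waves + 1
    schedule: List[List[int]] = []
    for wave in range(n_waves):
        start = wave + 1  # first wave crosses over in period 1, the next in period 2, ...
        row = [1 if period >= start else 0 for period in range(n_periods)]
        schedule.extend(list(row) for _ in range(sites_per_wave))
    return schedule


if __name__ == "__main__":
    # Six sites in three waves of two; generic site numbers, not the study's site letters.
    for site, row in enumerate(stepped_wedge_schedule(n_waves=3, sites_per_wave=2), start=1):
        print(f"site {site}: {row}")
```

Summing each column gives the number of sites in active implementation per period, which rises by two at every step.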

Measurement

We employed a mixed-methods design to evaluate this complex implementation intervention with prospective data collection from multiple sources. Qualitative data sources included semi-structured interviews, observations, field notes, and Fast Analysis and Synthesis Template (FAST) facilitation tracking.31 Interviews were conducted in person during site visits or by telephone at baseline and at 6 and 12 months after active implementation. Key stakeholders included staff involved in the delivery of TIA care, their managers, and facility leadership; we also accepted “snowball” referrals from key stakeholders. Upon receipt of verbal consent, interviews were audio-recorded. The audio-recordings were transcribed verbatim. Transcripts were de-identified and imported into NVivo 12 for data coding and analysis.32

Using a common codebook, two team members independently coded identical transcripts for the presence or absence of CFIR constructs as well as magnitude and valence for four CFIR implementation constructs (i.e., Goals & Feedback, Planning, Reflecting & Evaluating, and Champions). Valence (+ or −) was scored for each construct if it was present and influencing the implementation of PREVENT at that site.16, 17 Magnitude was scored as 2 if it had a strong influence on PREVENT implementation, 1 if it had a weak or moderate effect, and 0 if it had a neutral effect. The evaluation team conducted formal debriefings after each kickoff, site visit, and collaborative call. These observations were recorded and transcribed for analyses.
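Valence and magnitude can be combined into the single signed ratings displayed in the matrix (for example, "+2" or "−1"). A minimal Python sketch, assuming that a neutral construct (magnitude 0) carries no valence; the function names are hypothetical.

```python
from typing import Optional


def construct_rating(valence: Optional[str], magnitude: int) -> int:
    """Combine a CFIR valence and magnitude into one signed score.

    magnitude: 2 = strong influence, 1 = weak or moderate, 0 = neutral.
    valence:   '+' or '-' for a construct that influences implementation;
               ignored when the magnitude is 0 (a neutral rating has no sign).
    """
    if magnitude not in (0, 1, 2):
        raise ValueError("magnitude must be 0, 1, or 2")
    if magnitude == 0:
        return 0
    if valence == "+":
        return magnitude
    if valence == "-":
        return -magnitude
    raise ValueError("a non-neutral rating needs valence '+' or '-'")


def label(score: int) -> str:
    """Render a score the way the matrix displays it: '+2', '-1', '0'."""
    return "0" if score == 0 else f"{score:+d}"


if __name__ == "__main__":
    print(label(construct_rating("+", 2)))   # +2
    print(label(construct_rating("-", 1)))   # -1
    print(label(construct_rating(None, 0)))  # 0
```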

We also used the FAST template, which is a structured electronic log, as a rapid, systematic method for extracting key concepts across data sources including interviews, collaborative calls, and Hub utilization data.31 We adapted an external facilitator tracking sheet for prospective collection of the dose and content of site-specific external facilitation provided by the evaluation team to participating site teams.25
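A prospective facilitation log of this kind amounts to one record per EF contact, from which dose (number and length of contacts) and content (topics) can be tallied per site. The Python sketch below is a hypothetical structure, not the actual tracking sheet: the field names and example entries are invented for illustration, and the topic labels borrow the EF topics reported in the Results.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FacilitationContact:
    """One entry in a hypothetical EF tracking log."""
    site: str
    month: int  # month of the 1-year active implementation period (1-12)
    minutes: int
    topics: List[str] = field(default_factory=list)


def summarize(log: List[FacilitationContact]) -> Tuple[Dict[str, Dict[str, int]], Counter]:
    """Return per-site dose (number of contacts, total minutes) and topic counts."""
    dose: Dict[str, Dict[str, int]] = {}
    topics: Counter = Counter()
    for contact in log:
        site_dose = dose.setdefault(contact.site, {"contacts": 0, "minutes": 0})
        site_dose["contacts"] += 1
        site_dose["minutes"] += contact.minutes
        topics.update(contact.topics)
    return dose, topics


if __name__ == "__main__":
    # Made-up entries for illustration only.
    log = [
        FacilitationContact("A", 1, 30, ["planning", "quality monitoring"]),
        FacilitationContact("A", 2, 15, ["overcoming barriers"]),
        FacilitationContact("B", 2, 45, ["planning", "networking"]),
    ]
    dose, topics = summarize(log)
    print(dose)                   # {'A': {'contacts': 2, 'minutes': 45}, 'B': {'contacts': 1, 'minutes': 45}}
    print(topics.most_common(1))  # [('planning', 2)]
```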

Facility Baseline Characteristics

The measure of quality of care was the “without-fail” rate (WFR), defined as the proportion of veterans with TIA who received all of the processes of care for which they were eligible from among seven processes of care (Table 1). The WFR was calculated at the facility level based on electronic health record data using validated algorithms.10 In addition to the baseline WFR for each facility, data from the Office of Productivity, Efficiency and Staffing (OPES) were obtained to classify neurology and emergency medicine staffing levels (https://reports.vssc.med.va.gov/ReportServer/Pages/ReportViewer.aspx?/OPES/WorkforceRpt/WorkforceReport&rs:Command=Render&rc:Parameters=True&Specialty=Internal%20Medicine&FiscalYear=FY%202018). We report the annual TIA volume at each site (seen in the ED and inpatient setting) and the proportion of patients who were admitted.
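The WFR is an all-or-none composite: a patient counts toward the numerator only if every process of care for which the patient was eligible was delivered. The Python sketch below assumes patient-level records keyed by generic, hypothetical process names (the seven actual processes are listed in Table 1 and are not reproduced here) and assumes that patients eligible for no process are excluded; it is not the validated VA algorithm cited above.

```python
from typing import Dict, List

# One patient = {process name: True if eligible and received, False if eligible
# but not received}. Processes the patient was not eligible for are omitted.
Patient = Dict[str, bool]


def without_fail_rate(patients: List[Patient]) -> float:
    """All-or-none rate: share of patients who received every process they were eligible for.

    Assumption of this sketch: patients eligible for none of the processes are
    dropped from the denominator.
    """
    eligible = [p for p in patients if p]
    if not eligible:
        raise ValueError("no patients were eligible for any process of care")
    passed = sum(1 for p in eligible if all(p.values()))
    return passed / len(eligible)


if __name__ == "__main__":
    # Hypothetical patients with generic process names.
    cohort = [
        {"process_1": True, "process_2": True},   # all eligible processes met
        {"process_1": True, "process_2": False},  # one miss fails the whole patient
        {"process_1": True},                      # not eligible for process_2
        {"process_3": False},
        {},                                       # eligible for nothing: excluded
    ]
    print(f"WFR = {without_fail_rate(cohort):.0%}")  # WFR = 50%
```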

Implementation Evaluation

Teams reported on implementation progress monthly during the virtual collaborative calls. Teams were encouraged to adapt the PREVENT components best suited to their local context and to address their own gaps in care; thus, a fidelity evaluation was not applicable. The first of three primary implementation outcomes was the number of implementation activities the facility team completed during the 1-year active implementation period, where an implementation activity was defined as an intentional activity, planned to change practice and aligned with the site’s action plan (see Appendix 3).14

The second outcome was the final level of team organization for improving TIA care at the end of the 12-month active implementation period, defined as the degree of team cohesion and measured with the Group Organization (GO) Score.16, 33 The GO Score16, 33 is a 1–10 measure of team activation for improving TIA care based on specified practices (see Table 2). The evaluation team determined each site’s GO Score by discussing evidence from the study data sources during team meetings and then voting independently using a digital, real-time anonymous ballot until 80% agreement was reached. The rationale for using both implementation outcomes was that they measured two distinct but complementary aspects of implementation: the number of activities completed is an overall measure of implementation action, whereas the GO Score describes the degree to which the facility team functions as a unit to implement facility-wide policies and structures of care.
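The GO Score consensus rule can be sketched as follows, assuming that "80% agreement" means at least 80% of ballots name the same score; the study may have operationalized agreement differently (for example, by allowing adjacent scores), and the function name is hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def consensus_score(ballots: Sequence[int], threshold: float = 0.8) -> Optional[int]:
    """Return the GO Score chosen by at least `threshold` of voters, else None.

    `ballots` holds one anonymous 1-10 vote per evaluation-team member. None
    means agreement was not reached and the team would discuss and re-vote.
    Because the threshold exceeds 50%, at most one score can reach it.
    """
    if not ballots:
        raise ValueError("no ballots were cast")
    if any(not 1 <= b <= 10 for b in ballots):
        raise ValueError("GO Scores range from 1 to 10")
    score, count = Counter(ballots).most_common(1)[0]
    return score if count / len(ballots) >= threshold else None


if __name__ == "__main__":
    # Hypothetical voting rounds for one site.
    rounds = [[5, 6, 6, 7, 6], [6, 6, 6, 6, 5]]
    for i, ballots in enumerate(rounds, start=1):
        print(f"round {i}: {consensus_score(ballots)}")
```

In the hypothetical rounds, the first reaches only 60% agreement on a score of 6 and would prompt another discussion; the second reaches 80%.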

The third outcome, a clinical measure of implementation success, was whether the facility achieved a ≥ 15-point improvement in its WFR (in absolute terms, to reflect planned rather than temporal change) over its 1-year course of active implementation (see Table 1); this outcome is reported in the final column of Table 3.
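This outcome is a threshold on the change in WFR expressed in percentage points. A minimal Python sketch with hypothetical baseline and final WFRs; it also computes relative change to show how the two framings can diverge. Function names are illustrative only.

```python
def absolute_change(baseline_pct: float, final_pct: float) -> float:
    """WFR change in percentage points."""
    return final_pct - baseline_pct


def relative_change(baseline_pct: float, final_pct: float) -> float:
    """WFR change as a percentage of the baseline WFR."""
    if baseline_pct == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return 100.0 * (final_pct - baseline_pct) / baseline_pct


def met_wfr_target(baseline_pct: float, final_pct: float, threshold: float = 15.0) -> bool:
    """True if the WFR rose by at least `threshold` percentage points."""
    return absolute_change(baseline_pct, final_pct) >= threshold


if __name__ == "__main__":
    # Hypothetical facilities: (baseline WFR %, final WFR %).
    for base, final in [(20.0, 35.0), (60.0, 70.0)]:
        print(base, final, absolute_change(base, final),
              round(relative_change(base, final), 1), met_wfr_target(base, final))
```

One consequence of the absolute framing is that a 15-point gain counts the same whether a site starts at 20% (a 75% relative gain) or at 60% (a 25% relative gain).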

Using a mixed-methods approach34, 35 grounded in the CFIR,16, 17 we conducted cross-case and data matrix analyses36 to evaluate the degree to which the sites engaged in the bundle of implementation strategies, the association between implementation strategies and implementation and clinical success, and the associated contextual factors. Given that PREVENT’s implementation strategies and outcomes were tracked and rated by the coordinating team prospectively, no data were missing.

RESULTS

Baseline Context

The baseline facility context and QI team characteristics of the six participating facilities are provided in Table 1, listed in order of their baseline quality (WFR). The WFR for sites B, C, and D was similar to the fiscal year 2017 national WFR average of 34.3%, whereas site A was substantially below and sites E and F were considerably above the national WFR. Sites E and F also had the highest level of neurology staffing. Emergency medicine staffing was similar across sites. More than 50% of TIA patients were admitted to the hospital, but admission rates were lowest at the two sites (A and B) with the lowest WFRs. The annual TIA patient volume varied from 13 to 46. At baseline, no sites had active teams in place working on TIA care quality, indicating that all of the teams began the active implementation period from a similar starting point. The participating site QI teams were diverse but generally included members from neurology, emergency medicine, nursing, pharmacy, and radiology; some teams also included hospitalists, primary care staff, education staff, telehealth staff, or systems redesign staff.

Implementation Strategies

Over the course of the 1-year active implementation period, we observed high overall site engagement with each of the implementation strategies. In Table 2, we present the dose of implementation strategies delivered within the overall strategy bundle: EF, community of practice, Hub (audit and feedback), and local adaptation of PREVENT. The total number of completed implementation activities and final GO Score after 12 months of active implementation are also presented in Table 2. Site labels are retained from Table 1 and are listed in order of the three waves.

Audit and Feedback

We observed frequent usage of the Hub. The quality of care data (i.e., the seven processes of care that make up the WFR) were updated monthly on the Hub; the average site champion Hub usage was 21.3 visits per 12 months (1.8 visits per month), and the average non-champion team member Hub usage was 20.2 visits per 12 months (1.7 visits per month). This Hub usage aligns with interview data from site team members indicating that they used the Hub to review the process of care data, to access the QI plans, and to download materials from the library:

I [champion] used some of those slides [hub library] in order to show them [providers at local site] what the PREVENT program was and why it’s important.

External Facilitation

Facility QI teams and champions engaged with the EF during active implementation. Education on PREVENT components during the first half of the year, quality monitoring, planning, and networking between and within sites were the most commonly employed EF topics. Overcoming barriers and data reflection and evaluation were also frequent EF tasks.

The EF was really helpful in allowing me to …call and vent, and she was also really very encouraging. …That was an interesting lesson to learn that you might feel like you’re unsuccessful because of that one particular metric, …and so I appreciate her lending me her ear...

When the [EF] knew that I was encountering a barrier that was related to physicians, without asking, she would immediately provide me the data that I needed to discuss with that provider to make them understand what we were doing and that was really helpful.

Community of Practice

Sites were active participants in the virtual CoP. A site representative was present on all calls. Site champions attended an average of 80.5% of the monthly collaborative conferences (range 66 to 92%) during the active implementation period. All six teams participated in a promotion ceremony which was attended by local VA facility leadership as well as all of the PREVENT sites’ team members; peers from other sites acknowledged the implementation successes and lessons learned from the graduating site.

Implementation Outcomes

The implementation outcome data (Tables 2 and 3) indicated that implementation took place at all facilities given that all sites successfully completed at least 15 implementation activities (range 15–39, mean 26.5) as part of their action plans to improve the quality of TIA care over 12 months. Despite heavy clinical demands at all participating sites, none of the teams withdrew from PREVENT.

Table 3 provides longitudinal data for implementation outcomes as well as scores on CFIR constructs related to the implementation strategies which PREVENT facilitated among the local clinical champions and QI teams during the year of active implementation. The sites were ranked in terms of the final GO Score. All sites achieved a substantial increase in the GO Score from baseline to the midpoint of the active implementation period (6 months): mean of 1 at baseline (no facility-wide approach) to 5.5 at 6 months (some facility-wide approach activities). All sites began at the same level, 1, because they had no pre-existing organization around TIA. The kickoff and the Hub allowed teams to examine their data, identify gaps in care, develop an action plan, and start to come together as a team; these two elements of the PREVENT intervention were the key factors responsible for the observed improvements in team activation across sites during the first 6 months of active implementation.

I came out of it [kickoff] feeling that I knew what the issue is…I know what the goal is, and I have information sources so that I'm able to do it. …. one of the biggest things that I see is that I think that it really helped to come up with like more of a team…

I think [the kickoff] played a large role of how we decided we wanted to proceed … I feel like that was the first time we’ve, we really kind of drilled down on, on a good …starting plan …of an outline of what we wanted to accomplish.

The matrix display in Table 3 also indicates the key role of champions in promoting implementation success, as there was a direct link between the strong presence of effective champions (i.e., the “+2” level) and the GO Score at both the 6- and 12-month timepoints. At 6 months, the correspondence between a champion score of +2 and a GO Score of ≥ 6 (cf. sites F, A, and C) was 100% (3/3). None of the other CFIR constructs systematically distinguished the three sites with GO Scores ≥ 6 from the three sites without. Moreover, all three sites with GO Scores ≥ 6 at the 6-month timepoint ultimately achieved ≥ 15-point improvements (in absolute terms) in their WFRs over the 1-year active implementation period, accounting for 3 of the 4 sites (75%) with ≥ 15-point gains.
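The correspondence figures in this paragraph are simple set comparisons over the matrix rows. A minimal Python sketch, using only the 6-month site groupings stated above (a champion score of +2 at sites F, A, and C; a GO Score ≥ 6 at the same three sites); the helper names are hypothetical, and the full matrix in Table 3 carries more constructs than shown here.

```python
from typing import Set, Tuple

SITES = {"A", "B", "C", "D", "E", "F"}


def correspondence(condition: Set[str], outcome: Set[str]) -> Tuple[int, int]:
    """(k, n): k of the n sites meeting `condition` also meet `outcome`."""
    return len(condition & outcome), len(condition)


def distinguishes(construct_sites: Set[str], outcome_sites: Set[str]) -> bool:
    """True if a construct rating splits the sites exactly as the outcome does."""
    return construct_sites == outcome_sites


# 6-month groupings stated in the text.
champion_plus2_6mo = {"F", "A", "C"}
go_at_least_6_6mo = {"F", "A", "C"}

k, n = correspondence(champion_plus2_6mo, go_at_least_6_6mo)
print(f"correspondence: {k}/{n} = {k / n:.0%}")  # correspondence: 3/3 = 100%
print("champion splits sites like GO:", distinguishes(champion_plus2_6mo, go_at_least_6_6mo))  # champion splits sites like GO: True
print("sites with GO Score < 6:", sorted(SITES - go_at_least_6_6mo))  # sites with GO Score < 6: ['B', 'D', 'E']
```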

At 12 months, the correspondence between a champion score of +2 and a positive gain in the GO Score between the 6- and 12-month timepoints was likewise 100% (4/4; cf. sites F, A, B, and D). As before, none of the other constructs systematically distinguished the four sites that improved their GO Score between the 6- and 12-month timepoints from those that did not.

Furthermore, Table 3 indicates that the presence of an effective champion was a necessary but not sufficient condition for the strong presence of the CFIR constructs of Reflecting & Evaluating, Goals & Feedback, and Planning. For all five rows in Table 3 where a +2 score appeared for any of these three CFIR constructs (cf. site F, 6 and 12 months; site A, 6 and 12 months; site B, 12 months), the correspondence with a champion score of +2 was 100% (5/5). The reverse was not true, however: among rows with a champion score of +2, the correspondence with +2 scores on all three CFIR constructs was only 43% (3/7) (cf. site A, 12 months, Reflecting & Evaluating = +1; site C, 6 months, Planning = 0; site D, 12 months, all three constructs = +1). This indicates that the strong presence of effective champions acted as a precondition for the strong presence of Reflecting & Evaluating, Goals & Feedback, and Planning (rather than the other way around), helping to explain how champions at the +2 level influenced ongoing implementation.
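The necessary-but-not-sufficient reading is directional: every row with a +2 on Reflecting & Evaluating, Goals & Feedback, or Planning should also have a +2 champion, while the converse need not hold. The Python sketch below checks the forward direction using the (site, month) rows named in the text; the champion rows are taken from the groupings reported above (F, A, and C at 6 months; F, A, B, and D at 12 months). Because the text does not say exactly which three champion rows had +2 on all three constructs, the reverse figure is computed from the reported count rather than from row data. Function and variable names are hypothetical.

```python
from fractions import Fraction
from typing import Set, Tuple

Row = Tuple[str, int]  # (site, month) row of the Table 3 matrix


def consistency(condition_rows: Set[Row], outcome_rows: Set[Row]) -> Fraction:
    """Fraction of rows meeting the condition that also meet the outcome."""
    if not condition_rows:
        raise ValueError("no rows meet the condition")
    return Fraction(len(condition_rows & outcome_rows), len(condition_rows))


# Rows with a champion score of +2 (6 months: F, A, C; 12 months: F, A, B, D).
champion_plus2 = {("F", 6), ("A", 6), ("C", 6), ("F", 12), ("A", 12), ("B", 12), ("D", 12)}
# Rows with +2 on Reflecting & Evaluating, Goals & Feedback, or Planning.
any_construct_plus2 = {("F", 6), ("F", 12), ("A", 6), ("A", 12), ("B", 12)}

# Necessity: does every strong-construct row also have a +2 champion?
necessity = consistency(any_construct_plus2, champion_plus2)
# Sufficiency: reported count of champion rows with +2 on all three constructs.
sufficiency = Fraction(3, len(champion_plus2))

print(f"necessity {necessity} ({float(necessity):.0%})")
print(f"sufficiency {sufficiency} ({float(sufficiency):.0%})")
```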

Site E had multiple individuals who engaged in clinical champion activities and therefore had lower champion construct scores and achieved lower GO Scores. For example, individual team members at site E often engaged in activities that addressed processes within their own service areas, rather than carrying out coordinated, cross-service efforts led by a central champion. The negative valence in site D’s clinical champion construct reflected turnover in its local champion early in active implementation, which resulted in the lowest total number of activities completed. However, with the replacement of the local champion, the addition of new team members, dedicated EF, and CoP and Hub engagement by the new champion and team members, site D’s GO Score improved.

Although the sites completed a diverse range of implementation activities (Table 2), the most common categories included activities related to professional education (e.g., teaching house staff) and implementation of clinical programs (e.g., prospective patient identification systems). Teams that engaged in planning to the greatest degree were those with the highest number of completed implementation activities (Table 3). Sites varied considerably in terms of the timing of the implementation activities completed over the 1 year of active implementation (Fig. 1, Appendix 3). Half of the teams began strongly with implementation soon after the kickoff whereas other teams engaged in more activities during the latter half of the year.

DISCUSSION

Our data suggested that the presence of an effective champion was key for implementation success. Indeed, when one site lost its champion, implementation progress was halted, and then revived with the replacement of the local champion, who was subsequently supported with EF. Effective champions, individuals who “drive through” implementation according to CFIR,16 appear to play a critical role in engaging in the implementation strategies, including EF, and in fostering teams’ reflecting and evaluating, goal setting, and planning: activities that were related both to the total number of implementation activities completed and to the degree of team cohesion.37, 38

Our results also suggest that an alternative approach to implementation occurred at a site with a distributed champion model, in which several individuals shared the responsibility for actions usually performed by a champion. In this model, each individual drives implementation within their own clinical area rather than across the overall program. Although this site (E) did not achieve high scores for team functioning, it did complete many implementation activities. A key to the success of this approach is the degree to which the various champions are able to independently complete a given implementation activity with limited guidance from a central champion.

PREVENT site champions were diverse, including staff from neurology, nursing, pharmacy, and systems redesign. Our study findings indicated that the professional discipline of the champion was less important than the role they played in either performing implementation activities themselves or engaging other team members in the QI process.

The site team members, and especially the champions, regularly contacted the EF who provided information, support, and encouragement across a broad range of topics. Two key EF activities merit further description: conducting chart reviews and facilitating implementation of the patient identification tool. Clinical champions were sometimes dismayed when the monthly performance data indicated that some patients had failed process measures. The EF conducted targeted chart review which identified gaps in care or documentation; this chart review information supported the champions in their efforts to engage in quality improvement. The EF also worked with teams to implement a patient identification tool to identify patients with TIA who were cared for in the ED or inpatient setting. This tool was used at some sites to prospectively ensure that patients received needed elements of care and at other sites to retrospectively identify opportunities for improvement. Given that many of the champions were clinicians without prior QI experience, the EF was able to help connect clinicians with local clinical informatics staff to implement the patient identification tool.

These findings have direct relevance for healthcare systems like the VA, where quality improvement resources may need to be targeted at lower-performing facilities that may lack existing teams, have small patient volumes, and vary considerably in baseline context. The current findings emphasize the importance of EF and indicate that EFs should be flexible given the heterogeneity in site needs. Moreover, the combination of the in-person kickoff meeting and the Hub at the launch of the active implementation period was critical in three areas: the development of site-specific action plans based on site-specific performance data; the early formation of team identity; and the training of champions and QI teams to reflect on and evaluate their data. The high degree of Hub usage suggests that healthcare systems implementing QI programs should provide a forum for updated site-level quality of care data, as well as QI resources that can be readily shared across sites. Finally, healthcare systems should consider supporting QI teams within CoPs that serve as supported arenas for public accountability for progress on action plans, sharing lessons learned, and providing encouragement.

Contribution to the Literature

To our knowledge, the PREVENT implementation strategy bundle is one of the few implementation interventions to operationalize CFIR implementation process constructs a priori as strategies for training its QI teams and to prospectively evaluate their uptake. Indeed, a recent review of CFIR use in implementation research identified the need for studies that apply the CFIR prospectively, with a priori specification.34 Moreover, we provided specifications39 for the set of implementation strategies delivered as a bundle. In addition, we described the level and timing of engagement and implementation activities across QI teams. These findings provide evidence for specific implementation strategies in the setting of a complex clinical problem when no quality of care reporting system exists.40

Our results are aligned with a recent systematic review of the effect of clinical champions on implementation, which concluded that champions were necessary but insufficient for implementation.41 An emergent finding was that modest and strong positive ratings on the CFIR Planning construct were related to implementation success (especially with respect to the number of implementation activities completed).

Limitations

The primary limitation of PREVENT is that implementation occurred only within VA hospitals. Future research should evaluate implementation in non-VA settings. Because several implementation strategies were deployed simultaneously, it was difficult to disentangle the unique effects of each strategy. Although a six-site sample was sufficient to make case comparisons, future studies might include a larger number of facilities to evaluate additional implementation outcomes.

In summary, this study found that engagement in a bundle of CFIR-related implementation strategies facilitated the local adaptation and adoption of the PREVENT TIA QI program and that two alternative approaches to the role of champions were associated with implementation success.

Data Availability

These data must remain on Department of Veterans Affairs servers; investigators interested in working with these data are encouraged to contact the corresponding author.

Abbreviations

- ED: Emergency Department
- PREVENT: Protocol-guided Rapid Evaluation of Veterans Experiencing New Transient Neurological Symptoms
- QI: quality improvement
- TIA: Transient ischemic attack
- VA: Department of Veterans Affairs
- EF: External Facilitation or External Facilitator
- WFR: Without Fail Rate
- EHR: Electronic Health Record
- GO Score: Group Organization Score
- CFIR: Consolidated Framework for Implementation Research
- CoP: Community of Practice

REFERENCES

Bravata D, Myers L, Arling G, Miech EJ, Damush T, et al. Quality of care for Veterans with transient ischemic attack and minor stroke. JAMA Neurology. 2018;75(4):419-427.

Johnston KC, Connors AF, Wagner DP, Knaus WA, Wang XQ, Haley EC. A predictive risk model for outcomes of ischemic stroke. Stroke; a journal of cerebral circulation. 2000;31:448-455.

Johnston S. Short-term prognosis after a TIA: a simple score predicts risk. Cleveland Clinic Journal of Medicine. 2007;74(10):729-736.

Rothwell P, Johnston S. Transient ischemic attacks: stratifying risk. Stroke; a journal of cerebral circulation. 2006;37(2):320-322.

Kernan WN, Ovbiagele B, Black HR, et al. Guidelines for the prevention of stroke in patients with stroke and transient ischemic attack: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke; a journal of cerebral circulation. 2014;45(7):2160-2236.

Lavallée P, Meseguer E, Abboud H, et al. A transient ischaemic attack clinic with round-the-clock access (SOS-TIA): feasibility and effects. Lancet Neurol. 2007;6(11):953-960.

Luengo-Fernandez R, Gray A, Rothwell P. Effect of urgent treatment for transient ischaemic attack and minor stroke on disability and hospital costs (EXPRESS study): a prospective population-based sequential comparison. Lancet Neurol. 2009;8(3):235-243.

Giles M, Rothwell P. Risk of stroke early after transient ischaemic attack: a systematic review and meta-analysis. Lancet Neurol. 2007;6(12):1063-1072.

O'Brien EC, Zhao X, Fonarow GC, et al. Quality of Care and Ischemic Stroke Risk After Hospitalization for Transient Ischemic Attack: Findings From Get With The Guidelines-Stroke. Circulation Cardiovascular quality and outcomes. 2015;8(6 Suppl 3):S117-124.

Bravata DM, Myers LJ, Arling G, Miech EJ, Damush T, Sico JJ, Phipps MS, Zillich A, Yu Z, Reeves M, Williams LS, Johanning J, Chaturvedi S, Baye F, Ofner S, Austin C, Ferguson J, Graham GD, Rhude R, Kessler CS, Higgins DS Jr., Cheng E. Quality of care for Veterans with transient ischemic attack and minor stroke. JAMA Neurology. 2018;75(4):419-427.

Damush TM, Miech EJ, Sico JJ, et al. Barriers and facilitators to provide quality TIA care in the Veterans Healthcare Administration. Neurology. 2017;89(24):2422-2430.

Homoya BJ, Damush TM, Sico JJ, et al. Uncertainty as a Key Influence in the Decision To Admit Patients with Transient Ischemic Attack. Journal of general internal medicine. 2018.

Bravata DM, Myers L, Homoya B, Miech EJ, Rattray N, Perkins A, Zhang Y, Ferguson J, Myers J, Cheatham A, Murphy L, Giacherio B, Kumar M, Cheng E, Levine D, Sico J, Ward M, Damush T. The protocol-guided rapid evaluation of veterans experiencing new transient neurological symptoms (PREVENT) quality improvement program: rationale and methods. BMC Neurology. 2019;19:294.

Williams L, Daggett V, Slaven JE, Yu Z, Sager D, Myers J, Plue L, Woodward-Hagg H, Damush TM. A cluster-randomised quality improvement study to improve two inpatient stroke quality indicators. BMJ Qual Saf. 2016 Apr;25(4):257-64.

Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implementation Science. 2016;11:33.

Damschroder LJ, Lowery JC. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implementation Science. 2013;8(1):51.

Rattray NA, Damush TM, Luckhurst C, Bauer-Martinez CJ, Homoya BJ, Miech EJ. Prime movers: Advanced practice professionals in the role of stroke coordinator. J Am Assoc Nurse Pract. 2017;29(7):392-402.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50.

Bosworth H, Olsen M, Grubber J, et al. Two self-management interventions to improve hypertension control: a randomized trial. Annals of internal medicine. 2009;151(10):687-695.

Bosworth H, Powers B, Olsen M, et al. Home blood pressure management and improved blood pressure control: results from a randomized controlled trial. Archives of internal medicine. 2011;171(13):1173-1180.

Ranta A, Dovey S, Weatherall M, O'Dea D, Gommans J, Tilyard M. Cluster randomized controlled trial of TIA electronic decision support in primary care. Neurology. 2015;84(15):1545-1551.

Rothwell P, Giles M, Chandratheva A, et al. Effect of urgent treatment of transient ischaemic attack and minor stroke on early recurrent stroke (EXPRESS study): a prospective population-based sequential comparison. Lancet (London, England). 2007;370(9596):1432-1442.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10:21.

Bidassie B, Williams LS, Woodward-Hagg H, Matthias MS, Damush TM. Key components of external facilitation in an acute stroke quality improvement collaborative in the VHA. Implementation Science. 2015;10:69.

Ritchie MJ, Kirchner JE, Townsend JC, Pitcock JA, Dollar KM, Liu C-F. Time and Organizational Cost for Facilitating Implementation of Primary Care Mental Health Integration. J Gen Intern Med. 2019. https://doi.org/10.1007/s11606-019-05537-y.

Cruess RL, Cruess SR, Steinert Y. Medicine as a Community of Practice: Implications for medical education. Acad Med. 2018;93(2):185-191.

Kizer KW, Dudley RA. Extreme makeover: Transformation of the veterans health care system. Annual review of public health. 2009;30:313-339.

Hagg H, Workman-Germann J, Flanagan M, et al. Implementation of Systems Redesign: Approaches to Spread and Sustain Adoption. Rockville, MD (USA): Agency for Healthcare Research and Quality;2008.

Li J, Zhang Y, Myers LJ, Bravata DM. Power Calculation in Stepped-Wedge Cluster Randomized Trial with Reduced Intervention Sustainability Effect. Journal of Biopharmaceutical Statistics. 2019;29(4):663-674.

Mdege ND, Man MS, Taylor Nee Brown CA, Torgerson DJ. Systematic review of stepped wedge cluster randomized trials shows that design is particularly used to evaluate interventions during routine implementation. J Clin Epidemiol. 2011;64(9):936-948.

Hamilton A, Brunner J, Cain C, et al. Engaging multilevel stakeholders in an implementation trial of evidence-based quality improvement in VA women’s health primary care. Transl Behav Med. 2017;7(3):478-485.

NVivo qualitative data analysis software. Version 12. QSR International Pty Ltd; 2018.

Miech E. The GO Score: A New Context-Sensitive Instrument to Measure Group Organization Level for Providing and Improving Care. Washington, DC; 2015.

Averill JB. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qualitative Health Research. 2002;12(6):855-866.

Creswell JW, Klassen AC, Clark VLP, Smith KC. Best Practices for Mixed Methods Research in the Health Sciences. Washington, DC, USA: Office of Behavioral and Social Sciences Research; 2011. http://obssr.od.nih.gov/scientific_areas/methodology/mixed_methods_research/pdf/Best_Practices_for_Mixed_Methods_Research.pdf.

Averill JB. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qualitative Health Research. 2002;12(6):855-866.

Miech EJ, Rattray NA, Flanagan ME, Damschroder L, Schmid AA, Damush TM. Inside Help: An Integrative Review of Champions in Healthcare-Related Implementation. SAGE Open Medicine. 2018;6:2050312118773261.

Flanagan ME, Plue L, Miller KK, Schmid AA, Myers L, Graham G, Miech EJ, Williams LS, Damush TM. A qualitative study of clinical champions in context: Clinical champions across three levels of acute care. SAGE Open Medicine. 2018;6.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the Consolidated Framework for Implementation Research (CFIR). Implementation Science. 2016;11:72.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implementation Science. 2013;8:139.

Waltz TJ, Powell BJ, Fernandez ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implementation Science. 2019;14:42.

Funding

This work was supported by the Department of Veterans Affairs (VA), Health Services Research & Development Service (HSRD), Precision Monitoring to Transform Care (PRISM) Quality Enhancement Research Initiative (QUERI) (QUE 15-280) and in part by the VA HSRD Research Career Scientist Award (Damush RCS 19-002).

Author information

Contributions

All authors participated in the revision of the manuscript and read and approved the final manuscript. DMB: obtained funding and was responsible for the design and conduct of the study including intervention development, data collection, data analysis, and interpretation of the results. TD, LJM, EJM, NR: instrumental in the design of the intervention, implementation strategies, data collection, data analysis, and interpretation of the results. TD and DMB drafted and revised the manuscript along with edits from LP, EJM, NR, BH, LM. BH: instrumental in the design of the intervention and data analysis. LP: participated in data analysis, and interpretation of results. AJC, JM, SB, CA: participated in data analysis. JJS, AP, ZY, LDM: participated in study design, data analysis, and interpretation of results. BG and MK: participated in the intervention design and data collection.

Ethics declarations

Disclaimer

Support for VA/Centers for Medicare and Medicaid Services (CMS) data is provided by the VA Information Resource Center (SDR 02-237 and 98-004). The funding agencies had no role in the design of the study, data collection, analysis, interpretation, or in the writing of this manuscript.

Ethics Approval and Consent to Participate

PREVENT was registered with clinicaltrials.gov (NCT02769338) and received human subjects (institutional review board [IRB]) and VA research and development committee approvals. The implementation evaluation received separate human subjects (IRB) and VA research and development committee approvals (IRB 1602800879). Staff members who participated in interviews provided verbal informed consent.

Consent to Publish

This manuscript does not include any individual person’s data.

Conflict of Interest

The authors declare that they have no competing interests. TD, AP, and LM disclosed funding from a VA HSRD QUERI grant. DMB, EJM, NR, LP, BH, JM, AJC, SB, CA, JJS, ZY, LDM, BG, and MK reported no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

ESM 1

(DOCX 224 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Damush, T.M., Miech, E.J., Rattray, N.A. et al. Implementation Evaluation of a Complex Intervention to Improve Timeliness of Care for Veterans with Transient Ischemic Attack. J GEN INTERN MED 36, 322–332 (2021). https://doi.org/10.1007/s11606-020-06100-w
