Abstract

Bedload transport is an important factor to describe the hydromorphological processes of fluvial systems. However, conventional bedload sampling methods have large uncertainty, making it harder to understand this notoriously complex phenomenon. In this study, a novel, image-based approach, the Video-based Bedload Tracker (VBT), is implemented to quantify gravel bedload transport by combining two different techniques: Statistical Background Model and Large-Scale Particle Image Velocimetry. For testing purposes, we use underwater videos, captured in a laboratory flume, with future field adaptation as an overall goal. VBT offers a full statistics of the individual velocity and grainsize data for the moving particles. The paper introduces the testing of the method which requires minimal preprocessing (a simple and quick 2D Gaussian filter) to retrieve and calculate bedload transport rate. A detailed sensitivity analysis is also carried out to introduce the parameters of the method, during which it was found that by simply relying on literature and the visual evaluation of the resulting segmented videos, it is simple to set them to the correct values. Practical aspects of the applicability of VBT in the field are also discussed and a statistical filter, accounting for the suspended sediment and air bubbles, is provided.

Similar content being viewed by others

Introduction

The sediment transport capacity of a river is determined by the constant interaction between the water flow and the riverbed, both of which are equally important sides of the mechanism. This leads to a complex matrix of parameters that are necessary to know if one attempts to describe the sediment transport features. This is especially true for the bedload transport, where sediment particles with high particle Reynolds number are sliding, saltating and rolling on the riverbed. During their movement, they are constantly experiencing friction and collision with each other and the immobile parts of the bed (Parker 2004). The complexity of the process on one hand lies in its temporal and spatial variations, but also in its partially random and stochastic nature (Einstein 1937; Csoma 1975). In fact, conventional measuring and sampling methods (i.e., sediment trapping instruments, lowered to the bottom of the river; e.g., Hubbel 1964) that could help to gather data and lead to better understanding of the phenomenon are limited. Besides being very time and energy consuming, they have inherent errors and limitations both in temporal and spatial terms. For example, if the river bed is characterized by bedforms or dunes, these trap boxes may get stuck on top of them, while the bedload sediment passes under the sampling tool. Similarly, the measurements are biased if the flow turns the tool away from being parallel to the flow direction. Also, choosing wrong sampling time or sampler type can yield false results (Hubbel 1987; Gaweesh and Van Rijn 1994; Liu et al. 2008). Furthermore, all of these methods are intrusive, i.e., they disturb the flow in the vicinity of the sampler, hence the bedload too (Hubbel 1964).

Due to the complexity of the bedload process and the limitations in its measurement, there is no univocally accepted, universal equation to describe and calculate bedload transport rate, based on the flow and riverbed characteristics. For this reason, researchers are constantly developing and experimenting with new measurement methods, mainly so-called surrogate or indirect ones, that could possibly replace the conventional ones.

One large group of the indirect bedload measurement methods build on acoustic techniques. Numerous studies tested the applicability of Acoustic Doppler Current Profiler (ADCP) to quantify bedload transport based on the bias between bottom tracking and actual GPS positions of the measurement vessel (e.g., Rennie et al. 2004; Rennie and Villard 2004; Latosinski et al. 2017). When bedload transport is represented by propagating bedforms, tracking of dune displacements, with single beam echo-sounders or ADCPs, can provide information on transported amount of sediments (e.g., Engel and Lau 1981; Kostaschuk et al. 1989; Dinehart 2002). Moreover, recently, the more sophisticated multibeam echo sounding (MBES), providing high-resolution 3D bed geometry, was tested to quantify bedload transport based on repeated surveys (e.g., Duffy 2006; Abraham et al. 2010). Furthermore, MBES combined with image-based technique, called Acoustic Mapping Velocimetry (AMV), has also been developed and tested by Muste et al. (2016) and You et al. (2021), providing a very promising alternative for bedload transport assessments.

The other large group of indirect methods exploits videography and image processing, where video recordings of the moving particles are taken and analyzed. Various experiments were carried out in the past decades such as particle tracking velocimetry (PTV) (Drake et al. 1988; Papanicolaou et al. 1999) to image differencing (Radice et al. 2006; Conevski et al. 2019, 2020). However, these were limited to laboratory circumstances, with a lesser potential to deploy in the field. The exception was the so-called Particle Image Velocimetry (PIV; Adrian 1991), which provided fast and non-intrusive measurement of flow velocities both in laboratories and field conditions, even where traditional measurements would have been difficult to carry out (Fujita et al. 1998; Muste et al. 2011; Detert et al. 2019).

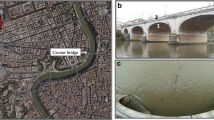

The main goal of this study was to develop a video-based bedload analysis method which has a potential for further field-use, performing underwater imaging in a large river. This was done through a novel combination of two already existing image processing methods, namely the Large-Scale Particle Image Velocimetry (LSPIV; Fujita et al. 1998; Muste et al. 2008; Fleit and Baranya 2019), which is an extension of the traditional PIV (Adrian 1991; Willert et al. 1991) and the Statistical Background Modelling (SBM; also known as Gaussian Mixture Modelling, Statistical Background Segmentation or Adaptive Background Mixture Modelling; Stauffer and Grimson 1999; Kaewtrakulpong and Bowden 2002), for the analyses of laboratory gravel bedload videos. The first is already widely known in general hydraulic measurements, while the latter counts as the most sophisticated, robust and applied method in general computer vision and motion detection.

Laboratory setup

The laboratory tests were carried out in a flume with a rectangular cross section, at the Hydraulic Laboratory of the Norwegian University of Science and Technology (NTNU) in Trondheim. The channel was 12.5 m long, of which 10 m was mobile bed (gravel sediment), and 0.6 m wide. Originally, this setup was used to measure bedload velocity with ADCP (Acoustic Doppler Current Profilers). A camera was mounted facing downwards the channel bed in order to use the image frame differencing method to gain velocity information this way too (Conevski et al. 2020). The experimental setup can be seen in Fig. 1. At the end of the flume, a 1 m long and 1 m wide bedload trap was installed to collect and measure the transported sediments. After sieving the gathered bedload material, the grainsize distribution was established to be D50 = 7.55 mm and D90 = 10.45 mm. In this study, the videos of 3 test runs were processed, each of them having different bedload transport conditions. All experiments represented the transport of gravel particles. The hydraulic parameters of the three different tests can be seen in Table 1. Besides, for Experiment 3, three additional (repetition) videos were analyzed in this study, taken during the same run, but at different times in order to see if there was any significant temporal fluctuation in the bedload transport rate.

(modified from Conevski et al. (2020))

Side-view sketch of the flume and the camera setup

The footages were 8–10 s long and taken by a high-speed camera AOS1000 with Full HD (1984 × 1264 pixels) resolution and 139 framerate (fps). In order to decrease computational need, the images were grayscale (i.e., only 1 value (intensity) for each pixel, instead of 3 (RGB)). The Field of View covered an area of approximately 19.8 × 12.6 cm (Fig. 2). The camera was submerged a couple of centimeters into the water. Due to the high fps and resolution, suspended sediment and air bubbles appeared as either fast-moving blurs and noises in the videos, or slow-moving particles (as they sticked to the camera case and slowly migrated onward). These were especially true in case of EXP1 and EXP2. This phenomenon was accounted for later on.

Methodology

The method developed in this paper is built on two pillars: (1) Large-Scale Particle Image Velocimetry (LSPIV) and (2) Statistical Background Modelling (SBM). The former is already known in hydraulics as one of the innovative ways to calculate flow velocities from video footages. The latter is generally known in moving object recognition (video surveillance systems and traffic control), but it is not widely known in hydraulics so far (the exceptions are focusing on fish monitoring, e.g., Huang et al. 2015; Scofield et al. 2021). We name the new method Video-based Bedload Tracker (VBT).

Image preprocessing

Preliminary tests showed that the VBT detects suspended sediment particles and air bubbles as coarse sediment particles of the bedload transport. This is due to the fact that both the fine sediments and the bubbles are transported close to the camera lenses, and consequently, they are apparently large and fast-moving particles. Since these false particle recognitions would have biased the bedload transport calculation, a 2D Gaussian filter was applied on the videos (Fig. 3) until a point where the disturbances were weaker based on visual evaluation.

After applying the Gaussian filter, the LSPIV and SBM algorithms are both run through the preprocessed image sequences. It is important to mention that the two algorithms are run separately and parallel at this point. This is to avoid their potential, individual errors passing on. For instance, due to its learning process, the SBM has a relaxation (adaptation time; see later in Section “Statistical Background Modelling (SBM)”), meaning particles that are settling down/stopping are still detected by the model (until a certain degree of time), and they do not disappear instantly from the binary image, only gradually. This means they are still accounted for as surfaces/masses even when they stopped a few frames ago. On the other hand, the PIV grid solution can have false velocity detections on its own: low cross-correlation values create errors in velocities.

Large-Scale Particle Image Velocimetry (LSPIV)

The method is based on matching surface patterns through image sequences of the recorded fluid from which local displacements and then two-dimensional velocity vectors can be calculated. The patterns are detected in the pre-defined interrogation areas (IA), having a calculation node in their centers (Fig. 4). The calculation nodes build up a grid over the image; velocities are calculated at each node. It is the IA pattern that is searched for in the following image using the cross-correlation as a similarity index method. The candidate displacement is going to be the distance between the original position and the position with the highest cross-correlation. The displacements are refined with sub-pixel displacement approximation method presented by Nobach and Honkanen (2005). The user needs to define the Search Areas (SA) around the IAs, to speed up the calculations. These SAs are estimation windows, which are possibly going to contain the displaced pattern, avoiding the unnecessary calculation of cross-correlation all over the image. The smaller the SA is, the lower the computational demand becomes; however, the SA has to be large enough to contain the true displacements. Each gridpoint of the computational grid had the same size of IA, and SA around them, respectively. Additionally, the neighboring SAs were overlapping. In this paper, an in-house developed LSPIV code was used (Fleit and Baranya 2019). The IA size were chosen to be smaller than the particles. This contradicts conventional PIV recommendations (i.e., at least six particles to be captured within the IA); however, the nature of the data (1), the "flow" itself (2), and the post-processing methodology (3) justify this setting, as detailed below: (1) After preprocessing the images with contrast limited adaptive histogram equalization (CLAHE; Yadav et al. 2014), the image matrices cover a wide range of grayscale intensity values (contrary to the practically binary frames in conventional PIV) forming patches to be matched on consecutive frames. This approach is often followed in classical LSPIV calculations as well, where the movement of patches (debris, sediment plumes, turbulent boils, etc.) and not actual particles are analyzed; (2) In contrast with the fluid flow, where the velocity field and thus the particle movements are rather coherent and smooth, the movement of the particles is less deterministic. Apart from collisions, the local, individual particle velocities are independent from one another. Using a large enough IA to capture multiple particles (of different velocities) could not reflect the real particle velocity conditions and would result in a potentially erroneous, spatially averaged velocity field; (3) Due to the dense LSPIV grid, different parts and the direct proximity of each particle is characterized with multiple velocity vectors. For the actual bedload calculations, only the vector closest to the center of the particle (derived via background modelling; see later) is used, as it characterizes the velocity of the given particle the best.

The structure of the LSPIV. The pattern found inside the IA (yellow square) is looked for in the consequent image, inside the SA (blue rectangle). From the measured displacement and time between frames, the calculated velocity vector is added to the given grid point (center of the IA). This is carried out on every point of the grid (black dots) and results in a velocity vector field as seen here. The grid is fixed during the whole sequence or video

Statistical Background Modelling (SBM)

This method is mostly used in video surveillance systems (e.g., observing the perimeter of guarded buildings or traffic; Zhang et al. 2018) for separating the immobile background of a video from its mobile foreground parts. It is one of the most trustworthy motion detection approaches. Its popularity is due to its robustness: it is able to handle lighting changes, detects slow movement, lessen the effect of smaller camera displacements, tracks objects in crowded scenes, deals with repetitive motions and it can introduce or remove objects (if they start to move or stop) inside of the field-of-view (Stauffer and Grimson 1999). In the last decades, the method has gone through further improvements, for instance: (1) it became more resilient to noise and shadows (Kaewtrakulpong and Bowden 2002), (2) it was adapted on videos from moving cameras (Hayman and Ekhlund 2003), and (3) overcame the limitations of using high-resolution RGB cameras with low-resolution Time-of-Flight (depth information) cameras (Langmann et al. 2010).

In the following, a short and basic introduction of the method is provided, with Fig. 5 presenting the general flowchart of the SBM on a grayscale video. The footage is analyzed frame-by-frame and pixel-by-pixel. The individual pixel intensity (gray value) histogram in each pixel is drawn, based on the occurring values at their location during the previous frames and the current one. It is then assumed that the peak of the histogram (the most probable/frequent intensity) is the background value of the given pixel. Any other value is treated as they belonged to moving objects, passing in front of the immobile background. To rationalize calculation times, these histograms are covered and replaced by Gaussians as they can be described by their mean and standard deviations only (Bouwmans et al. 2010). Their initial number is defined by the user. A matching operator is also set which will define if an intensity value fits any of the Gaussians or not. If it does, then the so-called weight parameter of that Gaussian will increase, while the others’ will decrease (i.e., their sum needs to be 1). If it does not, then the value is defined as the mean of a new Gaussian, with the lowest weight. In this simplified model, the mean of the Gaussian with the largest weight (i.e., the tallest one in the histogram) is defined as the background value of the pixel. If a new immobile object appears and stays, then its new Gaussian will eventually outweigh the previous value. Hence, this adaptation needs a short amount of time due to the statistic nature of the method and happens after a few frames (recovering time, relaxation time). Still, this recovery happens faster than it does in other motion detection algorithms (Stauffer and Grimson 1999; Bouwmans et al. 2010). Any occurring values that do not fit the current background Gaussian are categorized as foreground. This background calculation is carried out in each pixel of the frame, resulting in the background image. This image is then subtracted from the original frame to retrieve the difference, i.e., the foreground. These steps are carried out in each frame of the video. The total background and foreground image sequences are then ready for further analysis. The background detection and subtraction in this paper were based on the MATLAB vision.ForegroundDetector code (MATLAB 2011) which uses the above introduced SBM.

Flowchart of the Statistical Background Modelling method. Step 3.1 showcases an example for the frequency diagram of a pixel (blue). The simplifying Gaussian curves can be seen with black (two curves in this example; bimodal background distribution). Only the describing parameters of the curves are stored in each pixel, reducing computational time

In this study, this method was adapted to separate the moving bedload (foreground) from the fix bed (background) and to analyze the former in details. Unlike image-differencing (the other well-known motion detection approach; Lu et al. 2003), this method uses more than the consecutive two images step-by-step and sets a dataset for the whole video using more frames to learn and adapt for the calculation. To make the calculation easier, it is a well-known step to turn the foreground images into binary images (Fig. 5) where the pixels that are part of moving objects receive the value 1 (white), while the rest is 0 (black). As the next step, the areas of the white, moving particles are calculated individually, along with the coordinates of their centroids. Here, the assumption was made that all gravel particles are sphere-shaped, so their equivalent diameter and corresponding spherical volume could be derived based on the detected areas, in each frame. Multiplying these volumes by a constant sediment density of 2650 kg/m3, the mass of each moving particles could be approximated.

Combination of the two methods; Bedload Tracking Velocimetry (BTV)

The main novelty of this paper is the combination of the Eulerian LSPIV velocities with the SBG for the analysis of the videos. The centroid coordinates are taken from the background model and the closest velocity vector of the LSPIV grid is then interpolated onto that point (closest neighbor interpolation; Fig. 6). As a result, particle data are available in a Lagrangian manner, i.e., the list of moving particles, their size (diameter and mass), position, number and velocity is available for each frame.

Calculation of bedload transport rate

First, using the flow-directional components of the velocities only, the momentum of each individual, moving particle of the image is calculated and summarized [kg∙m/s], then divided by the total area of the FOV, resulting in the instantaneous specific bedload transport rate [kg/(m∙s)]. This is carried out for every video frame (Fig. 7). At the end of the footage, the cumulative mean is calculated for qb, from which the total bedload transport rate in the flume (Qb [kg/s]) is finally determined by multiplying qb with the width of the flume (0.6 m).

The reason behind performing the LSPIV and the SBM separately as a first step was to make them independent, so their potential flaws do not influence each other. Rather, they would go through a mutual reinforcement as a trade-off (the main hypothesis of this paper). The sources of these errors are inherent in the two methods; however, they are related to different substeps of the bedload estimation procedure as both the LSPIV and the SBM are used for different tasks in the proposed combined framework. While the SBM is only responsible for the detection of the moving particles, LSPIV is only used for velocity calculations. Consequently, neither of the two methods is suitable for the estimation of bedload alone. The elimination of the potential errors is not done in a controlled manner like a filtering, but is rather a result of the formula used for bedload calculations. For instance, if there is relaxation (see on Section “Statistical Background Modelling (SBM)”) from the SBM in the given point, there is no velocity from the LSPIV, because in reality there is no displacement of the IA (or just very small velocities from the PIV noise/false detection) between the two consecutive frames. Indeed, their impulse would be very low and close to zero, not influencing the overall bedload transport rate calculation. Hence, it is not necessary to filter out these false detections from neither the SBM, nor the LSPIV. It is known to be problematic to manually pick the right cutoff filter values (either velocity or size) above or under which the results are considered to be noises and left out. This way, the main hypothesis being true could showcase one of the advantages of the method.

Sensitivity analysis

Velocities

Manual counting was carried out in case of EXP3, in order to get a validation of the velocity range. We chose this experiment since it had multiple repetition videos and was relatively undisturbed by suspended sediment or air bubbles. We followed the path of selected gravels in the videos, through several frames, and measured their displacement to calculate their velocities. In general, velocities seemed to be between 0.05 and 0.80 m/s after analyzing approximately 200 frames.

Furthermore, we wanted to ensure that it is indeed an advantage to combine the two methods via interpolation only after their individual analyzes, and not before (i.e., without interpolation and the mutual reinforcement discussed earlier, by simply using average mass in motion and velocity values per images to calculate bedload transport rate). We, therefore, performed a statistical assessment of the LSPIV velocities (Fig. 8). The results clearly showed that using the mean of the frame averaged velocities (i.e., over-all average; purple line in Fig. 8), the actual bedload transport is significantly underestimated, because low and incorrect velocity (even false negative) vectors were present due to false recognition of the LSPIV. A minimum cutoff velocity would have been necessary to be chosen.

Statistical analysis of the LSPIV results in EXP3. Orange dashed line depicts the time series of the detected maximum velocities for each frame along the video. Linear green is the mean of the detected maximum velocities. Blue dashed line depicts the time series of the frame-averaged velocities and the purple is its mean. Yellow is the time series of the detected minimum velocity in the frames, with linear light blue being its mean. These latter, close-to-zero and negative values were false detections/noises

After confirming the presence of erroneous velocity vectors, a sensitivity analysis was carried out to test the effect of introducing a minimum velocity threshold on the calculated bedload transport rates. It can be observed that introducing a 0 m/s filter, i.e., no gravel should be moving against the flow, did not change the original result, only by ca. 0.5%. By increasing this threshold velocity, the change remained around 1% up until 0.05 m/s was reached. According to the manual count, this was roughly the lower limit of the moving particle velocities. Thus, introducing thresholds higher than this would result in losing correctly calculated grains and velocities, leading to an underestimation of the bedload transport. Even without threshold filter, the difference between the calculation and the laboratory measurement remained under 10%, enhancing that the main hypothesis (i.e., the advantage of merging the LSPIV and SBM results; mutual reinforcement) was correct and there was no need for thresholding and further preprocessing (Fig. 9).

Sensitivity on chosen video footages of EXP3

EXP3, least affected by bubbles and suspended sediment, was chosen to assess if the method is sensitive to the video footage selection. For EXP3, four video footages, recorded at different time sections of the whole experiment, were analyzed. The calculated bedload transport rates for the four recordings showed that the proposed methodology provides consequent performance during all the videos (Fig. 10). Note that the very same set of input parameters was applied, and the deviation from the experimental data remained below 10%.

Input parameters

It is important to mention that even though the two methods have several free parameters, the parameter setting used for the EXP3 repetitions below (and later for EXP1 and EXP2) was based on visual observation and reasonable estimations. This is in fact an advantage of videography and image-processing methods, in general, that they allow the user to set the algorithm parameters correctly before continuing with calculations and further quantifications. The sensitivity analyses below confirm that optimal parameter setup can be found based on visual observations and literature recommendations. The selected parameters are consequently indicated with red symbols (REF) in Figs. 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24. These REF parameters were the same and kept constant for all the videos (EXP1, EXP2, EXP3 and its repetitions), and only the subject parameter of the sensitivity analysis was changed to see its effect on the bedload transport rate. To quantify sensitivity, we proposed a ± 10% margin measured from the transport rate reached by the REF parameter setup. If the calculated transport rate was in that range with the analyzed parameter, then the method was defined as insensitive to it. Choosing ± 10% as threshold was based on the bedload transport being strongly stochastic phenomenon, as it has been discussed in earlier papers (e.g., Einstein 1937; Ancey and Pascal 2020). Field measurement studies (Csoma and Szigyártó 1975; Recking et al. 2012), with conventional sampling methods, showed the uncertainty domain for bedload transport measurements can range anywhere between ± 25 and 100%. In laboratory, this was found to be somewhat lower, with a value of ± 10% for shorter sampling times (around 10 s). The duration of the test videos analyzed in our paper belonged to this category. Hence, the choice of 10% for the acceptable limit was made with these considerations.

The change of detected D50 values from the image processing method due to different cutoff particle sizes. Even though it shows how significantly false detections were present, together with Fig. 21, they prove the effectiveness of initial method separation and mutual reinforcement as these false positives did not influence the calculated bedload transport rate

Effect of the background ratio

The background ratio sets the minimum probability for a pixel to be considered as background (immobile) value. It is an overall prior probability in the given pixels (Power and Schoones 2002). If the background is expected to change frequently, a higher value needs to be set, while in case of lower background motion it should be lower. At the analyzed laboratory videos, most of the bed was unchanged. Some of the gravel bed particles were shaking or swept away, but the majority remained. Also, most of the moving particles travelled through the camera FOV, and only a few particles settled during the video recordings (i.e., new background values were not adapted, relaxed). This meant a unimodal background distribution in most pixels. The sensitivity analysis results confirm this too. Under 0.7 probability (the default value from literature; MATLAB 2011), the variation of the calculated bedload transport rate remained within the 10% threshold (Fig. 11). However, setting the parameter to higher values (> 0.7), a significant decrease in the calculated transport rate was observed. This is because the background did not change frequently enough.

Effect of the number of Gaussians

We analyzed the influence of the number of Gaussians, i.e., the number of covering curves (discussed in Fig. 5). Note that with increasing changes in the background, the higher this value has to be (more modes require more Gaussians). In the analyzed videos, the background was rather permanent; hence, increasing the number of Gaussians has no significant influence (Fig. 12).

Effect of the number of learning frames

The number of learning frames sets the number of initial training frames for initializing the Gaussian mixture model. Effectively, the user does not receive specific foreground detection result in this first set of images as they are solely used for setting the Gaussian modes as a start. The correct amount depends on the velocities of the moving objects, but also on the framerate of the video. Too low number led to miss certain moving gravels, while high numbers led to longer relaxation times for settling particles. In the latter case, particles might be merging together with neighboring, moving gravels. As Fig. 13 clearly suggests, the VBT is indeed sensitive to this parameter, indicating a variance of ± 20% in the detected bedload transport rate.

Effect of the video framrate

Decreasing the framerate (in frame per second—fps) of the source video was also tested by excluding image sequences from the original footage. This way the timestep between analysed frames was increased. The estimated bedload transport rate was lower when the fps was decreased to either half or third of the original. It is important to mention that the SA size was adjusted accordingly, i.e., with higher fps, larger SA is needed to avoid loosing particles. This way, the effect on the LSPIV was not tested as it is known from previous studies (Radice et al. 2006). The SBM, however, suffered the loss of frames, as the dataset shrinked (fewer frames, higher timesteps, loss of moving particles with high variances).

It is intuitive that if the framerate is different, the number of learning frames should also be adjusted. Hence, sensitivity analysis was carried out with the three different fps to see how the number of learning frames has to be chosen to keep the good match with the physically measured bedload transport rate (Fig. 15). The results suggested that it is possible to use lower framerates, but number of learning frames has to be well chosen.

Effect of the learning rate

By default, the hereby used Gaussian mixture model adapts the rate of refreshing its background model parameters (Bouwmans et al. 2010) during the training frames, by using the learning rate parameter, which is calculated for every Gaussian at every frame (Lee 2005). After the initial training period is over (Section “Effect of the number of learning frames”), this parameter takes a constant value the user has chosen. Practically, it describes how fast the model can adapt to quickly changing environment. A brief sensitivity analysis was carried out for choosing the constant value of this parameter as well (Fig. 16). For the reference run (marked with red), the default value of 0.005 (MATLAB 2011) was kept and indeed gave the best result out of the examined setups. It is known that high learning rates can lead to pixel saturation (Bouzerdoum and Beghdadi 2010), and the false positive detections start to increase (Zitouni et al. 2016). As a result, the bedload transport rate also started to increase, as the detected moving patches started to grow and merge together due to high saturation. On the other hand, when the value is too low, slow moving particles are neglected, and even though the detection of smaller and faster particles is becoming more accurate, the algorithm underestimates the bedload transport rate.

Effect of the initial mixture model variance

One of the most important inputs is the initial mixture model variance (measured in grayscale intensity; GSI). When estimating the background in each pixel, the goal is to determine which Gaussian of our mixture model has the highest probability to contain the background value. This means, the distribution with the most frequent values and smallest variance is the actual background distribution (Stauffer and Grimson 1999). When the gravel particle in the given pixels is immobile, the same values are going to appear repeatedly, with a low variance. The moving particles will represent higher variances. This parameter sets the initial value for the variance of our initial Gaussians. If it was set too low, then even smaller changes of the background gravel particles (e.g., shaking or lightning changes) were categorized as foreground, increasing the bedload transport rate (Fig. 17). On the other hand, setting it to higher values resulted in omitting slower moving particles from the foreground as the model defined them as immobile. Consequently, the LSPIV were not coupled with them. This is because their variance is lower than in case of fast movement. Interestingly, by setting the algorithm to “Auto” mode for defining this value by itself, the same incorrect result was reached as in case of the high variance. The results show the importance of choosing this parameter correctly; however, it could be done by visual observation of the binary videos after the background modelling. The herein presented method seems to be sensitive to this parameter. It is especially true at low values, when the increased relaxation time led to foreground areas merge into ones, increasing their estimated mass and relocating their centroids.

Effect of the LSPIV interrogation area size

The effect of the LSPIV IA (and SA proportionally) size was also analyzed. Generally, PIV methods work well when there is a coherent pattern inside of their IA to look for in the SA. In case of bedload particles, it can be difficult to choose this parameter well, as the particles are not necessarily moving in a coherent way, but rather collide, circumvent, separate and saltate. That is, in case of large IAs, the LSPIV may not necessarily be able to reflect the smaller fast-moving particles, but rather provides spatially averaged, and hence lower value. Also, if the majority of the IA pixels contains immobile particles, and only a few of them presents moving particles, that can also corrupt the cross-correlation calculation. Keeping these in mind, the original (reference) size of the IA was set to sub-particle level (12px × 12px, which accounted for 4.8 mm × 4.8 mm at this point; see Fig. 18) when the satisfactory bedload transport rate was achieved. For the sake of the sensitivity analysis, the LSPIV calculations were performed with smaller and bigger IA sizes as well (around D50 and above D90, Fig. 19). Results show that the larger the IA became, the more significant the difference started to be, resulting in gradually lower bedload transport rates. This was due to the reasons above, the detected moving particles were coupled with lower, spatially averaged velocities. If the IA was set to be even smaller than the original, the good result did not change significantly. If the IA size was kept under at least D50, the result stayed in the acceptable 10% difference range.

Effect of LSPIV vector density

Briefly, the effect of the LSPIV computational grid density (i.e., vector density) is also discussed. The original grid distribution (marked with red in Fig. 19) was already as sparse as possible in regard of the used IA size, meaning the interrogation areas of the neighboring grid points were fitting but not overlapping. Analyzing lower grid distribution would have not benefitted the research, as areas of the images would have been neglected. This is due to moving the IAs further and further away from each other, along with the SA, resulting in lower bedload transport rates. The effect of doubling the grid density (from 40 × 25 points to 80 × 50) was assessed. The result did not differ significantly (lower than 10%) from the original run, meaning that the method is not particularly sensitive to grid resolution within reasonable limits.

Effect of pixel connectivity

Pixel connectivity is a parameter not specially belonging to either the background mixture model, or the LSPIV, but rather to general image morphology. When the foreground segmentation is carried out, the resulting images are binarized (stationary pixel–0; pixel in motion–1). From this point, the pixel connectivity parameter defines how the pixels are connecting and which pixels belong to the same object. If they are a connected group, then they form one object. Hence, this parameter plays a role in the calculation of grainsizes. It can either set to the value 4, defining connectivity between pixels if one of their edges touches, or to 8, when it is either one of the edges or corners. In a 3D case, with voxels, the parameters could be 6, 18 or 26. The sensitivity analysis showed that detected bedload particles were separated confidentally by the algorithm, even when they were close to each other, and did not change the bedload transport rate significantly.

Effect of cutoff particle size

A cutoff particle size was also tested. The point of this sensitivity analysis was to prove why the LSPIV and the SBM run separately and only combined later, via interpolation. The cutoff particle size defines the minimum size (px2) below which foreground detections are neglected, when calculating bedload. In the reference run, the most obvious value, 400 px2, was chosen since this was equivalent with D = 2 mm (with the resolution in this case being 0.1 mm/px; also assuming spherical particles), below which particles are categorized as sand. The experiment was controlled by gravel transport, thus this lower limit was natural and anything below it could be named as noise or false detection. However, by reducing the cutoff to 0 mm, and even increasing it further (up until cca. D = 6.2 mm, which was around the D10 value of the actual bedload after measuring and sieving in the laboratory) did not influence the good result with more than the acceptable 10%.

In order to prove the main hypothesis of mutual reinforcement (Section “Calculation of bedload transport rate”) from the herein introduced method, Fig. 22. shows the D50 (sphere equivalent) value of the detected moving particles, while using the different cutoff particle sizes from Fig. 21. The D50 value changed drastically during the analysis, meaning numerous false positive detections were indeed present, but they did not play a role in calculating the bedload. This proved the main hypothesis of the paper (Section “Calculation of bedload transport rate”; mutual reinforcement between LSPIV and SBM) and the new approach. Interestingly, when the cutoff was around the D10 of the physical laboratory sample (Conevski et al. 2020), the D50 of the image processing reached a good match with the laboratory D50. It has to be noted that grainsizes detected and calculated here, by image-processing, are theoretically slightly different than the physically sieved grainsizes (i.e., in the video, one individual grain will represent itself with several different diameters [D] as it rolls in front of the camera). Considering this and our assumption of spherical particles (3.3.), which in itself leads to uncertainties, it was decided to not investigate this further in this paper, but rather at a later step.

Effect of the Gaussian filter

It was mentioned before that a strong, isotropic 2D Gaussian filter, with a standard deviation of 8, was introduced to the sample videos during preprocessing. This type of filter is frequently used in image processing for smoothing noises. In this study, the goal of using this filter was to reduce the noisy effect of suspended sediment particles, bubbles and changes in lighting. The initial value of 8 (marked in Fig. 23 with red) used in this study was selected by visual check of the foreground segmentation. A test run was performed neglecting the use of this filter, i.e., the raw footage was analyzed. It was shown that by not using the filter, the foreground detection became oversensitive and pixel patches that apparently belonged together, fell apart. This was due to the higher range of variance, as the crispy, high quality pixels suffered frequent intensity changes as the visible suspended particles were transported between the camera lenses and the bed. As a result, the binary images were also noisier and a high number of small particles were detected and calculated with low, even zero velocities, leading eventually to a significant underestimation of the bedload transport rate.

Effect of the video resolution

Lastly, the effect of the video resolution was also assessed by decreasing the original values to 50%, 75%, and 87.5%, respectively. The method was apparently not influenced by lower image quality (Fig. 24). This result suggests that the computational time can be drastically decreased, providing a more efficient algorithm. This, together with the previous point, at the same time, might suggest that the introduced method benefits from lower image quality.

Treatment of disturbed conditions (EXP1, EXP2)

The sensitivity analysis procedure in the previous points was performed for the EXP3 experiment, with the highest bedload transport rate. As introduced earlier, two other datasets were avaiable (EXP1 and EXP2), representing lower transport rates. The videos related to these experiments, however, were still significantly noisy, in contrast with EXP3, even after the Gaussian filter. As mentioned before, without any sort of filtering, the suspended sediments and air bubbles (well visible in the video footages) appeared as additional momentums (high or lower velocities coupled with large surfaces) in the calculations, resulting in QEXP1 = 36 g/s and QEXP2 = 230 g/s bedload transport rates, significantly higher than their laboratory results (Table 1).

For treating these false detections, we decided to exploit a novelty of the developed algorithm, i.e., the corresponding size—velocity data pairs and introduce a statistical filtering. We used the MATLAB DistFit application to find which type of distribution fits the best for: (a) detected particle diameter (size) data and (b) detected velocity data. Based on literature, both bedload velocities (Einstein 1950, Conevski et al. 2020) and grainsize distribution (Csoma and Szigyártó 1975) should follow gamma distributions. This was further confirmed after fitting several distibutions on our data and checking the log-likelihood values. As the next step, we examined the Joint Probability Function of the velocity and diameter data (Fig. 25).

The hypothesis was that the appearance probability of suspended sediment and bubble particles is low (and of different nature and distribution); thus, a threshold joint probability can likely be used for filtering them out. For this, the integral of the multiplication of the Probability Density Function (PDF) gamma distributions was used:

where Γ: gamma function, θ: scale parameter of the velocity gamma distribution, k: shape parameter of the velocity gamma distribution, κ: scale parameter of the diameter gamma distribution, m: shape parameter of the velocity gamma distribution, p: joint probability, di: diameter of particle I, vi: velocity of particle I, x: coordinate x; diameter, y: coordinate y; velocity corresponding to the given x.

In fact, by solving Eq. 1, we define a curve along which the coupled diameter-velocity data have the same constant joint probability. The distribution parameters were given by MATLAB DisFit for the dataset, respectively. The analysis was carried out by first, assuming a Dmax [mm] value with v = 0 m/s (i.e., the threshold size above which no movement is expected) and then using Eq. 1 to calculate p [%] value, corresponding to this data pair. With this p [%] value and Eq. 1, the iso-probability curve was calculated for the dataset (i.e., the curve along which the diameter-velocity pairs have the same joint probability). By omitting the data with lower joint probability than p (i.e., outside of the curve; outliers), the bedload transport rate was finally calculated. The diameter-velocity points of the original datasets of EXP2 and EXP1 can be seen on the left sides of Figs. 26 and 27, respectively. High momentum particles, indicated with red, represent unrealistic behavior, considering the known characteristic grain diameter (from sieving) and known characteristic grain velocities (from LSPIV). Indeed, based on the known Dmax (14 mm in this study) values, the threshold for the expected joint probability can be given. For EXP2 and EXP1, the resulted joint probabilities were pEXP2 = 0.7%, pEXP1 = 0.05%, respectively. Higher joint probability value at EXP2 can be explained with the stronger flow conditions (see the hydraulic conditions in Table 1). After filtering both datasets using this Dmax value, the unrealistic, high momentum data could be excluded (see the right sides of Figs. 26 and 27). Based on the filtered datasets, we calculated the bedload transport rate for EXP1 and EXP2. Moreover, the sensitivity of applying the joint probability based filter on the Dmax value was also tested, as some uncertainty can arise in the determination of this parameter. Accordingly, the bedload transport rate was estimated for the Dmax range of 12–14–15 mm.

EXP2. A The 2D (top-view) plane of Fig. 25 with color-weighting. The colors represent the momentum of the given particle, with red being the highest, and blue the lowest. The rare values with very high diameter and velocity are unrealistic at the studied experimental conditions. These were most probably the results of suspended sediment and/or air bubbles passing by. The black dashed curve represents the pEXP2 = 0.7% threshold. On its left, the data had higher, while on its right higher joint probabilities. B Dataset after the statistical filtering. Only data with higher joint probability than pEXP2 remained. Dmax = 14 mm

Results

As a summary, Fig. 28 shows the bedload transport rate at the three different hydraulic conditions calculated by the method introduced in this study. For all the three experiments, i.e., EXP1, EXP2 and EXP3 the same input parameter setup was used (see Section “Input parameters” for the reference values). As discussed in Section “Treatment of disturbed conditions (EXP1, EXP2)”, the joint probability filter with Dmax = 14 mm was used for the experiments with lower transport rates (EXP1 and EXP2). The lowest transport rate of EXP1 could be captured by the image-based method and showed satisfactory agreement with the laboratory measurement (QVBT = 0.44 g/s, Qlab = 1.11 g/s). At EXP2, the match was also satisfactory (3% difference). There was no need to consider the influence of bubbles and suspended sediment in EXP3. The VBT calculated satisfactory result (less than 2% difference) with the chosen REF input parameters, as it was shown in Section “Sensitivity analysis”. It was also shown that the Dmax value has to be further investigated and chosen carefully, as the result was found to be sensitive to it. Figure 28 shows the scale of this sensitivity analysis at EXP1 and EXP2. During the analysis, Dmax values between 12 and15 mm were tested.

Bedload transport rates calculated by the image-processing method of the study. For the lower transport rates (EXP1, EXP2), the joint probability filter was used, with Dmax = 14 mm (green square symbols). Other Dmax values (12–15 mm) were also tested and the range of their results is also shown with scales of probability sensitivity. At the highest yield (EXP3), applying this filter was unnecessary; therefore, the result from the tested REF input parameter setup (see Section “Input parameters”) is shown

Discussion

In the past few decades, several image processing methods were developed for sediment bedload measurements. These methods are based on either Eulerian (measuring sediment fluxes; Radice et al. 2006; Conevski et al. 2019), or Lagrangian (tracking individual grains and trajectories; Papanicolaou et al. 1999; Radice 2017; Tauro et al. 2019) approach. While each of them had their advantages, they all had weaknesses and lacked certain information of the bedload (Sabzi et al. 2017). Besides, the techniques introduced were difficult to adapt in the field (Conevski et al. 2020). Recently, it was also suggested that the Eulerian and Lagrangian approach should be combined and used together to fully understand the bedload phenomenon, as both techniques provide unique information unlike the other (Ballio et al. 2018; Ancey and Pascal 2020). Following these critics, we developed a new image processing approach for bedload transport rate measurements by adapting existing image processing approaches (LSPIV and Statistical Background Modelling) from other research fields. Combining them in an innovative way led to a methodology which has both Eulerian and Lagrangian characteristics, called Video-based Bedload Tracker (VBT). The VBT enabled to retrieve the individual velocity-grain size data pairs of all moving gravel particles. Videos of three laboratory experiments (gravel bedload) of different hydraulic parameters were analyzed with the VBT.

Two sets of the videos (for EXP1 and EXP2) suffered from significant suspended sediment load and air bubbles, biasing the grain detection algorithms. This problem is also expected in the field; however according to our experiences (see e.g., Ermilov et al. 2019), these disrupting particles in larger rivers, besides suspended sediment, are rather leaves and branches. To account for bubbles, Sabzi et al. (2017) set a maximum pixel intensity threshold and other morphological operations. However, the assumption of bubbles having higher intensities might not always be the case. For example, leaves and branches can mix and be transported together with the bedload (having the same distance from the light source). We developed a more general approach here, applying statistical filtering which considers the expected Dmax value, but also the distributions of bedload sediment sizes and velocities. It relies on the hypothesis that these noises appear as low joint probabilities in the velocity-grain size datasets and they should not fit the gamma distributions neither of the diameter, nor of the velocities. Threshold values of the joint probability, based on the maximum grain diameter, were defined. Data with lower probability than the threshold were neglected from the bedload transport rate calculation.

The applied Statistical Background Modelling (SBM) has the advantage of separating the foreground (moving particles) from the background (immobile riverbed) adaptively (i.e., the background refreshes with the given timestep). This opens up the possibility to retrieve undisturbed images of the bed, even if there is movement on it and separately analyze them with image processing techniques (e.g., Buscombe 2013; Ermilov et al. 2020).

The Large-Scale Particle Image Velocimetry (LSPIV) method was chosen for this study partially due to the earlier experience of the authors. Furthermore, previous studies in sedimentation successfully applied it on dune movements (Muste et al. 2016; Tsubaki et al. 2018; You et al. 2021), leading the authors of this paper to believe in its applicability for estimating particle velocities as well. In this study, an in-house LSPIV code was used (Fleit and Baranya 2019), which provided access and control over every step of the velocity calculations. This freedom enabled the authors to focus on the novelty of this paper, i.e., implementing the SBM in such environment and testing the VBT approach. While the implementation of LSPIV requires subjective decisions (e.g., size of the Interrogation Area), the abovementioned reasons and the general robustness of cross-correlation methods (CCMs) provided solid ground for choosing it over other, possible approaches (e.g., Optical Flow (OF); Horn and Schunk 1981). Nevertheless, the use of Optical Flow should be considered in future studies, since this approach generally provides smoother velocity distributions with higher spatial resolution and in a computationally more efficient way. Moreover, a recent example showed that the combination of OF and CCM successfully exploited the benefits of both methods (You et al. 2022). The present VBT method could be definitely improved with adapting such approach.

The detailed sensitivity analysis performed in the study supported understanding the role of the input parameters. The number of learning frames, the learning rate and the initial variance proved to be the most sensitive parameters. Meanwhile, the video framerate, the PIV interrogation area and the Gaussian filter were less sensitive. The background ratio, the number of Gauss curves, the PIV gridsize, the pixel connectivity, the minimal cutoff particle size and the video resolution were non-sensitive. The discussed parameters of this study could be handled by: (1) visually evaluating the result images, which is actually a great advantage of image processing techniques (Agudo et al. 2017) or (2) using default or literature-suggested values (MATLAB 2011). Furthermore, this study is also aimed to provide guidelines for future works.

The method showed very good agreement with the laboratory observations; moreover, it is robust, at least for the herein analyzed bedload transport conditions. It is expected that the studied range of bedload transport conditions, i.e., (1) the range of the incipient motion (EXP1 and EXP2) and (2) the continuous particle motion (EXP3) can be well revealed in the field too. However, at very high bedload transport rates in gravel bed rivers, or at sand bedload, where the sediment movement is rather a multilayer transport and the particles show complex dynamics, the method has yet to be tested. It may be possible that at such conditions, other Eulerian approaches, focusing on the fluxes (e.g., Muste et al. 2016; Blanckaert et al. 2017; Conevski et al. 2020), have to be used instead.

Conclusions

A proof-of-concept of a novel, image-based method for the quantification of bedload transport was introduced in this paper and tested in laboratory environment. The algorithm is based on two main pillars: (1) a Statistical Background Model (SBM) to separate the moving particles, i.e., the bedload on the series of images and (2) a Large-Scale Particle Image Velocimetry (LSPIV) to analyze the dynamics of the moving particles. Combining the two approaches, the bedload transport rate could eventually be estimated. A thorough sensitivity analysis performed on the relevant parameters proved that the method is robust; however, special attention has to be paid to filtering the suspended sediment particles and bubbles. For this, we proposed a physical and mathematical filtering technique, which considers the expected range of the particle momentums. Also, the algorithm showed sensitivity to parameters such as the number of frames (Section “Effect of the video framrate”), the learning rate (Section “Effect of the learning rate”) and the initial variance (Section “Effect of the initial mixture model variance”), but all of them can be reasonably estimated by eye observation of the bedload transport. Overall, the developed image based method is foreseen to be a good alternative of bedload transport measurements in gravel bed rivers, especially at the range of the incipient particle motion and weak transport.

References

Abraham D, Pratt TC, Sharp J (2010) Measuring bedload transport on the Missouri River using time sequenced bathymetric data. In: 2nd Joint Federal Interagency Conference, Las Vegas, NV

Adrian R (1991) Particle-imaging techniques for experimental fluid-mechanics. Annu Rev Fluid Mech 23(1991):261–304. https://doi.org/10.1146/annurev.fluid.23.1.261

Agudo JR, Luzi G, Han J, Hwang M, Lee J, Wierschem A (2017) Detection of particle motion using image processing with particular emphasis on rolling motion. Rev Sci Instrum 88(5):051805. https://doi.org/10.1063/1.4983054

Ancey C, Pascal I (2020) Estimating mean bedload transport rates and their uncertainty. J Geophys Res Earth Surf. https://doi.org/10.1029/2020JF005534

Ballio F, Pokrajac D, Radice A, Hosseini Sadabadi SA (2018) Lagrangian and Eulerian description of bed load transport. J Geophys Res Earth Surf 123:384–408. https://doi.org/10.1002/2016JF004087

Blanckaert K, Heyman JH, Rennie CD (2017) Measurments of bedload sediment transport with an acoustic doppler velocity profiler(ADVP). J Hydraul Eng 143(6):04017008

Bouwmans T, Baf F, Vachon B (2010) Statistical background modeling for foreground detection a survey.In: Handbook of pattern recognition and computer. World Scientific Publishing

Bouzerdoum A, Beghdadi A (2010). On the analysis of background subtraction techniques using Gaussian Mixture Models.In: Conference Paper in Acoustics, Speech, and Signal Processing, 1988. ICASSP-88., 1988 International Conference on April 2010. DOI: https://doi.org/10.1109/ICASSP.2010.5495760

Buscombe Daniel (2013) Transferable wavelet method for grain-size distribution from images of sediment surfaces and thin sections, and other natural granular patterns. Sedimentology 60(7):1709–1732. https://doi.org/10.1111/sed.12049

Conevski S, Guerrero M, Ruther N, Rennie C (2019) Laboratory investigation of apparent bedload velocity measured by ADCPs under different transport conditions. J Hydraul Eng 145:04019036. https://doi.org/10.1061/(ASCE)HY.1943-7900.0001632

Conevski S, Guerrero M, Rennie CD, Ruther N (2020) Towards an evaluation of bedload transport characteristics by using doppler and backscatter outputs from ADCPs. J Hydraul Res 59(5):703–723. https://doi.org/10.1080/00221686.2020.1818311

Csoma, J, Szigyártó, Z (1975) A matematikai statisztika alkalmazása a hidrológiában (in English: The application of mathematical statistics in hydrology). Book, pp.341–357; Vízügyi Dokumentációs és Tájékoztató Iroda, Budapest

Detert, M, Liekai, C, Ismail, A (2019). Airborne image velocimetry measurements at the hydropower plant Schiffmühle on Limmat river, Switzerland. Proc. In: 2nd Int. Symp. Exhib. Hydro-Environment Sensors Software, HydroSenSoft, pp. 211–217

Dinehart RL (2002) Bedform movement recorded by sequential single-beam surveys in tidal rivers. J Hydrol 258(1–4):25–39. https://doi.org/10.1016/S0022-1694(01)00558-3

Drake TG, Shreve RL, Dietrich WE, Whiting PJ, Leopold LB (1988) Bedload transport of fine gravel observed by motion-picture photography. J Fluid Mech 192(1988):193–217. https://doi.org/10.1017/S0022112088001831

Duffy, GP (2006). Bedform migration and associated sand transport on a banner bank: application of repetitive multibeam surveying and tidal current measurement to the estimation of sediment transport. Ph.D. thesis, The University of New Brunswick, Fredericton, Canada

Einstein, H (1937). Der Geschiebetrieb als Wahrscheinlichkeitproblem (Bedload transport as a probability problem) ((English translation by W. W. Sayre, in Sedimentation (Symposium to honor H. A. Einstein, edited by H. W. Shen), Fort Collins, Colorado, 1972, C1–C105). Zurich: ETHZ

Einstein, H (1950). The bed-load function for sediment transportation in open channel flows. Technical Bulletins No. 1026. Washington, DC: US Dept. of Agriculture

Engel P, Lau YL (1981) Bed Load Discharge Coefficient. Hydraul Div 107:1445–1454

Ermilov AA, Baranya S, Török GT (2020) Image-based bed material mapping of a large river. Water 2020(12):916. https://doi.org/10.3390/w12030916

Ermilov AA, Fleit G, Zsugyel M, Baranya S, Török GT (2019). Video based bedload transport analysis in gravel bed rivers. Geophysical Research Abstracts. Vol. 21, EGU2019–15071, 2019. EGU General Assembly

Fleit G, Baranya S (2019) An improved particle image velocimetry method for efficient flow analyses. Flow Meas Instrum 69:101619

Fujita I, Muste M, Kruger A (1998) Large-scale particle image velocimetry for flow analysis in hydraulic engineering applications. J Hydraul Res 36(3):397–414. https://doi.org/10.1080/00221689809498626

Gaweesh M, Van Rijn L (1994) Bed-load sampling in sand-bed rivers. J Hydraul Eng 120(12):164–1384. https://doi.org/10.1061/(ASCE)0733-9429(1994)120:12(1364)

Latosinski FG, Szupiany RN, Guerrero M, Amsler ML, Vionnet C (2017) The ADCP’s bottom track capability for bedload prediction: Evidence on method reliability from sandy river applications. Flow Meas Instrum 54:124–135

Hayman E, Eklundh J (2003) Statistical Background Subtraction for a Mobile Observer. In: Proceedings of the Ninth IEEE International Conference on Computer Vision (ICCV 2003) 2-Volume Set 0–7695–1950–4/03 $17.00

Honkanen M, Nobach H (2005) Background extraction from double-frame PIV images. Exp Fluids 38(3):348–362. https://doi.org/10.1007/s00348-004-0916-x

Horn BKP, Schunck BG (1981) Determining optical flow. Artif Intell 17(1–3):185–203. https://doi.org/10.1016/0004-3702(81)90024-2

Huang PX, Boom BJ, Fisher RB (2015) Hierarchical classification with reject option for live fish recognition. Mach Vis App 26(1):89–102. https://doi.org/10.1007/s00138-014-0641-2

Hubbell DW (1964). Apparatus and techniques for measuring bedload. USGS. United States Government Printing Office. https://doi.org/10.3133/wsp1748

Hubbell D (1987). Bed load sampling and analysis, in Sediment transport in gravel bed rivers. Edited by C. Thorne, J. Bathurst, and R. Hey, pp.89–106, John Wiley and Sons Ltd., Chichester

KaewTraKulPong P, Bowden R (2002) An improved adaptive background mixture model for real-time tracking with shadow detection. In: Remagnino P, Jones GA, Paragios N, Regazzoni CS (eds) Video-based surveillance systems. Springer, Boston, pp 135–144. https://doi.org/10.1007/978-1-4615-0913-4_11

Kostaschuk RA, Church MA, Luternauer JL (1989) Bedforms, bed material, and bedload transport in a salt-wedge estuary: fraser river. Br Columbia Can J Earth Sci 26:1440–1452

Langmann B, Ghobadi SE, Hartmann K, Loffeld O (2010). Multi-modal background subtraction using Gaussian mixture models. In: Paparoditis N, Pierrot-Deseilligny M, Mallet C, Tournaire . (Eds), IAPRS, Vol. XXXVIII, Part 3A – Saint-Mandé, France, Sep 1–3

Lee D-S (2005) Effective gaussian mixture learning for video background subtraction. IEEE Trans Pattern Anal Mach Intell 27(5):827–832

Liu Y, Métivier F, Lajeunesse É, Lancien P, Narteau C, Ye B, Meunier P (2008). Measuring bedload in gravel-bed mountain rivers: averaging methods and sampling strategies. In: Conference Paper in Geodinamica Acta–April 2008. DOI: https://doi.org/10.3166/ga.21.81-92

Dengsheng L, Mausel P, Brondizio E, Moran E (2004) Change detection techniques. Int J Remote Sens 25(12):2365–2401

MATLAB (2011). https://www.mathworks.com/help/vision/ref/vision.foregrounddetector-system-object.html

Muste M, Ho HC, Kim D (2011) Considerations on direct stream flow measurements using video imagery: outlook and research needs. J Hydro Environ Res 5(2011):289–300. https://doi.org/10.1016/j.jher.2010.11.002

Muste M, Fujita I, Hauet A (2008) Large scale particle image velocimetry for measurements in riverine environments. Spec Issue Hydrol Measure Water Resour Res 44(4):19. https://doi.org/10.1029/2008WR006950

Muste M, Baranya S, Tsubaki R, Kim D, Ho H, Tsai H, Law D (2016) Acoustic mapping velocimetry. Water Resour Res 52(5):4132–4150. https://doi.org/10.1002/2015WR018354

Papanicolaou A, Diplas P, Balakrishnan M, Dancey C (1999) Computer vision technique for tracking bed load movement. J Comput Civ Eng 13(2):71–79. https://doi.org/10.1061/(ASCE)0887-3801(1999)13:2(71)

Parker, G (2004). 1D sediment transport morphodynamics with applications to rivers and turbidity currents. St. Anthony Falls Laboratory, Mississippi River at 3rd Avenue SE

Power WP, Schoones JA (2002) Understanding Background Mixture Models for Foreground Segmentation. In: Proceedings Image and Vision Computing New Zealand 2002. University of Auckland, Auckland, New Zealand 26–28th November 2002

Radice A, Malavasi S, Ballio F (2006) Solid transport measurements through image processing. Exp Fluids 41(5):721–734. https://doi.org/10.1007/s00348-006-0195-9

Radice A, Sarkar S, Ballio F (2017) Image-based Lagrangian Particle tracking in bed-load experiments. J Visual Exp. https://doi.org/10.3791/55874

Recking A, Liébault F, Peteuil C, Jolimet T (2012) Testing bedload transport equations with consideration of time scales. Earth Surf Proc Land 37:774–789

Rennie CD, Villard PV (2004) Site specificity of bedload measurement using an ADCP. J Geophys Res. https://doi.org/10.1029/2003JF000106)

Sabzi AK, Hasanzadeh RPR, Chegini AHN (2018) An Image-based object tracking technique for identification of moving sediment grains on bed deposits. KSCE J Civil Eng 22(4):1170–1178. https://doi.org/10.1007/s12205-017-0576-z

Scofield TP, Belfort J, Churnside JH, Roddewig MR, Shaw JA, Whitaker BM (2021) Applying Gaussian Mixture Models to Detect Fish from Airborne LiDAR Measurements.In: 2021 IEEE Research and Applications of Photonics in Defense Conference (RAPID), 2021, pp. 1–2, doi: https://doi.org/10.1109/RAPID51799.2021.9521457

Stauffer C, Grimson WEL (1999) Adaptive background mixture models for real-time tracking. In: Proceedings. 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), 1999, pp. 246–252 Vol. 2, doi: https://doi.org/10.1109/CVPR.1999.784637

Tauro F, Piscopia R, Grimaldi S (2019) PTV-stream: a simplified particle tracking velocimetry framework for stream surface flow monitoring. CATENA 172(2019):378–386. https://doi.org/10.1016/j.catena.2018.09.009

Tsubaki R, Baranya S, Muste M, Toda Y (2018) Spatio-temporal patterns of sediment particle movement on 2D and 3D bedforms. Exp Fluids 59(93):14p

Willert CE, Gharib M (1991) Digital particle image velocimetry. Exp Fluid 10(1991):181–193. https://doi.org/10.1007/BF00190388

Yadav G, Maheshwari S, Agarwal A (2014) Contrast limited adaptive histogram equalization based enhancement for real time video system. In: 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI). DOI: https://doi.org/10.1109/ICACCI.2014.6968381

You H, Kim D, Muste M (2022) High-gradient pattern image velocimetry (HGPIV). Adv Water Resour 159(104092):13p

You H, Muste M, Kim D, Baranya S (2021) Considerations on acoustic mapping velocimetry (AMV) application for in-situ measurement of bedform dynamics. Front Water 140(3):715308

Zhang C, Liu X, Xu Q (2018) Mixed Gaussian Model Based Video Foreground Object Detection. CSAE '18. In: Proceedings of the 2nd International Conference on Computer Science and Application Engineering. October 2018 Article No.: 112Pages 1–5. https://doi.org/10.1145/3207677.3278023

Zitouni MS, Bhaskar H, Al-Mualla M (2016) Robust Background Modeling and Foreground Detection using Dynamic Textures. In: Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2016) - Volume 4: VISAPP, pages 403–410. ISBN: 978–989–758–175–5. DOI: https://doi.org/10.5220/0005724204030410

Funding

Open access funding provided by Budapest University of Technology and Economics. The first author acknowledges the support of the ÚNKP-20–3 New National Excellence Program of the Ministry for Innovation and Technology, and the National Research, Development and Innovation Fund, Hungary. The second author acknowledges the support of the ÚNKP-21–4 New National Excellence Program of the Ministry for Innovation and Technology from the source of the National Research, Development and Innovation Fund. The fifth author acknowledges the support of Bolyai János fellowship of the Hungarian Academy of Sciences. The research reported in this paper and carried out at BME has been supported by the NRDI Fund TKP2021 based on the charter of bolster issued by the NRDI Office under the auspices of the Ministry for Innovation and Technology (project id: BME-NVA-02). The authors acknowledge the funding of the OTKA FK 128429 Grant.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Edited by Dr. Michael Nones (CO-EDITOR-IN-CHIEF).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visithttp://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ermilov, A.A., Fleit, G., Conevski, S. et al. Bedload transport analysis using image processing techniques. Acta Geophys. 70, 2341–2360 (2022). https://doi.org/10.1007/s11600-022-00791-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11600-022-00791-x