Abstract

Solving convex semi-infinite programming (SIP) problems is challenging when the separation problem, namely, the problem of finding the most violated constraint, is computationally hard. We propose to tackle this difficulty by solving the separation problem approximately, i.e., by using an inexact oracle. We focus on two algorithms for SIP, namely the cutting-planes (CP) and the inner-outer approximation (IOA) algorithms. If the objective function is strongly convex, we prove that the CP algorithm converges at a rate in O(1/k), where k is the number of calls to the limited-accuracy oracle. Compared to the CP algorithm, the IOA algorithm has the advantage that its iterates are feasible. For a semi-infinite program whose separation problem is a Quadratically Constrained Quadratic Programming problem, we prove that the IOA algorithm converges toward an optimal solution of the SIP problem despite the oracle’s inexactness.

1 Introduction

Standard semi-infinite programming (SIP) problems are optimization problems with a finite number of variables and infinitely many constraints. These constraints are indexed by a continuous parameter that takes values in a given parameter set. Given two integers \(m,n \in \mathbb {N}^+\), we consider two continuous functions \(F :\mathbb {R}^m \rightarrow \mathbb {R}\) and \(G :\mathbb {R}^m \times \mathbb {R}^n \rightarrow \mathbb {R}\). Furthermore, we consider two non-empty compact sets \(\mathcal {X} \subset \mathbb {R}^m\) and \(\mathcal {Y} \subset \mathbb {R}^n\). The SIP problem on which we focus is formulated as

$$\min_{x \in \mathcal{X}} \; F(x) \quad \text{s.t.} \quad G(x,y) \le 0, \quad \forall y \in \mathcal{Y}, \qquad (\textsf{SIP})$$

with \(\phi (x) = \max _{y \in \mathcal {Y}} G(x,y)\) denoting the value function of the maximization problem in y, which we refer to as the lower-level problem or separation problem in the rest of the paper. The infinitely many constraints \( G(x,y) \le 0, \,\forall y \in \mathcal {Y}\) in (\(\textsf{SIP}\)) can be reformulated as the single constraint \(\phi (x) \le 0\), where \(\phi \) is continuous by a direct application of the Maximum Theorem [2, Th. 2.1.6]. This paper is restricted to the following convex setting for (\(\textsf{SIP}\)).

Assumption 1

The non-empty and compact set \(\mathcal {X}\) is convex, the function F(x) is convex and the function \(G(\cdot ,y)\) is linear for every \(y \in \mathcal {Y}\).

We emphasize that we make no convexity assumption on the set \(\mathcal {Y}\) or on the function \(G(x, \cdot )\). This setting also covers the case where \(G(\cdot ,y)\) is affine for every \(y \in \mathcal {Y}\). Because of the infinite number of constraints, SIP problems are challenging optimization problems [11, 18, 34], and several solution methods have been developed in the literature. Whenever the inner problem is convex and regular, it can be replaced by its first-order KKT optimality conditions [13, 17, 35], which yields a problem with complementarity constraints. However, this approach does not apply to general nonconvex lower-level problems. Iterative discretization methods [19, 28, 31, 36] are a valid alternative: they replace the infinitely many constraints with finitely many ones, by approximating the infinite parameter set with a finite subset. The original SIP problem is thus transformed into a sequence of finite optimization problems, which can be solved with standard optimization techniques. By refining the discretization and solving these finite problems iteratively, one approaches an optimal solution of the original SIP problem. The convergence rate of the error between the SIP solution and the solution of each discretized program depends on the order of the solution and on the choice of the grid points [36]. The classical Blankenship and Falk’s algorithm [3, 4, 37] is a discretization method in which the finite subset of constraints is enlarged at each iteration by adding the most violated constraint. For convex SIP problems, it corresponds to Kelley’s algorithm [22], also known as the Cutting-Planes (CP) algorithm. In exchange methods [17, 40], at every iteration, some new constraints are added and some old constraints may be deleted.
Recently, [32] proposed an adaptive discretization method based on Blankenship and Falk’s CP algorithm [4], which exhibits a local quadratic rate of convergence to a SIP stationary point. In [6], a convergent inner-outer approximation (IOA) algorithm is introduced to solve convex SIP problems by combining a CP approach and a lower-level dualization approach. To handle the infinite number of constraints, these methods call an optimization algorithm that evaluates \(\phi (x)\), i.e., solves the lower-level problem, for specific values of x. However, this may be computationally difficult, and assuming that it is solved exactly is not always realistic. We therefore assume that an inexact separation oracle (i.e., a black-box algorithm) is used to compute a feasible point of the lower-level problem with a relative optimality gap of at most \(\delta \in [0,1)\). More precisely, for every \(x \in \mathcal {X},\) this “\(\delta \)-oracle” computes \(\hat{y}(x) \in \mathcal {Y}\) and an upper bound \(\hat{v}(x) \ge \phi (x)\) such that \( \hat{v}(x)- G(x,\hat{y}(x)) \le \delta \, |\phi (x) |.\) Since \(\hat{y}(x) \in \mathcal {Y}\) implies \(G(x,\hat{y}(x)) \le \phi (x)\), the following inequalities hold:

$$G(x,\hat{y}(x)) \le \phi (x) \le \hat{v}(x) \le G(x,\hat{y}(x)) + \delta \, |\phi (x)|. \qquad (1)$$
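To make the δ-oracle concrete, the following Python sketch (illustration only, not the authors' code) implements a hypothetical oracle when \(\mathcal {Y}\) is a finite set, which is compact and therefore fits the setting above, with the toy choice \(G(x,y) = x_1 \cos y + x_2 \sin y\). Because \(\mathcal {Y}\) is finite, the sketch can afford to compute \(\phi (x)\) exactly and return \(\hat{v}(x) = \phi (x)\); it then deliberately returns the first candidate within the relative gap rather than the maximizer. The names `delta_oracle` and `satisfies_ineq_1` are hypothetical.

```python
import math
from typing import Callable, Sequence, Tuple


def delta_oracle(g: Callable[[float], float], ys: Sequence[float],
                 delta: float) -> Tuple[float, float]:
    """Hypothetical delta-oracle for phi = max_{y in ys} g(y) over a finite set ys.

    Returns (y_hat, v_hat) with v_hat >= phi and v_hat - g(y_hat) <= delta * |phi|.
    Since ys is finite, v_hat = phi is computed exactly here; a practical oracle
    would instead return a certified upper bound, e.g. from a relaxation.
    """
    values = [g(y) for y in ys]
    phi = max(values)
    # Deliberately return the first candidate within the relative gap,
    # not necessarily the maximizer (the maximizer always qualifies).
    for y, val in zip(ys, values):
        if phi - val <= delta * abs(phi):
            return y, phi
    raise AssertionError("unreachable")


def satisfies_ineq_1(g_hat: float, phi: float, v_hat: float, delta: float,
                     tol: float = 1e-12) -> bool:
    """Check G(x, y_hat) <= phi(x) <= v_hat(x) <= G(x, y_hat) + delta * |phi(x)|."""
    return (g_hat <= phi + tol and phi <= v_hat + tol
            and v_hat <= g_hat + delta * abs(phi) + tol)


# Toy data (hypothetical): G(x, y) = x1 cos(y) + x2 sin(y), Y = {0, 1, ..., 90} degrees.
x = (1.0, 1.0)
ys = [math.radians(d) for d in range(91)]


def g(y: float) -> float:
    return x[0] * math.cos(y) + x[1] * math.sin(y)


y_hat, v_hat = delta_oracle(g, ys, delta=0.5)
print(y_hat, v_hat, satisfies_ineq_1(g(y_hat), v_hat, v_hat, 0.5))
```

With δ = 0.5, the oracle returns \(\hat{y} = 0\) (where G equals 1) although the maximizer is \(y = \pi /4\) (where \(\phi (x) = \sqrt{2}\)); inequalities (1) still hold because \(\sqrt{2} - 1 \le 0.5\sqrt{2}\).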

The literature on convex optimization algorithms using an inexact oracle is extensive. In [7], the fast gradient method proposed in [26] is extended to inexact gradient computations. In [9], the general concept of inexact oracle for convex problems is introduced and applied to first-order methods, i.e., primal, dual, and fast gradient methods. The classical gradient methods (primal and dual) can be seen as slow but robust with respect to (w.r.t.) oracle errors, whereas the fast gradient method is faster but sensitive to such errors. In [8], the same authors propose the intermediate gradient method, which combines the classical and fast gradient methods and whose parameter can be tuned to balance the convergence rate against the accumulation of oracle errors. Both stochastic and deterministic errors in the oracle information are considered in [12]. In the literature on convex min-max problems (which can be seen as a particular type of SIP problem), the oracle’s inexactness is rarely considered. The approximate solution of the inner problem has been explored in [14,15,16], in the context of bundle algorithms. For nonsmooth convex SIP problems, [27] proposes another bundle method in which the inner problem is solved by an inexact oracle. For general SIP problems, the algorithms proposed in [10, 25] allow for suboptimal solutions of the involved subproblems, i.e., the lower-level, the upper bounding and the lower bounding problems. Indeed, the method of [25], which solves restrictions of discretization-based relaxations, only assumes that the subproblems are solved accurately enough to determine definitively whether a given iterate is feasible. Similarly, in [10], which combines the algorithms of [25] and [38], the subproblems are assumed to be solvable to an arbitrary absolute optimality tolerance, which is adjusted as needed according to a refinement scheme.
Two other adaptive discretization algorithms for convex SIP problems, based on the same restriction idea as [25], are proposed in [30]; they come with finite termination guarantees for any prescribed precision, again even if the inner problems are solved only approximately.

Compared to these earlier works, the contribution of this paper is to (i) prove a global rate of convergence for Blankenship and Falk’s CP algorithm [4] with an inexact separation oracle, when the objective function is strongly convex; (ii) show that the IOA algorithm, again despite the inexactness of the oracle, generates a sequence of feasible points converging to an optimum. In other words, this paper extends the convergence results for the CP and IOA algorithms from [6] to the case of an inexact separation oracle and to more general separation problems. For the CP algorithm with inexact oracle, we prove, under specific assumptions, a global rate of convergence for both the optimality gap and the feasibility error. For the dualization approach and the IOA algorithm, we follow the steps of [6] in a broader setting. Indeed, we consider a lower-level problem that is a Quadratically Constrained Quadratic Programming (QCQP) problem rather than a Quadratic Programming (QP) problem; we propose a restriction of this SIP problem, which is a reformulation when its lower level is convex; we revisit the sufficient condition proposed in [6], which may be verified a posteriori on a solution of this restriction to check whether it is in fact optimal for the original SIP problem; finally, we present the IOA algorithm with inexact oracle and prove that it is convergent. We refer to Table 2 in Appendix A.1 for a summary of the notation used in the paper.

2 Convergence rate for the CP algorithm with inexact oracle

In the setting of convex SIP (Assumption 1) with a strongly convex objective (Assumption 2), we prove a convergence rate for the Blankenship and Falk’s CP algorithm [4] despite the inexactness of the separation oracle. For this purpose, we use Lagrangian duality to determine a dual of (\(\textsf{SIP}\)) in Subsection 2.1, and interpret this algorithm as a variant of the Frank-Wolfe algorithm applied to the dual problem of (\(\textsf{SIP}\)) in Subsection 2.2.

2.1 Lagrangian dual of the convex SIP problem

In line with Assumption 1, for every \(y \in \mathcal {Y}\), we define \(a(y) \in \mathbb {R}^m\) such that \(G(x,y)= x^\top a(y)\). As G is assumed to be continuous, \(a(\cdot )\) is continuous as well. We also define the set \(\mathcal {M} = \{ a(y) :y \in \mathcal {Y} \}\), which is compact as the image of the compact set \(\mathcal {Y}\) under a continuous map, and \(\mathcal {K} = \textsf{cone}(\mathcal {M})\), the convex cone generated by \(\mathcal {M}\). With this notation, program (\(\textsf{SIP}\)) can be cast as

$$\min_{x \in \mathcal{X}} \; F(x) \quad \text{s.t.} \quad x^\top z \le 0, \quad \forall z \in \mathcal{M}. \qquad (\mathsf{SIP'})$$

We introduce the Lagrangian function \(\mathcal {L}(x,z) = F(x) + x^\top z\), defined over \(\mathcal {X} \times \mathcal {K}\). Since \(\sup _{z \in \mathcal {K}} x^\top z\) equals 0 if \(x^\top z \le 0\) for every \(z \in \mathcal {M}\), and \(+\infty \) otherwise, we have \(\textsf{val}\) (\(\textsf{SIP}\)) = \(\min _{x \in \mathcal {X}} \sup _{z \in \mathcal {K}} \mathcal {L}(x,z)\). The Lagrangian is convex w.r.t. x and affine w.r.t. z. Since the set \(\mathcal {X}\) is compact and convex (Assumption 1) and the set \(\mathcal {K}\) is convex, Sion’s minimax theorem [33] applies and yields

$$\min_{x \in \mathcal{X}} \sup_{z \in \mathcal{K}} \mathcal{L}(x,z) = \sup_{z \in \mathcal{K}} \min_{x \in \mathcal{X}} \mathcal{L}(x,z). \qquad (2)$$

The dual function is \(\theta (z) = \min _{x \in \mathcal {X}} \mathcal {L}(x,z)\), and the dual optimization problem is

$$\sup_{z \in \mathcal{K}} \; \theta(z). \qquad (\textsf{DSIP})$$

With this definition, Equation (2) states that there is no duality gap between (\(\textsf{SIP}\)) and its dual (\(\textsf{DSIP}\)), i.e., \(\textsf{val}\) (\(\textsf{SIP}\)) = \( \textsf{val}\) (\(\textsf{DSIP}\)).

Assumption 2

The function F(x) is \(\mu \)-strongly convex.

Lemma 1

Under Assumptions 1-2, the dual function \(\theta (z)\) is differentiable, with gradient \(\nabla \theta (z) = \arg \min \limits _{x \in \mathcal {X}} \mathcal {L}(x,z)\). The gradient \(\nabla \theta (z)\) is \(\frac{1}{\mu }\)-Lipschitz continuous.

Proof

Proof in Appendix A.2. \(\square \)

We underline that, due to the strong convexity of \(\mathcal {L}(x,z)\) with respect to x, its set of minimizers over \(\mathcal {X}\) is a singleton, which we identify with its unique element.

Lemma 2

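Under Assumptions 1 and 2, for every \(y,z \in \mathcal {K}\) and every \(\gamma \ge 0\),

$$\theta(y + \gamma z) \ge \theta(y) + \gamma \, \nabla\theta(y)^\top z - \frac{\gamma^2}{2\mu} \Vert z \Vert^2. \qquad (3)$$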

Proof

The proof follows that of [6, Lemma 3.4]: the inequality is the standard quadratic lower bound satisfied by a concave function whose gradient is \(\frac{1}{\mu }\)-Lipschitz continuous (Lemma 1). \(\square \)
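Lemmas 1 and 2 can be checked numerically on a hypothetical instance that is not taken from the paper: \(F(x) = \frac{\mu }{2}\Vert x - c \Vert ^2\) on a box \(\mathcal {X} = [-2, 2]^2\). Minimizing \(\mathcal {L}(x,z)\) over the box is then separable, so \(\nabla \theta (z) = \arg \min _{x \in \mathcal {X}} \mathcal {L}(x,z)\) is the componentwise clipping of \(c - z/\mu \). The Python sketch below samples random points and reports the largest finite-difference error of this gradient formula and the largest violations of the \(\frac{1}{\mu }\)-Lipschitz bound and of the inequality of Lemma 2. Random sampling is evidence, not a proof, and all names and constants are illustrative assumptions.

```python
import math
import random

MU = 2.0               # hypothetical strong-convexity modulus mu
C = (1.0, -0.5)        # hypothetical center c in F(x) = mu/2 * ||x - c||^2
LO, HI = -2.0, 2.0     # hypothetical box X = [LO, HI]^2


def grad_theta(z):
    """argmin_{x in X} F(x) + x^T z, i.e. nabla theta(z) by Lemma 1 (componentwise clip)."""
    return [min(HI, max(LO, ci - zi / MU)) for ci, zi in zip(C, z)]


def lagrangian(x, z):
    return 0.5 * MU * sum((xi - ci) ** 2 for xi, ci in zip(x, C)) + sum(xi * zi for xi, zi in zip(x, z))


def theta(z):
    """Dual function theta(z) = min_{x in X} L(x, z)."""
    return lagrangian(grad_theta(z), z)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def axpy(alpha, u, v):
    """Return v + alpha * u."""
    return [b + alpha * a for a, b in zip(u, v)]


def worst_violations(trials=2000, seed=0, h=1e-6):
    """Largest finite-difference error, Lipschitz-bound violation and Lemma 2 violation."""
    rng = random.Random(seed)
    fd_err = lip_gap = lemma2_gap = -math.inf
    for _ in range(trials):
        y = [rng.uniform(-6.0, 6.0) for _ in range(2)]
        z = [rng.uniform(-6.0, 6.0) for _ in range(2)]
        gamma = rng.uniform(0.0, 3.0)
        g = grad_theta(y)
        for i in range(2):                      # central differences of theta at y
            e = [h if j == i else 0.0 for j in range(2)]
            fd = (theta(axpy(1.0, e, y)) - theta(axpy(-1.0, e, y))) / (2 * h)
            fd_err = max(fd_err, abs(fd - g[i]))
        diff_g = axpy(-1.0, grad_theta(z), g)   # nabla theta(y) - nabla theta(z)
        diff_z = axpy(-1.0, z, y)               # y - z
        lip_gap = max(lip_gap, math.sqrt(dot(diff_g, diff_g)) - math.sqrt(dot(diff_z, diff_z)) / MU)
        lhs = theta(axpy(gamma, z, y))          # theta(y + gamma z)
        rhs = theta(y) + gamma * dot(g, z) - gamma ** 2 * dot(z, z) / (2 * MU)
        lemma2_gap = max(lemma2_gap, rhs - lhs)
    return fd_err, lip_gap, lemma2_gap


print(worst_violations())
```

The last two reported values should be nonpositive up to rounding, and the first one small, which is what Lemmas 1 and 2 predict for this instance.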

We prove now that we can replace the sup operator with the max operator in the formulation (\(\textsf{DSIP}\)), under the following additional assumption.

Assumption 3

There exists \(\hat{x} \in \mathcal {X}\), such that \(\hat{x}^\top z < 0\) for all \(z \in \mathcal {M}\), i.e., \(\hat{x}^\top a(y) < 0\) for all \(y \in \mathcal {Y}\).

Lemma 3

Under Assumptions 1–3, problem (\(\textsf{DSIP}\)) admits an optimal solution.

Proof

Proof in Appendix A.3. \(\square \)

2.2 The CP algorithm with inexact oracle and its dual interpretation

The CP algorithm (Algorithm 1) is a variant of Blankenship and Falk’s algorithm [4] that allows for approximate solutions of the separation problems and for more flexibility in the finite set of constraints \(\mathcal {M}^k\) maintained at iteration k, starting from \(\mathcal {M}^0 = \emptyset \). The master problem at iteration k,

$$\min_{x \in \mathcal{X}} \; F(x) \quad \text{s.t.} \quad x^\top z \le 0, \quad \forall z \in \mathcal{M}^k, \qquad (R_k)$$

is a relaxation of problem (\(\mathsf {SIP'}\)) since \(\mathcal {M}^k \subset \textsf{conv}(\mathcal {M})\) by construction, and every constraint \(x^\top z \le 0\) with \(z \in \textsf{conv}(\mathcal {M})\) is implied by the constraints of (\(\mathsf {SIP'}\)). We compute a primal solution \(x^k\) of this relaxation (\(R_k\)) and the Lagrange multipliers \((\lambda ^k_z)_{z\in \mathcal {M}^k}\) associated with the constraints \(x^\top z \le 0\) for \(z\in \mathcal {M}^k\). The existence of these Lagrange multipliers is discussed in Remark 1. The point \(x^k\) is then passed to the \(\delta \)-oracle, which solves the separation problem \(\max \limits _{y \in \mathcal {Y}} \, G(x^k,y) = \max \limits _{y \in \mathcal {Y}} \, a(y)^\top x^k\) approximately and returns \(y^k\) as well as \(\xi ^k:= a(y^k) \in \mathcal {M}\). The finite set of constraints used at iteration \(k+1\) is obtained by adding \(\xi ^k\) to a set \(\mathcal {B}^k\), which may be \(\mathcal {M}^k\) itself or any subset of \(\textsf{conv}(\mathcal {M}^k)\) such that \(z^k = \sum _{z \in \mathcal {M}^k} \lambda ^k_z z\) belongs to \(\textsf{cone}(\mathcal {B}^k)\). For a given tolerance \(\epsilon \ge 0\), the algorithm stops as soon as \((\xi ^k)^\top x^k \le \epsilon \).

We highlight some particular choices of the set \(\mathcal {B}^k\) used in Step 5 of Algorithm 1:

-

The set \(\mathcal {B}^k\) may be equal to \(\mathcal {M}^k\) at every step.

-

The set \(\mathcal {B}^k\) may be the set of atoms \(z \in \mathcal {M}^k\) such that \(\lambda ^{k}_z > 0\), in which case Step 5 amounts to dropping all the inactive constraints.

-

The set \(\mathcal {B}^k\) may be a subset of \(\textsf{conv}(\mathcal {M}^k)\) of size at most M, and such that \(z^k \in \textsf{cone}(\mathcal {B}^k)\). For \(M=2\), e.g., we can take \(\mathcal {B}^k = \left\{ \frac{1}{\sum \limits _{z\in \mathcal {M}^{k}} \lambda ^{{k}}_z} \sum \limits _{z\in \mathcal {M}^{k}} \lambda ^{{k}}_z z \right\} \). In this case, following what is known as the constraint-aggregation approach [23], we obtain a bounded-memory algorithm.

-

Intermediate approaches are also possible, in which only the constraints that have remained inactive for a given number of iterations are dropped. Such strategies are also covered by the framework of Algorithm 1.
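The Python sketch below runs Algorithm 1 with the simplest choice \(\mathcal {B}^k = \mathcal {M}^k\) on a hypothetical two-dimensional instance that is not from the paper: \(F(x) = \frac{1}{2}\Vert x - c \Vert ^2\) with \(c = (1,1)\) (so \(\mu = 1\)), \(\mathcal {X} = [-2,2]^2\), \(a(y) = (\cos y, \sin y)\), and \(\mathcal {Y}\) a finite grid of angles in \([0, \pi /2]\). For this instance, the master problem (\(R_k\)) is the Euclidean projection of c onto a polygon, which `project_2d` solves by brute-force enumeration of candidate points; this only makes sense in dimension two and stands in for a proper QP solver. The δ-oracle `delta_oracle` is a hypothetical one that returns the first grid point whose value is within the relative gap δ. No Lagrange multipliers are needed because no constraint is ever dropped.

```python
import math
from itertools import combinations


def project_2d(c, halfspaces, tol=1e-9):
    """Euclidean projection of c onto the polygon {x : a.x <= b for (a, b) in halfspaces}.

    Brute force, for illustration in R^2 only: the projection is c itself, the projection
    of c onto one constraint line, or the intersection of two constraint lines, so we keep
    the feasible candidate closest to c. Assumes the polygon is non-empty.
    """
    def feasible(x):
        return all(a[0] * x[0] + a[1] * x[1] <= b + tol for a, b in halfspaces)

    cands = [tuple(c)]
    for a, b in halfspaces:                                 # projections onto single lines
        s = (a[0] * c[0] + a[1] * c[1] - b) / (a[0] ** 2 + a[1] ** 2)
        cands.append((c[0] - s * a[0], c[1] - s * a[1]))
    for (a1, b1), (a2, b2) in combinations(halfspaces, 2):  # vertices (Cramer's rule)
        det = a1[0] * a2[1] - a1[1] * a2[0]
        if abs(det) > 1e-12:
            cands.append(((b1 * a2[1] - a1[1] * b2) / det, (a1[0] * b2 - b1 * a2[0]) / det))
    return min((x for x in cands if feasible(x)),
               key=lambda x: (x[0] - c[0]) ** 2 + (x[1] - c[1]) ** 2)


def delta_oracle(x, ys, delta):
    """Hypothetical delta-oracle: first y in ys with G(x, y) >= phi(x) - delta * |phi(x)|."""
    vals = [x[0] * math.cos(y) + x[1] * math.sin(y) for y in ys]   # G(x, y) = x^T a(y)
    phi = max(vals)
    return next(y for y, v in zip(ys, vals) if phi - v <= delta * abs(phi))


def cutting_planes(c, ys, delta, eps=1e-3, box=2.0, max_iter=100):
    """Algorithm 1 with B^k = M^k for F(x) = 0.5 * ||x - c||^2 and X = [-box, box]^2."""
    box_cons = [((1.0, 0.0), box), ((-1.0, 0.0), box), ((0.0, 1.0), box), ((0.0, -1.0), box)]
    cuts = []                                               # M^k, starting from M^0 = {}
    history = []
    for _ in range(max_iter):
        x = project_2d(c, box_cons + [(z, 0.0) for z in cuts])   # solve (R_k)
        history.append(x)
        y = delta_oracle(x, ys, delta)
        xi = (math.cos(y), math.sin(y))                     # xi^k = a(y^k)
        if xi[0] * x[0] + xi[1] * x[1] <= eps:              # stopping test
            break
        cuts.append(xi)                                     # M^{k+1} = M^k + {xi^k}
    return x, history


ys = [math.radians(d) for d in range(91)]                   # hypothetical finite Y in [0, pi/2]
x_final, history = cutting_planes((1.0, 1.0), ys, delta=0.5)
print(len(history), x_final)
```

On this instance, the optimal solution is \(x^* = (0,0)\) (the projection of c onto the nonpositive orthant), and the run above stops after four master problems at that point. One can also check that \(z^* = c = (1,1)\), \(R = 1\) and \(\tau = \sqrt{2}\), so that with δ = 0.5 the constant in the bound (4) equals 16; the test below checks the iterates against it.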

We now present a dual interpretation of Algorithm 1, as done in [6] for the case of an exact oracle. For every iteration k, we define the following restriction of (\(\textsf{DSIP}\)):

$$\sup_{z \in \textsf{cone}(\mathcal{M}^k)} \theta(z). \qquad (D_k)$$

Lemma 4 states that the restriction (\(D_k\)) of the problem (\(\textsf{DSIP}\)) may be seen as the Lagrangian dual of the relaxation (\(R_k\)) of (\(\mathsf {SIP'}\)) solved at iteration k.

Lemma 4

Under Assumptions 1–3, problems (\(R_k\)) and (\(D_k\)) form a pair of primal-dual problems and \(\textsf{val}\)(\(R_k\)) = \(\textsf{val}\)(\(D_k\)). There exists a pair of optimal primal-dual solutions \((x^k, z^k)\), and, for every such pair, \(x^k = \nabla \theta (z^{k})\).

Proof

Proof in Appendix A.4. \(\square \)

Having shown the existence of a dual optimal solution \(z^k\), we can rigorously define the Lagrange multipliers \(\lambda _z^k\) used in Algorithm 1 as follows.

Remark 1

The decomposition of \(z^k \in \textsf{cone}(\mathcal {M}^k)\) as a conic combination of elements of \(\mathcal {M}^k\) yields the Lagrange multipliers \((\lambda ^k_z)_{z \in \mathcal {M}^k}\) invoked at Step 3 of Algorithm 1.

Based on Lemma 4, we deduce that, during the execution of Algorithm 1, the dual sequence \(z^k\) coincides with the iterates of a cone-constrained fully corrective Frank-Wolfe (FCFW) algorithm [24] applied to the dual problem (\(\textsf{DSIP}\)). The primal and dual interpretations of each step of the generic iteration k are presented in Table 1.

2.3 Convergence rate for the CP algorithm with inexact oracle

We define the constant \(R= \sup _{z \in \mathcal {M}} \Vert z \Vert = \sup _{z \in \textsf{conv}(\mathcal {M})} \Vert z \Vert \). According to Lemma 3, problem (\(\textsf{DSIP}\)) admits an optimal solution \(z^* \in \mathcal {K}\). We define \(\tau = \inf \{ t \ge 0 :z^* \in t \, \textsf{conv}(\mathcal {M}) \}\), which is finite since \(\mathcal {K} = \textsf{cone}(\mathcal {M}) = \bigcup _{t \ge 0} t \, \textsf{conv}(\mathcal {M})\). The scalar \(\tau \) plays a central role in the convergence rate analysis of the CP algorithm with inexact oracle conducted in the following theorem. An interesting direction for future research is to find an efficient way to estimate (an upper bound on) \(\tau \) without computing \(z^*\).
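When \(\mathcal {M}\) is replaced by a finite set of points \(m_i\), the scalar \(\tau \) is the gauge of \(\textsf{conv}(\mathcal {M})\) at \(z^*\), i.e., the value of the linear program \(\min \{ \sum _i \nu _i : \sum _i \nu _i m_i = z^*, \ \nu \ge 0 \}\). The hypothetical Python helper below evaluates this LP in dimension two by enumerating basic solutions (at most two nonzero weights) and then computes the constant \(C = \frac{2R^2\tau ^2}{\mu (1-\delta )^2}\) used in the proof of Theorem 5. It is an illustration only: it assumes \(z^*\) is known, which, as noted above, is usually not the case in practice.

```python
import math
from itertools import combinations


def gauge_2d(target, points, tol=1e-12):
    """tau = inf{t >= 0 : target in t * conv(points)} for a finite set of points in R^2.

    Solves min sum(nu) s.t. sum_i nu_i * p_i = target, nu >= 0, by enumerating basic
    solutions (at most two nonzero weights). Returns math.inf if target is not in
    cone(points). Illustration only: O(N^2) and restricted to dimension two.
    """
    tx, ty = target
    if math.hypot(tx, ty) <= tol:
        return 0.0
    best = math.inf
    for px, py in points:                                   # one nonzero weight: target = nu * p
        pp = px * px + py * py
        if pp > tol and abs(px * ty - py * tx) <= tol * max(1.0, math.hypot(tx, ty)):
            nu = (px * tx + py * ty) / pp
            if nu >= 0.0:
                best = min(best, nu)
    for (px, py), (qx, qy) in combinations(points, 2):      # two nonzero weights (Cramer's rule)
        det = px * qy - py * qx
        if abs(det) <= tol:
            continue
        nu_p = (tx * qy - ty * qx) / det
        nu_q = (px * ty - py * tx) / det
        if nu_p >= -tol and nu_q >= -tol:
            best = min(best, nu_p + nu_q)
    return best


def rate_constant(points, z_star, mu, delta):
    """Return (C, tau, R) with C = 2 R^2 tau^2 / (mu (1 - delta)^2) and R = max ||p||."""
    R = max(math.hypot(px, py) for px, py in points)
    tau = gauge_2d(z_star, points)
    return 2.0 * R ** 2 * tau ** 2 / (mu * (1.0 - delta) ** 2), tau, R


# Hypothetical data: a(y) = (cos y, sin y) on a 1-degree grid of [0, pi/2], z* = (1, 1).
pts = [(math.cos(math.radians(d)), math.sin(math.radians(d))) for d in range(91)]
C, tau, R = rate_constant(pts, (1.0, 1.0), mu=1.0, delta=0.5)
print(C, tau, R)
```

Here the best representation of \(z^* = (1,1)\) uses the single point at 45 degrees, which gives \(\tau = \sqrt{2}\); splitting \(z^*\) over two unit vectors can only increase the total weight.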

Theorem 5

Under Assumptions 1–3, denoting by \(x^*\) an optimal solution of (\(\mathsf {SIP'}\)), if Algorithm 1 executes iteration \(k \in \mathbb {N}\), then

$$F(x^*) - F(x^k) \le \frac{2 R^2 \tau^2}{\mu (1-\delta)^2} \, \frac{1}{k+2}. \qquad (4)$$

Proof

We define the optimality gap \(\Delta _k = F(x^*) - F(x^k) = \textsf{val}\)(\(\mathsf {SIP'}\)) \(- F(x^k)\). We emphasize that at each iteration k, \( \theta (z^{k}) = F(x^k)\), thus \(\Delta _k\) may also be seen as the optimality gap in the dual problem (\(\textsf{DSIP}\)), i.e., \(\Delta _k = \textsf{val}\)(\(\textsf{DSIP}\)) \(- F(x^k) = \theta (z^*) - \theta (z^{k})\). We prove the inequality (4) by induction. When \(\tau = 0\) (i.e., 0 is a dual optimal solution), inequality (4) holds trivially. Indeed, for every k, 0 is a feasible point of the dual problem of (\(R_k\)), thus \(\theta (0) \le \theta (z^k)\). Since \(\theta (z) = \min _{x \in \mathcal {X}} F(x) + x^\top z \), \(\theta (0) = \min _{x \in \mathcal {X}} F(x) = F(x^*).\) Combining this with the fact that, for every k, \(F(x^k) \le F(x^*)\) and \(\theta (z^k) = F(x^k)\), we have: \(F(x^k) \le F(x^*) = \theta (0) \le \theta (z^k) = F(x^k)\). Thus, \(F(x^k) = F(x^*)\), and inequality (4) holds. We assume now that \(\tau > 0\).

Base case (k = 0).

Since \(\theta \) is concave, \(\Delta _0 = \theta (z^*) - \theta (z^0) \le \nabla \theta (z^0)^\top (z^* - z^0) = \nabla \theta (z^0)^\top z^*,\) with the last equality following from \(z^0 = 0\) (as \(\mathcal {M}^0 = \emptyset \)). We remark that \(\nabla \theta (z^0)^\top z^* = (\nabla \theta (z^0)-\nabla \theta (z^*))^\top z^*\) since \(\nabla \theta (z^*)^\top z^* = 0\) by optimality of \(z^*\). Hence, \(\Delta _0 \le (\nabla \theta (z^0) - \nabla \theta (z^*))^\top z^* \le \Vert \nabla \theta (z^0) - \nabla \theta (z^*) \Vert \, \Vert z^* \Vert \) by the Cauchy-Schwarz inequality. Using the \(\frac{1}{\mu }\)-Lipschitz continuity of \(\nabla \theta \) (Lemma 1), we know that \(\Vert \nabla \theta (z^0) - \nabla \theta (z^*) \Vert \le \frac{1}{\mu } \Vert z^0 - z^* \Vert = \frac{1}{\mu } \Vert z^* \Vert \). Since \(z^* \in \tau \, \textsf{conv}(\mathcal {M})\) implies \(\Vert z^* \Vert \le R\tau \), we obtain \(\Delta _0 \le \frac{1}{\mu } \Vert z^* \Vert ^2 \le \frac{(R\tau )^2}{\mu } \le \frac{(R\tau )^2}{(1-\delta )^2\mu }\), as \(1-\delta \in (0,1]\).

Induction.

We suppose that the algorithm executes iteration \(k+1\), i.e., the stopping condition is not met at iteration k, and we assume that property (4) holds for k. Since \(z^k \in \textsf{cone}(\mathcal {B}^k)\) and \(\mathcal {M}^{k+1} = \mathcal {B}^k \cup \{ \xi ^k \}\), we deduce that \(z^k + \gamma \xi ^k \in \textsf{cone}(\mathcal {M}^{k+1})\) for every \(\gamma \ge 0\), implying \(\theta (z^{k+1}) \ge \theta (z^k + \gamma \xi ^k)\). Moreover, since \(z^k, \xi ^k \in \mathcal {K}\), Lemma 2 yields a lower bound on the progress made during iteration \(k+1\):

$$\theta(z^{k+1}) \ge \theta(z^k + \gamma \xi^k) \ge \theta(z^k) + \gamma \, \nabla\theta(z^k)^\top \xi^k - \frac{\gamma^2}{2\mu} \Vert \xi^k \Vert^2, \qquad (5)$$

for every \(\gamma \ge 0\). Multiplying by \(-1\), adding \(\theta (z^*)\) to both sides of the above inequality, and using \(\Vert \xi ^{k} \Vert \le R\), we obtain

$$\Delta_{k+1} \le \Delta_k - \gamma \, \nabla\theta(z^k)^\top \xi^k + \frac{R^2}{2\mu} \gamma^2, \qquad (6)$$

for every \(\gamma \ge 0\). In addition, by concavity of \(\theta ,\) \(\Delta _k = \theta (z^*) - \theta (z^{k}) \le \nabla \theta (z^{k})^\top (z^* - z^{k})\). Note that \(\nabla \theta (z^k)^\top z^k = 0\), which follows from the first-order optimality condition at \(t = 1\) for the differentiable function \(\alpha (t) = \theta (t z^k)\), \(t \ge 0\). Indeed, \(\alpha '(1) =(\nabla \theta (z^k))^\top z^k = 0\) because (i) \(t = 1\) maximizes \(\alpha \), since \(z^k \in \underset{z \in \textsf{cone}(\mathcal {M}^k)}{\text {argmax}} \theta (z)\) and \(t z^k \in \textsf{cone}(\mathcal {M}^k)\) for every \(t \ge 0\), and (ii) 1 lies in the interior of the domain \([0, +\infty )\) of \(\alpha \).

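Thus, \( \Delta _k \le \nabla \theta (z^k)^\top z^*\). As \(z^* \in \tau \, \textsf{conv}(\mathcal {M})\),

$$\Delta_k \le \nabla\theta(z^k)^\top z^* \le \tau \max_{z \in \textsf{conv}(\mathcal{M})} \nabla\theta(z^k)^\top z = \tau \max_{y \in \mathcal{Y}} \nabla\theta(z^k)^\top a(y) = \tau \, \phi(x^k), \qquad (7)$$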

where the last equality follows from \(\nabla \theta (z^k) = x^{k}\) and from the definition of the value function \(\phi (x) = \max _{y \in \mathcal {Y}} x^\top a(y)\). The stopping criterion \(a(y^k)^\top x^k = (\xi ^k)^\top x^k \le \epsilon \) is not met at the end of iteration k, since iteration \(k+1\) is executed. Therefore, \(\phi (x^{k}) \ge a(y^k)^\top x^k > \epsilon \ge 0\). Inequality (1) yields \(\phi (x^k)- G(x^k,y^k) \le \delta \phi (x^k)\), i.e., \((1-\delta )\phi (x^k) \le G(x^k,y^k) = (x^k)^\top a(y^k) = \nabla \theta (z^k)^\top \xi ^k\). Therefore, as \(\tau > 0\), we deduce from Eq. (7) that

$$\nabla\theta(z^k)^\top \xi^k \ge (1-\delta) \, \phi(x^k) \ge \frac{1-\delta}{\tau} \, \Delta_k. \qquad (8)$$

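Combining Eqs. (6) and (8), we obtain \( \Delta _{k+1} \le \Delta _{k} - \gamma \frac{1-\delta }{\tau } \Delta _{k} + \frac{R^2}{2 \mu } \gamma ^2,\) for every \(\gamma \ge 0\). Setting \(\tilde{\gamma } = \gamma \frac{1-\delta }{\tau }\), which ranges over \([0, +\infty )\) as \(\gamma \) does, and factoring yields:

$$\Delta_{k+1} \le (1 - \tilde{\gamma}) \, \Delta_k + \frac{R^2 \tau^2}{2 \mu (1-\delta)^2} \, \tilde{\gamma}^2, \quad \forall \tilde{\gamma} \ge 0. \qquad (9)$$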

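Applying Eq. (9) with \(\tilde{\gamma } = \frac{2}{k+2}\), and defining \(C = \frac{ 2 R^2 \tau ^2}{ \mu (1-\delta )^2}\), we obtain:

$$\Delta_{k+1} \le \frac{k}{k+2} \, \Delta_k + \frac{C}{(k+2)^2} \le \frac{k}{k+2} \cdot \frac{C}{k+2} + \frac{C}{(k+2)^2},$$

with the second inequality coming from the application of (4), which holds for k by the induction hypothesis. Finally, we deduce that

$$\Delta_{k+1} \le \frac{C}{k+2} \cdot \frac{k+1}{k+2} \le \frac{C}{k+2} \cdot \frac{k+2}{k+3} = \frac{C}{k+3},$$

where the last inequality follows from the observation that \(\frac{k+1}{k+2} \le \frac{k+2}{k+3}\). Hence, property (4) also holds for \(k+1\). This concludes the proof. \(\square \)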
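The arithmetic of the induction can be sanity-checked with exact rational numbers. The hypothetical helper below iterates the recursion obtained from (9) with \(\tilde{\gamma } = \frac{2}{k+2}\), namely \(\Delta _{k+1} = \frac{k}{k+2}\Delta _k + \frac{C}{(k+2)^2}\), starting from the base-case bound \(\Delta _0 = C/2\) and treating every inequality as an equality (the worst case allowed by the proof). It then confirms \(\Delta _k \le \frac{C}{k+2}\) on a finite range of indices; this checks the algebra only and is not a substitute for the proof.

```python
from fractions import Fraction


def worst_case_gaps(C, k_max):
    """Iterate Delta_{k+1} = (k/(k+2)) Delta_k + C/(k+2)^2 exactly, from Delta_0 = C/2."""
    C = Fraction(C)
    gaps = [C / 2]
    for k in range(k_max):
        gaps.append(Fraction(k, k + 2) * gaps[-1] + C / (k + 2) ** 2)
    return gaps


gaps = worst_case_gaps(16, 200)
# Bound (4): Delta_k <= C / (k + 2) for every k in the computed range.
print(all(g <= Fraction(16, k + 2) for k, g in enumerate(gaps)))
```

Exact fractions are used so that the comparison with \(\frac{C}{k+2}\) is not blurred by floating-point rounding.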

The main difference between Eq. (4) and the convergence rate of the CP algorithm with exact oracle established in [6] is the factor \(\frac{1}{(1-\delta )^2}\), which accounts for the inexactness of the oracle. Furthermore, the convergence result of Theorem 5 differs from [24, Th. 2], which assumes the existence of a scalar \(t \ge 0\) such that \(\{z^*\} \cup \{ z^k\}_{k \in \mathbb {N}} \subset t\, \textsf{conv}(\mathcal {M})\). Indeed, the present analysis only uses the property \(z^* \in \tau \, \textsf{conv}(\mathcal {M})\), regardless of whether the iterates \((z^k)_{k \in \mathbb {N}}\) also belong to this set. Therefore, the objective error estimate in Eq. (4) does not depend on the particular sequence \((z^k)_{k \in \mathbb {N}}\) resulting from the choice of the sets \(\mathcal {B}^k\) at Step 5 of Algorithm 1 (constraint management strategy).

The following theorem states that the smallest feasibility error \(\phi (x^\ell )\) among the iterates \(x^0, \dots , x^k\) is bounded above by a quantity in \(O(\frac{1}{k})\).

Theorem 6

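Under Assumptions 1–3, if Algorithm 1 executes iteration k, for \(k \ge 2\), then

$$\min_{\ell \in \{0, \dots, k\}} \phi(x^\ell) \le \frac{27 \, R^2 \tau}{4 \mu (1-\delta)^2} \, \frac{1}{k+2}. \qquad (10)$$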

Proof

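If \(\tau =0,\) Theorem 5 gives \(F(x^k) = \textsf{val}\)(\(\mathsf {SIP'}\)) \(= F(x^*)\). Since \(x^*\) is feasible for the relaxation (\(R_k\)) and F is strongly convex, \(x^k\) is the unique minimizer of (\(R_k\)); hence \(x^k = x^*\), so \(\phi (x^k) \le 0\) and inequality (10) holds trivially. We now consider the case \(\tau >0\). We keep the definition of the constant \(C = \frac{ 2 R^2 \tau ^2}{ \mu \, (1-\delta )^2}\), and we define the constants \(\alpha = \frac{2}{3}\), \(\beta = \frac{27}{8\tau }\), and \(D = k +2\). Let us suppose that

$$\phi(x^\ell) > \frac{\beta C}{D}, \quad \forall \ell \in \{0, \dots, k\}. \qquad (11)$$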

We derive a contradiction. If iteration \(k+1\) is not executed because the algorithm stopped at iteration k, we still define \(z^{k+1} = \underset{z = z^k + \gamma {\xi }^k, \gamma \ge 0 }{\text {argmax}} \theta (z)\), and \(\Delta _{k+1} = \theta (z^*) - \theta (z^{k+1}) \ge 0\). Hence, regardless of whether iteration \(k+1\) is executed or not, \(\Delta _{\ell }\) and \(\Delta _{\ell +1}\) are well defined for all \(\ell \in \lbrace 0, \dots , k \rbrace \), and we can apply Eq. (6) to deduce \(\Delta _{\ell +1} \le \Delta _{\ell } - \gamma \, \nabla \theta (z^{\ell })^\top \xi ^{\ell } + \frac{R^2}{2\mu } \gamma ^2\), for every \(\gamma \ge 0\). Equation (11) implies that \(\phi (x^\ell ) >0\), and from Eq. (1), we deduce that \(\phi (x^\ell )- G(x^\ell ,y^\ell ) \le \delta \phi (x^\ell )\), i.e., \((1-\delta )\phi (x^\ell ) \le G(x^\ell ,y^\ell ) = (x^\ell )^\top a(y^\ell ) = \nabla \theta (z^\ell )^\top \xi ^\ell \). Combining this with Eq. (11), we deduce that \(\nabla \theta (z^\ell )^\top \xi ^\ell > \frac{(1 -\delta )\beta C}{D}\), and therefore, for every \(\gamma > 0\), \(\Delta _{\ell +1} < \Delta _{\ell } - \gamma \, \frac{(1 -\delta )\beta C}{D} + \frac{R^2}{2\mu } \gamma ^2\). Applying this inequality with \(\gamma = \frac{2\tau }{(1-\delta )(\ell +2)} > 0\) and using the definition of C, we obtain

$$\Delta_{\ell+1} < \Delta_\ell - \frac{2 \tau \beta C}{D \, (\ell+2)} + \frac{C}{(\ell+2)^2}. \qquad (12)$$

We define \(k_{\min } = \lceil \alpha D \rceil - 2\), and we notice that \(k_{\min } \ge 0\), since \(D\ge 4\). Furthermore, for every \(\ell \in \{ k_{\min }, \dots , k \}\), \(\alpha D \le \ell + 2 \le D\). Combining this with Eq. (12), we know that, for every \(\ell \in \{ k_{\min }, \dots , k \}\),

$$\Delta_{\ell+1} < \Delta_\ell + \frac{C}{D^2} \left( \frac{1}{\alpha^2} - 2 \tau \beta \right).$$

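Summing these inequalities for \(\ell \in \{ k_{\min }, \dots , k \}\), we obtain

$$\Delta_{k+1} < \Delta_{k_{\min}} + \frac{C \, (k + 1 - k_{\min})}{D^2} \left( \frac{1}{\alpha^2} - 2 \tau \beta \right),$$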

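and, using the bound on the objective gap at iteration \(k_{\min }\) given by Theorem 5, we have \(\Delta _{k+1} < \frac{C}{k_{\min } + 2} + \frac{C (k +1 - k_{\min } )}{D^2}(\frac{1}{\alpha ^2} - 2\tau \beta )\). We notice that \(k_{\min } + 2 \ge \alpha D\), and \( k + 1 - k_{\min } \ge (1-\alpha ) D\). By definition of \(\alpha \) and \(\beta \), \(\frac{1}{\alpha ^2} - 2\tau \beta = \frac{9}{4}- \frac{27}{4} \le 0\), and thus

$$\Delta_{k+1} < \frac{C}{\alpha D} + \frac{C \, (1-\alpha)}{D} \left( \frac{1}{\alpha^2} - 2 \tau \beta \right) = \frac{C}{\alpha D} \left( 1 + \alpha (1-\alpha) \left( \frac{1}{\alpha^2} - 2 \tau \beta \right) \right).$$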

Using again that \(\alpha = \frac{2}{3}\), we deduce that \(\Delta _{k+1} < \frac{C}{\alpha D}(\frac{3}{2} - \frac{4}{9} \tau \beta )\). Since \(\beta = \frac{27}{8\tau }\), we have \((\frac{3}{2} - \frac{4}{9} \tau \beta ) = 0\). Therefore, we obtain \(\Delta _{k+1} < 0\), which contradicts the definition of \(\Delta _{k+1}\). We can conclude that the assumption at Eq. (11) cannot hold, and there exists \(\ell \in \{0, \dots , k\}\) such that \(\phi (x^\ell ) \le \frac{\beta C}{D} = \frac{27 \, R^2 \tau }{4 \mu (1-\delta )^2} \, \frac{1}{k+2}\). \(\square \)
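The constants and index bounds used in the proof of Theorem 6 can be double-checked with exact arithmetic. The short Python check below (illustration only; the function names are hypothetical) verifies, for a few positive values of \(\tau \), that \(\frac{1}{\alpha ^2} - 2\tau \beta = -\frac{9}{2}\) and \(\frac{3}{2} - \frac{4}{9}\tau \beta = 0\), and, for each \(k \ge 2\) in a finite range, that \(k_{\min } \ge 0\), that \(\alpha D \le \ell + 2 \le D\) for \(\ell \in \{k_{\min }, \dots , k\}\), and that \(k + 1 - k_{\min } \ge (1-\alpha ) D\).

```python
import math
from fractions import Fraction

ALPHA = Fraction(2, 3)


def constants(tau):
    """Return (1/alpha^2 - 2 tau beta, 3/2 - 4/9 tau beta) for beta = 27 / (8 tau)."""
    beta = Fraction(27, 8) / tau
    return 1 / ALPHA ** 2 - 2 * tau * beta, Fraction(3, 2) - Fraction(4, 9) * tau * beta


def index_facts_hold(k):
    """Check the index inequalities used in the proof of Theorem 6 for one k >= 2."""
    D = k + 2
    k_min = math.ceil(ALPHA * D) - 2
    window_ok = all(ALPHA * D <= ell + 2 <= D for ell in range(k_min, k + 1))
    return k_min >= 0 and window_ok and (k + 1 - k_min) >= (1 - ALPHA) * D


print(constants(Fraction(7, 5)))
print(all(index_facts_hold(k) for k in range(2, 1000)))
```

Since \(\tau \beta = \frac{27}{8}\) regardless of \(\tau \), both constants are independent of \(\tau \), which is why the contradiction \(\Delta _{k+1} < 0\) holds for every \(\tau > 0\).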

The proof of Theorem 6 is inspired by previous work on the FCFW algorithm [21], adapted to problem (\(\textsf{DSIP}\)), which is a convex optimization problem over a cone. Indeed, [21, Th. 2] considers the convergence of the duality gap of the FCFW algorithm for an optimization problem over a convex and compact domain for which a linear minimization oracle [21] is available. Yet, in problem (\(\textsf{DSIP}\)), the feasible set \(\textsf{cone}(\mathcal {M})\) is not compact, and we do not assume, as done in [24], that the iterates \((z^k)_{k\in \mathbb {N}}\) belong to the same compact set of the form \(t \, \textsf{conv}(\mathcal {M})\). Therefore, we propose an adapted result and proof for this case, which rely only on the property \(z^* \in \tau \, \textsf{conv}(\mathcal {M})\), regardless of whether the iterates \((z^k)_{k\in \mathbb {N}}\) also belong to this set.

3 IOA algorithm with inexact oracle

In general, the iterates of the CP algorithm are not feasible for problem (\(\textsf{SIP}\)): feasibility is only obtained asymptotically. To overcome this limitation, the authors of [6] proposed an IOA algorithm that generates a minimizing sequence of points that are feasible for (\(\textsf{SIP}\)), in the case where the inner problem is a QP problem solved by an exact separation oracle. We extend this algorithm to the case where the inner problem is a QCQP problem and the separation oracle is inexact. More precisely, we consider the setting defined in the following assumption.

Assumption 4

The parameterization of the SIP constraints satisfies the following:

-

Linear mappings \(x \mapsto Q(x) \in \mathbb {S}_n\), \(x \mapsto q(x) \in \mathbb {R}^n\) and \(x \mapsto b(x) \in \mathbb {R}\) exist such that

$$G(x,y) = - \frac{1}{2} y^\top Q(x) y + q(x)^\top y + b(x).$$

-

There exist \(Q^1, \dots , Q^r \in \mathbb {S}_n\), \(q^1, \dots , q^r \in \mathbb {R}^n\) and \(b_1, \dots , b_r \in \mathbb {R}\) such that

$$\mathcal {Y} = \left\{ y \in \mathbb {R}^n :\frac{1}{2} y^\top Q^j y + (q^j) ^\top y + b_j \le 0, \forall j \in \{1, \dots , r \} \right\} .$$

We also assume that a radius \(\rho \ge 0\) such that \(\mathcal {Y} \subset B(0, \rho )\) is known.

Under Assumption 4, one possible way to deal with problem (\(\textsf{SIP}\)) is what [6] calls the lower-level dualization approach, which consists of replacing the constraint involving the QCQP inner problem with one involving its dual. More precisely, we consider a strong dual of a Semidefinite Programming (SDP) relaxation of the inner problem (a reformulation if the inner problem is convex). In Subsection 3.1, we introduce the classical SDP relaxation of the inner problem (a reformulation if it is convex), regularized by a ball constraint, and then its SDP dual. In Subsection 3.2, we present the finite formulation (\(\textsf{SIPR}\)), obtained by applying the lower-level dualization approach to problem (\(\textsf{SIP}\)). This formulation is a reformulation of (\(\textsf{SIP}\)) if \(Q^1, \dots , Q^r\) are Positive Semidefinite (PSD) and Q(x) is PSD for every \(x \in \mathcal {X}\). Otherwise, one can verify the a posteriori sufficient condition introduced in Subsection 3.3 on a computed optimal solution \(\bar{x}\) of (\(\textsf{SIPR}\)): if \(\bar{x}\) satisfies this condition, it is an optimal solution of (\(\textsf{SIP}\)). If not, the IOA algorithm proposed in Subsection 3.4 generates a convergent sequence of feasible points of (\(\textsf{SIPR}\)).

3.1 SDP relaxation/reformulation of the inner problem

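In this subsection, the decision vector \(x \in \mathcal {X}\) is fixed. The corresponding inner problem \(\max _{y \in \mathcal {Y}} G(x,y)\) is the following QCQP problem

$$\max_{y \in \mathbb{R}^n} \; -\frac{1}{2} y^\top Q(x) y + q(x)^\top y + b(x) \quad \text{s.t.} \quad \frac{1}{2} y^\top Q^j y + (q^j)^\top y + b_j \le 0, \quad \forall j \in \{1, \dots , r\}. \qquad (\textsf{P}_x)$$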

We define the linear matrix operator \({\mathcal {Q}}(x) = \frac{1}{2} \begin{pmatrix} -Q(x) & q(x) \\ q(x)^\top & 2 b(x) \end{pmatrix} \in \mathbb {S}_{n+1}\), and the matrices \({\mathcal {Q}}^j = \frac{1}{2} \begin{pmatrix} Q^j & q^j \\ (q^j)^\top & 2 b_j \end{pmatrix} \in \mathbb {S}_{n+1}\), for \(j \in \{1, \dots , r\}\), the identity matrix \(I_{n+1} \in \mathbb {S}_{n+1}\), as well as \(E:= \textsf{diag}(0, \dots , 0,1) \in \mathbb {S}_{n+1}\). With this notation, we introduce the following SDP problem

Under Assumption 4, this problem is the standard Shor SDP relaxation of (\(\textsf{P}_x\)). This convex relaxation is obtained by first reformulating (\(\textsf{P}_x\)) in a lifted space with an additional rank constraint and then dropping such a constraint. If the inner problem (\(\textsf{P}_x\)) is convex, i.e., \(Q^1, \dots , Q^r\) are PSD and Q(x) is PSD for every \(x \in \mathcal {X}\), (\(\textsf{SDP}_x\)) and (\(\textsf{P}_x\)) have the same optimal value. These results are proven in the following lemma.
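The lifting behind this relaxation can be checked numerically. For \(Y = \begin{pmatrix} y \\ 1 \end{pmatrix}\begin{pmatrix} y \\ 1 \end{pmatrix}^\top \), the Frobenius inner products \(\langle {\mathcal {Q}}(x), Y \rangle \) and \(\langle {\mathcal {Q}}^j, Y \rangle \) reproduce \(G(x,y)\) and the j-th constraint defining \(\mathcal {Y}\), \(\langle E, Y \rangle = 1\), and \(\langle I_{n+1}, Y \rangle = \Vert y \Vert ^2 + 1\) (presumably the way the ball constraint enters the relaxation; treat this as an assumption). The Python sketch below uses random, hypothetical data for one fixed x; the relaxation keeps these linear expressions in Y and drops the requirement that Y be of rank one.

```python
import random


def half_block(A, a, s):
    """Return (1/2) * [[A, a], [a^T, 2 s]] as a nested list of size (n+1) x (n+1)."""
    n = len(a)
    top = [[0.5 * A[i][j] for j in range(n)] + [0.5 * a[i]] for i in range(n)]
    return top + [[0.5 * ai for ai in a] + [s]]


def lift(y):
    """Rank-one lifting Y = [y; 1][y; 1]^T."""
    v = list(y) + [1.0]
    return [[vi * vj for vj in v] for vi in v]


def frob(A, B):
    """Frobenius inner product <A, B>."""
    return sum(a * b for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def quad(A, a, s, y, sign=1.0):
    """sign * (1/2) y^T A y + a^T y + s."""
    n = len(y)
    yAy = sum(y[i] * A[i][j] * y[j] for i in range(n) for j in range(n))
    return sign * 0.5 * yAy + sum(ai * yi for ai, yi in zip(a, y)) + s


def random_sym(n, rng):
    M = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    return [[0.5 * (M[i][j] + M[j][i]) for j in range(n)] for i in range(n)]


rng = random.Random(1)
n = 3
Qx, qx, bx = random_sym(n, rng), [rng.uniform(-1, 1) for _ in range(n)], rng.uniform(-1, 1)
Qj, qj, bj = random_sym(n, rng), [rng.uniform(-1, 1) for _ in range(n)], rng.uniform(-1, 1)
y = [rng.uniform(-1, 1) for _ in range(n)]
Y = lift(y)
calQx = half_block([[-v for v in row] for row in Qx], qx, bx)   # calQ(x) for the fixed x
calQj = half_block(Qj, qj, bj)                                  # calQ^j
E = [[1.0 if i == j == n else 0.0 for j in range(n + 1)] for i in range(n + 1)]
ident = [[1.0 if i == j else 0.0 for j in range(n + 1)] for i in range(n + 1)]
# Difference between <calQ(x), Y> and G(x, y); should be zero up to rounding.
print(frob(calQx, Y) - quad(Qx, qx, bx, y, sign=-1.0))
```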

Lemma 7

Under Assumption 4, \(\textsf{val}\) (\(\textsf{SDP}_x\)) \(\ge \textsf{val}\) (\(\textsf{P}_x\)). If, moreover, Q(x), \(Q^1, \dots , Q^r\) are PSD, then \(\textsf{val}\) (\(\textsf{SDP}_x\))\(=\textsf{val}\) (\(\textsf{P}_x\)).

Proof

Proof in Appendix A.5. \(\square \)

The following SDP problem

is the dual of problem (\(\textsf{SDP}_x\)), as the following lemma states.

Lemma 8

Formulations (\(\textsf{SDP}_x\)) and (\(\textsf{DSDP}_x\)) are a primal-dual pair of SDP problems and strong duality holds, thus \(\textsf{val}\)(\(\textsf{SDP}_x\))\( = \textsf{val}\)(\(\textsf{DSDP}_x\)).

Proof

Proof in Appendix A.6. \(\square \)

3.2 SDP restriction/reformulation of the SIP problem

Leveraging Sect. 3.1, which focuses on the inner problem (\(\textsf{P}_x\)), its SDP relaxation (\(\textsf{SDP}_x\)) and the respective dual problem (\(\textsf{DSDP}_x\)), we propose a single-level finite restriction of problem (\(\textsf{SIP}\)). It is a reformulation of (\(\textsf{SIP}\)) if \(Q^1, \dots , Q^r\) are PSD, and Q(x) is PSD for every \(x \in \mathcal {X}\).

Theorem 9

Under Assumption 4, the finite formulation

is a restriction of problem (\(\textsf{SIP}\)). If \(Q^1, \dots , Q^r\) are PSD, and if Q(x) is PSD for every \(x \in \mathcal {X}\), then the finite formulation (\(\textsf{SIPR}\)) is a reformulation of (\(\textsf{SIP}\)).

Proof

Let \(\textsf{Feas}\)(\(\textsf{SIP}\)) and \(\textsf{Feas}\)(\(\textsf{SIPR}\)) be the feasible sets of (\(\textsf{SIP}\)) and (\(\textsf{SIPR}\)), respectively. Since (\(\textsf{SIP}\)) and (\(\textsf{SIPR}\)) share the same objective function, proving for every \(x\in \mathcal {X}\) the implication

will prove the first part of the theorem. For every \(x\in \mathcal {X}\), we have:

The implication in (17) stems from the fact that \(\textsf{val}\)(\(\textsf{P}_x\)) \(\le \textsf{val}\)(\(\textsf{SDP}_x\)) (Lemma 7). Applying the strong duality Lemma 8, we obtain that, for every \(x \in \mathcal {X}\),

The equivalence (20), together with the implication (17), proves the implication (16).

If \(Q^1, \dots , Q^r\) are PSD, and if Q(x) is PSD for every \(x \in \mathcal {X}\), we can replace the implication (17) by the equivalence \( \textsf{val}\)(\(\textsf{SDP}_x\)) \(\le 0 \iff \textsf{val}\)(\(\textsf{P}_x\)) \(\le 0 \iff x \in \textsf{Feas}\)(\(\textsf{SIP}\)). This, together with equivalence (20), proves that

i.e., (\(\textsf{SIPR}\)) is a reformulation of (\(\textsf{SIP}\)), having the same objective function. \(\square \)

Note that under Assumptions 1 and 4, the finite formulation (\(\textsf{SIPR}\)) is convex.

3.3 Optimality of the restriction: a sufficient condition

Theorem 9 states that the single-level finite formulation (\(\textsf{SIPR}\)) is an exact reformulation of problem (\(\textsf{SIP}\)) if \(Q^1,\dots , Q^r\) are PSD and \(Q(x) \succeq 0\) for all \(x \in \mathcal {X}\). Even if this a priori condition does not hold for all \(x\in \mathcal {X}\), an a posteriori condition on the computed solution \(\bar{x}\) of (\(\textsf{SIPR}\)), as done in [6] in a different setting, enables us to certify that \(\bar{x}\) is an optimal solution of (\(\textsf{SIP}\)). Assumptions 1 and 4 are fundamental here to ensure that the feasible sets of (\(\textsf{SIP}\)) and (\(\textsf{SIPR}\)) are closed and convex.

Theorem 10

Under Assumptions 1 and 4, and assuming \(Q^1, \dots , Q^r\) are PSD, let \(\bar{x}\) be a solution of the single-level formulation (\(\textsf{SIPR}\)). If \(Q(\bar{x}) \succ 0\), then \(\bar{x}\) is optimal in (\(\textsf{SIP}\)).

Proof

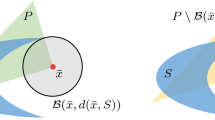

Given a closed convex set S, according to Def. 5.1.1 in [20, Chap. III], the tangent cone to S at x (denoted by \(T_S(x)\)) is the set of directions \(u \in \mathbb {R}^m\) such that there exist a sequence \((x_k)_{k \in \mathbb {N}}\) in S, and a positive sequence \((t_k)_{k \in \mathbb {N}}\) s.t. \(t_k \rightarrow 0\) and \(\frac{x_k - x}{t_k} \rightarrow u\). Moreover, according to Def. 5.2.4 in [20, Chap. III], the normal cone \(N_S(x)\) to S at x is the polar cone of the tangent cone \(T_S(x)\), i.e., \(N_S(x) = T_S(x)^\circ \). We define the closed convex set C (resp. \(\hat{C}\)) as the feasible set of formulation (\(\textsf{SIP}\)) (resp. (\(\textsf{SIPR}\))).

Since \(Q(\bar{x}) \succ 0\), the set of positive definite matrices is open, and Q(x) is continuous, there exists \(r >0\) such that \(Q(x) \succeq 0\) for all x in the open ball \(B(\bar{x},r)\) of radius r centered at \(\bar{x}\). Since the matrices \(Q^j\) are PSD, Lemma 7 then gives \(\textsf{val}\)(\(\textsf{P}_x\)) \(= \textsf{val}\)(\(\textsf{SDP}_x\)) for all x in \(\mathcal {X} \cap B(\bar{x},r)\). Hence, for every \(x \in \mathcal {X} \cap B(\bar{x},r)\), x is feasible in (\(\textsf{SIP}\)) if and only if x is feasible in (\(\textsf{SIPR}\)). In other words, \(C \cap B(\bar{x},r) = \hat{C} \cap B(\bar{x},r)\). Since, by the aforementioned definition, the tangent cone at \(\bar{x}\) only depends on the set in a neighborhood of \(\bar{x}\), we further deduce that \(T_C(\bar{x}) = T_{\hat{C}}(\bar{x})\), and \(N_C(\bar{x})= T_C(\bar{x})^\circ =T_{\hat{C}}(\bar{x})^\circ = N_{\hat{C}}(\bar{x})\).

We know that \(\bar{x}\) is optimal in (\(\textsf{SIPR}\)), i.e., \(\bar{x} \in \arg \min _{x \in \hat{C}} F(x)\). Since F is a finite-valued convex function, and \(\hat{C}\) is a closed and convex set, the assumptions of Theorem 1.1.1 in [20, Chap. VII] hold, and we can deduce that \(0 \in \partial F(\bar{x}) + N_{\hat{C}}(\bar{x})\). Using the equality \(N_C(\bar{x}) = N_{\hat{C}}(\bar{x})\), we have that \(0 \in \partial F(\bar{x}) + N_{C}(\bar{x})\) too. Applying the same theorem with the closed and convex set C, we know that \(0 \in \partial F(\bar{x}) + N_{C}(\bar{x})\) implies that \(\bar{x} \in \arg \min \limits _{x \in C} F(x)\), meaning that \(\bar{x}\) is optimal in (\(\textsf{SIP}\)). \(\square \)
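In practice, checking the a posteriori condition of Theorem 10 amounts to testing whether \(Q(\bar{x}) \succ 0\). One simple way to do so is to attempt a Cholesky factorization, which succeeds exactly for positive definite matrices. The plain-Python sketch below illustrates this test; the affine map `Q_of_x` is a hypothetical example, not taken from the paper. In floating point, passing a small positive `tol` gives a more conservative certificate.

```python
import math
from typing import List

Matrix = List[List[float]]


def is_positive_definite(A: Matrix, tol: float = 0.0) -> bool:
    """Return True iff the Cholesky factorization A = L L^T succeeds with all
    pivots > tol. With tol = 0 this tests positive definiteness of the
    symmetric matrix A (up to rounding errors)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= tol:          # non-positive pivot: not positive definite
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def Q_of_x(x):
    """Hypothetical affine map x -> Q(x), used only for illustration."""
    return [[1.0 + x[0], x[1]], [x[1], 1.0 - x[0]]]


print(is_positive_definite(Q_of_x([0.2, 0.5])))  # True: the certificate holds
print(is_positive_definite(Q_of_x([0.0, 1.0])))  # False: Q is singular there
```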

3.4 The IOA algorithm

If the lower-level problem is not convex and the sufficient optimality condition of Theorem 10 does not hold, solving the finite formulation (\(\textsf{SIPR}\)) does not directly yield an optimal solution of problem (\(\textsf{SIP}\)). In this section, we present an algorithm, based on the lower-level dualization approach presented above and on an inexact separation oracle, that generates a minimizing sequence of feasible points of problem (\(\textsf{SIP}\)).

For \(k \in \mathbb {N}_{+}\), we consider two finite sequences \(x^{1}, \dots , x^{k-1} \in \mathcal {X}\), and \(v_{1}, \dots , v_{k-1} \in \mathbb {R}\) s.t. \(v_\ell \) is an upper bound on \(\textsf{val}(\textsf{P}_{x^{\ell }})\), given by a \(\delta \)-oracle. Since, for all \(\ell = 1, \dots , k-1\), the inequality \(-\frac{1}{2}y^\top Q(x^{\ell })y +q(x^{\ell })^\top y + b(x^\ell ) \le v_\ell \) (i.e., \(\langle {\mathcal {Q}}(x^\ell ), Y \rangle \le v_\ell \) for the lifted matrix \(Y:=(y^\top , 1)^\top (y^\top , 1)\)) holds for any \(y \in \mathcal {Y}\), the following SDP problem is still a relaxation of (\(\textsf{P}_x\)), for every \(x \in \mathcal {X}\):

We recall that the value of (\(\textsf{P}_x\)) is \(\phi (x) = \max _{y \in \mathcal {Y}} G(x,y)\). For ease of reading, we also denote by \(\phi _\textsf{SDP}(x)\) the value of (\(\textsf{SDP}_x\)), and by \(\phi _\textsf{SDP}^k(x)\) the value of (\(\textsf{SDP}^k_x\)). We underline that the function \(\phi _\textsf{SDP}^k(x)\) implicitly depends on the sequences \((x^\ell )_{1\le \ell \le k-1}\) and \((v_\ell )_{1\le \ell \le k-1}\). Given the Lagrange multiplier \(\zeta _\ell \) associated with the constraint \(\langle {\mathcal {Q}}(x^\ell ), Y \rangle \le v_\ell \), the SDP dual of problem (\(\textsf{SDP}^k_x\)), for which strong duality holds, is

Applying the analog of Lemma 8 to the primal-dual pair of (\(\textsf{SDP}^k_x\))–(\(\textsf{DSDP}^k_x\)), for every \(\hat{x} \in \mathcal {X}\), \(\phi _\textsf{SDP}^k(\hat{x}) \le 0\) holds if and only if \(\hat{x} \in \mathcal {R}^k\), where \(\mathcal {R}^k\) is defined as

Proposition 11

Under Assumption 4, for all finite sequences \(x^{1}, \dots , x^{k-1} \in \mathcal {X}\), and \(v_{1}, \dots , v_{k-1} \in \mathbb {R}\) s.t. \(v_\ell \ge \phi (x^{\ell })\), the resulting set \(\mathcal {X} \cap \mathcal {R}^k\) is included in the feasible set of (\(\textsf{SIP}\)).

Proof

We apply Theorem 9 to the inner problem modified with the additional valid cuts \(\langle {\mathcal {Q}}(x^\ell ), Y \rangle \le v_\ell \; \forall \ell \in \{1,\dots ,k-1\}\), which do not change the value of the non-convex inner problem \(\phi (x)\), and, therefore, do not change the feasible set of problem (\(\textsf{SIP}\)). We deduce that \(\mathcal {X} \cap \mathcal {R}^k\) is a subset of the feasible set of (\(\textsf{SIP}\)). \(\square \)
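Proposition 11 only requires each \(v_\ell \) to be a valid upper bound on \(\phi (x^\ell )\). As an illustration, and not as the \(\delta \)-oracle of Eq. (1) (whose relative-accuracy guarantee is not reproduced here), the hypothetical oracle below handles a one-dimensional inner problem (n = 1) with \(\mathcal {Y} = [-\rho , \rho ]\). Here the scalars `Q`, `q`, `b` stand for \(Q(x^\ell ), q(x^\ell ), b(x^\ell )\). The oracle evaluates the objective on a uniform grid of spacing h. Because the objective is Lipschitz on the box, adding (Lipschitz constant) \(\times\, h/2\) to the best grid value gives a certified upper bound v. Hence the cut \(g(y) \le v\), i.e., \(\langle {\mathcal {Q}}(x^\ell ), Y \rangle \le v_\ell \) for the lifted Y, is valid for every \(y \in \mathcal {Y}\).

```python
def inner_objective(Q, q, b, y):
    """g(y) = -Q*y**2/2 + q*y + b: the objective of (P_x) when n = 1,
    with Q, q, b standing for Q(x), q(x), b(x) at a fixed x."""
    return -0.5 * Q * y * y + q * y + b


def grid_oracle(Q, q, b, rho, N):
    """Hypothetical inexact oracle for max_{|y| <= rho} g(y).

    Returns (y_hat, v): y_hat is the best point of a uniform grid with N
    intervals, and v is a certified upper bound on the true maximum, since
    every y in [-rho, rho] lies within h/2 of a grid point and g is
    Lipschitz on [-rho, rho] with constant |Q| * rho + |q|."""
    h = 2.0 * rho / N
    grid = [-rho + i * h for i in range(N + 1)]
    y_hat = max(grid, key=lambda y: inner_objective(Q, q, b, y))
    lip = abs(Q) * rho + abs(q)  # bound on |g'(y)| = |-Q*y + q| over the box
    v = inner_objective(Q, q, b, y_hat) + lip * h / 2.0
    return y_hat, v


def exact_max(Q, q, b, rho):
    """Exact value max_{|y| <= rho} g(y): the maximum is attained at an
    endpoint or at the stationary point q/Q when it lies in the interval."""
    candidates = [-rho, rho]
    if Q != 0 and -rho <= q / Q <= rho:
        candidates.append(q / Q)
    return max(inner_objective(Q, q, b, y) for y in candidates)


# Q < 0: g is convex, so its maximum over the box is attained at the boundary.
y_hat, v = grid_oracle(-2.0, 0.3, -0.1, 1.0, 10)
print(y_hat, v, exact_max(-2.0, 0.3, -0.1, 1.0))
# Q > 0: g is concave and its maximizer q/Q = 0.25 is not a grid point.
y_hat2, v2 = grid_oracle(4.0, 1.0, 0.0, 1.0, 7)
print(y_hat2, v2, exact_max(4.0, 1.0, 0.0, 1.0))
```

The bound v is loose when the grid is coarse; a loose but valid \(v_\ell \) still yields a valid cut, and only the quality of the inner approximation is affected.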

Algorithm 2 is the pseudocode of the IOA algorithm with inexact oracle. It starts by solving the restriction (\(\textsf{SIPR}\)) and checks whether the condition of Theorem 10 is satisfied. If so, the algorithm stops and returns this solution, which is optimal for both (\(\textsf{SIPR}\)) and (\(\textsf{SIP}\)). Otherwise, it performs a sequence of iterations until the stopping criteria are satisfied, i.e., \(G(x^k,y^k) \le \epsilon _1\) and \(\Vert x^k - \hat{x}^k \Vert \le \epsilon _2\). At each iteration, the convex optimization problem (21) is solved. This problem couples the minimization of F over a relaxed set with the minimization of F over a restricted set. Indeed, x belongs to an outer-approximation (relaxation), whereas \(\hat{x}\) belongs to an inner-approximation (restriction) of the feasible set of (\(\textsf{SIP}\)). The two minimizations are coupled by a proximal term that penalizes the distance between x and \(\hat{x}\). After solving the master problem (21), the lower-level problem (\(\textsf{P}_x\)) is solved, inexactly, by the \(\delta \)-oracle for \(x = x^k\). Its solution is used to restrict the outer-approximation and to enlarge the inner-approximation.
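The control flow described above can be summarized by the following Python skeleton. It is a sketch under explicit assumptions, not Algorithm 2 itself. The callable names and signatures are hypothetical, and so is the order in which the stopping test and the cut update are performed. The solvers for (\(\textsf{SIPR}\)), for the master problem (21) and for the \(\delta \)-oracle must be supplied by the user, typically through an SDP-capable convex solver. The toy stubs at the end only exercise the loop logic.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Sequence[float]
Cut = Tuple[Vector, Vector, float]  # (x^l, y^l, v_l) from past oracle calls


def ioa_loop(
    solve_restriction: Callable[[], Vector],
    is_certified: Callable[[Vector], bool],
    solve_master: Callable[[int, List[Cut]], Tuple[Vector, Vector]],
    oracle: Callable[[Vector], Tuple[Vector, float]],
    G: Callable[[Vector, Vector], float],
    eps1: float,
    eps2: float,
    max_iter: int = 1000,
) -> Tuple[Optional[Vector], int]:
    """Skeleton of the IOA loop; all callables are hypothetical placeholders.

    solve_restriction()   -> optimal solution of the restriction (SIPR)
    is_certified(x)       -> True if Q(x) is positive definite (Theorem 10)
    solve_master(k, cuts) -> (x^k, x_hat^k), a solution of master problem (21)
    oracle(x)             -> (y, v): approximate maximizer of G(x, .) and an
                             upper bound v on phi(x)
    Returns the last inner (feasible) point and the iteration count."""
    x_bar = solve_restriction()
    if is_certified(x_bar):
        return x_bar, 0  # x_bar is optimal for both (SIPR) and (SIP)
    cuts: List[Cut] = []
    x_hat: Optional[Vector] = None
    for k in range(1, max_iter + 1):
        x, x_hat = solve_master(k, cuts)
        y, v = oracle(x)
        # Stopping criteria: small violation at x^k and small outer/inner gap.
        if G(x, y) <= eps1 and math.dist(x, x_hat) <= eps2:
            return x_hat, k
        # (y, v) restricts the outer-approximation (cut on x) and enlarges the
        # inner-approximation (valid inequality <Q(x^k), Y> <= v_k).
        cuts.append((x, y, v))
    return x_hat, max_iter


# Toy stubs: the master returns x^k = 1/k and x_hat^k = 2/k, and the
# violation G(x^k, y^k) equals x^k, so both criteria hold first at k = 10.
x_ret, iters = ioa_loop(
    solve_restriction=lambda: [0.0],
    is_certified=lambda x: False,
    solve_master=lambda k, cuts: ([1.0 / k], [2.0 / k]),
    oracle=lambda x: ([0.0], 0.0),
    G=lambda x, y: x[0],
    eps1=0.105,
    eps2=0.105,
)
print(x_ret, iters)
```

Returning \(\hat{x}^k\) rather than \(x^k\) reflects that the inner iterate is feasible in (\(\textsf{SIP}\)) by Proposition 11.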

Before proving the termination and the convergence of Algorithm 2, under Assumptions 1 and 4, we introduce two technical lemmas.

Lemma 12

Under Assumptions 1 and 4, denoting by \(x^*\) an optimal solution of (\(\textsf{SIP}\)), if Algorithm 2 runs iteration k, \(F(x^k) \le F(x^*) + \mu _k(x^k-\hat{x}^k)^\top (x^* - x^k)\).

Proof

Proof in Appendix A.7. \(\square \)

Lemma 13

Consider a parameter \(\delta \in [0,1)\), the infinite sequences \((x^k)_{k \in \mathbb {N}} \subset \mathcal {X}\) and \((y^k)_{k \in \mathbb {N}}\subset \mathcal {Y}\), where \(y^k = \hat{y}(x^k)\) is the output of the \(\delta \)-oracle evaluated at point \(x^k\). If these sequences are such that, for every \(k \in \mathbb {N}\),

then, the feasibility error \(\phi (x^k)^+\) vanishes in the limit \(k\rightarrow \infty \).

Proof

Proof in Appendix A.8.\(\square \)

Before analyzing the convergence and the termination of the IOA algorithm (Theorems 14–16), we make the additional assumption that F is Lipschitz continuous, and that the Slater condition holds for the restriction (\(\textsf{SIPR}\)). However, the knowledge of this Slater point is not a prerequisite for running the IOA algorithm.

Assumption 5

F(x) is \(C_F\)-Lipschitz, and there exists \(x^S \in \mathcal {X}\) s.t. \({\phi }_{\textsf{SDP}}(x^S)< 0\).

Theorem 14 states the asymptotic convergence of Algorithm 2 if it does not terminate.

Theorem 14

Under Assumptions 1, 4, and 5, if Algorithm 2 does not stop, then it generates infinite sequences \((x^k)_{k \in \mathbb {N}_{+}}\) and \((\hat{x}^k)_{k \in \mathbb {N}_{+}}\) such that (i) \(\Vert x^k - \hat{x}^k \Vert \rightarrow 0\), and (ii) the sequence \((\hat{x}^k)_{k\in \mathbb {N}_{+}}\) of feasible points in (\(\textsf{SIP}\)) is a minimizing sequence, i.e., \(F(\hat{x}^k)\rightarrow \textsf{val}\)(\(\textsf{SIP}\)).

Proof

We start by proving that \(\phi _{\textsf{SDP}}^k(x^k)^+ \rightarrow 0\). Since \(\phi _{\textsf{SDP}}^k(x^k)^+\) is bounded, this sequence has at least one accumulation point. We show that, necessarily, every accumulation point of \(\phi _{\textsf{SDP}}^k(x^k)^+\) is 0. We take any strictly increasing map \(\psi : \mathbb {N} \rightarrow \mathbb {N}\) such that \(\phi _{\textsf{SDP}}^{\psi (k)}(x^{\psi (k)})^+ \rightarrow {h}\), and we show that \(h=0\) for every such \(\psi \). From a corollary of the Bolzano–Weierstrass theorem [1, Ex. 2.5.4], we will then conclude that \(\phi _{\textsf{SDP}}^k(x^k)^+ \rightarrow 0\).

Up to the extraction of a subsequence, we can assume, by the compactness of \(\mathcal {X}\), that \(x^{\psi (k)} \rightarrow x \in \mathcal {X}\). For \(k \in \mathbb {N}\), we define \(j = \psi (k)\) and \(\ell = \psi (k-1)\), and

as the constraint \(\langle \mathcal {Q}(x^{\ell }), Y \rangle \le v_{\ell }\) is enforced in the problem \((\textsf{SDP}^{j}_{x^{{\ell }}})\), since \(\ell \le j-1\). We denote by \(\tilde{Y}\) the solution of \(\textsf{SDP}^{j}_x\) at \(x = x^{{j}}\), so that \(\langle \mathcal {Q}(x^{{j}}), \tilde{Y} \rangle = \phi _{\textsf{SDP}}^j(x^{{j}}) \). Since \(\tilde{Y}\) is feasible in \(\textsf{SDP}^{j}_x\) at \(x = x^{{\ell }}\), \(\langle \mathcal {Q}(x^{{\ell }}), \tilde{Y} \rangle \le \phi _{\textsf{SDP}}^j(x^{{\ell }})\). Therefore, by linearity of \(\mathcal {Q}\) and the Cauchy–Schwarz inequality, \(\phi _{\textsf{SDP}}^j(x^j) - \phi _{\textsf{SDP}}^j(x^{\ell }) \le \langle \mathcal {Q}(x^j - x^{\ell }), \tilde{Y} \rangle \le \Vert \mathcal {Q}(x^j - x^{\ell }) \Vert _F \, B \), where \(B:= \max _{Y \in \textsf{Feas}(\textsf{SDP}_x)} \Vert Y \Vert _F\) is independent of j and \(\ell \); indeed, \(\tilde{Y}\) is feasible in (\(\textsf{SDP}_x\)). Denoting by \(\Vert \mathcal {Q} \Vert _{op}\) the operator norm of \(x \mapsto \mathcal {Q}(x)\), we obtain \(\phi _{\textsf{SDP}}^j(x^j) - \phi _{\textsf{SDP}}^j(x^{\ell }) \le \Vert x^j - x^\ell \Vert \, \Vert \mathcal {Q} \Vert _{op} \, B\). We combine this with Eq. (23), using the fact that the positive part is non-decreasing, to obtain

the second inequality coming from the property of the \(\delta \)-oracle (see Eq. (1)). Using the definition of \(\ell \) and j, we obtain \( 0 \le \phi _{\textsf{SDP}}^{\psi (k)}(x^{\psi (k)})^+ \le \phi (x^{\psi (k-1)})^+ (1+ \delta ) + \Vert x^{\psi (k)} - x^{\psi (k-1)} \Vert \, \Vert \mathcal {Q} \Vert _{op} \, B.\) Since \(\phi (x^{k})^+ \rightarrow 0\) (due to Lemma 13), and \(x^{\psi (k)}\) is converging, we deduce, by taking the limit, that \({h} = 0\). This holds for any \(\psi \), i.e., for any converging subsequence \(\phi _{\textsf{SDP}}^{\psi (k)}(x^{\psi (k)})^+\). We can thus conclude that \(\phi _{\textsf{SDP}}^k(x^k)^+ \rightarrow 0\) [1, Ex. 2.5.4]. Using Assumption 5, we introduce a Slater point \(x^S \in \mathcal {X}\) such that \(\phi _{\textsf{SDP}}(x^S) = -c\), for \(c > 0\). We also introduce \(\omega _k:= \phi _{\textsf{SDP}}^k(x^k)^+/(c+\phi _{\textsf{SDP}}^k(x^k)^+)\). We notice that \(\omega _k \rightarrow 0\), as \(\phi _{\textsf{SDP}}^k(x^k)^+ \rightarrow 0\). We define the convex combination \(\bar{x}_k = (1-\omega _k) x^k + \omega _k x^S\). We emphasize that \((\bar{x}_k,\bar{x}_k)\) is feasible in problem (21) at iteration k since \(\mathcal {X}\) is convex and

-

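\(\bar{x}_k\) satisfies the constraints on x: both \(x^k\) and \(x^S\) satisfy the convex constraints \(G(x, y) \le 0\) for \(y \in \mathcal {Y}^k\) (\(x^S\) is feasible in (\(\textsf{SIP}\)) by Theorem 9, since \(\phi _{\textsf{SDP}}(x^S) < 0\)), and, by convexity, so does their convex combination \(\bar{x}_k\);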

-

\(\bar{x}_k\) satisfies the constraints on \(\hat{x}\); indeed, by convexity of \(\phi _{\textsf{SDP}}^k(x)\) (as a maximum of linear functions), the following holds:

$$\begin{aligned} \phi _{\textsf{SDP}}^k(\bar{x}_k)&\le (1- \omega _k) \phi _{\textsf{SDP}}^k(x^k) + \omega _k \phi _{\textsf{SDP}}^k(x^S) \\&\le \frac{c}{c+\phi _{\textsf{SDP}}^k(x^k)^+} \phi _{\textsf{SDP}}^k(x^k) + \frac{\phi _{\textsf{SDP}}^k(x^k)^+}{c+\phi _{\textsf{SDP}}^k(x^k)^+} \phi _{\textsf{SDP}}^k(x^S). \end{aligned}$$Since \(\phi _{\textsf{SDP}}^k(x^S) \le \phi _{\textsf{SDP}}(x^S) = - c\) and \(\frac{\phi _{\textsf{SDP}}^k(x^k)^+}{c+\phi _{\textsf{SDP}}^k(x^k)^+} \ge 0\), we obtain

$$\begin{aligned} \phi _{\textsf{SDP}}^k(\bar{x}_k)\le \frac{c}{c+\phi _{\textsf{SDP}}^k(x^k)^+}\phi _{\textsf{SDP}}^k(x^k) - \frac{c}{c+\phi _{\textsf{SDP}}^k(x^k)^+}\phi _{\textsf{SDP}}^k(x^k)^+, \end{aligned}$$and thus

$$\begin{aligned} \phi _{\textsf{SDP}}^k(\bar{x}_k)\le \frac{c}{c+\phi _{\textsf{SDP}}^k(x^k)^+} \left( \phi _{\textsf{SDP}}^k(x^{k}) - \phi _{\textsf{SDP}}^k(x^{k})^+\right) . \end{aligned}$$As \(\frac{c}{c+\phi _{\textsf{SDP}}^k(x^k)^+} \ge 0\) and \(\phi _{\textsf{SDP}}^k(x^{k}) \le \phi _{\textsf{SDP}}^k(x^{k})^+\), we deduce that \(\phi _{\textsf{SDP}}^k(\bar{x}_k) \le 0\), i.e., \(\bar{x}_k \in \mathcal {R}^k\).

As the objective value of \((\bar{x}_k,\bar{x}_k)\) in the problem (21) is \(2F(\bar{x}_k)\), by optimality of \((x^k, \hat{x}^k)\): \( F(x^k) + F(\hat{x}^k) + \frac{\mu _k}{2} \Vert x^k - \hat{x}^k \Vert ^2 \le 2 F( (1-\omega _k) x^k + \omega _k x^S)\), which means, by convexity of F, that

We also notice that \((\hat{x}^{k},\hat{x}^{k})\) is feasible in the problem (21) at iteration k, thus \(F(x^k) + F(\hat{x}^k) + \frac{\mu _k}{2} \Vert x^k - \hat{x}^k \Vert ^2 \le 2 F( \hat{x}^{k})\), which means

Summing Eq. (26) with Eq. (27), we have: \( 2 F(x^k) + \mu _k \Vert x^k - \hat{x}^k \Vert ^2 \le 2(1-\omega _k) F(x^k) + 2\omega _k F(x^S)\), and thus \(\mu _k \Vert x^k - \hat{x}^k \Vert ^2 \le 2 \omega _k \left( F(x^S) - F(x^k) \right) \). Since \(0<\underline{\mu } \le \mu _k\),

holds. Since \(\omega _k \rightarrow 0\), and \(F(x^S) - F(x^k)\) is bounded, we deduce from Eq. (28) that

Every point \(\hat{x}^k \in \mathcal {R}^{k}\) of the generated sequence is feasible in (\(\textsf{SIP}\)), as stated in Proposition 11, and F is \(C_F\)-Lipschitz. Therefore, we have \(F(x^*) \le F(\hat{x}^k) \le F(x^k) + {C_F} \Vert x^k - \hat{x}^k\Vert \). According to Lemma 12, we know that \(F(x^k) \le F( x^{*}) + \mu _k (x^k - \hat{x}^k)^\top (x^* - x^k)\), which implies, by the Cauchy–Schwarz inequality, that

Since \(\Vert x^* - x^k \Vert \) is bounded, we deduce from Eq. (29) that \(F(x^*) + \mu _k \Vert x^k - \hat{x}^k\Vert \Vert x^* - x^k \Vert + C_F \Vert x^k - \hat{x}^k\Vert \rightarrow F(x^*)\), and thus, \(F(\hat{x}^k) \rightarrow F(x^*) = \textsf{val}\)(\(\textsf{SIP}\)). \(\square \)
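The convex-combination step in the proof of Theorem 14 can be checked numerically. The sketch below replaces \(\phi _{\textsf{SDP}}^k\) by a hypothetical one-dimensional convex function given as a maximum of affine functions (in the paper, \(\phi _{\textsf{SDP}}^k\) is convex as a maximum of linear functions). It computes \(\omega _k\) and \(\bar{x}_k = (1-\omega _k) x^k + \omega _k x^S\), and verifies \(\phi (\bar{x}_k) \le 0\) for several infeasible and feasible iterates \(x^k\). The function `phi` and the Slater point `x_S` are illustrative assumptions.

```python
def phi(x):
    """Hypothetical convex stand-in for phi_SDP^k: a max of affine functions."""
    return max(2.0 * x[0] - 1.0, -x[0] - 2.0)


def slater_combination(phi_fn, x_k, x_s):
    """Return (omega_k, x_bar_k) as in the proof of Theorem 14:
    omega_k = phi(x_k)^+ / (c + phi(x_k)^+) with c = -phi(x_s) > 0, and
    x_bar_k = (1 - omega_k) x_k + omega_k x_s."""
    c = -phi_fn(x_s)
    if c <= 0:
        raise ValueError("x_s must be a Slater point: phi(x_s) < 0")
    viol = max(phi_fn(x_k), 0.0)          # positive part phi(x_k)^+
    omega = viol / (c + viol)
    x_bar = [(1.0 - omega) * a + omega * s for a, s in zip(x_k, x_s)]
    return omega, x_bar


x_S = [-0.5]                              # phi(x_S) = -1.5, so c = 1.5
for t in [-3.0, 0.0, 1.0, 5.0]:
    omega, x_bar = slater_combination(phi, [t], x_S)
    # By convexity: phi(x_bar) <= (1 - omega) phi(x_k) - omega * c <= 0.
    print(t, round(omega, 4), phi(x_bar) <= 1e-12)
```

When \(x^k\) is already feasible, \(\omega _k = 0\) and \(\bar{x}_k = x^k\); as the violation vanishes, so does the weight put on the Slater point.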

We move now to the case where the IOA Algorithm terminates in a finite number of iterations: Theorem 15 studies a sufficient condition for such a finite termination, and Theorem 16 studies the suboptimality of the returned feasible point.

Theorem 15

Under Assumptions 1, 4, and 5, if \(\epsilon _1>0\) and \( \epsilon _2 > 0\) then Algorithm 2 terminates after a finite number of iterations.

Proof

We reason by contrapositive: we suppose that Algorithm 2 does not stop, i.e., generates two infinite sequences \((x^k)_{k \in \mathbb {N}_{+}}\) and \((\hat{x}^k)_{k \in \mathbb {N}_{+}}\), and we show that either \(\epsilon _1 =0\) or \(\epsilon _2 = 0\). On the one hand, as the stopping criterion is not met, for all \(k \in \mathbb {N}\), either \(G(x^k,y^k) > \epsilon _1\) (and therefore \(G(x^k,y^k)^+ > \epsilon _1\)), or \(\Vert x^k - \hat{x}^k \Vert > \epsilon _2\). In other words, \(\max \{ G(x^k,y^k)^+ - \epsilon _1, \Vert x^k - \hat{x}^k \Vert - \epsilon _2 \} >0\). On the other hand, we deduce from Lemma 13 that \(G(x^k,y^k)^+ \le \phi (x^k)^+ \rightarrow 0\), and from Theorem 14 that \(\Vert x^k - \hat{x}^k \Vert \rightarrow 0\). By continuity of the max operator, we deduce that \(\max \{ - \epsilon _1, - \epsilon _2 \} \ge 0\), i.e., \(\min \{ \epsilon _1, \epsilon _2 \} \le 0\). Since the scalars \(\epsilon _1\) and \(\epsilon _2\) are nonnegative by definition, we deduce that \(\min \{ \epsilon _1, \epsilon _2 \} = 0\). \(\square \)

Theorem 16 provides an upper bound on the optimality gap of the returned feasible point in case of finite termination. We use the notation \(\textsf{diam}(\mathcal {X}):= \underset{x_1, x_2 \in \mathcal {X}}{\max } \Vert x_1 - x_2\Vert \).

Theorem 16

Under Assumptions 1, 4, and 5, if Algorithm 2 terminates after iteration K, then it returns a feasible iterate \(\hat{x}^K\) s.t. \(F(\hat{x}^K) \le \textsf{val}\)(\(\textsf{SIP}\)) \(+ \epsilon _2 (\mu _K \textsf{diam}(\mathcal {X}) + C_F).\)

Proof

From Lemma 12 and the Cauchy–Schwarz inequality, we know that \(F(x^K) \le F(x^*) + \mu _K(x^K-\hat{x}^K)^\top (x^* - x^K) \le F(x^*) + \mu _K \Vert x^K-\hat{x}^K \Vert \, \Vert x^* - x^K \Vert \). Using that F is \(C_F\)-Lipschitz, we obtain \(F(\hat{x}^K) \le F(x^*) + \mu _K \Vert x^K-\hat{x}^K \Vert \, \Vert x^* - x^K \Vert + C_F \Vert x^K-\hat{x}^K \Vert \). We conclude by noting that \(\Vert x^K - \hat{x}^K\Vert \le \epsilon _2\) and \(\Vert x^* - x^K \Vert \le \textsf{diam}(\mathcal {X})\). \(\square \)
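The bound of Theorem 16 is straightforward to evaluate once \(\mu _K\), \(C_F\), \(\epsilon _2\) and \(\textsf{diam}(\mathcal {X})\) are known. The sketch below assumes, for illustration only, that \(\mathcal {X}\) is a box \([l, u]\), whose Euclidean diameter is the length of its main diagonal. The numerical values in the example are hypothetical.

```python
import math
from typing import Sequence


def box_diameter(lower: Sequence[float], upper: Sequence[float]) -> float:
    """diam(X) for the box X = [lower, upper] in the Euclidean norm:
    the distance between the opposite corners `lower` and `upper`."""
    return math.dist(lower, upper)


def ioa_suboptimality_bound(eps2: float, mu_K: float, diam_X: float, C_F: float) -> float:
    """Bound eps2 * (mu_K * diam(X) + C_F) on F(x_hat^K) - val(SIP) (Theorem 16)."""
    return eps2 * (mu_K * diam_X + C_F)


d = box_diameter([0.0, 0.0], [3.0, 4.0])
bound = ioa_suboptimality_bound(eps2=1e-3, mu_K=2.0, diam_X=d, C_F=10.0)
print(d)      # 5.0
print(bound)  # approximately 0.02
```

The bound is linear in \(\epsilon _2\), so halving the tolerance on \(\Vert x^K - \hat{x}^K \Vert \) halves the guaranteed optimality gap for fixed \(\mu _K\).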

4 Conclusion

In this paper, we address the issue of solving a convex Semi-Infinite Programming problem despite the difficulty of the separation problem. We proceed by allowing the separation problem to be solved approximately, up to a given relative optimality gap. We show that, when the objective function is strongly convex, the Cutting-Planes algorithm retains guaranteed theoretical performance despite the inexactness of the oracle: its convergence rate is in O(1/k). Unlike the Cutting-Planes algorithm, the Inner-Outer Approximation algorithm generates a sequence of feasible points converging towards the optimum of the Semi-Infinite Programming problem. This paper shows that this remains true, despite the inexactness of the oracle, when the separation problem is a Quadratically Constrained Quadratic Programming problem. An avenue for future research is to extend these results to the setting of Mixed-Integer Convex Semi-Infinite Programming.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

[1] Abbott, S.: Understanding Analysis. Undergraduate texts in mathematics, Springer, New York (2016). https://doi.org/10.1007/978-1-4939-2712-8

[2] Aubin, J.P.: Viability Theory. Systems and control: foundations and applications, Springer (1991). https://doi.org/10.1007/978-0-8176-4910-4

[3] Betró, B.: An accelerated central cutting plane algorithm for linear semi-infinite programming. Math. Program. 101(3), 479–495 (2004). https://doi.org/10.1007/s10107-003-0492-5

[4] Blankenship, J.W., Falk, J.E.: Infinitely constrained optimization problems. J. Optim. Theory Appl. 19(2), 261–281 (1976). https://doi.org/10.1007/BF00934096

[5] Boyd, S.P., Vandenberghe, L.: Convex Optimization. Cambridge University Press (2004). https://doi.org/10.1017/CBO9780511804441

[6] Cerulli, M., Oustry, A., D’Ambrosio, C., et al.: Convergent algorithms for a class of convex semi-infinite programs. SIAM J. Optim. 32(4), 2493–2526 (2022). https://doi.org/10.1137/21M1431047

[7] d’Aspremont, A.: Smooth optimization with approximate gradient. SIAM J. Optim. 19(3), 1171–1183 (2008). https://doi.org/10.1137/060676386

[8] Devolder, O., Glineur, F., Nesterov, Y., et al.: Intermediate gradient methods for smooth convex problems with inexact oracle. LIDAM Discussion Papers CORE-2013017, Université catholique de Louvain, Center for Operations Research and Econometrics (CORE) (2013). https://ideas.repec.org/p/cor/louvco/2013017.html

[9] Devolder, O., Glineur, F., Nesterov, Y.: First-order methods of smooth convex optimization with inexact oracle. Math. Program. 146, 37–75 (2014). https://doi.org/10.1007/s10107-013-0677-5

[10] Djelassi, H., Mitsos, A.: A hybrid discretization algorithm with guaranteed feasibility for the global solution of semi-infinite programs. J. Glob. Optimiz. (2017). https://doi.org/10.1007/s10898-016-0476-7

[11] Djelassi, H., Mitsos, A., Stein, O.: Recent advances in nonconvex semi-infinite programming: applications and algorithms. EURO J. Comput. Optimiz. (2021). https://doi.org/10.1016/j.ejco.2021.100006

[12] Dvurechensky, P., Gasnikov, A.: Stochastic intermediate gradient method for convex problems with stochastic inexact oracle. J. Optim. Theory Appl. 171, 121–145 (2016). https://doi.org/10.1007/s10957-016-0999-6

[13] Floudas, C.A., Stein, O.: The adaptive convexification algorithm: a feasible point method for semi-infinite programming. SIAM J. Optim. 18(4), 1187–1208 (2008). https://doi.org/10.1137/060657741

[14] Fuduli, A., Gaudioso, M., Giallombardo, G., et al.: A partially inexact bundle method for convex semi-infinite minmax problems. Commun. Nonlinear Sci. Numer. Simul. 21(1), 172–180 (2015). https://doi.org/10.1016/j.cnsns.2014.07.033

[15] Gaudioso, M., Giallombardo, G., Miglionico, G.: An incremental method for solving convex finite min-max problems. Math. Oper. Res. 31(1), 173–187 (2006). https://doi.org/10.1287/moor.1050.0175

[16] Gaudioso, M., Giallombardo, G., Miglionico, G.: On solving the Lagrangian dual of integer programs via an incremental approach. Comput. Optim. Appl. 44(1), 117–138 (2009). https://doi.org/10.1007/s10589-007-9149-2

[17] Goberna, M., López-Cerdá, M.: Linear semi-infinite optimization. Mathematical Methods in Practice. John Wiley and Sons (1998). https://doi.org/10.1007/978-1-4899-8044-1_3

[18] Hettich, R.: A review of numerical methods for semi-infinite optimization. Semi-infin. Program. Appl. (1983). https://doi.org/10.1007/978-3-642-46477-5_11

[19] Hettich, R.: An implementation of a discretization method for semi-infinite programming. Math. Program. 34(3), 354–361 (1986). https://doi.org/10.1007/BF01582235

[20] Hiriart-Urruty, J.B., Lemaréchal, C.: Convex analysis and minimization algorithms I: fundamentals, vol. 305. Springer, Germany (2013). https://doi.org/10.1007/978-3-662-02796-7

[21] Jaggi, M.: Revisiting Frank-Wolfe: projection-free sparse convex optimization. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 28, pp 427–435. PMLR, Atlanta (2013). https://proceedings.mlr.press/v28/jaggi13.html

[22] Kelley, J.E., Jr.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8(4), 703–712 (1960). https://doi.org/10.1137/0108053

[23] Kennedy, G.J., Hicken, J.E.: Improved constraint-aggregation methods. Comput. Methods Appl. Mech. Eng. 289, 332–354 (2015). https://doi.org/10.1016/j.cma.2015.02.017

[24] Locatello, F., Tschannen, M., Rätsch, G., et al.: Greedy algorithms for cone constrained optimization with convergence guarantees. In: Neural Information Processing Systems (2017). https://api.semanticscholar.org/CorpusID:3380974

[25] Mitsos, A.: Global optimization of semi-infinite programs via restriction of the right-hand side. Optimization 60(10–11), 1291–1308 (2011). https://doi.org/10.1080/02331934.2010.527970

[26] Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103, 127–152 (2005). https://doi.org/10.1007/s10107-004-0552-5

[27] Pang, L.P., Lv, J., Wang, J.H.: Constrained incremental bundle method with partial inexact oracle for nonsmooth convex semi-infinite programming problems. Comput. Optim. Appl. 64, 433–465 (2016). https://doi.org/10.1007/s10589-015-9810-0

[28] Reemtsen, R.: Discretization methods for the solution of semi-infinite programming problems. J. Optim. Theory Appl. 71(1), 85–103 (1991). https://doi.org/10.1007/BF00940041

[29] Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970). https://doi.org/10.1515/9781400873173

[30] Schmid, J., Poursanidis, M.: Approximate solutions of convex semi-infinite optimization problems in finitely many iterations (2022). https://doi.org/10.48550/arXiv.2105.08417

[31] Schwientek, J., Seidel, T., Küfer, K.H.: A transformation-based discretization method for solving general semi-infinite optimization problems. Math. Methods Oper. Res. 93(1), 83–114 (2021). https://doi.org/10.1007/s00186-020-00724-8

[32] Seidel, T., Küfer, K.H.: An adaptive discretization method solving semi-infinite optimization problems with quadratic rate of convergence. Optimization 71(8), 2211–2239 (2022). https://doi.org/10.1080/02331934.2020.1804566

[33] Sion, M.: On general minimax theorems. Pac. J. Math. 8(1), 171–176 (1958)

[34] Stein, O.: How to solve a semi-infinite optimization problem. Eur. J. Oper. Res. 223(2), 312–320 (2012). https://doi.org/10.1016/j.ejor.2012.06.00

[35] Stein, O., Still, G.: Solving semi-infinite optimization problems with interior point techniques. SIAM J. Control Optim. 42, 769–788 (2003). https://doi.org/10.1137/S0363012901398393

[36] Still, G.: Discretization in semi-infinite programming: the rate of convergence. Math. Program. 91(1), 53–69 (2001). https://doi.org/10.1007/s101070100239

[37] Tichatschke, R., Nebeling, V.: A cutting-plane method for quadratic semi infinite programming problems. Optimization 19(6), 803–817 (1988). https://doi.org/10.1080/02331938808843393

[38] Tsoukalas, A., Rustem, B.: A feasible point adaptation of the Blankenship and Falk algorithm for semi-infinite programming. Optim. Lett. 5, 705–716 (2011). https://doi.org/10.1007/s11590-010-0236-4

[39] Tuy, H.: Convex Analysis and Global Optimization, vol. 22, 2nd edn. Springer, Boston (1998). https://doi.org/10.1007/978-3-319-31484-6

[40] Zhang, L., Wu, S.Y., López, M.A.: A new exchange method for convex semi-infinite programming. SIAM J. Optim. 20(6), 2959–2977 (2010). https://doi.org/10.1137/090767133

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix


A.1 Notations

We summarize the notations used throughout the paper in Table 2.

A.2 Proof of Lemma 1

We have that: (i) the set \(\mathcal {X}\) is compact, (ii) the function \(-\mathcal {L}(\cdot ,z)\) is continuous for all \(z \in \mathbb {R}^m\), (iii) the function \(-\mathcal {L}(x,\cdot )\) is convex and differentiable for all \(x \in \mathcal {X}\), (iv) the function \(\sup _{x\in \mathcal {X}} -\mathcal {L}(x,\cdot )\) (with x function of z) is finite-valued over \(\mathbb {R}^m\), and (v) due to Assumptions 1 and 2, the function \(-\mathcal {L}(\cdot ,z)\) is strongly concave and the set \(\mathcal {X}\) is compact and convex, therefore the supremum \(\sup _{x\in \mathcal {X}} -\mathcal {L}(x,z)\) is attained for a unique x(z). In this setting, we deduce from [20, Cor. VI.4.4.5] that \(\theta (z)\) is differentiable over \(\mathbb {R}^m\), with gradient \(\nabla _z \mathcal {L}(x(z),z) = x(z)\).

We now take \(z,z' \in \mathbb {R}^m\), and prove that \(\Vert \nabla \theta (z) - \nabla \theta (z') \Vert \le \frac{1}{\mu } \Vert z - z'\Vert \). We define the functions \(w(u) = \mathcal {L}(u,z) + {i}_\mathcal {X}(u)\) and \(w'(u) = \mathcal {L}(u,z') + {i}_\mathcal {X}(u)\), where \(i_\mathcal {X}(\cdot )\) is the characteristic function of \(\mathcal {X}\). We introduce x (resp. \(x'\)) the unique minimizer of w (resp. \(w'\)). The first-order optimality conditions for these convex functions read

We notice that the function \((F + i_\mathcal {X})(u)\) is convex due to Assumption 1, and the function \(\ell (u) = z^\top u\) is linear and thus convex. The intersection of the relative interiors of the domains of these convex functions is \(\textsf{ri}(\mathcal {X})\). With \(\mathcal {X}\) a finite-dimensional convex set, \(\textsf{ri}(\mathcal {X}) \ne \emptyset \), according to [39, Prop. 1.9]. Hence, the subdifferential of the sum is the sum of the subdifferentials [29, Th. 23.8], i.e., \(\partial w(x) = \partial (F + i_\mathcal {X})(x) + \partial \ell (x) =\partial (F + i_\mathcal {X})(x) + z\). Similarly, \(\partial w'(x') = \partial (F + i_\mathcal {X})(x') + z'\). Therefore, Eqs. (A1) may be rephrased as the existence of \(s \in \partial (F + i_\mathcal {X})(x)\) and \(s' \in \partial (F + i_\mathcal {X})(x')\) such that

Due to Assumptions 1 and 2, the function \(F + i_\mathcal {X}\) is \(\mu \)-strongly convex. Applying [20, Th. VI.6.1.2], the \(\mu \)-strong convexity of \(F + i_\mathcal {X}\) gives that \( (s-s')^\top (x -x' ) \ge \mu \Vert x - x' \Vert ^2\), since \(s \in \partial (F + i_\mathcal {X})(x)\) and \(s' \in \partial (F + i_\mathcal {X})(x')\). Using the Cauchy–Schwarz inequality and Eqs. (A2), we deduce that \(\Vert z - z' \Vert \, \Vert x - x' \Vert \ge \mu \Vert x - x' \Vert ^2\). Since \(\nabla \theta (z) = x\) and \(\nabla \theta (z') = x'\) (following from the first part of this proof):

From Eq. (A3), \( \Vert \nabla \theta (z) - \nabla \theta (z') \Vert \le \frac{1}{\mu } \Vert z - z' \Vert \), if \(\Vert \nabla \theta (z) - \nabla \theta (z') \Vert > 0\). If \(\Vert \nabla \theta (z) - \nabla \theta (z') \Vert =0\), this inequality is also trivially true.
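The two properties established in this proof, \(\nabla \theta (z) = x(z)\) and the \((1/\mu )\)-Lipschitz continuity of \(\nabla \theta \), can be observed on a toy instance. Consistently with the text, we take \(\mathcal {L}(x,z) = F(x) + z^\top x\). The choices \(F(x) = \frac{\mu }{2}\Vert x \Vert ^2\) and \(\mathcal {X} = [-1,1]^3\) are illustrative assumptions, made so that x(z) has a closed form (coordinatewise clipping of \(-z/\mu \)).

```python
import math
import random

MU = 2.0  # strong-convexity modulus of the illustrative F(x) = MU/2 * ||x||^2


def x_of_z(z, lo=-1.0, hi=1.0):
    """Unique minimizer over the box [lo, hi]^m of L(x, z) = F(x) + z^T x.
    The problem is separable, so each coordinate is -z_i/MU clipped to the box."""
    return [min(max(-zi / MU, lo), hi) for zi in z]


def theta(z):
    """Dual function theta(z) = min_{x in box} L(x, z)."""
    x = x_of_z(z)
    return 0.5 * MU * sum(xi * xi for xi in x) + sum(zi * xi for zi, xi in zip(z, x))


# (1/MU)-Lipschitz continuity of grad theta = x(.), checked on random pairs.
rng = random.Random(0)
worst_ratio = 0.0
for _ in range(1000):
    z = [rng.uniform(-5.0, 5.0) for _ in range(3)]
    zp = [rng.uniform(-5.0, 5.0) for _ in range(3)]
    ratio = math.dist(x_of_z(z), x_of_z(zp)) / math.dist(z, zp)
    worst_ratio = max(worst_ratio, ratio)
print(worst_ratio, 1.0 / MU)

# Central finite differences of theta at z0 should match x(z0).
z0, h = [0.7, -3.0, 1.1], 1e-6
fd = []
for i in range(3):
    zp = [zj + (h if j == i else 0.0) for j, zj in enumerate(z0)]
    zm = [zj - (h if j == i else 0.0) for j, zj in enumerate(z0)]
    fd.append((theta(zp) - theta(zm)) / (2.0 * h))
print(fd, x_of_z(z0))
```

The second coordinate of z0 is chosen so that the corresponding bound of the box is active, which shows that the gradient formula also holds when the minimizer lies on the boundary of \(\mathcal {X}\).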

A.3 Proof of Lemma 3

According to Assumption 3, we introduce \(\hat{x} \in \mathcal {X}\) such that \(\hat{x}^\top a(y) < 0\) for all \(y \in \mathcal {Y}\). By continuity of G and compactness of \(\mathcal {Y}\), we know that there exists a constant \(c > 0\) such that \(\hat{x}^\top a(y) \le -c\) for all \(y \in \mathcal {Y}\). For every \(z \in \mathcal {K}\), there exist \(p \in \mathbb {N}\), \(y_1, \dots , y_p \in \mathcal {Y}\), and \(\lambda _1, \dots , \lambda _p \in \mathbb {R}_{++}\) such that \(z = \sum _{i=1}^p \lambda _i a(y_i)\). By definition of \(\theta (z)\), \(\theta (z) \le \mathcal {L}(\hat{x},z)\). Since \(\mathcal {L}(\hat{x},z) = F(\hat{x}) + \sum _{i=1}^p \lambda _i \hat{x}^\top a(y_i)\), we deduce that \(\theta (z) \le F(\hat{x}) - c \sum _{i=1}^p \lambda _i\), which also reads \(\sum _{i=1}^p \lambda _i \le c^{-1}(F(\hat{x}) - \theta (z)).\) From this inequality, we deduce that for every feasible point of (\(\textsf{DSIP}\)) such that \(\theta (z) \ge V -1\), where \(V = \textsf{val}\) (\(\textsf{SIP}\)) \(= \textsf{val}\) (\(\textsf{DSIP}\)), we have \(\sum _{i=1}^p \lambda _i \le c^{-1}(F(\hat{x}) - V + 1),\) and this holds for every decomposition \(z =\sum _{i=1}^p \lambda _i a(y_i)\). In particular, the set of \(z \in \mathcal {K}\) such that \(\theta (z) \ge V -1\) is included in the compact set \(q \textsf{conv}(\mathcal {M})\), where \(q = c^{-1}(F(\hat{x}) - V + 1)\). Over this compact set, the continuous function \(\theta (z)\) reaches a maximum.
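The key inequality \(\sum _{i} \lambda _i \le c^{-1}(F(\hat{x}) - \theta (z))\) of this proof can also be checked on a toy instance. Again taking \(\mathcal {L}(x,z) = F(x) + z^\top x\), the sketch below makes several illustrative assumptions, none of which are data from the paper: \(F(x) = \frac{\mu }{2}\Vert x \Vert ^2\), \(\mathcal {X} = [-1,1]^2\), \(\mathcal {Y} = [0, \pi /2]\), \(a(y) = (\cos y, \sin y)\) and \(\hat{x} = (-0.5, -0.5)\). For these choices, \(\hat{x}^\top a(y) = -(\cos y + \sin y)/2 \le -1/2\), so \(c = 1/2\).

```python
import math
import random

MU = 2.0                 # illustrative strong-convexity modulus
X_HAT = [-0.5, -0.5]     # hypothetical point with x_hat^T a(y) <= -C on Y
C = 0.5                  # min over y in [0, pi/2] of (cos y + sin y) / 2


def F(x):
    return 0.5 * MU * sum(xi * xi for xi in x)


def a(y):
    """Illustrative constraint vectors a(y) for y in [0, pi/2]."""
    return [math.cos(y), math.sin(y)]


def theta(z, lo=-1.0, hi=1.0):
    """theta(z) = min over the box [lo, hi]^2 of F(x) + z^T x (closed form)."""
    x = [min(max(-zi / MU, lo), hi) for zi in z]
    return F(x) + sum(zi * xi for zi, xi in zip(z, x))


# Random conic combinations z = sum_i lambda_i a(y_i) of the vectors a(y).
rng = random.Random(1)
ok = True
for _ in range(500):
    p = rng.randint(1, 5)
    ys = [rng.uniform(0.0, math.pi / 2) for _ in range(p)]
    lams = [rng.uniform(0.01, 3.0) for _ in range(p)]
    z = [sum(lam * a(y)[i] for lam, y in zip(lams, ys)) for i in range(2)]
    ok = ok and sum(lams) <= (F(X_HAT) - theta(z)) / C + 1e-12
print(ok)
```

The check holds for every decomposition of z, which is what allows the proof to bound the whole superlevel set \(\{z \in \mathcal {K}: \theta (z) \ge V-1\}\).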

1.4 Proof of Lemma 4

We notice that the pair of problems (\(R_k\))-(\(D_k\)) is a particular case of the generic pair (\(\mathsf {SIP'}\))-(\(\textsf{DSIP}\)), obtained by instantiating \({\mathcal {M}} \leftarrow \mathcal {M}^k\). The equality \(\textsf{val}\)(\(R_k\)) \(= \textsf{val}\)(\(D_k\)) is thus an application of Eq. (2) to this case. The existence of an optimal solution \(x^k\) to (\(R_k\)) follows from the compactness of \(\mathcal {X}\), the continuity of F(x), and the existence of a feasible point due to Assumption 3. The existence of an optimal solution \(z^k\) to (\(D_k\)) is a direct application of Lemma 3, which applies since (\(R_k\))-(\(D_k\)) also satisfies Assumption 3. Indeed, \(\hat{x}^\top z < 0\) for all \(z \in \mathcal {M}\), so \(\hat{x}^\top z < 0\) for all \(z \in \textsf{conv}(\mathcal {M})\); as \(\mathcal {M}^k \subset \textsf{conv}(\mathcal {M})\) by construction, we deduce that \(\hat{x}^\top z < 0\) for all \(z \in \mathcal {M}^k\). By definition of \(\theta \), \(\mathcal {L}(x,z^k) \ge \theta (z^k)\) for all \(x \in \mathcal {X}\). We also know that \(\mathcal {L}(x^k,z^k) = \theta (z^k)\), since the chain \(\textsf{val}\)(\(R_k\)) \(= F(x^k) \ge \mathcal {L}(x^k,z^k) \ge \theta (z^k) = \textsf{val}\)(\(D_k\)) \(= \textsf{val}\)(\(R_k\)) forces all its inequalities to be equalities. Hence \(x^k = \arg \min \limits _{x \in \mathcal {X}} \mathcal {L}(x,z^k)\). Lemma 1 gives \(\nabla \theta (z^{k}) = \arg \min \limits _{x \in \mathcal {X}} \mathcal {L}(x,z^k)\), thus \(\nabla \theta (z^{k}) = x^k\).
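The last step of this proof rests on the identity \(\nabla \theta (z) = \arg \min _{x \in \mathcal {X}} \mathcal {L}(x,z)\) from Lemma 1. A central finite-difference check makes it concrete on a toy instance. The instance is an assumption, not the paper's: \(\mathcal {X} = [-1,1]^m\), \(F(x) = \frac{1}{2}\Vert x - \text{center} \Vert ^2\), and \(\mathcal {L}(x,z) = F(x) + z^\top x\). The helper names (`argmin_L`, `theta`, `fd_gradient`) are hypothetical.

```python
import numpy as np

# Toy instance (assumption, not from the paper):
#   X = [-1, 1]^m, F(x) = 0.5 * ||x - center||^2, L(x, z) = F(x) + z^T x.


def argmin_L(z, center):
    """Unique minimizer of L(., z) over the box X (coordinate-wise clipping)."""
    return np.clip(center - z, -1.0, 1.0)


def theta(z, center):
    """Dual function theta(z) = min_{x in X} L(x, z)."""
    x = argmin_L(z, center)
    return 0.5 * np.sum((x - center) ** 2) + z @ x


def fd_gradient(f, z, h=1e-6):
    """Central finite-difference approximation of the gradient of f at z."""
    g = np.zeros_like(z)
    for i in range(z.size):
        e = np.zeros_like(z)
        e[i] = h
        g[i] = (f(z + e) - f(z - e)) / (2.0 * h)
    return g


rng = np.random.default_rng(2)
center = rng.normal(size=3)
z = rng.normal(size=3)
x_min = argmin_L(z, center)
grad_fd = fd_gradient(lambda w: theta(w, center), z)
print("argmin_x L(x, z):    ", x_min)
print("finite-diff gradient:", grad_fd)
```

Because \(\nabla \theta \) is Lipschitz here, the central difference error is of order h, so the two printed vectors should agree to about six digits.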

1.5 Proof of Lemma 7

We note that, due to Assumption 4, the constraint \(\Vert y \Vert ^2 + 1 \le 1 + \rho ^2 \) is redundant in problem (\(\textsf{P}_x\)), i.e., adding it does not change the value of this problem. Consequently, (\(\textsf{SDP}_x\)) is the standard Shor SDP relaxation of problem (\(\textsf{P}_x\)) augmented with this redundant constraint, which is why \(\textsf{val}\) (\(\textsf{SDP}_x\)) \(\ge \textsf{val}\) (\(\textsf{P}_x\)).

We now assume that \(Q(x), Q^1, \dots , Q^r\) are PSD. Given a matrix Y feasible for (\(\textsf{SDP}_x\)), which is PSD, we denote by \(u_1, \dots , u_{n+1}\) \(\in \mathbb {R}^{n+1}\) an orthonormal basis of eigenvectors of Y and by \(v_1, \dots , v_{n+1}\) \(\in \mathbb {R}_+\) their respective eigenvalues. We introduce the following two index sets: \(I = \{ i \in \{ 1, \dots , n+1 \}: (u_i)_{n+1} \ne 0 \} \text { and } J = \{ i \in \{ 1, \dots , n+1 \}: (u_i)_{n+1} = 0 \}.\) The sets I and J form a partition of \(\{ 1, \dots , n+1 \}\). Moreover,

- if \(i \in I\): we define the nonnegative scalar \(\mu _i = v_i \, (u_i)_{n+1}^2\) and \(y_i \in \mathbb {R}^n\) s.t. \(u_i = (u_i)_{n+1} \begin{pmatrix} y_i \\ 1 \end{pmatrix}\);
- if \(i \in J\): we define the nonnegative scalar \(\nu _i = v_i\) and \(z_i \in \mathbb {R}^n\) s.t. \(u_i = \begin{pmatrix} z_i \\ 0 \end{pmatrix}\).

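With this notation, we have that

\[ Y = \sum _{i=1}^{n+1} v_i u_i u_i^\top = \sum _{i \in I} \mu _i \begin{pmatrix} y_i y_i^\top & y_i \\ y_i^\top & 1 \end{pmatrix} + \sum _{i \in J} \nu _i \begin{pmatrix} z_i z_i^\top & \textbf{0} \\ \textbf{0}^\top & 0 \end{pmatrix}, \]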

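where \(\textbf{0}\) is the null n-dimensional vector (whereas \({0_n}\) is the \(n \times n\) null matrix). Let us define the vector \(\bar{y} = \sum \limits _{i \in I} \mu _i y_i\). Its objective value in (\(\textsf{P}_x\)) is at least the objective value of Y in (\(\textsf{SDP}_x\)), since

\[ \langle \mathcal {Q}(x), Y \rangle = \sum _{i \in I} \mu _i \, G(x,y_i) + \sum _{i \in J} \nu _i \left\langle \mathcal {Q}(x), \begin{pmatrix} z_i \\ 0 \end{pmatrix} \begin{pmatrix} z_i \\ 0 \end{pmatrix}^\top \right\rangle \le \sum _{i \in I} \mu _i \, G(x,y_i) \le G(x,\bar{y}). \]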

The first inequality is due to \(Q(x) \succeq 0\) and \(\nu _i \ge 0\). The second inequality follows from \(\sum _{i \in I} \mu _i = Y_{n+1,n+1} = 1\) and from the concavity of the function \(G(x,\cdot )\) (Jensen's inequality). Similarly, knowing that \(Q^j\) is PSD and that Y is feasible in (\(\textsf{SDP}_x\)), we can show that \(\frac{1}{2} \bar{y}^\top Q^j \bar{y} + (q^j) ^\top \bar{y} + b_j \le \langle {\mathcal {Q}}^j, Y \rangle \le 0\), which means that \(\bar{y}\) is feasible in (\(\textsf{P}_x\)). This implies that \(\langle \mathcal {Q}(x),Y \rangle \le G(x,\bar{y}) \le \textsf{val}\)(\(\textsf{P}_x\)). This being true for any matrix Y feasible in (\(\textsf{SDP}_x\)), we conclude that \(\textsf{val}\)(\(\textsf{SDP}_x\)) \(\le \textsf{val}\) (\(\textsf{P}_x\)). Combined with the reverse inequality established above, this proves that \(\textsf{val}\)(\(\textsf{SDP}_x\)) \(= \textsf{val}\)(\(\textsf{P}_x\)).
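The rounding argument can be replayed numerically. The sketch below rests on assumptions that are not taken from the paper: x is fixed and dropped from the notation, the objective is \(G(y) = -\frac{1}{2} y^\top Q y + q^\top y + b\) with Q PSD, and its lifted matrix is \(\mathcal {Q} = \begin{pmatrix} -Q/2 & q/2 \\ q^\top /2 & b \end{pmatrix}\), so that \(\langle \mathcal {Q}, (y;1)(y;1)^\top \rangle = G(y)\). It builds a random PSD matrix Y with \(Y_{n+1,n+1} = 1\), splits its eigenvectors into the index sets I and J, forms \(\mu _i\), \(y_i\), and \(\bar{y}\), and compares \(\langle \mathcal {Q}, Y \rangle \) with \(G(\bar{y})\). In this construction \(\bar{y}\) coincides with the first n entries of the last column of Y. The function name `split_eigenvectors` is hypothetical.

```python
import numpy as np

# Toy instance (assumption): G(y) = -0.5 y^T Q y + q^T y + b with Q PSD, and the
# lifted matrix Qcal = [[-Q/2, q/2], [q^T/2, b]], so <Qcal, [y;1][y;1]^T> = G(y).


def split_eigenvectors(Y, tol=1e-12):
    """Eigendecompose a PSD matrix Y with Y[n, n] = 1 and return the weights mu_i
    for the index set I (last eigenvector entry nonzero) together with y_bar."""
    n = Y.shape[0] - 1
    v, U = np.linalg.eigh(Y)               # columns of U are orthonormal eigenvectors
    mus, ys = [], []
    for i in range(n + 1):
        last = U[n, i]
        if abs(last) > tol:                # i in I
            mus.append(v[i] * last ** 2)   # mu_i = v_i (u_i)_{n+1}^2
            ys.append(U[:n, i] / last)     # u_i = (u_i)_{n+1} [y_i; 1]
        # i in J only contributes to the top-left block of Y, not to y_bar
    mus = np.array(mus)
    y_bar = (mus[:, None] * np.array(ys)).sum(axis=0)
    return mus, y_bar


rng = np.random.default_rng(3)
n = 4
B = rng.normal(size=(n + 1, 3))
Y = B @ B.T
Y /= Y[n, n]                               # enforce Y_{n+1,n+1} = 1

C = rng.normal(size=(n, n))
Q, q, b = C @ C.T, rng.normal(size=n), 0.3
Qcal = np.block([[-0.5 * Q, 0.5 * q[:, None]], [0.5 * q[None, :], np.array([[b]])]])


def G(y):
    return -0.5 * y @ Q @ y + q @ y + b


mus, y_bar = split_eigenvectors(Y)
sdp_value = np.sum(Qcal * Y)               # Frobenius inner product <Qcal, Y>
print("sum of mu_i:", mus.sum())
print("<Qcal, Y> =", sdp_value, "<= G(y_bar) =", G(y_bar))
```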

1.6 Proof of Lemma 8

The Lagrangian of problem (\(\textsf{SDP}_x\)) is defined over \(Y \in \mathbb {S}_{n+1}^+\), \(\lambda \in \mathbb {R}_+^r, \alpha \in \mathbb {R}_+, \beta \in \mathbb {R}\) and reads \( L_x(Y, \lambda , \alpha , \beta ) = \langle \mathcal {Q}(x), Y \rangle - \sum \limits _{j=1}^r \lambda _j \langle \mathcal {Q}^j, Y \rangle + \alpha ( 1 + \rho ^2 - \langle I_{n+1}, Y \rangle ) + \beta (1 - \langle E, Y \rangle ) = \alpha (1+\rho ^2) + \beta + \langle {\mathcal {Q}}(x) - \sum \limits _{j=1}^r \lambda _j {\mathcal {Q}}^j - \alpha I_{n+1} - \beta E, Y \rangle .\)

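The Lagrangian dual problem of (\(\textsf{SDP}_x\)) is \(\min \limits _{\lambda ,\alpha ,\beta } \, {\sup \limits _{Y}} \,L_x(Y, \lambda , \alpha , \beta )\), i.e.,

\[ \min _{\lambda \in \mathbb {R}_+^r,\, \alpha \in \mathbb {R}_+,\, \beta \in \mathbb {R}} \; \alpha (1+\rho ^2) + \beta + \sup _{Y \in \mathbb {S}_{n+1}^+} \Big \langle \mathcal {Q}(x) - \sum _{j=1}^r \lambda _j \mathcal {Q}^j - \alpha I_{n+1} - \beta E, Y \Big \rangle . \]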

The supremum is \(+\infty \), unless \(\sum \limits _{j=1}^r \lambda _j {\mathcal {Q}}^j + \alpha I_{n+1} + \beta E \succeq {\mathcal {Q}}(x)\), in which case it equals 0 (attained at \(Y = 0\)). This proves that the dual problem of (\(\textsf{SDP}_x\)) can be formulated as (\(\textsf{DSDP}_x\)). We now prove that the Slater condition, which is a sufficient condition for strong duality (\(\textsf{val}\)(\(\textsf{SDP}_x\)) \(= \textsf{val}\)(\(\textsf{DSDP}_x\))) [5, p. 265], holds for the dual problem (\(\textsf{DSDP}_x\)). We denote by \(m_x\) the largest eigenvalue of \(\mathcal {Q}(x){-\sum \limits _{j=1}^r {\mathcal {Q}}^j}\), and we note that \((\lambda ,\alpha ,\beta ) = ({1, \dots , 1}, \max \{1 + m_x, 1\}, 0)\) is a strictly feasible point of (\(\textsf{DSDP}_x\)): with these values, \(\lambda > 0\), \(\alpha > 0\), and the smallest eigenvalue of \(\sum \limits _{j=1}^r {\mathcal {Q}}^j + \alpha I_{n+1} - \mathcal {Q}(x)\) is \(\alpha - m_x \ge 1 > 0\). Hence, the Slater condition holds.
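The strictly feasible dual point can be verified numerically. In this sketch, the matrices standing in for \(\mathcal {Q}(x)\) and \(\mathcal {Q}^1, \dots , \mathcal {Q}^r\) are random symmetric matrices (hypothetical data, not from the paper), and E is taken to be the matrix whose only nonzero entry is a 1 in position \((n+1,n+1)\), which is our reading of the constraint \(\langle E, Y \rangle = 1\). The code forms the dual slack matrix at \((\lambda ,\alpha ,\beta ) = (1,\dots ,1,\max \{1+m_x,1\},0)\) and reports its smallest eigenvalue, which should be at least 1.

```python
import numpy as np

rng = np.random.default_rng(4)
n, r = 3, 2


def random_symmetric(k):
    """Random symmetric k x k matrix (hypothetical stand-in data)."""
    M = rng.normal(size=(k, k))
    return (M + M.T) / 2


Qx = random_symmetric(n + 1)                        # stands in for Qcal(x)
Qj = [random_symmetric(n + 1) for _ in range(r)]    # stand in for Qcal^1..Qcal^r

# m_x: largest eigenvalue of Qcal(x) - sum_j Qcal^j
m_x = np.linalg.eigvalsh(Qx - sum(Qj)).max()
lam, alpha, beta = np.ones(r), max(1.0 + m_x, 1.0), 0.0

E = np.zeros((n + 1, n + 1))
E[n, n] = 1.0
# Dual slack matrix: sum_j lam_j Qcal^j + alpha I + beta E - Qcal(x);
# strict feasibility means it is positive definite.
S = sum(l * Qm for l, Qm in zip(lam, Qj)) + alpha * np.eye(n + 1) + beta * E - Qx
min_eig = np.linalg.eigvalsh(S).min()
print("smallest eigenvalue of the dual slack matrix:", min_eig)
```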

1.7 Proof of Lemma 12

We analyze the variation of the objective function with respect to the variable x. Since \(x^* \in \mathcal {X}\) is a feasible value for the variable x, the direction \(h = x^* - x^k\) is admissible at \(x^k\) in problem (21). As F(x) is convex over \(\mathbb {R}^m\), the directional derivative \(F'(x^k,h) = \lim \limits _{t\rightarrow 0^+} \frac{F(x^k + t h) - F(x^k)}{t}\) is well-defined. By optimality of \(x^k\), the directional derivative of the function \(F(x) + \frac{\mu _k}{2} \Vert x - \hat{x}^k \Vert ^2\) at \(x^k\) in the direction h is non-negative, i.e., \(F'(x^k,h) + \mu _k (x^k- \hat{x}^k)^\top h \ge 0\). By convexity of F(x), \(F(x^*) - F(x^k) \ge F'(x^k,h)\). Combining these two inequalities yields \(F(x^*) - F(x^k) \ge -\mu _k (x^k - \hat{x}^k)^\top (x^* - x^k)\), i.e., \(F(x^k) \le F( x^{*}) + \mu _k (x^k - \hat{x}^k)^\top (x^* - x^k)\).
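A numerical sketch of this inequality, under assumptions not taken from the paper: \(F(x) = \frac{1}{2}\Vert x - \text{center} \Vert ^2\), \(\mathcal {X} = [-1,1]^m\), and problem (21) reduced to the proximal step \(\min _{x \in \mathcal {X}} F(x) + \frac{\mu _k}{2}\Vert x - \hat{x}^k \Vert ^2\) with no further constraints. Since the argument only uses that \(x^*\) is feasible, the sketch checks the inequality against many random feasible points rather than a single optimum. The helper `prox_step` is hypothetical.

```python
import numpy as np

# Toy instance (assumption): F(x) = 0.5 * ||x - center||^2, X = [-1, 1]^m, and
# problem (21) reduced to min_{x in X} F(x) + (mu_k / 2) * ||x - x_hat||^2.


def prox_step(center, x_hat, mu_k):
    """Minimizer of the separable strongly convex quadratic over the box X."""
    # Per coordinate: stationary point (center_i + mu_k x_hat_i) / (1 + mu_k), then clip.
    return np.clip((center + mu_k * x_hat) / (1.0 + mu_k), -1.0, 1.0)


def F(x, center):
    return 0.5 * np.sum((x - center) ** 2)


rng = np.random.default_rng(5)
m, mu_k = 4, 0.7
center = 2.0 * rng.normal(size=m)
x_hat = rng.uniform(-1.0, 1.0, size=m)
x_k = prox_step(center, x_hat, mu_k)

slacks = []
for _ in range(1000):
    x_star = rng.uniform(-1.0, 1.0, size=m)     # any feasible point of X
    rhs = F(x_star, center) + mu_k * (x_k - x_hat) @ (x_star - x_k)
    slacks.append(rhs - F(x_k, center))         # should be >= 0 by the lemma
print("smallest slack:", min(slacks))
```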

1.8 Proof of Lemma 13

Let \(t^+ = \max \{ t,0 \}\) denote the positive part of a real number t. Since \(\phi \) is continuous on the compact set \(\mathcal {X}\), the sequence \(\phi (x^k)^+\) is bounded, and thus admits at least one accumulation value \(\ell \). We prove that \(\ell = 0\). Let \(\psi :\mathbb {N} \rightarrow \mathbb {N}\) be any increasing function such that \(\phi (x^{\psi (k)})^+ \rightarrow \ell \). By compactness of \(\mathcal {X}\) (resp. \(\mathcal {Y}\)), we may also assume, up to extracting a further subsequence, that \(x^{\psi (k)} \rightarrow x \in \mathcal {X}\) (resp. \(y^{\psi (k)} \rightarrow y \in \mathcal {Y}\)). By \((*)\), \(G(x^{\psi (k)},y^j) \le 0\) for all \(j \in \{0,\dots , \psi (k) - 1\}\); in particular, since \(\psi (k-1) \le \psi (k) - 1\), we have \(G(x^{\psi (k)},y^{\psi (k-1)}) \le 0\). We deduce that \(G(x^{\psi (k)},y^{\psi (k)}) \le G(x^{\psi (k)},y^{\psi (k)}) - G(x^{\psi (k)},y^{\psi (k-1)}),\) and therefore, since the positive part \(t \mapsto t^+\) is non-decreasing,

\[ G(x^{\psi (k)},y^{\psi (k)})^+ \le \Bigl ( G(x^{\psi (k)},y^{\psi (k)}) - G(x^{\psi (k)},y^{\psi (k-1)})\Bigr )^+. \tag{A7} \]

According to the definition of the \(\delta \)-oracle, Eq. (1) yields \(\phi (x^{\psi (k)}) - G(x^{\psi (k)},y^{\psi (k)}) \le \delta |\phi (x^{\psi (k)}) |\). If \(\phi (x^{\psi (k)}) \ge 0\), this means that \( \phi (x^{\psi (k)}) - G(x^{\psi (k)},y^{\psi (k)}) \le \delta \, \phi (x^{\psi (k)})\), i.e., \(\phi (x^{\psi (k)}) \le \frac{1}{1 - \delta } G(x^{\psi (k)},y^{\psi (k)})\). If \(\phi (x^{\psi (k)}) < 0\), then \(\phi (x^{\psi (k)})^+ = 0\); both cases are therefore covered by \(\phi (x^{\psi (k)})^+ \le \frac{1}{1 - \delta } G(x^{\psi (k)},y^{\psi (k)})^+\). As \(\frac{1}{1-\delta } > 0\), we obtain from Eq. (A7) that \(\phi (x^{\psi (k)})^+ \le \frac{1}{1 - \delta } \Bigl ( G(x^{\psi (k)},y^{\psi (k)}) - G(x^{\psi (k)},y^{\psi (k-1)})\Bigr )^+\). Since \((x^{\psi (k)},y^{\psi (k)})\) and \((x^{\psi (k)},y^{\psi (k-1)})\) both converge to (x, y), the continuity of G, \(\phi \), and the positive part gives \(\ell = \phi (x)^+ \le \frac{1}{1 - \delta } \left( G(x,y) - G(x,y)\right) ^+ = 0.\) Hence 0 is the only accumulation value of the bounded sequence \(\phi (x^k)^+\), and we deduce that \(\phi (x^k)^+ \rightarrow 0\) [1, Ex. 2.5.4].
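The case analysis behind \(\phi ^+ \le \frac{1}{1-\delta } G^+\) involves only two scalars, \(\phi = \phi (x^{\psi (k)})\) and \(g = G(x^{\psi (k)},y^{\psi (k)})\), with \(g \le \phi \) (since \(\phi \) is a maximum) and \(\phi - g \le \delta |\phi |\). This pure-Python sketch samples such pairs for an assumed \(\delta = 0.3\) and records the smallest slack of the bound. It checks the scalar inequality only, not the convergence of the full cutting-planes algorithm; the function names are hypothetical.

```python
import random


def pos(t):
    """Positive part t^+ = max(t, 0)."""
    return max(t, 0.0)


def check_oracle_bound(delta, trials=10000, seed=6):
    """Sample pairs (phi, g) with g <= phi and phi - g <= delta * |phi|, i.e. an
    oracle value g that is delta-accurate for phi, and return the smallest slack
    of the bound phi^+ <= g^+ / (1 - delta)."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(trials):
        phi = rng.uniform(-5.0, 5.0)
        g = phi - rng.uniform(0.0, delta * abs(phi))   # delta-oracle output value
        worst = min(worst, pos(g) / (1.0 - delta) - pos(phi))
    return worst


slack = check_oracle_bound(delta=0.3)
print("smallest slack:", slack)
```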

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Oustry, A., Cerulli, M. Convex semi-infinite programming algorithms with inexact separation oracles. Optim Lett (2024). https://doi.org/10.1007/s11590-024-02148-3
