Abstract

We give a simple and natural method for computing approximately optimal solutions for minimizing a convex function f over a convex set K given by a separation oracle. Our method utilizes the Frank–Wolfe algorithm over the cone of valid inequalities of K and subgradients of f. Under the assumption that f is L-Lipschitz and that K contains a ball of radius r and is contained inside the origin centered ball of radius R, using \(O\left( \frac{(RL)^2}{\varepsilon ^2} \cdot \frac{R^2}{r^2}\right) \) iterations and calls to the oracle, our main method outputs a point \(x \in K\) satisfying \(f(x) \le \varepsilon + \min _{z \in K} f(z)\). Our algorithm is easy to implement, and we believe it can serve as a useful alternative to existing cutting plane methods. As evidence towards this, we show that it compares favorably in terms of iteration counts to the standard LP based cutting plane method and the analytic center cutting plane method, on a testbed of combinatorial, semidefinite and machine learning instances.

1 Introduction

We consider the problem of minimizing a convex function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) over a compact convex set \(K \subseteq \mathbb {R}^n\). We assume that K contains an (unknown) Euclidean ball of radius \(r > 0\) and is contained inside the origin centered ball of radius \(R > 0\), and that f is L-Lipschitz. We have first-order access to f that yields f(x) and a subgradient of f at x for any given x. Moreover, we only have access to K through a separation oracle (SO), which, given a point \(x \in \mathbb {R}^n\), either asserts that \(x \in K\) or returns a linear constraint valid for K but violated by x.

Convex optimization in the SO model is one of the fundamental settings in optimization. The model is relevant for a wide variety of implicit optimization problems, where an explicit description of the defining inequalities for K is either too large to store or not fully known. The SO model was first introduced in [29] where it was shown that an additive \(\varepsilon \)-approximate solution can be obtained using \(O(n\log (LR/(\varepsilon r)))\) queries via the center of gravity method and \(O(n^2\log (LR/(\varepsilon r)))\) queries via the ellipsoid method. This latter result was used by Khachiyan [27] to give the first polynomial time method for linear programming. The study of oracle-type models was greatly extended in the classic book of Grötschel et al. [23], where many applications to combinatorial optimization were provided. Further progress on the SO model was given by Vaidya [36], who showed that the \(O(n \log (LR/(\varepsilon r)))\) oracle complexity can be efficiently achieved using the so-called volumetric barrier as a potential function, where the best current running time for such methods was given very recently [25, 28].

From the practical perspective, two of the most popular methods in the SO model are the standard linear programming (LP) based cutting plane method, independently discovered by Kelley [26], Goldstein-Cheney [9] as well as Gomory [22] (in the integer programming context), and the analytic center cutting plane method [34] (ACCPM).

The LP based cutting plane method, which we henceforth dub the standard cut loop, proceeds as follows: starting with finitely many linear underestimators of f and linear constraints valid for K, in each iteration it solves a linear program that minimizes the lower envelope of f subject to the current linear relaxation of K. The resulting point x is then used to query f and the SO to obtain a new underestimator for f and a new constraint valid for K. Note that if f is a linear function, it repeatedly minimizes f over linear relaxations of K. While it is typically fast in practice, it can be unstable, and no general quantitative convergence guarantees are known for the standard cut loop.

To link to integer programming, in that context K is the convex hull of integer points of some polytope P and the objective is often linear, and the method is initialized with a linear description of P. A crucial difference there is that the separator SO is generally only efficient when queried at vertices of the current relaxation.

ACCPM is a barrier based method, in which the next query point is the minimizer of the barrier for the current inequalities in the system. ACCPM is in general a more stable method with provable complexity guarantees. Interestingly, while variants of ACCPM with \(O\left( n \log (RL/(r\varepsilon ))^2\right) \) convergence exist, achieved by judiciously dropping constraints [1], the more practical variants have worse guarantees. For instance, if K is the ball of radius R, the standard variant of ACCPM is only shown to achieve \(O(n(RL/\varepsilon )^2 \log (RL/\varepsilon ))\) convergence [30].

In this paper, we describe a new method for convex optimization in the SO model that computes an additive \(\varepsilon \)-approximate solution within \(O\left( \frac{(RL)^2}{\varepsilon ^2} \cdot \frac{R^2}{r^2}\right) \) iterations. Our algorithm is easy to implement, and we believe it can serve as a useful alternative to existing methods. In our experimental results, we show that it compares favorably in terms of iteration counts to the standard cut loop and the analytic center cutting plane method, on a testbed of combinatorial, semidefinite and machine learning instances.

Before explaining our approach, we review the relevant work in related models. To begin, there has been a tremendous amount of work in the context of first-order methods [3, 5], where the goal is to minimize a possibly complicated function, given by a gradient oracle, over a simple domain K (e.g., the simplex, cube, \(\ell _2\) ball). These methods tend to have cheap iterations and to achieve \(\textrm{poly}(1/\varepsilon )\) convergence rates. They are often superior in practice when the requisite accuracy is low or moderate, e.g., within \(1\%\) of optimal. For these methods, often variants of (sub-)gradient descent, it is generally assumed that computing (Euclidean) projections onto K as well as linear optimization over K are easy. If one only assumes access to a linear optimization (LO) oracle on K, K can become more interesting (e.g., the shortest-path or spanning-tree polytope). In this context, one of the most popular methods is the so-called Frank–Wolfe algorithm [19] (see [24] for a modern treatment), which iteratively computes a convex combination of vertices of K to obtain an approximate minimizer of a smooth convex function.

In the context of combinatorial optimization, there has been a considerable line of work on solving (implicit) packing and covering problems using the so-called multiplicative weights update (MWU) framework [20, 31, 33]. In this framework, one must be able to implement an MWU oracle, which in essence computes optimal solutions for the target problem after the “difficult” constraints have been aggregated according to the current weights. This framework has been applied for getting fast \((1 \pm \varepsilon )\)-approximate solutions to multi-commodity flow [20, 33], packing spanning trees [8], the Held–Karp approximation for TSP [7], and more, where the MWU oracle computes shortest paths, minimum cost spanning trees, minimum cuts respectively in a sequence of weighted graphs. The MWU oracle is in general just a special type of LO oracle, which can often be interpreted as a SO that returns a maximally violated constraint. While certainly related to the SO model, it is not entirely clear how to adapt MWU to work with a general SO, in particular in settings unrelated to packing and covering.

A final line of work, which directly inspires our work, has examined simple iterative methods for computing a point in the interior of a cone \(\Sigma \) that directly apply in the SO model. The application of simple iterative methods for solving conic feasibility problems can be traced to Von Neumann in 1948 (see [15]), and a variant of this method, the perceptron algorithm [32] is still very popular today. Von Neumann’s algorithm computes a convex combination of the defining inequalities of the cone, scaled to be of unit length, of nearly minimal Euclidean norm. The separation oracle is called to find an inequality violated by the current convex combination, and this inequality is then used to make the current convex combination shorter, in an analogous way to Frank–Wolfe. This method is guaranteed to find a point in the cone in \(O\left( 1/\rho ^2\right) \) iterations, where \(\rho \) is the so-called width of \(\Sigma \) (the radius of the largest ball contained in \(\Sigma \) centered at a point of norm 1). Starting in 2004, polynomial time variants of this and related methods (i.e., achieving \(\log (1/\rho )\) dependence) have been found [6, 10, 17], which iteratively “rescale” the norm to speed up the convergence. These rescaled variants can also be applied in the oracle setting [4, 11, 14] with appropriate adaptations. The main shortcoming of existing conic approaches is that they are currently not well-adapted for solving optimization problems rather than feasibility problems.

Our approach. In this work, we build upon von Neumann’s approach and utilize the Frank–Wolfe algorithm over the cone of valid inequalities of K as well as the subgradients of f in a way that yields a clean, simple, and flexible framework for solving general convex optimization problems in the SO model. For ease of exposition, let us assume that \(f(x) = \langle c,x \rangle \) is a linear function and that we know an upper bound \(\textrm{UB}\) on the minimum of f over K. Given some linear inequalities \(\langle a_i,x \rangle \le b_i\) that are valid for all \(x \in K\), our goal is to find convex combinations p of the homogenized points \((c,\textrm{UB})\) and \((a_i,b_i)\) that are “close” to the origin. Note that if \(p = \textbf{0}\), the fact that K is full-dimensional implies that \((c,\textrm{UB})\) appears with a nonzero coefficient and hence \((-c,-\textrm{UB})\) is a nonnegative combination of the points \((a_i,b_i)\), which in turn shows that \(\langle -c, x \rangle \le -\textrm{UB}\) is implied by the linear inequalities \(\langle a_i,x \rangle \le b_i\), i.e., \(\textrm{UB}\) is equal to the minimum of f over K. In view of this, we will consider a potential \(\varPhi :\mathbb {R}^{n+1} \rightarrow \mathbb {R}_+\) with the property that if \(\varPhi (p)\) is sufficiently small, then the convex combination will yield an explicit certificate that \(\textrm{UB}\) is close to the minimum of f over K.

Given a certain convex combination p, note that the gradient of \(\varPhi \) at p provides information about whether moving towards one of the known points will (significantly) decrease \(\varPhi (p)\). However, if no such known point exists, it turns out that the “dehomogenization” of the gradient (a scaling of its projection onto the first n coordinates) is a natural point \(x \in \mathbb {R}^n\) to query the SO with. In fact, if \(x \in K\), it will have improved objective value with respect to f. Otherwise, the SO will provide a linear inequality such that moving towards its homogenization decreases \(\varPhi (p)\).

In this work, we will show that the above paradigm immediately yields a rigorous algorithm for various natural choices of \(\varPhi \) and scalings of inequalities. We will also see that general convex functions can be directly handled in the same manner by simply replacing \((c,\textrm{UB})\) with all subgradient cuts of f learned throughout the iterations. The same applies to pure feasibility problems for which we set \(f = \textbf{0}\). The convergence analysis of our algorithm is simple and based on standard estimates for the Frank–Wolfe algorithm.

Besides its conceptual simplicity and its difference from existing methods for convex optimization in the SO model, we also regard it as a practical alternative. In fact, in terms of iterations, our vanilla implementation in Julia performs similarly to, and often even better than, the standard cut loop and the analytic center cutting plane method evaluated on a testbed of oracle-based linear optimization problems for matching problems, semidefinite relaxations of the maximum cut problem, and LPBoost. Moreover, the flexibility of our framework leaves several degrees of freedom to obtain optimized implementations that outperform our naive implementation.

A preliminary version of this article appeared in the conference proceedings of IPCO 2022 [13]. The current article extends that version by providing missing proofs and presenting more detailed numerical experiments. Moreover, we generalize Algorithm 1 by no longer requiring explicit knowledge of the Lipschitz constant L.

2 Algorithm

Recall that we are given first-order access to a convex function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) that we want to minimize over a convex body \(K \subseteq \mathbb {R}^n\). In the case where f is not differentiable, with a slight abuse of notation we interpret \(\nabla f(x)\) to be any subgradient of f at x. We can access K by a separation oracle that, given a point \(x \in \mathbb {R}^n\), either asserts that \(x \in K\) or returns a point \((a,b) \in \mathcal {A}\subseteq \mathbb {R}^{n+1}\) with \(\langle a,x \rangle > b\) such that \(\langle a,y \rangle \le b\) holds for all \(y \in K\). Here, \(\langle \cdot ,\cdot \rangle \) denotes the standard scalar product and we assume that all points in \(\mathcal {A}\) correspond to linear constraints valid for K. To state our algorithm, let \(\Vert \cdot \Vert \) denote any norm on \(\mathbb {R}^{n+1}\) and \(\Vert \cdot \Vert _*\) its dual norm. Moreover, let \(\varPhi :\mathbb {R}^{n+1} \rightarrow \mathbb {R}_+\) be any strictly convex and differentiable function with \(\min _{x \in \mathbb {R}^{n+1}} \varPhi (x) = \varPhi (\textbf{0}) = 0\). Our method is given in Algorithm 1, in which we denote the number of iterations by T for later reference. However, T does not need to be specified in advance, and the algorithm may be stopped at any time, e.g., when a solution or bound of desired accuracy has been found.

In Line 5, \(\nabla \varPhi (p_t)[1:n]\) denotes the first n components of \(\nabla \varPhi (p_t)\), and \(\nabla \varPhi (p_t)[n+1]\) denotes the last component of \(\nabla \varPhi (p_t)\). The sets \(A_t\) and \(G_t\) denote the already known/separated inequalities and objective gradients during iteration t.

Lemma 1

When \(x_t \in \mathbb {R}^n\) is computed in iteration t of Algorithm 1, it is well-defined and we have \(\langle c, x_t \rangle \le d\) for every \((c,d) \in A_{t} \cup G_t\).

Proof

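Since \(p_t\) minimizes \(\varPhi \) over \({\text {conv}}(A_t \cup G_t)\), for every \(q \in {\text {conv}}(A_{t} \cup G_t)\) we have \(\langle \nabla \varPhi (p_t), q-p_t \rangle \ge 0\). In particular, if \(p_t \ne \textbf{0}\), then we have

\[ \langle \nabla \varPhi (p_t), q \rangle \ge \langle \nabla \varPhi (p_t), p_t \rangle > 0, \tag{1} \]

where the strict inequality holds because \(\varPhi \) is strictly convex with unique minimizer \(\textbf{0}\).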

First, apply (1) to \(q = (\textbf{0},1)/\Vert (\textbf{0},1)\Vert _* \in A_t\) and conclude \(\nabla \varPhi (p_t)[n+1] > 0\). This makes sure that \(x_t\) can be computed. Second, we apply Inequality (1) to \(q = (c,d) \in A_t \cup G_t\) and find that \( d - \langle c, x_t \rangle = \frac{1}{\nabla \varPhi (p_t)[n+1]}\langle \nabla \varPhi (p_t), (c,d) \rangle > 0, \) thus \(x_t\) satisfies \(\langle c, x_t \rangle \le d\) for all \((c,d)\in A_t \cup G_t\). \(\square \)

Notice that throughout our algorithm \(\textrm{UB}\) is always an upper bound on \(\textrm{OPT}= \min _{x \in K} f(x)\). We remark that when \(\textrm{UB}\) is returned in Line 9, then \(\nabla f(x_t) = \textbf{0}\) and hence \(f(x_t) = \textrm{UB}= \min _{x \in \mathbb {R}^n} f(x) = \textrm{OPT}\). In what follows, we see that \(\textrm{UB}\) is generally getting closer to \(\textrm{OPT}\) as the number of iterations increases. To this end, we will first show that \(\varPhi (p_t)\) decreases at a specific rate.

Note that, for the sake of presentation, in Line 3 we require \(p_t\) to be the convex combination of minimum \(\varPhi \)-value. As is implicit in the proof of Lemma 2, it is not necessary to compute such a minimum to ensure the desired convergence rate. More precisely, it suffices to let \(p_t\) be a suitable convex combination of \(p_{t-1}\) and some \({(c,d) \in A_t \cup G_t}\) with \(\langle \nabla \varPhi (p_{t-1}), (c,d) \rangle < 0\). If the last coordinate of \(p_{t-1}\), as discussed in the above proof, is not positive, then such an update can be made towards \((\textbf{0},1)/\Vert (\textbf{0},1)\Vert _* \in A_t\). Any such update will significantly decrease \(\varPhi (p_t)\), and the computation in Line 3 is guaranteed to make at least that much progress. This shows that simple updates of \(p_t\), which may be preferable in practice, still suffice to achieve the claimed convergence rates.
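The simple update described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: it uses the Euclidean potential and norm of Theorem 2, replaces the exact minimization in Line 3 by an exact line search towards a single point, and stores every point \((a,b)\) in the scaled coordinates \(u = (Ra,b)/\sqrt{2}\), so that \(\Vert (a,b)\Vert _*\) is the Euclidean length of u and \(\varPhi = \frac{1}{2}\Vert u\Vert _2^2\). The names `scaled_unit`, `oracle_fw` and `box_oracle`, as well as the toy instance, are hypothetical.

```python
import math

def scaled_unit(a, b, R):
    """Map (a, b) to u = (R*a, b)/sqrt(2) and scale u to Euclidean length 1."""
    u = [R * ai / math.sqrt(2.0) for ai in a] + [b / math.sqrt(2.0)]
    nrm = math.sqrt(sum(ui * ui for ui in u))
    return [ui / nrm for ui in u]

def oracle_fw(f, grad_f, separate, n, R, T):
    """Run at most T simple Frank-Wolfe steps; return (UB, best point, potentials)."""
    e = scaled_unit([0.0] * n, 1.0, R)      # trivial valid inequality <0, x> <= 1
    p = e[:]                                # current convex combination
    ub, best, phis = math.inf, None, []
    for _ in range(T):
        phi = 0.5 * sum(pi * pi for pi in p)
        phis.append(phi)
        if phi == 0.0:
            break                           # p = 0: UB is certified optimal
        if p[n] <= 0.0:
            q = e                           # restore a positive last coordinate
        else:
            x = [-R * p[i] / p[n] for i in range(n)]   # dehomogenized gradient
            cut = separate(x)
            if cut is None:                 # x feasible: update UB, add subgradient cut
                g = grad_f(x)
                if f(x) < ub:
                    ub, best = f(x), x
                if all(gi == 0.0 for gi in g):
                    break                   # zero subgradient: x minimizes f
                q = scaled_unit(g, sum(gi * xi for gi, xi in zip(g, x)), R)
            else:                           # x infeasible: add the violated inequality
                q = scaled_unit(cut[0], cut[1], R)
        d = [pi - qi for pi, qi in zip(p, q)]
        dd = sum(di * di for di in d)
        if dd == 0.0:
            break
        # Exact line search for the quadratic potential on the segment [p, q].
        lam = min(1.0, max(0.0, sum(pi * di for pi, di in zip(p, d)) / dd))
        p = [(1.0 - lam) * pi + lam * qi for pi, qi in zip(p, q)]
    return ub, best, phis

def box_oracle(x):
    """Separation oracle for the box [-1, 1]^n (toy example)."""
    i = max(range(len(x)), key=lambda j: abs(x[j]))
    if abs(x[i]) <= 1.0:
        return None
    a = [0.0] * len(x)
    a[i] = 1.0 if x[i] > 0 else -1.0
    return a, 1.0                           # sign(x_i) * x_i <= 1 is violated by x

# Toy instance: minimize x1 + x2 over [-1, 1]^2, which lies in the ball of radius 2.
ub, best, phis = oracle_fw(lambda x: x[0] + x[1], lambda x: [1.0, 1.0],
                           box_oracle, n=2, R=2.0, T=200)
```

Because the line search may choose a step of length zero, the recorded potentials never increase, and `ub` is always the objective value of a point that the oracle accepted.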

Lemma 2

Suppose that \(\varPhi \) is 1-smooth with respect to \(\Vert \cdot \Vert _*\). Then for every \(t = 1,\dots ,T\), Algorithm 1 satisfies \(\varPhi (p_t) \le \frac{8}{t+2}\).

Proof

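Suppose that \(p_t \ne \textbf{0}\). Then, by Line 3 in Algorithm 1, \(\Vert p_t\Vert _* \le 1\) since \(p_t\) is a convex combination of points with norm 1. We claim that in every iteration with \(p_t \ne \textbf{0}\) we add a point \(q_t \in A_{t+1} \cup G_{t+1}\) with \(\Vert q_t\Vert _* = 1\) such that

\[ \langle \nabla \varPhi (p_t), q_t \rangle \le 0 \tag{2} \]

holds. To see this, recall from the proof of the previous lemma that \(\nabla \varPhi (p_t)[n+1] > 0\) holds. If \(x_t \in K\), then \(q_t = g_t / \Vert g_t\Vert _*\) is added to \(G_{t+1}\), which satisfies

\[ \langle \nabla \varPhi (p_t), q_t \rangle = \frac{1}{\Vert g_t\Vert _*} \Big ( \langle \nabla \varPhi (p_t)[1:n], \nabla f(x_t) \rangle + \nabla \varPhi (p_t)[n+1] \, \langle \nabla f(x_t), x_t \rangle \Big ) = 0, \]

where the last equality is due to the definition of \(x_t\). Otherwise, our algorithm receives some \((a,b) \in \mathcal {A}\) with \(\langle a, x_t \rangle > b\) and \(\Vert (a,b)\Vert _*=1\) and hence setting \(q_t = (a,b) \in A_{t+1}\) we see that

\[ \langle \nabla \varPhi (p_t), q_t \rangle = \nabla \varPhi (p_t)[n+1] \, \big ( b - \langle a, x_t \rangle \big ) < 0 \]

holds. This establishes the claim.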

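Next, recall that \(\varPhi \) is 1-smooth with respect to \(\Vert \cdot \Vert _*\) if

\[ \varPhi (y) \le \varPhi (x) + \langle \nabla \varPhi (x), y - x \rangle + \tfrac{1}{2} \Vert y - x\Vert _*^2 \tag{3} \]

holds for all \(x,y \in \mathbb {R}^{n+1}\). In particular, for \(x=\textbf{0}\) and \(y=p_1\) this yields

\[ \varPhi (p_t) \le \varPhi (p_1) \le \varPhi (\textbf{0}) + \langle \nabla \varPhi (\textbf{0}), p_1 \rangle + \tfrac{1}{2} \Vert p_1\Vert _*^2 = \tfrac{1}{2} \Vert p_1\Vert _*^2 \le \tfrac{1}{2}, \]

where the first inequality follows from Line 3 in Algorithm 1 as well as \(A_1 \subseteq A_t\) and \(G_1 \subseteq G_t\). Moreover, setting \(\lambda :=\frac{1}{4} \varPhi (p_t)\) we obtain \(\lambda \le 1\) and

\[ \begin{aligned} \varPhi (p_{t+1}) &\le \varPhi \big ( (1-\lambda ) p_t + \lambda q_t \big ) \\ &\le \varPhi (p_t) + \lambda \langle \nabla \varPhi (p_t), q_t - p_t \rangle + \tfrac{\lambda ^2}{2} \Vert q_t - p_t\Vert _*^2 \\ &\le \varPhi (p_t) - \lambda \langle \nabla \varPhi (p_t), p_t \rangle + \tfrac{\lambda ^2}{2} \Vert q_t - p_t\Vert _*^2 \\ &\le (1-\lambda ) \varPhi (p_t) + \tfrac{\lambda ^2}{2} \Vert q_t - p_t\Vert _*^2 \\ &\le (1-\lambda ) \varPhi (p_t) + 2 \lambda ^2, \end{aligned} \]

where the second inequality follows from (3), the third inequality follows from (2), the fourth inequality follows from convexity and \(\varPhi (\textbf{0}) = 0\), and the last inequality holds since \(\Vert p_t\Vert _* \le 1\) and \(\Vert q_t\Vert _* \le 1\). From this we derive

\[ \varPhi (p_{t+1}) \le \varPhi (p_t) \left( 1 - \tfrac{1}{8} \varPhi (p_t) \right) \quad \text {and hence} \quad \frac{1}{\varPhi (p_{t+1})} \ge \frac{1}{\varPhi (p_t)} + \frac{1}{8}. \]

It follows that \(\frac{1}{\varPhi (p_t)} \ge \frac{1}{\varPhi (p_1)} + \frac{1}{8}(t-1)\) for all t, which yields the claim since \(\varPhi (p_1) \le \frac{1}{2}\). \(\square \)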
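The rate in Lemma 2 can be checked numerically. The sketch below assumes that the per-step decrease takes the form \(\varPhi (p_{t+1}) \le \varPhi (p_t) - \varPhi (p_t)^2/8\), which is one way to obtain the inequality \(\frac{1}{\varPhi (p_t)} \ge \frac{1}{\varPhi (p_1)} + \frac{1}{8}(t-1)\) stated at the end of the proof. It iterates this recursion with equality, starting from \(\varPhi (p_1) = \frac{1}{2}\), and compares the result with \(\frac{8}{t+2}\). The function name is hypothetical.

```python
def worst_case_potentials(T, phi1=0.5):
    """Iterate phi <- phi - phi^2 / 8, i.e. the assumed slowest decrease per step."""
    phis = [phi1]
    for _ in range(T - 1):
        phis.append(phis[-1] - phis[-1] ** 2 / 8.0)
    return phis

phis = worst_case_potentials(1000)

# phis[t - 1] plays the role of Phi(p_t); compare with the bound 8 / (t + 2).
within_bound = all(phi <= 8.0 / (t + 2) for t, phi in enumerate(phis, start=1))
```

Even in this slowest case the sequence stays below \(\frac{8}{t+2}\), so the bound of the lemma is not tight for small t.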

The following lemma yields conditions under which a small value of \(\varPhi (p_t)\) implies that \(\textrm{UB}\) is close to \(\textrm{OPT}\). Note in particular that it proves that if \(\Vert p_t\Vert = 0\) then \(\textrm{UB}= \textrm{OPT}\). Recall that a function f is L-Lipschitz on a set \(K \subset \mathbb {R}^n\) when \(|f(x) - f(y)| \le L\Vert x-y\Vert _2\) for all \(x,y \in K\).

Lemma 3

Assume that \(\Vert (x,-1)\Vert \le 2\) holds for every \(x \in K\), and there exist \(z \in K\) and \(\alpha \in (0,1]\) such that \(\langle (a,b), (-z,1) \rangle \ge \alpha \Vert (-z,1)\Vert \Vert (a,b)\Vert _*\) holds for every \((a,b) \in \mathcal {A}\cup \{(\textbf{0},1)/\Vert (\textbf{0},1)\Vert _*\}\). If \(\Vert p_T\Vert _* \le \alpha /2\) in Algorithm 1, then the returned value satisfies \(\textrm{UB}\ge \textrm{OPT}\ge \textrm{UB}- \frac{8 U \Vert p_T\Vert _*}{\alpha }\), where \(U :=\max _{x \in K} \Vert (\nabla f(x), \langle \nabla f(x), x \rangle )\Vert _*\).

Proof

Let \(x^* \in K\) minimize f(x) over \(x\in K\) and let \(F \subset [T-1]\) be the set of iterations (except the last one) in which \(x_t \in K\). Now write the point \(p_T\) as a convex combination

where \(\lambda \ge 0, \gamma \ge 0\) and \(\Vert (\lambda ,\gamma )\Vert _1 = 1\), and where \( g_t = (\nabla f(x_t), \langle \nabla f(x_t), x_t \rangle )\) as defined in Algorithm 1. Then we have

Here, the first inequality arises from convexity of f, the second from the facts that \(U \ge 0\) and that \(x^* \in K\) satisfies \(\langle (a,b), (-x^*,1) \rangle \ge 0\) for every \((a,b) \in A_T\), and the third from the Cauchy–Schwarz inequality. In particular, we find that \(\min _{t \in F} f(x_t) - f(x^*) \le \frac{2 U \Vert p_T\Vert _*}{\sum _{t \in F} \gamma _t}\) whenever \({\sum _{t \in F}\gamma _t > 0}\). To lower bound this latter quantity, we use the assumptions on z to derive the inequalities

Now divide through by \(\Vert (-z,1)\Vert \) to find \(\alpha (1-\sum _{t\in F}\gamma _t) \le \Vert p_T\Vert _* + \sum _{t \in F} \gamma _t\). Hence, if \(\Vert p_T\Vert _* \le \frac{\alpha }{2} \) then \(\alpha /2 \le (\alpha + 1)\sum _{t \in F}\gamma _t \le 2\sum _{t \in F}\gamma _t\). This lower bound on \(\sum _{t \in F}\gamma _t\) suffices to prove the lemma. \(\square \)

Combining the previous two lemmas, we obtain the following convergence rate of our algorithm:

Theorem 1

Assume that \(\beta > 0\) is such that \(\varPhi (x) \ge \beta \Vert x\Vert ^2_*\) for all \(x \in \mathbb {R}^{n+1}\). Under the assumptions of Lemmas 2 and 3, Algorithm 1 computes, for every \(T \ge \frac{32}{\beta \alpha ^2}\), a value \(\textrm{UB}< \infty \) satisfying \(\textrm{UB}\ge \min _{x \in K} f(x) \ge \textrm{UB}- \frac{32U}{\alpha \sqrt{\beta (T+2)}}\).

Proof

After T iterations, we have \(\beta \Vert p_T\Vert _*^2 \le \varPhi (p_T) \le \frac{8}{T+2} \le \beta \alpha ^2/4\) per Lemma 2. Since then \(\Vert p_T\Vert _* \le \frac{\sqrt{8}}{\sqrt{\beta (T+2)}} \le \alpha /2\), Lemma 3 tells us that \(\textrm{OPT}\ge \textrm{UB}- \frac{32 U}{\sqrt{\beta (T+2)}\alpha }\). \(\square \)

Theorem 2

Let \(K \subset \mathbb {R}^n\) be a convex body satisfying \(z + r\mathbb B_2^n \subset K \subset R\mathbb B_2^n\), given by a separation oracle \(\mathcal {A}\), and let \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) be an L-Lipschitz convex function given by a subgradient oracle.

Apply Algorithm 1 to the function f using norm \(\Vert (x,y)\Vert :=\sqrt{2} \Vert (x/R,y)\Vert _2\) and potential \(\varPhi (a,b) :=\frac{1}{4} \Vert (R a,b)\Vert _2^2\). Then, for every \(\varepsilon > 0\), after \(O\left( \frac{(RL)^2}{\varepsilon ^2} \cdot \frac{R^2}{r^2}\right) \) iterations we have \(\textrm{UB}\ge \min _{x \in K} f(x) \ge \textrm{UB}- \varepsilon \).

Proof

Note that our choice of norm implies that \(\Vert (a,b)\Vert _* = \frac{1}{\sqrt{2}} \Vert (Ra, b)\Vert _2\). We claim that our choice of input satisfies the conditions of Theorem 1 with \(\beta = 1/2\) and \(\alpha = r/4R\). Given the claim, Theorem 1 directly proves the result. To prove the claim, apart from verifying that the bounds on \(\beta \) and \(\alpha \) hold, we must verify smoothness of \(\varPhi \) with respect to the dual norm, a bound of 2 on the norm of \((-x,1)\) for \(x \in K\), as well as \(U \le LR\).

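The setting \(\beta =1/2\) is direct by definition of \(\varPhi \). Since \(\Vert \cdot \Vert _*\) is a Euclidean norm, it is immediate that \(\varPhi \) is 1-smooth with respect to \(\Vert \cdot \Vert _*\). For each \(x \in K\), we may also verify that

\[ \Vert (x,-1)\Vert = \sqrt{2} \, \Vert (x/R,-1)\Vert _2 = \sqrt{2} \sqrt{\Vert x\Vert _2^2/R^2 + 1} \le 2 \]

and

\[ \Vert (\nabla f(x), \langle \nabla f(x), x \rangle )\Vert _* = \tfrac{1}{\sqrt{2}} \Vert (R \nabla f(x), \langle \nabla f(x), x \rangle )\Vert _2 \le \tfrac{1}{\sqrt{2}} \sqrt{R^2 L^2 + L^2 R^2} = LR, \]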

which implies that \(U \le LR\). We now show the lower bound \(\alpha \ge r/4R\). Firstly, we see that

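Next, any (a, b) returned by the oracle is normalized so that \(\Vert (a,b)\Vert _* = 1 \Leftrightarrow \Vert (R a,b)\Vert _2=\sqrt{2}\). Note then that \(\Vert (-z,1)\Vert \Vert (a,b)\Vert _* \le 2\). From here, we observe that

\[ b - \langle a, z \rangle = b - \langle a, z + r a/\Vert a\Vert _2 \rangle + r \Vert a\Vert _2 \ge r \Vert a\Vert _2 \]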

since \(z+r a/\Vert a\Vert _2 \in K\) by assumption. Furthermore, \(b - \langle a, z \rangle \ge b - \Vert a\Vert _2 \Vert z\Vert _2 \ge b - R\Vert a\Vert _2\). Thus, \(b - \langle a, z \rangle \ge \max \{ r\Vert a\Vert _2, b-R\Vert a\Vert _2\}\). We now examine two cases. If \(\Vert a\Vert _2 \ge 1/2R\), then \(b - \langle a, z \rangle \ge r/2R \ge r/4R \cdot \Vert (-z,1)\Vert \Vert (a,b)\Vert _*\). If \(\Vert a\Vert _2 \le 1/2R\), then \(|b| \ge 1\) since \(\Vert (R a,b)\Vert _2^2 = 2\). This gives \(b- \langle a, z \rangle \ge b-R\Vert a\Vert _2 \ge 1/2 \ge r/2R\). Thus, \(\alpha \ge r/4R\), as needed. \(\square \)
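To see which explicit iteration counts the proof gives, one can substitute \(\beta = 1/2\), \(\alpha = r/4R\) and \(U \le LR\) into the two conditions of Theorem 1, namely \(T \ge \frac{32}{\beta \alpha ^2}\) and \(\frac{32U}{\alpha \sqrt{\beta (T+2)}} \le \varepsilon \). The following sketch does exactly that. The function names are hypothetical, and the constants it produces are those of this direct substitution, not necessarily the sharpest ones.

```python
import math

def iterations_needed(R, r, L, eps):
    """Smallest T that satisfies both conditions of Theorem 1 after substitution."""
    beta = 0.5
    alpha = r / (4.0 * R)
    U = L * R
    t_min = 32.0 / (beta * alpha ** 2)                      # T >= 32 / (beta * alpha^2)
    t_gap = (32.0 * U / (alpha * eps)) ** 2 / beta - 2.0    # guaranteed gap <= eps
    return math.ceil(max(t_min, t_gap))

def gap_bound(R, r, L, T):
    """Guaranteed gap 32 U / (alpha * sqrt(beta * (T + 2))) after T iterations."""
    beta, alpha, U = 0.5, r / (4.0 * R), L * R
    return 32.0 * U / (alpha * math.sqrt(beta * (T + 2)))

T = iterations_needed(R=1.0, r=1.0, L=1.0, eps=1.0)   # 32766
```

For \(R = r = L = \varepsilon = 1\) this gives 32766 iterations, which illustrates that the worst-case bound is far more pessimistic than the iteration counts observed in the experiments.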

3 Computational experiments

In this section, we provide a computational comparison of our method with the standard cut loop, the ellipsoid method, and the analytic center cutting plane method on a testbed of linear optimization instances. For comparison purposes, all four methods are embedded into a common cutting plane framework such that the same termination criteria apply, see Algorithm 2 which we describe in the next paragraph in more detail.

3.1 Framework

Our generic cutting plane framework gets the objective \(\langle c, x \rangle \) which is to be minimized, the radius R of an outer ball containing the feasible region K, and two oracles as input. The separation oracle SO is equipped with a set of initial linear inequalities valid for K (such as bounds on variables). These inequalities define an initial polyhedral relaxation P of K, which will be refined whenever new inequalities are separated. The point oracle PO implements the points that are to be separated by the different methods, e.g., the oracle for the standard cut loop will return a point in \(\textrm{argmin}\{\langle c, x \rangle : x \in P\}\). Moreover, for each instance, we will be given a finite upper bound \(\textrm{UB}\) and incorporate the linear inequality \(\langle c, x \rangle \le \textrm{UB}\) in a similar way. This upper bound gets updated whenever a feasible solution of better objective value was found. Our framework collects all inequalities queried by the current method and computes the resulting lower bound on the optimum value in every iteration. Each method is stopped whenever the difference of upper and lower bound is below \(10^{-3}\).

We will also inspect the possibility of a smart oracle that, regardless of whether a given point x is feasible, may still provide a valid inequality as well as a feasible solution (for instance, by modifying x in a simple way so that it becomes feasible). Such an oracle is often automatically available and can have a positive impact on the performance of the considered algorithms. For the problems we consider, the actual implementation of a smart oracle will be specified below.

3.2 Implementation

The framework has been implemented in Julia 1.6.2 using JuMP and Gurobi 9.1.1. To guarantee a fair comparison, all four methods have been implemented in a straightforward fashion. We use the textbook implementation of the ellipsoid method, and Badenbroek’s implementation of the analytic center cutting plane method [2]. Our method is implemented in the spirit of Theorem 2, where \(p_t\) is computed using Gurobi. Note that the numbers reported in Table 1 deviate slightly from those reported in [13], because we have rerun the experiments in a different computational environment and Algorithm 1 no longer requires normalizing objective gradients.

3.3 Test sets

We use three problem classes in our experiments: linear programming formulations of the maximum-cardinality matching problem, semidefinite relaxations of the maximum cut problem, and LPBoost instances for classification problems.

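For the maximum-cardinality matching problem, we consider the linear program

\[ \begin{aligned} \max \ & \sum _{e \in E} x_e \\ \text {s.t.} \ & \sum _{e \in \delta (v)} x_e \le 1 && \text {for all } v \in V, \\ & \sum _{e \in E[U]} x_e \le \frac{|U|-1}{2} && \text {for all } U \subseteq V \text { with } |U| \text { odd}, \\ & x_e \ge 0 && \text {for all } e \in E \end{aligned} \]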

due to Edmonds [18], where \(G=(V,E)\) is a given undirected graph, \(\delta (v)\) is the set of all edges incident to v, and E[U] is the set of all edges with both endpoints in U. The latter constraints are handled within an oracle that computes an inequality minimizing \((|U|-1)/2 - \sum _{e \in E[U]} x_e\), whereas the other inequalities are provided as initial constraints. For the above problem, the smart version of the oracle does not provide a feasible point since there is no obvious way of transforming a given point into a feasible one. However, the smart version always provides the minimizing inequality.

We consider 16 random instances with 500 nodes, generated as follows. For each \(r \in \{30,33,\dots ,75\}\) we build an instance by sampling r triples of nodes \(\{u,v,w\}\) and adding the edges of the induced triangles to the graph, forming the test set matching. We believe that these instances are interesting because the r triangles give rise to many constraints to be added by the oracle. Moreover, we selected all 13 instances from the Color02 symposium [12] with fewer than 300 edges, yielding the test set matching02.
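The construction of the random matching instances can be sketched as follows. The text does not specify the sampling distribution or the random seeds, so the sketch assumes that each triple is drawn uniformly at random without replacement among the 500 nodes and that triples are drawn independently of each other. The function name and the choice of seeds are hypothetical.

```python
import random

def triangle_graph(num_nodes, r, seed=0):
    """Sample r node triples and return the sorted edge list of their triangles."""
    rng = random.Random(seed)
    edges = set()
    for _ in range(r):
        u, v, w = rng.sample(range(num_nodes), 3)   # three distinct nodes
        for s, t in ((u, v), (v, w), (u, w)):
            edges.add((min(s, t), max(s, t)))       # store undirected edges once
    return sorted(edges)

# One instance for each r in {30, 33, ..., 75}, i.e. 16 instances in total.
instances = {r: triangle_graph(500, r, seed=r) for r in range(30, 76, 3)}
```

Each instance has at most 3r edges, and every sampled triangle induces an odd set U whose constraint is not implied by the degree constraints alone.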

Our second set of instances is based on the semidefinite relaxation of Goemans and Williamson [21] for the maximum cut problem

where c are edge weights on the edges of (V, E). We add the box constraints \({X \in [-1,1]^{V \times V}}\) to the initial constraints and handle the semidefiniteness constraint by a separation oracle that, given X, computes an eigenvector h of X of minimum eigenvalue and returns the inequality \(\langle hh^\intercal , X \rangle \ge 0\).

Within the smart version of the oracle, this constraint is returned regardless of the feasibility of X. If X is not feasible, the positive semidefinite matrix \(\frac{1}{1 - \lambda }X - \frac{\lambda }{1 - \lambda }I\) is returned, where \(\lambda < 0\) denotes the minimum eigenvalue of X and I the identity matrix. We generated 10 complete graphs on 10 nodes with edge weights chosen uniformly at random in [0, 1].
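The repair step of the smart oracle can be illustrated on a small example. The sketch below writes the shift as \((X - \lambda I)/(1-\lambda )\), which is positive semidefinite whenever \(\lambda < 0\) is the minimum eigenvalue of X and which keeps a unit diagonal unchanged. To stay self-contained it computes the minimum eigenvalue in closed form for symmetric \(2 \times 2\) matrices only; for larger matrices a numerical eigenvalue routine would be needed. Both function names are hypothetical.

```python
import math

def min_eig_2x2(X):
    """Minimum eigenvalue of a symmetric 2x2 matrix [[a, b], [b, d]]."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    return (a + d) / 2.0 - math.sqrt(((a - d) / 2.0) ** 2 + b * b)

def shift_to_psd(X, lam):
    """Return (X - lam * I) / (1 - lam), where lam < 0 is the minimum eigenvalue of X."""
    n = len(X)
    return [[(X[i][j] - (lam if i == j else 0.0)) / (1.0 - lam) for j in range(n)]
            for i in range(n)]

X = [[1.0, 2.0], [2.0, 1.0]]   # unit diagonal, eigenvalues 3 and -1: not PSD
lam = min_eig_2x2(X)           # -1.0
Y = shift_to_psd(X, lam)       # [[1.0, 1.0], [1.0, 1.0]]
```

In this example the repaired matrix Y has eigenvalues 0 and 2, so it is a feasible point on the boundary of the semidefinite cone.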

Our third set of instances arises from LPBoost [16], a classifier algorithm based on column generation. To solve the pricing problem in column generation, the following linear program is solved:

where \(\Omega \) is a set of parameters, for \(i \in [m]\), \(x^i\) is a data point labeled as \(y_i = \pm 1\), \(h(\cdot , \omega )\) is a classifier parameterized by \(\omega \in \Omega \) that predicts the label of \(x^i\) as \(h(x^i, \omega ) \in \{-1,+1\}\), and \(D > 0\) is a parameter. In our experiments, we restrict \(h(\cdot , \omega )\) to be a decision tree of height 1, so-called tree stumps, and choose \(D = \frac{5}{n}\). To separate a point \((\gamma ', \lambda ')\), we use julia’s DecisionTree module to compute a decision stump with score function \(\lambda '\) that weights the data points, whose corresponding inequality classifies \((\gamma ', \lambda ')\) as feasible or not. A smart oracle always returns the computed inequality and decreases \(\gamma '\) until \((\gamma ', \lambda ')\) becomes feasible according to the found decision stump.

We extracted all data sets from the UC Irvine Machine Learning Repository [35] that are labeled as multivariate, classification, ten-to-hundred attributes, hundred-to-thousand instances. Data sets with alpha-numeric values or too many missing values have been discarded. If a selected data set contains data points with missing values, these are removed from the data set. Table 5 in Appendix 1 reports on more details of this test set.

3.4 Results

In the remainder of this section, we evaluate the performance of Algorithm 1 in comparison to the standard cut loop, the ellipsoid method, and the analytic center method. To this end, we have conducted three different experiments, which we will detail below. In what follows, we report on the number of iterations, i.e., oracle calls, each method needs to obtain a gap (upper bound minus lower bound) below \(10^{-3}\). We impose a limit of 500 iterations per instance. Since we are testing naive implementations of each method, we do not report on running time.

To get more insights on the primal and dual performance of the tested methods, we also report on their primal and dual integrals. Note that we are solving maximization problems in this section, as opposed to minimization problems in Sect. 2. That is, primal (dual) solutions provide lower (upper) bounds on \(\textrm{OPT}\). If \(\ell _i\) is the lower bound on the optimal objective value \(\textrm{OPT}\) in iteration i, the primal integral is \(\sum _{i = 1}^{500} \frac{\textrm{OPT}- \ell _i}{\textrm{OPT}- \ell _1}\). The dual integral is computed analogously. If an integral is small, this indicates quick progress in finding the correct value of the corresponding bound.
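The primal integral defined above is a plain sum over the recorded bounds. The sketch below assumes that a method which stops before the iteration limit keeps its last bound for the remaining iterations; the text does not state how early termination is treated, so this padding rule is an assumption. The function name is hypothetical.

```python
def bound_integral(opt, bounds, horizon=500):
    """Sum of (opt - b_i) / (opt - b_1) over `horizon` iterations.

    `bounds` holds one bound per performed iteration; if fewer than `horizon`
    iterations were performed, the last bound is repeated (assumed padding rule).
    """
    if not bounds:
        raise ValueError("at least one bound is required")
    first = bounds[0]
    if opt == first:
        return 0.0                      # the very first bound is already optimal
    padded = list(bounds[:horizon]) + [bounds[-1]] * max(0, horizon - len(bounds))
    return sum((opt - b) / (opt - first) for b in padded)

# Lower bounds 0, 5, 10 for OPT = 10 over a horizon of three iterations.
value = bound_integral(10.0, [0.0, 5.0, 10.0], horizon=3)   # 1.0 + 0.5 + 0.0 = 1.5
```

The same function applies to the dual integral when it is called with the sequence of upper bounds, since numerator and denominator then change sign together.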

Our first experiment compares the different algorithms using non-smart oracles and using \(R \Vert c\Vert \) as upper bound on the optimal objective value. Table 1 summarizes our results, where all numbers are average values. Here, “matching” refers to the random instances and “matching02” to the instances from the Color02 symposium. The standard cut loop is referred to as “LP”, the ellipsoid method as “ellipsoid”, the analytic center method as “analytic”, and Algorithm 1 as “our”. Note that Table 1 does not report on the primal integral of “LP” since the standard cut loop is a dual method.

For all tested instances, we observe that the ellipsoid and analytic center methods struggle to solve any instance within 500 iterations. The standard cut loop solves all problems more efficiently, requiring on average between 90 and 284 iterations per instance. Our algorithm outperforms the standard cut loop on all test sets except LPboost. For matching problems, our algorithm requires 44% (matching) and 84% (matching02) fewer iterations than the standard cut loop; for maxcut problems it is 27% faster; only for LPboost instances does it require, on average, three times as many iterations as the standard cut loop.

An explanation for this behavior is provided by the integral values reported in Table 1. Among all tested algorithms, our method performs best in finding an optimal solution, as its primal integrals are comparatively small. On the dual side, the picture is less clear, but in particular for matching instances our algorithm is able to quickly find the true dual value. For LPboost, however, our method converges much more slowly on the dual side than the other methods. We conjecture that this behavior is due to the special structure of the objective for LPboost instances. While the other problem classes have a completely dense objective, only one variable contributes to the objective for LPboost.

For this reason, our second experiment tested the impact of smart oracles on the performance of the four different methods. In particular for LPboost this might make a difference, because it is rather easy to turn an infeasible point into a feasible solution by setting the objective value \(\gamma \) to the corresponding value. Indeed, as Table 2 shows, there is almost no effect of smart oracles on the tested maxcut problems. Moreover, there is no effect on matching problems since there we did not implement smart oracles as discussed above. For LPboost, however, the ellipsoid and analytic center methods as well as our algorithm benefit greatly from smart oracles. While the analytic center method and Algorithm 1 achieve the lowest primal integral values of approximately 6, the relative improvement is most pronounced for the ellipsoid method, whose primal integral drops by 93%.

Finally, we investigated the impact of knowing a good feasible solution on the performance of the four different algorithms. To this end, we initialized the upper bound on the optimal objective value in our cutting plane framework either with the optimal value or with a value 1% larger than the optimal value. In the first case, all algorithms only need to find a matching dual value, whereas in the second case an (almost) optimal primal solution also needs to be found. Tables 3 and 4 report on our results, respectively, using smart oracles.

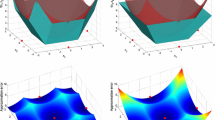

We can see that initializing the upper bound with the optimal value greatly improves the performance of the analytic center method and our algorithm, and the ellipsoid method is also faster on matching02 instances. The performance of the standard cut loop, however, does not show significant differences in comparison with the standard initialization. But if an almost optimal initialization of the upper bound is used, we cannot observe large differences in the average iteration count in comparison to the standard initialization. This shows that all tested methods perform rather well on the dual side, but struggle to find an almost optimal primal solution. Based on the primal integral values, however, we can see that our method performs on average better than the ellipsoid and analytic center methods. The above findings are also supported by the plots in Fig. 1, which show the typical development of the relative primal and dual bounds for the different problem types.

References

Atkinson, D.S., Vaidya, P.M.: A cutting plane algorithm for convex programming that uses analytic centers. Math. Program. 69(1), 1–43 (1995)

Badenbroek, R., de Klerk, E.: An analytic center cutting plane method to determine complete positivity of a matrix. INFORMS J. Comput. 34, 1115–1125 (2022). https://doi.org/10.1287/ijoc.2021.1108

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics (2017). https://doi.org/10.1137/1.9781611974997

Belloni, A., Freund, R.M., Vempala, S.: An efficient rescaled perceptron algorithm for conic systems. Math. Oper. Res. 34(3), 621–641 (2009)

Ben-Tal, A., Nemirovski, A.: Lectures on Modern Convex Optimization. Society for Industrial and Applied Mathematics, London (2001). https://doi.org/10.1137/1.9780898718829

Betke, U.: Relaxation, new combinatorial and polynomial algorithms for the linear feasibility problem. Discret. Comput. Geom. (2004). https://doi.org/10.1007/s00454-004-2878-4

Chekuri, C., Quanrud, K.: Approximating the Held–Karp bound for metric TSP in nearly-linear time. In: 2017 IEEE 58th Annual Symposium on Foundations of Computer Science (FOCS). IEEE (2017). https://doi.org/10.1109/focs.2017.78

Chekuri, C., Quanrud, K.: Near-linear time approximation schemes for some implicit fractional packing problems. In: Proceedings of the Twenty-Eighth Annual ACM-SIAM Symposium on Discrete Algorithms. Society for Industrial and Applied Mathematics (2017). https://doi.org/10.1137/1.9781611974782.51

Cheney, E.W., Goldstein, A.A.: Newton’s method for convex programming and Tchebycheff approximation. Numer. Math. 1(1), 253–268 (1959)

Chubanov, S.: A strongly polynomial algorithm for linear systems having a binary solution. Math. Program. 134(2), 533–570 (2011). https://doi.org/10.1007/s10107-011-0445-3

Chubanov, S.: A polynomial algorithm for linear feasibility problems given by separation oracles. Optimization Online (2017)

Color02—Computational Symposium: Graph Coloring and its Generalizations. Available at http://mat.gsia.cmu.edu/COLOR02 (2002)

Dadush, D., Hojny, C., Huiberts, S., Weltge, S.: A simple method for convex optimization in the oracle model. In: Aardal, K., Sanità, L. (eds.) Integer Programming and Combinatorial Optimization, 23rd International Conference, IPCO 2022 (2022)

Dadush, D., Végh, L.A., Zambelli, G.: Rescaling algorithms for linear conic feasibility. Math. Oper. Res. 45(2), 732–754 (2020). https://doi.org/10.1287/moor.2019.1011

Dantzig, G.B.: Converting a converging algorithm into a polynomially bounded algorithm. Technical report, Stanford University (1991)

Demiriz, A., Bennett, K.P., Shawe-Taylor, J.: Linear programming boosting via column generation. Mach. Learn. 46(1), 225–254 (2002)

Dunagan, J., Vempala, S.: A simple polynomial-time rescaling algorithm for solving linear programs. Math. Program. 114(1), 101–114 (2007). https://doi.org/10.1007/s10107-007-0095-7

Edmonds, J.: Maximum matching and a polyhedron with 0,1-vertices. J. Res. Natl. Bureau Stand. 69B(1–2), 125–130 (1964)

Frank, M., Wolfe, P.: An algorithm for quadratic programming. Naval Res. Logist. Q. 3(1–2), 95–110 (1956)

Garg, N., Könemann, J.: Faster and simpler algorithms for multicommodity flow and other fractional packing problems. SIAM J. Comput. 37(2), 630–652 (2007). https://doi.org/10.1137/s0097539704446232

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 42(6), 1115–1145 (1995). https://doi.org/10.1145/227683.227684

Gomory, R.E.: Outline of an algorithm for integer solutions to linear programs. Bull. Am. Math. Soc. 64, 275–278 (1958)

Grötschel, M., Lovász, L., Schrijver, A.: Geometric Algorithms and Combinatorial Optimization, vol. 2. Springer (1988). https://doi.org/10.1007/978-3-642-78240-4

Jaggi, M.: Revisiting Frank–Wolfe: projection-free sparse convex optimization. In: Proceedings of Machine Learning Research, vol. 28, pp. 427–435. PMLR, Atlanta, Georgia, USA (2013). http://proceedings.mlr.press/v28/jaggi13.html

Jiang, H., Lee, Y.T., Song, Z., Wong, S.C.W.: An improved cutting plane method for convex optimization, convex-concave games, and its applications. In: Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing, pp. 944–953. STOC 2020, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3357713.3384284

Kelley, J.E., Jr.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8(4), 703–712 (1960)

Khachiyan, L.G.: A polynomial algorithm in linear programming (in Russian). Doklady Akademiia Nauk SSSR 224, 1093–1096 (1979), English Translation: Soviet Mathematics Doklady 20, 191–194

Lee, Y.T., Sidford, A., Wong, S.C.: A faster cutting plane method and its implications for combinatorial and convex optimization. In: 2015 IEEE 56th Annual Symposium on Foundations of Computer Science, pp. 1049–1065 (2015). https://doi.org/10.1109/FOCS.2015.68

Nesterov, Y.: Cutting plane algorithms from analytic centers: efficiency estimates. Math. Program. 69(1), 149–176 (1995)

Plotkin, S.A., Shmoys, D.B., Tardos, É.: Fast approximation algorithms for fractional packing and covering problems. Math. Oper. Res. 20(2), 257–301 (1995). https://doi.org/10.1287/moor.20.2.257

Rosenblatt, F.: The perceptron: a probabilistic model for information storage and organization in the brain. Psychol. Rev. 65(6), 386–408 (1958). https://doi.org/10.1037/h0042519

Shahrokhi, F., Matula, D.W.: The maximum concurrent flow problem. J. ACM 37(2), 318–334 (1990). https://doi.org/10.1145/77600.77620

Sonnevend, G.: New algorithms in convex programming based on a notion of “centre” (for systems of analytic inequalities) and on rational extrapolation. In: Hoffmann, K.H., Zowe, J., Hiriart-Urruty, J.B., Lemarechal, C. (eds.) Trends in Mathematical Optimization: 4th French-German Conference on Optimization, pp. 311–326. Birkhäuser Basel, Basel (1988)

UC Irvine Machine Learning Repository. https://archive-beta.ics.uci.edu/ml/datasets. Accessed 3 Sep 2021

Vaidya, P.M.: A new algorithm for minimizing convex functions over convex sets. Math. Program. 73(3), 291–341 (1996). https://doi.org/10.1007/bf02592216

Yudin, D., Nemirovsky, A.: Informational complexity and efficient methods for solution of convex extremal problems. Econ. Math. Methods 12, 357–369 (1976)

Acknowledgements

We would like to thank Robert Luce and Sebastian Pokutta for their very valuable feedback on our work. The first author has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement QIP–805241).

A preliminary version of this article has appeared in the conference proceedings of IPCO 2022.

A Details about the LPboost test set

Table 5 reports the characteristics of the LPboost test set. The names of the data sets match those from the UC Irvine Machine Learning Repository [35], and the columns “#data points” and “#features” give the number of data points and the number of features of each data point, respectively.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dadush, D., Hojny, C., Huiberts, S. et al. A simple method for convex optimization in the oracle model. Math. Program. 206, 283–304 (2024). https://doi.org/10.1007/s10107-023-02005-8

DOI: https://doi.org/10.1007/s10107-023-02005-8