Abstract

In this study, the effects of high-order interactions on the synchronization of fractional-order Hindmarsh–Rose neuron models are examined in detail. Three network configurations are considered: networks with first-order couplings only, with high-order (second-order) couplings only, and with combined first- and second-order couplings. The particle swarm optimization algorithm is employed to find the optimal first- and high-order coupling parameters by minimizing a cost function built from the pairwise and triple interactions. The numerical simulations show that combined first- and second-order couplings achieve synchronization with both a lower first-order coupling strength and a lower total cost than first-order couplings alone. Comparing the network with only first-order couplings to the network with only second-order couplings shows that the latter synchronizes at a lower coupling strength and, consequently, at a lower cost. The networks with only second-order couplings and with combined first- and second-order couplings give very similar results. Therefore, high-order interactions have a positive effect on synchronization. Additionally, increasing the network size decreases both the first- and high-order coupling strengths required for synchronization; in this case, however, the total cost must be taken into account. Decreasing the fractional order slows synchronization owing to the lower frequency of the neural response, whereas increasing the fractional order yields a more synchronous network. Thus, a high-order coupled neural network with a higher fractional order is a good candidate for neural synchronization.


Introduction

One of the most important phenomena in neuron modeling is synchronization. Synchronization is considered a natural result of the collective behavior of neurons firing in a coordinated and rhythmic manner. Neural synchronization has been linked to several brain functions such as consciousness, attention, memory, and perception, and it is assumed to be vital for the integration of information across different brain regions (Dayan and Abbott 2001). In addition, its disruption has been associated with various neurological and psychiatric disorders, and the relationship between synchronization and diseases such as epilepsy and Parkinson's disease is an active research topic (Squire et al. 2008). Therefore, understanding the mechanisms underlying neural synchronization and its modulation is of great importance in neuroscience research.

Complex network theory provides a framework for studying the structure and dynamics of interacting systems. Classically, the links between system components are assumed to be dyadic, that is, pairwise interactions. However, to fully explain many complex systems, the structural modeling of networked systems must be improved further: classical networks, which describe only pairwise connections, cannot fully capture group relationships, which makes complicated collective behaviors difficult to describe. To overcome these restrictions, higher-order networks, in which a link can connect more than two nodes, have emerged as a new area of network research. Higher-order structures such as simplicial complexes and hypernetworks have been proposed as useful models for a wide range of technical, biological, social, and other systems whose group interactions involve three or more members. Simplicial complexes provide a strong mathematical basis for describing higher-order networks in which nodes interact at different granularities; they broaden the notion of a graph by including higher-order interactions such as triangles, tetrahedra, and higher-dimensional simplices (Majhi et al. 2022; Alvarez-Rodriguez et al. 2021; Bick et al. 2023; Boccaletti et al. 2023).

Owing to the importance of synchronization, there are many reports in the literature on coupling mechanisms and types, synchronization control, coupling strength, topology, noise, the different states of coupled networks, and so on (Belykh et al. 2005; Casado 2003; Ma and Tang 2017; Ma et al. 2017; Gerstner et al. 2014; Sun et al. 2019; Usha and Subha 2019; Guo et al. 2017; Shajan et al. 2021; Xu et al. 2017; Elson et al. 1998; Li et al. 2013). However, the coupling mechanism in these studies usually involves only pairwise relations, namely first-order couplings. On the other hand, some recent studies have provided evidence that high-order relations exist in the neural networks of the brain (Petri et al. 2014; Lord et al. 2016). Understanding high-order interactions in neural systems has significant implications for fields such as neuroscience and robotics. It can help develop more accurate models of neural activity and behavior, leading to improved diagnostic and therapeutic approaches for neurological disorders. Additionally, insights gained from studying high-order interactions in neural systems can inform the development of more advanced machine learning and artificial intelligence algorithms. High-order interactions (or correlations), also known as multi-way interactions, refer to the simultaneous effects of three or more variables on a system or process. In neural systems, high-order relations are described by simplicial complexes, which encode many-body interactions among the neurons of a network. Higher-order couplings thus encompass not only pairwise links but also triple, quadruple, and other interactions. For instance, a 0-simplex corresponds to a single neuron, a 1-simplex to a line segment connecting two neurons, a 2-simplex to a triangle formed by three connected neurons, and so on (Gambuzza et al. 2021; Battiston et al. 2020). In general, the interaction among m + 1 nodes is represented by an m-simplex of the simplicial complex.
Kuramoto oscillators coupled through 2-simplices have been studied in Millan et al. (2020), where explosive synchronization is shown to occur. Skardal and Arenas (2021) report that high-order interactions cause rapid synchronization of higher-order phase oscillators. Gallo et al. (2022) observe that directed higher-order interactions can disrupt the synchronization state in a higher-order network of eight Rössler oscillators. Synchronization in a multiplex network, in which the Rössler oscillators within each layer are coupled through diffusive pairwise and non-pairwise connections, has been examined in Anwar and Ghosh (2022); it is concluded that higher-order interactions improve intra-layer synchronization and increase the robustness of inter-layer synchronization compared with the purely pairwise case.

High-order interactions have been included in a neural network of Morris–Lecar (ML) neuron models in Tlaie et al. (2019), where it is reported that time-ordered synchronization and fast information transfer are made possible by high-order interactions. Ince et al. (2009) state that the neural dynamics of the rat somatosensory cortex cannot be modelled in detail using only first-order interactions; the response of neural information propagation to high-order interactions has also been investigated in that study. The existence of high-order interactions in neuronal activity has been shown in Amari et al. (2003). The effects of three-body interactions together with pairwise interactions on the synchronization of Hindmarsh–Rose (HR) neurons have been evaluated in Parastesh et al. (2022), where it is concluded that overall synchronization can be achieved at a reduced cost and with lower first-order coupling parameters in the presence of second-order interactions. High-order interactions and electromagnetic induction have been included together in HR and ML neuron models in Ramasamy et al. (2022); it is reported that flux coupling increases the coupling strengths required for synchronization of HR neurons, whereas it allows synchronization with lower coupling strengths in a network of ML neuron models. The synchronization of a higher-order network of memristive Rulkov models with several kinds of synapses has been explored in Mehrabbeik et al. (2023a), where it is shown that higher-order interactions shift the synchronization patterns toward lower values of the multi-node chemical coupling parameter. The impact of higher-order chemical interactions and pairwise connections on the synchronization of a network of globally coupled Rulkov neuron models has been investigated in Mirzaei et al. (2022).
The findings demonstrate that, when more neurons are engaged in the network, multi-node interactions improve neural network synchronization significantly more than pairwise interactions do. The influence of pairwise and non-pairwise interactions on the synchronization of coupled memristive HR neuron maps has been addressed in Mehrabbeik et al. (2023b). The findings indicate that synchronization among the neurons is achieved through chemical pairwise and non-pairwise synapses even at the weakest coupling strengths.

On the other hand, the aforementioned studies are primarily based on integer-order mathematics. Fractional calculus generalizes this classical approach and enables more accurate and wide-ranging modeling. As a result, fractional calculus has diverse applications in areas such as engineering, biology, and medicine (Baleanu et al. 2016; Sun et al. 2018; Dalir and Bashour 2010; Podlubny 1998). Unlike classical neuron models, fractional neuron models offer several opportunities: the fractional order can be treated as a separate bifurcation parameter, yielding new and rich dynamics; fractional derivative definitions depend on the entire past of the variable, which introduces a memory effect; and they support power-law dynamics, spike-time adaptation, and the definition of coupling functions in a fractional manner (Ionescu et al. 2017; Drapaca 2017; Lundstrom et al. 2008; Teka et al. 2018; Korkmaz and Saçu 2022). The memory effect of fractional neuron models is supported by representing the cell membrane with coupled active conductances, and neural dynamics with multiple time scales can be interpreted via fractional calculus. Fractional neuron models, their dynamics, synchronization, and synchronization control have been extensively addressed in the literature (Liu et al. 2021; Ding et al. 2021; Malik and Mir 2022, 2021, 2020; Xie et al. 2014; Kaslik and Radulescu 2017; Song and Cao 2014; Tolba et al. 2019; Upadhyay and Mondal 2015; Shi and Wang 2014; Dar et al. 2021; Yang et al. 2021; Giresse et al. 2019; Meng et al. 2020; Jun et al. 2014). Moreover, fractional neural networks are distinguished from classical neural networks by their infinite memory and hereditary properties, and their data processing, stimulus detection, and anticipation capabilities are improved (Song and Cao 2014).
Fractional recurrent neural networks are expected to provide better parameter estimation, and the rate of network approximation increases when fractional calculus is included in the network (Song and Cao 2014). Rich dynamics such as bifurcation, chaos, and multi-stability can be obtained with fractional neural networks, and fractional-order neural networks are effective in system identification (Kaslik and Radulescu 2017). However, despite these comprehensive studies, the influence of high-order interactions on the synchronization characteristics of fractional neuron models has not yet been adequately addressed. With this motivation, this study discusses the impact of high-order interactions on neural synchronization in a network of fractional-order HR neurons and seeks to clarify the following points:

(i) whether high-order interactions have a positive or negative effect on neural synchronization in a neural network composed of fractional-order neuron models;

(ii) the most appropriate first- and high-order coupling strengths for achieving synchronization, taking the cost function into account;

(iii) the impact of the fractional order parameter on neuronal synchronization;

(iv) the impact of network size on neural synchronization.

In this context, the fractional-order HR neuron model and the preliminaries are introduced in section "Preliminaries and fractional-order HR neuronal model". The first- and second-order couplings of the fractional neuron models and the simulation results are presented in section "Coupling of the fractional-order neuronal models", which also describes the determination of the optimal first- and second-order coupling parameters with respect to the cost function. The effects of the fractional order parameter and of network size on neural synchronization are examined in sections "Effect of the fractional order of the neural network" and "Effect of the size of the neural network", respectively. The results are evaluated and future studies are outlined in section "Conclusion".

Preliminaries and fractional-order HR neuronal model

When long-term interactions, dynamic processes, and the continuous flow of time are taken into account in the analysis and modeling of physical or natural phenomena, the fractional integral emerges as a key instrument. Although several definitions and forms of the fractional integral can be found in the literature, the Riemann–Liouville fractional integral is one of the most widely recognized and extensively used.

The Riemann–Liouville fractional integral of order v is defined as (Baleanu et al. 2016)

$$I^{v} g(t) = \frac{1}{\Gamma(v)} \int_{0}^{t} (t-s)^{v-1}\, g(s)\, ds \tag{1}$$

where v ∈ (0, 1), g(t) is a continuous function and Γ(·) is the Gamma function. The Caputo fractional derivative is defined as (Podlubny 1998)

$$D^{v} g(t) = \frac{1}{\Gamma(1-v)} \int_{0}^{t} (t-s)^{-v}\, g'(s)\, ds \tag{2}$$

where g'(s) is the first-order derivative of g(s). Because the initial conditions of Caputo-type fractional differential equations are expressed through integer-order derivatives, they are simpler to interpret. Additionally, the Caputo derivative of a constant is zero, as in integer-order calculus. A general fractional differential equation of order v is given by (Podlubny 1998)

$$D^{v} g(t) = f\big(t, g(t)\big), \qquad g(0) = g_0 \tag{3}$$

where D^v is the Caputo-type fractional derivative operator and g0 is the initial condition.

Various neuron models are available in the literature to mimic the dynamics of real neurons. Conductance-based neuron models resemble biological neurons most closely: ion channels are represented by voltage-dependent conductances and the cell membrane by a capacitor. The best-known model in this category is the Hodgkin–Huxley (HH) neuron model (Hodgkin and Huxley 1952), which exhibits rich dynamics. However, this model comes with significant computational and hardware implementation costs, which become even higher when the models are used to build networks. Simpler neuron models have therefore been proposed to overcome these limitations. One of them is the FitzHugh–Nagumo (FHN) model, which has two dynamic variables (Fitzhugh 1969). This model is important because it reproduces the spiking behavior of a real neuron, but the range of dynamic behaviors it can produce is very limited. The three-variable Hindmarsh–Rose (HR) neuron model is obtained by adding another dynamic variable to the FHN model (Hindmarsh and Rose 1984), which makes it biologically more realistic and allows it to capture several important features of neural dynamics. The HR model stands out for its low computational cost and rich dynamic diversity; in particular, it can generate a variety of firing patterns, including bursting, regular spiking, and chaotic spiking. The HR model has been employed extensively to study synchronization in neuronal systems, both in simulations and in experiments; for example, it has been used to model the synchronization of neurons in the thalamus and the hippocampus (McCulloch and Pitts 1943).
In addition to the neuron models mentioned above, other neuron models exist, such as the Integrate-and-Fire (McCulloch and Pitts 1943) and Izhikevich (Izhikevich 2003) models.

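The commensurate fractional-order HR neuron model of order α is defined by the following equation set (Malik and Mir 2020):

$$\begin{aligned} D^{\alpha} x &= y - x^{3} + b_h x^{2} - z + I_{exs} \\ D^{\alpha} y &= 1 - 5x^{2} - y \\ D^{\alpha} z &= r\left[s\,(x - x_{Rs}) - z\right] \end{aligned} \tag{4}$$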

where α (0 < α < 1) is the fractional order, and the state variables x, y, and z correspond to the membrane potential, the spiking variable, and the adaptation variable, respectively. The other control parameters are set to bh = 3, r = 0.009, s = 4 and xRs = −1.6 for the numerical simulations (Malik and Mir 2020). To check the stability of the fractional-order system (4), the minimum fractional order αmin is determined from the stability condition |arg(λi)| > απ/2 for all i, where λi (i = 1, 2, …) are the eigenvalues of the Jacobian of the system at the equilibrium points (Tavazoei and Haeri 2009); that is, αmin = (2/π)·min|arg(λi)|, and the equilibrium loses its stability for α > αmin. For the stimulus Iexs = 2.2 and the parameter set given above, αmin = 0.5454. The predictor-corrector (PC) method is used to solve the fractional-order system (4) numerically; its application to fractional-order differential equations is described in detail in Garrappa (2010) and Diethelm et al. (2004).
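As a check on the value αmin = 0.5454, the NumPy sketch below locates the real equilibria of the HR model, evaluates the Jacobian there and returns (2/π)·min|arg(λi)|. It assumes the standard HR right-hand side D^α x = y − x³ + bh x² − z + Iexs, D^α y = 1 − 5x² − y, D^α z = r[s(x − xRs) − z]; this exact form is our assumption, although with the stated parameters it gives a value of about 0.545. The function name is hypothetical.

```python
import numpy as np

def hr_alpha_min(b_h=3.0, r=0.009, s=4.0, x_rs=-1.6, i_exs=2.2):
    """Return (2/pi) * min_i |arg(lambda_i)| for each real equilibrium of the
    HR model. Standard HR form assumed (not quoted from the source):
    D^a x = y - x^3 + b_h x^2 - z + I,  D^a y = 1 - 5x^2 - y,
    D^a z = r (s (x - x_rs) - z)."""
    # At equilibrium y = 1 - 5x^2 and z = s(x - x_rs); substituting into the
    # x equation gives x^3 + (5 - b_h) x^2 + s x - (1 + s x_rs + I) = 0.
    roots = np.roots([1.0, 5.0 - b_h, s, -(1.0 + s * x_rs + i_exs)])
    x_eq = roots[np.abs(roots.imag) < 1e-9].real      # keep real equilibria only
    alpha_min = []
    for x in x_eq:
        jac = np.array([
            [-3.0 * x**2 + 2.0 * b_h * x, 1.0, -1.0],
            [-10.0 * x, -1.0, 0.0],
            [r * s, 0.0, -r],
        ])
        eig = np.linalg.eigvals(jac)
        # The equilibrium is stable while alpha < (2/pi) * min |arg(lambda)|
        alpha_min.append(2.0 / np.pi * np.min(np.abs(np.angle(eig))))
    return alpha_min
```

With the default parameters there is a single real equilibrium (x ≈ −1.065), whose unstable complex eigenvalue pair sets αmin.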

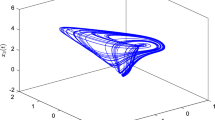

With all model parameters fixed at the values given above except the order α, the bifurcation diagram of the HR neuron model, showing the maxima xmax of the variable x versus the fractional order α, is shown in Fig. 1. Fig. 1 clearly shows that the firing pattern of the model changes with the fractional order; for example, the model exhibits period-3 bursting for α = 0.95 and period-7 bursting for α = 0.9.
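The PC scheme cited above can be sketched as the fractional Adams–Bashforth–Moulton method for Caputo problems with 0 < α ≤ 1 (in the form of Diethelm et al.), together with a helper that collects the local maxima xmax used in a bifurcation diagram such as Fig. 1. The HR right-hand side assumes the standard form with the parameters above; all function names are illustrative, and the O(n²) history sums make long runs slow, so this is a sketch rather than the authors' implementation.

```python
import numpy as np
from math import gamma

def abm_caputo(f, y0, alpha, h, n_steps):
    """Fractional Adams-Bashforth-Moulton predictor-corrector for the Caputo
    problem D^alpha y = f(t, y), y(0) = y0, with 0 < alpha <= 1."""
    y0 = np.atleast_1d(np.asarray(y0, dtype=float))
    t = h * np.arange(n_steps + 1)
    y = np.empty((n_steps + 1, y0.size))
    fy = np.empty_like(y)
    y[0], fy[0] = y0, f(t[0], y0)
    c_pred = h**alpha / gamma(alpha + 1)
    c_corr = h**alpha / gamma(alpha + 2)
    for n in range(n_steps):
        j = np.arange(n + 1)
        # Predictor: product rectangle rule over the whole history (memory term)
        b = (n + 1 - j) ** alpha - (n - j) ** alpha
        y_pred = y0 + c_pred * (b @ fy[: n + 1])
        # Corrector: product trapezoidal rule weights a_{j,n+1}
        a = ((n - j + 2.0) ** (alpha + 1) + (n - j) ** (alpha + 1)
             - 2.0 * (n - j + 1.0) ** (alpha + 1))
        a[0] = n ** (alpha + 1) - (n - alpha) * (n + 1) ** alpha
        y[n + 1] = y0 + c_corr * (f(t[n + 1], y_pred) + a @ fy[: n + 1])
        fy[n + 1] = f(t[n + 1], y[n + 1])
    return t, y

def hr_rhs(t, u, b_h=3.0, r=0.009, s=4.0, x_rs=-1.6, i_exs=2.2):
    """Assumed standard HR vector field of an uncoupled neuron."""
    x, y, z = u
    return np.array([y - x**3 + b_h * x**2 - z + i_exs,
                     1.0 - 5.0 * x**2 - y,
                     r * (s * (x - x_rs) - z)])

def local_maxima(x):
    """Values of the strict interior local maxima of a 1-D series."""
    x = np.asarray(x, dtype=float)
    mid = x[1:-1]
    return mid[(mid > x[:-2]) & (mid > x[2:])]
```

For a diagram like Fig. 1 one would, for each α on a grid, integrate hr_rhs from some initial state, discard a transient and plot local_maxima(u[:, 0]) against α; the step size, run length and transient used for Fig. 1 are not given in the text.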

Coupling of the fractional-order neuronal models

Electrical and chemical coupling are two pivotal mechanisms in the synchronization of neurons in the brain. Electrical coupling is a direct cell-to-cell communication mechanism that allows ions to flow between neurons through gap junctions, which enables rapid and reliable synchronization, particularly in oscillatory networks. Chemical coupling, on the other hand, involves the release of neurotransmitters that bind to receptors on neighboring neurons, triggering a cascade of biochemical events that eventually leads to the synchronization of the neurons; it is a more intricate and slower mechanism than electrical coupling (Usha and Subha 2019; Li et al. 2013). Various mathematical models of electrical and chemical coupling have been developed to elucidate neural synchronization. They range from simple linear models to highly complex nonlinear ones and have been used to investigate various aspects of neural synchronization, such as the role of the coupling mechanism and strength, frequency, the impact of noise and heterogeneity, and the occurrence of synchronization in large-scale networks (Gerstner et al. 2014; Guo et al. 2017). In recent years, studies have also emerged that relate information transmission among neurons to energy balance. These studies claim that synaptic channels become blocked when neurons are in an energy-balanced state, whereas information transfer is possible under energy imbalance (Yao et al. 2023). A further step in this approach adjusts the coupling intensity of the neuron adaptively, aiming to reach both energy balance and synchronization (Xie et al. 2022).
An energy imbalance can be initiated within the neuron by physical stimuli such as temperature, illumination, sound, and mechanical forces (Sun et al. 2023).

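A simplicial complex of order Cd includes not only first-order couplings but also higher-order couplings. Here, Cd = 2 is assumed, so that only first- and second-order couplings are considered. The equations of a simplicial complex consisting of fractional-order HR neuron models are given in (5):

$$D^{\alpha} X_{ni} = F(X_{ni}) + \sigma_{c1} \sum_{j=1}^{N_n} b_{ij}\, g_{c1}(X_{ni}, X_{nj}) + \sigma_{c2} \sum_{j=1}^{N_n} \sum_{k=1}^{N_n} b_{ijk}\, g_{c2}(X_{ni}, X_{nj}, X_{nk}), \qquad i = 1, \ldots, N_n \tag{5}$$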

where Xni ∈ ℝ^s is the s-dimensional state vector of the ith node, F(Xni) is the vector field describing the dynamics of an isolated node (here, the fractional-order HR model), and σc1 and σc2 are the strengths of the first- and second-order couplings, respectively. The functions gc1(Xni, Xnj) and gc2(Xni, Xnj, Xnk) are the first- and second-order coupling functions, respectively. The parameter bij indicates a link between nodes i and j: if bij = 1, there is a link; otherwise there is not. Similarly, bijk = 1 if nodes i, j and k form a triangle, and bijk = 0 otherwise.

To illustrate the network topology, a globally coupled neural network of six neurons (Nn = 6) is shown in Fig. 2a, and the parameters bij and bijk for its connections are given in Figs. 2b and 2c, respectively.
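In the globally coupled network of Fig. 2, every pair of distinct neurons is linked and every triple of distinct neurons forms a triangle, so bij and bijk can be generated as below. This NumPy sketch stores bijk as a symmetric Nn × Nn × Nn array with all orderings of a triangle set to 1; that storage choice is ours and not necessarily the layout of Fig. 2c, and the function name is hypothetical.

```python
import numpy as np

def all_to_all_complex(n):
    """Link indicators b[i, j] and triangle indicators b3[i, j, k] for a
    globally coupled network of n neurons."""
    b = np.ones((n, n), dtype=int) - np.eye(n, dtype=int)   # b_ij = 1 for i != j
    i, j, k = np.indices((n, n, n))
    # b_ijk = 1 whenever i, j, k are pairwise distinct (all 6 orderings stored)
    b3 = ((i != j) & (j != k) & (i != k)).astype(int)
    return b, b3
```

For Nn = 6 this gives 15 links and 20 triangles; for Nn = 10 it gives 45 links and 120 triangles.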

The synchronization of a neural network of Nn = 10 neuron models is investigated. To evaluate the synchronization state quantitatively, the standard deviation (Zhang et al. 2015), the averaged error, and the averaged similarity (Fan and Wang 2017) are calculated as follows.

where σeh, Eeh and Ssml are the standard deviation, the averaged error, and the averaged similarity, respectively, xj(n) is the nth sample of the time series of the jth neuron, and Nn is the number of coupled neurons. All three performance measures equal zero under ideal, perfect synchronization; the more coherent the dynamics of the neurons in the network, the closer all three measures are to zero.
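Because the defining expressions of the three measures are not reproduced in this text, the sketch below uses one common form of the standard-deviation measure as an assumption: the population standard deviation of the neurons' membrane potentials at each time sample, averaged over the time series. Like σeh as described above, it is zero when all traces are identical and grows as they diverge. The function name is hypothetical.

```python
import numpy as np

def sync_std(x):
    """Time-averaged standard deviation across neurons (assumed form of sigma_eh).

    x has shape (n_neurons, n_samples), with x[j, n] the nth sample of neuron j.
    """
    x = np.asarray(x, dtype=float)
    per_sample = np.std(x, axis=0)   # spread across neurons at each time sample
    return float(per_sample.mean())  # average over the time series
```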

First, only first-order interactions, that is, diffusive electrical couplings, are considered, while the second-order interactions are inactive (σc2 = 0). The synchronization state of the network is evaluated for different values of the coupling parameter σc1 at (α = 0.95, Iexs = 2.2). The first-order coupling function is defined as gc1(Xni, Xnj) = Xnj − Xni (Parastesh et al. 2022). The normalized performance measures obtained for σc1 ∈ [−0.1, 0.2] are displayed in Fig. 3. The figure shows that all three performance measures are far from zero for negative values of σc1, so the network cannot synchronize in this range. The measures decline rapidly for σc1 ∈ (0, 0.05) and then converge to zero with a decreasing slope as σc1 increases further. Therefore, the dynamic responses of the neurons in the network become coherent, and synchronization is possible, only for positive values of σc1. To illustrate this, the time-domain responses of the first, fourth, and ninth neurons in the network for σc1 = −0.1 and 0.1 are shown in Fig. 4, which confirms that the neural dynamics are synchronous for σc1 = 0.1. It can also be seen that the standard deviation and the averaged error exhibit very similar characteristics.

In the second step, only second-order interactions are active (σc2 ≠ 0, σc1 = 0). In this case, the second-order coupling function is defined as gc2(Xni, Xnj, Xnk) = Xnj + Xnk − 2Xni (Parastesh et al. 2022). The simulation results for (α = 0.95, Iexs = 2.2) are presented in Fig. 5. For σc2 in the range (−0.1, 0), neural synchronization is not possible, because all three performance measures are far from zero. In contrast, all three measures decrease rapidly for σc2 ∈ (0, 0.01) and converge to zero for σc2 > 0. Consequently, the condition σc2 > 0 must be met for neural synchronization to be possible. This can be observed more easily in Fig. 6, which shows the time-domain responses and phase portraits of the first, fourth, and ninth neurons for σc2 = −0.01 and 0.1.
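The coupling input that each neuron receives can be sketched as below, assuming that the coupling acts on a single variable (for example, the membrane potential x) and that the sums run over all j and k, i.e. σc1 Σj bij gc1 + σc2 Σj Σk bijk gc2 with gc1 = xj − xi and gc2 = xj + xk − 2xi as defined above. The function name is hypothetical. Both terms vanish when all neurons are in the same state, which is why the synchronous state is an invariant solution of these diffusively coupled networks.

```python
import numpy as np

def coupling_terms(x, b, b3, sigma1, sigma2):
    """First- plus second-order coupling input to each node.

    x: shape (n,), coupled variable of each neuron; b: (n, n) link indicators;
    b3: (n, n, n) triangle indicators.
    """
    x = np.asarray(x, dtype=float)
    diff = x[None, :] - x[:, None]                  # diff[i, j] = x_j - x_i = g_c1
    first = sigma1 * np.sum(b * diff, axis=1)
    # g_c2[i, j, k] = x_j + x_k - 2 x_i = diff[i, j] + diff[i, k]
    g2 = diff[:, :, None] + diff[:, None, :]
    second = sigma2 * np.einsum("ijk,ijk->i", b3, g2)
    return first + second
```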

The above simulation results show that synchronization can occur for positive values of the first- and high-order coupling strengths. However, the magnitudes of the first- and high-order couplings required to achieve synchronization have not yet been compared. Therefore, one of the coupling parameters is now fixed at zero while the other is varied in the range [0, 0.1]; the results are presented in Fig. 7. Fig. 7 shows that high-order couplings produce smaller error values for all three performance metrics. In other words, a much smaller σc2 than σc1 is required to attain the same synchronization performance. Thus, for equal coupling strengths (σc1 = σc2), the network with only second-order couplings is clearly more synchronous than the network with only first-order couplings.

Fig. 7 Comparison of the magnitudes of the first- and second-order coupling strengths in terms of the performance measures: (a) (σc1, σc2) vs σeh, (b) (σc1, σc2) vs Eeh, and (c) (σc1, σc2) vs Ssml; σc1: first-order coupling strength, σc2: second-order coupling strength, σeh: standard deviation, Eeh: error, Ssml: similarity

Fourth, σc1 is varied from 0 to 0.1 for several values of the high-order coupling parameter σc2, and the results are shown in Fig. 8. As Fig. 8 shows, as σc2 increases, all three performance metrics reach smaller errors at smaller values of σc1. That is, smaller values of σc1 are sufficient to achieve synchronization in the presence of σc2 than when σc2 is inactive. Synchronization can even be achieved regardless of σc1 once σc2 exceeds a certain threshold. Moreover, the simulation results clearly show that all three performance metrics yield consistent results. Therefore, from this point on, only the standard deviation is used as the performance metric in the evaluations.

Fig. 8 Three performance measures for first- and second-order coupling strengths active together: (a) σc1 vs σeh for several values of σc2, (b) σc1 vs Eeh for different values of σc2, and (c) σc1 vs Ssml for different values of σc2; σc1: first-order coupling strength, σc2: second-order coupling strength, σeh: standard deviation, Eeh: error, Ssml: similarity

The contour plot of the standard deviation σeh over the first-order (σc1) and second-order (σc2) coupling strengths is shown in Fig. 9. The shapes of the contours show that the relationship between the two coupling strengths is not perfectly linear. Optimization algorithms can therefore be used to determine the optimal coupling parameters that keep the performance metric below a certain threshold. Optimization algorithms are widely used in fields such as engineering, biology, medicine, and computer science.

On the other hand, although the presence of σc2 reduces the value of σc1 required for synchronization, its benefit can be judged more accurately by also considering the cost. In this context, the cost function is defined as in (7) (Parastesh et al. 2022):

$$C_{cst} = \sigma_{c1}\,\frac{N_n(N_n-1)}{2} + \sigma_{c2}\,\frac{3N_n(N_n-1)(N_n-2)}{6} \tag{7}$$

Here, Nn is the number of neurons, Nn(Nn − 1)/2 is the number of links formed by pairwise connections between neurons, and 3Nn(Nn − 1)(Nn − 2)/6 is three times the number of triangles, Nn(Nn − 1)(Nn − 2)/6, formed by triple connections among the neurons.
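Reading the cost as the coupling strengths weighted by these two counts, Ccst = σc1·Nn(Nn − 1)/2 + σc2·3Nn(Nn − 1)(Nn − 2)/6, it can be evaluated as in the short sketch below (function names are hypothetical). With Nn = 10 the weights are 45 and 360.

```python
def n_links(n):
    """Number of pairwise links, n(n - 1)/2, in a globally coupled network."""
    return n * (n - 1) // 2

def weighted_triangles(n):
    """3 n(n - 1)(n - 2)/6, i.e. three times the number of triangles."""
    return 3 * n * (n - 1) * (n - 2) // 6

def coupling_cost(sigma1, sigma2, n):
    """Cost of the coupling strengths: sigma1 * links + sigma2 * 3 * triangles."""
    return sigma1 * n_links(n) + sigma2 * weighted_triangles(n)
```

Inserting the mean parameters reported for Table 1 gives 3.6063 for σc1 = 0.08014 alone, 1.7676 for σc2 = 0.00491 alone, and 1.8018 for (σc1, σc2) = (0.00052, 0.00494); each agrees with the corresponding reported mean cost (3.60782, 1.76668 and 1.8003) to within about 0.1%.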

Many optimization algorithms are available in the literature, each with its own advantages and disadvantages; among the most commonly used are gradient descent, genetic algorithms, particle swarm optimization, and ant colony optimization (Gad 2022). In this study, the particle swarm optimization (PSO) algorithm is used to determine the optimum first- and high-order coupling parameters that minimize the cost function (7). PSO is a metaheuristic optimization algorithm inspired by the social behavior of bird flocks or fish schools (Gad 2022). In PSO, a group of particles represents candidate solutions to the problem of interest, and each particle adjusts its position in the search space based on its own experience and that of its neighbors. The particles move towards the best-known positions in the search space, which are updated as new, better positions are discovered. PSO is characterized by its simplicity, efficiency, and ease of implementation, and it has been successfully applied to a broad range of optimization problems, including engineering design, data mining, and machine learning. Here, the cost function (7) is minimized by searching the solution space for the best candidate, subject to the constraint that the performance metric σeh stays below a given limit. Thus, the coupling parameters are determined by considering both the cost and the synchronization criteria. The total number of iterations is set to 15, the swarm size to 30, and the algorithm is run 10 times independently with different initial conditions. In addition, the lower and upper bounds of the coupling parameters σc1 and σc2 are lb = [0 0] and ub = [0.2 0.2].

Using the standard deviation σeh, the cost function Ccst is minimized under the constraint σeh ≤ 0.001. The results of the repeated runs are given in Table 1. From Table 1, the mean optimal coupling parameters and the mean cost are (σc1 = 0.00052, σc2 = 0.00494) and Ccst = 1.8003, respectively. Under the same conditions, when σc2 = 0, the mean values of σc1 and Ccst are 0.08014 and 3.60782, respectively. Similarly, when σc1 = 0, the mean values of σc2 and Ccst are 0.00491 and 1.76668, respectively. Based on these results, a network with only first-order couplings has a higher cost than a network with only high-order couplings, and the lowest cost is achieved when only second-order couplings are present. When both couplings are active together, the cost is about half that of the first-order-only case. Therefore, the presence of σc2 reduces the cost of a first-order coupled network, and it can be concluded that high-order couplings have a positive effect on neural synchronization.

Effect of the fractional order of the neural network

To inspect the effect of fractional order parameter on neural synchronization, the variation of the performance parameter σeh with respect to coupling parameters σc1 and σc2 at different fractional orders is presented in Fig. 10. It is visible from Fig. 10a that the performance parameter σeh starts with a smaller value for the network with a lower fractional order and gradually decreases. On the contrary, for the network with a higher fractional order, the performance parameter σeh starts with a larger value and rapidly decreases up to an intersection point. Beyond this point, the network with a higher fractional order produces less error values and approaches a more synchronous state. Ultimately, compared to the network with a higher fractional order, the network with a lower fractional order requires a larger coupling parameter to produce less error. For instance, at σc1 = 0.05, the standard deviation result of the neuronal network with the order of 0.85 is σeh = 0.0036, while the result of the neural network with the order of 0.95 is σeh = 0.010. Hence, the initial metric is smaller for the network with a lower order. However, at σc1 = 0.1, the performance metric of the network with a fractional order of 0.85 is σeh = 0.0018, while the metric of the neural network with the order of 0.95 is σeh = 0.00034. Therefore, the network with a higher order produces less error in the final state. The reason for this is that a neuron model with smaller fractional order has a higher number of spikes in each burst, while a larger fractional order model has a higher frequency of neural response. So, a larger fractional order network can respond faster. On the other hand, it is clear from Fig. 10b that the network with a smaller fractional order starts with a larger error value compared to the network with a larger fractional order, and this trend continues throughout the entire variation range of σc2. 
Therefore, the network with a larger fractional order exhibits more synchronous behavior. Furthermore, Fig. 10a-b shows that, regardless of the fractional order, the standard deviations of networks with only second-order couplings are lower than those of networks with only first-order couplings. In Fig. 10c, where both first- and second-order couplings are active, the performance parameter starts from lower values but otherwise behaves similarly to the case with only first-order coupling.

Fig. 10 Standard deviation results of the neural network for different fractional orders: (a) first-order coupling only, (b) second-order coupling only, and (c) both first- and second-order couplings; σc1: first-order coupling strength, σc2: second-order coupling strength, σeh: standard deviation

In conclusion, with respect to the performance parameter σeh, decreasing the fractional order does not contribute positively to neural synchronization. Conversely, as the fractional order increases, the neurons forming the network exhibit more similar behaviors.
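A standard-deviation measure such as σeh quantifies how similar the neurons' behaviors are. The exact definition used in the article is given in an earlier section; the sketch below assumes one common form, namely the population standard deviation of the membrane potentials across neurons at each time sample, averaged over time after discarding an initial transient. The array layout `(T, N)`, the `discard` argument and the toy sine traces are assumptions for illustration only.

```python
import numpy as np


def sigma_eh(x, discard=0):
    """Time-averaged spread of membrane potentials across neurons.

    x       : array of shape (T, N), T time samples for N neurons (assumed layout).
    discard : number of initial samples dropped as transient (assumed choice).
    """
    x = np.asarray(x, dtype=float)[discard:]
    # Population standard deviation across neurons at each time sample...
    spread = np.std(x, axis=1)
    # ...then averaged over the retained time window.
    return float(np.mean(spread))


t = np.linspace(0.0, 50.0, 5001)
identical = np.column_stack([np.sin(t)] * 10)  # fully synchronous toy traces
shifted = np.column_stack([np.sin(t + 0.1 * k) for k in range(10)])  # phase-lagged toy traces
print(sigma_eh(identical, discard=1000), sigma_eh(shifted, discard=1000))
```

Identical traces give a value at the level of floating-point rounding, while the phase-lagged traces give a clearly positive spread; a smaller σeh therefore indicates more similar neuronal behavior, which is how the measure is read throughout this section.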

Effect of the size of the neural network

To investigate the effect of network size on neural synchronization, the network size has been increased from Nn = 10 to Nn = 15. The resulting values of the performance measure σeh for the coupling parameters σc1 and σc2 are shown in Fig. 11. Figure 11 shows that increasing the network size reduces the performance parameter in all three cases; therefore, neural synchronization can be achieved with smaller coupling parameters. Note also that, for a given value of the performance parameter, the required σc2 is smaller than the required σc1.

Fig. 11 Standard deviation results of the neural network for different network sizes: (a) first-order coupling only, (b) second-order coupling only, and (c) both first- and second-order couplings; σc1: first-order coupling strength, σc2: second-order coupling strength, σeh: standard deviation, Nn: network size

On the other hand, a more accurate inference can be made by evaluating the cost function given in Eq. (7) together with the increasing network size. For this reason, to determine the optimal coupling parameters for the larger network, the PSO algorithm has been used with the same settings as in Sect. "Coupling of the fractional-order neuronal models". Under these conditions, the average values obtained for the cases of (i) first-order coupling only, (ii) second-order coupling only and (iii) both couplings active are (σc1 = 0.044, Ccst = 4.6236), (σc2 = 0.0017, Ccst = 2.2822) and (σc1 = 0.000338, σc2 = 0.00167, Ccst = 2.3117), respectively. These results show that the highest cost occurs with first-order coupling only. Incorporating second-order effects into the coupling mechanism reduces the cost and thus positively affects synchronization, and the lowest cost is obtained with second-order coupling only. Crucially, comparing the average values obtained for Nn = 10 in Table 1 with those calculated for Nn = 15 shows that the average cost increases with network size in all three coupling scenarios. Therefore, although a larger network reduces the coupling strengths required for synchronization, it also increases the average cost, and this trade-off should be taken into account.
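The size trade-off described above can be made concrete with simple arithmetic on the mean values quoted in this section for Nn = 10 and Nn = 15. The snippet only recomputes relative changes from those rounded means; it introduces no new data, and the dictionary layout and the helper `relative_change` are illustrative choices.

```python
# Mean values quoted in the text for Nn = 10 and Nn = 15.
reported = {
    "first-order only": {
        10: {"sigma_c1": 0.08014, "C_cst": 3.60782},
        15: {"sigma_c1": 0.044, "C_cst": 4.6236},
    },
    "second-order only": {
        10: {"sigma_c2": 0.00491, "C_cst": 1.76668},
        15: {"sigma_c2": 0.0017, "C_cst": 2.2822},
    },
    "first + second": {
        10: {"sigma_c1": 0.00052, "sigma_c2": 0.00494, "C_cst": 1.8003},
        15: {"sigma_c1": 0.000338, "sigma_c2": 0.00167, "C_cst": 2.3117},
    },
}


def relative_change(old, new):
    """Percentage change when going from old to new."""
    return 100.0 * (new - old) / old


changes = {}
for case, by_size in reported.items():
    # Compare each quantity at Nn = 15 against its value at Nn = 10.
    changes[case] = {key: relative_change(by_size[10][key], by_size[15][key])
                     for key in by_size[10]}
    summary = ", ".join(f"{k}: {v:+.1f}%" for k, v in changes[case].items())
    print(f"{case:18s} {summary}")
```

Going from Nn = 10 to Nn = 15, the mean cost rises by roughly 28 to 29% in every scenario, while the required coupling strengths fall by about 35 to 66%, which is the trade-off stated in the paragraph above.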

Considering all of the numerical simulation results, three main inferences can be drawn:

(i) Second-order interactions, when combined with first-order couplings, reduce both the cost of synchronization and the mean error values. Therefore, high-order interactions contribute positively to neural synchronization.

(ii) The fractional order of the HR neuron model affects the neural synchronization performance. As the fractional order parameter increases, the performance criterion σeh decreases. This is because the frequency of the neural response increases with the fractional order, resulting in a faster response. Thus, a larger fractional order may be a better choice in terms of neural synchronization.

(iii) The network size also influences neural synchronization. Larger networks require smaller coupling parameters for neural synchronization. However, the increased cost that accompanies a larger network must not be disregarded.

Conclusion

In this study, high-order interactions have been included in the coupling mechanism of a neural network consisting of fractional-order HR neuron models. The effects of first- and second-order couplings on neural synchronization have been investigated both individually and in combination. Numerical simulation results show that second-order couplings positively affect neural synchronization by reducing both the cost and the pairwise coupling strength. Additionally, according to the performance measures, decreasing the fractional order has a negative effect on synchronization. On the other hand, the magnitude of the coupling parameters required for neural synchronization decreases with increasing network size. Consequently, a neural network with a high fractional order and with both first- and high-order interactions active is more favorable for neural synchronization. Finally, because fractional-order neural networks take all past values of each neuron into account, the computation becomes considerably more demanding and the cost increases as the network grows.

The effects of high-order interactions and fractional calculus on multilayer structures with map-based neuron models are possible subjects for future studies, because discrete map-based neuron models are computationally advantageous, especially as the network size increases. Moreover, the accuracy of results in fractional calculus depends on the numerical method employed, and if highly accurate methods are used, the computational cost can become a serious problem. Another possible research topic is the synchronization of fractional-order neural networks in the presence of both high-order interactions and energy exchange.
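The memory effect and the accuracy-versus-cost issue mentioned above can be illustrated on the scalar Caputo test problem D^q x = -x, x(0) = 1, whose exact solution is the Mittag-Leffler function E_q(-t^q). This sketch uses a simple implicit Grünwald–Letnikov scheme, which is not necessarily the solver used in the article (the reference list includes Adams-type predictor-corrector methods, e.g. Diethelm et al. 2004 and Garrappa 2010). The test problem, the order q = 0.9 and the step sizes are assumptions chosen for illustration.

```python
import math

import numpy as np


def gl_weights(q, n):
    """Grünwald–Letnikov coefficients c_j = (-1)^j * binom(q, j) for j = 0..n."""
    c = np.empty(n + 1)
    c[0] = 1.0
    for j in range(1, n + 1):
        c[j] = c[j - 1] * (1.0 - (1.0 + q) / j)
    return c


def solve_relaxation_gl(q, h, t_end, x0=1.0):
    """Implicit GL scheme for the Caputo problem D^q x = -x, x(0) = x0.

    Each step sums over the entire stored history, so the work grows as O(n^2).
    Returns the final value and the number of multiply-adds in the memory terms.
    """
    n = int(round(t_end / h))
    c = gl_weights(q, n)
    x = np.empty(n + 1)
    x[0] = x0
    for k in range(1, n + 1):
        # Memory term: sum_{j=1..k} c_j * (x_{k-j} - x0) over the full past.
        memory = np.dot(c[1:k + 1], x[k - 1::-1] - x0)
        x[k] = (x0 - memory) / (1.0 + h ** q)
    return x[-1], n * (n + 1) // 2


def mittag_leffler(alpha, z, terms=150):
    """Truncated series for E_alpha(z); adequate for the moderate |z| used here."""
    return sum(z ** k / math.gamma(alpha * k + 1) for k in range(terms))


q, t_end = 0.9, 1.0
exact = mittag_leffler(q, -t_end ** q)
for h in (0.01, 0.001):
    approx, work = solve_relaxation_gl(q, h, t_end)
    print(f"h = {h}: |error| = {abs(approx - exact):.2e}, memory multiply-adds = {work}")
```

Reducing the step size tenfold lowers the error but multiplies the memory work by roughly one hundred (5050 versus 500500 multiply-adds for a single variable), and in a network this cost is incurred for every state variable of every neuron.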

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Alvarez-Rodriguez U, Battiston F, de Arruda GF, Moreno Y, Perc M, Latora V (2021) Evolutionary dynamics of higher-order interactions in social networks. Nat Hum Behav 5(5):586–595. https://doi.org/10.1038/s41562-020-01024-1

Amari SI, Nakahara H, Wu S, Sakai Y (2003) Synchronous firing and higher-order interactions in neuron pool. Neural Comput 15:127–142. https://doi.org/10.1162/089976603321043720

Anwar MS, Ghosh D (2022) Intralayer and interlayer synchronization in multiplex network with higher-order interactions. Chaos 32:033125. https://doi.org/10.1063/5.0074641

Baleanu D, Diethelm K, Scalas E, Trujillo JJ (2016) Fractional calculus: models and numerical methods, 2nd edn. World Scientific, Singapore

Battiston F, Cencetti G, Iacopini I, Latora V, Lucas M, Patania A, Young JG, Petri G (2020) Networks beyond pairwise interactions: structure and dynamics. Phys Rep 874:1–92. https://doi.org/10.1016/J.PHYSREP.2020.05.004

Belykh I, de Lange E, Hasler M (2005) Synchronization of bursting neurons: what matters in the network topology. Phys Rev Lett 94:188101. https://doi.org/10.1103/PhysRevLett.94.188101

Bick C, Gross E, Harrington HA, Schaub MT (2023) What are higher-order networks? SIAM Rev 65:686–731. https://doi.org/10.1137/21M1414024

Boccaletti S, De Lellis P, del Genio CI, Alfaro-Bittner K, Criado R, Jalan S, Romance M (2023) The structure and dynamics of networks with higher order interactions. Phys Rep 1018:1–64. https://doi.org/10.1016/j.physrep.2023.04.002

Casado JM (2003) Synchronization of two Hodgkin–Huxley neurons due to internal noise. Phys Lett A 310:400–406. https://doi.org/10.1016/S0375-9601(03)00387-6

Dalir M, Bashour M (2010) Applications of fractional calculus. Appl Math Sci 4:1021–1032

Dar MR, Kant NA, Khanday FA (2021) Dynamics and implementation techniques of fractional-order neuron models: a survey. In: Radwan AG, Khanday FA, Said LA (eds) Fractional order systems: an overview of mathematics, design, and applications for engineers. Academic Press, London, pp 483–511

Dayan P, Abbott LF (2001) Theoretical neuroscience: computational and mathematical modeling of neural systems. MIT Press, Cambridge

Diethelm K, Ford NJ, Freed AD (2004) Detailed error analysis for a fractional Adams method. Numer Algorithms 36:31–52. https://doi.org/10.1023/B:NUMA.0000027736.85078.be

Ding D, Jiang L, Hu Y, Li Q, Yang Z, Zhang Z, Wu Q (2021) Hidden dynamical behaviors, sliding mode control and circuit implementation of fractional-order memristive Hindmarsh−Rose neuron model. Eur Phys J Plus 136:66. https://doi.org/10.1140/EPJP/S13360-021-01107-6

Drapaca CS (2017) Fractional calculus in neuronal electromechanics. J Mech Mater Struct 12:35–55. https://doi.org/10.2140/jomms.2017.12.35

Elson RC, Selverston AI, Huerta R, Rulkov NF, Rabinovich MI, Abarbanel HDI (1998) Synchronous behavior of two coupled biological neurons. Phys Rev Lett 81:5692–5695. https://doi.org/10.1103/PhysRevLett.81.5692

Fan DG, Wang QY (2017) Synchronization and bursting transition of the coupled Hindmarsh–Rose systems with asymmetrical time-delays. Sci China Technol Sci 60:1019–1031. https://doi.org/10.1007/s11431-016-0169-8

FitzHugh R (1969) Mathematical models of excitation and propagation in nerve. In: Biological engineering. McGraw-Hill, pp 1–85

Gad AG (2022) Particle swarm optimization algorithm and its applications: a systematic review. Arch Comput Methods Eng 29:2531–2561. https://doi.org/10.1007/s11831-021-09694-4

Gallo L, Muolo R, Gambuzza LV, Latora V, Frasca M, Carletti T (2022) Synchronization induced by directed higher-order interactions. Commun Phys. https://doi.org/10.1038/s42005-022-01040-9

Gambuzza LV, Di Patti F, Gallo L, Lepri S, Romance M, Criado R, Frasca M, Latora V, Boccaletti S (2021) Stability of synchronization in simplicial complexes. Nat Commun 12:66. https://doi.org/10.1038/s41467-021-21486-9

Garrappa R (2010) On linear stability of predictor-corrector algorithms for fractional differential equations. Int J Comput Math 87(10):2281–2290. https://doi.org/10.1080/00207160802624331

Gerstner W, Kistler WM, Naud R, Paninski L (2014) Neuronal dynamics: from single neurons to networks and models of cognition. Cambridge University Press, Cambridge

Giresse TA, Crepin KT, Martin T (2019) Generalized synchronization of the extended Hindmarsh–Rose neuronal model with fractional order derivative. Chaos Solitons Fract 118:311–319. https://doi.org/10.1016/j.chaos.2018.11.028

Guo SL, Xu Y, Wang CN, Jin WY, Hobiny A, Ma J (2017) Collective response, synapse coupling and field coupling in neuronal network. Chaos Solitons Fract 105:120–127. https://doi.org/10.1016/j.chaos.2017.10.019

Hindmarsh JL, Rose RM (1984) A model of neuronal bursting using three coupled first order differential equations. Proc R Soc London Ser B 221:87–102. https://doi.org/10.1098/rspb.1984.0024

Hodgkin AL, Huxley AF (1952) A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol 117:500. https://doi.org/10.1113/JPHYSIOL.1952.SP004764

Ince RAA, Montani F, Arabzadeh E, Diamond ME, Panzeri S (2009) On the presence of high-order interactions among somatosensory neurons and their effect on information transmission. J Phys Conf Ser 197:012013. https://doi.org/10.1088/1742-6596/197/1/012013

Ionescu C, Lopes A, Copot D, Machado JAT, Bates JHT (2017) The role of fractional calculus in modeling biological phenomena: a review. Commun Nonlinear Sci Numer Simul 51:141–159. https://doi.org/10.1016/J.CNSNS.2017.04.001

Izhikevich EM (2003) Simple model of spiking neurons. IEEE Trans Neural Netw 14:1569–1572. https://doi.org/10.1109/TNN.2003.820440

Jun D, Guang-Jun Z, Yong X, Hong Y, Jue W (2014) Dynamic behavior analysis of fractional-order Hindmarsh–Rose neuronal model. Cogn Neurodyn 8:167–175. https://doi.org/10.1007/S11571-013-9273-X

Kaslik E, Radulescu IR (2017) Dynamics of complex-valued fractional-order neural networks. Neural Netw 89:66. https://doi.org/10.1016/j.neunet.2017.02.011

Korkmaz N, Saçu İE (2022) An alternative perspective on determining the optimum fractional orders of the synaptic coupling functions for the simultaneous neural patterns. Nonlinear Dyn 110:3791–3806. https://doi.org/10.1007/S11071-022-07782-Z

Li JS, Dasanayake I, Ruths J (2013) Control and synchronization of neuron ensembles. IEEE Trans Autom Contr 58:1919–1930. https://doi.org/10.1109/TAC.2013.2250112

Liu D, Zhao S, Luo X, Yuan Y (2021) Synchronization for fractional-order extended Hindmarsh–Rose neuronal models with magneto-acoustical stimulation input. Chaos Solitons Fract. https://doi.org/10.1016/j.chaos.2020.110635

Lord LD, Expert P, Fernandes HM, Petri G, Van Hartevelt TJ, Vaccarino F, Deco G, Turkheimer F, Kringelbach ML (2016) Insights into brain architectures from the homological scaffolds of functional connectivity networks. Front Syst Neurosci. https://doi.org/10.3389/FNSYS.2016.00085

Lundstrom BN, Higgs MH, Spain WJ, Fairhall AL (2008) Fractional differentiation by neocortical pyramidal neurons. Nat Neurosci 11:1335–1342. https://doi.org/10.1038/nn.2212

Ma J, Tang J (2017) A review for dynamics in neuron and neuronal network. Nonlinear Dyn 89:1569–1578. https://doi.org/10.1007/s11071-017-3565-3

Ma J, Mi L, Zhou P, Xu Y, Hayat T (2017) Phase synchronization between two neurons induced by coupling of electromagnetic field. Appl Math Comput 307:321–328. https://doi.org/10.1016/j.amc.2017.03.002

Majhi S, Perc M, Ghosh D (2022) Dynamics on higher-order networks: a review. J R Soc Interface 19(188):20220043. https://doi.org/10.1098/rsif.2022.0043

Malik SA, Mir AH (2020) FPGA realization of fractional order neuron. Appl Math Model 81:372–385. https://doi.org/10.1016/j.apm.2019.12.008

Malik SA, Mir AH (2021) Discrete multiplierless implementation of fractional order Hindmarsh–Rose model. IEEE Trans Emerg Top Comput Intell 5:792–802. https://doi.org/10.1109/TETCI.2020.2979462

Malik SA, Mir AH (2022) Synchronization of fractional order neurons in presence of noise. IEEE/ACM Trans Comput Biol Bioinform 19:1887–1896. https://doi.org/10.1109/TCBB.2020.3040954

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133. https://doi.org/10.1007/BF02478259

Mehrabbeik M, Jafari S, Perc M (2023a) Synchronization in simplicial complexes of memristive Rulkov neurons. Front Comput Neurosci. https://doi.org/10.3389/fncom.2023.1248976

Mehrabbeik M, Ahmadi A, Bakouie F, Jafari AH, Jafari S, Ghosh D (2023b) The impact of higher-order interactions on the synchronization of Hindmarsh–Rose neuron maps under different coupling functions. Mathematics 11(13):2811. https://doi.org/10.3390/math11132811

Meng F, Zeng X, Wang Z, Wang X (2020) Adaptive synchronization of fractional-order coupled neurons under electromagnetic radiation. Int J Bifurc Chaos. https://doi.org/10.1142/S0218127420500443

Millan AP, Torres JJ, Bianconi G (2020) Explosive higher-order Kuramoto dynamics on simplicial complexes. Phys Rev Lett. https://doi.org/10.1103/PhysRevLett.124.218301

Mirzaei S, Mehrabbeik M, Rajagopal K, Jafari S, Chen G (2022) Synchronization of a higher-order network of Rulkov maps. Chaos 32:123133. https://doi.org/10.1063/5.0117473

Parastesh F, Mehrabbeik M, Rajagopal K, Jafari S, Perc M (2022) Synchronization in Hindmarsh–Rose neurons subject to higher-order interactions. Chaos 32:013125. https://doi.org/10.1063/5.0079834

Petri G, Expert P, Turkheimer F, Carhart-Harris R, Nutt D, Hellyer PJ, Vaccarino F (2014) Homological scaffolds of brain functional networks. J R Soc Interface 11:20140873. https://doi.org/10.1098/rsif.2014.0873

Podlubny I (1998) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications. Academic Press, London

Ramasamy M, Devarajan S, Kumarasamy S, Rajagopal K (2022) Effect of higher-order interactions on synchronization of neuron models with electromagnetic induction. Appl Math Comput 434:127447. https://doi.org/10.1016/J.AMC.2022.127447

Shajan E, Asir MP, Dixit S, Kurths J, Shrimali MD (2021) Enhanced synchronization due to intermittent noise. New J Phys. https://doi.org/10.1088/1367-2630/ac3885

Shi M, Wang Z (2014) Abundant bursting patterns of a fractional-order Morris–Lecar neuron model. Commun Nonlinear Sci Numer Simul 19:1956–1969. https://doi.org/10.1016/j.cnsns.2013.10.032

Skardal PS, Arenas A (2021) Memory selection and information switching in oscillator networks with higher-order interactions. J Phys Complex. https://doi.org/10.1088/2632-072X/abbd4c

Song C, Cao J (2014) Dynamics in fractional-order neural networks. Neurocomputing 142:494–498. https://doi.org/10.1016/j.neucom.2014.03.047

Squire LR, Berg D, Bloom FE, du Lac S, Ghosh A, Spitzer NC (2008) Fundamental neuroscience. Academic Press, London

Sun HG, Zhang Y, Baleanu D, Chen W, Chen YQ (2018) A new collection of real world applications of fractional calculus in science and engineering. Commun Nonlinear Sci Numer Simul 64:213–231. https://doi.org/10.1016/J.CNSNS.2018.04.019

Sun XJ, Liu ZF, Perc M (2019) Effects of coupling strength and network topology on signal detection in small-world neuronal networks. Nonlinear Dyn 96:2145–2155. https://doi.org/10.1007/S11071-019-04914-W

Sun G, Yang F, Ren G, Wang C (2023) Energy encoding in a biophysical neuron and adaptive energy balance under field coupling. Chaos Solitons Fract. https://doi.org/10.1016/j.chaos.2023.113230

Tavazoei MS, Haeri M (2009) A note on the stability of fractional order systems. Math Comput Simul 79:1566–1576. https://doi.org/10.1016/J.MATCOM.2008.07.003

Teka WW, Upadhyay RK, Mondal A (2018) Spiking and bursting patterns of fractional-order Izhikevich model. Commun Nonlinear Sci Numer Simul 56:161–176. https://doi.org/10.1016/J.CNSNS.2017.07.026

Tlaie A, Leyva I, Sendina-Nadal I (2019) High-order couplings in geometric complex networks of neurons. Phys Rev E. https://doi.org/10.1103/PhysRevE.100.052305

Tolba MF, Elsafty AH, Armanyos M, Said LA, Madian AH, Radwan AG (2019) Synchronization and FPGA realization of fractional-order Izhikevich neuron model. Microelectronics J 89:56–69. https://doi.org/10.1016/j.mejo.2019.05.003

Upadhyay RK, Mondal A (2015) Dynamics of fractional order modified Morris–Lecar neural model. Netw Biol 5:113–136. https://doi.org/10.0000/issn-2220-8879-networkbiology-2015-v5-0010

Usha K, Subha PA (2019) Collective dynamics and energy aspects of star-coupled Hindmarsh–Rose neuron model with electrical, chemical and field couplings. Nonlinear Dyn 96:2115–2124. https://doi.org/10.1007/S11071-019-04909-7

Xie Y, Kang Y, Liu Y, Wu Y (2014) Firing properties and synchronization rate in fractional-order Hindmarsh–Rose model neurons. Sci China Technol Sci 57:914–922. https://doi.org/10.1007/S11431-014-5531-3

Xie Y, Yao Z, Ma J (2022) Phase synchronization and energy balance between neurons. Front Inform Technol Electron Eng 23:1407–1420. https://doi.org/10.1631/FITEE.2100563

Xu Y, Jia Y, Ma J, Alsaedi A, Ahmad B (2017) Synchronization between neurons coupled by memristor. Chaos Solitons Fract 104:435–442. https://doi.org/10.1016/j.chaos.2017.09.002

Yang X, Zhang G, Li X, Wang D (2021) The synchronization behaviors of coupled fractional-order neuronal networks under electromagnetic radiation. Symmetry 13(11):2204. https://doi.org/10.3390/sym13112204

Yao Z, Sun K, He S (2023) Synchronization in fractional-order neural networks by the energy balance strategy. Cogn Neurodyn. https://doi.org/10.1007/s11571-023-10023-7

Zhang JQ, Huang SF, Pang ST, Wang MS, Gao S (2015) Synchronization in the uncoupled neuron system. Chin Phys Lett. https://doi.org/10.1088/0256-307X/32/12/120502

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saçu, İ.E. Effects of high-order interactions on synchronization of a fractional-order neural system. Cogn Neurodyn 18, 1877–1893 (2024). https://doi.org/10.1007/s11571-023-10055-z

DOI: https://doi.org/10.1007/s11571-023-10055-z