Abstract

Background

Structured reporting (SR) in radiology is becoming increasingly necessary and has been recognized recently by major scientific societies. This study aims to build structured CT-based reports in colon cancer during the staging phase in order to improve communication between the radiologist, members of multidisciplinary teams and patients.

Materials and methods

A panel of expert radiologists, members of the Italian Society of Medical and Interventional Radiology, was established. A modified Delphi process was used to develop the SR and to assess the level of agreement for all report sections. Cronbach’s alpha (Cα) correlation coefficient was used to assess the internal consistency of each section, with a quality analysis based on the average inter-item correlation.

Results

The final SR version was built by including n = 18 items in the “Patient Clinical Data” section, n = 7 items in the “Clinical Evaluation” section, n = 9 items in the “Imaging Protocol” section and n = 29 items in the “Report” section. Overall, 63 items were included in the final version of the SR. In both the first and second rounds, all sections received a higher than good rating: a mean value of 4.6 (range 3.6–4.9) in the first round and a mean value of 5.0 (range 4.9–5) in the second round. In the first round, Cronbach’s alpha (Cα) correlation coefficient was a questionable 0.61; the overall mean score of the experts and the sum of scores for the structured report were 4.6 (range 1–5) and 1111 (mean value 74.07, STD 4.85), respectively. In the second round, Cα was an acceptable 0.70; the overall mean score of the experts and the sum of scores for the structured report were 4.9 (range 4–5) and 1108 (mean value 79.14, STD 1.83), respectively. The overall mean score in the second round was higher than in the first round, and the lower standard deviation indicates greater agreement among the experts on the structured report in this round.

Conclusions

A wide implementation of SR is of critical importance in order to offer referring physicians and patients optimum quality of service and to provide researchers with the best quality data in the context of big data exploitation of available clinical data. Implementation is a complex procedure, requiring mature technology to successfully address the multiple challenges of user-friendliness, organization and interoperability.

Introduction

The clear communication of imaging features to referring physicians is critical for patient management. The information contained in the report drives both the decision-making process and subsequent treatment. Radiologists’ reports still represent the gold standard concerning comprehensiveness and accuracy [1, 2]. Radiology reports are habitually produced as non-structured free-text reports (FTR). However, variations with regard to content, style and presentation can hamper information transfer and reduce the precision of the reports, which can in turn adversely affect the extraction of the required key information by the referring physician [2]. At worst, the consequent communication errors can lead to improper diagnosis and delayed initiation of adequate therapy, with adverse patient outcomes. The American Recovery and Reinvestment Act and the Health Information Technology for Economic and Clinical Health Act specified that structuring data in health records leads to significant progress in patient outcomes [1, 2]. Since the radiology report is part of the health record, FTR should be systematized and shifted toward structured reports (SR). The question of whether all diagnostic processes should have SRs remains open [2, 3]. The main reasons for a shift from FTR to SR focus on three key features: quality, datafication/quantification and accessibility [2]. The use of templates provides a checklist as to whether all relevant items for a specific technique are addressed. Moreover, thanks to this “structure,” the radiological report allows for the association of radiological data and other key clinical features, leading to a precise diagnosis and personalized medicine. With regard to accessibility, it is well known that radiology reports are a rich source of data for research. Structuring these reports allows automated data mining, which may help to validate the relevance of imaging biomarkers by highlighting the clinical contexts in which they are most appropriate and helping to devise potential new application domains. For this reason, radiology reports should be structured with content based on standard terminology and should be accessible via standard access mechanisms and protocols [2].

Despite the obvious advantages, structured templates have not yet become established in the radiological routine. The reasons for this include the current lack of usable templates and the minimal availability of software solutions for SR [4].

In this scenario, the Italian Society of Medical and Interventional Radiology (SIRM) created an Italian warehouse of SR templates that can be freely accessed by all SIRM members, with the purpose of its routine use in a clinical setting [3, 5, 6].

The role of imaging in clinically staging colorectal cancer has grown substantially in the twenty-first century, with more widespread availability of multi-row detector computed tomography (CT) [7,8,9], high-resolution magnetic resonance imaging (MRI) [10,11,12,13,14,15] and integrated positron-emission tomography (PET)/CT [16, 17]. In contrast to many other cancers, increasing colorectal cancer stage is not highly correlated with survival. For locally advanced, resectable, non-metastatic colon cancer (T1N0 to T4bN4), colectomy or endoscopic surgery followed by chemotherapy is recommended; for resectable metastatic colon cancer (CC), neoadjuvant chemotherapy and colectomy with or without chemotherapy are recommended [18]. The 5-year survival rates of patients with stage I, II and III CC are ∼ 93, 80 and 60%, respectively [19]. The American Joint Committee on Cancer (AJCC) TNM staging system is widely used to assess the prognosis of patients with CC [20]. However, the prognosis of patients with CC at the same stage varies widely, and the accuracy of TNM staging as a predictive approach has certain limitations [21]. Therefore, another approach is needed to identify patients with poor prognosis to allow for the development of individualized treatment and monitoring approaches [21].

The correct stratification of patients requires not only an accurate TNM diagnosis but also the identification of various risk factors (e.g., mucinous tumor), as well as adequate communication between the radiologist and the multidisciplinary group, which takes place via radiological reports. To improve this communication and meet the needs of clinicians, the aim of this study is to propose an SR template for colon cancer based on CT to guide radiologists in the systematic reporting of neoplasm findings at the staging phase.

Materials and methods

Expert panel

Following extensive discussion between expert radiologists, a multi-round consensus-building Delphi exercise was performed to develop a comprehensive focused SR template for CT at the staging phase of patients with colon cancer.

A SIRM radiologist, expert in abdominal imaging, created the first draft of the SR for CT colon cancer with the collaboration of a surgeon specializing in colon cancer.

A working team of 15 experts was set up, with members from the Italian College of Gastro-enteric Radiologists and of Diagnostic Imaging in Oncology Radiologists from SIRM. Their aim was to revise the initial draft iteratively, with the objective of reaching a final consensus on SR.

Selection of the Delphi domains and items

All the experts reviewed literature data on the main scientific databases, including PubMed, Scopus and Google Scholar, to assess papers on colon cancer CT and structured radiology reports from December 2000 to May 2021. The full text of the selected studies was reviewed by all members of the expert panel, and each of them developed and shared the list of Delphi items via emails and/or teleconferences.

The SR was divided into four sections: (a) Patient Clinical Data, (b) Clinical Evaluation, (c) Imaging Protocol and (d) Report. A dedicated section of significant images was added as part of the report.

Two Delphi rounds were performed. During the first round, each panelist independently contributed to refining the SR draft by means of online meetings or email exchanges. The level of panelists’ agreement for each SR model was tested in the second Delphi round through a Google Form questionnaire shared by email. Each expert expressed an individual rating for each specific template section by using a five-point Likert scale (1 = strongly disagree, 2 = slightly disagree, 3 = slightly agree, 4 = generally agree, 5 = strongly agree).

After the second Delphi round, the final version of the SR was generated on the dedicated RSNA website (radreport.org) by using a T-Rex template format, in line with the IHE (Integrating the Healthcare Enterprise) and MRRT (Management of Radiology Report Templates) profiles, accessible as open-source software, with the technical support of Exprivia (Exprivia SpA, Bari, Italy). These profiles determine both the format of radiology report templates [using version 5 of Hypertext Markup Language (HTML5)] and the transport mechanism to request, retrieve and store these templates [22]. The radiology report was structured by using a series of “codified queries” integrated in the T-Rex editor’s preselected sections [22].

Statistical analysis

Answers from each panelist were exported to a Microsoft Excel® format for ease of data collection and statistical analysis.

All ratings of panelists for each section were analyzed with descriptive statistics measuring the mean score, the standard deviation and the sum of scores. A mean score of 3 was considered good and a score of 4 excellent.
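The descriptive statistics named above can be illustrated with a minimal Python sketch. The ratings matrix is hypothetical (it is not the study data), the function names `describe` and `rating_label` are illustrative, and the use of the sample standard deviation and of "3 or higher" and "4 or higher" as cut-offs are assumptions, since the text does not specify them.

```python
import numpy as np

# Hypothetical Likert ratings (1-5): rows = panelists, columns = rated items.
# These values are illustrative only and are NOT the study data.
ratings = np.array([
    [5, 4, 5, 5],
    [4, 4, 5, 5],
    [5, 5, 5, 4],
])


def describe(ratings):
    """Return the mean score, sum of scores and standard deviation."""
    x = np.asarray(ratings, dtype=float)
    per_panelist_sum = x.sum(axis=1)  # one total per panelist
    return {
        "item_means": x.mean(axis=0),            # mean rating per item
        "overall_mean": x.mean(),                # mean over all ratings
        "sum_of_scores": per_panelist_sum.sum(), # grand total
        "mean_sum": per_panelist_sum.mean(),     # mean of panelist totals
        # Assumption: sample standard deviation (ddof=1) of panelist totals.
        "std_sum": per_panelist_sum.std(ddof=1),
    }


def rating_label(mean_score):
    """Map a mean score to a label (cut-offs assumed to be inclusive)."""
    if mean_score >= 4:
        return "excellent"
    if mean_score >= 3:
        return "good"
    return "below good"


stats = describe(ratings)
print(stats["overall_mean"], stats["sum_of_scores"], rating_label(stats["overall_mean"]))
```

With real data, each row would hold one panelist's answers exported from the questionnaire.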

To measure the internal consistency of the panelist ratings for each section of the report, a quality analysis based on the average inter-item correlation was performed with Cronbach’s alpha (Cα) correlation coefficient [23, 24]. The Cα test provides a measure of the internal consistency of a test or scale; it is expressed as a number between 0 and 1. Internal consistency describes the extent to which all the items in a test measure the same concept. Cα was determined after each round.

The closer the Cα coefficient is to 1.0, the greater the internal consistency of the items in the scale. An alpha coefficient (α) ≥ 0.9 was considered excellent, α ≥ 0.8 good, α ≥ 0.7 acceptable, α ≥ 0.6 questionable, α ≥ 0.5 poor and α < 0.5 unacceptable. However, during the iterations an α of 0.8 was considered a reasonable goal for internal reliability.
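As an illustration of how such a coefficient can be computed and graded, the following Python sketch uses the common variance-based form of Cronbach's alpha, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). This is an assumption for illustration: the study analysis was run in MATLAB and the exact variant used is not stated. The function names `cronbach_alpha` and `interpret_alpha` and the example matrix are hypothetical; the grading thresholds are those listed above.

```python
import numpy as np


def cronbach_alpha(scores):
    """Variance-based Cronbach's alpha.

    scores: 2-D array-like, rows = panelists, columns = rated items.
    """
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("at least two items are required")
    item_variances = x.var(axis=0, ddof=1).sum()  # sum of per-item variances
    total_variance = x.sum(axis=1).var(ddof=1)    # variance of panelist totals
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1.0 - item_variances / total_variance)


def interpret_alpha(alpha):
    """Grade alpha using the thresholds stated in the text."""
    if alpha >= 0.9:
        return "excellent"
    if alpha >= 0.8:
        return "good"
    if alpha >= 0.7:
        return "acceptable"
    if alpha >= 0.6:
        return "questionable"
    if alpha >= 0.5:
        return "poor"
    return "unacceptable"


# Hypothetical example: every panelist gives the same score to all items,
# so the items are perfectly consistent with one another.
example = [[5, 5, 5], [4, 4, 4], [3, 3, 3]]
alpha = cronbach_alpha(example)
print(round(alpha, 2), interpret_alpha(alpha))
```

Note that the coefficient is undefined when all panelists have identical total scores, which is why the sketch raises an error in that case rather than returning a value.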

The data analysis was performed using MATLAB’s Statistics Toolbox (The MathWorks, Inc., Natick, MA, USA).

Results

Structured report

The final SR version (Appendix 1 in the Electronic Supplementary Material) was built by including n = 18 items in the “Patient Clinical Data” section, n = 7 items in the “Clinical Evaluation” section, n = 9 items in the “Imaging Protocol” section and n = 29 items in the “Report” section. Overall, 63 items were included in the final version of the SR.
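The section layout and item counts can be summarized in a short Python sketch. This is only an illustrative data structure (the class `Section` and the helper `total_items` are hypothetical names, not part of the T-Rex template); the item counts come from the text above, and the `mandatory` flag reflects the statement in the Discussion that only the “Report” section is mandatory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Section:
    name: str
    n_items: int
    mandatory: bool


# Item counts as reported for the final SR version.
SECTIONS = [
    Section("Patient Clinical Data", 18, mandatory=False),
    Section("Clinical Evaluation", 7, mandatory=False),
    Section("Imaging Protocol", 9, mandatory=False),
    Section("Report", 29, mandatory=True),
]


def total_items(sections):
    """Sum the number of items across all sections."""
    return sum(s.n_items for s in sections)


print(total_items(SECTIONS))  # 18 + 7 + 9 + 29 = 63
```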

The “Patient Clinical Data” section included patient clinical data, previous or family history of malignancies, risk factors or predisposing pathologies. This section also included the item “Allergies,” covering drugs, non-drug substances and contrast media.

The “Clinical Evaluation” section collected previous examination results, a genetic panel and clinical symptoms.

The “Imaging Protocol” section included data on the equipment used, the number of detectors and whether it was multidetector or dual energy, including data on the reconstruction algorithm and slice thickness. In addition, we collected data on contrast study protocol, including data on the contrast study phase, as well as data concerning the contrast medium, such as the active principle, commercial name, dosage, flow rate, concentration and ongoing adverse events.

The “Report” section included data on lesion site, type, size, presence of prosthesis and/or colostomy, local invasion, tumor stage, node stage and metastases stage, as well as the presence of peritoneal carcinomatosis, according to the peritoneal carcinomatosis index (PCI) described by Sugarbaker [25].

Consensus agreement

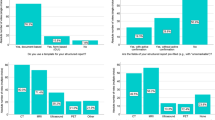

Table 1 reports the single score and sum of scores the 15 panelists gave for the structured report in the first round. One of the experts did not participate in the second round, with a total of 14 panelists for this round. Table 2 reports the single score and sum of scores the panelists gave the structured report in the second round.

In both the first and second rounds, as reported in Tables 1 and 2, all sections received more than a good rating: a mean value of 4.6 (range 3.6–4.9) in the first round and a mean value of 5.0 (range 4.9–5) in the second round.

In the first round, Cronbach’s alpha (Cα) correlation coefficient was a questionable 0.61. In the first round, the overall mean score of the experts and the sum of scores for the structured report were 4.6 (range 1–5) and 1111 (mean value 74.07, STD 4.85), respectively.

In the second round, Cronbach’s alpha (Cα) correlation coefficient was an acceptable 0.70. In the second round, the overall mean score of the experts and the sum of scores for the structured report were 4.9 (range 4–5) and 1108 (mean value 79.14, STD 1.83), respectively.
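The reported totals can be cross-checked with simple arithmetic. The sketch below assumes that the “mean value” in parentheses is the mean of the per-panelist sums, so that multiplying it by the number of panelists should reproduce the sum of scores; this reading is an assumption, and the names `ROUNDS` and `implied_total` are illustrative.

```python
# Values as reported in the text; the panelist counts are 15 and 14.
ROUNDS = {
    "first": {"panelists": 15, "mean_sum": 74.07, "reported_total": 1111},
    "second": {"panelists": 14, "mean_sum": 79.14, "reported_total": 1108},
}


def implied_total(panelists, mean_sum):
    """Total implied by the mean per-panelist sum (assumed interpretation)."""
    return panelists * mean_sum


for name, r in ROUNDS.items():
    total = implied_total(r["panelists"], r["mean_sum"])
    print(name, round(total, 2), r["reported_total"])
```

Under this reading, the slightly lower sum in the second round (1108 versus 1111) is compatible with a higher mean score, because one fewer panelist contributed ratings.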

The overall mean score of the experts in the second round was higher than in the first round, and the lower standard deviation indicates greater agreement among the experts on the structured report in this round.

Discussion

To the best of our knowledge, this is the first time that a group of experts has promoted the creation of a structured report for the staging of colon cancer, based on a multi-round consensus-building Delphi exercise following in-depth discussion between expert radiologists in gastro-enteric and oncological imaging. In our previous study [3], we assessed an SR template for rectal cancer (RC) MRI during the staging and re-staging phases. Although the RC MRI templates were also based on a multi-round consensus-building Delphi exercise, in this study the proposed SR draft derives from the multidisciplinary agreement of a radiologist with a surgeon. A multidisciplinary validation of the SR is necessary, because precision medicine today is based on multidisciplinary management of the patient.

The final SR version was divided into four sections: (a) Patient Clinical Data, (b) Clinical Evaluation, (c) Imaging Protocol and (d) Report, including 63 items. In both the first and second rounds, all sections received more than a good rating; however, the weakest sections were “Patient Clinical Data” and “Clinical Evaluation.” In the first round, Cronbach’s alpha (Cα) correlation coefficient was a questionable 0.61. In the second round, Cronbach’s alpha (Cα) correlation coefficient was an acceptable 0.70.

Weiss et al. described three levels of SR [26]: (a) The first level is a structured format with paragraphs and subheadings. Currently, almost all radiology reports contain this structure, with sections for clinical information, examination protocol, radiological findings and a conclusion to highlight the most important findings; (b) the second level refers to a consistent organization; and (c) the third level directly addresses the consistent use of dedicated terminology, namely standard language. The present SR is a third-level SR since it is based on standardized terminology and structures, features required to adhere to diagnostic–therapeutic recommendations and enrollment in clinical trials and to reduce any ambiguity that may arise from non-conventional lexicon. Thanks to this “structure,” this report may allow the association of radiological data and other clinical features in order to obtain personalized medicine. In fact, as regards accessibility, it is well known that radiology reports are a rich source of data for research. This allows for automated data mining, which may help to validate the relevance of imaging biomarkers by highlighting the clinical contexts in which they are most appropriate and useful in devising potential new application domains [27,28,29,30,31,32,33,34,35]. As a means of obtaining large databases (and considering the fact that only the “Report” section is mandatory, while the others do not have to be compiled, so as not to slow down workflow), in the second round good agreement was also reached in the “Patient Clinical Data” and “Clinical Evaluation” sections.

In the “Imaging Protocol” section, sharing the examination technique (not only within one's own department but also with the radiology departments of other centers) allows for the standardization and optimization of study protocols. For example, during follow-up, differences in acquisition parameters and segmentation algorithms are important features that can lead to variability in volumetric assessment. Thus, slice thickness and other protocol-related features such as the reconstruction kernel and field of view should remain constant for reliable measurements to be performed. In the protocol optimization stage, enhanced communication between different centers can theoretically lead to quality improvement through enhanced patient safety, contrast optimization and image quality [36,37,38].

A final consideration should be made on the possibility of using a template to guide the radiologist’s description of important radiological features that might be omitted in a free-text report through simple distraction. For example, in our report a significant section is dedicated to the description of peritoneal carcinomatosis. A correct evaluation of this (with reference to Sugarbaker’s peritoneal carcinomatosis index [25]) allows for a stratification of patients and avoids unnecessary surgery. Using a checklist and a systematic search pattern may help to prevent such diagnostic errors. Both radiologists and referring clinicians are keen to reduce the rate of diagnostic errors, which for radiologists accounts for as much as 4% of reports [39,40,41,42,43]. A retrospective review of 3000 MRI examinations identified clinically significant extraspinal findings in 28.5% of patients that were not included in the original unstructured report [44]. Similarly, the use of a checklist-style SR has been shown to improve the rate of diagnosis of non-fracture-related findings on cervical CT [45]. In addition, SRs have been shown to enhance clinical impact on tumor staging and surgical planning for pancreatic and rectal carcinoma [46,47,48]. Brook et al. compared the results of SR versus FTR reporting of CT findings for the staging and the assessment of resectability in pancreatic cancer patients [46]. They concluded that surgeons were more confident about tumor resectability using SR compared to FTR [46]. Sahni et al. showed that the use of an MRI SR improved rectal cancer staging when compared to the use of an FTR [48].

The present study has several limitations. Firstly, the panelists were of the same nationality; the contribution of experts from multiple countries would allow for broader sharing and would increase the consistency of the SR. Secondly, this study was not aimed at assessing the impact of the SR on the management of patients with colon cancer in a clinical setting. The future objective is the clinical validation of this report.

Conclusion

The present SR, based on a multi-round consensus-building Delphi exercise, uses standardized terminology and structures, in order to adhere to diagnostic/therapeutic recommendations and facilitate enrollment in clinical trials, to reduce any ambiguity that may arise from non-conventional lexicon and to enable better communication between radiologists and clinicians. A standardized approach with best practice guidelines can offer the foundation for quality assurance measures within institutions. SR improves the quality, clarity and reproducibility of reports across departments, cities and countries, in order to assist patient management, improve patient healthcare and facilitate research.

References

American Recovery and Reinvestment Act of 2009—Title XIII: health information technology: health information technology for economic and clinical health act (HITECH Act), pp 112–164. US Government. https://www.healthit.gov/sites/default/files/hitech_act_excerpt_from_arra_with_index.pdf

European Society of Radiology (ESR) (2018) ESR paper on structured reporting in radiology. Insights Imaging 9(1):1–7. https://doi.org/10.1007/s13244-017-0588-8

Granata V, Caruso D, Grassi R, Cappabianca S, Reginelli A, Rizzati R, Masselli G, Golfieri R, Rengo M, Regge D, Lo Re G, Pradella S, Fusco R, Faggioni L, Laghi A, Miele V, Neri E, Coppola F (2021) Structured reporting of rectal cancer staging and restaging: a consensus proposal. Cancers (Basel) 13(9):2135. https://doi.org/10.3390/cancers13092135

Faggioni L, Coppola F, Ferrari R, Neri E, Regge D (2017) Usage of structured reporting in radiological practice: results from an Italian online survey. Eur Radiol 27(5):1934–1943. https://doi.org/10.1007/s00330-016-4553-6

Neri E, Coppola F, Larici AR, Sverzellati N, Mazzei MA, Sacco P, Dalpiaz G, Feragalli B, Miele V, Grassi R (2020) Structured reporting of chest CT in COVID-19 pneumonia: a consensus proposal. Insights Imaging 11(1):92. https://doi.org/10.1186/s13244-020-00901-7

Guerri S, Danti G, Frezzetti G, Lucarelli E, Pradella S, Miele V (2019) Clostridium difficile colitis: CT findings and differential diagnosis. Radiol Med 124(12):1185–1198. https://doi.org/10.1007/s11547-019-01066-0

Goiffon RJ, O’Shea A, Harisinghani MG (2021) Advances in radiological staging of colorectal cancer. Clin Radiol. https://doi.org/10.1016/j.crad.2021.06.005

Komono A, Kajitani R, Matsumoto Y, Nagano H, Yoshimatsu G, Aisu N, Urakawa H, Hasegawa S (2021) Preoperative T staging of advanced colorectal cancer by computed tomography colonography. Int J Colorectal Dis. https://doi.org/10.1007/s00384-021-03971-1

Cusumano D, Meijer G, Lenkowicz J, Chiloiro G, Boldrini L, Masciocchi C, Dinapoli N, Gatta R, Casà C, Damiani A, Barbaro B, Gambacorta MA, Azario L, De Spirito M, Intven M, Valentini V (2021) A field strength independent MR radiomics model to predict pathological complete response in locally advanced rectal cancer. Radiol Med 126(3):421–429. https://doi.org/10.1007/s11547-020-01266-z

Lorusso F, Principi M, Pedote P, Pignataro P, Francavilla M, Sardaro A, Scardapane A (2021) Prevalence and clinical significance of incidental extra-intestinal findings in MR enterography: experience of a single University Centre. Radiol Med 126(2):181–188. https://doi.org/10.1007/s11547-020-01235-6

Rega D, Granata V, Petrillo A, Pace U, Sassaroli C, Di Marzo M, Cervone C, Fusco R, D’Alessio V, Nasti G, Romano C, Avallone A, Pecori B, Botti G, Tatangelo F, Maiolino P, Delrio P (2021) Organ sparing for locally advanced rectal cancer after neoadjuvant treatment followed by electrochemotherapy. Cancers (Basel) 13(13):3199. https://doi.org/10.3390/cancers13133199

Granata V, Fusco R, Barretta ML, Picone C, Avallone A, Belli A, Patrone R, Ferrante M, Cozzi D, Grassi R, Grassi R, Izzo F, Petrillo A (2021) Radiomics in hepatic metastasis by colorectal cancer. Infect Agent Cancer 16(1):39. https://doi.org/10.1186/s13027-021-00379-y

Fusco R, Granata V, Sansone M, Rega D, Delrio P, Tatangelo F, Romano C, Avallone A, Pupo D, Giordano M, Grassi R, Ravo V, Pecori B, Petrillo A (2021) Validation of the standardized index of shape tool to analyze DCE-MRI data in the assessment of neo-adjuvant therapy in locally advanced rectal cancer. Radiol Med. https://doi.org/10.1007/s11547-021-01369-1

Basar Y, Alis D, Tekcan Sanli DE, Akbas T, Karaarslan E (2021) Whole-body MRI for preventive health screening: management strategies and clinical implications. Eur J Radiol 137:109584. https://doi.org/10.1016/j.ejrad.2021.109584

Jing B, Qian R, Jiang D, Gai Y, Liu Z, Guo F, Ren S, Gao Y, Lan X, An R (2021) Extracellular vesicles-based pre-targeting strategy enables multi-modal imaging of orthotopic colon cancer and image-guided surgery. J Nanobiotechnol 19(1):151. https://doi.org/10.1186/s12951-021-00888-3

Cohen AS, Grudzinski J, Smith GT, Peterson TE, Whisenant JG, Hickman TL, Ciombor KK, Cardin D, Eng C, Goff LW, Das S, Coffey RJ, Berlin JD, Manning HC (2021) First-in-human PET imaging and estimated radiation dosimetry of L-[5–11C]-glutamine in patients with metastatic colorectal cancer. J Nucl Med. https://doi.org/10.2967/jnumed.120.261594

Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, Bray F (2021) Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin 71(3):209–249. https://doi.org/10.3322/caac.21660

Weiser MR (2018) AJCC 8th edition: colorectal cancer. Ann Surg Oncol 25:1454–1455. https://doi.org/10.1245/s10434-018-6462-1

Huang C, Zhao J, Zhu Z (2021) Prognostic nomogram of prognosis-related genes and clinicopathological characteristics to predict the 5-year survival rate of colon cancer patients. Front Surg 16(8):681721. https://doi.org/10.3389/fsurg.2021.681721

Kahn CE Jr, Genereaux B, Langlotz CP (2015) Conversion of radiology reporting templates to the MRRT standard. J Digit Imaging 28(5):528–536. https://doi.org/10.1007/s10278-015-9787-3

Becker G (2000) Creating comparability among reliability coefficients: the case of Cronbach Alpha and Cohen Kappa. Psychol Rep 87:1171

Cronbach LJ (1951) Coefficient alpha and the internal structure of tests. Psychometrika 16:297–334

Sugarbaker PH, Yan TD, Stuart OA, Yoo D (2006) Comprehensive management of diffuse malignant peritoneal mesothelioma. Eur J Surg Oncol 32(6):686–691. https://doi.org/10.1016/j.ejso.2006.03.012

Weiss DL, Bolos PR (2009) Reporting and dictation. In: Branstetter BF IV (ed) Practical imaging informatics: foundations and applications for PACS professionals. Springer, Heidelberg

Qin H, Que Q, Lin P, Li X, Wang XR, He Y, Chen JQ, Yang H (2021) Magnetic resonance imaging (MRI) radiomics of papillary thyroid cancer (PTC): a comparison of predictive performance of multiple classifiers modeling to identify cervical lymph node metastases before surgery. Radiol Med. https://doi.org/10.1007/s11547-021-01393-1

Santone A, Brunese MC, Donnarumma F, Guerriero P, Mercaldo F, Reginelli A, Miele V, Giovagnoni A, Brunese L (2021) Radiomic features for prostate cancer grade detection through formal verification. Radiol Med 126(5):688–697. https://doi.org/10.1007/s11547-020-01314-8

Granata V, Grassi R, Fusco R, Galdiero R, Setola SV, Palaia R, Belli A, Silvestro L, Cozzi D, Brunese L, Petrillo A, Izzo F (2021) Pancreatic cancer detection and characterization: state of the art and radiomics. Eur Rev Med Pharmacol Sci 25(10):3684–3699. https://doi.org/10.26355/eurrev_202105_25935

Granata V, Fusco R, Avallone A, De Stefano A, Ottaiano A, Sbordone C, Brunese L, Izzo F, Petrillo A (2021) Radiomics-derived data by contrast enhanced magnetic resonance in RAS mutations detection in colorectal liver metastases. Cancers (Basel) 13(3):453. https://doi.org/10.3390/cancers13030453

Reinert CP, Krieg EM, Bösmüller H, Horger M (2020) Mid-term response assessment in multiple myeloma using a texture analysis approach on dual energy-CT-derived bone marrow images—a proof of principle study. Eur J Radiol 131:109214. https://doi.org/10.1016/j.ejrad.2020.109214

Tomori Y, Yamashiro T, Tomita H, Tsubakimoto M, Ishigami K, Atsumi E, Murayama S (2020) CT radiomics analysis of lung cancers: differentiation of squamous cell carcinoma from adenocarcinoma, a correlative study with FDG uptake. Eur J Radiol 128:109032. https://doi.org/10.1016/j.ejrad.2020.109032

Kirienko M, Ninatti G, Cozzi L, Voulaz E, Gennaro N, Barajon I, Ricci F, Carlo-Stella C, Zucali P, Sollini M, Balzarini L, Chiti A (2020) Computed tomography (CT)-derived radiomic features differentiate prevascular mediastinum masses as thymic neoplasms versus lymphomas. Radiol Med 125(10):951–960. https://doi.org/10.1007/s11547-020-01188-w

Coppola F, Faggioni L, Regge D, Giovagnoni A, Golfieri R, Bibbolino C, Miele V, Neri E, Grassi R (2021) Artificial intelligence: radiologists’ expectations and opinions gleaned from a nationwide online survey. Radiol Med 126(1):63–71. https://doi.org/10.1007/s11547-020-01205-y

Grassi R, Belfiore MP, Montanelli A, Patelli G, Urraro F, Giacobbe G, Fusco R, Granata V, Petrillo A, Sacco P, Mazzei MA, Feragalli B, Reginelli A, Cappabianca S (2021) COVID-19 pneumonia: computer-aided quantification of healthy lung parenchyma, emphysema, ground glass and consolidation on chest computed tomography (CT). Radiol Med 126(4):553–560. https://doi.org/10.1007/s11547-020-01305-9

Reiner BI (2014) Strategies for radiology reporting and communication: part 4: quality assurance and education. J Digit Imaging 27(1):1–6. https://doi.org/10.1007/s10278-013-9656-x

Pfaff JAR, Füssel B, Harlan ME, Hubert A, Bendszus M (2021) Variability of acquisition phase of computed tomography angiography in acute ischemic stroke in a real-world scenario. Eur Radiol. https://doi.org/10.1007/s00330-021-08084-5

Feraco P, Piccinini S, Gagliardo C (2021) Imaging of inner ear malformations: a primer for radiologists. Radiol Med. https://doi.org/10.1007/s11547-021-01387-z

Bender LC, Linnau KF, Meier EN et al (2012) Interrater agreement in the evaluation of discrepant imaging findings with the Radpeer system. AJR Am J Roentgenol 199:1320–1327

Borgstede JP, Lewis RS, Bhargavan M et al (2004) RADPEER quality assurance program: a multifacility study of interpretive disagreement rates. J Am Coll Radiol JACR 1:59–65

Donald JJ, Barnard SA (2012) Common patterns in 558 diagnostic radiology errors. J Med Imaging Radiat Oncol 56:173–178

Hsu W, Han SX, Arnold CW et al (2016) A data-driven approach for quality assessment of radiologic interpretations. J Am Med Inform Assoc 23(e1):e152–e156

McCreadie G, Oliver TB (2009) Eight CT lessons that we learned the hard way: an analysis of current patterns of radiological error and discrepancy with particular emphasis on CT. Clin Radiol 64:491–499

Quattrocchi CC, Giona A, Di Martino AC et al (2013) Extra-spinal incidental findings at lumbar spine MRI in the general population: a large cohort study. Insights Imaging 4:301–308

Lin E, Powell DK, Kagetsu NJ (2014) Efficacy of a checklist-style structured radiology reporting template in reducing resident misses on cervical spine computed tomography examinations. J Digit Imaging 27:588–593

Brook OR, Brook A, Vollmer CM et al (2015) Structured reporting of multiphasic CT for pancreatic cancer: potential effect on staging and surgical planning. Radiology 274:464–472

Marcal LP, Fox PS, Evans DB et al (2015) Analysis of free-form radiology dictations for completeness and clarity for pancreatic cancer staging. Abdom Imaging 40:2391–2397

Sahni VA, Silveira PC, Sainani NI et al (2015) Impact of a structured report template on the quality of MRI reports for rectal cancer staging. AJR Am J Roentgenol 205:584–588

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standard

Not applicable.

Research involving human participants and/or animals

Not applicable.

Informed consent

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Granata, V., Faggioni, L., Grassi, R. et al. Structured reporting of computed tomography in the staging of colon cancer: a Delphi consensus proposal. Radiol med 127, 21–29 (2022). https://doi.org/10.1007/s11547-021-01418-9

DOI: https://doi.org/10.1007/s11547-021-01418-9