Abstract

In Bhattacharya et al. (Sci Adv 6(32):7682, 2020), a set of chemical reactions involved in the dynamics of actin waves in cells was studied at two levels: the microscopic level, where the individual chemical reactions are modelled directly using Gillespie-type algorithms, and the macroscopic level, where a deterministic reaction–diffusion equation arises as the large-scale limit of the underlying chemical reactions. In this work, we derive, and subsequently study, the related mesoscopic stochastic reaction–diffusion system, or chemical Langevin equation, that arises from the same set of chemical reactions. We explain how the stochastic patterns that arise from this equation can be used to understand the experimentally observed dynamics from Bhattacharya et al. In particular, we argue that the mesoscopic stochastic model captures the microscopic behaviour better than the deterministic reaction–diffusion equation does, while being more amenable to mathematical analysis and numerical simulation than the microscopic model.



1 Introduction

In order to move around, an amoeboid cell can change its shape by polymerising actin to curve the cell membrane. Actin polymerisation is controlled by signalling molecules, and experiments in Dictyostelium discoideum have shown that these signalling molecules are activated in localised patches that can move along the membrane like a wave (Inagaki and Katsuno 2017; Bhattacharya et al. 2020). In wild-type (WT) cells, these waves move fast and die out, creating pseudopods of the familiar shape, while in cancerous cells these waves stick to a point, creating elongated protrusions (Bhattacharya et al. 2020), see Fig. 1. In the absence of a signal, pseudopods form at random places on the cell membrane, resulting in random motion. In contrast, when a cell senses a chemical signal, it can concentrate the random protrusions on the side of the cell facing the signal, leading to movement in the direction of the signal (Deng and Levine 2022). As cells are small, the difference in signal strength between the front and the back of the cell (the gradient) is small as well. Furthermore, the cell can only use the discrete points on the membrane where its receptors are located to estimate the direction of the signal (Deng and Levine 2022). Therefore, one of the main questions is: “How can a cell use a small gradient in the signal to concentrate the actin activity at the front?” This question has been studied intensively, but no complete description of all the microscopic chemical processes involved has been given yet; see Devreotes et al. (2017) for a review.

In Bhattacharya et al. (2020), the highly complex actin dynamics are described by a conceptual activator u and inhibitor v that diffuse and react with each other as summarised in Table 1. The species u and v are an abstraction of the dozens of components that regulate the actual cell movement, but the activator u can be thought of as Ras activity (Bhattacharya et al. 2020), which plays an important role in cell growth and differentiation (Lodish et al. 2008). In particular, u is activated by Reactions \(\#3\) and \(\#4\) and inhibited by Reactions \(\#1\) and \(\#2\), with propensities as indicated in the table. In addition, the inhibitor v is inhibited by Reaction \(\#5\) and activated by Reaction \(\#6\).

Fig. 1 Stochastic simulations of the microscopic Gillespie-type model from Bhattacharya et al. (2020). The figures on the left show stochastic simulations of the Ras activity for parameter values applicable to (A) wild-type cells and to (B) genetically modified cells in which the phosphatase PTEN has been switched off. The figures on the right show typical cell shapes corresponding to the dynamics in the left figures. This shows that mutations in the gene that codes for PTEN lead to elongated protrusions typically associated with cancer. The dotted yellow line is an indicator of the wave speed, i.e. the actin waves in (B) are slower and live longer than in (A). Reproduced from Bhattacharya et al. (2020) under a Creative Commons 4.0 license (Color figure online)

The information on the chemical reactions, in combination with the diffusion of both species, is generally used in one of two ways. First, a Gillespie-type algorithm (Gillespie 1976, 1977) can be used to simulate the chemical reactions on a microscopic level. In these simulations, \((u_k(t_n),v_k(t_n))\), the solution at time \(t_n\) in grid cell k, is interpreted as the number of molecules of type u and v at time \(t_n\) in a grid cell of finite size. For each of these individual molecules, the probabilities of diffusing to other grid cells or taking part in a chemical reaction are prescribed as in Table 1. To be precise, Reaction \(\#1\) implies that the time until the next reaction that degrades a u molecule in grid cell k is exponentially distributed with rate \(a_1u_k(t_n)\), i.e. with mean \((a_1u_k(t_n))^{-1}\). See the panels on the left of Fig. 1 for examples of these simulations. This Gillespie-type approach takes the stochastic nature of a single cell into account. However, it is computationally very expensive and difficult to analyse mathematically, which makes it hard to use this modelling approach to make valuable predictions.
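To make the waiting-time statement above concrete, here is a minimal Python sketch of Gillespie's direct method for a single well-mixed compartment. It is not the spatial algorithm of Bhattacharya et al. (2020): diffusion between grid cells is left out for brevity, and the function name `gillespie_direct` and the degradation-only usage example (Reaction \(\#1\) alone, with \(a_1=0.167\) as quoted in Sect. 3) are our own illustrative choices.

```python
import numpy as np


def gillespie_direct(x0, stoich, propensities, t_end, rng):
    """Gillespie's direct method for one well-mixed compartment.

    x0: initial molecule counts, stoich: (n_species, n_reactions) integer array,
    propensities: function mapping the counts x to an array of reaction rates.
    """
    t, x = 0.0, np.array(x0, dtype=int)
    times, states = [t], [x.copy()]
    while True:
        a = np.asarray(propensities(x), dtype=float)
        a0 = a.sum()
        if a0 <= 0.0:
            break                              # no reaction can fire any more
        tau = rng.exponential(1.0 / a0)        # waiting time: rate a0, i.e. mean 1/a0
        if t + tau > t_end:
            break
        t += tau
        j = rng.choice(a.size, p=a / a0)       # reaction j fires with probability a_j / a0
        x += stoich[:, j]
        times.append(t)
        states.append(x.copy())
    return np.array(times), np.array(states)


# Usage: Reaction #1 alone (degradation of u with propensity a1 * u), from 50 molecules
S1 = np.array([[-1]])
times, states = gillespie_direct([50], S1, lambda x: [0.167 * x[0]], t_end=1e6,
                                 rng=np.random.default_rng(seed=1))
```

Since the only reaction removes one molecule each time it fires, this run ends after exactly 50 events. With all six reactions and diffusive jumps between grid cells treated as additional events, the loop structure stays the same, but the cost grows with the total number of events, which is why the microscopic approach is expensive.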

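A second way to use the reactions in Table 1 is to derive a macroscopic equation as their averaged large-scale limit. To this end, we assume that u and v are densities on a continuous domain, described by a reaction-rate equation with diffusion, also known as a reaction–diffusion equation (RDE). In particular, the RDE (see Footnote 1) related to the chemical reactions in Table 1 is given by

\[
\begin{aligned}
\partial_t u &= D_u\,\partial_x^2 u - a_1 u - a_2 u v + \frac{a_3 u^2}{a_4 + u^2} + a_5,\\
\partial_t v &= D_v\,\partial_x^2 v + \varepsilon\,(c_2 u - c_1 v),
\end{aligned}
\tag{1.1}
\]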

which is a specific version of the general RDE we will encounter in Sect. 2. This model is a variation on the classic FitzHugh–Nagumo model for neuron spiking (FitzHugh 1961; Nagumo et al. 1962). Protrusions are formed at places with high activator u, and u is inhibited by the terms \(-a_1 u\) and \(-a_2 u v\), see Reactions \(\#1\) and \(\#2\) in Table 1. This implies that an increase in u or v leads to a decrease in u, unless the increase in u is large enough for the activation from Reaction \(\#3\), modelled by the nonlinear Hill function \(a_3u^2/(a_4+u^2)\), to take over and negate the inhibiting effects. Effectively, once u exceeds a certain threshold, we observe a much larger increase in u, and we call the system locally activated. Once u is large and the Hill function has levelled off at its maximal value \(a_3\), the amount of inhibitor v increases via the term \(\varepsilon c_2u\) (related to Reaction \(\#6\)), leading to a fast decay in u through the \(-a_2uv\) term (related to Reaction \(\#2\)). The inhibitor v then decays back to the background state via Reaction \(\#5\), and activation can happen again. In addition, both species diffuse, with diffusion coefficients \(D_u\) and \(D_v\), respectively, where it is assumed that \(D_u< D_v\). It is important to realise that, in both approaches, the modelled actin waves happen on the surface of the cell, and, as in Bhattacharya et al. (2020), we only study a slice of this surface. Therefore, the spatial domain must be thought of as an (approximate) circle.

For deterministic RDEs like (1.1), a plethora of analytical tools is available (see, for instance, “Appendix B”), and numerical simulations are relatively straightforward. However, being deterministic, this RDE does not show the same stochastic dynamics as the Gillespie simulations and experiments. A crucial difference between the macroscopic RDE model (1.1) and the Gillespie simulations concerns the lifetime of the patterns. In the RDE, an established pattern, e.g. a standing or travelling wave, will, if uninterrupted, persist for a very long time, while such patterns are destroyed quickly both in stochastic simulations and in experiments. Furthermore, when the background state of the RDE (1.1) is stable, activation cannot come from the RDE itself; it requires an external signal large enough to activate the nonlinear term \(a_3 u^2/(a_4 + u^2)\) related to Reaction \(\#3\). We generally refer to the activation of these patterns as activation events.

It is important to realise that the chemical processes in the cell are inherently stochastic and that, at the scale of a single cell, chemical reactions are not well approximated by large-scale approximations, as Figs. 1 and 2 show. In other words, treating the relevant enzymes and receptors as a continuous medium of infinitely many, infinitely small particles is invalid, and the stochastic nature of reactions between individual molecules becomes important. This so-called internal noise can act as a signal that activates the dynamics if it is large enough at a certain point in space and time. As noted before, the cell therefore executes a random walk in the absence of a signal (see Footnote 2). This implies that an external signal does not necessarily activate the dynamics at a certain point on the membrane, but rather changes the random walk of the cell into a biased random walk in the direction of the signal. Using a more extended model than the one presented here, Biswas et al. (2022) show that coupling an external signal to the stochastic dynamics of the cell can indeed lead to movement in the direction of that signal.

Instead of studying the complex internal dynamics of the cell, it can be advantageous to perturb the deterministic RDE (1.1). For instance, in Bhattacharya et al. (2020), an external source of noise is applied to the RDE (1.1), turning it into a stochastic RDE (or stochastic partial differential equation (SPDE)). While this approach can indeed activate the dynamics and make long-term deterministic waves collapse, it is inherently ad hoc and not a priori based on any of the involved biologically relevant processes.

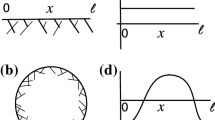

Fig. 2 Comparison of the deterministic model (1.1) and its stochastic counterpart (1.2). In (a), we show a simulation of (1.1), which is excited at \(t=0\), resulting in two counter-propagating travelling waves. In the stochastic simulation in (b), the influence of the initial excitation quickly disappears and new pulses appear constantly. The same parameters are used as in the simulations shown in the second row of Fig. 1. Observe the similarities in the shape of the pattern. In (a), the waves travel around the cell where they cancel each other, while in (b) the waves cancel each other on a much shorter scale. See Sect. 3.4 for more details (Color figure online)

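In between the macroscopic level of the RDE and the microscopic level of the chemical reactions, one can derive a mesoscopic SPDE, known as a chemical Langevin equation (CLE) (Gillespie 2000), that also incorporates the internal noise of the cell. In Sect. 2, we will show that the SPDE associated with the chemical reactions in Table 1, together with diffusion, is given by

\[
\begin{aligned}
du &= \Big(D_u\,\partial_x^2 u - a_1 u - a_2 u v + \frac{a_3 u^2}{a_4+u^2} + a_5\Big)\,dt + \sigma\sqrt{a_1 u + a_2 u v + \frac{a_3 u^2}{a_4+u^2} + a_5}\;dW^1_t + \sigma\,\partial_x\Big(\sqrt{2D_u u}\;d{\tilde{W}}^1_t\Big),\\
dv &= \Big(D_v\,\partial_x^2 v + \varepsilon\,(c_2 u - c_1 v)\Big)\,dt + \sigma\sqrt{\varepsilon\,(c_1 v + c_2 u)}\;dW^2_t + \sigma\,\partial_x\Big(\sqrt{2D_v v}\;d{\tilde{W}}^2_t\Big).
\end{aligned}
\tag{1.2}
\]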

Here, \((d W^{1}_t, d W^{2}_t)\) and \((d {\tilde{W}}^{1}_t,d {\tilde{W}}^{2}_t)\) are two independent noise vectors with space-time white noise (each component is also independent of the other) and \(\sigma \) is a measure for the strength of the noise. Indeed, in the no-noise limit \(\sigma \rightarrow 0\) the mesoscopic SPDE (1.2) reduces to the macroscopic RDE (1.1). In that sense, \(\sigma \) serves as a scale parameter.

The SPDE description has two main advantages. On the one hand, its solutions still show the rich dynamics of the Gillespie models, i.e. the activation and destruction of waves, while being computationally significantly less expensive. On the other hand, since the SPDE reduces to the deterministic RDE model (1.1) in the no-noise limit, we can use well-developed partial differential equation (PDE) theory to gain insight into the dynamics of the RDE (1.1) and use this to study the closely related SPDE, see for instance Hamster and Hupkes (2020) and Kuehn (2019). To give an idea of the differences between the deterministic and stochastic models, Fig. 2 shows two simulations that will be discussed later in Sect. 3. The simulation of the SPDE clearly paints a much more dynamic picture than the deterministic one, which is more in line with the inherently noisy nature of the cell’s chemical processes. Hence, SPDEs are an invaluable tool for unravelling the dynamics of a cell.

The remainder of this article is organised as follows. In Sect. 2, we explain how to derive the SPDE (1.2) from Table 1. Subsequently, in Sect. 3, we study both the SPDE (1.2) and the RDE (1.1) numerically in different parameter regimes and qualitatively compare the observed dynamics to the Gillespie simulations from Bhattacharya et al. (2020). In Sect. 4, we discuss the results and how they relate to the questions posed in this introduction.

2 Derivation of the SPDE

Our starting point to derive (1.2) is the set of chemical reactions laid out in Table 1. First, we introduce the column vector \(X(t)=(u(t),v(t))^T\), where T denotes the transpose, and the column vector \({\mathcal {R}}(X(t))\) containing the propensities of the six reactions:

\[
{\mathcal {R}}(X) = \Big(a_1 u,\; a_2 u v,\; \frac{a_3 u^2}{a_4+u^2},\; a_5,\; \varepsilon c_1 v,\; \varepsilon c_2 u\Big)^T.
\]

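The associated stoichiometric matrix \({\mathcal {S}}\), which describes the change in X(t) caused by each reaction, is then given by

\[
{\mathcal {S}} = \begin{pmatrix} -1 & -1 & 1 & 1 & 0 & 0\\ 0 & 0 & 0 & 0 & -1 & 1 \end{pmatrix},
\tag{2.1}
\]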

see the last two columns of Table 1. On top of these reactions, we assume that both variables also diffuse, so for a well-mixed solution in a large container we find the classic PDE

\[
\partial_t X = D\,\partial_x^2 X + {\mathcal {S}}{\mathcal {R}}(X),
\tag{2.2}
\]

where D is a diagonal diffusion matrix with coefficients \(D_u\) and \(D_v\) on the diagonal (Bressloff 2014). This PDE is identical to the RDE (1.1) and describes the dynamics of X(t), averaged over many individual reactions. When the number of reacting molecules is large enough, and when we zoom out far enough that the individual molecules effectively become a density, the macroscopic PDE gives a good approximation of the microscopic behaviour. Statistically speaking, this means that the probability distribution of all possible states must be very sharply peaked around the average value described by the PDE, so that deviations from the mean can be ignored. In the next section, we study these deviations from the mean for an explicit example that can be analysed in full detail (Bressloff 2014); readers familiar with the subject can go directly to Sect. 2.2.

2.1 Motivating Example

The assumption that deviations from the mean can be ignored is not always valid. For example, in population dynamics, we can write down birth–death models for a population of several hundred individuals, and for such population sizes, random deviations from the mean are significant. To illustrate this further, and to set the stage for the upcoming derivation, let us study such a simple discrete birth–death process. Suppose a population is in state X(t) at time t. In the next time step dt, there are three possible outcomes: (i) the population grows by one individual with probability b(X(t))dt, (ii) the population decreases by one individual with probability d(X(t))dt, or (iii) nothing happens to the population with probability \(1-b(X(t))dt-d(X(t))dt\).

Now, consider the continuous stochastic differential equation (SDE)

\[
dx = f(x)\,dt + g(x)\,d\beta_t,
\tag{2.3}
\]

where \(\beta _t\) is a Brownian motion, i.e. we can think of \(d\beta _t\) as a random step with mean zero and variance dt. We now ask: “When is this continuous SDE a good approximation of the discrete birth–death process described above?” Or, more precisely: “How should f(x) and g(x) be chosen such that (2.3) is a good approximation of the discrete process?” Given a solution x of the SDE, the expected value of \(x(t+dt)\) is, to lowest order in dt,

\[
\mathbb{E}\big[x(t+dt)\,\big|\,x(t)=x\big] \approx x + f(x)\,dt.
\]

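For the birth–death process described above, the expectation is

\[
\mathbb{E}\big[X(t+dt)\,\big|\,X(t)=X\big] = (X+1)\,b(X)\,dt + (X-1)\,d(X)\,dt + X\big(1-b(X)\,dt-d(X)\,dt\big) = X + \big(b(X)-d(X)\big)\,dt.
\]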

Hence, the expected jump size of the population is identical for the SDE (2.3) and the birth–death process if we take \(f(x):=b(x)-d(x)\).

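Next, we compute the deviation from the mean, measured by the variance of the increment, for the SDE (2.3):

\[
\mathbb{E}\Big[\big(x(t+dt)-x-f(x)\,dt\big)^2\,\Big|\,x(t)=x\Big] \approx g(x)^2\,dt,
\]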

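while this deviation for the birth–death process is

\[
\mathbb{E}\Big[\big(X(t+dt)-X-(b(X)-d(X))\,dt\big)^2\,\Big|\,X(t)=X\Big] = \big(b(X)+d(X)\big)\,dt - \big(b(X)-d(X)\big)^2\,dt^2.
\]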

Therefore, to make these deviations coincide at first order in dt, we must take \(g(x):=\sqrt{b(x)+d(x)}\). Hence, the process x(t) described by (2.3), which is continuous in both population size and time, is a good approximation of the discrete process X(t) when

\[
dx = \big(b(x)-d(x)\big)\,dt + \sqrt{b(x)+d(x)}\;d\beta_t.
\tag{2.4}
\]
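The moment-matching argument can be checked numerically. The following Python sketch integrates the SDE (2.4) with the Euler–Maruyama method for the hypothetical linear rates \(b(x)=\lambda\) and \(d(x)=\mu x\) (our choice for illustration, not taken from the text). For these rates, the stationary distribution of the discrete birth–death process is Poisson with mean and variance \(\lambda/\mu\), so the sample mean and variance of the SDE at large times should both be close to \(\lambda/\mu=100\). The square-root argument is clipped at zero, anticipating that the continuous process can leave the region \(x\ge 0\).

```python
import numpy as np


def euler_maruyama_cle(x0, b, d, dt, n_steps, n_paths, rng):
    """Euler-Maruyama for dx = (b(x) - d(x)) dt + sqrt(b(x) + d(x)) dbeta, i.e. SDE (2.4)."""
    x = np.full(n_paths, float(x0))
    for _ in range(n_steps):
        drift = b(x) - d(x)
        # clip at zero: the continuous process may leave the physical region x >= 0
        amplitude = np.sqrt(np.maximum(b(x) + d(x), 0.0))
        x = x + drift * dt + amplitude * np.sqrt(dt) * rng.standard_normal(n_paths)
    return x


lam, mu = 100.0, 1.0                      # hypothetical rates b(x) = lam, d(x) = mu * x
x_T = euler_maruyama_cle(
    x0=lam / mu,
    b=lambda x: np.full_like(x, lam),
    d=lambda x: mu * x,
    dt=0.01, n_steps=1000, n_paths=5000,
    rng=np.random.default_rng(seed=2),
)
print(x_T.mean(), x_T.var())              # both should be close to lam / mu = 100
```

For linear propensities the drift is linear, so the agreement in the first two moments is exact up to time-discretisation and sampling error; for nonlinear propensities, such as the Hill term in (1.1), only the increments are matched at first order in dt.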

To first order in dt, the increments of the stochastic process x(t) thus have the same mean and variance as those of X(t), but the two processes differ in other respects. Higher-order moments of x(t) and X(t) are not identical, and x(t) can become negative, even when b and d are chosen such that this is impossible in the discrete model.

In order to link the SDE above to chemical reactions, we make the following observation. The birth of an individual can be thought of as the chemical reaction \(\emptyset \rightarrow X\) with propensity b(X) and stoichiometric value 1, while the death of an individual can be seen as the chemical reaction \(X\rightarrow \emptyset \) with propensity d(X) and stoichiometric value \(-1\). Next, we make an assumption known as the leap condition (Bressloff 2014): given a state X(t), enough reactions happen in the interval \([t,t+dt]\) that the number of times each reaction fires in \([t,t+dt]\) can be described by a Poisson random variable whose parameter depends only on X(t). With this assumption, we implicitly also assume that X(t) is a good approximation of the solution on the whole time interval \([t,t+dt]\). We then turn the discrete process X(t) into a continuous process x(t) by approximating each Poisson random variable by a Gaussian with the same mean and variance, see Kim et al. (2017) for details. This approach results in an SDE similar to the SDE (2.4):

\[
dx = \big(b(x)-d(x)\big)\,dt + \sqrt{b(x)}\;d\beta^1_t - \sqrt{d(x)}\;d\beta^2_t,
\tag{2.5}
\]

for two independent Brownian motions \(\beta ^1_t\) and \(\beta _t^2\). Although (2.5) looks different from (2.4), in both SDEs the noise increment is Gaussian with mean zero and variance \((b(x)+d(x))\,dt\). Therefore, both SDEs describe the same stochastic process, and we can say that (2.4) and (2.5) are equivalent.
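The equivalence claim can be checked by sampling the two noise increments at a frozen state x. In the following Python sketch, the values of \(b(x)\), \(d(x)\) and dt are hypothetical; both increments should have mean close to zero and variance close to \((b+d)\,dt\).

```python
import numpy as np

rng = np.random.default_rng(seed=3)
b, d, dt, n = 4.0, 1.5, 0.01, 200_000      # hypothetical rates b(x), d(x) at a frozen state x
z, z1, z2 = rng.standard_normal((3, n))    # independent standard normal samples

noise_24 = np.sqrt(b + d) * np.sqrt(dt) * z                   # noise increment in (2.4)
noise_25 = (np.sqrt(b) * z1 - np.sqrt(d) * z2) * np.sqrt(dt)  # noise increment in (2.5)

# Both should have mean ~0 and variance ~(b + d) dt, i.e. var / dt ~ 5.5
print(noise_24.mean(), noise_24.var() / dt)
print(noise_25.mean(), noise_25.var() / dt)
```

Since both increments are Gaussian, agreement of mean and variance implies agreement in distribution; the two forms differ only in how many random numbers must be drawn per step.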

2.2 Derivation of the CLE

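We have now gained some intuition for linking more general discrete chemical reactions to continuous S(P)DEs: if we have M different species in a vector X(t) with diffusion matrix D, N reactions with propensities given by a vector \({\mathcal {R}}(X(t))\) and a stoichiometric matrix \({\mathcal {S}}\), then the continuous SPDE for X(t) is given by

\[
dX = \big(D\,\partial_x^2 X + {\mathcal {S}}{\mathcal {R}}(X)\big)\,dt + \frac{1}{\sqrt{\Omega}}\,{\mathcal {S}}\sqrt{\text{diag}({\mathcal {R}}(X))}\;dW_t + \frac{1}{\sqrt{\Omega}}\,\partial_x\Big(\sqrt{2DX}\;d{{\tilde{W}}}_t\Big),
\tag{2.6}
\]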

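see Bressloff (2014) and Kim et al. (2017). The equation consists of two parts: a local equation that describes the kinetics, as in the SDEs (2.4) and (2.5),

\[
dX = {\mathcal {S}}{\mathcal {R}}(X)\,dt + \frac{1}{\sqrt{\Omega}}\,{\mathcal {S}}\sqrt{\text{diag}({\mathcal {R}}(X))}\;dW_t,
\tag{2.7}
\]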

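and a stochastic diffusion equation

\[
dX = D\,\partial_x^2 X\,dt + \frac{1}{\sqrt{\Omega}}\,\partial_x\Big(\sqrt{2DX}\;d{{\tilde{W}}}_t\Big),
\tag{2.8}
\]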

as derived in Dogan and Allen (2011). Here, \(dW_t\) and \(d\tilde{W}_t\) are two independent vectors of space-time white noise, which can be understood as infinite-dimensional versions of \(d\beta _t\) from the previous section. The vector \(dW_t\) has N components coming from the N reactions, while \(d{{\tilde{W}}}_t\) has the dimension M of X(t). SPDE (2.6) is known as the chemical Langevin equation (CLE) (Gillespie 2000). The vector X(t) now describes the densities of the molecules involved, not the actual number of molecules. How well the discrete number of molecules is approximated by a density is determined by the scale parameter \(\Omega \), which is in that sense a measure of the noisiness of the system. In the no-noise limit \(\Omega \rightarrow \infty \), we recover the classic RDE (2.2). In contrast, for small \(\Omega \), the dynamics of the discrete process are dominated by random events, and the discrete process should be described in full detail by a chemical master equation (Gillespie 1992). The CLE can be understood as the lowest-order approximation of the chemical master equation for large \(\Omega \); see Bressloff (2014) for more details. For an overview of all the different paths leading from molecular kinetics to (S)PDEs, see (Lei 2021, Fig. 3.4). It is important to realise that SPDE (2.6) does not necessarily inherit all the statistical properties of the chemical master equation; it only reproduces means and variances. Another potential issue is that it does not necessarily ensure positivity of the solutions.

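Just as (2.4) and (2.5) are equivalent, we can rewrite (2.6) as

\[
dX = \big(D\,\partial_x^2 X + {\mathcal {S}}{\mathcal {R}}(X)\big)\,dt + \frac{1}{\sqrt{\Omega}}\sqrt{{\mathcal {S}}\,\text{diag}({\mathcal {R}}(X))\,{\mathcal {S}}^T}\;dW_t + \frac{1}{\sqrt{\Omega}}\,\partial_x\Big(\sqrt{2DX}\;d{{\tilde{W}}}_t\Big).
\tag{2.9}
\]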

This time, the noise vector \(dW_t\) has just M components, which reduces the number of random vectors that must be generated when \(M<N\). The downside is that computing \(\sqrt{{\mathcal {S}}\text {diag}({\mathcal {R}}(X)){\mathcal {S}}^T}\) is in general numerically more expensive than computing \({\mathcal {S}}\sqrt{\text {diag}({\mathcal {R}}(X))}\), as it requires a matrix square root. However, in the present setting, every reaction in the stoichiometric matrix \({\mathcal {S}}\) (2.1) changes only one of the two species, so the matrix \({\mathcal {S}}\text {diag}({\mathcal {R}}(X)){\mathcal {S}}^T\) is diagonal and taking its square root is trivial.
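The diagonal structure can be verified directly. The following Python sketch encodes the propensity vector and the stoichiometric matrix for the six reactions, assuming (our reading of Table 1, which is not reproduced in this section) the propensities \(a_1u\), \(a_2uv\), \(a_3u^2/(a_4+u^2)\), \(a_5\), \(\varepsilon c_1 v\) and \(\varepsilon c_2 u\) for Reactions \(\#1\) to \(\#6\). It checks that the N-noise amplitude of (2.6) and the M-noise amplitude of (2.9) produce the same covariance, and that \({\mathcal {S}}{\mathcal {R}}(X)\) gives the reaction terms of (1.1). The test state (u, v) is arbitrary.

```python
import numpy as np

# Parameter values quoted in Sect. 3 (c1 = 0.1 as in Fig. 4)
p = dict(a1=0.167, a2=16.67, a3=167.0, a4=1.44, a5=1.47, eps=0.52, c1=0.1, c2=3.9)


def propensities(u, v, p):
    """R(X) for Reactions #1-#6; the assignment of terms to reactions is our assumption."""
    return np.array([
        p["a1"] * u,                          # #1: degradation of u
        p["a2"] * u * v,                      # #2: inhibition of u by v
        p["a3"] * u**2 / (p["a4"] + u**2),    # #3: Hill-type activation of u
        p["a5"],                              # #4: basal activation of u
        p["eps"] * p["c1"] * v,               # #5: decay of v
        p["eps"] * p["c2"] * u,               # #6: production of v
    ])


# Stoichiometric matrix: row 0 is the change in u, row 1 the change in v
S = np.array([[-1, -1, 1, 1, 0, 0],
              [0, 0, 0, 0, -1, 1]])

u, v = 0.5, 2.0                        # an arbitrary test state
R = propensities(u, v, p)
drift = S @ R                          # reaction part of the PDE (2.2)
B_N = S @ np.diag(np.sqrt(R))          # 2 x 6 noise amplitude as in (2.6): N = 6 noises
C = S @ np.diag(R) @ S.T               # 2 x 2 covariance entering (2.9)
B_M = np.diag(np.sqrt(np.diag(C)))     # elementwise square root, valid because C is diagonal
```

Both amplitudes satisfy \(BB^T = {\mathcal {S}}\text {diag}({\mathcal {R}}(X)){\mathcal {S}}^T\), which is all that matters for Gaussian noise; the M-noise version needs two random numbers per grid cell and time step instead of six.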

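Note that the CLE (2.9) can be applied to any set of chemical reactions and is therefore widely applicable. For example, returning to Table 1 and applying the CLE to these reactions results in

\[
\begin{aligned}
du &= \Big(D_u\,\partial_x^2 u - a_1 u - a_2 u v + \frac{a_3 u^2}{a_4+u^2} + a_5\Big)\,dt + \frac{1}{\sqrt{\Omega}}\sqrt{a_1 u + a_2 u v + \frac{a_3 u^2}{a_4+u^2} + a_5}\;dW^1_t + \frac{1}{\sqrt{\Omega}}\,\partial_x\Big(\sqrt{2D_u u}\;d{\tilde{W}}^1_t\Big),\\
dv &= \Big(D_v\,\partial_x^2 v + \varepsilon\,(c_2 u - c_1 v)\Big)\,dt + \frac{1}{\sqrt{\Omega}}\sqrt{\varepsilon\,(c_1 v + c_2 u)}\;dW^2_t + \frac{1}{\sqrt{\Omega}}\,\partial_x\Big(\sqrt{2D_v v}\;d{\tilde{W}}^2_t\Big).
\end{aligned}
\tag{2.10}
\]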

For notational convenience, we replaced \(1/\sqrt{\Omega }\) by a small parameter \(\sigma \), resulting in the SPDE (1.2) from the introduction. In the remainder of this work, we will study the SPDE above, mainly using numerical techniques.
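Appendix A, which describes the scheme actually used for the simulations, is not part of this section, so the following Python sketch is only one plausible discretisation of the SPDE (2.10), under our own assumptions: a periodic grid standing in for the cell circumference, central differences for \(\partial_x^2\), explicit Euler–Maruyama time stepping, discrete space-time white noise with variance dt/dx per cell and step, the diffusion noise of (2.8) implemented as random fluxes on cell faces, and square-root arguments clipped at zero to sidestep the positivity problem noted in Sect. 2.2. The reaction terms are assumed to be \(a_5-a_1u-a_2uv+a_3u^2/(a_4+u^2)\) and \(\varepsilon(c_2u-c_1v)\), consistent with the background states quoted in Sect. 3. The names `simulate`, `kinetics` and `diffusion_noise` are hypothetical. In view of Remark 1 below, the ill-posed diffusion-noise term can be switched off with `with_diffusion_noise=False`.

```python
import numpy as np

# Parameters of Fig. 4 (c1 = 0.1, standing-wave regime); grid and time step are our choices.
P = dict(Du=0.1, Dv=1.0, a1=0.167, a2=16.67, a3=167.0, a4=1.44, a5=1.47,
         eps=0.52, c1=0.1, c2=3.9)


def kinetics(u, v, p):
    """Drift and noise variance of the reaction part of (2.10)."""
    gain_u = p["a3"] * u**2 / (p["a4"] + u**2) + p["a5"]   # Reactions #3 and #4
    loss_u = p["a1"] * u + p["a2"] * u * v                  # Reactions #1 and #2
    gain_v = p["eps"] * p["c2"] * u                          # Reaction #6
    loss_v = p["eps"] * p["c1"] * v                          # Reaction #5
    return gain_u - loss_u, gain_v - loss_v, gain_u + loss_u, gain_v + loss_v


def laplacian(w, dx):
    """Second-order central difference on a periodic grid (the cell 'circle')."""
    return (np.roll(w, -1) - 2.0 * w + np.roll(w, 1)) / dx**2


def diffusion_noise(w, D, dx, dt, rng):
    """Conservative discretisation of d/dx(sqrt(2 D w) dW~): random fluxes on cell faces."""
    w_face = 0.5 * (w + np.roll(w, -1))                        # value at face i + 1/2
    flux = np.sqrt(2.0 * D * np.maximum(w_face, 0.0)) * np.sqrt(dt / dx) \
        * rng.standard_normal(w.size)
    return (flux - np.roll(flux, 1)) / dx                       # (F_{i+1/2} - F_{i-1/2}) / dx


def simulate(u, v, p, sigma, dx, dt, n_steps, rng, with_diffusion_noise=True):
    """Explicit Euler-Maruyama for (2.10), with sigma playing the role of 1/sqrt(Omega)."""
    u, v = np.array(u, dtype=float), np.array(v, dtype=float)
    white = np.sqrt(dt / dx)   # discrete space-time white noise: variance dt/dx per cell
    for _ in range(n_steps):
        f, g, var_u, var_v = kinetics(u, v, p)
        du = (p["Du"] * laplacian(u, dx) + f) * dt \
            + sigma * np.sqrt(np.maximum(var_u, 0.0)) * white * rng.standard_normal(u.size)
        dv = (p["Dv"] * laplacian(v, dx) + g) * dt \
            + sigma * np.sqrt(np.maximum(var_v, 0.0)) * white * rng.standard_normal(v.size)
        if with_diffusion_noise:   # the ill-posed term discussed in Remark 1
            du += sigma * diffusion_noise(u, p["Du"], dx, dt, rng)
            dv += sigma * diffusion_noise(v, p["Dv"], dx, dt, rng)
        u, v = u + du, v + dv
    return u, v


dx, dt, n = 0.5, 0.005, 80                        # periodic domain of length 40
u0, v0 = np.full(n, 0.0523), np.full(n, 2.0394)   # background state quoted in Sect. 3.1
u_det, v_det = simulate(u0, v0, P, 0.0, dx, dt, 2000, np.random.default_rng(0))
u_sto, v_sto = simulate(u0, v0, P, 0.05, dx, dt, 2000, np.random.default_rng(0))
```

With \(\sigma=0\), the homogeneous background state should simply persist, which is a basic consistency check. The stochastic run uses \(\sigma=0.05\), of the same order as the noise levels studied in Sect. 3.1, but whether it reproduces the activation events reported there depends on discretisation details that we have not matched to Appendix A.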

Remark 1

It is important to realise that the SPDE above does not, in general, have a function-valued solution. The term \(\partial _x\left( \sqrt{2DX(t)}d{{\tilde{W}}}_t\right) \) can only be understood in the sense of distributions, as we take the derivative of a stochastic process that is not differentiable. However, the square root of a distribution is not well defined, which makes the equation ill-posed. Therefore, it is not a priori clear whether the numerical solutions shown in the next section converge to a solution of the SPDE when the spatiotemporal discretisation parameters dx and dt are sent to 0. In Sect. 3.1, we will discuss the implications of omitting this term on the wave dynamics.

3 Simulations

In this section, we will numerically investigate the PDE (1.1) and SPDE (2.10). We investigate three of the main building blocks of the PDE dynamics: localised standing waves, localised travelling waves and time-periodic solutions, together with their counterparts in the SPDE. However, before we can investigate the dynamics, we must first establish some basic properties of the (S)PDE, like the existence, uniqueness and stability of the background state(s).

Fig. 3 (a) The green line is the v-nullcline for \(c_1=0.18\), while the red line is the nullcline for \(c_1=0.35\). The blue line is the u-nullcline, independent of \(c_1\). The u-axis is plotted logarithmically to better highlight the shape of the nullcline for small u. Note how the background state moved around the fold. (b) Visual representation of the evolution of the two (complex) eigenvalues of the Jacobian matrix (3.2) for \(c_1\) varying from 0.18 (dark blue) to 0.35 (yellow), following the black arrows. The other parameters are fixed at \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(\varepsilon =0.52\) and \(c_2=3.9\) (Color figure online)

For the existence of localised waves, we need the spatially homogeneous background state to be stable. In contrast, for time-periodic solutions, we expect the background state to be unstable, so that continuous excitations of the background state can happen. The possible background states \((u^*,v^*)\) of (1.1) are given by the positive real solutions of the u-nullcline and v-nullcline equations

\[
0 = -a_1 u - a_2 u v + \frac{a_3 u^2}{a_4+u^2} + a_5, \qquad 0 = \varepsilon\,(c_2 u - c_1 v).
\]

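See Fig. 3a for a typical representation of the shape of the nullclines. Since all system parameters are assumed to be positive, this is equivalent to finding the positive solutions \(u^*\) of the quartic equation

\[
a_2 c_2\, u^4 + a_1 c_1\, u^3 + \big(a_2 a_4 c_2 - c_1(a_3+a_5)\big)\,u^2 + a_1 a_4 c_1\, u - a_4 a_5 c_1 = 0,
\]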

with \(v^*= c_2 u^*/c_1\). Since the general solution formula for quartic polynomials is very complex, it is not practical to write down its solutions explicitly. However, by Descartes’ rule of signs (Descartes 2020), there is only one positive real root if \(c_1(a_3 + a_5) < a_2 a_4 c_2\), and one or three positive real roots otherwise (see Footnote 3). The stability of a background state \((u^*,v^*)\) is then determined by the eigenvalues of the associated Jacobian matrix

\[
J = \begin{pmatrix} -a_1 - a_2 v^* + \dfrac{2 a_3 a_4 u^*}{\big(a_4 + (u^*)^2\big)^2} & -a_2 u^* \\[2mm] \varepsilon c_2 & -\varepsilon c_1 \end{pmatrix}.
\tag{3.2}
\]
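The sign-counting argument can be sketched in a few lines of Python. We assume the background quartic is obtained by substituting \(v=c_2u/c_1\) into the u-nullcline \(0=a_5-a_1u-a_2uv+a_3u^2/(a_4+u^2)\) and multiplying by \(-c_1(a_4+u^2)\); only the coefficient of \(u^2\) can change sign, which is where the condition \(c_1(a_3+a_5)<a_2a_4c_2\) comes from. The function names and the test value \(c_1=1.0\) are our own.

```python
def quartic_coefficients(p):
    """Coefficients (highest degree first) of the background-state quartic in u."""
    a1, a2, a3, a4, a5, c1, c2 = (p[k] for k in ("a1", "a2", "a3", "a4", "a5", "c1", "c2"))
    return [a2 * c2, a1 * c1, a2 * a4 * c2 - c1 * (a3 + a5), a1 * a4 * c1, -a4 * a5 * c1]


def sign_changes(coeffs):
    """Number of sign changes in a coefficient sequence, skipping zero coefficients."""
    signs = [c > 0 for c in coeffs if c != 0]
    return sum(1 for s, t in zip(signs, signs[1:]) if s != t)


base = dict(a1=0.167, a2=16.67, a3=167.0, a4=1.44, a5=1.47, c2=3.9)
for c1 in (0.18, 0.35, 1.0):
    p = dict(base, c1=c1)
    below = c1 * (p["a3"] + p["a5"]) < p["a2"] * p["a4"] * p["c2"]
    print(c1, below, sign_changes(quartic_coefficients(p)))
# prints "0.18 True 1", then "0.35 True 1", then "1.0 False 3"
```

Descartes’ rule only states that the number of positive roots equals the number of sign changes minus an even number, so three sign changes leave both one and three positive roots possible, as stated above.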

Since we do not have an explicit formula for \((u^*,v^*)\), we must compute these eigenvalues numerically. For example, when we allow one free parameter, e.g. \(c_1\), and fix the other values, we can compute the background states and the associated eigenvalues of the Jacobian matrix. Taking the parameter values \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(\varepsilon =0.52\) and \(c_2=3.9\) from Bhattacharya et al. (2020) and letting \(c_1\) range from 0.18 to 0.35, such that \(c_1(a_3 + a_5) < a_2 a_4 c_2\), results in one admissible positive background state, ranging from \((u^*,v^*)\approx (0.077, 1.669)\) to \((u^*,v^*)\approx (0.142, 1.586)\). For the lower values of \(c_1\), the eigenvalues are real and negative, resulting in a stable background state. Increasing \(c_1\) to approximately 0.25 results in complex eigenvalues, still with negative real parts. When we further increase \(c_1\) to approximately 0.29, both eigenvalues cross the imaginary axis, i.e. the background state undergoes a Hopf bifurcation, and we expect to see time-periodic solutions. See Fig. 3b for a visual representation of the evolution of the eigenvalues. In Fig. 3a, we show the nullclines for \(c_1=0.18\) and \(c_1=0.35\). The unique background state moves along the fold in the u-nullcline, and as long as it lies between the two folds, the background state is unstable.
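The continuation in \(c_1\) described above can be sketched as follows in Python, assuming the reaction terms of (1.1) are \(f(u,v)=a_5-a_1u-a_2uv+a_3u^2/(a_4+u^2)\) and \(g(u,v)=\varepsilon(c_2u-c_1v)\), which is consistent with the background values quoted above. Background states are the positive real roots of the quartic obtained by substituting \(v=c_2u/c_1\) into \(f=0\), and the eigenvalues are those of the Jacobian of \((f,g)\) at that state. The function names are ours, and we have not reproduced Fig. 3b with this code; if the assumptions hold, the printed largest real part should change sign near the Hopf value \(c_1\approx 0.29\) reported in the text.

```python
import numpy as np

# Parameter values from Bhattacharya et al. (2020) as quoted above; c1 is varied.
BASE = dict(a1=0.167, a2=16.67, a3=167.0, a4=1.44, a5=1.47, eps=0.52, c2=3.9)


def background_states(p, tol=1e-9):
    """Positive real roots u* of the background quartic, paired with v* = c2 u* / c1."""
    a1, a2, a3, a4, a5, c1, c2 = (p[k] for k in ("a1", "a2", "a3", "a4", "a5", "c1", "c2"))
    coeffs = [a2 * c2, a1 * c1, a2 * a4 * c2 - c1 * (a3 + a5), a1 * a4 * c1, -a4 * a5 * c1]
    roots = np.roots(coeffs)
    u_star = np.sort(roots[(np.abs(roots.imag) < tol) & (roots.real > 0)].real)
    return [(u, c2 * u / c1) for u in u_star]


def jacobian(u, v, p):
    """Jacobian of the reaction terms (f, g) at a background state (u, v)."""
    hill_prime = 2.0 * p["a3"] * p["a4"] * u / (p["a4"] + u**2) ** 2
    return np.array([[-p["a1"] - p["a2"] * v + hill_prime, -p["a2"] * u],
                     [p["eps"] * p["c2"], -p["eps"] * p["c1"]]])


summary = {}
for c1 in np.linspace(0.18, 0.35, 18):
    p = dict(BASE, c1=c1)
    (u, v), = background_states(p)    # exactly one positive state for these c1 values
    ev = np.linalg.eigvals(jacobian(u, v, p))
    summary[round(c1, 2)] = (u, v, ev)
    print(f"c1={c1:.2f}  u*={u:.3f}  v*={v:.3f}  max Re(lambda)={ev.real.max():+.3f}")
```

This only checks stability against spatially homogeneous perturbations; the diffusion terms would add \(-k^2\,\text{diag}(D_u,D_v)\) to the Jacobian for a Fourier mode with wavenumber k.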

In the next sections, we study localised standing and travelling waves for the same parameter set with \(c_1<0.25\), and time-periodic solutions for \(c_1>0.29\). The complex dynamics of pulse adding for \(c_1\)-values in the intermediate regime between these two values, where the eigenvalues of the Jacobian have negative real parts but are complex, is outside the scope of this work; see, for example, Carter et al. (2016) for more information.

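So far, we have only looked at background states, which are spatially homogeneous. However, we are interested in spatially nonhomogeneous patterns. By definition, a localised wave is a fixed profile \((\Phi _u,\Phi _v)\) that moves with a fixed speed c (possibly zero). Therefore, when we change to the co-moving coordinate \(\xi =x-ct\) and apply the chain rule, the profile \((\Phi _u,\Phi _v)\) is a stationary solution of the following shifted ordinary differential equation (ODE):

\[
\begin{aligned}
0 &= D_u\,\Phi_u'' + c\,\Phi_u' - a_1\Phi_u - a_2\Phi_u\Phi_v + \frac{a_3\Phi_u^2}{a_4+\Phi_u^2} + a_5,\\
0 &= D_v\,\Phi_v'' + c\,\Phi_v' + \varepsilon\,(c_2\Phi_u - c_1\Phi_v),
\end{aligned}
\tag{3.3}
\]

where the prime denotes differentiation with respect to \(\xi\).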

This ODE problem can be solved using numerical fixed-point algorithms. For these algorithms, a crude starting point is needed for the profile and the value of c, which can come from a PDE simulation. Note that this problem is translation invariant, meaning that we find a one-dimensional family of travelling waves, all shifted versions of each other. Hence, for the solver to converge, an extra condition to fix the location of the wave is necessary.
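The full problem (3.3) needs a good starting profile from a PDE simulation, so to keep the example self-contained and verifiable, the following Python sketch applies the same idea to a swapped-in scalar toy problem: the bistable Nagumo equation \(u_t=u_{xx}+u(1-u)(u-a)\), whose front speed \(c=\sqrt{2}(1/2-a)\) is known in closed form. The unknowns are the discretised profile and the speed c; the extra equation \(\phi(0)=1/2\) is one common choice of pinning condition that removes the translation invariance (the condition used for Fig. 4 is not stated in this section). SciPy’s `fsolve` stands in for MATLAB’s fsolve, and the grid, the threshold a and the starting guess are our own choices.

```python
import numpy as np
from scipy.optimize import fsolve

a = 0.25                                  # hypothetical threshold of the toy nonlinearity
xi = np.linspace(-20.0, 20.0, 401)        # truncated co-moving domain
h = xi[1] - xi[0]
mid = xi.size // 2                        # grid index of xi = 0


def f(w):
    return w * (1.0 - w) * (w - a)        # bistable (Nagumo) reaction term


def residual(z):
    """Discretised 0 = phi'' + c phi' + f(phi), plus one pinning condition."""
    phi, c = z[:-1], z[-1]
    padded = np.concatenate(([1.0], phi, [0.0]))   # phi -> 1 on the left, phi -> 0 on the right
    d2 = (padded[2:] - 2.0 * padded[1:-1] + padded[:-2]) / h**2
    d1 = (padded[2:] - padded[:-2]) / (2.0 * h)
    pin = phi[mid] - 0.5                  # removes the translation invariance: phi(0) = 1/2
    return np.append(d2 + c * d1 + f(phi), pin)


# Crude starting point: a tanh front of the wrong width and zero speed
z0 = np.append(0.5 * (1.0 - np.tanh(xi / 2.0)), 0.0)
z = fsolve(residual, z0, xtol=1e-10)
phi, c = z[:-1], z[-1]
c_exact = np.sqrt(2.0) * (0.5 - a)        # known front speed for this cubic nonlinearity
print(c, c_exact)
```

Without the pinning equation the discretised system would be nearly singular, because shifting the profile leaves the residual almost unchanged; this is the numerical counterpart of the one-dimensional family of shifted waves mentioned above.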

3.1 Standing Waves

In this section, we study standing waves, i.e. solutions of (3.3) with \(c=0\). A solution to this ODE is shown in Fig. 4a. Both components u and v indeed start at and return to their background state \((u^*,v^*) \approx (0.0523, 2.0394)\). The activator u changes rapidly in a small region of the spatial domain, and we therefore call the activator u the fast variable. In contrast, the inhibitor v is the slow variable, as it changes more gradually over a larger spatial distance. Figure 4b shows the corresponding phase plane. The trajectory spends most of its spatial extent near the lower branch of the u-nullcline before making a fast excursion from the lower branch to the upper branch of this nullcline and, by the symmetry \(x \mapsto -x\) of the ODE (3.3), it then returns to the lower branch in a similar fashion. The fact that the two components of the standing pulse evolve on different spatial scales allows us to analyse this standing pulse mathematically, see “Appendix B”. For instance, the value \({\bar{v}}\) at which the activator u makes a sharp transition (approximately 3.8 in Fig. 4b) can be approximated by the algebraic relation (B.10). The analysis also explains why the solution trajectory in the phase plane closely follows the lower branch of the u-nullcline for most of the trajectory.

Fig. 4 (a) A localised standing wave solution to ODE (3.3), found numerically with MATLAB’s fsolve. The green curve is the u-component and the red curve is the v-component. In (b), the u-nullcline (blue) and v-nullcline (green) of (1.1) are shown together with the v-u phase plane of the standing wave from (a). The phase plane is plotted on a semi-log scale to better highlight the dynamics for small u. The standing wave starts from the background state (indicated by an asterisk) and initially follows the lower branch of the u-nullcline before jumping to its upper branch, after which it follows the same track back to the background state. The system parameters are taken from Bhattacharya et al. (2020) and set to \(D_u=0.1\), \(D_v=1\), \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(\varepsilon =0.52\), \(c_1=0.1\), and \(c_2=3.9\) (Color figure online)

Fig. 5 Simulation of the PDE (1.1): (a) shows the activator u and (b) the inhibitor v, with an initial condition as described in the main text. The same parameters are used as in Fig. 4. Note that the v-component does not return to its background state in the region between the two pulses (Color figure online)

By construction, the standing wave in Fig. 4a is a stationary solution of the PDE (1.1). This can be confirmed by using the wave from the ODE as the initial condition for a PDE simulation (not shown). However, we are unlikely to find this single standing wave in a PDE simulation without a fine-tuned initial condition. As an example, we use the initial condition \(u_0=u^*+e^{-x^2}\) and \(v_0=v^*+2/\cosh ^2(5x)\) as a crude approximation of the wave. The resulting simulation is shown in Fig. 5. This initial condition splits into what appear to be two well-separated localised standing waves (see Footnote 4). However, the plot of the slow v-component makes clear that this is not the case: the two standing waves are connected through the slow component, i.e. the slow component is not in its background state between the two standing waves. For more details on the numerics of the (S)PDE simulations, see “Appendix A”.

The interaction between the two standing waves in Fig. 5 through the slow v-component causes the two standing waves to repel each other on a very slow timescale, as becomes clear for long integration times, see Fig. 6b. On an infinite domain, the two standing waves slowly drift apart forever, but on a periodic domain, we can expect them to stabilise once they are equally far apart on both sides. On the timescales of biological processes, this slow continuous splitting is probably not relevant, and on short timescales, the term “standing waves” for the solution at later times in Fig. 5 is biologically justified. Furthermore, note that it is essential to look at both components simultaneously to understand the presented dynamics. In other words, to understand Fig. 5a, it is essential to also look at Fig. 5b.

Fig. 6 Same simulation as in Fig. 5, but on different time scales. (a) shows the u-component, zoomed in to highlight the short-time dynamics, while (b) shows the long-time dynamics of u, highlighting the pulse-splitting phenomenon. Both simulations were done on a larger grid \([-60,60]\), so that the waves would not affect each other on the other side of the domain on this large time scale (Color figure online)

We now take a closer look at the short-time dynamics presented in Fig. 6a. In Bhattacharya et al. (2020), this splitting of the initial condition is described as two counter-propagating travelling waves, sometimes called trigger waves (Gelens et al. 2014). By the formal mathematical definition, a travelling wave is a fixed profile moving with a fixed speed, i.e. a solution of (3.3). Therefore, mathematically speaking, these do not classify as travelling waves. Instead, what we observe here would be classified as transient dynamics and pulse splitting. However, at \(t=0\) the activity of u is located around \(x=0\), and after some time it has moved to two different places, which justifies the term “travelling”. If we adopt the terms “standing” and “travelling”, Fig. 5a shows that a transition from travelling to standing occurs around \(t=3\). Hence, transitions between travelling and standing waves can occur in the parameter regime where initial conditions typically converge to a double pulse.

Standing waves with noise For the same parameter values as in the previous paragraph, we now study the full SPDE (2.10). In Fig. 7, we plot realisations of the SPDE for different noise intensities. For low noise levels, two quasi-stationary waves appear, as in Fig. 5, before they are destroyed by the noise at different points in time. Since the noise is low, no new activation events occur. When we increase the noise intensity, the noise is able to activate the stable background state, but the waves are also destroyed more quickly, resulting in a continual appearance and disappearance of waves. Compare Fig. 7c with the figures in Biswas et al. (2022), where a similar model is studied using Gillespie algorithms. Such activation of the background state is not possible in the deterministic PDE (1.1) without external forcing. In Fig. 7b and c, many patterns are generated at the start, causing the inhibitor to increase everywhere, which blocks new activation events. After this initial phase, new activation events appear and, as expected, significantly more of them for higher values of the noise. When we increase the noise even further, it becomes impossible to form patterns, as every activation event is destroyed instantly. Therefore, pattern formation happens at intermediate values of the noise. The idea that some “optimal” value of the noise results in complex dynamics has been observed before, for instance in the context of nerve impulses (García-Ojalvo et al. 2001).

The u-component of the SPDE (2.10) for four different values of the noise \(\sigma \). The other system parameters and initial conditions are the same as in the previous figures. In (a), the simulation is only shown up to \(T=20\) because the solution remains in the background state afterwards; the other three panels are shown up to \(T=100\) (Color figure online)

In order to quantify this notion of optimality in the noise intensity, we must first quantify the size and shape of the patterns in Fig. 7b and c. Using MATLAB’s regionprops algorithm, we automatically detect the patches where the activator u is high (see “Appendix A” for details), which allows us to count the activation events and to determine the width and duration of each event, see Fig. 8a. In Fig. 8b, we show the statistics for a range of \(\sigma \) values. This figure shows a clear cut-off for when activation events are likely to happen. For \(\sigma <0.035\), the average number of events is lower than 1, and the number of activation events increases sharply beyond this value. The width, the length and the maximum height of the events are all larger when the number of excitation events is low, but their variability is also larger. In Fig. 9, we look at the statistics of the events for the specific value \(\sigma =0.046\). The distribution of the maximum is sharply peaked, as expected, since the maximum is mainly determined by the deterministic dynamics after the excitation. The width and length of the events are much more spread out. In particular, the width distribution has a heavy tail towards zero. This is also expected, because activation events come in two forms: most events result in two waves, but a small fraction has the shape of just a single wave, which has a width of 0.87 in the deterministic case. We tested whether these histograms are well approximated by a Gaussian distribution, but this hypothesis was rejected by a Kolmogorov–Smirnov test \((p\sim 10^{-14})\).
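The Kolmogorov–Smirnov check mentioned above can be sketched in Python with SciPy. This is a minimal sketch, assuming the event widths (or lengths, or maxima) have already been collected into an array; the function name `ks_gaussian_test` is hypothetical, and the bimodal sample below is synthetic and illustrative only, not the data of Fig. 9. Because the mean and standard deviation are estimated from the same samples, the classical KS p-value is conservative; a Lilliefors-type test would be the stricter alternative.

```python
import numpy as np
from scipy import stats


def ks_gaussian_test(samples):
    """Kolmogorov–Smirnov test of `samples` against a fitted normal distribution.

    Hypothetical helper: the mean and standard deviation are estimated from the
    samples themselves, which makes the classical KS p-value conservative.
    Returns (KS statistic, p-value).
    """
    x = np.asarray(samples, dtype=float)
    z = (x - x.mean()) / x.std(ddof=1)  # standardise with the fitted parameters
    result = stats.kstest(z, "norm")    # compare with the standard normal CDF
    return result.statistic, result.pvalue


# Synthetic, illustrative sample: a main population of broad events plus a
# small population of narrow (single-wave-like) events of width about 0.87.
rng = np.random.default_rng(0)
widths = np.concatenate([rng.normal(3.0, 0.5, 1800), rng.normal(0.87, 0.1, 200)])
stat, p = ks_gaussian_test(widths)
print(f"KS statistic {stat:.3f}, p-value {p:.1e}")
```

A small secondary population far from the bulk is enough to make the test reject normality decisively for a few thousand events, which is the same qualitative situation as the heavy tail in the width histogram.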

Using the statistics on the width, length and maximum, we can compare the solutions of SPDE (2.10) to SPDEs with the same deterministic part but different noise terms. First, we can set the \(\partial _x\left( \sqrt{2DX}dW_t\right) \) term coming from the diffusion to zero. As noted in Remark 1, this term makes the mathematical analysis of the SPDE (2.10) significantly harder. A single realisation is shown in Fig. 10a, which is visually very similar to Fig. 8a. Indeed, Fig. 9d–f shows that the statistics of the solutions do not change significantly when we delete this term. This indicates that the noise coming from the reaction terms plays a more influential role in determining the shape of the patterns.
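To make the term \(\partial _x\left( \sqrt{2DX}dW_t\right) \) concrete, a minimal sketch of a conservative finite-volume discretisation is given below, in the spirit of the fluctuating-hydrodynamics schemes of Kim et al. (2017). It assumes a periodic 1D grid with spacing h, places the noise on the cell faces with variance dt/h, and evaluates X at a face as the arithmetic mean of the two neighbouring cells; these choices, and the name `diffusion_noise_increment`, are assumptions and not necessarily the discretisation used for the figures. Because the increment is a discrete divergence of face fluxes, it sums to zero: this noise only redistributes mass.

```python
import numpy as np


def diffusion_noise_increment(u, D, h, dt, rng):
    """One-step increment of d/dx( sqrt(2 D u) dW ) on a periodic 1D grid.

    Finite-volume sketch: the noise lives on the faces i+1/2 and its
    divergence is taken back to the cell centres, so the increments sum
    to zero (total mass is conserved).
    """
    u_face = 0.5 * (u + np.roll(u, -1))                   # u at face i+1/2 (arithmetic mean, an assumption)
    amp = np.sqrt(2.0 * D * np.maximum(u_face, 0.0))      # noise amplitude; clip to keep the root real
    xi = rng.normal(0.0, np.sqrt(dt / h), size=u.shape)   # N(0, dt/h) per face
    flux = amp * xi                                       # stochastic flux F_{i+1/2}
    return (flux - np.roll(flux, 1)) / h                  # (F_{i+1/2} - F_{i-1/2}) / h


# Illustrative use on a smooth profile.
rng = np.random.default_rng(1)
x = np.linspace(-10.0, 10.0, 256, endpoint=False)
u = 0.1 + np.exp(-x**2)
du = diffusion_noise_increment(u, D=0.1, h=x[1] - x[0], dt=1e-3, rng=rng)
```

Setting this increment to zero, as done for Fig. 10a, therefore leaves the total amount of each species unaffected by the noise; only the reaction noise changes it.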

We are now also in a position to compare the CLE approach with the more ad hoc approach of adding additive space-time white noise to the u-component of (1.1) to mimic the inherent noisiness of the system. A single realisation is shown in Fig. 10b, but this time the general shape of the patterns does not match that of the CLE, see Fig. 9g–i. In particular, with just white noise, the patterns are all short and narrow and do not reflect the complicated dynamics of the underlying chemical reactions and experiments. Note also that, because the noise is additive, the solutions can become negative in this case.

In (a), we show a simulation similar to those in Fig. 7, but with \(\sigma =0.045\) and a homogeneous initial condition with \((u^*,4v^*)\) plus a small perturbation. The red boxes are the result of the pattern finding algorithm regionprops in MATLAB; it identifies all the regions of excitations which we would also find by eye, see “Appendix A” for details. In (b), we used this algorithm to find the length, width and maximum of these pulses (left axis), as well as the total number of activation events (right axis). For each value of \(\sigma \), the number of events is averaged over 100 simulations, and the length, width and maximum are averaged over all events in the 100 simulations. We plot the average together with the standard deviation (Color figure online)

(a–c) show the histograms of the width, length and maximum of the pulses for \(\sigma =0.046\), for the same data as in Fig. 8b. For (a) and (b), the bin width is fixed to 0.25, and for (c) to 0.1. (d–f) show the same histograms, but for simulations in which the noise coming from the diffusion (the last term in both equations of (2.10)) was set to 0. (g–i) again show the same histograms, but with just white noise on the u-component of (1.1). To compare the noise levels, we did not use the same \(\sigma \) value in the three cases but chose \(\sigma \) values such that the average number of activation events per simulation is approximately 50. For (d–f), this means \(\sigma =0.056\), and for (g–i) \(\sigma =0.23\)

(a) shows an example of the simulations used to generate the second row of histograms in Fig. 9, i.e. a solution of the SPDE (2.10) without the noise coming from the diffusion, with \(\sigma =0.056\). (b) shows an example of the simulations for the last row of histograms in Fig. 9, i.e. a solution with just white noise added to the first component of (1.1), with \(\sigma =0.23\). The simulations are zoomed in to a smaller grid to better show the shape of the individual pulses (Color figure online)

3.2 Travelling Waves

In order to find a travelling wave solution of (1.1), understood as a solution of (3.3) with \(c\ne 0\), we must ensure that the dynamics starting from the initial condition neither reaches the standing phase nor returns to the background state. This can be achieved by increasing the value of \(c_1\). Increasing \(c_1\) results in a faster exponential decay of v back to the background state after an excitation, see Table 1, which prevents the inhibitor from gluing the two waves together as in Fig. 5. Simulations with \(c_1\) increased from 0.1 to 0.2 (see Footnote 5) are shown in Fig. 11. Note that the PDE (1.1) still has only one stable background state \((u^*,v^*) \approx (0.0833, 1.625)\). The initial condition splits into two counter-propagating travelling waves but, in contrast to the standing waves before, they keep separating and move away from each other at a fixed speed until they collide and cancel each other out due to the periodicity of the domain, see Fig. 11.

Simulation of the PDE (1.1), (a) shows the activator u and (b) the inhibitor v. We observe the splitting of the initial condition in two counter-propagating travelling waves with a constant speed that exist until they cancel each other out due to the periodicity of the domain. The slow inhibitor v decays back to its background state in between the pulses. The red dotted line has a speed of \(-2.10\), which is close to the value of approximately \(-2.17\) found by solving (3.3) using a fixed-point method. The parameters are \(D_u=0.1\), \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(D_v=1\), \(\varepsilon =0.52\), \(c_1=0.2\) and \(c_2=3.9\) (Color figure online)
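The text does not state how the speed of the red dotted line was obtained. One simple way to estimate the speed of a single pulse from simulation output is to track the position of its maximum over time and fit a straight line. The sketch below assumes the solution is stored as an array `U[time_index, space_index]` and that a spatial window containing only one of the two counter-propagating waves is supplied; the function name `pulse_speed` and the synthetic test pulse are hypothetical.

```python
import numpy as np


def pulse_speed(U, x, t, x_window=None):
    """Estimate the speed of one travelling pulse from U[time_index, space_index].

    The position of the maximum of U inside `x_window` (a (lo, hi) tuple) is
    tracked in time, and the speed is the slope of a least-squares line fit.
    """
    if x_window is None:
        cols = np.ones_like(x, dtype=bool)
    else:
        cols = (x >= x_window[0]) & (x <= x_window[1])
    xs = x[cols]
    positions = xs[np.argmax(U[:, cols], axis=1)]  # location of the maximum at each time
    slope, _intercept = np.polyfit(t, positions, 1)
    return slope


# Synthetic check: a Gaussian pulse moving to the left with speed -2.1.
x = np.linspace(-60.0, 60.0, 2048, endpoint=False)
t = np.linspace(0.0, 20.0, 201)
U = np.exp(-(x[None, :] - (-5.0 - 2.1 * t[:, None])) ** 2)
print(pulse_speed(U, x, t, x_window=(-60.0, 0.0)))  # approximately -2.1
```

Near a collision or the edge of the window the tracked maximum jumps, so in practice the fit would be restricted to the time interval before the waves meet.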

To find a single travelling wave, we again need to properly tune the initial condition. This can be done by selecting one of the two waves in Fig. 11 and using it as the initial condition of the PDE simulation (not shown). In Fig. 12, we show the travelling wave profile and its associated phase plane. As with the standing pulse, the dynamics around the u-nullcline is essential. The solution trajectory starts from near the background state and follows the lower branch of the u-nullcline, jumps towards the upper branch of the nullcline and keeps following it until it falls off and returns to the lower branch to slowly evolve back towards the stable background state. In contrast to the standing pulse, see Fig. 4, the travelling wave is no longer symmetric and it jumps back to the lower branch by falling off the edge of the upper branch. These travelling wave solutions could be analysed further using techniques similar to “Appendix B”.

Profile of a single travelling wave. (a) shows both components u (green) and v (red) and (b) the related phase plane, plotted on a semi-log scale to highlight the dynamics for small u, as well as the nullclines. The asterisk indicates the background state. This solution is obtained as the endpoint of a PDE simulation (not shown), i.e. similar to Fig. 11, but with just one of the two waves as initial condition (Color figure online)

Travelling Waves with Noise When we return to SPDE (2.10), there are four regimes for the same parameters as in the previous section. For high values of the noise, we, as before, do not observe any patterns (not shown). For low values of the noise, we only find the travelling wave (if the simulation is started from an appropriate initial condition), since on the timescales of the simulation the noise is strong enough neither to destroy the wave nor to activate another pattern (not shown). The interesting dynamics again happens at intermediate noise levels. As Fig. 13a shows, the noise activates the dynamics, resulting in many counter-propagating travelling waves. A travelling wave is annihilated when it collides with a travelling wave coming from the other direction. Hence, the collision dynamics of Fig. 11 is repeated many times on smaller spatio-temporal scales. Figure 13 shows that after the annihilation of the travelling waves, the slow inhibitor v initially remains high, preventing the activation of new counter-propagating travelling waves. Only after the inhibitor has sufficiently decayed does the noise activate new counter-propagating travelling waves. The creation and annihilation of travelling waves happens on a shorter timescale than the decay of the inhibitor, which makes the dynamics look synchronised, or even periodic. In Fig. 20a, we plot the approximate period versus the intensity of the noise. As expected, the period decreases with increasing noise intensity. It differs significantly, however, from the true time-periodic motion discussed in Sect. 3.3. When we increase the noise further, the quasi-periodic pattern breaks up, as the counter-propagating travelling waves are destroyed before they collide and annihilate each other, so no synchronised patterns emerge, see Fig. 13c and d.
These patterns become relevant when we discuss the comparison between the CLE and the Gillespie simulations in Fig. 1, see §3.4.

Given the discussion above, it is important to realise that we do not expect to see the travelling waves of Fig. 11 in practice, since a travelling wave is destroyed either when it collides with another wave or by the noise. Therefore, it is not always clear in the stochastic simulations whether we are looking at travelling waves or at transient dynamics towards a double pulse. For instance, the individual pulses in Fig. 7c and d are visually very similar to those in Fig. 13.

Simulation of the SPDE (2.10) for \(\sigma =0.02\), (a) and (b), and \(\sigma =0.05\), (c) and (d). The red dashed line in (a) has a slope of 2.05, close to the deterministic wave speed, but given the short time interval over which the wave exists, precise estimates are difficult to obtain. We observe quasi-periodic behaviour with a period of roughly 20. In (c) and (d), the quasi-periodic structure is destroyed. The same parameters and initial condition are used as in Fig. 11 (Color figure online)

3.3 Time-Periodic Solutions

In the previous sections, it was essential that the background state of the system was stable, because this allowed the dynamics to return to the background state after an activation event. When we increase the value of \(c_1\) further, the background state becomes unstable through a Hopf bifurcation, see Fig. 3b. In the phase plane, this transition is characterised by the background state no longer lying on the lower branch of the u-nullcline, as in Figs. 4b and 12b, but on its middle branch, see Fig. 15b. Hence, after an excursion, the solution cannot return to the unstable background state and is excited again, resulting in time-periodic motion. Starting from a spatially homogeneous initial condition, the PDE simulation shows periodic oscillations in time, see Figs. 14 and 15. Both components still display slow–fast behaviour, but this time in the temporal variable t rather than in the spatial variable x. For nonhomogeneous initial conditions, it takes several oscillations before the solution is synchronised in space (not shown). The observed behaviour has the characteristics of a relaxation oscillation, as studied intensively for the van der Pol equation (Van der Pol 1926). This is not surprising, as the van der Pol equation formed the foundation for the classic FitzHugh–Nagumo model (FitzHugh 1961; Nagumo et al. 1962), and PDE (1.1) can be seen as a variation on this classic model.

Simulation of the PDE (1.1), (a) shows the activator u and (b) the inhibitor v. By measuring the distances between the maxima of the oscillations, we estimate the period of the oscillation as \(T=8.14\). Note that this is significantly shorter than the period of roughly 20 of the quasi-periodic oscillations in Fig. 13. The parameters are set to \(D_u=0.1\), \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(D_v=1\), \(\varepsilon =0.52\), \(c_1=0.4\) and \(c_2=3.9\) (Color figure online)

Cross section of Fig. 14 at \(x=0\), together with the corresponding phase plane. It is clear that the solution leaves the background state (marked by an asterisk), but does not return to it (Color figure online)

Time-periodic Solutions with Noise For small values of the noise \(\sigma \), the observed period is close to that of the deterministic solution, but as \(\sigma \) increases, the period decreases, as expected. Note that after an excitation the inhibitor remains high, preventing new activation events. When the noise is too high, no patterns are observed. To investigate the relation between the reduction of the period and the intensity of the noise, we plot the estimated period versus the noise intensity in Fig. 20b. The period indeed decreases monotonically with the noise.

Two simulations of PDE (1.1) with parameters as in Bhattacharya et al. (2020); \(D_u=0.1\), \(a_1=0.167\), \(a_2=16.67\), \(a_4=1.44\), \(a_5=1.47\), \(D_v=1\), \(\varepsilon =0.4\), \(c_1=0.1\) and, for (a), \(a_3=167\) and \(c_2=2.1\), while \(a_3=300.6\) and \(c_2=3\) for (b). The initial condition is equal to those in the previous figures (Color figure online)

3.4 Wild-Type Versus PTEN-Null Cells

Now that we have studied several fundamental patterns, we can focus on understanding the different cell shapes. In Bhattacharya et al. (2020), two sets of parameters are compared, representing WT cells (i.e. healthy cells) and PTEN-null cells, in which the tumour-suppressor gene PTEN has been switched off (Chen et al. 2018). First, we simulate the deterministic PDE (1.1) for both sets of parameters, see Fig. 17. In both parameter regimes, we observe two counter-propagating travelling waves, but their profiles and speeds differ. In particular, the wave in Fig. 17b is significantly broader and higher than the wave in Fig. 17a.

When noise is applied, the statistics of the dynamics show a clear difference between the two cell types. In Fig. 18, we compare the SPDE simulations of (2.10) with the Gillespie simulations from Bhattacharya et al. (2020). Regarding the typical shape of the excitations, there is a clear qualitative correspondence between the two types of simulation. Furthermore, in both types of simulation, the average pulse duration is longer for the PTEN-null cells. Note that we show the SPDE simulations on a larger spatio-temporal scale to give a better idea of the distribution of shapes; the zoom-boxes highlight the detailed structure of a typical single activation event. As a result of the increased values of \(c_2\) and \(a_3\), the background state of the PTEN-null cells can be excited at much lower noise values (\(\sigma \approx 0.007\)), while for WT cells the noise needs to be twice as large (\(\sigma \approx 0.014\)). Hence, in PTEN-null cells, an already existing pattern can more easily sustain itself, leading to the elongated shapes of Fig. 18d.

Comparison of the Gillespie model, (a) and (c) from Bhattacharya et al. (2020), with the CLE approximation (2.10), (b) and (d). The same parameters as in Fig. 17, with \(\sigma =0.06\). The initial condition is \((u^*,2v^*)\). This can lead to an immediate excitation of the background state in (d), while in (b) the excitation of the background is more spread out. The zoom-boxes highlight the details of a single excitation (Color figure online)

4 Discussion and Outlook

We set out to show how stochastic partial differential equations (SPDEs), or more specifically chemical Langevin equations (CLEs), can be used to gain more insight into the dynamics of models for cell motility. For an exemplary set of chemical reactions (see Table 1), we have shown that the CLE approach, combined with a basic analysis of the corresponding deterministic PDE, allows us to study the different possible patterns with relative ease, both qualitatively and quantitatively, while remaining close to the underlying chemical processes. To understand differences in cell behaviour, like the difference between wild-type and cancerous cells in Bhattacharya et al. (2020), the study of the statistical properties of the observed dynamics is essential. For instance, an essential characteristic differentiating wild-type cells from cancerous cells is how long a pattern can survive after activation. The simulations in the previous section show that the answer depends not only on the parameters of the system but, crucially, on the interplay between the parameters and the noise. The CLE can be used to study this interplay. A natural question is whether all the stochastic terms introduced in the CLE (2.9) are really necessary. Could we, for example, ignore the noise term coming from the diffusion, or forget the derivation of the CLE altogether and just naively add an additive white noise term to the equation for u? The histograms in Fig. 9 indicate that the effects of the terms coming from the diffusion are minimal (for the parameter values studied here), and therefore that these terms do not contribute meaningfully to our understanding of the cell dynamics. Note that dropping these terms would resolve the problem of the equation being ill-posed, see Remark 1, and would open up the possibility of a more rigorous mathematical analysis based on the results in Hamster and Hupkes (2020).
We also noted that adding just additive white noise changes the statistics significantly, which indicates that completely abandoning the CLE approach throws away too much detail.

In this paper, we studied a basic activator–inhibitor system with only a limited number of chemical reactions. However, the derivation of the CLE (2.9) in §2 holds for any number of molecules and for any number of chemical reactions. As such, this paper can be seen as a proof of concept, and its methodology can be applied directly to more complex regulatory systems, such as the eight-component system designed in Biswas et al. (2022). In future work, we aim to study such more complex models to better understand the stochastic dynamics that cause the cell to move robustly in one specific direction.

Furthermore, as shown in detail in “Appendix B”, the underlying deterministic RDE (1.1) is amenable to rigorous mathematical analysis using geometric singular perturbation theory (Fenichel 1979; Hek 2010; Jones 1995; Kaper 1999). We derived a first-order approximation for the jump location where, under certain conditions, the standing wave has a sharp transition in its activator. This methodology could also be used, for instance, to analyse the travelling waves further and to derive approximations for their speed. In other words, questions about the existence and bifurcations of localised solutions of (1.1) can be reduced to understanding relatively simple ODEs and the connections between them. The details of these computations are left for future work.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

Notes

Note that the origin (0, 0) is only a background state if \(a_5=0\).

We also observe the evolution of the initial condition back to the stable background state \((u^*,v^*)\), especially for initial conditions with smaller amplitudes. Simulations are not shown.

For values in between, say \(c_1=0.15\), the numerics become very sensitive to the chosen discretisation, see “Appendix A”.

It is relatively straightforward to generalise the results for the unbounded domain to the periodic domain for the type of problems under consideration, see, for example, Doelman et al. (2001).

Unfortunately, the theorems do not quantify what is meant by small enough.

References

Bhattacharya S, Banerjee T, Miao Y, Zhan H, Devreotes PN, Iglesias PA (2020) Traveling and standing waves mediate pattern formation in cellular protrusions. Sci Adv 6(32):7682

Biswas D, Bhattacharya S, Iglesias PA (2022) Enhanced chemotaxis through spatially regulated absolute concentration robustness. Int J Robust Nonlinear Control

Bressloff PC (2014) Stochastic processes in cell biology, vol 41. Springer, New York

Carter P, de Rijk B, Sandstede B (2016) Stability of traveling pulses with oscillatory tails in the FitzHugh–Nagumo system. J Nonlinear Sci 26(5):1369–1444

Chen C-Y, Chen J, He L, Stiles BL (2018) PTEN: tumor suppressor and metabolic regulator. Front Endocrinol 9:338

Chen M, Li F, Wang S, Cao Y (2016) Stochastic modeling and simulation of reaction-diffusion system with Hill function dynamics. In: Proceedings of the 7th ACM international conference on bioinformatics, computational biology, and health informatics, pp 525–526

Deng Y, Levine H (2022) Introduction to models of cell motility. In: Blagoev KB, Levine H (eds) Physics of molecular and cellular processes, pp 173–212, Chap. 7. Springer, New York

Descartes R (2020) Discours de la méthode/discourse on the method: a bilingual edition with an interpretive essay. University of Notre Dame Press, Notre Dame, Indiana

Devreotes PN, Bhattacharya S, Edwards M, Iglesias PA, Lampert T, Miao Y (2017) Excitable signal transduction networks in directed cell migration. Annu Rev Cell Dev Biol 33:103–125

Doelman A, Kaper TJ, van der Ploeg H (2001) Spatially periodic and aperiodic multi-pulse patterns in the one-dimensional Gierer–Meinhardt equation. Methods Appl Anal 8(3):387–414

Dogan E, Allen EJ (2011) Derivation of stochastic partial differential equations for reaction-diffusion processes. Stoch Anal Appl 29(3):424–443

Fenichel N (1979) Geometric singular perturbation theory for ordinary differential equations. J Differ Equ 31(1):53–98

FitzHugh R (1961) Impulses and physiological states in theoretical models of nerve membrane. Biophys J 1(6):445–466

García-Ojalvo J, Sagués F, Sancho JM, Schimansky-Geier L (2001) Noise-enhanced excitability in bistable activator-inhibitor media. Phys Rev E 65(1):011105

Gelens L, Anderson GA, Ferrell JE Jr (2014) Spatial trigger waves: positive feedback gets you a long way. Mol Biol Cell 25(22):3486–3493

Gillespie DT (1976) A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J Comput Phys 22(4):403–434

Gillespie DT (1977) Exact stochastic simulation of coupled chemical reactions. J Phys Chem 81(25):2340–2361

Gillespie DT (1992) A rigorous derivation of the chemical master equation. Phys A 188(1–3):404–425

Gillespie DT (2000) The chemical Langevin equation. J Chem Phys 113(1):297–306

Hamster CHS, Hupkes HJ (2020) Travelling waves for reaction-diffusion equations forced by translation invariant noise. Phys D 401:132233

Hek G (2010) Geometric singular perturbation theory in biological practice. J Math Biol 60(3):347–386

Inagaki N, Katsuno H (2017) Actin waves: origin of cell polarization and migration? Trends Cell Biol 27(7):515–526

Jones CKRT (1995) Geometric singular perturbation theory. In: Dynamical systems (Montecatini Terme, 1994). Lecture Notes in Math., vol 1609, pp 44–118. Springer, Berlin

Kaper TJ (1999) An introduction to geometric methods and dynamical systems theory for singular perturbation problems. In: Proceedings of symposia in applied mathematics, vol 56, pp 85–132. American Mathematical Society

Kim C, Nonaka A, Bell JB, Garcia AL, Donev A (2017) Stochastic simulation of reaction-diffusion systems: a fluctuating-hydrodynamics approach. J Chem Phys 146(12):124110

Kuehn C (2019) Travelling waves in monostable and bistable stochastic partial differential equations. Jahresbericht der Deutschen Mathematiker-Vereinigung, 1–35

Lei J (2021) Systems biology. Springer, New York

Li L, Nørrelykke SF, Cox EC (2008) Persistent cell motion in the absence of external signals: a search strategy for eukaryotic cells. PLoS ONE 3(5):2093

Lodish H, Berk A, Kaiser CA, Kaiser C, Krieger M, Scott MP, Bretscher A, Ploegh H, Matsudaira P et al (2008) Molecular cell biology. Macmillan, New York

Lord GJ, Powell CE, Shardlow T (2014) An introduction to computational stochastic PDEs, vol 50. Cambridge University Press, Cambridge

Nagumo J, Arimoto S, Yoshizawa S (1962) An active pulse transmission line simulating nerve axon. Proc IRE 50(10):2061–2070

Selmeczi D, Li L, Pedersen LI, Nørrelykke SF, Hagedorn PH, Mosler S, Larsen NB, Cox EC, Flyvbjerg H (2008) Cell motility as random motion: a review. Eur Phys J Spec Top 157(1):1–15

Van der Pol B (1926) On relaxation-oscillations. Lond Edinb Dublin Philos Mag J Sci 2(11):978–992

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Numerical Methods

A.1 (S)PDE Simulations

All the (S)PDE simulations in this paper were done using a semi-implicit Euler–Maruyama method (Lord et al. 2014, §10.5). In space, a standard second-order central difference is used, and in time the Euler–Maruyama scheme. The deterministic linear part is evaluated at the next time step, which makes the scheme semi-implicit. To be concrete, we study an SPDE of the form

\[ du = \big( Lu + f(u) \big)\, dt + g(u)\, dW_t, \]

where u is a vector, L is a linear differential operator, f, g are functions, and \(dW_t\) is a white noise vector. In comparison with the main text, the vector u equals (u, v) and \(L=D\partial _{xx}\).

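When we denote the numerical approximation of the linear part L by A, and the spatial discretisation of u at time t by \({\textbf{u}}(t)\), we find

\[ {\textbf{u}}(t+dt) - {\textbf{u}}(t) = \big( A\,{\textbf{u}}(t+dt) + f({\textbf{u}}(t)) \big)\, dt + g({\textbf{u}}(t))\, d{\textbf{W}}_t. \]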

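The white noise increment \(d{\textbf{W}}_t\) is a vector whose elements are independent and distributed as \({\mathcal {N}}(0,dt/h)\), where h is the spatial grid spacing. Hence, the new value \({\textbf{u}}(t+dt)\) is obtained from

\[ (I - dt\,A)\,{\textbf{u}}(t+dt) = {\textbf{u}}(t) + f({\textbf{u}}(t))\, dt + g({\textbf{u}}(t))\, d{\textbf{W}}_t. \]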

The equation for \({\textbf{u}}(t+dt)\) is a linear system that can be solved using standard solvers. It is important to realise that the algorithms from Lord et al. (2014) only work for Lipschitz noise terms. Since the square root is not Lipschitz continuous at zero, the algorithms become unstable when the term under the square root in (2.10) approaches zero. To correct for this, after every time step we replace \({\textbf{u}}(t+dt)\) by its componentwise maximum with 0.
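A minimal Python sketch of this scheme for a single component on a periodic grid is given below: solve (I − dt A) u(t+dt) = u(t) + f(u(t)) dt + g(u(t)) dW with dW ~ N(0, dt/h) per grid point, then clip at zero. It assumes SciPy's sparse LU factorisation (`scipy.sparse.linalg.factorized`) as the "standard solver"; the reaction term f and noise amplitude g are placeholders supplied by the caller, not the terms of (2.10), and the noise acts pointwise, so the divergence-form diffusion noise of (2.10) is not included. The names `periodic_laplacian` and `make_stepper` are hypothetical.

```python
import numpy as np
import scipy.sparse as sp
import scipy.sparse.linalg as spla


def periodic_laplacian(n, h):
    """Second-order central difference approximation of d^2/dx^2 on a periodic grid."""
    off = np.ones(n - 1)
    A = sp.diags([off, -2.0 * np.ones(n), off], [-1, 0, 1], shape=(n, n), format="lil")
    A[0, n - 1] = 1.0  # periodic wrap-around
    A[n - 1, 0] = 1.0
    return A.tocsc() / h**2


def make_stepper(n, h, dt, D, f, g):
    """Return a function performing one semi-implicit Euler–Maruyama step:

        (I - dt*D*Lap) u_new = u + f(u) dt + g(u) dW,   dW ~ N(0, dt/h) per grid point,

    followed by u_new := max(u_new, 0). Here D*Lap approximates L = D d^2/dx^2.
    The matrix is factorised once and reused at every step.
    """
    M = (sp.identity(n, format="csc") - dt * D * periodic_laplacian(n, h)).tocsc()
    solve = spla.factorized(M)

    def step(u, rng):
        dW = rng.normal(0.0, np.sqrt(dt / h), size=n)  # discretised space-time white noise
        rhs = u + f(u) * dt + g(u) * dW                 # explicit reaction and noise terms
        return np.maximum(solve(rhs), 0.0)              # clip at zero after every step

    return step


# Illustrative use with placeholder terms (hypothetical, not the model (2.10)).
n, h, dt = 128, 0.1, 1e-3
rng = np.random.default_rng(0)
step = make_stepper(n, h, dt, D=0.1,
                    f=lambda v: 0.1 - v,                            # toy relaxation term
                    g=lambda v: 0.5 * np.sqrt(np.maximum(v, 0.0)))  # toy square-root noise
u = np.full(n, 0.1)
for _ in range(1000):
    u = step(u, rng)
```

Factorising I − dt A once is the main advantage of treating only the linear part implicitly: each step then costs one sparse triangular solve, while the nonlinear reaction and noise terms stay explicit.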

The specific models studied in the main text, even the PDE (1.1), can be very sensitive to the size of the spatial discretisation h and temporal discretisation dt in certain parameter regimes. For example, when \(c_1=0.15\) and the remaining parameters are equal to those in Figs. 5 and 11, the dynamics can differ significantly depending on the chosen size of the discretisation, see Fig. 19. For the values used in the main text, \(c_1=0.1\) and \(c_1=0.2\), such a discrepancy was not observed for reasonable discretisations. This is possibly related to the coexistence of travelling and standing waves in this regime.

Simulation of the PDE (1.1). The spatial domain is \([-60,60]\) with \(2^{12}\) gridpoints, and the time interval is [0, 25]. In (a), we used \(dt=6.25\cdot 10^{-4}\) and in (b) \(dt=0.0025\), i.e. 4 times larger. The parameters were set at \(D_u=0.1\), \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(D_v=1\), \(\varepsilon =0.52\), \(c_1=0.15\) and \(c_2=3.9\). In both cases, the initial condition is equal to the one in the main text (Color figure online)

This figure shows the period of the dynamics of SPDE (2.10) in two different regimes. In (a), with \(c_1=0.2\), we are in the regime of travelling waves with quasi-periodic movement as shown in Fig. 13, while in (b), with \(c_1=0.4\), we are in the regime of oscillations in time as shown in Fig. 16. In both figures, the period is estimated by computing the average in the spatial direction and subsequently computing the distances between the maxima in time
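The period estimate described in this caption (spatial average, then distances between successive maxima in time) can be sketched as follows. This is a minimal sketch, assuming the solution is stored as `U[time_index, space_index]` on a uniform time grid; using `scipy.signal.find_peaks` with a minimum separation and a prominence threshold to suppress spurious noise-induced maxima is our own choice, and the thresholds, the function name and the synthetic signal are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def estimate_period(U, t, min_separation=None, prominence=None):
    """Estimate the period of space-time data U[time_index, space_index].

    The data are averaged over space; the period is the mean distance in time
    between successive maxima of this average. Returns NaN if fewer than two
    maxima are found.
    """
    signal = U.mean(axis=1)                      # average in the spatial direction
    dt = t[1] - t[0]
    distance = None if min_separation is None else max(1, int(round(min_separation / dt)))
    peaks, _ = find_peaks(signal, distance=distance, prominence=prominence)
    if len(peaks) < 2:
        return np.nan
    return float(np.mean(np.diff(t[peaks])))     # mean distance between maxima


# Synthetic, illustrative data: a spatially uniform oscillation with period 8.14 plus noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 200.0, 20001)
U = (1.0 + np.sin(2 * np.pi * t / 8.14))[:, None] + 0.02 * rng.normal(size=(t.size, 50))
T_est = estimate_period(U, t, min_separation=2.0, prominence=0.5)
```

Without the separation and prominence thresholds, small noise-induced wiggles near each crest would be counted as extra maxima and bias the estimate downwards.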

A.2 Pattern Recognition

For Figs. 8 and 9, MATLAB’s regionprops algorithm is used to identify the activation events automatically. This proceeds in the following steps. First, we smooth the data using MATLAB’s Gaussian filter; without smoothing, the algorithm detects multiple objects in a single event. Next, we transform the data to binary values by comparing them with a threshold: we say that u is activated when it exceeds five times its stationary value \(u^*\). Then, the regionprops algorithm is applied with the option BoundingBox.
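The detection steps can be mimicked in Python, with `scipy.ndimage.label` and `scipy.ndimage.find_objects` standing in for MATLAB's regionprops with the BoundingBox option; this is a swapped-in technique, not the code used for Figs. 8 and 9. The smoothing width `smoothing_sigma` (in grid points) and the function name `detect_events` are assumptions, since the text does not state the filter width; the threshold of five times \(u^*\) follows the text. The labelling is not periodic, so an event crossing the spatial boundary would be counted twice.

```python
import numpy as np
from scipy import ndimage


def detect_events(U, x, t, u_star, threshold_factor=5.0, smoothing_sigma=2.0):
    """Detect activation events in U[time_index, space_index].

    Steps: Gaussian smoothing, thresholding at threshold_factor * u_star,
    connected-component labelling, and one bounding box per component.
    Returns a list of dicts with width (space), length (time) and maximum.
    """
    # Smooth so that one event is not split into several objects;
    # time axis: 'nearest' boundary, space axis: 'wrap' (periodic domain).
    smooth = ndimage.gaussian_filter(U, sigma=smoothing_sigma, mode=("nearest", "wrap"))
    mask = smooth > threshold_factor * u_star        # binary "activated" image
    labels, _n = ndimage.label(mask)                 # connected components
    dx, dt = x[1] - x[0], t[1] - t[0]
    events = []
    for k, box in enumerate(ndimage.find_objects(labels), start=1):
        t_slice, x_slice = box                       # bounding box of event k
        inside = labels[box] == k
        events.append({
            "width": (x_slice.stop - x_slice.start) * dx,
            "length": (t_slice.stop - t_slice.start) * dt,
            "maximum": float(U[box][inside].max()),  # maximum of the unsmoothed data
        })
    return events


# Synthetic, illustrative data: two isolated bumps on a background of about u*.
x = np.linspace(-10.0, 10.0, 201)
t = np.linspace(0.0, 50.0, 501)
X, TT = np.meshgrid(x, t)  # shape (len(t), len(x))
U = (0.08 + np.exp(-((X + 5.0) ** 2 + ((TT - 10.0) / 2.0) ** 2) / 2.0)
     + np.exp(-((X - 4.0) ** 2 + ((TT - 30.0) / 2.0) ** 2) / 2.0))
events = detect_events(U, x, t, u_star=0.0833)
```

Averaging the number of events and the per-event statistics over many realisations then gives quantities of the kind plotted in Fig. 8b.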

Care is needed in choosing the initial condition. When we start with the spatially homogeneous initial condition \((u^*,v^*)\), there is a lot of activation at the start of the simulation, see Fig. 7c, and it is not possible to define and detect individual activation events. Therefore, we start not at the fixed point \((u^*,v^*)\), but at \((u^*,4v^*)\) plus a small perturbation. As a result, activation events only appear once v has decayed enough for excitations to happen. As the decay is stochastic, and therefore not spatially homogeneous, the activation events appear more spread out, which makes it possible to determine individual events.

Appendix B: Analysis

The deterministic PDE (1.1) has two components and ten parameters, which makes it difficult to analyse mathematically in a direct way, even for the simplest of the localised structures simulated in the main text. However, these simulations do reveal that the profiles of the two components of the PDE evolve on different spatial scales: the spatial changes in the slow v-component are more gradual than those of the fast u-component, see, for instance, Figs. 4a, 12a, and 15a. Furthermore, these simulations also reveal that a large part of the spatial dynamics is concentrated near the lower and upper branches of the u-nullcline in the phase plane, with the u-profile making fast jumps in between. To amplify (and exploit) this scale separation, we set \(D_v=1\) (as in Bhattacharya et al. 2020) and \(D_u={{\tilde{\varepsilon }}}^2\), where \({{\tilde{\varepsilon }}}\) is a small parameter that can be taken arbitrarily small. Furthermore, we assume that our spatial domain is no longer periodic but instead unbounded (Footnote 6). This transforms the PDE model (1.1) into

The small parameter \({{\tilde{\varepsilon }}}\) allows us to use geometric singular perturbation theory (GSPT) (Fenichel 1979; Hek 2010; Jones 1995; Kaper 1999) to construct solutions that, to leading order in the small parameter, approximate the localised structures of the main text.

In GSPT, the observation that the dynamics is concentrated near the branches of the u-nullcline is taken to the extreme: we construct solutions whose slow dynamics in the singular limit, i.e. in the limit \({{\tilde{\varepsilon }}}\to 0\), is confined to this nullcline, which we will refer to as the slow or critical manifold. In contrast, during the fast jump in u, the slow component does not change in this singular limit. These assumptions simplify the computations and allow us to compute parts of the solution in the singular limit. The main theorems of GSPT (e.g. Fenichel 1979; Hek 2010; Jones 1995; Kaper 1999), sometimes called Fenichel's theorems 1–3, allow us to conclude that if the small parameter is small enough (Footnote 7), then there is indeed a true solution of the PDE close to the one constructed in the singular limit.

Here, we only show the construction of the standing waves we found in §3.1. That is, we are interested in the fixed points of the PDE dynamics

Upon defining \({{\tilde{\varepsilon }}} u'=p\) and \(v'=q\), where \('\) denotes the derivative with respect to x, we can write this equation as a system of four ODEs:

Taking the scale separation to the extreme, i.e. setting \({{\tilde{\varepsilon }}}=0\), significantly simplifies the equation:

We refer to this set of equations as the slow system. It should be understood in the following sense: on a large spatial scale, the dynamics of (v, q) is approximated by lines 3 and 4 of the ODE above, and this approximation is valid in the region of the phase plane defined by the algebraic equations in lines 1 and 2. We refer to the solution set of these algebraic equations as the slow or critical manifold. Writing the critical manifold explicitly as a function u(v) requires the roots of a third-order polynomial in u, which can be found exactly. However, this is not practical, since the critical manifold is not the graph of a single function u(v): it has three branches, the upper, middle and lower branch. We denote the upper branch by \(u_+(v)\) and the lower branch by \(u_-(v)\). Hence, system (B.4) now becomes

We refer to this equation as the reduced slow system.

When we are interested in the dynamics of u instead of v, we must zoom in to a smaller length scale. Therefore, we define \({{\tilde{\varepsilon }}}\xi =x\) and use \(\dot{}\) to denote the derivative with respect to \(\xi \). System (B.3) now becomes

This system is called the fast system. For \({{\tilde{\varepsilon }}}>0\) it is still equivalent to (B.3), but when we set \({{\tilde{\varepsilon }}}=0\), this equivalence is lost and the system reduces to

This shows that in the fast limit, v is constant. Denoting this unknown constant by \({\bar{v}}\), the system reduces to

This system is known as the reduced fast system.

How can we use both reduced systems to understand the dynamics of Fig. 21b? We observe slow dynamics on the upper and lower branch of the critical manifold and a fast jump in between. The reduced fast system describes the fast jump between the upper and lower branch of the critical manifold. Therefore, a standing wave exists when this system has a heteroclinic orbit between the upper and lower branch. The reduced fast system (B.8) is a Hamiltonian system with Hamiltonian

In both panels, the red dots are a solution of (B.2), found with MATLAB’s bvp4c solver using an initial guess taken from a PDE simulation; the small parameter \({{\tilde{\varepsilon }}}^2\) was set to \(10^{-4}\). Note that this value corresponds to \(D_u=0.01\), a factor of ten smaller than in the main text. In (a), we compare the fast dynamics, i.e. the jump for variables u and p, with the predicted Hamiltonian (B.9), the solid blue line. In (b), the purple line is given by \({\bar{v}}\), the value of the jump as predicted by (B.10), while the green and blue curves denote the nullclines. The parameters are \(a_1=0.167\), \(a_2=16.67\), \(a_3=167\), \(a_4=1.44\), \(a_5=1.47\), \(\varepsilon =0.52, \) \(c_1=0.1\) and \(c_2=3.9\) (Color figure online)

Hence, a heteroclinic orbit exists when

This algebraic equation cannot be solved in closed form, but solving it numerically is straightforward. Note that \({\bar{v}}\) only depends on the parameters \(a_1,\ldots ,a_5\) and not on the parameters of the equation for v. For this value of \({\bar{v}}\), the curve predicted by the Hamiltonian coincides with the fast dynamics, as shown in Fig. 21a. Furthermore, Fig. 21b shows that \(\bar{v}\) is a good approximation of the location of the jump for \({{\tilde{\varepsilon }}}=10^{-2}\).
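To illustrate the kind of numerical problem involved, suppose the reduced fast system has the form \(\ddot u = -f(u,{\bar{v}})\), with f the u-kinetics. Then \(H = \tfrac12 p^2 + F(u,{\bar{v}})\) with \(\partial_u F = f\) is conserved, and a heteroclinic orbit between \(u_-({\bar{v}})\) and \(u_+({\bar{v}})\) requires equal energy at both ends, i.e. the equal-area condition \(\int_{u_-({\bar{v}})}^{u_+({\bar{v}})} f(u,{\bar{v}})\,du = 0\). Since the kinetics of (1.1) are not reproduced in this appendix, the sketch below uses a hypothetical toy cubic \(f(u,v) = u(u-a)(1-u) - v\), whose critical manifold likewise has three branches, and solves the equal-area condition for \({\bar{v}}\) by bisection between the two folds. The toy kinetics, the parameter `a`, and all function names are our own assumptions.

```python
import numpy as np


def g(u, a):
    """Hypothetical toy kinetics u(u - a)(1 - u); NOT the u-equation of (1.1)."""
    return u * (u - a) * (1.0 - u)


def _coeffs(v, a):
    # f(u, v) = g(u) - v = -u^3 + (1 + a) u^2 - a u - v, highest power first
    return [-1.0, 1.0 + a, -a, -v]


def outer_branches(v, a):
    """Lower and upper roots u_-(v), u_+(v) of f(., v) = 0."""
    roots = np.roots(_coeffs(v, a))
    real = np.sort(roots[np.abs(roots.imag) < 1e-9].real)
    if real.size != 3:
        raise ValueError("v lies outside the folds: fewer than three branches")
    return real[0], real[-1]


def signed_area(v, a):
    """Integral of f(u, v) du from u_-(v) to u_+(v), via the exact antiderivative."""
    u_lo, u_hi = outer_branches(v, a)
    antideriv = np.polyint(_coeffs(v, a))
    return np.polyval(antideriv, u_hi) - np.polyval(antideriv, u_lo)


def maxwell_vbar(a, tol=1e-12, margin=1e-6):
    """Solve signed_area(v) = 0 for v by bisection between the two folds."""
    folds = np.roots([-3.0, 2.0 * (1.0 + a), -a]).real  # zeros of dg/du
    g_min, g_max = sorted(g(folds, a))
    lo, hi = g_min + margin, g_max - margin
    # signed_area is positive near the lower fold and negative near the upper one
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if signed_area(mid, a) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For this cubic, point symmetry about the inflection point \(u=(1+a)/3\) gives \({\bar{v}} = g((1+a)/3)\) exactly (for example 0.048 for \(a=0.2\)), which provides a check on the numerics; for the actual kinetics of (1.1) no such closed form is available and a root finder of this type is needed.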

Now, we have all the ingredients to construct the standing wave. We start at \(x=-\infty \) in the background state of the reduced slow system on the lower branch. We follow the dynamics of the reduced slow system (B.5) until we reach the value \({\bar{v}}\), where we jump to the upper branch following the reduced fast system (B.8). We then follow the slow (v, q)-dynamics on the upper branch until we return to the value \({\bar{v}}\), but with the opposite sign of the derivative, i.e. we trace a curve from \(({\bar{v}},q({\bar{v}}))\) to \(({\bar{v}},-q({\bar{v}}))\) in the reduced slow system. Then, we jump down again to the lower branch, which we follow back to the background state. Here we implicitly assume that the maximum value of v remains below the fold of the critical manifold (which is not the case for travelling waves, see Fig. 12).

This example shows how GSPT can be used both to construct and to understand localised solutions of (1.1). Questions about the existence and bifurcations of localised solutions of (1.1) can thus be reduced to understanding relatively simple ODEs and the connections between them.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hamster, C., van Heijster, P. Waves in a Stochastic Cell Motility Model. Bull Math Biol 85, 70 (2023). https://doi.org/10.1007/s11538-023-01164-1
