Abstract

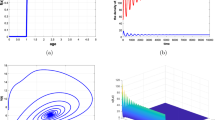

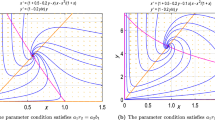

We derive a discrete predator–prey model from first principles, assuming that the prey population grows to carrying capacity in the absence of predators and that the predator population requires prey in order to grow. The proposed derivation method exploits a technique known from economics that describes the relationship between continuous and discrete compounding of bonds. We extend standard phase plane analysis by introducing the next iterate root-curve associated with the nontrivial prey nullcline. Using this curve in combination with the nullclines and direction field, we show that the prey-only equilibrium is globally asymptotically stable if the prey consumption-energy rate of the predator is below a certain threshold that implies that the maximal rate of change of the predator is negative. We also use a Lyapunov function to provide an alternative proof. If the prey consumption-energy rate is above this threshold, and hence the maximal rate of change of the predator is positive, the discrete phase plane method introduced here is used to show that the coexistence equilibrium exists and solutions oscillate around it. We provide the parameter values for which the coexistence equilibrium exists and determine when it is locally asymptotically stable and when it destabilizes by means of a supercritical Neimark–Sacker bifurcation. We bound the amplitude of the closed invariant curves born from the Neimark–Sacker bifurcation as a function of the model parameters.
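For orientation, the following Python sketch iterates the map analysed in the appendix, taking \(X_{t+1}=\frac{(1+r)X_t}{1+\frac{r}{K}X_t+\alpha Y_t}\) and \(Y_{t+1}=\frac{1+\gamma X_t}{1+d}Y_t\) from the forms of f and g used in the proofs. The helper names `step` and `orbit` and all parameter values are illustrative assumptions, not taken from the paper. With \(\gamma K<d\) the orbit should approach the prey-only equilibrium \((K,0)\); with \(\gamma K>1+2d\) the coexistence equilibrium is unstable, the predator persists, and the orbit keeps oscillating.

```python
def step(x, y, r, K, alpha, gamma, d):
    """One iterate of the discrete predator-prey map (f, g)."""
    x_next = (1 + r) * x / (1 + r / K * x + alpha * y)
    y_next = (1 + gamma * x) / (1 + d) * y
    return x_next, y_next


def orbit(x0, y0, n, **p):
    """Return the first n + 1 iterates starting from (x0, y0)."""
    pts = [(x0, y0)]
    for _ in range(n):
        pts.append(step(*pts[-1], **p))
    return pts


# Illustrative (assumed) parameters shared by both runs.
base = dict(r=1.0, K=1.0, alpha=1.0)

# gamma*K < d: the predator cannot grow; expect convergence to (K, 0).
extinct = orbit(0.3, 0.5, 500, gamma=0.5, d=1.0, **base)
print("gamma*K < d, final state:", extinct[-1])

# gamma*K > 1 + 2d: E* unstable, predator persists and Y keeps oscillating.
persist = orbit(0.3, 0.5, 2000, gamma=2.5, d=0.5, **base)
tail = [y for _, y in persist[1000:]]
print("gamma*K > 1 + 2d, range of Y on the tail:", min(tail), max(tail))
```

The two runs differ only in \(\gamma\) and d, which is enough to move between the regimes described above.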

Notes

1. The expression for \({\mathcal {L}}(X,Y)\) can be obtained with the Mathematica command T = Together[(1+γ*X)/(1+d)*Y - r/(α*K)*(K - (1+r)/(1+r/K*X+α*Y)*X)]. The coefficients \(c_i\), \(i=0,1,2\), can be obtained with the command Simplify[Coefficient[Numerator[T], Y, i]].

2. The expression for \({\mathcal {L}}(X,\ell (X))\) can be obtained from T (see footnote 1) with the Mathematica command Simplify[T /. {Y -> r/(α*K)*(K - X)}].
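The Mathematica commands in the notes above can be mirrored with SymPy. This is a sketch that assumes, as footnotes 1 and 2 indicate, that \({\mathcal {L}}(X,Y)=\frac{1+\gamma X}{1+d}Y-\ell (f(X,Y))\) with \(\ell (X)=\frac{r}{\alpha K}(K-X)\). Instead of `Together`, it multiplies by the positive factor \(\alpha (1+d)(K+rX+\alpha KY)\) explicitly; this normalization is our choice, so the coefficients may differ from the paper's \(c_i\) by a positive factor, which does not change their signs.

```python
import sympy as sp

# Model variables and (positive) parameters.
X, Y, r, K, alpha, gamma, d = sp.symbols("X Y r K alpha gamma d", positive=True)

f = (1 + r) * X / (1 + r / K * X + alpha * Y)   # prey map f(X, Y)
ell = r / (alpha * K) * (K - X)                  # nontrivial prey nullcline Y = ell(X)

# L(X, Y) = Y_{t+1} - ell(X_{t+1}), mirroring the expression T of footnote 1.
L = (1 + gamma * X) / (1 + d) * Y - ell.subs(X, f)

# Clear the positive denominator so that the numerator is a quadratic in Y.
den = alpha * (1 + d) * (K + r * X + alpha * K * Y)
num = sp.expand(sp.cancel(L * den))
c2, c1, c0 = sp.Poly(num, Y).all_coeffs()       # descending powers of Y

print("c2 =", sp.factor(c2))
print("c0 =", sp.factor(c0))
print("L(X, ell(X)) =", sp.factor(sp.cancel(L.subs(Y, ell))))
```

`sp.cancel` is used rather than `sp.together` because it cancels the common factor \(K+rX+\alpha KY\) and returns a polynomial in canonical form.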

References

Beddington J, Free C, Lawton J (1975) Dynamic complexity in predator–prey models framed in difference equations. Nature 255:58–60

Beddington J, Free C, Lawton J (1978) Characteristics of successful natural enemies in models of biological control of insect pests. Nature 273:513–519

Beverton RJH, Holt SJ (1957) On the dynamics of exploited fish populations, vol. 19 of Fishery Investigations (Great Britain, Ministry of Agriculture, Fisheries, and Food), H. M. Stationery Off., London

Bohner M, Chieochan R (2013) The Beverton–Holt \(q\)-difference equation. J Biol Dyn 7(1):86–95

Bohner M, Stević S, Warth H (2007) The Beverton–Holt difference equation. Discrete Dyn Differ Equ, pp 189–193

Bohner M, Streipert SH (2016) Optimal harvesting policy for the Beverton–Holt model. Math Biosci Eng 13(4):673–695

Bohner M, Streipert SH (2017) The second Cushing–Henson conjecture for the Beverton–Holt \(q\)-difference equation. Opuscula Math 37(6):795–819

Bohner M, Warth H (2007) The Beverton–Holt dynamic equation. Appl Anal 86(8):1007–1015

Brauer F, Castillo-Chavez C (2011) Mathematical models in population biology and epidemiology. Texts in applied mathematics. Springer, New York

Chen X-W, Fu X-L, Jing Z-J (2013) Complex dynamics in a discrete-time predator-prey system without Allee effect. Acta Math Appl Sin 29:355–376

Chen X-W, Fu X-L, Jing Z-J (2013) Dynamics in a discrete-time predator-prey system with Allee effect. Acta Math Appl Sin 29:143–164

Cheng KS (1981) Uniqueness of a limit cycle for a predator-prey system. SIAM J Math Anal 12(4):541–548

Choudhury S (1992) On bifurcations and chaos in predator–prey models with delay. Chaos Solitons Fract 2:393–409

Dawes JHP, Souza MO (2013) A derivation of Holling’s type I, II and III functional responses in predator–prey systems. J Theoret Biol 327:11–22

Din Q (2013) Dynamics of a discrete Lotka–Volterra model. Adv Differ Equ 2013, Article 95

Edelstein-Keshet L (1988) Mathematical models in biology, classics in applied mathematics. Society for Industrial and Applied Mathematics, Philadelphia

Fan M, Agarwal S (2002) Periodic solutions of nonautonomous discrete predator–prey system of Lotka–Volterra type. Appl Anal 81:801–812

Fazly M, Hesaaraki M (2008a) Periodic solutions for a semi-ratio-dependent predator-prey dynamical system with a class of functional responses on time scales. Discrete Contin Dyn Syst Ser B 9(2):267–279

Fazly M, Hesaaraki M (2008b) Periodic solutions for predator–prey systems with Beddington–Deangelis functional response on time scales. Nonlinear Anal Real World Appl 9(3):1224–1235

Freedman HI, So JW-H (1989) Persistence in discrete semidynamical systems. SIAM J Math Anal 20(4):930–938

Grove EA, Ladas G (2004) Periodicities in nonlinear difference equations. Advances in discrete mathematics and applications. CRC Press, Boca Raton, FL

Guckenheimer J, Holmes P (1983) Nonlinear oscillations, dynamical systems, and bifurcations of vector fields, vol 42 of Appl. Math. Sci., 2 edn. Springer, New York

He Z, Li B (2014) Complex dynamic behavior of a discrete-time predator–prey system of Holling-III type. Adv Differ Equ 2014:180

Hirsch MW, Smith H (2005) Monotone maps: a review. J Differ Equ Appl 11(4–5):379–398

Hutchinson G (1978) An introduction to population ecology. Yale University Press, New Haven

Iooss G, Joseph D (1980) Elementary stability and bifurcation theory. Undergraduate texts in mathematics. Springer, New York

Kangalgil F, Isik S (2020) Controlling chaos and Neimark–Sacker bifurcation in a discrete-time predator–prey system. Hacet J Math Stat 49(5):1761–1776

Kelley W, Peterson A (2001) Difference equations: an introduction with applications. Elsevier Science, Cambridge

Khan AQ, Ahmad I, Alayachi HS, Noorani MSM, Khaliq A (2020) Discrete-time predator–prey model with flip bifurcation and chaos control. Math Biosci Eng 17(5):5944–5960

Kingsland S (1995) Modeling nature. Science and its conceptual foundations series. University of Chicago Press, Chicago

Kot M (2001) Elements of mathematical ecology. Cambridge University Press, Cambridge

Liu P, Elaydi SN (2001) Discrete competitive and cooperative models of Lotka–Volterra type. J Comput Anal Appl 3(1):53–73

Lotka AJ (1920) Analytical note on certain rhythmic relations in organic systems. Proc Natl Acad Sci USA 6(7):410–415

MATLAB (2020) version R2020b. The MathWorks Inc., Natick, MA

May R (1974) Biological populations with nonoverlapping generations: stable points, stable cycles, and chaos. Science 186(4164):645–647

Mickens RE (1989) Exact solutions to a finite-difference model of a nonlinear reaction–advection equation: implications for numerical analysis. Numer Methods Partial Differ Equ 5(4):313–325

Mickens RE (1994a) Genesis of elementary numerical instabilities in finite-difference models of ordinary differential equations. In: Proceedings of dynamic systems and applications, vol 1 (Atlanta, GA, 1993), Dynamic, Atlanta, GA, pp 251–257

Mickens RE (1994) Nonstandard finite difference models of differential equations. World Scientific, River Edge, NJ

Mickens RE, Smith A (1990) Finite-difference models of ordinary differential equations: influence of denominator functions. J Frankl Inst 327(1):143–149

Ogata K (1995) Discrete-time control systems. Prentice Hall, Upper Saddle River

Rana SMS (2019) Bifurcations and chaos control in a discrete-time predator–prey system of Leslie type. J Appl Anal Comput 9(1):31–44

Rosenzweig M (1971) The paradox of enrichment. Science 171(3969):385–387

Rozikov UA, Shoyimardonov SK (2020) Leslie’s prey–predator model in discrete time. Int J Biomath 13(6):2050053

Smith HL, Thieme HR (2013) Persistence and global stability for a class of discrete time structured population models. Discrete Contin Dyn Syst 33:4627

Sugie J, Saito Y (2012) Uniqueness of limit cycles in a Rosenzweig–MacArthur model with prey immigration. SIAM J Appl Math 72(1):299–316

Verhulst P-F (1838) Notice sur la loi que la population suit dans son accroissement. Corr Math Phy 10:113–121

Volterra V (1926) Variazioni e fluttuazioni del numero d’individui in specie animali conviventi. Mem Acad Lincei Roma 2:31–113

Wang W, Jiao Y, Chen X (2013) Asymmetrical impact of Allee effect on a discrete-time predator–prey system. J Appl Math 2013:1–10

Wiggins S (2003) Introduction to applied nonlinear dynamical systems and chaos. Texts in applied mathematics. Springer, New York

Wolfram Research Inc. (2020) Mathematica 12.2

Wolkowicz GSK (1988) Bifurcation analysis of a predator–prey system involving group defence. SIAM J Appl Math 48(3):592–606

Zhang W (2006) Discrete dynamical systems, bifurcations and chaos in economics, mathematics in science and engineering. Elsevier, Amsterdam

Zhang W, Bi P, Zhu D (2008) Periodicity in a ratio-dependent predator–prey system with stage-structured predator on time scales. Nonlinear Anal Real World Appl 9(2):344–353

Zhao M, Xuan Z, Li C (2016) Dynamics of a discrete-time predator–prey system. Adv Differ Equ 191:1–8

Acknowledgements

The research of Gail S. K. Wolkowicz was partially supported by a Natural Sciences and Engineering Research Council of Canada (NSERC) Discovery grant with accelerator supplement.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this appendix, we provide the proofs of our results.

Proof of Lemma 2

By Lemma 1, \(X_t, Y_t \ge 0\) for nonnegative initial conditions. We first show in (i) that \(X_t\) is bounded and then, in (ii), that \(Y_t\) is bounded for all \(t\ge 0\).

(i) Since \(f\) is increasing in the first variable, we have, for \(X_t\le K\),

$$\begin{aligned} X_{t+1} = f(X_t,Y_t)\le f(K,Y_t)= \frac{(1+r)K}{1+r + \alpha Y_t}\le K. \end{aligned}$$

By (14), for \(X_t>K\),

$$\begin{aligned} X_{t+1} -X_t = X_{t+1}\left[ \frac{r}{1+r}\left( 1-\frac{X_t}{K}\right) -\frac{\alpha }{1+r} Y_t\right] <0. \end{aligned}\tag{A1}$$

Hence, \(X_t\) decreases while \(X_t>K\). Suppose \(X_t\ge K\) for all \(t\ge 0\). Then \(\{X_t\}\) is monotone decreasing and bounded below by K, and therefore converges to some \({\bar{X}}\ge K\). Suppose \({\bar{X}}>K\). Then

$$\begin{aligned} {\bar{X}}= & {} \lim _{t\rightarrow \infty }X_{t+1}=\lim _{t\rightarrow \infty }\frac{(1+r)X_t}{1+\frac{r}{K}X_t+\alpha Y_t}\\\le & {} \lim _{t\rightarrow \infty }\frac{(1+r)X_t}{1+\frac{r}{K}X_t}=\frac{(1+r){\bar{X}}}{1+\frac{r}{K}{\bar{X}}}<\frac{(1+r){\bar{X}}}{1+r}={\bar{X}}, \end{aligned}$$

which is a contradiction. Thus, if \(X_t\ge K\) for all \(t\ge 0\), then \(X_t\) converges to K. Together with the first inequality, this implies that \(X_t\le \max \{K,X_0\}\) for all \(t\ge 0\). This confirms the additional statements in Lemma 2 regarding the X-component of the solution.

(ii) Next we show that \(Y_t\) is bounded. We consider two cases: (a) \(X_t>K\) for all \(t\ge 0\), and (b) there exists \(t\ge 0\) such that \(X_t\le K\).

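Case (a): We prove that \(Y_t\) is bounded by contradiction. By assumption, \(X_t> K\) for all \(t\ge 0\), and by (i), \(\{X_t\}\) decreases monotonically to \({\bar{X}}=K\). Suppose \(Y_t\) is unbounded. Then there exist a subsequence \(\{Y_{t_i}\}\) and an index j such that \(Y_{t_i}>1\) for all \(i\ge j\). Since \(X_{t_i}>K\), this implies that, for the subsequence \(\{X_{t_i+1}\}\),

$$\begin{aligned} K< X_{t_i+1}=\frac{(1+r)X_{t_i}}{1+\frac{r}{K}X_{t_i}+\alpha Y_{t_i}}<\frac{(1+r)X_{t_i}}{1+r+\alpha }\ \longrightarrow \ \frac{(1+r)K}{1+r+\alpha }<K \quad (i\rightarrow \infty ), \end{aligned}$$

resulting in a contradiction. Thus, \(Y_t\) is bounded for \(t\ge 0\).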

Case (b): Without loss of generality, let \(j\ge 0\) denote the first iterate such that \(X_j\le K\). Then, by the previous argument, \(X_t\le K\) for all \(t\ge j\) and

for all \(t> j\). Consider the recurrence

with initial condition \({{\hat{Y}}}_j=Y_j\) and \({{\hat{Y}}}_{j+1}=Y_{j+1}\). We prove by induction that \(Y_{t+1}\le {{\hat{Y}}}_{t+1}\) for all \(t>j\). Since \(\frac{z}{1+r+\alpha z}\) is increasing in z, we have for \(Y_{T}\le {{\hat{Y}}}_{T}\) and \(Y_{T-1} \le {{\hat{Y}}}_{T-1}\),

completing the induction argument. To show that \(Y_t\) is bounded, it therefore suffices to show that \({\hat{Y}}_t\) is bounded, that is, there exists \(M>0\) such that for \({{\hat{Y}}}_j, {{\hat{Y}}}_{j+1}\le M\), \({{\hat{Y}}}_t \le M\), for all \(t>j\). By (A2), \({\hat{Y}}_{t+1}\) increases in \({\hat{Y}}_t\) and \({\hat{Y}}_{t-1}\), and we have

It therefore suffices to show the existence of \(M>0\) such that

Solving this inequality for \(M>0\) yields

Hence, for \({\bar{Y}}=\max _{i=0,1,\dots ,j, j+1} Y_i\), there exists \(M>\max \left\{ {\bar{Y}},\frac{A(1+d)-(1+r)d}{d\alpha }\right\} \) such that \(Y_t\le M\) for all \(t\ge 0\). Thus, \(Y_t\) is bounded for all \(t\ge 0\) with a bound dependent on the initial conditions \(X_0,Y_0\). This completes the proof. \(\square \)
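The bound in part (i) is easy to observe numerically. The sketch below iterates the map, with f and g as written in the proofs above, from a prey density well above K, and checks that \(X_t\le \max \{K,X_0\}\) and that \(X_t\) strictly decreases while \(X_t>K\), as in (A1). The parameter values and the helper `step` are illustrative assumptions.

```python
def step(x, y, r, K, alpha, gamma, d):
    """One iterate of (f, g), with f and g as written in the proofs above."""
    x_next = (1 + r) * x / (1 + r / K * x + alpha * y)
    y_next = (1 + gamma * x) / (1 + d) * y
    return x_next, y_next


# Illustrative parameter values (assumptions, not taken from the paper).
p = dict(r=1.5, K=2.0, alpha=0.4, gamma=1.0, d=0.6)

x, y = 7.0, 0.2                      # prey starts above the carrying capacity K
xs, ys = [x], [y]
for _ in range(300):
    x, y = step(x, y, **p)
    xs.append(x)
    ys.append(y)

bound = max(p["K"], xs[0])
print("max X_t:", max(xs), "<= max{K, X_0} =", bound)
print("max Y_t over the run:", max(ys))
```

A single orbit is of course only an illustration of the lemma, not a check of its proof.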

Proof of Lemma 3

(a) Since \(f(0,Y_t)=0\), \(X_t=0\) for all \(t \ge 0\) if \(X_0=0\). In that case, \(Y_{t+1}=\frac{Y_t}{1+d}\), so that \(Y_t=\frac{Y_0}{(1+d)^t}\), which converges to zero since \(d>0\). (b) If \(Y_0=0\), then \(Y_{t}=0\) for all \(t\ge 0\). In the absence of a predator, \(X_t\) satisfies the Beverton–Holt recurrence \(X_{t+1}=\frac{(1+r)X_t}{1+\frac{r}{K}X_t}\) and hence, for \(X_0>0\), converges to K. \(\square \)
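For part (b), the Beverton–Holt recurrence has a standard closed form: substituting \(u_t=1/X_t\) gives the linear recurrence \(u_{t+1}=\frac{u_t}{1+r}+\frac{r}{K(1+r)}\), so that \(\frac{1}{X_t}=\frac{1}{K}+\left( \frac{1}{X_0}-\frac{1}{K}\right) (1+r)^{-t}\), which tends to \(\frac{1}{K}\) for \(X_0>0\). The sketch below compares this closed form with direct iteration and also checks \(Y_t=Y_0/(1+d)^t\) from part (a). Function names and parameter values are illustrative assumptions.

```python
import math


def beverton_holt(x0, r, K, n):
    """Iterate X_{t+1} = (1+r) X_t / (1 + (r/K) X_t) for n steps."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append((1 + r) * x / (1 + r / K * x))
    return xs


def beverton_holt_closed_form(x0, r, K, t):
    """Closed form 1/X_t = 1/K + (1/X_0 - 1/K) (1+r)^(-t)."""
    return 1.0 / (1.0 / K + (1.0 / x0 - 1.0 / K) * (1 + r) ** (-t))


r, K, x0 = 0.8, 10.0, 0.5                 # illustrative values
xs = beverton_holt(x0, r, K, 40)
print("X_40 =", xs[-1], "(approaches K =", K, ")")

# Predator-only case X_0 = 0: Y_{t+1} = Y_t / (1+d), hence Y_t = Y_0 / (1+d)^t.
d, y0 = 0.3, 2.0
ys = [y0]
for _ in range(20):
    ys.append(ys[-1] / (1 + d))
print("Y_20 =", ys[20])
```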

Proof of Theorem 4

The Jacobian of system (13) at \(({\hat{X}},{\hat{Y}})\) is given in (18).

(a) The Jacobian at \(E_{0}\) is

$$\begin{aligned} J\mid _{(0,0)} = \begin{bmatrix} (1+r) &{}0\\ 0 &{} \frac{1}{1+d} \end{bmatrix}, \end{aligned}\tag{A4}$$

with eigenvalues \(\lambda _1= 1+r\) and \(\lambda _2=\frac{1}{1+d}\). Since \(\lambda _1>1\), the trivial equilibrium is unstable.

(b) The Jacobian at \(E_K\) is

$$\begin{aligned} J\mid _{(K,0)}=\begin{bmatrix} \frac{(1+r)}{(1+r)^2} &{} -\frac{(1+r)\alpha K}{(1+r)^2}\\ 0 &{} \frac{1+\gamma K}{1+d} \end{bmatrix} = \begin{bmatrix} \frac{1}{1+r} &{} -\frac{\alpha K}{1+r}\\[2mm] 0 &{} \frac{1+\gamma K}{1+d} \end{bmatrix}. \end{aligned}\tag{A5}$$

The eigenvalues of \(J\mid _{(K,0)}\) are \(\lambda _1 = \frac{1}{1+r}\) and \(\lambda _2=\frac{1+\gamma K}{1+d}\). Hence, \(E_K\) is locally asymptotically stable if \(\gamma K < d\) and unstable if \(\gamma K>d\).

(c) At \(E^*=(X^*,Y^*)\), where \(X^*=\frac{d}{\gamma }\), \(Y^*=\frac{\beta }{\alpha \gamma }\), and \(\beta = \frac{r}{K}(\gamma K- d)>0\), the Jacobian is

$$\begin{aligned} J\mid _{(X^*,Y^*)}=\begin{bmatrix} \frac{(1+r)\left( 1+\frac{\beta }{\gamma }\right) }{\left( 1+\frac{r}{K}\frac{d}{\gamma }+\frac{\beta }{\gamma }\right) ^2} &{} \frac{-(1+r)\alpha \frac{d}{\gamma }}{\left( 1+\frac{r}{K}\frac{d}{\gamma }+\frac{\beta }{\gamma }\right) ^2}\\ \frac{\gamma \frac{\beta }{\alpha \gamma }}{1+d} &{} \frac{1+\gamma \frac{d}{\gamma }}{1+d} \end{bmatrix} =\begin{bmatrix} \frac{\left( 1+\frac{\beta }{\gamma }\right) }{(1+r)} &{} \frac{-\alpha d}{\gamma (1+r)}\\ \frac{\beta }{\alpha (1+d)} &{} 1 \end{bmatrix}, \end{aligned}\tag{A6}$$

since \(1+\frac{r}{K}\frac{d}{\gamma }+\frac{\beta }{\gamma }=1+r\), so that the denominators in the first row simplify to \((1+r)^2\). The characteristic equation is

$$\begin{aligned} \lambda ^2 - \lambda \left( 1+\frac{1+\frac{\beta }{\gamma }}{1+r} \right) + \frac{1+\frac{\beta }{\gamma }}{1+r} + \frac{d \beta }{\gamma (1+r)(1+d)}=0. \end{aligned}$$

We apply the Jury stability test (Ogata 1995, p. 185) to the characteristic polynomial

$$\begin{aligned} P(\lambda ) = \lambda ^2 + a_1 \lambda + a_2 \end{aligned}\tag{A7}$$

with

$$\begin{aligned} a_1 = -\left( 1+\frac{1+\frac{\beta }{\gamma }}{1+r} \right) , \quad a_2 = \frac{1+\frac{\beta }{\gamma }}{1+r} + \frac{d \beta }{\gamma (1+r)(1+d)}. \end{aligned}$$

For a second-order polynomial, the Jury conditions are \(|a_2|<1\), \(P(1)>0\), and \(P(-1)>0\). Here \(a_2>0\), \(P(1)=1+a_1+a_2=\frac{d\beta }{\gamma (1+r)(1+d)}>0\), and \(P(-1)=1-a_1+a_2>0\) since \(a_1<0\). Hence, \(E^*\) is locally asymptotically stable if

$$\begin{aligned} \frac{1+\frac{\beta }{\gamma }}{1+r} + \frac{d \beta }{\gamma (1+r)(1+d)}<1. \end{aligned}$$

Rearranging yields the equivalent expression

$$\begin{aligned} (1+d)+ (1+2d)\frac{\beta }{\gamma }<(1+r)(1+d) \quad \Longleftrightarrow \quad \beta <\gamma \frac{r(1+d)}{1+2d}, \end{aligned}\tag{A8}$$

and, recalling \(\beta = \frac{r}{K}( \gamma K -d)\), we have

$$\begin{aligned} \frac{r}{K}(\gamma K - d)<\gamma \frac{r(1+d)}{1+2d} \quad \Longleftrightarrow \quad \gamma <\frac{1+2d}{K}. \end{aligned}$$

Hence, the coexistence equilibrium is locally asymptotically stable if \(\gamma K<1+2d\). If instead \(\gamma K>1+2d\), then, by (A8), \(\frac{\beta }{\gamma }>\frac{r(1+d)}{1+2d}\), that is,

$$\begin{aligned} (1+2d)\frac{\beta }{\gamma }>r(1+d). \end{aligned}\tag{A9}$$

Thus

$$\begin{aligned} a_2=\frac{1+\frac{\beta }{\gamma }}{1+r}+\frac{d\frac{\beta }{\gamma }}{(1+r)(1+d)}=\frac{1+d+(1+2d)\frac{\beta }{\gamma }}{(1+r)(1+d)}{\mathop {>}\limits ^{(A9)}}\frac{1+d+r(1+d)}{(1+r)(1+d)}=1. \end{aligned}$$

We also note that \(\beta =\frac{r}{K}(\gamma K-d)\) implies that \(\frac{\beta }{\gamma }=r\left( 1-\frac{d}{\gamma K}\right) <r\), since the parameters are positive. Then \(0<-a_1=1+\frac{1+\frac{\beta }{\gamma }}{1+r}<2\), so that \(a_1^2<4<4a_2\). Thus \(a_1^2-4a_2<0\), implying that \(P(\lambda )\) has two complex conjugate roots with squared modulus \(|\lambda |^2=\frac{a_1^2+(4a_2-a_1^2)}{4}=a_2>1\), resulting in the instability of \(E^*\). This completes the proof.

\(\square \)
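The eigenvalue computations in (a)–(c) can be cross-checked numerically. The sketch below assumes the maps \(f(X,Y)=\frac{(1+r)X}{1+\frac{r}{K}X+\alpha Y}\) and \(g(X,Y)=\frac{1+\gamma X}{1+d}Y\) as they appear in the proofs, evaluates their analytical Jacobian at \(E_0\), \(E_K\), and \(E^*\), and compares the spectral radius at \(E^*\) with the threshold \(\gamma K=1+2d\). The helper names and parameter values (chosen so that \(1+2d=2\) and \(K=1\)) are illustrative assumptions.

```python
import numpy as np


def jacobian(x, y, r, K, alpha, gamma, d):
    """Analytical Jacobian of (f, g) at (x, y)."""
    D = 1 + r / K * x + alpha * y
    return np.array([
        [(1 + r) * (1 + alpha * y) / D**2, -(1 + r) * alpha * x / D**2],
        [gamma * y / (1 + d), (1 + gamma * x) / (1 + d)],
    ])


def coexistence(r, K, alpha, gamma, d):
    """E* = (d/gamma, beta/(alpha*gamma)), beta = (r/K)(gamma*K - d); needs d < gamma*K."""
    beta = r / K * (gamma * K - d)
    return d / gamma, beta / (alpha * gamma)


def spectral_radius(J):
    """Largest eigenvalue modulus; a value below 1 means local asymptotic stability."""
    return max(abs(np.linalg.eigvals(J)))


base = dict(r=1.0, K=1.0, alpha=1.0, d=0.5)   # assumed values; 1 + 2d = 2
for gamma in (1.5, 1.9, 2.1, 3.0):
    p = dict(base, gamma=gamma)
    rho = spectral_radius(jacobian(*coexistence(**p), **p))
    print(f"gamma*K = {gamma:.1f}: spectral radius at E* = {rho:.4f}")
```

The printed spectral radius should cross 1 between \(\gamma K=1.9\) and \(\gamma K=2.1\), in line with the condition \(\gamma K<1+2d\).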

Proof of Lemma 5

Let \(0<X_0<K\), \({\mathcal {L}}\) be defined as in (22). Using (22) and simplifying the expression, we obtain

with

These calculations can be verified using Mathematica (Wolfram Research Inc. 2020); see footnote 1. The sign of \({\mathcal {L}}(X_t,Y_t)\) is determined by the sign of the numerator in (A10), which is a quadratic function of \(Y_t\). Although the coefficients \(c_i\) depend on \(X_t\), \(c_0<0\) for all \(0<X_t<K\) and \(c_2>0\) for all \(X_t>0\), so the product of the two roots, \(c_0/c_2\), is negative. Hence, there exists a unique \({\widehat{Y}}(X_t)>0\) such that \(\sum _{i=0}^2 c_i Y_t^i<0\) for \(0<Y_t<{\widehat{Y}}(X_t)\) and \(\sum _{i=0}^2 c_i Y_t^i>0\) for \({\widehat{Y}}(X_t)<Y_t\). This completes the first claim. Replacing \(Y_t\) by \(\ell (X_t)\) in (22), we have

and

Thus,

The expression can be verified using Mathematica (Wolfram Research Inc. 2020); see footnote 2. \(\square \)
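For reference, a worked form of the quantities in Lemma 5, derived under the assumption (consistent with footnotes 1 and 2) that \({\mathcal {L}}(X,Y)=\frac{1+\gamma X}{1+d}Y-\ell (f(X,Y))\) with \(\ell (X)=\frac{r}{\alpha K}(K-X)\). The numerator is normalized here by the positive factor \(\alpha (1+d)(K+rX+\alpha KY)\); the paper's (A10)–(A12) may use a different positive normalization, which does not affect any of the signs used in the proofs.

```latex
\begin{aligned}
\mathcal{L}(X,Y) &= \frac{\alpha(1+\gamma X)Y\,(K+rX+\alpha KY) - r(1+d)(K-X+\alpha KY)}{\alpha(1+d)(K+rX+\alpha KY)}
 = \frac{c_2Y^2+c_1Y+c_0}{\alpha(1+d)(K+rX+\alpha KY)},\\
c_2 &= \alpha^2K(1+\gamma X),\qquad
c_1 = \alpha\bigl[(1+\gamma X)(K+rX)-rK(1+d)\bigr],\qquad
c_0 = -r(1+d)(K-X),\\
f\bigl(X,\ell(X)\bigr) &= \frac{(1+r)X}{1+\frac{r}{K}X+\frac{r}{K}(K-X)} = X,\\
\mathcal{L}\bigl(X,\ell(X)\bigr) &= \ell(X)\left(\frac{1+\gamma X}{1+d}-1\right)
 = \frac{r(K-X)(\gamma X-d)}{\alpha K(1+d)}.
\end{aligned}
```

The key simplification is that a point on the prey nullcline is mapped to a point with the same prey density. For \(0<X<K\), the sign of \({\mathcal {L}}(X,\ell (X))\) is therefore that of \(\gamma X-d\): negative for \(X<\frac{d}{\gamma }\) and positive for \(X>\frac{d}{\gamma }\).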

Proof of Theorem 6

Let \(X_0,Y_0>0\). When \(d\ge \gamma K\), the set of nonnegative equilibria in the first quadrant is \({\mathcal {E}}=\{E_0,E_K\}\). Without loss of generality, assume that \(X_0<K\), since for \(X_0>0\), by Lemma 2, \(X_t\) either converges to K, so that \((X_t,Y_t)\) converges to \(E_K\), or there exists \(T>0\) such that \(X_t<K\) for all \(t\ge T\). If \(d\ge \gamma K\), the nullclines defined in (20) divide phase space into the three regions \({\mathcal {R}}_i\) (\(i=1,2,3\)) (see Fig. 1b). Observe that

(a) If \((X_t,Y_t)\in {\mathcal {R}}_1\) for all \(t\ge 0\), then, by the boundedness of solutions proved in Lemma 2 and the monotonicity of both components in \({\mathcal {R}}_1\) (\(X_{t+1}<X_t\) and \(Y_{t+1}>Y_t\)), the solution must converge to a point in \({\mathcal {E}}\). However, every point of \({\mathcal {E}}\) has zero \(Y\)-component, whereas \(Y_t\) is positive and increasing while the iterates lie in \({\mathcal {R}}_1\). This is impossible, and hence the solution must eventually enter \(B_{12}\cup {\mathcal {R}}_2 \cup B_{23} \cup {\mathcal {R}}_3 \).

(b) Let \((X_t,Y_t)\in {\mathcal {R}}_2\). If \((X_t,Y_t)\) remains in \({\mathcal {R}}_2\) indefinitely, then \((X_t,Y_t)\) converges to \(E_K\). Otherwise, by the direction field, there exists \(T>0\) with \((X_T,Y_T)\in {\mathcal {R}}_3\).

(c) If \((X_t,Y_t)\in {\mathcal {R}}_3\), then \(X_t<K\) and therefore \(X_{t+1}<K\), so that \((X_{t+1},Y_{t+1})\notin {\mathcal {R}}_1\). We now show that \((X_{t+1},Y_{t+1}) \notin {\mathcal {R}}_2\), and hence the solution remains in \({\mathcal {R}}_3\). By Lemma 5 (iii), there exists a unique positive \({\hat{Y}}(X_t)\) such that \(\sum _{i=0}^2 c_i Y_t^i\) changes sign at \(Y_t={\hat{Y}}(X_t)\), with \(\sum _{i=0}^2 c_i Y_t^i>0\) for all \(Y_t>{\hat{Y}}(X_t)\). Furthermore, \({\mathcal {L}}(X_t, \ell (X_t))<0\) by (A12), since \(X_t<K\le \frac{d}{\gamma }\). Hence \(\ell (X_t)<{\hat{Y}}(X_t)\), so that \({\mathcal {L}}(X_t,Y_t)<0\) for all \(0<Y_t\le \ell (X_t)\), that is, \(Y_{t+1}<\ell (X_{t+1})\). Thus, the solution remains in the interior of \({\mathcal {R}}_3\), where \(X_t\) increases and \(Y_t\) decreases; being bounded and monotone, it converges to an equilibrium, and since \(X_t\) is positive and increasing, this equilibrium is \(E_K\). Therefore, in all cases, solutions converge to \(E_K\).

We provide an alternative proof for \(d> \gamma K\) using a Lyapunov function. If \(X_t\ge K\) for all t, then by Lemma 2, \(X_t\) converges to K and by (b), \(\lim _{t\rightarrow \infty }Y_t=0\). Assume now, without loss of generality, that \(X_0< K\). We claim that

is a Lyapunov function for (13). Clearly, \(V(K,0)=0\) and \(V(X_t,Y_t)>0\) for \((X_t,Y_t)\ne (K,0)\). Next, we show that \(\Delta V(X_t,Y_t)<0\).

so that \(\Delta V(K,0)=0\). Replacing \(X_{t+1}-X_t\) and \(Y_{t+1}-Y_t\) with (14) and (15) yields

The first two terms are negative, since by Lemma 2, \(0<X_t< K\) for all \(t\ge 0\). Furthermore,

completing the proof using (Kelley and Peterson 2001, Theorem 4.18), if \(d>\gamma K\). \(\square \)
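Part (c) of the first proof can be illustrated along a single orbit: with \(d\ge \gamma K\), an orbit that starts below the prey nullcline \(Y=\ell (X)\) with \(X_0<K\) stays below it, \(X_t\) increases, \(Y_t\) decreases, and the orbit approaches \(E_K\). The sketch assumes \(\ell (X)=\frac{r}{\alpha K}(K-X)\), the substitution used in footnote 2, and illustrative parameter values; it is a numerical check of the stated properties, not a proof. The number of iterates is kept moderate so that \(K-X_t\) stays well above floating-point resolution.

```python
def step(x, y, r, K, alpha, gamma, d):
    """One iterate of (f, g), with f and g as written in the proofs above."""
    return (1 + r) * x / (1 + r / K * x + alpha * y), (1 + gamma * x) / (1 + d) * y


def ell(x, r, K, alpha):
    """Nontrivial prey nullcline Y = r (K - X) / (alpha K)."""
    return r * (K - x) / (alpha * K)


p = dict(r=1.0, K=1.0, alpha=1.0, gamma=0.5, d=1.0)   # assumed: gamma*K = 0.5 < d = 1
x, y = 0.2, 0.1                                      # below the prey nullcline, X_0 < K
traj = [(x, y)]
for _ in range(60):
    x, y = step(x, y, **p)
    traj.append((x, y))

print("final state:", traj[-1])
```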

Proof of Theorem 7

Assume that \(d<\gamma K\). We verify the assumptions (B1)–(B6) and (H1)–(H3) in (Freedman and So 1989, Theorem 3.3).

(B1) Let \({\mathbb {R}}^2_+=\{(x,y) \in {\mathbb {R}}^2 : x\ge 0, y \ge 0 \}\) and consider the metric space \(\langle {\mathbb {R}}^2_+,{\tilde{d}}\rangle \), where \({\tilde{d}}\) is the Euclidean metric.

(B2) Let \(\partial {\mathbb {R}}^2_+=\{(x,y)\in {\mathbb {R}}^2_+: xy=0 \}\) denote the boundary of \({\mathbb {R}}^2_+\). Then \(\partial {\mathbb {R}}^2_+\) is a closed subset of \({\mathbb {R}}^2_+\).

(B3) \((f,g): {\mathbb {R}}^2_+\rightarrow {\mathbb {R}}^2_+\) is continuous, where f and g are defined in (13).

(B4) By Lemma 1, \((f,g)(\partial {\mathbb {R}}^2_+)\subset \partial {\mathbb {R}}^2_+\).

(B5) By Lemma 1, \((f,g)({\mathbb {R}}^2_+\setminus \partial {\mathbb {R}}^2_+)\subset {\mathbb {R}}^2_+\setminus \partial {\mathbb {R}}^2_+\).

(B6) By Lemma 2, the closure of any positive orbit through any \((X_0,Y_0)\in {\mathbb {R}}^2_+\) is compact.

(H1) \((f,g)\mid _{\partial {\mathbb {R}}^2_+}\) is dissipative, since any orbit with \(X_0=0\) and \(Y_0\ge 0\) converges to \(E_0\) and any orbit with \(X_0>0\) and \(Y_0 = 0\) converges to \(E_K\) (Lemma 3).

(H2) \((f,g)\mid _{\partial {\mathbb {R}}^2_+}\) has the acyclic covering \(\{E_0,E_K\}\).

(H3) From the local stability analysis in the proof of Theorem 4, \(E_0\) and \(E_K\) are both saddles, each with a one-dimensional stable manifold. In particular, the stable manifold of \(E_0\) is \(W^+(E_0)=\{(x,y)\in \partial {\mathbb {R}}^2_+: x=0\}\) and the stable manifold of \(E_K\) is \(W^+(E_K)=\{(x,y)\in \partial {\mathbb {R}}^2_+: x>0\}\); hence \(W^+(E_0)\cap ({\mathbb {R}}^2_+\setminus \partial {\mathbb {R}}^2_+) = \emptyset \) and \(W^+(E_K)\cap ({\mathbb {R}}^2_+\setminus \partial {\mathbb {R}}^2_+) = \emptyset \).

Since all of the hypotheses of (Freedman and So 1989, Theorem 3.3) are satisfied, (13) is persistent. \(\square \)

Proof of Proposition 8

Assume \(d<\gamma K\). If \(X_t\le K\) and \(Y_t>0\), then \(X_{t+1} =\frac{(1+r)X_t}{1+\frac{r}{K}X_t+\alpha Y_t}< \frac{(1+r)X_t}{1+\frac{r}{K}X_t}\le K\), so that \(X_{t+1}<K\). Assume now that \(X_t>K\) for all \(t\ge 0\). Then, by (14) and (A1), \(X_t\) is monotone decreasing, with \(K< X_{t+1}<X_t\). Also, since \(X_t>K>\frac{d}{\gamma }\), by (15), \(Y_t\) is monotone increasing. Thus, \(Y_t>Y_0>0\) for \(t\ge 1\), and \({\bar{X}}:=\lim \limits _{t \rightarrow \infty }X_t\ge K\) exists. Since \(Y_t>Y_0\),

$$\begin{aligned} {\bar{X}}=\lim _{t\rightarrow \infty }X_{t+1}=\lim _{t\rightarrow \infty }\frac{(1+r)X_t}{1+\frac{r}{K}X_t+\alpha Y_t}\le \frac{(1+r){\bar{X}}}{1+\frac{r}{K}{\bar{X}}+\alpha Y_0}. \end{aligned}$$

Therefore, since \({\bar{X}}>0\), \(1+r\frac{{\bar{X}}}{K}+\alpha Y_0 \le 1+r \), or equivalently, \(\alpha Y_0 \le r\left( 1-\frac{{\bar{X}}}{K}\right) \le 0,\) contradicting \(Y_0>0\). Hence, \(X_t>K\) cannot hold for all \(t\ge 0\). \(\square \)
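A quick numerical illustration of Proposition 8, assuming the map (13) in the form used in the proofs above and illustrative parameter values with \(d<\gamma K\): starting from \(X_0>K\), the prey density decreases, drops below K after finitely many iterates, and stays below K from then on. The helper `step` and the values in `p` are assumptions for illustration only.

```python
def step(x, y, r, K, alpha, gamma, d):
    """One iterate of (f, g), with f and g as written in the proofs above."""
    return (1 + r) * x / (1 + r / K * x + alpha * y), (1 + gamma * x) / (1 + d) * y


p = dict(r=0.5, K=1.0, alpha=0.8, gamma=1.5, d=0.5)   # assumed values, d < gamma*K
x, y = 4.0, 0.05                                      # X_0 > K, small predator density
xs = [x]
for _ in range(400):
    x, y = step(x, y, **p)
    xs.append(x)

# Index of the first iterate with X_t < K (Proposition 8 says one exists).
first_below = next(t for t, v in enumerate(xs) if v < p["K"])
print("first t with X_t < K:", first_below)
```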

Proof of Lemma 9

Assume that \(d<\gamma K\) and \((X_0,Y_0) \in (0,\infty )^2\). By Lemma 2, \(X_t,Y_t\) are bounded for \(t\ge 0\). The claim \(\limsup _{t\rightarrow \infty } X_t\le K\) follows immediately from Lemma 2. By Lemma 1, since \(Y_0>0\), \(Y_t>0\) for all \(t\ge 0\). Thus, there exists \(T\ge 0\) such that \(X_t< K \) for all \(t\ge T\), since otherwise, if \(X_t\ge K\) for all \(t\ge 0\), then \((X_t,Y_t)\in {\mathcal {R}}_1\cup \{(X_t,Y_t):X_t=K\}\) indefinitely. This is, however, not possible, since \(Y_t\) is bounded by Lemma 2. By the direction field, this implies that there exists T such that \((X_T,Y_T)\in {\mathcal {R}}_2\), and therefore \(X_T\le K\). Note that if \(X_T=K\), then, since \(Y_T>0\), \(X_{T+1}<K\). Therefore, there exists \(T\ge 0\) such that \(X_T<K\). Recall that the boundedness of \(Y_t\) was obtained by proving an upper bound for the upper solution \({{\hat{Y}}}_t\), where \({{\hat{Y}}}_t\) satisfies (A2). To show that \(\limsup _{t\rightarrow \infty }Y_t\) is uniformly bounded, it suffices to prove that there is a unique value \({{\bar{Y}}}\) such that all solutions of the comparison recurrence (A2) converge to \({{\bar{Y}}}\), since then, for \(U\ge {{\bar{Y}}}\), \(\limsup _{t\rightarrow \infty } {{\hat{Y}}}_t=\lim _{t\rightarrow \infty } {{\hat{Y}}}_t={{\bar{Y}}}\le U\), and the claim follows. The map H in (A2) satisfies

and is therefore component-wise monotone and (strictly) increasing in both variables. For M defined in (A3), \({{\hat{Y}}}_t\le M\) for all \(t\ge 0\) as long as \(0\le {{\hat{Y}}}_0,{{\hat{Y}}}_1\le M\). Note that for \(\gamma K>d\),

and therefore \(M>0\). Furthermore, for \(0<m\le \frac{A(1+d)-d(1+r)}{d\alpha }\),

Thus, \(H:[m,M]\times [m,M]\rightarrow [m,M]\). To apply (Grove and Ladas 2004, Theorem 1.15), we note that the only solution \((s,S)\in [m,M]\times [m,M]\) of

is \(s=S=s^*=\frac{A(1+d) - d(1+r)}{\alpha d}>0\) and \(s^*\in [m,M]\). Thus, by (Grove and Ladas 2004, Theorem 1.15), \(s^*\) is globally attracting, and therefore \(\lim _{t\rightarrow \infty }{{\hat{Y}}}_t=s^*=\frac{A(1+d) - d(1+r)}{\alpha d}\). Choosing \(U>s^*\) results in \(\limsup _{t\rightarrow \infty }Y_t\le \limsup _{t\rightarrow \infty } {{\hat{Y}}}_t=\lim _{t\rightarrow \infty }{{\hat{Y}}}_t=s^*<U\). \(\square \)

Proof of Proposition 10

Let \({\mathcal {R}}_i\), \(i=1,2,3,4\), be the regions defined in (21) (see Fig. 1b). Lemma 5 and (22) will also be used to prove Proposition 10. Define \({\mathcal {E}}=\{E_0,E_K,E^*\}\), the set of equilibria of (13).

(a) Clearly, one possibility is that the solution reaches \(E^*\) in finite time, e.g., the solution with \((X_0,Y_0)=E^*\).

(b) We show that if the solution remains in the single region \({\mathcal {R}}_j\), \(j=1\) or \(j=3\), for all sufficiently large t, then it must converge to \(E^*\). Assume that there exists \(j \in \{1,3\}\) and \(T>0\) such that \((X_t,Y_t) \in {\mathcal {R}}_j\) for all \(t\ge T\).

- If \(j=1\), that is, there exists T such that \((X_t,Y_t) \in {\mathcal {R}}_1\) for all \(t \ge T\), then by boundedness and monotonicity, the solution must converge to a point in \({\mathcal {E}}\). Given the direction field in \({\mathcal {R}}_1\), the orbit converges to \(E^*\).

- If \(j=3\), that is, there exists T such that \((X_t,Y_t) \in {\mathcal {R}}_3\) for all \(t \ge T\), then again by monotonicity and boundedness, the solution must converge to a point in \({\mathcal {E}}\). Given the direction field in \({\mathcal {R}}_3\), the orbit converges to \(E^*\).

(c) First we show that the solution can neither remain in \({\mathcal {R}}_2\) for all sufficiently large t nor remain in \({\mathcal {R}}_4\) for all sufficiently large t. Suppose there exists \(T>0\) such that \((X_t,Y_t) \in {\mathcal {R}}_2\) for all \(t\ge T\). Then, by the monotonicity of both components in this region and the boundedness of solutions (Lemma 2), the solution would have to converge to a point in \({\mathcal {E}}\). The only point of \({\mathcal {E}}\) in the closure of \({\mathcal {R}}_2\) is \(E^*\). Since \(X_{t+1}<X_t<X^*\) in \({\mathcal {R}}_2\) for all \(t\ge T\), convergence to \(E^*\) is impossible. Similarly, suppose there exists \(T>0\) such that \((X_t,Y_t) \in {\mathcal {R}}_4\) for all \(t\ge T\). Then the solution is bounded and monotone, and hence it must converge to a point in \({\mathcal {E}}\). The only points of \({\mathcal {E}}\) in the closure of \({\mathcal {R}}_4\) are \(E_K\) and \(E^*\). Since \(Y_{t+1}\ge Y_t>0\) in \({\mathcal {R}}_4\) for all \(t\ge T\), convergence to \(E_K\) is impossible, and since \(X_{t+1}>X_t>\frac{d}{\gamma }=X^*\), convergence to \(E^*=(X^*,Y^*)\) is also impossible. Assume now that the solution \((X_t,Y_t)\) of (13) neither reaches \(E^*\) in finite time nor eventually remains in one of the four regions \({\mathcal {R}}_i\), \(i=1,2,3,4\). We now show that it must enter each of the four regions infinitely often.

Since \(X_t< K\) for all sufficiently large t, we assume, without loss of generality, that \(X_0< K\).

- If \((X_t,Y_t)\in {\mathcal {R}}_1\), then we show that \((X_{t+1},Y_{t+1})\) must be above the line \(Y_t=\ell (X_t)\). For \((X_t,Y_t)\in {\mathcal {R}}_1\), \(\ell (X_t)\le Y_t\) and \(X_t>X^*\). By (A12), \({\mathcal {L}}(X_t,\ell (X_t))>0\) and since \(c_2>0\) by (A11), by Lemma 5 (iii), \({\mathcal {L}}(X_t,Y_t)>0\) for all \(Y_t\ge \ell (X_t)\). Thus, \(Y_{t+1}>\ell (X_{t+1})\), so that \((X_{t+1},Y_{t+1})\in {\mathcal {R}}_1\cup {\mathcal {R}}_2\). Since by assumption, this solution does not remain in a single region, there exists T such that \((X_{T},Y_{T})\in {\mathcal {R}}_1\) and \((X_{T+1},Y_{T+1})\in {\mathcal {R}}_2\).

- If \((X_t,Y_t)\in {\mathcal {R}}_2\), then \(X_{t+1}<X_t<X^*\), and by the direction field in \({\mathcal {R}}_2\) (see Fig. 1b), as well as the assumption that solutions do not remain in a single region indefinitely, there exists \(T\ge t\) such that \((X_{T},Y_{T})\in {\mathcal {R}}_2\) and \((X_{T+1},Y_{T+1})\in {\mathcal {R}}_3\). This implies, in particular, that \((X_{T},Y_T)\in {\mathcal {R}}_{2_2}\), since \({\mathcal {L}}(X_T,Y_T)\) must be nonpositive for the next iterate to be in \({\mathcal {R}}_3\). Note that two consecutive iterates cannot both lie in \({\mathcal {R}}_{2_2}\), because \({\mathcal {L}}(X_T,Y_T)\le 0\) in \({\mathcal {R}}_{2_2}\).

- If \((X_t,Y_t)\in {\mathcal {R}}_3\), then we show that \((X_{t+1},Y_{t+1})\) must be below the line \(Y_t=\ell (X_t)\). If \((X_t,Y_t)\in {\mathcal {R}}_3\), then \(0<Y_t\le \ell (X_t)\). Since \(X_t<X^*\), by (A12), \({\mathcal {L}}(X_t,\ell (X_t))<0\), and therefore, by Lemma 5 (iii), \({\mathcal {L}}(X_t,Y_t)<0\) for all \(0<Y_t\le \ell ({X}_t)\). Thus, \(Y_{t+1}<\ell (X_{t+1})\) and \((X_{t+1},Y_{t+1})\in {\mathcal {R}}_3 \cup {\mathcal {R}}_4\). By assumption, the solution does not remain in a single region indefinitely, and given the direction field, there exists T such that \((X_{T},Y_{T})\in {\mathcal {R}}_3\) and \((X_{T+1},Y_{T+1})\in {\mathcal {R}}_4\).

- If \((X_t,Y_t)\in {\mathcal {R}}_4\), then, by the monotonicity of each component of the solution in that region (see Fig. 1b), the solution does not remain in \({\mathcal {R}}_4\). Thus, there exists T such that \((X_T,Y_T)\in {\mathcal {R}}_{4}\) and \((X_{T+1},Y_{T+1})\in {\mathcal {R}}_1\). This implies, in particular, that \((X_{T},Y_T)\in {\mathcal {R}}_{4_2}\), since \({\mathcal {L}}(X_T,Y_T)\) must be nonnegative for the next iterate to be in \({\mathcal {R}}_1\). Note that two consecutive iterates cannot both lie in \({\mathcal {R}}_{4_2}\), because \({\mathcal {L}}(X_T,Y_T)\ge 0\) in \({\mathcal {R}}_{4_2}\).

Therefore, in case (c), the solution rotates counterclockwise about \(E^*\), entering each region \({\mathcal {R}}_i\), \(i=1,2,3,4\), infinitely often. Furthermore, the solution lies in \({\mathcal {R}}_{2_2}\) and in \({\mathcal {R}}_{4_2}\) exactly once in each cycle. This completes the proof. \(\square \)
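The counterclockwise rotation can be observed along a computed orbit. The labelling of the regions in the sketch is an assumption reconstructed from the proof (\({\mathcal {R}}_1\): \(X>X^*\), \(Y\ge \ell (X)\); \({\mathcal {R}}_2\): \(X\le X^*\), \(Y>\ell (X)\); \({\mathcal {R}}_3\): \(X\le X^*\), \(Y\le \ell (X)\); \({\mathcal {R}}_4\): \(X>X^*\), \(Y<\ell (X)\)); the boundary conventions of (21) may differ, and the subregions \({\mathcal {R}}_{2_2}\), \({\mathcal {R}}_{4_2}\) are not modelled. Parameter values are illustrative, with \(\gamma K>1+2d\) so that \(E^*\) is unstable and the orbit cannot settle in a single region.

```python
def step(x, y, r, K, alpha, gamma, d):
    """One iterate of (f, g), with f and g as written in the proofs above."""
    return (1 + r) * x / (1 + r / K * x + alpha * y), (1 + gamma * x) / (1 + d) * y


def region(x, y, r, K, alpha, gamma, d):
    """Hypothetical labelling of R1..R4 by the nullclines X = d/gamma and Y = ell(X)."""
    ell = r * (K - x) / (alpha * K)
    if x > d / gamma:
        return 1 if y >= ell else 4   # predator grows; prey falls (R1) or rises (R4)
    return 2 if y > ell else 3        # predator falls; prey falls (R2) or rises (R3)


p = dict(r=1.0, K=1.0, alpha=1.0, gamma=2.2, d=0.5)   # assumed: gamma*K > 1 + 2d
x, y = 0.3, 0.6                                      # X_0 < K
labels = [region(x, y, **p)]
for _ in range(3000):
    x, y = step(x, y, **p)
    labels.append(region(x, y, **p))

# Each step either stays in its region or moves to the next one counterclockwise.
ok = all(b in (a, a % 4 + 1) for a, b in zip(labels, labels[1:]))
print("only R1->R2->R3->R4->R1 transitions:", ok)
print("regions visited in the last 200 iterates:", sorted(set(labels[-200:])))
```

Encoding the cycle as `a % 4 + 1` makes the check independent of where the orbit starts.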

Proof of Theorem 12

For \(d=\gamma K\), the equilibria \(E_K\) and \(E^*\) coalesce. The Jacobian evaluated at \(E_K\) given in (A5) has eigenvalues \(\lambda _1 = \frac{1}{1+r}\) and \(\lambda _2=1\). Moreover, the branches \(E_K\) and \(E^*\) are unique and exchange stability as \(\gamma \) passes through \(\frac{d}{K}\), that is, when \(d-\gamma K\) changes sign. Thus, there is a transcritical bifurcation. \(\square \)

Proof of Theorem 13

Define \(\beta = \frac{r}{K} (K \gamma -d)\). For \(\gamma = \frac{1+2d}{K}\), \(\frac{\beta }{\gamma } = r\frac{1+d}{1+2d}\). The characteristic equation obtained for the Jacobian about \(E^*\) given in (A6) when \(\gamma _{\mathrm{crit}}=\frac{1+2d}{K}\) is

and

Hence, when \(\gamma =\gamma _{\mathrm{crit}}\), the two eigenvalues are complex with \(\Vert \lambda \Vert ^2=C = 1\). The characteristic equation for the coexistence equilibrium at \(E^*\) for \(\gamma =\gamma _{\mathrm{crit}}+\delta \) was given in (A7), that is, \(\lambda ^2 + a_1 \lambda + a_2=0\) with

The eigenvalues in polar form are \(\lambda = R(\gamma )\mathrm{e}^{\pm i \theta (\gamma )} \), where \(R(\gamma ) = \sqrt{a_2(\gamma )}\) and

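Since \(a_2(\gamma _{\mathrm{crit}})=1\) and \(\beta '(\gamma )\gamma - \beta (\gamma ) = \frac{r}{K}d \ne 0\), so that \(\frac{\mathrm{d}}{\mathrm{d}\gamma }\frac{\beta }{\gamma }=\frac{rd}{K\gamma ^2}>0\) and hence \(a_2(\gamma )\) is strictly increasing, the first nondegeneracy (transversality) condition is satisfied. To show that the second (nonresonance) condition is also satisfied, note that \(\tan ( \theta _0) = \frac{\sqrt{4a_2-a_1^2}}{(-a_1)}\) and, since \(a_2(\gamma _{\mathrm{crit}})=1\) and \(1<-a_1<2\), \(\cos (\theta _0)=-\frac{a_1}{2}\in \left( \frac{1}{2},1\right) \). Hence \(0<\theta _0<\frac{\pi }{3}\), so that \(\mathrm{e}^{ k i \theta _0} \ne 1\) for \(k=1,2,3,4\) and all \(r,d>0\). Hence there is a Neimark–Sacker bifurcation at \(\gamma =\gamma _{\mathrm{crit}}\). In order to use the formula in Guckenheimer and Holmes (1983) to determine the criticality of the bifurcation, we translate \(E^*\) to the origin. Let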

Then, (13) in the variables of \(W_t,Z_t\) becomes

where

The Jacobian of (A13) at \((W_t,Z_t, \gamma ) =(0,0, \gamma _{\mathrm{crit}})\) is given by

with eigenvalues

and \(\lambda _2\), the complex conjugate of \(\lambda _1\). The corresponding eigenvectors are

and \(\mathbf {v}_{\lambda _2}\), the complex conjugate of \(\mathbf {v}_{\lambda _1}\). Define the matrix \(T:= \begin{bmatrix} {\mathbf {U}}_2&{\mathbf {U}}_1 \end{bmatrix}.\) Applying the transformation \((u,v)^T = T^{-1}(w,z)^T\), where

yields

The nonlinear terms are

where

According to the formula in Guckenheimer and Holmes (1983) and Iooss and Joseph (1980), the criticality of the bifurcation at \(\gamma =\gamma _{\mathrm{crit}}\) is determined by the sign of

where

Since

the Neimark–Sacker bifurcation at \(\gamma =\gamma _{\mathrm{crit}}\) is supercritical. \(\square \)
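The two Neimark–Sacker conditions can be checked numerically from the coefficients \(a_1\), \(a_2\) in (A7). The sketch below, with illustrative (assumed) parameter values, evaluates the eigenvalue modulus just below, at, and just above \(\gamma _{\mathrm{crit}}=\frac{1+2d}{K}\), and the argument \(\theta _0\) at \(\gamma _{\mathrm{crit}}\) together with \(|\mathrm{e}^{ik\theta _0}-1|\) for \(k=1,\dots ,4\). It does not reproduce the criticality (first Lyapunov coefficient) computation.

```python
import cmath
import math


def char_coeffs(gamma, r, K, d):
    """a1, a2 of lambda^2 + a1*lambda + a2 at E*, as in (A7)."""
    q = (r / K) * (gamma * K - d) / gamma          # beta / gamma
    a1 = -(1 + (1 + q) / (1 + r))
    a2 = (1 + q) / (1 + r) + d * q / ((1 + r) * (1 + d))
    return a1, a2


def eigenvalues(gamma, r, K, d):
    """Roots of the characteristic polynomial; the first has nonnegative imaginary part."""
    a1, a2 = char_coeffs(gamma, r, K, d)
    disc = cmath.sqrt(a1 * a1 - 4 * a2)
    return (-a1 + disc) / 2, (-a1 - disc) / 2


r, K, d = 1.0, 1.0, 0.5                 # assumed, illustrative values
gamma_crit = (1 + 2 * d) / K
for delta in (-0.05, 0.0, 0.05):
    lam = eigenvalues(gamma_crit + delta, r, K, d)[0]
    print(f"delta={delta:+.2f}  |lambda|={abs(lam):.6f}")

# Nonresonance at gamma_crit: theta0 = arg(lambda), check e^{i k theta0} != 1 for k = 1..4.
theta0 = cmath.phase(eigenvalues(gamma_crit, r, K, d)[0])
print("theta0 =", theta0, [abs(cmath.exp(1j * k * theta0) - 1) for k in range(1, 5)])
```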

About this article

Cite this article

Streipert, S.H., Wolkowicz, G.S.K. & Bohner, M. Derivation and Analysis of a Discrete Predator–Prey Model. Bull Math Biol 84, 67 (2022). https://doi.org/10.1007/s11538-022-01016-4

DOI: https://doi.org/10.1007/s11538-022-01016-4