Abstract

Recently, generative AI has been at the center of disruptive innovation in various settings, including the educational sector. This article investigates ChatGPT, one of the most prominent generative AI tools on the market, to explore its usefulness and potential for instructional design. Four researchers used a set of prompts to generate a course map for an online course on makerspaces and conducted a SWOT analysis to identify the strengths, weaknesses, opportunities, and threats of using generative AI for instructional design. The findings suggest that ChatGPT shows promise as an efficient and effective tool for creating course maps, yet its use still requires domain knowledge and instructional design expertise to ensure the quality and reliability of its output.

Introduction

For over a decade, it has been predicted that AI will be at the center of educational innovation (Becker et al., 2018; Editorial Team, 2018; Freeman et al., 2017; Gallup & Northeastern University, 2018; Kak, 2018). Interest in AI in education has been growing exponentially, and it has grown even further since the appearance of OpenAI’s ChatGPT (OpenAI, 2023) in late 2022. In fact, generative AI tools opened a new era by enabling learners to interact with AI in conversational language, drawing on deep learning technologies and natural language processing (Farrokhnia et al., 2023; Haque et al., 2022; Susnjak, 2022). Generative AI is expected to impact multiple domains of our society (Baldassarre et al., 2023), as users can search for information, generate creative work, get help with programming, and perform many other tasks that involve textual information.

The field of education is no exception. Given generative AI’s capacity for disruption and innovation, it is imperative to explore and test its efficacy in educational contexts (Parsons and Curry, 2024). While numerous studies investigate generative AI in the context of education, only a few specifically discuss its use as a tool to support the instructional design process (e.g., Chng, 2023; Parsons and Curry, 2024). Given the lack of research on the potential of generative AI as an instructional design tool, this study aims to investigate the potential strengths, weaknesses, opportunities, and threats of using generative AI to develop a course. Hence, our research questions are as follows: 1) How can generative AI be utilized for course planning and development? and 2) What is needed from instructional designers to successfully use generative AI for course planning and development?

Literature Review

The Significance of Automated Instructional Design in Higher Education

The instructional design process is inherently a complex interplay of decision-making processes that spans the macro and micro levels of course planning and incorporates various design tools (Romiszowski, 1981, 2016). In the field of instructional design and technology, the demand for innovative learning technologies and methodologies has been continuously increasing as a way to enhance student learning experiences (Bannan-Ritland, 2001). In particular, exploring ways to maximize students’ learning experience through the use of various tools, ranging from authoring software to course management platforms that transform instructional design concepts into concrete lesson and course plan materials, has been a primary concern for instructional designers seeking to ensure that student learning experiences are both engaging and effective when implementing learning technologies and methodologies (Klein & Kelly, 2018). This is especially true for instructional designers, whose essential skills involve understanding and mastering various design tools and acquiring the technology skills that align with those tools (Tripp & Bichelmeyer, 1990; Rothwell and Kazanas, 2011; Hodell, 2015).

In higher education contexts, automated instructional design is noted by scholars as a promising mechanism to help instructional designers carefully connect and align various instructional elements (e.g., delivery setting, learning activities, and assessment) while simulating or predicting the affordances and limitations of each instructional element when it is applied in practice (Hora and Ferrare, 2013; Richey et al., 2001). A key aspect of the course planning process involves the rigorous evaluation of alignment among content scope, delivery mechanisms, and assessment approaches (Chen, 2014; Dabbagh and English, 2015). While the skeleton of a course map helps to delineate the overarching course goal and objectives and to develop a coherent flow for course delivery, the tasks involved in course planning become more complex when targeting a wider audience, since scalable content delivery needs to be considered. In response to this challenge, there is a burgeoning need for scalable and efficient course planning solutions (Drysdale, 2019). However, existing foundational methods and practices remain limited in the face of the rapid change and expansive scale of educational needs across various disciplines. From the perspective of instructional designers, the comprehensive crafting of courses is not only resource-intensive (Birch & Burnett, 2009) but also time-limited (Spector & Song, 1995), constrained by deadlines. As such, automated instructional design has emerged as a promising solution, offering a way to address these challenges and streamline the course development process.

During the early 1980s, the complexity of courseware authoring became a prominent challenge, especially within the Air Force (Spector & Song, 1995; Tennyson, 1994a). Emerging insights from cognitive science posited that knowledge is not just abstract but can be represented and manipulated using computational means. As such, the field of computational cognitive science, which focuses on developing and redefining our understanding of knowledge representation, experienced a rapid surge (Schank & Kass, 1988). Notably, frameworks like Adaptive Control of Thought-Rational (ACT-R) gained significant attention (Taatgen et al., 2006). This model is a compelling example of the potential of computerized methods for representing and disseminating knowledge. ACT-R provides a robust computational approach to identifying and emulating human cognitive activities, ranging from simple memory tasks to complex problem-solving and decision-making processes. It enables researchers to build embodied models of people in order to understand how and why people think the way they do, and it has demonstrated the capability of computational approaches to mirror human thought processes (Anderson et al., 2004). It further advances our knowledge of cognitive functions and their applications in real-world scenarios (Rizk et al., 2019). In this regard, ACT-R is more than a theoretical construct, since it offers practical applications that bridge the gap between cognitive science and education (Anderson & Schunn, 2013).

This realization opened doors to innovative approaches in education, suggesting that traditional methods of instructional design could be boosted by automated processes (Tennyson, 1994b). This was a critical turning point, as both instructional design researchers and practitioners acknowledged the transformative potential of automation in instructional design. Automated processes signified a harmonious interaction between human cognitive processes and computational capabilities. In the field of instructional design and technology, there is a growing consensus that the systematic integration of advanced technologies has the potential to improve the efficiency and adaptability of learning experiences (Gillespie, 1998; Paquette, 2014; Potter & Rockinson-Szapkiw, 2012). It is expected that combining human pedagogical expertise with the analytical capabilities of computational techniques could offer a tailored approach to meeting learners’ diverse needs (Hamilton and Owens, 2018).

Generative Artificial Intelligence and ChatGPT in Instructional Design

The intersection of human expertise and artificial intelligence (AI) has guided a paradigm shift in instructional design (ID) (Tlili et al., 2023). The recent advancement of AI, exemplified by tools like ChatGPT (OpenAI, 2023), has paved the way for a new paradigm in instructional design research and practices. ChatGPT, renowned for its advanced language processing and transformation capabilities, has attracted attention across various domains. Preliminary reviews have suggested that ChatGPT can dynamically generate content and provide personalized learning experiences with reciprocal interactions (Farrokhnia et al., 2023; Lee, 2023; Tlili et al., 2023). More specifically, the capabilities of large language models (LLMs), made accessible through the ChatGPT service, have revolutionized the dynamic content creation process, making it faster and more resource-efficient. While AI-generated content still requires careful refinement, initial drafts can be completed efficiently in a short period of time at no cost. This efficiency aids the swift development of instructional design components (e.g., learning outcomes, prompts, and assessment rubrics).

In this regard, the role of instructional designers is being transformed by the emergence of AI. Instead of building courses from scratch, they can collaborate with AI in a synergistic way to enhance content design, instructional strategy delivery, and assessment processes. This collaboration seeks to harness the capabilities of AI to enhance instructional design outcomes. In essence, this viewpoint suggests that artificial intelligence is not merely a performance assistant (McGraw, 1994) but can serve as a performance-empowering partner. Recognizing the transformative power of ChatGPT (Adiguzel et al., 2023), it is timely to conduct an in-depth exploration of ChatGPT’s use in the instructional design context. As educational institutions increasingly integrate ChatGPT into their curricula, a thorough analysis of its use in instructional design through a structured analytical approach is needed to provide insights and guidelines that ensure ChatGPT is deployed in an educationally effective and sound manner.

Methods

To understand and explore the potential of ChatGPT as a tool that can support the instructional design process, the research team simulated an iterative process of creating a lesson plan for a 4-week online master’s-level course on makerspaces, using ChatGPT 3.5 as a design tool. The research team comprised four members: one professor in the field of information science (with several years of experience developing and teaching the makerspace course), two professors in the field of instructional technology, and an instructional designer (with research experience and/or interest in makerspaces). The researchers used the SWOT framework as an analytical tool, as SWOT analysis can help researchers identify internal and external factors that could play an important role in successfully adopting a novel technology (Farrokhnia et al., 2023). While a previous study has already performed a SWOT analysis of ChatGPT’s general implications for education (e.g., Farrokhnia et al., 2023), this research focuses specifically on generative AI’s potential as an instructional design tool.

ChatGPT Prompts

Before conducting the analysis, each researcher initially tinkered with ChatGPT to see whether it had the capacity to create a lesson plan. After freely exploring the possibilities, the researchers discussed how to formulate a way to create a lesson plan for a hypothetical course. Given that developing appropriate prompts is crucial for effectively utilizing ChatGPT in the field of education (e.g., Qadir, 2023), the research team first created a flowchart that contained specific prompts for ChatGPT and illustrated the steps for designing a course with ChatGPT. Figure 1 shows the flowchart.

The researchers decided to use the backward design approach (Wiggins and McTighe, 1998) as the instructional design framework in the ChatGPT prompts. The backward design approach has been widely used in designing and developing courses in diverse disciplines, including biology and engineering (e.g., Mohammed et al., 2022; Reynolds & Kearns, 2017), and in different modalities, such as online and blended courses (e.g., Di Masi & Milani, 2016; Villalta-Cerdas et al., 2022). According to Wiggins and McTighe (1998), backward design begins with the end goals in mind. It has three stages: identifying desired results, determining acceptable evidence, and planning learning experiences and instruction (Wiggins and McTighe, 1998). Desired results refer to learning objectives or outcomes. Acceptable evidence refers to assessments.

In the process of course design, a course map is first created as an output of collaboration between instructional designers and faculty (e.g., Drysdale, 2019; Mancilla & Frey, 2020). It typically shows module objectives, assessments, and activities. Before creating a course map, faculty should already have a course title, a course description, an extended course description, course learning outcomes, target learners, course duration, and modality. In the present study, the researchers developed these elements to optimize the output of ChatGPT. The course title was ‘Makerspaces as Learning Environments.’ The target learners were graduate students at the master’s level. Course modality was online. In terms of course duration, a 4-week duration was selected because four modules were sufficient for assessing instructional design work created by ChatGPT. Considering these course design elements, the research team devised a short course description, an extended course description, and three course learning outcomes, which were entered into ChatGPT with the following prompt: “Using a backward design approach, create a course map that consists of module objectives, assessments, and hands-on activities, and assignments to develop a 4-week online master course by using the below course information.” (See Table 1).

Since the course map only provides a list of module objectives, assessments, and hands-on activities, additional prompts were developed to elaborate on each component. Table 2 shows the prompts for course materials, discussion forums, quizzes, assignments, and the rubric. Prompt 2 was developed to create course materials, including readings and lecture videos for each module. Prompt 3 was developed to create hands-on activity guidelines and instructions. Prompt 4 was developed to create discussion prompts for each module’s discussion forum. Prompt 5 was developed to create quiz questions for each module. Specifically, this prompt requested two multiple-choice questions, two true/false questions, and one short essay question. Prompt 6 was developed to create assignment guidelines for each module. Prompt 7 was developed to create a rubric consisting of 5 rating scales and a description of each criterion for the assignment created with prompt 6.
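The seven-prompt sequence described above can be pictured as an ordered list of messages sent within one conversation. The following Python sketch is only an illustration under stated assumptions: the wording of prompt 1 is quoted from this article, but the wording of prompts 2 to 7 is a placeholder built from their stated purposes (the actual wording is given in Table 2), and `PromptStep`, `build_steps`, `run_single_chat`, and `echo_model` are hypothetical names. The `send` argument stands in for whatever chat interface is used; no real service is called.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class PromptStep:
    number: int   # prompt number used in the article (1-7)
    purpose: str  # what the prompt is meant to produce
    text: str     # text sent to the chat model


# Prompt 1 as quoted in the article.
PROMPT_1 = (
    "Using a backward design approach, create a course map that consists of "
    "module objectives, assessments, and hands-on activities, and assignments "
    "to develop a 4-week online master course by using the below course information."
)

# Purposes of prompts 2-7 as described in the text. The real wording is in
# Table 2 and is NOT reproduced here; the texts built below are placeholders.
PURPOSES: Dict[int, str] = {
    2: "course materials (readings and lecture videos) for each module",
    3: "hands-on activity guidelines and instructions",
    4: "discussion prompts for each module's discussion forum",
    5: "quiz questions (two multiple-choice, two true/false, one short essay)",
    6: "assignment guidelines for each module",
    7: "a rubric for the assignment created with prompt 6",
}


def build_steps(course_info: str) -> List[PromptStep]:
    """Return the seven prompts in the order they are entered."""
    steps = [PromptStep(1, "course map", f"{PROMPT_1}\n\n{course_info}")]
    for number, purpose in sorted(PURPOSES.items()):
        steps.append(PromptStep(number, purpose, f"[placeholder wording] Create {purpose}."))
    return steps


def run_single_chat(
    steps: List[PromptStep],
    send: Callable[[List[Dict[str, str]]], str],
) -> List[Dict[str, str]]:
    """Send every prompt in one conversation so later prompts can build on earlier output."""
    history: List[Dict[str, str]] = []
    for step in steps:
        history.append({"role": "user", "content": step.text})
        reply = send(history)  # the model sees the whole conversation so far
        history.append({"role": "assistant", "content": reply})
    return history


def echo_model(history: List[Dict[str, str]]) -> str:
    """Stand-in for a chat model; it only reports how many messages it received."""
    return f"(reply to message {len(history)})"


steps = build_steps("Course title: Makerspaces as Learning Environments")
history = run_single_chat(steps, echo_model)
```

Keeping a single running history mirrors the decision to type all prompts into one chat, so that each later prompt is answered with the earlier course map in context.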

SWOT Analysis

After developing the ChatGPT prompts, each team member entered them into ChatGPT. Each member typed all prompts, from prompt 1 to prompt 7, in a single chat, ensuring that ChatGPT generated each output with reference to the modules it had produced in response to earlier prompts. Afterwards, each member individually compiled a course design document containing ChatGPT’s output. The document aggregated everything generated from the prompts. It first presented the basic outline of each module, followed by a more detailed plan for each module. The detailed plan contained course materials, guidelines and instructions for the hands-on activity, quiz questions, assignment guidelines and instructions, and a rubric.

Subsequently, each team member reviewed all the documents created by the other team members and provided comments. These comments pertained to the effectiveness and pitfalls of ChatGPT in designing each component of the course. After that, the research team categorized the comments using the SWOT framework. SWOT stands for strengths, weaknesses, opportunities, and threats.
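As a rough illustration of the bookkeeping behind this step, the sketch below records review comments and groups them under the four SWOT headings. It is not the procedure used in the study: the categorization itself was a human judgment made by the research team, and the names `Swot`, `Comment`, and `group_by_category`, the reviewer labels, and the sample comments (paraphrased from the kinds of findings reported in the Results) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Swot(Enum):
    STRENGTH = "strength"
    WEAKNESS = "weakness"
    OPPORTUNITY = "opportunity"
    THREAT = "threat"


@dataclass(frozen=True)
class Comment:
    reviewer: str   # hypothetical label for the commenting team member
    component: str  # course component the comment refers to
    text: str       # the comment itself
    category: Swot  # category assigned by the team, not computed


def group_by_category(comments: List[Comment]) -> Dict[Swot, List[Comment]]:
    """Group comments under the SWOT heading the team assigned to each."""
    grouped: Dict[Swot, List[Comment]] = defaultdict(list)
    for comment in comments:
        grouped[comment.category].append(comment)
    return dict(grouped)


# Illustrative comments only; they are not data from the study.
comments = [
    Comment("R1", "learning objectives", "Objectives use measurable action verbs.", Swot.STRENGTH),
    Comment("R2", "course materials", "A listed reading cannot be found in any database.", Swot.WEAKNESS),
    Comment("R3", "course map", "A first draft is available within minutes.", Swot.OPPORTUNITY),
    Comment("R4", "course map", "Near-identical prompts produced different outputs.", Swot.THREAT),
    Comment("R1", "quiz", "Questions test factual recall only.", Swot.WEAKNESS),
]

grouped = group_by_category(comments)
```

Storing the component alongside each comment makes it possible to see later which parts of the course design (for example, quizzes or reading lists) attracted the most weaknesses.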

Results

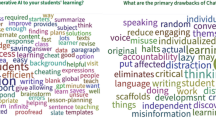

Our SWOT analysis, based on four researchers’ experience of creating a course map, demonstrated several areas of strengths, weaknesses, opportunities, and threats (see Fig. 2). We present strengths and opportunities, followed by weaknesses and threats.

Strengths

Generating Suitable Learning Objectives and Aligning Activities with Learning Objectives

ChatGPT was capable of generating action verbs suitable for various learning objectives in a concise and clear fashion. When instructional designers create a course map, they are often recommended to use clear, measurable action verbs. Words like “understand” or “know” are generally avoided because they are difficult to measure and do not specify the exact cognitive process to be used. Our SWOT analysis showed that ChatGPT was successful in generating suitable learning objectives.

ChatGPT was further capable of aligning instructional activities and assignments with learning objectives it suggested. Assignments were well developed to help students achieve learning objectives for a module. For example, one of the assignments suggested by ChatGPT was a chart activity to compare and contrast different toolkits they explored, and it directly aligned with the learning objective of the module, which was “Students will be able to use and analyze the current landscape of digital and physical making toolkits.”

Suggesting Appropriate Toolkits and Materials

Another strength of ChatGPT was suggesting appropriate toolkits and materials that could be used in a makerspace course, although it lacked the ability to discern which tools and materials would be best suited to an online makerspace course for graduate students. Some of the instructional materials provided a solid foundation for the topic addressed in a module. For example, ChatGPT provided a list of materials that could potentially give learners a broader perspective on the power and potential of makerspaces in education, along with specific strategies for making, tinkering, and engineering in the classroom context.

Providing Discussion Prompts that Encourage Students’ and Instructors’ Critical Thinking

Another strength that stood out was ChatGPT’s ability to generate discussion prompts that encouraged students’ and instructors’ critical thinking. ChatGPT suggested discussion prompts for students that promoted critical thinking by helping students connect what they had learned to their personal experiences. It also helped students understand the activity or assignment better through detailed prompts (see Fig. 3).

Also, some discussion prompts in assignments were detailed enough to help students write a reflection paper. Lastly, some assignment guidelines provided examples that helped students better understand and complete the assignment. Such examples are important because students may not know much about the tools used for the assignment. The four researchers in this study further noted that ChatGPT’s suggested discussion prompts for activities and assignments broadened instructors’ knowledge space and prompted instructors to think more critically about which content is most important to address in each module.

Providing a Diversity of Instructional Activities and Assignments

One of the key strengths of ChatGPT was the diversity of instructional activities and assignments it provided. The broad range of activities and assessments helped the four authors of this paper consider various options they might use in their own teaching. When prompted to create hands-on activities for each module, ChatGPT did not repeat the same type of activity for all the modules. For instance, one of the collaborative hands-on activities that ChatGPT suggested was designing a timeline of makerspaces and constructionist learning, which requires collaboration with peers to build a cohesive timeline. The activity included researching the concept together using various resources, identifying key events and figures, and deciding on the format of the timeline. Another hands-on activity focused on learning how to use different toolkits, and a third focused on creating a plan for community engagement. As such, providing a different set of activities for each module, each focused on a different topic, was one of the strengths of using ChatGPT.

Moreover, as mentioned earlier, we prompted ChatGPT to create a rubric for the assignment created for each module. During the generation of the course document, creating the grading rubrics involved a detailed process of assessing students’ understanding and performance and matching the goals of each course section (see Fig. 4). We reviewed the course map’s standards to build our evaluation criteria. Peer review feedback helped refine the rubrics further. We made sure the rubrics matched the activities and assignments being evaluated. For example, in the “Analyzing Making Toolkits” assignment, the rubric focused on evaluating the thoroughness of the students’ independent research and on how well they organized their findings and applied what they learned. The rubrics were then integrated into the course structure, providing clear benchmarks for performance and supporting the consistent and fair assessment of student work. This approach exemplifies ChatGPT’s strength in crafting assessment tools that not only measure performance but also guide students towards academic success, reflecting its potential to support good alignment between course goals and learning outcomes.

Cross-Referencing Assignments, Courses, and Materials: Alignment

Lastly, ChatGPT showed strength in connecting previous course materials and assignments to another assignment. For instance, ChatGPT would refer to the readings and lecture videos mentioned in the previous module for the assignment. In another case, ChatGPT referred to a hands-on activity when providing a discussion prompt even though we did not mention hands-on activity when instructing ChatGPT to create a discussion activity. We note that ChatGPT automatically linked the individual activities prompted by the instructor.

Opportunities

Decreasing Instructors’ Workload due to Rapid Prototyping of Course Maps

ChatGPT provided opportunities to decrease instructors’ workload by enabling them to produce rapid prototypes of course maps. While the output of ChatGPT cannot substitute for lesson plans, it could serve as the backbone of a new course design that ensures consistency and alignment between learning objectives, instructional activities, and assignments within and across modules. Our analysis noted that ChatGPT can potentially take over time-consuming instructional design tasks, such as information searching and curation, since it provides curated outputs drawn from the information included in the LLM. For instance, when instructed to provide materials on the historical roots of makerspaces and constructionist learning, the LLM was effective in identifying resources written by seminal figures such as Seymour Papert and Mitchel Resnick, who played a critical role in makerspace research and constructionist learning, indicating that the LLM can be an effective tool for curating essential resources. This shows the potential of the LLM as a surveying tool for identifying and curating information; however, it requires source verification by the user.

Promoting Student-Centered Learning

ChatGPT can quickly provide a diverse set of instructional activities and assignments. It therefore gives instructors opportunities to fast-track tasks related to information search and curation. This enables instructional designers to devote more time to designing scaffolds for each instructional activity and to thinking more critically about how to support student engagement during instruction. This shift in how instructors engage in the overall instructional design process can potentially promote more student-centered learning.

Weaknesses

Activities Not Feasible due to Limited Contextual Understanding

While our analysis demonstrated many strengths and opportunities, weaknesses were also noted. ChatGPT lacked contextual understanding of the students and the class. For this analysis, we had asked ChatGPT to create a course map for a four-week online makerspace course for graduate students at the master’s level. While we specifically instructed ChatGPT to create an online course, ChatGPT disregarded this command and designed activities that were suitable for an in-person course. For instance, collaborative activities suggested by ChatGPT involved physical materials for crafting, which would not be possible if students were to collaborate in online or remote settings.

ChatGPT often did not consider how much time it would take to complete an assignment, which lowered the level of feasibility of the course plan. For instance, ChatGPT recommended summarizing an entire book in one week. It also suggested designing, facilitating, and reflecting on a makerspace activity within one week, without realizing the time needed to design the activity, identify a makerspace, receive approval from the makerspace to run the activity, and recruit participants.

Low Reliability of Resources and Materials

There were numerous occasions where ChatGPT fabricated reading materials and references that did not exist. For instance, ChatGPT recommended the following article, which did not exist in any database: Martin, L. M., & Martin, J. P. (2015). Creating makers: Key issues for transforming the education system. TechTrends, 59(1), 21–28. The reliability and accuracy of references are critical when providing information to students. This is one of the most critical weaknesses because relying on non-existent resources or misusing materials would undermine the credibility of the whole course.

Vagueness of Instruction

One notable weakness of ChatGPT was vagueness of instruction. Several activities and assignments did not contain detailed guidelines for students to follow. Instructions provided a high-level description of what needed to be done, but they were too vague for students to perform the activities without additional scaffolding, which made it difficult for instructors to use ChatGPT for anything other than producing outlines of course maps. For instance, one of the hands-on activities suggested by ChatGPT was too open-ended, which may frustrate learners (see Fig. 5).

Repetitive Discussion Activities

Each module had a similar structure with similar types of discussion activities. While having a sense of structure for consistency is important in designing a course, if every discussion is too similar, it could lead to boredom and less engagement from students. This was most prevalent among discussion activities.

Lack of Depth in Quiz and Assessments

Most of the quiz questions focused on testing factual recall and did not measure students’ higher-order thinking skills. For instance, the multiple-choice and true/false questions often focused too much on factual knowledge (see Fig. 6). For ChatGPT to be useful for instructional designers, it needs to provide questions that assess higher-order thinking skills, such as applying the principles of constructionist learning to hypothetical scenarios or evaluating the impact of makerspaces in different contexts. Such lack of depth in assessments could potentially limit its utility and acceptance in the field. The same lack of depth was evident in assignments. Many of the assignments were relevant to key topics yet still shallow. Questions were too generic and lacked relevance to students’ personal experiences. In fact, some of them were so generic that students could use ChatGPT to answer them.

Unreliable Output

ChatGPT would often fail to produce the whole course plan due to a system issue. For example, ChatGPT would only print out two modules and stop when we asked it to create four modules. We had to carefully divide our commands to get the outputs for all the prompts because once ChatGPT stopped, it sometimes did not simply resume but started from the beginning.

Threats

Quality Control

In considering the threats of using ChatGPT for instructional design purposes, quality control emerges as one of the main issues. There were inconsistencies on multiple levels. The biggest inconsistency we observed was in the output itself. Even though we used almost identical prompts to replicate the results, outputs varied across the four researchers’ use of ChatGPT. While this may not necessarily be a threat for an LLM itself, it makes assessing the output more difficult, which directly impacts quality control. If ChatGPT does not provide consistent outputs, there could be quality control issues and limits to scalability.

The aforementioned reliability issues could also be considered threats. Issues such as ChatGPT stopping in the middle of generating content or producing outputs of inconsistent quality would make the results less reliable. Lastly, unverifiable information provided by ChatGPT and the lack of explanation regarding its outputs could also be potential threats.

Discussion

We began this paper highlighting the need to interrogate the use of generative AI, such as ChatGPT, for dynamic content creation of course map for instructional designers. The findings suggest that ChatGPT acted as a performance empowering partner as it supported rapid prototyping of a course map. In particular, our findings demonstrate the potential of ChatGPT to decrease instructors’ workload and address the known challenges in production of course maps, such as the alignment among content scope, delivery mechanisms, and assessment approaches (Chen, 2014; Dabbagh and English, 2015). In leveraging the capabilities of large language models, ChatGPT demonstrated success in generating measurable learning outcomes and carefully aligning instructional activities with proposed learning objectives.

Our analysis also illustrated that ChatGPT holds great potential for helping instructors expedite tasks and thereby address the time constraints of instructional design (Spector & Song, 1995). By suggesting appropriate toolkits and diverse instructional activities, providing detailed rubrics and discussion prompts, and cross-referencing course materials, ChatGPT proved particularly useful for expediting the beginning stages of the instructional design process, such as searching, understanding, and curating instructional resources and course content. By saving time on certain tasks with the support of ChatGPT as a performance-empowering partner, we anticipate that instructors and instructional designers will have more opportunity to focus on creating a student-centered learning environment.

Importantly, when ChatGPT is incorporated as a performance-empowering partner, we emphasize that instructional designers’ nuanced understanding of the domain knowledge is critical for successfully adapting and contextualizing the course map in practice. Our findings highlighted several weaknesses of using ChatGPT for course design, such as lacking understanding of the course context, generating activities that are not feasible, and providing resources and materials that do not exist. Moreover, there were vague instructions, repetitive discussion activities, a lack of depth in assessments, and unreliable output. While some of these weaknesses (e.g., generating activities that are not feasible) could be rectified through additional prompting, we argue that instructors’ nuanced understanding of the domain knowledge is critical to addressing them. For instance, if instructors incorporate recommendations from ChatGPT without fact-checking the materials and assessing the quality of the recommended activities, the instruction could include non-existent resources as well as activities that are not feasible or relevant to students. Further, our findings showed that many activities suggested by ChatGPT lacked adequate scaffolding to help students engage in them, highlighting that simply adopting ChatGPT’s outputs would not help students meet the learning objectives. Given this potential threat, we encourage instructors to build domain knowledge before utilizing ChatGPT so that they can determine what needs to be adapted and contextualized for the course.

Further, we question: is it always beneficial to expedite certain phases of the instructional design process, such as finding and curating information? The use of ChatGPT can potentially affect users’ development of higher-order thinking skills (e.g., creativity, problem-solving) when users become accustomed to obtaining quick and simplified information from ChatGPT, “which can have a negative impact on the students’ motivation to perform independent research and arrive at their own conclusions or solutions” (Farrokhnia et al., 2023, p. 9). When reflecting on the four authors’ own experiences of developing a course, we noted that the time-consuming and iterative process involved in the beginning phases of course preparation is critical. By identifying relevant instructional resources, determining which resources to include and exclude, and examining how to structure and sequence them, instructors can gain clarity about the subject matter and a better understanding of key learning objectives and essential learning activities. In this way, the time-consuming tasks in the beginning stages of the instructional design process might serve as a foundation of knowledge for instructors. If instructors use ChatGPT without doing any foundational work themselves, are they forfeiting a valuable opportunity to learn how to teach and instruct better? We must engage with this question when we decide to integrate ChatGPT in pursuit of expedited progress.

In addition, professionals and researchers in the instructional design field should embody and model the responsible integration of ChatGPT into educational practices, which means balancing the use of the tool with critical thinking and creative endeavor rather than relying on it excessively. Further, instructional designers and researchers need to engage in conversations to determine and disseminate best practices for incorporating ChatGPT into educational practice.

While this study provides a useful overview of utilizing the technology, it has some limitations. First, we only explored the use of ChatGPT to generate content for a single course. Even though we were able to identify strengths, weaknesses, opportunities, and threats of generative AI for instructional design, these may differ depending on the nature of the course being designed. Second, because ChatGPT has been updated since our analyses, the outputs generated by the current version may differ from the results reported in this study. Lastly, there are variations of ChatGPT (e.g., paid and unpaid versions) that may affect the quality of the outputs.

Conclusion

This study explored how instructional designers and instructors can utilize ChatGPT to develop a course by developing a hypothetical online course on the topic of makerspaces and analyzing the results using the SWOT framework. Our SWOT analysis of the use of generative AI for instructional design highlights two points: (1) there is promise in using ChatGPT as a performance empowering partner for the production of instructional course maps; and (2) instructional designers’ nuanced understanding of the domain knowledge is critical for successful adaptation and contextualization of the course map developed by ChatGPT into practice. We share several implications based on the study findings. First, the study provides a guideline on how to design a course with generative AI. By providing an actual design example, the study illustrates the process and outcome of utilizing generative AI in the field of instructional design. Second, the illustrated process could function as a model for instructional designers who use generative AI in their course design. Lastly, identifying the strengths and weaknesses of using generative AI for course design can inform instructional designers in establishing good instructional design practice. Future studies could expand on this study by empirically testing instructional designers’ and learners’ perceived effectiveness of using generative AI and by providing more concrete design guidelines.

Overall, ChatGPT demonstrated that it can create a complete course map containing useful content that can lessen the workload of instructional designers, but our study suggests that human intervention and moderation are still required to ensure that the outputs are reliable and trustworthy. These results provide insights into how generative AI can be utilized and how instructional designers and instructors can work with it to provide optimal experiences for learners.

References

Adiguzel, T., Kaya, M. H., & Cansu, F. K. (2023). Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemporary Educational Technology, 15(3), 429. https://doi.org/10.30935/cedtech/13152

Anderson, J. R., & Schunn, C. D. (2013). Implications of the ACT-R learning theory: No magic bullets. In R. Glaser (Ed.), Advances in instructional psychology: Educational design and cognitive science (Vol. 5, pp. 1–33). Lawrence Erlbaum Associates.

Anderson, J. R., Bothell, D., Byrne, M. D., Douglass, S., Lebiere, C., & Qin, Y. (2004). An integrated theory of the mind. Psychological Review, 111(4), 1036–1060. https://doi.org/10.1037/0033-295X.111.4.1036

Baldassarre, M. T., Caivano, D., Fernandez Nieto, B., Gigante, D., & Ragone, A. (2023). The social impact of generative AI: An analysis on ChatGPT. Proceedings of the 2023 ACM Conference on Information Technology for Social Good. https://doi.org/10.1145/3582515.3609555

Bannan-Ritland, B. (2001). Teaching instructional design: An action learning approach. Performance Improvement Quarterly, 14(2), 37–52. https://doi.org/10.1111/j.1937-8327.2001.tb00208.x

Becker, S. A., Brown, M., Dahlstrom, E., Davis, A., DePaul, K., Diaz, V., & Pomerantz, J. (2018). NMC Horizon Report: 2018 Higher Education Edition. EDUCAUSE.

Birch, D., & Burnett, B. (2009). Bringing academics on board: Encouraging institution-wide diffusion of e-learning environments. Australasian Journal of Educational Technology, 25(1), 117–134. https://doi.org/10.14742/ajet.1184

Chen, S. J. (2014). Instructional design strategies for intensive online courses: An objectivist-constructivist blended approach. Journal of Interactive Online Learning, 13(1), 72–86. https://www.learntechlib.org/p/153514/

Chng, L. K. (2023). How AI Makes its Mark on Instructional design. Asian Journal of Distance Education, 18(2), 32–41. http://asianjde.com/ojs/index.php/AsianJDE/article/view/740

Dabbagh, N., & English, M. (2015). Using student self-ratings to assess the alignment of instructional design competencies and courses in a graduate program. TechTrends, 59, 22–31. https://doi.org/10.1007/s11528-015-0868-4

Di Masi, D., & Milani, P. (2016). Backward design in-service training blended curriculum to practitioners in social work as coach in the PIPPI program. Journal of e-Learning and Knowledge Society, 12(3), 31–40. https://www.learntechlib.org/p/173472/

Drysdale, J. (2019). The collaborative mapping model: relationship-centered instructional design for higher education. Online Learning, 23(3), 56–71. https://doi.org/10.24059/olj.v23i3.2058

Editorial Team. (2018, February 28). Impacts of artificial intelligence and higher education’s response. Retrieved August 1, 2019, from InsideBIGDATA website: https://insidebigdata.com/2018/02/28/impacts-artificial-intelligence-higher-educations-response/

Farrokhnia, M., Banihashem, S. K., Noroozi, O., & Wals, A. (2023). A SWOT analysis of ChatGPT: Implications for educational practice and research. Innovations in Education and Teaching International, 1–15. https://doi.org/10.1080/14703297.2023.2195846

Freeman, A., Becker, S. A., Cummins, M., Davis, A., & Giesinger, C. H. (2017). NMC/CoSN Horizon Report: 2017 K–12 Edition. Austin, Texas: The New Media Consortium.

Gallup, & Northeastern University. (2018). Optimism and anxiety: Views on the impact of artificial intelligence and higher education’s response. Gallup.

Gillespie, F. (1998). Instructional design for the new technologies. New Directions for Teaching and Learning, 1998(76), 39–52. https://doi.org/10.1002/tl.7603

Hamilton, E., & Owens, A. M. (2018). Computational thinking and participatory teaching as pathways to personalized learning. In R. Zheng (Ed.), Digital Technologies and Instructional Design for Personalized Learning (pp. 212–228). IGI Global. https://doi.org/10.4018/978-1-5225-3940-7.ch010

Haque, M. U., Dharmadasa, I., Sworna, Z. T., Rajapakse, R. N., & Ahmad, H. (2022). “I think this is the most disruptive technology”: Exploring sentiments of ChatGPT early adopters using Twitter data. arXiv. https://doi.org/10.48550/arXiv.2212.05856

Hodell, C. (2015). ISD from the ground up: A no-nonsense approach to instructional design. ATD Press.

Hora, M. T., & Ferrare, J. J. (2013). Instructional systems of practice: A multidimensional analysis of math and science undergraduate course planning and classroom teaching. Journal of the Learning Sciences, 22(2), 212–257. https://doi.org/10.1080/10508406.2012.729767

Kak, S. (2018, January 9). Will traditional colleges and universities become obsolete? Retrieved August 1, 2019, from The Conversation website: https://theconversation.com/universities-must-prepare-for-a-technology-enabled-future-89354?xid=PS_smithsonian

Klein, J. D., & Kelly, W. Q. (2018). Competencies for instructional designers: A view from employers. Performance Improvement Quarterly, 31(3), 225–247. https://doi.org/10.1002/piq.21257

Lee, H. (2023). The rise of ChatGPT: Exploring its potential in medical education. Anatomical Sciences Education, 00, 1–6. https://doi.org/10.1002/ase.2270

Mancilla, R., & Frey, B. (2020). A model for developing instructional design professionals for higher education through apprenticeship. The Journal of Applied Instructional Design, 9(2), 1–8. https://doi.org/10.51869/92rmbf

McGraw, K. (1994). Developing a user-centric EPSS. Technical & Skills Training, 5(7), 25–32.

Mohammed, J., Schmidt, K., & Williams, J. (2022). Designing a new course using Backward design. In Paper presented at 2022 ASEE Annual Conference & Exposition. Minneapolis, MN. ASEE. https://peer.asee.org/designing-a-new-course-using-backward-design

OpenAI. (2023). ChatGPT (Mar 14 version) [Large language model]. https://chat.openai.com/chat

Paquette, G. (2014). Technology-based instructional design: Evolution and major trends. In Spector, J., Merrill, M., Elen, J., Bishop, M. (Eds.). Handbook of Research on Educational Communications and Technology (pp. 661–671). New York, NY: Springer. https://doi.org/10.1007/978-1-4614-3185-5_53

Parsons, B., & Curry, J. H. (2024). Can ChatGPT Pass Graduate-Level Instructional Design assignments? Potential Implications of Artificial Intelligence in Education and a Call to Action. TechTrends, 68, 67–78. https://doi.org/10.1007/s11528-023-00912-3

Potter, S. L., & Rockinson-Szapkiw, A. J. (2012). Technology integration for instructional improvement: The impact of professional development. Performance Improvement, 51(2), 22–27. https://doi.org/10.1002/pfi.21246

Qadir, J. (2023). Engineering education in the era of ChatGPT: Promise and pitfalls of generative AI for education. Proceedings of the 2023 IEEE Global Engineering Education Conference (EDUCON) (pp. 1–9). https://doi.org/10.1109/EDUCON54358.2023.10125121

Reynolds, H. L., & Kearns, K. D. (2017). A planning tool for incorporating backward design, active learning, and authentic assessment in the college classroom. College Teaching, 65(1), 17–27. https://doi.org/10.1080/87567555.2016.1222575

Rizk, Y., Awad, M., & Tunstel, E. W. (2019). Cooperative heterogeneous multi-robot systems: A survey. ACM Computing Surveys (CSUR), 52(2), 1–31. https://doi.org/10.1145/3303848

Romiszowski, A. J. (1981). A new look at instructional design. part I. learning: Restructuring one’s concepts. British Journal of Educational Technology, 12(1), 19–48.

Romiszowski, A. J. (2016). Designing instructional systems: Decision making in course planning and curriculum design. Routledge.

Rothwell, W. J., & Kazanas, H. C. (2011). Mastering the instructional design process: A systematic approach. John Wiley & Sons.

Schank, R., & Kass, A. (1988). Knowledge representation in people and machines. In U. Eco, M. Santambrogio, & P. Violi (Eds.), Meaning and mental representations (pp. 181–200). Indiana University Press.

Spector, J. M., & Song, D. (1995). Automated instructional design advising. In R. D. Tennyson & A. E. Baron (Eds.), Automating instructional design: Computer-based development and delivery tools (pp. 377–402). Springer-Verlag. https://doi.org/10.1007/978-3-642-57821-2_15

Susnjak, T. (2022). ChatGPT: The end of online exam integrity? arXiv. https://doi.org/10.48550/arXiv.2212.09292

Taatgen, N. A., Lebiere, C., & Anderson, J. R. (2006). Modeling paradigms in ACT-R. In R. Sun (Ed.), Cognition and multi-agent interaction: From cognitive modeling to social simulation (pp. 29–52). Cambridge University Press.

Tennyson, R. D. (Ed.). (1994a). Automating instructional design, development, and delivery. Springer-Verlag.

Tennyson, R. D. (1994b). Knowledge base for automated instructional system development. In R. D. Tennyson (Ed.), Automating instructional design, development, and delivery (pp. 29–59). Springer-Verlag. https://doi.org/10.1007/978-3-642-78389-0_3

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10(1), 15. https://doi.org/10.1186/s40561-023-00237-x

Tripp, S. D., & Bichelmeyer, B. (1990). Rapid prototyping: An alternative instructional design strategy. Educational Technology Research and Development, 38(1), 31–44. https://doi.org/10.1007/BF02298246

Villalta-Cerdas, A., & Yildiz, F. (2022, January). Creating Significant Learning Experiences in an Engineering Technology Bridge Course: a backward design approach. In 2022 ASEE Virtual Annual Conference Content Access. ASEE. Retrieved from https://par.nsf.gov/biblio/10352624

Wiggins, G., & McTighe, J. H. (1998). Understanding by design. Association for Supervision and Curriculum Development.

Further Reading

Al Ahmed, Y., & Sharo, A. (2023). On the education effect of Chat-GPT: Is AI ChatGPT to dominate education career profession? Proceedings of the 2023 International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS) (pp. 79–84). https://doi.org/10.1109/ICCNS58795.2023.10192993

Ethics declarations

Competing Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Choi, G.W., Kim, S.H., Lee, D. et al. Utilizing Generative AI for Instructional Design: Exploring Strengths, Weaknesses, Opportunities, and Threats. TechTrends 68, 832–844 (2024). https://doi.org/10.1007/s11528-024-00967-w

DOI: https://doi.org/10.1007/s11528-024-00967-w