Abstract

This work presents the design, implementation and validation of learning techniques based on the kNN scheme for gesture detection in prosthetic control. To cope with high computational demands in instance-based prediction, methods of dataset reduction are evaluated considering real-time determinism to allow for the reliable integration into battery-powered portable devices. The influence of parameterization and varying proportionality schemes is analyzed, utilizing an eight-channel-sEMG armband. Besides offline cross-validation accuracy, success rates in real-time pilot experiments (online target achievement tests) are determined. Based on the assessment of specific dataset reduction techniques’ adequacy for embedded control applications regarding accuracy and timing behaviour, decision surface mapping (DSM) proves itself promising when applying kNN on the reduced set. A randomized, double-blind user study was conducted to evaluate the respective methods (kNN and kNN with DSM-reduction) against ridge regression (RR) and RR with random Fourier features (RR-RFF). The kNN-based methods performed significantly better (\(p < 0.0005\)) than the regression techniques. Between DSM-kNN and kNN, there was no statistically significant difference (significance level 0.05). This is remarkable in consideration of only one sample per class in the reduced set, thus yielding a reduction rate of over 99% while preserving success rate. The same behaviour could be confirmed in an extended user study. With \(k=1\), which turned out to be an excellent choice, the runtime complexity of both kNN (in every prediction step) as well as DSM-kNN (in the training phase) becomes linear concerning the number of original samples, favouring dependable wearable prosthesis applications.

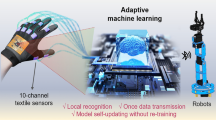

Graphical abstract

1 Introduction and motivation

The development of prosthetics has been continuously improving since the twentieth century. After being solely a cosmetic replacement for amputees, prostheses evolved to body-driven functional devices and, especially beginning in the 1940s, to powered myoelectric systems ([102], p. 32).

From the existing myographic methods to collect data from muscular activity, this paper focuses on surface electromyography (sEMG), which comprises the capturing, processing and analysis of electromyographic signals, i.e. the changes over time in electric potential originating from skeletal muscles (cf. [63, 68]), measured by electrodes on the skin surface.

Prosthetic control describes the general concept behind the process from capturing signal data (sensor side) via processing and analyzing it to forwarding the data interpretation to a prosthetic device (actuator side)—with potential feedback loops. The (closed-loop) motor-control of the prosthetic actuators themselves, i.e. the single joints of the prosthetic device by, e.g. direct force control, impedance or admittance control will not be considered in the scope of this work.

There has been a variety of control schemes presented for EMG-based prosthetic devices in the area of open-loop myocontrol [81, p. 252]. As a consequence of their diverse fundamental characteristics, they differ in the achieved granularity, precision and stability of movements (dexterity).

Pattern-recognition-based myoelectric control schemes utilize machine learning methods to correctly detect gestures by means of classification or regression. Specific features can be calculated from the raw or filtered signal in time-, frequency- and time-frequency domain [81, p. 250]. The basic principle of action (intent/intention) detection is to predict a specific action from the sensed biological signal.

This work examines and extends the k-nearest neighbour learning scheme (kNN). The respective methods are based upon detecting specific gestures defined beforehand, leading in the application phase to their real-time recognition. For intermediate gestures that are not explicitly learned, proportionality scaling techniques are introduced.

Commonly applied pattern recognition methods in myocontrol often show disadvantages in terms of generalizability, intuitive control and robustness regarding “electrodes shift, varying force levels” [50] (e.g. overshooting) and others. To cope with these limitations, an extended kNN learning scheme seemed promising due to its simplicity, incrementality and good results in exemplary tests.

Referring to the results of kNN in the context of EMG-based prosthetics in Sect. 2.1, it can be seen that in most cases, kNN delivers high performance in terms of accuracy and success rate respectively. [59] states that “excellent performance can be achieved if sufficient training data is available”.

kNN is shown to be relatively insensitive to noise [4], electrode displacement [11] and sampling frequency variation [16] which speaks in favour of robustness.

Its low complexity is a main advantage of kNN, specifically in the context of implementation for embedded systems. As with all instance-based machine learning techniques, it does not require explicit model training. However, high computational demands during prediction have to be coped with. Thus, this paper introduces mechanisms for dataset reduction, combines them with kNN and finally analyzes a selected method, referred to as DSM-kNN.

The applicability to embedded, wearable systems is specifically important since “users of modern prosthetics are now given access to applications that can run on external devices capable of fine tuning and setting up gestures or gesture patterns [...] [to] allow[] high-level customization” [102] and comes with non-functional constraints regarding energy consumption, portability, timeliness, safety and dependability.

Precise sensor placement on specific muscles is usually considered essential for achieving high detection performance from sEMG signals [83]. This placed-sensor approach is not suitable for wearable devices, but could be compensated by (time-)frequency-domain feature analysis, although it “cannot be realised in real-time using the simple embedded processors housed in EMG wearables”, as mentioned by [83]. They present an operational comparison of applicable features, projection techniques and classifiers. On this basis, they introduce a time-domain algorithm “suitable for deployment on embedded processors for real-time inference in a portable, battery-operated device” [83] by reducing clock cycle and therefore power consumption without impairing accuracy. However, their promising approach neither introduced proportionality schemes to classification nor conducted online user studies and can be seen as a complement.

Although kNN has been applied in several EMG studies, there was no in-depth examination of the strategy’s parameters nor has it been particularly combined with data reduction techniques as in our work.

2 Related work

A variety of machine learning strategies has been followed over the years in the context of myocontrol (cf. [81, p. 251]). This includes neural networks in different compositions [4, 6, 8, 23, 30,31,32, 36, 39, 42, 45, 47, 82, 86, 90, 93], including such based on adaptive resonance theory [91]; support vector machines and variants [16, 39, 48, 49, 66, 84, 86, 90, 108]; decision trees [30]; (naïve) Bayesian classification [52, 70, 90, 103]; fuzzy logic approaches [3, 69]; Gaussian mixture models [44, 46, 106]; logistic regression [30]; logistic model trees [30]; classification via independent component analysis (ICA) [93], canonical discriminant analysis [71]; linear discriminant analysis (LDA) [6, 16, 20, 22, 23, 31, 46, 50, 53, 98, 108]; quadratic discriminant analysis (QDA) [46, 53, 90, 98]; random forest [86]; extreme learning machines (ELM) [90]; hidden Markov models [13]; evolvable hardware (EHW) with embedded Cartesian genetic programming [37]; and kNN (see Sect. 2.1).

The following features and transformations have proven effective in the context of pattern-recognition-based myoelectric control (cf. [81, p. 250-251]): linear envelope [107, 10, 76, 104, p. 271]; zero crossings and variance [87]; integral absolute value, variance and zero crossing [94]; mean absolute value [6], its slope, waveform length, number of waveform slope sign changes and number of waveform zero crossings (Hudgins set of features) [45]; frequency spectrum via Fourier transform [26, 39, 93], random Fourier features [34, 35] and local frequency and phase content via short-time Fourier transform [22, 23, 41, 91]; autoregressive coefficients [14, 55, 103]; cepstral coefficients [14, 103]; wavelet decomposition coefficients [8, 22, 23, 36, 47, 48, 67, 84] and their eigenvalues [66]; wavelet packet feature sets [22, 23], motor unit action potentials (MUAPs) via wavelet packet transform and fuzzy C-means clustering [85]; signal energy (overall, within Hamming windows, within trapezoidal windows) as temporal features and spectral magnitude as well as spectral moments from short-time Thompson transform [91]; moving approximate entropy [2]; and contraction factors from fractal modelling [55] and fractal dimensions [7, 43].

A review of classification techniques for forearm prostheses is given in [77, p. 725], along with information about features, performed experiments, selected subjects and achieved results. A review of the multitude of features and an evaluation thereof on EMG data with significance analysis was conducted in [79, p. 4834–4838].

The following subsections particularly summarize the utilization of the kNN learning scheme in the same context, as well as applicable data reduction techniques.

2.1 Nearest neighbour techniques

kNN was first proposed for the parameter k (number of neighbours to consider) set to 1 as “nearest neighbor decision rule” in 1967 [18].

The basic principle of kNN consists of comparing newly arriving data (instances) with all instances that were captured as reference data in an initial step and considering a subset of them (number of reference instances k) for a prediction decision. Although this initial step does not comprise the generation of a generalized model (training of a model), it is usually called training (also in this paper).

The comparison of instances refers to the comparison of distances between a new instance and the training instances by using a specified distance measure.

After calculating the distance, kNN selects a number k of nearest instances to the new instance. If their labels are immediately averaged (which would have to be specified, usually arithmetic mean), this leads to a prediction in the form of a regression method (kNN regression). If instead of averaging a majority vote is applied on the k nearest instances, the label with the most votes is yielded as categorical label prediction (kNN classification). In this sense, the focus of this paper is on kNN classification which will be extended by a proportionality scaling scheme (see Sect. 4.1).

In place of directly majority voting after a set of neighbouring instances has been selected (uniform weights), distance-based weighting factors can be calculated for all instances of the selection. For each class, these weightings are summed up, so that the prediction is determined as the class with the maximum sum. This distance weighting is typically introduced to avoid that a majority of class labels from instances which are farther away (but still within the neighbourhood) influences the prediction at the expense of instances which are closer but in minority.
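The difference between uniform majority voting and distance weighting can be illustrated with a minimal sketch. It assumes the Euclidean distance and an inverse-distance weight (1/d); both are illustrative choices, and the function name `knn_classify` as well as the toy data are hypothetical rather than taken from this work.

```python
import math
from collections import defaultdict

def knn_classify(train, query, k=1, weighted=False):
    """Predict a label for `query` from a list of (features, label) pairs."""
    # Distance from the query to every stored training instance.
    dists = [(math.dist(features, query), label) for features, label in train]
    # Keep the k nearest instances.
    neighbours = sorted(dists, key=lambda pair: pair[0])[:k]
    votes = defaultdict(float)
    for dist, label in neighbours:
        # Uniform weights count each neighbour once; the assumed 1/d
        # weighting lets closer neighbours contribute more.
        votes[label] += 1.0 / (dist + 1e-12) if weighted else 1.0
    # The class with the maximum (weighted) vote sum is predicted.
    return max(votes, key=votes.get)

# Toy one-dimensional data: one close "a" instance, two farther "b" instances.
train = [((0.0,), "a"), ((1.0,), "b"), ((1.2,), "b")]
uniform_vote = knn_classify(train, (0.2,), k=3)
weighted_vote = knn_classify(train, (0.2,), k=3, weighted=True)
```

In this toy case, the uniform vote follows the farther majority ("b"), whereas the distance-weighted vote follows the closer minority ("a").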

kNN has been applied for EMG-based pattern recognition a variety of times. In 1983, a nearest neighbour classifier (\(k=1\)) was already chosen in the context of prosthetics [21]. Additionally, a variant of prototype reduction (see Sect. 2.2) was introduced. There was no decrease of performance when reducing the number of samples per gesture from 100 to 2–6 (for power grasp, flexion, extension, pronation) and to 40–46 (for rest and supination), respectively.

Since then, nearest-neighbour-based methods have been evaluated in various EMG-based gesture detection studies rather unsystematically, mainly for comparison to other classifiers, both for able-bodied subjects and amputees (Table 1). For example, kNN was shown to perform as well as multi-layer neural networks. In this context, it was mentioned that the “kNN classifier may be considered to be a better choice for classification of continuous EMG signals to actuate the prosthetic drive” [82, p. 5].

Usually, kNN also exposed similarly good detection accuracy as QDA, SVMs, Gaussian as well as Bayesian methods, and performed comparably or better than LDA (see Table 1). In some cases, kNN was shown to perform statistically significantly better than LDA [46, 53] and even QDA [46]. Further work pointed out that “there was no significant [difference] between weak-load algorithms (NB, KNN, QDA, and ELM) and heavy-load algorithms (SVM and MLP) after applying the dimension reduction” [90]. An experiment with \(k=9\) showed that the “kNN classifier [was] better at classifying the EMG signal with PCA transformed statistical data compared to other classifiers in accuracy, sensitivity and specificity” [30], namely logistic regression, decision and logistic model trees as well as a neural network classifier.

Besides these comparisons of different classifiers without external factors, a study of noise influence on kNN revealed a high stability of detection accuracy even for reduced signal-to-noise ratios if k is chosen properly. For \(k=15\), the accuracy decreased from 100 to 83% when the simulated noise was increased from 25 to 5 dB SNR. With this, it showed a higher robustness than a neural network classifier.

The mid-term performance for discovering the influence of electrode shift on kNN showed a basically constant average performance from one day to another [62]. This confirmed a former analysis of performance over time [37] and is important for prosthetic devices since repositioning regularly introduces an electrode displacement which otherwise would require immediate retraining.

Finally, kNN has shown to be comparably insensitive to the reduction of recording sample rate. In an experiment, kNN achieved higher accuracies than SVM and LDA at all sampling rates, and the performance reduction for frequency reduction was not as steep as for other classifiers [16]. Instead, for a change from 1000 to 200 Hz, the accuracy reduced only minimally from 99.9 to 99%. At 20 Hz, it still provided 78% (vs. 71%/56% for SVM/LDA). This behaviour of the kNN method is highly advantageous for embedded systems scenarios, since lower sampling rates lead to lower CPU clock frequencies and therefore reduced powering requirements, which in the end support a low-cost approach and increase portability by requiring smaller dimensions for the prosthetic controller.

2.2 Training dataset reduction algorithms

An important drawback of instance-based learning schemes is the necessity of comparing new arriving instances whose labels are meant to be predicted to all already stored ones (“training” data). In order to do so, all instances have to be iterated which leads to potentially—depending on the amount of data—high computational effort in the prediction phase.

Typically, two main approaches to improve the performance of nearest neighbour classifiers are pointed out [9]. The first is the utilization of efficient, optimized data structures (“ball-tree data structures, hashing” [58], “kd-tree” [9]). The second approach (thinning) can be seen both in a horizontal (feature-space) as well as in a vertical dimension (sample-space). Aside from that, there are techniques using an approximation of the kNN classification rule, for example large margin nearest neighbour [58].

In terms of horizontal thinning, the concept of feature selection has been applied in the context of pattern-recognition-based prosthetic control for large feature set dimensions, for instance biologically inspired methods such as genetic algorithms and particle swarm optimization [81, p. 251]. Horizontal thinning can be generalized (to horizontal data reduction) when feature projection, positioning [58] and discretization [64] techniques are also considered. These schemes come along with dimensionality reduction algorithms. Examples are principal component analysis (PCA) [39] and adaptations thereof [71] as well as variants of linear discriminant analysis (LDA) [73].

However, the examinations made in this work cope with vertical data reduction techniques. The general idea is to reduce the computational effort of prediction steps in instance-based learning by decreasing the number of instances within the training set. This process is usually referred to as instance reduction or prototype reduction [29, 99]. In principle, prototype stands synonymously for data instance or sample. Nevertheless, it already indicates that it refers to specific instances which represent a larger amount of instances to a certain extent.

Prototype reduction methods can be divided into prototype selection (vertical thinning) on the one hand [29] and prototype generation on the other hand [99]. While the former selects a subset of instances from the existing ones, the latter creates new instances based on the existing ones to represent the whole dataset.
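The distinction between the two families can be sketched as follows. Both functions are deliberately simple, hypothetical stand-ins (regular subsampling for selection, per-class centroids for generation) and are not among the specific algorithms reviewed in [29, 99] or evaluated later in this work.

```python
def select_prototypes(train, step):
    """Prototype selection: keep a subset of the existing instances."""
    return train[::step]

def generate_centroids(train):
    """Prototype generation: create one new instance per class (its centroid)."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return [(tuple(v / counts[label] for v in acc), label)
            for label, acc in sums.items()]

train = [((0.0, 0.0), "rs"), ((0.2, 0.0), "rs"),
         ((1.0, 1.0), "fl"), ((1.0, 0.8), "fl")]
selected = select_prototypes(train, step=2)   # members of the original set
generated = generate_centroids(train)         # new points, one per class
```

Every selected prototype is an original sample, while the generated prototypes generally do not coincide with any original sample.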

3 Requirements and concept

The experimental studies and the developments which they are based on are driven by the requirements of Sect. 3.1 and composed of different parts:

- First, a pilot dataset of several (full-intensity) gesture exertions is captured from the authors in order to conduct an offline cross-validation analysis of kNN parameters on gesture classification without real-time application.

- Second, the obtained kNN parameter configuration is applied in a real-time scenario, in which new (full-intensity) gesture data is gathered. Additionally, an approach of proportionality scaling is introduced here. With that, real-time gesture detection performance is measured in an online target achievement test with just one subject. The success rate in this pilot study is utilized to analyze the influence of proportionality scaling parameters while testing three levels of exertion intensity (but just training on full intensity).

- Third, the two determined parameter configurations (kNN and proportionality scaling) are tested in a real-time user study with 12 subjects (and in an extended user study with 4 subjects). Again, target achievement tests are conducted, including three levels of gesture exertion intensity for detection (but just full-intensity for training). No further parameter optimization takes place in this step. Moreover, a data reduction technique is introduced and applied to each subject’s data. The success-rate performance of the non-reduced and the reduced data approach are compared.

3.1 Requirements

The requirements listed in Table 2 are to be met by the learning strategies developed in this work. While R1–R4 represent general prerequisites, R5 constitutes an additional constraint for embedded system implementations.

R1 and R2 are considered the minimum standard for myocontrol, while R3 targets the transfer from offline to online scenarios. R4 is motivated by the benefit of home recalibration for prosthetic users [57, 74].

For R5, specific sub-requirements have been defined. The general motivation of providing an algorithm suited for embedded systems and still delivering high performance is the tendency of developing wearable systems that are usable stand-alone without the necessity of connecting standard computers.

3.2 Sensor hardware

A product widely used in research—also in this work—is the Myo wireless armband, produced from 2013 to 2018 by the Canadian company Thalmic Labs Inc. which is characterized by the following (cf. [101]):

- Eight EMG electrodes with ST 78589 operational amplifier per electrode.

- Maximum sampling frequency of 200 Hz.

- 9-axis IMU with 3-axis gyroscope, 3-axis accelerometer and 3-axis magnetometer (InvenSense MPU-9150).

- Freescale Kinetis ARM Cortex M4 120 MHz MK22FN1M microcontroller.

- Communication via BLE with Nordic nRF51822 to HM-11 BLE dongle.

- Vibration motor and LEDs for signalling.

- Two lithium batteries (3.7 V, 260 mAh), USB-charged.

No IMU information is utilized in the context of this work.

3.3 Signal processing and nearest-neighbour-based methods

In general, a kNN-based classification approach will be given priority over kNN regression, as the latter exposed a high extent of instability in preliminary experiments.

To keep the computational demands as low as possible for an embedded prosthetic control system, we aimed at utilizing time-domain features due to their lower complexity. Specifically, the linear envelope of the signal will be used as input feature. It can be shown that the majority of the discriminatory effect in the widely used Hudgins EMG feature set stems from the mean absolute value [88]. In this sense, the reduced demands for obtaining the amplitude data by calculating the absolute value are combined with a window length of 1 to not induce further calculations. As in similar publications [74], this is followed by low-pass filtering with a cut-off frequency of 1 Hz by a second-order Butterworth filter, as “at least 90% of the power in the power spectral density estimates were found to be below 1 Hz” [76] in the rectified signal.
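The described feature extraction (rectification with a window length of 1, followed by a second-order Butterworth low-pass at 1 Hz) might be sketched per channel as below. This is a minimal illustration under assumptions: the filter is realized as a causal biquad obtained via the bilinear transform, the sample rate is taken as 200 Hz, and the function names are hypothetical rather than those of the implementation used in this work.

```python
import math

def butter2_lowpass_coeffs(fc, fs):
    """Biquad coefficients of a 2nd-order Butterworth low-pass (bilinear transform)."""
    w0 = 2.0 * math.pi * fc / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / math.sqrt(2.0)   # sin(w0) / (2Q) with Q = 1/sqrt(2)
    a0 = 1.0 + alpha
    b = [(1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a

def linear_envelope(samples, fc=1.0, fs=200.0):
    """Rectify one EMG channel and low-pass filter it sample by sample."""
    b, a = butter2_lowpass_coeffs(fc, fs)
    x1 = x2 = y1 = y2 = 0.0
    envelope = []
    for s in samples:
        x0 = abs(s)                          # full-wave rectification
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        envelope.append(y0)
        x2, x1 = x1, x0                      # shift the filter state
        y2, y1 = y1, y0
    return envelope

# Alternating +/-1 input: the rectified signal is constant, so the
# envelope settles at an amplitude of 1 after the filter transient.
env = linear_envelope([(-1.0) ** i for i in range(2000)])
```

Each output sample needs only five multiplications and a handful of state variables per channel, which is in line with the goal of low computational demands.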

The gestures chosen to evaluate the performance are selected among rest state (rs), power grasp (pw), pointing (pn), wrist flexion (fl), wrist extension (ex), wrist pronation (pr) and wrist supination (su).

In order to evaluate the static performance of the algorithm and specifically to match requirement R1, the cross-validation accuracy on a variety of Myo armband EMG datasets captured by the authors will be examined. For this purpose, these datasets comprise four repetitions per gesture. One repetition contains the filtered eight-channel-EMG data when exerting one specific gesture for 2 s at the maximum sample rate of the Myo armband, namely 200 Hz. Multiple repetitions are necessary as the gathered samples within one repetition cannot be considered independent and identically distributed. The stochastic dependence is abolished across multiple repetitions since there are interruptions in time, specifically because of training other gestures in between, before capturing the next repetition. With that, a block-wise (group-wise) cross-validation is possible so that samples within one block (group/repetition) are not validated against samples within the same block. In this way, a preventive measure against overfitting is established. In particular, a leave-one-group-out cross-validation will be applied, i.e. selecting one block as testing set while the others form the training set, for all possible combinations. In the end, the arithmetic mean of the single accuracies (i.e. correct classifications relative to all classifications) is used to characterize the accuracy of the whole dataset.
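A leave-one-group-out cross-validation of this kind can be sketched as follows, with each repetition index treated as one group. The sketch assumes a plain 1-nearest-neighbour classifier with Euclidean distance; the function names and the toy data are hypothetical.

```python
import math

def nearest_label(train, query):
    """Label of the training instance closest to `query` (1-NN)."""
    return min(train, key=lambda item: math.dist(item[0], query))[1]

def leave_one_group_out_accuracy(data):
    """data: list of (features, label, group); group = repetition index."""
    groups = sorted({group for _, _, group in data})
    accuracies = []
    for held_out in groups:
        # Samples of the held-out repetition are never part of the training set.
        train = [(x, y) for x, y, g in data if g != held_out]
        test = [(x, y) for x, y, g in data if g == held_out]
        correct = sum(nearest_label(train, x) == y for x, y in test)
        accuracies.append(correct / len(test))
    # Arithmetic mean of the per-fold accuracies.
    return sum(accuracies) / len(accuracies)

# Toy data: two gestures, three repetitions, two samples per repetition.
toy = ([((0.1 * r + 0.01 * s, 0.0), "rs", r) for r in range(3) for s in range(2)]
       + [((1.0 + 0.1 * r + 0.01 * s, 1.0), "fl", r) for r in range(3) for s in range(2)])
accuracy = leave_one_group_out_accuracy(toy)
```

Because whole repetitions are held out, temporally adjacent (and therefore highly similar) samples cannot appear on both sides of the split.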

The parameters which can be altered in kNN for static cross-validation are the number of neighbours to be considered (k), the distance metric for comparing sample differences and the weighting of selected samples’ data values. It is known that kNN’s “performance is critically dependent on the selection of k and a suitable distance measure” [59, p. 3] so that these will be subject to a specific analysis.

A problem with kNN classification is the fundamental characteristic that no intermediate states can be predicted. Therefore, kNN classification will be extended by proportionality scaling schemes to provide proportional control.

The following concepts are applicable for kNN classification based on majority-voting regarding the occurrences of individual class labels.

It is assumed that the intensity of an exerted action/gesture is proportional to the amplitude of the EMG signal’s linear envelope [24] (averaged for all channels). By analyzing this magnitude, a proportionality scaling can be applied as soon as a gesture has been detected [45, 89]. To obtain a correct gesture classification from samples of a specific gesture at lower intensity levels, the samples are normalized (assuming that the signal shape is similar when comparing signals of the same gesture at different intensity levels).

Furthermore, a threshold for the rest action, i.e. the state where no gesture is exerted, will be introduced (rest magnitude thresholding). The motivation for this is that, if samples are closer to the rest state than to the specific real gesture, they would be classified as rest until the transition point in the signal amplitude is reached. The rest state usually resides at around zero signal amplitude, unless distinct postures are considered where this might differ due to the limb position effect.

The concept of the rest magnitude thresholding consists of measuring the average rest activity and basing a threshold of signal amplitude on this value, possibly altered by further parameters. If this threshold amplitude is exceeded when executing the prediction on a new sample, the classification takes place and a class label of the available ones except rest is assigned. Otherwise, the new sample is considered as rest.
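The interplay of rest magnitude thresholding, normalization and proportionality scaling can be sketched as below. Several details are assumptions made only for illustration: the normalization divides by the Euclidean norm, the scaling factor is the ratio of the sample's mean amplitude to the full-intensity class average (clipped to 1), and `rest_factor` is a hypothetical threshold parameter. The concrete parameterization used in this work may differ.

```python
import math

def mean_amplitude(sample):
    """Envelope amplitude averaged over all channels."""
    return sum(sample) / len(sample)

def normalize(sample):
    """Scale a sample to unit Euclidean norm (assumed normalization)."""
    norm = math.sqrt(sum(v * v for v in sample)) or 1.0
    return [v / norm for v in sample]

def fit(train, rest_label="rs"):
    """Class magnitude averages plus normalized non-rest reference samples."""
    sums, counts = {}, {}
    for sample, label in train:
        sums[label] = sums.get(label, 0.0) + mean_amplitude(sample)
        counts[label] = counts.get(label, 0) + 1
    class_mean = {label: sums[label] / counts[label] for label in sums}
    references = [(normalize(s), l) for s, l in train if l != rest_label]
    return class_mean, references

def predict(sample, class_mean, references, rest_label="rs", rest_factor=2.0):
    amp = mean_amplitude(sample)
    # Rest magnitude thresholding: below the threshold, no classification.
    if amp <= rest_factor * class_mean[rest_label]:
        return rest_label, 0.0
    # 1-NN classification on the normalized sample (rest excluded).
    query = normalize(sample)
    label = min(references, key=lambda ref: math.dist(ref[0], query))[1]
    # Proportionality scaling relative to the full-intensity class average.
    return label, min(1.0, amp / class_mean[label])

# Two-channel toy data recorded at full intensity.
train = [([0.01, 0.01], "rs"), ([1.0, 0.2], "fl"), ([0.2, 1.0], "ex")]
class_mean, references = fit(train)
half_flexion = predict([0.5, 0.1], class_mean, references)
```

In this toy case, a sample with the same channel pattern as the flexion training sample but half its amplitude is classified as flexion with a scaling factor of about 0.5.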

Requirement R3 will be evaluated by means of target achievement tests. First, the presented concepts will be evaluated in pilot experiments without being statistically representative. The tendencies obtained are used as a baseline for a user study with multiple subjects following afterwards. For both versions of experiments, several gestures will be tested on different signal intensity levels (for instance exerting just one third of a full wrist flexion), after training solely took place on full intensity level. The single gesture has to be reached and held for a certain period of time without deviating too much within some error range in order to consider the task as successful. For this purpose, the subject will see a visual stimulus in the form of a hand model to be followed, as well as another hand model visualizing the current gesture prediction (as in Fig. 10). The results will be compared to those obtained from state-of-the-art ridge regression methods.

The final user study will be conducted in a double-blind manner in order to provide comparability of the algorithms. Therefore, the selection of gestures and intensity levels during one experiment will be randomized. For the purpose of not favouring a single method over another (if there should be a time-dependency of success), the occurrences of methods and levels will be equally distributed across the available time slots.

Requirement R4 is met inherently by the standard kNN approach since in every prediction step, each instance of the training set is compared with the sample to be predicted for obtaining the particular distance. This means if there are new samples to be stored in the training set, they are directly taken into account during prediction, thus leading to incrementality.

3.4 Assessment of embedded applicability

The applicability on embedded systems is specified in requirement R5 with its sub-components R5.1–R5.4.

To meet requirement R5.1, it is necessary to reduce the kNN computation effort in the prediction phase. As an instance-based learning technique, kNN suffers from the computational disadvantage mentioned in Sect. 2.2. Specifically, for each new instance, the prediction step comprises the calculation of the distance from the new instance to the n stored ones (runtime complexity of \(\mathcal {O}(n)\)) and the sorting of these distances to obtain an ascending order of nearest neighbours. Depending on the sorting algorithm, the overall time complexity can reach \(\mathcal {O}(n\log n)\) (being the proven lowest possible bound for comparison-based data sorting). Nevertheless, if the number of neighbours to consider is set to \(k=1\), no sorting is necessary anymore. Thus, with a minimum search being sufficient instead, the complexity reduces to \(\mathcal {O}(n)\).
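The complexity argument can be made concrete with a short sketch that contrasts the general case (distance computation plus sorting) with the special case \(k=1\) (a single minimum search). The Euclidean distance and the function names are assumptions made for illustration.

```python
import math

def k_nearest_sorted(train, query, k):
    """General case: n distance computations plus an O(n log n) sort."""
    return sorted(train, key=lambda item: math.dist(item[0], query))[:k]

def nearest_linear(train, query):
    """Special case k = 1: one pass over the n instances, no sorting."""
    best, best_dist = None, math.inf
    for item in train:
        d = math.dist(item[0], query)
        if d < best_dist:
            best, best_dist = item, d
    return best

train = [((0.0, 0.0), "rs"), ((1.0, 0.2), "fl"), ((0.2, 1.0), "ex")]
query = (0.9, 0.3)
same_result = k_nearest_sorted(train, query, 1)[0] == nearest_linear(train, query)
```

Both functions return the same neighbour for \(k=1\), but the linear scan needs neither the intermediate list of distances nor the sort, which also bounds memory use on an embedded target.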

Possibilities to reduce the computational effort in terms of the number of training samples n to specifically achieve requirement R5.1 have been introduced in Sect. 2.2. In particular, the concept of prototype reduction is chosen. As presented in [96], an assessment of the variety of these algorithms has to be made in order to lower the number of instances in the training set for kNN. To meet requirement R5.2, it is necessary that the particular algorithm to be chosen provides a possibility to specify the number of prototypes in the final set or accordingly the reduction rate beforehand. When it comes to prototype selection algorithms reviewed in [29], only random mutation hill climbing (RMHC, [92]) inherently possesses this characteristic as it is the only method with fixed reduction. Nevertheless, RMHC is a wrapper method, which means that each decision on whether or not to select a prototype requires a complete kNN evaluation over all instances. For this reason, long computation times during the reduction process have to be expected. In [29, p. 425–427], it is shown that this assumption holds in real use cases for both small and medium-sized datasets. Exemplary tests on EMG datasets confirmed that behaviour so that RMHC was excluded from consideration.

Besides the fixed reduction prototype selection algorithms, there might be also mixed reduction methods which provide the property of determinism with respect to the number of samples contained in the final training set. However, the algorithms of that category described in [29] are all wrapper methods, too. Due to the respective high execution times as mentioned before, these methods are not considered within the scope of this work.

In terms of prototype generation, there is a variety of fixed reduction algorithms. They can be summarized in the following way:

- Positioning adjustment, condensation approaches: Learning vector quantization (LVQ)-based methods [33, 56].

- Positioning adjustment, hybrid approach: Particle swarm optimization (PSO [72]).

- Centroid-based condensation approaches: Bootstrap technique for nearest neighbour (BTS3 [40]) and adaptive condensing algorithm based on mixtures of Gaussians (MGauss [65]).

- Space-splitting: Chen algorithm [15].

While the Chen and BTS3 algorithms are not incremental in the sense of requirement R4, in PSO, MGauss and the LVQ-based methods, each step in the reduction process only depends on the former step (where a certain model or prototype configuration is obtained) but not on the instances themselves from the initialization of the whole process. Usually, this leads to the characteristic that the reduction process does not depend on the order of decisions, i.e. the order of instances being considered.

The LVQ-based algorithm LVQTC (LVQ with Training Counter, [75]) turned out to not provide determinism with regard to the final set’s size and was therefore not taken into account for further evaluation.

Again, there are mixed algorithms which may also provide the final set size determinism like the fixed ones are supposed to. Some of them are in turn wrapper methods (evolutionary nearest prototype classifier (ENPC) [27], adaptive Michigan particle swarm optimization (AMPSO) [12]) and hence not considered with respect to the previously mentioned reason.

Filter and semi-wrapper methods which might be applicable in principle are gradient descent and deterministic annealing (MSE [19]), hybrid LVQ3 (HYB [54]), integrated concept prototype learner (ICPL2 [60]), LVQ with pruning (LVQPRU [61]) and prototype selection clonal selection algorithm (PSCSA [28], artificial immune system model).

The reason why the first three of these algorithms were not chosen for the evaluation in the end is their non-determinism with respect to the final set size. The remaining algorithms are to be compared. Since they vary with regard to the time needed for the reduction process, this is examined in experiments that are based on datasets of captured rectified and filtered EMG signals (linear envelope). Besides the amount of reduction (requirement R5.2) and the runtime behaviour (R5.3), the achieved accuracies when using the reduced sets in block-wise cross-validation will be assessed. The choice for specific algorithms will be further guided by requirement R5.4, i.e. taking into account the implementation complexity of the algorithms.

4 Methods

In terms of the methodical realization of the algorithm, several characteristics will be pointed out in the following, regarding both the kNN scheme and data reduction techniques.

4.1 Methodological considerations for the kNN approach

The kNN training process is structured as follows (see also Fig. 1): capturing training data, calculating class magnitude averages for proportionality scaling and rest magnitude threshold (if enabled), executing normalization of this data (if enabled), calculating the inverse covariance matrix of the data if the Mahalanobis distance is activated, executing block-wise cross-validation for obtaining the optimal k, weighting and metric in terms of accuracy.

The prediction process comprises the following: applying rest magnitude thresholding (if enabled), executing normalization of sample (if enabled), calculating k nearest neighbours of the sample whose label is supposed to be predicted and their distances, applying distance weighting on the k selected neighbouring samples (if enabled), executing direct averaging of neighbour samples in kNN regression, or calculating the proportionality scaling factor by analysis of the signal amplitude, before executing kNN classification by majority voting on the (potentially weighted) samples, i.e. the class with the highest weight sum will be selected for predicting the full gesture, which will be scaled by applying magnitude proportionality scaling (if enabled).

These steps are also pointed out in Fig. 2. Additionally, different windowing schemes could be applied (cf. [95]).

4.1.1 Nearest neighbour parameter configurations

The number of next neighbouring samples (k) to consider in a prediction step is varied from \(k=1\) (just nearest neighbour) to higher numbers. For each case, the particular block-wise cross-validation accuracy is computed, if enabled. Due to the characteristics of this validation scheme, the maximum k cannot exceed the total number of samples minus the size of one repetition block.

The examined distance measures are the Minkowski-norm-based metrics Manhattan (\(p=1\)), Euclidean (\(p=2\)) and Chebyshev (\(p\rightarrow \infty \)), as well as the Mahalanobis distance. For distance weighting, inversely distance-dependent factors are calculated for each sample and summed up for each class within the selected set of neighbouring samples. In this way, a weighted majority vote is obtained as classification.
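The interplay of metric and weighting can be illustrated with a minimal sketch. It is not the implementation used in this work: the function names, the `eps` guard against division by zero and the toy data in the usage note are assumptions made for illustration, and the Mahalanobis distance is omitted.

```python
from collections import defaultdict


def minkowski(a, b, p):
    """Minkowski distance of order p; p = float('inf') gives the Chebyshev distance."""
    diffs = [abs(x - y) for x, y in zip(a, b)]
    if p == float("inf"):
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


def weighted_vote(query, samples, labels, k, p=2, exponent=2, eps=1e-12):
    """Classify `query` by a distance-weighted majority vote over its k nearest samples.

    exponent=0 corresponds to a weighting of 1 (plain majority vote),
    exponent=1 to 1/d and exponent=2 to 1/d^2.
    eps avoids a division by zero for identical samples (an assumption).
    """
    # Sort ascending so that the k closest samples come first.
    ranked = sorted((minkowski(query, s, p), lab) for s, lab in zip(samples, labels))
    scores = defaultdict(float)
    for d, lab in ranked[:k]:
        scores[lab] += 1.0 / (d + eps) ** exponent
    # The class with the highest weight sum is selected.
    return max(scores, key=scores.get)
```

With two close rest samples and three distant power grasp samples, for example, an unweighted vote over all five neighbours selects the distant majority class, whereas a weighting of \(\frac{1}{d^2}\) lets the close samples dominate.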

kNN inherently involves k minimum searches to obtain the k nearest neighbours. This is implemented by sorting the distances in ascending order and picking the first k entries. For this purpose, an appropriate sorting function is called. However, if k is selected to be 1, the sorting procedure can be replaced by a single search for the minimum distance within the set.
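The difference between the two selection strategies can be sketched as follows, keeping in mind that the nearest neighbours are the samples with the smallest distances. The helper name `k_nearest_indices` is hypothetical, and the use of `heapq.nsmallest` for \(k>1\) is a substitution chosen for this sketch rather than the sorting function used in this work.

```python
import heapq


def k_nearest_indices(distances, k):
    """Return the indices of the k smallest distances, closest first."""
    if k == 1:
        # One linear scan over the set; no sorting is required.
        return [min(range(len(distances)), key=distances.__getitem__)]
    # Partial selection; gives the same result as sorting ascending and slicing.
    return heapq.nsmallest(k, range(len(distances)), key=distances.__getitem__)
```

For `distances = [0.9, 0.2, 0.5, 0.1]`, the call with `k=1` returns only the index of the minimum, which is the case exploited later in the runtime analysis.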

4.1.2 Proportionality scaling and rest thresholding

As presented in [97], the approach for rest magnitude thresholding is realized in a way that the magnitudes of the rest samples gathered during training are averaged and taken as a baseline for rest activity (\(t_0\)). The threshold of signal amplitude which has to be exceeded for a gesture to no longer be classified as rest is based on the obtained average: \(t=g\cdot t_0\) (amplified by gain g). Although this helps to reduce unintended actuations, a higher thresholding level t results in a lower proportionality resolution, since higher activation forces are presumed. Another possibility for calculating a threshold could be to consider other functions applied on the rest activity instead of the mean, such as the median or the maximum (although the latter would require a specific consideration of outliers).

For the non-rest gestures, an approach of proportionality scaling is utilized [97]. This is implemented in a linear manner, i.e. intermediate gestures are assumed to be linearly scaled between rest activity and the average training magnitude of the particular gesture set as function maximum. Again, instead of the mean of the individual gestures’ magnitudes, other functions might be used.

As mentioned, there is the need for a trade-off between the level of proportionality resolution and suppressing unintended activations. Therefore, a divisor v to scale the proportionality function offset \(m_0=\frac{t}{v}\) is moreover introduced. This does not scale the rest threshold t itself.

Example of proportionality scaling: \(t=g\cdot t_0\) marks rest threshold, \(m_0=\frac{t}{v} \) adjusted offset (for proportionality function, not for thresholding itself), \(m_{max}\) gesture magnitude maximum. Blue curve is theoretical ideal behaviour, red exceeds \(m_{max}\) and green adjusts function slope (implemented)

These relations between measured magnitude m and applied scaling factor s are depicted in Fig. 3: The blue function describes the theoretical linear proportionality scale, i.e. the scaling of the predicted gesture starts with 0 at 0 magnitude, assuming there is no baseline rest activity at all that could lead to wrong classifications. If the rest threshold t is also introduced as an offset for the scaling function, the average activity of the full gesture \(m_{max}\) would have to be exceeded in prediction to reach the maximum scaling. This could be avoided by also adapting the scaling function maximum for \(s=1\). Since this would lead to a reduced magnitude resolution, the maximum is retained and the slope of the function is modified (green curve) as follows:

An alternative approach could be to use piecewise linear functions or modelling non-linear relationships.
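The thresholding and scaling scheme of this section can be sketched in a few lines. The linear form through the points \((m_0, 0)\) and \((m_{max}, 1)\) is an assumption derived from the description of the green curve, not a formula taken from this work; the function name, returning 0 for rest and clamping at 1 are likewise illustrative choices.

```python
def scaling_factor(m, t0, m_max, g=2.5, v=5.0):
    """Return a proportional scaling factor s in [0, 1] for a measured magnitude m.

    t0:    mean rest magnitude obtained in training
    m_max: mean training magnitude of the predicted gesture (assumed > t0 * g / v)
    g:     gain of the rest magnitude threshold, t = g * t0
    v:     scale offset divisor, m0 = t / v
    """
    t = g * t0  # rest threshold, not affected by v
    if m <= t:
        return 0.0  # sample is treated as rest (simplification of this sketch)
    m0 = t / v  # offset used only for the proportionality function
    s = (m - m0) / (m_max - m0)  # assumed linear scaling with adjusted slope
    return min(s, 1.0)  # clamp magnitudes above the training maximum
```

With \(t_0=10\), \(g=2.5\), \(v=5\) and \(m_{max}=105\), a magnitude of 55 yields \(s=0.5\), while a magnitude of 20 stays below the threshold \(t=25\) and is treated as rest.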

4.2 Dataset reduction algorithms

For the evaluation of prototype reduction algorithms, the open-source (GPLv3) software tool KEEL (Knowledge Extraction based on Evolutionary Learning [100, p. 1239]) was chosen and extended, in which the particular prototype reduction algorithms from [29] and [99] have been implemented.

Special focus of this work will be put on reduction methods based on learning vector quantization (LVQ). They are composed of the following basic steps:

1. Initialization by choosing random samples.
2. Or selecting the classes’ centres of masses as initial prototypes and potentially adding more samples randomly (as long as the number of prototypes to be chosen is not exceeded, distribute the selection equally over all classes while choosing randomly within each class).
3. Repeating the correction process for a specified number of iterations: for each sample, decide if it has a rewarding and/or a penalizing effect on particular prototypes and employ this effect.

The idea of the standard LVQ-based approaches, originally proposed in the context of self-organizing maps in [56] (with prototypes being called codebook vectors) in three different variants (LVQ1, LVQ2, LVQ3), is to represent the probability distribution behind the dataset. An exception is the decision surface mapping (DSM) strategy which instead aims at appropriately modelling the class borders (decision boundaries/surfaces) [78, p. 335].

As [38] points out, standard “LVQ corresponds to what is usually known as SCL [Simple Competitive Learning] in the neural network literature”. [1] defines it as “a single-layer neural network in which the outer layer is made of distance units, referred to as prototypes”.

Two specific variants implemented in the scope of this work are the aforementioned methods DSM and LVQ3. Their correction steps are realized as in Algorithm 1.

LVQ-based reduction methods, specific substitutions given in Table 3

For DSM and LVQ3, the specific conditions as well as the prototype adjustment actions are defined in Table 3, which refer to the rewarding and penalization terms in Algorithm 2 (the learning rate parameter is set to a fixed value of 0.01).

Based on these algorithmic descriptions, a specific runtime complexity analysis of DSM is conducted in Sect. 5.4.
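As an illustration of the correction process, the following sketch applies a DSM-style rule as it is commonly described: prototypes are only adjusted when a sample is misclassified by its nearest prototype, in which case that prototype is penalized and the nearest prototype of the correct class is rewarded. It is a sketch under these assumptions and not a reproduction of Algorithm 1 or Table 3; the function names and the default of 20 iterations are illustrative, while the learning rate of 0.01 follows the text.

```python
def class_centres(samples, labels):
    """Initial prototypes: one centre of mass per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    protos = [[sum(col) / len(rows) for col in zip(*rows)] for rows in groups.values()]
    return protos, list(groups.keys())


def sqdist(a, b):
    """Squared Euclidean distance (preserves the nearest-neighbour order)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dsm_reduce(samples, labels, prototypes, proto_labels, alpha=0.01, iterations=20):
    """Adjust the prototypes in place with a DSM-style correction rule."""
    for _ in range(iterations):
        for x, y in zip(samples, labels):
            # First minimum search: nearest prototype regardless of class (1NN).
            nearest = min(range(len(prototypes)), key=lambda j: sqdist(x, prototypes[j]))
            if proto_labels[nearest] == y:
                continue  # correctly classified: no adjustment
            # Second minimum search: nearest prototype with the sample's class.
            same = [j for j, lab in enumerate(proto_labels) if lab == y]
            if not same:
                continue
            correct = min(same, key=lambda j: sqdist(x, prototypes[j]))
            # Penalize the wrong winner (push away), reward the correct one (pull closer).
            prototypes[nearest] = [p - alpha * (xi - p) for p, xi in zip(prototypes[nearest], x)]
            prototypes[correct] = [p + alpha * (xi - p) for p, xi in zip(prototypes[correct], x)]
    return prototypes
```

Because only misclassified samples trigger an update, the prototypes move mainly near the class borders, which matches the stated aim of modelling decision surfaces rather than the underlying distribution.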

5 Evaluation and results

This section presents the experimental outcomes to evaluate the developed strategies. These results were obtained from conducting the following experiments:

1. Offline tests with datasets from one subject.
2. Online tests with real-time data from one subject (pilot experiments).
3. Online tests with 12 subjects (basic user study).
4. Online tests with 4 subjects (extended user study).

While the offline tests were primarily evaluated by cross-validation accuracy, the main criterion for the online experiments was the success rate (see also requirements Table 2).

Some experiments include specific gestures in one case but do not include these in another. This applies to the pointing gesture to consider and analyze the assumption that it is not as well separable from rest, power grasp, wrist flexion and wrist extension, as these four are from each other. Furthermore, it applies to wrist pronation and supination (again, with and without the pointing gesture) which were chosen to extend the system by a rotational dimension in order to observe the development of performance with an increasing number of degrees of freedom.

5.1 Offline cross-validation accuracy

The offline experiments described in this section are based on several series of EMG data captured from the authors. They provide a rational measure of the applicability by means of cross-validation accuracy. In the datasets, one training repetition consisted of 400 samples per gesture (2 s capturing with 200 Hz sample rate). In each set, each gesture was recorded in several repetitions. For each configuration of considered gestures, several sets of data were recorded; see Table 4 for the resulting number of samples.

The main parameter of kNN, the number of neighbours to consider (k), is varied throughout all cross-validation accuracy experiments. In order to guarantee comparability of the results concerning specific numbers of k across datasets of different sizes, k is not employed as an absolute number of samples. Instead, k is compared in the sense of a relative value \(k_{rel}\), i.e. as the proportion of k relative to the maximum number of samples in the set (n): \( k_{rel} = \frac{k}{n}\).

Since the cross-validation is applied block-wise, the maximum k cannot exceed the total number of samples in the set minus the number of samples in a block.
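The block-wise scheme and the conversion between \(k_{rel}\) and an absolute k can be sketched as follows. The sketch assumes that a block comprises all samples of one training repetition and that every block has the same size; both function names are hypothetical.

```python
def blockwise_folds(block_ids):
    """Yield (train_idx, test_idx) pairs, holding out one repetition block per fold.

    block_ids[i] is the repetition index of sample i (an assumed data layout).
    """
    for held_out in sorted(set(block_ids)):
        test = [i for i, b in enumerate(block_ids) if b == held_out]
        train = [i for i, b in enumerate(block_ids) if b != held_out]
        yield train, test


def k_from_relative(k_rel, n, block_size):
    """Convert k_rel = k / n into an absolute k, capped at n - block_size."""
    k = max(1, round(k_rel * n))
    # In each fold, only n - block_size samples remain as possible neighbours.
    return min(k, n - block_size)
```

Holding out whole repetitions instead of random samples prevents temporally adjacent, nearly identical samples of the same repetition from appearing in both the training and the validation part of a fold.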

5.1.1 Influence of distance weighting

For the evaluation of cross-validation accuracy when changing the distance weighting factor, the different datasets showed the same qualitative behaviour. The distance from the current to the particular other samples is denoted by d.

Independent of the weighting factor used, it could be observed that high numbers of k usually decreased the cross-validation accuracy. Considering a dataset of four gestures (rs, pw, fl, ex), the accuracy stays at about 99% until \(k_{rel}\) is at around 15% in Fig. 19a (using Euclidean distance) for all weighting factors. This threshold value of \(k_{rel}\) is even higher in Fig. 19b, namely at about 30% (using Chebyshev distance). Furthermore, in this case, the threshold only applies for weightings of 1 or \(\frac{1}{\sqrt{d}}\). For \(\frac{1}{d}\) and \(\frac{1}{d^2}\), the accuracy stays above 99%. In all cases, the highest accuracy can be noticed with a weighting of \(\frac{1}{d^2}\), followed by \(\frac{1}{d}\), \(\frac{1}{\sqrt{d}}\) and 1. The effect of decreasing accuracy is the most apparent in the case no weighting is applied (decreasing until 0 at about 40–50% relative k). All accuracies stabilize at some point.

When also including the pointing gesture into the comparison, the behaviour is similar in principle. Starting at around 98% accuracy in Fig. 4a and even 100% in Fig. 4b respectively for all weightings at \(k=1\), it drops to 0 for higher values of k when using no weighting. Again, the decrease at weighting 1 is the highest, followed by \(\frac{1}{\sqrt{d}}\), \(\frac{1}{d}\) and finally \(\frac{1}{d^2}\). The above-mentioned threshold level of decrease lies at about \(k_{rel}=20\%\).

It can be stated that as long as a low k is used (\(k=1\) seems suitable in all cases), the weighting scheme does not matter. This means that in this case, for the sake of computational resources, weighting can even be omitted. Nevertheless, if higher values of k should be necessary, a higher exponent in the weighting factor’s divisor should be introduced. \(\frac{1}{d^2}\) seems to be a good choice for that, without notably increasing the computational effort.

This observation is also confirmed in further tests: Fig. 20c shows this for the Chebyshev distance while additionally including pronation and supination (obtaining a threshold of about \(k_{rel}=15\%\)), and Fig. 20b for the Manhattan distance with pronation and supination included without pointing (threshold value a few percentage points higher). In the latter case, the even better performance of a distance weighting of \(\frac{1}{d^3}\) is additionally depicted, although the difference only appears after reaching a relative k of 25% and is negligible due to its small value (0.25%).

5.1.2 Influence of distance metric

The variation of the distance metric showed almost no effect in the case of the four gestures (rs, pw, fl, ex); see Fig. 20a in the Appendix (using a weighting factor of \(\frac{1}{d^2}\)). An exception is the Mahalanobis distance which only provided about 96% of accuracy at low numbers of k while the other metrics achieved 100%. Furthermore, in the case of the Mahalanobis distance, the accuracy dropped fast when increasing k until it stabilized at around 69% for \(k_{rel} > 50\%\). The accuracy when using the other metrics stayed constant at about 100% (Euclidean drops slightly to 99%).

The observed behaviour in the case of data which included the pointing gesture exposed the following differences (Fig. 5): While the accuracy when applying the Mahalanobis distance showed the same tendency (starting at about 98% and going down to 89%), it also dropped for the other distance metrics when increasing \(k_{rel}\) beyond 10%. This was most noticeable for the Manhattan metric, as the accuracy started at about 99% for low numbers of k and decreased to 93%. For the other metrics, it went down from almost 100 to 99% (Chebyshev) and 98% (Euclidean) respectively.

Including the wrist rotation gestures without pointing (weighting factor of \(\frac{1}{d^2}\), Appendix Fig. 20b) showed qualitatively the same behaviour as in the already described case where pointing was not included. This means that the Mahalanobis distance started at lower accuracy values than the others (98% instead of 100%) and dropped until it stabilized at 84% (the Chebyshev norm dropped to 99.5%, the Euclidean norm to 99.6% and the Manhattan norm to 99.7%).

When additionally including the pointing gesture again, the effect was comparable, although pointing influenced the Minkowski-norm-based distances slightly more. For the Mahalanobis distance, the accuracy dropped from 97% until it reached a stabilization level of about 88%. The Minkowski-based norms started at 100% accuracy for low numbers of k and decreased at a relative k of about 15% until they reached an accuracy of 96% (Chebyshev), 98.4% (Euclidean) and 99.3% (Manhattan) respectively.

Figure 20c also shows that the behaviour is the same when applying a distance weighting of \(\frac{1}{d}\) instead of \(\frac{1}{d^2}\) for the cases of Euclidean and Chebyshev norm, although the drop in accuracy is higher.

The evaluation of the distance metrics showed that differences are not evident in all cases. In summary, the Mahalanobis distance is not recommended for the present data. Due to the necessary calculation of the covariance matrix and its inverse, it is also disadvantageous with respect to computational resources.

The Minkowski-distance-based metrics differ regarding the chosen order of norm, especially for high numbers of k. In some cases, the accuracy gets better the higher the order of norm gets (Chebyshev (\(p\rightarrow \infty \)) is best, followed by Euclidean (\(p=2\)) and finally Manhattan (\(p=1\))). However, when pronation and supination are included, the effect is reversed (both with and without pointing). This reversed effect is smaller than the original effect. Nevertheless, the Euclidean norm seems to be a good trade-off to compensate both effects.

5.1.3 Summary

All in all, it could be observed that for the cross-validation accuracy in the case of low numbers of k (relative k until about 5–10%), neither the weighting factor nor the distance metric is of essential importance as long as a Minkowski-based distance norm is applied. However, Fig. 21d shows an exceptional case where there was a clear accuracy difference between the Euclidean (99%) and the Chebyshev (91%) distance even at low numbers of k. In the sense of computational demands, for the lower range of \(k_{rel}\), a distance weighting of 1 (i.e. no further arithmetic operations) is recommended. If also considering \(k_{rel}\) higher than 5–10%, a weighting factor of \(\frac{1}{d^2}\) might be the best choice, together with the Euclidean norm. These recommendations hold for all tested sets of gestures. Further evaluations of other datasets which confirm this observation are depicted in the Appendix in Fig. 21 with respect to the Euclidean and the Chebyshev distance as well as several weighting factors.

With that, the Euclidean distance and a weighting of \(\frac{1}{d^2}\) can be seen as a general recommendation in terms of accuracy for a broad range of k. However, with regard to requirement R5.1, the Euclidean distance might also not be preferred since its calculation (8 subtractions, 8 multiplications, 7 additions in each prediction step due to 8 EMG channels) is more computationally expensive than both the Manhattan distance calculation (8 subtractions, 8 absolute value calculations, 7 additions) and the one of the Chebyshev distance (8 subtractions, 8 absolute value calculations, 7 comparisons for maximum search) which do not require multiplication operations. The individual requirements must be balanced with respect to the specific use case.

5.2 Real-time pilot experiments

The pilot study experiments described in this section were only evaluated on one subject. Although the results obtained from these target achievement tests are therefore not representative, they may give insights on how different means and adaptations in the used algorithms can affect the achieved online success rates in gesture recognition with kNN (with k set to 1 and 10 respectively, equally distributed, results averaged), especially when it comes to intermediate intensity levels of gestures. Following the results from Sect. 5.1 for a broad range of k, for kNN, the Euclidean norm was chosen as distance metric with a weighting of \(\frac{1}{d^2}\).

For each pilot experiment, the user first trained the system by capturing data from the exertion of the full-intensity gestures. Each gesture had to be held for 2 s—as in the offline training, resulting in 400 training samples per gesture and repetition. This time, five training repetitions were gathered, i.e. 2000 8-value sample vectors per gesture (see Table 5).

In the prediction phase of each pilot experiment, all gestures (apart from rest) were not only tested on full-intensity exertion, but on three different intensity levels (\(\frac{1}{3}\), \(\frac{2}{3}\), full gesture). For this proportional control, proportionality scaling as described in Sect. 4.1.2 was implemented. To consider a trial a success, the user had to mimic a virtual stimulus, while the real-time continuous prediction was shown in a hand model, and provide spatial matching within a time margin of several seconds. Each combination of gesture and exertion level was tested twice. In this way, the number of prediction samples was several orders of magnitude higher than the number of training samples, so that issues of overfitting can be further excluded.

As a measure of comparison, the accuracy of ridge regression with random Fourier features (RR-RFF) as state-of-the-art gesture recognition method was also evaluated in each test run.

5.2.1 Rest class thresholding: rest magnitude threshold

The rest magnitude threshold was introduced to cope with the problem of separating intermediate gestures from the rest class in the proposed proportional control. In order to evaluate the influence on the user success rate, multiple tests were conducted with the gesture sets (rs, pw, fl, ex) and (rs, pw, pn, fl, ex).

Figure 6 shows that the standard approach without any rest thresholding yielded success rates of 65% on average for both types of dataset. While the success rates in the variant with pointing could not be considerably increased (only by 4%), thresholding was beneficial for the variant without pointing. Ninety-two percent success rate could be achieved for two times the mean rest signal magnitude (\(g=2\)) as well as three times mean rest magnitude (\(g=3\)) as threshold. Furthermore, it is noticeable that even without thresholding kNN performed better than RR-RFF when including pointing (63% vs. 46%). When not including pointing, kNN without thresholding performed worse than RR-RFF (67% vs. 83%). But with thresholding in the latter case, kNN’s success rate could exceed RR-RFF’s (92% vs. 83%).

Since there was no difference recognizable between the success rates of \(g=2\) and \(g=3\), \(g=2.5\) was chosen as a compromise for further experiments. The expected performance of this choice could be confirmed in Fig. 7. Ninety-eight percent success rate could be achieved for kNN without including pointing (in comparison to 80% for RR-RFF) and 64% when including pointing (56% for RR-RFF).

It has to be noted that the results of RR-RFF yielded larger standard deviations than in all kNN cases. This could signify that kNN performs more stably and robustly, with less nondeterminism in the algorithm’s behaviour.

5.2.2 Proportionality offset scaling: scale offset divisor

As described, besides the rest magnitude threshold, a proportionality offset was introduced. This offset is divided by the scale offset divisor v with the purpose of adjusting the proportionality scaling for intermediate gestures. The target achievement tests described in the following refer to a variety of runs with datasets comprising (rs, pw, fl, ex, pr, su) and (rs, pw, pn, fl, ex, pr, su), respectively. Besides kNN with different scale offset divisors, the performance of RR-RFF and standard ridge regression (RR) was also captured. For the evaluation, a rest magnitude threshold with \(g=2.5\) was chosen, as motivated in Sect. 5.2.1.

Figure 8 depicts the particular results. It is observable that the increase of v could initially improve the average success rate for the used datasets. After reaching a maximum around 5–10, the success rates started to decrease again, probably because of low-intensity levels of gestures getting less reachable due to misclassification with rest. Nevertheless, the approach without any offset (corresponding to an infinite scale offset divisor) still performed clearly better than RR-RFF and standard RR.

Higher averaged success rates were achievable for all scale offset divisors in kNN than for RR and RR-RFF. The best averaged performance when the pointing gesture was included could be achieved for \(v=5\) (94% vs. 43% for RR-RFF); and for \(v=10\) (95% vs. 51% for RR-RFF) when pointing was not included.

With higher scale offset divisors, low-intensity level gestures \((\frac{1}{3})\) get less reachable. This property has been assessed as affecting the motivation of subjects more severely than a reduced magnitude value range, since jumps between the rest condition and low-intensity gestures appeared more difficult to handle than the reduced sensitivity, which was perceived as “missing damping”.

Because of this, 5 was favoured over 10, although their performance appeared to be comparable (with 5 providing a slightly better performance when averaging over all dataset configurations, i.e. 93% vs. 91% with a comparable standard deviation).

5.3 Evaluation of prototype reduction algorithms

In order to evaluate the performance of the chosen prototype reduction algorithms (see Sect. 3.4), the datasets captured for offline tests (Table 4) were transferred to KEEL and utilized as baseline.

These were pilot results to test the algorithms’ accuracy and processing times with reduced datasets.

The reduction was executed on each cross-validation fold of the dataset individually, followed by the actual validation. As the considered algorithms comprise kNN-calculations inside, specifically for obtaining the validation accuracy, its parameters had to be defined. Following the recommendations in Sect. 5.1.3, a k of 1, using \(\frac{1}{d^2}\) weighting, and the Euclidean distance as metric were configured.

The detailed examinations and results for varying datasets were presented in previous work [96]. From this data, it could be seen that BTS3 and VQ were the lowest performing algorithms in terms of cross-validation accuracy so that these algorithms were disregarded. It also described the exclusion of PSCSA due to slow timing characteristics. Further conclusions drawn in that paper regarding timing can be representatively seen in Fig. 9, where the time needed for reduction to 20 prototypes is depicted. This reduction time adds up with the cross-validation time to constitute the easily measurable overall runtime. Since the validation is the same process for each fold, the validation time can be disregarded so that the runtime qualitatively describes the algorithms’ reduction times for comparison.

With respect to reduction time, MGauss, Chen and LVQPRU exposed a broad variance, leading to the presumption of reduced time determinism. Furthermore, these showed the highest means and medians of runtime, so that MGauss, LVQPRU and Chen were disregarded, too.

With that, LVQ3 and DSM (also based on LVQ) were the techniques to be chosen for a real-time implementation. With a low runtime of about 0.2 ms in most cases and a low time variance [96], they turned out to be suitable for real-time scenarios, thus fulfilling requirement R5.1. For the present study, particularly, DSM was selected to be examined in any further steps and proved itself as appropriate.

In order to analyze DSM’s suitability for embedded systems in more depth, an assessment of the runtime complexity will be made in the subsequent section.

5.4 Runtime complexity of DSM

To analyze the DSM prototype generation algorithm with regard to its runtime complexity, two phases can be distinguished, namely initialization and actual reduction. The phases will be analyzed on their worst-case runtime.

The following conventions are made: \( N \equiv \) number of samples in the original training set, \( M \equiv \) number of prototypes in the final reduced set, \( C \equiv \) number of gestures/classes and \( I \equiv \) number of iterations.

The results of this analysis are shown in Table 8. All operations are considered per EMG channel. The initialization process is designed in a way that there is at least one prototype per class by using the class centres as initial prototypes which become adjusted later on by penalizing or rewarding them in the reduction phase.

Summarizing Table 8, this yields the following running time complexity in initialization:

and in reduction:

When assuming the number of classes to be constant with e.g. \(C=7\) for (rs, pw, pn, fl, ex, pr, su) and also thinking of the number of iterations as a constant, e.g. \(I=20\), an overall running-time complexity of \( \mathcal {O}(N\cdot M\cdot \log {}M) \) can be derived.

It has to be noted that the time complexity in reduction can principally be reduced from \(\mathcal {O}(N\cdot M\cdot \log {}M)\) to \(\mathcal {O}(N\cdot M)\) since no complete sorting of the distances between the currently selected sample and the single prototypes is necessary. Instead, a minimum search for the closest sample (1NN approach) and another minimum search for the closest sample with an identical class label would be sufficient—thus leading to two times iterating the full prototype set at most (comparing the distances in the first case and comparing both distance and class label in the second case).

Generally speaking, if \(k=1\) is used in kNN, the runtime complexity can be reduced to linear instead of logarithmic-linear (quasilinear).

Due to the fact that the number of prototypes M is selected small and configured as a constant for the purpose of final prototype set size determinism (e.g. \(M=20\)), it might also be disregarded with regard to runtime, leading to an overall complexity of \(\mathcal {O}(N)\) in the best case.

Interestingly, this would mean that DSM has the same runtime complexity in reduction (which is only performed once) as standard kNN in each single prediction step (or even better if a higher number of k is used in kNN which would require sorting). Depending on the number of prototypes, the computational effort in a prediction step of DSM-reduced kNN is negligible, in particular if \(k=1\) is set inside prediction.

5.5 Real-time user studies with multiple subjects

In order to analyze if requirement R4 can be fulfilled by the proposed algorithms, online user studies with multiple subjects were conducted for the evaluation of suitability in practical scenarios. The setup of the experiment is shown in Fig. 10. In the basic user study, it was chosen to compare the following four methods:

- kNN parameterized according to the configuration obtained in the pilot experiments.
- kNN after training dataset reduction by means of DSM.
- Ridge regression with random Fourier features (RR-RFF).
- Standard ridge regression (RR).

In the extended user study, RR was not examined due to a higher number of analyzed gestures.

Following the general recommendations from Sects. 5.1 and 5.2, the configuration of the standard kNN algorithm was set to \(k=1\), the Euclidean distance metric, a weighting of \(\frac{1}{d^2}\), a rest magnitude threshold of \(g=2.5\) and proportionality scaling with \(v=5\).

For DSM-kNN, the same parameters were used within the prediction phase. For the reduction phase, DSM was configured to generate 7 prototypes in the final set with 40 iterations enabled. The results obtained will be explained in the following.

All statistical tests conducted in the following refer to a significance level of \(\alpha =0.05\).

5.5.1 Basic user study (five classes)

The subjects provided informed consent and statistical information as follows:

- Age range from 21 to 34 (mean 25, median 24).
- Three female and 9 male.
- One left-handed and 11 right-handed.
- Four already participated in many EMG experiments, 3 in a few and 5 without any EMG experience.

The experimental procedure for the real-time user study followed the same structure as the pilot experiments. The participants put on the Myo armband on their dominant forearm. Afterwards, for the training phase, they followed the visual stimulus (as in Fig. 10) by performing a repetitive series of hand and wrist movements (classes rs, pw, pn, fl, ex) one after another in three repetitions for 2 s each (all with full-intensity exertion). At maximum sampling rate of 200 Hz, this results in 6000 training sample vectors (8 channels) per person (\(= 5\cdot 3\cdot 2s\cdot 200\frac{1}{s}\)), see Table 5.

In the prediction and test phase, they were asked to follow the stimulus again, in a total of 96 tasks (randomized but equally distributed among the subjects: 4 gestures (the rest class was not tested), 3 exertion levels, 4 methods, 2 repetitions) with breaks after a quarter, half and three quarters of the tasks. In this phase, they furthermore saw the prediction of the currently exerted gesture in a second hand model. The goal was to match the stimulus and the predicted gesture within some spatial margin and time frame. Success was signalled by a green visualization. Otherwise, a yellow visualization was shown as visual feedback.
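The stated numbers of training vectors and test tasks follow directly from the study design, which can be checked with a short sketch. The seeded shuffle is a hypothetical stand-in for the randomization actually used in the study, and the function and constant names are illustrative.

```python
import itertools
import random
from fractions import Fraction

GESTURES = ["pw", "pn", "fl", "ex"]  # rest (rs) is trained but not tested
LEVELS = [Fraction(1, 3), Fraction(2, 3), Fraction(1)]
METHODS = ["kNN", "DSM-kNN", "RR-RFF", "RR"]
REPETITIONS = 2


def build_task_list(seed=0):
    """Enumerate every gesture/level/method combination twice and shuffle the order."""
    tasks = [
        (gesture, level, method)
        for gesture, level, method in itertools.product(GESTURES, LEVELS, METHODS)
        for _ in range(REPETITIONS)
    ]
    random.Random(seed).shuffle(tasks)  # assumed randomization, seeded for repeatability
    return tasks


# 5 classes * 3 repetitions * 2 s * 200 Hz training sample vectors per person
TRAINING_VECTORS = 5 * 3 * 2 * 200
```

The task list has 96 entries with 24 tasks per method, and `TRAINING_VECTORS` evaluates to 6000.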

The summarized performance of each examined method for the 12 subjects is depicted in Fig. 11, after first averaging the per-level and per-gesture performances for each subject-method combination. This yields the variance and median of the success rates in a subject-based manner. Overall, the success rates achieved with kNN-based methods exceeded those of the RR-based methods. DSM-reduced kNN performed as well as standard kNN (success rates of 73% and 71% mean, 71% and 67% median, respectively), while RR-RFF and RR showed success rates at a lower level (37% and 30% mean, 25% and 25% median). An ANOVA indicated a significant difference between the group of kNN-based methods and the group of RR-based methods (\(p<0.0005\)), while there was no significant difference within either group.
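The two-step averaging described above can be sketched as follows. The record layout `(subject, method, gesture, level, success)` and the toy trials are assumptions for illustration only and are not data from the study.

```python
from collections import defaultdict
from statistics import mean, median

def subject_method_rates(trials):
    """Average cell-wise success rates for each (subject, method) pair."""
    # Step 1: success rate per (subject, method, gesture, level) cell.
    cells = defaultdict(list)
    for subject, method, gesture, level, success in trials:
        cells[(subject, method, gesture, level)].append(1.0 if success else 0.0)
    # Step 2: average the cell rates within each subject-method combination.
    per_pair = defaultdict(list)
    for (subject, method, _gesture, _level), outcomes in cells.items():
        per_pair[(subject, method)].append(mean(outcomes))
    return {pair: mean(rates) for pair, rates in per_pair.items()}

def summarize_by_method(rates):
    """Mean and median over subjects for each method."""
    per_method = defaultdict(list)
    for (_subject, method), rate in rates.items():
        per_method[method].append(rate)
    return {m: {"mean": mean(v), "median": median(v)} for m, v in per_method.items()}

# Toy records, purely illustrative.
toy_trials = [
    ("S1", "kNN", "ex", "full", True), ("S1", "kNN", "ex", "full", True),
    ("S1", "kNN", "pn", "1/3", True), ("S1", "kNN", "pn", "1/3", False),
    ("S2", "kNN", "ex", "full", True), ("S2", "kNN", "ex", "full", False),
    ("S2", "kNN", "pn", "1/3", False), ("S2", "kNN", "pn", "1/3", False),
]
rates = subject_method_rates(toy_trials)
summary = summarize_by_method(rates)
```

Averaging within each subject first means that every subject contributes exactly one value per method, so the reported variance and median describe differences between subjects rather than between single trials.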

Figure 12 additionally splits the achieved success rates per gesture exertion level, after first averaging the per-action performances for each level-subject combination. The subject- and level-based variances and medians are depicted for each of the methods. Again, kNN and DSM-kNN do not show major differences, despite the intensive dataset reduction of DSM-kNN. It is apparent that these methods performed better at higher intensity levels (median of 87.5% for full intensity). An exertion level of \(\frac{2}{3}\) exhibits intermediate performance, while gestures with only \(\frac{1}{3}\) of intensity yielded a mean success rate of 57% for each method, with high variance. In both methods, the difference between the lowest and the highest level success rate was significant.

When looking into the results from the RR-based methods, it can be observed that there is no level of intensity where those would have outperformed the kNN-based methods in median and mean of the achieved success rate. Interestingly, standard RR yielded a higher number of successes for low-intensity signal amplitudes than when incorporating random Fourier features. In contrast, RR-RFF performed better than RR for gestures of full intensity. For gestures of \(\frac{2}{3}\) exertion level, both had a similar performance, with a mean of 19% success rate, the lowest across the intensity levels. This resulted in significance between lowest and intermediate level for RR, as well as between intermediate and highest level for RR-RFF.

At the level of \(\frac{1}{3}\), there was no significant difference between any of the methods (\(\alpha =0.05\)). At the intermediate level, both kNN and DSM-kNN performed significantly better than RR and RR-RFF (\(p<10^{-5}\)). For the full-intensity gestures, both kNN-based methods were significantly better than RR and RR-RFF, while RR-RFF also showed significantly better performance than RR (\(p<0.01\)).

The relation between individual gestures and success rate is presented in Fig. 13, based on first averaging the per-level performances for each action-subject combination. Again, the subject- and action-based variances and medians are depicted for each of the methods. It is noticeable that the performance trends were similar for kNN and reduced kNN. For both, the best success rates were achieved for wrist extension (median of 100% for both, mean of 90% for kNN and 99% for DSM-kNN with small standard deviation).

Wrist flexion was the second best detected gesture for the kNN-based methods (about 76% mean for both), followed by power grasp (67% median), and concluded by the pointing gesture with the worst performance (about 55% mean).

For both kNN and DSM-kNN, the performance difference between wrist extension and pointing was significant. For DSM-kNN, the comparison of wrist extension and power grasp also yielded significance.

While RR showed the same tendency of gesture performances as the kNN-based methods (on a lower baseline), for RR-RFF, the pointing gesture yielded the best success rate on average (median 58%, mean 51%). Interestingly, wrist extension showed the worst performance of all gestures for RR-RFF (33% median, 31% mean). Wrist flexion and power grasp revealed the same tendency as described for the other methods. For the RR-based methods, no significant difference could be shown between different gestures.

With regard to the individual gestures, the group of kNN-based methods performed significantly better than the RR-group for power grasp (\(p<0.05\)) as well as wrist extension (\(p<10^{-6}\)). For wrist flexion, the same holds (\(p<0.05\)) with the exception of the difference between standard kNN and RR not being significant (\(\alpha =0.05\)). Concerning the pointing gesture, the kNN-based schemes as well as RR-RFF performed better than standard RR (\(p<0.05\)).

The overall relations are summarized in Fig. 14, which illustrates the contribution of factor combinations to significance.

In Table 6, the online classification times are given for the participants of the user study (ARM Cortex-A72), averaged for all classifications executed at sample rate during prediction, confirming the real-time control properties.

5.5.2 Extended user study (seven classes)

To investigate the suitability of the developed methods when including even more gestures in the training, further experiments were conducted as an extension of the described user study. Four subjects who had no prior EMG experience but had participated in the basic user study were selected again (subjects S5, S7, S9 and S10). On the one hand, the previous participation in the main part of the study might have influenced the impartiality. On the other hand, this might give interesting insights into the algorithms’ performance in the case of low experience with EMG-based control.

Since standard RR did not perform well in the main part of the study, it was excluded from the extended evaluation in order to avoid demotivating the participants. Instead, the wrist rotation gestures pronation and supination were added.

For training the system, data were again gathered for 2 s per gesture at the maximum sampling rate of 200 Hz with three repetitions each. This was done for all considered classes (rs, pw, pn, fl, ex, pr, su) at full-intensity exertion. This results in 8400 training sample vectors (8 channels) per person (\(= 7\cdot 3\cdot 2\,\mathrm{s}\cdot 200\,\frac{1}{\mathrm{s}}\)), see Table 5.
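The training set sizes of both study parts, and the reduction rate implied by the DSM configuration of 7 prototypes given at the start of this section, can be checked with a short calculation. The helper names `training_samples` and `reduction_rate` are hypothetical.

```python
SAMPLING_RATE_HZ = 200
SECONDS_PER_REPETITION = 2
REPETITIONS = 3
PROTOTYPES = 7  # size of the reduced set configured for DSM

def training_samples(num_classes):
    """Number of 8-channel sample vectors recorded per person."""
    return num_classes * REPETITIONS * SECONDS_PER_REPETITION * SAMPLING_RATE_HZ

def reduction_rate(num_original, num_reduced):
    """Fraction of the original samples removed by the reduction."""
    return 1.0 - num_reduced / num_original

basic = training_samples(5)       # rs, pw, pn, fl, ex
extended = training_samples(7)    # additionally pr, su
basic_reduction = reduction_rate(basic, PROTOTYPES)
extended_reduction = reduction_rate(extended, PROTOTYPES)
```

In both cases the reduced set keeps well under 1% of the recorded samples, so a prediction on the reduced set requires only 7 distance computations instead of several thousand.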

By again repeating each task two times, the number of tasks performed per subject was 108 in total (6 gestures, 3 intensity levels, 3 learning methods, 2 repetitions). Besides these aspects, this part of the study was identical to the previous part. Again, the rest class was not explicitly tested.

The results of the extended user study’s evaluation are summarized in Fig. 15, after the per-level and per-gesture performances were averaged for each subject-method combination to obtain the variance and median of the success rates based on the subjects. The kNN-based methods achieved success rate means and medians of over 70%, while RR-RFF performed significantly worse (median 19%, mean 21%) with \(p<0.005\). This time, the DSM-reduced kNN yielded slightly lower values than standard kNN (both medians and kNN mean at 78%, but DSM-kNN mean at 73%).

In Fig. 16, it is observable that the lowest exertion levels did not show the worst performance for any of the methods. Instead, the success rates at the \(\frac{1}{3}\) exertion level were similar to those at the \(\frac{2}{3}\) level but had slightly higher means and medians. The best behaviour was reached at full intensity (kNN: 92% median, 90% mean; DSM-kNN: 88% median and mean). All three tested methods showed the same tendency in terms of performance for individual levels, with RR-RFF’s success rates shifted towards a lower baseline (e.g. for full intensity median 42%, mean 40%). RR-RFF could not outperform kNN or DSM-kNN at any level. Between the different levels of a single method, there is no significant difference.

For each individual level, the success rates of RR-RFF and the group of kNN-based methods differ significantly (\(p<0.05\)), while there is no significance between kNN and DSM-kNN (\(\alpha =0.05\)).

The examination of the individual gestures (see Fig. 17) shows a behaviour that was comparable between kNN and DSM-kNN. Wrist flexion and extension achieved the highest success rates (100% median for both methods). Pointing and pronation performed worst here (medians of 75% and 58% for kNN and of 50% and 67% for DSM-kNN). In contrast, for RR-RFF, pronation performed the best with similar success rates (median 50%) as kNN and DSM-kNN, while power grasp showed severe issues (median 0%, mean 4%). The analysis of variances between the success rates of gesture performances for a single method could not show any significance within the method.

However, significant differences could be found between the methods for individual gestures: for power grasp (\(p<0.0005\)), extension (\(p<0.0001\)), flexion (\(p<0.001\)) and supination (\(p<0.05\)), RR-RFF was significantly worse than both kNN and DSM-kNN. There was no significant success rate difference for either pronation or pointing (\(\alpha =0.05\)).

In Fig. 18, the effects of the combinations of factors on significance are summarized. When examining the success rates for individual gestures at specific exertion levels, it can be seen that RR-RFF contributed to significantly more failures than the group of kNN-based methods at levels of \(\frac{1}{3}\) and \(\frac{2}{3}\), specifically for power grasp and wrist extension.

As for the basic user study, the computation times needed for each classification were measured and averaged per user and method. These are presented in Table 7, again providing a confirmation for the real-time capability of the proposed methods.

6 User study discussion

Overall, the user studies showed that both the standard kNN scheme and the DSM-reduced technique yielded significantly higher success rates than RR-RFF and RR in most of the scenarios. The finding that kNN-based methods performed significantly better at higher exertion levels in the basic user study could be due to gestures of low intensity being misclassified more often. This might result from a rest magnitude threshold that is too high, which causes movements with low signal amplitudes to be classified as rest state. In the extended user study, this difference was not significant. However, the effect could probably be curtailed in general by a learning process in which the subjects get used to the specific behaviour of the algorithm and adapt to it. Furthermore, the limb position effect might influence the results with respect to the average rest signal magnitude, although the subjects sat in a standardized pose.
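The suspected threshold effect can be illustrated with a small sketch. It assumes, hypothetically, that the rest decision compares the mean absolute value over the 8 channels against \(g\) and that signal amplitude scales linearly with exertion level; neither assumption is confirmed by this section, and the sample vector is invented.

```python
REST_THRESHOLD_G = 2.5  # value of g used in the user study configuration

def magnitude(sample):
    """Assumed magnitude: mean absolute value over the channels."""
    return sum(abs(x) for x in sample) / len(sample)

def gate(sample, threshold=REST_THRESHOLD_G):
    """Return 'rs' below the threshold, otherwise defer to the classifier."""
    return "rs" if magnitude(sample) < threshold else "classify"

# Hypothetical full-intensity 8-channel sample (mean absolute value 6.0).
full_intensity = [12.0, 10.0, 2.0, 1.0, 1.0, 2.0, 10.0, 10.0]
levels = {"1/3": 1 / 3, "2/3": 2 / 3, "full": 1.0}

# Simplification: amplitude is scaled linearly with the exertion level.
decisions = {
    name: gate([factor * x for x in full_intensity])
    for name, factor in levels.items()
}
```

Under these assumptions the \(\frac{1}{3}\) gesture falls below \(g\) and is reported as rest before any neighbour search takes place, while the two higher levels reach the classifier. A lower threshold would shift this boundary at the cost of more false activations at rest.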

Since the principal idea behind the use of random Fourier features is to fit cosine functions in the regression space, this might lead to unwanted behaviour at intermediate levels while showing better performance especially for full gesture exertion (and slightly also for low levels). For RR, a similar principle holds, except that linear instead of cosine functions are used. Probably, the assumption of linear dependency is valid for small intensities, hence the better success rates at the \(\frac{1}{3}\) exertion level when comparing RR to RR-RFF. For increasing intensities, the proportionality behaviour might change to other functional dependencies. Nevertheless, the regression approach should fit the level of full intensity since it was trained on that. This means a higher number of successes for full-intensity gestures. Regarding the basic user study, it has to be noted that RR-RFF performed significantly better than RR at full gesture exertion. There, kNN and DSM-kNN also had significantly higher success rates than the RR-based methods at intermediate and full gesture level. In the extended user study, kNN and DSM-kNN were significantly better than RR-RFF at all gesture levels.
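The cosine character of random Fourier features can be made concrete with a minimal sketch of the standard feature map \(z(x)=\sqrt{2/D}\,\cos(Wx+b)\) for a Gaussian kernel. The number of features, the bandwidth and the sample vector are hypothetical, and the parameters actually used in the study are not stated in this section.

```python
import math
import random

def make_rff_map(input_dim, num_features, bandwidth, seed=0):
    """Build a random Fourier feature map approximating a Gaussian kernel."""
    rng = random.Random(seed)
    # Random projection directions, drawn from N(0, 1 / bandwidth^2).
    weights = [
        [rng.gauss(0.0, 1.0 / bandwidth) for _ in range(input_dim)]
        for _ in range(num_features)
    ]
    # Random phase offsets, drawn uniformly from [0, 2*pi).
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def transform(sample):
        return [
            scale * math.cos(sum(w * x for w, x in zip(row, sample)) + phase)
            for row, phase in zip(weights, phases)
        ]

    return transform

transform = make_rff_map(input_dim=8, num_features=16, bandwidth=2.0)
sample = [4.0, 3.0, 1.0, 0.5, 0.5, 1.0, 3.0, 4.0]  # hypothetical full intensity
features_full = transform(sample)
features_third = transform([x / 3 for x in sample])
```

Every feature is a bounded cosine of a linear projection, so scaling the input amplitude does not scale the features proportionally. Ridge regression on such features is therefore not constrained to extrapolate linearly between rest and full intensity, whereas plain RR on the raw channels is.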

For the kNN-based methods, the highest success rates were achieved for wrist extension (and in the second user study also for wrist flexion). The reason for this could be that power grasp and pointing gesture are most probably mainly exerted by the same group of muscles, but the wrist gestures are not, which leads to better separability of those classes. Specifically, in the basic user study, the success rate difference between pointing gesture and wrist extension was significant; for DSM-kNN, power grasp also differed significantly from wrist extension. This might support the described explanation. In the extended user study, these differences were not significant.