Abstract

Peer feedback is regarded as playing a vital role in fostering pre-service teachers’ noticing and reasoning skills during technology integration. However, novices in particular (e.g., pre-service teachers) tend to provide rather superficial feedback, which does not necessarily contribute to professional development. Against this background, we developed an online video-annotation tool, LiveFeedback +, which allows for providing peer feedback on the quality of technology integration during microteachings in a fine-grained manner. Applying a design-based research approach (2 design cycles, N = 42 pre-service teachers, quasi-experimental interrupted time-series design), we investigated whether the addition of prompts (Cycle 1) and strategy instruction combined with prompts (Cycle 2) contributed to the quality of peer feedback. Contrary to our predictions, piecewise regressions demonstrated that pre-service teachers provided more feedback comments with superficial praise and fewer feedback comments with substantial problem identification and solutions when prompts were available. However, when pre-service teachers were explicitly instructed in strategy use, the reasoning during peer feedback could be enhanced to some extent, as pre-service teachers provided less praise and more problem diagnosis in feedback comments when strategy instruction was available. These findings suggest that the addition of strategy instruction that explicitly models adequate feedback strategies based on prompts can help overcome mediation deficits during peer feedback in technology-based settings.

Introduction

Digital transformation is regarded as one of the most drastic disruptions of the twenty-first century. As such, educational systems are required to continuously prepare students for a digitized society. In addition to other factors, such as school administration or the availability of infrastructure, there is a large consensus that teachers play a key role in technology integration and preparing students for the challenges of a digital future (e.g., Eickelmann et al., 2019). Therefore, (pre-service) teachers are required to notice potentials for educational technologies and adequately reason about these technologies in an instructionally meaningful manner (Backfisch et al., 2020). However, previous research has indicated that pre-service teachers are often challenged by the demand to notice and reason about the potential of educational technologies for teaching, as they simultaneously need to integrate technological knowledge (i.e., knowledge about the functions of technologies) and (subject-specific) pedagogical knowledge (i.e., knowledge about effective teaching strategies) across different teaching contexts.

A fruitful approach to support pre-service teachers’ noticing and reasoning skills in the context of technology integration is the implementation of authentic but guided teaching experiences, such as the use of video-based microteachings (Lachner et al., 2021a; Tondeur et al., 2012). In microteachings, pre-service teachers simulate scaled-down teaching situations (Cooper & Allen, 1970) with peer students and record them on video. The peer students subsequently provide formative feedback on the previously recorded teaching simulations. Peer feedback should trigger additional improvements by the receiver of the feedback and, even more so, by the provider of the feedback (Cho & MacArthur, 2010; Lachner et al., 2021b). Given that pre-service teachers often face difficulties in providing peer feedback in a substantial and constructive manner, a question is raised regarding how pre-service teachers can be supported to provide high-quality feedback on video-based microteachings.

In the current paper, we address this gap by presenting a new educational approach, the LiveFeedback + system, which allows for providing high-resolution peer feedback in a granular manner. Additionally, we present the findings of a design-based research study (2 cycles), in which we examined how prompts (Cycle 1) and strategy instruction based on worked examples combined with prompts (Cycle 2) affected the quality of pre-service teachers’ peer feedback in authentic courses on subject-specific technology integration in economic education.

Professional knowledge as a prerequisite for technology integration

Teachers’ professional knowledge related to integrating technology is often explained via the technological pedagogical content knowledge framework (TPACK; cf. Mishra, 2019; Mishra & Koehler, 2006). TPACK is based on general conceptualizations by Shulman (1986), who proposed three knowledge components for professional teaching (see also Baumert et al., 2010; Hill et al., 2008; Kunter et al., 2013): (1) content knowledge (CK), which is conceptualized as teachers’ subject-specific knowledge related to the course content; (2) pedagogical knowledge (PK), which is regarded as generic knowledge for designing meaningful learning environments that support students’ learning processes (Baumert et al., 2010; Voss et al., 2011); and (3) pedagogical content knowledge (PCK), which is knowledge about content-specific teaching and diagnostic strategies that help teachers adapt content knowledge to students' potential needs (Baumert et al., 2010; Hill et al., 2008; Shulman, 1986).

In their TPACK framework, Mishra and Koehler (2006) added technological knowledge (TK), that is, knowledge regarding educational technologies, as another facet. By adding technological knowledge, three additional “t-intersections” emerged (Scherer et al., 2017): (1) technological content knowledge (TCK), which comprises knowledge about subject-specific technologies; (2) technological pedagogical knowledge (TPK), which regards domain-general knowledge of technology for teaching (Koehler & Mishra, 2009; Scherer et al., 2017); and (3) technological pedagogical content knowledge (TPACK), which specifically refers to knowledge about strategies for applying technologies in subject-specific teaching (Koehler & Mishra, 2009).

The TPTI model for modeling TPACK in action

Beyond the mere availability of professional knowledge, fostering the acquisition of applicable TPACK, which is contextualized in different situations, is of utmost importance for successfully integrating technology into classrooms (Harris & Hofer, 2014; Lachner et al., 2019). We therefore based our study on the model of teachers’ professional competence for technology integration (TPTI model; see Fig. 1; Lachner et al., 2024), which describes teachers’ professional knowledge at two levels: the level of teaching processes and the level of learning processes.

Fig. 1 The TPTI model of Teachers’ Professional Competences for Technology Integration (Lachner et al., 2024)

At the level of teaching processes, the TPTI model comprises teachers’ professional competences for technology integration and their underlying processes for technology integration (see Fig. 1). Based on generic models of teachers’ professional competence (Baumert & Kunter, 2013) and professional vision (Goodwin, 1994; Jarodzka et al., 2021; Seidel & Stürmer, 2014; van Es & Sherin, 2002), teachers’ professional competences are considered multidimensional facets of professional competence in integrating technology, which includes TPACK (i.e., professional knowledge), teachers’ motivation (for empirical evidence, see, e.g., Backfisch et al., 2020, 2021), and teachers’ beliefs and the ability to self-regulate (e.g., Baier et al., 2019; Brianza et al., 2022; Fabian et al., 2024; Kunter et al., 2013). However, a pivotal aspect of applying professional competence in an authentic classroom setting is the underlying processes of technology integration. In this respect, the TPTI model postulates that technology integration is an iterative and reciprocal process in three stages. The first stage of noticing encompasses the teacher’s ability to pay attention to critical teaching situations in the classroom and, thus, to the relevant potentials of technology to enhance student learning. The second stage involves the teacher’s ability to critically reflect on and reason about relevant features of classroom instruction to attain learning (i.e., their professional vision; Lachner et al., 2016; Seidel & Stürmer, 2014), which is based on evidence-based principles of technology-related teaching and learning (Lachner et al., 2016, 2024). The third stage includes the teacher’s ability to successfully integrate technology to enhance students’ learning. In this regard, it has to be emphasized that noticing, reasoning, and acting are considered to be highly constrained by the situational context (Turner & Meyer, 2000), for example, by the availability of infrastructure and tools and by the involved students. These processes depend on each other and interact with the facets of technology-related professional competence.

At the level of learning processes, the technology-enhanced learning activities offered are seen as learning opportunities that the students can use to foster relevant processes of self-regulated learning (Nückles et al., 2020; Weinstein & Mayer, 1986).

Peer feedback to support technology integration

The TPTI model illustrates that technology integration is a demanding and complex teaching activity that requires adequate support in pre-service teacher education (Backfisch et al., 2020, 2021). Previous research has shown that, in particular, the use of formative feedback in the context of authentic experiences may boost pre-service teachers’ technology integration (Lachner et al., 2021a; Tondeur et al., 2012). Formative feedback can be seen as distinct information provided by an agent (e.g., teacher, peer, or a computer) regarding distinct aspects of a student’s performance (Hattie & Timperley, 2007), such as technology integration during teaching. As such, formative feedback may help with noticing and reasoning about critical events during technology integration. However, providing formative feedback is generally very laborious and time consuming (e.g., Cho & MacArthur, 2010). Thus, peer feedback is discussed as a useful alternative, as both the student who provides the feedback and the one who receives the feedback may benefit from formative feedback (Cho & MacArthur, 2010; Kleinknecht & Gröschner, 2016). In particular, generating feedback may engage pre-service teachers in evaluative judgments about the technology integration of their peers and may trigger reflection on their own technology integration (for related evidence in the context of writing, see Nicol et al., 2014; Patchan & Schunn, 2015). However, in such peer contexts, the quality of peer feedback is critical to attaining learning by providing feedback. Patchan and Schunn (2015), for example, showed that most peer comments comprise simple praise and often ignore critical aspects of the peers’ products, which suggests that peer feedback requires additional assistance to be effective.

The LiveFeedback + technology

To improve the quality of peer feedback, we developed the LiveFeedback + technology (see Fig. 2). LiveFeedback + was developed in cooperation between the University of Tübingen and the University of Kaiserslautern-Landau (RPTU) and will be further developed and maintained under the name smallPART (Self-Directed Media-Assisted Learning Lectures & Process Analysis Reflection Tool). LiveFeedback + is a web-based application that can be integrated into common learning management systems, such as Moodle or the open-source learning management system ILIAS (translated to English: "Integrated Learning, Information, and Co-Working System"). LiveFeedback + is an online learning environment that supports the immediate and collaborative reflection and analysis of video-recorded classroom activities (for more generic approaches, see Seidel & Stürmer, 2014). For a systematic analysis, classroom activities can be segmented within LiveFeedback + into specific (video) units. Additionally, it allows for providing peer feedback regarding distinct sequences of the assigned microteachings in a high-resolution and fine-grained manner.

In the current study, we integrated LiveFeedback + to let pre-service teachers observe video-recorded microteachings of peer students and provide formative feedback on peers’ quality of technology integration on selected video sequences. To scaffold the noticing, reasoning, and interpreting processes during the provision of feedback, we investigated the effects of adding prompts (Cycle 1) and strategy instruction combined with prompts (Cycle 2, see Fig. 3).

Enhancing the effectiveness of LiveFeedback +

LiveFeedback + could be a suitable technology for implementing fine-grained peer feedback regarding the quality of technology integration in authentic teaching settings, such as microteachings. Given that previous studies have emphasized that students require additional support to make peer feedback effective (i.e., students may benefit from both providing and receiving feedback), the aim of the current study was to develop an effective peer feedback environment. This environment aims to provide critical and substantial feedback regarding technology integration that emphasizes noticing, reasoning, and interpreting critical events during technology integration. We borrowed evidence-based instructional activities from general research on the science of learning (i.e., prompts and strategy instruction) and investigated whether adding these activities contributed to the quality of peer feedback (Table 1).

Peer feedback prompts

To stimulate distinct strategies during the provision of feedback, prompts have been shown to be a beneficial instructional support tool for focusing students’ attention (Gan & Hattie, 2014; Gielen et al., 2010; Nückles et al., 2009). Prompts are questions or hints that induce deep-level processing (King, 1992; Nückles et al., 2009; Pressley et al., 1992). They are regarded as strategy activators that may help activate students’ professional knowledge and contribute to the quality of the provided peer feedback. As such, prompts may be particularly effective when students already have the respective feedback strategy in their repertoire but lack the ability to realize it during the provision of feedback (i.e., production deficiency; see Nückles et al., 2009). In the context of technology integration, prompts that focus on the (subject-specific) instructional aspects of technology integration (i.e., TPACK) may help preservice teachers realize feedback that targets noticing, reasoning, and interpreting the potential of technology.

Peer feedback strategy instruction

When students do not have the particular strategies available (i.e., mediation deficiency; see Nückles et al., 2009), more direct instructional approaches may be necessary to support peer feedback. Strategy instruction is often rooted in theories of example-based learning, in which students acquire new skills (e.g., providing feedback) by observing prototypical examples of how to perform them adequately (Renkl, 2014). Novice students can profit from studying a detailed step-by-step solution to a task before attempting to realize the task (i.e., feedback) themselves (Renkl, 2014; Van Gog & Rummel, 2010). As such, studying with strategy instruction reduces extraneous processing and may free available memory resources so that students can construct an adequate task schema (Cooper & Sweller, 1987; Renkl, 2014). Strategy instruction may also include a screen recording of the expert model’s behavior and verbal explanations of the problem-solving steps (Hoogerheide et al., 2016; McLaren et al., 2008; Omarchevska et al., 2021; van Wermeskerken et al., 2018).

Overview of the current study

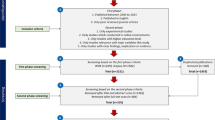

In the current in-situ study, we investigated whether and how providing peer feedback may foster the ability to notice the potentials of educational technologies and to adequately reason about these technologies in an instructionally meaningful manner. Therefore, we investigated whether technology-mediated peer feedback via LiveFeedback + could be enhanced by adding feedback prompts and strategy instruction. An interdisciplinary research team consisting of subject pedagogy experts in economic education, learning researchers and educational technologists was involved in designing the learning environment. The study was implemented in two subject pedagogy courses (economic education). In these courses, pre-service teachers provided feedback on the quality of the video-recorded microteachings of their peers. We implemented two design cycles in our study to investigate whether adding prompts (Cycle 1) and strategy instruction combined with prompts (Cycle 2) aided in the provision of high-quality peer feedback (see Fig. 3).

Our aim was not to experimentally investigate whether prompts are more effective scaffolds than strategy instruction but rather to explore effective conditions under which subject-specific technology-mediated peer feedback can be implemented in authentic and ecologically valid microteaching scenarios to support pre-service teachers in providing elaborated feedback. By applying an interrupted time-series design, which can be regarded as a robust quasi-experimental approach (Hudson et al., 2019), we compared control phases comprising several measuring points in which pre-service teachers interacted without additional scaffolds (Cycle 1: no prompts; Cycle 2: no strategy instruction combined with prompts) with intervention phases that included a scaffold (Cycle 1: with prompts; Cycle 2: strategy instruction combined with prompts). This procedure allowed us to control for potential variability in our population.

We addressed the following research questions:

(1) Does the addition of feedback prompts (Cycle 1) contribute to the quality of peer feedback?

(2) Does the further addition of strategy instruction combined with prompts (Cycle 2) contribute to the quality of peer feedback?

To explore our research questions, we applied a multifaceted coding scheme to assess the quality of the peer feedback. From a process-oriented perspective, we assessed the level of feedback specificity by adopting the framework by Patchan and Schunn (2015), in which we investigated whether pre-service teachers provided only praise or were able to notice, reason, and interpret critical events in the microteachings. From a content perspective, we analyzed the focus of the feedback, that is, whether pre-service teachers focused on superficial features (e.g., off-task behavior) or deep-structure features (e.g., cognitive activation, instructional support, classroom management, and technology exploitation) of teaching quality (see Backfisch et al., 2021). Given that most pre-service teachers were novices in technology-enhanced teaching, we mainly assumed systematic differences between the control and intervention phases regarding the feedback specificity, but not regarding the feedback content.

Methods

Research context

To improve pre-service teachers’ quality of peer feedback, we implemented an intervention (i.e., online learning environment) in two regular courses in economic education at the University of Tübingen, a university with approximately 28,000 students. The topic of the courses included subject-specific teaching approaches, with an emphasis on technology integration.

The courses were part of the compulsory curriculum in the Bachelor of Education program. Both courses were comparable in content and instructional structure and were implemented in two consecutive semesters. The courses comprised 14 weekly sessions. The first course was conducted face to face. The second course was conducted online with eight synchronous and six asynchronous sessions due to the COVID-19 pandemic. In the two courses, pre-service teachers were trained in planning economics lessons and integrating technology.

In the introduction phase, pre-service teachers received direct instruction regarding the potential of educational technologies for teaching economics. In the planning phase, they developed lesson plans on a selected economic topic (e.g., budget plan, sustainable consumption, free trade, or protectionism). Subsequently, the lesson plans were implemented in microteachings, which were about 20 min long and covered different topics of economic education. In both variants (offline and online), some of the pre-service teachers simulated the role of the students during the microteachings. A total of 32 microteachings were conducted and video-recorded.

In the peer feedback phase for both cycles of the research, the pre-service teachers provided feedback only via the online learning environment LiveFeedback +. The students were required to assess three videos on average per cycle.

In total, 42 pre-service teachers agreed to participate in the study (Cycle 1: n = 20; Cycle 2: n = 22). In the first course, only one person did not participate in the study. No data were collected from this person. In the second course, all pre-service teachers who were enrolled participated in the study. All pre-service teachers who participated were German native speakers, and 69% were female. They were undergraduate students with a major in teaching economics and, on average, were in their fourth semester. Based on their advancement within the curriculum, the pre-service teachers had little formal experience in technology integration and low levels of teaching experience. As such, they can be regarded as novices in technology integration. All pre-service teachers gave written consent to participate in the study.

Design of the peer feedback intervention

In the present study, we adopted a design-based research approach (Anderson & Shattuck, 2012; Collins et al., 2004) and conducted an in-situ quasi-experimental study in an authentic teaching environment comprising comparisons of control and intervention phases. The design and implementation of the peer feedback intervention were realized by adopting a co-design approach comprising an interdisciplinary research team (Roschelle et al., 2006). In total, we conducted two design cycles over the course of two semesters in which we examined the role of prompts (Cycle 1) and strategy instruction combined with prompts (Cycle 2) on the quality of peer feedback (see Fig. 3). In the beginning, we intended to have a theory- and requirements-driven design for the learning environment (Anderson & Shattuck, 2012). Depending on the analyzed findings from the first design cycle, a re-design phase, including an enhanced implementation of strategy instruction, was initiated.

The peer feedback intervention was implemented within the last third of the courses.

Cycle 1: design of prompts

To enhance the quality of feedback during interaction with LiveFeedback +, we deliberately designed a set of prompts to trigger noticing, reasoning, and interpretation processes during the provision of peer feedback. To this end, we focused on both the specificity of the feedback and the focus of the feedback by providing pre-service teachers with a set of prompts to judge the quality of the observed microteachings in a granular manner. The prompts were intended to guide pre-service teachers’ noticing, reasoning, and interpreting and to direct their attention to substantial features of the videos during interaction with the LiveFeedback + system (see Table 1). Overall, pre-service teachers received nine specific prompts (three per category). The prompts were expected to help pre-service teachers activate distinct feedback strategies and serve as a strategy activator to overcome potential production deficiencies.

Cycle 2: strategy instruction combined with prompts

To further enhance the quality of peer feedback in Cycle 2, we implemented strategy instruction in addition to the prompts, taking a more direct approach to fostering pre-service teachers’ ability to provide high-quality feedback. We followed an example-based learning approach (Renkl, 2014) to design the strategy instruction. We developed an exhaustive demonstration video in which a female expert modeled specific feedback strategies on how a) to detect relevant classroom activities (i.e., noticing) and b) to diagnose (i.e., reasoning) and provide constructive solutions (i.e., interpretation) within substantial feedback. The process-oriented demonstration contained a worked-out procedure for providing high-quality feedback and conceptual information regarding why certain steps were accomplished (Lachner et al., 2019; Van Gog et al., 2008). The demonstration was provided through a screencast video and was 13 min long. Given that the demonstration video modeled explicit feedback strategies, the strategy instruction aimed to overcome mediation deficiencies.

Study design and analytic strategies

Study design

We realized an interrupted time-series design with a comparable duration of both cycles (Cycle 1: control phase [no prompts], May 14–May 28, 2019; intervention phase [with prompts], June 18–July 16, 2019; Cycle 2: control phase [no strategy instruction combined with prompts], June 20–July 1, 2020; intervention phase [with strategy instruction], July 2–July 23, 2020). Interrupted time-series designs are particularly suitable in authentic contexts, such as high-stakes classroom contexts (as was the case in our study), in which a direct manipulation of an intervention would not be warranted. At the same time, interrupted time-series designs allow controlling for variability and change. They are considered to be relatively robust quasi-experimental approaches (Hudson et al., 2019).
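The phase windows reported above map directly onto the dummy variable that contrasts control and intervention phases. The following is a minimal Python sketch of that coding, assuming that each feedback comment carries a submission date; the names `PHASES` and `phase_dummy` are hypothetical and not part of the study materials.

```python
from datetime import date

# Phase windows as reported in the study design (bounds treated as inclusive,
# which is an assumption of this sketch).
PHASES = {
    1: {"control": (date(2019, 5, 14), date(2019, 5, 28)),
        "intervention": (date(2019, 6, 18), date(2019, 7, 16))},
    2: {"control": (date(2020, 6, 20), date(2020, 7, 1)),
        "intervention": (date(2020, 7, 2), date(2020, 7, 23))},
}


def phase_dummy(cycle, day):
    """Return 0 for the control phase and 1 for the intervention phase.

    Returns None when the date lies outside both windows of the cycle,
    for example in the gap between May 28 and June 18, 2019.
    """
    for code, name in enumerate(("control", "intervention")):
        start, end = PHASES[cycle][name]
        if start <= day <= end:
            return code
    return None
```

For instance, `phase_dummy(1, date(2019, 5, 20))` falls into the Cycle 1 control window, whereas `phase_dummy(2, date(2020, 7, 2))` falls into the Cycle 2 intervention window.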

Analysis of the quality of peer feedback

Our main dependent variable was peer feedback quality. To assess peer feedback quality, we first segmented the peer feedback; that is, if a feedback sequence comprised several ideas, it was segmented into further idea units (see Chi, 1997). Each feedback segment was assessed with regard to feedback specificity and feedback focus.

For feedback specificity, we adopted a coding scheme by Patchan and Schunn (2015) that aligns with the TPTI model’s focus on the iterative processes of noticing and reasoning about technology integration in the classroom. For instance, within the context of technology-enhanced teaching, pre-service teachers are required (1) to notice, that is, recognize the potential of educational technologies in learning contexts, and (2) to interpret and reason, that is, evaluate the potential of technologies for enhancing teaching quality. Therefore, our feedback categories were structured as follows: (a) praise, which comprised feedback segments that solely provided positive feedback at the self-level without reasoning (for examples, see Table 2); (b) problem detection, which included feedback segments in which pre-service teachers only noticed critical events during the observed microteaching; (c) problem diagnosis, which refers to feedback segments in which pre-service teachers explicitly reasoned and justified within their feedback; and (d) solution suggestion, which comprised feedback segments in which pre-service teachers interpreted and justified the problem situation and proposed potential solutions to address the problem.

For feedback focus, we applied generic assessments of (technology-enhanced) teaching to measure the focus of the feedback based on the dimensions of teaching quality. In addition to the three generic dimensions of teaching quality, we coded technology exploitation as a further dimension of technology-enhanced teaching (Backfisch et al., 2020; Praetorius et al., 2018). Off-task behavior constituted feedback segments, which were related to superficial aspects of teaching (see Table 2 for examples).

Each feedback sequence was assessed for feedback specificity and feedback focus. A second rater coded 25% of the peer feedback. Interrater reliability was good, with Cohen’s κ > 0.79 (Wirtz & Caspar, 2002).
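To illustrate how an agreement statistic of this kind is obtained, the following minimal Python sketch computes Cohen’s κ for two raters who each assign one nominal code per feedback segment. The function name and the example codes are made up for illustration and are not data from the study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one nominal code per segment."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of segments")
    n = len(rater_a)
    # Proportion of segments on which both raters chose the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one and the same category throughout
    return (observed - expected) / (1.0 - expected)


# Invented codes for six segments (illustration only).
codes_a = ["praise", "praise", "detection", "diagnosis", "solution", "praise"]
codes_b = ["praise", "praise", "detection", "detection", "solution", "praise"]
kappa = cohens_kappa(codes_a, codes_b)
```

In this invented example, the raters agree on five of six segments (observed agreement 5/6) with a chance agreement of 1/3, which gives κ = 0.75.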

Statistical analyses

As we had a nested data structure (videos were nested within pre-service teachers as each pre-service teacher provided feedback on multiple videos), we conducted mixed models (Hox, 2013) to account for the multilevel data structure in our analyses and to account for intra-individual variance. These approaches are considered superior to more common approaches such as repeated measures ANOVAs. Additionally, to contrast differences between the control phase and the intervention phase, we used piecewise multilevel regression models (Ning & Luo, 2017) to segment our measurement points into meaningful time units (i.e., control phase vs. intervention phase) by applying simple dummy coding. We used the lme4 package in R to fit these models (Hox, 2013). The dependent variables comprised the different dimensions of peer feedback quality (feedback specificity and feedback focus; see Table 3).
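One common way to write such a model, with a dummy-coded phase and a random intercept per pre-service teacher, is sketched below. This is an assumed specification for illustration only; the exact model formula is not reported here, and the symbols are introduced for this sketch.

```latex
\begin{aligned}
y_{ij} &= \beta_{0j} + \beta_{1}\,\mathrm{Phase}_{ij} + e_{ij}, \\
\beta_{0j} &= \gamma_{00} + u_{0j}, \qquad
u_{0j} \sim \mathcal{N}(0, \tau^{2}), \quad
e_{ij} \sim \mathcal{N}(0, \sigma^{2})
\end{aligned}
```

Here, $y_{ij}$ denotes a feedback quality measure (e.g., the percentage of praise) for video $i$ assessed by pre-service teacher $j$, $\mathrm{Phase}_{ij}$ is 0 in the control phase and 1 in the intervention phase, and $\beta_{1}$ captures the difference between the two phases. Under these assumptions, the corresponding lme4 model formula would take the form `y ~ phase + (1 | teacher)`, where the variable names are hypothetical.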

Findings

Descriptive findings

Across the two design cycles, 42 pre-service teachers provided 2,646 peer feedback segments (Cycle 1 = 1,898; Cycle 2 = 748). The descriptive statistics of the two cycles can be seen in Table 3. The means and ranges of the percentage values were calculated for each variable. The number of peer feedback comments per video was not limited; that is, participants could provide as many peer feedback annotations as they wanted. Therefore, we added the range for each variable to consider the varying percentages of annotations for each variable. As expected, the pre-service teachers were more likely to provide praise than critical feedback. Likewise, pre-service teachers were more likely to concentrate on aspects of classroom management but not on content-specific or technology-related factors.
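The means and ranges of percentage values can be made concrete with a minimal Python sketch. It assumes that each coded segment is stored as a (participant, category) pair and that percentages are formed per participant; both the data layout and the unit of aggregation are assumptions of this sketch, and the example codes are invented.

```python
from collections import Counter, defaultdict


def percentage_summary(segments, category):
    """Mean and range of the per-participant percentage of `category`.

    `segments` is an iterable of (participant_id, category) pairs.
    """
    per_participant = defaultdict(Counter)
    for participant, code in segments:
        per_participant[participant][code] += 1
    if not per_participant:
        raise ValueError("no segments")
    # Share of the target category among each participant's segments.
    shares = [
        100.0 * counts[category] / sum(counts.values())
        for counts in per_participant.values()
    ]
    return {
        "mean": sum(shares) / len(shares),
        "min": min(shares),
        "max": max(shares),
    }


# Invented codes for two participants (not data from the study).
example = [
    ("p1", "praise"), ("p1", "praise"),
    ("p1", "problem diagnosis"), ("p1", "solution suggestion"),
    ("p2", "praise"), ("p2", "problem detection"),
    ("p2", "problem detection"), ("p2", "problem diagnosis"),
]
summary = percentage_summary(example, "praise")
```

In this invented example, praise makes up 50% of the segments of the first participant and 25% of the segments of the second, so the mean is 37.5% with a range of 25% to 50%. Reporting the range alongside the mean reflects that participants could contribute different numbers of annotations.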

In the first step, we computed simple Pearson correlations to detect potential relationships among our dependent measures. In both cycles, the different types of feedback specificity (i.e., praise, problem detection, problem diagnosis, and solution) were not substantially related to each other, which suggests that the occurrence of a distinct level of feedback specificity was not necessarily dependent on another level. The same holds true for the type of feedback focus, as only weak correlations were found among measures of teaching quality (see Tables 3, 4, 5).

RQ (1): Does the addition of feedback prompts (Cycle 1) contribute to the quality of peer feedback?

To investigate whether the availability of feedback prompts contributed to the quality of peer feedback, we computed piecewise multilevel regressions (for the complete test statistics, see Table 6). Regarding feedback specificity, we obtained a significant effect for praise (β = 0.80, large effect), problem diagnosis (β = -0.51, medium effect), and solution suggestion (β = -0.49, medium effect). However, contrary to our predictions, the effects pointed in the undesired direction: pre-service teachers provided more praise and fewer problem diagnoses and solution suggestions in the intervention phase than in the control phase, that is, a lower level of feedback specificity. Regarding the focus of feedback, none of the effects approached significance (see Table 6). Therefore, our findings suggest that the addition of prompts does not contribute to the quality of feedback. Given that the overall occurrence of substantial comments and adequate strategies was rather low, our findings suggest that students had a mediation deficit and did not possess adequate strategies for providing adequate peer feedback. Consequently, we conducted another research cycle.

RQ (2): Does the further addition of strategy instruction combined with prompts (Cycle 2) contribute to the quality of peer feedback?

In Cycle 2, we investigated whether strategy instruction combined with prompts that explicitly modeled adequate feedback strategies with a high level of specificity contributed to the quality of peer feedback. The analyses (for complete test statistics, see Table 6) partly confirmed our predictions, as pre-service teachers offered fewer praise comments (β = -0.60, medium effect) and more diagnosis comments (β = 0.75, large effect) in the intervention phase than in the control phase. Again, we did not find differences between the control and intervention phases regarding the feedback focus (see Table 6).

Discussion

In the present study, we implemented a video-based feedback system in an authentic pre-service teacher education course to support pre-service teachers’ noticing, reasoning, and interpreting of processes during technology integration (for related approaches in generic teaching, see Kleinknecht & Gröschner, 2016; Seidel & Stürmer, 2014). The TPTI model (Lachner et al., 2024), which synthesizes generic research on professional competence (e.g., Baumert & Kunter, 2013), professional vision (e.g., Seidel & Stürmer, 2014; van Es & Sherin, 2002), and technology integration (Backfisch et al., 2021; Mishra & Koehler, 2006), served as a basis to design our peer feedback intervention. In the empirical part of the paper, we investigated whether and how prompts and strategy instruction combined with prompts can enhance technology-mediated peer feedback (via LiveFeedback +).

Cycle 1: intervention phase with prompts

In Cycle 1, we did not find any benefits of prompts. Instead, we found that pre-service teachers provided more praise statements but fewer statements regarding problem diagnosis and problem solution in the intervention phase than in the control phase. Overall, the proportion of specific statements was rather low, as pre-service teachers primarily relied on praise statements. This may indicate that, in contrast with our presumptions, pre-service teachers did not have proficient feedback strategies to provide substantial critical feedback (see also Patchan & Schunn, 2015). Therefore, we attribute these unexpected findings to a mediation deficit: pre-service teachers probably did not have sufficient strategic knowledge to enact productive feedback strategies (Nückles et al., 2009).

Cycle 2: intervention phase with strategy instruction combined with prompts

In Cycle 2, we added a more direct instruction approach by implementing video-based strategy instruction in which adequate feedback strategies were explicitly modeled by means of example-based learning (Renkl, 2014; Van Gog & Rummel, 2010). We demonstrated that pre-service teachers provided fewer praise statements and more problem diagnosis statements in the intervention phase, suggesting that pre-service teachers were inclined to provide more feedback statements that incorporated distinct reasoning processes, which are regarded as beneficial for technology integration. However, the feedback content still concentrated more on superficial aspects of teaching (i.e., classroom management) than on technology-specific aspects (i.e., technology integration). We attribute this finding to the fact that the strategy instruction primarily relied on modeling feedback strategies but not on content-specific aspects.

These findings corroborate the scarce evidence that the combination of prompts and strategy instruction does not necessarily result in additive effects for learning (for related findings, see Omarchevska et al., 2021; Wischgoll, 2017), particularly when pre-service teachers have low prior knowledge in the content domain (e.g., technology integration). For instance, in the domain of scientific reasoning, Omarchevska et al. (2021) found no effects of monitoring prompts in addition to video-based modeling examples, as only the provision of examples accounted for learning. We would consequently argue that more content-specific instruction, such as pretraining in the target domain, should be mandatory so that pre-service teachers have sufficient prior knowledge available to provide rich content-specific feedback on technology integration, thereby further advancing their learning (for general discussions of prior knowledge in the context of generative activities, see Lachner et al., 2021a). Adding content-specific instruction in a third cycle could therefore help fully exploit the potential of technology-mediated feedback systems, such as LiveFeedback +. To this end, more research that explicitly investigates the orchestration of instructional approaches (e.g., combinations of instructional strategies, such as feedback, pretraining, and strategy instruction) is needed.

Limitations

Despite the potential merits of our study, we also need to address some limitations. First, a third cycle would have been helpful to substantiate the results regarding the provision of prompt-based feedback. At the same time, our findings are in line with experimental research that has combined strategy instruction and prompt-based feedback in different contexts (e.g., metacognitive feedback: Omarchevska et al., 2021; professional vision: Tschönhens et al., 2023). Furthermore, given that the majority of publications have realized only one design cycle (for a systematic review, see Zheng, 2015), we argue that two cycles could suffice to make a sensible contribution to the field of pre-service teacher education and educational technology. Second, regarding the generalizability of our findings, we opted to implement our in-situ study in an authentic pre-service teacher education course in economic education with a relatively restricted sample size. This procedure likely contributed to the ecological validity of our findings. However, we see a need for more experimental designs with higher levels of control, as large variances (i.e., inter- but also intra-individual variability per variable) are more likely to occur in natural settings. In addition, we must note that our findings cannot be interpreted as causal, since we compared the effects of interventions within and across two different pre-service teacher populations and settings (i.e., face-to-face vs. online setting during COVID-19). Nevertheless, because we applied an interrupted time-series design, which can be regarded as a relatively strong quasi-experimental design, at least some conclusions regarding the design of technology-mediated peer feedback can be drawn.

Future research and conclusions

Further research should replicate the obtained findings by implementing more robust experimental designs with sufficient statistical power to draw causal conclusions regarding the role of instructional interventions in the context of technology-mediated peer feedback. Finally, from the perspective of learning by providing feedback (Cho & MacArthur, 2010), it would be interesting to discover whether the addition of prompts and strategy instruction combined with prompts resulted not only in higher levels of noticing and reasoning during the provision of peer feedback but also in higher levels of professional knowledge. Therefore, future studies should include additional performance assessments to investigate whether providing peer feedback aids learning.

Altogether, our findings show that technology-mediated peer feedback can be enhanced by adding strategy instruction (combined with prompts). Strategy instruction particularly contributed to pre-service teachers’ reasoning during peer feedback.

Thus, our findings demonstrate how technology-mediated peer feedback can be made fruitful in pre-service teacher education. As such, our results can contribute to a better understanding of the potential boundary conditions of supporting pre-service teachers’ technology integration.

Data availability

Not applicable.

References

Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41(1), 16–25. https://doi.org/10.3102/0013189X1142881

Backfisch, I., Lachner, A., Hische, C., Loose, F., & Scheiter, K. (2020). Professional knowledge or motivation? Investigating the role of teachers’ expertise on the quality of technology-enhanced lesson plans. Learning and Instruction, 66, 101300. https://doi.org/10.1016/j.learninstruc.2019.101300

Backfisch, I., Lachner, A., Stürmer, K., & Scheiter, K. (2021). Variability of teachers’ technology integration in the classroom: A matter of utility! Computers & Education, 166, 104159. https://doi.org/10.1016/j.compedu.2021.104159

Baier, F., Decker, A.-T., Voss, T., Kleickmann, T., Klusmann, U., & Kunter, M. (2019). What makes a good teacher? The relative importance of mathematics teachers’ cognitive ability, personality, knowledge, beliefs, and motivation for instructional quality. British Journal of Educational Psychology, 89, 767–786. https://doi.org/10.1111/bjep.12256

Baumert, J., & Kunter, M. (2013). Professionelle Kompetenz von Lehrkräften. In I. Gogolin, H. Kuper, H. H. Krüger, & J. Baumert (Eds.), Stichwort: Zeitschrift für Erziehungswissenschaft (pp. 277–337). Springer VS. https://doi.org/10.1007/978-3-658-00908-3_13

Baumert, J., Kunter, M., Blum, W., Brunner, M., Voss, T., Jordan, A., Klusmann, U., Krauss, S., Neubrand, M., & Tsai, Y.-M. (2010). Teachers’ mathematical knowledge, cognitive activation in the classroom, and student progress. American Educational Research Journal, 47(1), 133–180. https://doi.org/10.3102/0002831209345157

Brianza, E., Schmid, M., Tondeur, J., & Petko, D. (2022). Investigating contextual knowledge within TPACK: How has it been done empirically so far? In Society for Information Technology & Teacher Education international conference (pp. 2204–2212). Association for the Advancement of Computing in Education (AACE).

Chi, M. T. H. (1997). Quantifying qualitative analyses of verbal data: A practical guide. The Journal of the Learning Sciences, 6(3), 271–315. https://doi.org/10.1207/s15327809jls0603_1

Cho, K., & MacArthur, C. (2010). Student revision with peer and expert reviewing. Learning and Instruction, 20(4), 328–338. https://doi.org/10.1016/j.learninstruc.2009.08.006

Collins, A., Joseph, D., & Bielaczyc, K. (2004). Design research: Theoretical and methodological issues. Journal of the Learning Sciences, 13(1), 15–42. https://doi.org/10.1207/s15327809jls1301_2

Cooper, J., & Allen, D. W. (1970). Microteaching: History and present status. ERIC Clearinghouse on Teacher Education.

Cooper, G., & Sweller, J. (1987). Effects of schema acquisition and rule automation on mathematical problem-solving transfer. Journal of Educational Psychology, 79(4), 347–362.

Eickelmann, B., Gerick, J., Labusch, A., & Vennemann, M. (2019). Schulische Voraussetzungen als Lern- und Lehrbedingungen in den ICILS-2018-Teilnehmerländern. In B. Eickelmann, W. Bos, J. Gerick, F. Goldhammer, H. Schaumburg, K. Schwippert, M. Senkbeil, & J. Vahrenhold (Eds.), ICILS 2018 #Deutschland. Computer- und informationsbezogene Kompetenzen von Schülerinnen und Schülern im zweiten internationalen Vergleich und Kompetenzen im Bereich Computational Thinking (pp. 137–171). Waxmann.

Fabian, A., Fütterer, T., Backfisch, I., Lunowa, E., Paravicini, W., Hübner, N., & Lachner, A. (2024). Unraveling TPACK: Investigating the inherent structure of TPACK from a subject-specific angle using test-based instruments. Computers & Education, 217, 105040. https://doi.org/10.1016/j.compedu.2024.105040

Gan, M. J. S., & Hattie, J. (2014). Prompting secondary students’ use of criteria, feedback specificity and feedback levels during an investigative task. Instructional Science, 42, 861–878. https://doi.org/10.1007/s11251-014-9319-4

Gielen, S., Peeters, E., Dochy, F., Onghena, P., & Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learning and Instruction, 20, 304–315. https://doi.org/10.1016/j.learninstruc.2009.08.007

Goodwin, C. (1994). Professional vision. American Anthropologist, 96(3), 606–633. https://doi.org/10.1525/aa.1994.96.3.02a00100

Harris, J. B., & Hofer, M. J. (2014). Technological pedagogical content knowledge (TPACK) in action. Journal of Research on Technology in Education, 43(3), 211–229. https://doi.org/10.1080/15391523.2011.10782570

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

Hill, H. C., Blunk, M. L., Charalambous, C. Y., Lewis, J. M., Phelps, G. C., Sleep, L., & Ball, D. L. (2008). Mathematical knowledge for teaching and the mathematical quality of instruction: An exploratory study. Cognition and Instruction, 26(4), 430–511. https://doi.org/10.1080/07370000802177235

Hoogerheide, V., van Wermeskerken, M., Loyens, S. M., & Van Gog, T. (2016). Learning from video modeling examples: Content kept equal, adults are more effective models than peers. Learning and Instruction, 44, 22–30. https://doi.org/10.1016/j.learninstruc.2016.02.004

Hox, J. J. (2013). Multilevel regression and multilevel structural equation modeling. The Oxford Handbook of Quantitative Methods, 2(1), 281–294.

Hudson, J., Fielding, S., & Ramsay, C. R. (2019). Methodology and reporting characteristics of studies using interrupted time series design in healthcare. BMC Medical Research Methodology, 19, 137. https://doi.org/10.1186/s12874-019-0777-x

Jarodzka, H., Skuballa, I., & Gruber, H. (2021). Eye-tracking in educational practice: Investigating visual perception underlying teaching and learning in the classroom. Educational Psychology Review, 33(1), 1–10. https://doi.org/10.1007/s10648-020-09565-7

King, A. (1992). Comparison of self-questioning, summarizing, and notetaking-review as strategies for learning from lectures. American Educational Research Journal, 29(2), 303–323. https://doi.org/10.3102/00028312029002303

Kleinknecht, M., & Gröschner, A. (2016). Fostering preservice teachers’ noticing with structured video feedback: Results of an online- and video-based intervention study. Teaching and Teacher Education, 59, 45–56. https://doi.org/10.1016/j.tate.2016.05.020

Koehler, M. J., & Mishra, P. (2009). What is technological pedagogical content knowledge? Contemporary Issues in Technology and Teacher Education (CITE), 9(1), 60–70.

Kunter, M., Klusmann, U., Baumert, J., Richter, D., Voss, T., & Hachfeld, A. (2013). Professional competence of teachers: Effects on instructional quality and student development. Journal of Educational Psychology, 105(3), 805–820. https://doi.org/10.1037/a0032583

Lachner, A., Backfisch, I., & Stürmer, K. (2019). A test-based approach of modeling and measuring technological pedagogical knowledge. Computers & Education, 142, 103645. https://doi.org/10.1016/j.compedu.2019.103645

Lachner, A., Jarodzka, H., & Nückles, M. (2016). What makes an expert teacher? Investigating teachers’ professional vision and discourse abilities. Instructional Science, 44(3), 197–203. https://doi.org/10.1007/s11251-016-9376-y

Lachner, A., Fabian, A., Franke, U., Preiß, J., Jacob, L., Führer, C., Küchler, U., Paravicini, W., Randler, T., & Thomas, P. (2021a). Fostering pre-service teachers’ technological pedagogical content knowledge (TPACK): A quasi-experimental field study. Computers & Education, 174, 104304. https://doi.org/10.1016/j.compedu.2021.104304

Lachner, A., Hoogerheide, V., Van Gog, T., & Renkl, A. (2021b). Learning-by-teaching without audience presence or interaction: When and why does it work? Educational Psychology Review, 34(2), 575–607. https://doi.org/10.1007/s10648-021-09643-4

Lachner, A., Backfisch, I., & Franke, U. (2024). Towards an integrated perspective of teachers' technology integration: A preliminary model and future research directions. Frontline Learning Research, 12(1), 1–15. https://doi.org/10.14786/flr.v12i1.1179

McLaren, B. M., Lim, S., & Koedinger, K. R. (2008). When and how often should worked examples be given to students? New results and a summary of the current state of research. In B. C. Love, K. McRae, & V. M. Sloutsky (Eds.), Proceedings of the 30th annual conference of the Cognitive Science Society (pp. 2176–2181). Cognitive Science Society.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge (TPCK): Confronting the wicked problems of teaching with technology. In C. Crawford et al. (Eds.), Proceedings of Society for Information Technology and Teacher Education international conference 2007 (pp. 2214–2226). Association for the Advancement of Computing in Education.

Mishra, P. (2019). Considering contextual knowledge: The TPACK diagram gets an upgrade. Journal of Digital Learning in Teacher Education, 35(2), 76–78. https://doi.org/10.1080/21532974.2019.1588611

Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: A peer review perspective. Assessment & Evaluation in Higher Education, 39(1), 102–122. https://doi.org/10.1080/02602938.2013.795518

Ning, L., & Luo, W. (2017). Specifying turning point in piecewise growth curve models: Challenges and solutions. Frontiers in Applied Mathematics and Statistics. https://doi.org/10.3389/fams.2017.00019

Nückles, M., Hübner, S., & Renkl, A. (2009). Enhancing self-regulated learning by writing learning protocols. Learning and Instruction, 19(3), 259–271. https://doi.org/10.1016/j.learninstruc.2008.05.002

Nückles, M., Roelle, J., Glogger-Frey, I., Waldeyer, J., & Renkl, A. (2020). The self-regulation-view in writing-to-learn: Using journal writing to optimize cognitive load in self-regulated learning. Educational Psychology Review, 32(4), 1089–1126. https://doi.org/10.1007/s10648-020-09541-1

Omarchevska, Y., Lachner, A., Richter, J., & Scheiter, K. (2021). Do video modeling and metacognitive prompts improve self-regulated scientific inquiry? Educational Psychology Review, 34(2), 1025–1061.

Patchan, M. M., & Schunn, C. D. (2015). Understanding the benefits of providing peer feedback: How students respond to peers’ texts of varying quality. Instructional Science, 43, 591–614. https://doi.org/10.1007/s11251-015-9353-x

Praetorius, A.-K., Klieme, E., Herbert, B., & Pinger, P. (2018). Generic dimensions of teaching quality: The German framework of three basic dimensions. ZDM, 50, 407–426. https://doi.org/10.1007/s11858-018-0918-4

Pressley, M., El-Dinary, P. B., Marks, M. B., Brown, R., & Stein, S. (1992). Good strategy instruction is motivating and interesting. In K. A. Renninger, S. Hidi, & A. Krapp (Eds.), The role of interest in learning and development (pp. 333–358). Lawrence Erlbaum Associates Inc.

Renkl, A. (2014). Toward an instructionally oriented theory of example-based learning. Cognitive Science, 38(1), 1–37. https://doi.org/10.1111/cogs.12086

Roschelle, J., Penuel, W., & Shechtman, N. (2006). Co-design of innovations with teachers: Definition and dynamics. In S. A. Barab, K. E. Hay, & D. T. Hickey (Eds.), The international conference of the learning sciences: Indiana University 2006. Proceedings of ICLS 2006 (Vol. 2, pp. 606–612). International Society of the Learning Sciences.

Scherer, R., Tondeur, J., & Siddiq, F. (2017). On the quest for validity: Testing the factor structure and measurement invariance of the technology-dimensions in the technological, pedagogical, and content knowledge (TPACK) model. Computers & Education, 112, 1–17. https://doi.org/10.1016/j.compedu.2017.04.012

Seidel, T., & Stürmer, K. (2014). Modeling and measuring the structure of professional vision in preservice teachers. American Educational Research Journal, 51(4), 739–771. https://doi.org/10.3102/0002831214531321

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14. https://doi.org/10.3102/0013189X015002004

Tondeur, J., van Braak, J., Sang, G., Voogt, J., Fisser, P., & Ottenbreit-Leftwich, A. (2012). Preparing pre-service teachers to integrate technology in education: A synthesis of qualitative evidence. Computers & Education, 59(1), 134–144. https://doi.org/10.1016/j.compedu.2011.10.009

Tschönhens, F., Fütterer, T., Franke, U., Stürmer, K., & Lachner, A. (2023, August). Video annotations to support pre-service teachers’ professional vision for technology integration [Presentation]. EARLI 2023. Thessaloniki, Greece.

Turner, J. C., & Meyer, D. K. (2000). Studying and understanding the instructional contexts of classrooms: Using our past to forge our future. Educational Psychologist, 35(2), 69–85. https://doi.org/10.1207/S15326985EP3502_2

van Es, E. A., & Sherin, M. G. (2002). Learning to notice: Scaffolding new teachers’ interpretations of classroom interactions. Journal of Technology and Teacher Education, 10(4), 571–596. https://www.learntechlib.org/primary/p/9171/

Van Gog, T., Paas, F., & van Merriënboer, J. (2008). Effects of studying sequences of process-oriented and product-oriented worked examples on troubleshooting transfer efficiency. Learning and Instruction, 18(3), 211–222. https://doi.org/10.1016/j.learninstruc.2007.03.003

Van Gog, T., & Rummel, N. (2010). Example-based learning—Integrating cognitive and social-cognitive research perspectives. Educational Psychology Review, 22(2), 155–174. https://doi.org/10.1007/s10648-010-9134-7

van Wermeskerken, M., Ravensbergen, S., & Van Gog, T. (2018). Effects of instructor presence in video modeling examples on attention and learning. Computers in Human Behavior, 89, 430–438.

Voss, T., Kunter, M., & Baumert, J. (2011). Assessing teacher candidates’ general pedagogical and psychological knowledge: Test construction and validation. Journal of Educational Psychology, 103(4), 952–969. https://doi.org/10.1037/a0025125

Weinstein, C., & Mayer, R. (1986). The teaching of learning strategies. In M. Wittrock (Ed.), Handbook of research on teaching (pp. 315–327). Macmillan.

Wirtz, M. A., & Caspar, F. (2002). Beurteilerübereinstimmung und Beurteilerreliabilität: Methoden zur Bestimmung und Verbesserung der Zuverlässigkeit von Einschätzungen mittels Kategoriensystemen und Ratingskalen. Hogrefe.

Wischgoll, A. (2017). Improving undergraduates’ and postgraduates’ academic writing skills with strategy training and feedback. Frontiers in Education. https://doi.org/10.3389/feduc.2017.00033

Zheng, L. (2015). A systematic literature review of design-based research from 2004 to 2013. Journal of Computers in Education, 2, 399–420. https://doi.org/10.1007/s40692-015-0036-z

Acknowledgements

We would like to thank Louisa Döderlein for her assistance in coding the data. In addition, we would like to thank Michelle Rudeloff for her support in conducting the study.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Franke, U., Backfisch, I., Scherzinger, L. et al. Do prompts and strategy instruction contribute to pre-service teachers’ peer-feedback on technology-integration?. Education Tech Research Dev (2024). https://doi.org/10.1007/s11423-024-10403-8

DOI: https://doi.org/10.1007/s11423-024-10403-8