Abstract

The current study investigated students’ gameplay behavioral patterns as a function of the delivery timing of in-game learning supports when they played a computer-based physics game. Our sample included 134 secondary students (age M = 14.40, SD = .90) from all over the United States, who were randomly assigned to three conditions: receiving instructional videos before a game level (n = 40), receiving instructional videos after a game level (n = 41), and receiving no instructional videos (n = 53) while playing the game for about 150 min. We collected students’ gameplay behavior data using game log files and employed sequential analysis to compare their problem-solving and help-seeking behaviors upon receiving instructional videos at different timings. Results suggested that the instructional videos, delivered either before or after a game level, helped students identify the correct game solution at the beginning of medium-difficulty game levels. Moreover, receiving the instructional videos delayed students’ help-seeking behaviors, encouraging them to figure out game problems on their own before asking for help. However, receiving the instructional videos may restrict students from creating diverse gaming solutions. Suggestions on the design and implementation of in-game learning supports based on the findings are also presented.

Introduction

Educational games can be promising tools to intrinsically motivate students and facilitate content learning (Gee, 2005; Kiili, 2005; Reiber, 1996; Squire & Jan, 2007; Squire & Klopfer, 2007; Young et al., 2012). Designing games that present reasonable challenges (those that require effort but are within the players’ ability) is essential to maintaining students’ engagement and motivation (Adams, 2013; Gee, 2005; Kiili, 2005; Reiber, 1996). Reasonably challenging games provide students a “pleasantly frustrating” (Gee, 2005, p. 10) experience, i.e., students struggle yet still believe that they can solve the problems. In contrast, challenges that exceed students’ level of competence may cause excessive frustration and even elicit quitting behaviors if students are not provided guidance and support (Kiili, 2005; Schrader & Bastiaens, 2012). Moreover, Ke (2008) and Young et al. (2012) have argued that students engaged in gameplay tend to lack the ability to discern the underlying content knowledge without instructional support, leading to poor learning or even misconceptions. It is difficult for students to make sense of their gameplay experience and build connections between gameplay and targeted content knowledge without extra instruction (Barab et al., 2009; Furtak & Penuel, 2018; Ke, 2008). For example, students may become so focused on accomplishing gameplay objectives that they neglect the learning opportunities within an educational game (Cheng et al., 2014).

One way to maintain an appropriate balance of frustration and the promotion of learning in educational games is to provide in-game learning supports (Kafai, 1996; Moreno & Mayer, 2005; Shute et al., 2021; Wouters & Van Oostendorp, 2013). For example, instructional videos presenting learning content within the guidance on overcoming game challenges could unobtrusively help students make connections between targeted knowledge and gameplay (Barab et al., 2009; Kafai, 1996; Moreno & Mayer, 2005; Yang et al., 2021). One problem that remains unresolved relates to the delivery timing of effective learning supports. Previous studies (e.g., Gee, 2005; Gresalfi & Barnes, 2016; Hamlen, 2014; Higgins, 2001; Kulik & Kulik, 1988) have explored the effect of delivery timing of support in both game-based and non-game-based learning environments. However, more empirical studies are needed to understand when to provide support (e.g., instructional videos)—i.e., before a game level or after a game level in educational games. Analyzing students’ gameplay behaviors (e.g., manipulating game mechanics, solving or quitting a game level) in response to different timings of learning supports can help game designers understand how such supports affect students’ learning and gaming performance. Thus, more empirical studies on the effects of delivery timing of learning supports from the perspective of students’ gameplay behavioral patterns are warranted to inform the design and implementation of learning supports in educational games. The current study aims to fill the gap by examining temporal patterns of students’ gameplay behaviors among those who received instructional videos before or after a game level, and those who did not receive any instructional videos when playing a physics game. The findings of this study can shed light on our understanding of when and how to provide learning supports (e.g., instructional videos) during game-based learning. 
This information is helpful to educational game designers, researchers, and educators. Next, we describe the relevant literature on learning supports delivered before and after a game level, and temporal patterns in educational gameplay behaviors.

Literature review

Supports before a game level

Supports given before a game level may serve as advance organizers to help students recall their prior knowledge (Barzilai & Blau, 2014; Liao et al., 2019; Mayer, 1983). Guided by the meaningful learning theory, Ausubel (1963) proposed the idea of advance organizers, providing relevant and inclusive materials in advance of the learning process. Advance organizers prime students’ prior knowledge to aid the process of encoding new knowledge within the existing schema. Mayer (1983) investigated the effects of advance organizers in digital learning environments and found they could facilitate conceptual understanding and problem solving. Liao and colleagues (2019) examined the use of an instructional video given before gameplay and its impact on students’ learning and cognitive load. The instructional videos explain the underlying concepts and their relations using various representations such as graphics, animation, sound, and narratives in application contexts. For example, there was one instructional video explaining concepts of displacement and length of path in the context of shooting a basketball. According to Liao et al. (2019), such videos could serve as advance organizers as they presented the targeted disciplinary knowledge to be used in solving upcoming game problems. They found that students who played the game with the instructional video showed significantly lower extraneous cognitive load and larger learning gains than students who played the same game without the supports. Rothkopf (1966) and Richards and DiVesta (1974) explain that pre-level supports help direct students’ attention to essential information presented during subsequent gameplay (e.g., content knowledge), which is crucial for learning in exploratory environments, e.g., educational games (Kirschner et al., 2006; Moreno & Mayer, 2005).

Offering well-designed pre-level supports does not guarantee positive impacts (Gee, 2005; Higgins, 2001). For example, Gee (2005) argued that students might ignore supports provided prior to gameplay because they are more interested in completing the game objectives. Higgins (2001) found that participants who received help at the beginning of the learning task held significantly more negative expectations of their outcome than their peers. She randomly assigned 97 participants to play a basketball shooting game in four conditions: (1) without any help, (2) with help at the beginning of their trial, (3) with help after their first trial, and (4) with help both before and after a trial. Results indicated that people tend to perceive help given at the beginning of their trial as expectations of negative evaluation. In other words, people believe that they receive support before gameplay because the game or instructor anticipates that their performance will be unsatisfactory. The researcher further argued that students’ negative perceptions of early support might shift their attention from learning to protecting their self-esteem. To reduce such negative effects, Higgins suggested emphasizing to students that the purpose of offering support is to improve their future performance and not a judgment of their current abilities.

Supports after a game level

Offering learning supports after students have engaged in gameplay is supported by a different set of instructional theories. According to impasse-driven learning and productive failure theory, letting students struggle or encounter failure is necessary to engage them in assembling new information and exploring multiple strategies (Kapur, 2008, 2012; Van Lehn et al., 2003). Researchers have argued that help or guidance should be provided after students try to solve problems or complete tasks on their own (Hamlen, 2014; Kapur, 2008, 2012; Kapur & Kinzer, 2009; Van Lehn et al., 2003).

Kapur (2008) proposed a productive failure design including two phases: exploration and consolidation. In the exploration phase, students activate their prior knowledge to explore and generate multiple solutions to a new problem. In the consolidation phase, external supports (e.g., direct instruction) are provided for students to compare and contrast their solutions with the canonical solution. Kapur and colleagues (2008; 2009; 2012) conducted multiple experiments to validate the productive failure design in classroom learning environments. For example, Kapur (2012) compared direct instruction with productive failure for learning the concept of variance in mathematics classrooms. In the direct instruction condition, the instructor taught students the canonical solution to a mathematical problem using worked examples, after which students solved similar problems on their own. In the productive failure condition, students tried to solve the same problem without support, after which teachers modeled and explained the canonical solution to the problem. Findings showed that students from the productive failure condition generated more diverse representations and solutions, and significantly outperformed the direct instruction students on the understanding of the targeted concept (Kapur, 2012). Kapur and colleagues (2009; 2012) explained that students who received supports before exploration might feel constrained, believing their solution must emulate the one presented in the support. Exploration before direct instruction, however, is critical to activate and differentiate students’ prior knowledge, leading to various representations and solutions. In the subsequent instruction session, students could further compare and contrast their solutions to the canonical ones, which could facilitate their conceptual understanding (Kapur, 2012; Van Lehn et al., 2003).
That is, students who receive supports after exploration might struggle with solving problems in a canonical manner but could yield greater content knowledge compared to students given supports early on (Kapur, 2012). More empirical studies are needed to validate the productive failure design in the context of educational games with embedded learning supports (i.e., supports as a part of gameplay rather than teacher-led instruction).

Additionally, supports provided after a game level may be perceived as an assessment of individuals’ prior performance (Conati et al., 2013; Gresalfi & Barnes, 2016; Higgins, 2001). How students react to such supports might depend on their performance (Conati et al., 2013). Conati and colleagues (2013) designed adaptive textual feedback (e.g., the definition of key math concepts, elaboration of why certain game moves were incorrect in terms of targeted knowledge) in a math game called Prime Climb. Players’ eye-tracking data were collected to measure how students interacted with the feedback during gameplay. Results indicated that students paid closer attention to the feedback provided after a correct move than feedback provided after an incorrect move. Researchers explained that students might treat support after correct moves as positive feedback and confirmation of their satisfactory performance, and thus were motivated to pay more attention to supports provided after correct moves than supports provided after incorrect moves.

In addition to supports designed to prompt content learning (e.g., instructional videos), researchers have also suggested including “game-related supports” such as hints to provide partial information on how to solve game levels (e.g., Schrader & Bastiaens, 2012; Sun et al., 2011; Yang et al., 2021). Research shows that game-related support could reduce students’ cognitive load and regulate students’ frustration during gameplay (Schrader & Bastiaens, 2012). However, Sun and colleagues (2011) have warned that students may overly rely on such supports before exploring on their own.

Temporal patterns in educational gameplay behaviors

Researchers have been investigating the temporal patterns of students’ game-based learning behaviors to gain an in-depth view of students’ gameplay and learning procedures and to answer not only what happens but also why and how it happens in game-based learning (Conati et al., 2013; Hou, 2015; Sung & Hwang, 2018). These studies have focused on sequences of behaviors such as interactions with game mechanics and in-game learning supports (e.g., Cheng et al., 2014; Conati et al., 2013; Hou, 2015; Sung & Hwang, 2018; Yang, 2017). One commonly used method to detect temporal patterns in educational gameplay behaviors is sequential analysis (Bakeman & Quera, 2011).

For example, Hou (2015) adopted sequential analysis to investigate students’ gameplay behavioral patterns across flow states when playing a role-play simulation science game. Eighty-six college students played the game for 30 min individually, followed by completing a flow scale. Their gameplay behaviors were video-recorded and manually coded, such as correct manipulation, incorrect manipulation, and analysis (i.e., comparing and selecting specific chemical equipment or materials). Results showed that students who scored low on the flow scale tended to execute incorrect manipulations repeatedly without returning to analysis, while students with high flow scores showed a reflective behavioral pattern (i.e., a significant transition from incorrect manipulation to analysis).

Unlike Hou, who manually coded participants’ behaviors, Sung and Hwang (2018) and Yang (2017) analyzed students’ gameplay behaviors that were automatically recorded and coded in game log files. For instance, Yang (2017) investigated elementary school students’ behavioral patterns when they played a learning game with different types of feedback. Yang hypothesized that elaborated feedback would be more beneficial for students’ learning than verification feedback, but the pretest and posttest showed no significant difference in learning achievement between students receiving verification feedback and those receiving elaborated feedback. The researcher used data on students’ playing behaviors (e.g., starting the game) and in-game learning behaviors (e.g., referring to the learning materials) from the game log files and conducted sequential analysis to explore how students interacted with these two types of feedback. Results showed that students with verification feedback tended to refer to the learning materials (e.g., in-game digital textbook) more often when starting and completing the game than those with elaborated feedback. The researcher posited that verification feedback did not explain why an answer was correct or incorrect, forcing students to go back to the learning materials to check for themselves. Regularly referring to the learning materials might compensate for the disadvantage of verification feedback and thus result in equivalent learning achievements between the verification and elaborated feedback groups.

In summary, providing supports before a game level can facilitate content learning and problem-solving during gameplay (Barzilai & Blau, 2014; Liao et al., 2019; Mayer, 1983), but special cautions are needed to prevent negative perceptions of students toward such supports (Gee, 2005; Higgins, 2001). Supports delivered after a game level, based on productive failure design, can also scaffold knowledge acquisition (Kapur, 2008, 2012; Van Lehn et al., 2003). However, students’ performance may mediate the effects of post-level supports (Conati et al., 2013). Moreover, students with post-level supports tend to have diverse solutions but solve fewer problems compared to those with pre-level supports. Research on the effects of supports delivery timing in educational games is still scarce. Investigating the timing of learning supports by employing behavior analysis on temporal patterns (e.g., sequential analysis) can provide an in-depth understanding of how students react to supports delivered in different timings.

Current study

In this study, we used a 2D computer-based game called Physics Playground (Shute et al., 2019) with in-game instructional videos explaining targeted physics concepts in the context of the game. We collected game log data and conducted sequential analysis (Bakeman & Quera, 2011) to compare the problem-solving behaviors of students who played the game with the instructional videos delivered (a) before a game level, (b) after a game level, or (c) not at all (the control condition).

In addition to the instructional videos, we also included hints in the game to provide partial advice on game solutions to prevent students from becoming unproductively frustrated. All three groups could access hints at any time. Seeking help before it is needed demonstrates a lack of self-regulation, and self-regulation is critical to academic performance (Zimmerman, 2008). Therefore, we also examined the effects of delivery timing of the instructional videos on students’ behaviors related to accessing hints before exploring game problems on their own. The research questions we addressed and corresponding hypotheses are below.

RQ1: Are students who play the game with the instructional videos (either delivered before or after a game level) more likely to determine correct game solutions than those who play the game without the instructional videos?

H1: Students who play the game with the instructional videos before or after a game level are more likely to quickly figure out correct game solutions than those who play the game without the instructional videos, because receiving the instructional videos before or after a game level can facilitate students’ content learning during gameplay (Barzilai & Blau, 2014; Kapur, 2008, 2012; Liao et al., 2019; Mayer, 1983; Van Lehn et al., 2003).

RQ2: Do students who receive the instructional videos after a game level generate more diverse solutions than those with instructional videos delivered before a game level?

H2: Students who receive the instructional videos after a game level would generate more diverse solutions than those with the instructional videos before a game level because the instructional videos given after a game level allow students the opportunity to explore the game problems on their own (Kapur, 2008, 2012).

RQ3: Are students who receive the instructional videos before or after a game level less likely to seek hints before trying to solve game problems on their own than those without the videos?

H3: Students who receive the instructional videos before or after a game level would be less likely to seek hints before trying to solve game problems on their own than those without the videos, because receiving the instructional videos can facilitate students’ content learning, which may enhance their sense of competence and thus intrinsically motivate them during gameplay (via self-determination theory; Rogers, 2017).

Method

Participants

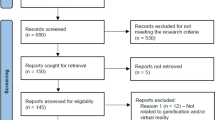

We recruited 134 students from all over the United States between the ages of 12 and 17 (M = 14.40, SD = .90). Of those who reported their gender, 62 reported as female, 64 as male, and one as other. Participating students came from diverse ethnic backgrounds (i.e., White = 31.3%; Black or African American = 26.6%; Asian = 11.7%; Hispanic = 7.0%; Others or Mixed = 23.4%). We randomly assigned these students to one of three conditions: before (n = 40), after (n = 41), and control (n = 53). The sample sizes were unequal across conditions because some students signed up for the study and were assigned to a condition but did not participate in the actual experiment. All students played the same game for the same total amount of time. In the before condition, students watched an instructional video immediately before starting a game level; in the after condition, students watched an instructional video immediately upon solving or quitting (i.e., giving up) a game level; in the control condition, students received no instructional videos. A total of 12 students (four from the before condition, six from the after condition, and two from the control condition) were excluded from further analysis because they did not complete the study (i.e., their pretest or posttest data were missing; the tests will be discussed below). There was no statistically significant association between missing data and assignment to conditions (χ²(2) = 3.42, p = .181). Thus, the final sample comprised 122 students across the before (n = 36), after (n = 35), and control (n = 51) conditions.
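The attrition check above can be reproduced from the counts given in the text. The sketch below assumes a Pearson chi-square test of independence on the 3 × 2 table of condition by excluded/retained; the text does not name the exact test variant, so this is an illustration rather than the authors' procedure. The function name `pearson_chi_square` is ours.

```python
from math import exp

# Counts taken from the text: (excluded, retained) per condition.
observed = {
    "before": (4, 36),
    "after": (6, 35),
    "control": (2, 51),
}


def pearson_chi_square(table):
    """Pearson chi-square statistic for a rows-by-columns table of counts."""
    rows = list(table.values())
    n = sum(sum(r) for r in rows)
    col_totals = [sum(r[j] for r in rows) for j in range(len(rows[0]))]
    chi2 = 0.0
    for r in rows:
        row_total = sum(r)
        for j, obs in enumerate(r):
            # Expected count under independence of row and column.
            expected = row_total * col_totals[j] / n
            chi2 += (obs - expected) ** 2 / expected
    return chi2


chi2 = pearson_chi_square(observed)
# With 2 degrees of freedom, the chi-square upper-tail probability is exp(-x / 2).
p_value = exp(-chi2 / 2)
print(f"chi2(2) = {chi2:.2f}, p = {p_value:.3f}")  # chi2(2) = 3.42, p = 0.181
```

Under this assumption the computed values agree with the reported statistic.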

Educational game

Physics Playground is a 2D computer-based game created for 7th to 11th graders targeting nine Newtonian physics competencies. In the current study, we focused on two of them, i.e., energy can transfer and properties of torque. The goal in this game is to hit a red balloon with a green ball by drawing objects and creating simple machines on the screen. Similar to real life, gravity and laws of physics act on the objects and the ball. The game used in this study included five tutorial levels teaching students the basic game mechanics (e.g., how to nudge the ball; how to draw a springboard), two warm-up levels, and 28 focal levels which were designed to be solved by one of the four simple machines (i.e., ramp, lever, springboard, pendulum). Figure 1 shows an example level called Trunk Slide. In this example, the student created a springboard (the right panel) and attached a weight to it (in pink color). The next action would be to delete the weight to launch the ball at the proper angle to hit the balloon.

As mentioned earlier, we included Hints to provide textual advice on applicable simple machines for each game level (e.g., “Try drawing a ramp”). Students could access the hints via a button that was available during gameplay, located at the bottom of the screen.

Instructional videos

We used instructional videos as the focal learning support in this study based on the results of a previous study in which we examined the effectiveness of eight different learning supports and found that only the usage of instructional videos was related to student learning gains (Shute et al., 2021). The instructional videos were created based on Mayer’s Principles of Multimedia Learning and Merrill’s First Principles of Instruction (see Kuba et al., 2021) with help from two physics experts. The instructional videos used animations, on-screen text, and audio narration to explain physics concepts in the game environment (i.e., directing a green ball to hit a red balloon). For example, a level addressing property of torque with a lever solution would be matched with an instructional video using a lever to demonstrate torque (see Fig. 2). The videos were created using gameplay footage and followed the same structure (i.e., first the narrator introduced and defined the physics concept to be presented in the video, then the concept was explained via a failed attempt followed by a successful attempt).

Fig. 2 A screenshot of an instructional video explaining property of torque using a lever. See https://bit.ly/3ydsWJt for the full video.

We counterbalanced the delivery of the videos. We assigned half of the students in the treatment conditions to counterbalance order A and they received the videos on 14 focal levels. The other half of the treatment students received order B with videos on the other 14 focal levels. Orders A and B were organized in an alternating fashion. The first time viewing a video on a game level, students had to watch the video in its entirety. Subsequent views of the same video when replaying the game level could be exited at any time. Table 1 shows the list of focal levels, associated instructional videos, and counterbalance for the videos.

Table 1 notes: ECT = energy can transfer; POT = property of torque. For example, “ECT-Ramp” means that the video explained energy can transfer in a ramp solution.

As indicated by Higgins (2001), game designers should emphasize that help is provided to improve students’ performance rather than to serve as a judgment of their abilities. In the current study, students assigned to the before condition received a message leading them to watch a video to help them solve the upcoming level. Students in the after condition received a similar message when they wanted to quit a level or when they solved a level (see Fig. 3).

Procedure

Students participated in the study across a 3-day virtual summer camp or a 5-day afterschool program. Due to the COVID-19 pandemic, all sessions were held online. Students and parents electronically signed the assent and consent forms before the study, respectively. During the summer camp or afterschool program, students first completed a questionnaire including demographic questions and the physics understanding pretest (this part took about 25 min). Then all students played through the game for about 150 min individually (actual gameplay time per day slightly varied between the summer camp and afterschool program). We assume that the potential impact of the difference in the actual gameplay time per day between the afterschool and summer camp participants is minimal as their total gameplay time was identical. Finally, students completed the posttest (25 min) and we distributed awards (certificates) to high-performing students including a monetary award ($10 e-Gift card) given to the student(s) who had the highest gain in score on the physics test. Note that the current study focuses on the temporal patterns in behaviors, while the results of the pretest and posttest of physics understanding were reported in another paper (see Rahimi et al., 2022).

Coding scheme

We collected behavioral data that were automatically recorded and coded in the game log files. When players played the game, the game engine logged students’ behaviors (e.g., drawing simple machines, starting a level) along with their user ID, the game level in which the behaviors occurred, and the event’s timestamp. To answer our three research questions, eight behaviors concerning solving game problems and accessing hints were collected and coded (Table 2).

Draw Ramp, Draw Pendulum, Draw Lever, and Draw Springboard behaviors refer to students drawing the corresponding simple machines. Drawing the correct simple machine demonstrated that students figured out the correct game solution to the given level (Karumbaiah et al., 2019). Start Level, Pass Level, and Quit Level refer to students starting a game level, successfully solving a game level, and quitting a game level without solving it. Hint refers to students accessing a textual hint.
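To make the coding step concrete, the following sketch turns logged events into one ordered behavior sequence per student and level. The eight behavior codes come from the text; the record layout (`user_id`, `level`, `timestamp`, `behavior`), the sample values, and the function name `build_sequences` are hypothetical, since the actual log format is not described here.

```python
from collections import defaultdict

# The eight coded behaviors named in the coding scheme.
BEHAVIOR_CODES = {
    "Draw Ramp", "Draw Pendulum", "Draw Lever", "Draw Springboard",
    "Start Level", "Pass Level", "Quit Level", "Hint",
}


def build_sequences(events):
    """Group coded events into time-ordered sequences per (user, level)."""
    by_key = defaultdict(list)
    for event in sorted(events, key=lambda e: e["timestamp"]):
        # Events outside the coding scheme (e.g., nudging the ball) are skipped.
        if event["behavior"] in BEHAVIOR_CODES:
            by_key[(event["user_id"], event["level"])].append(event["behavior"])
    return dict(by_key)


# Hypothetical log records, deliberately out of order.
events = [
    {"user_id": "s01", "level": "Trunk Slide", "timestamp": 30, "behavior": "Draw Springboard"},
    {"user_id": "s01", "level": "Trunk Slide", "timestamp": 5, "behavior": "Start Level"},
    {"user_id": "s01", "level": "Trunk Slide", "timestamp": 12, "behavior": "Hint"},
    {"user_id": "s01", "level": "Trunk Slide", "timestamp": 20, "behavior": "Nudge"},
    {"user_id": "s01", "level": "Trunk Slide", "timestamp": 41, "behavior": "Pass Level"},
]
sequences = build_sequences(events)
print(sequences[("s01", "Trunk Slide")])
```

Keeping one sequence per student and level prevents a transition from being counted across the boundary between two different levels or players.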

Data analysis

In the current study, we adopted sequential analysis (Bakeman & Quera, 2011) as the data analysis method. Sequential analysis is a statistical method used to examine dynamic behavioral sequences (i.e., to what extent one behavior might occur after another behavior) based on timed-event data of group interactions (Bakeman & Quera, 2011). We selected sequential analysis because our research questions could be answered by comparing the behavioral sequences between two consecutive pre-defined events. For example, in answering RQ1, we could compare the behavioral sequences from starting a game level to determining the correct game solution across conditions. Other analysis methods that aim to detect sequential patterns, including sequential pattern mining (Mooney & Roddick, 2013) and process mining (van der Aalst, 2012), are not applicable because they do not focus on the sequence between two specific events. Instead, sequential pattern mining finds frequent subsequences in sequence databases, while process mining discovers the real operational processes from event logs.

We filtered out the data from the five tutorial levels and two warm-up levels (as they did not have instructional videos) and coded students’ behaviors in playing the remaining 28 focal game levels based on the coding scheme. A total of 30,050 behavioral codes produced by 115 students were coded (note that four students from the before condition, one student from the after condition, and two students from the control condition did not play any focal levels).

As mentioned earlier, each game level was designed to be solved by one out of four simple machines. To investigate how students determined the correct game solutions (i.e., simple machines) across conditions, we grouped students’ coded behaviors by game levels with the same solvable simple machine. In total, 12 sequential analyses (3 conditions × 4 simple machines) were conducted.
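The 3 × 4 grouping can be sketched as follows. The condition and simple-machine labels come from the text; the `(condition, machine, sequence)` record layout and the function name `group_sequences` are assumptions made for illustration.

```python
from itertools import product

CONDITIONS = ("before", "after", "control")
MACHINES = ("ramp", "pendulum", "lever", "springboard")


def group_sequences(records):
    """Bucket behavior sequences by (condition, intended simple machine)."""
    # Pre-create all 12 buckets so empty groups remain visible.
    groups = {key: [] for key in product(CONDITIONS, MACHINES)}
    for condition, machine, sequence in records:
        groups[(condition, machine)].append(sequence)
    return groups


# Hypothetical records: one behavior sequence per student and level.
records = [
    ("before", "ramp", ["Start Level", "Draw Ramp", "Pass Level"]),
    ("control", "lever", ["Start Level", "Hint", "Draw Lever", "Quit Level"]),
]
groups = group_sequences(records)
print(len(groups))  # 12 groups, one sequential analysis each
```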

In each sequential analysis, we first computed a behavior frequency transition matrix presenting the frequency of each behavior transition (i.e., from an initial behavior to a subsequent behavior). Then, we computed the adjusted residuals table (z-score table) to identify the statistical significance of each individual transition, i.e., to what extent the subsequent behavior can be predicted by the prior behavior (Bakeman & Quera, 2011). A z-score greater than 1.96 indicated that the specific transition was statistically significant (p < .05). Next, we drew the behavioral transition diagram to visualize the significant transitions shown in the adjusted residuals table. Finally, to compare sequence strength between different behavioral patterns, we calculated an effect size index, Yule’s Q. According to Bakeman and Quera (2011), Yule’s Q is an index of the strength of sequential association. Similar to the Pearson correlation coefficient, Yule’s Q ranges from −1 to 1, and a larger absolute value indicates a stronger sequential association (for positive values, the subsequent behavior is more likely to occur after the initial behavior). The adjusted residuals and Yule’s Q were calculated via an HTML5 application for sequential analysis published on GitHub by Yung-Ting Chen (2007). To validate the application, we calculated the adjusted residuals of sample data in three different ways: (1) Chen’s application, (2) pencil and paper by following the equations in Bakeman and Quera’s (2011, p. 109) book, and (3) another sequential analysis software program called GSEQ 5.1 (Bakeman & Quera, 2011). Results from all three approaches were consistent.
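The steps above can be sketched in a few lines. This is a minimal illustration of lag-1 sequential analysis using the standard adjusted-residual and Yule’s Q formulas; it is not the code of Chen’s application or GSEQ, the toy sequences are invented, and the function names are ours. It assumes every code may follow every other code and that the marginal totals are nonzero.

```python
from collections import Counter
from math import sqrt


def transition_counts(sequences):
    """Frequency of each lag-1 transition (initial -> subsequent behavior)."""
    counts = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    return counts


def _margins(counts, given, target):
    n = sum(counts.values())
    row = sum(v for (g, _), v in counts.items() if g == given)
    col = sum(v for (_, t), v in counts.items() if t == target)
    return n, row, col


def adjusted_residual(counts, given, target):
    """z = (observed - expected) / sqrt(expected * (1 - p_row) * (1 - p_col))."""
    n, row, col = _margins(counts, given, target)
    expected = row * col / n
    return (counts[(given, target)] - expected) / sqrt(
        expected * (1 - row / n) * (1 - col / n)
    )


def yules_q(counts, given, target):
    """Yule's Q from the 2 x 2 table collapsed around one transition."""
    n, row, col = _margins(counts, given, target)
    a = counts[(given, target)]  # given -> target
    b = row - a                  # given -> any other behavior
    c = col - a                  # any other behavior -> target
    d = n - a - b - c            # neither
    return (a * d - b * c) / (a * d + b * c)


# Invented toy sequences, far too small for real inference.
toy = [
    ["Start Level", "Draw Ramp", "Pass Level"],
    ["Start Level", "Draw Ramp", "Hint", "Draw Ramp", "Pass Level"],
    ["Start Level", "Hint", "Draw Lever", "Quit Level"],
]
counts = transition_counts(toy)
z = adjusted_residual(counts, "Start Level", "Draw Ramp")
q = yules_q(counts, "Start Level", "Draw Ramp")
print(f"z = {z:.2f}, significant = {z > 1.96}, Q = {q:.2f}")
```

With real data, each of the 12 condition-by-machine groups would get its own transition table, and only transitions with z above 1.96 would be drawn in the transition diagram.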

Results

Our random assignment of the participants to the three conditions resulted in balanced conditions in terms of age, gaming experience, and prior knowledge. In the demographic questionnaire at the beginning of the experiment, students were asked “How often do you play video games?” Seven students (three from the before condition and four from the after condition) did not complete that questionnaire. We did not exclude them from the sequential analysis as long as they had behavior data on the focal game levels. One-way ANOVAs showed no significant difference among conditions regarding age (F (2, 112) = .29, p = .750) and gaming experience (F (2, 112) = .30, p = .742). To validate the consistency in prior knowledge across conditions, we conducted another one-way ANOVA to compare the pretest scores among the three conditions (see Rahimi et al., 2022 for full results of the pretest and posttest). Results revealed no significant difference in students’ pretest scores across conditions (F (2, 119) = 2.89, p = .059). Furthermore, there was no significant difference among the before (M = 10.58, SD = 10.02), after (M = 11.22, SD = 9.07), and control (M = 10.00, SD = 7.10) conditions in terms of total focal levels solved (F (2, 117) = .21, p = .813). The assumption of homogeneity of variance was met for all the ANOVAs above.

Sequential analyses were conducted to compare behavioral patterns, in terms of solving game problems and accessing help before trying any simple machines, across students who received instructional videos before a game level, received them after a game level, or received no videos. The adjusted residual tables of students’ targeted gameplay behaviors by condition for ramp, pendulum, lever, and springboard levels are shown in the Appendix. In these tables, the first column represents the initial behaviors, and the first row presents the subsequent behaviors that occurred after the corresponding initial behaviors. We selected all statistically significant sequences and drew behavioral transition diagrams by condition and by simple machine (see Figs. 4, 5, 6, 7).

RQ1: determining correct game solutions

Regarding the first research question, we hypothesized that students who played the game with the instructional videos before or after a game level would be more likely to determine correct game solutions than those who played the game without any instructional videos. To test the hypothesis, we compared the sequence from starting a level to drawing the correct simple machine across students who played the game with the instructional videos before a level, after a level, and without the videos. To better compare across conditions, we summarized these sequences from Figs. 4, 5, 6, 7 in Table 3.

As shown in Table 3, when playing ramp and pendulum levels, all conditions showed a significant sequence from starting a level to drawing the correct simple machine (i.e., Start Level → Draw Ramp and Start Level → Draw Pendulum, respectively). However, when playing lever and springboard levels, no significant sequence from starting a level to drawing the correct simple machine was found. The results indicated that students, regardless of condition, were more likely to determine the correct game solution after starting a ramp or pendulum level than after starting a lever or springboard level. This suggests that lever and springboard levels might be harder than ramp and pendulum levels for students (more discussion can be found in the next section). Yule’s Q was computed to compare the strength of the significant sequence Start Level → Draw the correct simple machine in ramp and pendulum levels across conditions (see Table 3). In ramp levels, the Yule’s Q values of this sequence for the before (.91), after (.94), and control (.93) conditions were all close to 1, indicating that almost all students figured out the correct simple machine right after starting a ramp level. In pendulum levels, the Yule’s Q values of this sequence for the before, after, and control conditions were .60, .58, and .49, respectively. That is, only in pendulum levels were students with instructional videos, either before or after a game level, more likely to adopt the correct simple machine at the beginning of a game level than those without videos. Therefore, our hypothesis that students who play the game with the instructional videos before or after a game level would be more likely to determine correct game solutions than those who play the game without the instructional videos was partially supported.
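As a reminder of what the Yule’s Q values compared above measure, the sketch below collapses a transition matrix into a 2 × 2 table around one transition (initial behavior or not, by subsequent behavior or not) and computes Q = (ad − bc) / (ad + bc). The function name and the example counts are hypothetical, not values from Table 3.

```python
def yules_q(table, given, target):
    """Yule's Q for the transition from row index `given` to column index `target`."""
    n = sum(sum(row) for row in table)
    a = table[given][target]                    # given -> target
    b = sum(table[given]) - a                   # given -> any other behavior
    c = sum(row[target] for row in table) - a   # any other behavior -> target
    d = n - a - b - c                           # neither given nor target
    denominator = a * d + b * c
    # Q is undefined when both cross-products are zero.
    return (a * d - b * c) / denominator if denominator else float("nan")

# Hypothetical 2 x 2 transition counts.
counts = [[10, 20],
          [30, 40]]
print(yules_q(counts, 0, 0))
```

Because Q depends only on the ratio of the cross-products ad and bc, it can be compared across conditions with different numbers of logged events, which is why it serves here as an effect size.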

RQ2: generating diverse game solutions

Regarding the second research question, we hypothesized that students with the instructional videos after a game level would generate more diverse solutions than those with the instructional videos before a game level. To test the hypothesis, we compared the significant sequence from drawing simple machines to passing a level across conditions. For the convenience of comparison, we summarized all the significant sequences from drawing simple machines to passing a level from Figs. 4, 5, 6, 7 in Table 4.

As shown in Table 4, there was a significant sequence Draw Ramp → Pass Level for all three conditions when students played ramp levels. However, unlike the before and after conditions, the control condition showed another significant sequence, Draw Springboard → Pass Level. That is, most students tended to solve ramp levels by drawing a ramp, but students in the control condition also solved these levels by using springboards. In playing pendulum levels, there was no significant sequence from drawing simple machines to passing a level, indicating that students might not solve the pendulum levels even though they determined the correct simple machine. Regarding lever levels, we found that students in the before and after conditions tended to solve these levels with a ramp (Draw Ramp → Pass Level), while students in the control condition tended to solve these levels with a pendulum or springboard (Draw Pendulum → Pass Level, Draw Springboard → Pass Level). All students tended to solve springboard levels with springboards (Draw Springboard → Pass Level). In summary, students in the control condition were more likely to have diverse solutions than those in the before and after conditions when playing ramp and lever levels. Therefore, our hypothesis that students with the instructional videos after a game level would generate more diverse solutions than those with the instructional videos before a game level was rejected.

RQ3: accessing hints before exploration

Regarding the third research question, we hypothesized that students with the instructional videos before or after a game level would be less likely to access hints before trying to solve game problems on their own compared to those without videos. To test the hypothesis, we compared the sequence from starting a level to accessing hints across conditions. For the convenience of comparison, we summarized all these sequences from Figs. 4, 5, 6, 7 in Table 5.

As shown in Table 5, there was no significant sequence Start Level → Hint in any of the three conditions when students played the ramp levels. However, when playing all other levels, students, regardless of condition, tended to refer to hints right after starting a level. We computed Yule’s Q to compare the strength of this sequence across conditions. Results showed that students in the control condition had a stronger sequential pattern of accessing hints after starting a pendulum, lever, or springboard level than those in the before and after conditions. That is, on most levels, students with the instructional videos before or after a game level were less likely to access hints before playing on their own than those without the videos. Therefore, our hypothesis that students with the instructional videos before or after a game level would be less likely to access hints before exploring on their own than those without the videos was partially supported.

Additionally, in the before condition, we found the strength of the sequence Start Level → Hint was greater when playing pendulum levels than playing lever and springboard levels. However, in the after and control conditions, the strength of that sequence was greater when playing lever and springboard levels than playing pendulum levels.

Discussion

In-game learning supports are crucial to ensure a “pleasantly frustrating” experience for the learner and draw the connection between gameplay and content knowledge (Kafai, 1996; Moreno & Mayer, 2005; Shute et al., 2021; Wouters & Van Oostendorp, 2013). The delivery timing of learning supports might influence how students perceive the supports and students’ learning via gameplay (Barzilai & Blau, 2014; Gee, 2005; Gresalfi & Barnes, 2016; Hamlen, 2014; Higgins, 2001; Kulik & Kulik, 1988; Liao et al., 2019). The current study investigated the effects of differential timings of instructional videos on students’ problem-solving and help-seeking behaviors as they played Physics Playground.

Determining correct game solutions

Correctly figuring out the simple machine to use at a given level is key to problem solving and demonstrates students’ conceptual understanding of physics when playing the game used in the current study (Karumbaiah et al., 2019; Shute et al., 2013). Karumbaiah and colleagues (2019) adopted an Epistemic Network Analysis to compare gameplay behaviors between students who quit a level and those who did not when playing Physics Playground. Results showed that students who quit a level tended to miss the correct simple machines compared to those who did not quit the level. In line with Mayer (1983), we found that receiving supports before a game level could facilitate students’ problem-solving performance in some game levels. In contrast to the findings by Kapur and colleagues (2009; 2012), we found that receiving supports after students played a level on their own also helped them to quickly determine the correct game solutions to some extent. One possible explanation is that, unlike the productive failure design in Kapur (2012) where students received instruction after the whole exploration, in the current after condition, the instructional video provided at the end of one game level could work as support to students’ gameplay on subsequent levels to some extent. Although the simple machine demonstrated in one instructional video shown at the end of a certain game level might not be used to solve the subsequent level (see Table 1), the physics concept explained in the video could be applied to other levels.

We suspect that the instructional videos, delivered either before or after a game level, would help students to solve game problems, indicating an enhanced understanding of the targeted physics. However, such an advantage of instructional videos in facilitating gaming performance only existed in pendulum levels. We conjecture that this was because pendulum levels were neither too easy (like ramp levels) nor too hard (like lever and springboard levels) for students.

On the one hand, almost all students figured out the correct simple machine right after starting a ramp level, indicating that ramp levels were relatively easy for students. Therefore, students might have simply ignored the instructional video as they already knew the solution and were eager to dive into gameplay (Gee, 2005; Higgins, 2001). On the other hand, when playing lever and springboard levels, no significant sequence was found from starting a level to drawing the correct simple machine. Instead, students tended to refer to hints after starting a level. The results suggest that students found these levels relatively hard (probably because students were unfamiliar with the concept of torque) and might have had more unsuccessful attempts than the other levels. As indicated by Conati and colleagues (2013), students were more likely to perceive supports that were delivered after incorrect actions as negative feedback, and thus give the supports less attention. Therefore, we suspect that students might have held negative perceptions and paid insufficient attention to the instructional videos provided in the hard levels. As indicated by Higgins (2001), game designers should emphasize that supports are provided to improve students’ performance rather than serve as a judgment of their abilities to avoid such negative perceptions.

In conclusion, instructional videos may help students adopt the correct solutions on game levels that are neither too easy nor too hard. We argue that game designers should match the difficulty of the game levels to students’ competency levels when designing in-game learning supports. In other words, supports should be provided only when game levels exceed a student’s current level of competence, with more detailed guidance added when more help is needed (Vygotsky, 1978).

Generating diverse gaming solutions

We designed game levels in Physics Playground with one solvable simple machine in mind. However, similar to our previous research (Shute et al., 2021), students sometimes created different solutions than expected. Results showed that students without instructional videos were more likely to solve game levels using diverse solutions compared to those who received videos. Kapur (2008, 2012) and Van Lehn et al. (2003) have argued that allowing students to explore ill-structured problems on their own first would promote diverse problem-solving strategies and solutions compared to providing supports at the beginning of problem solving. Again, this result might be due to the different implementation of the productive failure design between Kapur (2012) and the after condition in the current study. The control condition in our study was more like the exploration phase in the productive failure design by Kapur (2012) because students in this condition received no support at all. Therefore, our findings that the control condition generated more diverse solutions compared to the before and after conditions provided partial support for the productive failure design in the game-based learning context. We suggest future educational game research examine the effects of providing support after the gameplay session (e.g., the current control condition followed by instructional videos) on students’ game performance and conceptual understanding.

The instructional videos used in the current study only highlighted one simple machine when explaining the targeted physics concepts, which might restrict students’ solution strategies. Therefore, we suggest that game designers do not refer to specific game solutions when explaining the underlying content in instructional videos. Alternatively, game designers could include more than one representation of a game solution in designing instructional videos to avoid constraining students’ problem-solving strategies.

Furthermore, we found no significant sequence from drawing a pendulum to passing a pendulum level. That is, students might not solve a pendulum level even though they correctly figured out which simple machine to use. This finding can be explained by our recent study (see Karumbaiah et al., 2019), which found that students may struggle with the drawing or execution of the solution (e.g., related to timing and placement of simple machines) even though they have identified the correct simple machine.

Help-seeking behaviors before exploration

Asking for help only after exploring on one’s own is a self-regulation strategy crucial to academic achievement (Hamlen, 2014; Zimmerman, 2008). However, we found that students showed a significant tendency to access hints right after starting a game level on all levels except ramp levels. Game designers could consider deactivating the ever-present supports (e.g., hints in the current game) for a certain period of time at the beginning of a game level.

We found that students who received instructional videos were less likely to access hints before drawing any simple machines at the beginning of some game levels compared to those without such videos. This suggests that receiving instructional videos before or after a game level could delay students’ help-seeking behaviors and encourage them to figure out game problems on their own before asking for help. Also note that sequential analysis can only reveal the sequence of two events, rather than the actual interval time between the two events. That is, a significant sequential association from starting a level to accessing hints provides no information about the time between the occurrence of these two events.
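The caveat about order versus elapsed time can be made concrete with a small sketch. The two timestamped logs below are hypothetical (times in seconds, invented for illustration), and the helper names are assumptions rather than part of any tool used in the study.

```python
def lag1_pairs(events):
    """Return the ordered (initial, subsequent) behavior pairs, ignoring timestamps."""
    codes = [code for _, code in sorted(events)]
    return list(zip(codes, codes[1:]))

def latencies(events, given, target):
    """Return elapsed time for each occurrence of the transition given -> target."""
    ordered = sorted(events)
    return [
        t2 - t1
        for (t1, c1), (t2, c2) in zip(ordered, ordered[1:])
        if c1 == given and c2 == target
    ]

# Hypothetical logs: one student opens a hint after 2 s, another after 300 s.
quick = [(0, "Start Level"), (2, "Hint")]
slow = [(0, "Start Level"), (300, "Hint")]

# Both logs contribute the same transition to a sequential analysis...
print(lag1_pairs(quick) == lag1_pairs(slow))
# ...even though the time between the two events differs greatly.
print(latencies(quick, "Start Level", "Hint"), latencies(slow, "Start Level", "Hint"))
```

A latency measure of this kind would have to be computed separately from the log timestamps; it is not part of the transition counts that sequential analysis uses.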

We conjecture that students perceived the instructional videos as helpful in promoting their understanding of the targeted subject knowledge and game performance, which enhanced their sense of competence (Ryan et al., 2006). The sense of competence, according to the self-determination theory (Rogers, 2017), is crucial to an individual’s intrinsic motivation. Therefore, students who received the instructional videos were more engaged in gameplay and more likely to explore the game problems themselves before seeking extra help than those without the videos.

As discussed earlier, lever and springboard levels might be harder to solve than pendulum levels for students. We found that students in the after and control conditions were less likely to access hints when starting a relatively easy level (i.e., pendulum level) than starting a relatively hard level (i.e., lever or springboard level). Chances are that students felt more competent when they encountered relatively easy levels and chose to try these levels on their own first compared to relatively hard levels. In contrast, students who watched instructional videos before entering a game level were more likely to access hints when starting relatively easy levels than relatively hard levels. We suspect that it was because students were more likely to appreciate the support prior to gameplay in more difficult levels. That is, receiving instruction before gameplay might not facilitate the instant generation of canonical solutions to difficult game levels (see findings regarding RQ1), but would make students feel more confident in exploring these levels on their own.

Conclusions and implications

In the current study, we employed sequential analysis to investigate students’ problem-solving and help-seeking behaviors when playing a physics game with instructional videos before a game level, after a game level, or without videos. We found that the instructional videos helped students adopt the correct simple machines at the beginning of reasonably difficult game levels but might constrain students’ possible solutions to game problems. Moreover, the videos delayed students’ help-seeking behaviors at the beginning of a game level and encouraged students to figure out game problems on their own before asking for external help.

The current study contributes to both methodology and practice in the field of game-based learning. In terms of methodology, this study collected students’ gameplay data via game log files and analyzed the data using sequential analysis. Compared to data from other behavioral data collection methods (e.g., video recording or eye-tracking), game log file data are more efficient to collect and code. As such, this analysis approach could detect and visualize statistically significant behavioral patterns to help uncover students’ behaviors when playing educational games. Future researchers can also adopt this methodology to explore how people learn via educational games.

With regard to practical implications, the study provided empirical evidence indicating that instructional videos delivered before or after a game level could enhance students’ game performance and encourage them to explore on their own before seeking help. Suggestions regarding the design of in-game learning supports were also discussed based on the findings of the current study. For example, the supports should adapt to the difficulty of game levels. Game instructions could highlight that the supports are aimed to improve students’ performance, not judge their abilities. And to avoid imposing constraints, designers may consider providing multiple voluntary supports with a variety of solution strategy suggestions. Further work is needed to provide additional evidence to verify these in-game learning support design suggestions.

Limitations

The current study has some limitations. First, as mentioned earlier, the first view of the instructional video on a game level was mandatory, while subsequent views of the video on that game level were optional (i.e., students could skip the video on a certain game level if they had viewed it before). Thus, students in the treatment conditions could view the instructional video of each game level a different number of times. In the current study, we did not distinguish between students who viewed the videos once and those who viewed them multiple times, although the frequency of views can affect their content learning based on our previous study (Shute et al., 2021). Second, previous research demonstrated that students’ problem-solving performance in this physics game corresponded to their understanding of the underlying physics concepts (Karumbaiah et al., 2019; Shute et al., 2013). To gain a better understanding of how students solve game problems differently when they receive instructional videos before or after a game level compared to a control condition (without the videos), the current study examined the effects of delivery timing of instructional videos on students’ in-game problem-solving performance in terms of correctness and diversity of solutions. While we made some tentative interpretations regarding the effects of the instructional videos’ delivery timing on students’ conceptual understanding based on the literature, data analyzed in the current study provided no direct evidence on students’ subject learning as a function of supports’ delivery timing.

References

Adams, E. (2013). Fundamentals of game design (3rd ed.). New Riders.

Ausubel, D. P. (1963). Cognitive structure and the facilitation of meaningful. Journal of Teacher Education, 14(2), 217–222. https://doi.org/10.1177/002248716301400220

Bakeman, R., & Quera, V. (2011). Sequential analysis and observational methods for the behavioral sciences. Cambridge University Press. https://doi.org/10.1017/CBO9781139017343

Barab, S. A., Scott, B., Siyahhan, S., Goldstone, R., Ingram-Goble, A., Zuiker, S. J., & Warren, S. (2009). Transformational play as a curricular scaffold: Using videogames to support science education. Journal of Science Education and Technology, 18(4), 305–320. https://doi.org/10.1007/s10956-009-9171-5

Barzilai, S., & Blau, I. (2014). Scaffolding game-based learning: Impact on learning achievements, perceived learning, and game experiences. Computers and Education, 70, 65–79. https://doi.org/10.1016/j.compedu.2013.08.003

Chen, Y-T. (2017). An HTML5 widget for lag sequential analysis [Apparatus]. https://github.com/pulipulichen/HTML-Lag-Sequential-Analysis

Cheng, M. T., Su, T., Huang, W. Y., & Chen, J. H. (2014). An educational game for learning human immunology: What do students learn and how do they perceive? British Journal of Educational Technology, 45(5), 820–833. https://doi.org/10.1111/bjet.12098

Conati, C., Jaques, N., & Muir, M. (2013). Understanding attention to adaptive hints in educational games: An eye-tracking study. International Journal of Artificial Intelligence in Education, 23(1–4), 136–161. https://doi.org/10.1007/s40593-013-0002-8

Furtak, E. M., & Penuel, W. R. (2018). Coming to terms: Addressing the persistence of “hands-on” and other reform terminology in the era of science as practice. Science Education, 103(1), 167–186. https://doi.org/10.1002/sce.21488

Gee, J. P. (2005). Learning by design: Good video games as learning machines. E-Learning and Digital Media, 2(1), 5–16. https://doi.org/10.2304/elea.2005.2.1.5

Gresalfi, M. S., & Barnes, J. (2016). Designing feedback in an immersive videogame: Supporting student mathematical engagement. Educational Technology Research and Development, 64(1), 65–86. https://doi.org/10.1007/s11423-015-9411-8

Hamlen, K. R. (2014). Video game strategies as predictors of academic achievement. Journal of Educational Computing Research, 50(2), 271–284. https://doi.org/10.2190/EC.50.2.g

Higgins, M. C. (2001). When is helping helpful? Effects of evaluation and intervention timing on basketball performance. The Journal of Applied Behavioral Science, 37(3), 280–298. https://doi.org/10.1177/0021886301373002

Hou, H. T. (2015). Integrating cluster and sequential analysis to explore learners’ flow and behavioral patterns in a simulation game with situated-learning context for science courses: A video-based process exploration. Computers in Human Behavior, 48, 424–435. https://doi.org/10.1016/j.chb.2015.02.010

Kafai, Y. B. (1996). Learning design by making games. In Y. B. Kafai & M. Resnick (Eds.), Constructionism in practice: Designing, thinking and learning in a digital world (pp. 71–96). Routledge.

Kapur, M. (2008). Productive failure. Cognition and Instruction, 26(3), 379–424. https://doi.org/10.1080/07370000802212669

Kapur, M. (2012). Productive failure in learning the concept of variance. Instructional Science, 40(4), 651–672. https://doi.org/10.1007/s11251-012-9209-6

Kapur, M., & Kinzer, C. K. (2009). Productive failure in CSCL groups. International Journal of Computer-Supported Collaborative Learning, 4(1), 21–46. https://doi.org/10.1007/s11412-008-9059-z

Karumbaiah, S., Baker, R. S., Barany, A., & Shute, V. J. (2019). Using epistemic networks with automated codes to understand why players quit levels in a learning game. In B. Eagan, M. Misfeldt, & A. Siebert-Evenstone (Eds.), ICQE 2019: Advances in quantitative ethnography (Vol. 1112, pp. 106–116). Springer. https://doi.org/10.1007/978-3-030-33232-7_9

Ke, F. (2008). A case study of computer gaming for math: Engaged learning from gameplay? Computers and Education, 51(4), 1609–1620. https://doi.org/10.1016/j.compedu.2008.03.003

Kiili, K. (2005). Digital game-based learning: Towards an experiential gaming model. Internet and Higher Education, 8(1), 13–24. https://doi.org/10.1016/j.iheduc.2004.12.001

Kirschner, P. A., Sweller, J., & Clark, R. E. (2006). Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educational Psychologist, 41(2), 111–127. https://doi.org/10.1207/s15326985ep4102

Kuba, R., Rahimi, S., Smith, G., Shute, V., & Dai, C. P. (2021). Using the first principles of instruction and multimedia learning principles to design and develop in-game learning support videos. Educational Technology Research and Development, 69(2), 1201–1220. https://doi.org/10.1007/s11423-021-09994-3

Kulik, J. A., & Kulik, C. L. C. (1988). Timing of feedback and verbal learning. Review of Educational Research, 58(1), 79–97. https://doi.org/10.3102/00346543058001079

Liao, C.-W., Chen, C.-H., & Shih, S.-J. (2019). The interactivity of video and collaboration for learning achievement, intrinsic motivation, cognitive load, and behavior patterns in a digital game-based learning environment. Computers & Education, 133, 43–55. https://doi.org/10.1016/j.compedu.2019.01.013

Mayer, R. E. (1983). Can you repeat that? Qualitative effects of repetition and advance organizers on learning from science prose. Journal of Educational Psychology, 75(1), 40–49. https://doi.org/10.1037/0022-0663.75.1.40

Mooney, C. H., & Roddick, J. F. (2013). Sequential pattern mining—Approaches and algorithms. ACM Computing Surveys (CSUR), 45(2), 1–39. https://doi.org/10.1145/2431211.2431218

Moreno, R., & Mayer, R. E. (2005). Role of guidance, reflection, and interactivity in an agent-based multimedia game. Journal of Educational Psychology, 97(1), 117–128. https://doi.org/10.1037/0022-0663.97.1.117

Pierce, W. D., & Cheney, C. D. (2013). Behavior analysis and learning (5th ed.). Psychology Press. https://doi.org/10.4324/9780203441817

Rahimi, S., Shute, V. J., Fulwider, C., Bainbridge, K., Kuba, R., Yang, X., Smith, G., Baker, R. S., & D’Mello, S. K. (2022). Is it better to provide embedded learning supports in educational games before or after attempting game levels? [Manuscript submitted for publication]. University of Florida.

Reiber, L. P. (1996). Seriously considering play: Designing interactive learning environments based on the blending of microworlds, simulations, and games. Educational Technology Research and Development, 44(2), 43–58. https://doi.org/10.1007/BF02300540

Richards, J. P., & Di Vesta, F. J. (1974). Type and frequency of questions in processing textual material. Journal of Educational Psychology, 66(3), 354–362. https://doi.org/10.1037/h0036349

Rogers, R. (2017). The motivational pull of video game feedback, rules, and social interaction: Another self-determination theory approach. Computers in Human Behavior, 73, 446–450. https://doi.org/10.1016/j.chb.2017.03.048

Rothkopf, E. Z. (1966). Learning from written instructive materials: An exploration of the control of inspection behavior by test-like events. American Educational Research Journal, 3(4), 241–249. https://doi.org/10.3102/00028312003004241

Ryan, R. M., Rigby, C. S., & Przybylski, A. (2006). The motivational pull of video games: A self-determination theory approach. Motivation and Emotion, 30(4), 347–363. https://doi.org/10.1007/s11031-006-9051-8

Schrader, C., & Bastiaens, T. (2012). Learning in educational computer games for novices: The impact of support provision types on virtual presence, cognitive load, and learning outcomes. International Review of Research in Open and Distance Learning, 13(3), 206–227. https://doi.org/10.19173/irrodl.v13i3.1166

Shute, V. J., Almond, R. G., & Rahimi, S. (2019). Physics Playground (Version 1.3) [Computer software]. Tallahassee, FL: Retrieved from https://pluto.coe.fsu.edu/ppteam/pp-links/

Shute, V. J., Rahimi, S., Smith, G., Ke, F., Almond, R., Dai, C. P., Kuba, R., Liu, Z., Yang, X., & Sun, C. (2021). Maximizing learning without sacrificing the fun: Stealth assessment, adaptivity and learning supports in educational games. Journal of Computer Assisted Learning, 37(1), 127–141. https://doi.org/10.1111/jcal.12473

Shute, V. J., Ventura, M., & Kim, Y. J. (2013). Assessment and learning of qualitative physics in Newton’s Playground. The Journal of Educational Research, 106, 423–430. https://doi.org/10.1080/00220671.2013.832970

Squire, K. D., & Jan, M. (2007). Mad city mystery: Developing scientific argumentation skills with a place-based augmented reality game on handheld computers. Journal of Science Education and Technology, 16(1), 5–29. https://doi.org/10.1007/s10956-006-9037-z

Squire, K. D., & Klopfer, E. (2007). Augmented reality simulations on handheld computers. The Journal of the Learning Sciences, 16(3), 371–413. https://doi.org/10.1080/10508400701413435

Sun, C. T., Wang, D. Y., & Chan, H. L. (2011). How digital scaffolds in games direct problem-solving behaviors. Computers and Education, 57(3), 2118–2125. https://doi.org/10.1016/j.compedu.2011.05.022

Sung, H. Y., & Hwang, G. J. (2018). Facilitating effective digital game-based learning behaviors and learning performances of students based on a collaborative knowledge construction strategy. Interactive Learning Environments, 26(1), 118–134. https://doi.org/10.1080/10494820.2017.1283334

Van Der Aalst, W. (2012). Process mining. Communications of the ACM, 55(8), 76–83. https://doi.org/10.1145/2240236.2240257

Van Lehn, K., Siler, S., Murray, C., Yamauchi, T., & Baggett, W. B. (2003). Why do only some events cause learning during human tutoring? Cognition and Instruction, 21(3), 209–249. https://doi.org/10.1207/S1532690XCI2103_01

Vygotsky, L. (1978). Mind in society: The development of higher psychological functions. Harvard University Press.

Wouters, P., & Van Oostendorp, H. (2013). A meta-analytic review of the role of instructional support in game-based learning. Computers and Education, 60(1), 412–425. https://doi.org/10.1016/j.compedu.2012.07.018

Yang, K. H. (2017). Learning behavior and achievement analysis of a digital game-based learning approach integrating mastery learning theory and different feedback models. Interactive Learning Environments, 25(2), 235–248. https://doi.org/10.1080/10494820.2017.1286099

Yang, X., Rahimi, S., Shute, V., Kuba, R., Smith, G., & Alonso-Fernández, C. (2021). The relationship among prior knowledge, accessing learning supports, learning outcomes, and game performance in educational games. Educational Technology Research and Development, 69(2), 1055–1075. https://doi.org/10.1007/s11423-021-09974-7

Young, M. F., Slota, S., Cutter, A. B., Jalette, G., Mullin, G., Lai, B., et al. (2012). Our princess is in another castle: A review of trends in serious gaming for education. Review of Educational Research, 82(1), 61–89. https://doi.org/10.3102/0034654312436980

Zimmerman, B. J. (2008). Investigating self-regulation and motivation: Historical background, methodological developments, and future prospects. American Educational Research Journal, 45(1), 166–183. https://doi.org/10.3102/000283120

Acknowledgements

This work was supported by the US National Science Foundation [Award Number #037988] and the US Department of Education [Award Number #039019].

Appendix

Cite this article

Yang, X., Rahimi, S., Fulwider, C. et al. Exploring students’ behavioral patterns when playing educational games with learning supports at different timings. Education Tech Research Dev 70, 1441–1471 (2022). https://doi.org/10.1007/s11423-022-10125-9

DOI: https://doi.org/10.1007/s11423-022-10125-9