Abstract

Textbooks are a vital component in many higher education contexts. Increasing textbook prices, coupled with the generally rising costs of higher education, have led some instructors to experiment with substituting open educational resources (OER) for commercial textbooks as their primary class curriculum. This article synthesizes the results of 16 studies that examine either (1) the influence of OER on student learning outcomes in higher education settings or (2) the perceptions of college students and instructors of OER. Results across multiple studies indicate that students generally achieve the same learning outcomes when OER are utilized and simultaneously save significant amounts of money. Studies across a variety of settings indicate that both students and faculty are generally positive regarding OER.

Introduction

Textbooks are a traditional part of the educational experience for many college students. An underlying assumption of the use of textbooks is that students who utilize them will have enriched academic experiences and demonstrate improved class performance. Skinner and Howes (2013) point out that there are multiple benefits that stem from students reading their assigned materials, including increasing the baseline understanding that students bring to class. Darwin (2011) found positive correlations for students in accounting classes between completing the assigned reading and class performance. Similarly, Bushway and Flower (2002) found that when students were motivated to read by being quizzed on the material, their overall performance in the class improved.

At the same time, textbooks are not as widely read as professors might hope. Berry et al. (2010) surveyed 264 students taking finance courses and found that “only 18 % of the students reported that they frequently or always read before coming to class. In contrast, 53 % reported that they never or rarely read the textbook before coming to class” (p. 34). Part of the reason that textbooks are underutilized is that they are expensive. A survey of 22,129 post-secondary students in Florida found that 64 % of students reported having not purchased a required textbook because of its high cost (Florida Virtual Campus 2012).

While increased access to textbooks alone will not ensure the success of college students, textbooks are generally recognized as being important learning resources. Because textbooks represent a significant percentage of expenses faced by college students, efforts should be made where possible to ameliorate these costs, as this could potentially increase student success. This is particularly true in the instances in which high-quality Open Educational Resources (OER) are available as a free substitute for commercial textbooks.

The purpose of this study is to provide a synthesis of published research performed in higher education settings that utilized OER. I will describe and critique the 16 published studies that investigate the perceived quality of OER textbooks and their efficacy in terms of student success metrics. I first provide a general review of the literature relating to OER.

Review of literature

The term “Open Educational Resources” comes from the 2002 UNESCO Forum on the Impact of Open Courseware for Higher Education in Developing Countries, in which the following definition for OER was proffered: “The open provision of educational resources, enabled by information and communication technologies, for consultation, use and adaptation by a community of users for non-commercial purposes” (UNESCO 2002, p. 24). The vision of OER was to enable the creation of free, universally accessible educational materials, which anyone could use for teaching or learning purposes.

In the intervening years much has been done to bring to pass the vision stated at that 2002 UNESCO meeting. Many OER have been created, including courses, textbooks, videos, journal articles, and other materials that are usually available online and are licensed in such a way (typically with a Creative Commons license) so as to allow for reuse and revision to meet the needs of teachers and students (Johnstone 2005; Bissell 2009; D’Antoni 2009; Hewlett 2013). In addition, much has been written about the history and theory of OER (Wiley et al. 2014). OER has moved from theory into practice; currently several options are available to locate high-quality open textbooks, a subset of OER often used to substitute for traditional textbooks. Among those providers are OpenStax (openstaxcollege.org), The Saylor Foundation (saylor.org), and Washington State’s Open Course Library (opencourselibrary.org). The Minnesota Open Textbook Library (open.umn.edu/opentextbooks/) provides a clearinghouse of open textbooks and includes faculty reviews of these materials.

Notwithstanding the growth in resources relating to OER, Morris-Babb and Henderson (2012), in a survey of 2707 faculty members and administrators of colleges and universities in Florida, found that “only 7 % of that group were ‘very familiar’ with open access textbooks, while 52 % were ‘not at all familiar’ with open access textbooks” (p. 151). More recently, Allen and Seaman (2014) in their nationally representative survey of 2144 faculty members in the United States found that only 34 % of respondents expressed awareness of OER.

In order for faculty to replace commercial textbooks with OER, they not only need to be aware of OER, they also want to know that OER have proven efficacy and trusted quality (Allen and Seaman 2014). The purpose of this study is to identify and discuss the 16 published research studies regarding the efficacy of OER in higher education and/or the perceptions of college students and teachers regarding the quality of OER. In the following section I describe the method utilized in selecting these articles.

Method

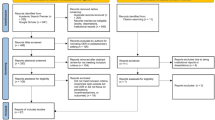

Five criteria were used to determine inclusion in the present study. First, the resource(s) examined in the study needed to be OER that were the primary learning resource(s) used in a higher education setting and be compared with traditional learning resources. It is important to note that OER vary widely in how they are presented. In some instances they may be a digital textbook (which could be printed for or by students). OER can also be electronic learning modules. All types of OER were included in the present study. Second, the research needed to have been published by a peer-reviewed journal, or be a part of an institutional research report or dissertation. Third, the research needed to have data regarding either teacher and/or student perceptions of OER quality, or educational outcomes. Fourth, the study needed to have at least 50 participants and clearly delineated results in terms of the numbers of research subjects who expressed opinions about OER and/or had their learning measured. Finally, the study needed to have been published in English, and be published prior to October of 2015.
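The inclusion criteria above can be read as a screening filter applied to each candidate study. The following is a minimal Python sketch of that reading, not the procedure actually used: every field name, the venue labels, and the example record are hypothetical and were introduced only for illustration.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical venue labels standing in for "peer-reviewed journal,
# institutional research report, or dissertation".
ACCEPTED_VENUES = {"peer_reviewed_journal", "institutional_report", "dissertation"}
# "Published prior to October of 2015" is read here as strictly before 1 October 2015.
CUTOFF = date(2015, 10, 1)

@dataclass
class Study:
    # All field names are assumptions made for this sketch.
    oer_is_primary_resource: bool     # OER was the primary resource in higher education
    compared_with_traditional: bool   # compared with traditional learning resources
    venue: str                        # one of the labels in ACCEPTED_VENUES, or something else
    reports_perceptions: bool         # teacher and/or student perceptions of OER quality
    reports_outcomes: bool            # educational outcomes
    participants: int                 # number of research subjects
    results_clearly_delineated: bool  # counts of subjects are clearly reported
    language: str
    published: date

def meets_criteria(s: Study) -> bool:
    """Return True only if every inclusion criterion is satisfied."""
    return (
        s.oer_is_primary_resource
        and s.compared_with_traditional
        and s.venue in ACCEPTED_VENUES
        and (s.reports_perceptions or s.reports_outcomes)
        and s.participants >= 50
        and s.results_clearly_delineated
        and s.language == "English"
        and s.published < CUTOFF
    )

# Hypothetical candidate record, not one of the reviewed studies.
candidate = Study(True, True, "peer_reviewed_journal", False, True,
                  120, True, "English", date(2014, 5, 1))
print(meets_criteria(candidate))
```

Encoding the criteria as a single conjunction makes it explicit that a study failing any one criterion is excluded.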

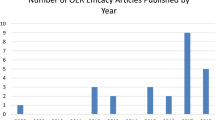

I identified potential articles for inclusion based on three approaches. One was to examine the literature cited in key efficacy and perceptions studies. A second was to perform a search of the term “Open Educational Resources” on Google Scholar, which yielded 993 articles. Many of these were easily excluded because, based on the title or venue, they clearly did not meet the above criteria. OER was not the main topic of some of these articles; moreover, a high number of the articles provided introductory approaches to OER or focused on theoretical applications of OER. Those that appeared to have the potential for inclusion were read to determine whether they met the above-mentioned criteria. The third and final approach was that I sent the studies I had identified to 246 researchers who had published on OER-related topics and asked them if they were aware of additional studies that I had missed. The result of these approaches is the 16 studies discussed in the present study.

Results—studies pertaining to student learning outcomes

To date, nine studies have been published that focus on analyzing student learning outcomes when OER are substituted for traditional textbooks in higher education settings. In this section I review these studies and synthesize their overall results.

Lovett et al. (2008) measured the result of implementing an online OER component of Carnegie Mellon University’s Open Learning Initiative (OLI). In fall 2005 and spring 2006 researchers invited students who had registered for an introductory statistics class at Carnegie Mellon to participate in an experimental online version of the course which utilized OER. Volunteers for the experimental version of the course were randomly assigned to either treatment or control conditions, with those who did not volunteer also becoming part of the control group, which was taught face-to-face and used a commercial textbook.

In the fall of 2005 there were 20 students in the treatment group and 200 in the control group. In spring of 2006 there were 24 students in the treatment group and an unspecified number of students in the control group. Researchers compared the test scores (three midterm and one final exam) between students in the experimental and control versions of the course for each of these two semesters and found no statistically significant differences.

In a follow-up experiment reported in the same study, students in the spring of 2007 were given an opportunity to opt into a blended learning environment in which students who utilized OER in combination with face-to-face instruction would complete the course materials in half the time used by those taking the traditional version of the course. In this instance, the treatment and control groups (22 and 42 students respectively) were only drawn from those who volunteered to participate in the accelerated version of the course. The authors stated that “as in the two previous studies, in-class exams showed no significant difference between the traditional and online groups.…[however] students in OLI-Statistics learned 15 weeks’ worth of material as well or better than traditional students in a mere 8 weeks” (pp. 10, 12). Five months after the semester ended (seven months after the end for the treatment students), a follow-up test was given to determine how much of the material had been retained. No significant difference was found between the two groups.

In addition to comparing student exam scores, researchers examined student understanding of basic statistical concepts as measured by the national exam known as “Comprehensive Assessment of Outcomes in a first Statistics course” (CAOS). Research subjects in the spring of 2007 took this test at the beginning and end of the semester in order to measure the change in their statistics understanding. Students in the blended version of the course improved their scores by an average of 18 %; those in the control group on average improved their scores by 3 %, a statistically significant difference. This study is notable both for being the first published article to examine comparative learning outcomes when OER replace traditional learning materials and for its selection criteria of participation. The method used in the spring of 2007, when treatment and control groups were randomly selected from the same set of participants, represents an important attempt at randomization that has unfortunately rarely been replicated in OER studies. At the same time, it should be noted that the sample sizes are relatively small and there was a confound between the method in which students were taught and the use of OER.

Bowen et al. (2012) can be seen as an extension of the study just discussed. They compared the use of a traditional textbook in a face-to-face class on introductory statistics with that of OER created by Carnegie Mellon University’s Open Learning Initiative taught in a blended format. They extended the previous study by expanding it to six different undergraduate institutions. As in the spring 2007 semester reported by Lovett et al. (2008), Bowen et al. (2012) contacted students at or before the beginning of each semester to ask for volunteers to participate in their study. Treatment and control groups were randomly selected from those who volunteered to participate, and researchers determined that across multiple characteristics the two groups were essentially the same.

In order to establish some benchmarks for comparison, both groups took the same standardized test of statistical literacy (CAOS) at the beginning and end of the semester, as well as a final examination. In total, 605 students took the OER version of the course, while 2439 took the traditional version. Researchers found that students who utilized OER performed slightly better in terms of passing the course as well as on CAOS and final exam scores; however, these differences were marginal and not statistically significant.

Bowen et al. (2012) is the largest study of OER efficacy that both utilized randomization and provided rigorous statistical comparisons of multiple learning measures. A weakness of this study in terms of its connection with OER is that those who utilized the OER received a different form of instruction (blended learning as opposed to face-to-face); therefore, the differences in instruction method may have confounded any influence of the open materials. Nevertheless, it is important to note that the use of free OER did not lead to lower course outcomes in this study (Bowen et al. (2014) model how their 2012 results could impact the costs of receiving an education).

A third study (Hilton and Laman 2012) focuses on an introductory psychology course taught at Houston Community College (HCC). In 2011, in order to help students save money on textbooks, HCC’s Psychology department selected an open textbook as one of the textbooks that faculty members could choose to adopt. The digital version was available for free, and digital supplements produced by faculty were also freely available to HCC students.

In the fall of 2011, seven full-time professors taught twenty-three sections using the open textbook as the primary learning resource; their results were compared with those from classes taught using commercial textbooks in the spring of 2011. Results were provided for 740 students, split roughly evenly between treatment and control conditions. Researchers used three metrics to gauge student success in the course: GPA, withdrawals, and departmental final exam scores. They attempted to control for a teacher effect by comparing those measures across the sections of two different instructors. Each of these instructors taught one set of students using a traditional textbook in spring of 2011 and other students using the open textbook in fall of 2011.

Their overall results showed that students in the treatment group had a higher class GPA, a lower withdrawal rate, and higher scores on the department final exam. These same results occurred when only comparing students that had been taught by the same teacher. While this research demonstrated what may appear to be learning improvements, there were many methodological problems with this study. These limitations are significant, including the fact that the population of individuals who take an introductory psychology course in the spring may be different from the one that takes the same course in the fall. There was no attempt made to contextualize this potential difference by providing information about the difference between fall and spring semesters in previous years. In addition, changes were made in the course learning outcomes and final exam during the time period of the study. While there is no indication that the altered test was harder or easier than previous tests, it is a significant weakness. Moreover, there was no analysis performed to determine whether the results were statistically significant.

A fourth study (Feldstein et al. 2012) took place at Virginia State University (VSU). In the spring of 2010 the School of Business at VSU began implementing a new core curriculum. Faculty members were concerned because an internal survey stated that only 47 % of students purchased textbooks for their courses, largely because of affordability concerns. Consequently, they adopted open textbooks in many of the new core curriculum courses. Across the fall of 2010 and spring of 2011, 1393 students took courses utilizing OER and their results were compared with those of 2176 students in courses not utilizing OER.

These researchers found that students in courses that used OER more frequently had better grades and lower failure and withdrawal rates than their counterparts in courses that did not use open textbooks. While their results had statistical significance, the two sets of courses were not the same. Thus while these data provide interesting correlations, they are weak because the courses being compared were different, a factor that could easily mask any results due to OER. In other words, while this study establishes that students using OER can obtain successful results, the researchers compared apples to oranges, leading to a lack of power in their results.

In the fifth study, Pawlyshyn et al. (2013) reported on the adoption of OER at Mercy College. In the fall of 2012, 695 students utilized OER in Mercy’s basic math course, and their pass rates were compared with those of the fall of 2011, in which no OER were utilized. They found that when open materials were integrated into Mercy College, student learning appeared to increase. The pass rates of math courses increased from 63.6 % in fall 2011 (when traditional learning materials were employed) to 68.9 % in fall 2012 when all courses were taught with OER. More dramatic results were obtained when comparing the spring of 2011 pass rate of 48.4 % (no OER utilized) with the pass rate of 60.2 % in the spring of 2013 (all classes utilized OER). These results, however, must be tempered with the fact that no statement of statistical significance was included. Perhaps a more important limitation is that simultaneous with the new curriculum came the decision to flip classroom instruction, thus introducing a significant confound into the research design. Mercy’s supplemental use of explanatory videos and new pedagogical model may be responsible for the change in student performance, rather than the OER.
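To illustrate what a statement of statistical significance for such pass rates could involve, the sketch below implements a standard two-proportion z-test in Python. It is not an analysis reported by Pawlyshyn et al. (2013). The fall 2011 enrollment is not given in the text, so the value of 695 used for that semester is a purely hypothetical placeholder, and the resulting numbers should not be read as a finding.

```python
import math

def two_proportion_z_test(p1, n1, p2, n2):
    """Two-sided z-test for the difference between two independent proportions.

    p1, p2: observed proportions (e.g. pass rates expressed as 0..1)
    n1, n2: group sizes
    Returns (z, p_value), using the pooled standard error.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    z = (p2 - p1) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value

# Pass rates from the text: 63.6 % (fall 2011) and 68.9 % (fall 2012, 695 students).
# HYPOTHETICAL: the fall 2011 group size is unreported; 695 is assumed for illustration.
z, p = two_proportion_z_test(0.636, 695, 0.689, 695)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

Because the outcome depends directly on the unreported group size, and because the flipped-classroom confound remains regardless of the test result, this sketch only shows the kind of calculation that was missing.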

In addition to the change in the math curriculum, Mercy College also adopted OER components based on reading in some sections of a course on Critical Inquiry, a course that has a large emphasis on reading skills. In the fall of 2011, 600 students took versions of the course that used OER, while an unspecified number of students enrolled in other sections did not use the OER. In the critical reading section of the post-course assessment, students who utilized OER scored 5.73, compared with those in the control group scoring 4.99 (the highest possible score was 8). In the spring of 2013, students enrolled in OER versions of the critical inquiry course performed better than their peers; in a post-course assessment with a maximum score of 20, students in the OER sections scored an average of 12.44 versus 11.34 in the control sections. As with the math results, no statement of statistical significance was included; in addition, no efforts were made to control for any potential differences in students or teachers. Another weakness of this aspect of the study is that there was significant professional development that went into the deployment of the OER. It is conceivable that it was the professional development, or the collaboration across teachers that led to the improved results rather than the OER itself. If this were to be the case, then what might be most notable about the OER adoption was its use as a catalyst for deeper pedagogical change and professional growth.

A sixth study (Hilton et al. 2013) took place at Scottsdale Community College (SCC), a community college in Arizona. A survey of 966 SCC mathematics students showed that slightly less than half of these students (451) used some combination of loans, grants and tuition waivers to pay for the cost of their education. Mathematics faculty members were concerned that the difficulties of paying for college may have been preventing some students from purchasing textbooks and determined that OER could help students access learning materials at a much lower price.

In the fall of 2012 OER was used in five different math courses; 1400 students took these courses. Each of these courses had used the same departmental exam for multiple years; researchers measured student scores on the final exam in order to compare student learning between 2010 and 2011 (when there were no OER in place) and 2012 (when all classes used OER). Issues with the initial placement tests made it so only four of the courses could be appropriately compared. Researchers found that while there were minor fluctuations in the final exam scores and completion rates across the four courses and three years, these differences were not statistically significant. As with many of the studies discussed in this section, this study did not attempt to control for any teacher or student differences, due to the manner in which the adoption took place. While it is understandable that the math department wished to simultaneously change all its course materials, it would have provided a better experimental context had only a portion of students and teachers been selected for an implementation of OER.

The seventh study (Allen et al. 2015) took place at the University of California, Davis. The researchers wanted to test the efficacy of an OER called ChemWiki in a general chemistry class. Unlike some of the studies previously discussed, researchers attempted to approximate an experimental design that would control for the teacher effect by comparing the results of students in two sections taught by the same instructor at back-to-back hours. One of these sections was an experimental class of 478 students who used ChemWiki as its primary learning resource; the other was a control class of 448 students that used a commercial textbook. To minimize confounds, the same teaching assistants worked with each section and common grading rubrics were utilized. Moreover, they utilized a pretest to account for any prior knowledge differences between the two groups.

Students in both sections took identical midterm and final exams. Researchers found no significant differences between the overall results of the two groups. They also examined item-specific questions and observed no significant differences. Comparisons between beginning of the semester pre-tests and final exam scores likewise showed no significant differences in individual learning gains. This pre/post analysis was an important measure to control for initial differences between the two groups.

Researchers also administered student surveys in order to determine whether students in one section spent more time doing course assignments than those in the other section. They found that students in both sections spent approximately the same amount of time preparing for class. Finally, they administered the chemistry survey known as “Colorado Learning Attitudes about Science Survey” (CLASS) in order to discern whether student attitudes towards chemistry varied by treatment condition. Again, there was no significant difference.

The eighth study (Robinson 2015) examined OER adoption at seven different institutions of higher education. These institutions were part of an open education initiative named Kaleidoscope Open Course Initiative (KOCI). Robinson focused on the pilot adoption of OER resources at these schools in seven different courses (Writing, Reading, Psychology, Business, Geography, Biology, and Algebra). In the 2012–2013 academic year, 3254 students across the seven institutions enrolled in experimental versions of these courses that utilized OER and 10,819 enrolled in the equivalent versions of the course that utilized traditional textbooks. In order to approximate randomization, Robinson used propensity score matching on several key variables in order to minimize the differences between the two groups. After propensity score matching was completed, there were 4314 students remaining, with 2157 in each of the two conditions.

Robinson examined the differences in final course grade, the percentage of students who completed the course with a grade of C- or better, and the number of credit hours taken, which was examined in order to explore whether lower textbook costs were correlated with students taking more courses. Robinson found that in five of the courses there were no statistically significant differences between the two groups in terms of final grades or completion rates. However, students in the Business course who used OER performed significantly worse, receiving on average almost a full grade lower than their peers. Those who took the OER version of the psychology course also showed poorer results; on average, they received a half-grade lower for their final grade (e.g., B+ to a B). Students in these two courses were significantly less likely to pass the course with a C- or better.

In contrast, students who took the biology course that used OER were significantly more likely to complete the course, although there were no statistically significant differences between groups in the overall course grades. Across all classes there was a small but statistically significant difference between the two groups in terms of the number of credits they took, with students taking OER versions of the course taking on average 0.25 credits more than their counterparts in the control group. This study is notable among higher education OER efficacy studies for its rigorous attempts to use propensity score matching to control for potentially important confounding variables.
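For readers unfamiliar with propensity score matching, the following Python sketch shows one common variant: greedy one-to-one nearest-neighbor matching without replacement, with a caliper. It is an illustration under stated assumptions, not Robinson's actual procedure, which is not detailed here. The scores, identifiers, and the caliper of 0.05 are all hypothetical; in practice the scores would first be estimated from covariates, for example with logistic regression.

```python
def match_nearest(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: dicts mapping a student id to an estimated
    propensity score in [0, 1] (assumed to be computed beforehand).
    caliper: maximum allowed score difference for a valid match.
    Returns a list of (treated_id, control_id) pairs.
    """
    available = dict(controls)  # copy, so matched controls can be removed
    pairs = []
    # Process treated students in order of increasing score.
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            break
        # Closest remaining control by absolute score difference.
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is used at most once
    return pairs

# Hypothetical propensity scores for illustration only.
treated = {"t1": 0.30, "t2": 0.62, "t3": 0.95}
controls = {"c1": 0.28, "c2": 0.33, "c3": 0.60, "c4": 0.10}
print(match_nearest(treated, controls))
```

Treated students with no sufficiently similar control are dropped, which is why a matched sample is smaller than the original one and always contains equal numbers in each condition.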

In the ninth study, Fischer et al. (2015) performed follow-up research on the institutions participating in KOCI. Their study focused on OER implementation in the fall of 2013 and spring of 2014. Their original sample consisted of 16,727 students (11,818 control and 4909 treatment). From this sample, there were 15 courses for which some students enrolled in both treatment (n = 1087) and control (n = 9264) sections (the remaining students enrolled in a course which had either all treatment or all control sections and were therefore excluded). While this represents a large sample size, students in treatment conditions were only compared with students in control conditions who were taking the same class in which they were enrolled. For example, students enrolled in a section of Biology 111 that used OER were only compared with students in Biology 111 sections that used commercial textbooks (not students enrolled in a different course). Thus when diffused across 15 classes, there was an insufficient number of treatment students to do propensity score matching for the grade and completion analyses.

The researchers found that in two of the 15 classes, students in the treatment group were significantly more likely to complete the course (there were no differences in the remaining 13). In five of the treatment classes, students were significantly more likely to receive a C- or better. In nine of the classes there were no significant differences, and in one class control students were more likely to receive a C- or better. Similarly, in terms of the overall course grade, students in four of the treatment classes received higher grades, ten of the classes had no significant differences, and students in one control class received higher grades than the corresponding treatment class.

Researchers utilized propensity score matching before examining the number of credits students took in each of the semesters as this matching could be done across the different courses. Drawing on their original sample of 16,727 students, the researchers matched 4147 treatment subjects with 4147 controls. There was a statistically significant difference in enrollment intensity between the groups. Students in fall 2013 who enrolled in courses that utilized OER took on average two credit hours more than those in the control group, even after controlling for demographic covariates. ANCOVA was then used to control for differences in fall enrollment and to estimate differences in winter enrollment. Again, there was a significant difference between the groups, with treatment subjects enrolling in approximately 1.5 credits more than controls.

This study is unique in its large sample size and rigorous analysis surrounding the number of credits taken by students. In some ways, its strength is also a weakness. Because of the large number of contexts, OER utilized, teachers involved, and so forth, it is difficult to pinpoint OER as the main driver of change. For example, it is possible that the level of teacher proficiency at the college that taught Psychology using open resources was superior to that of the college where traditional textbooks were used. A host of other variables, such as student awareness of OER and the manner in which the classes were taught, were not analyzed in this study; these could have overwhelmed any influence of OER. Moreover, the authors neglect to provide an effect size, limiting the ability to determine the magnitude of difference between the control and treatment courses. At the same time, one would expect that if using OER does significantly impact learning (for good or bad), that finding would be visible in the results. The lack of difference between the groups indicates that substituting OER for traditional resources was not a large factor in influencing learning outcomes.

Table 1 summarizes the results of the nine published research studies that compare the student learning outcomes in higher education based on whether the students used OER or traditional textbooks.

Results—studies pertaining to student and teacher perceptions of OER

Two of the studies referenced in the above section on student learning outcomes also included data that pertained to student and/or faculty perceptions of OER. Feldstein et al. (2012) surveyed the 1393 students who utilized OER. Of the 315 students who responded to this survey, 95 % strongly agreed or agreed that the OER were “easy to use” and 78 % of respondents felt that the OER “provided access to more up-to-date material than is available in my print textbooks.” Approximately two-thirds of students strongly agreed or agreed that the digital OER were more useful than traditional textbooks and that they preferred the OER digital content to traditional textbooks.

Hilton et al. (2013) surveyed 1400 students and forty-two faculty members who utilized math OER; 910 students and twenty faculty members completed these surveys. The majority of students (78 %) said they would recommend the OER to their classmates. Similarly, 83 % of students agreed with the statement that “Overall, the materials adequately supported the work I did outside of class.” Twelve percent of students neither agreed nor disagreed. An analysis of the free responses to the question, “What additional comments do you have regarding the quality of the open materials used in your class?” showed that 82 % were positive. Faculty members were likewise enthusiastic about the open materials. Of the 18 faculty members who responded to questions comparing the materials, nine said the OER were similar in quality to the texts they used in other courses, and six said that they were better.

In addition to these two studies, I identified seven other articles that focus on teacher and/or student perceptions of OER. As will be discussed in a later section, many of these articles share significant weaknesses, namely the limitations of student perceptions and the potential biases of teachers involved in the creation or adoption of OER.

The first of these studies (Petrides et al. 2011) drew on surveys of instructors and students who utilized an open statistics textbook called Collaborative Statistics (a revised version of this textbook is now published by OpenStax and is titled Introductory Statistics). In total, 31 instructors and 45 students participated in oral interviews or focus groups that explored their perceptions of this OER.

The researchers stated that “Cost reduction for students was the most significant factor influencing faculty adoption of open textbooks.” (p. 43). The majority of students (74 %) reported they typically utilized the book materials online, rather than printing or purchasing a hard copy. Cost was cited as the primary factor behind this decision. In addition, 65 % of students stated they would prefer to use open textbooks in future courses because they were generally easier to use.

The second study (Pitt et al. 2013) examined student perceptions of two pieces of OER that were used to help students improve in their mathematics and personal development skills. These OER were used in a variety of pilot projects, including as resources for community college students who had failed mathematics entrance exams.

In total, 1830 learners used the two OER. For a variety of reasons, only 126 of these students took surveys regarding their perceptions of the learning materials. Of those who completed the surveys, 79 % reported overall satisfaction with the quality of the OER. An additional 17 % stated they were undecided about their satisfaction with the OER, and only 4 % expressed dissatisfaction with the materials. While this study reported overall positive perceptions of OER, it is limited by the extremely low response rate.

The third study (Gil et al. 2013) reported on a blog that heavily utilized OER. Students enrolled in the Computer Networks course at the University of Alicante (located in Spain) used this blog in conjunction with their coursework. Between June 2010 and February 2013, 345 students enrolled in the course. Of these students, 150 (43 %) completed surveys about their perceptions of the blog that featured OER in contrast with blogs they had used in other courses.

Students were asked questions such as, “In terms of organisation, were you more or less satisfied with the Computer Networks blog versus other blogs at the University of Alicante?” On average, 40 % of students said that the blogs featuring OER were of equal quality to the blogs that did not feature OER, 45 % of students said the blogs with OER were superior and 15 % said they were inferior. While this study shows that a strong majority of users ranked the OER blog as good as or better than non-open blogs, it is limited given the generally accessible nature of blogs. It is not clear from the article what it was about the blogs with OER that made them superior to the blogs that did not feature them. Thus, it is difficult to determine the degree to which it was the OER or some other factors that led to the favorable student views.

The fourth and fifth studies (Bliss et al. 2013a, 2013b) both examined OER adoption at the KOCI institutions that used OER. Bliss et al. (2013a) reported on surveys taken by eleven instructors and 132 students at seven KOCI colleges. Seven of the instructors believed that their students were equally prepared (in comparison with previous semesters) when OER replaced traditional texts; three reported that their students were more prepared, with one feeling that students were less prepared. All instructors surveyed said they would be very likely to use open texts in the future. Students in this study were also very positive regarding OER materials. When invited to compare the OER with the types of textbooks they traditionally used, only 3 % felt the OER were worse than their typical textbooks. In contrast, 56 % said they were the same quality; 41 % said they were better than typical textbooks.

Bliss et al. (2013b) extended this study by surveying an additional 58 teachers and 490 students across the eight KOCI colleges regarding their experiences with OER. They found that approximately 50 % of students said the OER textbooks had the same quality as traditional textbooks and nearly 40 % said that they were better. Students focused on several benefits of the open textbooks. The free nature of their open texts seemed vital to many students. For example, one student said, “I have no expendable income. Without this free text I would not be able to take this course.” Researchers found that 55 % of KOCI teachers reported the open materials were of the same quality as the materials that had previously been used, and 35 % felt that they were better. Lower cost and the ability to make changes to the text were reasons that many teachers felt that the OER materials were superior.

The sixth study, Lindshield and Adhikari (2013), sought to understand student perceptions of a course “flexbook” being utilized in face-to-face and distance courses in a class called “Human Nutrition,” offered at Kansas State University. This flexbook is a digital OER textbook that is easily adaptable by instructors and available to students in a variety of formats. The authors wanted to determine if perceptions and use of flexbooks were different in an online section of a Human Nutrition class as compared to a face-to-face class, which also used the flexbook. Out of the 322 students who took the course between spring 2011 and spring 2012, 198 completed a survey in which they answered questions about their experience with the OER.

The researchers found that both online and face-to-face students had favorable perceptions of the OER flexbooks they utilized, with the online classes having higher, but not statistically significant, levels of satisfaction. On a seven-point scale (7 = strongly agree), students gave an average response of 6.4 to the question, “I prefer using the flexbook versus buying a textbook for HN [Human Nutrition] 400.” Moreover, they found that students disagreed or somewhat disagreed with statements to the effect that they would like to have a traditional textbook in addition to the OER.

The seventh study (Allen and Seaman 2014) surveyed 2144 college professors regarding their opinions on OER. They used a nationally representative faculty sample, randomly selecting faculty members from a database that purportedly includes 93 % of all higher education teaching faculty in the United States. Faculty respondents were equally split between male and female and approximately three-quarters were full-time faculty members.

Of those surveyed, 729 (34 %) expressed awareness of OER. Of the subset that was aware of OER, 61.5 % of respondents said that OER materials had about the same “trusted quality” as traditional resources, 26.3 % said that traditional resources were superior, and 12.1 % said that OER were superior. Regarding “proven efficacy,” 68.2 % said that the two were about the same, 16.5 % said that OER had superior efficacy and 15.3 % said that traditional resources had superior efficacy. It is important to note that the faculty members in this study expressed awareness of OER, but had not necessarily utilized OER in their pedagogy, as had the instructors in the previously cited perception studies. Thus we cannot be certain about the object of their perceptions or the extent to which they accurately define OER. This research would have been significantly strengthened had it provided information about a subset of teachers who had used OER as the primary learning material in their classroom.

Table 2 summarizes the results of the nine published research studies that provide data regarding student and/or teacher perceptions of OER.
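
Several of the limitations noted above concern survey response rates. As a reader's aid, the following minimal Python sketch recomputes those rates from the respondent and sample counts stated in the text. The dictionary layout, the variable names and the helper function are illustrative assumptions rather than part of any cited study, and the sketch treats each stated sample size as the number of students invited to respond.

```python
# Respondent and sample counts are copied from the study descriptions above.
# The structure and names here are hypothetical, chosen only for illustration.
surveys = {
    "Feldstein et al. (2012)": (315, 1393),
    "Hilton et al. (2013), students": (910, 1400),
    "Pitt et al. (2013)": (126, 1830),
    "Gil et al. (2013)": (150, 345),
    "Lindshield and Adhikari (2013)": (198, 322),
}


def response_rate(responded, sample_size):
    """Return the response rate as a percentage of the stated sample."""
    return 100.0 * responded / sample_size


rates = {study: response_rate(r, n) for study, (r, n) in surveys.items()}

# List the studies from the lowest response rate to the highest.
for study, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{study}: {rate:.1f} %")
```

Sorting by rate places Pitt et al. (2013) first, which is consistent with the low response rate noted for that study.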

Discussion

In total, 46,149 students have participated in studies relating to the influence of OER on learning outcomes. Only one of the nine studies on OER efficacy showed that the use of OER was connected with lower learning outcomes in more instances than it was with positive outcomes, and even this study found non-significant differences in the majority of classes. Three had results that significantly favored OER, three showed no significant difference and two did not discuss the statistical significance of their results. In synthesizing these nine OER efficacy studies, an emerging finding is that utilizing OER does not appear to decrease student learning.

These results must be interpreted with caution, however, for many reasons. First, it is important to note that, as stated previously, it is not clear how OER might have been used in each of the above contexts. In some instances, open textbooks are printed and utilized just as traditional textbooks. In other contexts students access OER only through digital methods. These design differences make it difficult to connect learning gains or losses directly with the OER. For example, it is theoretically possible that adopting an open digital textbook led to increased access but that students obtained sub-optimal results because they read it online instead of in print. It cannot be determined whether differences in design did make a difference in these studies; however, Daniel and Woody (2013) have shown that in some contexts it appears that there is no difference in student performance when they read electronic versus print versions of a textbook.

It is also important to note that the research designs discussed in this paper were insufficient to claim causality, and some were quite weak. Significant design flaws such as changing final exam metrics between comparison years or comparing different (rather than identical) courses severely curtail the usefulness of some of these studies. Likewise, a consistent problem with confounding the adoption of OER with a change in the delivery method (e.g., from traditional to blended learning) is an issue that needs to be addressed in future studies that attempt to determine the impact of OER adoption.

In some respects, these limitations are not surprising. Confrey and Stohl (2004) examined 698 peer-reviewed studies of the 13 mathematics curricula that are supported by the National Science Foundation as well as six different commercial products. They found that “The corpus of evaluation studies as a whole across the 19 programs studied does not permit one to determine the effectiveness of individual programs with a high degree of certainty, due to the restricted number of studies for any particular curriculum, limitations in the array of methods used, and the uneven quality of the studies” (p. 3). If such heavily funded curricula across nearly 700 studies have only inconclusive results, we should not be surprised that the effects of OER adoption are relatively modest.

Those who wish to engage in further OER efficacy research may benefit from adapting aspects of the studies that incorporate stronger research designs. For example, the techniques used by Allen et al. (2015) represent an important attempt to control for teacher and student effects. The approach taken by Lovett et al. (2008) and Bowen et al. (2012) to randomize treatment and control groups based on those who volunteer is another technique that could benefit further OER efficacy studies. Studying patterns of enrollment intensity connected to OER, subject to propensity score matching, as did Robinson (2015) and Fischer et al. (2015), may be an important approach to testing the hypothesis that open textbooks can help hasten progress toward graduation. While not evenly administered throughout all of the studies, the collective implementation of techniques such as randomization and attempts to control for student and teacher differences does indicate that some serious efficacy research has been done, and much more is needed.

Ideally future research could be structured in such a way that students are randomly assigned to open and traditional textbooks, an option that admittedly would be difficult to pursue. The approach taken by Allen et al. (2015) of administering a pretest at the beginning of a course to account for any pre-existing student differences may be a more realistic approach. I believe that replicating Allen et al. (2015) in different contexts is the most viable approach to increasing the base of significant efficacy studies on OER. In addition, researchers could explore questions such as, “How do students use OER as opposed to traditional textbooks?” All of the OER efficacy research that has been done presupposes that the textbook (whether traditional or open) influences learning. Is this in fact the case? Does the amount of time or manner in which students engage with the learning resource influence outcomes?

In terms of student and teacher perceptions, a total of 4510 students and faculty members were surveyed across nine studies. In no instance did a majority of students or teachers report a perception that the OER were less likely to help students learn. In only one study did faculty state that traditional resources had a higher “trusted quality” than OER (however, nearly two-thirds said they were the same). Across multiple studies in various settings, students consistently reported that they faced financial difficulties and that OER provided a financial benefit to them. A general finding seemed to be that roughly half of students found OER to be comparable to traditional resources, a sizeable minority believed they were superior, and a smaller minority found them to be inferior. This is particularly noteworthy given some research that indicates that students tend to read electronic texts more slowly than their counterparts who read in print (Daniel and Woody 2013).

These findings, however, must be tempered first with the notion that they rely heavily on student perceptions, which in some instances appear to revolve more around improving efficiency than around learning (Kvavik 2005). The fact that students saved significant amounts of money by using OER likely colored their perceptions of the value of OER as learning resources. It may be that cost-savings or convenience (e.g., not having to carry around heavy backpacks) influenced student perceptions more than learning growth. Similarly, many of the teachers who were surveyed in these studies were involved in the creation or selection of the OER used in their classes. This has the potential to significantly bias their perception of the quality of the resources.

I propose that future perception studies overcome these limitations by providing a context in which students and teachers evaluate traditional and open textbooks in less-biased settings. For example, students and teachers could be recruited to compare textbooks that they have not created, used or purchased. They could blindly (without knowing which textbooks are OER) evaluate the textbooks on a variety of metrics including their ease of use, accuracy of information and so forth. While this would have the disadvantage of people giving more cursory evaluations (not having utilized the textbooks throughout a semester), it would have the advantage of mitigating the potential biases described in the previous paragraph.

Conclusion

The collective results of the 16 studies discussed in this article provide timely information given the vast amount of money spent on traditional textbooks. Because students and faculty members generally find that OER are comparable in quality to traditional learning resources, and that the use of OER does not appear to negatively influence student learning, one must question the value of traditional textbooks. If the average college student spends approximately $1000 per year on textbooks and yet performs scholastically no better than the student who utilizes free OER, what exactly is being purchased with that $1000?

The decision to employ OER appears to have financial benefits to students (and the parents and taxpayers who support them) without any decrease in their learning outcomes. This last statement must be said tentatively, given the varying rigor of the research studies cited in this paper. Nevertheless, based on the 16 studies I have analyzed, researchers and educators may need to more carefully examine the rationale for requiring students to purchase commercial textbooks when high-quality, free and openly-licensed textbooks are available.

References

Allen, G., Guzman-Alvarez, A., Molinaro, M., & Larsen, D. (2015). Assessing the impact and efficacy of the open-access ChemWiki textbook project. Educause Learning Initiative Brief. January 2015. https://net.educause.edu/ir/library/pdf/elib1501.pdf.

Allen, E., & Seaman, J. (2014). Opening the curriculum: Open educational resources in U.S.

Berry, T., Cook, L., Hill, N., & Stevens, K. (2010). An exploratory analysis of textbook usage and study habits: Misperceptions and barriers to success. College Teaching, 59(1), 31–39.

Bissell, A. (2009). Permission granted: Open licensing for educational resources. Open Learning, The Journal of Open and Distance Learning, 24, 97–106.

Bliss, T., Hilton, J., Wiley, D., & Thanos, K. (2013a). The cost and quality of open textbooks: Perceptions of community college faculty and students. First Monday, 18, 1.

Bliss, T., Robinson, T. J., Hilton, J., & Wiley, D. (2013b). An OER COUP: College teacher and student perceptions of open educational resources. Journal of Interactive Media in Education, 17(1), 1–25.

Bowen, W. G., Chingos, M. M., Lack, K. A., & Nygren, T. I. (2012). Interactive learning online at public universities: Evidence from randomized trials. Ithaka S + R. Retrieved from http://mitcet.mit.edu/wp-content/uploads/2012/05/BowenReport-2012.pdf

Bowen, W. G., Chingos, M. M., Lack, K. A., & Nygren, T. I. (2014). Interactive learning online at public universities: Evidence from a six-campus randomized trial. Journal of Policy Analysis and Management, 33(1), 94–111.

Bushway, S. D., & Flower, S. M. (2002). Helping criminal justice students learn statistics: A quasi-experimental evaluation of learning assistance. Journal of Criminal Justice Education, 13(1), 35–56.

Confrey, J., & Stohl, V. (Eds). (2004). On evaluating curricular effectiveness: Judging the quality of K-12 mathematics evaluations. National Academies Press.

D’Antoni, S. (2009). Open educational resources: Reviewing initiatives and issues. Open Learning, The Journal of Open and Distance Learning, 24, 3–10.

Daniel, D. B., & Woody, W. D. (2013). E-textbooks at what cost? Performance and use of electronic v. print texts. Computers & Education, 62, 18–23.

Darwin, D. (2011). How much do study habits, skills, and attitudes affect student performance in introductory college accounting courses? New Horizons in Education, 59(3).

Feldstein, A., Martin, M., Hudson, A., Warren, K., Hilton, J., & Wiley, D. (2012). Open textbooks and increased student access and outcomes. European Journal of Open, Distance and E-Learning.

Fischer, L., Hilton, J., III, Robinson, T. J., & Wiley, D. A. (2015). A multi-institutional study of the impact of open textbook adoption on the learning outcomes of post-secondary students. Journal of Computing in Higher Education, 27(3), 159–172.

Florida Virtual Campus. (2012). 2012 Florida student textbook survey. Tallahassee. http://www.openaccesstextbooks.org/pdf/2012_Florida_Student_Textbook_Survey.pdf.

Gil, P., Candelas, F., Jara, C., Garcia, G., & Torres, F. (2013). Web-based OERs in computer networks. International Journal of Engineering Education, 29(6), 1537–1550.

Hewlett. (2013). Open educational resources. http://www.hewlett.org/programs/education-program/open-educational-resources

Hilton, J., Gaudet, D., Clark, P., Robinson, J., & Wiley, D. (2013). The adoption of open educational resources by one community college math department. The International Review of Research in Open and Distance Learning, 14(4), 37–50.

Hilton, J., & Laman, C. (2012). One college’s use of an open psychology textbook. Open Learning: The Journal of Open and Distance Learning, 27(3), 201–217.

Johnstone, S. M. (2005). Open educational resources serve the world. Educause Quarterly, 28(3), 15.

Kvavik, R. B. (2005). Convenience, communications, and control: How students use technology. In D. G. Oblinger & J. L. Oblinger (Eds.), Educating the net generation. EDUCAUSE Center for Applied Research.

Lindshield, B., & Adhikari, K. (2013). Online and campus college students like using an open educational resource instead of a traditional textbook. Journal of Online Learning & Teaching, 9(1), 1–7. Retrieved from http://jolt.merlot.org/vol9no1/lindshield_0313.htm

Lovett, M., Meyer, O., & Thille, C. (2008). The open learning initiative: Measuring the effectiveness of the OLI statistics course in accelerating student learning. Journal of Interactive Media in Education, 2008(1).

Morris-Babb, M., & Henderson, S. (2012). An experiment in open-access textbook publishing: Changing the world one textbook at a time. Journal of Scholarly Publishing, 43(2), 148–155.

Pawlyshyn, N., Braddlee, D., Casper, L., & Miller, H. (2013). Adopting OER: A case study of cross-institutional collaboration and innovation. Educause Review, http://www.educause.edu/ero/article/adopting-oer-case-study-cross-institutional-collaboration-and-innovation

Petrides, L., Jimes, C., Middleton-Detzner, C., Walling, J., & Weiss, S. (2011). Open textbook adoption and use: Implications for teachers and learners. Open Learning, 26(1), 39–49.

Pitt, R., Ebrahimi, N., McAndrew, P., & Coughlan, T. (2013). Assessing OER impact across organisations and learners: Experiences from the bridge to success project. Journal of Interactive Media in Education, 2013(3), http://jime.open.ac.uk/article/view/2013-17/501

Robinson, T. J. (2015). Open textbooks: The effects of open educational resource adoption on measures of post-secondary student success. Doctoral dissertation.

Skinner, D., & Howes, B. (2013). The required textbook—Friend or foe? Dealing with the dilemma. Journal of College Teaching & Learning (TLC), 10(2), 133–142.

UNESCO. (2002). Forum on the impact of open courseware for higher education in developing countries. Final report. Retrieved from www.unesco.org/iiep/eng/focus/opensrc/PDF/OERForumFinalReport.pdf

Wiley, D., Bliss, T. J., & McEwen, M. (2014). Open educational resources: A review of the literature. In Handbook of research on educational communications and technology (pp. 781–789). New York: Springer.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hilton, J. Open educational resources and college textbook choices: a review of research on efficacy and perceptions. Education Tech Research Dev 64, 573–590 (2016). https://doi.org/10.1007/s11423-016-9434-9

DOI: https://doi.org/10.1007/s11423-016-9434-9