Abstract

Ensuring fairness in instruments such as survey questionnaires and educational tests is crucial. One way to address this is through Differential Item Functioning (DIF) analysis, which examines whether different subgroups respond differently to a particular item after controlling for their overall latent construct level. DIF analysis is typically conducted to assess measurement invariance at the item level. Traditional DIF analysis methods require knowledge of the comparison groups (reference and focal groups) and of anchor items (a subset of DIF-free items). Such prior knowledge may not always be available, and psychometric methods have been proposed for DIF analysis when one of these two pieces of information is unknown. More specifically, when the comparison groups are unknown but anchor items are known, latent DIF analysis methods have been proposed that estimate the unknown groups by latent classes. When anchor items are unknown but the comparison groups are known, methods have also been proposed, typically under a sparsity assumption that the number of DIF items is not too large. However, DIF analysis when both pieces of information are unknown has received little attention. This paper proposes a general statistical framework for this setting. In the proposed framework, we model the unknown groups by latent classes and introduce item-specific DIF parameters to capture the DIF effects. Assuming the number of DIF items is relatively small, an \(L_1\)-regularised estimator is proposed to simultaneously identify the latent classes and the DIF items. A computationally efficient Expectation-Maximisation (EM) algorithm is developed to solve the non-smooth optimisation problem for the regularised estimator. The performance of the proposed method is evaluated by simulation studies and an application to item response data from a real-world educational test.


Psychometric models for analysing data from instruments such as survey questionnaires and educational tests rely on an equivalence assumption on the item parameters across groups of respondents. That is, conditional on the latent construct measured by the instrument, a respondent’s response to each item is independent of their group membership. This assumption is known as measurement invariance. If it is violated, the psychometric properties of the affected item(s) are not constant across groups, which can cause measurement bias (Millsap, 2012). The measurement invariance assumption is typically investigated through differential item functioning (DIF) analysis, a class of statistical methods that compares respondent groups at the item level and detects non-invariant, i.e., DIF, items.

Traditional DIF detection methods assume that both the comparison groups and a set of non-DIF items, commonly referred to as the anchor set, are known a priori. The anchor items are used to identify the latent construct the instrument measures, and a DIF detection method compares the performance of the comparison groups on each studied item, controlling for their performance on the anchor items as a proxy for the latent construct level. Depending on their specific assumptions, these DIF detection methods can be divided into Item-Response-Theory-based (IRT-based) methods (e.g., Kim et al., 1995; Lord, 1977; Lord, 1980; Steenkamp & Baumgartner, 1988; Tay et al., 2012; Thissen et al., 1988; Thissen & Steinberg, 1998; Wainer, 2016; Woods et al., 2013) and non-IRT-based methods (e.g., Cao et al., 2017; Dorans & Kulick, 1986; Drabinová & Martinková, 2017; Holland & Thayer, 1986; Holland & Wainer, 1993; Shealy & Stout, 1993; Swaminathan & Rogers, 1990; Tay et al., 2015; Woods et al., 2013; Zwick et al., 2000); see Millsap (2012) for a review of traditional DIF analysis methods. Generally speaking, IRT-based methods tend to provide a clearer definition of DIF effects through a generative probabilistic model, at the cost of a risk of model misspecification.

Unfortunately, the comparison groups and anchor items may not be available in real-world applications, in which case the aforementioned traditional methods are not applicable. Even when some information about anchor items is available, the results may be sensitive to the specific anchor items used, particularly when only a few such items are available, and misspecified anchor items can seriously distort the results. Modern DIF analysis methods have been developed for situations where either the comparison groups or the anchor items are unknown. When anchor items are unknown, the latent construct is not identified, in which case DIF detection is an ill-posed problem unless additional assumptions are made. A reasonable assumption in this situation is sparsity, that is, the number of DIF items is relatively small. Under this assumption, the detection of DIF items becomes a model selection problem. To tackle this model selection problem, item purification methods have been proposed (e.g., Candell & Drasgow, 1988; Clauser et al., 1993; Fidalgo et al., 2000; Kopf et al., 2015a; b; Wang et al., 2009; Wang & Su, 2004; Wang & Yeh, 2003), in which stepwise model selection methods are used to detect DIF items. More recently, Lasso-type regularised estimation methods have been proposed to solve the model selection problem (Magis et al., 2015; Tutz and Schauberger, 2015; Belzak and Bauer, 2020; Bauer et al., 2020; Schauberger and Mair, 2020). In these methods, the DIF effects are represented by item-specific parameters under an IRT model, where a zero coefficient encodes no DIF effect for an item, and Lasso-type penalties are imposed on the DIF parameters to obtain a sparse solution, i.e., one in which many items are DIF-free. A drawback of regularised estimation methods is that, due to the bias introduced by Lasso regularisation, they do not provide valid p-values for testing whether each item is DIF-free. Recently, Chen et al. (2023) considered a limiting case of a regularised estimator and showed that the estimator can simultaneously identify the latent construct and yield valid statistical inference on the individual DIF effects. An alternative direction of DIF analysis without anchor items is based on the idea of differential item pair functioning. Under the Rasch model, Bechger and Maris (2015) showed that although a Rasch model with group-specific difficulty parameters is not identifiable, the relative difficulties of item pairs are identifiable and can be used to detect DIF items. Based on this idea, Yuan et al. (2021) introduced visualisation methods for DIF detection. Lastly, we point out that there is a related literature on \(L_1\) regularisation for general mixture models such as Gaussian mixture models (e.g., Bhattacharya & McNicholas, 2014; Bouveyron & Brunet-Saumard, 2014; Luo et al., 2008), which also consider model-based clustering but are based on continuous rather than categorical data. However, these works all consider a high-dimensional data setting, in which the regularisation is used for dimension reduction. The current paper focuses on a relatively low-dimensional setting, and a regularised estimator is proposed for the purpose of model selection.

The comparison groups may sometimes be unavailable, and DIF analysis in this situation is typically referred to as latent DIF analysis (Cho et al., 2016; De Boeck et al., 2011). As suggested by De Boeck et al. (2011), latent DIF analysis is needed when we do not know the crucial groups for comparison, when we cannot observe the groups of interest, or when there are validity concerns regarding the true group membership of the respondents. For example, for self-reported health and mental health instruments (Teresi and Reeve, 2016; Reeve and Teresi, 2016; Teresi et al., 2021), many covariates are collected, such as age, gender, ethnicity, and other background variables, but the crucial groups for DIF analysis are typically unclear. As another example, when analysing data from an educational test in which a subset of test takers have preknowledge of some leaked items (Cizek and Wollack, 2017), the two comparison groups of interest, namely those with and without item preknowledge, are not directly observable. Moreover, the observed group membership may sometimes poorly indicate the “true” group membership that causes the DIF pattern in the item response data (e.g., Bennink et al., 2014; Cho & Cohen, 2010; Finch & Hernández Finch, 2013; Von Davier et al., 2011). Most existing latent DIF analysis methods assume that an anchor set is known and use a mixture IRT model, which combines IRT and latent class analysis, to identify the unknown groups and detect the DIF items simultaneously (Cho and Cohen, 2010; Cohen and Bolt, 2005; De Boeck et al., 2011); see Cho et al. (2016) for a review.

In practice, both the comparison groups and the anchor set may be unknown. For example, besides the aforementioned challenges of identifying the crucial comparison groups, the DIF analysis of self-reported health and mental health instruments also faces the challenge of identifying anchor items (Teresi and Reeve, 2016; Reeve and Teresi, 2016). In the item preknowledge example above, not only are the comparison groups unobserved, but prior knowledge about non-leaked items is also likely unavailable, and thus correctly specifying an anchor set is a challenge (O’Leary et al., 2016). Almost no general methods are available for latent DIF analysis when the anchor set is unavailable. Two notable exceptions are Chen et al. (2022) and Robitzsch (2022). In Chen et al. (2022), a Bayesian hierarchical model for latent DIF analysis is proposed and applied to the simultaneous detection of item leakage and preknowledge in educational tests. In this model, latent classes are imposed on the test takers to model the comparison groups, and on the items to model the DIF and non-DIF item sets. In addition, both the person- and item-specific parameters are treated as random variables and inferred via a fully Bayesian approach. However, inference under this model relies on a Markov chain Monte Carlo algorithm, which suffers from slow mixing. Moreover, as most traditional DIF analysis methods adopt a frequentist setting, it is of interest to develop a frequentist approach to latent DIF analysis when the anchor set is unknown. Robitzsch (2022) proposed a latent DIF procedure based on a regularised estimator under a mixture Rasch model, investigating the nonconvex Smoothly Clipped Absolute Deviation (SCAD) penalty (Fan and Li, 2001) rather than the \(L_1\) penalty. The methodology proposed in the current paper is similar in spirit to that of Robitzsch (2022) but was developed independently.
The proposed framework focuses on the two-parameter logistic (2-PL) model (Birnbaum, 1968) with an \(L_1\) penalty and can be generalised to other item response theory models.

This paper proposes a frequentist framework for DIF analysis when both the comparison groups and the anchor set are unknown. The proposed framework combines the ideas of mixture IRT modelling for latent DIF analysis and regularised estimation for manifest DIF analysis with unknown anchor items. More specifically, the unknown groups are modelled by latent classes, and the DIF effects are characterised by item-specific DIF parameters. An \(L_1\)-regularised marginal maximum likelihood estimator is proposed, assuming that the number of DIF items is relatively small. This estimator penalises the DIF parameters by a Lasso regularisation term so that the DIF items can be selected by the non-zero pattern of the estimated DIF parameters. Computing the \(L_1\)-regularised estimator involves solving a non-smooth optimisation problem. We propose a computationally efficient Expectation-Maximisation (EM) algorithm (Dempster et al., 1977; Bock and Aitkin, 1981), where the non-smoothness of the objective function is handled by a proximal gradient method (Parikh and Boyd, 2014). We evaluate the proposed method through simulation studies and an application to item response data from a real-world educational test. For the real-world application, we consider data from a test administered at a midwestern university in the United States. This data set has been studied by Bolt et al. (2002), where end-of-test items are believed to exhibit DIF because some test takers had insufficient time. Both the comparison groups, i.e., the speeded and non-speeded respondents, and the anchor items are unknown. In Bolt et al. (2002), the DIF items and comparison groups are detected by borrowing information from an additional test form, which was carefully designed so that the potential speededness-DIF items in the original form are administered at earlier positions and are thus unlikely to suffer from speededness-DIF.
Thanks to the proposed procedure, we are able to identify the unknown DIF items and comparison groups without using information from the additional test form, and our findings are consistent with those of Bolt et al. (2002).

The rest of the paper is organised as follows. In Sect. 1, we propose a modelling framework for latent DIF analysis with unknown groups and anchor items and a regularised estimator that simultaneously identifies the unknown groups and detects the DIF items. In Sect. 2, we propose a computationally efficient EM algorithm. The proposed method is evaluated by simulation studies in Sect. 3 and further applied to data from a real-world educational test in Sect. 4. We conclude with discussions in Sect. 5. Details about the computational algorithm are given in the Appendix.

1 Proposed Framework

1.1 Measurement Model

Consider N respondents answering J binary items. Let \(Y_{ij} \in \{0, 1\}\) for \(i = 1, \ldots , N\) and \(j = 1, \ldots , J\) be a binary random variable recording individual i’s response to item j. The response vector of individual i is denoted by \({\textbf{Y}}_i = (Y_{i1}, \ldots , Y_{iJ})^\top \). We assume that the items measure a unidimensional construct, which is modelled by a latent variable \(\theta _i\). We further assume that the respondents are randomly sampled from a population consisting of \(K+1\) unobserved groups, where group membership is denoted by the latent variable \(\xi _i \in \{0, 1, \ldots , K\}\). Given the latent trait \(\theta _i\) and the latent class \(\xi _i\), the measurement model is the two-parameter logistic (2-PL) item response model (Birnbaum, 1968) with an additive DIF effect,

\[ P(Y_{ij} = 1 \mid \theta _i, \xi _i) = \frac{\exp (a_j \theta _i + d_j + \delta _{j \xi _i})}{1 + \exp (a_j \theta _i + d_j + \delta _{j \xi _i})}, \tag{1} \]

where \(a_j\) and \(d_j\) are known as the discrimination and easiness parameters, respectively, and \(\delta _{j \xi _i}\) is referred to as the DIF-effect parameter: when \(\xi _i = k\), \(\delta _{jk}\) quantifies the DIF effect of latent class k on item j.

We treat \(\xi _i = 0\) as the baseline group, also known as the reference group, and set \(\delta _{j0} = 0\) for all \(j = 1, \ldots , J\). The item response function of the reference group is therefore determined by the linear predictor \( a_j \theta _i + d_j\). When \(a_j\) is common across all items, the baseline model reduces to the Rasch model (Rasch, 1960). We focus on the 2-PL model here, but the proposed method easily adapts to other baseline IRT models.

The parameter \(\delta _{jk}\) characterises how respondents in group k differ from those in the reference group in their response behaviour on item j. For the reference group, the DIF parameter is zero for all items, serving as a reference point. For the remaining latent classes, the DIF parameter can be non-zero for certain items, and its magnitude is allowed to differ across these latent classes. This flexibility accommodates DIF effects of varying size across latent groups, relative to the reference group. The DIF-effect parameter can also be expressed in terms of log-odds. Specifically, under the 2-PL model,

\[ \log \frac{P(Y_{ij} = 1 \mid \theta _i = \theta , \xi _i = k)}{P(Y_{ij} = 0 \mid \theta _i = \theta , \xi _i = k)} - \log \frac{P(Y_{ij} = 1 \mid \theta _i = \theta , \xi _i = 0)}{P(Y_{ij} = 0 \mid \theta _i = \theta , \xi _i = 0)} = \delta _{jk}, \]

i.e., \(\delta _{jk}\) is the log-odds ratio when comparing two respondents from group k and the reference group who have the same latent construct level.
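The log-odds-ratio interpretation of \(\delta_{jk}\) can be checked numerically. The Python sketch below evaluates the 2-PL response probability with an additive DIF shift for a class-k respondent and a reference respondent at the same \(\theta\), and prints their log-odds difference at several values of \(\theta\). The function names `irf` and `log_odds` and the item parameter values are hypothetical, chosen only for illustration.

```python
import math


def irf(theta, a_j, d_j, delta_jk):
    """P(Y_ij = 1 | theta_i = theta, xi_i = k) under the 2-PL model with DIF shift delta_jk.

    Use delta_jk = 0 for the reference group (k = 0).
    """
    return 1.0 / (1.0 + math.exp(-(a_j * theta + d_j + delta_jk)))


def log_odds(p):
    return math.log(p / (1.0 - p))


# Hypothetical item parameters, for illustration only.
a_j, d_j, delta_j1 = 1.2, -0.5, 0.8
for theta in (-1.0, 0.0, 2.0):
    diff = log_odds(irf(theta, a_j, d_j, delta_j1)) - log_odds(irf(theta, a_j, d_j, 0.0))
    # The difference equals delta_j1 (up to rounding), whatever theta is.
    print(f"theta = {theta:+.1f}: log-odds ratio = {diff:.6f}")
```

Because the DIF shift enters the linear predictor additively, the difference does not depend on \(\theta\); this is what makes \(\delta_{jk}\) a uniform DIF effect.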

1.2 Structural Model

The structural model specifies the joint distribution of the latent variables \((\theta _i, \xi _i)\). We assume that the latent classes follow a categorical distribution,

\[ \xi _i \sim \text {Categorical}(\nu _0, \nu _1, \ldots , \nu _K), \]

where \(P(\xi _i = k) = \nu _k\). There are consequently \(K+1\) latent classes with class probabilities \(\varvec{\nu }= (\nu _0, \nu _1, \ldots , \nu _K)^\top \) such that \(\nu _k \ge 0\) and \(\sum _{k=0}^{K} \nu _k = 1\). We further assume that conditional on \(\xi _i\), the latent ability \(\theta _i\) follows a normal distribution with class-specific mean and variance, i.e.,

\[ \theta _i \mid \xi _i = k \sim {\mathcal {N}}(\mu _k, \sigma _k^2), \quad k = 0, 1, \ldots , K. \]

To ensure model identification, we fix the mean and variance of the reference group, i.e. \(\mu _0 = 0\) and \(\sigma _0^2 = 1\).

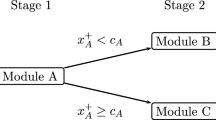

The path diagram of this model is given in Fig. 1. We note that the model coincides with a MIMIC model (Jöreskog and Goldberger, 1975) for manifest DIF analysis (Muthén and Lehman, 1985; Muthén, 1989; Woods, 2009; Woods and Grimm, 2011) when conditioning on the latent class \(\xi _i\) (i.e., viewing \(\xi _i\) as observed). However, since \(\xi _i\) is unobserved, statistical inference under the proposed model differs substantially from that under the MIMIC model. More specifically, inference under the proposed model is based on the marginal likelihood function, in which both latent variables \(\xi _i\) and \(\theta _i\) are marginalised out. When the baseline IRT model is the 2-PL model, the marginal likelihood function takes the form

\[ L(\Delta ) = \prod _{i=1}^N \sum _{k=0}^K \nu _k \int \prod _{j=1}^J \frac{\exp \{y_{ij}(a_j \theta + d_j + \delta _{jk})\}}{1 + \exp (a_j \theta + d_j + \delta _{jk})}\, \phi (\theta \vert \mu _k, \sigma ^2_k)\, d\theta , \tag{2} \]

where \(\phi (\theta \vert \mu _k, \sigma ^2_k)\) denotes the density function of a normal distribution with mean \(\mu _k\) and variance \(\sigma _k^2\), and we use vector \(\Delta \) to denote all the unknown parameters, including the item parameters \(a_j\) and \(d_j\), \(\delta _{jk}\), \(\nu _k\), \(\mu _k\) and \(\sigma _k^2\), for \(j = 1,..., J\) and \(k = 0, 1,..., K\).
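To make the marginal likelihood concrete, here is a minimal Python sketch of \(\log L(\Delta)\) for binary responses. It assumes an equally spaced grid over standardised \(\theta\) values as a simple stand-in for the Gauss-Hermite quadrature usually used in practice; the function `marginal_loglik` and its argument layout are hypothetical and are not the authors' implementation.

```python
import math


def marginal_loglik(Y, a, d, delta, nu, mu, var, n_grid=121, half_width=6.0):
    """Approximate log L(Delta) for the 2-PL mixture model with DIF.

    Y:           list of N response vectors, each a list of J zeros and ones
    a, d:        discrimination and easiness parameters (length J)
    delta:       delta[j][k] is the DIF effect of class k on item j, delta[j][0] = 0
    nu, mu, var: class probabilities, means and variances (length K + 1)
    The integral over theta is approximated by a Riemann sum over standardised
    nodes z in [-half_width, half_width], with theta = mu_k + sd_k * z.
    """
    step = 2.0 * half_width / (n_grid - 1)
    z_nodes = [-half_width + g * step for g in range(n_grid)]
    # Standard normal density times dz: the quadrature weight of each node.
    w_nodes = [math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi) * step for z in z_nodes]
    loglik = 0.0
    for y in Y:
        lik_i = 0.0
        for k in range(len(nu)):
            sd = math.sqrt(var[k])
            integral = 0.0
            for z, w in zip(z_nodes, w_nodes):
                theta = mu[k] + sd * z  # theta ~ N(mu_k, var_k)
                p_y = 1.0
                for j, y_ij in enumerate(y):
                    p1 = 1.0 / (1.0 + math.exp(-(a[j] * theta + d[j] + delta[j][k])))
                    p_y *= p1 if y_ij == 1 else 1.0 - p1
                integral += p_y * w
            lik_i += nu[k] * integral  # nu_k * P(Y_i = y | xi_i = k)
        loglik += math.log(lik_i)
    return loglik
```

As a sanity check, if every item has \(a_j = d_j = \delta_{jk} = 0\), each response has probability 1/2 in every class, so the function returns approximately \(NJ\log(1/2)\). For long tests, working on the log scale inside the product would avoid underflow.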

1.3 Model Identifiability

The current model suffers from two sources of unidentifiability. The first comes from not knowing the anchor items, and it occurs even if we condition on the latent class \(\xi _i\), i.e., when the model becomes a MIMIC model for manifest DIF analysis. That is, for any constants \(c_1, \ldots , c_K\), if we simultaneously replace \(\mu _k\) by \(\mu _k + c_k\) and \(\delta _{jk}\) by \(\delta _{jk} - a_j c_k\) for all \(j = 1, \ldots , J\) and \(k = 1, \ldots , K\), the likelihood function value \(L(\Delta )\) remains the same, because the shift \(a_j c_k\) in \(a_j \theta _i\) is exactly offset by the change in \(\delta _{jk}\). This source of unidentifiability can be avoided when one or more anchor items are known a priori. Suppose that item j is known to be DIF-free. Under the proposed model framework, this implies the constraints \(\delta _{jk} = 0\) for all k. Consequently, the aforementioned transformation no longer applies, as any \(c_k \ne 0\) would violate the zero constraints on the anchor items. As discussed in Sect. 1.4, this source of unidentifiability can be handled by a regularised estimation approach under a sparsity assumption that many DIF parameters \(\delta _{jk}\) are zero.
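The first source of unidentifiability can be verified numerically. The Python sketch below computes the probability of one response pattern within class \(k = 1\), then shifts \(\mu_1\) by an arbitrary constant \(c\) and each \(\delta_{j1}\) by \(-a_j c\), and compares the two probabilities. The helper `pattern_prob`, the grid-based integration and all parameter values are hypothetical, chosen only for illustration.

```python
import math


def pattern_prob(y, a, d, delta_k, mu_k, var_k, n_grid=121, half_width=6.0):
    """P(Y_i = y | xi_i = k): the 2-PL likelihood integrated over theta ~ N(mu_k, var_k).

    delta_k[j] is the DIF effect of class k on item j. The integral is a Riemann
    sum over standardised nodes z, with theta = mu_k + sd * z.
    """
    sd = math.sqrt(var_k)
    step = 2.0 * half_width / (n_grid - 1)
    total = 0.0
    for g in range(n_grid):
        z = -half_width + g * step
        theta = mu_k + sd * z
        p_y = 1.0
        for a_j, d_j, del_j, y_j in zip(a, d, delta_k, y):
            p1 = 1.0 / (1.0 + math.exp(-(a_j * theta + d_j + del_j)))
            p_y *= p1 if y_j == 1 else 1.0 - p1
        total += p_y * math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi) * step
    return total


# Hypothetical parameters for class k = 1; items 1 and 3 are DIF-free.
a = [1.0, 0.7, 1.4, 1.1]
d = [0.2, -0.5, 0.9, 0.0]
delta_1 = [0.0, 0.6, 0.0, -0.3]
mu_1, var_1, c = 0.5, 1.3, 0.75
shifted_delta = [del_j - a_j * c for del_j, a_j in zip(delta_1, a)]

y = [1, 0, 1, 1]
p_orig = pattern_prob(y, a, d, delta_1, mu_1, var_1)
p_shift = pattern_prob(y, a, d, shifted_delta, mu_1 + c, var_1)
print(p_orig, p_shift)  # identical up to floating-point rounding
print(shifted_delta)    # the formerly DIF-free items now carry non-zero DIF effects
```

After the shift, items 1 and 3 acquire non-zero DIF effects, which illustrates why fixing even one item as an anchor (\(\delta_{j1} = 0\)) rules out every shift except \(c = 0\).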

The second source of unidentifiability is the label-switching phenomenon of latent class models (Redner and Walker, 1984), as a result of the exchangeability of the latent classes. Under the current model, the baseline class is uniquely identified through the constraints \(\delta _{j0} =0\), \(\mu _0 = 0\) and \(\sigma _0^2 = 1\). However, the remaining latent classes are exchangeable, and the likelihood function value remains the same when switching their labels. While label switching often causes trouble when inferring a latent class model with Bayesian Markov chain Monte Carlo (MCMC) algorithms (Stephens, 2000), it is not a problem for the estimator to be discussed in Sect. 1.4. Our estimator is proposed under the frequentist setting and computed by an EM algorithm. When the EM algorithm converges, it will reach one of the equivalent solutions in the sense of label switching.

1.4 Sparsity, Model Selection and Estimation

As explained above, the latent trait cannot be identified without anchor items. In that case, additional assumptions are needed to solve the DIF analysis problem. Specifically, we adopt the sparsity assumption, i.e., that many DIF parameters \(\delta _{jk}\) are zero. This is a common assumption in the manifest DIF literature (see, e.g., Magis et al., 2015; Tutz and Schauberger, 2015; Belzak and Bauer, 2020; Bauer et al., 2020; Schauberger and Mair, 2020). In many applications, for example, the detection of aberrant behaviour or parameter drift in educational testing, the number of DIF items is small, suggesting that this assumption is reasonable.

Under the above sparsity assumption, we propose an \(L_1\)-regularised estimator to simultaneously estimate the unknown model parameters and learn the sparsity pattern of the DIF-effect parameters. This estimator takes the form

\[ {\tilde{\Delta }}^{(\lambda )} = \mathop {\arg \min }_{\Delta } \left\{ -\log L(\Delta ) + \lambda \sum _{j=1}^J \sum _{k=1}^K |\delta _{jk}| \right\} , \tag{3} \]

where \(L(\Delta )\) is the marginal likelihood function defined in (2), and \(\lambda > 0\) is a tuning parameter. The computation of this estimator will be discussed in Sect. 2. Similar to Lasso regression (Tibshirani, 1996), the \(L_1\) regularisation term \(\lambda \sum _{j=1}^J \sum _{k=1}^K|\delta _{jk}|\) in (3) tends to shrink some of the DIF-effect parameters to exactly zero. In the extreme case where \(\lambda \) goes to infinity, all the DIF-effect parameters are shrunk to zero. Under suitable regularity conditions and when \(\lambda \) is chosen properly (i.e., \(\lambda \) grows with the sample size N at a suitable rate), the \(L_1\)-regularised estimator achieves both estimation and selection consistency (Zhao and Yu, 2006; van de Geer, 2008). In that case, the latent trait is consistently identified, and the consistently selected sparsity pattern of the estimated DIF-effect parameters can be used to classify items as DIF and non-DIF items.

We select the tuning parameter \(\lambda \) based on the Bayesian Information Criterion (BIC; Schwarz, 1978) using a grid search. Specifically, we consider a pre-specified set of grid points for \(\lambda \), denoted by \(\lambda _1, \ldots , \lambda _{M}\). For each \(\lambda _m\), we solve the optimisation problem (3) and obtain \({\tilde{\Delta }}^{(\lambda _m)}\). To compute the BIC value for the model encoded by \({\tilde{\Delta }}^{(\lambda _m)}\), we compute a constrained maximum likelihood estimator, fixing \(\delta _{jk}\) to zero whenever \({\tilde{\delta }}_{jk}^{(\lambda _{m})} = 0\). That is,

\[ {\hat{\Delta }}^{(\lambda _m)} = \mathop {\arg \max }_{\Delta } \log L(\Delta ) \quad \text {subject to } \delta _{jk} = 0 \text { for all } (j, k) \text { such that } {\tilde{\delta }}_{jk}^{(\lambda _m)} = 0. \tag{4} \]

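The BIC corresponding to \(\lambda _m\) is calculated as

\[ \text {BIC}(\lambda _m) = -2 \log L({\hat{\Delta }}^{(\lambda _m)}) + \log (N)\, \text {Card}({\hat{\Delta }}^{(\lambda _m)}), \]

where \(\text{ Card }({\hat{\Delta }}^{(\lambda _m)})\) denotes the number of free parameters in \({\hat{\Delta }}^{(\lambda _m)}\), which equals the total number of free parameters in \(\Delta \) minus the number of corresponding zero constraints. The tuning parameter is then selected as

\[ {\hat{\lambda }} = \mathop {\arg \min }_{\lambda \in \{\lambda _1, \ldots , \lambda _M\}} \text {BIC}(\lambda ). \]
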
Thanks to the asymptotic properties of the BIC (Shao, 1997), the true model will be consistently selected, provided that it is recovered by the \(L_1\)-regularised estimator for at least one of the candidate tuning parameters.
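Once the constrained fits (4) are available, the BIC-based choice of \(\lambda\) is simple bookkeeping. The Python sketch below counts free parameters for the 2-PL mixture model and returns the BIC-minimising grid value. The parameter count (\(2J + JK + 3K\) before removing zero constraints, where \(\nu\) contributes \(K\) free parameters because its entries sum to one) is our own reading of the model above, and the function names are hypothetical.

```python
import math


def n_free_params(J, K, delta_tilde):
    """Card(hat Delta) for the 2-PL mixture model with K + 1 latent classes.

    Free parameters (our own count): a_j and d_j (2J), delta_jk for k >= 1 (JK),
    nu_1, ..., nu_K (K, because the class probabilities sum to one) and mu_k,
    sigma_k^2 for k >= 1 (2K). Every delta_jk set exactly to zero by the L1 fit
    becomes a zero constraint and is subtracted.
    delta_tilde[j] = (delta_j1, ..., delta_jK) from the L1-regularised fit.
    """
    n_zero = sum(1 for row in delta_tilde for v in row if v == 0.0)
    return 2 * J + J * K + 3 * K - n_zero


def bic(loglik, card, N):
    """BIC = -2 log L + log(N) * Card."""
    return -2.0 * loglik + math.log(N) * card


def select_lambda(fits, N):
    """fits maps each grid value lambda_m to (log L at the constrained MLE, Card).

    Returns the grid value with the smallest BIC.
    """
    return min(fits, key=lambda lam: bic(fits[lam][0], fits[lam][1], N))
```

Note that the penalised fit is used only to decide which \(\delta_{jk}\) are zero; the log-likelihood plugged into the BIC comes from the unpenalised constrained estimator, which avoids the shrinkage bias of the \(L_1\) fit.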

We use the constrained maximum likelihood estimator \({\hat{\Delta }}^{({{\hat{\lambda }}})}\) as the final estimator of the selected model and declare an item j to be a DIF item if \(\Vert ({{\hat{\delta }}}_{j1}^{({{\hat{\lambda }}})},..., {{\hat{\delta }}}_{jK}^{({{\hat{\lambda }}})})^\top \Vert \ne 0\). We summarise this procedure in Algorithm 1 below.

1.5 Other Inference Problems

The latent class membership can be inferred by an empirical Bayes procedure, i.e., by the maximum a posteriori (MAP) estimate under the estimated model. For the MAP estimator, the goal is to find the most probable latent class k for each respondent i, given their observed responses \({\varvec{y}}_i\). This is done by maximising the posterior probability that \(\xi _i = k\), conditional on the observed responses \({\varvec{y}}_i\):

\[ {\hat{\xi }}_i = \mathop {\arg \max }_{k \in \{0, 1, \ldots , K\}} P(\xi _i = k \mid {\textbf{Y}}_i = {\varvec{y}}_i). \]

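These posterior probabilities are obtained by Bayes’ rule,

\[ P(\xi _i = k \mid {\textbf{Y}}_i = {\varvec{y}}_i) = \frac{\nu _k \int \prod _{j=1}^J P(Y_{ij} = y_{ij} \mid \theta , \xi _i = k)\, \phi (\theta \vert \mu _k, \sigma _k^2)\, d\theta }{\sum _{l=0}^K \nu _l \int \prod _{j=1}^J P(Y_{ij} = y_{ij} \mid \theta , \xi _i = l)\, \phi (\theta \vert \mu _l, \sigma _l^2)\, d\theta }, \]

evaluated at the estimated model parameters.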
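The posterior class probabilities and the MAP rule can be sketched in Python as follows. This is a minimal illustration that assumes the parameters have already been estimated and integrates out \(\theta\) with a simple grid over standardised values; the functions `class_posterior` and `map_class` are hypothetical names.

```python
import math


def class_posterior(y, a, d, delta, nu, mu, var, n_grid=121, half_width=6.0):
    """Posterior class probabilities P(xi_i = k | Y_i = y), k = 0..K.

    delta[j][k] is the DIF effect of class k on item j (delta[j][0] = 0).
    theta ~ N(mu_k, var_k) is integrated out with a Riemann sum over
    standardised nodes z in [-half_width, half_width].
    """
    step = 2.0 * half_width / (n_grid - 1)
    joint = []
    for k in range(len(nu)):
        sd = math.sqrt(var[k])
        marginal = 0.0
        for g in range(n_grid):
            z = -half_width + g * step
            theta = mu[k] + sd * z
            p_y = 1.0
            for j, y_j in enumerate(y):
                p1 = 1.0 / (1.0 + math.exp(-(a[j] * theta + d[j] + delta[j][k])))
                p_y *= p1 if y_j == 1 else 1.0 - p1
            marginal += p_y * math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi) * step
        joint.append(nu[k] * marginal)  # nu_k * P(Y_i = y | xi_i = k)
    total = sum(joint)                  # P(Y_i = y), the Bayes-rule denominator
    return [v / total for v in joint]


def map_class(posterior):
    """MAP estimate: the class with the largest posterior probability."""
    return max(range(len(posterior)), key=lambda k: posterior[k])
```

When no item has DIF and the classes share the same ability distribution, the responses carry no information about class membership and the posterior equals the prior \(\nu\); the MAP rule then simply picks the largest class.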

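Lastly, the number of latent classes, i.e., the choice of K, can be determined using the BIC. That is, we solve the optimisation problem in (3) for different values of K, yielding \({\hat{\Delta }}^{(K)}\). We then compute the BIC as

\[ \text {BIC}(K) = -2 \log L({\hat{\Delta }}^{(K)}) + \log (N)\, \text {Card}({\hat{\Delta }}^{(K)}), \]

and select the K that yields the smallest BIC:

\[ {\hat{K}} = \mathop {\arg \min }_{K} \text {BIC}(K). \]
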
1.6 Extensions

The proposed model can be extended in several ways to accommodate different data types and more than one latent factor. To make this clear, our model can be written in the general form

\[ P(Y_{ij} = 1 \mid \theta _i, \xi _i) = f\big (g(\theta _i, \beta _j) + \delta _{j \xi _i}\big ). \tag{9} \]

The function g determines the parametrisation of the person and item parameters, denoted by \(\theta \) and \(\beta \), respectively. If, for example, the Rasch model (Rasch, 1960) is adopted, \(\beta _j = (a, d_j)\), where \(a_j=a\) for all \(j=1, \ldots , J\),

\[ g(\theta _i, \beta _j) = a \theta _i + d_j, \]

and \(f(x) = \exp (x)/(1+\exp (x))\).

It is also possible to consider link functions other than the logistic function considered in this paper, such as the probit link:

\[ f(x) = \Phi (x) = \int _{-\infty }^{x} \frac{1}{\sqrt{2\pi }} \exp (-t^2/2)\, dt. \]

DIF analysis using multidimensional IRT models with unknown anchor items has recently been considered by Wang et al. (2023). As in the unidimensional case, to our knowledge no existing method handles situations where both the groups and the anchor items are unknown. Our proposed framework can, however, be extended to such situations. Consider the extension of (9) where each respondent, in addition to the latent class membership \(\xi _i\), is represented by an L-dimensional latent vector \(\varvec{\theta }_i = (\theta _{i1}, \ldots , \theta _{iL})^\top \). Each item is represented by an intercept parameter \(d_j\) and L loading parameters \( {\varvec{a}}_j = (a_{j1}, \ldots , a_{jL})^\top \). This extension can be expressed as a multidimensional 2-PL model with an added DIF component, i.e.,

\[ P(Y_{ij} = 1 \mid \varvec{\theta }_i, \xi _i) = \frac{\exp ({\varvec{a}}_j^\top \varvec{\theta }_i + d_j + \delta _{j \xi _i})}{1 + \exp ({\varvec{a}}_j^\top \varvec{\theta }_i + d_j + \delta _{j \xi _i})}. \]

The proposed modelling framework can also be extended to accommodate ordinal data. Denoting the response categories of \(Y_{ij}\) by \(c = 1, \ldots , C\), such a model can, using the logistic link, be expressed as

The model without the DIF parameter is known as the proportional odds model (Samejima, 1969). Note the negative sign in front of the slope parameter so that if \(a_j\) is positive, increasing \(\theta _i\) will increase the probability of higher-numbered levels of \(Y_{ij}\).

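Lastly, we mention the possibility of extending the model to accommodate DIF effects in the discrimination parameter, known as non-uniform DIF. To consider such a case, we introduce a DIF-effect parameter for \(a_j\), analogous to the one for \(d_j\). We denote the DIF effect on the discrimination parameter by \(\alpha _{j \xi _i}\). Since \(\xi _i=0\) is treated as the reference group, we set \(\alpha _{j0}=0\) for all \(j=1,\ldots , J\). In this case, the modified 2-PL model with a logit link, which accounts for DIF in both \(a_j\) and \(d_j\), can be written as

\[ P(Y_{ij} = 1 \mid \theta _i, \xi _i) = \frac{\exp \{(a_j + \alpha _{j \xi _i}) \theta _i + d_j + \delta _{j \xi _i}\}}{1 + \exp \{(a_j + \alpha _{j \xi _i}) \theta _i + d_j + \delta _{j \xi _i}\}}. \]

To include non-uniform DIF in the \(L_1\)-regularised estimator, the penalty term needs to be modified to penalise both the \(\alpha _{jk}\) and the \(\delta _{jk}\) terms. The modified estimator is given by

\[ {\tilde{\Delta }}^{(\lambda )} = \mathop {\arg \min }_{\Delta } \left\{ -\log L(\Delta ) + \lambda \sum _{j=1}^J \sum _{k=1}^K \left( |\alpha _{jk}| + |\delta _{jk}| \right) \right\} . \]
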
2 Computation

Optimisation problems (3) and (4) are solved using the EM algorithm (Dempster et al., 1977; Bock and Aitkin, 1981). An EM algorithm is an iterative algorithm that alternates between an Expectation (E) step and a Maximisation (M) step. Optimisation problem (4) involves maximising the marginal likelihood function of a regular latent variable model and can thus be solved by a standard EM algorithm, so its details are omitted here. However, optimisation problem (3) involves a non-smooth \(L_1\) term. Consequently, the M-step cannot be carried out using a standard gradient-based numerical solver, such as the Newton-Raphson algorithm. We develop an efficient proximal-gradient-based EM algorithm that uses a proximal gradient update (Parikh and Boyd, 2014) to handle the non-smooth optimisation problem in the M-step. In what follows, we describe this algorithm using the 2-PL model as the baseline IRT model, noting that the algorithm easily extends to other baseline IRT models.

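Suppose that t iterations of the algorithm have been run and let \(\Delta ^{(t)}\) be the current parameter value. In the E-step of the next iteration, we construct a local approximation of the negative objective function at \(\Delta ^{(t)}\) in the form of

\[ Q(\Delta \mid \Delta ^{(t)}) = \sum _{i=1}^N {\mathbb {E}}\left[ \log \nu _{\xi _i} + \log \phi (\theta _i \vert \mu _{\xi _i}, \sigma ^2_{\xi _i}) + \sum _{j=1}^J \log P(Y_{ij} \mid \theta _i, \xi _i) \,\middle \vert \, {\textbf{Y}}_i, \Delta ^{(t)}\right] - \lambda \sum _{j=1}^J \sum _{k=1}^K |\delta _{jk}|. \tag{12} \]
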
We note that the expectation in (12) is with respect to the conditional distribution of the latent variables \((\theta _i, \xi _i)\) given \({\textbf{Y}}_i\), evaluated at the current parameters \(\Delta ^{(t)}\).
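With \(\theta\) discretised, the E-step reduces to a table of posterior weights over latent classes and quadrature nodes for each respondent, and any expectation in (12) becomes a weighted sum. The Python sketch below assumes a fixed grid of \(\theta\) nodes shared by all classes, in the spirit of Bock and Aitkin (1981); the grid, the node masses and the names `THETA_GRID`, `e_step_weights` and `posterior_expectation` are hypothetical choices for illustration.

```python
import math

# Common grid of theta nodes shared by all classes (an assumption of this sketch).
THETA_GRID = [-6.0 + 0.1 * g for g in range(121)]


def e_step_weights(y, a, d, delta, nu, mu, var):
    """Joint posterior weights w[k][g] of (xi_i = k, theta_i = THETA_GRID[g]).

    Each class-specific N(mu_k, var_k) prior is discretised on THETA_GRID, with
    node masses proportional to the normal density and normalised to sum to one.
    The returned weights sum to one over (k, g).
    """
    w = []
    for k in range(len(nu)):
        dens = [math.exp(-0.5 * (t - mu[k]) ** 2 / var[k]) for t in THETA_GRID]
        mass = sum(dens)
        row = []
        for t, dens_g in zip(THETA_GRID, dens):
            lik = 1.0
            for j, y_j in enumerate(y):
                p1 = 1.0 / (1.0 + math.exp(-(a[j] * t + d[j] + delta[j][k])))
                lik *= p1 if y_j == 1 else 1.0 - p1
            row.append(nu[k] * (dens_g / mass) * lik)  # prior mass times likelihood
        w.append(row)
    total = sum(sum(row) for row in w)
    return [[v / total for v in row] for row in w]


def posterior_expectation(w, h):
    """Approximate E[h(theta_i, xi_i) | Y_i, Delta^(t)] from the E-step weights."""
    return sum(w[k][g] * h(t, k) for k in range(len(w)) for g, t in enumerate(THETA_GRID))
```

For instance, `posterior_expectation(w, lambda t, k: 1.0 if k == 1 else 0.0)` gives the posterior probability of class 1, and substituting a log-density for `h` gives the corresponding term of (12).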

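In the M-step, we find \(\Delta ^{(t+1)}\) such that

\[ Q(\Delta ^{(t+1)} \mid \Delta ^{(t)}) \ge Q(\Delta ^{(t)} \mid \Delta ^{(t)}), \]

or equivalently,

\[ -Q(\Delta ^{(t+1)} \mid \Delta ^{(t)}) \le -Q(\Delta ^{(t)} \mid \Delta ^{(t)}). \]

By Jensen’s inequality, this guarantees that the objective function of (3) does not increase, i.e.,

\[ -\log L(\Delta ^{(t+1)}) + \lambda \sum _{j=1}^J \sum _{k=1}^K |\delta _{jk}^{(t+1)}| \le -\log L(\Delta ^{(t)}) + \lambda \sum _{j=1}^J \sum _{k=1}^K |\delta _{jk}^{(t)}|. \]
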
More specifically, we write \( \Delta = (\Delta _1^\top , \Delta _2^\top )^\top , \) where \(\Delta _1 = (\nu _0, \ldots , \nu _K)^\top \) and \(\Delta _2\) contains the remaining parameters. Note that \(-Q(\Delta \mid \Delta ^{(t)})\) in (12) can be decomposed as the sum of a smooth function

\[ D_t(\Delta _1) = -\sum _{i=1}^N {\mathbb {E}}\left[ \log \nu _{\xi _i} \,\middle \vert \, {\textbf{Y}}_i, \Delta ^{(t)}\right] , \]

a smooth function \(F_t(\Delta _2)\), defined as

\[ F_t(\Delta _2) = -\sum _{i=1}^N {\mathbb {E}}\left[ \log \phi (\theta _i \vert \mu _{\xi _i}, \sigma ^2_{\xi _i}) + \sum _{j=1}^J \log P(Y_{ij} \mid \theta _i, \xi _i) \,\middle \vert \, {\textbf{Y}}_i, \Delta ^{(t)}\right] , \]

and a non-smooth function \( G(\Delta _2) = \lambda \sum _{j=1}^J\sum _{k=1}^K |\delta _{jk}|. \) We note that \(\Delta _1^{(t+1)}\) can be obtained by solving the following constrained optimisation problem

\[ \Delta _1^{(t+1)} = \mathop {\arg \min }_{\Delta _1} D_t(\Delta _1) \quad \text {subject to } \nu _k \ge 0 \text { for all } k \text { and } \sum _{k=0}^K \nu _k = 1. \]

Using the method of Lagrange multipliers, this optimisation problem has a closed-form solution; see the Appendix for details.
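For reference, writing \(r_{ik} = P(\xi_i = k \mid \mathbf{Y}_i, \Delta^{(t)})\), we have \(D_t(\Delta_1) = -\sum_i \sum_k r_{ik} \log \nu_k\); setting the derivative of the Lagrangian \(D_t(\Delta_1) + \eta(\sum_k \nu_k - 1)\) to zero gives \(\nu_k = \sum_i r_{ik} / \eta\), and the constraint forces \(\eta = N\). This is the familiar mixture-weight update \(\nu_k^{(t+1)} = N^{-1}\sum_i r_{ik}\), which we derived independently and assume matches the Appendix. A short Python sketch, with the hypothetical function name `update_nu`:

```python
def update_nu(post):
    """Closed-form minimiser of D_t(Delta_1) over the probability simplex.

    post[i][k] = P(xi_i = k | Y_i, Delta^(t)) from the E-step. The Lagrangian
    condition -sum_i post[i][k] / nu_k + eta = 0 gives nu_k = sum_i post[i][k] / eta,
    and the constraint sum_k nu_k = 1 forces eta = N.
    """
    n = len(post)
    n_classes = len(post[0])
    return [sum(p[k] for p in post) / n for k in range(n_classes)]
```

Non-negativity holds automatically because the posterior probabilities are non-negative, so the inequality constraints are never active.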

We then find \(\Delta _2^{(t+1)}\) such that \(F_t(\Delta _2^{(t+1)}) + G(\Delta _2^{(t+1)}) < F_t(\Delta _2^{(t)}) + G(\Delta _2^{(t)})\). Consider the optimisation problem \(\min F_t(\Delta _2) + G(\Delta _2)\). Due to the non-smoothness of G, this objective function is not differentiable everywhere, so standard gradient-based methods are not applicable. We find \(\Delta _2^{(t+1)}\) using a proximal gradient method (Parikh and Boyd, 2014). Denote the dimension of \(\Delta _2\) by d, the number of free parameters in \(\Delta _2\), which is determined by the choice of baseline model (\(2J + JK + 2K\) for the 2-PL model, counting \(a_j\), \(d_j\), \(\delta _{jk}\), and \(\mu _k\), \(\sigma _k^2\) for \(k = 1, \ldots , K\)). We define the proximal operator of G with step size \(\alpha \) as

\[ \text {prox}_{\alpha G}(\Delta _2) = \mathop {\arg \min }_{{\textbf{z}} \in {\mathbb {R}}^d} \left\{ G({\textbf{z}}) + \frac{1}{2\alpha } \Vert {\textbf{z}} - \Delta _2 \Vert _2^2 \right\} . \]

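We update \(\Delta _2\) by

\[ \Delta _2^{(t+1)} = \text {prox}_{\alpha G}\left( \Delta _2^{(t)} - \alpha \nabla F_t(\Delta _2^{(t)})\right) , \tag{13} \]

where \(\nabla F_t(\Delta _2^{(t)})\) denotes the gradient of \(F_t\) at \(\Delta _2^{(t)}\), and \(\alpha \) is a step size. According to Parikh and Boyd (2014, Sect. 4.2), for a sufficiently small step size \(\alpha \), it is guaranteed that \(F_t(\Delta _2^{(t+1)}) + G(\Delta _2^{(t+1)}) < F_t(\Delta _2^{(t)}) + G(\Delta _2^{(t)})\), unless \(\Delta _2^{(t)}\) is already a stationary point. We therefore select \(\alpha \) by a line search procedure, whose details are provided in the Appendix. This proximal operator has a closed-form solution. Specifically, each DIF-effect parameter \(\delta _{jk}\) is updated by solving

\[ \delta _{jk}^{(t+1)} = \mathop {\arg \min }_{\delta \in {\mathbb {R}}} \left\{ \lambda |\delta | + \frac{1}{2\alpha } \left( \delta - \delta _{jk}^{(t)} + \alpha \frac{\partial F_t(\Delta _2^{(t)})}{\partial \delta _{jk}} \right) ^2 \right\} , \]

which has a closed-form solution given by soft-thresholding (Hastie et al., 2009, Chap. 3): writing \(x = \delta _{jk}^{(t)} - \alpha \, \partial F_t(\Delta _2^{(t)})/\partial \delta _{jk}\), the update is \(\delta _{jk}^{(t+1)} = \text {sign}(x) \max (|x| - \alpha \lambda , 0)\). The remaining parameters in \(\Delta _2\) do not appear in the non-smooth function \(G(\Delta _2)\), and thus the update (13) reduces to a plain gradient descent update for them. For example,

\[ a_j^{(t+1)} = a_j^{(t)} - \alpha \frac{\partial F_t(\Delta _2^{(t)})}{\partial a_j}. \]
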
Further details of the proximal gradient update can be found in the Appendix. We summarise the main steps of this EM algorithm in Algorithm 2 below.

An EM algorithm for solving (3).

Remark. While differentiable approximations, such as the smoothed Lasso, allow the use of gradient-based methods, they come with their own challenges. Introducing a smoothing parameter makes the method sensitive to its choice, and the approximation may not be close enough to the original problem, especially when the smoothing parameter is not sufficiently small. We therefore opt for an approach based on non-smooth optimisation: tackling the non-smoothness directly ensures that we do not compromise the sparsity of the solution, which is critical for our analysis. We have designed our algorithm around the EM algorithm, which has well-established convergence properties. Specifically, each EM iteration does not decrease the (penalised) log-likelihood, and under mild regularity conditions, the iterates converge to a stationary point, typically a local maximum. While we acknowledge that there are potential pitfalls in the convergence of non-smooth optimisation algorithms, we have implemented strategies to ensure the stability of our algorithm, such as adaptive step sizes and convergence checks.
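The proximal gradient update for \(\Delta_2\) can be sketched generically. The Python function below performs one proximal gradient step for a smooth function `F` plus an \(L_1\) penalty on a chosen subset of coordinates (playing the roles of \(F_t\), the \(\delta_{jk}\) and \(G\)): penalised coordinates are soft-thresholded and the others take a plain gradient step. The step size is chosen by the standard backtracking rule for proximal gradient methods described by Parikh and Boyd (2014, Sect. 4.2); the authors' own line search is given in their Appendix and may differ, and all names here are hypothetical.

```python
def soft_threshold(x, tau):
    """Proximal operator of tau * |x|: sign(x) * max(|x| - tau, 0)."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def prox_grad_step(x, F, grad_F, lam, l1_idx, alpha=1.0, shrink=0.5, max_halvings=50):
    """One proximal gradient step for F(x) + lam * sum(|x[i]| for i in l1_idx).

    Penalised coordinates are soft-thresholded at alpha * lam; the others take a
    plain gradient step. alpha is reduced by backtracking until the standard
    sufficient-decrease condition
        F(z) <= F(x) + grad_F(x)^T (z - x) + ||z - x||^2 / (2 * alpha)
    holds. Returns the new point and the accepted step size.
    """
    g = grad_F(x)
    fx = F(x)
    for _ in range(max_halvings):
        z = [xi - alpha * gi for xi, gi in zip(x, g)]
        z = [soft_threshold(zi, alpha * lam) if i in l1_idx else zi for i, zi in enumerate(z)]
        diff = [zi - xi for zi, xi in zip(z, x)]
        bound = (fx + sum(gi * di for gi, di in zip(g, diff))
                 + sum(di * di for di in diff) / (2.0 * alpha))
        if F(z) <= bound:
            return z, alpha
        alpha *= shrink
    return x, alpha  # no acceptable step found: keep the current point
```

For example, repeatedly applying this step to \(\tfrac12\{(x_1 - 3)^2 + (x_2 - 0.2)^2\} + 0.5|x_2|\) drives \(x_1\) to 3 and sets \(x_2\) exactly to zero, illustrating how small DIF effects are shrunk to exact zeros rather than merely to small values. In the EM algorithm, `F` would be \(F_t\), rebuilt from the E-step weights at every iteration.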

3 Simulation Study

In this simulation study, we evaluate the performance of the proposed method, treating the number of latent focal classes K as fixed. We consider settings with two and three latent classes (i.e., \(K = 1\) and \(K = 2\)). For each simulation scenario, we run \(B = 100\) independent replications.

3.1 Settings

We examine 16 scenarios under the two-group setting and 8 scenarios under the three-group setting, considering \(J \in \{25, 50\}\), \(N \in \{500, 1000\}\), and \(K \in \{1, 2\}\), where \(K=1\) corresponds to the two-group setting and \(K=2\) to the three-group setting. In the two-group setting, the class proportions are varied, with the reference group comprising 50%, 80%, or 90% of the respondents. In the three-group setting, 50% of the respondents belong to the reference group, 30% to the second latent class, and 20% to the third latent class. The number of DIF items is set to 10 for all cases with \(J=25\) and to 20 for all cases with \(J=50\), so the proportion of DIF items is 40% in both cases.

The intercept parameters \(d_j\) are generated from the \(\text {Uniform}(-2, 2)\) distribution and the slope parameters \(a_j\) from the \(\text {Uniform}(0.5, 1.5)\) distribution. In the two-group setting, we consider three cases for the class proportions, where \(\nu = (0.1, 0.9)\), \(\nu = (0.2, 0.8)\), and \(\nu = (0.5, 0.5)\), respectively. The latent construct \(\theta \) within each latent class \(\xi \) follows

and for the three-group case, we additionally let \(\theta _i|\xi = 2 \sim {\mathcal {N}}(1, 1.2)\). The non-zero DIF effect parameters are generated as \(\delta _{jk} \sim \text {Uniform}(0.5, 1.5)\), and the DIF effects of the remaining items are set to 0. For the three-group case, the DIF effects for the second and third latent classes are generated from \(\text {Uniform}(0.5, 1)\) and \(\text {Uniform}(1, 1.5)\) distributions, respectively. In the two-group setting, the DIF items are positioned at the beginning of the scale (items 1-10 when \(J=25\) and 1-20 when \(J=50\)). In the three-group setting, the DIF items are positioned at the end of the scale (items 16-25 when \(J=25\) and 31-50 when \(J=50\)). The true model parameters are given in the supplementary material.
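A data-generating sketch for one replication of the two-group setting is given below. It assumes that the DIF effect shifts the intercept of a 2PL model, i.e., that the probability of a correct response is \(\text{logit}^{-1}(d_j + a_j\theta_i + \delta_{j\xi_i})\) with \(\delta_{j0} = 0\) for the reference class. This model form, the Bernoulli draw of class membership, and the focal-class mean and standard deviation (`mu_focal` and `sd_focal`, hypothetical placeholders to be set to the values used in the study) are assumptions for illustration.

```python
import math
import random
from typing import Dict, List, Tuple


def simulate_two_group(
    n: int,
    n_items: int,
    n_dif: int,
    focal_prop: float,
    mu_focal: float = 0.0,  # hypothetical placeholder
    sd_focal: float = 1.0,  # hypothetical placeholder
    seed: int = 1,
) -> Tuple[List[List[int]], List[int], Dict[str, List[float]]]:
    """Simulate binary responses under an assumed 2PL model with intercept DIF.

    The first n_dif items carry DIF for the focal class (label 1);
    the reference class (label 0) has theta ~ N(0, 1) and no DIF.
    """
    rng = random.Random(seed)
    d = [rng.uniform(-2.0, 2.0) for _ in range(n_items)]  # intercepts
    a = [rng.uniform(0.5, 1.5) for _ in range(n_items)]   # slopes
    delta = [rng.uniform(0.5, 1.5) if j < n_dif else 0.0 for j in range(n_items)]
    Y: List[List[int]] = []
    xi: List[int] = []
    for _ in range(n):
        k = 1 if rng.random() < focal_prop else 0
        theta = rng.gauss(mu_focal, sd_focal) if k == 1 else rng.gauss(0.0, 1.0)
        row: List[int] = []
        for j in range(n_items):
            eta = d[j] + a[j] * theta + (delta[j] if k == 1 else 0.0)
            prob = 1.0 / (1.0 + math.exp(-eta))
            row.append(int(rng.random() < prob))
        Y.append(row)
        xi.append(k)
    return Y, xi, {"a": a, "d": d, "delta": delta}


# J = 25 items, 10 of which are DIF items; about 20% of respondents in the focal class.
Y, xi, params = simulate_two_group(n=500, n_items=25, n_dif=10, focal_prop=0.2)
```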

3.2 Evaluation Criteria

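Detection of DIF items. We check whether the DIF items can be accurately detected by the proposed method. In this analysis, we assume that the number of latent classes K is known. We calculate the average true positive rate (TPR) as

\[\overline{\text {TPR}} = \frac{1}{B}\sum _{b=1}^{B} \frac{\sum _{j=1}^{J}\sum _{k=1}^{K} \mathbb {1}\{{\hat{\delta }}^{(b)}_{jk} \ne 0,\ \delta ^{*}_{jk} \ne 0\}}{\sum _{j=1}^{J}\sum _{k=1}^{K} \mathbb {1}\{\delta ^{*}_{jk} \ne 0\}},\]

where \( \{ {\hat{\delta }}^{(b)}_{jk} \}_{J \times K} \) is the estimated DIF effect matrix in the b-th replication and \(\{\delta _{jk}^{\!{*}}\}_{J\times K}\) denotes the true DIF effect matrix. Similarly, we calculate the average false positive rate (\(\overline{\text {FPR}}\)), i.e., the rate at which zero elements are incorrectly estimated as non-zero:

\[\overline{\text {FPR}} = \frac{1}{B}\sum _{b=1}^{B} \frac{\sum _{j=1}^{J}\sum _{k=1}^{K} \mathbb {1}\{{\hat{\delta }}^{(b)}_{jk} \ne 0,\ \delta ^{*}_{jk} = 0\}}{\sum _{j=1}^{J}\sum _{k=1}^{K} \mathbb {1}\{\delta ^{*}_{jk} = 0\}}.\]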
To better evaluate the performance of the proposed method in detecting DIF items, we compare the FPR and TPR with the results under an oracle setting where the group membership \(\xi _i\) is known while anchor items are unknown. Under this oracle setting, we solve the following regularised estimation problem as in Bauer et al. (2020):

where

and \(\Xi \) includes the parameters in \(\Delta \) except for those in \(\varvec{\nu }\). The tuning parameter \(\lambda \) is chosen by BIC. The FPR and TPR for detecting DIF items are also calculated under this setting and compared with those from the proposed method where \(\xi _i\)s are unknown.
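The two detection criteria can be computed as in the following sketch, which takes the estimated DIF matrices from the B replications and the true DIF matrix. It assumes, as with Lasso-type estimates, that an element is flagged exactly when its estimated DIF effect is non-zero; the function name and the toy matrices are illustrative.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def average_tpr_fpr(estimates: List[Matrix], truth: Matrix) -> Tuple[float, float]:
    """Average TPR and FPR over replications for J x K DIF-effect matrices.

    An element counts as flagged when its estimate is exactly non-zero.
    Assumes the true matrix has at least one zero and one non-zero element.
    """
    tprs, fprs = [], []
    for est in estimates:
        tp = fn = fp = tn = 0
        for est_row, true_row in zip(est, truth):
            for e, t in zip(est_row, true_row):
                flagged = e != 0.0
                if t != 0.0:
                    tp += int(flagged)
                    fn += int(not flagged)
                else:
                    fp += int(flagged)
                    tn += int(not flagged)
        tprs.append(tp / (tp + fn))
        fprs.append(fp / (fp + tn))
    return sum(tprs) / len(tprs), sum(fprs) / len(fprs)


# Toy example with J = 4 items, K = 1 focal class, and B = 2 replications.
truth = [[1.0], [0.8], [0.0], [0.0]]
estimates = [
    [[0.9], [0.0], [0.0], [0.1]],  # one DIF item missed, one false flag
    [[1.1], [0.7], [0.0], [0.0]],  # perfect detection
]
tpr, fpr = average_tpr_fpr(estimates, truth)  # tpr = 0.75, fpr = 0.25
```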

Classification of respondents. We then consider the classification of respondents based on the maximum a posteriori (MAP) estimate. Again, we assume that the number of latent focal classes K is known. We calculate the average classification error rate, i.e., the proportion of misclassified respondents, averaged over the replications:

where

and \({\hat{P}}({\hat{\xi }} = k | {\textbf{y}}_i)\) is the posterior probability of category k given in (6). Under the setting with \(K = 2\), a label-switching problem arises when calculating the classification error. We resolve it by post-hoc relabelling based on the estimated \(\nu _1\) and \(\nu _2\), using the ordering information that class 2 is larger than class 1, i.e., \(\nu _2 > \nu _1\). We also calculate the MAP classification error under the true model and compare it with the classification error of the proposed method.
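The MAP classification error and the post-hoc relabelling can be sketched as follows. The sketch assumes that the reference class (label 0) is identified, so that label switching can only occur among the focal classes; these are relabelled by matching the ranks of their estimated proportions to a known ranking of the true proportions. The helper names and the toy posterior matrix are hypothetical.

```python
from typing import Dict, List, Sequence


def map_classify(posterior: Sequence[Sequence[float]]) -> List[int]:
    """Assign each respondent to the class with the largest posterior probability."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in posterior]


def relabel_focal(labels: Sequence[int], nu_hat: Sequence[float],
                  true_order: Sequence[int]) -> List[int]:
    """Permute focal labels 1..K so that estimated class sizes follow true_order.

    true_order lists the true focal labels from the smallest to the largest class.
    """
    est_order = sorted(range(1, len(nu_hat)), key=lambda k: nu_hat[k])
    mapping: Dict[int, int] = {0: 0}  # the reference class is never relabelled
    mapping.update(zip(est_order, true_order))
    return [mapping[k] for k in labels]


def error_rate(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Proportion of respondents whose predicted class differs from the true class."""
    return sum(p != t for p, t in zip(pred, truth)) / len(truth)


# Toy example with K = 2 focal classes; true class 1 is the smaller one.
posterior = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.1, 0.7], [0.1, 0.3, 0.6]]
truth = [0, 2, 1, 1]
nu_hat = [0.5, 0.3, 0.2]
raw = map_classify(posterior)                          # [0, 1, 2, 2]
fixed = relabel_focal(raw, nu_hat, true_order=[1, 2])  # [0, 2, 1, 1]
```

Without relabelling, three of the four toy respondents appear misclassified (error 0.75); after matching the class sizes, the error is 0.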

Parameter estimation accuracy. We further evaluate the accuracy of our final estimator \({\hat{\Delta }}^{({{\hat{\lambda }}})}\), assuming that K is known. For each unknown parameter, the root mean square error (RMSE) and the absolute bias are calculated based on the 100 replications.
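For a single parameter, the two accuracy measures can be computed from its B estimates as below. We take the absolute bias to be the absolute difference between the mean estimate and the true value; this reading, like the toy numbers and the function name, is an assumption.

```python
import math
from typing import Sequence, Tuple


def abs_bias_and_rmse(estimates: Sequence[float], true_value: float) -> Tuple[float, float]:
    """Absolute bias |mean(estimates) - true| and RMSE over replications."""
    b = len(estimates)
    mean_est = sum(estimates) / b
    abs_bias = abs(mean_est - true_value)
    rmse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / b)
    return abs_bias, rmse


# Four hypothetical replications of an estimate whose true value is 1.0.
abs_bias, rmse = abs_bias_and_rmse([1.0, 1.2, 0.8, 1.4], 1.0)
```

Here the absolute bias is about 0.1 and the RMSE is \(\sqrt{0.06} \approx 0.245\), consistent with the decomposition RMSE\(^2\) = bias\(^2\) + variance (with divisor B): \(0.06 = 0.1^2 + 0.05\).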

3.3 Results

The classification performance in the simulation study is presented in Tables 1 and 2, which display the respondent and item classification accuracy for the two-group and three-group settings, respectively. First, the classification error is predominantly influenced by the number of items, with more items resulting in better respondent classification. This observation is consistent with previous literature on DIF detection using IRT models (Chen et al., 2022; Kuha and Moustaki, 2015).

For respondent classification in the two-group setting, Table 1 shows that the classification error is small in all simulation scenarios and always lower than that of a naïve classifier that assigns all respondents to the reference group, whose expected error rate equals the proportion of respondents in the focal group. This holds even when the focal group consists of only 10% of the respondents. As the focal-group proportion (denoted by \(\pi \) in Table 1) increases, the improvement of the proposed method over the naïve classifier becomes more pronounced. The AUC values also increase with the focal-group proportion, although the increments are small. Table 1 furthermore shows that the classification accuracy is only slightly worse when using the estimated parameters than when using the true model parameters.

For item classification in the two-group setting, the true positive rates are very high across all scenarios, and no DIF-free item is falsely flagged as a DIF item. This suggests that the proposed framework is effective in identifying DIF items while avoiding false positives for various numbers of items and focal-group proportions. The oracle estimator performs only slightly better, i.e., knowing the group membership of the respondents leads to only a marginal improvement in item classification accuracy.

In the three-group setting, Table 2 shows that the classification error rates are generally higher than in the two-group case, which is expected given the increased complexity of the problem when more than two groups are involved. However, the classification error is always clearly smaller than that of the naïve classifier that assigns all respondents to the reference group, and this improvement is particularly clear in the scenarios with \(J=50\). The AUC values for classes 2 and 3 are within reasonable ranges, suggesting that the proposed method can adequately classify respondents even in more challenging settings. For item classification in the three-group setting, the TPRs are high for both class 2 and class 3 items, and no DIF-free item is misclassified, i.e., the FPR is zero. This further supports the effectiveness of the proposed framework in identifying DIF items across different group configurations.

Figures 2 and 3 show the RMSEs of all item parameter estimates in the two-group setting (\(K=1\)). The RMSEs are small for all estimates across the items, with the exception of one or two items that show larger RMSEs for the estimated DIF parameter. The RMSEs of the estimated DIF parameters for the DIF-free items are zero, because the proposed procedure estimates these parameters as exactly zero in every replication. The number of items and the proportion of respondents in the focal group have essentially no influence on the RMSE for the configurations considered in this simulation study.

Figure 4 shows the RMSEs for the three-group scenario. In this case, each DIF item has two DIF effects, one for each focal group. For the first focal group, the true DIF effects are drawn from \(\text {Uniform}(0.5, 1)\), and for the second focal group from \(\text {Uniform}(1, 1.5)\). The greater difficulty of estimating smaller DIF effects is reflected in the larger RMSEs for the first focal group.

In Table 3 and Table 4, the absolute bias and RMSE under the 2-group setting, averaged over the number of items of the same type, are displayed for both sample sizes and every focal group proportion \(\pi \) considered. The absolute bias and RMSE are small for all parameters, and differences are very small between different values of \(\pi \) and different values of N. The most notable difference is seen for the estimate of the focal group proportion \(\pi \), where the bias and RMSE clearly decrease when the sample size increases. We also see that the DIF effect parameter is estimated with only a small bias and RMSE. In Table 5, the absolute bias and RMSE are shown for the 3-group setting. As in the two-group setting, the bias and RMSE values are small under all settings.

In summary, the simulation results presented in Tables 1–5 and Figs. 2–4 demonstrate the potential of the proposed framework for DIF analysis with unknown anchor items and comparison groups. The framework performs well in terms of respondent and item classification accuracy across a range of scenarios and recovers the parameters well, suggesting its applicability in various real-world settings.

4 Real Data Analysis

To illustrate the proposed method, we analyse data from a mathematics test administered at a Midwestern university in the United States. This data set has been analysed in both Bolt et al. (2002) and De Boeck et al. (2011). The data contain responses from 3000 examinees to 26 binary-scored items. The original dataset contains two test forms, with 8 items in common (Note 1). Six of the common items are of particular interest as they are positioned at the end of the test. Bolt et al. (2002) hypothesised the existence of two latent classes: a speeded class that answered end-of-test items with insufficient time, and a non-speeded class. They identified speeded items using a two-form design. Specifically, they examined common items across two test administrations, where the common items were placed at the end of the test in one form and earlier in the other form. By comparing the difficulty estimates for the end-of-test common items with those from the other form, they were able to quantify the DIF effect. Our goal is to detect the DIF items, i.e., the speeded items, and to classify respondents into latent classes. Our procedure requires only one form and does not use any information from the second form. A similar analysis, based on simulated data and a Rasch mixture model, was conducted in Robitzsch (2022).

We start by fitting the proposed model to the data for different values of K, the number of latent focal classes. The BIC for the model with \(K=0\), i.e., no latent classes other than the reference group, equals 117,552.2, the BIC for \(K=1\) equals 92,300.1, and the BIC for \(K=2\) equals 92,522.8. Since \(K=1\) gives the smallest BIC, we proceed with this model, i.e., two latent classes. This aligns with the two-group model considered in Bolt et al. (2002). The proposed EM algorithm took 37.04, 144.76, and 277.39 s to converge (Note 2) for the 1-, 2-, and 3-class solutions, respectively (Note 3). The proposed model classifies 25.8% of the respondents into the second latent class. If we interpret the two classes as a speeded and a non-speeded class, about 26% of the respondents belong to the speeded class. The estimated mean ability in the speeded class equals \(-\)0.351, with an estimated standard deviation of 1.075. Since the reference group (the non-speeded class) has its ability mean and standard deviation fixed at 0 and 1, respectively, our results indicate that the speeded class has lower ability on average than the non-speeded class. These findings align closely with the results presented in Bolt et al. (2002).
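The choice of K above amounts to picking the model with the smallest BIC. The sketch below does this with the BIC values reported above; the helper `bic` shows the usual definition \(-2\log L + p\log N\) (Schwarz, 1978), where, as an assumption for regularised fits, p would count the free parameters with only the non-zero DIF effects included.

```python
import math
from typing import Dict


def bic(loglik: float, n_params: int, n_obs: int) -> float:
    """Schwarz's BIC; smaller values indicate a better trade-off of fit and size."""
    return -2.0 * loglik + n_params * math.log(n_obs)


# BIC values reported for the mathematics test data, indexed by K.
reported: Dict[int, float] = {0: 117552.2, 1: 92300.1, 2: 92522.8}
best_k = min(reported, key=reported.get)  # K = 1, i.e., two latent classes
```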

Table 6 gives the estimated item parameters for the educational test data: the estimated item discrimination and easiness parameters, \({\hat{a}}\) and \({\hat{d}}\), respectively, together with the estimated DIF effect \({\hat{\delta }}\). Common items are marked with asterisks. For the majority of the items, the DIF effects are estimated to be zero, indicating that no DIF between the latent classes is detected for these items. However, items 20-26 have non-zero estimated DIF effects, suggesting that these items function differently for the two latent classes. Among these, items 20, 21, 22, 23, and 24 are also common items, which may require further investigation to ensure fair assessment across test administrations. Since the DIF effects for these end-of-test items are all negative, these items are more difficult for the second latent class. This class could therefore consist of respondents who ran out of time before they could properly answer these items, which is known as a speededness effect. As a result, the item difficulty is inflated, which could bias subsequent analyses. The non-zero DIF effects for these end-of-test items highlight the need to scrutinize them more closely and potentially revise the test administration to minimize the impact of speededness. For instance, increasing the time allocated for the test or distributing the items more evenly throughout the test could help alleviate the speededness effect and reduce bias in the assessment.

5 Concluding Remarks

In this paper, we presented a comprehensive framework for DIF analysis that overcomes several limitations of existing methods. Our approach can handle the situation in which both anchor items and comparison groups are unknown, a setting commonly encountered in real-world applications. The use of latent classes allows us to model heterogeneity among the respondents. In this sense, our approach relates to an exploratory dimensionality analysis in which there is, in addition to the primary latent dimension, a second dimension that is treated as unknown. In our empirical analysis, this additional dimension is interpreted as a speededness effect. In addition to modeling the additional latent dimension(s), the proposed regularised estimator enables us to identify DIF items and quantify their effect on the intercept parameter of the model. We also propose an efficient EM algorithm for estimating the model parameters (Note 4). One merit of our framework is its flexibility. Although we focus on the 2-PL model as the baseline model, our approach can be extended to accommodate other widely used IRT models, such as the Rasch model and the proportional odds model. The baseline model can also be a multidimensional IRT model, as shown in Sect. 1.6. Our framework can furthermore accommodate more than two comparison groups, allowing DIF effects to vary between the groups. Lastly, as shown in Sect. 2.6, the proposed method can be extended to detect non-uniform DIF. This flexibility makes our framework applicable to a wide range of contexts.

Although our approach shows promising results, several limitations remain to be addressed in future research. For example, we do not provide confidence intervals for the DIF effect parameters, which would help practitioners and researchers interpret the magnitude and significance of the DIF effects. In Chen et al. (2023), where the comparison groups are known but the anchor items are unknown, the distribution of \({\hat{\delta }}_{jk} - \delta _{jk}\) is approximated by Monte Carlo simulation to yield valid statistical inference. In principle, this procedure also applies to our setting. In addition, we have not linked the latent classes to covariates, as done in, for example, Vermunt (2010) and Vermunt and Magidson (2021). Doing so would give researchers insight into the characteristics of the different classes and the factors that may contribute to DIF. This would enhance the interpretability of the results and help identify potential sources of DIF that could be addressed in the development of assessment instruments. Future research could address this limitation by integrating covariates within a structural equation modeling (SEM) framework, which would enable simultaneous modelling of the measurement model (i.e., the IRT model) and the structural model (i.e., the relationships between latent variables and covariates). Incorporating covariates in this way would not only improve the interpretability of the results but could also provide a more comprehensive understanding of the relationships between the items, latent traits, and potential sources of DIF. Our framework could also be extended to accommodate non-uniform DIF, i.e., DIF in the slope parameter, as in Wang et al. (2023), who consider a multidimensional IRT model with known comparison groups and unknown anchor items.

In this study, we focus on the Lasso penalty for its simplicity, computational efficiency, and well-documented ability to perform both variable selection and regularisation. The Lasso penalty is convex, which makes the resulting optimisation problem easier to solve computationally than with non-convex penalties such as the SCAD (Fan and Li, 2001) and the Minimax Concave Penalty (MCP; Zhang, 2010). We acknowledge that the Lasso penalty can introduce bias into parameter estimates. However, in our proposed method, we use the Lasso only for model selection. Because we subsequently refit the selected model, the final estimator is asymptotically unbiased, provided that the Lasso-based model selection is consistent (Zhao et al., 2021). Alternative penalties, including the adaptive Lasso (Zou, 2006), SCAD, and MCP, have their own merits. However, they also come with challenges, especially in terms of computational complexity and algorithm stability. We therefore argue that the Lasso penalty is a suitable choice for the proposed model and its identifying assumptions. In future work, it would be interesting to compare the performance of estimators with different penalty functions under the current latent DIF setting.
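Why refitting removes the shrinkage bias can be seen in the simplest possible case, a Gaussian sequence model in which each DIF effect is observed directly with noise. There, the Lasso estimate is the soft-thresholded observation, which shrinks every selected effect towards zero by \(\lambda\), while refitting on the selected support returns the unshrunken values. This toy setting and the function names are illustrative stand-ins, not the latent DIF model itself.

```python
from typing import List, Sequence


def lasso_sequence(z: Sequence[float], lam: float) -> List[float]:
    """Lasso in the Gaussian sequence model: argmin 0.5*||x - z||^2 + lam*||x||_1."""
    return [max(abs(v) - lam, 0.0) * (1.0 if v > 0 else -1.0) for v in z]


def refit_on_support(z: Sequence[float], lasso_est: Sequence[float]) -> List[float]:
    """Unpenalised least squares restricted to the Lasso-selected coordinates."""
    return [v if e != 0.0 else 0.0 for v, e in zip(z, lasso_est)]


z = [2.0, 0.1, -1.5]                # noisy observations of three DIF effects
lasso = lasso_sequence(z, lam=0.5)  # [1.5, 0.0, -1.0]: selected effects shrunk by 0.5
refit = refit_on_support(z, lasso)  # [2.0, 0.0, -1.5]: shrinkage removed
```

The refit keeps the Lasso's selection (the small second effect stays at zero) but drops its shrinkage, which is the mechanism behind the asymptotic unbiasedness argument when selection is consistent.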

Our proposed framework provides a powerful tool for DIF analysis with unknown anchor items and comparison groups, and it has the potential to inform the development of fair and unbiased assessments. Future research can build upon our approach by addressing its limitations and exploring other applications. The framework could be particularly beneficial in educational assessment, where identifying and addressing DIF is critical to ensure that tests fairly measure students' abilities across heterogeneous populations, thereby promoting equal access to educational opportunities. It could also be used in employment selection, where unbiased assessment instruments are crucial for creating a diverse and inclusive workforce and for complying with legal requirements related to fairness in employment practices (Ployhart and Holtz, 2008; Hough et al., 2001). Another application is psychological evaluation, where accurate identification of DIF can help improve diagnostic tools and treatment recommendations, leading to better outcomes for individuals from diverse backgrounds (Teresi et al., 2021). With further refinement, the framework could contribute to fairer assessment practices in these and other domains, ultimately benefiting a wide range of stakeholders.

Notes

1. The original dataset contains 36 items, with 12 common items. Following the procedure in Bolt et al. (2002), we analyse only items 1-18 and 29-36. Items 19-26 in this analysis therefore correspond to positions 29-36 in the original test.

2. In our implementation of the proposed EM algorithm, we stop the iterations when the increase in the log-likelihood is smaller than \(10^{-4}\).

3. CPU configuration: 11th Gen Intel(R) Core(TM) i7-1165G7 2.80GHz 3200MHz.

4. The R code for the proposed method is available from https://github.com/gabrieltwallin/LatentDIF/

References

Bauer, D. J., Belzak, W. C., & Cole, V. T. (2020). Simplifying the assessment of measurement invariance over multiple background variables: Using regularized moderated nonlinear factor analysis to detect differential item functioning. Structural Equation Modeling: A Multidisciplinary Journal, 27(1), 43–55.

Bechger, T. M., & Maris, G. (2015). A statistical test for differential item pair functioning. Psychometrika, 80(2), 317–340.

Belzak, W., & Bauer, D. J. (2020). Improving the assessment of measurement invariance: Using regularization to select anchor items and identify differential item functioning. Psychological Methods, 25(6), 673–690.

Bennink, M., Croon, M. A., Keuning, J., & Vermunt, J. K. (2014). Measuring student ability, classifying schools, and detecting item bias at school level, based on student-level dichotomous items. Journal of Educational and Behavioral Statistics, 39(3), 180–202.

Bhattacharya, S., & McNicholas, P. D. (2014). A lasso-penalized BIC for mixture model selection. Advances in Data Analysis and Classification, 8(1), 45–61.

Birnbaum, A. (1968). Some latent trait models and their use in inferring an examinee’s ability. In F. M. Lord & M. R. Novick (Eds.), Statistical theories of mental test scores (pp. 397–472). Addison-Wesley.

Bock, R. D., & Aitkin, M. (1981). Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika, 46(4), 443–459.

Bolt, D. M., Cohen, A. S., & Wollack, J. A. (2002). Item parameter estimation under conditions of test speededness: Application of a mixture Rasch model with ordinal constraints. Journal of Educational Measurement, 39(4), 331–348.

Bouveyron, C., & Brunet-Saumard, C. (2014). Model-based clustering of high-dimensional data: A review. Computational Statistics & Data Analysis, 71, 52–78.

Candell, G. L., & Drasgow, F. (1988). An iterative procedure for linking metrics and assessing item bias in item response theory. Applied Psychological Measurement, 12(3), 253–260.

Cao, M., Tay, L., & Liu, Y. (2017). A Monte Carlo study of an iterative Wald test procedure for DIF analysis. Educational and Psychological Measurement, 77(1), 104–118.

Chen, Y., Li, C., Ouyang, J., & Xu, G. (2023). DIF statistical inference and detection without knowing anchoring items [To appear]. Psychometrika.

Chen, Y., Lu, Y., & Moustaki, I. (2022). Detection of two-way outliers in multivariate data and application to cheating detection in educational tests. The Annals of Applied Statistics, 16(3), 1718–1746.

Cho, S.-J., & Cohen, A. S. (2010). A multilevel mixture IRT model with an application to DIF. Journal of Educational and Behavioral Statistics, 35(3), 336–370.

Cho, S.-J., Suh, Y., & Lee, W.-Y. (2016). An NCME instructional module on latent DIF analysis using mixture item response models. Educational Measurement: Issues and Practice, 35(1), 48–61.

Cizek, G. J., & Wollack, J. A. (2017). Handbook of quantitative methods for detecting cheating on tests. Routledge.

Clauser, B., Mazor, K., & Hambleton, R. K. (1993). The effects of purification of matching criterion on the identification of DIF using the Mantel-Haenszel procedure. Applied Measurement in Education, 6(4), 269–279.

Cohen, A. S., & Bolt, D. M. (2005). A mixture model analysis of differential item functioning. Journal of Educational Measurement, 42(2), 133–148.

De Boeck, P., Cho, S.-J., & Wilson, M. (2011). Explanatory secondary dimension modeling of latent differential item functioning. Applied Psychological Measurement, 35(8), 583–603.

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1), 1–22.

Dorans, N. J., & Kulick, E. (1986). Demonstrating the utility of the standardization approach to assessing unexpected differential item performance on the scholastic aptitude test. Journal of Educational Measurement, 23(4), 355–368.

Drabinová, A., & Martinková, P. (2017). Detection of differential item functioning with nonlinear regression: A Non-IRT approach accounting for guessing. Journal of Educational Measurement, 54(4), 498–517.

Fan, J., & Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456), 1348–1360.

Fidalgo, A., Mellenbergh, G. J., & Muñiz, J. (2000). Effects of amount of DIF, test length, and purification type on robustness and power of Mantel-Haenszel procedures. Methods of Psychological Research Online, 5(3), 43–53.

Finch, W. H., & Hernández Finch, M. E. (2013). Investigation of specific learning disability and testing accommodations based differential item functioning using a multilevel multidimensional mixture item response theory model. Educational and Psychological Measurement, 73(6), 973–993.

Hastie, T., Tibshirani, R., & Friedman, J. H. (2009). The elements of statistical learning: Data mining, inference, and prediction. Springer.

Holland, P. W., & Thayer, D. T. (1986). Differential item functioning and the Mantel-Haenszel procedure. ETS Research Report Series, 1986(2), i–24.

Holland, P. W., & Wainer, H. (1993). Differential item functioning. Psychology Press.

Hough, L. M., Oswald, F. L., & Ployhart, R. E. (2001). Determinants, detection and amelioration of adverse impact in personnel selection procedures: Issues, evidence and lessons learned. International Journal of Selection and Assessment, 9(1–2), 152–194.

Jöreskog, K. G., & Goldberger, A. S. (1975). Estimation of a model with multiple indicators and multiple causes of a single latent variable. Journal of the American Statistical Association, 70(351a), 631–639.

Kim, S.-H., Cohen, A. S., & Park, T.-H. (1995). Detection of differential item functioning in multiple groups. Journal of Educational Measurement, 32(3), 261–276.

Kopf, J., Zeileis, A., & Strobl, C. (2015). Anchor selection strategies for DIF analysis: Review, assessment, and new approaches. Educational and Psychological Measurement, 75(1), 22–56.

Kopf, J., Zeileis, A., & Strobl, C. (2015). A framework for anchor methods and an iterative forward approach for DIF detection. Applied Psychological Measurement, 39(2), 83–103.

Kuha, J., & Moustaki, I. (2015). Nonequivalence of measurement in latent variable modeling of multigroup data: A sensitivity analysis. Psychological Methods, 20(4), 523–536.

Lord, F. M. (1977). A study of item bias, using item characteristic curve theory. In Y. H. Poortinga (Ed.), Basic problems in cross-cultural psychology (pp. 19–29). Swets & Zeitlinger Publishers.

Lord, F. M. (1980). Applications of item response theory to practical testing problems. Routledge.

Luo, R., Tsai, C.-L., & Wang, H. (2008). On mixture regression shrinkage and selection via the MR-Lasso. International Journal of Pure and Applied Mathematics, 46, 403–414.

Magis, D., Tuerlinckx, F., & De Boeck, P. (2015). Detection of differential item functioning using the lasso approach. Journal of Educational and Behavioral Statistics, 40(2), 111–135.

Millsap, R. E. (2012). Statistical approaches to measurement invariance. Routledge.

Muthén, B., & Lehman, J. (1985). Multiple group IRT modeling: Applications to item bias analysis. Journal of Educational Statistics, 10(2), 133–142.

Muthén, B. O. (1989). Latent variable modeling in heterogeneous populations. Psychometrika, 54(4), 557–585.

O’Leary, L. S., & Smith, R. W. (2016). Detecting candidate preknowledge and compromised content using differential person and item functioning. In Handbook of quantitative methods for detecting cheating on tests (pp. 151–163). Routledge.

Parikh, N., & Boyd, S. (2014). Proximal algorithms. Foundations and Trends® in Optimization, 1(3), 127–239.

Ployhart, R. E., & Holtz, B. C. (2008). The diversity-validity dilemma: Strategies for reducing racioethnic and sex subgroup differences and adverse impact in selection. Personnel Psychology, 61(1), 153–172.

Rasch, G. (1960). Studies in mathematical psychology: I. Probabilistic models for some intelligence and attainment tests. Nielsen & Lydiche.

Redner, R. A., & Walker, H. F. (1984). Mixture densities, maximum likelihood and the EM algorithm. SIAM Review, 26(2), 195–239.

Reeve, B. B., & Teresi, J. A. (2016). Overview to the two-part series: Measurement equivalence of the Patient Reported Outcomes Measurement Information System® (PROMIS®) short forms. Psychological Test and Assessment Modeling, 58(1), 31–35.

Robitzsch, A. (2022). Regularized mixture Rasch model. Information, 13(11), 534.

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika Monograph Supplement.

Schauberger, G., & Mair, P. (2020). A regularization approach for the detection of differential item functioning in generalized partial credit models. Behavior Research Methods, 52(1), 279–294.

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464.

Shao, J. (1997). An asymptotic theory for linear model selection. Statistica Sinica, 7(2), 221–242.

Shealy, R., & Stout, W. (1993). A model-based standardization approach that separates true bias/DIF from group ability differences and detects test bias/DTF as well as item bias/DIF. Psychometrika, 58(2), 159–194.

Steenkamp, J.-B.E., & Baumgartner, H. (1998). Assessing measurement invariance in cross-national consumer research. Journal of Consumer Research, 25(1), 78–90.

Stephens, M. (2000). Dealing with label switching in mixture models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 62(4), 795–809.

Swaminathan, H., & Rogers, H. J. (1990). Detecting differential item functioning using logistic regression procedures. Journal of Educational Measurement, 27(4), 361–370.

Tay, L., Huang, Q., & Vermunt, J. K. (2016). Item response theory with covariates (IRT-C) assessing item recovery and differential item functioning for the three-parameter logistic model. Educational and Psychological Measurement, 76(1), 22–42.

Tay, L., Meade, A. W., & Cao, M. (2015). An overview and practical guide to IRT measurement equivalence analysis. Organizational Research Methods, 18(1), 3–46.

Teresi, J. A., & Reeve, B. B. (2016). Epilogue to the two-part series: Measurement equivalence of the Patient Reported Outcomes Measurement Information System® (PROMIS®) short forms. Psychological Test and Assessment Modeling, 58(2), 423–433.

Teresi, J. A., Wang, C., Kleinman, M., Jones, R. N., & Weiss, D. J. (2021). Differential item functioning analyses of the Patient-Reported Outcomes Measurement Information System (PROMIS®) measures: Methods, challenges, advances, and future directions. Psychometrika, 86(3), 674–711.

Thissen, D., Steinberg, L., & Wainer, H. (1988). Use of item response theory in the study of group differences in trace lines. In H. Wainer & H. I. Braun (Eds.), Test validity (pp. 147–172). Lawrence Erlbaum Associates Inc.

Thissen, D., & Steinberg, L. (1988). Data analysis using item response theory. Psychological Bulletin, 104(3), 385–395.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological), 58(1), 267–288.

Tutz, G., & Schauberger, G. (2015). A penalty approach to differential item functioning in Rasch models. Psychometrika, 80(1), 21–43.

van de Geer, S. A. (2008). High-dimensional generalized linear models and the lasso. The Annals of Statistics, 36(2), 614–645.

Vermunt, J. K. (2010). Latent class modeling with covariates: Two improved three-step approaches. Political Analysis, 18(4), 450–469.

Vermunt, J. K., & Magidson, J. (2021). How to perform three-step latent class analysis in the presence of measurement non-invariance or differential item functioning. Structural Equation Modeling: A Multidisciplinary Journal, 28(3), 356–364.

Von Davier, M., Xu, X., & Carstensen, C. H. (2011). Measuring growth in a longitudinal large-scale assessment with a general latent variable model. Psychometrika, 76(2), 318–336.

Wainer, H. (2012). An item response theory model for test bias and differential test functioning. In Differential item functioning (pp. 202–244). Routledge.

Wang, C., Zhu, R., & Xu, G. (2023). Using lasso and adaptive lasso to identify DIF in multidimensional 2PL models. Multivariate Behavioral Research, 58(2), 387–407.

Wang, W.-C., Shih, C.-L., & Yang, C.-C. (2009). The MIMIC method with scale purification for detecting differential item functioning. Educational and Psychological Measurement, 69(5), 713–731.

Wang, W.-C., & Su, Y.-H. (2004). Effects of average signed area between two item characteristic curves and test purification procedures on the DIF detection via the Mantel-Haenszel method. Applied Measurement in Education, 17(2), 113–144.

Wang, W.-C., & Yeh, Y.-L. (2003). Effects of anchor item methods on differential item functioning detection with the likelihood ratio test. Applied Psychological Measurement, 27(6), 479–498.

Woods, C. M. (2009). Evaluation of MIMIC-model methods for DIF testing with comparison to two-group analysis. Multivariate Behavioral Research, 44(1), 1–27.

Woods, C. M., Cai, L., & Wang, M. (2013). The Langer-improved Wald test for DIF testing with multiple groups: Evaluation and comparison to two-group IRT. Educational and Psychological Measurement, 73(3), 532–547.

Woods, C. M., & Grimm, K. J. (2011). Testing for nonuniform differential item functioning with multiple indicator multiple cause models. Applied Psychological Measurement, 35(5), 339–361.

Yuan, K.-H., Liu, H., & Han, Y. (2021). Differential item functioning analysis without a priori information on anchor items: QQ plots and graphical test. Psychometrika, 86(2), 345–377.

Zhang, C.-H. (2010). Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics, 38(2), 894–942.

Zhao, P., & Yu, B. (2006). On model selection consistency of Lasso. The Journal of Machine Learning Research, 7, 2541–2563.

Zhao, S., Witten, D., & Shojaie, A. (2021). In defense of the indefensible: A very naive approach to high-dimensional inference. Statistical Science, 36(4), 562–577.

Zou, H. (2006). The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101(476), 1418–1429.

Zwick, R., Thayer, D. T., & Lewis, C. (2000). Using loss functions for DIF detection: An empirical Bayes approach. Journal of Educational and Behavioral Statistics, 25(2), 225–247.

Funding

Gabriel Wallin is supported by the Swedish Research Council (Vetenskapsrådet, Grant No. 2020-06484).


Ethics declarations

Conflict of interest statement

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

This article is accompanied by electronic supplementary material.

Appendices

Gradients for the Proximal Gradient Descent

In the M-step of the proposed EM algorithm, we use proximal gradient descent, which requires the gradients of the smooth (unpenalised) part of the objective function. For the proposed model, these gradients can be expressed as

where \(\eta_j\) denotes generically any of the item parameters \((a_j, d_j, \delta_{jk})\) and \(\varphi_{ij} = d_j + a_j\theta_i + \delta_{j\xi_i}\) is the linear predictor for respondent \(i\) on item \(j\).
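As a sketch under the assumption of a logistic item response function, \(P(y_{ij} = 1 \mid \theta_i, \xi_i) = \sigma(\varphi_{ij})\) with \(\sigma(x) = 1/(1 + e^{-x})\), the gradient of the smooth part of the objective has the familiar "residual times derivative of the linear predictor" form shown below. Here \(Q\) denotes the expected complete-data log-likelihood and \(\mathbb{E}^{(t)}\) the expectation over the posterior of \((\theta_i, \xi_i)\) given \({\textbf{Y}}_i\) and \(\Delta^{(t)}\); both symbols are our notation rather than the paper's, and the \(L_1\) penalty is handled separately by the proximal step.

\[
\frac{\partial Q}{\partial \eta_j}
 = \sum_{i=1}^{N} \mathbb{E}^{(t)}\!\left[\bigl(y_{ij} - \sigma(\varphi_{ij})\bigr)\,
   \frac{\partial \varphi_{ij}}{\partial \eta_j}\right],
\qquad
\frac{\partial \varphi_{ij}}{\partial d_j} = 1,\quad
\frac{\partial \varphi_{ij}}{\partial a_j} = \theta_i,\quad
\frac{\partial \varphi_{ij}}{\partial \delta_{jk}} = \mathbb{1}\{\xi_i = k\}.
\]

The factor \(y_{ij} - \sigma(\varphi_{ij})\) is the derivative of the Bernoulli log-likelihood \(y_{ij}\log\sigma(\varphi_{ij}) + (1 - y_{ij})\log\{1 - \sigma(\varphi_{ij})\}\) with respect to \(\varphi_{ij}\).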

As an example, we give the explicit expressions for the two-group case. We parametrise the latent construct of the focal group as \(\theta_2 = \mu_2 + \sigma_2 \theta_1\), where \(\mu_2\) and \(\sigma_2\) are the mean and standard deviation of the latent construct in the focal group and \(\theta_1 \sim {\mathcal {N}}(0,1)\) is the latent construct in the reference group. The linear predictor is then \(\varphi_{ij}^{(1)} = d_j + a_j \theta_{i,1}\) for the reference group and \(\varphi_{ij}^{(2)} = d_j + \delta_j + a_j \theta_{i,2}\) for the focal group. The partial derivatives in (17) are given by
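To make the two-group expressions concrete, the following Python sketch computes, for a single binary response, the derivatives of \(\log P(y_{ij} \mid \theta)\) with respect to \(d_j\), \(a_j\), \(\delta_j\), \(\mu_2\) and \(\sigma_2\). It assumes a logistic item response function and treats \(\theta_1\) as a fixed quadrature node; in the EM algorithm these per-response terms would be weighted by posterior probabilities and summed. The function name `two_group_item_gradient` is hypothetical and not taken from the paper.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def two_group_item_gradient(y, a, d, delta, theta1, group, mu2=0.0, sigma2=1.0):
    """Per-response gradient of log P(y | theta) under an assumed logistic 2PL.

    theta1 is the standardised latent value; in the focal group (group == 2)
    the latent construct is theta2 = mu2 + sigma2 * theta1 and the DIF effect
    delta shifts the intercept. Returns partial derivatives with respect to
    d, a, delta, mu2 and sigma2.
    """
    focal = 1.0 if group == 2 else 0.0
    theta = mu2 + sigma2 * theta1 if group == 2 else theta1
    phi = d + focal * delta + a * theta
    resid = y - sigmoid(phi)  # derivative of the Bernoulli log-likelihood w.r.t. phi
    return {
        "d": resid,                            # d phi / d d = 1
        "a": resid * theta,                    # d phi / d a = theta
        "delta": resid * focal,                # delta only enters the focal group
        "mu2": resid * a * focal,              # d phi / d mu2 = a (focal group)
        "sigma2": resid * a * theta1 * focal,  # d phi / d sigma2 = a * theta1
    }
```

A central finite-difference check of the log-likelihood is a quick way to confirm such hand-derived gradients before they are used inside the proximal updates.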

The Line Search Procedure

The implemented line search procedure selects a step size for the proximal gradient descent in the M-step of the proposed EM algorithm. Starting from an initial step size, it repeatedly reduces the step size by a factor of 2 until the resulting value of the objective function (i.e., the penalised log-likelihood) satisfies a specified tolerance condition. If the maximum number of iterations is reached without finding a satisfactory step size, the algorithm returns the current step size. The procedure is given in the following Line Search Algorithm.
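A minimal Python sketch of such a halving line search is given below. The exact tolerance condition is not spelled out in the text, so this sketch assumes one: the objective is minimised (e.g., the negative penalised log-likelihood), and a step is accepted when the objective at the candidate point does not exceed the current value by more than `tol`. The function name, the optional `prox` argument and the default values are hypothetical.

```python
def halving_line_search(objective, x, grad, prox=None, step0=1.0, tol=1e-8, max_iter=30):
    """Backtracking line search that halves the step size (illustrative sketch).

    Assumptions (hypothetical, not from the paper): `objective` is minimised;
    the candidate is the gradient step x - step * grad, optionally passed
    through a proximal map prox(v, step); a step is accepted when the
    objective at the candidate is at most the current objective plus `tol`.
    Works for floats or NumPy arrays.
    """
    f_current = objective(x)
    step = step0
    for _ in range(max_iter):
        candidate = x - step * grad
        if prox is not None:
            candidate = prox(candidate, step)
        if objective(candidate) <= f_current + tol:
            return step  # satisfactory step size found
        step /= 2.0  # reduce the step size by a factor of 2
    return step  # maximum number of iterations reached: return current step size
```

For example, with the steep objective \(50x^2\) at \(x = 1\) (gradient 100), the first six halvings overshoot and the search returns the step size \(2^{-6} = 0.015625\).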

The Soft-Thresholding Procedure

The soft-threshold function and the proximal gradient function are used to identify the DIF-free items, i.e., the anchor items, from the data: because soft-thresholding sets small DIF effects exactly to zero, items whose estimated DIF effects are all zero are classified as DIF-free. The soft-threshold function takes a vector \(x\) and a scalar \(\lambda\) as inputs and applies element-wise thresholding to \(x\). It sets elements with absolute values less than or equal to \(\lambda\) to zero, subtracts \(\lambda\) from elements greater than \(\lambda\), and adds \(\lambda\) to elements less than \(-\lambda\). The proximal gradient function takes the (estimated) DIF effect \(\delta\), its gradient \(\nabla_\delta\), a regularisation parameter \(\lambda\), and a step size \(\gamma\) as inputs. It calls the soft-threshold function with the updated vector \(\delta - \gamma \nabla_\delta\) and the threshold \(\lambda\gamma\), and returns the result as the updated DIF effect estimate. These algorithms are summarised below.
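The two functions described above can be sketched in a few lines of NumPy. This is an illustration, not the authors' code: the names `soft_threshold` and `proximal_gradient` are our own, and `grad_delta` is assumed to be the gradient of the smooth part of the objective being minimised, so that the update moves in the direction \(-\nabla_\delta\).

```python
import numpy as np


def soft_threshold(x, lam):
    """Element-wise soft-thresholding: sign(x) * max(|x| - lam, 0).

    Entries with |x| <= lam become exactly zero, entries above lam are
    shrunk by lam, and entries below -lam are increased by lam.
    """
    x = np.asarray(x, dtype=float)
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)


def proximal_gradient(delta, grad_delta, lam, step):
    """One proximal gradient update for L1-penalised DIF effects.

    Takes a gradient step of size `step`, then soft-thresholds with lam * step.
    """
    delta = np.asarray(delta, dtype=float)
    grad_delta = np.asarray(grad_delta, dtype=float)
    return soft_threshold(delta - step * grad_delta, lam * step)
```

For instance, `soft_threshold([-2.0, -0.5, 0.0, 0.3, 1.5], 0.5)` gives `[-1.5, 0, 0, 0, 1.0]` (up to the sign of zero), so only the two large effects survive.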

The Closed-Form Solution of the Latent Class Proportion

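Using the Lagrange multiplier method, we obtain

\[ \nu_k^{(t+1)} = \frac{1}{N}\sum_{i=1}^{N} \gamma_{ik}^{(t)}, \quad k = 0, 1, \dots, K, \]

where \(\gamma_{ik}^{(t)} = P(\xi_i = k \mid {\textbf{Y}}_i, \Delta^{(t)})\) is the posterior probability that respondent \(i\) belongs to latent class \(k\), given the data and the current parameter values \(\Delta^{(t)}\).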
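A minimal NumPy sketch of the E-step posterior \(\gamma_{ik}^{(t)}\) and the resulting closed-form update \(\nu_k^{(t+1)} = N^{-1}\sum_i \gamma_{ik}^{(t)}\) is given below. It assumes, hypothetically, that the per-class marginal log-likelihoods \(\log P({\textbf{Y}}_i \mid \xi_i = k)\) (with the latent construct already integrated out) are available as an \(N \times (K+1)\) array; the function names are ours.

```python
import numpy as np


def posterior_class_probs(log_lik, nu):
    """E-step: gamma[i, k] = P(xi_i = k | Y_i) via Bayes' rule.

    log_lik[i, k] is assumed to be log P(Y_i | xi_i = k) (hypothetical input);
    nu[k] is the current latent class proportion.
    """
    log_post = log_lik + np.log(nu)                  # unnormalised log posterior
    log_post -= log_post.max(axis=1, keepdims=True)  # stabilise before exponentiating
    gamma = np.exp(log_post)
    return gamma / gamma.sum(axis=1, keepdims=True)  # each row sums to 1


def update_class_proportions(gamma):
    """M-step: nu_k^{(t+1)} = (1 / N) * sum_i gamma[i, k]."""
    return gamma.mean(axis=0)
```

Because each row of `gamma` sums to one, the updated proportions automatically sum to one, mirroring the role of \(N\) in the denominator of the closed-form solution.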

We can see this from the following argument. Given that

The expectation can be expressed as

Thus, we have

where \(\gamma _{ik}^{(t)} = P(\xi _i = k \big \vert {\textbf{Y}}_i, \Delta ^{(t)})\).

Construct the Lagrangian function with the constraints:

where \(\alpha _k \ge 0\) for \(k = 0, 1, \dots , K\).

Now, take the partial derivative of the Lagrangian function with respect to \(\nu _k\):

Setting the partial derivatives to zero, we get

From the above equation, we have:

To find the values of \(\alpha _k\) and \(\beta \), we need to apply the constraint \(\sum _{k=0}^K \nu _k = 1\). Plugging in the expression for \(\nu _k^{(t+1)}\), we have

Since the constraint only involves the sum of the \(\nu _k^{(t+1)}\), we can eliminate the Lagrange multipliers \(\alpha _k\) by normalising the solution:

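Since \(\sum_{k=0}^K \gamma_{ik}^{(t)} = 1\) for all \(i = 1, 2, \dots, N\), the denominator \(\sum_{i=1}^N \sum_{k=0}^K \gamma_{ik}^{(t)}\) simplifies to the total number of respondents \(N\). Therefore, we obtain the closed-form solution

\[ \nu_k^{(t+1)} = \frac{1}{N}\sum_{i=1}^{N} \gamma_{ik}^{(t)}, \quad k = 0, 1, \dots, K. \]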
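For reference, the argument can be condensed as follows, assuming that the part of the expected complete-data log-likelihood that depends on the class proportions is \(\sum_{i}\sum_{k} \gamma_{ik}^{(t)} \log \nu_k\), with \(\beta\) the multiplier for the sum-to-one constraint and \(\alpha_k \ge 0\) the multipliers for the non-negativity constraints:

\[
\mathcal{L}(\nu, \alpha, \beta) = \sum_{i=1}^{N}\sum_{k=0}^{K} \gamma_{ik}^{(t)} \log \nu_k
 - \beta\Bigl(\sum_{k=0}^{K} \nu_k - 1\Bigr) + \sum_{k=0}^{K} \alpha_k \nu_k,
\qquad
\frac{\partial \mathcal{L}}{\partial \nu_k} = \frac{\sum_{i=1}^{N} \gamma_{ik}^{(t)}}{\nu_k} - \beta + \alpha_k = 0.
\]

Whenever \(\sum_i \gamma_{ik}^{(t)} > 0\), the maximiser has \(\nu_k > 0\), so complementary slackness (\(\alpha_k \nu_k = 0\)) gives \(\alpha_k = 0\) and \(\nu_k = \sum_i \gamma_{ik}^{(t)} / \beta\). Summing over \(k\) and using \(\sum_k \nu_k = 1\) yields \(\beta = \sum_i \sum_k \gamma_{ik}^{(t)} = N\), which recovers \(\nu_k^{(t+1)} = N^{-1} \sum_i \gamma_{ik}^{(t)}\).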

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wallin, G., Chen, Y. & Moustaki, I. DIF Analysis with Unknown Groups and Anchor Items. Psychometrika (2024). https://doi.org/10.1007/s11336-024-09948-7


DOI: https://doi.org/10.1007/s11336-024-09948-7