Abstract

This paper proposes a new boundary uncertainty-based estimation method that has significantly higher accuracy, scalability, and applicability than our previously proposed boundary uncertainty estimation method. In our previous work, we introduced a new classifier evaluation metric that we termed “boundary uncertainty.” The name “boundary uncertainty” comes from evaluating the classifier based solely on measuring the equality between class posterior probabilities along the classifier boundary; satisfaction of such equality can be described as “uncertainty” along the classifier boundary. We also introduced a method to estimate this new evaluation metric. By focusing solely on the classifier boundary to evaluate its uncertainty, boundary uncertainty defines an easier estimation target that can be accurately estimated based directly on a finite training set without using a validation set. Regardless of the dataset, boundary uncertainty is defined between 0 and 1, where 1 indicates whether probability estimation for the Bayes error is achieved. We call our previous boundary uncertainty estimation method “Proposal 1” in order to contrast it with the new method introduced in this paper, which we call “Proposal 2.” Using Proposal 1, we performed successful classifier evaluation on real-world data and supported it with theoretical analysis. However, Proposal 1 suffered from accuracy, scalability, and applicability limitations owing to the difficulty of finding the location of a classifier boundary in a multidimensional sample space. The novelty of Proposal 2 is that it locally reformalizes boundary uncertainty in a single dimension that focuses on the classifier boundary. This convenient reduction with a focus toward the classifier boundary provides the new method’s significant improvements. In classifier evaluation experiments on Support Vector Machines (SVM) and MultiLayer Perceptron (MLP), we demonstrate that Proposal 2 offers a competitive classifier evaluation accuracy compared to a benchmark Cross Validation (CV) method as well as much higher scalability than both CV and Proposal 1.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A fundamental problem in statistics, machine learning, and pattern classification is to obtain an accurate estimate for the generalization ability of a learning algorithm trained on a finite dataset. The generalization ability of a pattern classifier is traditionally measured in terms of classification error probability. The goal of pattern classification is to execute the optimal classification rule (aka a Bayes decision rule) that corresponds to the minimum classification error probability (aka a Bayes error). However, the estimate of the error probability based on a finite amount of training data is so seriously biased that it cannot directly indicate the error probability [1].

A major conventional approach to classification error probability is Structural Risk Minimization (SRM)[2]. SRM provides an analytic estimation of the classification error probability. However, the resulting estimation can be loose in practice. Furthermore, the difficulty of deriving the necessary SRM in a case-by-case manner hinders its application [1, 3].

Hold Out (HO) evaluation bypasses the bias intrinsic to the training data by splitting the available data into training data and validation data. Then, the empirical error rate of the validation data is used as an estimate of the error probability. However, the error probability estimate obtained from a particular validation split may also be biased. To resolve this issue, Cross Validation (CV) [4] averages the error probability estimates obtained in turn from different validation sets partitioned from the data. The particular case where every sample is subsequently used as a validation set is called Leave-One-Out (LOO). LOO is known to converge to the expected error probability [5].

Bootstrap [6] reduces the variance of the error probability estimation by averaging the estimations obtained from different training sets by sampling with replacement. One drawback of the resampling approaches (CV, Bootstrap) is their costly training repetition that can be prohibitive in real-world tasks [7], along with the sacrifice of separate data for evaluation. In principle, training and evaluation on the training data to directly target the Bayes error would be preferable, although this has been difficult so far.

Moreover, error rate smoothing methods can improve error probability estimation [8] based only on the training set. For example, Minimum Classification Error training [9] optimally determines the degree of smoothing of the empirical error rate to target the Bayes error. However, the settings and effect of this automatic smoothing are ongoing research issues [10].

Likelihood methods such as information criteria [11] and Bayesian model selection [12, 13] rely on class posterior probability estimation instead of estimating the error probability. However, classification focuses the quality of the estimation on the boundaries that delineate class distributions, not on the class distributions themselves. Therefore, likelihood approaches are not necessarily optimal for classifier evaluation [14, 15].

The limitations described above come from the intrinsic difficulty of estimating the error probability. To circumvent these limitations, we proposed finding the optimally trained classifier through a new classifier evaluation metric that is uniquely easy to estimate in principle, which we termed “boundary uncertainty” or alternately “Bayes-boundary-ness” [16,17,18]. We chose the name “boundary uncertainty” because this evaluation metric measures the generalization ability of a classifier based on how equal class posterior probabilities are along the classifier boundary. It is known that the optimal classifier boundary is defined by equality among the class posterior probabilities, and this situation can be described as “uncertainty” along the boundary. Boundary uncertainty is fundamentally easier to estimate for two reasons: focusing the estimation on the classifier boundary instead of integrating estimations over the entire multidimensional space is easier, and the classifier boundary is defined based on classifier parameters that are precisely known. Furthermore, the value of boundary uncertainty implies how close we are to the Bayes risk.

In addition to defining boundary uncertainty, our previous work also proposed a boundary uncertainty estimation method that here we refer to as “Proposal 1.” Through experiments and theoretical analysis, we showed that Proposal 1 could perform classifier evaluation using neither sample distribution assumptions nor data resampling methods [16,17,18]. However, Proposal 1 relied on largely unclear settings and heavy treatment that seriously limited both its accuracy and its scalability. This motivates our work on a new boundary uncertainty estimation method that we call “Proposal 2” and comprehensively introduce in this paper.

The paper is organized as follows. In Section 2, we prepare the formalization of the classification problem. In Section 3, we summarize the previous method, i.e., Proposal 1. In Section 4, we introduce Proposal 2 as a new method. Then, in Section 5, we discuss the time costs of classifier evaluation for the two methods. In Section 6, we perform experiments using these two methods and provide extensive discussion. In Section 7, we finally summarize the paper.

Table 1 presents the main notations used in this paper. The first column of the table indicates the section where each notation is first used.

2 Formalization of Classification Problem

2.1 Bayes Decision Rule

We assume a d-dimensional sample space \( \mathcal {X} \), where we intend to discriminate between J classes. Given j ∈ [ [1,J] ] and \( \boldsymbol {x} \in \mathcal {X} \), we denote by P(Cj|x) the (true) class posterior probability of Cj given x. The goal of a classifier is to minimize the following classification risk:

We can see that Eq. 1 operates over the entire multidimensional sample space. C(x) represents the classification decision for sample x (decision is one of the classes Cr, where r ∈ [ [1,J] ]). R(C(x)|x) represents the risk when assigning class C(x) to x:

where λ(Cr|Cj) denotes the cost of classifying a member of class Cj as a member of class Cr. We assume the following cost:

This leads to the risk R(Cr|x) = 1 − P(Cr|x). This risk is minimized by choosing Cr such that P(Cr|x) is the highest among all of the class posterior probabilities at x. Based on this consideration, the decision rule that achieves the minimum classification error probability (aka a Bayes error) is [19]

This optimal classification decision is called the Bayes decision rule. Unfortunately, class posterior probabilities are unknown and difficult to accurately estimate in practice.

2.2 Classifier Decision Rule

We denote by \( \mathcal {T} = \{ (\boldsymbol {x}_{n}, y_{n}) \}_{n \in [\![1, N]\!] } \) the training set that consists of N pairs of a training sample \( \boldsymbol {x}_{n} \in \mathcal {X} \) and of its class index yn ∈ [ [1,J] ]. We emphasize the difference between y(xn) = yn, the given class index at xn, and C(xn;Λ), the class label predicted by the classifier at any sample xn. The decision of most classifiers is formalized as

The parameter vector Λ encompasses trainable parameters that are optimized on the training data (e.g., network weights) as well as hyperparameters that are traditionally set using validation data (e.g., regularization parameters). We refer to a specific value of Λ as “classifier status.” gj(⋅;Λ) is called a discriminant function. Its value at a sample x estimates the degree to which x belongs to Cj. gj(x;Λ) does not necessarily directly estimate P(Cj|x) [20]. The goal of this training is to find Λ so that the values of gj(⋅;Λ) result in a classifier decision rule (5) that executes the Bayes decision rule (4).

2.3 Classifier Evaluation and Classifier Selection

A classifier evaluation metric measures the generalization ability of a classifier. This evaluation metric is traditionally the error probability, and its estimate is usually obtained using validation data. A lower classification error probability value indicates a classifier decision that has higher generalization ability.

As illustrated in Fig. 1, classifier selection is the process of evaluating different classifier statuses ΛTR = {Λm}m∈[ [1,L] ] (green boxes on the left feed into the blue evaluation box in the center) in terms of a classifier evaluation metric (values for L different classifier statuses obtained are represented by red boxes on the right) and then selecting the status that scores the best (black box on the right).

3 Previous Boundary Uncertainty Estimation: Proposal 1

3.1 Previous Definition of Boundary Uncertainty

3.1.1 Bayes Boundary

We denote by B∗ the classification boundary that is uniquely defined by Eq. 4, and we term it “Bayes boundary.” B∗ is the optimal classification boundary that a classifier should execute. B∗ is locally defined by the equality between the highest class posterior probabilities. At a given \( \boldsymbol {x} \in \mathcal {X} \), there can be equality between the three or more highest class posterior probabilities. For simplicity, we assume that equality is achieved only between the two highest class posterior probabilities at x in practice. We denote by {i∗(x),j∗(x)} the indexes of the two highest (true) class posterior probabilities at x. By convention, we order them so that i∗(x) < j∗(x). With these notations,

This definition conveys the true impossibility of deciding for a single class along B∗. We interpret this situation as uncertainty along B∗.

3.1.2 Classifier Boundary

We denote by B(Λ) the classifier boundary that corresponds to the decision rule given by Eq. 5. Similarly to Section 3.1.1, we assume that B(Λ) involves equality between only the two classes that yield the highest discriminant function values at x. We denote by {i(x;Λ),j(x;Λ)} the indexes of these two classes at x; by convention, we order them so that i(x;Λ) < j(x;Λ). With these notations,

3.1.3 Boundary Uncertainty

Boundary uncertainty relies on two principles. First, the Bayes boundary B∗ solely consists of uncertain samples, whose two highest class posterior probabilities are equal. Second, there is a one-to-one relationship between a classifier decision and its corresponding classification boundary. Based on these considerations, we previously proposed to evaluate the generalization ability of a classifier parameterized by Λ in terms of the optimality of its classifier boundary. We defined boundary uncertainty as a classifier evaluation metric that measures the degree of equality between B∗ and B(Λ).

In order to define boundary uncertainty, our previous work considered the “local uncertainty” of samples x that are on B(Λ) and then denoted it by \( \hat {U}(\boldsymbol {x}; {{{\varLambda }}})\).Footnote 1 In principle, the local uncertainty U(x;Λ) takes as input the class posterior probability P(Ci(x;Λ)|x).Footnote 2U(x;Λ) measures the degree of equality between class posterior probabilities P(Ci(x;Λ)|x) and P(Cj(x;Λ)|x). We only expect from U(⋅;Λ) that it takes a higher value, since P(Ci(x;Λ)|x) and P(Cj(x;Λ)|x) are closer to equality, and lower values otherwise. U(x;Λ) implicitly contains the top indexes {i(x;Λ),j(x;Λ)} through its arguments x and Λ.

Figure 2 illustrates two possible choices of local uncertainty function: a triangle function (orange curve) and the binary Shannon entropy (blue curve). The horizontal axis of the graph is indifferently P(Ci(x;Λ)|x) or P(Cj(x;Λ)|x). The minimum and maximum values of these local uncertainty functions are Umin = 0 and Umax = 1, respectively. This results in a convenient range of values [0,1] that is the same regardless of the dataset, where “1” indicates optimality of the boundary at x.

Then, given a classifier status Λ, Proposal 1 empirically defined the following classifier evaluation metric that we estimated from the training set [18] and termed “boundary uncertainty”:

\( \mathcal {N}_{B}({{{\varLambda }}}) \) refers to a “near-boundary set” that ideally would consist of samples exactly on B(Λ). \( \mathcal {N}_{B}({{{\varLambda }}}) \) acted as a practical approximation of B(Λ) consisting of training samples that were very close to B(Λ), all along B(Λ). |⋅| refers to the cardinal operator. The notation \( \hat {\cdot } \) in this paper refers to estimated quantities based on a finite dataset, in order to contrast with their expected value (based on an infinite dataset).

In Eq. 8, boundary uncertainty is defined as the finite expectation of the local uncertainty function over the \( \mathcal {N}_{B}({{{\varLambda }}}) \). Owing to the characterization of the Bayes boundary B∗ and to the property of the local uncertainty function, boundary uncertainty reaches a higher value because the classifier boundary is more highly optimal, and lower values otherwise. Furthermore, the relationship between classification error probability and boundary uncertainty was the equivalence between achieving Umax and achieving the minimum error probability (Bayes error). We describe this matter in more detail in later sections.

To compute (8), Proposal 1 consists of two steps described in Sections 3.3 and 3.4, respectively. Before reviewing these two steps, we review the k Nearest Neighbor (k NN) regression rule that Proposal 1 relied upon to estimate the class posterior probability P(Ci(x;Λ)|x) input into the local uncertainty.

3.2 Nonparametric Posterior Probability Estimation using k NN Regression

To estimate local uncertainties, both Proposal 1 and Proposal 2 rely on the k NN regression because it estimates class posterior probabilities without globally imposing a probability model on the class distributions. Given \( \boldsymbol {x} \in \mathcal {X} \) and j ∈ [ [1,J] ], in order to estimate P(Cj|x), k NN regression requires a small volume V (x) that contains x. We denote by k(x) the number of samples that are contained in V (x), and by kj(x) the number of samples among them that carry the label Cj (the basic version of k NN regression considers a uniform k across \( \mathcal {X} \), but adaptative k NN regression methods adapt k to each sample x).

k NN regression gives \( \hat {P}(C_{j} | \boldsymbol {x}) = k_{j}(\boldsymbol {x}) / k(\boldsymbol {x}) \). A higher k(x) provides more samples for the estimation (lower variance), but these samples are farther away from x (higher bias). This requires a tradeoff. k(x) is usually set using validation data.

3.3 Step 1: Generation of Anchors

Sections 3.3 and 3.4 refer to Panels 1A to 1D of Fig. 3. Panel 1A illustrates Step 1 of Proposal 1: We consider a training set \( \mathcal {T} \) whose samples of given labels C1 and C2 are represented by triangles and squares, respectively. Proposal 1 evaluates a candidate classifier status Λ that it takes as Input (left-hand side of Fig. 3). We illustrate B(Λ) with a blue curve.

Estimation of \( \hat {U}({{{\varLambda }}}) \) by our previous Proposal 1 on two-class and two-dimensional data. Samples of given class labels C1 and C2 are represented by yellow squares and green triangles, respectively. For a given Λ, B(Λ) is represented by a blue curve. We provide step-by-step explanations in Sections 3.3 and 3.4.

Proposal 1 directly applied the definition of B(Λ) (7) to find B(Λ). It searched for zeros of the function f(x;Λ) = gi(x;Λ)(x;Λ) − gj(x;Λ)(x;Λ) in \( \mathcal {X} \). a is characterized by f(a;Λ) = 0, so the theorem of intermediate values applied to f(⋅;Λ) guarantees the existence of a sample a ∈ B(Λ) on any segment \( [ \boldsymbol {x}, \boldsymbol {x}^{\prime } ] \) that satisfies f(x;Λ) > 0 and \( f(\boldsymbol {x}^{\prime }; {{{\varLambda }}}) < 0 \). We termed such an on-boundary sample as a an “anchor” (anchors are represented by red dots in Panel 1A). Proposal 1 therefore searched for anchors along the segments that join randomly picked couples of training samples \( \{ \boldsymbol {x}; \boldsymbol {x}^{\prime } \}\) that satisfy f(x;Λ) > 0 and \( f(\boldsymbol {x}^{\prime }; {{{\varLambda }}}) < 0 \). The search along \( [\boldsymbol {x}; \boldsymbol {x}^{\prime }] \) can be done by a dichotomy: at each dichotomy iteration, we checked the sign of f(⋅;Λ) at the middle of the segment, and then considered only the half of the segment that contains a change of sign of f(⋅;Λ) (namely, an anchor). In practice, we imposed a maximum number of dichotomy iterations that we denoted by imax.

How many and where anchors should be generated was a priori not obvious. In Eq. 6, the equality of class posterior probabilities should be checked all along B(Λ). To get as close to this ideal situation as possible, Proposal 1 generated a large number of anchors denoted by RA, each of which we obtained from a random pair of training samples as described above. In the multiclass case, Proposal 1 had to check i(⋅;Λ),j(⋅;Λ) at each dichotomy iteration. Indeed, if we generate an anchor defined by equality between two discriminant functions whose class indexes are not locally the highest, then this anchor is actually generated on a non-existing classifier boundary (in the sense of Eq. 7).

3.4 Step 2: Near-Boundary Local Uncertainty Estimation

3.4.1 Filtering Out Off-Boundary Samples

Given an anchor a ∈ B(Λ), the most direct way to apply k NN regression would be to use as target volume V (a), the volume defined by the k training samples that are nearest to a. However, this approach led to poor quality of the boundary uncertainty estimate, especially for higher-dimensional data, because a naive spherical target volume largely contains off-boundary samples that are biased in terms of boundary uncertainty estimation. Proposal 1 filtered out this bias by forming target volumes that only contain near-boundary samples. Proposal 1 defined the set of near-boundary samples denoted by \( \mathcal {N}_{B}({{{\varLambda }}}) \) as the set of one-nearest training sample for each anchor generated in Step 1 (Panel 1B).

3.4.2 Partitioning Near-Boundary Set into Target Volumes

Proposal 1 used Tree Divisive Clustering (TDC), of which imposed number of repetitions is RT, to break down \( \mathcal {N}_{B}({{{\varLambda }}}) \) into clusters that we used as target volumes for k NN regression. We used such a clustering method so that the obtained target volumes could adapt to the distribution. As described in the next paragraph, the iterative nature of TDC enabled us to progressively keep some control over the size of clusters.

Every step of the TDC consists of a 2-means clustering that ends after an imposed iK number of iterations: TDC thus adaptatively broke down \( \mathcal {N}_{B}({{{\varLambda }}}) \) into clusters (Panel 1C). We denote by ¶Λ the set of resulting clusters, and by \( \mathcal {C} \in \P _{{{{\varLambda }}}} \) one of the clusters. Here, the dependency in Λ emphasizes that the partition obtained by clustering is defined for a given \( \mathcal {N}_{B}({{{\varLambda }}}) \). Proposal 1 applied the k NN regression by using each \( \mathcal {C} \) as a target volume (Panel 1D), or in other words, the “k” of “k NN regression” is set to \( | \mathcal {C} | \) for each cluster \( \mathcal {C} \).

Clusters should contain enough samples to perform k NN regression, while focusing on B(Λ) as locally as possible (local uncertainties ideally measure equality between class posteriors on points along B(Λ)). Proposal 1 therefore continued to divide \( \mathcal {N}_{B}({{{\varLambda }}}) \) into smaller clusters so long as the clusters obtained at a given TDC step contained more than Nmax samples. Then, Proposal 1 only retained clusters that contained more than Nmin samples.

3.4.3 Local Uncertainty Computation

Proposal 1 adopted binary Shannon entropy as the local uncertainty function (blue curve in Fig. 2). When using the k NN regression to estimate class posterior probabilities, we do not consider individual samples but rather target volumes (in our case, elements of ¶Λ). Therefore, in the following, we explicitly describe the local uncertainty of clusters rather than that of individual samples.

Given a near-boundary cluster \( \mathcal {C} \in \P _{{{{\varLambda }}}} \), for each given class index j ∈ [ [1,J] ], we count the number of samples that have predicted class index j. To estimate \( \{ i(\mathcal {C}; {{{\varLambda }}}), j(\mathcal {C}; {{{\varLambda }}}) \} \) defined in Section 3.1.2, we define \( \{ \hat {i}(\mathcal {C}; {{{\varLambda }}}), \hat {j}(\mathcal {C}; {{{\varLambda }}}) \} \) as the pair of predicted class indexes that have the most samples in \( \mathcal {C} \). Then, we estimate the local uncertainty of this cluster as

Recall that our estimation goal is ideally B(Λ) itself, so we hope that \( \mathcal {C} \) is centered on B(Λ). Unfortunately, the TDC may result in some \( \mathcal {C} \)s “completely on one side of B(Λ),” which results in a kind of bias in terms of local uncertainty estimation.

3.4.4 Boundary Uncertainty Computation

Sampling elements (clusters) from the partition ¶Λ means that we are sampling independent outcomes (clusters) from the vicinity \( \mathcal {N}_{B}({{{\varLambda }}}) \) of the classifier boundary. Each cluster \( \mathcal {C} \) has probability \( \frac { | \mathcal {C} | }{ | \P _{{{{\varLambda }}}} | } \). The finite expectation of the local uncertainty function over ¶ is thus

Superscript “(1)” refers to Proposal 1, in order to distinguish it from the boundary uncertainty estimate obtained by Proposal 2. ¶Λ obtained by a TDC depends on the random clustering seeds used at each TDC step (i.e., a 2-means clustering). Step 2 of Proposal 1 accordingly performed RT > 1 different runs of TDCs. Each run indexed by r ∈ [ [1,RT] ] has its own different set of random clustering seeds and results in a partition we denote by \( \P ^{(r)}_{{{{\varLambda }}}} \). Then, Proposal 1 estimates \( \hat {U}({{{\varLambda }}}) \) in Eq. 8 by averaging over the boundary uncertainty estimates obtained by each run. This results in the following estimate denoted by \( \hat {U}^{(1)}({{{\varLambda }}}) \):

We illustrate (11) with Panel 1D contained in the black box that indicates RT repetitions of Step 2.

3.5 Issues in Step 1 of Proposal 1

On the one hand, anchors and then the selection of their nearest training samples answered the difficult question “what is closest to B(Λ)?” by accurately executing the definition of B(Λ). On the other hand, an approach that revolves around anchors to search for B(Λ) may not be practical, since it is impossible to generate infinitely many anchors as required by the definition of B(Λ).

Moreover, generating anchors from random pairs of training samples (that may be far from each other) results in costly treatments as described in Section 3.3. For example, resuming the notations of Section 3.3, for every checked position xc along \( [\boldsymbol {x}, \boldsymbol {x}^{\prime }] \), finding i(xc;Λ),j(xc;Λ) using QuickSort costs \( O(J \log (J)) \) comparisons between J discriminant function values. This cost explodes as it is multiplied by the number imax of dichotomy iterations, then by the large number RA of anchors, and finally by the number of classifier statuses to evaluate.

3.6 Issues in Step 2 of Proposal 1

On the one hand, previous experiments showed that the restriction to \( \mathcal {N}_{B}({{{\varLambda }}}) \) of target volumes used for k NN regression efficiently increases the accuracy of \( \hat {U}^{(1)}({{{\varLambda }}}) \) [18]. On the other hand, a discrete selection of “what is close to B(Λ) or not” unavoidably leads to the following dilemma: either taking too few samples that are not representative of B(Λ) or taking too many samples that are farther from B(Λ).

Moreover, the centering of target volumes on B(Λ) is not explicitly guaranteed, which can result in a bias of the boundary uncertainty estimate. Furthermore, the formation of clusters obeys a certain clustering criterion, and we thus do not have direct control over the size of clusters (e.g., when applying the 2-means clustering, some clusters may persistently be broken into most of the cluster itself, and a “leftover” that consists of just one or two samples). This may result in a partition that has some persistently large-sized clusters that do not measure locally the boundary uncertainty, as well as remaining “leftover” clusters that are too small to estimate local uncertainty. Last but not least, the RT repetitions of Step 2 increase both time and memory costs.

4 New Boundary Uncertainty Formalization and Estimation: Proposal 2

4.1 Overview of Proposal 2

To avoid the costly generation of anchors in the multidimensional space (Section 3.5), Proposal 2 smoothly and implicitly filters off-boundary samples in a single shot. This is made possible by using a single dimension that represents a kind of distance of a sample to the classifier boundary (Section 3.1.3).

In contrast to Proposal 1, which did not guarantee that near-boundary clusters are centered on B(Λ) (Section 3.6), Proposal 2 can explicitly focus its estimation on B(Λ) (Section 4.4.1). This is possible because the value “zero” of the aforementioned single dimension is equivalent to being on B(Λ).

In contrast to the difficulty of controlling the size of clusters in Proposal 1 (Section 3.6), Proposal 2 uses target volumes where the number of samples is directly specified. Then, the effectively used number of samples in each target volume is adjusted automatically in one shot by Proposal 2. The determination of the effective number of samples for each cluster and each candidate classifier status is both cheap and justified (Section 4.4.2): it is determined so that the local uncertainty estimation appropriately focuses on B(Λ) (Section 4.4.1).

The empirical formalization of boundary uncertainty used in Proposal 1 actually left some unclear items, such as the possible confusion of whether to use {i∗(x),j∗(x)} (top given class indexes) or {i(x;Λ),j(x;Λ)} (top predicted class indexes). Such confusion was especially possible in the several intricate branching treatments that appeared in Proposal 1, as well as in the treatment of multiclass data [18]. In contrast, this paper introduces a more complete formalization of boundary uncertainty (Section 4.3). This formalization clarifies these items and permits a more systematic approach to boundary uncertainty estimation.

4.2 Near-Boundary-Ness Measurement

4.2.1 Definition of Near-Boundary-Ness Measurement

Given any two class indexes k,l ∈ [ [1,J] ], a classifier status Λ, and \( \boldsymbol {x} \in \mathcal {X} \), we define the near-boundary-ness measurement as follows:

A smaller value of |fkl(x;Λ)| means that x is closer to Bkl(Λ), hence we termed fkl(x;Λ) a “near-boundary-ness measurement.” The sign of fkl(x;Λ) indicates which “side” of Bkl(Λ) the sample x is, namely whether the classifier assigns x to Ck or to Cl. To simplify notations, we shorten fi(x;Λ)j(x;Λ)(x;Λ) to f(x;Λ) in the particular case {k,l} = {i(x;Λ),j(x;Λ)}, as well as in the two-class case. fkl(⋅;Λ) can be computed for any classifier whose formalization follows Section 2. For example, in the case of a neural network, fkl(x;Λ) is obtained by monitoring the output layer.

4.2.2 Estimation of Near-Boundary-Ness Measurement Values at Perturbated Samples Instead of at Training Samples

The goal of this section is to provide a reliable representation of \( \mathcal {X} \) in the near-boundary-ness measurement space. This lengthy section describes a preliminary treatment that we use throughout Proposal 2, so we cover it here before describing Proposal 2 itself to provide the necessary clarity for following the later sections. We temporarily assume two-class data for simplicity of notation in this section.

Application of the function f(⋅;Λ) on training set \( \mathcal {T} \) can be understood as sampling a mapped training set in the near-boundary-ness measurement space. We denote by \( f(\mathcal {T}; {{{\varLambda }}}) \) this projection. As covered in the next sections, Proposal 2 performs some density estimations on \( f(\mathcal {T}; {{{\varLambda }}}) \) in the near-boundary-ness measurement space. The goal of such density estimations is to estimate the density of \( f(\mathcal {X}; {{{\varLambda }}}) \), or in other words to generalize effectively to the density of the entire mapped sample space. However, f(⋅;Λ) reflects the classifier decision, and it is well known that the classifier decision values can be quite different on the training set and on some (unseen) testing set \( \mathcal {X}^{te} \). This phenomenon is one aspect of overfitting.

In other words, even if \( \mathcal {T} \) and \( \mathcal {X}^{te} \) follow similar distributions in the sample space, their mapped counterparts \( f(\mathcal {T}; {{{\varLambda }}}) \) and \( f(\mathcal {X}^{te}; {{{\varLambda }}}) \) may follow quite different distributions in the near-boundary-ness measurement space. This sampling issue in the near-boundary-ness measurement space may be seen as a case of covariate shift [21].

Naively using \( f(\mathcal {T}; {{{\varLambda }}}) \) may not lead to an accurate estimation of the density of \( f(\mathcal {X}; {{{\varLambda }}}) \). We propose slightly perturbating training samples and then applying f(⋅;Λ) at such perturbated versions of the training samples instead of at the training samples themselves. We denote by \( \widetilde {\mathcal {T}} \) this perturbated version, where \( \widetilde {\cdot } \) (tilde notation) denotes the operation of “perturbation.” Our hope is that \( f(\widetilde {\mathcal {T}}; {{{\varLambda }}}) \) suffers the covariate shift issue to a lesser extent than \( f(\mathcal {T}; {{{\varLambda }}}) \).

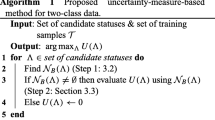

Algorithm 1 describes the generation of \( \widetilde {T} \). Given a training sample x, we denote by x(m) its m-th nearest training sample. The perturbation is uniform across the features of each training sample. The amplitude of the perturbation is adaptatively set small to stay as close as possible to the training set in the sample space. A detailed description of Proposal 2 itself is given in the next section to explain why we want to stay close to the training set in the sample space.

\( \widetilde {\mathcal {T}} \) may be simple to obtain in principle for a wide range of classification tasks. For example, in the case of speech data, our perturbation can be obtained by adding some noise to the speech signal, which seems to be a common practice when preparing speech data [22].

4.3 Formalization of Boundary Uncertainty

In order to more adequately derive an estimation procedure for boundary uncertainty, we start by defining the expected boundary uncertainty that we denote by U(Λ).

4.3.1 Notations

Recall that we denoted by {i∗(x),j∗(x)} in Eq. 6 the indexes of the two class posterior probabilities whose values are highest at x. We denote by \( \mathcal {I}^{*} \) the set of pairs of indexes that compose the Bayes boundary:

\( \forall \{ k, l \} \in \mathcal {I}^{*} \), and we denote by B∗(k,l) the set of training samples whose two top indexes are k and l:

4.3.2 Expected Boundary Uncertainty

Similarly to the definition of the risk in Eq. 1, we propose

where \( R_{U} \left (C(\boldsymbol {x}) | \boldsymbol {x} \right ) \) represents the penalization of non-boundary uncertainty of the classification decision C(x) at x. We consider − U(Λ) instead of U(Λ), since U(Λ) is the opposite of a loss: Higher values of U(Λ) correspond to more optimal classifier decisions. The infinitesimal width δV is introduced to avoid a probability measure equal to zero. We will clarify this formalization item in a future work. p(x) denotes the density at x.

We now consider a classification decision C(x;Λ) made by a classifier that is parameterized by Λ, where C(x;Λ) is described in Eq. 5. In the boundary uncertainty framework, our quantity of interest in C(x;Λ) is the pair of the two top predicted indexes (Sections 3.1.1 and 3.1.2). In this section, we write Ci(x;Λ)j(x;Λ)(x;Λ) instead of C(x;Λ) in order to emphasize this quantity of interest. The penalization of non-boundary uncertainty of the classifier at x is

where we introduced the output variable Ckl. Once more, the subscript in Ckl involves two class indexes {k,l} owing to the focus of boundary uncertainty on the pair of class indexes that define the boundaries. We define Ckl through the following discrete probability distribution: ∀{k,l}∈ [ [1,J] ]2,k≠l,

To evaluate the classification decision at x, the “boundary-wise cost” \( \lambda _{U}\left (C_{i (\boldsymbol {x}; {{{\varLambda }}}) j (\boldsymbol {x}; {{{\varLambda }}})}(\boldsymbol {x}; {{{\varLambda }}}) \left | C_{kl} \right .\right ) \) penalizes at x the non-uncertainty of the two-class classifier boundary that is relative to top indexes i(x;Λ),j(x;Λ) as follows:

The integration of Eqs. 17 and 18 into Eq. 16 reveals that at a sample x, we consider only the two true top class indexes {i∗(x),j∗(x)}, and then we evaluate the optimality of the two-class classifier boundary Bi(x;Λ)j(x;Λ)(Λ) at x. If the indexes {i(x;Λ),j(x;Λ)} do not even coincide with the true top indexes {i∗(x),j∗(x)}, this indicates that the classifier boundary is strongly biased around x, and thus (18) locally assigns the default worst uncertainty value − Umin at x. If {i(x;Λ)j(x;Λ)} = {i∗(x),j∗(x)} (as it should be in most cases if the classifier training did not fail), then we evaluate the classifier boundary at x using the sign-reversed local uncertainty function − U(x;Λ). This branching treatment in our formalization reflects the branching treatments that appeared in Proposal 1 [18].

The Bayes theorem gives P(Ckl|x)p(x) = p(x|Ckl)P(Ckl), where P(Ckl) corresponds to the overall probability in the sample space \( \mathcal {X} \) that {k,l} are the true top class indexes. Substituting (17), (18) and using the Bayes theorem in Eq. 16 gives

where we note that B∗(k,l) is defined in Eq. 14 and \( \lambda _{U}\left (C_{i (\boldsymbol {x}; {{{\varLambda }}}) j (\boldsymbol {x}; {{{\varLambda }}})}(\boldsymbol {x}; {{{\varLambda }}}) \left | C_{kl} \right .\right ) \) is − U(x;Λ) in most cases. Equation 19 appears as a sum only over pairs \( \{ k, l \} \in \mathcal {I}^{*} \) because the definition in Eq. 17 implies that the terms of Eq. 19 are non-zero only for pairs \( \{ k, l \} \in \mathcal {I}^{*} \). \( \forall \{ k, l \} \in \mathcal {I}^{*} \), we define

Equation 19 shows that in a multiclass setting, U(Λ) appears as a weighted combination of two-class uncertainty boundaries \( \{ U_{kl}({{{\varLambda }}}) \}_{\{ k,l \} \in \mathcal {I}^{*} } \). The above expressions generalize both our previous empirical understanding of boundary uncertainty defined by Eq. 8 and Proposal 1.

4.3.3 Property of Boundary Uncertainty

As we described in Section 3.1.3, our previous work implicitly assumed equivalence between Umax and achieving the Bayes decision rule. We now consider this statement more carefully:

Section 6.9 discusses the successive equivalences (21) to (24) in more detail. The next sections describe the estimation of U(Λ).

4.4 Estimation of Expected Boundary Uncertainty

4.4.1 Empirical Boundary Uncertainty and its Implicit Centering on Classifier Boundary

In Proposal 2, we attempt to estimate the expected boundary uncertainty defined by Eq. 19 with the following empirical sum:

\( \hat {B}^{*}(k, l) \) is the approximation of B∗(k,l) obtained from the training set, and \( \hat {\lambda }_{U}\left (C_{i (\boldsymbol {x}; {{{\varLambda }}}) j (\boldsymbol {x}; {{{\varLambda }}})}(\boldsymbol {x}; {{{\varLambda }}}) \left | C_{kl} \right .\right ) \) is the empirical version of Eq. 18, which is discussed in detail in Section 4.4.4. We note that execution of the sum defined by Eq. 25 requires a preliminary estimation of \( \mathcal {I}^{*} \), and we give details of its estimate denoted by \( \hat {\mathcal {I}}^{*} \) in Section 4.4.3.

A key point of Proposal 2 is to implicitly perform the filtering expressed by “\( \cap \left (B({{{\varLambda }}} ) + \delta V \right ) \)” in Eq. 25 without having to explicitly generate anchors. This actually draws inspiration from a work that used a one-dimensional space based on the discriminant functions to estimate the classification error probability [23]. Here, we describe how to implicitly define clusters that are centered on B(Λ).

Given an integer M > 0, \( \forall \boldsymbol {x} \in \mathcal {T} \), we denote by \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) the set (cluster) formed by x and its M − 1 nearest neighbors in \( \mathcal {T} \):

We consider a given \( \boldsymbol {x} \in \mathcal {T} \). Given a kernel function (e.g., Gaussian kernel) and a small hx > 0 to specify, we define ∀m ∈{i(x;Λ),j(x;Λ)},

where we note that \( y(\boldsymbol {x}^{\prime }) \) is the given class index of training sample \( \boldsymbol {x}^{\prime } \) and that x ∈ B(Λ) ⇔ fi(x;Λ)j(x;Λ)(x;Λ) = 0. An important factor is the tilde notation (operation of perturbation) when computing the near-boundary-ness measurement in Eq. 27 according to the considerations mentioned in Section 4.2.2. \( \hat {k}^{m}(\boldsymbol {x} ; {{{\varLambda }}}) \) counts how many samples with given class index m fall within a distance hx of B(Λ) in the near-boundary-ness measurement space.Footnote 3 We define

\( \hat {k}(\boldsymbol {x}; {{{\varLambda }}}) \) implicitly delineates an on-boundary cluster contained in [−hx;hx] in the near-boundary-ness measurement space, and that only contains (a total of \( \hat {k}(\boldsymbol {x}; {{{\varLambda }}}) \)) samples with given class labels Ci(x;Λ) and Cj(x;Λ). As we describe in Section 4.4.2, hx is usually a small value. The probability mass contained in a small volume is conserved through a change of variable. Therefore, the “implicitly defined on-boundary cluster that is centered on fi(x;Λ)j(x;Λ)(⋅;Λ) = 0 in the near-boundary-ness measurement space” corresponds to a “small region δV (x) that is centered on B(Λ) around x in the sample space.” We denote by δV the union of these individual volumes δV (x); Eqs. 27 and 28 thus adaptatively execute the filtering expressed by “ \( \cap \left (B({{{\varLambda }}} ) + \delta V \right ) \) ” in Eq. 25, without having to explicitly generate anchors nor select their nearest training samples.

Figure 4 illustrates (28) with M = 5 on a two-class dataset. We illustrate the cluster \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) with a red circle, and the projection of (the perturbated) \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) onto f(⋅;Λ) with a zooming effect. The Parzen kernels are represented by black kernels centered on each projection of a perturbated sample in the cluster.

Illustration of the locally one-dimensional count by Proposal 2 on a two-class and two-dimensional dataset. Samples of given class labels C1 and C2 are represented by yellow squares and green triangles, respectively. For a given Λ, we represent B(Λ) with a blue curve. The horizontal axis f(⋅;Λ) is described in Section 4.2.

For convenience, we introduce \( \forall \{ k, l \} \in \hat { \mathcal {I} }^{*} \),

Each on-boundary implicit cluster contains \( \hat {k}(\boldsymbol {x}; {{{\varLambda }}}) \) samples, and thus the probability of each cluster among all clusters for \( \hat {B}^{*}(k, l) \) is k(x;Λ)/wkl(Λ). Therefore, we can re-write (25) as the finite expectation of the local uncertainty function over the set of implicit on-boundary clusters described above:

where superscript “(2)” distinguishes this from the estimate obtained by Proposal 1 in Eq. 11. For convenience we define the estimated two-class boundary uncertainty: \( \forall \{ k, l \} \in \hat { \mathcal {I} }^{*} \),

4.4.2 Determination of Parameters Defining On-Boundary Implicit Clusters

Given \( \boldsymbol {x} \in \mathcal {T} \), the parameters hx and M determine the on-boundary cluster that is implicitly defined by Eq. 27 (M determines the “radius” of \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \), and then hx is set in the direction orthogonal to B(Λ)). The appropriate setting of hx temporarily requires us to consider a density estimation instead of a count estimation, since a count goes to infinity as the size of the dataset goes to infinity. The density estimation that corresponds to Eq. 27 is obtained by dividing (27) by Mhx [24]. A possible way of setting hx for optimal density estimation is to minimize the Mean Squared Integrated Error (MISE). This results in “Silverman’s rule of thumb” [25]:

where \( \hat {\sigma }_{\boldsymbol {x}} \) and IQRx correspond to the standard deviation and the interquartile range estimated on the distribution \( \left \{ f_{i (\boldsymbol {x}; {{{\varLambda }}}) j (\boldsymbol {x}; {{{\varLambda }}})}(\widetilde {x^{\prime }}) \right \}_{\boldsymbol {x}^{\prime } \in \mathcal {N}^{\text {M}}(\boldsymbol {x})} \), respectively.

Given m ∈{i(x;Λ),j(x;Λ)}, Eq. 32 may return hx = 0 if all values \( \left \{ f_{i (\boldsymbol {x}; {{{\varLambda }}}) j (\boldsymbol {x}; {{{\varLambda }}})}(\widetilde {x^{\prime }}) \right \}_{\boldsymbol {x}^{\prime } \in \mathcal {N}^{\text {M}}(\boldsymbol {x})} \) are identical. In this case, provided this unique value is not zero (which we can safely assume), we set \( \hat {k}^{m}(\boldsymbol {x}; {{{\varLambda }}}) = 0 \).

Equation 27 also depends on the hyperparameter M. We discuss how the setting of M is quite insensitive and is actually more controlled by practical constraints. Can the value of M be too high? Even if M is set quite high, the Parzen counts will automatically smooth out samples that are away from B(Λ). Nevertheless, M should be as small as possible to locally estimate uncertainty. Can the value of M be too low? The estimation of hx using Eq. 32 requires a minimum number of samples to be meaningful. These two questions compelled us to set a uniform M = 40 in practice (same value M for all the samples to obtain \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \)).

4.4.3 Estimation of \( \mathcal {I}^{*} \) and its Related Quantities

By “quantities that are related to \( \mathcal {I}^{*} \),” we refer to \( \forall \{ k, l \} \in \mathcal {I}^{*} , B^{*}(k, l) \) and P(Ckl). \( \mathcal {I}^{*} \) assumes knowledge of true class posterior probabilities. However, rather than requiring an accurate knowledge of true class posterior probabilities, \( \mathcal {I}^{*} \) only requires knowledge of the indexes of the two highest class posterior probabilities at each sample. In other words, a rougher estimate of class posterior probabilities is sufficient for our purpose, so long as the two top indexes are correctly estimated.

Independently from the classifier model that we want to evaluate, in order to estimate class posterior probabilities, we propose using a generative classifier model that we explain in detail in Eq. 35. We denote by Λgen the trained classifier status of this generative classifier model. Then, \( \forall \boldsymbol {x} \in \mathcal {T} \), we estimate {i∗(x),j∗(x)} as \( \left \{ i(\boldsymbol {x}; {{{\varLambda }}}_{gen}), j(\boldsymbol {x}; {{{\varLambda }}}_{gen}) \right \} \). Application of Eq. 13 to these estimated pairs of indexes gives \( \hat {\mathcal {I}}^{*} \). Then, \( \forall \{ k, l \} \in \hat {\mathcal {I}}^{*} \),

and then

Regarding the choice of a generative classifier model, the above considerations imply that a simple model may be enough for our purpose. For this reason, we chose the following prototype-based classifier (PBC), which has low computation costs. ∀j ∈ [ [1,J] ], we denote by \( \mathcal {T}_{j} \) the set of training samples with given class label Cj, namely \( \mathcal {T}_{j} = \{ \boldsymbol {x} \in \mathcal {T} | y(\boldsymbol {x}) = j \} \). Our PBC represents \( \mathcal {T}_{j} \) by Kj prototypes. We denote by \( \left \{ {p_{j}^{k}} \right \}_{k \in [\![1, K_{j}]\!] } \) the corresponding set that consists of Kj prototypes. We obtain \( \left \{ {p_{j}^{k}} \right \}_{k \in [\![1, K_{j}]\!] } \) by performing K-means clustering on \( \mathcal {T}_{j} \). The discriminant functions for such PBC are \( \forall j \in [\![1, J]\!], \forall \boldsymbol {x} \in \mathcal {X} \),

where Λgen corresponds to the set of prototypes obtained by class-wise K-means clustering.

As one possibility, ∀j ∈ [ [1,J] ], we set the value of Kj based on the Akaike Information Criterion (AIC). AIC can be used to determine the number of a Gaussian mixture model, and a K-means algorithm is a particular instance of the Classification Expectation algorithm for a Gaussian mixture model with equal mixture weights and equal isotropic variances [26]. We used existing results to adapt AIC to each class-wise K-means [26]. We give these results in the Appendix. Algorithm 2 summarizes the estimation of \( \mathcal {I}^{*} \) and that of its related quantities.

4.4.4 Estimation of Boundary-Wise Cost

Given \( \{ k, l \} \in \hat {\mathcal {I}}^{*} \) and x ∈ T, the estimated boundary-wise cost that appears in Eq. 25 is

Instead of the binary Shannon entropy used by Proposal 1, Proposal 2 uses a triangle-shaped function (orange curve in Fig. 2) as a local uncertainty function. This corresponds to the following local uncertainty: ∀m ∈{i(x;Λ),j(x;Λ)},

The reason for this choice was to achieve a more neutral penalization of non-uncertainty than the binary Shannon entropy. The binary Shannon entropy weakly penalizes non-uncertainty in a wide range of [0.3;0.7] around 0.5, while outside this range it strongly penalizes non-uncertainty.

Just as done in Proposal 1, Proposal 2 estimates class posterior probabilities using the k NN regression rule (Section 3.2). ∀m ∈{i(x;Λ),j(x;Λ)}, the k NN regression applied in the near-boundary-ness measurement space gives

When we input \( \hat {P}(C_{m} | \boldsymbol {x}; {{{\varLambda }}}) \) in the local uncertainty function defined in Eq. 37, the first and second cases of the branching in Eq. 38 result in the first and second cases of the branching in Eq. 36, respectively.

∀j ∈ [ [1,J] ], we denote by \( \hat {P}_{j} \) the estimate of class prior probability, and Nj is the number of training samples whose given class label is Cj: \( \hat {P}_{j} = N_{j} / N \). In order to address class imbalance [29], Proposal 1 replaced each estimate \( \hat {P}(C_{l} | \boldsymbol {x}; {{{\varLambda }}}) \) by \( \hat {P}(C_{l} | \boldsymbol {x}; {{{\varLambda }}}) / \hat {P}_{l} \) [18]. Proposal 2 does not perform such adjustment. Indeed, if we assume an adequate sampling, then unequal class prior probabilities are implicitly handled by the k NN regression.

4.5 Two-Class Boundary Uncertainty Estimate \( \hat {U}_{kl}({{{\varLambda }}}) \) in Separability Case

This section assumes a pair \( \{ k, l \} \in \hat { \mathcal {I} }^{*} \) and refines (31) to handle the case where wkl(Λ) = 0 (29). This case occurs when class distributions in the sample space are well-separated.

Let us assume that class distributions are separated by a nearly empty region in the sample space. In this case, the Bayes boundary B∗ is located in the empty region. We consider the three possible cases of B(Λ) and illustrate them in Fig. 5.

-

Case 1: B(Λ) (green curve) is close to B∗ (red curve). In this case, we are likely to get wkl(Λ) = 0. As a result, Eq. 31 cannot be computed. However, we would like Proposal 2 to assign a best boundary uncertainty value Umax to \( U_{kl}^{(2)} \). We notice that all samples in \( \hat {B}^{*}(k, l) \) are correctly classified by such a B(Λ).

-

Case 2: B(Λ) is so biased that it crosses the class distributions. Proposal 2 returns the worst values of local uncertainties whenever it crosses the class distributions, since well-separated class distributions locally have only one class label with high probability (in Panel 2, every cluster \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) along B(Λ) contains either all yellow or all purple given class labels). As a result, Eq. 31 can return the worst value Umin, which is already what we want.

-

Case 3: B(Λ) is so biased that it lies in an empty region outside the range of the class distributions. Similarly to Case 1, Eq. 31 cannot be computed. However, we would like Proposal 2 to assign a worst value Umin. We note that samples of either class Ck or class Cl are all misclassified because, in this case, B(Λ) assigns all samples of \( \hat {B}^{*}(k, l) \) to the same class.

We must refine (31) so that it can output a reasonable value of \( \hat {U}_{kl}({{{\varLambda }}}) \) even in Cases 1 and 3. Based on the observation of Cases 1 and 3, we can quite simply identify these two cases by checking the classification error rate on \( \widetilde {\mathcal {T}} \). We do not check the error rate on \( \mathcal {T} \) but instead on \( \widetilde {\mathcal {T}} \), since the error rate itself directly depends on the values of the near-boundary-ness measurement. As described in Section 4.2.2, we always use \( f(\widetilde {\mathcal {T}}; {{{\varLambda }}}) \) instead of \( f(\mathcal {T}; {{{\varLambda }}}) \). We denote by \( \widetilde {L}_{tr}^{kl}({{{\varLambda }}}) \) the classification error rate on the perturbated version of \( \hat {B}^{*}(k, l) \) (namely, \( \left \{ \boldsymbol {x} + r_{\boldsymbol {x}} || \boldsymbol {x} - \boldsymbol {x}^{(1)} || \right \}_{\boldsymbol {x} \in \hat {B}^{*}(k, l)} \)). This results in the following extended definition of \( \hat {U}_{kl}({{{\varLambda }}}) \) that we use instead of Eq. 31:

Illustration of separability in the sample space on two classes Ci and Cj of a two-dimensional dataset called GMM_separable. The given class labels are represented by yellow and purple crosses, respectively. The class distributions of GMM_separable are separated by an empty region. B∗ is represented by a red curve in Panel 1. We represent different B(Λ)s by a green curve in Panels 1, 2, and 3. These three panels respectively correspond to Cases 1, 2, and 3, which we describe in Section 4.5.

We then re-define the boundary uncertainty estimate as

Superscript “(3)” distinguishes this from the estimate defined by Eq. 30, but we still consider it a part of Proposal “2”.

4.6 Implementation of Proposal 2

Algorithm 3 summarizes the implementation of Proposal 2.

5 Time Costs of Classifier Evaluation

One of the goals of Proposal 2 is to achieve scalability, since the scalability of resampling-based classifier evaluation methods such as CV is limited. Therefore, this section describes the time costs of Proposal 2 to evaluate ΛTR = {Λm}m∈[ [1,L] ]. We denote the cost of one addition, multiplication, computation of the exponential function, comparison, and classification as cadd,cmul,cexp,ccomp,cCL(d,J,Λ), respectively. cCL(d,J,Λ) increases with d, J and with the number of parameters in Λ. For comparison, we will also describe the time costs of Proposal 1.

5.1 Time Costs of Proposal 2

We denote by \( c_{shared}^{(2)} \) the costs of Proposal 2 that are shared across all classifier statuses, or in other words, that appear only once. Here, superscript “(2)” distinguishes them from the time costs of Proposal 1. We denote by \( c_{{{{\varLambda }}}}^{(2)} \) the costs that are exclusive to the evaluation of one classifier status Λ. The costs to estimate L classifier statuses ΛTR = {Λm}m∈[ [1,L] ] is

5.1.1 Estimation of \( c_{shared}^{(2)} \)

Regardless of the number of candidate statuses Λ to evaluate, Proposal 2 requires us to compute and store the set of clusters \( \{ \mathcal {N}^{\text {M}}(\boldsymbol {x}) \}_{\boldsymbol {x} \in \mathcal {T}} \), as well as the set of distances \( \{ || \boldsymbol {x} - \boldsymbol {x}^{(1)} || \}_{\boldsymbol {x} \in \mathcal {T}} \). We obtained these clusters and distances using a KDTree, so the total cost of the construction and search of the nearest neighbors is \( O(N \log (N)) \) distance computations [30]. The cost of one distance computation in \( \mathcal {X} \) is O(d)(cadd + cmul).

As summarized in Algorithm 3, the generation of \( \widetilde {\mathcal {T}} \) is done only once. The cost of generating \( \widetilde {\mathcal {T}} \) is O(dN)cadd.

K-means clustering costs KN distance computations at each K-means iteration. A distance computation costs O(d)(cadd + cmul). We denote by iK the imposed maximum number of iterations of K-means. If we assume that each class contains roughly the same number of training samples N/J, then the total time costs of obtaining our trained PBC in Algorithm 2 are O(iKdN)(cadd + cmul). We repeat these costs as many times as we attempt different values for the number of prototypes per class. We denote by RK the number of tried values of K. The preliminary time costs of Proposal 2 are thus

where

5.1.2 Estimation of \( c_{{{{\varLambda }}}}^{(2)} \)

Given one candidate classifier status Λ, finding the top indexes i(x;Λ),j(x;Λ) for the N training samples using QuickSort requires \( O(N J \log (J)) \) comparisons.

Then, the cost of the set of operations defined by Eqs. 27, 32, 38 is independent of J and d. As stated above, it only requires simple arithmetic operations (e.g., mean on M samples, multiplications, application of the exponential function) on a two-dimensional array of size at most (N,M), whose rows each correspond to a training sample \( \boldsymbol {x} \in \mathcal {T} \) and whose columns each correspond to a single perturbated element of \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \). The cost of computing near-boundary-ness measurement values on \( \widetilde {\mathcal {T}} \) is the cost of classifying \( \widetilde {T} \).

where

Overall, the cost of Proposal 2 scales reasonably with J, and d mainly shows up only once when computing the clusters \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) in the sample space. Obtaining the rest of the costs in \( c_{{{{\varLambda }}}}^{(2 )} \) boils down to operations on the one-dimensional data obtained from the projection of each (perturbated) \( \mathcal {N}^{\text {M}}(\boldsymbol {x}) \) on the axis fi(x;Λ)j(x;Λ)(⋅;Λ). The operations defined by Eqs. 27, 32, 38 define simple operations on N arrays that each contain exactly M elements. These operations can be efficiently parallelized on the hardware as operations on two-dimensional arrays of size (N,M), despite whether it’s on the CPUFootnote 4 or the GPU. This is another desirable characteristic of Proposal 2 in terms of scalability.

5.2 Time Costs of Proposal 1

We denote by c(1)(Λ) the time costs to estimate U(Λ) for a single candidate classifier status Λ. We denote by c(1,1)(Λ) and c(1,2)(Λ) the time costs of Step 1 and Step 2 of Proposal 1, respectively. We detail these costs in the following sections. Step 1 and Step 2 are executed independently, hence

The evaluation of each candidate classifier status Λ is performed independently by Proposal 1, so the costs of estimating L classifier statuses Λ1,⋯ ,ΛL are

5.2.1 Time Costs of Step 1 in Proposal 1

Here, we take up the notations from Section 3.3. The cost of Step 1 is the cost of generating RA anchors and then searching for their nearest neighbor. Given a random segment \( [\boldsymbol {x}; \boldsymbol {x}^{\prime }] \), the search for an anchor between x and \( \boldsymbol {x}^{\prime } \) is executed by dichotomy, whose maximum number of iterations we denote by imax. Each dichotomy iteration generates one artificial sample. Given one such artificial sample that we denote by xc, the corresponding treatments are the interpolation between x and \( \boldsymbol {x}^{\prime } \) to obtain xc (cost: O(d)cadd), the classification of xc (cost: cCL(d,J,Λ)), the sorting among the J discriminant function values to select {i(xc;Λ),j(xc;Λ)} (cost: \( O(J \log (J)) c_{comp} \)), and then one subtraction gi(x;Λ)(x;Λ) − gj(x;Λ)(x;Λ) (negligible cost: cadd).

The cost of searching for and generating one anchor is thus \( O\left (i_{max} (d c_{add} + c_{CL}(d, J, {{{\varLambda }}}) + J \log (J) c_{comp}) \right ) \). Then, searching for the nearest neighbor of an anchor with a KDTree costs \( O(\log (N)) \) distance computations [30], namely O(d)(cadd + cmul). The cost of Step 1 of Proposal 1 is thus as follows:

where

5.2.2 Time Costs of Step 2 in Proposal 1

We now take up the notations from Section 3.4. We assume for simplification that at the end of the TDC, each cluster contains Nmax samples. During the first step of a given TDC, the main cost of the 2-means clustering is to compute the distance between each of the N samples and the two cluster centroids. This results in 2N distance computations. There are iK iterations in 2-means clustering, so the cost of the 2-means clustering on N samples is O(2iKdN)cadd.

During the second step of a given TDC, 2-means clustering is applied to each of the two clusters that consist of N/2 samples. This corresponds to the computation of two times 2N/2 distances, namely 2N distance computations overall. More generally, we note that 2N distances are computed regardless of the dividing step in a given TDC, and thus the cost of each TDC step is always O(2iKdN)(cadd + cmul).

To obtain the total cost of a given TDC, we estimate the number of TDC steps that we denote by iT for convenience. Assuming clusters of roughly equal size within each step, iT satisfies \( N / 2^{i_{T}} = N_{max} \), and thus \( i_{T} = \left \lceil {\log (N / N_{max})}\right \rceil + 1 \). The cost of Step 2 of Proposal 1 is therefore

where

The time costs of Step 1 and Step 2 in Proposal 1 are simply added up, and we group operations by elementary costs for convenience:

6 Experiments

The goal of our experiments is three-fold. Goal (a): Assess whether Proposal 2 accurately estimates the boundary uncertainty defined by Eq. 15 even without costly traditional estimation methods such as re-sampling. Goal (b): Confirm once more that boundary uncertainty is a relevant quantity to perform classifier evaluation and classifier selection. Goal (c): Compare Proposal 2 in terms of the accuracy of classifier selection and scalability with existing widely applicable and powerful methods (HO and CV), but also with Proposal 1.

6.1 Classifiers

In this early stage of our research, we focus on the careful design and analysis of boundary uncertainty estimation and thus restrict our experiments to the selection of a single hyperparameter.

We assessed Proposal 2 in the evaluation and then selection of classifier statuses of the Gaussian kernel SVM classifier for two reasons. First, this classifier has only two hyperparameters: the Gaussian kernel width and the regularization coefficient. Second, we can easily obtain extreme cases of insufficient or excessive representation capability by controlling the values of these two hyperparameters, owing to the infinite VC capacity of Gaussian kernel SVMs and to the possibility of analytically obtaining the global minimum of training objectives of SVMs [27]. Gaussian kernel SVMs thus provide a simple way of analyzing Proposal 2 over a wide range of classifier boundary cases. We varied the hyperparameter values by powers of 2 in order to easily sweep a large range of values, while avoiding an excessively rough search (as powers of 10 may result in), e.g., 2− 15,2− 14,⋯ ,214,215.

To simplify the analysis, we preliminarily selected the regularization coefficient using CV [18]. Our experiments focused on the selection of the Gaussian kernel width. Following the notations of the SVM implementation that we used,Footnote 5 we denote by γ the inverse of the Gaussian kernel width. A higher γ corresponds to a higher capacity of the classifier to draw a more complex B(Λ). For each value of γ, we performed full SVM training, and then we evaluated the resulting classifier status with Proposal 2, HO, and CV.

Just to ensure that Proposal 2 can also perform evaluation of other classifier models, we also succinctly assessed Proposal 2 on a MultiLayer Perceptron (MLP). The goal in our experimental setting was not really to achieve state-of-the-art performance but rather to perform analysis of Proposal 2 on MLP. Therefore, we simply considered an MLP with two hidden layers of 128 and 64 units, respectively. Both layers use ReLu activation functions. The output layer uses cross entropy as the objective function that we optimized with RMSProp, using a library available online.Footnote 6 As an MLP selection experiment, we performed an early stopping experiment, namely we looked for the optimal number of training epochs inside a single instance of classifier training.

6.2 Datasets

We performed our experiments on real-world benchmark datasets available onlineFootnote 7 that are quite small and basic but that provide some diversity in terms of dimensionality, number of samples, nature of the features, and class overlap. Furthermore, we prepared three synthetic two-dimensional and two-class datasets using Gaussian Mixture Models: GMM, GMM_separable, and GMM_inclusion . We illustrate these datasets in Figs. 10, 14, and 15, respectively. For the GMM dataset, we generated a testing set from the same mother distribution as \( \mathcal {T} \) to provide more exhaustive experimental results. Owing to its larger number of available samples, we could also afford to split the Letter Recognition dataset into a training set and a testing set of equal size.

6.3 Data Preparation

On all of the datasets, we standardized independently each feature by removing the mean and then scaling to unit variance across the entire training set.Footnote 8 For the datasets that also have a testing set, we applied the same standardization to the testing set, based on the means and variances that were measured from the training set.

To obtain \(\widetilde {T} \), we applied Algorithm 1 on the standardized data, using the nearest distances ||x − x(1)|| that were measured on the standardized data.

The two hyperparameters of Proposal 2 are M and K, which appear in Sections 4.4.1 and 4.4.3, respectively. For all of the datasets, we set M to 40 because a meaningful computation of Eq. 32 seems to require at least 30-40 samples. In all of the datasets, we searched for K in the range [1,40] with a step of 2, and then we selected its value as described in the Appendix.

6.4 Accuracy of Boundary Uncertainty-Based Classifier Selection

6.4.1 Exhaustive Results on Synthetic Data

In Fig. 6, we display the SVM selection results for the GMM dataset. There are two ideal alternatives for assessing Goal (a) (for our three goals, see the beginning of Section 6). One alternative is to visualize the similarity between B∗ and B(Λ) in the multidimensional sample space; however, this is not practical. Another alternative is to use a large testing set (not to be confused with the validation sets that are used by HO and CV) as a reference truth that gives estimates as close as possible to the expected values. In this case, for each Λ that is preliminarily obtained by training the classifier on \( \mathcal {T} \), we obtain \( \hat {U}_{tr}({{{\varLambda }}}) \) by using \( \mathcal {T} \) as input of Algorithm 3, and then we separately obtain \( \hat {U}_{te}({{{\varLambda }}}) \) by using the testing set as input of Algorithm 3. If Proposal 2 is an accurate boundary uncertainty estimation method, then Proposal 2 should satisfy \( \forall {{{\varLambda }}}, \hat {U}_{tr}({{{\varLambda }}}) \approx \hat {U}_{te}({{{\varLambda }}})\).

SVM classifier selection results using Proposal 2 on the synthetic GMM dataset. Horizontal axis for Panels 1 to 3: inverse γ of the Gaussian kernel width. Panel 1: \( \hat {L}_{te}({{{\varLambda }}}) \) (red) on the left vertical axis, \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right vertical axis. Panel 2: LHO (dotted black), \( \hat {L}_{tr}({{{\varLambda }}}) \) (orange), \( \hat {L}_{val}({{{\varLambda }}}) \) (green) on the left vertical axis. Panel 3: \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue), \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right-hand axis. Following the same conventions as Panels 1 and 3, Panels 1bis and 3bis were obtained by applying Proposal 2 without using \( \widetilde {\mathcal {T}} \), namely by computing the values of the near-boundary-ness measurement on \( \mathcal {T} \).

In Panel 3 of Fig. 6, we plot \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue) and \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) against γ. We can see that the blue and black curves in Fig. 6 are nearly identical. This seems to validate the accurate estimation of the boundary uncertainty by Proposal 2 without relying on resampling methods, and thus it achieves Goal (a). We note that the boundary uncertainty estimation in Proposal 2 essentially relies on Eqs. 27 and 32. Accurate and scalable estimation of ratios for multidimensional data such as in the k NN regression usually requires advanced estimation methods [28]. In our case, accurate estimation of the ratio formed by Eqs. 27 and 28 with the accuracy shown in Fig. 6 may be explained by the local reduction of the boundary uncertainty task to the single dimension formed by the near-boundary-ness measurement. This local reduction enabled the use of analytic estimation rules such as Eq. 32, which may be sufficiently accurate on one-dimensional data by simply requiring training data.

We can assess Goal (b) by comparing the behavior of − U(Λ) with the behavior of well-established classifier evaluation metrics such as error probability. A more optimal classifier status corresponds to a lower error probability, and to a lower sign-reversed boundary uncertainty − U(Λ). We can thus expect the two evaluation metrics to mutually confirm their validity by following the same trends, and especially by hitting a minimum for the same Λ (hopefully the Bayes error status). Incidentally, we can assess Goal (a) by checking whether − U(Λ) reaches its minimum value -1 when the minimum error probability is achieved. We thus check \( -\hat {U}_{te}({{{\varLambda }}}) \) and \( \hat {L}_{te}({{{\varLambda }}}) \) against γ for the GMM dataset on Panel 1 of Fig. 7, and we see that Goal (b) is achieved.

SVM classifier selection results using Proposal 2 on the Letter Recognition dataset. Horizontal axis for Panels 1 to 3: inverse γ of the Gaussian kernel width. Panel 1: \( \hat {L}_{te}({{{\varLambda }}}) \) (red) on the left vertical axis, \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right vertical axis. Panel 2: LHO (dotted black), \( \hat {L}_{tr}({{{\varLambda }}}) \) (orange), and \( \hat {L}_{val}({{{\varLambda }}}) \) (green) on the left vertical axis. Panel 3: \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue) and \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right-hand axis.

In Panel 1, \( -\hat {U}_{te}({{{\varLambda }}}) \) actually shows a sharper minimum than \( \hat {L}_{te}({{{\varLambda }}}) \). This sharper trend can be explained by the focus of boundary uncertainty precisely on the classifier boundary: Overall similar error probabilities can correspond to quite different classifier boundaries. Boundary uncertainty-based classifier evaluation may discriminate between classifier statuses more finely than error probability, provided that the estimation method is accurate enough. This implies that even though we can expect some similar trend between \( -\hat {U}_{te}({{{\varLambda }}}) \) and \( \hat {L}_{te}({{{\varLambda }}}) \), expecting exactly the same trend between the two curves is not necessarily the goal of boundary uncertainty. We emphasize that the purpose here is not to provide exactly the same trend as \( \hat {L}_{te}({{{\varLambda }}}) \), and that \( \hat {L}_{te}({{{\varLambda }}}) \) is simply used as a default informative reference. This is the reason why we do not try to quantitatively measure the correlation between \( -\hat {U}_{te}({{{\varLambda }}}) \) and \( \hat {L}_{te}({{{\varLambda }}}) \), since this might imply that our goal is to fit \( \hat {L}_{te}({{{\varLambda }}}) \) with \( -\hat {U}_{te}({{{\varLambda }}}) \).

In practice, we may not always have access to a testing set. Instead, CV may be used to estimate the error probability. Therefore, we also assessed CV-based estimates of the classification error probability. Our experiments adopted stratified CVFootnote 9 with a high number of folds (10 to 40) to get closer to LOO, whose asymptotic unbiasedness is proven. We also tried HO, by viewing HO as a more practical competitor of Proposal 2 than CV in terms of computation cost. For HO, we applied a ratio (75%, 25%) for the split (train, validation).

We illustrate CV and HO in Panel 2 of Fig. 6: average \( \hat {L}_{tr}({{{\varLambda }}}) \) over the error probability estimated on the training folds of CV (orange); average \( \hat {L}_{val}({{{\varLambda }}}) \) over the error probability estimated on the validation folds of CV (green); error probability estimated on a holdout validation set \( \hat {L}_{\text {HO}}({{{\varLambda }}}) \) (dotted-black); error probability estimated on a large testing set \(\hat {L}_{te}({{{\varLambda }}}) \) (red); sign-reversed boundary uncertainty estimated on the training set \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue); and sign-reversed boundary uncertainty estimated on a large testing set \( -\hat {U}_{te}({{{\varLambda }}}) \) (black).

Curiously, \( \hat {L}_{\text {HO}}({{{\varLambda }}}) \) (dotted black) seems closer to \( \hat {L}_{te}({{{\varLambda }}}) \) (red) than \( \hat {L}_{val}({{{\varLambda }}}) \) (green). While \( \hat {L}_{tr}({{{\varLambda }}}) \) is a seriously biased estimate of the error probability, \( -\hat {U}_{tr}({{{\varLambda }}}) \) seems to estimate the expected boundary uncertainty quite accurately.

6.4.2 Results on Real-Life Data

For the Letter Recognition dataset, we have a testing set, so we separately display more detailed results in Fig. 7, that follows the same layout as in the upper row of Fig. 6. We note how \( -\hat {U}_{tr}({{{\varLambda }}}) \) and \( -\hat {U}_{te}({{{\varLambda }}}) \) are strikingly close in Panel 3, which seems to confirm that Proposal 2 can accurately estimate boundary uncertainty. This contrasts once more with the impossibility of estimating \( \hat {L}_{te}({{{\varLambda }}}) \) simply with \( \hat {L}_{tr}({{{\varLambda }}}) \) (orange curve in Panel 2).

We observe that the minimum value of \( \hat {L}_{val}({{{\varLambda }}}) \) on the Letter Recognition dataset is nearly zero, which implies that this dataset may be easy to classify and that performance for the Bayes risk can be achieved at this minimum value of \( \hat {L}_{val}({{{\varLambda }}}) \). The minimum of \( \hat {L}_{val}({{{\varLambda }}}) \) and \( \hat {U}_{tr}({{{\varLambda }}}) \) coincide, but the minimum value of \( -\hat {U}_{tr}({{{\varLambda }}}) \) is not -1, as it would be if the classifier executed B∗. If we assume that Proposal 2 accurately estimates boundary uncertainty (based on observations from Panel 3), then it would be reasonable to assume that a classifier status could be nearly optimal in terms of classification error probability, while boundary uncertainty may not appear quite optimal so long as B(Λ) does not even get closer to optimal B(Λ). In other words, boundary uncertainty may evaluate the generalization ability more strictly than the classification error probability.

For the other real-life datasets, we did not have access to a large testing set. In this case, the best available estimates of the classification error probability and of − U(Λ) are \( \hat {L}_{val}({{{\varLambda }}}) \) and \( -\hat {U}_{tr}({{{\varLambda }}}) \), respectively.Footnote 10 We apply the same checks as in Section 6.4.1 but by replacing \( \hat {L}_{te}({{{\varLambda }}}) \) and \( \hat {U}_{te}({{{\varLambda }}}) \) with \( \hat {L}_{val}({{{\varLambda }}}) \) and \( -\hat {U}_{tr}({{{\varLambda }}}) \), respectively. We expect similar trends and minimum values of \( \hat {L}_{val}({{{\varLambda }}}) \) and \( -\hat {U}_{tr}({{{\varLambda }}}) \) against the classifier status.

For comparison, we also consider \( -\hat {U}_{tr}({{{\varLambda }}}) \) obtained by Proposal 1 with Eq. 11. The slightly different trends of \( \hat {L}_{val}({{{\varLambda }}}) \) between Proposal 1 and Proposal 2 may be due to slightly different splittings between our former experiments of Proposal 1 [18] and the experiments in this paper. Owing to the range of values of the binary Shannon entropy in Proposal 1, we rescaled \( -\hat {U}_{tr}({{{\varLambda }}}) \) in the results of Proposal 1 by a factor \( \ln (2) \) so that the range of values becomes [0,1] as in Proposal 2. In Proposal 2, the minimum values of \( -\hat {U}_{tr}({{{\varLambda }}}) \) are closer to − 1 for each dataset, which achieves Goal (a).

Figure 8 shows that for most of the datasets, Proposal 2 matches the benchmark CV in terms of trends and minimum, which reaches Goal (b). Additionally, the trends of \( -\hat {U}_{tr}({{{\varLambda }}}) \) in Proposal 2 provide a sharper minimum than either their counterpart in Proposal 1 or \( \hat {L}_{val}({{{\varLambda }}}) \). This higher ability to sharply select an optimal classifier status achieves Goal (c).

SVM classifier selection results using Proposal 2 on real-world datasets (green boxes). From left to right, and top to bottom: Abalone, Banknote, Breast Cancer, Cardiotocography, German, Spambase, Landsat Satellite, Letter Recognition, Wine Quality Red, and Wine Quality White. For each dataset, horizontal axis: inverse γ of the Gaussian kernel width; left vertical axis: \( \hat {L}_{val}({{{\varLambda }}}) \) (green), LHO (dotted black); and right vertical axis: \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue). SVM classifier selection results using Proposal 1 on the Cardiotocography, Spambase, Wine Quality Red, and Wine Quality White datasets (red box), which we considered difficult.

Figure 9 shows the MLP selection results for the Letter Recognition dataset. The layout is the same as in Fig. 7, but in this case the horizontal axis corresponds to the training epoch. Once more, Proposal 2 seems to achieve the three goals we described in Section 6.4.1 for the GMM dataset.

MLP classifier selection results using Proposal 2 on the Letter Recognition dataset. Horizontal axis for Panels 1 to 3: index of training epochs. Panel 1: \( \hat {L}_{te}({{{\varLambda }}}) \) (red) on the left vertical axis, \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right vertical axis. Panel 2: LHO (dotted black), \( \hat {L}_{tr}({{{\varLambda }}}) \) (orange) on the left vertical axis. Panel 3: \( -\hat {U}_{tr}({{{\varLambda }}}) \) (blue), \( -\hat {U}_{te}({{{\varLambda }}}) \) (black) on the right-hand axis.

6.5 Effect of \( \widetilde {\mathcal {T}} \)

6.5.1 Illustration of Covariate Shift in Near-Boundary-Ness Measurement Space

In order to illustrate the covariate shift in the near-boundary-ness measurement space f(⋅;Λ), as well as the usefulness of \( \widetilde {\mathcal {T}}\) covered in Section 4.2.2, we selected an SVM classifier status Λ that has a rather high representation capability. We then plotted in Fig. 10 the three distributions \( f(\mathcal {T}; {{{\varLambda }}}), f(\widetilde {\mathcal {T}}; {{{\varLambda }}}), f(\mathcal {X}^{te}; {{{\varLambda }}}) \) in the sample space by representing the values of the near-boundary-ness measurement using a colormap. The plots confirm that \( f(\mathcal {T}; {{{\varLambda }}}) \) is quite different from \( f(\mathcal {X}^{te}; {{{\varLambda }}}) \), whereas \( f(\widetilde {\mathcal {T}}; {{{\varLambda }}}) \) is quite closer to \( f(\mathcal {X}^{te}; {{{\varLambda }}}) \).

Usefulness of \( \widetilde {\mathcal {T}} \). Panel 1: the GMM dataset with its given class labels represented by yellow and purple crosses, respectively. The classifier used is a Gaussian kernel SVM, whose regularization coefficient was preliminarily set. For a given Λ, the three distributions \( f(\mathcal {T}; {{{\varLambda }}}), f(\widetilde {T}; {{{\varLambda }}}), f(\mathcal {X}^{te}; {{{\varLambda }}}) \) in the sample space are represented using a colormap in Panels 2, 3, and 4, respectively. Cyan and pink colors correspond to lower and higher algebraic values of f(⋅;Λ), respectively. Negative and positive values of f(⋅;Λ) correspond to a classifier decision that assigns data to the yellow and purple classes, respectively.

6.5.2 Effect of \( \widetilde {\mathcal {T}} \) in Boundary Uncertainty Estimation