Abstract

We propose a novel practical method for finding the optimal classifier parameter status corresponding to the Bayes error (minimum classification error probability) through the evaluation of estimated class boundaries from the perspective of Bayes boundary-ness. While traditional methods approach classifier optimality from the angle of minimization of the estimated classification error probabilities, we approach it from the angle of optimality of the estimated classification boundaries. The optimal classification boundary consists solely of uncertain samples, whose posterior probability is equal for the two classes separated by the boundary. We refer to this essential characteristic of the boundary as “Bayes boundary-ness”, and use it to measure how optimal the estimated boundary is. Our proposed method achieves the optimal parameter status using the training data only once, in contrast to such traditional methods as Cross-Validation (CV), which demand separate validation data and often require a number of repetitions of training and validation. Moreover, it can be directly applied to any type of classifier, and potentially to any type of sample. In this paper, we first elaborate on our proposed method that implements the Bayes boundary-ness with an entropy-based uncertainty measure. Next, we analyze the mathematical characteristics of the uncertainty measure adopted. Finally, we evaluate the method through a systematic experimental comparison with CV-based Bayes boundary estimation, which is known to be highly reliable in the Bayes error estimation. From the analysis, we rigorously show the theoretical validity of our adopted uncertainty measure. Moreover, from the experiment, we successfully demonstrate that our method can closely approximate the CV-based Bayes boundary estimate and its corresponding classifier parameter status with only a single-shot training over the data in hand.

1 Introduction

In the statistical approach to the development of pattern classifiers, the ultimate goal of classifier training is to find the optimal classifier parameter (class model parameter) status that leads to the minimum classification error probability (also called the Bayes error). Accordingly, many classifier training methods have been investigated that pursue this goal through accurate estimation of the classification error probability (e.g. [1,2,3]). However, the exact error probability is defined over an infinite number of samples, so its accurate estimation is inherently difficult in real-world situations, where only a finite number of samples is available [4].

Simple but standard approaches to this difficulty include resampling methods such as Hold-Out (HO), Cross-Validation (CV), Leave-One-Out-CV (LOO-CV) [5], and Bootstrap [6]. HO splits a given sample set into a training subset and a validation subset, and then estimates the error probability (or classifier status) using this pair of subsets. This raises the issue of how the given samples should be split: any split inevitably reduces either the training or the validation samples, and therefore degrades the error probability estimation. In contrast, CV, LOO-CV, and Bootstrap give accurate and reliable estimates of the error probability, with a reliability that depends on the number of resampling repetitions. CV resolves the above degradation issue by producing multiple pairs of subsets with a certain splitting ratio, repeating the estimation for each pair, and then averaging the estimates over the pairs; LOO-CV increases the reliability of CV by using as many subset pairs as possible. Bootstrap produces many sample sets by applying sampling with replacement to the given set, and increases the estimation reliability by repeating the estimation on each resampled set and averaging the estimates over those sets. However, such repetitions can be prohibitively time-consuming and unsuitable for real-life training that involves large numbers of samples, large-scale classifiers, or long training times (e.g. [7]).

In contrast to the resampling methods, Structural Risk Minimization (SRM) [8] provides an upper bound on the error probability using only training data; it is also applicable to any classifier in principle. However, its application to classifier parameter status selection is not straightforward. Indeed, the derivation of the upper bound for a given classifier is usually difficult [4, 9], and the upper bound itself is known to be loose in practice [4].

Information Criteria (IC) such as the Akaike Information Criterion (AIC) [10] and the Bayes Information Criterion (BIC) [11] also require only training data. However, they often impose assumptions on classifier models that do not necessarily hold in practice [12]; moreover, they are primarily designed for modeling sample distributions. Classification tasks do not focus on accurately modeling sample distributions, but on modeling the boundaries between classes. Therefore, IC are not best suited to estimating the classification error probability or the classifier parameter status [13,14,15]. Moreover, IC can evaluate a selected number of parameters (selected model size), but they are not well suited to evaluating trained parameter statuses (parameter values).

Among the recent discriminative training methods, Minimum Classification Error (MCE) training [16, 17] pursues the minimum classification error probability in a direct manner by virtually increasing the training samples and minimizing the classification error counts. However, the effectiveness of the virtual samples in bridging the gap between a practical finite-sample situation and the ideal infinite-sample condition is not yet optimal [18].

Motivated by the above limitations of existing methods, we explored a new method for finding the optimal classifier parameter status. Our new method: 1) provides optimal (exact) values for classifier parameters instead of bounds, in contrast to SRM, 2) can be easily and directly applied to any type of classifier, in contrast to the IC-based methods, and 3) avoids prohibitively long repetitions of training/validation, in contrast to the resampling methods. To this end, we focused on the property of the optimal classifier parameter status (parameter values). The optimal parameter status corresponds to the Bayes error, and draws a class boundary on which the samples have equal posterior probabilities for the two dominant classes [2]. We call such a boundary a Bayes boundary. For every point on the Bayes boundary, classification is uncertain; for every point off it, one class has a strictly higher posterior probability than the other, so classification is less uncertain. Therefore, the higher the classification uncertainty along an estimated boundary, the higher its Bayes boundary-ness (Footnote 1). Importantly, this relation between classification uncertainty and Bayes boundary-ness holds for a practical finite number of samples as well as for an ideal infinite number of samples; in principle, it holds regardless of the number of samples. Moreover, it holds regardless of the dimensionality of (vector) samples and independently of the choice of classifier. Therefore, we hypothesize that our general concept of Bayes boundary-ness can be used to develop an effective method for finding the optimal classifier parameter status: 1) a Bayes boundary-ness score directly represents optimality in classifier parameter values, 2) measuring the Bayes boundary-ness does not assume a classifier type, and 3) in principle, the Bayes boundary-ness can be measured using a single set of training samples without training/validation repetitions.

Similarly to estimates of error probability (and its minimum, i.e., Bayes error), estimates of Bayes boundary-ness using a finite number of samples cannot completely avoid deterioration in estimation quality. However, in contrast to error probability estimation, whose deteriorated result is not straightforwardly linked to degradation in the quality of Bayes boundary estimation, a deteriorated estimate of Bayes boundary-ness directly degrades the Bayes boundary estimation. In other words, the estimation of Bayes error is inevitably affected by sample distribution and thus its quality is not linearly linked to the quality of Bayes boundary estimation, while the quality of Bayes boundary-ness estimation is itself equivalent to such quality for the Bayes boundary estimation.

Encouraged by the above considerations, we preliminarily implemented the Bayes-boundary-ness-based concept as a method adopting an entropy-based uncertainty measure, and investigated its effectiveness in the task of finding the optimal size of a multi-prototype-based classifier, i.e. the number of prototypes per class, for a small number of two-class classification problems [19]. The experimental results showed the method's fundamental utility, but they were of limited value owing to an insufficient implementation of procedures such as finding near-boundary samples (defined below) and calculating the entropy. We also applied the method, with some improvements to its implementation, to the task of optimally setting the width of a Gaussian kernel for a Support Vector Machine (SVM) [20, 21]. The experimental results [21] showed its utility more clearly than the previous experiment [19] did, but issues remained. For example, the improved implementation provided suboptimal classifier statuses for some difficult datasets; furthermore, although the concept basically worked well, it was implemented somewhat heuristically, and its theoretical validity was not sufficiently supported.

Against this background, in this paper we comprehensively introduce a new Bayes-boundary-ness-based method for selecting an optimal classifier parameter status. First, we elaborate on the concept and its implementation, in which we use entropy to measure the Bayes boundary-ness, i.e. classification uncertainty. Next, we mathematically analyze the procedure for estimating the posterior probabilities used to compute the uncertainty measure, and show its theoretical validity, namely that the bias and variance of the estimates vanish as the number of training samples increases. Finally, we demonstrate its utility in comparative experiments against the most fundamental CV-based method, using SVM classifiers on one synthetic dataset and eleven real-life datasets.

In comparative experiments, the selection of competitors is an important issue. As discussed above, only resampling methods such as CV and Bootstrap can realistically compete with our proposed method in the sense of being classification-oriented and classifier-model-free. On the other hand, especially for the SVM classifiers we adopt in our experiments, various attempts to find the optimal parameter status have been made, for example an approximation of LOO-CV-based Bayes error estimation [22], a minimum-description-length-like method for estimating the Bayes error [23], and an IC-based model of the Bayes error [24]. However, all these methods are specific to SVM and are not directly applicable to other types of classifiers. While [22] produces accurate results on small datasets, it may be excessively time-consuming and inapplicable to large datasets. Moreover, the less time-consuming methods [23, 24] generally incur larger errors in Bayes error estimation than [22] does, and are therefore not the most appropriate for estimating the Bayes boundary. Based on these previous results for SVM classifiers, and given that previous studies treated LOO-CV or CV as the most reliable method for estimating the Bayes error, we adopted a CV-based method as our competitor in the comparative experiments. Simply using a large test set to estimate the Bayes error would be the simplest competitor; however, for the real-life datasets, generating a large number of virtual samples is not necessarily appropriate because their sample distribution functions are unknown, so the CV-based method is a reasonable choice of competitor. For the synthetic dataset, we generated a large test set.
As shown in a later section, we carefully examined the necessary number of training/validation repetitions in CV to find accurate estimates of the Bayes boundary and its corresponding optimal classifier parameter status (via estimation of the Bayes error).

2 Classifier Training Problem

Given a pattern sample \( \boldsymbol {x} \in \mathcal {X}\), where \(\mathcal {X}\) is a D-dimensional pattern space, we consider the task of classifying x into one of J classes (C1,…,CJ) based on the following classification decision rule:

\[ C(\boldsymbol{x}) = C_{\hat{j}}, \qquad \hat{j} = \arg\max_{j \in [1,J]} g_{j}(\boldsymbol{x};\Lambda), \tag{1} \]

where C(⋅) is a classification operator, Λ is a set of classifier parameters, and gj(x;Λ) is a discriminant function for Cj, which represents the degree of confidence with which the classifier assigns x to class Cj; a higher value of gj(x;Λ) represents higher confidence.

The aim of classifier training is to find the parameter status Λ∗ for which {gj(x;Λ∗)}j∈[1,J] draws the Bayes boundary B∗ that corresponds to the ideal Bayes error (minimum classification error probability) condition.

At a given sample location x, one dominant discriminant function score gj(⋅) leads the classifier to assign x to class Cj. When the two highest scores are equal, the classifier cannot decide between the two corresponding classes, and we say that x lies on the estimated boundary between these classes. The concept of a boundary extends directly to three or more classes; however, equality among three or more scores at a sample location is less likely. Therefore, the rest of this paper basically assumes boundaries between two classes. We denote by \(B_{iy}(\Lambda)\) the estimated boundary that separates Cy and Ci. Clearly, \(B_{iy}(\Lambda)\) and \(B_{yi}(\Lambda)\) are interchangeable.
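The decision rule (1) and the notion of an estimated boundary can be sketched in a few lines of Python. This is a minimal illustration, assuming each discriminant function gj(x;Λ) is available as a Python callable with Λ already fixed inside it; the helper names (`classify`, `top_two`, `on_estimated_boundary`) and the tie tolerance `tol` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: g_j(x; Lambda) with Lambda baked into the callable.
Discriminant = Callable[[Sequence[float]], float]

def classify(x: Sequence[float], gs: List[Discriminant]) -> int:
    """Decision rule (1): index j of the largest discriminant score (first index on ties)."""
    scores = [g(x) for g in gs]
    return max(range(len(scores)), key=scores.__getitem__)

def top_two(x: Sequence[float], gs: List[Discriminant]) -> Tuple[int, int]:
    """Indices of the highest and second-highest discriminant scores at x."""
    scores = [g(x) for g in gs]
    order = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
    return order[0], order[1]

def on_estimated_boundary(x: Sequence[float], gs: List[Discriminant],
                          tol: float = 1e-9) -> bool:
    """True if the two highest scores tie (within tol), i.e. x lies on some B_iy(Lambda)."""
    best, second = sorted((g(x) for g in gs), reverse=True)[:2]
    return abs(best - second) <= tol

# Two linear discriminants whose estimated boundary is the line x[0] = 0.
gs = [lambda x: -x[0], lambda x: x[0]]
print(classify((1.0, 0.0), gs), on_estimated_boundary((0.0, 5.0), gs))  # 1 True
```

In practice an exact tie is rarely observed at a training sample, which is why the method below works with samples near, rather than on, the estimated boundary.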

3 Proposed Method for Two-Class Data

3.1 Outline

For simplicity of presentation, we first cover the case with only two classes, C0 and C1. In this case, we abbreviate the estimated boundary \(B_{01}(\Lambda)\) to B(Λ). For this two-class problem, we aim to find, among the estimated boundaries each produced beforehand by classifier training, the one that best approximates the Bayes boundary.

Our procedure consists of two steps: Step 1 “near-boundary sample selection” and Step 2 “uncertainty measure computation” (Algorithm 1 and Fig. 1). Assume that some classifier parameter status Λ and its corresponding class boundary B(Λ) are given from classifier training. Then, we evaluate the similarity between B(Λ) and B∗, which we denote as U(Λ) and call the uncertainty measure. Using this, we find the status of Λ that corresponds to the highest U(Λ) and provides the boundary closest to (ideally the same as) B∗.

Accurate computation of U(Λ) requires samples lying on B(Λ). However, in practical situations where only a finite number of samples is accessible, we do not necessarily have such on-boundary samples. Therefore, to compute U(Λ), we use samples close to B(Λ), which we call near-boundary samples and whose set we denote by \(\mathcal {N}_{B}({\Lambda } )\); in practice, “samples on B(Λ)” is replaced by “samples in \(\mathcal {N}_{B}({\Lambda } )\)”. If no near-boundary set is found, a default minimum classification uncertainty value of 0 is assigned to U(Λ). This case is covered later in Section 3.2.

3.2 Step 1: Selection of Near-Boundary Samples B(Λ)

Assume a set of training samples \( \mathcal {T} \) (a in Fig. 1). Then, to obtain \(\mathcal {N}_{B}({\Lambda } )\), we apply the definition of being closest to B(Λ) (Algorithm 2): first, generate anchor samples on B(Λ), denoted by xa (b in Fig. 1); next, select their nearest neighbors in \( \mathcal {T} \), denoted by NN(xa, \( \mathcal {T} \)) (c in Fig. 1); finally, add NN(xa, \( \mathcal {T} \)) to \(\mathcal {N}_{B}({\Lambda } )\) (c and d in Fig. 1).

Searching the multi-dimensional pattern space for anchors can be costly. We can reduce this search to a single dimension based on the following observation (case (A) in Fig. 2). Assume that a pair of training samples {x,x′} is assigned different estimated class labels \(\{\hat {C}_{0}, \hat {C}_{1} \}\) by a trained classifier, where \(\hat {C}_{j}\) denotes a class index estimated by the classification decision. Provided that the discriminant functions are continuous along the segment connecting x and x′, the estimated boundary B(Λ) crosses this segment at least once, because g0 − g1 changes sign between its two end points; namely, ∃α ∈ [0,1] : αx + (1 − α)x′ ∈ B(Λ), i.e. ∃α ∈ [0,1] : g0(αx + (1 − α)x′;Λ) = g1(αx + (1 − α)x′;Λ). For example, we can find α by dichotomy (bisection), which provides an interval [a,b] that is as tight as possible and satisfies sign(g0(a;Λ) − g1(a;Λ)) ≠ sign(g0(b;Λ) − g1(b;Λ)).
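The dichotomy search described above can be sketched as follows. This is a minimal Python illustration, not the authors' Algorithm 2: it assumes g0 and g1 are callables that are continuous along the segment, and the function name `find_anchor` and the tolerances `tol` and `max_iter` are hypothetical.

```python
from typing import Callable, List, Sequence

Discriminant = Callable[[Sequence[float]], float]

def find_anchor(x: Sequence[float], x_prime: Sequence[float],
                g0: Discriminant, g1: Discriminant,
                tol: float = 1e-10, max_iter: int = 200) -> List[float]:
    """Bisection for alpha in [0, 1] with g0 = g1 at alpha*x + (1 - alpha)*x'.

    Hypothetical sketch: assumes g0 - g1 is continuous on the segment and changes
    sign between its end points (x and x' carry different estimated labels).
    """
    def point(alpha: float) -> List[float]:
        return [alpha * a + (1.0 - alpha) * b for a, b in zip(x, x_prime)]

    def diff(alpha: float) -> float:
        p = point(alpha)
        return g0(p) - g1(p)

    lo, hi = 0.0, 1.0                      # alpha = 0 -> x', alpha = 1 -> x
    f_lo, f_hi = diff(lo), diff(hi)
    if f_lo * f_hi > 0:
        raise ValueError("end points must lie in different estimated classes")
    for _ in range(max_iter):
        if hi - lo < tol:
            break
        mid = 0.5 * (lo + hi)
        f_mid = diff(mid)
        if f_mid == 0.0:
            return point(mid)              # landed exactly on the boundary
        if (f_mid > 0) == (f_lo > 0):      # sign change lies in [mid, hi]
            lo, f_lo = mid, f_mid
        else:                              # sign change lies in [lo, mid]
            hi = mid
    # [lo, hi] is now a tight interval whose end points straddle B(Lambda)
    return point(0.5 * (lo + hi))

# Estimated boundary g0 = g1 is the line x[0] = 1.
g0 = lambda p: 1.0 - p[0]
g1 = lambda p: p[0] - 1.0
print(find_anchor([0.0, 0.0], [3.0, 0.0], g0, g1))  # approximately [1.0, 0.0]
```

Bisection only needs the sign of g0 − g1, so it works with any classifier that exposes discriminant scores, which matches the classifier-agnostic aim of the method.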

Fig. 2 Anchor generation based on a random pair [a,b] whose samples belong to different estimated classes \( \hat {C}_{0} \) and \( \hat {C}_{1} \), illustrating the need for a case-by-case procedure. In the two-class data case, segment ab spans only two class regions, although multiple boundaries can pass through it (case (A)). In the multi-class data case, segment ab can span multiple class regions and multiple boundaries can pass through it (case (B)); when the segment spans multiple class regions, we divide it class by class and set an anchor for every pair of adjacent classes.

The selection of near-boundary samples can be stopped once the number of near-boundary samples \(n_{anc}^{curr} \) shows no increase from its previous value \(n_{anc}^{prev}\) even after E more anchors have been created, or once a preset number \(L_M\) of random pairs has been used to create anchors. If all of the training samples have the same estimated label, no reasonable boundary exists, and we set a default minimum uncertainty measure score of 0.
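Putting the pieces of Step 1 together, the anchor loop with its stopping rule might look like the Python sketch below. It is a hypothetical two-class illustration rather than the authors' Algorithm 2: samples are tuples, the estimated label is taken as 0 when g0 ≥ g1, anchors come from random pairs with different estimated labels, and `E` and `L_M` play the roles described above with illustrative default values; all function names are our own.

```python
import math
import random
from typing import Callable, List, Sequence, Set, Tuple

Discriminant = Callable[[Sequence[float]], float]

def _anchor(x, xp, g0, g1, iters: int = 60) -> List[float]:
    """Bisection on alpha*x + (1 - alpha)*xp for a point with g0 = g1."""
    def pt(a: float) -> List[float]:
        return [a * u + (1.0 - a) * v for u, v in zip(x, xp)]

    def f(a: float) -> float:
        p = pt(a)
        return g0(p) - g1(p)

    lo, hi, f_lo = 0.0, 1.0, f(0.0)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return pt(0.5 * (lo + hi))

def select_near_boundary(samples: List[Tuple[float, ...]], g0: Discriminant,
                         g1: Discriminant, E: int = 20, L_M: int = 1000,
                         seed: int = 0) -> Set[int]:
    """Indices of near-boundary samples N_B(Lambda) (hypothetical sketch)."""
    rng = random.Random(seed)
    est = [0 if g0(x) >= g1(x) else 1 for x in samples]   # estimated labels
    idx0 = [n for n, c in enumerate(est) if c == 0]
    idx1 = [n for n, c in enumerate(est) if c == 1]
    if not idx0 or not idx1:
        return set()          # no estimated boundary: U(Lambda) defaults to 0
    near: Set[int] = set()
    stalled = 0               # anchors created since N_B(Lambda) last grew
    for _ in range(L_M):      # at most L_M random pairs
        a = _anchor(samples[rng.choice(idx0)], samples[rng.choice(idx1)], g0, g1)
        # NN(x_a, T): nearest training sample to the anchor
        nn = min(range(len(samples)), key=lambda n: math.dist(samples[n], a))
        if nn in near:
            stalled += 1
            if stalled >= E:  # no growth after E more anchors: stop
                break
        else:
            near.add(nn)
            stalled = 0
    return near
```

For instance, with g0(x) = −x[0] and g1(x) = x[0], the returned indices are those of the training samples lying closest to the line x[0] = 0.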

3.3 Step 2: Computation of Uncertainty Measure U(Λ)

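As for our uncertainty measure, we only require that it reach a maximum when the posterior probabilities are equal and that it show a lower score when the posterior probabilities are imbalanced. As one possible choice among others (e.g. Gini impurity [25]), we adopt Shannon entropy H(x) to implement the uncertainty measure U(Λ) in the remainder of this paper. The local uncertainty at near-boundary sample x is therefore

\[ H(\boldsymbol{x}) = -\sum_{j=0}^{1} P(C_{j} \mid \boldsymbol{x}) \log P(C_{j} \mid \boldsymbol{x}), \tag{2} \]

and the uncertainty measure of B(Λ) is

\[ U(\Lambda) = \frac{1}{N^{B}} \sum_{\boldsymbol{x} \in \mathcal{N}_{B}(\Lambda)} H(\boldsymbol{x}), \tag{3} \]

where \(N^{B}\) is the number of samples in \(\mathcal {N}_{B}({\Lambda } )\), i.e. the number of near-boundary samples.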

Applying Eqs. 2 and 3 directly requires estimating the posterior probabilities for each near-boundary sample in \(\mathcal {N}_{B}({\Lambda } )\). Posterior probabilities can be estimated simply and in an assumption-free way using the k-Nearest Neighbor (k NN) method. Given near-boundary sample x, the posterior probability estimate is \(\hat{P}(C_i|\boldsymbol{x}) = k_i/k\), where \(k_i\) is the number of neighbors belonging to \(C_i\) (\(\sum_{i=1}^{J} k_i = k\)). In this posterior estimation, we use only the near-boundary samples in \(\mathcal {N}_{B}({\Lambda } )\) as candidates for the k nearest neighbors. This restricted use can be seen as a filtering step that retains only the samples relevant to estimating class posteriors on B(Λ) (d in Fig. 1).
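For reference, the plain k NN route through Eqs. 2 and 3 (the simple variant that the next paragraph refines) can be sketched in Python as follows. It is a hypothetical illustration: the near-boundary samples are passed with their training labels, a sample counts among its own neighbors, and the natural logarithm is used, so the two-class maximum of H is ln 2; none of these choices is prescribed by the text.

```python
import math
from typing import List, Sequence

def knn_posterior(x: Sequence[float], candidates: List[Sequence[float]],
                  labels: List[int], k: int, num_classes: int = 2) -> List[float]:
    """P_hat(C_i | x) = k_i / k, using only the given candidates (here: N_B(Lambda))."""
    k = min(k, len(candidates))
    order = sorted(range(len(candidates)), key=lambda n: math.dist(candidates[n], x))
    counts = [0] * num_classes
    for n in order[:k]:
        counts[labels[n]] += 1
    return [c / k for c in counts]

def entropy(posteriors: Sequence[float]) -> float:
    """Eq. (2) with the convention 0 * log 0 = 0 (natural logarithm, an assumption)."""
    return -sum(p * math.log(p) for p in posteriors if p > 0)

def uncertainty_measure(near: List[Sequence[float]], labels: List[int], k: int) -> float:
    """Eq. (3): mean local entropy over the near-boundary samples; 0 if there are none."""
    if not near:
        return 0.0
    return sum(entropy(knn_posterior(x, near, labels, k)) for x in near) / len(near)

near = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
print(uncertainty_measure(near, [0, 1, 0, 1], k=4))   # ln 2 ≈ 0.6931
print(uncertainty_measure(near, [0, 0, 0, 0], k=4))   # 0.0 (no uncertainty)
```

A single global k is the weak point of this version, which motivates the density-adaptive neighborhoods described next.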

A simple idea would be to apply the k NN posterior probability estimation to each near-boundary sample in \( \mathcal {N}_{B}({\Lambda } )\) to obtain the entropy in Eq. 2 and then apply (3). However, choosing the optimal number of neighbors k for each x (\(\in \mathcal {N}_{B}({\Lambda } )\)) could itself require a parameter selection procedure. Intuitively, k should be adapted to the local sample density, since a higher density allows a larger k. To implement this adaptive selection, we adopt a procedure that first obtains adaptive neighborhoods by iteratively and hierarchically applying 2-means clustering, and then computes local uncertainty measure scores with (2) and averages them as in (3) (Algorithm 3).

A key concept of the adopted procedure is to compute the uncertainty measure at cluster centroids, each of which represents its member near-boundary samples, instead of at the near-boundary samples themselves; each cluster thus reflects the local sample density around its centroid (e and f in Fig. 1). The procedure consists of two loops: an outer loop of R iterations with different clustering initializations, and an inner loop that runs the hierarchical clustering of \(\mathcal {N}_{B}({\Lambda } )\).

At the r-th iteration of the outer loop (r ∈ [1,R]) of Algorithm 3, we first hierarchically apply 2-means clustering to the near-boundary samples in \(\mathcal {N}_{B}({\Lambda } )\) until every cluster p (\(\in \mathcal {P}^{(r)}\)) satisfies the condition that its number of members card(p) is at least \(N_m\) and at most \(N_M\) (the inner loop of Algorithm 3). Each time 2-means clustering is executed, we try M random initializations and keep the one that yields the clusters with the smallest distortion. Here, card(⋅) denotes cardinality, \(\mathcal {P}^{(r)}\) is the set of clusters produced at the r-th iteration of the outer loop, and the minimum \(N_m\) and maximum \(N_M\) numbers of cluster members are set simply to maintain reliability in the posterior probability estimation. We thus obtain multiple clusters and their corresponding centroids. Next, at the centroid of each cluster p, we compute the entropy \(-{\sum }_{j=0}^{1} P(C_{j} | p) \log P(C_{j} | p)\), i.e. the local uncertainty measure, using the card(p) cluster members as the neighbors of the k NN method. Finally, we obtain the uncertainty measure score \(\hat {U}^{(r)}({\Lambda } )\) by averaging the entropy scores over all clusters in \(\mathcal {P}^{(r)}\).

In the outer loop, we repeat the above inner loop of hierarchical clustering R times to further increase the reliability of the uncertainty measure computation; the runs differ only in the random initialization of the clustering. Averaging \(\hat {U}^{(r)}({\Lambda } )\) over r ∈ [1,R] finally yields the uncertainty measure score \(\hat {U}({\Lambda } )\), which is expected to appropriately reflect the sample density around the class boundaries.
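The two loops of Step 2 can be sketched in Python as below. This is a simplified, hypothetical rendering of Algorithm 3 for two classes with labels in {0, 1}, not the authors' code: 2-means is plain Lloyd iteration, each of the M initializations (`n_init`) picks a random first centre and the farthest point as the second (our choice), and a split that would leave a child with fewer than \(N_m\) members is simply not applied, which is our assumption about how the two size bounds interact.

```python
import math
import random
from typing import List, Sequence

def two_means(points: List[Sequence[float]], rng: random.Random,
              n_init: int = 5, iters: int = 20) -> List[int]:
    """0/1 assignment with the lowest distortion over n_init initialisations (M)."""
    best_cost, best_assign = math.inf, [0] * len(points)
    for _ in range(n_init):
        first = rng.choice(points)
        centres = [first, max(points, key=lambda p: math.dist(p, first))]
        for _ in range(iters):                               # Lloyd iterations
            assign = [0 if math.dist(p, centres[0]) <= math.dist(p, centres[1]) else 1
                      for p in points]
            for g in (0, 1):
                members = [p for p, a in zip(points, assign) if a == g]
                if members:
                    centres[g] = [sum(c) / len(members) for c in zip(*members)]
        cost = sum(math.dist(p, centres[a]) ** 2 for p, a in zip(points, assign))
        if cost < best_cost:
            best_cost, best_assign = cost, assign
    return best_assign

def split_clusters(idx: List[int], points, rng, n_min: int, n_max: int) -> List[List[int]]:
    """Inner loop: hierarchical 2-means until clusters have at most n_max members."""
    if len(idx) <= n_max:
        return [idx]
    assign = two_means([points[i] for i in idx], rng)
    left = [i for i, a in zip(idx, assign) if a == 0]
    right = [i for i, a in zip(idx, assign) if a == 1]
    if len(left) < n_min or len(right) < n_min:       # assumption: keep the parent
        return [idx]
    return (split_clusters(left, points, rng, n_min, n_max)
            + split_clusters(right, points, rng, n_min, n_max))

def cluster_uncertainty(points, labels: List[int], n_min: int = 5, n_max: int = 20,
                        R: int = 10, seed: int = 0) -> float:
    """Outer loop: U_hat(Lambda) as the mean over R runs of the mean cluster entropy."""
    if not points:
        return 0.0
    rng = random.Random(seed)
    run_scores = []
    for _ in range(R):
        clusters = split_clusters(list(range(len(points))), points, rng, n_min, n_max)
        entropies = []
        for c in clusters:
            p1 = sum(labels[i] for i in c) / len(c)        # k NN estimate of P(C_1 | p)
            entropies.append(-sum(q * math.log(q) for q in (p1, 1.0 - p1) if q > 0))
        run_scores.append(sum(entropies) / len(entropies))  # U_hat^(r)(Lambda)
    return sum(run_scores) / R

# Label-pure, well-separated groups give 0; one label-balanced group gives ln 2.
pure = [(0.0, 0.1 * i) for i in range(10)] + [(10.0, 0.1 * i) for i in range(10)]
print(cluster_uncertainty(pure, [0] * 10 + [1] * 10, n_min=5, n_max=10, R=3))
mixed = [(0.0, 0.1 * i) for i in range(20)]
print(cluster_uncertainty(mixed, [i % 2 for i in range(20)], n_min=5, n_max=40, R=3))
```

Because the cluster members serve as the neighbors of each centroid, the effective k varies with the local sample density, as intended in the text.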

4 Proposed Method for Multi-Class Data

4.1 Outline

To enforce the Bayes error status, it is necessary and sufficient to impose an estimated boundary that satisfies the same configuration as that of the Bayes boundary. To do this, at each on-boundary sample on the estimated boundary, in accordance with Section 3, we consider only the class indexes of the two highest discriminant functions and then impose them as the class indexes of the two highest posterior probabilities. More precisely, for each estimated on-boundary sample, we want the equality between the two highest discriminant functions to correspond to the equality of the two corresponding posterior probabilities.

Therefore, even in the multi-class case, we formally come back to the two-class case because locally we consider only the two highest discriminant functions (Step 1) and then the balance between the corresponding posterior probabilities (Step 2). The procedures in the multi-class case are thus either similar to or a simple combination of the procedures given in Section 3 (Algorithm 4). Although the resulting procedure superficially looks like a concatenation of the two-class procedures from the previous sections, it inherently has a multi-class formalization.

4.2 Step 1: Selection of Near-Boundary Samples \( \mathcal {N}_{B}({\Lambda } )\)

For the case of two classes C0 and C1, Algorithm 2 used the sign of g0(⋅;Λ) − g1(⋅;Λ) along random segments [x,x′] to generate anchors on the estimated boundary between Ĉ0 and Ĉ1. However, in the multi-class case, if a third estimated class Ĉ2 lies between Ĉ0 and Ĉ1, then the difference between g0(⋅;Λ) and g1(⋅;Λ) is no longer meaningful (case (B) in Fig. 2). In such a case, before applying Algorithm 2, we must first shrink the segment to a sub-segment [xa,xb] along which only two adjacent estimated classes appear, and then consider either g0(⋅;Λ) − g2(⋅;Λ) to generate anchors on B02(Λ) or g1(⋅;Λ) − g2(⋅;Λ) to generate anchors on B12(Λ).

A segment [xa,xb] is included in a region of adjacency between only two estimated classes if, for all x ∈ [xa,xb], the two highest discriminant function scores are \(g_{C(\boldsymbol {x}_a )}(\boldsymbol {x} ; {\Lambda } )\) and \(g_{C(\boldsymbol {x}_b )}(\boldsymbol {x} ; {\Lambda } )\) (this situation corresponds to case (A) in Fig. 2). In practice, we approximate this property by checking the two highest discriminant function scores only at the midpoint (xa + xb)/2 (Algorithm 5). For convenience, we call this approximated property (). So long as () is not satisfied, we halve the considered segment (case (B) in Fig. 2). Incidentally, after an anchor is produced on Bij(Λ), it is reasonable to search for its single nearest neighbor only among the near-boundary samples x ∈ Ĉi or x ∈ Ĉj.
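The halving step can be sketched as below. It is a hypothetical Python illustration of the approximated adjacency check, not the authors' Algorithm 5: the property is tested only at the midpoint, as in the text, and the rule for which half to keep (a half whose end points still receive different estimated classes) is our assumption. Discriminant functions are again Python callables; in the usage example they are hypothetical nearest-centre scores for three classes on a line.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Discriminant = Callable[[Sequence[float]], float]

def _ranked(x, gs: List[Discriminant]) -> List[int]:
    """Class indices sorted by decreasing discriminant score at x."""
    scores = [g(x) for g in gs]
    return sorted(range(len(scores)), key=scores.__getitem__, reverse=True)

def adjacent_segment(xa: Sequence[float], xb: Sequence[float], gs: List[Discriminant],
                     max_halvings: int = 30
                     ) -> Optional[Tuple[Sequence[float], Sequence[float], int, int]]:
    """Halve [xa, xb] until the two top scores at its midpoint are those of
    C(xa) and C(xb); return (xa, xb, C(xa), C(xb)), or None on failure."""
    for _ in range(max_halvings):
        i, j = _ranked(xa, gs)[0], _ranked(xb, gs)[0]
        if i == j:
            return None                       # no boundary between the end points
        mid = [(a + b) / 2.0 for a, b in zip(xa, xb)]
        ranked_mid = _ranked(mid, gs)
        if set(ranked_mid[:2]) == {i, j}:     # approximated two-class adjacency
            return xa, xb, i, j
        if ranked_mid[0] != i:
            xb = mid                          # keep [xa, mid]; its end classes differ
        else:
            xa = mid                          # C(mid) = i != j: keep [mid, xb]
    return None

# Hypothetical 1-D classifier: g_c(x) = -(x - centre_c)^2 with centres 0, 3, 1.5,
# so estimated class 2 lies between classes 0 and 1 (case (B)).
centres = [0.0, 3.0, 1.5]
gs = [lambda x, c=c: -(x[0] - c) ** 2 for c in centres]
print(adjacent_segment([0.0], [3.0], gs))     # ([0.0], [1.5], 0, 2)
```

The returned pair of class indices tells the subsequent bisection which difference, here g0 − g2, to use on the shrunken segment.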

Algorithm 5 permits the creation of anchors on specific estimated boundaries; however, its randomness leaves little control over which boundary Bij(Λ) receives an anchor. To address this potential issue, we sorted all samples in advance into a matrix A, whose element Aij contains the list of training samples for which the highest discriminant function score is gi(⋅;Λ) and the second highest is gj(⋅;Λ) (Algorithm 6). When selecting candidates for the near-boundary sample set \(\mathcal{N}^{B}_{ij}(\Lambda)\) for Ci and Cj, we used A to preferentially form anchors from sample pairs \((\boldsymbol{x}_a, \boldsymbol{x}_b) \in A_{ij} \times A_{ji}\).
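The bookkeeping matrix A and the preferential pairing can be sketched as follows. This hypothetical Python illustration stores A as a dictionary keyed by the (highest, second-highest) class pair rather than as a dense matrix; the function names and the number of pairs drawn are our own choices, not the authors' Algorithm 6.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Discriminant = Callable[[Sequence[float]], float]

def build_pair_matrix(samples: List[Sequence[float]], gs: List[Discriminant]
                      ) -> Dict[Tuple[int, int], List[int]]:
    """A[(i, j)]: indices of samples whose top score is g_i and second-highest is g_j."""
    A: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for n, x in enumerate(samples):
        scores = [g(x) for g in gs]
        first, second = sorted(range(len(gs)), key=scores.__getitem__, reverse=True)[:2]
        A[(first, second)].append(n)
    return A

def candidate_pairs(A, i: int, j: int, rng: random.Random, count: int
                    ) -> List[Tuple[int, int]]:
    """Draw (x_a, x_b) index pairs from A_ij x A_ji for anchors on B_ij(Lambda)."""
    if not A.get((i, j)) or not A.get((j, i)):
        return []                     # no samples to pair for this class pair
    return [(rng.choice(A[(i, j)]), rng.choice(A[(j, i)])) for _ in range(count)]

centres = [0.0, 3.0, 1.5]             # hypothetical nearest-centre classifier
gs = [lambda x, c=c: -(x[0] - c) ** 2 for c in centres]
samples = [[0.0], [0.7], [1.2], [2.0], [2.9]]
A = build_pair_matrix(samples, gs)
print(dict(A))   # {(0, 2): [0, 1], (2, 0): [2], (2, 1): [3], (1, 2): [4]}
print(candidate_pairs(A, 0, 2, random.Random(0), 3))
```

Here no sample has classes 0 and 1 as its top two, so no pairs are drawn for B01(Λ), reflecting that these two estimated classes are not adjacent.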

The number of anchors to be generated can be fixed as in the two-class case. The final near-boundary set \(\mathcal {N}_{B}({\Lambda } )\) is the union of the sets \(\{\mathcal{N}^{B}_{ij}(\Lambda)\}\).

4.3 Step 2: Computation of Uncertainty Measure U(Λ)

In the multi-class case, the class indexes of the two highest local discriminant function scores may differ from those of the two highest local posterior probabilities. Therefore, we need an uncertainty measure that handles this situation.

Assuming that the two highest local discriminant function scores correspond to Ĉi and Ĉj, we define uncertainty measure Hij(x) as follows. Given sample x on Bij(Λ), we first choose the two highest posterior probabilities P(C(i)|x) and P(C(ii)|x), and next we set Hij(x) in the following conditional branching manner:

- I.

If {(i),(ii)}≠{i,j}, then set Hij(x) to 0.

- II.

Otherwise, normalize P(C(i)|x) and P(C(ii)|x) so that their sum equals 1, and then apply (2) to the normalized posterior probabilities, replacing class indexes {0,1} with {(i),(ii)}. Normalization is necessary because Eq. 2 assumes P(C(i)|x) + P(C(ii)|x) = 1, which does not necessarily hold in the multi-class case.

The resulting normalized entropy is similar to the concept of entropy branching. In the above definition, the first step means that if the sample does not even lie in a region near the (true) classes Ci and Cj, then by default we set its entropy to the lowest value, 0.
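The two-branch rule for Hij(x) translates directly into code. The Python sketch below is a hypothetical illustration that takes already-estimated posteriors for all J classes as input (how they are estimated is left to Step 2); ties between posterior probabilities are broken by class index, which the text does not specify.

```python
import math
from typing import Sequence

def h_ij(posteriors: Sequence[float], i: int, j: int) -> float:
    """Multi-class local uncertainty H_ij(x) for a sample x on B_ij(Lambda) (sketch)."""
    order = sorted(range(len(posteriors)), key=posteriors.__getitem__, reverse=True)
    top1, top2 = order[0], order[1]
    if {top1, top2} != {i, j}:           # branch I: x is not near C_i and C_j
        return 0.0
    total = posteriors[top1] + posteriors[top2]
    if total == 0.0:                     # degenerate input: nothing to normalise
        return 0.0
    pair = (posteriors[top1] / total, posteriors[top2] / total)   # branch II
    return -sum(p * math.log(p) for p in pair if p > 0)         # Eq. (2) on the pair

print(h_ij([0.4, 0.4, 0.2], 0, 1))       # ln 2: balanced after normalisation
print(h_ij([0.5, 0.1, 0.4], 0, 1))       # 0.0: top-two classes are {0, 2}
```

Note that the third class's probability mass is discarded by the normalization, so a sample can score the maximum ln 2 even when its two leading posteriors are well below 0.5.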

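Then, we define a local uncertainty measure score for Bij(Λ) similarly to Eq. 3:

\[ U_{ij}(\Lambda) = \frac{1}{N^{B}_{ij}} \sum_{\boldsymbol{x} \in \mathcal{N}^{B}_{ij}(\Lambda)} H_{ij}(\boldsymbol{x}), \tag{4} \]

where \(N^{B}_{ij}\) is the number of samples in \(\mathcal{N}^{B}_{ij}(\Lambda)\). Finally, the multi-class uncertainty measure score U(Λ) is simply the mean of the local uncertainty scores Uij(Λ), each weighted by its number of near-boundary samples:

\[ U(\Lambda) = \frac{1}{S} \sum_{i,j} N^{B}_{ij}\, U_{ij}(\Lambda), \tag{5} \]

where \(S = {\sum }_{i,j} N^{B}_{ij} \). We accordingly select the classifier status Λ that provides the highest U(Λ). Here, the partitioning of each near-boundary set \(\mathcal{N}^{B}_{ij}(\Lambda)\) and the estimation of posterior probabilities are performed exactly as described in Section 3.3.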
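Eqs. 4 and 5 and the final selection step amount to a weighted average followed by an argmax. The Python sketch below is hypothetical: it assumes the Hij values have already been computed for every near-boundary sample and grouped by class pair, and the candidate status labels in the usage example (such as `"Lambda_2"`) are made-up placeholders.

```python
from typing import Dict, Hashable, List, Tuple

def multiclass_uncertainty(h_values: Dict[Tuple[int, int], List[float]]) -> float:
    """U(Lambda) per Eq. (5): U_ij (Eq. (4)) weighted by N^B_ij and divided by S."""
    S = sum(len(v) for v in h_values.values())
    if S == 0:
        return 0.0                                   # no near-boundary samples at all
    u_ij = {pair: sum(v) / len(v) for pair, v in h_values.items() if v}   # Eq. (4)
    return sum(len(h_values[pair]) * u for pair, u in u_ij.items()) / S

def select_status(scores: Dict[Hashable, float]) -> Hashable:
    """Return the candidate parameter status with the highest U(Lambda)."""
    return max(scores, key=scores.__getitem__)

u = multiclass_uncertainty({(0, 1): [0.6, 0.2], (1, 2): [0.5]})
print(round(u, 4))                                   # 0.4333, i.e. (0.6 + 0.2 + 0.5) / 3
print(select_status({"Lambda_1": 0.31, "Lambda_2": 0.55, "Lambda_3": 0.42}))  # Lambda_2
```

With the N^B_ij weights, Eq. 5 is simply the mean of Hij over all near-boundary samples, so boundaries with more near-boundary samples contribute proportionally more.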

5 Optimality Analysis of Uncertainty Measure

5.1 Overview and Preparations

As can be seen from the definition in Sections 3 and 4, the reliability of our proposal relies on the quality of the computed uncertainty measure. Now, we mathematically analyze our entropy-based procedure for computing uncertainty measure scores and show how our uncertainty-measure-based method can reliably represent Bayes boundary-ness to find the optimal classifier parameter status, even if it uses only training samples in a single-shot manner.

We consider a point x in a finite-dimensional Euclidean space; furthermore, let X1,...,XN be N samples drawn independently from a probability density function (pdf) p(⋅) (Footnote 2). Every point in the space is assumed to belong to one or more of the J classes C1,...,CJ (Footnote 3). Moreover, letting \(\mathcal {R}^{(N)}(\boldsymbol {x})\) be a small region containing x and V(N)(x) its volume, we assume the following:

- I.

The distance between any two points in \(\mathcal {R}^{(N)}(\boldsymbol {x})\) goes to 0 as N →∞.

- II.

pdf p(⋅) and class likelihoods p(⋅|Cj) (j = 1,...,J) are continuous functions.

- III.

For all N and all x, \(\mathcal {R}^{(N)}(\boldsymbol {x})\) is a bounded and closed set.

Note that we make no assumption about the shape of \(\mathcal {R}^{(N)}(\boldsymbol {x})\).

5.2 Convergence to True Value for Ratio-Based Probability Density Estimator

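First, the probability P(N)(x) that sample X is contained in \(\mathcal {R}^{(N)}(\boldsymbol {x})\) is equal to the expected value of \(1_{\mathcal { R}^{(N)}(\boldsymbol { x})}(\boldsymbol {X})\):

\[ P^{(N)}(\boldsymbol{x}) = \mathbb{E}\left[1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X})\right] = \int_{\mathcal{R}^{(N)}(\boldsymbol{x})} p(\boldsymbol{x}')\, d\boldsymbol{x}', \tag{6} \]

where 1A(⋅) denotes the indicator function, which outputs 1 if its argument belongs to A and 0 otherwise. Then, there exists a point \(\boldsymbol {x}^{(N)} \in \mathcal {R}^{(N)}(\boldsymbol {x})\) such that P(N)(x) = p(x(N)) ⋅ V(N)(x) (see Appendix 1). Furthermore, the variance of \( 1_{\mathcal { R}^{(N)}(\boldsymbol { x})}(\boldsymbol {X}) \) is

\[ \mathrm{Var}\left[1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X})\right] = P^{(N)}(\boldsymbol{x})\left(1 - P^{(N)}(\boldsymbol{x})\right). \tag{7} \]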

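For the N i.i.d. samples, we next denote the number of samples contained in \(\mathcal {R}^{(N)}(\boldsymbol {x})\) by K(N)(x). Then, K(N)(x) is represented as

\[ K^{(N)}(\boldsymbol{x}) = \sum_{n=1}^{N} 1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X}_n). \tag{8} \]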

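Because X1,...,XN are i.i.d. samples, the expectation and variance of K(N)(x) are

\[ \mathbb{E}\left[K^{(N)}(\boldsymbol{x})\right] = N P^{(N)}(\boldsymbol{x}), \tag{9} \]

\[ \mathrm{Var}\left[K^{(N)}(\boldsymbol{x})\right] = N P^{(N)}(\boldsymbol{x})\left(1 - P^{(N)}(\boldsymbol{x})\right), \tag{10} \]

and accordingly the following holds:

\[ \mathbb{E}\left[\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = \frac{P^{(N)}(\boldsymbol{x})}{V^{(N)}(\boldsymbol{x})} = p(\boldsymbol{x}^{(N)}), \tag{11} \]

\[ \mathrm{Var}\left[\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = \frac{p(\boldsymbol{x}^{(N)})\left(1 - P^{(N)}(\boldsymbol{x})\right)}{N V^{(N)}(\boldsymbol{x})}. \tag{12} \]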

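If V(N)(x) is chosen so that it satisfies V(N)(x) → 0 and NV(N)(x) → ∞ (Footnote 4), then from the continuity of p(⋅) it follows that

\[ \lim_{N\to\infty} \mathbb{E}\left[\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = p(\boldsymbol{x}), \qquad \lim_{N\to\infty} \mathrm{Var}\left[\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = 0; \tag{13} \]

furthermore, from Eqs. 11 and 12, we reach

\[ \lim_{N\to\infty} \mathbb{E}\left[\left(\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})} - p(\boldsymbol{x})\right)^{2}\right] = \lim_{N\to\infty}\left\{ \mathrm{Var}\left[\frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] + \left(p(\boldsymbol{x}^{(N)}) - p(\boldsymbol{x})\right)^{2} \right\} = 0. \tag{14} \]

Therefore, K(N)(x)/{NV(N)(x)} converges to p(x) in the mean-square (L2) sense, and hence also in probability:

\[ \frac{K^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})} \stackrel{\mathrm{P}}{\longrightarrow} p(\boldsymbol{x}) \quad (N \to \infty), \tag{15} \]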

where \(\stackrel {\mathrm {P}}{\longrightarrow }\) denotes convergence in probability.
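The convergence in Eq. 15 can be checked numerically. The Python sketch below is an illustrative experiment of our own, not taken from the paper: it draws one-dimensional standard-normal samples, uses the interval R(N)(x) = [x − h, x + h] with V(N)(x) = 2h and the hypothetical schedule h = N^(−1/5), which satisfies V(N)(x) → 0 and NV(N)(x) → ∞, and compares K(N)(x)/{NV(N)(x)} with the true density at x = 0.

```python
import math
import random

# Illustrative experiment (our own setup): 1-D N(0, 1) samples, interval regions.
def ratio_density_estimate(x: float, n: int, h: float, rng: random.Random) -> float:
    """K^(N)(x) / (N V^(N)(x)) for N(0, 1) samples and R^(N)(x) = [x - h, x + h]."""
    k = sum(1 for _ in range(n) if abs(rng.gauss(0.0, 1.0) - x) <= h)   # K^(N)(x)
    return k / (n * 2.0 * h)                                            # V^(N)(x) = 2h

true_p0 = 1.0 / math.sqrt(2.0 * math.pi)     # p(0) for the standard normal
rng = random.Random(0)
for n in (100, 10_000, 100_000):
    h = n ** -0.2                             # V -> 0 while N V -> infinity
    est = ratio_density_estimate(0.0, n, h, rng)
    print(f"N={n:>6}  h={h:.3f}  estimate={est:.4f}  |error|={abs(est - true_p0):.4f}")
```

The error typically shrinks as N grows, although individual runs fluctuate; the shrinking interval controls the bias term of Eq. 14, and the growing expected count NV(N)(x) controls its variance term.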

5.3 Convergence to True Value for Ratio-Based Joint Probability Density Estimator

The reasoning in this subsection is similar to that in the previous subsection.

The data available for training is a set of N realizations {x1,…,xn,…,xN}, where xn is a realization of Xn that is deterministically associated with class label (index) yn. On the other hand, each random variable Xn (n = 1,…,N) is probabilistically associated with multiple class labels, since different class regions can overlap in the Euclidean sample space. Therefore, we regard the class label of Xn (n = 1,…,N) as a random variable Yn (∈{1,…,J}) and discuss the sample pairs {(X1,Y1),…,(Xn,Yn),…,(XN,YN)} as follows, assuming that these sample pairs are independent.

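For one sample pair (X,Y), we denote the expectation of \(1_{\mathcal {R}^{(N)}(\boldsymbol {x})}(\boldsymbol {X}) \cdot 1_{\{j\}}(Y)\) by \(P_{j}^{(N)}(\boldsymbol {x})\). This expectation is the probability that X is included in \(\mathcal {R}^{(N)}(\boldsymbol {x})\) and labeled by Cj (see Appendix 2):

\[ P_{j}^{(N)}(\boldsymbol{x}) = \mathbb{E}\left[1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X}) \cdot 1_{\{j\}}(Y)\right] = \Pr(C_j) \int_{\mathcal{R}^{(N)}(\boldsymbol{x})} p(\boldsymbol{x}' \mid C_j)\, d\boldsymbol{x}'. \tag{16} \]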

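Moreover, because p(⋅|Cj) is continuous, there exists a point \(\boldsymbol {x}^{(N,j)} \in \mathcal {R}^{(N)}(\boldsymbol {x})\) such that \(P_{j}^{(N)}(\boldsymbol {x})\) = Pr(Cj)p(x(N,j)|Cj) ⋅ V(N)(x) (see Appendix 1). The variance of \(1_{\mathcal {R}^{(N)}(\boldsymbol {x})}(\boldsymbol {X}) \cdot 1_{\{j\}}(Y)\) can be written as

\[ \mathrm{Var}\left[1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X}) \cdot 1_{\{j\}}(Y)\right] = P_{j}^{(N)}(\boldsymbol{x})\left(1 - P_{j}^{(N)}(\boldsymbol{x})\right). \tag{17} \]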

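For the N i.i.d. samples, we denote by \(K_{j}^{(N)}(\boldsymbol {x})\) the number of samples included in \(\mathcal {R}^{(N)}(\boldsymbol {x})\) and labeled by Cj. Then, \(K_{j}^{(N)}(\boldsymbol {x})\) is represented as

\[ K_{j}^{(N)}(\boldsymbol{x}) = \sum_{n=1}^{N} 1_{\mathcal{R}^{(N)}(\boldsymbol{x})}(\boldsymbol{X}_n) \cdot 1_{\{j\}}(Y_n). \tag{18} \]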

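Moreover, because (X1,Y1),…,(XN,YN) are i.i.d., the expectation and variance of \(K_{j}^{(N)}(\boldsymbol {x})\) are

\[ \mathbb{E}\left[K_{j}^{(N)}(\boldsymbol{x})\right] = N P_{j}^{(N)}(\boldsymbol{x}), \tag{19} \]

\[ \mathrm{Var}\left[K_{j}^{(N)}(\boldsymbol{x})\right] = N P_{j}^{(N)}(\boldsymbol{x})\left(1 - P_{j}^{(N)}(\boldsymbol{x})\right), \tag{20} \]

and it follows that

\[ \mathbb{E}\left[\frac{K_{j}^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = \Pr(C_j)\, p(\boldsymbol{x}^{(N,j)} \mid C_j), \tag{21} \]

\[ \mathrm{Var}\left[\frac{K_{j}^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})}\right] = \frac{\Pr(C_j)\, p(\boldsymbol{x}^{(N,j)} \mid C_j)\left(1 - P_{j}^{(N)}(\boldsymbol{x})\right)}{N V^{(N)}(\boldsymbol{x})}. \tag{22} \]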

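Here, if we set V(N)(x) as in the derivation of (13), we reach the following, since p(⋅|Cj) is a continuous function:

\[ \lim_{N\to\infty} \mathbb{E}\left[\left(\frac{K_{j}^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})} - \Pr(C_j)\, p(\boldsymbol{x} \mid C_j)\right)^{2}\right] = 0. \tag{23} \]

Accordingly, \(K_{j}^{(N)}(\boldsymbol {x}) / \{ N V^{(N)}(\boldsymbol {x}) \}\) converges to Pr(Cj)p(x|Cj) in the mean-square sense, and hence also in probability:

\[ \frac{K_{j}^{(N)}(\boldsymbol{x})}{N V^{(N)}(\boldsymbol{x})} \stackrel{\mathrm{P}}{\longrightarrow} \Pr(C_j)\, p(\boldsymbol{x} \mid C_j) \quad (N \to \infty). \tag{24} \]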

5.4 Probabilistic Convergence to True Value for Ratio-Based Posterior Probability Estimator

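In the previous subsections, we found that the simple ratio-based estimators of the probability density and of the joint probability density converge in probability to their respective true values (see Eqs. 15 and 24). From these results, and because convergence in probability is preserved under the four arithmetic operations (for division, provided that the limit of the denominator is nonzero), we finally obtain, for p(x) > 0,

\[ \frac{K_{j}^{(N)}(\boldsymbol {x})}{K^{(N)}(\boldsymbol {x})} = \frac{K_{j}^{(N)}(\boldsymbol {x}) / \{N V^{(N)}(\boldsymbol {x})\}}{K^{(N)}(\boldsymbol {x}) / \{N V^{(N)}(\boldsymbol {x})\}} \xrightarrow{\;\mathrm{p}\;} \frac{\Pr(C_{j})\, p(\boldsymbol {x}|C_{j})}{p(\boldsymbol {x})} = P(C_{j}|\boldsymbol {x}) \quad (N \to \infty). \]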

Because the above convergences in Eqs. 13 through 25 assume that V(N)(x) → 0 (N →∞), the following should be satisfied for K(N)(x), \(K_{j}^{(N)}(\boldsymbol {x})\) and N:

- I.

If p(x),p(x|Cj) > 0, then \(\displaystyle { K^{(N)}(\boldsymbol {x}), K_{j}^{(N)}(\boldsymbol {x}) \to \infty ~~ (N \to \infty ) }\).

- II.

\(\displaystyle { K^{(N)}(\boldsymbol {x})/N, K_{j}^{(N)}(\boldsymbol {x})/N \to 0 ~~ (N \to \infty ) }\).

The first condition is necessary because, if K(N)(x) and \(K_{j}^{(N)}(\boldsymbol {x})\) did not go to infinity as N → ∞, they would tend to 0 for sufficiently small values of V(N)(x). The second condition ensures that K(N)(x)/{NV(N)(x)} and \(K_{j}^{(N)}(\boldsymbol {x}) / \{ N V^{(N)}(\boldsymbol {x}) \}\) do not diverge as V(N)(x) → 0, even when the first condition is satisfied.
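The convergence results of Sections 5.3 and 5.4 can be checked numerically. The following Monte Carlo sketch is only an illustration under our own assumptions: a one-dimensional two-class model with Pr(C1) = 0.3, Pr(C2) = 0.7, and unit-variance Gaussian class-conditional densities centered at 0 and 2; an interval region of length \(V^{(N)} = N^{-1/3}\) centered at x0 = 1 (the schedule V/N^α from the Notes with V = 1 and α = 1/3); and the helper name `ratio_estimates`, which is hypothetical. Under this schedule, K grows roughly like N^{2/3} while K/N shrinks like N^{-1/3}, so conditions I and II both hold. At x0 = 1 the two class densities are equal, so the true posterior P(C1|x0) equals the prior 0.3.

```python
import math
import random

# Toy 1-D, two-class model (an assumption for illustration, not the paper's data):
# Pr(C_1) = 0.3, Pr(C_2) = 0.7, p(x|C_j) = N(MEAN[j], 1).
PRIOR = {1: 0.3, 2: 0.7}
MEAN = {1: 0.0, 2: 2.0}


def normal_pdf(x, mu):
    """Unit-variance Gaussian density."""
    return math.exp(-0.5 * (x - mu) ** 2) / math.sqrt(2.0 * math.pi)


def ratio_estimates(n, x0, alpha=1 / 3, v0=1.0, seed=0):
    """Return (K_1/(N V), K_1/K) for N = n samples and R = [x0 - V/2, x0 + V/2]."""
    rng = random.Random(seed)
    v = v0 / n ** alpha  # V^(N) = V / N^alpha, 0 < alpha < 1: V -> 0 and N V -> inf
    k = {1: 0, 2: 0}     # K_j: number of samples in R labelled C_j
    for _ in range(n):
        j = 1 if rng.random() < PRIOR[1] else 2
        x = rng.gauss(MEAN[j], 1.0)
        if abs(x - x0) <= v / 2:
            k[j] += 1
    k_total = k[1] + k[2]   # K: all samples in R
    joint = k[1] / (n * v)  # estimates Pr(C_1) p(x0|C_1)
    posterior = k[1] / k_total if k_total else float("nan")  # estimates P(C_1|x0)
    return joint, posterior


x0 = 1.0
true_joint = PRIOR[1] * normal_pdf(x0, MEAN[1])
true_post = true_joint / sum(PRIOR[j] * normal_pdf(x0, MEAN[j]) for j in PRIOR)

for n in (10**3, 10**4, 10**5, 4 * 10**5):
    joint, post = ratio_estimates(n, x0)
    print(f"N={n:>6}  K1/(NV)={joint:.4f} (true {true_joint:.4f})  "
          f"K1/K={post:.3f} (true {true_post:.3f})")
```

As N grows, both printed estimates should settle near their true values, with fluctuations shrinking roughly like \(1/\sqrt{N V^{(N)}}\).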

5.5 Practical Advantages Supported by Optimality in Ratio-Based Posterior Probability Estimation

Beyond the convergence to the true posterior probability in Eq. 25, this formalization clearly expresses several practical advantages of our kNN-based posterior probability estimation using only near-boundary samples, which are useful in the real-life finite-sample regime. Assuming that point x is sufficiently close to the estimated boundary B(Λ), we summarize them as follows:

- I.

We should decrease region \(\mathcal {R}^{(N)}(\boldsymbol {x})\) to reduce the bias in the expectation of \(K_{j}^{(N)}(\boldsymbol {x}) / K^{(N)}(\boldsymbol {x})\), so that \(p(\boldsymbol {x}^{(N)})\) and \(p(\boldsymbol {x}^{(N,j)}|C_{j})\) become closer to p(x) in Eq. 11 and p(x|Cj) in Eq. 21, respectively.

- II.

By increasing \(\mathcal {R}^{(N)}(\boldsymbol {x})\), we can generally reduce the variance of the kNN-based posterior probability estimate \(K_{j}^{(N)}(\boldsymbol {x}) / K^{(N)}(\boldsymbol {x})\). This result can also be derived more rigorously using the perturbation technique [2].

- III.

When the estimated boundary B(Λ) is close to the Bayes boundary, we can reduce both the bias and the variance of the posterior probability estimate (based on Eqs. 11, 12, 21, and 22) by restricting region \(\mathcal {R}^{(N)}(\boldsymbol {x})\) to the near-boundary samples in \(\mathcal {N}_{B} ({\Lambda } )\) and increasing its size (in other words, increasing the number of near-boundary samples used for kNN-based posterior probability estimation). Here, all individual posterior probabilities P(Cj|x) will be close to 0.5. Moreover, because P(1 − P) ≤ 1/4 for any probability P, the numerators in Eqs. 12 and 22 are bounded by N/4; therefore, the corresponding variances are bounded by \(1/[4N\{V^{(N)}(\boldsymbol {x})\}^{2}]\) and tend to 0 as \(V^{(N)}(\boldsymbol {x})\) grows larger. This valuable property enables our method to use large regions (clusters) for posterior probability estimation in Algorithm 3 and leads to accurate and reliable performance, even though it adopts a simple kNN-based estimation.

- IV.

If samples outside \(\mathcal {N}_{B} ({\Lambda } )\) are allowed into region \(\mathcal {R}^{(N)}(\boldsymbol {x})\) as its size increases, we clearly face a dilemma between bias and variance: increasing \(\mathcal {R}^{(N)}(\boldsymbol {x})\) decreases the variance but increases the bias, while decreasing \(\mathcal {R}^{(N)}(\boldsymbol {x})\) increases the variance but decreases the bias. This phenomenon, which is generally observed in regular kNN-based posterior probability estimation, further supports using only near-boundary samples for posterior probability estimation.

- V.

All of the estimators’ properties, such as convergence to the true value, hold regardless of the shape of region \(\mathcal {R}^{(N)}(\boldsymbol {x})\).

Usually, kNN-based posterior probability estimation controls the size and shape of the region whose samples are used for estimation, and it basically cannot avoid the tradeoff between bias and variance. In contrast, our kNN-based method can reduce both bias and variance by using, for the estimation, only the near-boundary samples in a small region that can be extended along the estimated boundary.
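Item III can be illustrated with a toy Monte Carlo experiment. The sketch below assumes two isotropic Gaussian classes with equal priors (our own choice), whose Bayes boundary is the line x1 = 0, and compares the ratio estimate K1/K at the boundary point (0, 0) computed from a small disk with the estimate computed from a thin strip stretched along the boundary. Because the posterior stays at 0.5 everywhere on this boundary, enlarging the region along the boundary should add almost no bias while collecting more samples, so the strip estimate should stay centered on 0.5 with a clearly smaller variance. The region shapes and sizes are arbitrary illustrative choices, not the clusters produced by Algorithm 3.

```python
import random
import statistics

# Toy model (assumption): equal priors, C1 ~ N((-1, 0), I), C2 ~ N((1, 0), I).
# The Bayes boundary is the line x1 = 0, where P(C1|x) = 0.5 for every x2.


def draw(n, rng):
    """Draw n labelled 2-D samples as (x1, x2, label) tuples."""
    samples = []
    for _ in range(n):
        label = 1 if rng.random() < 0.5 else 2
        mu = -1.0 if label == 1 else 1.0
        samples.append((rng.gauss(mu, 1.0), rng.gauss(0.0, 1.0), label))
    return samples


def in_disk(x1, x2):
    """Small isotropic region around the boundary point (0, 0)."""
    return x1 * x1 + x2 * x2 <= 0.2 ** 2


def in_strip(x1, x2):
    """Thin region stretched along the boundary x1 = 0."""
    return abs(x1) <= 0.1 and abs(x2) <= 2.0


def posterior_estimate(samples, in_region):
    """Ratio estimate K_1 / K over the samples falling in the region (None if K = 0)."""
    labels = [c for x1, x2, c in samples if in_region(x1, x2)]
    return labels.count(1) / len(labels) if labels else None


rng = random.Random(0)
estimates = {"disk": [], "strip": []}
for _ in range(200):  # 200 independent training sets of 2000 samples each
    data = draw(2000, rng)
    for name, region in (("disk", in_disk), ("strip", in_strip)):
        p = posterior_estimate(data, region)
        if p is not None:
            estimates[name].append(p)

for name, values in estimates.items():
    print(f"{name:5s} mean={statistics.mean(values):.3f} "
          f"var={statistics.variance(values):.5f} runs={len(values)}")
```

With these settings the disk holds roughly a quarter as many samples as the strip, so its variance should be several times larger, while both means stay near 0.5.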

6 Experimental Evaluation

6.1 Datasets

We conducted evaluations on fixed-dimensional vector pattern datasets from the UCI Machine Learning Repository (Footnote 5). For the Abalone, Wine Quality Red, and Wine Quality White datasets, we used custom versions in which the original categories were grouped into three categories because some classes were represented by very few samples. For the Wine Quality White dataset, we used a randomly sampled subset of the original dataset. For analysis purposes, we also prepared a two-dimensional, two-class synthetic vector pattern dataset called GMM, which models each class as a mixture of two Gaussian components and contains 1100 samples. We summarize these datasets in Table 1, where N refers to the number of available samples, D to the dimensionality, and J to the number of classes.

For all datasets, we standardized the sample vectors element by element, i.e., for each vector element (feature), we subtracted its mean and scaled it to unit variance.
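A minimal sketch of this element-wise standardization, using only the Python standard library. Whether the original experiments divided by the population or the sample standard deviation is not stated; the sketch assumes the population version and maps constant features to 0 to avoid division by zero. The function name `standardize` is ours.

```python
import statistics


def standardize(rows):
    """Column-wise z-scoring: subtract each feature's mean, divide by its standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]  # population std (assumption)
    return [
        [(v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, means, stds)]
        for row in rows
    ]


# Each row is one sample vector; each column is one vector element (feature).
X = [[1.0, 200.0], [2.0, 220.0], [3.0, 260.0], [4.0, 240.0]]
Z = standardize(X)
```

After the transformation, every column of `Z` has mean 0 and unit (population) variance, so features measured on very different scales contribute comparably to the Gaussian-kernel distances used later.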

6.2 Classifier

As the classifier for evaluation, we chose an SVM [27] with a Gaussian kernel, whose implementation is available online (Footnote 6). In this case, Λ consists of a set of kernel weights optimized during training, regularization parameter C, and Gaussian kernel width γ. To simplify the analysis, we fixed C beforehand in a separate, preliminary CV-based experiment and then focused on the optimal setting (status selection) of the single parameter γ.

For multi-class data, we used a one-versus-all multi-class SVM. Strictly speaking, the one-versus-all formalization of the multi-class problem differs from the multi-class formalization described in Section 2. However, as far as we understand, the SVM implementation that we used draws boundaries such that the region near an estimated boundary \(B_{ij}(\Lambda)\) is characterized by \(g_{i}(\cdot;\Lambda)\) and \(g_{j}(\cdot;\Lambda)\) being the two highest discriminant function scores. This is sufficient for Step 1, described in Section 4.2, to be applicable.
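The condition above can be made concrete with a small helper that, for one sample, finds the pair (i, j) of classes with the two highest one-versus-all scores and the gap between them. This is only an illustration: the function names and the threshold `eps` are hypothetical, and the paper's actual near-boundary selection (Step 1, Algorithm 2) is defined in Section 4.2, not here.

```python
def top_two_classes(scores):
    """Return (i, j, margin): g_i and g_j are the two highest scores, margin = g_i - g_j."""
    if len(scores) < 2:
        raise ValueError("need scores for at least two classes")
    order = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    i, j = order[0], order[1]
    return i, j, scores[i] - scores[j]


def is_near_boundary(scores, eps):
    """Hypothetical criterion: the sample lies near B_ij when g_i and g_j are nearly tied."""
    return top_two_classes(scores)[2] < eps


# One-versus-all scores g_0(x), g_1(x), g_2(x) for a single sample (made-up values).
i, j, margin = top_two_classes([0.1, 0.9, 0.7])
```

Here the sample would be associated with the boundary between classes 1 and 2, whatever the score of class 0, which is the property the one-versus-all implementation needs for Step 1 to apply.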

6.3 Evaluation Procedure

To evaluate the effectiveness of our uncertainty-measure-based boundary evaluation method, we need the Bayes boundary of each adopted dataset as a reference (true target). However, the Bayes boundary is rarely known for real-life data, so we used our comparison competitor, the CV-based method, to compensate for this lack of information. To obtain accurate estimates of the Bayes boundary, we applied an SVM classifier to each adopted dataset in the CV manner and, for every setting of hyperparameter γ, computed two kinds of classification error probability estimates: the estimate averaged over the CV’s training sample folds (Ltr) and the estimate averaged over the CV’s validation (testing) sample folds (Lval). We used the values of Lval as nearly true targets to be compared with our uncertainty measure values; that is, we treated the minimum of Lval as a nearly true Bayes error value and its corresponding boundary estimate as a nearly true Bayes boundary.

For this purpose, we applied five-fold CV to each of the adopted datasets, except for Breast Cancer, Ionosphere, and Sonar, to which we applied leave-one-out CV (LOO-CV) because relatively few samples were available. In both the CV and LOO-CV procedures, we divided all of the samples of each dataset into training folds and validation folds. Moreover, to check the reliability of the targets, we tested several settings of the number of folds in a preliminary experiment and selected the five-fold setting, which provided sufficient reliability.
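The CV-based targets can be sketched as follows. To keep the example self-contained, it replaces the Gaussian-kernel SVM with a much simpler Gaussian-kernel (Parzen-window) classifier controlled by the same kind of parameter γ; this stand-in, the toy data, and the γ grid are our assumptions, not the paper's setup. For each γ, the sketch averages the training error (Ltr) and the validation error (Lval) over five folds and takes the γ that minimizes Lval; setting `folds=len(data)` turns it into LOO-CV.

```python
import math
import random


def parzen_predict(train, x, gamma):
    """Stand-in classifier (not an SVM): class with the largest sum of Gaussian-kernel values."""
    scores = {}
    for xn, yn in train:
        d2 = sum((a - b) ** 2 for a, b in zip(x, xn))
        scores[yn] = scores.get(yn, 0.0) + math.exp(-gamma * d2)
    return max(scores, key=scores.get)


def error_rate(train, test, gamma):
    """Fraction of misclassified samples in `test`."""
    return sum(parzen_predict(train, x, gamma) != y for x, y in test) / len(test)


def cv_curves(data, gammas, folds=5, seed=0):
    """Fold-averaged training error (L_tr) and validation error (L_val) for each gamma."""
    idx = list(range(len(data)))
    random.Random(seed).shuffle(idx)
    parts = [idx[f::folds] for f in range(folds)]
    l_tr, l_val = [], []
    for gamma in gammas:
        tr = va = 0.0
        for part in parts:
            held_out = set(part)
            train = [data[i] for i in idx if i not in held_out]
            val = [data[i] for i in part]
            tr += error_rate(train, train, gamma)  # error on the training folds
            va += error_rate(train, val, gamma)    # error on the validation fold
        l_tr.append(tr / folds)
        l_val.append(va / folds)
    return l_tr, l_val


# Toy two-class 2-D data (assumption): unit Gaussians centred at (0, 0) and (1.5, 0).
rng = random.Random(1)
data = [((rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)), 0) for _ in range(100)]
data += [((rng.gauss(1.5, 1.0), rng.gauss(0.0, 1.0)), 1) for _ in range(100)]

gammas = [2.0 ** e for e in range(-6, 15, 4)]  # 2^-6, 2^-2, ..., 2^14
l_tr, l_val = cv_curves(data, gammas)
best_gamma = gammas[l_val.index(min(l_val))]   # minimum of L_val: nearly true Bayes error
for g, a, b in zip(gammas, l_tr, l_val):
    print(f"gamma=2^{int(math.log2(g)):>3}  L_tr={a:.3f}  L_val={b:.3f}")
```

At very large γ the stand-in classifier essentially memorizes its training folds, so Ltr drops toward 0 while Lval does not, which reproduces in miniature the widening Ltr/Lval gap discussed in Section 6.5.2.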

To compute the uncertainty measure, unlike the CV-based method, we used all of the samples of each dataset (\(\mathcal {T} = \) all of the given samples). The computed uncertainty measure U(Λ) is high when the estimated boundary is close to the Bayes boundary, whereas the target value Lval is low in that case. For convenience of discussion, we therefore use the sign-reversed measure −U(Λ) and analyze the similarity between −U(Λ) and Lval in the following sections.

It is known that the quality of posterior probability estimation can quickly degrade when the samples are imbalanced across classes, possibly owing to biased sampling from the underlying (conceptually infinite) population or to imbalance in the prior probabilities [26]. Traditional solutions to the presence of an under-represented minority class modify the classifier objective or resample the minority-class samples to force class balance [26]. Because our method evaluates classification uncertainty in a non-intrusive way, without changing the samples or the classification process, we instead take the class imbalance into account superficially during posterior probability estimation. More precisely, we replace the estimated posterior probabilities P̂(Ci|x) with P̂(Ci|x)/P̂(Ci) in Eq. 2. This raises the estimated posterior probabilities of classes with low prior probabilities and lowers those of classes with high prior probabilities. The following sections use this simple correction.
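A minimal sketch of this correction, assuming the per-class posterior estimates at a point and the estimated priors (e.g., class frequencies in the training data) are available as lists. The renormalization step is our assumption, included so that the corrected values can still be read as a distribution; the text only specifies the division by P̂(Ci), and Eq. 2 itself is defined earlier in the paper and is not reproduced here. The function name is hypothetical.

```python
def prior_corrected(posteriors, priors, renormalize=True):
    """Replace each estimate P(C_i|x) by P(C_i|x) / P(C_i).

    Renormalizing so that the values sum to 1 is an assumption of this sketch.
    """
    ratios = [p / q for p, q in zip(posteriors, priors)]
    if not renormalize:
        return ratios
    total = sum(ratios)
    return [r / total for r in ratios]


# Priors estimated from class counts, e.g., 176 vs 1,655 samples (Cardiotocography, Sec. 6.5.3).
priors = [176 / 1831, 1655 / 1831]
local = [0.2, 0.8]  # hypothetical local estimate, e.g., 2 of 10 neighbours belong to C0
corrected = prior_corrected(local, priors)
```

With these numbers the minority class moves from 0.2 to about 0.70, illustrating how strongly the correction reweights near-boundary estimates when the priors are very unequal; with equal priors the estimates are left unchanged.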

6.4 Hyperparameters

Our proposed method has several hyperparameters that control its process. However, these hyperparameters do not need dataset-by-dataset tuning, and the results are relatively insensitive to their settings.

In Algorithm 2, we simply set the maximum number of dichotomy loops to 30 and the maximum number of repetitions lM for generating anchors to a high value such as 10,000.

In Algorithm 3, Nm and NM control the granularity of the clusters, each of which should ideally have as small a volume as possible yet contain enough samples to perform a reliable entropy (or posterior probability) estimation. Computing the entropy from clusters containing only two or three samples is obviously rough, so we imposed clusters that would contain 10 or fewer samples by simply setting Nm = 8 and NM = 12. Moreover, the number of initializations M for each hierarchical clustering and the number R of clustering repetitions should be greater than 1 to increase the reliability of the procedure; accordingly, we set both to 10.
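The roles of Nm, NM, and M can be illustrated with a hypothetical size-bounded bisection. Algorithm 3 itself is specified earlier in the paper and may well differ; in particular, the rule used below (split any cluster with more than NM samples using the best of M random 2-means runs, and reject a split that would leave fewer than Nm samples on either side) is our assumption, chosen only to show how the two bounds trade cluster volume against sample count. Repeating the whole partition with R different seeds would play the role of the repetition count R.

```python
import math
import random


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))


def sse(points):
    """Within-cluster sum of squared distances to the centroid (0 for an empty cluster)."""
    if not points:
        return 0.0
    m = centroid(points)
    return sum(math.dist(p, m) ** 2 for p in points)


def two_means(points, rng, iters=20):
    """One run of 2-means (Lloyd's algorithm) started from two random seed points."""
    c0, c1 = rng.sample(points, 2)
    a, b = points, []
    for _ in range(iters):
        a = [p for p in points if math.dist(p, c0) <= math.dist(p, c1)]
        b = [p for p in points if math.dist(p, c0) > math.dist(p, c1)]
        if not a or not b:
            break
        c0, c1 = centroid(a), centroid(b)
    return a, b


def partition(points, n_min=8, n_max=12, restarts=10, seed=0):
    """Hypothetical size-bounded bisection (n_min ~ Nm, n_max ~ NM, restarts ~ M)."""
    rng = random.Random(seed)
    done, todo = [], [list(points)]
    while todo:
        cluster = todo.pop()
        if len(cluster) <= n_max:
            done.append(cluster)
            continue
        a, b = min((two_means(cluster, rng) for _ in range(restarts)),
                   key=lambda ab: sse(ab[0]) + sse(ab[1]))
        if len(a) >= n_min and len(b) >= n_min:
            todo.extend([a, b])
        else:
            done.append(cluster)  # the split would create a too-small cluster: keep as is
    return done


rng = random.Random(3)
pts = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(200)]
clusters = partition(pts)
print(sorted(len(c) for c in clusters))
```

Raising `n_min` trades smaller cluster volumes for more samples per cluster, which is the tension the text describes between rough entropy estimates and large cluster volumes.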

6.5 Results and Discussions

6.5.1 Selection of \(\mathcal {N}_{B}({\Lambda } )\)

We first show the results obtained by Algorithm 2 with five different settings of γ on the GMM dataset (Fig. 3). A higher γ corresponds to a more complex boundary, and a lower γ to a simpler one. The results clearly show that the near-boundary samples were accurately selected along each estimated boundary. For excessively low values such as γ = \(2^{-35}\) and \(2^{-22}\), the estimated boundaries were too simple and vague, and the selected near-boundary samples were allocated along straight lines or in bands. For excessively high values such as γ = \(2^{-1}\) and \(2^{14}\), the estimated boundaries were very complex, and the selected near-boundary samples were scattered. For γ = \(2^{-9}\), which was not necessarily optimal but was a largely appropriate choice, the estimated boundary and its corresponding near-boundary samples almost exactly trace the true Bayes boundary.

Fig. 3 Results obtained by our near-boundary sample selection step (Algorithm 2) with five different settings of γ on the two-class GMM dataset. From left to right and top to bottom: near-boundary samples (blue crosses) and corresponding training samples with estimated class labels (gray or green) for γ = \(2^{-35}, 2^{-22}, 2^{-9}, 2^{-1}, 2^{14}\). Training samples with original class labels (gray and red) are shown in the bottom-right corner.

6.5.2 Overview of Results on Classifier Status Selection

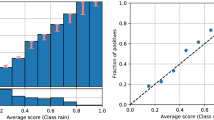

In Fig. 4, for each adopted dataset, we show Ltr (yellow curve), Lval (green curve), and our proposed uncertainty measure score −U(Λ) (blue curve). Note that we obtained the blue curve of −U(Λ) using all of the samples of each dataset for training. In all of the panels of this figure, the horizontal axis corresponds to the value of hyperparameter γ (kernel width) and thus to the corresponding classifier parameter status (the values of the SVM’s kernel weights): for every value of γ, we trained the SVM classifier and obtained a set of trained kernel weights. Because we assume that Lval is an accurate estimate of the error probability, its minimum approximates the Bayes error, and the corresponding boundary estimate, with its kernel width γ and kernel weights, approximates the Bayes boundary and the optimal status. Therefore, the desired result is that the blue curve of −U(Λ) reaches its minimum at the same value of γ as the green curve of Lval.

Fig. 4 Classifier parameter status selection results for the synthetic GMM dataset and 11 real-life datasets. First set of panels, from top to bottom: GMM, Abalone, Breast Cancer, Cardiotocography, Ionosphere, and Landsat Satellite. Second set of panels, from top to bottom: Letter Recognition, MNIST (test), Sonar, Spambase, Wine Quality Red, and Wine Quality White. Each panel shows the error probability curve estimated on the CV’s training sample folds (Ltr, yellow), the error probability curve estimated on the CV’s validation sample folds (Lval, green), and our uncertainty measure curve (−U, blue). In the top GMM panel, the red curve (Lval2) represents the error probability estimated over the 20,000 independent testing samples. The horizontal axis indicates the value of γ.

For all datasets, the yellow curve of Ltr decreases as γ increases. For low boundary complexity (low γ), the yellow curve of Ltr is close to the green curve of Lval, but the gap between the two curves widens as the classifier draws more complex boundaries (higher γ). This gap clearly illustrates overfitting (overlearning), i.e., the training error underestimating the Bayes error.

In particular, for the GMM dataset, we additionally generated 20,000 independent samples from the same Gaussian mixture model used for the original 1100 samples to approximate the true error probabilities, which would ideally be computed over an infinite number of samples, and show the results as the red curve (Lval2 in Fig. 4). Interestingly, the green CV-based curve and the red large-sample curve closely fit each other. This agreement shows that the CV-based method accurately approximated the true error probabilities and reliably served as the competitor (target) in our comparison.

For all datasets, the blue curve of our uncertainty measure −U almost always shows the same trend as the green curve of the CV-based error probability estimates; in particular, the minimum of the blue curve lies among the lowest points of the green curve, which should correspond to the optimal classifier parameter status. As the values of Lval show, classification of the Abalone, Landsat Satellite, Wine Quality Red, and Wine Quality White datasets was rather difficult: for these datasets, the Bayes error values estimated by the CV-based method were higher than 0.3. Even for these difficult datasets, our blue curve closely followed the green curve. Taken together, these comparison results indicate the basic utility of our uncertainty-measure-based method for selecting the optimal classifier parameter status.
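The comparison criterion used in this subsection can be stated as a small check. The sketch below assumes both curves are sampled on the same γ grid and interprets "among the lowest points of the green curve" as "within a tolerance `tol` of the minimum of Lval"; that tolerance, the function names, and the toy numbers are our own illustrative choices, not values from the experiments.

```python
def argmin(values):
    """Index of the smallest value (first one on ties)."""
    return min(range(len(values)), key=values.__getitem__)


def selection_agrees(neg_u, l_val, tol):
    """True if the gamma minimizing -U(Lambda) lies within `tol` of the minimum of L_val."""
    k = argmin(neg_u)
    return l_val[k] <= min(l_val) + tol


# Made-up curves over a common gamma grid, for illustration only (not results from Fig. 4).
l_val = [0.30, 0.21, 0.18, 0.19, 0.26]
neg_u = [-0.40, -0.70, -0.78, -0.80, -0.55]
```

In this toy case the two minima fall on neighboring grid points, so the check passes for a tolerance of 0.02 but not for 0.005; a strict argmin match would be the special case `tol = 0`.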

6.5.3 Influence of Data Imbalance on Classifier Status Selection

To better understand the influence of class imbalance, we focus on the Cardiotocography dataset (176 samples for C0 and 1,655 samples for C1) in Fig. 5. We break down the number of near-boundary samples (black curve) into the numbers of near-boundary samples belonging to C0 (gray curve) and to C1 (red curve). As γ increases, the number of near-boundary samples in \(\mathcal {N}_{B}({\Lambda } )\) belonging to C0 rapidly increases, stays high, and then drastically decreases, while the number belonging to C1 almost always stays around 176; for most γ values, the total number of near-boundary samples is dominated by one of the two classes. In this case, the computation of the uncertainty measure is obviously biased, and the uncertainty around B∗ cannot be properly measured. The posterior probability computation with the superficial prior probability correction described in Section 6.3 indeed gives better results (blue curve) than the computation without the correction (red dashed curve); however, more effective posterior estimation methods are needed for such imbalanced cases.

Fig. 5 Parameter status selection results for the Cardiotocography dataset. In the top panel, the blue curve represents the uncertainty measure −U with the prior probability correction, and the red dashed curve represents −U without the correction. In the bottom panel, the black curve represents the number of near-boundary samples; the gray and red curves represent the numbers of near-boundary samples belonging to C0 and to C1, respectively.

6.5.4 Influence of Neighbor Selection

To further analyze the parameter status selection results in Section 6.5.2, we performed a step-by-step analysis of Step 2, which performs posterior probability estimation using kNN. Recall that, instead of the traditional estimation based on a fixed-size neighborhood selected from the entire sample set \(\mathcal {T}\), we chose to consider only the near-boundary samples in \(\mathcal {N}_{B}({\Lambda } )\) as neighbors and to determine the number of neighbors adaptively by the hierarchical clustering procedure.

In this section, we analyze how this choice influences the quality of posterior probability estimation along the estimated boundary. To this end, we compared the overall parameter status selection results obtained on the Ionosphere dataset with three neighbor-selection schemes (Fig. 6): fixed kNN with neighbors selected from the entire \(\mathcal {T}\) (top panel), fixed kNN with neighbors selected from \(\mathcal {N}_{B}({\Lambda } )\) (middle panel), and adaptive partitioning in \(\mathcal {N}_{B}({\Lambda } )\) (bottom panel). For the top and middle panels, we first fixed k to 5 (results for other values of k are given in Figs. 7 and 8). The settings of Nm, NM, r, R, and pij for the adaptive partitioning were the same as in Section 6.5.2.

Fig. 6 Comparison of three neighbor selection schemes in Step 2 (posterior probability estimation) for the Ionosphere dataset: fixed kNN considering neighbors selected from \( \mathcal {T} \) (top panel), fixed kNN considering neighbors selected from \( \mathcal {N}_{B}({\Lambda } )\) (middle panel), and adaptive partitioning in \( \mathcal {N}_{B}({\Lambda } )\) (bottom panel).

First, the top panel basically shows noise: there is no trend, and the values of −U(Λ) stay close to 0 (see the right vertical axis). This means that this scheme does not yield a reliable uncertainty score around B(Λ), even when B(Λ) is close to B∗. By contrast, the middle panel clearly detects a range of suitable candidate parameter values similar to the range provided by the CV-based method. This clear improvement from the top panel to the middle panel shows the necessity of filtering out samples outside \(\mathcal {N}_{B}({\Lambda } )\). Note also that the range of −U(Λ) in the top panel is much smaller than that in the middle and bottom panels. These results can be understood as follows. To evaluate the classifier status, our measure strictly focuses on estimating the posterior probability imbalance on the estimated boundary. In practice, with finite samples, \(\mathcal {N}_{B}({\Lambda } )\) contains only samples close to B(Λ), so Step 1 can be seen as a filter that effectively removes noise, i.e., samples far from the estimated boundary.

Second, the clear improvement from the middle panel to the bottom panel shows that adaptively partitioning the near-boundary samples further enhances the quality of the neighbors used in the kNN-based estimation. Intuitively, adapting the number of neighbors to the local density and ignoring excessively small neighborhoods improves the quality of the posterior estimation.

For completeness, on the Ionosphere dataset, we also tried k = 5, 7, 10, 15 for the kNN posterior estimation in the top and middle panels of Fig. 6, and we summarize the results in Fig. 7 (for the top panel of Fig. 6) and Fig. 8 (for the middle panel of Fig. 6). Compared with the choice of k for \(\mathcal {T}\), the choice of k for the near-boundary sample set \(\mathcal {N}_{B}({\Lambda } )\) clearly has only a minor impact on the quality of the uncertainty measure computation.
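The difference between the top and middle panels of Fig. 6 comes down to which pool the k nearest neighbors are drawn from. The sketch below shows a plain kNN posterior estimate whose pool is either all samples or only a near-boundary subset; the toy points and the filter `abs(x1) < 0.5` are hypothetical stand-ins for \(\mathcal {T}\) and for the output of Step 1.

```python
import math


def knn_posterior(query, pool, k, n_classes):
    """Class fractions among the k samples in `pool` nearest to `query`.

    `pool` is a list of (vector, label) pairs with integer labels 0..n_classes-1.
    Passing all samples mimics the top panel of Fig. 6; passing only the
    near-boundary samples mimics the middle panel.
    """
    nearest = sorted(pool, key=lambda s: math.dist(query, s[0]))[:k]
    counts = [0] * n_classes
    for _, label in nearest:
        counts[label] += 1
    return [c / len(nearest) for c in counts]


# Toy data (assumption): the boundary is x1 = 0; the far-away samples are all class 1.
T = [((-0.1, 0.0), 0), ((0.1, 0.0), 1), ((-0.1, 0.5), 0), ((0.1, 0.5), 1),
     ((2.0, 0.1), 1), ((2.0, -0.1), 1)]
near_boundary = [s for s in T if abs(s[0][0]) < 0.5]  # hypothetical stand-in for Step 1
query = (0.0, 0.25)
```

With k = 6, the pool \(\mathcal {T}\) pulls in the two distant class-1 samples and yields (1/3, 2/3), whereas the near-boundary pool still yields (0.5, 0.5), which mirrors why the choice of k matters much less once the neighbors are restricted to \(\mathcal {N}_{B}({\Lambda } )\).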

6.5.5 Influence of Procedure Repetition on Classifier Status Selection

Figure 9 shows the effect of increasing the number M of random trials for the 2-means clustering and the number R of near-boundary set partitions on the reliability of the selection procedure. As can be seen, further repetition gives a smoother blue curve that is closer to the target green curve. The increase in similarity is striking, all the more so because the two curves are obtained from apparently independent evaluation criteria, i.e., classification error probability and uncertainty measure. These results seem to support two conclusions. First, the results from CV and from our procedure mutually confirm their reliability as methods of classifier parameter status evaluation. Second, increasing M and R increases the reliability of the posterior balance estimate on B(Λ). Although this gain in reliability is not negligible, even M = 1, R = 1 seems sufficient, at least for the Ionosphere dataset.

7 Conclusion

We introduced a new method for evaluating classifiers of a general form. The purpose was to define a new way of selecting the optimal classifier parameter status that overcomes the fundamental limitations of standard methods (i.e., repeated training, data splitting for validation, and difficulty of application). Accordingly, we defined a fundamentally different classifier evaluation criterion that directly takes the Bayes boundary status as a reference, that can potentially be estimated without validation data, and that is readily applicable to any type of classifier and to fixed-dimensional data. Moreover, we mathematically proved the validity of our posterior probability estimation procedure, which plays a central role in the proposed method for finding the optimal classifier parameter status. The experimental results and the comparison with the benchmark CV method suggest that the optimal model can be selected on several real-life classification tasks. Despite these encouraging results, there is room for improvement in both accuracy and processing speed. To improve accuracy, our mathematical analysis of posterior probability estimation along the estimated boundary may provide guidelines for selecting and using the boundary neighborhood. To improve processing speed, we believe that better use of the information provided by the discriminant functions and of the local information along the estimated boundary could simplify the current, somewhat complicated, process of Step 1. Taken together, these improvements would give our method a practical competitive edge, especially when classifier training is expensive.

Notes

This term represents how close an estimated boundary is to the Bayes boundary.

The term “sample” means random variable in this subsection.

Class label (index) is basically a random variable in this subsection.

For example, given V > 0, we can set \(V^{(N)}(\boldsymbol {x})\) as \(V/N^{\alpha}\) (0 < α < 1) or \(V/(\ln N)^{\beta}\) (N > 1, β > 0), etc.

References

Duda, R.O., Hart, P.E., Stork, D.G. (2000). Chapter 2, Part 1. In Pattern classification. 2nd edn. New York: Wiley.

Fukunaga, K. (1990). Nonparametric classification and error estimation. In Introduction to statistical pattern recognition. 2nd edn. (pp. 313–321). San Diego: Academic Press.

Bishop, C. (2006). Decision theory. In Pattern recognition and machine learning (pp. 39–40). New York: Springer Science+Business Media.

Hastie, T., Tibshirani, R., Friedman, J. (2009). Optimism of the training error rate. In The elements of statistical learning. 2nd edn. (pp. 228–230). New York: Springer Science+Business Media.

Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society: Series B (Methodological), 36(2), 111–147.

Efron, B., & Tibshirani, R. (1993). An introduction to the bootstrap. New York: Springer Science+Business Media.

Demyanov, S., Bailey, J., Ramamohanarao, K., Leckie, C. (2012). AIC and BIC based approaches for SVM parameter value estimation with RBF kernels. Journal of Machine Learning Research, 25, 97–112.

Vapnik, V. (1998). The structural risk minimization principle. In Vapnik, V. (Ed.) Statistical learning theory. 1st edn. (pp. 219–268). New York: Wiley.

Guyon, I., Vapnik, V., Boser, B., Bottou, L., Solla, S.A. (1991). Structural risk minimization for character recognition. Proceedings of Advances in Neural Information Processing Systems, 4(NIPS 1991), 471–479.

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19(6), 716–723.

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464.

Watanabe, S. (2010). Asymptotic equivalence of Bayes cross validation and widely applicable information criterion in singular learning theory. Journal of Machine Learning Research, 11, 3571–3594.

Bouchard, G., & Celeux, G. (2006). Selection of generative models in classification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(4), 544–554.

Biem, A. (2003). A model selection criterion for classification: application to HMM topology optimization. In Proceedings of IEEE seventh international conference on document analysis and recognition.

Guo, Y., & Greiner, R. (2005). Discriminative model selection for belief net structures. In Proceedings of AAAI twentieth national conference on artificial intelligence (AAAI-05) (pp. 770–776).

Juang, B.-H., & Katagiri, S. (1992). Discriminative learning for minimum error classification. IEEE Transactions on Signal Processing, 40(12), 3043–3054.

Watanabe, H., Ohashi, T., Katagiri, S., Ohsaki, M., Matsuda, S., Kashioka, H. (2014). Robust and efficient pattern classification using large geometric margin minimum classification error training. Journal of Signal Processing Systems, Springer, 74(3), 297–310.

Ohashi, T., Watanabe, H., Tokuno, J., Katagiri, S., Ohsaki, M., Matsuda, S., Kashioka, H. (2012). Increasing virtual samples through loss smoothness determination in large geometric margin minimum classification error training. In Proceedings of 2012 international conference on acoustics, speech and signal processing (ICASSP 2012) (pp. 2081–2084).

Ha, D., Maes, J., Tomotoshi, Y., Melle, C., Watanabe, H., Katagiri, S., Ohsaki, M. (2017). A class boundary selection criterion for classification. In Proceedings of IPSJ Kansai-Branch Convention 2017, G-11.

Ha, D., Maes, J., Tomotoshi, Y., Watanabe, H., Katagiri, S., Ohsaki, M. (2018). A classification-uncertainty-based criterion for classification boundary. IEICE Technical Report, 117(442, PRMU2017-166), 121–126.

Ha, D., Delattre, E., Tomotoshi, Y., Senda, M., Watanabe, H., Katagiri, S., Ohsaki, M. (2018). Optimal classifier model status selection using Bayes boundary uncertainty. In Proceedings of 2018 IEEE international workshop in machine learning for signal processing.

Chapelle, O., & Vapnik, V. (2000). Model selection for support vector machines. Advances in Neural Information Processing Systems, 12.

von Luxburg, U., Bousquet, O., Scholkopf, B. (2004). Compression approach to support vector model selection. Journal of Machine Learning Research, 5, 293–323.

Demyanov, S., Bailey, J., Ramamohanarao, K., Leckie, C. (2012). AIC and BIC based approaches for SVM parameter value estimation with RBF kernels (Vol. 25).

Bishop, C. (2006). Combining models. In Bishop, C. (Ed.) Pattern recognition and machine learning (p. 666).

Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P. (2002). SMOTE: synthetic minority over-sampling technique. Journal of Artificial Intelligence Research, 16, 321–357.

Burges, C. (1998). A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery, 2(2), 121–167.

Acknowledgments

This work was supported in part by JSPS KAKENHI No.18H03266 and MEXT Supported Program for Strategic Research Foundation at Private Universities (2014-2018), named “Driver-in-the-Loop.”

Appendices

Appendix: 1

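Because p(⋅) is continuous, it has a minimum value m and a maximum value M within the bounded and closed set \(\mathcal {R}^{(N)}(\boldsymbol {x})\):

\[ m \le p(\boldsymbol {x}') \le M \quad (\boldsymbol {x}' \in \mathcal {R}^{(N)}(\boldsymbol {x})). \]

Then, integration over \(\mathcal {R}^{(N)}(\boldsymbol {x})\) gives

\[ m\, V^{(N)}(\boldsymbol {x}) \le \int_{\mathcal {R}^{(N)}(\boldsymbol {x})} p(\boldsymbol {x}')\, d\boldsymbol {x}' \le M\, V^{(N)}(\boldsymbol {x}), \]

and thus

\[ m \le \frac{1}{V^{(N)}(\boldsymbol {x})} \int_{\mathcal {R}^{(N)}(\boldsymbol {x})} p(\boldsymbol {x}')\, d\boldsymbol {x}' \le M. \]

Because m and M are values taken by p(⋅) on \(\mathcal {R}^{(N)}(\boldsymbol {x})\), the intermediate value theorem (which applies when \(\mathcal {R}^{(N)}(\boldsymbol {x})\) is also connected, e.g., a ball or a hypercube) guarantees the existence of \(\boldsymbol {x}^{(N)} \in \mathcal {R}^{(N)}(\boldsymbol {x})\) that satisfies

\[ \int_{\mathcal {R}^{(N)}(\boldsymbol {x})} p(\boldsymbol {x}')\, d\boldsymbol {x}' = p(\boldsymbol {x}^{(N)})\, V^{(N)}(\boldsymbol {x}). \]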

Appendix: 2

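We denote the probability space that governs the random variables by \(({\Omega },\mathcal {F},\Pr )\), where Ω is the sample space, \(\mathcal F\) is a completely additive class (σ-algebra) over Ω, and Pr is a probability measure over \(\mathcal F\). Then, the following holds:

\[ \mathrm{E}\left[1_{\mathcal {R}^{(N)}(\boldsymbol {x})}(\boldsymbol {X}) \cdot 1_{\{j\}}(Y)\right] = \int_{\Omega} 1_{\mathcal {R}^{(N)}(\boldsymbol {x})}(\boldsymbol {X}(\omega)) \cdot 1_{\{j\}}(Y(\omega))\, d\Pr(\omega) = \Pr\left(\boldsymbol {X}^{-1}(\mathcal {R}^{(N)}(\boldsymbol {x})) \cap Y^{-1}(\{j\})\right) = \Pr(C_{j}) \int_{\mathcal {R}^{(N)}(\boldsymbol {x})} p(\boldsymbol {x}'|C_{j})\, d\boldsymbol {x}', \]

where, for a mapping f : U → V and a set B (⊂ V), \(f^{-1}(B)\) denotes the inverse image of B, \(f^{-1}(B) = \{x \in U \mid f(x) \in B\}\), and \(\Pr(C_{j}) = \Pr\{Y = j\} = \Pr(Y^{-1}(\{j\}))\).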

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ha, D., Tomotoshi, Y., Senda, M. et al. A Practical Method Based on Bayes Boundary-Ness for Optimal Classifier Parameter Status Selection. J Sign Process Syst 92, 135–151 (2020). https://doi.org/10.1007/s11265-019-01451-y

DOI: https://doi.org/10.1007/s11265-019-01451-y