Abstract

Timing verification of multi-core systems is complicated by contention for shared hardware resources between co-running tasks on different cores. This paper introduces the Multi-core Resource Stress and Sensitivity (MRSS) task model that characterizes how much stress each task places on resources and how sensitive it is to such resource stress. This model facilitates a separation of concerns, thus retaining the advantages of the traditional two-step approach to timing verification (i.e. timing analysis followed by schedulability analysis). Response time analysis is derived for the MRSS task model, providing efficient context-dependent and context-independent schedulability tests for both fixed priority preemptive and fixed priority non-preemptive scheduling. Dominance relations between the tests are derived, along with complexity results and proofs of optimal priority assignment policies. The MRSS task model is underpinned by a proof-of-concept industrial case study. The problem of task allocation is considered in the context of the MRSS task model, with Simulated Annealing shown to provide an effective solution.


1 Extended version

This paper builds upon and extends the ECRTS 2021 paper Schedulability Analysis for Multi-core Systems Accounting for Resource Stress and Sensitivity (Davis et al. 2021). Section 4.5 derives complexity results for the various schedulability tests. Section 8 considers the issues involved in allocating tasks to cores in a way that optimizes system schedulability and robustness under the MRSS task model. The difficulties of task allocation are highlighted via a worked example, based on data from the industrial case study. Simulated Annealing is then proposed as a potential solution, and its effectiveness is demonstrated via experimental evaluation.

2 Introduction

2.1 Background

The survey published by Akesson et al. (2020, 2021) shows that about 80% of industry practitioners developing real-time systems are using multi-core processors, about twice the number that are using single-cores. On a single-core processor, when a task executes without interruption or preemption, it has exclusive access to the hardware resources that it needs. The execution time of the task therefore depends only on its own behavior and the initial state of the hardware. This is in marked contrast to what happens when a task executes on one core of a multi-core processor. Multi-core processors are typically designed to provide high average-case performance at low cost, with hardware resources shared between cores. These shared hardware resources typically include the interconnect, caches, and main memory, as well as other platform-specific components. As a consequence, the execution time of a task running on one core of a multi-core system can be extended by interference due to contention for shared hardware resources emanating from co-running tasks on the other cores.

This problem of cross-core contention and interference has led to timing verification of multi-core systems becoming a hot topic of real-time systems research in the decade to 2020. The survey published by Maiza et al. (2019) classifies approximately 120 research papers in this area. Much of this research relies on detailed information about shared hardware resources and the policies used to arbitrate access to them. This information is then used to derive analytical bounds on the maximum interference possible due to contending tasks running on the other cores. In practice, however, there can be substantial difficulties in obtaining and using such detailed low-level information, since it is not typically disclosed by hardware vendors. This is because the complex resource arbitration policies and low-level hardware design features employed comprise valuable intellectual property. Further, even if such information is available, the overall behavior can be so complex as to preclude a static analysis that provides meaningful bounds rather than substantial overestimates.

The predominant industry practice is to use measurement-based timing analysis techniques to estimate worst-case execution times (WCETs). However, the simple extension of measurement-based techniques to multi-core systems cannot provide an adequate solution that bounds the impact of cross-core interference. This is because cross-core interference is highly dependent on the timing of accesses to shared hardware resources by both the task under analysis and its co-runners. In practice, it is not possible to choose the worst-case combination of behavior (inputs, paths, and timing) for co-running tasks that will result in the maximum interference occurring (Nowotsch and Paulitsch 2012). A potential solution to this problem, which is being taken up commercially (Rapita Systems 2019), is to employ a more nuanced measurement-based approach using micro-benchmarks (Radojkovic et al. 2012; Fernández et al. 2012; Nowotsch and Paulitsch 2012; Iorga et al. 2020). These micro-benchmarks sustain a high level of resource accesses, ameliorating the timing alignment issues inherent in the naive approach discussed above. Micro-benchmarks can be used to characterize tasks in terms of the interference that they can cause, or be subject to, due to contention over a particular shared hardware resource.

The timing verification of single-core systems has traditionally been solved via a two-step approach (Maiza et al. 2019). First, context-independent WCET estimates are obtained, either via static or measurement-based timing analysis. Second, these estimates are used as parameter values in a task model, and schedulability analysis is employed to determine whether all of the tasks can meet their timing constraints when executed under a specific scheduling policy. This separation of concerns between timing analysis and schedulability analysis brings many benefits; however, its effectiveness is greatly diminished in multi-core systems because execution times depend heavily on co-runner behavior and the cross-core interference that co-runners bring. Inflating individual task execution time estimates to account for the maximum amount of context-independent interference that could potentially occur during the time interval in which each task executes can result in gross over-estimates that are not viable in practice (Kim et al. 2017). Rather, research (Altmeyer et al. 2015; Davis et al. 2018) has shown that it is more effective to consider contention over the longer time frame of task response times.

2.2 Contribution and organization

In Sect. 3, we introduce the Multi-core Resource Stress and Sensitivity (MRSS) task model that characterizes how much each task stresses shared hardware resources and how sensitive each task is to such resource stress. The MRSS task model provides a simple interface and a separation of concerns between timing analysis and schedulability analysis, thus retaining the advantages of the traditional two-step approach to overall timing verification. The MRSS task model relies on timing analysis, either measurement-based or static, to provide task parameter values characterizing stand-alone (i.e. no contention) WCETs, resource stresses, and resource sensitivities. Thus, it provides the information needed by schedulability analysis to integrate cross-core interference into the computation of bounds on task response times, and hence determine the schedulability of tasks running on multi-core systems. The MRSS task model is generic and versatile. It supports different types of interference that occur via cross-core contention for shared hardware resources, as follows:

(i) Limited interference, where contention for the resource is ameliorated by parallelism in the hardware. Here, the interference is sub-additive, i.e. less than the time that the co-running task on another core spends accessing the resource.

(ii) Direct interference, where the bandwidth of the resource is shared between contending cores, for example with a Round-Robin bus. Here, the interference is additive, directly matching the time that co-running tasks spend accessing the resource.

(iii) Indirect interference, where contention causes additional interference, over and above the bandwidth consumed by co-running tasks (i.e. a super-additive effect), due to changes in the state of the resource that cause further delays to subsequent accesses. An example of indirect interference occurs with main memory (DRAM) (Hassan 2018) when interleaved accesses target different rows, resulting in additional row close and row open operations that increase memory access latency.

The MRSS task model is not, however, a panacea: it cannot support unbounded interference, where task execution is disproportionately impacted by contending accesses. This includes cases where contenders can effectively lock a resource for an extended or unbounded amount of time. Further, it cannot support dependent interference, where contention can change the information stored in a resource in such a way that it needs to be obtained from elsewhere, potentially creating additional (dependent) interference via another shared resource. Problems of cache thrashing (Radojkovic et al. 2012), cache coherence (Fuchsen 2010), and cache miss status handling registers (Valsan et al. 2016) can all cause unbounded and/or dependent interference. These issues need to be eliminated from systems aimed at providing real-time predictability.

Section 4 introduces schedulability analysis for the MRSS task model, considering task sets scheduled according to partitioned fixed priority preemptive scheduling (pFPPS) and partitioned fixed priority non-preemptive scheduling (pFPNS) policies. Two types of schedulability test are derived: (i) context-dependent tests that make use of information about the co-running tasks on the other cores, and (ii) context-independent tests that use only information about the tasks running on the same core. The latter are less precise, but fully composable, meaning that if the tasks on one core are changed, then only those tasks need to have their schedulability re-assessed; task schedulability on the other cores is unaffected. Composability is an important issue for industry, particularly when different companies or departments are responsible for the sub-systems running on different cores. The section ends by deriving the dominance relations between the schedulability tests, and assessing their complexity.

In systems that use fixed priority scheduling, appropriate priority assignment is a crucial aspect of achieving a schedulable system (Davis et al. 2016). Section 5 investigates optimal priority assignment, proving that Deadline Monotonic (Leung and Whitehead 1982) priority ordering is optimal for both the context-independent and the simpler context-dependent schedulability tests for pFPPS. Similarly, Audsley’s optimal priority assignment algorithm (Audsley 2001) is proven to be applicable and optimal for the equivalent tests for pFPNS. The more complex and precise context-dependent tests are proven incompatible with Audsley’s algorithm (Audsley 2001).

Section 6 provides a systematic evaluation of the effectiveness of the schedulability tests derived in Sect. 4. The results of this evaluation are consistent with the dominance relationships derived earlier, indicating the superiority of the more complex context-dependent schedulability tests, while also highlighting the additional contention that adding further cores brings.

Section 7 presents the findings from a case study examining 24 tasks from a Rolls-Royce aero-engine control system. These tasks were assessed using measurement-based timing analysis to obtain broad-brush estimates of their stand-alone WCETs, as well as characterizing their resource stress and resource sensitivity parameters. The purpose of the case study was not to try to determine definitive values for these parameters, in itself a challenging research problem, but rather to obtain proof-of-concept data to act as an exemplar underpinning the MRSS task model and its analysis.

Section 8 considers the issues involved in allocating tasks to cores in a way that optimizes system schedulability and robustness under the MRSS task model. The difficulties of task assignment are highlighted via a worked example, based on data from the industrial case study. This example shows that overall interference can typically be reduced by partitioning tasks such that those with high resource stress and sensitivity are assigned to a subset of the available cores, while those with low resource stress and sensitivity are assigned to the remaining cores. However, minimizing interference does not necessarily optimize schedulability and robustness. Simulated Annealing is proposed as a potential solution, and its effectiveness demonstrated via experimental evaluation.

Section 9 concludes with a summary and directions for future work.

2.3 Related work

Prior publications that relate to the research presented in this paper include work on micro-benchmarks (Radojkovic et al. 2012; Fernández et al. 2012; Nowotsch and Paulitsch 2012; Iorga et al. 2020; Rapita Systems 2019) that can be used to stress resources in multi-core systems, and work on the integration of interference effects into schedulability analysis. Many of the latter papers are summarized in Sect. 4 of the survey by Maiza et al. (2019). Unlike the analysis presented in this paper, which uses a generic task model that is applicable to many different types of interference and a variety of different shared hardware resources, most of these prior works focus on the details of one or more specific hardware resources. They require detailed information about the resource arbitration policy used, the number of resource accesses made by each task, and in some cases the timing of those accesses. By contrast, this paper takes a more abstract, but nonetheless valid view, that interference can be modeled in terms of its execution time impact via resource sensitivity and resource stress parameters for each task. This approach requires less detail about the resource behavior, and is more amenable to practical use, since it can still be used when full details of shared resource behavior are not available from the hardware vendor.

Early work on the integration of interference effects into schedulability analysis by Schliecker and Ernst (2010) used arrival curves to model the resource accesses of each task, and hence how resource access delays due to contention impact upon task response times. Schliecker’s work focused on contention over the memory bus. Further work in this area by Schranzhofer et al. (2010), Pellizzoni et al. (2010), Giannopoulou et al. (2012), and Lampka et al. (2014) used the superblock model that divides each task into a sequence of blocks and uses information about the number of resource accesses within different phases of these blocks.

Dasari et al. (2011) used a request function to model the maximum number of resource accesses from each task in a given time interval, and integrated this request function into response time analysis. Kim et al. (2016) and Yun et al. (2015) provided a detailed analysis of contention caused by DRAM accesses, accounting for access scheduling and variations in latencies due to differing states, e.g. open and closed rows. The delays due to contention were then integrated into response time analysis.

Altmeyer et al. (2015) and Davis et al. (2018) introduced a multi-core response time analysis framework, aimed at combining the demands that tasks place on different types of resources (e.g. CPU, memory bus, and DRAM) with the resource supply provided by those hardware resources. The resulting explicit interference was then integrated directly into response time analysis. Rihani et al. (2016) built on this framework, using it to analyze complex bus arbitration policies on a many-core processor.

Huang et al. (2016) and Cheng et al. (2017) leveraged the symmetry between processing and resource access, viewing tasks as executing and then suspending execution while accessing a shared resource. Using this suspension model in the schedulability analysis, they obtained results that were broadly comparable to those of Altmeyer et al. (2015).

Paolieri et al. (2011) proposed using a WCET-matrix and WCET-sensitivity values to characterize the variation in task execution times in different execution environments (e.g. with different numbers of contending cores, and different cache partition sizes). This information was then used to determine efficient task partitioning and task allocation strategies.

Andersson et al. (2018) presented a schedulability test where tasks have different execution times dependent on their co-runners. Here, tasks are represented by a sequence of segments, each of which has execution requirements and co-runner slowdown factors with respect to sets of other segments that could execute in parallel with it. The schedulability test involves solving a linear program to bound the longest response time given the possible ways in which different segments could execute in parallel and the slowdown in execution that this would entail. The method has significant scalability issues that effectively limit the total number of tasks it can handle to approximately 32 tasks on a 4-core system (i.e. 8 tasks per core).

2.4 Inspiration

The research presented in this paper was inspired by the desire to combine a practical approach to characterizing contention via micro-benchmarks and measurement-based techniques with a generic form of schedulability analysis that can be applied to a wide range of homogeneous multi-core systems with different types of shared hardware resources. The aim was to provide an effective form of timing verification that retains the traditional two-step approach while avoiding undue pessimism, by accounting for interference over long time intervals equating to task response times, rather than short time intervals equating to task execution times. With industry practice in mind, the schedulability analysis derived includes context-dependent (non-composable), context-independent (fully composable), and partially composable schedulability tests. The overall method enables task timing behavior on multi-cores to be assessed without necessitating recourse to detailed information about the hardware behavior, something that most chip vendors do not make publicly available.

3 System model and assumptions

We assume a multi-core system with m homogeneous cores that executes tasks under either partitioned fixed priority preemptive (pFPPS) or partitioned fixed priority non-preemptive (pFPNS) scheduling. With partitioning, tasks are assigned to a specific core and do not migrate. The tasks are assumed to be independent, but may access a set of shared hardware resources \(r \in H,\) thus causing interference on the execution of tasks on other cores via cross-core contention. We omit from consideration the effects of resource contention between tasks on the same core, since they are executed sequentially rather than in parallel. We assume that appropriate techniques are used to avoid substantial preemption effects when preemptive scheduling is employed, for example cache partitioning can be used to eliminate cache-related preemption delays. The costs of scheduling decisions and any context switching are assumed to be subsumed into the task execution times.

Each task \(\tau _i\) is characterized by: the minimum inter-arrival time or period between releases of its jobs, \(T_i\), its relative deadline, \(D_i\), and its WCET, \(C_i\), when executing stand-alone, i.e. with no co-runners. All task deadlines are assumed to be constrained, i.e. \(D_i \le T_i\).

Further aspects of the model are based on the concept of resource sensitive contenders and resource stressing contenders. A resource stressing contender maximizes the stress on a resource r by repeatedly making accesses to it that cause the most contention. Hence, running a resource stressing contender in parallel with a task creates the maximum increase in execution time for the task due to contention over resource r from any single co-runner. A resource sensitive contender for a resource r suffers the maximum possible interference by repeatedly making accesses to the resource that suffer from the most contention. Hence, running a resource sensitive contender in parallel with a task creates the maximum increase in execution time for any single co-running contender due to contention over resource r from the task. Note that resource stressing and resource sensitive contenders for a given resource are not necessarily one and the same.

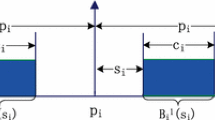

Each task is further characterized by its resource sensitivity \(X_i^r\) and resource stress \(Y_i^r\) for each shared hardware resource \(r \in H\). \(X_i^r\) captures the increase in execution time of task \(\tau _i\) (from \(C_i\) to \(C_i + X_i^r\)) when it is executed in parallel with a resource stressing contender for resource r. Thus \(X_i^r\) models how much task \(\tau _i\) behaves like a resource sensitive contender. Similarly, \(Y_i^r\) captures the increase in execution time of a resource sensitive contender (from C to \(C + Y_i^r\)) for resource r, when it is executed in parallel with task \(\tau _i\). Hence \(Y_i^r\) models how much task \(\tau _i\) behaves like a resource stressing contender. With this model, the execution time of a task \(\tau _i\) running on one core, subject to interference via shared hardware resource r from task \(\tau _k\) running in parallel on another core, is increased by at most \(\min (X_i^r,Y_k^r)\), i.e. from \(C_i\) to \(C_i + \min (X_i^r,Y_k^r)\).
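To make the per-pair bound concrete, the following minimal Python sketch encodes the MRSS parameters of a task and the bound \(\min (X_i^r,Y_k^r)\) on the increase in execution time that one co-runner on another core can cause via resource r. The `Task` class and `pairwise_bound` function are hypothetical names introduced for illustration. Treating a resource that is absent from a task's parameters as contributing zero is an assumption of the sketch. The parameter values are those of tasks \(\tau _1\) and \(\tau _4\) in the example at the start of Sect. 4.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical container for the MRSS parameters of one task."""
    name: str
    C: float  # stand-alone WCET, i.e. with no co-runners
    X: dict = field(default_factory=dict)  # resource sensitivity X_i^r per resource r
    Y: dict = field(default_factory=dict)  # resource stress Y_i^r per resource r


def pairwise_bound(victim: Task, contender: Task, r: str) -> float:
    """Bound on the execution-time increase of `victim` caused by `contender`
    running in parallel on another core, via resource r: min(X_i^r, Y_k^r).
    A resource missing from a dict is treated as 0 (an assumption)."""
    return min(victim.X.get(r, 0), contender.Y.get(r, 0))


tau1 = Task("tau1", C=100, X={"r": 16}, Y={"r": 24})
tau4 = Task("tau4", C=150, X={"r": 10}, Y={"r": 5})
print(tau1.C + pairwise_bound(tau1, tau4, "r"))  # prints 105 (limited by tau4's stress)
print(tau4.C + pairwise_bound(tau4, tau1, "r"))  # prints 160 (limited by tau4's sensitivity)
```

The minimum captures the fact that neither side alone determines the interference: a highly sensitive task suffers little next to a weak stressor, and a strong stressor does little harm to an insensitive task.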

The notation \(\varGamma _x\) is used to denote the set of tasks that execute on the same core (with index x) as the task of interest \(\tau _i\). Similarly, \(\varGamma _y\) is used to denote the set of tasks that execute on some other core (with index y).

Each task \(\tau _i\) is assumed to have a unique priority. hp(i) (resp. lp(i)) is used to denote the set of tasks with higher (resp. lower) priority than task \(\tau _i\). Similarly, hep(i) (resp. lep(i)) is used to denote the set of tasks with priority higher (resp. lower) than or equal to that of task \(\tau _i\).

The schedulability tests introduced in this paper are named using the convention CpSched-m-X, where C indicates a contention-based test, p indicates partitioned scheduling, and Sched is the scheduling policy, either FPPS or FPNS. The test is applicable to systems with m cores and makes use of information X, which is either D or R, meaning the deadlines or the response times of the tasks on other cores, or fc, meaning fully composable, i.e. the test does not rely on any information about the tasks running on the other cores.

The MRSS task model assumes that the resource sensitivity \(X_i^r\) and resource stress \(Y_i^r\) parameters for each task \(\tau _i\) are provided by timing analysis. Obtaining precise bounds for these parameters is a challenging timing analysis problem that is beyond the scope of this paper; nevertheless, below we give a brief overview of how such values could be estimated using either measurement-based or static timing analysis techniques.

Using measurement-based timing analysis techniques, the resource sensitivity \(X_i^r\) can be obtained by capturing the maximum difference between the execution time of task \(\tau _i\) when it runs in parallel with a resource stressing contender, and the corresponding execution time when it runs stand-alone, assuming that the same inputs and initial state are used in each case. Similarly, the resource stress \(Y_i^r\) can be obtained by capturing the maximum difference between the execution time of a resource sensitive contender when it runs in parallel with task \(\tau _i\), and the corresponding execution time of the contender when it runs stand-alone. As with measurement-based WCET estimation, such an approach needs to explore a representative set of inputs and initial states in order to obtain valid estimates. Further, resource stressing and resource sensitive contenders need to be carefully designed to meet their requirements in terms of creating/suffering the maximum amount of interference via contention over the resource (Iorga et al. 2020).
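A minimal sketch of the measurement-based estimate described above, assuming that matched pairs of execution-time measurements have already been collected (same inputs and initial state, with and without the relevant contender). The function name and the measurement values are hypothetical. The sketch only computes the maximum difference; it does nothing to ensure that the inputs explored are representative or that the contenders are well designed, which remain the hard parts of the problem.

```python
def max_matched_increase(standalone, contended):
    """Largest increase in execution time across matched measurement runs.

    standalone[k] and contended[k] must come from the same inputs and initial
    state. For X_i^r: task i alone vs. task i alongside a resource stressing
    contender. For Y_i^r: a resource sensitive contender alone vs. the same
    contender alongside task i.
    """
    if not standalone or len(standalone) != len(contended):
        raise ValueError("expected equally sized, non-empty lists of matched runs")
    # Negative differences (e.g. measurement noise) are clamped to zero; this
    # clamping is an assumption of the sketch, not part of the MRSS model.
    return max(0, max(c - s for s, c in zip(standalone, contended)))


# Hypothetical measurements (in cycles) for three input vectors.
X_est = max_matched_increase([1000, 1040, 980], [1100, 1130, 990])
print(X_est)  # prints 100
```

The same function serves for both parameters; only the roles of the measured program and the contender change.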

Bounds on resource sensitivity and resource stress can also be obtained via static timing analysis. Static analysis first needs to compute an upper bound on the maximum number of accesses \(A^r_i\) that task \(\tau _i\) can make to the resource. The resource sensitivity \(X_i^r\) can then be computed by determining the maximum increase in the execution time of task \(\tau _i\) assuming that \(A^r_i\) accesses are made in contention with an arbitrary number of accesses emanating from one other core. Similarly, the resource stress \(Y_i^r\) equates to the maximum increase in the execution time of any arbitrary resource sensitive contender, due to contention over the resource caused by \(A^r_i\) accesses emanating from one other core.

The schedulability analysis presented in Sect. 4 assumes that the total interference occurring via multiple different resources can be upper bounded by the sum of the interference occurring via each of those resources individually. This assumption can reasonably be expected to hold provided that resource contention is independent; in other words, contention over one resource does not create additional contention over another resource. An example that breaks this assumption occurs with a cache that is shared between cores. In this case, cache thrashing (Radojkovic et al. 2012) can result in additional accesses to main memory, and hence further contention and interference over that disparate resource. Cache partitioning (per core) would be an effective way of addressing this issue (Altmeyer et al. 2014, 2016), thus improving timing predictability.

The analysis also assumes that the total interference occurring due to co-running tasks on multiple other cores can be upper bounded by the sum of the interference occurring due to co-running tasks on each of those cores individually. This assumption can reasonably be expected to hold provided that there are no discontinuities in the amount of interference, i.e. no effects that can be triggered by co-running tasks on multiple cores but not by co-runners on just one core. An example that breaks this assumption occurs with cache miss status handling registers (MSHR) (Valsan et al. 2016). In this case, contention from tasks on multiple cores can exhaust all of the available MSHRs, resulting in substantial blocking delays. Depending on the local memory level parallelism, utilizing all of the MSHRs is typically not possible with just one contending core. Increasing the number of MSHRs, or reducing the local memory level parallelism such that contention from all m cores cannot exhaust the set of MSHRs, are effective ways of addressing this problem (Valsan et al. 2016) and hence restoring timing predictability. To validate the use of the analysis given in Sect. 4, each of the above assumptions needs to be assessed for the hardware architecture considered.

4 Schedulability analysis

In this section, we introduce schedulability tests for the MRSS task model, assuming partitioned fixed priority preemptive scheduling (pFPPS) (Sect. 4.1) and partitioned fixed priority non-preemptive scheduling (pFPNS) (Sect. 4.2). In Sect. 4.3, we consider composability and derive context-independent schedulability tests for both pFPPS and pFPNS. The dominance relationships between the various tests are derived in Sect. 4.4, and their complexity is assessed in Sect. 4.5.

First, we give a simple example. Consider four tasks executing on two cores under partitioned fixed priority preemptive scheduling, with all four tasks accessing the same shared hardware resource r. Tasks \(\tau _1\) and \(\tau _2\) execute on core 1 and tasks \(\tau _3\) and \(\tau _4\) execute on core 2; on each core, the lower-indexed task has the higher priority. The stand-alone worst-case execution times \(C_i\) of the tasks are 100, 200, 150, and 150, their resource sensitivity values \(X_i^r\) are 16, 12, 10, and 10, and their resource stress values \(Y_i^r\) are 24, 12, 10, and 5 respectively. Further, the periods and deadlines of the tasks are much larger than their execution times. Consider first the higher priority task \(\tau _1\) on core 1. During the response time of a single job of task \(\tau _1\), it could be subject to interference due to cross-core contention from one job of each of tasks \(\tau _3\) and \(\tau _4\) executing on core 2. This interference is bounded by the minimum of the resource sensitivity of the job of task \(\tau _1\), \(X_1^r = 16\), and the total resource stress due to one job of each of tasks \(\tau _3\) and \(\tau _4\), \(Y_3^r + Y_4^r = 10 + 5 = 15\). Hence the worst-case response time of task \(\tau _1\) is bounded by \(R_1 = 100 + \min (16, 15) = 115\). Next, consider the lower priority task \(\tau _2\) on core 1. During the response time of a single job of task \(\tau _2\), one job of \(\tau _1\) and one job of \(\tau _2\) can execute on core 1. These jobs could be subject to interference due to cross-core contention from one job of each of tasks \(\tau _3\) and \(\tau _4\) executing on core 2. This interference is bounded by the minimum of the total resource sensitivity of the jobs of tasks \(\tau _1\) and \(\tau _2\), \(X_1^r + X_2^r = 16+12 = 28\), and the total resource stress due to the jobs of tasks \(\tau _3\) and \(\tau _4\), \(Y_3^r + Y_4^r = 10 + 5 = 15\). Hence the worst-case response time of task \(\tau _2\) is bounded by \(R_2 = 100 + 200 + \min (28, 15) = 315\).
Similar analysis for tasks \(\tau _3\) and \(\tau _4\) on core 2 yields bounds on their worst-case response times of \(R_3 = 150+\min (10,36) = 160\) and \(R_4 = 150+150+\min (20,36) = 320\). Note that this instructive example, and the detailed schedulability analysis given below, make no assumptions about exactly when jobs execute within the response times considered, nor any assumptions about when within those time intervals cross-core resource contention can actually occur. Rather, bounds on worst-case response times are derived using only the task timing parameters: stand-alone worst-case execution times, resource stress and sensitivity values, periods, and deadlines.
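The arithmetic of this example can be reproduced with the short Python sketch below. It hard-codes the example's parameters and, like the text, assumes that exactly one job of every task falls within each response time considered, so it is not a general implementation of the analysis. The helper name `example_response_time` is hypothetical.

```python
# Parameters of the worked example: one shared resource r, two cores.
C = {1: 100, 2: 200, 3: 150, 4: 150}
X = {1: 16, 2: 12, 3: 10, 4: 10}
Y = {1: 24, 2: 12, 3: 10, 4: 5}
cores = {1: [1, 2], 2: [3, 4]}  # tasks listed in decreasing priority order per core


def example_response_time(i):
    """One job per task: C of tau_i and its higher-priority tasks, plus
    min(total sensitivity on tau_i's core, total stress from the other core)."""
    own = next(ts for ts in cores.values() if i in ts)
    other = [t for ts in cores.values() if ts is not own for t in ts]
    hep = own[: own.index(i) + 1]         # tau_i and its higher-priority tasks
    sensitivity = sum(X[k] for k in hep)  # total sensitivity on own core
    stress = sum(Y[j] for j in other)     # total stress from the other core
    return sum(C[k] for k in hep) + min(sensitivity, stress)


for i in C:
    print(f"R_{i} = {example_response_time(i)}")
# prints R_1 = 115, R_2 = 315, R_3 = 160, R_4 = 320 (one per line)
```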

4.1 pFPPS schedulability analysis

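In the absence of any interference via shared hardware resources, the worst-case response time of task \(\tau _i\) under pFPPS is given via standard response time analysis (Joseph and Pandya 1986; Audsley et al. 1993):

\[ R_i = C_i + \sum_{\tau_j \in \varGamma_x \cap hp(i)} \left\lceil \frac{R_i}{T_j} \right\rceil C_j \tag{1} \]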
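As a point of reference, the following is a minimal Python sketch of the fixed-point iteration conventionally used to solve the standard response time analysis (1) for a single core, without any cross-core interference. It assumes that the tasks on the core are indexed in decreasing priority order, so that hp(i) is the set of indices below i, and it stops as soon as the response time exceeds the deadline. The function name and the example task parameters are hypothetical.

```python
import math


def rta_no_interference(C, T, D, i):
    """Smallest fixed point of (1) for task i on one core, or None if R_i > D_i.

    C, T, D are lists indexed in decreasing priority order, so hp(i) is the
    set of indices 0..i-1 (an indexing convention assumed by this sketch).
    """
    R = C[i]
    while True:
        # Own execution plus preemptions by each higher-priority task.
        R_next = C[i] + sum(math.ceil(R / T[j]) * C[j] for j in range(i))
        if R_next > D[i]:
            return None  # deadline miss
        if R_next == R:
            return R  # fixed point reached
        R = R_next


C, T = [1, 2, 3], [4, 6, 12]
print([rta_no_interference(C, T, T, i) for i in range(3)])  # prints [1, 3, 10]
```

Because the right-hand side of (1) is non-decreasing in \(R_i\), the iterates never decrease, so the loop either reaches a fixed point or exceeds the deadline.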

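Adding cross-core interference, considering each resource \(r \in H\), we may compute the worst-case response time as follows:

\[ R_i = C_i + \sum_{\tau_j \in \varGamma_x \cap hp(i)} \left\lceil \frac{R_i}{T_j} \right\rceil C_j + \sum_{r \in H} I^r_i (R_i) \tag{2} \]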

where \(I^r_i (R_i)\) is an upper bound on the interference that may occur within the response time of task \(\tau _i\), via shared hardware resource r, due to tasks executing on the other cores.

The interference term \(I^r_i (R_i)\) depends on: (i) the total resource sensitivity for resource r, denoted by \(S^r_i(R_i,x)\), for the tasks executing on the same core x as task \(\tau _i\) within its response time \(R_i\); and (ii) the total resource stress on resource r, denoted by \(E^r_i(R_i,y)\), that can be produced by tasks executing on each of the other cores y within an interval of length \(R_i\). The total resource sensitivity \(S^r_i(R_i,x)\) is computed based on the jobs that may execute within the worst-case response time of task \(\tau _i\), hence with reference to (1) we have:

\[ S^r_i(R_i,x) = X^r_i + \sum_{\tau_k \in \varGamma_x \cap hp(i)} \left\lceil \frac{R_i}{T_k} \right\rceil X^r_k \tag{3} \]

The total resource stress \(E^r_i(R_i,y)\) due to tasks that execute on another core y in the interval \(R_i\) can be upper bounded as follows. Here, unlike in (3), the worst case does not occur when these tasks are released synchronously, but rather when the resource contention occurs as late as possible for one job of a task, and then as early as possible for subsequent jobs. Further, tasks of any priority can cause interference when executing on other cores. Thus we have:

\[ E^r_i(R_i,y) = \sum_{\tau_j \in \varGamma_y} \left\lceil \frac{R_i + D_j}{T_j} \right\rceil Y^r_j \tag{4} \]

The analysis in (4) does not make any assumptions about how long task \(\tau _j\) needs to execute in order to cause an increase in execution time of up to \(Y^r_j\) in a task running on another core. In particular, there is no assumption that task \(\tau _j\) needs to run for at least \(Y^r_j\), since \(Y^r_j\) is a measure of the maximum increase in execution time of another task due to contention from task \(\tau _j\), not a measure of the time for which task \(\tau _j\) needs to execute to cause that contention.

Assuming that the execution causing contention can occur instantaneously, as is done in (4), is potentially pessimistic; however, it ensures that the analysis is sound even when there is considerable asymmetry between the (small) execution time required to stress a resource and the (large) increase in the execution time of another task that is sensitive to that resource stress. Since \(X^r_k\) represents the maximum sensitivity of a task \(\tau _k\) when subject to continuous interference via resource r from a maximally resource stressing contender on a single other core, the maximum interference from other cores that can impact the response time of task \(\tau _i\) via resource r can be upper bounded by:

\[ I^r_i(R_i) = \sum _{y \ne x} \min \left( S^r_i(R_i,x),\, E^r_i(R_i,y) \right) \tag{5} \]

This is the case, since the maximum interference due to contention from each core y cannot exceed the total resource stress \(E^r_i(R_i,y)\) emanating from that core within a time \(R_i\).
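As an illustration, the CpFPPS-m-D test for a single task can be sketched in Python. This is a minimal sketch under stated assumptions, not a reference implementation: time is measured in integer units, the tasks on each core are listed from highest to lowest priority (so hp(i) is simply the tasks before index i), and (2) to (5) take the forms described above, with (4) counting \(\lceil (R_i + D_j)/T_j \rceil\) contention events per task on another core. The `Task` class, the function names, and the two-core task set are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Task:
    C: int                # stand-alone WCET
    T: int                # period
    D: int                # constrained deadline (D <= T)
    X: Dict[str, int]     # resource sensitivity X^r, per resource r
    Y: Dict[str, int]     # resource stress Y^r, per resource r


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive b."""
    return -(-a // b)


def sensitivity(tasks: List[Task], i: int, R: int, r: str) -> int:
    """S^r_i(R, x), Eq. (3): tasks on core x are listed highest priority first."""
    return tasks[i].X[r] + sum(ceil_div(R, tasks[k].T) * tasks[k].X[r] for k in range(i))


def stress(other: List[Task], R: int, r: str) -> int:
    """E^r_i(R, y), Eq. (4): deadline-based bound on the stress from core y."""
    return sum(ceil_div(R + t.D, t.T) * t.Y[r] for t in other)


def rta_cpfpps_m_d(cores: List[List[Task]], x: int, i: int,
                   resources: List[str]) -> Optional[int]:
    """Upper bound on R_i for task i on core x via Eqs. (2)-(5), or None if R_i > D_i."""
    tasks, ti = cores[x], cores[x][i]
    R = ti.C
    while True:
        demand = ti.C + sum(ceil_div(R, tasks[k].T) * tasks[k].C for k in range(i))
        for r in resources:
            S = sensitivity(tasks, i, R, r)
            # Eq. (5): interference from each other core is capped by both S and E.
            demand += sum(min(S, stress(cores[y], R, r))
                          for y in range(len(cores)) if y != x)
        if demand > ti.D:
            return None
        if demand == R:
            return R
        R = demand


cores = [
    [Task(10, 100, 100, {"bus": 2}, {"bus": 3}),      # core 0, highest priority first
     Task(20, 200, 200, {"bus": 5}, {"bus": 4})],
    [Task(30, 300, 300, {"bus": 10}, {"bus": 10})],   # core 1
]
bounds = [rta_cpfpps_m_d(cores, x, i, ["bus"])
          for x in range(len(cores)) for i in range(len(cores[x]))]
print(bounds)  # [12, 37, 40]
```

For the task on core 1, the interference is capped by its own total sensitivity (10) rather than by the stress that core 0 can produce (14), which illustrates the role of the \(\min\) in (5).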

We refer to the schedulability test given by (2), (3), (4), and (5) as the CpFPPS-m-D test, since this test uses information about the deadlines of the tasks running on other cores.

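Since a schedulable job of task \(\tau _j\) cannot execute beyond its worst-case response time, a more precise analysis may be obtained by substituting \(R_j\) for \(D_j\) in (4):

\[ E^r_i(R_i,y) = \sum _{\tau _j \in \Gamma _y} \left\lceil \frac{R_i + R_j}{T_j} \right\rceil Y^r_j \tag{6} \]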

Using this formulation, the response times of the tasks become interdependent. This problem can be solved via fixed point iteration. Here, an outer iteration starts with \(R_i = C_i\), \(R_j = C_j\), etc. for all tasks in the system, and repeatedly computes the response times for all tasks on all cores, using on the right hand side of (6) the \(R_j\) values from the previous round, until all response times either converge (i.e. are unchanged from the previous round) or one of them exceeds the associated deadline. Since \(E^r_i(R_i, y)\) in (6) is a monotonically non-decreasing function of each \(R_j\), on each round each \(R_j\) value can only increase or remain the same; it cannot decrease. Thus, the outer fixed point iteration is guaranteed either to converge, giving \(R_i \le D_i\) for all tasks in the system, or to result in some \(R_i > D_i\), in which case that task, and hence the system as a whole, is unschedulable. We refer to the schedulability test given by (2), (3), (5), and (6) as the CpFPPS-m-R test, since it uses information about the response times of the tasks running on the other cores.
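The outer fixed point iteration just described might be sketched as follows, using a hypothetical `Task` representation with priority-ordered lists per core and integer time (all names are assumptions for illustration). Each round computes, for every task, the full fixed point of (2), with (6) evaluated using the response times from the previous round. With the illustrative task set below, the bound for the task on core 1 is 37, whereas using \(D_j\) in place of \(R_j\), as in (4), gives 40.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]
    Y: Dict[str, int]


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


Key = Tuple[int, int]  # (core index, priority index on that core)


def inner_rta(cores: List[List[Task]], x: int, i: int, resources: List[str],
              R_prev: Dict[Key, int]) -> int:
    """Fixed point of Eq. (2) for one task, with E from Eq. (6) using R_prev.
    Returns the first value exceeding D_i if the task is unschedulable."""
    tasks, ti = cores[x], cores[x][i]
    R = ti.C
    while True:
        demand = ti.C + sum(ceil_div(R, tasks[k].T) * tasks[k].C for k in range(i))
        for r in resources:
            S = ti.X[r] + sum(ceil_div(R, tasks[k].T) * tasks[k].X[r] for k in range(i))
            for y, other in enumerate(cores):
                if y != x:
                    E = sum(ceil_div(R + R_prev[(y, j)], t.T) * t.Y[r]
                            for j, t in enumerate(other))
                    demand += min(S, E)
        if demand > ti.D or demand == R:
            return demand
        R = demand


def cpfpps_m_r(cores: List[List[Task]], resources: List[str]) -> Optional[Dict[Key, int]]:
    """Outer fixed point iteration of the CpFPPS-m-R test."""
    R_prev = {(x, i): t.C for x, ts in enumerate(cores) for i, t in enumerate(ts)}
    while True:
        R_new = {key: inner_rta(cores, key[0], key[1], resources, R_prev) for key in R_prev}
        if any(R_new[(x, i)] > cores[x][i].D for (x, i) in R_new):
            return None        # some task misses its deadline: system unschedulable
        if R_new == R_prev:
            return R_new       # converged
        R_prev = R_new


cores = [
    [Task(10, 100, 100, {"bus": 2}, {"bus": 3}), Task(20, 200, 200, {"bus": 5}, {"bus": 4})],
    [Task(30, 300, 300, {"bus": 10}, {"bus": 10})],
]
print(cpfpps_m_r(cores, ["bus"]))  # {(0, 0): 12, (0, 1): 37, (1, 0): 37}
```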

4.2 pFPNS schedulability analysis

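In the absence of any cross-core contention and interference via shared hardware resources, the worst-case response time of task \(\tau _i\) under pFPNS can be upper bounded via a sufficient response time analysis (Davis et al. 2007):

\[ R_i = \max _{k \in lep(i)} C_k + \sum _{k \in hp(i)} \left( \left\lfloor \frac{R_i - C_i}{T_k} \right\rfloor + 1 \right) C_k + C_i \tag{7} \]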

Here, we have reformulated the sufficient analysis for FPNS (Davis et al. 2007) into a single equation. The changes involve compacting the blocking term (\(\max ()\)), and bringing the execution time \(C_i\) of the task under analysis into the equation. To compensate for the latter, the time interval in which higher priority tasks can execute is changed to \((R_i - C_i)\). This excludes the time at the end of the interval when task \(\tau _i\) is executing non-preemptively. We also use a \(\lfloor \ \rfloor +1\) formulation rather than \(\lceil \ \rceil \) to avoid the need for a term equal to the time unit granularity.

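Similar to the case for pFPPS in (2), adding cross-core interference considering each resource \(r \in H\), we may compute an upper bound on the worst-case response time as follows:

\[ R_i = \max _{k \in lep(i)} C_k + \sum _{k \in hp(i)} \left( \left\lfloor \frac{R_i - C_i}{T_k} \right\rfloor + 1 \right) C_k + C_i + \sum _{r \in H} I^r_i (R_i) \tag{8} \]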

where \(I^r_i (R_i)\) is an upper bound on the interference that may occur within the response time of task \(\tau _i\), via shared hardware resource r, due to tasks executing on other cores. Here, we make the sound, but potentially pessimistic, assumption that even though the execution time of task \(\tau _i\) may be increased to more than \(C_i\) due to contention, only during the final \(C_i\) time units of the task’s response time are other tasks on core x precluded from executing (i.e. we continue to use \((R_i - C_i)\) in the \(\lfloor \ \rfloor \) function). Further, we use \(R_i\) in the final term, since cross-core contention still occurs during non-preemptive execution.

The interference term \(I^r_i (R_i)\) depends on: (i) the total resource sensitivity for resource r, denoted by \(S^r_i(R_i,x)\), for the tasks executing on the same core x as task \(\tau _i\) within its response time \(R_i\); and (ii) the total resource stress on resource r, denoted by \(E^r_i(R_i,y)\), that can be produced by tasks executing on each of the other cores y within an interval of length \(R_i\). The total resource sensitivity \(S^r_i(R_i,x)\) is computed based on the jobs that may execute within the worst-case response time of task \(\tau _i\), hence with reference to (7) we have:

\[ S^r_i(R_i,x) = \max _{k \in lep(i)} X^r_k + \sum _{k \in hp(i)} \left( \left\lfloor \frac{R_i - C_i}{T_k} \right\rfloor + 1 \right) X^r_k + X^r_i \tag{9} \]

The two equations (4) and (6) for the total resource stress \(E^r_i(R_i,y)\) due to tasks that execute on another core y in the interval \(R_i\) depend only on the task parameters and response times, and not on the scheduling policy per se. Thus, by redefining \(S^r_i(R_i,x)\) according to (9) for the non-preemptive case, we obtain the following pFPNS schedulability tests for the MRSS task model.

The CpFPNS-m-D test given by (8), (9), (4), and (5) makes use of the deadlines of the tasks running on the other cores.

The CpFPNS-m-R test given by (8), (9), (6), and (5) makes use of the response times of the tasks running on the other cores.
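A corresponding sketch of the CpFPNS-m-D test is shown below, again assuming integer time, priority-ordered lists per core, and hypothetical names. It follows the forms of (8) and (9) described above: a blocking term taken as the maximum over lep(i), the \(\lfloor (R_i - C_i)/T_k \rfloor + 1\) count of higher priority jobs, and the task's own \(C_i\) (resp. \(X^r_i\)). The stress term is the deadline-based (4); using the other tasks' response times in place of `t.D`, inside an outer iteration, would give the CpFPNS-m-R variant.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]
    Y: Dict[str, int]


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def rta_cpfpns_m_d(cores: List[List[Task]], x: int, i: int,
                   resources: List[str]) -> Optional[int]:
    """Upper bound on R_i via Eqs. (8), (9), (4), (5); None if R_i > D_i.
    Tasks on each core are listed highest priority first."""
    tasks, ti = cores[x], cores[x][i]
    hp, lep = tasks[:i], tasks[i:]
    R = ti.C
    while True:
        # Higher priority jobs can only be released before tau_i starts its final C_i units.
        jobs = [(R - ti.C) // t.T + 1 for t in hp]
        demand = (max(t.C for t in lep) + ti.C
                  + sum(n * t.C for n, t in zip(jobs, hp)))
        for r in resources:
            S = (max(t.X[r] for t in lep) + ti.X[r]
                 + sum(n * t.X[r] for n, t in zip(jobs, hp)))
            for y, other in enumerate(cores):
                if y != x:
                    E = sum(ceil_div(R + t.D, t.T) * t.Y[r] for t in other)
                    demand += min(S, E)   # contention persists during non-preemptive execution
        if demand > ti.D:
            return None
        if demand == R:
            return R
        R = demand


cores = [
    [Task(10, 100, 100, {"bus": 2}, {"bus": 3}), Task(20, 200, 200, {"bus": 5}, {"bus": 4})],
    [Task(30, 300, 300, {"bus": 10}, {"bus": 10})],
]
print([rta_cpfpns_m_d(cores, x, i, ["bus"])
       for x in range(len(cores)) for i in range(len(cores[x]))])  # [37, 62, 74]
```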

4.3 Composability

The schedulability analyses derived in Sects. 4.1 and 4.2 make use of information about the resource contention due to tasks executing on other cores. In other words, these analyses require that the resource stress (\(Y^r_j\)) values are known for all tasks executing on the other cores, as well as their other parameters, i.e. \(T_j\), \(D_j\), \(R_j\). While this results in tighter response time bounds, it also means that the analyses are not fully composable, since the schedulability of the tasks running on one core depends on the parameters of the tasks running on the other cores. A fully composable analysis can, however, be obtained by redefining (5) as follows:

\[ I^r_i(R_i) = (m-1)\, S^r_i(R_i,x) \tag{10} \]

This equates to assuming a worst-case scenario of resource stressing contenders for each resource r running on every other core. This may be pessimistic on two counts: firstly, the resource stressing contenders may cause significantly more interference than the tasks actually running on the other cores; and secondly, with more than one resource, it may not be possible to maximally stress all resources simultaneously.

Using (10) results in fully composable context-independent schedulability tests. These tests are able to check the schedulability of task sets on each of the m cores in a system, without needing to know any of the parameters of the tasks on the other cores. We refer to the schedulability test given by (2), (3), and (10) as the CpFPPS-m-fc test. Similarly, we refer to the schedulability test given by (8), (9), and (10) as the CpFPNS-m-fc test.
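Because (10) needs only the number of cores m, the fully composable test can be evaluated for one core in isolation. The sketch below (hypothetical names, integer time, tasks listed highest priority first) makes this explicit: its `Task` type carries no resource stress values, and no tasks from other cores are passed in.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]   # only sensitivities are needed; no stress values from other cores


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def rta_cpfpps_m_fc(tasks: List[Task], i: int, m: int,
                    resources: List[str]) -> Optional[int]:
    """CpFPPS-m-fc: Eqs. (2), (3) and (10), using only this core's tasks and m."""
    ti = tasks[i]
    R = ti.C
    while True:
        demand = ti.C + sum(ceil_div(R, tasks[k].T) * tasks[k].C for k in range(i))
        for r in resources:
            S = ti.X[r] + sum(ceil_div(R, tasks[k].T) * tasks[k].X[r] for k in range(i))
            demand += (m - 1) * S   # Eq. (10): a maximal stressor on every other core
        if demand > ti.D:
            return None
        if demand == R:
            return R
        R = demand


core0 = [Task(10, 100, 100, {"bus": 2}), Task(20, 200, 200, {"bus": 5})]
print([rta_cpfpps_m_fc(core0, i, 4, ["bus"]) for i in range(2)])  # [16, 51]
```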

Finally, an intermediate partially composable analysis can be provided if resource access regulation mechanisms (Yun et al. 2013) or budgets are employed to limit the amount of contention emanating from each core. Let \(F^r_i(t, y)\) be the maximum increase in execution time of a resource sensitive contender on another core that can occur due to contention over resource r caused by a resource stressing contender running on core y for a time period of t, subject to resource regulation. Partially composable analysis can be obtained by redefining (5) as follows:

\[ I^r_i(R_i) = \sum _{y \ne x} \min \left( S^r_i(R_i,x),\, F^r_i(R_i,y) \right) \tag{11} \]

Note that this analysis only holds if the resource regulation on each core y never actually limits the accesses to each resource r made by the tasks on that core, over any time interval. Provided that this is guaranteed, no actual runtime enforcement is necessary: the budget function \(F^r_i(t, y)\) simply acts as an intermediate value that permits a separation of concerns and composition. Stated otherwise, the budget function \(F^r_i(t, y)\) becomes a requirement that any set of tasks assigned to core y must guarantee not to exceed. This guarantee is relied upon by the schedulability analysis for tasks executing on the other cores. Hence, the analysis is partially composable: the tasks on core y may be changed or modified provided that the rely-guarantee contract is respected.
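The partially composable variant can be sketched by treating each budget function as an opaque callable, one per other core. The budget shape produced by `regulated` below (a fixed number of units of induced delay per regulation period, plus one extra period to cover a window that is not aligned with the regulation periods) is purely a hypothetical example and is not taken from any particular regulation mechanism; all names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]


Budget = Callable[[int], int]   # F^r(t, y): max induced delay from core y in a window of length t


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def rta_partial(tasks: List[Task], i: int,
                budgets: Dict[str, List[Budget]]) -> Optional[int]:
    """Eqs. (2), (3) and (11): budgets[r] holds one budget function per other core."""
    ti = tasks[i]
    R = ti.C
    while True:
        demand = ti.C + sum(ceil_div(R, tasks[k].T) * tasks[k].C for k in range(i))
        for r, per_core in budgets.items():
            S = ti.X[r] + sum(ceil_div(R, tasks[k].T) * tasks[k].X[r] for k in range(i))
            demand += sum(min(S, F(R)) for F in per_core)
        if demand > ti.D:
            return None
        if demand == R:
            return R
        R = demand


def regulated(period: int, units: int) -> Budget:
    """Hypothetical budget: at most `units` of induced delay per regulation period,
    plus one extra period for a window not aligned with the regulation periods."""
    return lambda t: (ceil_div(t, period) + 1) * units


core0 = [Task(10, 100, 100, {"bus": 2}), Task(20, 200, 200, {"bus": 5})]
budgets = {"bus": [regulated(50, 3)]}   # a single other core
print([rta_partial(core0, i, budgets) for i in range(2)])  # [12, 36]
```

Only the budget functions cross the core boundary here, which mirrors the rely-guarantee contract described above.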

4.4 Dominance relations

A schedulability test S is said to dominate another test Z for a given task model and scheduling algorithm if every task set that is deemed schedulable according to test Z is also deemed schedulable by test S, and there exist some task sets that are deemed schedulable according to test S, but not according to test Z.

Comparing the definitions of \(E^r_i(R_i,y)\) given by (6) for the CpFPPS-m-R and CpFPNS-m-R tests and by (4) for the CpFPPS-m-D and CpFPNS-m-D tests, it is evident that each of the former tests deems schedulable all task sets that are schedulable according to the corresponding latter test. This is the case since, in any schedulable system, the response time of a task is no greater than its deadline (\(R_j \le D_j\)), and hence the \(E^r_i(R_i,y)\) term for the former tests, given by (6), is less than or equal to the equivalent term, given by (4), for the latter tests. Further, it is easy to see that there exist task sets that are schedulable according to each of the former tests, but not according to the corresponding latter test, due to the larger contention contribution emanating from the larger \(E^r_i(R_i,y)\) term in the latter. Hence the CpFPPS-m-R test dominates the CpFPPS-m-D test, and the CpFPNS-m-R test dominates the CpFPNS-m-D test.

Comparing the definitions of \(I^r_i(R_i)\) given by (5) for the CpFPPS-m-D and CpFPNS-m-D tests and by (10) for the CpFPPS-m-fc and CpFPNS-m-fc tests, it is evident that each of the former tests deems schedulable all task sets that are schedulable according to the corresponding latter test, since each of the \(m-1\) terms \(\min (S^r_i(R_i,x), E^r_i(R_i,y))\) in (5) is no greater than \(S^r_i(R_i,x)\), and hence (5) is no greater than (10). Further, it is easy to see that there exist task sets that are schedulable according to each of the former tests, but not according to the corresponding latter test, due to the larger contention contribution emanating from the larger \(I^r_i(R_i)\) term in the latter. Hence the CpFPPS-m-D test dominates the CpFPPS-m-fc test, and the CpFPNS-m-D test dominates the CpFPNS-m-fc test.

As dominance is transitive, we have: CpFPPS-m-R \(\rightarrow \) CpFPPS-m-D \(\rightarrow \) CpFPPS-m-fc and CpFPNS-m-R \(\rightarrow \) CpFPNS-m-D \(\rightarrow \) CpFPNS-m-fc, where \(S \rightarrow Z\) indicates that test S dominates test Z.

Finally, consider a system of m cores and a system of \(m+1\) cores, where in each case the first m cores execute exactly the same sets of tasks, and the \(m+1\) core system has extra tasks that execute on core \(m+1\). There is a dominance relationship between these systems as analysed by any of the schedulability tests: adding a core, and the contention that it brings, cannot improve schedulability for the tasks running on the existing cores, but may make it worse. Schedulability for m cores thus dominates that for \(m+1\) cores with added tasks: Cp Sched-m-X \(\rightarrow \) Cp Sched-\((m+1)\)-X.

4.5 Complexity

The standard response time analysis (Joseph and Pandya 1986; Audsley et al. 1993) for FPPS on a single-core processor, given by (1), has pseudo-polynomial complexity of \(O(n^2 D^{max})\), where n is the number of tasks and \(D^{max}\) is the longest deadline of any task in the system. This can be seen by observing that there are n tasks for which response times need to be determined, and on each fixed-point iteration there is a summation over at most n tasks. Further, on each fixed-point iteration the response time can either increase by at least 1, or remain the same, in which case iteration terminates. Since iteration also terminates when the deadline is exceeded, the maximum number of iterations is bounded by \(D^{max}\). Hence the overall complexity of the test is \(O(n^2 D^{max})\). The sufficient response time test for FPNS (Davis et al. 2007) similarly has pseudo-polynomial complexity of \(O(n^2 D^{max})\). Considering partitioned scheduling on multi-core systems, with m cores, at most n tasks per core, and no cross-core contention or interference, these tests have \(O(mn^2 D^{max})\) complexity.

The schedulability tests for the MRSS task model are derived from the above tests; however, they also consider cross-core contention and interference over |H| shared hardware resources.

The CpFPPS-m-fc and CpFPNS-m-fc tests have pseudo-polynomial complexity of \(O(m|H|n^2 D^{max})\). This can be seen by observing that there are at most mn tasks for which response times need to be determined, and on each fixed-point iteration of (2) or (8) the interference term involves a nested summation over |H| resources, no summation over m cores – see (10), and lastly summation over n tasks within the expression for \(S^r_i(R_i,x)\). Finally, the maximum number of fixed point iterations is again bounded by \(D^{max}\).

The CpFPPS-m-D and CpFPNS-m-D tests have pseudo-polynomial complexity of \(O(m^2|H|n^2 D^{max})\). This can be seen by observing that there are at most mn tasks for which response times need to be determined, and on each fixed-point iteration of (2) or (8) the interference term involves a nested summation over |H| resources, m cores, and lastly over n tasks within the expressions for \(E^r_i(R_i,y)\) and \(S^r_i(R_i,x)\). Finally, the maximum number of fixed point iterations is again bounded by \(D^{max}\).

The CpFPPS-m-R and CpFPNS-m-R tests were described in Sects. 4.1 and 4.2 as requiring nested fixed point iterations to compute the interdependent response times. The efficiency of these tests can however be improved by considering the monotonicity of the expressions on the right hand side of each of the equations with respect to the values of both \(R_i\) and \(R_j\). This means that the tests can be implemented via an outer loop that performs fixed point iteration, combined with a simple inner loop that iterates over all of the tasks in the system. Below, we describe this implementation in more detail, followed by the complexity of the CpFPPS-m-R and CpFPNS-m-R tests.

In an efficient implementation of the CpFPPS-m-R test (resp. CpFPNS-m-R test), the outer loop iteration starts with \(R_i = C_i\), \(R_j = C_j\), etc. for all tasks. The inner loop iterates over all tasks; for each task, it computes an updated response time by evaluating (2) (resp. (8)) just once, using the \(R_i\) and \(R_j\) values from the previous outer loop iteration. Since the right hand sides of (2), (3), (5), and (6) (resp. (8), (9), (5), and (6)) are monotonically non-decreasing functions of \(R_i\) and \(R_j\), on each outer loop iteration the response time value for each task can only increase or remain the same; it cannot decrease. Hence, the outer loop fixed point iteration is guaranteed to terminate, either due to convergence (i.e. all response times are unchanged from the previous iteration), indicating a schedulable system, or because the response time of at least one task has exceeded its deadline.

Observe that on each iteration of the outer loop, the response time of at least one task must increase by at least 1, otherwise the response times have converged and the test terminates. The maximum number of outer loop iterations is therefore upper bounded by \(mnD^{max}\). The inner loop evaluates (2) (resp. (8)) once for each of at most mn tasks, with each such evaluation requiring O(m|H|n) operations. It follows that the CpFPPS-m-R test and the CpFPNS-m-R test have pseudo-polynomial complexity of \(O(m^3|H|n^3 D^{max})\).
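The single outer loop described above could look as follows in Python (a sketch with hypothetical names, integer time, and priority-ordered lists per core). Each task's right hand side of (2) is evaluated exactly once per outer iteration, using the previous iteration's values of both \(R_i\) and \(R_j\); the returned iteration counter makes the number of outer loop iterations observable.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]
    Y: Dict[str, int]


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


Key = Tuple[int, int]  # (core index, priority index on that core)


def rhs(cores: List[List[Task]], x: int, i: int, resources: List[str],
        R: Dict[Key, int]) -> int:
    """One evaluation of the right hand side of (2), with (3), (5) and (6), using the
    previous iteration's response times for tau_i and for the tasks on other cores."""
    tasks, ti, Ri = cores[x], cores[x][i], R[(x, i)]
    value = ti.C + sum(ceil_div(Ri, tasks[k].T) * tasks[k].C for k in range(i))
    for r in resources:
        S = ti.X[r] + sum(ceil_div(Ri, tasks[k].T) * tasks[k].X[r] for k in range(i))
        for y, other in enumerate(cores):
            if y != x:
                E = sum(ceil_div(Ri + R[(y, j)], t.T) * t.Y[r] for j, t in enumerate(other))
                value += min(S, E)
    return value


def cpfpps_m_r_flat(cores: List[List[Task]], resources: List[str]):
    """Single outer loop; returns (response times or None, number of outer iterations)."""
    R = {(x, i): t.C for x, ts in enumerate(cores) for i, t in enumerate(ts)}
    iterations = 0
    while True:
        iterations += 1
        R_new = {key: rhs(cores, key[0], key[1], resources, R) for key in R}
        if any(R_new[(x, i)] > cores[x][i].D for (x, i) in R_new):
            return None, iterations
        if R_new == R:
            return R, iterations
        R = R_new


cores = [
    [Task(10, 100, 100, {"bus": 2}, {"bus": 3}), Task(20, 200, 200, {"bus": 5}, {"bus": 4})],
    [Task(30, 300, 300, {"bus": 10}, {"bus": 10})],
]
R, n = cpfpps_m_r_flat(cores, ["bus"])
print(R, n)  # {(0, 0): 12, (0, 1): 37, (1, 0): 37} 2
```

For this illustrative task set the loop converges after two outer iterations.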

Comparing the complexity of the tests for the MRSS task model to those for partitioned fixed priority scheduling with no contention, we observe that: (i) the complexity of the CpFPPS-m-fc and CpFPNS-m-fc tests is higher by a factor of |H|, (ii) the complexity of the CpFPPS-m-D and CpFPNS-m-D tests is higher by a factor of m|H|, and (iii) the complexity of the CpFPPS-m-R and CpFPNS-m-R tests is higher by a factor of \(m^2|H|n\). Given the high performance of the standard response time tests for fixed priority scheduling (Davis et al. 2008), in practice, all of the tests for the MRSS task model scale well to realistic system sizes.

5 Priority assignment

To maximize schedulability it is necessary to assign task priorities in an optimal way (Davis et al. 2016). This section considers optimal priority assignment for the schedulability tests introduced in Sect. 4.

5.1 pFPPS priority assignment

Leung and Whitehead (1982) showed that Deadline Monotonic Priority Ordering (DMPO) is optimal for constrained-deadline task sets with parameters (C, D, T) under fixed priority preemptive scheduling. We observe that this result also holds for constrained-deadline MRSS task sets compliant with the model described in Sect. 3 and analysed according to the CpFPPS-m-fc test introduced in Sect. 4.3. This is because that formulation can be re-arranged to match the basic response time analysis (1), with the execution time of each task \(\tau _k\) increased by \(\sum _{r \in H}(m-1)X^r_k\).
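The re-arrangement can be written out by substituting (3) and (10) into (2) and grouping the terms that belong to each task:

\[
\begin{aligned}
R_i &= C_i + \sum _{k \in hp(i)} \left\lceil \frac{R_i}{T_k} \right\rceil C_k + \sum _{r \in H} (m-1) \Big( X^r_i + \sum _{k \in hp(i)} \left\lceil \frac{R_i}{T_k} \right\rceil X^r_k \Big) \\
&= C'_i + \sum _{k \in hp(i)} \left\lceil \frac{R_i}{T_k} \right\rceil C'_k, \qquad \text{where } C'_k = C_k + \sum _{r \in H} (m-1) X^r_k .
\end{aligned}
\]

This is (1) with each stand-alone execution time replaced by the inflated value \(C'_k\) (notation introduced here for illustration only), so the classical DMPO optimality argument applies unchanged.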

DMPO is also optimal for constrained-deadline MRSS task sets analysed according to the CpFPPS-m-D test, introduced in Sect. 4.1. Proof is given below using the standard apparatus for proving the optimality of such priority orderings, as described in Sect. IV of the review by Davis et al. (2016). This proof technique is applicable in cases where task priorities can be defined directly from fixed task parameters, for example periods and deadlines. To show that a priority assignment policy P (i.e. DMPO) is optimal, it suffices to prove that any task set that is schedulable according to the schedulability test considered using some priority assignment policy Q is also schedulable using priority ordering P. Proof is obtained by transforming priority ordering Q into priority ordering P, while ensuring that no tasks become unschedulable during the transformation. The proof proceeds by induction.

Theorem 1

Deadline Monotonic Priority Ordering is optimal for constrained-deadline MRSS task sets compliant with the model described in Sect. 3 and analysed according to the CpFPPS-m-D test introduced in Sect. 4.1.

Proof

Base case: The task set is schedulable with priority order \(Q = Q^0\).

Inductive step: Assume that the task set is schedulable with priority order \(Q^k\), where k is the iteration count. We select a pair of tasks that are at adjacent priorities i and j, where \(j = i + 1\), in priority ordering \(Q^k\), but out of Deadline Monotonic relative priority order. Let these tasks be \(\tau _A\) and \(\tau _B\), with \(\tau _A\) having the higher priority i in \(Q^k\) and \(\tau _B\) having priority j. Note that \(D_A > D_B\) as the tasks are out of Deadline Monotonic relative order. We need to prove that all of the tasks remain schedulable with priority order \(Q^{k+1}\), which switches the priorities of these two tasks. There are four groups of tasks to consider:

hp(i): tasks in this set have higher priorities than both \(\tau _A\) and \(\tau _B\) in both \(Q^{k}\) and \(Q^{k+1}\). Since the schedulability of these tasks is unaffected by the relative priority ordering of \(\tau _A\) and \(\tau _B\), they remain schedulable in \(Q^{k+1}\).

\(\tau _A\): Let \(w = R_B\) be the response time of task \(\tau _B\) in priority order \(Q^{k}\). Since task \(\tau _B\) is schedulable in \(Q^{k}\), we have \(w=R_B \le D_B < D_A \le T_A\); hence in (2), the contribution from \(\tau _A\) within the response time of \(\tau _B\) is exactly one job (i.e. \(C_A\)), and there is also a contribution of \(C_B\) from task \(\tau _B\) itself. Considering interference, the total resource sensitivity \(S^r_B(w,x)\) given by (3) depends only on the value w and the fixed parameters of the set of tasks with priorities higher than or equal to that of task \(\tau _B\) in \(Q^{k}\), that is \(\tau _A\), \(\tau _B\), and hp(i). Further, the total resource stress \(E^r_B(w,y)\) due to tasks executing on some other core y, given by (4), depends only on the value of w and the fixed parameters of the tasks executing on that core. It follows that the interference term \(I^r_B(w)\) given by (5) and used in (2) depends only on the value of w, the fixed parameters of the set of tasks \(\tau _A\), \(\tau _B\), and hp(i), and the fixed parameters of the tasks executing on all other cores. Now consider the response time of task \(\tau _A\) under priority order \(Q^{k+1}\). The value w is a solution of (2) for task \(\tau _A\), since there is exactly the same contribution from tasks \(\tau _A\), \(\tau _B\), and all the higher priority tasks, and the interference due to resource contention is the same; in other words, \(I^r_B(w)\) for \(Q^{k}\) equates to \(I^r_A(w)\) for \(Q^{k+1}\), since the value of w is the same and the set of tasks that this term depends upon is unchanged (\(\tau _A\), \(\tau _B\), and hp(i) on core x, and all of the tasks on the other cores). As the response time is the smallest solution of (2), \(R_A \le w\). Since \(w < D_A\), task \(\tau _A\) remains schedulable.

\(\tau _B\): As the priority of \(\tau _B\) has increased, its response time is no greater in \(Q^{k+1}\) than in \(Q^{k}\). This is the case because the only changes to the response time calculation for \(\tau _B\) are the removal of the contribution from task \(\tau _A\), and the removal of its contribution to the total resource sensitivity, and hence to the interference term \(I^r_B(w)\). Thus \(\tau _B\) remains schedulable.

lp(j): tasks in this set have lower priorities than tasks \(\tau _A\) and \(\tau _B\) in both \(Q^{k}\) and \(Q^{k+1}\). Since the schedulability of these tasks is unaffected by the relative priority ordering of tasks \(\tau _A\) and \(\tau _B\), they remain schedulable.

All tasks therefore remain schedulable in \(Q^{k+1}\).

At most \(k = n(n -1)/ 2\) steps are required to transform priority ordering Q into P without any loss of schedulability \(\square \)

Next, we consider optimal priority assignment with respect to the CpFPPS-m-R test introduced in Sect. 4.1. Davis and Burns (2011) proved that Audsley's Optimal Priority Assignment (OPA) algorithm (Audsley 2001) is applicable to a schedulability test if and only if the test meets the following three simple conditions.

-

Condition 1: The schedulability of a task according to the test must be independent of the relative priority order of higher priority tasks.

-

Condition 2: The schedulability of a task according to the test must be independent of the relative priority order of lower priority tasks.

-

Condition 3: The schedulability of a task according to the test must not get worse if the task is moved up one place in the priority order (i.e. its priority is swapped with that of the task immediately above it in the priority order).

Theorem 2

The CpFPPS-m-R test, given in Sect. 4.1, is not compatible with Audsley's Optimal Priority Assignment (OPA) algorithm (Audsley 2001), and hence that algorithm cannot be used to obtain an optimal priority assignment with respect to the test.

Proof

To prove non-compatibility, it suffices to show that any one of the three conditions set out by Davis and Burns (2011), and listed above, is broken by the test. In this case, we show that Condition 1 does not hold. According to the CpFPPS-m-R test, the schedulability of a task \(\tau _i\) on core x can depend on the response time \(R_j\) of a task \(\tau _j\) on a different core y via the term \(E^r_i(R_i,y)\) given by (6). In turn, the response time of task \(\tau _j\) can depend on the response time \(R_k\) of some higher priority task \(\tau _k\) on the same core x as task \(\tau _i\) via the term \(E^r_j(R_j,x)\), also given by (6). Since the response time of task \(\tau _k\) depends on its relative priority order among those tasks with higher priority than task \(\tau _i\), Condition 1 does not hold, and therefore the CpFPPS-m-R test is not compatible with Audsley's OPA algorithm \(\square \)

Although the CpFPPS-m-R test is not compatible with Audsley’s OPA algorithm, the form of the test, with its dependence on the response times of other tasks, means that a back-tracking search, as proposed by Davis and Burns (2010), could potentially be used to obtain a schedulable priority assignment without having to explore all possible priority orderings. The same applies to the CpFPNS-m-R test discussed in Sect. 5.2.

5.2 pFPNS priority assignment

George et al. (1996) showed that Deadline Monotonic Priority Ordering (DMPO) is not optimal for constrained-deadline task sets with parameters (C, D, T) under fixed priority non-preemptive scheduling, and proved that Audsley's algorithm (Audsley 2001) is able to provide an optimal priority ordering in this case. We observe that this result also holds for constrained-deadline MRSS task sets compliant with the model described in Sect. 3 and analysed according to the CpFPNS-m-fc test introduced in Sect. 4.3. This is the case because the formulation can be re-arranged to match the basic response time analysis (7), with the execution time of each task \(\tau _k\) increased by \(\sum _{r \in H}(m-1)X^r_k\). Audsley's algorithm (Audsley 2001) is also optimal with respect to the CpFPNS-m-D test, as proved below.

Theorem 3

Audsley’s algorithm (Audsley 2001) is optimal for constrained-deadline MRSS task sets compliant with the model described in Sect. 3 and analysed according to the CpFPNS-m-D test introduced in Sect. 4.2.

Proof

It suffices to show that the schedulability test meets the three conditions given by Davis and Burns (2011) and set out in Sect. 5.1. With respect to Condition 1 and Condition 2, inspection of (8) shows that the first two terms are dependent on the set of lower and equal priority tasks lep(i) and the set of higher priority tasks hp(i) respectively, but do not depend on the relative priority order of the tasks within those sets. Considering the fourth term in (8), \(I^r_i(t)\) is given by (5). In the definition of \(I^r_i(t)\), the total resource sensitivity \(S^r_i(t,x)\) is given by (9), which is dependent on the set of tasks lep(i) and the set of tasks hp(i), but does not depend on the relative priority order of the tasks within those sets. Finally, the total resource stress \(E^r_i(t,y)\) given by (4) has no dependence on the relative priority order of the tasks in the sets hp(i) and lep(i) (or lp(i)); thus Condition 1 and Condition 2 hold.

With respect to Condition 3, moving task \(\tau _i\) up one place in the priority order is equivalent to moving another task \(\tau _h\) that also executes on core x from the set hp(i) to the set lep(i). Considering (8), such a change may increase the first term by no more than \(C_h\), but is guaranteed to also reduce the second term by at least \(C_h\). Further, with respect to the total resource sensitivity \(S^r_i(t,x)\), given by (9), such a change may increase the first term by no more than \(X^r_h\), but is guaranteed to also reduce the second term by at least \(X^r_h\). There is no change to the total resource stress \(E^r_i(t,y)\) given by (4). Hence the schedulability of task \(\tau _i\) cannot get worse if the task is moved up one place in the priority order \(\square \)
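Audsley's OPA algorithm can be sketched for one core under the CpFPNS-m-D test as follows. Because that test depends on the tasks of the other cores only through their fixed parameters, those tasks can be passed in unchanged while priorities on core x are assigned from the lowest level upwards. The names, integer time, and task set are hypothetical, and the test follows the forms of (8), (9), (4), and (5) described above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(eq=False)   # compare tasks by identity, so equal-parameter tasks stay distinct
class Task:
    C: int
    T: int
    D: int
    X: Dict[str, int]
    Y: Dict[str, int]


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def np_bound(ti: Task, hp: List[Task], lp: List[Task],
             others: List[List[Task]], resources: List[str]) -> Optional[int]:
    """CpFPNS-m-D bound, Eqs. (8), (9), (4), (5), for ti given its hp and lp sets."""
    lep = lp + [ti]
    R = ti.C
    while True:
        jobs = [(R - ti.C) // t.T + 1 for t in hp]
        demand = max(t.C for t in lep) + ti.C + sum(n * t.C for n, t in zip(jobs, hp))
        for r in resources:
            S = (max(t.X[r] for t in lep) + ti.X[r]
                 + sum(n * t.X[r] for n, t in zip(jobs, hp)))
            for other in others:
                demand += min(S, sum(ceil_div(R + t.D, t.T) * t.Y[r] for t in other))
        if demand > ti.D:
            return None
        if demand == R:
            return R
        R = demand


def audsley_opa(tasks: List[Task], others: List[List[Task]],
                resources: List[str]) -> Optional[List[Task]]:
    """Assign priorities lowest first; return the highest-to-lowest order, or None."""
    unassigned, low_to_high = list(tasks), []
    while unassigned:
        for cand in unassigned:
            hp = [t for t in unassigned if t is not cand]   # all others assumed higher
            if np_bound(cand, hp, low_to_high, others, resources) is not None:
                low_to_high.append(cand)
                unassigned.remove(cand)
                break
        else:
            return None   # no remaining task is schedulable at this priority level
    return low_to_high[::-1]


core0 = [Task(10, 100, 100, {"bus": 2}, {"bus": 3}), Task(20, 200, 200, {"bus": 5}, {"bus": 4})]
core1 = [Task(30, 300, 300, {"bus": 10}, {"bus": 10})]
order = audsley_opa(core0, [core1], ["bus"])
print([t.D for t in order])  # [200, 100]
```

OPA returns the first schedulable ordering it finds; for this task set that ordering is not deadline monotonic, which is acceptable since any schedulable ordering suffices.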

Finally, we note that the CpFPNS-m-R test is not compatible with Audsley’s OPA algorithm, since it breaks Condition 1, as proven below.

Theorem 4

The CpFPNS-m-R test, given in Sect. 4.2, is not compatible with Audsley's Optimal Priority Assignment (OPA) algorithm (Audsley 2001), and hence that algorithm cannot be used to obtain an optimal priority assignment with respect to the test.

Proof

Proof follows via exactly the same argument as given in the proof of Theorem 2 in Sect. 5.1, by replacing the words “CpFPPS-m-R test” with the words “CpFPNS-m-R test” \(\square \)

6 Evaluation

In this section, we present an empirical evaluation of the schedulability tests introduced in Sect. 4 for MRSS task sets executing on a multi-core system, assuming a single hardware resource shared between all cores. (Note, multiple shared hardware resources resulting in the same total interference would have the same impact on schedulability, due to the summation terms in (2) and (8).) Experiments were performed for 1, 2, 3, and 4 cores, with the single-core case considered for comparison purposes.

6.1 Task set parameter generation

The task set parameters used in our experiments were generated as follows:

-

Task utilizations (\(U_i = C_i/T_i\)) were generated using the Dirichlet-Rescale (DRS) algorithm (Griffin et al. 2020b) (open source Python software (Griffin et al. 2020a)) providing an unbiased distribution of utilization values that sum to the total utilization U required.

-

Task periods \(T_i\) were generated according to a log-uniform distribution (Emberson et al. 2010) with a factor of 100 difference between the minimum and maximum possible period. This represents a spread of task periods from 10 ms to 1 s, as found in many real-time applications. (When considering non-preemptive scheduling, a factor of 10 difference was used; otherwise, most task sets would not be schedulable.)

-

Task deadlines \(D_i\) were set equal to their periods \(T_i\).

-

The stand-alone execution time of each task was given by: \(C_i=U_i \cdot T_i\).

-

Task resource sensitivity values \(X^r_i\) were determined as follows. The DRS algorithm was used to generate task resource sensitivity utilization values \(V^r_i\), such that the total resource sensitivity utilization was SF (the Sensitivity Factor, default \(SF=0.25\)) times the total task utilization (i.e. \(\sum _{\forall i}V^r_i = U \cdot SF\)), and each individual task resource sensitivity utilization was upper bounded by the corresponding task utilization (i.e. \(V^r_i \le U_i\)). Each task resource sensitivity value was then given by \(X^r_i=V^r_i \cdot T_i\).

-

Task resource stress values \(Y^r_i\) were set to a fixed proportion of the corresponding resource sensitivity value \(Y^r_i = X^r_i \cdot RF\), where RF is the Stress Factor, default \(RF = 0.5\).

The default value for the Sensitivity Factor (\(SF=0.25\)) was set to approximately twice the average value (13.6%) obtained for the tasks in the proof-of-concept industrial case study described in Sect. 7. This is justified since the case study considers a single shared hardware resource, whereas in practice contention would likely occur via multiple shared hardware resources, resulting in higher levels of interference. The default value for the Stress Factor (\(RF = 0.5\)) was set within the range found in the case study (0.23 to 1.58). Further, specific experiments were also used to evaluate performance over a wide range of values for these parameters.
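The generation procedure for the task set on a single core might be sketched as follows. UUniFast is used here purely as a simple, self-contained stand-in for the DRS algorithm used in the experiments: it samples uniformly from the simplex but has no per-value upper bounds, so the constraint \(V^r_i \le U_i\) is enforced by rejection sampling. The function names and the dictionary representation of tasks are hypothetical; for the non-preemptive experiments, `t_max` would be set to 100 to obtain the factor of 10 spread.

```python
import math
import random
from typing import List, Optional


def uunifast(n: int, total: float, rng: random.Random) -> List[float]:
    """UUniFast (Bini and Buttazzo): n values, uniform on the simplex, summing to total."""
    values, remaining = [], total
    for i in range(1, n):
        nxt = remaining * rng.random() ** (1.0 / (n - i))
        values.append(remaining - nxt)
        remaining = nxt
    values.append(remaining)
    return values


def log_uniform(lo: float, hi: float, rng: random.Random) -> float:
    return math.exp(rng.uniform(math.log(lo), math.log(hi)))


def generate_core(n: int, U: float, SF: float = 0.25, RF: float = 0.5,
                  t_min: float = 10.0, t_max: float = 1000.0,
                  rng: Optional[random.Random] = None,
                  max_tries: int = 10_000) -> List[dict]:
    rng = rng or random.Random()
    Us = uunifast(n, U, rng)                       # task utilizations, sum = U
    for _ in range(max_tries):
        Vs = uunifast(n, U * SF, rng)              # sensitivity utilizations, sum = U * SF
        if all(v <= u for v, u in zip(Vs, Us)):     # enforce V_i <= U_i by rejection
            break
    else:
        raise RuntimeError("could not satisfy V_i <= U_i")
    tasks = []
    for u, v in zip(Us, Vs):
        T = log_uniform(t_min, t_max, rng)         # period in ms, D = T
        X = v * T                                  # resource sensitivity X_i = V_i * T_i
        tasks.append({"C": u * T, "T": T, "D": T, "X": X, "Y": X * RF})
    return tasks


ts = generate_core(10, 0.6, rng=random.Random(42))
print(round(sum(t["C"] / t["T"] for t in ts), 6))  # 0.6
```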

6.2 Experiments

The experiments considered systems with 1, 2, 3, and 4 cores, with a different task set (generated according to the same parameters) assigned to each core. The per core task set utilization U (shown on the x-axis of the graphs) was varied from 0.05 to 0.95. For each utilization value examined, 1000 task sets were generated for each core considered (100 in the case of experiments using the weighted schedulability measure (Bastoni et al. 2010)). The default cardinality of the task sets on each core was \(n=10\).

In the experiments, a system was deemed schedulable if and only if the different task sets assigned to each of its m cores were schedulable, i.e. if all \(m \cdot n\) tasks in the system were schedulable. With a single core, there is no cross-core resource contention and hence no interference, and so task set schedulability can be determined via standard response time analysis. With multiple cores, contention and the resulting interference was modelled as described in Sect. 3. The experiments investigated the performance of the following schedulability tests for partitioned fixed priority preemptive and non-preemptive scheduling:

- No-CpFPPS-m: The exact analysis of pFPPS (Joseph and Pandya 1986; Audsley et al. 1993) with no contention, recapped in Sect. 4.1, and given by (1).
- CpFPPS-m-R: The response time based analysis of pFPPS with contention, introduced in Sect. 4.1, and given by (2), (3), (5), and (6).
- CpFPPS-m-D: The deadline based analysis of pFPPS with contention, introduced in Sect. 4.1, and given by (2), (3), (4), and (5).
- CpFPPS-m-fc: The fully composable analysis of pFPPS with contention, introduced in Sect. 4.3, and given by (2), (3), and (10).
- No-CpFPNS-m: The sufficient analysis of pFPNS (Davis et al. 2007) with no contention, recapped in Sect. 4.2, and given by (7).
- CpFPNS-m-R: The response time based analysis of pFPNS with contention, introduced in Sect. 4.2, and given by (8), (9), (5), and (6).
- CpFPNS-m-D: The deadline based analysis of pFPNS with contention, introduced in Sect. 4.2, and given by (8), (9), (4), and (5).
- CpFPNS-m-fc: The fully composable analysis of pFPNS with contention, introduced in Sect. 4.3, and given by (8), (9), and (10).

For consistency of comparison, Deadline Monotonic Priority Ordering (DMPO) (Leung and Whitehead 1982) was used to assign priorities to tasks on the individual cores. As shown in Sect. 5, DMPO is optimal with respect to the No-CpFPPS-m, CpFPPS-m-fc, and CpFPPS-m-D tests, but only a heuristic policy with respect to the CpFPPS-m-R test and the tests for fixed priority non-preemptive scheduling.
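The no-contention baseline combined with the system-level criterion described above can be sketched as follows. This is an illustrative sketch, not the authors' tooling: tasks are assumed to be (C, T, D) tuples with constrained deadlines, priorities are assigned by DMPO, and each response time is found with the standard fixed-point recurrence \(R_i = C_i + \sum_{j \in hp(i)} \lceil R_i / T_j \rceil C_j\) of Joseph and Pandya (1986). The contention terms used by the CpFPPS-m tests are deliberately omitted, and all function names are hypothetical.

```python
import math


def dmpo(tasks):
    """Deadline Monotonic Priority Ordering: shorter deadline, higher priority."""
    return sorted(tasks, key=lambda task: task[2])


def response_time(i, tasks):
    """Response time of task i on one core with no contention, or None if it
    would exceed the deadline. tasks: (C, T, D) tuples, index 0 highest priority."""
    C, _, D = tasks[i]
    R = C
    while True:
        # R = C_i + sum over higher priority tasks j of ceil(R / T_j) * C_j
        R_next = C + sum(math.ceil(R / Tj) * Cj for Cj, Tj, _ in tasks[:i])
        if R_next > D:
            return None
        if R_next == R:
            return R
        R = R_next


def core_schedulable(tasks):
    ordered = dmpo(tasks)
    return all(response_time(i, ordered) is not None for i in range(len(ordered)))


def system_schedulable(per_core_task_sets):
    """An m-core system is deemed schedulable only if every core's task set is."""
    return all(core_schedulable(ts) for ts in per_core_task_sets)


core_a = [(3, 12, 12), (1, 4, 4), (2, 6, 6)]
core_b = [(2, 4, 4), (3, 5, 5)]
print(system_schedulable([core_a]), system_schedulable([core_a, core_b]))
```

Here `core_a` alone is schedulable (response times 1, 3, and 10), but adding the unschedulable `core_b` makes the two-core system unschedulable, which is why the success ratio falls with m even without contention.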

Note that the results for the fully composable analyses (tests CpFPPS-m-fc and CpFPNS-m-fc) equate to the performance obtained using resource sensitivity information only, as outlined in prior works (Radojkovic et al. 2012; Fernández et al. 2012; Nowotsch and Paulitsch 2012; Iorga et al. 2020).

6.3 Results

In the first experiment, we compared the performance of the various schedulability tests for systems with 1, 2, 3, and 4 cores, using the default parameters given in Sect. 6.1. The Success Ratio, i.e. the percentage of generated systems that were deemed schedulable, is shown for each of the pFPPS schedulability tests in Fig. 1, and for the pFPNS schedulability tests in Fig. 2. The dominance relationships between the tests, discussed in Sect. 4.4, are evident in the relative positions of the lines on the graphs. Note that schedulability depends on the number of cores even when contention is not taken into account, because for an m-core system the task sets on all m cores have to be schedulable for the system to be deemed schedulable.
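For the no-contention tests, and under the simplifying assumption that the per-core task sets are generated independently, this effect is simply a product of per-core probabilities. Writing p for the single-core success ratio at a given utilization (an illustrative symbol, not one used elsewhere in the paper), a worked example is:

```latex
\Pr[\text{system schedulable}]
  = \prod_{x=1}^{m} \Pr[\text{task set on core } x \text{ schedulable}]
  = p^{m},
\qquad \text{e.g. } p = 0.9,\ m = 4:\quad 0.9^{4} = 0.6561 .
```

With contention the per-core outcomes are no longer independent, so this product only describes the No-CpFPPS-m and No-CpFPNS-m curves.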

Observe that the performance advantage that the context-dependent tests have over their context-independent counterparts is more pronounced with pFPPS than with pFPNS. The reason is that, with pFPNS, the increased response times due to the blocking factor mean that the critical task(s) (those that become unschedulable as utilization is increased) are much more likely to be medium or high priority tasks than is the case with pFPPS. For higher priority tasks, the balance between the total resource sensitivity \(S^r_i(R_i,x)\) and the total resource stress \(E^r_i(R_i,y)\) tends towards the latter being larger, since \(E^r_i(R_i,y)\) includes a contribution from all of the tasks on core y, while the only lower priority task contributing to \(S^r_i(R_i,x)\) is a single blocking task in the case of pFPNS, and there are none at all in the case of pFPPS. When \(E^r_i(R_i,y)\) exceeds \(S^r_i(R_i,x)\), the performance of the context-dependent tests is reduced to that of their context-independent counterparts.

In the second set of experiments, we used the weighted schedulability measure (Bastoni et al. 2010) to assess schedulability test performance while varying an additional parameter, with all other parameters set to their default values given in Sect. 6.1. In all of the weighted schedulability experiments, the relative performance of the different tests follows the pattern illustrated in the first experiment, as dictated by the dominance relationships.
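For reference, the weighted schedulability measure of Bastoni et al. (2010) weights each schedulability outcome by the utilization of the system concerned, \(W(p) = \sum_{\tau} U(\tau)\, S(\tau, p) / \sum_{\tau} U(\tau)\), where \(S(\tau, p) \in \{0, 1\}\) indicates whether system \(\tau\) is deemed schedulable for parameter value p. A minimal Python sketch, with a hypothetical function name and input format, is:

```python
def weighted_schedulability(results):
    """results: list of (U, schedulable) pairs, one per generated system,
    for one value of the varied parameter. Returns sum(U * S) / sum(U)."""
    weighted = sum(u * (1.0 if ok else 0.0) for u, ok in results)
    total = sum(u for u, _ in results)
    return weighted / total


# Hypothetical outcomes: the two lower-utilization systems pass, the others fail.
print(weighted_schedulability([(0.2, True), (0.4, True), (0.6, False), (0.8, False)]))
```

This yields 0.3 (up to floating-point rounding), whereas the unweighted success ratio would be 0.5: failures at high utilization count for more, which is what lets a single number summarize a whole success-ratio curve.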

The results of varying the Sensitivity Factor SF from 0.05 to 0.5 in steps of 0.05 are shown in Fig. 3 for pFPPS and Fig. 4 for pFPNS. Recall that the Sensitivity Factor determines the ratio of the total resource sensitivity utilization to the total task utilization. As expected, increasing the Sensitivity Factor, and hence the amount of interference that tasks can be subject to due to cross-core contention for resources, results in a rapid decline in the weighted schedulability measure for all of the tests that take contention into account.

The results of varying the Stress Factor RF from 0 to 1.2 in steps of 0.1 are shown in Fig. 5 for pFPPS and Fig. 6 for pFPNS. Recall that the Stress Factor determines the ratio of the resource stress of each task to its resource sensitivity. Here, it is interesting to note that interference effectively saturates once the Stress Factor reaches 1.0. By then, the total resource stress \(E^r_i(t, y)\), given by (4) or (6), emanating from each additional core y in a time interval of length t tends to exceed the total resource sensitivity \(S^r_i(t, x)\), given by (3), for core x in that same time interval. As a result, the \(\min (E^r_i(t, y),S^r_i(t, x))\) term in (5) ceases to reduce the value in the summation below \(S^r_i(t, x)\). Hence, for pFPPS the CpFPPS-m-R and CpFPPS-m-D tests reduce to exactly the same performance as the CpFPPS-m-fc test, and similarly, for pFPNS the CpFPNS-m-R and CpFPNS-m-D tests reduce to exactly the same performance as the CpFPNS-m-fc test.

At the other extreme, a Stress Factor RF of zero means that \(E^r_i(t, y) = 0\), whether computed via (4) or (6). For pFPPS, the CpFPPS-m-R and CpFPPS-m-D tests therefore have the same performance as the no-contention No-CpFPPS-m test, and similarly, for pFPNS, the CpFPNS-m-R and CpFPNS-m-D tests have the same performance as the No-CpFPNS-m test. Between the two extremes, the smaller values of \(E^r_i(t, y)\) given by (6) as opposed to (4) mean that the CpFPPS-m-R test outperforms the CpFPPS-m-D test, and similarly the CpFPNS-m-R test outperforms the CpFPNS-m-D test.
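To illustrate the saturation effect, the sketch below evaluates only the cross-core term \(\sum_{y \ne x} \min(E^r_i(t, y), S^r_i(t, x))\) for a single resource; it is not a full implementation of (5). The values are hypothetical: a total sensitivity of 8 on core x and, on each of the three other cores, a total of 12 for the sensitivities of the tasks considered in the interval, scaled by RF to give the stress.

```python
def cross_core_interference(s_x, e_other_cores):
    """Sum over the other cores y of min(E(t, y), S(t, x)) for one resource.

    s_x: total resource sensitivity S(t, x) of the analysed core x.
    e_other_cores: total resource stress E(t, y) from each other core y.
    """
    return sum(min(e_y, s_x) for e_y in e_other_cores)


S_X = 8.0           # hypothetical S(t, x)
X_SUM_OTHER = 12.0  # hypothetical sum of sensitivities on each other core
M = 4               # cores, so three other cores contribute

for rf in (0.0, 0.5, 1.0, 1.2):
    e_y = rf * X_SUM_OTHER  # stress scaled by the Stress Factor
    term = cross_core_interference(S_X, [e_y] * (M - 1))
    print(f"RF={rf}: interference term = {term}")
```

The term is 0.0, 18.0, 24.0, and 24.0 for the four values of RF: it vanishes at RF = 0 (the no-contention case) and, once RF reaches 8/12 in this example, it is capped at \((m-1) \cdot S^r_i(t, x) = 24\), the value a sensitivity-only bound would use.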

The results of varying the cardinality of the task sets running on each core from 2 to 32 in steps of 2 are shown in Fig. 7 for pFPPS and Fig. 8 for pFPNS. In the preemptive case (Fig. 7), task set cardinality typically has only a limited effect on schedulability test performance; however, in the non-preemptive case (Fig. 8), larger task sets equate to smaller execution times for each task and hence smaller blocking factors. Thus schedulability improves with increasing cardinality for all of the pFPNS schedulability tests. In the preemptive case, the gap between the CpFPPS-m-R and CpFPPS-m-D tests and the CpFPPS-m-fc test increases with larger numbers of tasks. This is due to changes in the shape of the total resource stress function \(E^r_i(t, y)\), which typically consists of many small steps when there are a large number of tasks, and fewer, larger steps when there are a small number of tasks. Since \(E^r_i(t, y)\) lies above the same gradient line in both cases, many small steps keep it closer to that line, which acts to improve schedulability for the CpFPPS-m-R and CpFPPS-m-D tests at higher task set cardinality. The same effect is also evident in Fig. 8 for the pFPNS schedulability tests.

The effects of varying the range of task periods (the ratio of the maximum to the minimum possible task period) from \(10^{0.5}\approx 3\) to \(10^{4}=10,000\) are shown in Fig. 9 for pFPPS and Fig. 10 for pFPNS. As expected, increasing the range of task periods results in a gradual improvement in pFPPS schedulability test performance, a well-known effect with fixed priority preemptive scheduling. In contrast, with non-preemptive scheduling, once the range of task periods exceeds 100 (i.e. \(r=2\)), hardly any task sets are schedulable. This happens because tasks with short periods (and deadlines) cannot tolerate being blocked by tasks with long periods and commensurately large execution times.
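A rough worked example shows why. Assume, purely for illustration, a per-core utilization of 0.5 shared equally by n = 10 tasks (so each task has utilization 0.05), implicit deadlines, and a period range of 100, so that \(T_{\max} = 100\,T_{\min}\). Under DMPO the longest-period task has the lowest priority, so its execution time can block the shortest-period task:

```latex
B_{\text{short}} \approx C_{\text{long}}
  = 0.05 \cdot T_{\max}
  = 0.05 \cdot 100\,T_{\min}
  = 5\,T_{\min}
  \;>\; T_{\min} = D_{\text{short}}
```

The shortest-period task thus misses its deadline from blocking alone, whereas with a period range of 10 the same calculation gives \(C_{\text{long}} = 0.5\,T_{\min}\).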

Finally, the results of varying task deadlines from 25% to 100% of the task's period are shown in Fig. 11 for pFPPS and Fig. 12 for pFPNS. As expected, schedulability improves for all approaches as task deadlines are increased. Further, the performance advantage of the CpFPPS-m-R test over the CpFPPS-m-D test increases with increasing deadlines. This occurs because the CpFPPS-m-D test uses deadlines in place of response times when computing the total resource stress; larger deadlines provide a more pessimistic approximation of the response times of schedulable tasks, and hence inflate the total resource stress assumed by that test.

7 Case study

In this section, we present a preliminary case study that investigates the resource stress and resource sensitivity of tasks from a real-time industrial application. The purpose of this case study is not to determine definitive values for task WCETs, resource sensitivities, and resource stresses, which is in itself a challenging research problem beyond the scope of this work. Rather, our aim is to obtain proof-of-concept data to act as an exemplar underpinning the MRSS task model and its accompanying schedulability analysis.

The case study focuses on a set of 24 tasks from a Rolls-Royce aero engine control system, or FADEC (Full Authority Digital Engine Controller). The industrial software was developed in SPARK-Ada and has been verified according to DO-178C (Level A). The software was provided in an anonymized object code format, derived from that used in the case studies reported by Law and Bate (2016) and Lesage et al. (2018). The tasks have object code libraries ranging in size from 300 KBytes to 40 MBytes, including compiled-in data structures but not including any framework or Linux additions. The software was originally designed to run on a Rolls-Royce-specific packaged processor that integrates a single core, memory, I/O, and tracing interfaces; however, for research purposes, it was ported to run on a Raspberry Pi 3B+ (Lesage et al. 2018), along with a framework that facilitates taking timing measurements (Bate et al. 2020).

The Raspberry Pi 3B+ uses a Broadcom BCM2837 System-on-Chip with a quad-core ARM Cortex-A53 processor. It has a 16 KByte L1 data cache, a 16 KByte L1 instruction cache, a 512 KByte shared L2 cache, and 1 GByte of DDR2-DRAM. As is the default, the L2 cache was configured for use as local memory for the GPU, and so was not available to the four CPUs. The experimental hardware set-up involved a cluster of Raspberry Pi 3B+s, configured to run at a clock frequency of 600 MHz so as to eliminate any possible disruption due to thermal throttling. The cluster was powered by specialized power rails to ensure a stable supply voltage and frequency. The Pi 3B+s ran Raspberry Pi OS Lite (updated on 01/25/2021) with Linux Kernel 5.10.11-v7+. The isolcpus Linux option was used to minimize activity on the two cores used for the experiments. Timing measurements were obtained using a nanosecond clock, and cross-referenced against a 600 MHz cycle counter. Prior to each run of a task, the L1 data and L1 instruction caches were flushed. Given that the L2 cache was not accessible to the CPUs, the case study focused on the key shared hardware resource, main memory (DDR2-DRAM).

7.1 Case study experiments

For each of the 24 tasks, we considered 10,000 randomly selected traces of execution. When a task was called for a specific trace, each of its input parameters was set to a random value based on its type (float, integer, or boolean) and the range of values permitted. The inputs were thus randomized, but nevertheless reproducible via the trace number, which controlled the random seed used. In the following, for brevity, we use "trace" to mean a task with the specific set of input parameters corresponding to a trace number.
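A minimal Python sketch of this reproducible randomization follows. The parameter specification `PARAMS` and the function `trace_inputs` are hypothetical (the interfaces of the anonymized tasks are not described here), and Python's seeded `random.Random` generator stands in for whatever generator the measurement framework actually uses.

```python
import random

# Hypothetical input specification: (name, type, lower bound, upper bound).
PARAMS = [
    ("param_f", "float", -50.0, 50.0),
    ("param_i", "integer", 0, 15),
    ("param_b", "boolean", None, None),
]


def trace_inputs(trace_number, params=PARAMS):
    """Random but reproducible inputs: the trace number seeds the generator."""
    rng = random.Random(trace_number)
    values = {}
    for name, kind, lo, hi in params:
        if kind == "float":
            values[name] = rng.uniform(lo, hi)
        elif kind == "integer":
            values[name] = rng.randint(lo, hi)  # inclusive of both bounds
        else:
            values[name] = rng.random() < 0.5   # boolean input
    return values


print(trace_inputs(42) == trace_inputs(42))  # same trace number, same inputs
```

Seeding a private generator per trace, rather than the global one, keeps each trace's inputs independent of the order in which traces are run.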

In Experiment A.1, for each trace we obtained the stand-alone execution time, the resource sensitivity, and the resource stress as measured against each of the three contenders described below. These values were obtained by: (i) running the trace stand alone, (ii) running the trace in parallel with the contender, (iii) running the contender stand alone. In (i) and (ii) the execution time of the trace was recorded. In addition, in (ii) the number of times L that the contender looped while the trace was running was recorded, along with the execution time of the contender for that number of loops. Finally, in (iii) the stand-alone execution time of the contender was recorded for L loops. The loop count L thus enabled comparable measurements to be made irrespective of the trace execution times. The stand-alone execution time of the trace came directly from (i), while the resource sensitivity (per contender) for the trace was given by the difference between the trace execution times in (i) and (ii), and the resource stress for the trace by the difference in the contender’s execution times in (ii) and (iii).
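The arithmetic that turns the three runs into the per-trace values can be sketched as follows. The record layout `ContenderRun`, the function `characterize`, and the timings are hypothetical; the two differences are exactly those described above.

```python
from dataclasses import dataclass


@dataclass
class ContenderRun:
    trace_alone: float           # (i) trace execution time, stand alone
    trace_with_contender: float  # (ii) trace execution time, contender co-running
    contender_with_trace: float  # (ii) contender time for the L loops it completed
    contender_alone: float       # (iii) contender time for L loops, stand alone


def characterize(run):
    """Return (stand-alone time, resource sensitivity, resource stress)."""
    sensitivity = run.trace_with_contender - run.trace_alone  # (ii) - (i)
    stress = run.contender_with_trace - run.contender_alone   # (ii) - (iii)
    return run.trace_alone, sensitivity, stress


# Hypothetical timings in microseconds.
print(characterize(ContenderRun(100.0, 112.5, 260.0, 250.0)))
```

This prints (100.0, 12.5, 10.0): the trace is slowed by 12.5 by the contender (its sensitivity), while it slows the contender by 10.0 over the same L loops (its stress).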

We repeated the runs for each trace 9 times to ensure consistency. Post-processing of the raw timing data was used to eliminate anomalies caused by the kernel scheduler tick and the clock synchronization interrupt, neither of which could be disabled. The cycle counter was configured to pause when the scheduler was running, and so we were able to detect and eliminate anomalies due to the scheduler by comparing nanosecond clock and cycle counter readings. Measurement noise caused by the clock synchronization interrupt was more difficult to detect; however, we were able to filter out these anomalies by taking the median value for the 9 repeated runs for each trace, and by using the 95th percentile value (over the 10,000 traces) as the reference "maximum" increase in execution time for each task and contender.
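The filtering steps can be sketched as below, under several assumptions of ours: scheduler-disturbed runs are discarded rather than corrected, the 1% tolerance `tol` is a hypothetical margin, the cycle counter advances 0.6 cycles per nanosecond at 600 MHz, and the percentile uses the nearest-rank method (the method actually used is not stated). All function names are hypothetical.

```python
import math
import statistics

CYCLES_PER_NS = 0.6  # 600 MHz cycle counter


def scheduler_free(elapsed_ns, elapsed_cycles, tol=0.01):
    """The cycle counter pauses while the scheduler runs, so a run whose
    nanosecond-clock time implies noticeably more cycles than were counted
    is treated as disturbed by the scheduler (tol is a hypothetical margin)."""
    return elapsed_ns * CYCLES_PER_NS <= elapsed_cycles * (1 + tol)


def trace_time(runs):
    """Median over the repeated (elapsed_ns, elapsed_cycles) runs of a trace
    (9 in the case study), after discarding scheduler-disturbed runs."""
    kept = [ns for ns, cycles in runs if scheduler_free(ns, cycles)]
    return statistics.median(kept)


def reference_max(increases, pct=95):
    """Nearest-rank percentile over traces, used as the reference 'maximum'."""
    ordered = sorted(increases)
    k = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[k - 1]


runs = [(1000, 600), (1010, 606), (990, 594), (1500, 600)]
print(trace_time(runs))  # the (1500, 600) run is discarded before the median
```

Using a high percentile instead of the true maximum trades a small risk of underestimation for robustness against residual outliers, such as those left by the clock synchronization interrupt.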