Abstract

Bayes’ rule specifies how probabilities over parameters should be updated given any kind of information. In some cases, however, the information provided by both simulations and physical experiments concerns how certain output parameters change when other input parameters are changed. There are three approaches to this problem: the first leads to the Garbage-In/Garbage-Out Paradox, the second (Bayesian synthesis) is subject to the Borel Paradox, and the third (Bayesian melding) is a supra-Bayesian heuristic. This paper shows how to derive a fully Bayesian formula that avoids both the Garbage-In/Garbage-Out and the Borel Paradoxes. We also compare a Laplace approximation of this formula with Bayesian synthesis and Bayesian melding, and find that the Bayesian formula sometimes coincides with the Bayesian melding solution.




Appendices

Appendix I

First consider the case in which x, instead of being prespecified, is observed in the application of interest, as y is. Let \(E^*\) denote this information. Then the decision maker’s posterior probability over \(\mu \) and \(\nu \) is:

Now suppose the decision maker is given new information \(E^{**}\) indicating that x, instead of being observed from the complex system, was prespecified, so that x on its own provides no new information about the application of interest. Given this information \(E^{**}\), the decision maker would update the probabilities in Eq. (2) to

Here E denotes the event of learning both \(E^*\) and \(E^{**}\). Note that

i.e., the decision maker’s prior beliefs about \(\mu \) and \(\nu \), which were unaffected by the information \(E^{*}\), are equally unaffected by \(E^{**}\). Also note that

i.e., the probability of y conditioned on x being the true value of X (as well as on \(\mu \) and \(\nu \)) is unaffected by the information that x was chosen arbitrarily. However, the decision maker’s assessment of \(f(x|\mu ,\nu ,E^{**})\) is definitely affected upon learning that x was chosen arbitrarily. To see how, note that learning the value of x after learning that x was chosen arbitrarily should not change our beliefs about \(\mu \) and \(\nu \). Thus

This implies

Making all these substitutions into Eq. (3) gives the proposition.
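What follows is a minimal numerical sketch of the proposition. It uses a hypothetical Gaussian toy model that does not come from the paper: the grids `MU_GRID` and `NU_GRID`, the slope `B_SLOPE` and the noise levels are all illustrative assumptions. Because \(f(x|\mu ,\nu ,E)=f(x|E)\) does not depend on \(\mu \) or \(\nu \), it drops out, and the posterior under E is proportional to \(f(y|x,\mu ,\nu )f(\mu ,\nu )\). Treating the arbitrarily chosen x as though it had been observed (the \(E^*\) case) instead multiplies in \(f(x|\mu ,\nu )\). That extra factor pulls beliefs about \(\mu \) toward whatever value of x was chosen.

```python
import math
from itertools import product

# Hypothetical toy model (illustrative assumptions, not from the paper).
MU_GRID = [-1.0, 0.0, 1.0]
NU_GRID = [-1.0, 0.0, 1.0]
B_SLOPE = 0.5   # assumed slope of y on x
SIGMA_Y = 1.0   # assumed sd of y given x, mu, nu
SIGMA_X = 1.0   # assumed sd of x around mu when x is observed from the system


def normal_pdf(value, mean, sd):
    return math.exp(-0.5 * ((value - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def lik_y(y, x, mu, nu):
    # f(y | x, mu, nu): y is centred at nu + b (x - mu); same under E* and E
    return normal_pdf(y, nu + B_SLOPE * (x - mu), SIGMA_Y)


def lik_x(x, mu):
    # f(x | mu, nu): informative only when x is observed (case E*)
    return normal_pdf(x, mu, SIGMA_X)


def normalise(weights):
    total = sum(weights.values())
    return {key: w / total for key, w in weights.items()}


def posterior(x, y, x_observed):
    prior = 1.0 / (len(MU_GRID) * len(NU_GRID))  # uniform f(mu, nu)
    weights = {}
    for mu, nu in product(MU_GRID, NU_GRID):
        w = prior * lik_y(y, x, mu, nu)
        if x_observed:
            w *= lik_x(x, mu)  # E*: x carries information about mu
        # Under E, f(x | mu, nu, E) = f(x | E) is constant and cancels.
        weights[(mu, nu)] = w
    return normalise(weights)


def mean_mu(post):
    return sum(mu * p for (mu, _nu), p in post.items())


x_prespecified, y_obs = 3.0, 0.2
post_e = posterior(x_prespecified, y_obs, x_observed=False)
post_e_star = posterior(x_prespecified, y_obs, x_observed=True)
print(mean_mu(post_e), mean_mu(post_e_star))
```

Here x is set above every value on the \(\mu \) grid, so the extra factor \(f(x|\mu ,\nu )\) increases with \(\mu \). As a result, the \(E^*\)-style posterior mean of \(\mu \) is pushed above the posterior mean under E.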

Appendix II

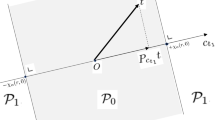

Define \(z_i\) as the \((n+m)\)-dimensional vector whose first n elements are the experimental inputs \(x_i\) in trial i and whose last m elements are the observed outputs \(y_i\). For simplicity, we temporarily drop the subscript i. Define Z as the \((n+m)\)-dimensional vector whose first n elements are \(\mu \) and whose last m elements are \(\nu \). Conditioned on \(\mu \) and \(\nu \), the mean of z is Z. We can write the variance-covariance matrix of z as

where \(V_{XX}\) is an n by n matrix, \(V_{XY}\) is an n by m matrix, and \(V_{YY}\) is an m by m matrix.

It can be shown that the inverse variance-covariance matrix has the form

where \(R=-V_{XX}^{-1} V_{XY} S\) and \(SS^T=(V_{YY} - V_{XY}^T V_{XX}^{-1} V_{XY})^{-1}\)
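The display giving the inverse is not reproduced in this text. One assumption consistent with the stated R and S is the standard Schur-complement block inverse, \(\begin{pmatrix} V_{XX}^{-1}+RR^T & RS^T\\ SR^T & SS^T\end{pmatrix}\). The NumPy sketch below checks that form numerically on a random positive-definite covariance; the dimensions and seed are arbitrary. Taking S as a Cholesky factor is one valid choice, and any S with \(SS^T\) equal to the inverse Schur complement works equally well.

```python
import numpy as np


def block_inverse_from_schur(v_xx, v_xy, v_yy):
    """Assemble [[V_XX^{-1} + R R^T, R S^T], [S R^T, S S^T]] (assumed form)."""
    v_xx_inv = np.linalg.inv(v_xx)
    schur = v_yy - v_xy.T @ v_xx_inv @ v_xy        # V_YY - V_XY^T V_XX^{-1} V_XY
    s = np.linalg.cholesky(np.linalg.inv(schur))   # lower-triangular S with S S^T = schur^{-1}
    r = -v_xx_inv @ v_xy @ s                       # R = -V_XX^{-1} V_XY S
    top = np.hstack([v_xx_inv + r @ r.T, r @ s.T])
    bottom = np.hstack([s @ r.T, s @ s.T])
    return np.vstack([top, bottom])


rng = np.random.default_rng(0)
n, m = 3, 2
a = rng.normal(size=(n + m, n + m))
v = a @ a.T + (n + m) * np.eye(n + m)             # random positive-definite cov of z = (x, y)
v_xx, v_xy, v_yy = v[:n, :n], v[:n, n:], v[n:, n:]
assembled = block_inverse_from_schur(v_xx, v_xy, v_yy)
print(np.allclose(assembled, np.linalg.inv(v)))
```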

Let \(\Omega ^{-1}=SS^T\) and define \(L=-\frac{1}{2}\ln [\frac{f(x_i,y_i|\mu ,\nu )}{f(x_i|\mu )} ]\). Then

Define B to be the \((n+m)\) by m matrix whose first n rows are \(-\Sigma ^{-1} \rho \) and whose last m rows are the identity matrix. Then

and

Given K independent experiments, with \(\hat{z}\) the mean of \(z_1, \ldots , z_K\),

Let \(\nu _0=\hat{y}-\beta \hat{x}\) and \(u=\beta \mu \) so that

Defining a density \(h(u|\nu )\) by \(h(u|\nu ) \propto \int _{\{\mu :\, \beta \mu =u\}} f(\mu |\nu )\, d\mu \) implies
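The pooling step for K experiments can be checked numerically. The text does not define them here, so this sketch assumes \(\Sigma =V_{XX}\), \(\rho =V_{XY}\) and \(\beta =\rho ^T\Sigma ^{-1}\). With these choices, \(BS\) stacks R above S, so that \(B^T(z-Z)=(y-\nu )-\beta (x-\mu )\). The sketch verifies two things:

(a) the full Gaussian quadratic form minus its x-marginal part equals \((z-Z)^TB\Omega ^{-1}B^T(z-Z)\);

(b) summed over K trials, this depends on \(\mu \) and \(\nu \) only through \(\nu _0-\nu +u\), weighted by \(K\Omega ^{-1}\).

All numbers are simulated.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m, K = 3, 2, 50
a = rng.normal(size=(n + m, n + m))
v = a @ a.T + (n + m) * np.eye(n + m)
sigma, rho, v_yy = v[:n, :n], v[:n, n:], v[n:, n:]        # assumed: Sigma = V_XX, rho = V_XY
omega = v_yy - rho.T @ np.linalg.solve(sigma, rho)         # conditional covariance of y given x
omega_inv = np.linalg.inv(omega)
beta = rho.T @ np.linalg.inv(sigma)                        # assumed beta = rho^T Sigma^{-1} (m x n)
b_mat = np.vstack([-np.linalg.solve(sigma, rho), np.eye(m)])  # B: first n rows -Sigma^{-1} rho

mu, nu = rng.normal(size=n), rng.normal(size=m)
z_mean = np.concatenate([mu, nu])                          # Z = (mu, nu)
z = rng.multivariate_normal(z_mean, v, size=K)             # K independent trials z_1..z_K

# (a) one trial: full quadratic form minus the x-marginal part equals the B-projected form
d = z[0] - z_mean
full = d @ np.linalg.solve(v, d)
x_part = d[:n] @ np.linalg.solve(sigma, d[:n])
cond = (b_mat.T @ d) @ omega_inv @ (b_mat.T @ d)
print(np.isclose(full - x_part, cond))

# (b) pooling: rows of w are B^T (z_i - Z) = (y_i - nu) - beta (x_i - mu)
w = (z - z_mean) @ b_mat
total = np.einsum("ki,ij,kj->", w, omega_inv, w)
x_hat, y_hat = z[:, :n].mean(axis=0), z[:, n:].mean(axis=0)
nu0, u = y_hat - beta @ x_hat, beta @ mu                   # nu_0 and u as defined above
w_bar = nu0 - nu + u                                       # equals the mean of the rows of w
within = np.einsum("ki,ij,kj->", w - w_bar, omega_inv, w - w_bar)  # free of mu and nu
print(np.isclose(total, K * w_bar @ omega_inv @ w_bar + within))
```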

Appendix III

Let \(L(u|\nu )=-\ln (h(u|\nu ))\) and define

so that \(f(E|\nu ) \propto \int _{u} \exp (-g(u|\nu ))\, du\). Suppose \(g(u|\nu )\) is convex in u, and let \(g'\) and \(g''\) be the vector of first-order and the matrix of second-order derivatives of \(g(u|\nu )\) with respect to u. If \(u^*\) is the mode of \(\exp (-g(u|\nu ))\), i.e., the minimizer of \(g(u|\nu )\), with

Laplace’s approximation allows us to approximate \(f(E|\nu )\) by

Since the Gaussian term in the integral heavily discounts values of u that differ significantly from \(\nu -\nu _0\), we approximate \(L(u|\nu )\) by a second-order Taylor series about \(u=\nu -\nu _0\). If \(L'\) and \(L''\) are the first- and second-order derivatives of \(L(u|\nu )\) evaluated at \(u=\nu -\nu _0\), then the Taylor series approximation is

Define \(v=u-\nu +\nu _0\) so that \(L(v|\nu )\approx L_0+v^TL'+\frac{1}{2}v^TL''v\), where \(L_0=L(\nu -\nu _0|\nu )\), and

Defining \(A=K \Omega ^{-1}+L''\) yields \(g(v)=\frac{1}{2} v^T Av+v^T L' +L(\nu -\nu _0|\nu ) \). Defining \(v^*=u^*-\nu +\nu _0\) implies that the modal value \(u^*\) satisfies

with \(g''=A\). Substituting this mode into \(g(u|\nu )\) gives

Since \(g''(u^*|\nu )=A\) is a constant, we thus have
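The next sketch works through the Laplace step in one dimension (m = 1). Its inputs are hypothetical choices that do not come from the paper: K = 50 trials, \(\Omega = 1\), \(\nu -\nu _0=0.3\), and the convex term \(L(u)=\ln \cosh u\) standing in for \(-\ln h(u|\nu )\). The sketch compares a trapezoid-rule integral of \(\exp (-g(u))\) with the closed form obtained from the second-order Taylor expansion of g. That closed form uses three quantities:

- the mode offset \(v^*=-A^{-1}L'\);
- the value at the mode, \(g(v^*)=L_0-\frac{1}{2}L'^TA^{-1}L'\);
- the approximation \(\int \exp (-g)\approx \exp (-g(v^*))\sqrt{2\pi /A}\).

```python
import math

# Hypothetical 1-D instance (assumptions, not from the paper).
K, OMEGA, C = 50, 1.0, 0.3   # C plays the role of nu - nu_0


def big_l(u):
    # Convex stand-in for L(u | nu) = -ln h(u | nu)
    return math.log(math.cosh(u))


def g(u):
    # g(u) = (K / 2) (u - C)^2 / Omega + L(u)
    return 0.5 * K * (u - C) ** 2 / OMEGA + big_l(u)


def integral_numeric(lo=C - 5.0, hi=C + 5.0, steps=10_000):
    # Trapezoid rule for the integral of exp(-g(u)) over [lo, hi]
    h = (hi - lo) / steps
    total = 0.5 * (math.exp(-g(lo)) + math.exp(-g(hi)))
    total += sum(math.exp(-g(lo + i * h)) for i in range(1, steps))
    return total * h


def integral_laplace_taylor():
    # Second-order Taylor expansion of L about u = C, then complete the square.
    l0 = big_l(C)
    l1 = math.tanh(C)               # L'
    l2 = 1.0 - math.tanh(C) ** 2    # L''
    a = K / OMEGA + l2              # A = K Omega^{-1} + L''
    v_star = -l1 / a                # v* = u* - C
    g_star = l0 - 0.5 * l1 ** 2 / a  # g at the mode
    return math.exp(-g_star) * math.sqrt(2.0 * math.pi / a), C + v_star


exact = integral_numeric()
approx, u_star = integral_laplace_taylor()
print(exact, approx, abs(approx - exact) / exact)
```

For m > 1, the factor \(\sqrt{2\pi /A}\) becomes \((2\pi )^{m/2}\det (A)^{-1/2}\). The approximation improves as K grows, because the Gaussian term confines u more tightly around \(\nu -\nu _0\), where the Taylor expansion is accurate.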


Cite this article

Bordley, R.F. Avoiding both the Garbage-In/Garbage-Out and the Borel Paradox in updating probabilities given experimental information. Theory Decis 79, 95–105 (2015). https://doi.org/10.1007/s11238-013-9369-0
