Abstract

In this contribution, we introduce, in analogy to penalized ambiguity resolution, the concept of penalized misclosure space partitioning, with the goal of directing the performance of the DIA-estimator towards its application-dependent tolerable risk objectives. We assign penalty functions to each of the decision regions in misclosure space and use the distribution of the misclosure vector to determine the optimal partitioning by minimizing the mean penalty. As each minimum mean penalty partitioning depends on the given penalty functions, different choices can be made, depending on the application. For the DIA-estimator, we introduce a special set of penalty functions that penalize its unwanted outcomes. It is shown how this set allows one to construct the optimal DIA-estimator, being the estimator that within its class has the largest probability of lying inside a user-specified tolerance region. Further elaboration shows how these penalty functions are driven by the influential biases of the different hypotheses and how they can be used operationally. This includes the option of extending the misclosure partitioning with an additional undecided region to accommodate situations in which it is hard to discriminate between some of the hypotheses or in which identification is unconvincing. By extending the analogy with integer ambiguity resolution to that of integer-equivariant ambiguity resolution, we also introduce the maximum probability estimator within the similar, larger class.


1 Introduction

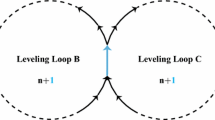

DIA-estimation captures the overall problem of detection, identification and adaptation (DIA) as one of estimation (Teunissen 2018). As its structure is similar to that of mixed integer estimation, one can cast its parameter solution in a similar form. In case of GNSS mixed integer estimation, the integer map \(\mathcal {I}: \mathbb {R}^{n} \mapsto \mathbb {Z}^{n}\) defines the ambiguity pull-in regions as \(\mathcal {P}_{z \in \mathbb {Z}^{n}}=\{ u \in \mathbb {R}^{n}\;|\; z = \mathcal {I}(u)\}\), resulting in the integer ambiguity-resolved baseline \(\check{\underline{b}}= \sum _{z \in \mathbb {Z}^{n}} \hat{\underline{b}}(z) p_{z}(\hat{\underline{a}})\), with \(\hat{\underline{a}}\) the ambiguity-float estimator, \(\hat{\underline{b}}(z)\) the conditional baseline estimator, and \(p_{z}(.)\) the indicator function of \(\mathcal {P}_{z}\). Similarly for DIA-estimation, the hypothesis map \(\mathcal {H}: \mathbb {R}^{r} \mapsto [0, 1, \ldots , k]\) defines the partitioning of misclosure space as \(\mathcal {P}_{i \in [0,\ldots ,k]}=\{ t \in \mathbb {R}^{r}\;|\; i = \mathcal {H}(t)\}\) and results in the DIA-estimator \(\bar{\underline{x}}= \sum _{i=0}^{k} \hat{\underline{x}}_{i} p_{i}(\underline{t})\), with \(\underline{t}\) the misclosure vector, \(\hat{\underline{x}}_{i}\) the hypothesis-conditioned BLUE, and \(p_{i}(.)\) the indicator function of \(\mathcal {P}_{i}\).

This analogy is extended in this contribution to penalized ambiguity resolution (Teunissen 2004). By assigning penalty functions to each of the decision regions in misclosure space, \(\mathcal {P}_{i \in [0,\ldots ,k]} \subset \mathbb {R}^{r}\), the mean penalty of any chosen misclosure space partitioning can be determined and compared. As a result, we determine and study the optimal DIA-estimator, being the estimator that within its class has the highest probability of lying inside a user-defined tolerance region.

Although the presented theory is non-Bayesian in the parameters throughout, we also show how distributional information on the biases can be incorporated if available. The theory is applicable to a wide range of applications, for example, quality control of geodetic networks (DGCC 1982; Zaminpardaz and Teunissen 2019; Yang et al. 2021), geophysical and structural deformation analysis (Lehmann and Lösler 2017; Nowel 2020; Zaminpardaz et al. 2020), different GNSS applications (Perfetti 2006; Yu et al. 2023), and various configurations of integrated navigation systems (Gillissen and Elema 1996; Teunissen 1989; Salzmann 1991). For all these different applications, user-derived penalties can be set to direct the DIA-estimator to perform according to its application-dependent tolerable risk objectives.

This contribution is organized as follows: After a brief review of DIA-estimation in Sect. 2, we introduce in Sect. 3, in analogy with penalized ambiguity resolution, the concept of penalized testing for estimation. As any testing procedure is unambiguously described by its partitioning of misclosure space, we show how the mean penalty of such partitionings can be evaluated, thus giving users the tool to compare different testing procedures using their own assigned penalties. We also determine the partitioning of misclosure space that results in the minimum mean penalty. Its operational use under known and unknown biases is discussed, and it is shown how the penalties need to be chosen to recover the misclosure space partitioning of classical multi-hypotheses datasnooping (Baarda 1968a; Teunissen 2000; Lehmann and Lösler 2016).

In Sect. 4, we focus attention on the consequences of testing decisions, rather than only on the correctness of the decisions. This is particularly important when the goal is not per se the correct identification of the active hypothesis, but rather being able to direct the performance of the DIA-estimator towards its application-dependent tolerable risk objectives. For that purpose, we introduce a special DIA-penalty function that penalizes unwanted outcomes of the estimator. We show how this penalty function maximizes the probability \(\textsf{P}[\bar{\underline{x}} \in \varOmega _{x}]\), thereby enabling the construction of the optimal DIA-estimator. By extending the analogy with integer estimation to that of integer-equivariant estimation, we also introduce and derive the maximum probability estimator in the similar, larger class.

Further elaboration of the DIA-penalty functions is conducted in Sect. 5, thereby showing the prominent role played by the influential biases. We also present different operational simplifications of the penalty functions and associated minimum mean penalty partitionings. This includes the option of having an additional undecided region to accommodate situations where one lacks confidence in the decision making. In such cases, one may prefer to state that a solution is unavailable rather than to provide an actual, but possibly unreliable, parameter estimate. The theory is illustrated and supported by several worked-out examples. Finally, in Sect. 6, a summary and conclusions are given.

The following notation is used: \(\textsf{E}(.)\) and \(\textsf{D}(.)\) stand for the expectation and dispersion operator, respectively, and \(\mathcal {N}_{p}(\mu , Q)\) denotes a p-dimensional, normally distributed random vector, with mean (expectation) \(\mu \) and variance matrix (dispersion) Q. We denote a random variable or random vector with an underscore. Thus, \(\underline{y}\) is random, while x is not. If the same symbol is used with and without underscore, then the latter is a realisation of the former. Thus, \(\hat{x}_{0}\) is an outcome or realisation of the random \(\hat{\underline{x}}_{0}\). The probability of an event \(\mathcal {A}\) is denoted as \(\textsf{P}[\mathcal {A}]\), a proportional to b as \(a \propto b\), and the logical characters for and/or as \(\wedge /\vee \). For the probability of \(\mathcal {H}_{\alpha }\)-hypothesis occurrence, we use the shorthand notation \(\pi _{\alpha }=\textsf{P}[\mathcal {H}_{\alpha }]=\textsf{P}[\underline{\mathcal {H}}=\mathcal {H}_{\alpha }]\). The probability density function (PDF) of a random vector \(\underline{t}\) is denoted as \(f_{\underline{t}}(t)\). The noncentral Chi-square distribution with p degrees of freedom and noncentrality parameter \(\lambda \) is denoted as \(\chi ^{2}(p, \lambda )\) and its \(\delta \)-percentage critical value as \(\chi ^{2}_{\delta }(p,0)\). \(\mathbb {R}^{p}\) and \(\mathbb {Z}^{p}\) denote the p-dimensional spaces of real- and integer numbers, respectively. \(\mathbb {R}^{r}_{\ge 0}\) denotes the space of r-vectors having nonnegative entries and \(e_{r}\) is the r-vector of ones. \(||x||_{Q}^{2}=(x)^{T}Q^{-1}(x)\) denotes the squared Q-weighted norm of vector x and \(\delta _{i\alpha }\) the Kronecker-delta, with \(\delta _{i\alpha }=1\) if \(i=\alpha \) and \(\delta _{i\alpha }=0\) if \(i \ne \alpha \). 
The identity matrix is denoted as I and the projector that projects orthogonally, in the metric of Q, on the range space of matrix M as \(P_{M}=M(M^{T}Q^{-1}M)^{-1}M^{T}Q^{-1}\), where \(P_{M}^{\perp }=I-P_{M}\). The range space of a matrix M is denoted as \(\mathcal {R}(M)\).

2 A brief DIA review

In this section, we give a brief review of DIA-estimation and its properties.

2.1 Hypotheses, BLUEs and misclosure vector

We start by formulating our null-hypothesis \(\mathcal {H}_{0}\) and k alternative hypotheses \(\mathcal {H}_{i}\), \(i=1, \ldots ,k\). The null-hypothesis, also referred to as the working hypothesis, consists of the model that one believes to be valid under normal working conditions. We assume it to read
\[ \mathcal{H}_{0}:\; \underline{y} \sim \mathcal{N}_{m}(Ax,\, Q_{yy}) \tag{1} \]

with \(A \in \mathbb {R}^{m \times n}\) the given design matrix of rank n, \(x \in \mathbb {R}^{n}\) the to-be-estimated unknown parameter vector, and \(Q_{yy} \in \mathbb {R}^{m \times m}\) the given positive-definite variance matrix of \(\underline{y}\). The redundancy of \(\mathcal {H}_{0}\) is \(r=m-\textrm{rank}(A)=m-n\).

Although every part of the assumed null-hypothesis can be wrong, we assume that if a misspecification in \(\mathcal {H}_{0}\) occurred, it is confined to an underparametrization of the mean of \(\underline{y}\). The alternative hypotheses will therefore only differ from \(\mathcal {H}_{0}\) in their mean of \(\underline{y}\). The ith alternative hypothesis is assumed to be given as
\[ \mathcal{H}_{i}:\; \underline{y} \sim \mathcal{N}_{m}(Ax + C_{i}b_{i},\, Q_{yy}) \tag{2} \]

for some unknown vector \(C_{i}b_{i} \in \mathbb {R}^{m}{\setminus }{\{0\}}\), with \([{A}, {C_{i}}] \in \mathbb {R}^{m \times (n+q_{i})}\) a known matrix of full rank \(n+q_{i}\). Through \(C_{i}b_{i}\) one may model, for instance, the presence of one or more outliers in the data, satellite failures, antenna-height errors, cycle-slips in GNSS phase data, neglect of atmospheric delays, or any other systematic effect that one failed to take into account under \(\mathcal {H}_{0}\). We will use the lowercase \(c_{i}\), instead of \(C_{i}\), when \(q_{i}=1\), i.e. when \(b_{i}\) is a scalar.

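For our further considerations, it is useful to first bring (1) into canonical form. This is achieved by means of the Tienstra-transformation and its inverse,
\[ \mathcal{T} = \begin{bmatrix} A^{+} \\ B^{T} \end{bmatrix}, \qquad \mathcal{T}^{-1} = \begin{bmatrix} A, & B^{+T} \end{bmatrix} \tag{3} \]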

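in which \(A^{+}=(A^{T}Q_{yy}^{-1}A)^{-1}A^{T}Q_{yy}^{-1}\) and \(B^{+}=(B^{T}Q_{yy}B)^{-1}B^{T}Q_{yy}\) are the BLUE-inverses of A and B, respectively, and B is an \(m \times r\) basis-matrix of the null space of \(A^{T}\), i.e. \(B^{T}A=0\) and \(\textrm{rank}(B)=r\). Application of \(\mathcal {T}\) to \(\underline{y}\) gives, under the null hypothesis (1),
\[ \begin{bmatrix} \hat{\underline{x}}_{0} \\ \underline{t} \end{bmatrix} = \mathcal{T}\underline{y} \overset{\mathcal{H}_{0}}{\sim} \mathcal{N}_{m}\left( \begin{bmatrix} x \\ 0 \end{bmatrix}, \begin{bmatrix} Q_{\hat{x}_{0}\hat{x}_{0}} & 0 \\ 0 & Q_{tt} \end{bmatrix} \right) \tag{4} \]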

in which \(\hat{\underline{x}}_{0}=A^{+}\underline{y}\in \mathbb {R}^{n}\) is the best linear unbiased estimator (BLUE) of x under \(\mathcal {H}_{0}\) and \(\underline{t}=B^{T}\underline{y}\in \mathbb {R}^{r}\) is the misclosure vector of \(\mathcal {H}_{0}\), having variance matrices \(Q_{\hat{x}_{0}\hat{x}_{0}}=(A^{T}Q_{yy}^{-1}A)^{-1}\) and \(Q_{tt}=B^{T}Q_{yy}B\), respectively. As the misclosure vector \(\underline{t}\) is zero-mean under the null-hypothesis and stochastically independent of \(\hat{\underline{x}}_{0}\), it contains all the available information useful for testing the validity of \(\mathcal {H}_{0}\).

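Under the alternative hypothesis (2), \(\mathcal {T}\underline{y}\) becomes distributed as
\[ \begin{bmatrix} \hat{\underline{x}}_{0} \\ \underline{t} \end{bmatrix} = \mathcal{T}\underline{y} \overset{\mathcal{H}_{i}}{\sim} \mathcal{N}_{m}\left( \begin{bmatrix} x + A^{+}C_{i}b_{i} \\ B^{T}C_{i}b_{i} \end{bmatrix}, \begin{bmatrix} Q_{\hat{x}_{0}\hat{x}_{0}} & 0 \\ 0 & Q_{tt} \end{bmatrix} \right) \tag{5} \]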

Thus, \(\hat{\underline{x}}_{0}\) and \(\underline{t}\) are still independent, but now have different means than under \(\mathcal {H}_{0}\). Due to the canonical structure of (5), it now becomes rather straightforward to infer the BLUEs of x and \(b_{i}\) under \(\mathcal {H}_{i}\). As \(\hat{\underline{x}}_{0}\) and \(\underline{t}\) are independent and the mean of \(\hat{\underline{x}}_{0}\) under \(\mathcal {H}_{i}\) depends on more parameters than only those of x, the estimator \(\hat{\underline{x}}_{0}\) will not contribute to the determination of the BLUE of \(b_{i}\). Hence, it is \(\underline{t}\) that is solely reserved for the determination of the BLUE of \(b_{i}\), which in turn can be used in the determination of the BLUE of x under \(\mathcal {H}_{i}\). The BLUEs of x and \(b_{i}\) under \(\mathcal {H}_{i}\) are therefore given as
\[ \hat{\underline{x}}_{i} = \hat{\underline{x}}_{0} - A^{+}C_{i}\hat{\underline{b}}_{i}, \qquad \hat{\underline{b}}_{i} = (B^{T}C_{i})^{+}\underline{t} \tag{6} \]

in which \((B^{T}C_{i})^{+}=(C_{i}^{T}BQ_{tt}^{-1}B^{T}C_{i})^{-1}C_{i}^{T}BQ_{tt}^{-1}\) denotes the BLUE-inverse of \(B^{T}C_{i}\). The result (6) shows how \(\hat{\underline{x}}_{0}\) is to be adapted when switching from the BLUE of \(\mathcal {H}_{0}\) to that of \(\mathcal {H}_{i}\).
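To make the adaptation step (6) concrete, here is a minimal pure-Python sketch. It assumes the three-observation model that is used later in Example 1 (\(A=[1,1,1]^{T}\), \(Q_{yy}=I_{3}\), \(C_{i}=c_{i}\) the ith unit vector) and the basis matrix \(B^{T}\) whose columns are the \(c_{t_{i}}\) listed there. The helper names are illustrative only. For this particular model, adapting \(\hat{x}_{0}\) to \(\mathcal{H}_{i}\) should give the mean of the two remaining observations, which provides a simple sanity check.

```python
# Sketch under an assumed toy model: A = [1, 1, 1]^T, Q_yy = I_3, C_i = c_i (unit vectors).

def matvec(M, v):
    """Plain matrix-vector product for small lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def inv2(M):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

A_PLUS = [1 / 3, 1 / 3, 1 / 3]       # A^+ = (A^T Q_yy^-1 A)^-1 A^T Q_yy^-1 for Q_yy = I
B_T = [[1, -1, 0], [0, 1, -1]]       # B^T with B^T A = 0 and rank(B) = r = 2
Q_TT_INV = inv2([[2, -1], [-1, 2]])  # Q_tt = B^T Q_yy B = B^T B

def blue_h0(y):
    """BLUE x0_hat = A^+ y under H0 and misclosure vector t = B^T y."""
    x0 = sum(a * yi for a, yi in zip(A_PLUS, y))
    return x0, matvec(B_T, y)

def blue_hi(y, i):
    """Adapted BLUEs (x_i_hat, b_i_hat) under H_i, eq. (6); i is 0-based here."""
    x0, t = blue_h0(y)
    c = [1.0 if j == i else 0.0 for j in range(len(y))]
    c_t = matvec(B_T, c)                                  # c_{t_i} = B^T c_i
    qc = matvec(Q_TT_INV, c_t)                            # Q_tt^{-1} c_{t_i}
    b_hat = sum(q * ti for q, ti in zip(qc, t)) / sum(q * ci for q, ci in zip(qc, c_t))
    a_plus_c = sum(a * ci for a, ci in zip(A_PLUS, c))    # A^+ c_i
    return x0 - a_plus_c * b_hat, b_hat
```

For example, with \(y=[10,4,6]^{T}\) an outlier in the first observation is estimated as 5, and the adapted estimate is 5, the mean of 4 and 6.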

2.2 Testing and misclosure space partitioning

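Which of the possible parameter solutions to deliver, \(\hat{x}_{0}\) or one of the \(\hat{x}_{i}\)’s, is decided through hypothesis testing, and, as mentioned, it is the misclosure vector
\[ \underline{t} = B^{T}\underline{y} \overset{\mathcal{H}_{0}}{\sim} \mathcal{N}_{r}(0,\, Q_{tt}), \qquad \underline{t} \overset{\mathcal{H}_{i}}{\sim} \mathcal{N}_{r}(C_{t_{i}}b_{i},\, Q_{tt}), \qquad C_{t_{i}} = B^{T}C_{i} \tag{7} \]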

that forms the input to hypothesis testing. If one had only a single alternative hypothesis (\(k=1\)), one would likely use the uniformly most powerful invariant (UMPI) test statistic (Arnold 1981; Teunissen 2000),
\[ T_{q_{i}} = ||P_{C_{t_{i}}}\underline{t}||_{Q_{tt}}^{2} \tag{8} \]

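where \(P_{C_{t_{i}}}=C_{t_{i}}(C_{t_{i}}^{T}Q_{tt}^{-1}C_{t_{i}})^{-1}C_{t_{i}}^{T}Q_{tt}^{-1}\), and one would accept \(\mathcal {H}_{0}\) when \(T_{q_{i}} \le \chi ^{2}_{\alpha }(q_{i},0)\) and otherwise reject \(\mathcal {H}_{0}\) in favour of \(\mathcal {H}_{i}\). Such binary decision making can be visualized through a corresponding binary partitioning of misclosure space. With the partitioning
\[ \mathcal{P}_{0} = \{ t \in \mathbb{R}^{r} \;|\; ||P_{C_{t_{i}}}t||_{Q_{tt}}^{2} \le \chi^{2}_{\alpha}(q_{i},0) \}, \qquad \mathcal{P}_{i} = \mathbb{R}^{r} \setminus \mathcal{P}_{0} \tag{9} \]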

one would then choose \(\mathcal {H}_{0}\) if \(t \in \mathcal {P}_{0}\) and \(\mathcal {H}_{i}\) if \(t \in \mathcal {P}_{i}\), see Fig. 1. If \(q_{i}=1\), then \(T_{q_{i}}\) can be expressed in terms of Baarda’s w-statistic (Baarda 1968a) as \(T_{q_{i}=1}=w_{i}^{2}\), with
\[ w_{i} = \frac{c_{t_{i}}^{T}Q_{tt}^{-1}t}{\sqrt{c_{t_{i}}^{T}Q_{tt}^{-1}c_{t_{i}}}} \tag{10} \]

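We note that the UMPI test statistic (8) can also be expressed in terms of the BLUE of \(b_{i}\) under \(\mathcal {H}_{i}\) as (Teunissen 2000)
\[ T_{q_{i}} = ||\hat{b}_{i}(\underline{t})||^{2}_{Q_{\hat{b}_{i}\hat{b}_{i}}} \tag{11} \]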

where \(Q_{\hat{b}_{i}\hat{b}_{i}}=(C_{t_{i}}^{T}Q_{tt}^{-1}C_{t_{i}})^{-1}\). Here we have written the BLUE of \(b_{i}\) as \(\hat{b}_{i}(\underline{t})\) to explicitly show its dependence on the misclosure vector \(\underline{t}\), cf. (6). Expression (11) shows that the binary test between \(\mathcal {H}_{0}\) and \(\mathcal {H}_{i}\) can therefore also be interpreted as a significance test: choose \(\mathcal {H}_{0}\) if the bias-estimate is considered insignificant, else choose \(\mathcal {H}_{i}\).
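The three equivalent forms of the one-dimensional test statistic (the projector form (8), Baarda’s \(w_{i}^{2}\), and the bias-significance form (11)) can be cross-checked numerically. A minimal sketch, assuming \(q_{i}=1\) and the \(Q_{tt}^{-1}\) and \(c_{t_{i}}\) of the toy model in Example 1; the function names are illustrative.

```python
import math

Q_TT_INV = [[2 / 3, 1 / 3], [1 / 3, 2 / 3]]   # Q_tt^{-1} of the assumed toy model

def quad(u, v):
    """Bilinear form u^T Q_tt^{-1} v."""
    return sum(u[r] * Q_TT_INV[r][s] * v[s] for r in range(2) for s in range(2))

def w_stat(t, c_t):
    """Baarda's w-statistic for the 1-dim alternative with direction c_t."""
    return quad(c_t, t) / math.sqrt(quad(c_t, c_t))

def T_projector(t, c_t):
    """T = ||P_{c_t} t||^2_{Q_tt}, with P_{c_t} t = c_t (c_t^T Q^-1 t) / (c_t^T Q^-1 c_t)."""
    scale = quad(c_t, t) / quad(c_t, c_t)
    p = [scale * c for c in c_t]
    return quad(p, p)

def T_bias(t, c_t):
    """T = b_hat^2 / Q_bb, with b_hat the BLUE of b from t and Q_bb its variance."""
    q_bb = 1.0 / quad(c_t, c_t)
    b_hat = q_bb * quad(c_t, t)
    return b_hat ** 2 / q_bb
```

The bias-significance form makes the interpretation explicit: \(w_{i}\) is the estimated bias divided by its standard deviation.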

In the multiple alternative hypotheses case (\(k>1\)), one cannot generalize the above binary decision making and expect the UMPI property to remain valid. However, although for now it is not yet clear what the actual multiple hypotheses decision making should look like, the idea of partitioning misclosure space for the purpose of such decision making can easily be generalized from the case \(k=1\) to \(k>1\), in analogy with the pull-in regions of integer estimation and integer aperture estimation (Teunissen 2003a). Therefore, if we let the multiple hypotheses testing procedure be captured by the unambiguous mapping \(\mathcal {H}: \mathbb {R}^{r} \mapsto \{0, 1, \ldots , k\}\), the regions
\[ \mathcal{P}_{i} = \{ t \in \mathbb{R}^{r} \;|\; i = \mathcal{H}(t) \}, \quad i = 0, \ldots, k \tag{12} \]

form a partitioning of the r-dimensional misclosure space, i.e. \(\cup _{i=0}^{k} \mathcal {P}_{i} = \mathbb {R}^{r}\) and \(\mathcal {P}_{i} \cap \mathcal {P}_{j} = \emptyset \) for \(i \ne j\). Hence, by specifying (12), one would have automatically and unambiguously defined the multiple testing procedure as selecting \(\mathcal {H}_{i}\) if \(t \in \mathcal {P}_{i}\).

Formulation (12) is a very general one and applies in principle to any unambiguous multiple hypotheses testing problem. How the mapping \(\mathcal {H}\), or its partitioning \(\mathcal {P}_{i}\), \(i=0, \ldots ,k\), is defined determines how the actual testing procedure is executed. The following example shows how Baarda’s datasnooping (Baarda 1968b), being one of the more familiar outlier testing procedures, fits into the above partitioning framework.

Example 1

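(Detection and 1-dim identification) Let the design matrices \([A, C_{i}]\) of the k hypotheses \(\mathcal {H}_{i}\) (cf. 2) be of order \(m \times (n+1)\), with \(i=1, \ldots , k\), denote \(C_{i}=c_{i}\) and \(B^{T}c_{i}=c_{t_{i}}\), and write Baarda’s test-statistic (Baarda 1968a; Teunissen 2000) as \( |\underline{w}_{i}|= ||P_{c_{t_{i}}}\underline{t}||_{Q_{tt}} \). Then,
\[ \mathcal{P}_{0} = \{ t \in \mathbb{R}^{r} \;|\; ||t||_{Q_{tt}} \le \tau \}, \qquad \mathcal{P}_{i} = \{ t \in \mathbb{R}^{r}\setminus\mathcal{P}_{0} \;|\; |w_{i}| = \max_{j \in \{1,\ldots,k\}} |w_{j}| \}, \quad i = 1, \ldots, k \tag{13} \]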

form a partitioning of misclosure space, provided no two of the vectors \(c_{t_{i}}\) are parallel. The inference induced by this partitioning is thus that the null-hypothesis gets accepted if in the detection step the overall model test is accepted, \(||t||_{Q_{tt}} \le \tau \), while in case of rejection, the largest of the statistics \(|w_{j}|\), \(j=1, \ldots , k\), say \(|w_{i}|\), is used to identify the ith alternative hypothesis. In the first case, \(\hat{x}_{0}\) is provided as the output estimate of x, while in the second case, \(\hat{x}_{0}\) is adapted to provide the output as \(\hat{x}_{i}\), cf. (6). In case \(k=m\) and the \(c_{i}\) are canonical unit vectors, the above testing reduces to Baarda’s single-outlier data-snooping, i.e. the procedure in which the individual observations are screened for possible outliers (Baarda 1968a; DGCC 1982; Kok 1984).

Figure 2 illustrates the geometry of partitioning (13) for the case \(A=[1, 1, 1]^{T}\), \(Q_{yy}=I_{3}\), \(c_{1}=[1,0,0]^{T}\), \(c_{2}=[0,1,0]^{T}\), and \(c_{3}=[0,0,1]^{T}\), cf. (1) and (2). With
\[ B^{T} = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 1 & -1 \end{bmatrix} \tag{14} \]

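the inverse variance matrix of the misclosure vector follows as
\[ Q_{tt}^{-1} = (B^{T}B)^{-1} = \begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix}^{-1} = \frac{1}{3}\begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix} \tag{15} \]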

This matrix determines the shape of the elliptical detection region \(||t||_{Q_{tt}}^{2}=t^{T}Q_{tt}^{-1}t < \tau ^{2}\). The fault lines along which the \(\textsf{E}(\underline{t}|\mathcal {H}_{i})=B^{T}c_{i}b_{i}\) move when \(b_{i}\) varies, \(i=1,2,3\), have direction vectors \(c_{t_{1}}=B^{T}c_{1}=[1,0]^{T}\), \(c_{t_{2}}=B^{T}c_{2}=[-1,1]^{T}\), and \(c_{t_{3}}=B^{T}c_{3}=[0,-1]^{T}\). \(\square \)

Misclosure space partitioning in \(\mathbb {R}^{r=2}\) (cf. Example 1): elliptical \(\mathcal {P}_{0}\) for detection, with \(\mathcal {P}_{i \in [1,2,3]}\) for outlier identification
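The partitioning (13) of Example 1 translates directly into a hypothesis map \(\mathcal{H}(t)\). A minimal sketch, assuming the \(Q_{tt}^{-1}\) and fault-line directions \(c_{t_{i}}\) of Example 1, and assuming that the detection threshold is set as \(\tau^{2}=\chi^{2}_{\alpha}(2,0)\); for two degrees of freedom this critical value equals \(-2\ln\alpha\). The function name `hypothesis_map` is illustrative.

```python
import math

Q_TT_INV = [[2 / 3, 1 / 3], [1 / 3, 2 / 3]]              # Q_tt^{-1} from Example 1
C_T = {1: [1.0, 0.0], 2: [-1.0, 1.0], 3: [0.0, -1.0]}    # c_{t_i} = B^T c_i

def quad(u, v):
    """Bilinear form u^T Q_tt^{-1} v."""
    return sum(u[r] * Q_TT_INV[r][s] * v[s] for r in range(2) for s in range(2))

def hypothesis_map(t, tau):
    """Return the index i of the subset P_i of partitioning (13) that contains t."""
    if math.sqrt(quad(t, t)) <= tau:        # detection: overall model test accepted
        return 0
    # identification: hypothesis with the largest |w_j|
    w = {j: abs(quad(c, t)) / math.sqrt(quad(c, c)) for j, c in C_T.items()}
    return max(w, key=w.get)
```

For instance, the misclosure \(t=[6,-2]^{T}\) (obtained from \(y=[10,4,6]^{T}\)) falls outside the detection ellipse and is assigned to \(\mathcal{H}_{1}\), flagging the first observation. Ties between the \(|w_{j}|\) only occur on the boundaries between the \(\mathcal{P}_{i}\); `max` then simply returns the first index.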

2.3 The DIA-estimator and its PDF

Once testing has been concluded, one has either accepted the null-hypothesis \(\mathcal {H}_{0}\) and provided \(\hat{x}_{0}\) as the parameter estimate of x, or identified one of the alternative hypotheses, say \(\mathcal {H}_{i}\), \(i=1, \ldots ,k\), and provided \(\hat{x}_{i}\) as the parameter estimate of x. The first happens when \(t \in \mathcal {P}_{0}\), the second when \(t \in \mathcal {P}_{i \ne 0}\). This shows that the whole of the detection, identification and adaptation (DIA) procedure to come to a final solution for the unknown parameter vector x is a combination of estimation and testing, whereby the uncertainty of both needs to be accommodated in the quality description of the final result. The actual estimator that DIA produces is therefore neither \(\hat{\underline{x}}_{0}\) nor \(\hat{\underline{x}}_{i}\), but
\[ \bar{\underline{x}} = \sum_{i=0}^{k} \hat{\underline{x}}_{i}\, p_{i}(\underline{t}) \tag{16} \]

in which \(p_{i}(t)\) denotes the indicator function of \(\mathcal {P}_{i}\), (i.e. \(p_{i}(t)=1\) for \(t \in \mathcal {P}_{i}\) and \(p_{i}(t)=0\) elsewhere). The DIA-estimator (16) represents a class of estimators, with each member in the class unambiguously defined through its misclosure space partitioning. Changing the testing procedure will change the partitioning and consequently also the DIA-estimator.

The structure of (16) resembles that of mixed integer estimation. As mentioned in Teunissen (2018, p. 67), this similarity can be extended further to mixed integer-equivariant estimation. This is achieved by replacing the indicator functions \(p_{i}(t)\) of (16) with misclosure weighting functions \(\omega _{i}(t): \mathbb {R}^{r} \mapsto \mathbb {R}\), satisfying \(\omega _{i}(t) \ge 0\), \(i=0, \ldots , k\), and \(\sum _{i=0}^{k}\omega _{i}(t)=1\). As a result, we obtain, in addition to the DIA-class, a second class of estimators, namely
\[ \bar{\underline{x}}_{\textrm{WSS}} = \sum_{i=0}^{k} \hat{\underline{x}}_{i}\, \omega_{i}(\underline{t}) \tag{17} \]

which we will call the weighted solution-separation (WSS) class. Note, since the indicator functions satisfy the properties \(p_{i}(t) \ge 0\), \(i=0, \ldots ,k\), and \(\sum _{i=0}^{k}p_{i}(t)=1\), that the DIA-class is a subset of the WSS-class, just like the integer-class is a subset of the integer-equivariant class (Teunissen 2003b).

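We have named estimators from the class (17) ‘weighted solution-separation’ estimators, since they can alternatively be represented as
\[ \bar{\underline{x}}_{\textrm{WSS}} = \hat{\underline{x}}_{0} + \sum_{i=1}^{k} (\hat{\underline{x}}_{i} - \hat{\underline{x}}_{0})\, \omega_{i}(\underline{t}) \tag{18} \]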

thus showing how \(\bar{\underline{x}}_{\textrm{WSS}}\) is obtained through a weighted solution-separation sum adjustment of the \(\mathcal {H}_{0}\)-solution \(\hat{\underline{x}}_{0}\). Note, as \(\underline{t}\) is independent of \(\hat{\underline{x}}_{0}\) and the solution separations \(\hat{\underline{x}}_{i}-\hat{\underline{x}}_{0}\) are functions of the misclosure vector \(\underline{t}\) only (cf. 6), that the weighted solution-separation sum of (18) is also independent of \(\hat{\underline{x}}_{0}\). With formulation (18) one should be aware, however, that the k weights \(\omega _{i}(t)\) sum up to \(1-\omega _{0}(t)\) and not to 1.
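The equivalence of the weighted-sum form (17), the solution-separation form (18), and the shifted form \(\hat{\underline{x}}_{0}-\ell(\underline{t})\) used in the proof of Theorem 1 can be checked numerically. A minimal sketch, assuming the toy model of Example 1, for which \(L_{i}t = A^{+}c_{i}\,\hat{b}_{i}(t)\) with \(A^{+}c_{i}=1/3\); the weight vectors are arbitrary illustrative choices that sum to 1, including one binary (DIA) choice.

```python
Q_TT_INV = [[2 / 3, 1 / 3], [1 / 3, 2 / 3]]   # Q_tt^{-1} of the assumed toy model
C_T = [[1.0, 0.0], [-1.0, 1.0], [0.0, -1.0]]  # c_{t_i} = B^T c_i, i = 1, 2, 3

def quad(u, v):
    """Bilinear form u^T Q_tt^{-1} v."""
    return sum(u[r] * Q_TT_INV[r][s] * v[s] for r in range(2) for s in range(2))

def shift(i, t):
    """L_i t = A^+ c_i (B^T c_i)^+ t for hypothesis i = 1, 2, 3 (A^+ c_i = 1/3)."""
    c = C_T[i - 1]
    return (1 / 3) * quad(c, t) / quad(c, c)

def solutions(y):
    """Misclosure t and the k + 1 = 4 BLUEs [x0_hat, x1_hat, x2_hat, x3_hat]."""
    x0 = sum(y) / 3
    t = [y[0] - y[1], y[1] - y[2]]
    return t, [x0] + [x0 - shift(i, t) for i in (1, 2, 3)]

def wss_weighted_sum(xs, w):
    """Form (17): sum_i x_i_hat * w_i."""
    return sum(wi * xi for wi, xi in zip(w, xs))

def wss_separation(xs, w):
    """Form (18): x0_hat + sum_{i>=1} (x_i_hat - x0_hat) * w_i."""
    return xs[0] + sum(wi * (xi - xs[0]) for wi, xi in zip(w[1:], xs[1:]))

def wss_shifted(x0, t, w):
    """Form x0_hat - l(t), with l(t) = sum_{i>=1} L_i t * w_i."""
    return x0 - sum(w[i] * shift(i, t) for i in (1, 2, 3))
```

Form (18) only agrees with (17) when the weights sum to 1, which is why \(\omega_{0}(t)\) does not appear explicitly in it.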

To be able to determine and judge the parameter estimation quality of (16) and (17), we need their probability density function. As (16) can be considered a special case of (17), the subscripts ‘DIA’ and ‘WSS’ will only be used in the following if the need arises. We have the following result (Teunissen 2018).

Theorem 1

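(PDF of \(\bar{\underline{x}}\)) The probability density function of (17) is given as
\[ f_{\bar{\underline{x}}}(x) = \int_{\mathbb{R}^{r}} f_{\hat{\underline{x}}_{0}}(x + \ell(\tau))\, f_{\underline{t}}(\tau)\, d\tau \tag{19} \]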

where \(\ell (t)=\sum _{i=1}^{k} L_{i}t\omega _{i}(t)\) and \(L_{i}=A^{+}C_{i}[B^{T}C_{i}]^{+}\). \(\blacksquare \)

Proof

We first express \(\bar{\underline{x}}\) in the two independent vectors \(\hat{\underline{x}}_{0}\) and \(\underline{t}\). With \(\sum _{i=0}^{k} \omega _{i}(t)=1\), substitution of \(\hat{\underline{x}}_{i}=\hat{\underline{x}}_{0}-L_{i}\underline{t}\) (cf. 6) into (17) gives \(\bar{\underline{x}}=\hat{\underline{x}}_{0}-\ell (\underline{t})\). Application of the PDF transformation rule to the pair \(\bar{\underline{x}}=\underline{\hat{x}}_{0}-\ell (\underline{t}), \;\underline{t}\), recognizing the Jacobian to be 1, gives then for their joint PDF \( f_{\bar{\underline{x}}, \underline{t}}(x, t)= f_{\underline{\hat{x}}_{0}, \underline{t}}(x+\ell (t), t) \). The marginal (19) follows then from integrating t out and recognizing that \(\underline{\hat{x}}_{0}\) and \(\underline{t}\) are independent. \(\square \)

The above result shows how the impact of the hypotheses is felt through the shift \(\ell (\tau )\) of the PDF of \(\hat{\underline{x}}_{0}\), and thus how it can be manipulated, either through the choice of \(p_{i}(t)\), i.e. the choice of misclosure space partitioning, or through the choice of the misclosure weighting functions \(\omega _{i}(t)\). Note that (19) can be expressed in terms of an expectation as
\[ f_{\bar{\underline{x}}}(x) = \textsf{E}\big(f_{\hat{\underline{x}}_{0}}(x + \ell(\underline{t}))\big) \tag{20} \]

thus showing that the PDF equals the average of random shifts \(\ell (\underline{t})\) of the PDF of \(\hat{\underline{x}}_{0}\). This expression is useful when one wants to Monte-Carlo simulate \(f_{\bar{\underline{x}}}(x)\) or integral values of it (Robert and Casella 2004). For example, to compute \(\textsf{P}[\bar{\underline{x}} \in \varOmega \subset \mathbb {R}^{n}]=\int _{\mathbb {R}^{n}}f_{\bar{\underline{x}}}(x)i_{\varOmega }(x)dx\), with \(i_{\varOmega }(x)\) the indicator function of \(\varOmega \), we first express the probability in terms of an expectation, \(\textsf{P}[\bar{\underline{x}} \in \varOmega \subset \mathbb {R}^{n}] = V_{\varOmega } \int _{\mathbb {R}^{n}}f_{\bar{\underline{x}}}(x)u_{\underline{x}}(x)dx = V_{\varOmega } \textsf{E}(f_{\bar{\underline{x}}}(\underline{x}))\), with volume \(V_{\varOmega }=\int _{\varOmega } dx\) and \(u_{\underline{x}}(x)=\frac{i_{\varOmega }(x)}{V_{\varOmega }}\) the uniform PDF over \(\varOmega \). Then one may use the Monte-Carlo approximation \(\textsf{P}[\bar{\underline{x}} \in \varOmega \subset \mathbb {R}^{n}] \approx \frac{V_{\varOmega }}{k_{x}}\sum _{j=1}^{k_{x}}f_{\bar{\underline{x}}}(x_{j})\), in which \(x_{j}\), \(j=1, \ldots , k_{x}\), are \(k_{x}\) samples drawn from the uniform PDF over \(\varOmega \subset \mathbb {R}^{n}\). This, together with a similar Monte-Carlo approximation of (20), then gives
\[ \textsf{P}[\bar{\underline{x}} \in \varOmega] \approx \frac{V_{\varOmega}}{k_{x}k_{t}} \sum_{j=1}^{k_{x}} \sum_{i=1}^{k_{t}} f_{\hat{\underline{x}}_{0}}(x_{j} + \ell(t_{i})) \tag{21} \]

in which \(t_{i}\), \(i=1, \ldots , k_{t}\), are the \(k_{t}\) samples drawn from the PDF \(f_{\underline{t}}(t)\). Standard Monte-Carlo simulation can be further improved with importance sampling and other variance-reduction techniques, see, e.g. Kroese et al. (2011).
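A minimal sketch of the double Monte-Carlo approximation above, compared against a direct simulation of \(\bar{\underline{x}}\). It assumes the toy model of Example 1 with the datasnooping partitioning (13) and \(\tau^{2}=-2\ln 0.05\), a scalar tolerance region \(\varOmega=[x-\epsilon, x+\epsilon]\), and optionally a bias on the first observation (\(\mathcal{H}_{1}\)). Since \(B^{T}A=0\), the unknown x drops out and everything is computed in error coordinates. Sample sizes and function names are illustrative, and plain sampling is used without the variance-reduction techniques mentioned above.

```python
import math
import random

TAU2 = -2 * math.log(0.05)                       # chi^2_alpha(2, 0) for alpha = 0.05
Q_TT_INV = [[2 / 3, 1 / 3], [1 / 3, 2 / 3]]      # Q_tt^{-1} of the assumed toy model
C_T = [[1.0, 0.0], [-1.0, 1.0], [0.0, -1.0]]     # c_{t_i} = B^T c_i, i = 1, 2, 3

def quad(u, v):
    """Bilinear form u^T Q_tt^{-1} v."""
    return sum(u[r] * Q_TT_INV[r][s] * v[s] for r in range(2) for s in range(2))

def ell(t):
    """Shift l(t) = sum_i L_i t p_i(t) of Theorem 1 under datasnooping (13)."""
    if quad(t, t) <= TAU2:
        return 0.0                                # t in P_0: x0_hat is kept
    w = [abs(quad(c, t)) / math.sqrt(quad(c, c)) for c in C_T]
    c = C_T[w.index(max(w))]                      # identified hypothesis
    return (1 / 3) * quad(c, t) / quad(c, c)      # A^+ c_i * b_i_hat(t)

def sample_canonical(rng, bias):
    """Draw (t, x0_hat - x) for y = A x + c_1 * bias + e, e ~ N(0, I_3)."""
    e = [rng.gauss(0.0, 1.0) for _ in range(3)]
    e[0] += bias
    return [e[0] - e[1], e[1] - e[2]], sum(e) / 3

def prob_double_mc(eps, bias, k_x, k_t, rng):
    """Double Monte-Carlo approximation of P[|x_bar - x| <= eps]."""
    shifts = [ell(sample_canonical(rng, bias)[0]) for _ in range(k_t)]
    mean0, var0 = bias / 3, 1 / 3                 # x0_hat - x ~ N(bias / 3, 1 / 3)
    norm = 1 / math.sqrt(2 * math.pi * var0)
    total = 0.0
    for _ in range(k_x):
        u = rng.uniform(-eps, eps)                # x_j - x, uniform over Omega
        total += sum(norm * math.exp(-(u + s - mean0) ** 2 / (2 * var0)) for s in shifts)
    return 2 * eps * total / (k_x * k_t)          # V_Omega = 2 * eps

def prob_direct(eps, bias, n, rng):
    """Direct simulation of P[|x_bar - x| <= eps], using x_bar = x0_hat - l(t)."""
    hits = 0
    for _ in range(n):
        t, x0_err = sample_canonical(rng, bias)
        hits += abs(x0_err - ell(t)) <= eps
    return hits / n
```

Precomputing the \(k_{t}\) shifts once and reusing them for every \(x_{j}\) keeps the cost at \(k_{x}k_{t}\) density evaluations, which matches the double sum.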

An important difference between (16) and (17) is the use of binary weights \(p_{i}(t)\) in the DIA-estimator. It is through these binary weights that the DIA-estimator is unambiguously linked to hypothesis testing. In fact, the testing procedure is the defining trait of the DIA-estimator. No such link exists, however, when the WSS-estimator is based on smooth misclosure weight functions \(\omega _{i}(t)\). In that case, a weighted average of all \(k+1\) parameter solutions \(\hat{x}_{i}\) is taken, instead of the single ’winner-takes-all’ solution of (16). Although an explicit testing procedure is absent in case of the WSS-estimator with smooth weights, the estimator does reveal its hypothesis-preference through its choice of weighting functions. As the weight \(\omega _{i}(t)\) can be seen to be a measure of preference that is given to solution \(\hat{x}_{i}\) for a given t, it may be interpreted as the conditional probability \(\textsf{P}[\underline{i}=i|t]\). For the binary weight \(\omega _{i}(t)=p_{i}(t)\), it would then be the \(1-0\) probability of selecting the BLUE \(\hat{\underline{x}}_{i}\) given the outcome of the misclosure vector being t. For the conditional and unconditional expectations of the random weight \(\omega _{i}(\underline{t})\) we then have \(\textsf{E}(\omega _{i}(\underline{t})|\mathcal {H}_{j})=\textsf{P}[\underline{i}=i|\mathcal {H}_{j}]\) and \(\textsf{E}(\omega _{i}(\underline{t}))=\textsf{P}[\underline{i}=i]\), thus showing how the expectation of the weights can be read as probabilities assigned to the hypotheses. In case of the DIA-estimator, having the binary weight \(\omega _{i}(t)=p_{i}(t)\), the expectations specialize to \(\textsf{E}(p_{i}(\underline{t})|\mathcal {H}_{j})=\textsf{P}[\underline{t}\in \mathcal {P}_{i}|\mathcal {H}_{j}]\) and \(\textsf{E}(p_{i}(\underline{t}))=\textsf{P}[\underline{t}\in \mathcal {P}_{i}]\), which are the probabilities with which the hypotheses are identified by the testing procedure.

In our description of DIA-estimation, we have so far assumed that one of the estimates \(\hat{x}_{i}\), \(i=0, \ldots , k\), is always provided as output, even, for instance, when it is hard to discriminate between some of the hypotheses or when identification is unconvincing. However, when one lacks confidence in the decision making, one may prefer to state that a solution is unavailable rather than to provide an actual, but possibly unreliable, parameter estimate. To accommodate such situations, one can generalize the procedure and introduce an additional undecided region \(\mathcal {P}_{k+1} \subset \mathbb {R}^{r}\) in the misclosure space partitioning. This is similar in spirit to the undecided regions of the theory of integer aperture estimation (Teunissen 2003a). With the undecided region \(\mathcal {P}_{k+1}\) in place, the DIA-estimator generalizes to
\[ \bar{\underline{x}} = \begin{cases} \sum_{i=0}^{k} \hat{\underline{x}}_{i}\, p_{i}(\underline{t}) & \text{if } \underline{t} \notin \mathcal{P}_{k+1} \\ \text{unavailable} & \text{if } \underline{t} \in \mathcal{P}_{k+1} \end{cases} \tag{22} \]

As parameter estimates are now only provided when \(t \in \mathbb {R}^{r}{\setminus } \mathcal {P}_{k+1}\), the evaluation of the DIA-estimator would now need to be based on its conditional PDF \(f_{\bar{\underline{x}}|t \notin \mathcal {P}_{k+1}}(x)\), the expression of which can be found in Teunissen (2018).

In practice one is quite often not interested in the complete parameter vector \(x \in \mathbb {R}^{n}\), but rather only in certain functions of it, say \(\theta = F^{T}x \in \mathbb {R}^{p}\). As its DIA-estimator is then computed as \(\bar{\underline{\theta }}= F^{T}\bar{\underline{x}}\), we need its distribution to evaluate its performance. In analogy with Theorem 1, the PDF of \(\bar{\underline{\theta }}\) is given as \( f_{\bar{\underline{\theta }}}(\theta )= \int _{\mathbb {R}^{r}} f_{\hat{\underline{\theta }}_{0}}(\theta +F^{T}\ell (\tau ))f_{\underline{t}}(\tau )d \tau \). Although we will be working with \(\bar{\underline{x}}\), instead of \(\bar{\underline{\theta }}\), in the remainder of this contribution, it should be understood that the results provided can similarly be given for \(\bar{\underline{\theta }}= F^{T}\bar{\underline{x}}\) as well.

We also note, although all our results are formulated in terms of the misclosure vector \(\underline{t}\in \mathbb {R}^{r}\), that they can be formulated in terms of the least-squares residual vector \(\hat{\underline{e}}_{0}=\underline{y}-A\hat{\underline{x}}_{0} \in \mathbb {R}^{m}\) as well. This follows, since \(\underline{t}= B^{T}\hat{\underline{e}}_{0}\).

3 Penalized testing

3.1 Minimum mean penalty testing

The DIA-estimator (16) represents a class of estimators, with each member in the class unambiguously defined through its misclosure partitioning. Any change in the partitioning will change the outcome of testing and thus also the quality of the testing procedure and its decision making. As, like in (22), the number of subsets of the partitioning need not be equal to the number of hypotheses, we put no restriction on the number of subsets in the following and thus let misclosure space \(\mathbb {R}^{r}\) be partitioned into \(l+1\) subsets \(\mathcal {P}_{i}\), \(i=0, \ldots , l\), thereby assuming that each subset is unambiguously linked to a decision, i.e. decision i is made when \(t \in \mathcal {P}_{i}\). To be able to compare the quality of different partitionings, we introduce a weighting scheme that weighs the envisioned risk of a decision i. This is done by assigning to decision i a nonnegative risk penalizing function \(\texttt{r}_{i\alpha }(t)\), with \(t \in \mathcal {P}_{i}\), for each of the \(k+1\) hypotheses \(\mathcal {H}_{\alpha }\), \(\alpha =0, \ldots , k\). Note that we allow the penalty of the invoked risk to depend on where t is located within \(\mathcal {P}_{i}\). Using the indicator function \(p_{i}(t)\) of \(\mathcal {P}_{i}\), we can write the hypothesis \(\mathcal {H}_{\alpha }\)-penalty function, for all \(t \in \mathbb {R}^{r}\), as
\[ \texttt{r}_{\alpha}(t) = \sum_{i=0}^{l} \texttt{r}_{i\alpha}(t)\, p_{i}(t), \quad \alpha = 0, \ldots, k \tag{23} \]

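As the misclosure vector \(\underline{t}\) is random, the function values \(\texttt{r}_{\alpha }(t)\) may now be considered outcomes of a random risk penalty variable \(\underline{\texttt{r}}\) conditioned on \(\mathcal {H}_{\alpha }\). We therefore have the conditional means
\[ \textsf{E}(\underline{\texttt{r}}|\mathcal{H}_{\alpha}) = \int_{\mathbb{R}^{r}} \texttt{r}_{\alpha}(t)\, f_{\underline{t}}(t|\mathcal{H}_{\alpha})\, dt = \sum_{i=0}^{l} \int_{\mathcal{P}_{i}} \texttt{r}_{i\alpha}(t)\, f_{\underline{t}}(t|\mathcal{H}_{\alpha})\, dt \tag{24} \]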

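and the unconditional mean
\[ \textsf{E}(\underline{\texttt{r}}) = \sum_{\alpha=0}^{k} \textsf{E}(\underline{\texttt{r}}|\mathcal{H}_{\alpha})\, \pi_{\alpha} = \sum_{i=0}^{l} \int_{\mathcal{P}_{i}} \bar{\texttt{r}}_{i}(t)\, dt \tag{25} \]

where
\[ \bar{\texttt{r}}_{i}(t) = \sum_{\alpha=0}^{k} \texttt{r}_{i\alpha}(t)\, f_{\underline{t}}(t|\mathcal{H}_{\alpha})\, \pi_{\alpha} \tag{26} \]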

If we were to change the partitioning of \(\mathbb {R}^{r}\), i.e. change the values of t for which decision i is made, then the mean penalty \(\textsf{E}(\underline{\texttt{r}})\) would change as well. Hence, we can now think of a best possible partitioning, namely one that minimizes the mean risk penalty.
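The conditional and unconditional mean penalties can be estimated by simulating the misclosure vector under each hypothesis. A minimal sketch, assuming the toy model and datasnooping partitioning of Example 1 (so \(l=k=3\), with \(\tau^{2}=-2\ln\alpha\)), a hypothetical 0-1 penalty \(\texttt{r}_{i\alpha}(t)=1-\delta_{i\alpha}\) that does not depend on t, the same bias size under every alternative, and illustrative prior probabilities \(\pi_{\alpha}\). With this penalty, \(\textsf{E}(\underline{\texttt{r}}|\mathcal{H}_{0})\) is the false-alarm probability, which for this detection step should equal \(\alpha\).

```python
import math
import random

ALPHA = 0.05
TAU2 = -2 * math.log(ALPHA)                      # chi^2_alpha(2, 0) for r = 2
Q_TT_INV = [[2 / 3, 1 / 3], [1 / 3, 2 / 3]]      # Q_tt^{-1} of the assumed toy model
C_T = [[1.0, 0.0], [-1.0, 1.0], [0.0, -1.0]]     # c_{t_i} = B^T c_i, i = 1, 2, 3

def quad(u, v):
    """Bilinear form u^T Q_tt^{-1} v."""
    return sum(u[r] * Q_TT_INV[r][s] * v[s] for r in range(2) for s in range(2))

def decide(t):
    """Index i of the subset P_i containing t (datasnooping, Example 1)."""
    if quad(t, t) <= TAU2:
        return 0
    w = [abs(quad(c, t)) / math.sqrt(quad(c, c)) for c in C_T]
    return 1 + w.index(max(w))

def penalty(i, alpha, t):
    """Hypothetical 0-1 penalty r_{i alpha}(t) = 1 - delta_{i alpha}."""
    return 0.0 if i == alpha else 1.0

def conditional_mean_penalty(alpha, bias, n, rng):
    """Monte-Carlo estimate of E(r | H_alpha); H_alpha biases observation alpha."""
    total = 0.0
    for _ in range(n):
        e = [rng.gauss(0.0, 1.0) for _ in range(3)]
        if alpha > 0:
            e[alpha - 1] += bias
        t = [e[0] - e[1], e[1] - e[2]]           # t = B^T y; x drops out
        total += penalty(decide(t), alpha, t)
    return total / n

def mean_penalty(priors, bias, n, rng):
    """Unconditional mean E(r) = sum_alpha pi_alpha E(r | H_alpha)."""
    return sum(p * conditional_mean_penalty(a, bias, n, rng)
               for a, p in enumerate(priors))
```

Replacing `penalty` with a different (possibly t-dependent) function changes the mean penalty of the same partitioning, which is exactly the comparison tool described in the text.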

To minimize \(\textsf{E}(\underline{\texttt{r}})\) in dependence on the \(l+1\) subsets \(\mathcal {P}_{i}\), \(i=0, \ldots , l\), we make use of the following lemma:

Lemma 1

(Optimal constrained partitioning) Let the \(l+1\) subsets \(\mathcal {P}_{i} \subset \mathbb {R}^{r}\), \(i=0, \ldots , l\), form a partitioning of \(\mathbb {R}^{r}\), i.e. \(\cup _{i=0}^{l} \mathcal {P}_{i} = \mathbb {R}^{r}\) and \(\mathcal {P}_{i}\cap \mathcal {P}_{j} = \emptyset \) for \(i \ne j\), and let \(f_{i}(t): \mathbb {R}^{r} \mapsto \mathbb {R}\) be \(l+1\) given non-negative functions. If \(\mathcal {P}_{0}\) is known, then the \(\mathcal {P}_{0}\)-constrained subsets that minimize the sum
\[ S = \sum_{i=0}^{l} \int_{\mathcal{P}_{i}} f_{i}(t)\, dt \tag{27} \]

are given as

\(\blacksquare \)

Proof

From writing the sum S as

and recognizing that the l subsets \(\mathcal {P}_{i}\), \(i=1, \ldots , l\), now form a partitioning of \(\mathbb {R}^{r}{\setminus } \mathcal {P}_{0}\), it follows that the second term of (29) is minimized when each subset \(\mathcal {P}_{i}\) covers that part of the domain \(\mathbb {R}^{r}{\setminus }{\mathcal {P}_{0}}\) where \(f_{i}\) is the smallest of the functions \(f_{1}, \ldots , f_{l}\). As a result, the l subsets are to be chosen as given by (28). \(\square \)
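The construction in the proof can be mimicked on a discretized domain: every point outside the given \(\mathcal {P}_{0}\) is assigned to the index of the smallest \(f_{i}\), \(i=1, \ldots , l\). The sketch below assumes a hypothetical one-dimensional example (\(r=1\), \(l=2\)) with Gaussian-shaped functions and \(\mathcal {P}_{0}=\{|t|<1\}\); the helper names are illustrative only. It compares the resulting sum S with two other \(\mathcal {P}_{0}\)-constrained partitionings.

```python
import math


def constrained_partition(points, funcs, in_p0):
    """Label grid points: 0 inside the given P0, else the argmin of f_1..f_l, cf. (28)."""
    labels = []
    for t in points:
        if in_p0(t):
            labels.append(0)
        else:
            values = [f(t) for f in funcs[1:]]      # f_0 plays no role outside P0
            labels.append(1 + values.index(min(values)))
    return labels


def sum_s(points, funcs, labels, dt):
    """Riemann approximation of S = sum_i int_{P_i} f_i(t) dt on a uniform grid."""
    return dt * sum(funcs[i](t) for t, i in zip(points, labels))


def in_p0(t):
    return abs(t) < 1.0                             # hypothetical given P0


# Hypothetical non-negative functions f_0, f_1, f_2 on the real line (r = 1, l = 2)
funcs = [lambda t: math.exp(-t * t / 2),
         lambda t: math.exp(-(t - 2) ** 2 / 2),
         lambda t: math.exp(-(t + 2) ** 2 / 2)]
dt = 0.01
points = [-6.0 + dt * j for j in range(1201)]

best = constrained_partition(points, funcs, in_p0)
s_best = sum_s(points, funcs, best, dt)
all_to_1 = [0 if in_p0(t) else 1 for t in points]                   # competitor 1
swapped = [0 if in_p0(t) else (1 if t > 0 else 2) for t in points]  # competitor 2
print(s_best, sum_s(points, funcs, all_to_1, dt), sum_s(points, funcs, swapped, dt))
```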

Note that, by assuming the l subsets \(\mathcal {P}_{i}\) of (28) to form a partitioning of \(\mathbb {R}^{r}{\setminus }{\mathcal {P}_{0}}\), implicit requirements are placed on the functions \(f_{i}(t)\). Such a partitioning would, for instance, not be realized if all functions \(f_{i}(t)\) were equal. Also note that in the unconstrained case, i.e. if \(\mathcal {P}_{0}\) is also unknown, \(\mathbb {R}^{r}{\setminus } \mathcal {P}_{0}\) needs to be replaced by \(\mathbb {R}^{r}\) in (28) and \([1, \ldots , l]\) by \([0, \ldots , l]\). We will use both the constrained and the unconstrained case in the following sections. In fact, expression (28) is also useful for the unconstrained case, since it shows that, once the unconstrained minimizer \(\mathcal {P}_{0}\) is found, the function \(f_{j=0}(t)\) need no longer be considered in the search for the remaining unconstrained minimizers \(\mathcal {P}_{i \in [1, \ldots ,l]}\). As we will see in the following sections, this allows compact and transparent formulations of the various misclosure partitionings. Finally, note that to maximize the sum S, the minimization in (28) needs to be replaced by a maximization. With the minimum and maximum, one can bound the sum S as \(S_{\textrm{min}} \le S \le S_{\textrm{max}}\).

We now apply Lemma 1 so as to find the misclosure space testing partitioning that minimizes the mean penalty \(\textsf{E}(\underline{\texttt{r}})\).

Theorem 2a

(Minimum mean penalty testing) The misclosure space partitioning \(\mathcal {P}_{i \in [0, \ldots , l]} \subset \mathbb {R}^{r}\) that minimizes the mean penalty

is given by:

where \(F_{\alpha }(t)=f_{\underline{t}}(t|\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]\).

Proof

From writing the mean penalty as

with \(f_{i}(t)= \sum _{\alpha =0}^{k} \texttt{r}_{i \alpha }(t)f_{\underline{t}}(t|\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]\), the result follows when applying Lemma 1. \(\square \)

Note that the minimizer in (31) is invariant to a scaling of its objective function by an arbitrary positive function of t. This property will be used at various places in the following. For instance, by using a common scaling, the values of the penalty functions may all be considered to lie between 0 and 1. Also note that, by normalizing the objective function of (31) with the marginal PDF of the misclosure vector, \(f_{\underline{t}}(t)= \sum _{\alpha =0}^{k}f_{\underline{t}}(t|\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]\), and recognizing the result as \(\textsf{E}(\underline{\texttt{r}}_{j}|t)=\sum _{\alpha =0}^{k} \texttt{r}_{j \alpha }(t)\textsf{P}[\mathcal {H}_{\alpha }|t]\), the optimal partitioning (31) can be written as

thus showing that decision i is made, i.e. t is assigned to \(\mathcal {P}_{i}\), precisely when decision i has the smallest conditional mean penalty given t.

Expressing the minimum mean penalty partitioning in terms of \(\textsf{E}(\underline{\texttt{r}}_{i}|t)\) is also insightful when one of the penalty functions is simply a constant.

Corollary 1

(A constant penalty function) Let decision \(i=l\) have the constant penalty functions \(\texttt{r}_{l\alpha }(t)=\rho \) for \(\alpha = 0, \ldots ,k\). Then, the minimum mean penalty partitioning follows from (31) as

\(\blacksquare \)

As an application, one can think of decision \(i=l\) as the decision to not identify any of the hypotheses, thereby declaring the parameter solution unavailable, cf. (22). The solution is then declared unavailable when the smallest misclosure-conditioned mean penalty of the remaining decisions is still considered too large, i.e. larger than \(\rho \).
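The decision rule above, pick the decision with the smallest conditional mean penalty and fall back to "unavailable" when even that exceeds \(\rho \), can be sketched directly from the posterior probabilities \(\textsf{P}[\mathcal {H}_{\alpha }|t]\). The sketch assumes a hypothetical scalar model \(\underline{t} \overset{\mathcal {H}_{\alpha }}{\sim } \mathcal {N}(m_{\alpha },1)\) with known means; the function names and numbers are illustrative.

```python
from statistics import NormalDist


def posteriors(t, means, priors):
    """P[H_a | t] for a hypothetical scalar model t | H_a ~ N(means[a], 1)."""
    joint = [p * NormalDist(m, 1.0).pdf(t) for m, p in zip(means, priors)]
    total = sum(joint)
    return [j / total for j in joint]


def decide(t, means, priors, penalties, rho):
    """Return the decision with the smallest conditional mean penalty E(r_i | t).

    penalties[i][a] = r_{ia} for the decisions i = 0..l-1; decision l
    ('unavailable') has the constant penalty rho, as in Corollary 1.
    """
    post = posteriors(t, means, priors)
    cond_mean = [sum(r_ia * p for r_ia, p in zip(row, post)) for row in penalties]
    best = min(range(len(cond_mean)), key=cond_mean.__getitem__)
    return best if cond_mean[best] <= rho else len(penalties)


means, priors = [0.0, 3.0], [0.9, 0.1]
penalties = [[0.0, 1.0],   # decision 0 (accept H0)
             [1.0, 0.0]]   # decision 1 (identify H1)
for t in (0.0, 2.2, 5.0):
    print(t, decide(t, means, priors, penalties, rho=0.3))
```

Near the boundary between the two hypotheses both conditional mean penalties are close to 0.5, so with \(\rho =0.3\) the solution is declared unavailable there.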

Finally, note that we have treated the occurrence of hypotheses as a discrete random variable, with its probabilities of occurrence given by the function \(\textsf{P}[\mathcal {H}_{\alpha }]\) (a stricter, but longer notation would have been \(\textsf{P}[\underline{\mathcal {H}}_{\alpha }=\mathcal {H}_{\alpha }]\)). Specifying these probabilities may not be easy and may require extensive experience with the actual frequencies of their occurrence. In the absence of such experience, however, guidance may be taken from considerations of symmetry or complexity. For instance, if there is no reason to believe that one alternative hypothesis is more likely to occur than another, then with \(\textsf{P}[\mathcal {H}_{0}]=\pi _{0}\), the probabilities of the alternative hypotheses are given as \(\textsf{P}[\mathcal {H}_{\alpha }]=(1-\pi _{0})/k\) for \(\alpha = 1, \ldots , k\). Also, with reference to the principle of parsimony, one could consider describing the probabilities of occurrence as decreasing functions of the bias-vector dimensions, \(q_{\alpha }\). For instance, in case of multiple outlier testing, it seems reasonable to attach a lower probability to the simultaneous occurrence of a higher number of outliers. As an example, having \(\pi \ll 1- \pi \) as the probability of a single-outlier occurrence, one could model the probability of occurrence of an m-observation, \(q_{\alpha }\)-outlier hypothesis as

Although the assignment of probabilities \(\textsf{P}[\mathcal {H}_{\alpha }]\) may in general not be an easy task, some consolation can be taken from the following two considerations. First, as the PDF (19) can be computed rigorously for any partitioning, the hypothesis-conditioned quality description of the corresponding DIA-estimator does not suffer from inaccuracies in specifying \(\textsf{P}[\mathcal {H}_{\alpha }]\). Second, as \(\textsf{P}[\mathcal {H}_{\alpha }]\) occurs in (31) in a product with \(\texttt{r}_{j \alpha }(t)\), any inaccuracies in the probability assignment may be interpreted as a variation in the risk penalty assignment.

We now give four simple examples to illustrate the workings of (31). We often make use of the simpler short-hand notation \(\pi _{\alpha }=\textsf{P}[\mathcal {H}_{\alpha }]\).

Example 2

(Detection-only) Let \(k=l=1\), \(\texttt{r}_{10}=\texttt{r}_{11}=\rho \), \(\underline{y} \overset{\mathcal {H}_{0}}{\sim } \mathcal {N}_{m}(Ax, Q_{yy})\) and \(\mathcal {H}_{1} \ne \mathcal {H}_{0}\). Then (31) simplifies to \(\mathcal {P}_{0}=\{t \in \mathbb {R}^{r}\;|\; \texttt{r}_{00}F_{0}(t)+\texttt{r}_{01}F_{1}(t) < \rho (F_{0}(t)+F_{1}(t))\}\), from which it follows that

In this case the null-hypothesis is only accepted if its misclosure-conditioned mean penalty is small enough. This case is referred to as 'detection-only', as no identification of particular alternative hypotheses is asked for (Zaminpardaz and Teunissen 2023). Rejection of the null-hypothesis thus automatically leads to unavailability of a parameter solution for x. \(\square \)

Example 3

(The \(k=l=1\) case, with varying penalties) Without the assumption of the same penalty \(\texttt{r}_{10}=\texttt{r}_{11}\) for decision \(i=1\), (31) simplifies, with \(\texttt{r}_{00}<\texttt{r}_{10}\), to

with \(c = \frac{\texttt{r}_{01}-\texttt{r}_{11}}{\texttt{r}_{10}-\texttt{r}_{00}}\frac{1-\pi _{0}}{\pi _{0}}\). Note that \(\mathcal {P}_{0}\) increases in size when \(\pi _{0}\) gets larger at the expense of \(\pi _{1}=1-\pi _{0}\) and/or when the relative penalty ratio \(\frac{\texttt{r}_{01}-\texttt{r}_{11}}{\texttt{r}_{10}-\texttt{r}_{00}}\) gets smaller. This is also what one would like to happen: a larger occurrence probability of the null-hypothesis should give a larger acceptance region, with, in the limit \(\pi _{0} \rightarrow 1\), no rejection at all. Similarly, when the relative risk of wrongly making decision \(i=0\) while \(\mathcal {H}_{1}\) is true decreases, one would like the acceptance region to increase in size. \(\square \)
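For a hypothetical scalar model \(\underline{t} \overset{\mathcal {H}_{0}}{\sim } \mathcal {N}(0,1)\), \(\underline{t} \overset{\mathcal {H}_{1}}{\sim } \mathcal {N}(b,1)\) with known \(b>0\), the condition \(c f_{1}(t) < f_{0}(t)\) that (31) yields here (assuming \(\texttt{r}_{01}>\texttt{r}_{11}\)) reduces to a one-sided threshold. The sketch below, with an illustrative function name, makes the monotonic behaviour described above easy to check numerically.

```python
import math


def acceptance_threshold(b, pi0, r00, r01, r10, r11):
    """tau such that P0 = {t < tau} for t|H0 ~ N(0,1), t|H1 ~ N(b,1), b > 0 known.

    Follows from (31): t in P0 iff c f1(t) < f0(t), with c as in Example 3.
    """
    if not (r10 > r00 and r01 > r11 and b > 0):
        raise ValueError("sketch assumes r10 > r00, r01 > r11 and b > 0")
    c = (r01 - r11) / (r10 - r00) * (1.0 - pi0) / pi0
    # c * exp(b t - b^2 / 2) < 1  <=>  t < b / 2 - ln(c) / b
    return b / 2.0 - math.log(c) / b


for pi0 in (0.5, 0.9, 0.99):
    print(pi0, round(acceptance_threshold(3.0, pi0, 0.0, 1.0, 1.0, 0.0), 3))
```

Increasing \(\pi _{0}\), or lowering \(\texttt{r}_{01}\), decreases c and pushes the threshold to the right, i.e. enlarges \(\mathcal {P}_{0}\).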

Example 4

\((k=l=2\) and \(\mathcal {P}_{0}\) is given) In this case we have three hypotheses and three decisions. We assume \((\texttt{r}_{21}-\texttt{r}_{11})\pi _{1}= (\texttt{r}_{12}-\texttt{r}_{22})\pi _{2}\). Then, with \(c= \tfrac{\pi _{0}}{(\texttt{r}_{21}-\texttt{r}_{11})\pi _{1}}>0\), the partitioning for the three hypotheses reads

This shows that if \(\texttt{r}_{20}\) gets larger, i.e. the penalty of choosing \(\mathcal {H}_{2}\) while \(\mathcal {H}_{0}\) is true gets larger, then the region \(\mathcal {P}_{1}\) gets larger at the expense of \(\mathcal {P}_{2}\). \(\square \)

Example 5

\((k=1\), \(l=2\), with undecided and \(\mathcal {P}_{0}\) is given) In this case, we have two hypotheses and three decisions. The partitioning for the three decisions follows then from (31), with \(\texttt{r}_{21} > \texttt{r}_{11}\), as

with \(c = \frac{\texttt{r}_{10}-\texttt{r}_{20}}{\texttt{r}_{21}-\texttt{r}_{11}}\frac{\pi _{0}}{1-\pi _{0}}\) and \(\mathcal {P}_{2}\) the undecided region. \(\square \)

3.2 Creating an operational misclosure partitioning

Partitioning (31) is only operational if the PDFs \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) are completely known. In our case, however, we also have to deal with the bias vectors \(b_{\alpha }\) of \(\mathcal {H}_{\alpha }\), cf. (5), and therefore we only have the PDFs

available. We can now distinguish the following three cases:

Case (a): When all the bias vectors are known, also the PDFs \(f_{\underline{t}}(t|\mathcal {H}_{\alpha }):=f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\) are known and partitioning (31) can be applied directly.

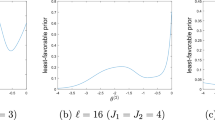

Case (b): When the bias vectors are considered random with known PDF \(f_{\underline{b}_{\alpha }}(b_{\alpha } | \mathcal {H}_{\alpha })\), the marginal PDF \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) can be constructed from the joint PDF \(f_{\underline{t}, \underline{b}_{\alpha }}(t, b_{\alpha }|\mathcal {H}_{\alpha })=f_{\underline{t}|b_{\alpha }}(t |b_{\alpha }, \mathcal {H}_{\alpha })f_{\underline{b}_{\alpha }}(b_{\alpha }|\mathcal {H}_{\alpha })\) as

where \(f_{\underline{t}|b_{\alpha }}(t |\beta , \mathcal {H}_{\alpha }):=f_{\underline{t}}(t|\beta , \mathcal {H}_{\alpha })\). Using (42), partitioning (31) can again be applied directly. For example, if it is believed that the distributional information on the biases can be captured by \(f_{\underline{b}_{\alpha }}(b|\mathcal {H}_{\alpha }) = \mathcal {N}_{q_{\alpha }}(0, Q_{\alpha })\), then the marginal PDF (42) becomes \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })=\mathcal {N}_{r}(0, Q_{tt}+C_{t_{\alpha }}Q_{\alpha }C_{t_{\alpha }}^{T})\), thus showing that, under \(\mathcal {H}_{\alpha }\), the prior on the biases results in a variance inflation of \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) in the hypothesized fault direction \(\mathcal {R}(C_{t_{\alpha }})\). If, alternatively, the PDF of \(\underline{b}_{\alpha }\) were so peaked that it became a Dirac delta function, \(f_{\underline{b}_{\alpha }}(\beta |\mathcal {H}_{\alpha })=\delta (\beta - b_{\alpha })\), with \(b_{\alpha }\) known, then substitution into (42) would give

thus recovering (40), but now with \(b_{\alpha }\) known.
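The variance-inflation statement of Case (b) can be checked by simulation: draw \(\underline{b}_{\alpha } \sim \mathcal {N}(0, Q_{\alpha })\), add misclosure noise with variance \(Q_{tt}\), and compare the sample covariance of \(\underline{t}\) with \(Q_{tt}+C_{t_{\alpha }}Q_{\alpha }C_{t_{\alpha }}^{T}\). The sketch below is a Monte Carlo illustration only, assuming a hypothetical \(r=2\), \(q_{\alpha }=1\) setting; the matrices and the fault direction are made-up values.

```python
import math
import random


def cholesky2(q):
    """Lower-triangular L with L L^T = q for a 2x2 symmetric positive-definite q."""
    l11 = math.sqrt(q[0][0])
    l21 = q[1][0] / l11
    l22 = math.sqrt(q[1][1] - l21 * l21)
    return [[l11, 0.0], [l21, l22]]


def sample_covariance(samples):
    n = len(samples)
    mean = [sum(s[i] for s in samples) / n for i in range(2)]
    return [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
             for j in range(2)] for i in range(2)]


rng = random.Random(1)
q_tt = [[1.0, 0.5], [0.5, 2.0]]   # hypothetical misclosure variance matrix Q_tt
c_t = [1.0, 2.0]                  # hypothetical fault direction C_{t_alpha} (q_alpha = 1)
q_alpha = 4.0                     # hypothetical prior variance Q_alpha of the bias
L = cholesky2(q_tt)

samples = []
for _ in range(100_000):
    b = rng.gauss(0.0, math.sqrt(q_alpha))              # b ~ N(0, Q_alpha)
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    e = [L[0][0] * z1, L[1][0] * z1 + L[1][1] * z2]      # e ~ N(0, Q_tt)
    samples.append([c_t[0] * b + e[0], c_t[1] * b + e[1]])

predicted = [[q_tt[i][j] + c_t[i] * q_alpha * c_t[j] for j in range(2)] for i in range(2)]
empirical = sample_covariance(samples)
print(predicted)
print(empirical)
```

The inflation term \(C_{t_{\alpha }}Q_{\alpha }C_{t_{\alpha }}^{T}\) only adds variance along the fault direction, which is why the off-diagonal and diagonal entries grow in proportion to the products of the components of \(C_{t_{\alpha }}\).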

Case (c): As neither of the above two cases, bias-known or bias-random, may be applicable in general, one has to work with an alternative approach to cope with the unknown bias vectors. We present two such approaches. If \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) in (30) is replaced by \(f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\), the mean penalty is obtained as a function of the unknown biases, \(\textsf{E}(\underline{\texttt{r}}|b_{1}, \ldots , b_{k})\). To cope with the unknown biases, we try to capture the characteristics of this function by using either its average \(\bar{\textsf{E}}(\underline{\texttt{r}})\) or an estimate \(\hat{\textsf{E}}(\underline{\texttt{r}})\). The first approach is realized if we replace \(f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\) in \(\textsf{E}(\underline{\texttt{r}}|b_{1}, \ldots , b_{k})\) by its average

in which \(|G_{\alpha }| = \int _{G_{\alpha }}d \beta \). To determine this average, we still need to choose the function \(g_{\alpha }(\beta )\). As we generally do not know more about the biases \(b_{\alpha } \in \mathbb {R}^{q_{\alpha }}\) than that they can occur freely around the origin, it seems reasonable to choose the function \(g_{\alpha }(\beta )\) as a flat function, symmetric about the origin, and having sufficient domain to include all the practically sized biases. In the unweighted case, this would be the function \(g_{\alpha }(\beta ) = |G_{\alpha }|\) over the domain \(G_{\alpha }\).

In the second approach, we use the bias-estimates \(\hat{b}_{\alpha }\) to estimate the mean penalty as \(\hat{\textsf{E}}(\underline{\texttt{r}})=\textsf{E}(\underline{\texttt{r}}|\hat{b}_{1}, \ldots , \hat{b}_{k})\). This approach is generally simpler than constructing the average \(\bar{\textsf{E}}(\underline{\texttt{r}})\). Furthermore, as the following Lemma shows, it provides a strict upper bound on the mean penalty function.

Lemma 2

(Maximum mean penalty): Let the mean penalty be estimated as \(\hat{\textsf{E}}(\underline{\texttt{r}})=\textsf{E}(\underline{\texttt{r}}|\hat{b}_{1}, \ldots , \hat{b}_{k})\), where \(\hat{b}_{\alpha }=\arg \max \limits _{\beta \in \mathbb {R}^{q_{\alpha }}} f_{\underline{t}}(t|\beta , \mathcal {H}_{\alpha })\), \(\alpha =1, \ldots ,k\). Then

\(\blacksquare \)

Proof

The proof follows by noting that the bias-dependent functions \(f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\) occur in a decoupled form in the nonnegative linear combinations of \(\textsf{E}(\underline{\texttt{r}}| b_{1}, \ldots , b_{k})\). Hence, its joint bias-maximizer is provided by the bias-maximizers of the individual functions \(f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\). \(\square \)
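The mechanism behind Lemma 2 is that each bias-dependent density is maximized pointwise in t. A minimal check, assuming a hypothetical scalar model \(\underline{t} \overset{\mathcal {H}_{\alpha }}{\sim } \mathcal {N}(c\beta , 1)\) with \(c=2\) (so that \(\hat{b}_{\alpha }(t)=t/c\)), confirms that \(f_{\underline{t}}(t|\hat{b}_{\alpha }(t), \mathcal {H}_{\alpha })\) is never smaller than \(f_{\underline{t}}(t|\beta , \mathcal {H}_{\alpha })\) on a grid of t and \(\beta \) values. Since the mean penalty combines such densities with nonnegative weights, the same ordering carries over to \(\textsf{E}(\underline{\texttt{r}}|\hat{b}_{1}, \ldots , \hat{b}_{k})\) and \(\textsf{E}(\underline{\texttt{r}}|b_{1}, \ldots , b_{k})\).

```python
from statistics import NormalDist


def f_t(t, beta, c=2.0, sigma=1.0):
    """Hypothetical scalar model: t | beta, H_alpha ~ N(c * beta, sigma^2)."""
    return NormalDist(c * beta, sigma).pdf(t)


def b_hat(t, c=2.0):
    """Maximizer of f_t(t | beta) over beta: c * beta = t."""
    return t / c


ts = [i / 4.0 for i in range(-20, 21)]
betas = [j / 10.0 for j in range(-50, 51)]
bound_holds = all(f_t(t, b_hat(t)) >= f_t(t, beta) for t in ts for beta in betas)
print(bound_holds)
```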

The relevance of this result is that, by replacing the unknown bias vectors in the mean penalty function with their estimates \(\hat{b}_{\alpha }\), we automatically obtain a strict upper bound on the mean penalty, i.e. for none of the possible values that the bias vectors may take will the mean penalty be larger than this upper bound. Hence, by working with \(\hat{\textsf{E}}(\underline{\texttt{r}})\) instead of \(\bar{\textsf{E}}(\underline{\texttt{r}})\), one is assured of a conservative approach. As this property may be considered attractive for safety-critical applications, we will in the following work with \(\hat{\textsf{E}}(\underline{\texttt{r}})\) whenever the biases are unknown. Using the above lemma, the partitioning that minimizes \(\hat{\textsf{E}}(\underline{\texttt{r}})\) is obtained as follows.

Theorem 2b

(Minimum mean penalty testing) The misclosure space partitioning \(\mathcal {P}_{i \in [0, \ldots , l]} \subset \mathbb {R}^{r}\) that minimizes the mean penalty upper bound

where \(\hat{b}_{\alpha }(t)=\arg \max \limits _{\beta \in \mathbb {R}^{q_{\alpha }}}f_{\underline{t}}(t|\beta , \mathcal {H}_{\alpha })\), is given by

where \(\hat{F}_{\alpha }(t)=f_{\underline{t}}(t|\hat{b}_{\alpha }(t),\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]\). \(\blacksquare \)

Note that none of the results obtained so far in this section require the misclosure vector to be normally distributed. The results in the following sections also generally do not require such an assumption. However, in all the examples that follow, we will assume the misclosure vector to be normally distributed as (7), so that the bias-maximizer of \(f_{\underline{t}}(t|b_{\alpha }, \mathcal {H}_{\alpha })\) is given as \(\hat{b}_{\alpha }=(C_{t_{\alpha }})^{+}t\), cf. (6). The results of (31) and (47) then specialize to the following.

Theorem 2c

(Minimum mean penalty testing) Let the PDF of the misclosure vector be given as

Then, the misclosure space partitionings of (31), for known bias \(b_{\alpha }\), and (47), for estimated bias \(\hat{b}_{\alpha }(t)\), specialize to

where

with \(T_{q_{\alpha }}(t)= ||P_{C_{t_{\alpha }}}t||_{Q_{tt}}^{2}=||\hat{b}_{\alpha }(t)||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}\), \(T_{q_{\alpha }=0}(t)=0\), and \(\pi _{\alpha }=\textsf{P}[\mathcal {H}_{\alpha }]\). \(\blacksquare \)

Proof

As \(||t-C_{t_{\alpha }}b_{\alpha }||_{Q_{tt}}^{2}=||P_{C_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}+||\hat{b}_{\alpha }(t)-b_{\alpha }||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}\) and \(||P_{C_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}=||t||_{Q_{tt}}^{2}-||\hat{b}_{\alpha }(t)||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}\), we have

and therefore

which upon substitution into (31) proves the result. \(\square \)
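The two norm identities used in the proof can be verified numerically. The sketch below assumes a hypothetical case with \(r=2\), \(q_{\alpha }=1\), a non-diagonal \(Q_{tt}\) and arbitrary t and \(b_{\alpha }\); it computes the BLUE \(\hat{b}_{\alpha }=(c^{T}Q_{tt}^{-1}c)^{-1}c^{T}Q_{tt}^{-1}t\) with variance \(Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}=(c^{T}Q_{tt}^{-1}c)^{-1}\) and checks both decompositions.

```python
def solve2(q, v):
    """Solve the 2x2 linear system q x = v."""
    det = q[0][0] * q[1][1] - q[0][1] * q[1][0]
    return [(q[1][1] * v[0] - q[0][1] * v[1]) / det,
            (q[0][0] * v[1] - q[1][0] * v[0]) / det]


def qnorm2(x, q):
    """Squared Q-norm ||x||_Q^2 = x^T Q^{-1} x."""
    y = solve2(q, x)
    return x[0] * y[0] + x[1] * y[1]


q_tt = [[1.0, 0.5], [0.5, 2.0]]   # hypothetical Q_tt
c = [1.0, 2.0]                    # hypothetical C_{t_alpha} (q_alpha = 1)
t = [0.7, -1.3]                   # hypothetical misclosure
b = 0.4                           # hypothetical known bias

qinv_c = solve2(q_tt, c)
q_bhat = 1.0 / (c[0] * qinv_c[0] + c[1] * qinv_c[1])      # (c^T Q^-1 c)^-1
b_hat = q_bhat * (qinv_c[0] * t[0] + qinv_c[1] * t[1])    # BLUE of the bias

lhs = qnorm2([t[0] - c[0] * b, t[1] - c[1] * b], q_tt)               # ||t - c b||^2
perp = qnorm2([t[0] - c[0] * b_hat, t[1] - c[1] * b_hat], q_tt)      # ||P_perp t||^2
rhs = perp + (b_hat - b) ** 2 / q_bhat
t_q = b_hat ** 2 / q_bhat                                            # T_q = ||b_hat||^2
print(lhs, rhs, perp, qnorm2(t, q_tt) - t_q)
```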

Note that if \(\texttt{r}_{i\alpha }^{(\text {31})}(t)= \texttt{r}_{i\alpha }^{(\text {47})}(t)\exp \{+\tfrac{1}{2}||\hat{b}_{\alpha }(t)-b_{\alpha }||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}\}\), with \(\texttt{r}_{i\alpha }^{(\text {31})}(t)\) and \(\texttt{r}_{i\alpha }^{(\text {47})}(t)\) being the penalty functions of (31) and (47), respectively, then the bias-known case transforms into the bias-estimated case. The switch from the bias-known to the bias-estimated case, cf. (50), can thus also be interpreted as the use of different penalty functions.

We now give two simple examples to show the workings of (49).

Example 6

(Detection-only): Let \(k=l=1\), with \(\mathcal {H}_{1}\) being the most relaxed alternative hypothesis, \(\textsf{E}(\underline{t}) \in \mathbb {R}^{r} {\setminus } \{0\}\). Then \(T_{q_{1}=r}=||t||_{Q_{tt}}^{2}\), from which it follows with (49), assuming \(\texttt{r}_{\alpha \alpha }(t) < \texttt{r}_{i \alpha }(t)\) for \(i \ne \alpha \), that

with \(\tau ^{2}=\ln \left[ \frac{\texttt{r}_{10}(t)-\texttt{r}_{00}(t)}{\texttt{r}_{01}(t)-\texttt{r}_{11}(t)}\frac{\pi _{0}}{\pi _{1}}\right] ^{2} \). This shows how the overall-model test statistic \(T_{q_{1}=r}=||t||_{Q_{tt}}^{2}\) is used in the acceptance or rejection of \(\mathcal {H}_{0}\). \(\square \)
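With constant penalties, Example 6 is an overall-model test with a penalty- and prior-driven critical value. The sketch below computes \(\tau ^{2}=\ln [\cdot ]^{2}=2\ln [\cdot ]\) and applies the test, assuming for simplicity a whitened misclosure (\(Q_{tt}=I\)); the function names and numbers are illustrative.

```python
import math


def tau_squared(r00, r01, r10, r11, pi0):
    """tau^2 = ln[(r10 - r00)/(r01 - r11) * pi0/pi1]^2 of Example 6."""
    ratio = (r10 - r00) / (r01 - r11) * pi0 / (1.0 - pi0)
    return 2.0 * math.log(ratio)   # ln(ratio^2); negative if ratio < 1 (P0 empty)


def accept_h0(t_whitened, tau2):
    """Accept H0 iff ||t||^2_{Q_tt} < tau^2 (t assumed whitened, Q_tt = I)."""
    return sum(x * x for x in t_whitened) < tau2


tau2 = tau_squared(r00=0.0, r01=1.0, r10=1.0, r11=0.0, pi0=0.99)
print(round(tau2, 3), accept_h0([1.0, 1.0], tau2), accept_h0([3.0, 2.0], tau2))
```

With 0/1 penalties and \(\pi _{0}=0.99\) the critical value is \(2\ln 99 \approx 9.19\); a larger \(\pi _{0}\) or a larger false-alarm penalty \(\texttt{r}_{10}\) raises it.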

Example 7

(Undecided included): Let \(k=1\), \(l=2\) and assume that \(\mathcal {P}_{0}\) is a-priori given. Thus we have two hypotheses and three decisions. As alternative hypothesis, we take \(\mathcal {H}_{1}: \textsf{E}(\underline{t})=C_{1}b_{1} \ne 0\). Then \(T_{q_{1}}=||P_{C_{1}}t||_{Q_{tt}}^{2}=||\hat{b}(t)||_{Q_{\hat{b}\hat{b}}}^{2}\), from which it follows with (49), and \(\texttt{r}_{11}(t) < \texttt{r}_{21}(t)\), that

with \(\tau ^{2}=\ln \left[ \frac{\texttt{r}_{10}(t)-\texttt{r}_{20}(t)}{\texttt{r}_{21}(t)-\texttt{r}_{11}(t)}\frac{\pi _{0}}{\pi _{1}}\right] ^{2}\). The misclosure space partitioning is shown in Fig. 3 for \(q_{1}=1\) and \(r=2\). Note how the size of the undecided region \(\mathcal {P}_{2}\) is driven by \(\texttt{r}_{20}\) and \(\texttt{r}_{21}\). If both get larger then \(\mathcal {P}_{1}\) gets larger and \(\mathcal {P}_{2}\) smaller. \(\square \)

Fig. 3 Misclosure space partitioning in \(\mathbb {R}^{r=2}\): \(\mathcal {P}_{0}\) for detection, \(\mathcal {P}_{1}\) for identifying \(\mathcal {H}_{1}\), and \(\mathcal {P}_{2}\) for unavailability decision of Example 7
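The three-way decision of Example 7 can be sketched as follows, assuming a whitened misclosure (\(Q_{tt}=I\)), \(r=2\), \(q_{1}=1\), and a hypothetical a-priori detection region \(\mathcal {P}_{0}=\{||t||^{2} \le k\}\) with \(k=5.99\). When \(\texttt{r}_{10} \le \texttt{r}_{20}\) the logarithm in \(\tau ^{2}\) is undefined; in that case the defining inequality assigns every t outside \(\mathcal {P}_{0}\) to \(\mathcal {P}_{1}\), which the sketch handles explicitly.

```python
import math


def classify_example7(t, c, k_detect, r10, r20, r11, r21, pi0):
    """Decision 0, 1 or 2 (undecided) for a whitened misclosure t (Q_tt = I), q1 = 1."""
    if sum(x * x for x in t) <= k_detect:                  # P0 assumed given a priori
        return 0
    ratio = (r10 - r20) / (r21 - r11) * pi0 / (1.0 - pi0)  # requires r21 > r11
    # tau^2 = ln[ratio]^2; if ratio <= 0 every t outside P0 goes to P1
    tau2 = 2.0 * math.log(ratio) if ratio > 0 else -math.inf
    ct = sum(ci * ti for ci, ti in zip(c, t))
    t_q1 = ct * ct / sum(ci * ci for ci in c)             # ||P_c t||^2 = ||b_hat||^2
    return 1 if t_q1 > tau2 else 2


settings = dict(c=[1.0, 0.0], k_detect=5.99, r10=1.0, r20=0.5, r11=0.0, r21=1.0, pi0=0.9)
for t in ([1.0, 1.0], [3.0, 0.5], [0.5, 3.0]):
    print(t, classify_example7(t, **settings))
```

Raising \(\texttt{r}_{20}\) lowers \(\tau ^{2}\), so a point that was undecided may move into \(\mathcal {P}_{1}\), in line with the remark at the end of Example 7.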

3.3 Maximizing the probability of correct decisions

The minimum mean penalty partitioning of misclosure space simplifies further if additional structure is imposed on the set of penalty functions. This is the case, for instance, when \(l=k\), all correct decisions are given the same penalty and the penalties for incorrect decisions are symmetric.

Corollary 2a

(Symmetric penalties) For symmetric penalties and identical correct-decision penalties, \(\texttt{r}_{i\alpha }(t)=\texttt{r}_{\alpha i}(t)\) and \(\texttt{r}_{ii}(t)=r(t)\), \(i, \alpha \in [0, \ldots , l=k]\), the minimum mean penalty misclosure partitionings of (31) and (49) simplify, respectively, to

and

with

where \(\mu _{ij\alpha }(t)=\tfrac{\texttt{r}_{i\alpha }(t)-\texttt{r}_{j\alpha }(t)}{\texttt{r}_{ij}(t)-r(t)}\), \(\texttt{r}_{ij}(t)>r(t)\) for \(i \ne j\). \(\blacksquare \)

This result shows how the g- and h-functions drive the differences between the individual partitioning subsets. For instance, if \(g_{ij}(t)>0\) and \(h_{ij}(t)>0\), then \(\mathcal {P}_{i}\) can be expected to be smaller than \(\mathcal {P}_{j}\) when \(\mathcal {H}_{i}\) and \(\mathcal {H}_{j}\) have equal probability of occurrence. This happens when the penalties of decision i are larger than those of decision j, \(\texttt{r}_{i\alpha }(t)>\texttt{r}_{j\alpha }(t)\).

A further simplification is reached when all penalties for incorrect decisions are taken to be equal, since then the g- and h-functions of (55) and (56) vanish, \(g_{ij}(t)=h_{ij}(t)\equiv 0\). As an example, consider the case \(\texttt{r}_{\alpha \alpha }(t)=r_{\alpha }\) and \(\texttt{r}_{i\alpha }(t)=1\) for \(i \ne \alpha \). Then the penalty functions become

with \(\delta _{i\alpha }=1\) for \(i=\alpha \) and \(\delta _{i\alpha }=0\) otherwise, from which the mean penalty follows as

with reward \(\rho _{\alpha } = 1-r_{\alpha }\). Minimizing the mean penalty is now the same as maximizing a reward-weighted probability sum of correct decisions. This simplification also translates into the solution of the testing partitioning.
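For the penalties just described, \(\texttt{r}_{i\alpha }=r_{\alpha }\delta _{i\alpha }+(1-\delta _{i\alpha })\), the reduction of the mean penalty to a reward-weighted sum of correct-decision probabilities can be sketched in two lines, using \(\sum _{i}\textsf{P}[\underline{t}\in \mathcal {P}_{i}|\mathcal {H}_{\alpha }]=1\) and \(\sum _{\alpha }\pi _{\alpha }=1\):

```latex
\begin{aligned}
\textsf{E}(\underline{\texttt{r}}|\mathcal{H}_{\alpha})
  &= \sum_{i=0}^{k} \texttt{r}_{i\alpha}\,\textsf{P}[\underline{t}\in\mathcal{P}_{i}|\mathcal{H}_{\alpha}]
   = r_{\alpha}\,\textsf{P}[\underline{t}\in\mathcal{P}_{\alpha}|\mathcal{H}_{\alpha}]
     + \bigl(1-\textsf{P}[\underline{t}\in\mathcal{P}_{\alpha}|\mathcal{H}_{\alpha}]\bigr)
   = 1-\rho_{\alpha}\,\textsf{P}[\underline{t}\in\mathcal{P}_{\alpha}|\mathcal{H}_{\alpha}],\\
\textsf{E}(\underline{\texttt{r}})
  &= \sum_{\alpha=0}^{k}\pi_{\alpha}\,\textsf{E}(\underline{\texttt{r}}|\mathcal{H}_{\alpha})
   = 1-\sum_{\alpha=0}^{k}\rho_{\alpha}\pi_{\alpha}\,\textsf{P}[\underline{t}\in\mathcal{P}_{\alpha}|\mathcal{H}_{\alpha}].
\end{aligned}
```

Minimizing the left-hand side is therefore equivalent to maximizing the sum on the right.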

Corollary 2b

(Maximum correct decision probability) Let \(l=k\) and the penalty functions be given as (58). Then (31) and (49) simplify respectively to

and

with \(\rho _{\alpha }=1-r_{\alpha }\). \(\blacksquare \)

Note that, since \(F_{\alpha }(t)=\pi _{\alpha }f_{\underline{t}}(t|\mathcal {H}_{\alpha })\), credence is given to the hypotheses through the products \(\rho _{\alpha }\pi _{\alpha }\), \(\alpha =0, \ldots ,k\): the larger \(\rho _{\alpha }\pi _{\alpha }\), the more credence is given to \(\mathcal {H}_{\alpha }\). Although the reward \(\rho _{\alpha }=1-r_{\alpha }\) and the hypothesis occurrence probability \(\pi _{\alpha }\) enter as a product, and can therefore create the same effect on \(\mathcal {P}_{\alpha }\), it is important to realize that they have a different origin: the reward \(\rho _{\alpha }\) is user-driven, while the probability \(\pi _{\alpha }\) is model-driven. Furthermore, the \(\pi _{\alpha }\)'s have to sum to 1, while this is not required of the \(\rho _{\alpha }\)'s.

To provide a clearer description of the detection and identification steps in the above testing procedure, we may separate the conditions for \(\mathcal {P}_{0}\) and \(\mathcal {P}_{i \in [1, \ldots ,k]}\). For (61) this gives,

This shows that, given the misclosure vector t, the detection step consists of computing the maximum of \(T_{\alpha }(t)+\ln \rho _{\alpha }^{2}\) over all k alternative hypotheses and checking whether it is smaller than the constant \(\ln [\rho _{0}\pi _{0}]^{2}\). If it is, the null-hypothesis is accepted. If not, the maximizing \(\alpha \) determines which of the alternative hypotheses is identified as the reason for the rejection of the null-hypothesis.
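The detection/identification split can be sketched as below. Since the definition of \(T_{\alpha }(t)\) is not reproduced here, the sketch assumes \(T_{\alpha }(t)=T_{q_{\alpha }}(t)+\ln \pi _{\alpha }^{2}\) (terms common to all \(\alpha \) dropped), which is consistent with the constant \(\ln [\rho _{0}\pi _{0}]^{2}\) above because \(T_{q_{0}}=0\). The function name and numbers are illustrative, and all rewards are assumed positive.

```python
import math


def detect_identify(t_q, pis, rhos):
    """Detection/identification split of (61), as a sketch.

    t_q[a]  : T_{q_a}(t) for a = 1..k (t_q[0] is unused and may be 0),
    pis[a]  : occurrence probabilities pi_a, a = 0..k,
    rhos[a] : rewards rho_a = 1 - r_a > 0, a = 0..k.
    Assumes T_a(t) = T_{q_a}(t) + ln pi_a^2 (terms common to all a dropped).
    Returns 0 if H0 is accepted, otherwise the index of the identified H_a.
    """
    k = len(pis) - 1
    scores = {a: t_q[a] + math.log(pis[a] ** 2) + math.log(rhos[a] ** 2)
              for a in range(1, k + 1)}
    best = max(scores, key=scores.get)
    if scores[best] < math.log((rhos[0] * pis[0]) ** 2):   # detection step
        return 0
    return best                                              # identification step


pis = [0.9] + [0.1 / 3] * 3
print(detect_identify([0.0, 1.0, 20.0, 3.0], pis, [1.0] * 4))
print(detect_identify([0.0, 1.0, 2.0, 6.5], pis, [1.0] * 4))
print(detect_identify([0.0, 1.0, 2.0, 6.5], pis, [0.1, 1.0, 1.0, 1.0]))
```

The last two calls differ only in \(\rho _{0}\): lowering the reward for accepting \(\mathcal {H}_{0}\) shrinks the acceptance region, so the same misclosure now leads to identification of \(\mathcal {H}_{3}\).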

Fig. 4 Misclosure space partitioning in \(\mathbb {R}^{r=2}\) for single-outlier data snooping (cf. Example 9)

In the following examples, we illustrate how the above determined testing partitioning compares or specializes to some of the testing procedures used in practice.

Example 8

(Bias known vs. bias unknown) In this example, we illustrate the role the bias plays in the transition from (60) to (61). From the decomposition

it follows, with \(F_{\alpha }(t)=\pi _{\alpha }f_{\underline{t}}(t|\mathcal {H}_{\alpha }) \propto \exp \{-\tfrac{1}{2}||t-C_{t_{\alpha }}b_{\alpha }||_{Q_{tt}}^{2}\}\), that the objective function \(\rho _{\alpha }F_{\alpha }(t)\) of (60) is driven by two different measures of inconsistency: the inconsistency of t with the range space of \(C_{t_{\alpha }}\), as measured by \(||P_{C_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}\), and the difference between the estimated and the known bias, as measured by \(||\hat{b}_{\alpha }(t)-b_{\alpha }||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}\). This second discrepancy measure disappears in the case of (61), as the unknown bias is then replaced by its estimate, thus giving

and therefore (61). Note that, as an alternative to the conservative approach (cf. Lemma 2), one may also consider the approximation \(||t-C_{t_{\alpha }}b_{\alpha }||_{Q_{tt}}^{2} \approx ||P_{C_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}+q_{\alpha }\), since \(\textsf{E}(||\hat{\underline{b}}_{\alpha }-b_{\alpha }||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}|\mathcal {H}_{\alpha })=q_{\alpha }\). \(\square \)
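The expectation \(\textsf{E}(||\hat{\underline{b}}_{\alpha }-b_{\alpha }||_{Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}}^{2}|\mathcal {H}_{\alpha })=q_{\alpha }\) behind this approximation follows because the squared norm is \(\chi ^{2}\)-distributed with \(q_{\alpha }\) degrees of freedom. A quick Monte Carlo illustration, assuming a hypothetical \(q_{\alpha }=2\) and a made-up \(Q_{\hat{b}_{\alpha }\hat{b}_{\alpha }}\):

```python
import math
import random

rng = random.Random(7)
q_bb = [[2.0, 0.6], [0.6, 1.0]]         # hypothetical Q_{bhat bhat}, q_alpha = 2
l11 = math.sqrt(q_bb[0][0])              # Cholesky factor of q_bb
l21 = q_bb[1][0] / l11
l22 = math.sqrt(q_bb[1][1] - l21 * l21)
det = q_bb[0][0] * q_bb[1][1] - q_bb[0][1] * q_bb[1][0]

n, total = 100_000, 0.0
for _ in range(n):
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    d = (l11 * z1, l21 * z1 + l22 * z2)  # d = bhat - b ~ N(0, Q_bb) under H_alpha
    # squared norm d^T Q_bb^{-1} d via the explicit 2x2 inverse
    total += (q_bb[1][1] * d[0] ** 2 - 2 * q_bb[0][1] * d[0] * d[1]
              + q_bb[0][0] * d[1] ** 2) / det
mean_sq_norm = total / n
print(round(mean_sq_norm, 3))            # close to q_alpha = 2
```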

Example 9

(Datasnooping with \(\mathcal {P}_{0}\) known) In this example, we consider the detection subset \(\mathcal {P}_{0}\) to be given and equal to the acceptance region of the overall model test,

Furthermore, we consider the case that the C-matrices of all alternative hypotheses are one-dimensional, i.e. \(q_{\alpha }=1\), \(C_{\alpha }=c_{\alpha }\) for \(\alpha =1, \ldots , k\). This is the case, for instance, when only single blunders in the \(k=m\) observations are considered. As there are no differences in the complexities of the k alternative hypotheses and no reason for assuming certain alternative hypotheses to be more likely than others, the choice \(\pi _{\alpha }= \textrm{constant}\), \(\alpha =1, \ldots , k\), seems a reasonable one. Additionally, we assume that no penalties are assigned to correct decisions, i.e. \(r_{\alpha }=0\), \(\alpha =1, \ldots ,k\). Then, \(T_{\alpha }=T_{q_{\alpha }}+\textrm{constant}\), which, together with \(T_{q_{\alpha }}=w_{\alpha }^{2}\), gives for (61),

This is the partitioning corresponding to Baarda’s standard datasnooping procedure (Baarda 1968b) in case \(k=m\) and the \(c_{\alpha }\) are equal to the canonical unit vectors.
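A minimal sketch of this datasnooping partitioning for \(r=2\): the overall model test decides on \(\mathcal {P}_{0}\); otherwise the hypothesis with the largest \(|w_{\alpha }|\) is identified, using Baarda's w-statistic \(w_{\alpha }=c_{t_{\alpha }}^{T}Q_{tt}^{-1}t/\sqrt{c_{t_{\alpha }}^{T}Q_{tt}^{-1}c_{t_{\alpha }}}\), for which \(w_{\alpha }^{2}=||P_{c_{t_{\alpha }}}t||_{Q_{tt}}^{2}\). The matrices, fault directions and the critical value \(k_{\alpha }=5.99\) (approximately the 95% quantile of \(\chi ^{2}(2)\)) are illustrative assumptions, not the settings behind Fig. 4.

```python
import math


def solve2(q, v):
    """Solve the 2x2 linear system q x = v."""
    det = q[0][0] * q[1][1] - q[0][1] * q[1][0]
    return [(q[1][1] * v[0] - q[0][1] * v[1]) / det,
            (q[0][0] * v[1] - q[1][0] * v[0]) / det]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def datasnooping(t, q_tt, c_ts, k_alpha):
    """Return 0 if the overall model test accepts, else the 1-based index of max |w_a|."""
    q_inv_t = solve2(q_tt, t)
    if dot(t, q_inv_t) <= k_alpha:                 # P0: ||t||^2_{Q_tt} <= k_alpha
        return 0
    # Baarda w-statistics w_a = c^T Q^{-1} t / sqrt(c^T Q^{-1} c)
    w = [dot(c, q_inv_t) / math.sqrt(dot(c, solve2(q_tt, c))) for c in c_ts]
    return 1 + max(range(len(w)), key=lambda a: abs(w[a]))


q_tt = [[1.0, 0.0], [0.0, 1.0]]                  # hypothetical Q_tt
c_ts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]      # hypothetical c_{t_alpha}, k = 3
for t in ([0.5, 0.5], [3.0, 0.2], [2.0, 2.0]):
    print(t, datasnooping(t, q_tt, c_ts, k_alpha=5.99))
```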

The above partitioning, cf. (65) and (66), is shown in Fig. 4 for the same B-matrix and same \(Q_{tt}\)-matrix as used in Example 1. In this case, however, \(k=4\) with \(c_{1}=[1,0,0]^{T}\), \(c_{2}=[0,1,0]^{T}\), \(c_{3}=[0,0,1]^{T}\) and \(c_{4}=[1,2,3]^{T}\). Furthermore, so as to view misclosure space in the standard metric, the misclosure vector was transformed with

such that the transformed misclosure vector \(\bar{\underline{t}}=R\underline{t}\) has the identity variance matrix, \(Q_{\bar{t}\bar{t}}=I_{2}\). The detection region \(\mathcal {P}_{0}\) therefore appears as a circle instead of an ellipse, cf. Fig. 2. The \(c_{\bar{t}}\)-vectors are then given as \(c_{\bar{t}_{i}}=RB^{T}c_{i}\), \(i=1,2,3,4\). \(\square \)
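Any R with \(RQ_{tt}R^{T}=I\) serves this purpose; such choices differ only by an orthogonal transformation. The sketch below assumes one common choice, the inverse of the lower Cholesky factor of \(Q_{tt}\), and applies it to a hypothetical \(Q_{tt}\) and a hypothetical fault direction \(c_{t_{i}}\) (the B-matrix of Example 1 is not used here).

```python
import math


def whitening_matrix(q):
    """R = L^{-1} with L the lower Cholesky factor of a 2x2 SPD q, so that R q R^T = I."""
    l11 = math.sqrt(q[0][0])
    l21 = q[1][0] / l11
    l22 = math.sqrt(q[1][1] - l21 * l21)
    # inverse of the lower-triangular matrix [[l11, 0], [l21, l22]]
    return [[1.0 / l11, 0.0], [-l21 / (l11 * l22), 1.0 / l22]]


def matmul(a, b):
    return [[sum(a[i][m] * b[m][j] for m in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


q_tt = [[1.0, 0.5], [0.5, 2.0]]           # hypothetical Q_tt
R = whitening_matrix(q_tt)
q_whitened = matmul(matmul(R, q_tt), transpose(R))
c_t = [[1.0], [2.0]]                      # hypothetical c_{t_i} as a column vector
c_bar = matmul(R, c_t)                    # c_{tbar_i} = R c_{t_i}
print(q_whitened, c_bar)
```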

Example 10

(Datasnooping with \(\mathcal {P}_{0}\) unknown) In this example, the same settings are used as in the previous example, except that now the detection subset \(\mathcal {P}_{0}\) is assumed unknown. Using \(\pi _{\alpha }=\tfrac{1}{k}(1-\pi _{0})\), \(\alpha =1, \ldots ,k\), and \(||P_{c_{t_{\alpha }}}t||_{Q_{tt}}^{2}=w_{\alpha }^{2}\), \(\mathcal {P}_{0}\) follows from the first expression of (61) as

with \(a=\ln \left( \tfrac{k \pi _{0}}{1-\pi _{0}}\right) ^{2}\). The k corresponding subsets \(\mathcal {P}_{i}\) are given by (66).

Expression (68) shows that \(\mathcal {P}_{0}\) is the intersection of k slabs, each bounded by a pair of parallel \((r-1)\)-dimensional hyperplanes having the direction vectors \(c_{t_{\alpha }}\), \(\alpha = 1, \ldots , k\), as their normals (in the metric of \(Q_{tt}\)). The distance of the origin to these hyperplanes is governed by the constant \(a=\ln \left( \frac{k \pi _{0}}{1-\pi _{0}}\right) ^{2}\), which is the same for all \(\alpha = 1, \ldots , k\). This distance, and thereby the volume of \(\mathcal {P}_{0}\), gets larger when k and/or \(\pi _{0}\) increases. Hence, the acceptance region \(\mathcal {P}_{0}\) increases in size when the probability of \(\mathcal {H}_{0}\)-occurrence increases and/or the number of alternative hypotheses increases.
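Reading (68) as the condition \(w_{\alpha }^{2}<a\) for all \(\alpha =1, \ldots , k\), which is what the first expression of (61) gives with \(r_{\alpha }=0\) and equal \(\pi _{\alpha }\), the growth of \(\mathcal {P}_{0}\) with k and \(\pi _{0}\) can be tabulated directly. The sketch below is illustrative; \(\sqrt{a}\) is the half-width of each slab in the metric of \(Q_{tt}\), and \(a>0\) requires \(k\pi _{0}>1-\pi _{0}\).

```python
import math


def detection_constant(k, pi0):
    """a = ln(k pi0 / (1 - pi0))^2 of Example 10."""
    return 2.0 * math.log(k * pi0 / (1.0 - pi0))


def in_p0(w, a):
    """t in P0 iff every squared w-statistic is below a (intersection of k slabs)."""
    return all(wa * wa < a for wa in w)


for k in (2, 4, 8):
    a = detection_constant(k, 0.9)
    print(k, round(a, 3), round(math.sqrt(a), 3))   # sqrt(a): slab half-width
```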

The above partitioning is shown in Fig. 5 for the same model and hypotheses as used in Example 9. Compare this geometry with that of Fig. 4. \(\square \)

Fig. 5 Misclosure space partitioning in \(\mathbb {R}^{r=2}\) for single-outlier data snooping, showing the detection region \(\mathcal {P}_{0}\) as a polygon (cf. Example 10)

3.4 Including an undecided region \(\mathcal {P}_{k+1}\)

We now extend Corollary 2b so as to also include an undecided region.

Corollary 2c

(Maximum correct decision probability) Let \(l=k+1\), the penalty functions be given as (58) and the undecided penalties as \(\texttt{r}_{k+1, \alpha }(t)=u_{\alpha }\), \(\alpha =0, \ldots ,k\). Then (31) and (49) simplify, respectively, to

and

where \(\rho _{\alpha }=1-r_{\alpha }\), \(\mu _{\alpha }=1-u_{\alpha }\), \(G(t)=\sum _{\alpha =0}^{k} \mu _{\alpha }F_{\alpha }(t)\) and \(H(t)= \ln [\sum _{\alpha =0}^{k} \mu _{\alpha }\exp \{+\tfrac{1}{2}T_{\alpha }(t)\}]^{2}\). \(\blacksquare \)

Note that, when the detection region \(\mathcal {P}_{0}\) is given a priori, (69) changes to

with a similar change to (70). Also note, when comparing Corollary 2c with Corollary 2b, that the defining conditions for \(\mathcal {P}_{i \in [0, \ldots ,k]}\) look the same, cf. (60) vs (69), but are actually not the same, since in the case of Corollary 2c they apply to the restricted space \(\mathbb {R}^{r}{\setminus }{\mathcal {P}_{k+1}}\), i.e. misclosure space with the undecided region excluded. The characteristics of the undecided region \(\mathcal {P}_{k+1} \subset \mathbb {R}^{r}\) are driven by the undecided penalties \(u_{\alpha }\) that one assigns to the hypotheses \(\mathcal {H}_{\alpha }\), \(\alpha =0, \ldots ,k\). One can expect \(\mathcal {P}_{k+1}\) to be empty if the maximum undecided penalty is assigned under all hypotheses. And indeed, if \(u_{\alpha }=1\) for \(\alpha =0, \ldots ,k\), then the inequality in (69) is never satisfied, implying \(\mathcal {P}_{k+1} = \emptyset \). Similarly, if no penalty at all is put on the undecided decision, \(u_{\alpha }=0\) for \(\alpha =0, \ldots ,k\), then the inequality of (69) is trivially fulfilled, implying that \(\mathcal {P}_{k+1}=\mathbb {R}^{r}\). Hence, in this case no decision other than the undecided decision will be made.

If all the undecided penalties are equal, \(u_{\alpha }=u\), and all the rewards equal one, \(\rho _{\alpha }=1\), \(\alpha =0, \ldots ,k\), then dividing both sides of (69)'s inequality by \(f_{\underline{t}}(t)= \sum _{\alpha =0}^{k} \pi _{\alpha }f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) gives for the undecided region the inequality

As the probability \(\textsf{P}[\mathcal {H}_{\alpha }|t]\) for \(t \in \mathcal {P}_{\alpha }\) tends to decrease towards the boundaries of \(\mathcal {P}_{\alpha }\), one can expect the undecided region to be located at the boundaries of these regions and therefore indeed provide an undecided decision if identifiability between two hypotheses becomes problematic.
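Assuming that the undecided condition of (69) reads \(\max _{\alpha }\rho _{\alpha }F_{\alpha }(t) < G(t)\), the case \(\rho _{\alpha }=1\), \(\mu _{\alpha }=\mu =1-u\) reduces to \(\max _{\alpha }\textsf{P}[\mathcal {H}_{\alpha }|t] < \mu \). The sketch below applies this to a hypothetical scalar model with two equally likely hypotheses and shows the undecided region forming around the boundary between them; names and numbers are illustrative.

```python
from statistics import NormalDist


def decide_with_undecided(t, means, priors, mu):
    """Maximum-posterior decision, or 'undecided' (index k+1) when max_a P[H_a|t] < mu.

    Hypothetical scalar model t | H_a ~ N(means[a], 1); rewards rho_a = 1 and
    equal undecided rewards mu = 1 - u.
    """
    joint = [p * NormalDist(m, 1.0).pdf(t) for m, p in zip(means, priors)]
    post = [j / sum(joint) for j in joint]
    best = max(range(len(post)), key=post.__getitem__)
    return best if post[best] >= mu else len(post)


for t in (-1.0, 1.0, 1.5, 2.0, 4.0):
    print(t, decide_with_undecided(t, [0.0, 3.0], [0.5, 0.5], mu=0.9))
```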

Example 11

(Two hypotheses and three decisions) As an application of Corollary 2c, let \(k=1\), \(l=2\), and assume \(\mu _{\alpha }=\mu \) for \(\alpha =0,1\). The two hypotheses considered are \(\underline{t}\overset{\mathcal {H}_{0}}{\sim } \mathcal {N}_{r}(0, Q_{tt})\) and \(\underline{t}\overset{\mathcal {H}_{1}}{\sim } \mathcal {N}_{r}(C_{t_{1}}b_{1}, Q_{tt})\). For the three decisions, we need to determine the partitioning \(\mathbb {R}^{r}=\mathcal {P}_{0} \cup \mathcal {P}_{1} \cup \mathcal {P}_{2}\). For \(\mathcal {P}_{0}\) and \(\mathcal {P}_{1}\), we obtain from (70),

which can be rewritten as

We now determine the undecided region \(\mathcal {P}_{2}\). According to (70), \(\mathcal {P}_{2}\) is defined by the two inequalities

which can be rewritten as

with the bounds given as

It follows from (74) that \(\mathcal {P}_{2}\) is empty if \(\textrm{LB}>\textrm{UB}\), in which case \(\mathcal {P}_{0}\) and \(\mathcal {P}_{1}\) follow from (73) as

Since \(\textrm{LB} > \textrm{UB}\) if \(\mu < (\tfrac{1}{\rho _{0}}+\tfrac{1}{\rho _{1}})^{-1}\), it follows that \(\mathcal {P}_{k+1}\) is empty if \(\mu \) is small enough.
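The emptiness criterion can be checked with bounds derived directly from the two undecided inequalities \(\rho _{0}F_{0} < \mu (F_{0}+F_{1})\) and \(\rho _{1}F_{1} < \mu (F_{0}+F_{1})\) in the bias-estimated case, where \(F_{1}/F_{0}=(\pi _{1}/\pi _{0})\exp \{\tfrac{1}{2}T_{q_{1}}\}\). These bounds are our own reconstruction and may be written differently from (75); an infinite bound means the corresponding inequality always holds. The sketch confirms that \(\textrm{LB}<\textrm{UB}\) exactly when \(\mu > (\tfrac{1}{\rho _{0}}+\tfrac{1}{\rho _{1}})^{-1}\), and that the \(\mathcal {P}_{0}/\mathcal {P}_{1}\) boundary then lies between the bounds.

```python
import math


def bounds_example11(rho0, rho1, mu, pi0):
    """(LB, UB) such that t is undecided iff LB < T_q1(t) < UB (bias-estimated case)."""
    if mu <= 0:
        raise ValueError("sketch assumes mu > 0")
    pi1 = 1.0 - pi0
    # rho0 F0 < mu (F0 + F1)  <=>  exp(T/2) > (rho0 - mu) pi0 / (mu pi1)
    lb = 2.0 * math.log((rho0 - mu) * pi0 / (mu * pi1)) if rho0 > mu else -math.inf
    # rho1 F1 < mu (F0 + F1)  <=>  exp(T/2) < mu pi0 / ((rho1 - mu) pi1)
    ub = 2.0 * math.log(mu * pi0 / ((rho1 - mu) * pi1)) if rho1 > mu else math.inf
    return lb, ub


rho0, rho1, pi0 = 0.8, 1.0, 0.9
threshold = 1.0 / (1.0 / rho0 + 1.0 / rho1)
boundary = 2.0 * math.log(rho0 * pi0 / (rho1 * (1.0 - pi0)))  # P0/P1 boundary in T_q1
for mu in (0.3, 0.44, 0.45, 0.6, 0.9):
    lb, ub = bounds_example11(rho0, rho1, mu, pi0)
    print(mu, mu > threshold, lb < ub, round(lb, 3), round(ub, 3))
```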

The undecided region \(\mathcal {P}_{2}\) is nonempty if \(\textrm{LB}<\textrm{UB}\). As both inequalities of (74) then need to be satisfied for \(t \in \mathcal {P}_{2}\), its complement \(\mathbb {R}^{r}{\setminus }{\mathcal {P}_{2}}\) only requires that one of the following two inequalities be satisfied,

Since \(\textrm{LB}<\ln \left[ \tfrac{\rho _{0}\pi _{0}}{\rho _{1}\pi _{1}}\right] ^{2}\) and \(\textrm{UB}>\ln \left[ \tfrac{\rho _{0}\pi _{0}}{\rho _{1}\pi _{1}}\right] ^{2}\) if \(\textrm{LB}<\textrm{UB}\), it follows from (73) and (77) that in case \(\mathcal {P}_{2}\) is nonempty, the three subsets are given as

An illustration of this misclosure partitioning is given in Fig. 6. Compare this with the partitioning of Example 7 and Fig. 3. \(\square \)

Fig. 6 Misclosure space partitioning of Example 11: \(\mathcal {P}_{0}\) for detection, \(\mathcal {P}_{1}\) for identifying \(\mathcal {H}_{1}\), and \(\mathcal {P}_{2}\) for the unavailability decision

Example 12

(Datasnooping with \(\mathcal {P}_{0}\) given and undecided included) Consider the null- and alternative hypotheses \(\underline{y}\overset{\mathcal {H}_{0}}{\sim } \mathcal {N}_{m}(Ax, Q_{yy})\) and \(\underline{y}\overset{\mathcal {H}_{\alpha }}{\sim } \mathcal {N}_{m}(Ax+c_{\alpha }b_{\alpha }, Q_{yy})\), with \(\pi _{\alpha }=\tfrac{1-\pi _{0}}{k}\), \(\alpha = 1, \ldots ,k\), and assume the penalty functions (58) with \(r_{\alpha }=1-\rho \), together with the undecided penalties \(u_{\alpha }=1-\mu \). As the a-priori chosen detection region we take the acceptance region of the overall model test. Then the misclosure space partitioning follows from Corollary 2c, cf. (71), as

where

Compare this partitioning with that of Example 9. If the undecided region \(\mathcal {P}_{k+1}\) were empty, the above partitioning would reduce to that of Example 9, cf. (65) and (66). The undecided region is empty, \(\mathcal {P}_{k+1}=\emptyset \), if the undecided reward is zero, \(\mu =1-u=0\), since the \(\mathcal {P}_{k+1}\)-defining inequality can then never be satisfied. Also note that h(t) gets larger as the undecided reward \(\mu =1-u\) gets larger, which, as expected, also enlarges the undecided region \(\mathcal {P}_{k+1}\).

With the above partitioning, the testing would proceed as follows. First one would execute the detection-step by checking whether or not \(t \in \mathcal {P}_{0}\). If so, then \(\mathcal {H}_{0}\) would be accepted. If not, then one would proceed to check whether or not \(t \in \mathcal {P}_{k+1}\). This is done by computing the largest \(w_{\alpha }^{2}(t)\), \(\alpha =1, \ldots ,k\), say \(w_{i}^{2}(t)\), and checking whether it is less than h(t). If not, then \(\mathcal {H}_{i}\) is the identified hypothesis. If so, then \(t \in \mathcal {P}_{k+1}\) and the decision is made that no parameter solution can be provided.

Note that the ith alternative hypothesis is identified if \(w_{i}^{2}(t) \ge w_{\alpha }^{2}(t)\), \(\forall \alpha \), together with \(w_{i}^{2}(t) \ge h(t)\) and \(w_{i}^{2}(t)>\tau ^{2}-||P_{c_{t_{i}}}^{\perp }t||_{Q_{tt}}^{2}\). The last condition is equivalent to \(t \notin \mathcal {P}_{0}\), since \(||t||_{Q_{tt}}^{2}=w_{i}^{2}(t)+||P_{c_{t_{i}}}^{\perp }t||_{Q_{tt}}^{2}\). The latter two conditions ensure that identification only happens if the w-statistic that is largest in absolute value is also sufficiently large.
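The testing sequence described above can be sketched as follows. The sketch assumes that the acceptance region of the overall model test is \(||t||_{Q_{tt}}^{2} \le \tau ^{2}\), uses the standard w-statistic \(w_{\alpha }(t)=c_{t_{\alpha }}^{T}Q_{tt}^{-1}t/\sqrt{c_{t_{\alpha }}^{T}Q_{tt}^{-1}c_{t_{\alpha }}}\), and takes h(t) as a user-supplied function, since its expression (80) is not reproduced here. All names are hypothetical; \(Q_{tt}^{-1}\) is passed as a small dense matrix.

```python
import math

def quad(u, qinv, v):
    """Bilinear form u^T Qinv v for small dense matrices stored as lists of lists."""
    return sum(u[a] * qinv[a][b] * v[b] for a in range(len(u)) for b in range(len(v)))

def w_statistic(t, c, qinv):
    """w-statistic of misclosure t along the fault direction c = c_{t_alpha}."""
    return quad(c, qinv, t) / math.sqrt(quad(c, qinv, c))

def snooping_with_undecided(t, fault_dirs, qinv, tau2, h):
    """Return 0 (accept H_0), i in 1..k (identify H_i) or k+1 (undecided).

    h is a callable t -> h(t) supplied by the caller (hypothetical interface).
    """
    k = len(fault_dirs)
    # Detection step: is t inside the overall-model-test acceptance region?
    if quad(t, qinv, t) <= tau2:
        return 0
    # Largest squared w-statistic among the k single-outlier alternatives
    w2 = [w_statistic(t, c, qinv) ** 2 for c in fault_dirs]
    i = max(range(k), key=lambda a: w2[a])
    if w2[i] < h(t):
        return k + 1  # t in P_{k+1}: no parameter solution is provided
    return i + 1  # alternatives are numbered H_1..H_k
```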

The above partitioning is shown in Fig. 7 for the same model and hypotheses as used in Example 9. Compare this geometry with that of Fig. 5 and note how the undecided region \(\mathcal {P}_{5}\) separates the regions \(\mathcal {P}_{i \in [0, \ldots ,4]}\) when the biases are large enough to be detected, yet too small to be identified. \(\square \)

Fig. 7 Misclosure space partitioning in \(\mathbb {R}^{r=2}\) for single-outlier data snooping, with an undecided region \(\mathcal {P}_{5}\) included (cf. Example 12)

Example 13

(Datasnooping with \(\mathcal {P}_{0}\) given and alternative undecided region included) This example illustrates that the liberal definition of penalty functions in Sect. 3.1 allows one to interpret existing testing procedures in terms of assigned penalties. In Teunissen (2018), p. 66, the following partitioning was considered,

Its geometry is shown in Fig. 8 for the same model and hypotheses as used in Example 9. The idea behind this chosen undecided region \(\mathcal {P}_{k+1}\) is that the sample of \(\underline{t}\) should lie close enough to a fault line \(c_{t_{\alpha }}b_{\alpha }\) for that hypothesis to be identifiable.
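Whether a sample lies "close enough" to a fault line is measured by the squared distance \(||P_{c_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}\). The sketch below computes it by projecting t onto the line spanned by \(c_{t_{\alpha }}\) in the \(Q_{tt}^{-1}\) metric, and illustrates the identity \(||P_{c_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}=||t||_{Q_{tt}}^{2}-w_{\alpha }^{2}(t)\) for a scalar bias with the standard w-statistic. It does not implement the threshold of (81); all function names are hypothetical and \(Q_{tt}^{-1}\) is passed in directly.

```python
def quad(u, qinv, v):
    """Bilinear form u^T Qinv v for small dense matrices stored as lists of lists."""
    return sum(u[a] * qinv[a][b] * v[b] for a in range(len(u)) for b in range(len(v)))

def dist2_to_fault_line(t, c, qinv):
    """Squared Q_tt-norm of the residual of t after projecting onto span{c}."""
    beta = quad(c, qinv, t) / quad(c, qinv, c)  # least-squares estimate of the scalar bias
    resid = [ta - beta * ca for ta, ca in zip(t, c)]
    return quad(resid, qinv, resid)

def w_squared(t, c, qinv):
    """Squared w-statistic (c^T Qinv t)^2 / (c^T Qinv c)."""
    return quad(c, qinv, t) ** 2 / quad(c, qinv, c)

def closest_fault_line(t, fault_dirs, qinv):
    """Index and squared distance of the fault line nearest to t."""
    d2 = [dist2_to_fault_line(t, c, qinv) for c in fault_dirs]
    i = min(range(len(d2)), key=lambda a: d2[a])
    return i, d2[i]
```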

We can now use the results of Corollary 2c, cf. (70), to show the penalties that result in partitioning (81). If we assume \(\rho _{\alpha }=0\) and take the undecided rewards as

it follows with \(||P_{C_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}=||t||_{Q_{tt}}^{2}-T_{\alpha }(t)+\ln \pi _{\alpha }^{2}\), from (70) that

which indeed reduces to that of (81) if \(q_{\alpha }=1\), \(\pi _{\alpha }=(1-\pi _{0})/k\) and \(\bar{\tau }'^{2}=\tau ^{2}-\ln \tfrac{1-\pi _{0}}{k}\). Hence, to obtain the linearly structured undecided region of (81), its \(k+1\) reward functions need to be chosen as exponentially increasing functions of the squared-distances \(||P_{c_{t_{\alpha }}}^{\perp }t||_{Q_{tt}}^{2}\) to the respective fault lines. \(\square \)

Fig. 8 Misclosure space partitioning in \(\mathbb {R}^{r=2}\) for single-outlier data snooping, with an alternative undecided region \(\mathcal {P}_{5}\) included (cf. Example 13)

Example 14

(Datasnooping with undecided included) The same assumptions are made as in Example 12, with (80), except that now \(\mathcal {P}_{0}\) is not assumed to be a-priori given. Then, the misclosure space partitioning of \(\mathbb {R}^{r}\) follows from Corollary 2c as

Note that this partitioning reduces to that of Example 10 if the undecided region \(\mathcal {P}_{k+1}\) is empty. This happens when the undecided reward is equal to zero, \(\mu =1-u=0\).

With the above ordering of the partitioning, one would first check whether a parameter solution is available, i.e. whether or not \(t \in \mathcal {P}_{k+1}\). Only when \(t \notin \mathcal {P}_{k+1}\) would one then check the acceptability of the null hypothesis \(\mathcal {H}_{0}\). As in the majority of our applications both the occurrence probability of the null hypothesis, \(\textsf{P}[\mathcal {H}_{0}]=\pi _{0}\), and the probability of its correct acceptance, \(\textsf{P}[t \in \mathcal {P}_{0}|\mathcal {H}_{0}]\), will be high, it is more advantageous to seek an ordering of the partitioning that starts with \(\mathcal {P}_{0}\) rather than with \(\mathcal {P}_{k+1}\), so that most samples are settled by the first check. This can be achieved by noting that the complements of \(\mathcal {P}_{k+1}\) and \(\mathcal {P}_{0}\) are given as:

Combining this result with that of (84) allows us to write the partitioning in the following order:

With this ordering, we can now also compare the partitioning directly with that of Example 12, cf. (79), thus clearly showing how they differ in their definition of the detection region \(\mathcal {P}_{0}\).

The above partitioning is shown in Fig. 9 for the same model and hypotheses as used in Example 9. \(\square \)

Fig. 9 Misclosure space partitioning in \(\mathbb {R}^{r=2}\) for single-outlier data snooping, with an undecided region \(\mathcal {P}_{5}\) included (cf. Example 14)

4 Maximum probability estimators

In the previous section, we have shown how the choice of penalty functions leads to corresponding minimum mean penalty partitionings of misclosure space. One such choice leads to a partitioning maximizing the probability of correct decisions. Although this property is indeed attractive from the perspective of testing, it may not be sufficient from the viewpoint of estimation. After all, a maximized probability of correct hypothesis identification does not necessarily imply good performance of the DIA-estimator. The former is driven by the misclosure vector \(\underline{t}\) only, while the latter is also driven by the \(\hat{\underline{x}}_{i}\)’s. Thus, instead of focussing on correct decisions, one would be better off focussing on the consequences of the decisions made. In this section we will therefore introduce penalty functions that penalize unwanted outcomes of the DIA-estimator. As a result, two new estimators with corresponding misclosure space partitionings are identified and derived: the optimal DIA-estimator and the optimal WSS-estimator.

4.1 The optimal DIA-estimator

To determine an appropriate penalty function for the DIA-estimator \(\bar{\underline{x}}\), we should think of one that penalizes its unwanted outcomes when a decision i is made under hypothesis \(\mathcal {H}_{\alpha }\). Since decision i corresponds to an outcome of \(\underline{\hat{x}}_{i}\), and such an outcome is unwanted when it lies in the complement of the tolerance or safety region, \(\varOmega _{x}^{c}=\mathbb {R}^{n}{\setminus }{\varOmega _{x}}\), we would like the probability of such outcomes under \(\mathcal {H}_{\alpha }\) to be small. We therefore take this probability, for a given misclosure vector t, as our penalty function. Then, if the probability of such an unwanted outcome is large, the penalty will be large as well.

Definition (DIA-penalty function): The penalizing function of the DIA-estimator \(\bar{\underline{x}}_{\textrm{DIA}}=\sum _{i=0}^{k} \underline{\hat{x}}_{i}p_{i}(\underline{t})\) is defined as:
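The display of definition (87) is not reproduced here. Read back from Steps (a) and (b) of the proof of Theorem 3 and from the opening sentence of Sect. 4.2, it presumably takes the form below, with i the decision and \(\alpha \) the hypothesis; treat it as a reconstruction, not a quotation.

```latex
\bar{\texttt{r}}_{i\alpha}(t)=\textsf{P}\big[\hat{\underline{x}}_{i}\in\varOmega_{x}^{c}\;\big|\;t,\mathcal{H}_{\alpha}\big],
\qquad i,\alpha\in[0,\ldots,k],\qquad \varOmega_{x}^{c}=\mathbb{R}^{n}\setminus\varOmega_{x}.
```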

We will now show how this penalty function can be used to find the optimal DIA-estimator, i.e. the DIA-estimator that within its class has the largest probability of lying inside its safety region, \(\textsf{P}[ \bar{\underline{x}}_{\textrm{DIA}} \in \varOmega _{x}]\), or equivalently, has the smallest integrity risk, \(\textsf{P}[ \bar{\underline{x}}_\textrm{DIA} \in \varOmega _{x}^{c}]\). We have the following result.

Theorem 3

(Optimal DIA-estimator) Let \(\bar{\mathcal {P}}_{i \in [0, \ldots , k]} \subset \mathbb {R}^{r}\) denote the misclosure space partitioning that, among all such partitionings, maximizes the DIA-estimator’s probability \(\textsf{P}[\bar{\underline{x}}_{\textrm{DIA}} \in \varOmega _{x}]\). Then,

where

\(\square \)

Proof

We first prove that the mean penalty (30) becomes identical to the integrity risk if the penalty functions are chosen as (87),

We have

Step (a) follows from substituting \(\texttt{r}_{i\alpha }=\bar{\texttt{r}}_{i\alpha }\), cf. (87), and recognizing that \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]=\textsf{P}[\mathcal {H}_{\alpha }|t]f_{\underline{t}}(t)\). Step (b) follows from recognizing that \(\textsf{P}[ \underline{\hat{x}}_{i} \in \varOmega _{x}^{c}\;|\;t]=\sum _{\alpha =0}^{k} \textsf{P}[ \underline{\hat{x}}_{i} \in \varOmega _{x}^{c}\;|\;t, \mathcal {H}_{\alpha }]\textsf{P}[\mathcal {H}_{\alpha }|t]\). Step (c) introduces the indicator function \(p_{i}(t)\) of \(\mathcal {P}_{i}\). Step (d) uses the fact that, since \(\bar{\underline{x}}_{\textrm{DIA}}=\sum _{i=0}^{k} \hat{\underline{x}}_{i}p_{i}(\underline{t})\), the conditional probabilities satisfy \(\textsf{P}[ \underline{\hat{x}}_{i} \in \varOmega _{x}^{c}\;|\;t]= \textsf{P}[ \bar{\underline{x}}_{\textrm{DIA}} \in \varOmega _{x}^{c}\;|\;t]\) for \(t \in \mathcal {P}_{i}\). Step (e) follows from using \(\sum _{i=0}^{k} p_{i}(t)=1\) and step (f) from the continuous version of the total probability rule.
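For reference, the chain (90) can be reconstructed from the step descriptions (a) to (f). This sketch assumes that the mean penalty (30) has the form \(\sum _{i}\sum _{\alpha }\int _{\mathcal {P}_{i}}\texttt{r}_{i\alpha }(t)f_{\underline{t}}(t|\mathcal {H}_{\alpha })\pi _{\alpha }\,dt\) with \(\pi _{\alpha }=\textsf{P}[\mathcal {H}_{\alpha }]\); the exact typesetting of (90) may differ.

```latex
\begin{aligned}
\sum_{i=0}^{k}\sum_{\alpha=0}^{k}\int_{\mathcal{P}_i}\bar{\texttt{r}}_{i\alpha}(t)\,f_{\underline{t}}(t|\mathcal{H}_\alpha)\,\pi_\alpha\,dt
&\overset{(a)}{=}\sum_{i=0}^{k}\int_{\mathcal{P}_i}\sum_{\alpha=0}^{k}\textsf{P}[\hat{\underline{x}}_i\in\varOmega_x^c|t,\mathcal{H}_\alpha]\,\textsf{P}[\mathcal{H}_\alpha|t]\,f_{\underline{t}}(t)\,dt\\
&\overset{(b)}{=}\sum_{i=0}^{k}\int_{\mathcal{P}_i}\textsf{P}[\hat{\underline{x}}_i\in\varOmega_x^c|t]\,f_{\underline{t}}(t)\,dt\\
&\overset{(c)}{=}\int_{\mathbb{R}^r}\sum_{i=0}^{k}\textsf{P}[\hat{\underline{x}}_i\in\varOmega_x^c|t]\,p_i(t)\,f_{\underline{t}}(t)\,dt\\
&\overset{(d)}{=}\int_{\mathbb{R}^r}\sum_{i=0}^{k}\textsf{P}[\bar{\underline{x}}_{\textrm{DIA}}\in\varOmega_x^c|t]\,p_i(t)\,f_{\underline{t}}(t)\,dt\\
&\overset{(e)}{=}\int_{\mathbb{R}^r}\textsf{P}[\bar{\underline{x}}_{\textrm{DIA}}\in\varOmega_x^c|t]\,f_{\underline{t}}(t)\,dt
\overset{(f)}{=}\textsf{P}[\bar{\underline{x}}_{\textrm{DIA}}\in\varOmega_x^c].
\end{aligned}
```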

Having established (90), the result (88) follows from applying Theorem 2a, cf. (31). \(\square \)

In analogy with (33), the partitioning (89) can also be given an insightful probabilistic interpretation. As \(\textsf{P}[\underline{\hat{x}}_{i} \in \varOmega _{x}^{c}|t]f_{\underline{t}}(t)=\sum _{\alpha =0}^{k} \textsf{P}[\underline{\hat{x}}_{i} \in \varOmega _{x}^{c}|t, \mathcal {H}_{\alpha }]f_{\underline{t}}(t|\mathcal {H}_{\alpha })\textsf{P}[\mathcal {H}_{\alpha }]\), we have

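thus showing that each of the defining regions \(\bar{\mathcal {P}}_{i}\) of the optimal DIA-estimator consists of those misclosures t for which \(\hat{\underline{x}}_{i}\) has the smallest misclosure-conditioned integrity risk \(\textsf{P}[\underline{\hat{x}}_{i} \in \varOmega _{x}^{c}|t]\) among \(\hat{\underline{x}}_{0}, \ldots ,\hat{\underline{x}}_{k}\).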
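Stated as a formula, our reading of this characterization is given below (ties may be assigned to any minimizing index). The posterior weights make explicit how the misclosure-conditioned risk combines the penalties (87) with the priors and likelihoods. This restates the sentence above and is not necessarily the exact form of (91).

```latex
\bar{\mathcal{P}}_{i}=\Big\{t\in\mathbb{R}^{r}\;\Big|\;
\textsf{P}[\hat{\underline{x}}_{i}\in\varOmega_{x}^{c}\,|\,t]\le\textsf{P}[\hat{\underline{x}}_{j}\in\varOmega_{x}^{c}\,|\,t],\ \forall j\in[0,\ldots,k]\Big\},
\qquad
\textsf{P}[\hat{\underline{x}}_{i}\in\varOmega_{x}^{c}\,|\,t]
=\frac{\sum_{\alpha=0}^{k}\bar{\texttt{r}}_{i\alpha}(t)\,\pi_{\alpha}f_{\underline{t}}(t|\mathcal{H}_{\alpha})}{\sum_{\alpha=0}^{k}\pi_{\alpha}f_{\underline{t}}(t|\mathcal{H}_{\alpha})}.
```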

If we also include a no-identification or undecided region for which the DIA-estimator is said to be unavailable, cf. (22), then we have, for the case of a constant unavailability penalty \(\bar{\texttt{r}}_{(k+1)\alpha }(t)=u\), in analogy with Corollary 1, cf. (34), the following optimal partitioning.

Corollary 3

(Unavailability included) Let the DIA-penalty function (87) be extended with the unavailability penalty \(\bar{\texttt{r}}_{(k+1)\alpha }(t)=u\). Then the minimum mean penalty partitioning follows from (31) as

\(\square \)

Hence, a no-identification or unavailability decision is made when even the smallest misclosure-conditioned integrity risk is still considered too large, i.e. when it exceeds the unavailability penalty u.
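A minimal sketch of the resulting decision rule at a single misclosure t, assuming the penalty values \(\bar{\texttt{r}}_{i\alpha }(t)\), priors \(\pi _{\alpha }\) and likelihood values \(f_{\underline{t}}(t|\mathcal {H}_{\alpha })\) have already been evaluated (for instance with Lemma 3 in Sect. 4.2). It picks the estimator with the smallest misclosure-conditioned integrity risk and, when a constant unavailability penalty u is given, declares unavailability if that smallest risk exceeds u. This comparison follows from the undecided decision having conditional mean penalty u and is our reading of Corollary 3, not its displayed form. The function name is hypothetical.

```python
def optimal_dia_decision(penalties, priors, likelihoods, u=None):
    """Hypothetical helper: choose the decision with the smallest conditional integrity risk.

    penalties[i][a] : r_bar_{i alpha}(t) = P[x_hat_i in Omega^c | t, H_alpha]
    priors[a]       : pi_alpha = P[H_alpha]
    likelihoods[a]  : f_t(t | H_alpha)
    u               : optional constant unavailability penalty
    Returns (decision, risks); decision k+1 means 'unavailable'.
    """
    weights = [p * f for p, f in zip(priors, likelihoods)]
    total = sum(weights)  # f_t(t)
    posts = [w / total for w in weights]  # P[H_alpha | t]
    # P[x_hat_i in Omega^c | t] = sum_alpha r_bar_{i alpha}(t) P[H_alpha | t]
    risks = [sum(r * q for r, q in zip(row, posts)) for row in penalties]
    best = min(range(len(risks)), key=lambda i: risks[i])
    if u is not None and risks[best] > u:
        return len(risks), risks  # smallest risk still too large: unavailable
    return best, risks
```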

4.2 DIA-penalty function and the choice for \(\varOmega _{x}\)

The DIA-penalty function \(\bar{\texttt{r}}_{i\alpha }(t)\) is defined in (87) as a conditional probability of \(\hat{\underline{x}}_{i} \in \varOmega _{x}^{c}\) under the alternative hypothesis \(\mathcal {H}_{\alpha }\). The following Lemma shows how this probability can be computed directly from the distribution of \(\hat{\underline{x}}_{0}\) under \(\mathcal {H}_{0}\).

Lemma 3

(DIA-penalty function): The required probability for the DIA-penalty function (87) can be computed under \(\mathcal {H}_{0}\) for a general x-centred region \(\varOmega _{x} \subset \mathbb {R}^{n}\) as

and specifically for the ellipsoidal region

as

\(\square \)

Proof

We first prove (93). We have

from which (93) follows. Step (a) follows from substituting \(\underline{\hat{x}}_{i}=\underline{\hat{x}}_{0}-A^{+}C_{i}\hat{\underline{b}}_{i}(t)\) and recognizing that the conditioning on t makes \(\hat{b}_{i}(t)\) nonrandom. Step (b) follows by recognizing that \(\underline{t}\) is independent of \(\underline{\hat{x}}_{0}\), cf. (4), and for step (c) we made use of the relation \(\underline{\hat{x}}_{0}|\mathcal {H}_{\alpha }=\underline{\hat{x}}_{0}|\mathcal {H}_{0}+A^{+}C_{\alpha }b_{\alpha }\).

For the special ellipsoidal case, we have \(\bar{\texttt{r}}_{i\alpha }(t) \overset{(\text {93})}{=} 1-\textsf{P}[\hat{\underline{x}}_{0} \in \varOmega _{x+\varDelta x_{i\alpha }(t)}|\mathcal {H}_{0}] = 1- \textsf{P}[||\underline{\hat{x}}_{0}-x-\varDelta x_{i\alpha }||_{Q_{\hat{x}_{0}\hat{x}_{0}}}^{2}<\tau ^{2}|\mathcal {H}_{0}]\), from which, with \(\hat{\underline{x}}_{0} \overset{\mathcal {H}_{0}}{\sim } \mathcal {N}_{n}(x, Q_{\hat{x}_{0}\hat{x}_{0}})\), (95) follows. \(\square \)
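Lemma 3 reduces the ellipsoidal penalty to a noncentral chi-square probability: with \(\hat{\underline{x}}_{0} \overset{\mathcal {H}_{0}}{\sim } \mathcal {N}_{n}(x, Q_{\hat{x}_{0}\hat{x}_{0}})\), the quantity \(||\underline{\hat{x}}_{0}-x-\varDelta x_{i\alpha }||_{Q_{\hat{x}_{0}\hat{x}_{0}}}^{2}\) is noncentral \(\chi ^{2}\) with n degrees of freedom and noncentrality \(\lambda _{i\alpha }(t)=||\varDelta x_{i\alpha }(t)||_{Q_{\hat{x}_{0}\hat{x}_{0}}}^{2}\). The stdlib-only sketch below evaluates \(\bar{\texttt{r}}_{i\alpha }(t)=1-\textsf{P}[\chi ^{2}(n,\lambda _{i\alpha })<\tau ^{2}]\) via the Poisson-mixture series of the noncentral \(\chi ^{2}\) CDF. It takes \(\varDelta x_{i\alpha }(t)\) as input; Steps (a) to (c) suggest \(\varDelta x_{i\alpha }(t)=A^{+}(C_{i}\hat{b}_{i}(t)-C_{\alpha }b_{\alpha })\), but that expression is our reading, not a quote of (93). Function names are hypothetical; in practice a library routine such as SciPy's `scipy.stats.ncx2.cdf` would normally be used instead.

```python
import math

def reg_lower_gamma(a, z):
    """Regularized lower incomplete gamma P(a, z) via its power series (moderate z)."""
    if z <= 0.0:
        return 0.0
    term = 1.0 / a
    total = term
    n = 0
    while term > total * 1e-16 and n < 100000:
        n += 1
        term *= z / (a + n)
        total += term
    return min(1.0, total * math.exp(a * math.log(z) - z - math.lgamma(a)))

def ncx2_cdf(x, dof, lam):
    """Noncentral chi-square CDF as a Poisson(lam/2)-weighted sum of central CDFs."""
    if x <= 0.0:
        return 0.0
    half = lam / 2.0
    weight = math.exp(-half)  # Poisson weight for j = 0
    total = 0.0
    j = 0
    while True:
        total += weight * reg_lower_gamma(dof / 2.0 + j, x / 2.0)
        j += 1
        weight *= half / j
        # stop once past the Poisson mode and the remaining weights are negligible
        if (j > half and weight < 1e-17) or j > 10000:
            break
    return min(total, 1.0)

def dia_penalty_ellipsoid(delta_x, qinv_x0, tau2):
    """r_bar_{i alpha}(t) = 1 - P[chi2(n, lambda) < tau^2], lambda = ||delta_x||^2 in Q_x0x0."""
    n = len(delta_x)
    lam = sum(delta_x[a] * qinv_x0[a][b] * delta_x[b] for a in range(n) for b in range(n))
    return 1.0 - ncx2_cdf(tau2, n, lam)
```

For \(\varDelta x_{i\alpha }=0\) the penalty is simply the false-alarm-type level \(1-\textsf{P}[\chi ^{2}(n,0)<\tau ^{2}]\); any nonzero shift increases it.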