Abstract

The debates between Bayesian, frequentist, and other methodologies of statistics have tended to focus on conceptual justifications, sociological arguments, or mathematical proofs of their long run properties. Both Bayesian statistics and frequentist (“classical”) statistics have strong cases on these grounds. In this article, we instead approach the debates in the “Statistics Wars” from a largely unexplored angle: simulations of different methodologies’ performance in the short to medium run. We used Big Data methods to conduct a large number of simulations using a straightforward decision problem based around tossing a coin with unknown bias and then placing bets. In this simulation, we programmed four players, inspired by Bayesian statistics, frequentist statistics, Jon Williamson’s version of Objective Bayesianism, and a player who simply extrapolates from observed frequencies to general frequencies. The last player served a benchmark function: any worthwhile statistical methodology should at least match the performance of simplistic induction. We focused on the performance of these methodologies in guiding the players towards good decisions. Unlike an earlier simulation study of this type, we found no systematic difference in performance between the Bayesian and frequentist players, provided the Bayesian used a flat prior and the frequentist used a low confidence level. The Williamsonian player was also able to perform well given a low confidence level. However, the frequentist and Williamsonian players performed poorly with high confidence levels, while the Bayesian was surprisingly harmed by biased priors. Our study indicates that all three methodologies should be taken seriously by philosophers and practitioners of statistics.

1 Introduction

If there is any suspicion that philosophy of science is an ivory tower subject, it should be extinguished by what Deborah Mayo has called the “Statistics Wars” between classical statisticians, Bayesians, and a prismatic assortment of variations of these views (Ioannidis, 2005; Howson and Urbach, 2006; Wasserstein and Lazar, 2016; Mayo, 2018; van Dongen et al., 2019; Sprenger and Hartmann, 2019; Romero and Sprenger, 2020). Even apparently recondite questions about concepts like evidence, probability, and rational belief are connected with questions of statistical practice. These questions have been given particular salience by the “replication crisis”, in which the rates of replication in published statistical research across a range of scientific fields are apparently below what would be expected from random variation alone (Gelman, 2015; Open Science Collaboration, 2015; Smaldino and McElreath, 2016; Fidler and Wilcox, 2018; Trafimow, 2018). All factions within the Statistics Wars make plausible (but often incompatible!) cases that their particular methodology, properly applied, can mitigate some of the malpractices behind the replication crisis. Furthermore, far from being dry debates, the Statistics Wars are frequently characterised by the sort of aggressive rhetoric, bombastic manifestos, and political maneuvering that their name would suggest. And this war-like atmosphere is understandable, because the Wars affect statistical practice, and thereby the health, wealth, and happiness of nations.

Statisticians are often very practical people, so when they are faced with these debates, many of them naturally think that either classical or Bayesian methods can be appropriate in various contexts. Statisticians often say very reasonable things such as “What matters is not who is right about issues in philosophical analysis, but what works best”. Some philosophers of science might be attracted to such attempts to pacify the conflicts. Unfortunately, by itself, this pacification strategy fails, because assessing whether a methodology will “work” in a context depends on standards for evaluating what constitutes “working”, and as soon as we start determining those standards, we start making choices about the proper analysis of terms like “evidence”, “test”, “severe test”, and for that matter “work”.

Yet there is wisdom in the practicing statistician’s pragmatism. The philosophical debates within the Statistics Wars are rumbling on with no end in sight, as philosophical debates tend to do. One problem is that the rival statistical methodologies often have different goals, e.g. Bayesians often focus more on the decisions of an ideally rational agent, whereas frequentists are often more interested in the reliability of a particular type of test. Statisticians could reasonably use different tools for different contexts. This opens up the opportunity to examine particular types of problem and see which method performs better at achieving some goal that all statisticians share.

One approach to such a question would be to conduct historical case studies of real-life cases where one or other method (or both) was used, and then to compare how the methods performed. However, it is rarely, if ever, possible to make such comparisons fairly and rigorously. Instead, we employ and expand the use of simulation studies for comparing statistical methodologies. Our simulations use a simple decision problem to pit four players against each other: (1) a confidence interval approach, which classical (“frequentist”) statisticians might use for such a problem; (2) conditionalization upon a beta distribution, which many Bayesians would regard as an appropriate family of priors in this context; (3) a hybrid approach based on Jon Williamson’s “Objective Bayesian” methodology, which involves forming beliefs about the relative frequencies using confidence intervals and then combines them with an updated version of the Principle of Indifference in order to generate precise probabilities; and (4) a “Sample” player who simply uses the relative frequencies in their observed samples to make point estimates of probabilities, akin to maximum likelihood estimation.
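As a rough illustration of players (2) and (4), the sketch below contrasts the point estimate of the Sample player with the credence of a Bayesian who conditionalizes on a beta prior. The function names, the flat default prior, and the use of the posterior mean as the Bayesian's credence in heads are illustrative assumptions, not a description of the simulation code used in this study.

```python
def sample_estimate(heads: int, tails: int) -> float:
    """Sample player: the observed relative frequency of heads."""
    n = heads + tails
    if n == 0:
        raise ValueError("no tosses observed yet")
    return heads / n


def bayes_credence(heads: int, tails: int,
                   alpha: float = 1.0, beta: float = 1.0) -> float:
    """Posterior mean of a Beta(alpha, beta) prior after the observed tosses.

    alpha = beta = 1 is the flat prior (an assumed default for this sketch).
    """
    return (alpha + heads) / (alpha + beta + heads + tails)


# After 7 heads and 3 tails the two players are close but not identical.
print(sample_estimate(7, 3))   # 7 / 10
print(bayes_credence(7, 3))    # (1 + 7) / (2 + 10)
```

With no observations the Sample player has no estimate at all, whereas the Bayesian with a flat prior starts at 0.5; the two estimates converge as the sample grows.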

All the methodologies we discuss have some intuitively attractive performance properties as our sample sizes tend towards infinity. Long run performance properties are often given as the raison d’être of frequentist methods, while—in the right sorts of problems and with the right sorts of priors—Bayesian methods will also lead to credences (also called “degrees of belief”) that, in the long run, approximate or even match the true relative frequencies (De Finetti, 1980). That is to these theories’ credit: we never reach the long run, but information about it arguably provides defeasible information about the short run. If all the methodologies do well in the long run, then the short run is a more promising place to look for divergent performances. For this reason, we used simulations to compare the performance of players inspired by the methodologies when these players were given only small or moderately large samples.

Vituperative rhetoric and dismissive criticisms are common in the Statistics Wars. However, our results indicate that such disrespect is unfounded, at least for the decision problem we study. We found that Bayesianism, frequentism, and (what we call) Williamsonianism can all perform well with suitable player settings. As the number of games becomes large, this similarity can be explained by similar decisions. In the short run, we found differences in decisions. However, with the right player settings, all three of the statistical methodologies can exceed the benchmark that we set for them, and this similarity should increase the respect that the different factions of the Wars have for each other. Our results thus are contrary to what some dogmatic statistical warriors might expect. From each perspective, the other factions might seem absurd, but our study shows how all of them can sometimes lead to good decisions in two senses: (1) the players based on methodologies of statistics do not do significantly worse than the naïve sample-extrapolating player and (2) given the right settings, the players’ performances with small samples are not bad in comparison to their performances with more information.

We briefly review the relevant literature in Sect. 2. We then explain our methods and the “players” in Sect. 3. We display the results and analyse them in Sect. 4, discuss them in Sect. 5, and conclude in Sect. 6.

2 The Statistics Wars: a multi-dimensional dispute

The Statistics Wars have a long history, dating back centuries, and they feature a “Who’s Who” of statistics. The Wars are sometimes framed in terms of a simple conflict between Bayesian statisticians and classical (or “frequentist”) statisticians, but this greatly oversimplifies the debates, as Mayo has detailed (Mayo, 2018). Indeed, no brief summary is possible, but for understanding our simulation study, it helps to note that the participants disagree across multiple dimensions, which we now broadly summarise.

2.1 The concept of probability

From a philosopher’s perspective, perhaps the most fundamental dimension is which concepts of probability a statistician regards as appropriate within statistical reasoning. As a preliminary, we must distinguish two potentially divergent domains in which we use probabilistic language: (i) everyday language like “The traffic will probably not be bad today” and (ii) the use of mathematical probability in science, such as a statistic for the probable error of a test. According to some philosophers of probability (including many labelled “frequentist”) there is no need for a formal analysis of probabilistic language of type (i). In everyday life, we use “probability” and cognate terms in all sorts of ways, but it is debatable whether there is much of a connection between this ordinary usage and the proper place of probability in scientific reasoning. Insofar as the Statistics Wars are disputes over the proper concept of probability, they are disputes about which concept is appropriate in scientific reasoning.

-

1a

Some theorists adopt physical interpretations of probability, in which probability is variously defined as finite relative frequencies (Venn, 1876), long run hypothetical relative frequencies (von Mises, 1957), propensities (Popper, 1959) or some other type of objective physical magnitude. According to this family of theories, probabilistic statements are generally logically contingent and non-psychological.Footnote 1 Epistemologically, there is no fundamental difference between knowledge of probabilities and other types of knowledge. While probabilities might appear in scientific hypotheses, no hypothesis itself has a probability: it is either true or false, but there is no sense in which statements like “This hypothesis has a probability of 0.5” can be true with a physical interpretation.

-

1b

Epistemic interpretations analyse probabilistic concepts in terms of an epistemological framework, i.e. a system of concepts to do with evidence, belief etc. Within this family, we can distinguish two subgroups. The first subgroup interprets probability psychologically, as either unique (“Objective Bayesian”) (Jaynes, 1957) or non-unique (“Subjective Bayesian”) (De Finetti, 1980) rational credences. In both cases, probability is analysed as the degree of confidence of either idealized or actual reasoners. The second subgroup of epistemic theorists regard probabilities as “partial entailment” relations between evidential statements and hypotheses (Keynes, 1921; Benenson, 1984; Kyburg, 2001). These “logical” probabilities are arguably guides to rational credences,Footnote 2 but they are not strictly speaking the same thing, just as deductive logical relations (on any non-psychologistic philosophy of logic) can often guide rational belief, but cannot be reduced to psychological relations. On either a psychological or partial entailment interpretation, scientific hypotheses can have probabilities.

-

1c

Pluralist combinations of 1a and 1b. For example, Carnap (1945b) combined a partial entailment interpretation of the probabilities of hypotheses (“H’s probability relative to our total evidence is 0.5” etc.) with a long run relative frequency interpretation of the probabilities mentioned in hypotheses, such as assertions about radioactive half-lives in physics. Pluralistic interpretations are very common among philosophers of science, e.g. Popper (2002), Howson and Urbach (2006), Williamson (2010).

To appreciate these differences in the conceptual analysis of probability, consider how these approaches might interpret some sentences that might occur within scientific reasoning:

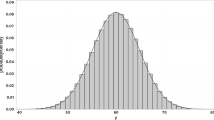

\(H_{1}:\) “There is a high probability that height will be approximately normally distributed in a large subset of humans.”

-

Physical: There is a tendency (interpreted in terms of relative frequencies or propensities) of large subsets of humans to be approximately normally distributed with respect to their height.

-

Epistemic: On a pure psychological interpretation (such as an uncompromising Subjective Bayesianism) this is an assertion of high confidence that height will be approximately normally distributed in a large subset of humans, or that a high degree of confidence is rationally appropriate. However, note that most contemporary Subjective Bayesians are pluralists: see below. On a pure partial entailment interpretation, \(H_1\) is best interpreted as a claim about a probability relation between statements, plus the assertion that one of those statements is true. For instance, we can interpret \(H_{1}\) as the combination of three assertions: (i) a statistical hypothesis \(H_{s}\), which asserts a physical probability statement such as “There is a high relative frequency of an approximately normal distribution of height among large subsets of human beings”, (ii) the claim that \(H_{s}\) is our best evidence regarding the single-case hypothesis \(H_{t}\) “A particular randomly selected large subset of humans is approximately normally distributed with respect to height”, and (iii) a claim that \(H_{s}\) has a high partial entailment relation in favour of \(H_{t}\) (Benenson, 1984; Kyburg, 1990).

-

Pluralist: Most pluralists would adopt a physical interpretation of \(H_{1}\), in most contexts.

\(H_{2}:\) “It is 99\(\%\) probable that there is no life currently on Mars.”

-

Physical: No relative frequency interpretation of \(H_{2}\) is possible, without being false. \(H_{2}\) might have some function in unscientific language as a way of indicating confidence, so a supporter of a relative frequency interpretation might interpret \(H_{2}\) as an informal, extra-scientific expression of confidence. On a propensity interpretation, \(H_{2}\) could be an assertion about the physical chances of life on Mars at this time.

-

Epistemic: On a subjectivist psychological interpretation (as in Subjective Bayesianism) \(H_{2}\) is an assertion about the speaker’s confidence. On an objectivist psychological interpretation (as in Objective Bayesianism) \(H_{2}\) asserts that 99% is the degree of confidence, for the hypothesis that there is no life on Mars, that is uniquely rational relative to some body of evidence. On a partial entailment interpretation, \(H_2\) is an assertion about a partial entailment relation between the hypothesis that there is no life on Mars and some body of evidence, such as the total body of evidence in astrobiology.

-

Pluralist: Most pluralists would adopt some epistemic interpretation of \(H_{2}\), in most contexts.

2.2 The evaluation of statistical methods

If we are testing a statistical hypothesis, then we need to select a method. Often, we might look at what has worked well in the past, but that just pushes the question back: “This method generally works well” is itself a (vague) statistical hypothesis. Additionally, if we are investigating some new or relatively new topic, we might not know what “works”. There are three broad families of answers to this question in the Statistics Wars.

-

2a

One criterion is the method’s long run performance. For example, suppose that we kept on using a particular procedure for estimating some relative frequency as our sample sizes grew larger and larger. Suppose also that there is a limit that the relative frequency would tend towards in the long run.Footnote 3 Will the margin of error of our estimates tend towards zero if we stick to this method? If so, does the estimation method have other attractive properties, e.g. does it not depend on the language in which we formulate our hypothesis (Reichenbach, 1938; Feigl, 1954; Salmon, 1967)? Alternatively, we might ask whether the test would almost always provide statistically significantFootnote 4 results in the long run (Fisher, 1947, p. 14). Or we may ask whether, in the long run, the test provides a satisfactoryFootnote 5 combination of mistaken rejections (Type I errors) and mistaken non-rejections (Type II errors) (Neyman, 1949).

-

2b

In terms of the doxastic (belief-modifying) properties of the test (Howson and Urbach, 2006; Williamson, 2010). Is the testing procedure a rational way to update our beliefs? Put differently, if we adopt a doxastic approach to evaluation, we assess the test based on how its use would affect the structure of our beliefs. For example, diachronic Dutch Book Arguments are intended to show that, if we adopt some systematic procedure for revising our beliefs that is not an application of Bayesian conditionalization, then we shall be vulnerable to accepting a series of bets in which we will inevitably lose money. Others advocate conditionalization based on its benefits for “epistemic” utilities (Greaves and Wallace, 2006). However, not all philosophers who adopt a doxastic approach to evaluating tests have endorsed conditionalization as a general norm (Bacchus et al., 1990).

-

2c

In terms of whether the testing procedure provides a severe test in a particular context (Mayo, 1996; Spanos, 2010; Mayo, 2018). A “severe” test adequately probes the possible sources of error in our inference, like extrapolations from spurious correlations in the data. In particular, there should be a good chance that our test would uncover a flaw in the hypothesis that we are investigating, if such a flaw exists. A hypothesis might have an excellent fit for our data, but it has not been severely tested unless it was at risk of being rejected by our test, if it were false. Put another way, there should be a low probability that using the testing procedure will lead us to a mistaken inference from the data, i.e. a low “error probability”. The role of probability in this methodology is to assess the extent to which tests have probed (or would probe) our hypotheses. Note that severity requires more than just the long term performance properties of 2a: a test might have good long run properties, but if the test is unlikely to detect a flaw in a single usage and we only evaluate a hypothesis once using that test, then that hypothesis has not been severely tested. In general, according to a severe testing (“error statistical”, “probative”) evaluation approach, there are problems (such as p-hacking) that do not involve the lack of good long run performance properties, but nonetheless can make our tests inadequate (Mayo, 2018, pp. 13–14).

Naturally, there are interrelations between the criteria used by these three approaches to evaluation. For example, adherents of 2a and 2c care about revising our beliefs in rational ways, but evaluate what is a “rational” method for belief revision in terms of long run performance or probative capacity. They believe that the formal models of belief used by adherents of 2b are not the right place to start.Footnote 6 Similarly, one intuitive feature of proper doxastic states is that there should be proper coherence between (a) beliefs about long run performance of tests and (b) beliefs in how to update one’s beliefs given individual tests. Meanwhile, error statisticians (2c) think that the long run performance qualities of a test are one aspect of its probative quality (Mayo, 2018).

2.3 Testing procedures and statistics

Finally, there are testing procedures and test statistics that different methodologists judge to be appropriate in general or in some context. Even given a particular interpretation of probability and shared standards to evaluate our methods, selecting among types of tests can be controversial.

-

3a

Classical tests and test statistics. The former include confidence interval estimation, null-hypothesis significance testing, maximum likelihood estimation, and so on. For our test statistics, we might use p-values, confidence levels, and other descriptive statistics that indicate the long run properties of the testing procedure that we shall implement.

-

3b

Bayesian tests and test statistics, involving the use of conditional probabilities and Bayes’ Theorem to update a prior using conditionalization. There are associated test statistics like Bayes factors and posterior probabilities to indicate, respectively, (i) the performance of the tested hypothesis in comparison to another hypothesis and (ii) the warranted degree of belief in the tested hypothesis given the initial prior and the acceptance of the new data.

-

3c

Pluralist combinations of 3a and 3b. For example, according to Kyburg and Teng (2001), we should use Bayesian methods when we have prior probabilities based on previously inferred statistical information about relative frequencies. However, when such background knowledge is lacking, we should use classical statistics.

Often, in methodological debates, the immediate battleground is one of these dimensions, but disagreements in the others intrude and further complicate the discussions. A simple type of example occurs when, during debates about the adequacy of particular testing procedures, the term “probability” is insufficiently clarified. Researcher A can then say things to Researcher B that are obviously absurd given the other’s interpretation of “probability”, even though they might be able to develop a practical consensus on the immediate point of disagreement if they made some disambiguations. In such cases, a fundamental disagreement across dimension 1 (Sect. 2.1) unnecessarily frustrates the possibility of consensus on dimension 3 (supra, Sect. 2.3). Moreover, while some of these viewpoints are closely correlated in practice, a great variety of permutations are logically consistent.

Despite the complexities of this debate, we were able to identify three ideas to inspire the three non-benchmark players in our simulations. Thus, in addition to our benchmark player (who naïvely uses their observed sample frequencies) we based our players on the following methodologies:

-

A.

Frequentism, in the sense of adopting 3a, at least for the reasoning problem that we use in this paper. Thus, we are specifically referring to the methods of classical statistics and their direct epistemic consequences of providing estimates of relative frequencies. For classical methods, we only need interpretation 1a, although these methods are compatible with other interpretations of probability. In particular, we shall focus on confidence interval estimation. Note that this choice of statistical method for our problem might be justified by any of the evaluative methodologies falling under the categories 2a, 2b, or 2c. For the sake of convenience, we shall assume that a frequentist will also interpret probabilities as relative frequencies, even though this is not necessitated by confidence interval estimation methods.

-

B.

Bayesianism, in the sense of using 3b for the problem in our study. Bayesians can adopt either a strictly epistemic interpretation, 1b, or a pluralist interpretation, 1c. Bayesians more or less invariably arrive at their view via a doxastic approach to evaluating statistical methods, 2b.

-

C.

Williamsonianism, by which we mean Jon Williamson’s combination of “calibrating” via reasoning that is fundamentally based on classical methods, 3a, but using these relative frequency estimates to guide credences, in accordance with 1b. Given the constraints from beliefs about relative frequencies, the credences are subsequently determined by the Maximum Entropy Principle, described below in Sect. 3.3.3. The overall package is justified by Williamson on doxastic grounds (Williamson, 2010).Footnote 7 In other words, Williamson has an epistemic theory of probability, but there is a large role for classical statistical methods in his theory of inference, leading to divergences from conditionalization (Williamson, 2010, pp. 167–169). Williamsonianism hence offers an interesting combination of ideas that are typically attributed to either frequentism or Bayesianism alone.

Since these methodologies tend to differ across the evaluative dimension, it is extremely difficult to find ways of comparing them that do not beg crucial questions. However, a shared goal is that statistics should help scientists achieve their aims. Scientists have many aims where statistics could reasonably be expected to help: prediction, explanation, control etc. However, one aim of science that seems amenable to formal study is making good decisions under conditions of uncertainty. We mean “decisions” in a broad sense of choices among actions. As Jerzy Neyman pointed out, this is a broad enough definition to include most or all of scientific practice. Decision-making includes choosing to publish a paper, to make an expert pronouncement, to invest in developing an experiment, as well as non-scientific decisions like financial investing or trying to design a better mousetrap (Neyman, 1941).

In our simulation, we programmed four different “players” using belief models and choice models. These players were inspired by the three positions in the Statistics Wars that we identified above, plus a benchmark player. The latter enables us to see if these methodological positions fulfill a reasonable basic criterion of adequacy: they should do at least as well as someone who just expects future frequencies to match the sample frequency that they have observed thus far. We focused on non-asymptotic decision making, i.e. performance in a finite number of decisions. This might seem to prejudge the case against frequentists, because many of them emphasise long run performance as the proper criterion for testing. However, frequentists are just as interested in making good decisions in the relatively short run as any other sensible people; that a test procedure has good long run properties is arguably indicative that it will help us to make good decisions in the short run, and frequentists have acknowledged this goal as one objective of statistical inference.

We have no pretense that our study (or simulation studies in general) will or should end the Statistics Wars. Given the persistence of these conflicts throughout the history of statistics, it is probable (in more than one sense) that they are not going to be resolved any time soon. Nonetheless, simulations provide a useful way to assess which methods help guide us towards better decisions and under what conditions. In particular, they can help us evaluate whether frequentism, Bayesianism, or Williamsonianism will enable us to perform systematically better in a particular type of decision problem.

3 Methods

3.1 Simulations

Simulations are a relatively new tool in the philosophy of science. For a review up to 2009, see Winsberg (2009). There is an even sparser literature that applies simulations to the Statistics Wars. However, within statistics, there are many studies using simulations to assess Bayesian tools versus frequentist tools for particular problems. To give just a few examples, Avinash Singh et al. compare Bayesian and frequentist methods for estimating parameters of small sub-populations using simulations (Singh et al., 1998). Gilles Celeux et al. use simulations to compare Bayesian regularization methods against frequentist methods (Celeux et al., 2012). Daniel McNeish applies simulations within an investigation of the strengths and weaknesses of Bayesian estimation with small samples (McNeish, 2016).Footnote 8 There is also a literature on Bayesian estimation versus frequentist estimation in structural equation modelling (Smid et al., 2019).

Within philosophy, we found only two earlier studies comparing Bayesian and frequentist methodologies using simulations. Felipe Romero and Jan Sprenger employ simulations to argue in favour of a Bayesian approach to the replication crisis (Romero and Sprenger, 2020). Their study is interesting, but its topic is inferential success under different sorts of scientific institutions, and therefore very different from our own. Instead, our study builds on a philosophically-motivated use of simulations by Kyburg and Teng (1999) to compare frequentist statistics versus Bayesian statistics in a simple decision problem. They use simulations to investigate a game (against Nature) in which a frequentist player and a Bayesian player separately make bets based on a random binomial event—a toss of a coin with unknown bias or fairness. The frequentist player used confidence intervals and the Bayesian player used conditionalization. To serve as a benchmark, Kyburg and Teng also use a player, Sample, who simply estimates that the probabilities will be equal to the frequency in the sum of their samples. After observing some randomly generated coin tosses, players had the option to buy a ticket for heads at a price t or a reversed ticket (effectively betting that the toss will land tails) at a price \(\left( 1 - t\right) \). The variable t had a randomly generated price in the interval \(\left[ 0,1\right] \). Each player was given a decision algorithm for deciding when and how to bet.
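The set-up of this game can be sketched as follows. This is a minimal reconstruction from the description above, not Kyburg and Teng's code: the helper names, the use of Python's `random` module, the example bias of 0.7, and the seed are all assumptions made for illustration.

```python
import random


def toss_coin(bias: float, n: int, rng: random.Random) -> list[bool]:
    """Return n independent tosses; True means heads, with chance `bias`."""
    return [rng.random() < bias for _ in range(n)]


def draw_ticket_price(rng: random.Random) -> float:
    """Ticket price t for heads; the reversed ticket then costs 1 - t.

    rng.random() samples from [0, 1), used here as a stand-in for [0, 1].
    """
    return rng.random()


rng = random.Random(0)                 # fixed seed only for reproducibility
tosses = toss_coin(0.7, 20, rng)       # the sample a player observes
t = draw_ticket_price(rng)
sample_frequency = sum(tosses) / len(tosses)   # the Sample player's estimate
print(sample_frequency, t, 1 - t)
```

Each player would then apply its own decision algorithm to the same observed sample and ticket price.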

The study then compares the average profits across a range of conditions. The Bayesian player performed at about the benchmark level. Across the full range of possible coin biases that Kyburg and Teng investigated, the Bayesian performed best when their prior corresponded to that required by the Principle of Indifference.Footnote 9 The frequentist tended to make better profits than the Bayesian and the benchmark. Kyburg and Teng do not explain their result, and it has been noted as a puzzle (Schoenfield, 2020, p. 2).

However, comparisons were difficult because the frequentist (unlike the Bayesian) would sometimes refuse to bet. In particular, the frequentist player’s algorithm meant that it would not bet if t was within their confidence interval \([x , y ]\) where \(0 \le x \le 1\), \(0 \le y \le 1\), and \(x \le y\). Kyburg and Teng believed that this feature of the frequentist algorithm meant that this player did much better than the Bayesian in absolute profits. While Kyburg and Teng attempted to address this issue by using average profits rather than total profits, the difficulty remains that the players are being compared over an unequal number of bets. Consequently, it is possible that their results are partly explained by this asymmetry.
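The hold rule just described can be written as a small decision function. The interval is taken as given, since the method of computing it is not specified at this point. The direction of the bet outside the interval (heads when the price lies below the interval, tails when it lies above) is an assumption for illustration, as are the function name and the string labels.

```python
def frequentist_action(t: float, lower: float, upper: float) -> str:
    """Choose an action given ticket price t and confidence interval [lower, upper]."""
    if not (0.0 <= lower <= upper <= 1.0):
        raise ValueError("expected 0 <= lower <= upper <= 1")
    if lower <= t <= upper:
        return "hold"        # price falls inside the interval: no bet
    # Assumed direction of betting when the price is outside the interval.
    return "heads" if t < lower else "tails"


print(frequentist_action(0.50, 0.40, 0.80))   # price inside the interval
print(frequentist_action(0.20, 0.40, 0.80))   # price below the interval
print(frequentist_action(0.90, 0.40, 0.80))   # price above the interval
```

A wider interval, as produced by a higher confidence level or a smaller sample, leaves more prices inside it and so leads to more refusals to bet.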

Additionally, Kyburg and Teng’s study was published in conference proceedings, so quite a few details are obscure, such as whether the players confronted the same sequences of coin tosses and ticket prices. They also report the results of only 100 simulations for each parameter setting, which means that many of the variations in performance that they find could be explained by random error.

Despite its limitations, the Kyburg and Teng study offers a novel and relatively simple way to compare the decision-theoretic performance of Bayesianism and frequentism in a short run reasoning problem. On the other hand, there was a lot of scope for the expansion, modification, and clarification of their study. Our study design enables comparisons with Kyburg and Teng (1999), but also explores several novel directions; it also attains a level of rigour and detail beyond their conference paper-style investigation. We also sought to reduce the problem of random error by conducting our simulations many times, as we detail in Sect. 3.5. Overall, we conducted two sets of 1000 simulations for each player setting. We also varied the random parameters—the ticket prices and the coin toss results—in the decision problem. Each simulation involved 1000 games, with 1000 simulations for each of five coin biases, and therefore there was a total of 5 million games per player setting in each set of simulations. In this way, by using modern computational power and software, we were able to use a “Big Data” approach that was missing in the study by Kyburg and Teng (1999).
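The total number of games follows directly from these design figures:

\[
1000 \ \text{games} \times 1000 \ \text{simulations} \times 5 \ \text{coin biases} = 5 \times 10^{6} \ \text{games per player setting in each set,}
\]

so that the two sets of simulations together comprise \(10^{7}\) games per player setting.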

3.2 Decision problem

The game that the players faced in our simulations is based around a finite sequence of Bernoulli trials with an initially unknown physical probability. For convenience, we use the terminology of coin tosses, but we stress that, whereas a real-world player would know that a coin is likely to land heads/tails with roughly equal long run frequency (they know that there is a very low proportion of very biased coins in the world), in our game they have no such background knowledge about the coin being tossed. However, the players do all know that the tosses are random, in the sense that each toss has the same (but unknown) chance of landing heads. They also know that the order of coin tosses is irrelevant, i.e. patterns in the sequences of tosses provide no information. Thus, they will only regard sample statistics as relevant evidence for the coin biases.

The basic unit of the decision problem is a decision to bet on heads (action \(b_{h}\)), bet on tails (action \(b_{t}\)), or to hold (action \({\bar{b}}\)). Players have known and fixed payoffs for the decisions that we depict in Fig. 1.

The variable t is a US dollar (USD) value. It was randomly generated for each game; since it takes values in the [0, 1] interval, its average price over the full series of simulations was very probably around 0.5. Action \(b_{h}\) gives a return of t if the result is heads, but incurs a loss \(-t\) if the result is tails, because the player bought the ticket at a price t and did not win any money. Action \(b_{t}\) gives a return of \(\left( 1 - t\right) \) if the result is tails and incurs a loss \(-\left( 1 - t\right) \) if the result is heads, again reflecting the price of purchasing the ticket and the absence of a return. Action \({\bar{b}}\) gives a guaranteed result of \(-\varepsilon \). We set \(\varepsilon \) to be in the unit interval [0, 1].

In Kyburg and Teng (1999), a decision to hold has a guaranteed result of 0. However, as they note (pp. 364–365) it is interesting to see what happens if players are forced to bet. “Force” implies a sanction, and by setting \(\varepsilon \) high enough, we can make it rational for a payoff-maximising player to bet. In particular, if \(\varepsilon > t\) or \(\varepsilon > \left( 1 - t\right) \), then there will always be at least one bet that is rational for the player, because the bet will have a non-negative expected payoff, whereas \(-\varepsilon \) is greater than the loss \(-t\) or \(-\left( 1 - t\right) \). By setting \(\varepsilon \) to 1, we can ensure that all players will bet in each game.
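The payoff structure can be sketched in a few lines of Python. This is only an illustration that follows the payoffs as stated in the text; the function names and the action labels `"bet_heads"`, `"bet_tails"` and `"hold"` are assumptions introduced here, not the names used in the simulation scripts.

```python
def payoff(action: str, outcome: str, t: float, eps: float) -> float:
    """Payoff for one game, following the payoffs stated in the text.

    The labels "bet_heads", "bet_tails" and "hold" are illustrative
    names for the actions b_h, b_t and b-bar.
    """
    if action == "bet_heads":
        return t if outcome == "heads" else -t
    if action == "bet_tails":
        return (1 - t) if outcome == "tails" else -(1 - t)
    if action == "hold":
        return -eps  # holding costs eps whatever the outcome
    raise ValueError(f"unknown action: {action}")


def worst_case(action: str, t: float, eps: float) -> float:
    """Lowest payoff an action can yield across the two outcomes."""
    return min(payoff(action, o, t, eps) for o in ("heads", "tails"))


# With eps = 1, holding is never better than the worst case of either bet.
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    assert worst_case("hold", t, 1.0) <= worst_case("bet_heads", t, 1.0)
    assert worst_case("hold", t, 1.0) <= worst_case("bet_tails", t, 1.0)
```

The final loop checks, on a small grid of ticket prices, the claim that a penalty of 1 removes any incentive to hold.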

Our simulation presents players with sequences of coin tosses. After a certain number of trials, the players are given a choice whether and how to bet. The players’ decisions are completely independent of one another, so they will not convert to another player’s approach if they see that player outperforming them.

3.3 The players

Our simulations feature four players, Bayes, Frequentist, Williamsonian, and Sample. The player Sample does not correspond to a standard approach to our decision problem, but it is useful, because a statistical methodology should at least match Sample’s performance. Sample can also be interpreted as one way that a frequentist might act if they were forced to give precise relative frequency estimates and use these estimates to guide their decisions. The players have significant differences in their learning rules and decision rules, so we shall discuss them each individually.

3.3.1 The Bayesian

Bayes is inspired by Subjective Bayesianism, so there are infinitely many prior probability distributions that they might adopt. However, in our simulations, Bayes will use a type of prior that many Subjective Bayesians would use for such a reasoning problem. Bayes knows that the tosses are random. Therefore, they can estimate the probability that a particular toss will land heads simply by deciding one degree of belief in heads for each and every toss. Since they know that each toss will land heads or tails, the probability of tails will be one minus the probability of heads. The beta distribution is a popular option in Bayesian statistics for this type of estimation problem (Mun, 2008, p. 906). The beta distribution enabled us to generate a wide variety of priors for Bayes using just two parameters. For these reasons, we used this procedure for characterising initial priors for Bayes.

Bayes’s belief revision procedure in this particular game can be represented with an epistemic model \(\mathrm {K}:=\left\{ {\Omega },{\Theta },H,c,p \right\} \), where \({\Omega }:=\left\{ heads,tails\right\} \) is the set of possible outcomes of a single coin toss (the decision-relevant states), \({\Theta }:=\left\{ \theta \in {\mathbb {R}}_{\ge 0}:0\le \theta \le 1\right\} \) is the set of values representing all the possible biases of the coin towards heads (from this point onward, we will simply call \(\theta \) “coin bias”), \(H:=\left\{ \mathrm {H}\cup \left\{ \emptyset \right\} \right\} \) is the set of possible histories\(^{10}\) with a typical element h, where \(\mathrm {H}:=\left\{ heads,tails\right\} ^{n}\) is the set of possible histories that can be generated by \(n>0\) coin tosses, such that each \(\mathrm {h}\in \mathrm {H}\) is a sequence \(\left\{ e_{1},\ldots ,e_{n}\right\} \) where each \(e_{i}\in \left\{ heads,tails\right\} \), \(c:H\rightarrow {\mathbb {Z}}_{\ge 0}\) is a heads event counting function and for every history \(h\in H\), \(c\left( h\right) =\left| \left\{ e_{i}\in h:e_{i}=heads\right\} \right| \), and \(p: H\rightarrow \varDelta \left( {\Theta }\right) \) is a probability measure which assigns, to every history \(h\in H\), a probability distribution on \({\Theta }\). After observing some history \(h\in H\), Bayes revises their beliefs about each possible coin bias \(\theta \in {\Theta }\) via the standard Bayes rule

\[p\left( \theta |h\right) =\frac{p\left( h|\theta \right) p\left( \theta \right) }{\int _{0}^{1}p\left( h|\theta '\right) p\left( \theta '\right) \mathrm {d}\theta '}.\]

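Since each coin toss is a Bernoulli trial and the game generates a binomial distribution, we can use a counting function c to reformulate the Bayes rule for each history \(h\in H\) as

\[p\left( \theta |c\left( h\right) ,n\right) =\frac{p\left( c\left( h\right) ,n|\theta \right) p\left( \theta \right) }{\int _{0}^{1}p\left( c\left( h\right) ,n|\theta '\right) p\left( \theta '\right) \mathrm {d}\theta '},\]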

where \(p\left( c\left( h\right) ,n|\theta \right) =\left( \begin{array}{cc} n\\ c\left( h\right) \end{array}\right) \theta ^{c\left( h\right) }\left( 1-\theta \right) ^{n-c\left( h\right) }\).

Since our setup ensures that, for every history \(h\in H\), the posterior probability distribution \(p\left( \theta |c\left( h\right) ,n\right) \) will be in the same family of probability distributions as prior \(p\left( \theta \right) \), we can use a conjugate prior and represent the prior by a standard beta distribution with parameters a and b. By denoting the beta distribution as \(\mathrm {B}\left( a,b\right) \), we can express the prior as

\[p\left( \theta \right) =\mathrm {B}\left( a,b\right) =\frac{\theta ^{a-1}\left( 1-\theta \right) ^{b-1}}{\int _{0}^{1}u^{a-1}\left( 1-u\right) ^{b-1}\mathrm {d}u}.\]

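The Bayes rule for a setup with a beta distribution and some history \(h\in H\) can be defined as

\[p\left( \theta |c\left( h\right) ,n\right) =\mathrm {B}\left( a+c\left( h\right) ,b+n-c\left( h\right) \right) .\]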

Notice that this Bayes rule is just another beta distribution with parameters \(a+c\left( h\right) \) and \(b+n-c\left( h\right) \). Armed with the prior and the Bayes Rule, Bayes now has all they need both to assign initial priors in the coin toss simulations and to update them in accordance with conditionalization.
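The conjugate update amounts to adding the observed counts to the two beta parameters. The sketch below illustrates this; the function names are hypothetical, and it relies on the standard fact that the mean of a beta distribution with parameters a and b is a/(a+b).

```python
def update_beta(a: float, b: float, history: list) -> tuple:
    """Return the posterior beta parameters after observing `history`.

    `history` is a list of "heads"/"tails" strings; the posterior is
    B(a + c(h), b + n - c(h)), where c(h) counts the heads in h.
    """
    heads = sum(1 for e in history if e == "heads")
    n = len(history)
    return a + heads, b + n - heads


def beta_mean(a: float, b: float) -> float:
    """Mean of a beta distribution with parameters a and b."""
    return a / (a + b)


# Flat prior B(1, 1), then 3 heads and 6 tails in 9 observed tosses.
a_post, b_post = update_beta(1, 1, ["heads"] * 3 + ["tails"] * 6)
print(a_post, b_post, beta_mean(a_post, b_post))
```

With a strongly biased prior such as B(100, 1), the same nine observations would move the mean only slightly, which illustrates why biased priors matter most in the short run.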

Bayes’s choices can be represented with a choice model \(\mathrm {C_{b}}:=\left\{ {\Omega }, \mathrm {D}, H, \mu , \pi \right\} \), where \(\mathrm {D}:=\left\{ b_{h},b_{t},{\bar{b}}\right\} \) is the set of possible actions, while \(b_{h}\) represents the decision to bet on heads, \(b_{t}\) represents the decision to bet on tails, and \({\bar{b}}\) represents the decision to hold, \({\Omega }\) represents the outcomes of a single coin toss, \(\mu :{\Omega }\times H\rightarrow X\), where \(X:=\left\{ x\in {\mathbb {R}}_{\ge 0}:0\le x\le 1\right\} \) is the conditional probability function which assigns, to every history \(h\in H\), some probability \(q\in \left[ 0,1\right] \) on heads and \(1-q\) on tails, and \(\pi : \mathrm {D}\times {\Omega }\rightarrow {\mathbb {R}}\) is the payoff function which assigns, to every possible action-state combination, a real number representing player’s payoff. The payoff function assigns payoffs to the action-state combinations in the same way as they are represented in Fig. 1, so that

- \(\pi \left( b_{h},heads\right) =t\); \(\pi \left( b_{h},tails\right) =-t\);
- \(\pi \left( b_{t},heads\right) =-\left( 1-t\right) \); \(\pi \left( b_{t},tails\right) =\left( 1-t\right) \);
- \(\pi \left( {\bar{b}},heads\right) =-\varepsilon \); \(\pi \left( {\bar{b}},tails\right) =-\varepsilon \).

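The conditional probability function \(\mu \) is such that, for history \(h=\emptyset \), the probability of heads is

\[\mu \left( heads|h=\emptyset \right) =\int _{0}^{1}\theta \,p\left( \theta \right) \mathrm {d}\theta =\frac{a}{a+b},\]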

while \(\mu \left( tails|h=\emptyset \right) =1-\mu \left( heads|h=\emptyset \right) \).

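For every history \(h\in \mathrm {H}\) the probability of heads is

\[\mu \left( heads|h\right) =\int _{0}^{1}\theta \,p\left( \theta |c\left( h\right) ,n\right) \mathrm {d}\theta =\frac{a+c\left( h\right) }{a+b+n},\]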

while \(\mu \left( tails|h\right) =1-\mu \left( heads|h\right) \).

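The expected payoff from some action \(\mathrm {d}\in \mathrm {D}\) given some history \(h\in H\) is

\[{\mathbb {E}}_{\pi }\left[ \mathrm {d}|h\right] =\mu \left( heads|h\right) \pi \left( \mathrm {d},heads\right) +\mu \left( tails|h\right) \pi \left( \mathrm {d},tails\right) .\]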

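We assume that Bayes is a rational player who seeks to maximise the expected payoff. Thus, for any history \(h\in H\), Bayes always chooses an action \(\mathrm {d}\in \mathrm {D}\), such that

\[{\mathbb {E}}_{\pi }\left[ \mathrm {d}|h\right] \ge {\mathbb {E}}_{\pi }\left[ {\bar{\mathrm {d}}}|h\right] \quad \text {for all } {\bar{\mathrm {d}}}\in \mathrm {D}.\]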

When more than one action satisfies this requirement, Bayes will make a random choice among these actions. Since hold is never a strictly dominant option, we simplified Bayes by supposing that they never choose this option.
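Bayes’s decision rule can be illustrated as follows. This is a sketch under the payoffs stated in the text and the simplification that Bayes never holds; the function names and action labels are assumptions made for illustration.

```python
import random


def expected_payoffs(q: float, t: float) -> dict:
    """Expected payoff of each bet, given probability q of heads and price t."""
    return {
        "bet_heads": q * t + (1 - q) * (-t),
        "bet_tails": q * (-(1 - t)) + (1 - q) * (1 - t),
    }


def bayes_choice(q: float, t: float, rng=random) -> str:
    """Pick a bet with maximal expected payoff, breaking ties at random."""
    ev = expected_payoffs(q, t)
    best = max(ev.values())
    candidates = [action for action, value in ev.items() if value == best]
    return rng.choice(candidates)


print(bayes_choice(q=0.8, t=0.3))  # bet_heads
print(bayes_choice(q=0.2, t=0.3))  # bet_tails
```

Here `q` stands for \(\mu \left( heads|h\right) \), so the same function can serve any player who holds a precise probability for heads.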

3.3.2 The Frequentist

Just as Subjective Bayesians might adopt many different priors for a coin tossing problem, so there are multiple ways that a frequentist might estimate the relative frequencies involved, i.e. the frequencies of heads and tails among the tosses. However, many frequentists would regard estimation using confidence intervals as a reasonable method for our decision problem, in which Frequentist starts out with no initial estimate of what the relative frequency might be and where they want to estimate the general relative frequency for the purpose of guiding their betting behaviour on particular tosses. Additionally, it is this approach that Kyburg and Teng, who are frequentists (in the broad sense that we are using in this article), adopt for their frequentist player.

We explain the technical details of how Frequentist works below, but we shall also provide an informal summary beforehand. Frequentist will estimate that, in the population of coin tosses, the relative frequency of heads is within a confidence interval. Since they know that all the tosses will land heads or tails, that confidence interval also provides them with an estimate of the relative frequency of tails. A “confidence interval” is an estimate that a population frequency is within some range. Let us focus on the long run relative frequency of heads in the coin tosses. This is the relative frequency that a hypothetical infinite series of tosses would approach in the limit as the number of tosses increased. A confidence interval of [0.4, 0.6] for the long run frequency of heads in the population of coin tosses is an estimate that the relative frequency of the coin landing heads is at least 40% of the coin tosses and no more than 60% of them. Frequentist will tentatively believe that the relative frequency for the coin toss population is somewhere in the estimated interval. They will tentatively dismiss values outside this range, such as 0.2 or 0.9, as possible values for the relative frequency. Frequentist’s beliefs are “tentative” in the sense that, with more sample data, they can change the interval to very different values; a confidence interval using one sample for estimation could be [0, 0.5], but the interval using an enlarged sample could be [0.9, 0.99].

The confidence level is not a probability, but instead a value describing the procedure used to estimate the intervals. We stipulate that Frequentist knows that the sampling assumptions for confidence interval testing are satisfied. The confidence level is set via a parameter \(\alpha \), between 0 and 0.5, such that the level is \((1 - \alpha )\). The value of \(\alpha \) is exogenous to the confidence interval methodology, so we simulated how Frequentist will perform with a range of values of \(\alpha \). The confidence level tells us, for a particular sample size, the minimum possible long run relative frequency of correct estimates of a confidence interval of that width using that sample size. For example, if \(\alpha = 0.05\), then the confidence level for the intervals estimated using this method is 0.95, or 95%. The level is only the minimum, because if the population is homogeneous (all tosses land heads or all tosses land tails), then the success rate will be 100%. Therefore, the success rate in the example could be anywhere from 95 to 100%. Once Frequentist has estimated a confidence interval given their existing observations, they will use it to guide their betting behaviour for the individual tosses on which they can bet.\(^{11}\)

We now formulate this belief revision procedure in detail. Frequentist revises their estimate of the coin bias in a way that can be represented with a model \(\mathrm {F}:=\left\{ {\Omega },\mathrm {H},\mathrm {k}_{\alpha },\mathrm {c}\right\} \), where \({\Omega }\) is the set of possible outcomes of a single coin toss, \(\mathrm {H}=\left\{ heads,tails\right\} ^{n}\) is the set of possible histories which can be generated with \(n>0\) coin tosses,\(^{12}\) \(\mathrm {c}:\mathrm {H}\rightarrow {\mathbb {Z}}_{\ge 0}\) is the heads event counting function and for every history \(\mathrm {h}\in \mathrm {H}\), \(\mathrm {c}\left( \mathrm {h}\right) =|\left\{ e_{i}\in \mathrm {h}:e_{i}=heads\right\} |\), and \(\mathrm {k}_{\alpha }:\mathrm {H}\rightarrow \mathrm {P}\left( X\right) \), where \(X:=\left\{ x\in {\mathbb {R}}_{\ge 0}:0\le x\le 1\right\} \), is a function which assigns, to every history \(\mathrm {h}\in \mathrm {H}\), an interval \(\mathrm {k}_{\alpha }\left( \mathrm {h}\right) =\left( k_{l},k_{u}\right) \) with lower bound \(k_{l}\) and upper bound \(k_{u}\ge k_{l}\). Since the game is a sequence of Bernoulli trials that generates a binomial distribution, we can determine Frequentist’s confidence interval by calculating the Clopper-Pearson interval. For any history \(\mathrm {h}\in \mathrm {H}\), the lower and upper boundaries of the Clopper-Pearson interval can be represented as the following beta distribution quantiles

\[k_{l}=\mathrm {B}\left( \frac{\alpha }{2};\mathrm {c}\left( \mathrm {h}\right) ,n-\mathrm {c}\left( \mathrm {h}\right) +1\right) ,\qquad k_{u}=\mathrm {B}\left( 1-\frac{\alpha }{2};\mathrm {c}\left( \mathrm {h}\right) +1,n-\mathrm {c}\left( \mathrm {h}\right) \right) .\]

Thus, after observing a history of n coin tosses \(\mathrm {h}\in \mathrm {H}\) with \(\mathrm {c}\left( \mathrm {h}\right) \ge 0\) heads, if Frequentist adopts a confidence level \(1-\alpha \), they will estimate the actual coin bias to be within an interval \(\mathrm {k}_{\alpha }\left( \mathrm {h}\right) =\left( \mathrm {B}\left( \frac{\alpha }{2};\mathrm {c}\left( \mathrm {h}\right) ,n-\mathrm {c}\left( \mathrm {h}\right) +1\right) ,\mathrm {B}\left( 1-\frac{\alpha }{2};\mathrm {c}\left( \mathrm {h}\right) +1,n-\mathrm {c}\left( \mathrm {h}\right) \right) \right) \), and they will not take into account any value \(k\notin \mathrm {k}_{\alpha }\left( \mathrm {h}\right) \).
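For readers who wish to reproduce such intervals, the following sketch computes Clopper-Pearson bounds using only the Python standard library. It does not use the beta quantile form above; instead it solves the equivalent binomial tail equations by bisection, which is an implementation choice made here for self-containment and not necessarily how the simulation scripts work. All function names are hypothetical.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(g, target: float) -> float:
    """Find p in [0, 1] with g(p) = target, for g increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(100):  # bisection down to floating-point precision
        mid = (lo + hi) / 2
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(c: int, n: int, alpha: float) -> tuple:
    """Clopper-Pearson interval for c heads in n tosses at level 1 - alpha."""
    # Lower bound: the bias p at which P(X >= c) equals alpha / 2.
    lower = 0.0 if c == 0 else _solve_increasing(
        lambda p: 1 - binom_cdf(c - 1, n, p), alpha / 2)
    # Upper bound: the bias p at which P(X <= c) equals alpha / 2.
    upper = 1.0 if c == n else _solve_increasing(
        lambda p: 1 - binom_cdf(c, n, p), 1 - alpha / 2)
    return lower, upper


for alpha in (0.01, 0.05, 0.5):
    print(alpha, clopper_pearson(5, 10, alpha))
```

The loop uses the three values of \(\alpha \) considered in the paper and shows how the interval for 5 heads in 10 tosses narrows as \(\alpha \) increases.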

There is no consensus on how to model decisions with interval-valued beliefs. There are many options in the literature (Resnik, 1987). However, we programmed Frequentist to use what seems to be the decision procedure in Kyburg and Teng (1999), which we also interpreted using other publications by Kyburg, in particular Kyburg (1990, 2003).

Frequentist’s choices can be represented with a choice model \(\mathrm {C_{f}}:=\left\{ {\Omega },\mathrm {D},\mathrm {H},\mathrm {k}_{\alpha },\pi ,\right. \left. \phi \right\} \), where \({\Omega }\) is the set of possible outcomes of a coin toss, \(\mathrm {D}\) is the set of possible actions, \(\mathrm {H}\) is the set of possible histories, \(\mathrm {k}_{\alpha }\) is the function which assigns a Clopper-Pearson interval to every history \(\mathrm {h}\in \mathrm {H}\), \(\pi \) is a payoff function identical to the one defined for Bayes player, and \(\phi :\mathrm {D}\times \mathrm {H}\rightarrow \mathrm {P}\left( {\mathbb {R}}\right) \) is a function which assigns, to every action-history pair \(\left( \mathrm {d},\mathrm {h}\right) \in \mathrm {D}\times \mathrm {H}\), an expected payoff vector \(\phi \left( \mathrm {d},\mathrm {h}\right) =\left( {\mathbb {E}}_{\pi }\left[ \mathrm {d},{\underline{k}}\right] ,\ldots ,{\mathbb {E}}_{\pi }\left[ \mathrm {d},{\overline{k}}\right] \right) \), where \({\underline{k}}=\mathrm {min}\left( \mathrm {k}_{\alpha }\left( \mathrm {h}\right) \right) \), \({\overline{k}}=\mathrm {max}\left( \mathrm {k}_{\alpha }\left( \mathrm {h}\right) \right) \), and \({\mathbb {E}}_{\pi }\left[ \mathrm {d},k\right] =k\pi \left( \mathrm {d},heads\right) +\left( 1-k\right) \pi \left( \mathrm {d},tails\right) \) for every \(k\in \mathrm {k}_{\alpha }\left( \mathrm {h}\right) \).

The choice behaviour of Frequentist can be defined with the interval-dominance principle. Action \(\mathrm {d}\in \mathrm {D}\) interval-dominates action \({\bar{\mathrm {d}}}\in \mathrm {D}\) if and only if, given history \(\mathrm {h}\in \mathrm {H}\), \(\mathrm {min}\left( \phi \left( \mathrm {d},\mathrm {h}\right) \right) >\mathrm {max}\left( \phi \left( {\bar{\mathrm {d}}},\mathrm {h}\right) \right) \). For any pair of actions \(\mathrm {d}\in \mathrm {D}\) and \({\bar{\mathrm {d}}}\in \mathrm {D}\), Frequentist always chooses action \(\mathrm {d}\) if \(\mathrm {d}\) interval-dominates action \({\bar{\mathrm {d}}}\), and is indifferent between \(\mathrm {d}\) and \({\bar{\mathrm {d}}}\) when neither action interval-dominates the other. Reasoning this way almost corresponds extensionally to the frequentist player’s behaviour in Kyburg and Teng (1999), where no general decision rule is provided.\(^{13}\)

We now apply this approach to the coin tossing problem. We define \(\triangleright \) as denoting the interval-dominance of one action over another and \(\circ \) for when neither action interval-dominates the other. For a particular confidence interval and the fixed utilities given in Fig. 1, the actions \(b_{h}\), \(b_{t}\), and \({\bar{b}}\) can stand in the following relations:

- (i) \(b_{h}\) \(\triangleright \) \(b_{t}\) and \(b_{h}\) \(\triangleright \) \({\bar{b}}\);
- (ii) \(b_{t}\) \(\triangleright \) \(b_{h}\) and \(b_{t}\) \(\triangleright \) \({\bar{b}}\);
- (iii) \({\bar{b}}\) \(\triangleright \) \(b_{h}\) and \({\bar{b}}\) \(\triangleright \) \(b_{t}\);
- (iv) \(b_{h}\) \(\triangleright \) \(b_{t}\) and \(b_{h}\) \(\circ \) \({\bar{b}}\);
- (v) \(b_{h}\) \(\circ \) \(b_{t}\) and \(b_{h}\) \(\triangleright \) \({\bar{b}}\);
- (vi) \(b_{t}\) \(\triangleright \) \(b_{h}\) and \(b_{t}\) \(\circ \) \({\bar{b}}\);
- (vii) \(b_{h}\) \(\circ \) \(b_{t}\) and \(b_{h}\) \(\circ \) \({\bar{b}}\).

Frequentist will choose \(b_{h}\) in case (i), \(b_{t}\) in case (ii), and \({\bar{b}}\) in case (iii). They will make random choices between \(b_{h}\) and \({\bar{b}}\) in case (iv), \(b_{h}\) and \(b_{t}\) in case (v), \(b_{t}\) and \({\bar{b}}\) in case (vi), and between all three actions in case (vii). Our decision algorithm for Frequentist in the code embodies this behaviour.\(^{14}\)
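One way to read this rule is that Frequentist chooses at random among the actions that are not interval-dominated by any other action. The sketch below implements that reading under the payoffs stated in the text; it is an interpretation offered for illustration, with hypothetical function names, and it may differ in detail from the authors’ script.

```python
import random

ACTIONS = ("bet_heads", "bet_tails", "hold")  # illustrative labels


def expected_payoff(action: str, k: float, t: float, eps: float) -> float:
    """Expected payoff of an action if the coin bias towards heads were k."""
    if action == "bet_heads":
        return k * t + (1 - k) * (-t)
    if action == "bet_tails":
        return k * (-(1 - t)) + (1 - k) * (1 - t)
    return -eps  # hold


def payoff_range(action, k_l, k_u, t, eps):
    """Min and max expected payoff over the interval [k_l, k_u].

    The expected payoff is linear in k, so the extremes sit at the endpoints.
    """
    ends = [expected_payoff(action, k, t, eps) for k in (k_l, k_u)]
    return min(ends), max(ends)


def undominated_actions(k_l, k_u, t, eps):
    """Actions that no other action interval-dominates."""
    ranges = {a: payoff_range(a, k_l, k_u, t, eps) for a in ACTIONS}
    return [
        a for a in ACTIONS
        if not any(ranges[o][0] > ranges[a][1] for o in ACTIONS if o != a)
    ]


def frequentist_choice(k_l, k_u, t, eps, rng=random):
    """Random choice among the undominated actions."""
    return rng.choice(undominated_actions(k_l, k_u, t, eps))


print(undominated_actions(0.7, 0.9, t=0.5, eps=0.0))  # case (i)
print(undominated_actions(0.3, 0.7, t=0.5, eps=0.0))  # case (vii)
print(undominated_actions(0.3, 0.7, t=0.5, eps=1.0))  # holding is dominated
```

The last call shows how a penalty of 1 forces a bet even when the interval gives no guidance between heads and tails.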

3.3.3 The Williamsonian

Williamsonian is somewhat of a hybrid player. Consequently, after our discussion of the previous two players, there are few new notions involved in their reasoning. Williamson (2010) argues for three fundamental principles about probabilistic reasoning:

1. \(\mathbf {Probabilism}\): Beliefs should be representable as credences satisfying the additive probability calculus.
2. \(\mathbf {Calibration}\): These credences should reflect any relevant knowledge about relative frequencies.
3. \(\mathbf {Equivocation}\): Subject to the constraints from Calibration, the credences should be maximally equivocal among the different possible states of the world.

The principle of Probabilism is a familiar Bayesian idea. For Calibration, Williamson endorses Kyburg’s system of “Evidential Probability” (Wheeler and Williamson, 2011) and this system requires using confidence intervals where (as in our simulations) there is no background knowledge of the relevant conditional probabilities for events (Kyburg and Teng, 2001, p. 264). Consequently, Williamsonian will estimate confidence intervals in the same way as Frequentist.

However, Equivocation and Probabilism entail that Williamsonian will differ from Frequentist in the other parts of their learning procedure. To determine equivocal credences in a systematic manner, Williamsonian uses the “maximum entropy principle”. Williamson gives the full formal connections between entropy maximisation and formal beliefs in Williamson (2010), building on research such as Jaynes (1957). The salient point is that, given a confidence interval inferred at some exogenously determined confidence level \((1 - \alpha )\) and a set of \(m\ge 2\) exhaustive and mutually exclusive states of the world that are consistent with the relative frequencies estimated using that confidence level, a Williamsonian will try to minimise the distance between their probabilities for each state of the world and the value 1/m implied by the Principle of Indifference.

Williamsonian’s belief revision can be represented with a model \(\mathrm {W}:=\left\{ {\Omega },{\Theta },\mathrm {H},\mathrm {p},\mathrm {k}_{\alpha }, \mathrm {c}, p_{w}\right\} \), where \({\Omega }\) is the set of possible outcomes of a single coin toss, \({\Theta }\) is the set of values representing all the possible coin biases, \(\mathrm {H}\) is the set of possible histories that can be generated by \(n>0\) coin tosses, \(\mathrm {c}\) is the heads event counting function, \(\mathrm {p}:{\Theta }\rightarrow \varDelta \left( {\Theta }\right) \) is a function which assigns a uniform probability distribution on \({\Theta }\), \(\mathrm {k}_{\alpha }\) is a function which assigns a Clopper-Pearson interval to every history \(\mathrm {h}\in \mathrm {H}\), and \(p_{w}:\mathrm {H}\rightarrow {X}\), where \(X:=\left\{ x\in {\mathbb {R}}_{\ge 0}:0\le x\le 1\right\} \), is a Williamsonian belief function which assigns, to every history \(\mathrm {h}\in \mathrm {H}\), a belief \(p_{w}\left( \mathrm {h}\right) \), such that

\[p_{w}\left( \mathrm {h}\right) \in \underset{k\in \mathrm {k}_{\alpha }\left( \mathrm {h}\right) }{\mathrm {arg\,min}}\left| k-\int _{0}^{1}\left( \mathrm {p}\left( \theta \right) \theta \right) \mathrm {d}\theta \right| . \tag{11}\]

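Since function \(\mathrm {p}\) assigns a uniform distribution on \({\Theta }\), we have a case where \(\int _{0}^{1}\left( \mathrm {p}\left( \theta \right) \theta \right) \mathrm {d}\theta =1/2\). Thus, we can rewrite condition 11 as

\[p_{w}\left( \mathrm {h}\right) \in \underset{k\in \mathrm {k}_{\alpha }\left( \mathrm {h}\right) }{\mathrm {arg\,min}}\left| k-\frac{1}{2}\right| .\]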

Therefore, in our coin tossing problem, Williamsonian first estimates confidence intervals for heads and tails in general at a confidence level \((1 - \alpha )\). Secondly, subject to this constraint, they set a Bayesian probability. For example, suppose that the Williamsonian has estimated a confidence interval [0.4, 0.6] for heads. This is consistent with assigning a probability of 0.5 for each particular toss landing heads, so Williamsonian makes 0.5 their degree of belief. However, if the interval is [0.1, 0.4], then 0.5 is not consistent with their beliefs about the relative frequencies. Instead, 0.4 is the available value that maximises entropy, and hence Williamsonian makes 0.4 their degree of belief in each particular toss landing heads.
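In the two-outcome case, this procedure reduces to picking the point of the confidence interval that is closest to 0.5. A minimal sketch, with a hypothetical function name:

```python
def williamsonian_belief(k_l: float, k_u: float) -> float:
    """Degree of belief in heads: the point of [k_l, k_u] closest to 0.5."""
    return min(max(0.5, k_l), k_u)


print(williamsonian_belief(0.4, 0.6))  # 0.5 lies inside the interval
print(williamsonian_belief(0.1, 0.4))  # interval lies below 0.5
print(williamsonian_belief(0.6, 0.9))  # interval lies above 0.5
```

The first two calls reproduce the worked examples in the text; the third is the mirror-image case.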

Williamsonian’s choices can be characterised with choice model \(\mathrm {C_{w}}:=\left\{ {\Omega },\mathrm {H},\mathrm {D},\pi ,\right. \left. p_{w}\right\} \), where \({\Omega }\) is the set of coin toss outcomes, \(\mathrm {H}\) is the set of histories, \(\mathrm {D}\) is the set of actions, \(\pi \) is the payoff function identical to that which we defined for Bayes and Frequentist players, and \(p_{w}\) is a Williamsonian belief function. Williamsonian’s expected payoff associated with some action \(\mathrm {d}\in \mathrm {D}\) given some history \(\mathrm {h}\in \mathrm {H}\) is

\[{\mathbb {E}}_{\pi }\left[ \mathrm {d}|\mathrm {h}\right] =p_{w}\left( \mathrm {h}\right) \pi \left( \mathrm {d},heads\right) +\left( 1-p_{w}\left( \mathrm {h}\right) \right) \pi \left( \mathrm {d},tails\right) .\]

We assume that Williamsonian is an expected payoff maximizer and thus, for any history \(\mathrm {h}\in \mathrm {H}\), always chooses action \(\mathrm {d}\in \mathrm {D}\), such that \({\mathbb {E}}_{\pi }\left[ \mathrm {d}|\mathrm {h}\right] \ge {\mathbb {E}}_{\pi }\left[ {\bar{\mathrm {d}}}|\mathrm {h}\right] \), for all \({\bar{\mathrm {d}}}\in \mathrm {D}\).

Despite the similarities in the learning procedures for Williamsonian and Frequentist, the principle of \(\mathbf {Equivocation}\) leaves Williamsonian in a very different place, because Williamsonian has a precise probability when they face each next coin toss. Therefore, Williamsonian makes decisions, for a particular set of credences, in an identical way to Bayes.

3.3.4 Sample

Our final player, Sample, serves two primary functions. Firstly, they can be interpreted as Frequentist in a situation where they are forced to make precise estimates of the relative frequencies of heads. In that case, Frequentist might estimate the general relative frequencies of heads to be the limit as \(\alpha \rightarrow 1\), i.e. as the confidence level \((1 - \alpha )\) falls and the interval narrows around the sample frequency. Thus, Sample may be interpreted as estimating that the long run frequency of heads is just the relative frequency in the total sample that they have observed so far. These estimates then guide Sample’s bets on each given toss. Sample also thereby has estimates for tails, given their knowledge of the coin tossing set-up. Sample’s second function is as a benchmark: if a statistical methodology’s performance is inferior to just estimating a precise probability using the sample frequency, then this is a bad mark against that methodology.

Conceptually, there is nothing novel in Sample’s belief revision procedure. They can be interpreted in multiple ways: as a version of Frequentist with precise estimates of relative frequencies, as a quasi-Bayesian reasoner (similar to the “\(\lambda = 0\)” agent in Carnap, 1952) and so on. The divergence with Frequentist is mainly in how different sample sizes are treated: given a 0.5 sample frequency of heads and a sample of 4, Sample is certain that the limiting relative frequency of heads in the tosses is 0.5, whereas Frequentist has a very wide confidence interval around 0.5; given a 0.5 sample frequency of heads and a sample size of 1000, Sample’s beliefs are the same as when they had just 4 observations, whereas Frequentist has a very narrow confidence interval. Even this divergence can be reduced if we interpret Sample as a frequentist reasoner who uses the sample frequency as a decision-making tool, without interpreting the concomitant probability distributions as degrees of belief. This type of reasoner is briefly discussed by Williamson (2007). The divergence with Bayesianism is that Sample has no initial priors, and thus does not update by conditionalization. While Sample is not a true Bayesian reasoner, in our simulations they will always have some sample data prior to making decisions, and therefore there is no need to specify the beliefs of Sample when they lack sample data.

For the choice behaviour of Sample, we model them as possessing a precise probability (interpreted either as a relative frequency estimate or as a non-Bayesian credence) for each possible outcome of each toss. Given this probability, it is natural for Sample to use Bayesian decision theory, because even many frequentists would say that such choices would be appropriate if one legitimately possessed the relevant relative frequency estimates, and Sample views themselves as having this information. Thus, for given credences, Sample makes decisions in the same way as Bayes. This common decision algorithm has the added advantage that Sample only differs from Bayes in their learning methods, which helps comparisons between the two players.
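Sample’s learning rule is simple enough to state in a single function. The sketch below uses a hypothetical function name and shows the point made above, namely that Sample’s estimate ignores sample size.

```python
def sample_prob_heads(heads: int, n: int) -> float:
    """Sample's estimate: the observed relative frequency of heads."""
    if n == 0:
        # Sample always has data before betting in the simulations,
        # so the empty case is left undefined here.
        raise ValueError("Sample needs at least one observation")
    return heads / n


# The same estimate results from 4 tosses and from 1000 tosses.
print(sample_prob_heads(2, 4), sample_prob_heads(500, 1000))
```

Given this estimate, Sample can use the same expected payoff maximisation as Bayes.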

3.4 Coding and simulation architecture

Starting from the common decision problem and particular settings specified for each player in Sects. 3.2 and 3.3, we coded six different Python 3 scripts (version 3.8.1), based on the statsmodels econometric and statistical library (Seabold and Perktold, 2010), to perform the simulations. The first two scripts generated coin tosses and ticket prices. These data were subsequently employed as input for the other four scripts. The output of the latter scripts gave us the cumulative monetary mean profits for each player, like those shown below in Tables 1, 2, 3, 4, 5 and 6 and, focusing on the Frequentist, the statistics of the conditions met by this player (see end of Sect. 3.3.2) in the simulations, as outlined in Tables 7 and 8. All simulations were performed on an Ubuntu Linux server powered by an 8-core (16-thread) Intel Xeon (Skylake type) processor @ 2.2 GHz. High performance data multiprocessing meant that the overall computational time was approximately 1.5 hours. The simulation results were stored in 310,000 different txt files, totalling about 3.5 gigabytes. The code is available from the authors upon request.

3.5 Variations

The games each consisted of a number of observations of coin tosses, which were used by the players to update their initial belief state. Once these beliefs were updated, players used their decision algorithms to choose an action. Players retained information from game-to-game within a particular simulation, but they did not retain any information from one simulation to another.

We made two sets of simulations. Each set consisted of 1000 simulations. In the first set of simulations, there were 1000 games. In each game, players observed 9 tosses and then bet on the 10th toss. In the initial game, players had no prior observations. They updated using their 9 observations and made a decision on how (and whether) to bet on the result of the 10th toss. After the 10th toss, players updated on the 10th toss. In subsequent games, players retained knowledge of their past observations of tosses. Thus, for example, in a particular simulation, players had 500 observations after 50 games. In total, there were 1000 opportunities to bet per simulation, corresponding to the 1000 games.

In the second set of simulations, there were also 1000 games in each of the 1000 simulations. In these simulations, players had just 4 observations in each game, and they bet on the result of the 5th toss. The players’ updating was otherwise identical to the first set of simulations. Therefore, after 50 games, players had 250 observations in a particular simulation. In total, there were 1000 opportunities to bet per simulation. We conducted this second set of simulations to investigate how players performed with very small samples. In each simulation, every player observed the same sequence of tosses.
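The structure of a single simulation can be sketched as follows for one player, here a Bayesian with a beta prior. This is an illustrative reconstruction from the description above, not the authors’ code: the function name, the use of one seeded generator for both tosses and ticket prices, and the handling of ties are all assumptions.

```python
import random


def run_simulation(bias, n_games=1000, obs_per_game=9, a=1.0, b=1.0, seed=0):
    """Play one simulation and return (mean profit per game, tosses seen)."""
    rng = random.Random(seed)
    heads = tosses = 0
    profit = 0.0
    for _ in range(n_games):
        # Observe the tosses that precede the bet in this game.
        for _ in range(obs_per_game):
            heads += 1 if rng.random() < bias else 0
            tosses += 1
        t = rng.random()  # ticket price for this game
        q = (a + heads) / (a + b + tosses)  # posterior mean for heads
        # Expected payoffs under the payoffs stated in the text.
        ev_heads = q * t - (1 - q) * t
        ev_tails = (1 - q) * (1 - t) - q * (1 - t)
        if ev_heads == ev_tails:
            bet_heads = rng.random() < 0.5  # random tie-break
        else:
            bet_heads = ev_heads > ev_tails
        landed_heads = rng.random() < bias  # the toss that is bet on
        if bet_heads:
            profit += t if landed_heads else -t
        else:
            profit += (1 - t) if not landed_heads else -(1 - t)
        # The toss that was bet on is also added to the observations.
        heads += 1 if landed_heads else 0
        tosses += 1
    return profit / n_games, tosses


print(run_simulation(bias=0.7, n_games=50, obs_per_game=9)[1])  # 500
print(run_simulation(bias=0.7, n_games=50, obs_per_game=4)[1])  # 250
```

The two calls reproduce the observation counts mentioned in the text: 500 after 50 games in the first set and 250 after 50 games in the second.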

We used a randomly generated ticket price for each of the 1000 games in a simulation, giving 1000 ticket prices per simulation. We used different ticket prices for each simulation. However, for a given simulation, every player faced the same ticket prices. We recorded the overall mean payoffs and standard deviations for each player in each simulation. We also recorded the overall mean payoffs and standard deviations at different points during the simulations, as we show in the tables below. Finally, we recorded how often Frequentist chose to bet and how often they chose to withhold from betting.

The sample sizes for players’ observations (9 new observations per game for the first set of simulations, 4 for the second set) seemed to offer a reasonable balance between enabling us to look at short run performances and yet also giving players some data to use. No player will update their beliefs in a radical way in response to just a few tosses. Therefore, with extremely small samples and just a few games, we would mainly be comparing the players’ initial choice models, which is interesting in some respects, but not very informative about the differences in the players’ statistical learning procedures. On the other hand, the players will almost always make identical decisions with very large samples, due to washing-out of priors and narrowing of confidence intervals. Our choice of sample sizes is intended to find a reasonable medium between comparisons with extremely large and extremely small samples.

In addition to varying the sample sizes, we also varied the coin bias across the values 0.1, 0.3, 0.5, 0.7, and 0.9. For each simulation and each particular coin bias, we randomly generated a single history of coin tosses and simulated each player’s behaviour with that history. Our choice to keep the histories fixed ensured that any differences in performance between players were not due to random variation in the coin toss histories that they faced.
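The shared-history design described above can be sketched as follows. This is a minimal illustration, not the simulation code used in the study: the function name `toss_history`, the seed, the history length, and the coding of heads as 1 are all assumptions made for the example, and "coin bias" is read here as the probability of heads.

```python
import random

def toss_history(bias, n_tosses, seed):
    """Return a list of 0/1 tosses (1 = heads), assuming P(heads) = bias."""
    rng = random.Random(seed)  # a private generator keeps the history reproducible
    return [1 if rng.random() < bias else 0 for _ in range(n_tosses)]

# One history per (simulation, coin bias); every player reads the same list,
# so differences in payoff cannot come from differences in the tosses seen.
history = toss_history(bias=0.9, n_tosses=50, seed=0)
players = ["Bayes", "Frequentist", "Williamsonian", "Sample"]
views = {name: history for name in players}
```

Generating the history once and handing the same object to each player is what removes toss-level random variation from the comparison between players.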

We also varied the players’ exogenous parameters: the parameters in their belief and decision algorithms that are not set by the methodologies. For Bayes, we varied the beta distribution parameters (a, b) across (100, 1), (1, 1), and (1, 100). For Frequentist and Williamsonian, we varied \(\alpha \) across 0.01, 0.05, and 0.5. Finally, following the suggestion of Kyburg and Teng (1999), we varied the penalty parameter \(\epsilon \) for Frequentist, with the intention of varying the willingness of Frequentist to place bets rather than hold. For Sample, there were no parameters to vary.

Each simulation’s results were independent of every other simulation, and their basic stochastic parameters were the same for a given setting, e.g. a given coin bias. Consequently, our simulations might be regarded as independent and identically distributed draws from the overall population of possible simulations under these settings. In the next section, we use the standard error under that interpretation and a 95% confidence interval. However, we strongly stress our study is descriptive rather than inferential: we have provided some relevant descriptive statistics, but proper testing of hypotheses regarding our simulations is a project for further research. Like our simulation’s code, our datasets are available upon request.
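As a rough sketch of the descriptive statistics mentioned above, the mean, standard error, and 95% interval over a set of per-simulation payoffs could be computed as below. The function name `mean_with_ci`, the normal-approximation multiplier 1.96, and the toy payoffs are assumptions for illustration only.

```python
import math
import statistics

def mean_with_ci(payoffs, z=1.96):
    """Mean, standard error, and normal-approximation interval for i.i.d. payoffs."""
    n = len(payoffs)
    mean = statistics.fmean(payoffs)
    se = statistics.stdev(payoffs) / math.sqrt(n)  # sample SD divided by sqrt(n)
    return mean, se, (mean - z * se, mean + z * se)

# Toy data standing in for one player's mean payoff in each simulation.
mean, se, (lower, upper) = mean_with_ci([1.0, 2.0, 3.0, 4.0, 5.0])
```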

4 Results and analysis

We report our results in Tables 1, 2, 3, 4, 5, 6, 7 and 8. We begin with some comparative points, always using the standard errors reported in the tables. To evaluate the players in relation to the benchmark Sample player, we compared the best performance of a player in the confidence interval for that player’s results with the worst performance of Sample in its confidence interval. Using this analysis, if a player’s best value fails to exceed Sample’s worst value for a particular number of games (on average over the 1000 simulations), then we have starred the cell in Tables 2 and 4. In starless cells, the player’s best value exceeds Sample’s worst value, so the player passes the benchmark according to the interval analysis. This interval analysis acknowledges the random errors involved in such an assessment, while also indicating the descriptive basis for the evaluations that we make in this section.
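One possible reading of this interval comparison is sketched below. The function name `is_starred`, the multiplier 1.96, the treatment of an exact tie as a starred cell, and the numbers in the usage lines are all assumptions for illustration.

```python
def is_starred(player_mean, player_se, sample_mean, sample_se, z=1.96):
    """True when the top of the player's interval does not exceed the bottom of Sample's."""
    player_best = player_mean + z * player_se    # upper end of the player's interval
    sample_worst = sample_mean - z * sample_se   # lower end of Sample's interval
    return player_best <= sample_worst

# Hypothetical cells: overlapping intervals pass, clearly separated ones are starred.
close_call = is_starred(0.10, 0.01, 0.11, 0.01)
clear_gap = is_starred(0.02, 0.01, 0.11, 0.01)
```

Because the comparison uses the player's best case against Sample's worst case, it is deliberately lenient: a cell is starred only when the shortfall is larger than the two intervals can account for.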

When Bayes sets a B(1,1) prior (Footnote 15) and Frequentist sets a high value of \(\alpha \), we found that they both reliably either matched or exceeded the performance of the Sample player: see Tables 2 and 4 for B(1,1). In this respect, our results differ from those of Kyburg and Teng (1999). The very similar performance is unsurprising in a large number of games, where these players’ performances were more or less identical. As sample sizes become large, Bayes’s posterior probabilities become very close to the cumulative observed sample frequency, while Frequentist’s confidence interval estimates become very narrow around that frequency. However, it is surprising with 10 games, where players had fewer than 100 observations to make their decisions in each game. Bayes with a B(1,1) prior and Frequentist with \(\alpha = 0.5\) behave differently, but neither performed detectably better across all the coin biases. The same was true for Williamsonian with \(\alpha = 0.5\). Overall, for each of the three statistical methodologies, there were player settings under which they passed the benchmark that we set.

We did find inferior performance relative to Sample when Bayes’s beta prior was biased towards either heads, B(100,1), or tails, B(1,100). Indeed, in Tables 1 and 3, we can see that this player setting is the only one under which any player made a loss in the short term. It is not surprising that, with a biased prior that differs from the coin bias, Bayes would perform badly. It is perhaps more surprising that these biases never yielded detectable special advantages relative to the flat B(1,1) prior. Across the 1000 simulations as a whole, there was no simulation setting, in either the short run or the long run, where Bayes fell below Sample with a B(1,1) prior but not with a biased prior. Consequently, a biased prior created the risk of some very bad performances for Bayes, without identifiable benefits relative to flat priors. Furthermore, these costs of biased priors were persistent: even with thousands of observations over many games, Bayes with a badly inappropriate prior was still performing badly. While a washing-out of priors would occur in the long run, it can take a long time in this sort of decision problem.

The absence of special benefits from a biased prior can be explained by the “flatness” of the B(1,1) Bayesian prior. Although this prior is equivocal and thus would generally have some advantages for a 0.5 coin bias, it is not this equivocation that is the key to its success in our decision problem. Instead, the advantages come from the speed with which Bayes revises their beliefs using this prior. Suppose that the coin is biased towards heads, such that its frequency of heads is 0.9. Such a bias will tend to produce samples that are themselves usually biased towards heads, and the Bayesian will quickly update on this sample data if they have a B(1,1) prior, thus quickly eliminating any special advantages that the B(100,1) prior will have from being biased towards heads. Some other equivocal priors, e.g. a B(100,100) prior, would not perform so well when observing the tosses of a biased coin.
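The speed of revision under different beta priors can be illustrated with the standard conjugate update, in which a B(a, b) prior combined with h heads and t tails gives a B(a + h, b + t) posterior with mean (a + h)/(a + b + h + t). The sketch below assumes that Bayes's probability for heads on the next toss is this posterior mean; the function name and the sample of 45 heads in 50 tosses are hypothetical.

```python
def posterior_mean(a, b, heads, tails):
    """Mean of the Beta(a + heads, b + tails) posterior for P(heads)."""
    return (a + heads) / (a + b + heads + tails)

heads, tails = 45, 5  # hypothetical sample from a coin with frequency of heads 0.9
priors = [(1, 1), (100, 1), (1, 100), (100, 100)]
means = {prior: posterior_mean(*prior, heads, tails) for prior in priors}

for prior, value in means.items():
    print(prior, round(value, 3))
```

In this example the flat prior is already close to the observed frequency of 0.9 (46/52, roughly 0.88), while B(100,100) has moved only to 0.58 and B(1,100) is still near 0.30, since the prior parameters act like a large stock of earlier pseudo-observations.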

In terms of common points, all players tended to do better when the coin bias was set further away from 0.5. This result is unsurprising, because the chance of a very unrepresentative sample is greatest when the coin is fair. In contrast, if the coin bias is 0.1 or 0.9, then the randomisation process in the Python code will generate very unrepresentative samples at a lower rate. Kyburg and Teng found the same result (Kyburg and Teng, 1999, pp. 362–363).
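One way to make this point precise, offered here as an illustrative gloss rather than as part of the reported analysis, is through the variance of the sample frequency \(\hat{p}\) of heads in \(n\) independent tosses of a coin with bias \(p\) (the symbols \(\hat{p}\), \(n\), and \(p\) are notation introduced for this example):

\[
\operatorname{Var}(\hat{p}) = \frac{p(1-p)}{n}.
\]

This is largest at \(p = 0.5\). With \(n = 9\), for example, it is \(0.25/9 \approx 0.028\) when \(p = 0.5\), but \(0.09/9 = 0.01\) when \(p = 0.1\) or \(p = 0.9\).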

We now turn to particular players. As previously noted, Bayes did best with a B(1,1) beta prior. While this result might seem to be favourable to Objective Bayesian approaches, according to which such a prior would be mandatory, note that Subjective Bayesians regard flat priors as permissible, provided that they are coherent with the total credence distribution. They just deny that they are rationally required. Furthermore, as a matter of contingent fact, most real-world Subjective Bayesian statisticians would choose such a prior if faced with the decision we describe. Since Bayesian reasoning with such a “flat” prior is similar to maximum likelihood estimation, it is also unsurprising that Bayes with a B(1,1) beta prior would at least match the performance of Sample.

We now consider the Williamsonian. They differ from the players studied in Kyburg and Teng (1999). Although Williamson’s theory of statistics is not as prominent as the Bayesian or frequentist methodologies, it provided us with the basis for an intriguing “hybrid” player (Footnote 16). Notably, Williamsonian matched the performance of Bayes and Frequentist. In some cases, with low values of \(\alpha \), the Williamsonian failed to match the performance of Sample in the very short run. However, this varied with different coin bias settings, so it was not a consistent pattern. Much more investigation is needed to infer anything definite about the best values of \(\alpha \) for Williamsonian’s performance. The problem might be that low values of \(\alpha \) increase the importance of the Equivocation norm, because the resulting wider confidence intervals detect coin biases more slowly. If the coin bias is 0.5, then this behaviour is unproblematic: see Tables 2 and 4. However, when the coin is biased, the Equivocation norm can repeatedly drag Williamsonian’s credences towards 0.5. Our results are consistent with Williamson’s principal justification for the Equivocation norm: its advantages in minimising loss for the worst-case scenarios (Williamson, 2007). For combinatorial reasons, 0.5 is the least favourable stochastic condition for binomial sampling, because it maximises the number of unrepresentative samples.

At least in our simulations, Williamsonian was able to “have their cake and eat it” by using a (maximally) high value of \(\alpha = 0.5\). They were still able to match or exceed the performance of Sample when the coin bias was 0.5, in both the short and long run. Yet the greater receptiveness to sample data when \(\alpha = 0.5\) meant that they were also able to pass our benchmark with other coin biases. Nonetheless, one cannot extrapolate to all decision problems and say that a high value of \(\alpha \) will always be better for Williamsonian. In some decision problems, perhaps with rare extreme risks, being firmly equivocal could be beneficial.
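The interaction between \(\alpha \) and the Equivocation norm can be sketched as follows. This is an illustration under stated assumptions, not the study's implementation: it uses a normal-approximation (Wald) interval, which may differ from the interval estimator actually used, and it reads the Equivocation norm as selecting the point of the interval closest to 0.5. The function names and the sample of 7 heads in 9 tosses are hypothetical.

```python
import math
from statistics import NormalDist

def wald_interval(heads, n, alpha):
    """Normal-approximation interval for P(heads), clipped to [0, 1] (an assumed estimator)."""
    p_hat = heads / n
    z = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)

def equivocate(lower, upper):
    """Point of [lower, upper] closest to 0.5 (assumed reading of the Equivocation norm)."""
    return min(max(0.5, lower), upper)

credences = {}
for alpha in (0.01, 0.05, 0.5):
    lower, upper = wald_interval(heads=7, n=9, alpha=alpha)
    credences[alpha] = equivocate(lower, upper)
    print(alpha, round(lower, 3), round(upper, 3), round(credences[alpha], 3))
```

With 7 heads in 9 tosses, the interval for \(\alpha = 0.01\) still contains 0.5, so the sketch returns a credence of exactly 0.5, whereas for \(\alpha = 0.5\) the narrower interval excludes 0.5 and the credence is the lower bound, roughly 0.68.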

Regarding biased priors, Williamsonian excludes these via the Equivocation norm, and thus they do not face the problem of having such a prior in this decision problem. Consequently, even the worst performances of Williamsonian were not as bad as the worst performances of Bayes with a prior that was biased in the wrong direction. For instance, see Table 4, for coin bias = 0.1 or 0.9, and runs of 10 games, where Williamsonian with \(\alpha = 0.01\) does far better than Bayes with B(100,1).

Finally, we consider Frequentist. For \(\epsilon \), we firstly found that this parameter achieved its intended function of increasing Frequentist’s propensity to bet, as we report in Tables 7 and 8. On the other hand, \(\epsilon \) does not seem to reliably affect Frequentist’s performance, as we see in Table 6. As for \(\alpha \), we found the same result as Kyburg and Teng (1999). In the very short run, there were some suggestive but indefinite signs that very low values of \(\alpha \)—particularly \(\alpha = 0.01\)—could lead to a poor performance relative to Sample, but more research is needed; the differences were not as clear as with Bayes using a biased prior. For \(\alpha = 0.05\) and \(\alpha = 0.5\), there were at least some occasions where Frequentist matched Sample. When \(\alpha = 0.5\), this above-benchmark performance was consistent.

Why might low values of \(\alpha \) hurt the performance of Frequentist? This question was not a focus of our study and thus it would benefit from more comprehensive analysis. Nonetheless, one notable point from Tables 7 and 8 is that a lower value of \(\alpha \) made an identifiable difference to the rate of using randomisation. Recall that, in Frequentist’s mixed strategy decision rule, they randomise between buying a ticket, buying a reversed ticket, or withholding from betting in situation (vii), and between buying a ticket or buying a reversed ticket in situation (v), as we detail in Sect. 3.3.2 (Footnote 17). In situation (v), neither betting on heads nor betting on tails interval dominates the other given Frequentist’s estimated confidence interval. Thus, they randomise between these two bets using an additional fair coin. In situation (vii), none of their three possible actions is interval dominated, and therefore Frequentist randomises between all three. Both these randomisation procedures mean that Frequentist is acting equivocally between heads and tails. Consequently, if \(\alpha \) is low, then they are not making much use of sample data indicating bias, but if \(\alpha \) is high, then Frequentist quickly detects bias and exceeds the minimum performance of Sample even in the very short run, as in Tables 2 and 4. It does not follow that a high value of \(\alpha \) is better for decision problems in general. Instead, our results suggest that high values are better for this kind of decision problem, because they make prompter use of the sample information, and hence help avoid acting equivocally when the coin is biased.

5 Discussion

Although our simulations did not detect any reason to use one statistical methodology rather than another, it does not follow that the choice is underdetermined. Firstly, Bayesians might note that, unlike Bayesian decision theory, Frequentist’s decision algorithm has no axiomatic derivation; Kyburg acknowledges that it is produced by unsystematic intuitive considerations (Kyburg, 2003, p. 148). We were able to use these intuitive considerations to infer how Frequentist should extend the algorithm from Kyburg and Teng (1999) to cases where there was a non-zero penalty for holding, but it would be an extreme exaggeration to call it a decision “theory”. More generally, there is no agreement on how to make decisions with interval-valued beliefs (Footnote 18). Secondly, frequentists might argue that there are broader aspects like long run decisions, social decisions, or epistemological points that we do not address in our simulations, which only involve individual decision-making in the short run. Thirdly, Williamsonians also think that their approach has long run virtues that favour their view for decision-making (Williamson, 2007). Our results suggest that either (1) we need a different sort of challenge for comparing their short run performance or (2) the choice between them, even when we focus on the relatively narrow issue of making good decisions, must be approached via questions of systematic coherence, the social consequences of their implementation in science, asymptotic strengths or weaknesses, results of debates in formal learning theory (Schulte, 2018), and other angles.