Abstract

This work introduces a refinement of the Parsimonious Model for fitting a Gaussian Mixture. The improvement is based on clustering the covariance matrices involved according to a criterion, such as sharing Principal Directions. This and other similarity criteria that arise from the spectral decomposition of a matrix are the bases of the Parsimonious Model. We show that such groupings of covariance matrices can be achieved through simple modifications of the CEM (Classification Expectation Maximization) algorithm. Our approach leads us to propose Gaussian Mixture Models for model-based clustering and discriminant analysis, in which covariance matrices are clustered according to a parsimonious criterion, creating intermediate steps between the fourteen widely known parsimonious models. The added versatility not only allows us to obtain models with fewer parameters for fitting the data, but also provides greater interpretability. We show its usefulness for model-based clustering and discriminant analysis, providing algorithms to find approximate solutions verifying suitable size, shape and orientation constraints, and applying them to both simulated and real data examples.

1 Introduction

In this paper we introduce methodological applications arising from the cluster analysis of covariance matrices. Throughout, we will show that appropriate clustering criteria on these objects provide useful tools in the analysis of classic problems in Multivariate Analysis. The chosen framework is that of multivariate classification under a Gaussian Mixture Model, a setting where a suitable reduction of the involved parameters is a fundamental goal leading to the Parsimonious Model. We focus on this hierarchized model, designed to explain data with a minimum number of parameters, by introducing intermediate categories associated with clusters of covariance matrices.

Gaussian Mixture Model approaches to discriminant and cluster analysis are well-established and powerful tools in multivariate statistics. For a fixed number K, both methods aim to fit K multivariate Gaussian distributed components to a data set in \({\mathbb {R}}^d\), the key difference being whether the labels providing the source group of the data are known (supervised classification) or unknown (unsupervised classification). In the supervised problem, we handle a data set with N observations \(y_1,\ldots ,y_N\) in \({\mathbb {R}}^d\) and associated labels \(z_{ {i},{ {k}}}, {i}=1,\ldots ,N\), \({ {k}}=1,\ldots , {K}\), where \(z_{ {i},{ {k}}}=1\) if the observation \(y_{ {i}}\) belongs to the group k and 0 otherwise. Denoting by \(\phi (\cdot \vert \mu ,\Sigma )\) the density of a multivariate Gaussian distribution on \({\mathbb {R}}^d\) with mean \(\mu \) and covariance matrix \(\Sigma \), we seek to maximize the complete log-likelihood function

$$\begin{aligned} CL\bigl (\pmb {\pi },\pmb {\mu },\pmb {\Sigma }\big \vert y_1,\ldots ,y_N,z_{1,1},\ldots ,z_{N,K}\bigr ) = \sum _{i=1}^N \sum _{k=1}^K z_{i,k} \log \Bigl (\pi _k \phi \bigl (y_i\vert \mu _k,\Sigma _k\bigr )\Bigr ) \end{aligned}$$(1)

with respect to the weights \(\pmb {\pi }=(\pi _1,\ldots ,\pi _{ {K}})\) with \(0 \le \pi _{ {k}}\le 1, \ \sum _{ {k}=1}^{ {K}} \pi _{ {k}}=1\), the means \(\pmb {\mu }=(\mu _1,\ldots ,\mu _{ {K}})\) and the covariance matrices \(\pmb {\Sigma }=(\Sigma _1,\ldots ,\Sigma _{ {K}})\). In the unsupervised problem the labels \(z_{ {i},{ {k}}}\) are unknown, and fitting the model involves the maximization of the log-likelihood function

$$\begin{aligned} L\bigl (\pmb {\pi },\pmb {\mu },\pmb {\Sigma }\big \vert y_1,\ldots ,y_N\bigr ) = \sum _{i=1}^N \log \Bigl (\sum _{k=1}^K \pi _k \phi \bigl (y_i\vert \mu _k,\Sigma _k\bigr )\Bigr ) \end{aligned}$$(2)

with respect to the same parameters. This maximization is more complex, and it is usually performed via the EM algorithm (Dempster et al. 1977), which iterates the following two steps: the E step, which consists in computing the expected values of the unobserved variables \(z_{ {i},{ {k}}}\) given the current parameters, and the M step, in which we look for the parameters maximizing the complete log-likelihood (1) for the values \(z_{ {i},{ {k}}}\) computed in the E step. Therefore, both model-based techniques require the maximization of (1), for which optimal values of the weights and the means are easily computed:

$$\begin{aligned} n_k = \sum _{i=1}^N z_{i,k}, \qquad \pi _k = \frac{n_k}{N}, \qquad \mu _k = \frac{1}{n_k}\sum _{i=1}^N z_{i,k}\, y_i , \qquad k=1,\ldots ,K. \end{aligned}$$(3)

With these optimal values, if we denote \(S_{ {k}}=(1/n_{ {k}})\sum _{{ {i}}=1}^N z_{ {i},{ {k}}} (y_{ {i}}-\mu _{ {k}})(y_{ {i}}-\mu _{ {k}})^T\), the problem of maximizing (1) with respect to \(\Sigma _1,\ldots ,\Sigma _{ {K}}\) is equivalent to the problem of maximizing

$$\begin{aligned} (\Sigma _1,\ldots ,\Sigma _K) \longmapsto \sum _{k=1}^K \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma _k\bigr )\Bigr ) , \end{aligned}$$(4)

where \( W_d( \cdot \vert n_{ {k}},\Sigma _{ {k}})\) is the d-dimensional Wishart distribution with parameters \(n_{ {k}},\Sigma _{ {k}}\). Even for moderate dimension d, the large number of parameters involved relative to the size of the data set can result in poor behavior of standard unrestricted methods. In order to improve the solutions, regularization techniques are often invoked. In particular, many authors have proposed estimating the maximum likelihood parameters under some additional constraints on the covariance matrices \(\Sigma _1,\ldots ,\Sigma _{ {K}}\), which leads us to solve the maximization of (4) under these constraints. Among these proposals, a prominent place is occupied by the so-called Parsimonious Model, a broad set of hierarchized constraints capable of adapting to conceptual situations that may occur in practice.
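
The closed-form quantities entering this maximization can be sketched in a few lines of NumPy. This is an illustrative sketch only: the function name `weights_means_scatters` and the array layout (rows of `y` are observations, columns of `z` are groups, rows of `z` sum to one) are assumptions made for the example, not part of the original text.

```python
import numpy as np

def weights_means_scatters(y, z):
    """Closed-form weights, means and scatter matrices S_k given labels z.

    y: (N, d) data matrix; z: (N, K) hard (0/1) or soft labels.
    The name and array layout are illustrative assumptions.
    """
    N, d = y.shape
    K = z.shape[1]
    n = z.sum(axis=0)                    # n_k = sum_i z_ik
    pi = n / N                           # pi_k = n_k / N
    mu = (z.T @ y) / n[:, None]          # mu_k = (1/n_k) sum_i z_ik y_i
    S = np.empty((K, d, d))
    for k in range(K):
        r = y - mu[k]                    # residuals with respect to mu_k
        S[k] = (z[:, k, None] * r).T @ r / n[k]
    return n, pi, mu, S
```

The same function serves the supervised case (hard labels) and the M step of EM (posterior probabilities as soft labels), since both only require the weighted sums above.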

A common practice in multivariate statistics consists in assuming that covariance matrices share a common part of their structure. For example, if \(\Sigma _1=\ldots =\Sigma _{ {K}}=I_d\), the clustering method described in (2) reduces to k-means. If we assume common covariance matrices \(\Sigma _1=\ldots =\Sigma _{ {K}}=\Sigma \), the procedure coincides with linear discriminant analysis (LDA) in the supervised case (1), and with the method proposed in Friedman and Rubin (1967) in the unsupervised case (2). The general theory organizing these relationships between covariance matrices is based on the spectral decomposition, beginning with the analysis of Common Principal Components (Flury 1984, 1988). In the discriminant analysis setting, the use of the spectral decomposition was first proposed in Flury et al. (1994), and in the clustering setting in Banfield and Raftery (1993). The term "Parsimonious model" and the fourteen levels given in Table 1 were introduced in Celeux and Govaert (1995) for the clustering setting and later, in Bensmail and Celeux (1996), for the discriminant setup.

Given a positive definite covariance matrix \(\Sigma _{ {k}}\), the spectral decomposition of reference is

$$\begin{aligned} \Sigma _k = \gamma _k \beta _k \Lambda _k \beta _k^T , \end{aligned}$$

where \(\gamma _{ {k}}={\text {det}}(\Sigma _{ {k}})^{1/d}> 0\) governs the size of the groups, \(\Lambda _{ {k}}\) is a diagonal matrix with positive entries and determinant equal to 1 that controls the shape, and \(\beta _{ {k}}\) is an orthogonal matrix that controls the orientation. Given K covariance matrices \(\Sigma _1,\ldots ,\Sigma _{ {K}}\), the spectral decomposition makes it possible to establish the fourteen different parsimonious levels in Table 1, according to whether the parameters associated with size, shape and orientation are allowed to differ between groups. To fit a Gaussian Mixture Model under a parsimonious level \({\mathscr {M}}\) in Table 1, we must face the maximization of (4) under the parsimonious restriction. That is, we should find

$$\begin{aligned} \hat{\pmb \Sigma } = \underset{\pmb \Sigma \in {\mathscr {M}}}{{\text {argmax }}} \sum _{k=1}^K \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma _k\bigr )\Bigr ) , \end{aligned}$$(5)

where we say that \( {\pmb \Sigma }=(\Sigma _1,\ldots ,\Sigma _{ {K}})\in {\mathscr {M}}\) if the K covariance matrices verify the level. We should remark that the Common Principal Components model (Flury 1984, 1988) plays a key role in this hierarchy, which in any case is based on simple geometric interpretations.
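
As an illustration of the decomposition into size, shape and orientation, the following sketch extracts \((\gamma ,\Lambda ,\beta )\) from a positive definite matrix with NumPy. The function name `size_shape_orientation` and the choice of sorting eigenvalues in decreasing order are assumptions made for the example; the ordering is only a convention.

```python
import numpy as np

def size_shape_orientation(sigma):
    """Split a positive definite matrix into gamma * beta @ Lambda @ beta.T.

    gamma = det(sigma)^(1/d) (size), Lambda diagonal with determinant 1
    (shape), beta orthogonal (orientation). Illustrative helper only.
    """
    eigval, beta = np.linalg.eigh(sigma)       # symmetric eigendecomposition
    order = np.argsort(eigval)[::-1]           # decreasing eigenvalues (a convention)
    eigval, beta = eigval[order], beta[:, order]
    gamma = np.exp(np.mean(np.log(eigval)))    # geometric mean = det^(1/d)
    lam = np.diag(eigval / gamma)              # normalised so that det(lam) = 1
    return gamma, lam, beta
```

Two covariance matrices then share their orientation when they admit the same `beta` (up to column signs and ordering), and are proportional when they additionally share `lam`.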

Restrictions are also often used to solve a well-known problem that appears in model-based clustering, the unboundedness of the log-likelihood function (2). With no additional constraints, the problem of maximizing (2) is not even well defined, a fact that could lead to uninteresting spurious solutions, where some groups would be associated with a few, almost collinear, observations. Although we will also use these restrictions, we will not pursue this line of discussion in this work. A review of approaches for dealing with this problem can be found in García-Escudero et al. (2017).

The aim of this paper is to introduce a generalization of equation (5), that allows us to give a likelihood-based classification associated to intermediate parsimonious levels. Let \(G \in \{1,\ldots ,K\}\) and \(\pmb u=(u_1,\ldots ,u_K)\) be any vector in \(\lbrace 1,\ldots , G \rbrace ^{K}\). Given a parsimonious level \({\mathscr {M}}\), we can formulate a model in which we assume that the theoretical covariance matrices \(\Sigma _1,\ldots ,\Sigma _K\) verify a parsimonious level \({\mathscr {M}}\) within each of the G classes defined by \(\pmb u\). For instance, let \(K=7\), \(G=3\), \({\mathscr {M}}\) = VVE and take \(\pmb u=(1,1,2,3,1,2,1)\). This implies

$$\begin{aligned} \Sigma _k&= \gamma _k \beta _1 \Lambda _k \beta _1^T, \quad k=1,2,5,7, \\ \Sigma _k&= \gamma _k \beta _2 \Lambda _k \beta _2^T, \quad k=3,6, \\ \Sigma _4&= \gamma _4 \beta _3 \Lambda _4 \beta _3^T . \end{aligned}$$

Following (5), the estimation of the original covariance matrices involves maximizing (4) within \({\mathscr {M}}_{\pmb u}\), the set of covariance matrices satisfying \(\{\Sigma _k: u_k=g\} \in {\mathscr {M}}\) for all \(g=1,\ldots ,G\). Using the maximized log-likelihood as a measure for the appropriateness of \(\pmb u\), the optimal \(\hat{\pmb u}\) would provide a classification for \(S_1,\ldots ,S_K\) according to the level \({\mathscr {M}}\). Precise definitions will be provided in Sect. 2. We will present an iterative procedure to simultaneously compute the optimal classification and covariance matrix estimators through the modification of equation (5) given by

$$\begin{aligned} \bigl (\hat{\pmb u},\hat{\pmb \Sigma }\bigr ) = \underset{\pmb u,\ \pmb \Sigma \in {\mathscr {M}}_{\pmb u}}{{\text {argmax }}} \sum _{k=1}^K \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma _k\bigr )\Bigr ) . \end{aligned}$$(6)

Solving this equation will allow us to fit Gaussian Mixture Models with intermediate parsimonious levels, in which the common parameters of a parsimonious level will be shared within each of the G classes given by the vector of indexes \( {\pmb {\hat{u}}}\), but varying between the different classes. In the previous example, we obtain three classes of covariance matrices that share their principal directions within each class, resulting in a better interpretation of the final classification and allowing a considerable reduction of the number of parameters to be estimated. We will use these ideas for fitting Gaussian Mixture Models in discriminant analysis and cluster analysis. To avoid unboundedness of the objective function in the clustering framework, we will impose the determinant and shape constraints of García-Escudero et al. (2020), which are fully implemented in the MATLAB toolbox FSDA (Riani et al. 2012). We will analyze some examples where the proposed models fit the data with fewer parameters and greater interpretability, and are better suited than the 14 parsimonious models. As is becoming usual in the literature, we will use the Bayesian Information Criterion (BIC) to carry out the comparisons between different models. This applies to all examples considered in the text. It has been noticed by many authors that BIC selection works properly in model-based clustering, as well as in discriminant analysis. Fraley and Raftery (2002) includes a detailed justification for the use of BIC, based on previous references. A summary of the comparison of BIC with other techniques for model selection can also be found in Biernacki and Govaert (1999).

The paper is organized as follows. Section 2 approaches the problem of the parsimonious classification of covariance matrices given by equation (6), focusing on its computation for the most interesting restrictions in terms of dimensionality reduction and interpretability. Throughout, we will only work with models based on the parsimonious levels of proportionality (VEE) and common principal components (VVE), although the extension to other levels is straightforward. Section 3 applies the previous theory for the estimation of Gaussian Mixture Models in cluster analysis and discriminant analysis, including some simulation examples for their illustration. Section 4 includes real data examples, where we will see the gain in interpretability that can arise from these solutions. Some conclusions are outlined in Sect. 5. Finally, Appendix A includes theoretical results, Appendix B provides some additional simulation examples and Appendix C explains technical details about the algorithms. Additional graphical material is provided in the Online Supplementary Figures document.

2 Parsimonious classification of covariance matrices

Given \(n_1,\ldots ,n_{ {K}}\) independent observations from K groups with different distributions, and \(S_1,\ldots ,S_{ {K}}\) the sample covariance matrices, a group classification may be provided according to different similarity criteria. In the general case, given a similarity criterion f depending on the sample covariance matrices and the sample lengths, the problem of classifying K covariance matrices in G classes, \(1\le G \le { {K}}\), typically would consist in solving the equation

$$\begin{aligned} \hat{\pmb u} = \underset{\pmb u \in {\mathscr {H}}}{{\text {argmax }}} \sum _{g=1}^G f\bigl (\lbrace S_k, n_k \rbrace _{k: u_k=g}\bigr ) , \end{aligned}$$

where \({\mathscr {H}} = \bigl \lbrace {\pmb u}=(u_1,\ldots ,u_K) \in \lbrace 1,\ldots , G\rbrace ^{ {K}}: \forall \ g = 1,\ldots ,G \quad \exists \ {k} \text { verifying } {u}_{ {k}}=g \bigr \rbrace \). In this work, we focus on the Gaussian case, proposing different similarity criteria based on the parsimonious levels that arise from the spectral decomposition of a covariance matrix.

Multivariate procedures based on parsimonious decompositions assume that the theoretical covariance matrices \(\Sigma _1,\ldots ,\Sigma _{ {K}}\) jointly verify one level \({\mathscr {M}}\) out of the fourteen in Table 1. To elaborate on this idea, we include now some useful notation. In a parsimonious model \({\mathscr {M}}\), we write \((\Sigma _1,\ldots ,\Sigma _{ {K}}) \in {\mathscr {M}}\) if these matrices share some common parameters C, and they have variable parameters \(\pmb V = (V_1,\ldots ,V_{ {K}})\) (specified in the model \({\mathscr {M}}\)). We will denote by \(\Sigma (V_{ {k}},C)\) the covariance matrix with the size, shape and orientation parameters associated to \((V_{ {k}},C)\). Therefore, under the parsimonious level \({\mathscr {M}}\), we are assuming that

$$\begin{aligned} \Sigma _k = \Sigma (V_k,C), \quad k=1,\ldots ,K. \end{aligned}$$

If the \(n_{ {k}}\) observations of group k are independent and arise from a distribution \(N(\mu _{ {k}},\Sigma _{ {k}})\), according to the arguments in the introduction, it is natural to consider the maximized log-likelihood (5) under the parsimonious level \({\mathscr {M}}\) as a similarity criterion for the covariance matrices. This allows us to measure their resemblance in the features associated to the common part of the decomposition in the theoretical model. Thus, the similarity criterion for the parsimonious level \({\mathscr {M}}\) is

$$\begin{aligned} f_{{\mathscr {M}}}\bigl (S_1,\ldots ,S_K; n_1,\ldots ,n_K\bigr ) = \max _{\pmb V, C} \sum _{k=1}^K \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma (V_k,C)\bigr )\Bigr ) . \end{aligned}$$

Consequently, given a level of parsimony \({\mathscr {M}}\), the covariance matrix classification problem in G classes consists in solving the equation

$$\begin{aligned} \hat{\pmb u} = \underset{\pmb u \in {\mathscr {H}}}{{\text {argmax }}} \sum _{g=1}^G \ \max _{\lbrace V_k \rbrace _{k: u_k=g},\, C_g} \ \sum _{k: u_k=g} \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma (V_k,C_g)\bigr )\Bigr ) . \end{aligned}$$(7)

In order to avoid the combinatorial problem of maximizing within \({\mathscr {H}}\), denoting the variable parameters by \(\pmb V=(V_1,\ldots ,V_{ {K}})\) and the common parameters by \(\pmb C=(C_1,\ldots ,C_G)\), we focus on the problem of maximizing

$$\begin{aligned} W(\pmb u,\pmb V,\pmb C) = \sum _{g=1}^G \sum _{k: u_k=g} \log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma (V_k,C_g)\bigr )\Bigr ) , \end{aligned}$$

since the value \( {{ {\pmb u}}}\) maximizing this function agrees with the optimal \( {\hat{ {\pmb u}}}\) in (7). This problem will be referred to as Classification \(\pmb G\)-\(\pmb {{\mathscr {M}}}\). From the expression of the d-dimensional Wishart density, we can see that maximizing W is equivalent to minimizing with respect to the same parameters the function

$$\begin{aligned} (\pmb u,\pmb V,\pmb C) \longmapsto \sum _{g=1}^G \sum _{k: u_k=g} n_k \Bigl (\log \bigl \vert \Sigma (V_k,C_g)\bigr \vert + {\text {tr}}\bigl (\Sigma (V_k,C_g)^{-1}S_k\bigr )\Bigr ) . \end{aligned}$$

Maximization can be achieved through a simple modification of the CEM algorithm (Classification Expectation Maximization, introduced in Celeux and Govaert (1992)), for any of the fourteen parsimonious levels. A sketch of the algorithm is presented here:

Classification \(\pmb G\)-\(\pmb {{\mathscr {M}}:}\) Starting from an initial estimation \(\pmb {C^0}=(C_1^0,\ldots ,C_G^0)\) of the common parameters, which may be taken as the parameters of G different matrices \(S_{ {k}}\) randomly chosen between \(S_1,\ldots ,S_{ {K}}\), the \(m^{th}\) iteration consists of the following steps:

-

u-V step: Given the common parameters \(\pmb {C^m}=(C_1^m,\ldots , C_G^m)\), we maximize with respect to the partition \( {\pmb u}\) and the variable parameters \(\pmb {V}\). For each \( {k} = 1,\ldots , {K}\), we compute

$$\begin{aligned} {\tilde{V}} _{ {k},g} = \quad \underset{V}{{\text {argmax }}}\ W_d\Bigl (n_{ {k}} S_{ {k}} \big \vert n_{ {k}},\Sigma (V,C_g)\Bigr ) \quad \nonumber \end{aligned}$$for \(1\le g\le G\), and we define:

$$\begin{aligned} {u}_{ {k}}^{m+1} = \underset{g\in \lbrace 1,\ldots ,G\rbrace }{{\text {argmax }}} \ W_d\Bigl (n_{ {k}} S_{ {k}} \big \vert n_{ {k}}, \Sigma ({\tilde{V}}_{ {k},g},C_g)\Bigr ) . \end{aligned}$$

-

V-C step: Given the partition \(\pmb { {u}^{m+1}}\), we compute the values \((\pmb {V^{m+1}},\pmb {C^{m+1}})\) maximizing \(W(\pmb { {u}^{m+1}},\pmb { V}, \pmb {C})\). The maximization can be done individually for each of the groups created, by maximizing for each \(g=1,\ldots ,G\) the function

$$\begin{aligned} (\lbrace&V_{{k}} \rbrace _{k: {u_k}=g}, C_g) \\&\longmapsto \sum _{k: {u_k}=g}\hspace{0.1cm}\log \Bigl (W_d\bigl (n_k S_k\vert n_k,\Sigma (V_k,C_g)\bigr )\Bigr ) \ . \end{aligned}$$The maximization for each of the 14 parsimonious levels can be done, for instance, with the techniques in Celeux and Govaert (1995). The methodology proposed therein for common orientation models uses modifications of the Flury algorithm (Flury and Gautschi 1986). However, for these models we will use the algorithms subsequently developed by Browne and McNicholas (2014a, 2014b), often implemented in the software available for parsimonious model fitting, which allow more efficient estimation of the common orientation parameters.

For each of the fourteen parsimonious models, the variable parameters in the solution \(\pmb {{\hat{V}}}\) may be computed as a function of the parameters \(( {\pmb {\hat{u}}},\pmb {{\hat{C}}})\), the sample covariance matrices \(S_1,\ldots ,S_{ {K}}\) and the sample lengths \(n_1,\ldots ,n_{ {K}}\). Therefore, the function W could be written as \(W(\pmb { {u}},\pmb {C})\), and the maximization could be seen as a particular case of the coordinate descent algorithm explained in Bezdek et al. (1987).

As it was already noted, we focus on the development of the algorithm only for two particular (the most interesting) parsimonious levels. First of all, we are going to keep models flexible enough to enable the solution of (6), when taking \(G= {K}\) (no grouping is assumed), to coincide with the unrestricted solution, \({{\hat{\Sigma }}}_{ {k}}=S_{ {k}}\). The first six models do not verify this condition. For the last eight models, the numbers of parameters are

$$\begin{aligned} \delta _{\text {VOL}} + \delta _{\text {SHAPE}}\,(d-1) + \delta _{\text {ORIENT}}\,\frac{d(d-1)}{2} , \end{aligned}$$

where \(\delta _{\text {VOL}}, \delta _{\text {SHAPE}}\) and \(\delta _{\text {ORIENT}}\) take the value 1 if the given parameter is assumed to be common, and K if it is assumed to be variable between groups. When d and K are large, the main source of variation in the number of parameters is related to considering common or variable orientation, followed by considering common or variable shape. For example, if \(d=9, K=6\), the numbers of parameters related to each constraint are detailed in Table 2.

Our primary motivation is exemplified through Table 2: to raise alternatives for the models with variable orientation. For that, we look for models with orientation varying in G classes, with \(1\le G \le { {K}}\). We consider the case where size and shape are variable across all groups (G different Common Principal Components, G-CPC) and also the case where shape parameters are additionally common within each of the G classes (proportionality to G different matrices, G-PROP). Apart from the parameter reduction, these models can provide an easier interpretation of the variables involved in the problem, which is often a hard task in multidimensional problems with several groups. We keep the size variable, since it does not cause a major increase in the number of parameters, and it is easy to interpret. Therefore, the models we are considering are:

-

\({\textbf {Classification G-CPC}}\): We are looking for G orthogonal matrices \(\pmb {\beta }=(\beta _1,\ldots ,\beta _G)\) and a vector of indexes \( {\pmb {u}}=( {u}_1,\ldots , {u}_{ {K}}) \in {\mathscr {H}}\) such that

$$\begin{aligned} \Sigma _{ {k}} = \gamma _{ {k}} \beta _{ {u}_{ {k}}} \Lambda _{ {k}} \beta _{ {u}_{ {k}}}^T \quad {k}=1,\ldots , {K}\, \end{aligned}$$where \(\pmb {\gamma }=(\gamma _1,\ldots ,\gamma _{ {K}})\) and \(\pmb {\Lambda }=(\Lambda _1,\ldots ,\Lambda _{ {K}})\) are the variable size and shape parameters. The number of parameters is \( { {K}}+ { {K}}(d-1) + G d(d-1)/2\). In the situation of Table 2, taking \(G=2\) the number of parameters is 126, while allowing for variable orientation it is 270. To solve (7), we have to find a vector of indexes \( {\pmb {\hat{u}}}\), G orthogonal matrices \(\pmb {{{\hat{\beta }}}}\) and variable parameters \(\pmb {{{\hat{\gamma }}}}\) and \(\pmb {{{\hat{\Lambda }}}}\) minimizing

$$\begin{aligned}&( {\pmb {u}},\pmb {\Lambda },\pmb {\gamma }, \pmb {\beta }) \nonumber \\&\longmapsto \sum _{g=1}^G \sum _{ {k}: {u}_{ {k}}=g} n_{ {k}} \left( d\log \bigl (\gamma _{ {k}}\bigr )+ \frac{1}{\gamma _{ {k}}}{\text {tr}}\left( \Lambda _{ {k}}^{-1}\beta _g^TS_{ {k}}\beta _g\right) \right) . \end{aligned}$$(8)

-

\({\textbf {Classification G-PROP}}\): We are looking for G orthogonal matrices \(\pmb {\beta }=(\beta _1,\ldots ,\beta _G)\), G shape matrices \(\pmb {\Lambda }=(\Lambda _1,\ldots ,\Lambda _G)\) and \( {\pmb u}=( {u}_1,\ldots , {u}_{ {K}})\in {\mathscr {H}}\) such that

$$\begin{aligned}\Sigma _{ {k}} = \gamma _{ {k}} \beta _{ {u}_{ {k}}} \Lambda _{ {u}_{ {k}}} \beta _{ {u}_{ {k}}}^T \quad {k}=1,\ldots , { {K}}\,\end{aligned}$$where \(\pmb {\gamma }=(\gamma _1,\ldots ,\gamma _{ {K}})\) are the variable size parameters. The number of parameters is \( { {K}}+ G (d-1) + G d(d-1)/2\). In the situation of Table 2, the number of parameters if we take \(G=2\) is 94. To solve (7), we have to find a vector of indexes \( {\pmb {\hat{u}}}\), G orthogonal matrices \(\pmb {{{\hat{\beta }}}}\), G shape matrices \(\pmb {{{\hat{\Lambda }}}}\) and the variable size parameters \(\pmb {{{\hat{\gamma }}}}\) minimizing

$$\begin{aligned}&( {\pmb {u}},\pmb {\Lambda },\pmb {\gamma }, \pmb {\beta }) \nonumber \\&\longmapsto \sum _{g=1}^G \sum _{ {k}: {u}_{ {k}}=g} n_{ {k}} \left( d\log \bigl (\gamma _{ {k}}\bigr )+ \frac{1}{\gamma _{ {k}}}{\text {tr}}\left( \Lambda _g^{-1}\beta _g^TS_{ {k}}\beta _g\right) \right) . \end{aligned}$$(9)
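
The parameter counts quoted for the two models can be checked with a short sketch. The function names `n_params_gcpc` and `n_params_gprop` are hypothetical helpers introduced for this illustration; they count covariance parameters only (size, shape, orientation), as in the formulas above.

```python
def n_params_gcpc(K, d, G):
    """Covariance parameters of G-CPC: K sizes, K shapes, G orientations."""
    return K + K * (d - 1) + G * d * (d - 1) // 2

def n_params_gprop(K, d, G):
    """Covariance parameters of G-PROP: K sizes, G shapes, G orientations."""
    return K + G * (d - 1) + G * d * (d - 1) // 2

# Setting of Table 2: d = 9 variables, K = 6 groups.
print(n_params_gcpc(6, 9, 2))   # 2-CPC
print(n_params_gcpc(6, 9, 6))   # G = K, i.e. fully variable orientation
print(n_params_gprop(6, 9, 2))  # 2-PROP
```

With \(d=9\) and \(K=6\) these calls give 126, 270 and 94, the values stated in the text, which makes explicit that the savings come almost entirely from the \(d(d-1)/2\) orientation term.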

Explicit algorithms for finding the minimum of (8) and (9) are given in Section C.2 in the Appendix. The results given by both algorithms are illustrated in the following example, where we have randomly created 100 covariance matrices \(\Sigma _1,\ldots ,\Sigma _{100}\) according to:

where \({\text {U}}(\alpha )\) represents the rotation of angle \(\alpha \), \({\text {Diag}}(1,Y)\) is the diagonal matrix with entries 1, Y, and \(X,Y,\alpha \) are uniformly distributed random variables with distributions:

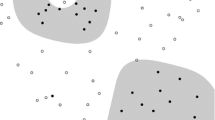

For each \( {k}=1,\ldots ,100\), we have taken \(S_{ {k}}\) as the sample covariance matrix computed from 200 independent observations from a distribution \(N(0,\Sigma _{ {k}})\), and we have applied 4-CPC and 4-PROP to obtain different classifications of \(S_1,\ldots ,S_{100}\). The partitions obtained by both methods allow us to classify the covariance matrices according to both criteria. Figure 1 shows the 95% confidence ellipses representing the sample covariance matrices associated with each class (coloured lines) together with the estimates of the common axes or the common proportional matrix within each class (black lines).
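
To make the alternating scheme concrete, the following is a minimal sketch of a G-PROP classification for the objective (9), written under stated assumptions and not taken from the algorithms of Appendix C.2. Each class is represented by a matrix \(A_g=\beta _g\Lambda _g\beta _g^T\) with determinant 1; for fixed \(A_g\) the optimal size is \(\gamma _k={\text {tr}}(A_g^{-1}S_k)/d\), and for fixed sizes the optimal \(A_g\) is the determinant-one rescaling of \(\sum _k (n_k/\gamma _k) S_k\). The names `unit_det` and `classify_gprop`, the explicit `init` argument, the fixed number of inner iterations and the handling of empty classes are all illustrative choices.

```python
import numpy as np

def unit_det(m):
    """Rescale a positive definite matrix so that its determinant is 1."""
    _, logdet = np.linalg.slogdet(m)
    return m / np.exp(logdet / m.shape[0])

def classify_gprop(S, n, init, n_iter=50, n_inner=20):
    """Sketch of a G-PROP classification of covariance matrices.

    S: (K, d, d) sample covariances; n: (K,) sample sizes;
    init: indices of the G matrices used as initial class representatives
    (a hypothetical argument; random choices are equally possible).
    """
    S, n = np.asarray(S, float), np.asarray(n, float)
    K, d, _ = S.shape
    A = np.array([unit_det(S[k]) for k in init])   # A_g = beta_g Lambda_g beta_g^T
    u = np.full(K, -1)
    for _ in range(n_iter):
        # u-V step: with gamma = tr(A_g^{-1} S_k) / d profiled out, the term of
        # (9) for matrix k is increasing in the trace, so pick the smallest trace.
        t = np.array([[np.trace(np.linalg.solve(Ag, Sk)) for Ag in A] for Sk in S])
        u_new = t.argmin(axis=1)
        if np.array_equal(u_new, u):
            break
        u = u_new
        # V-C step: alternate sizes and common matrix within each class.
        for g in range(len(A)):
            idx = np.flatnonzero(u == g)
            if idx.size == 0:      # empty class: keep previous A_g (simplification)
                continue
            for _ in range(n_inner):
                gam = np.array([np.trace(np.linalg.solve(A[g], S[k])) / d for k in idx])
                A[g] = unit_det(np.einsum("k,kij->ij", n[idx] / gam, S[idx]))
    gamma = np.array([np.trace(np.linalg.solve(A[u[k]], S[k])) / d for k in range(K)])
    return u, gamma, A
```

Like any alternating scheme of this kind, the sketch only reaches a local optimum, so in practice several initializations would be compared through the value of (9).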

3 Gaussian mixture models

In a Gaussian Mixture Model (GMM), data are assumed to be generated by a random vector with probability density function:

$$\begin{aligned} f(y) = \sum _{k=1}^K \pi _k \phi \bigl (y\vert \mu _k,\Sigma _k\bigr ) , \end{aligned}$$

where \(0\le \pi _{ {k}} \le 1, \ \sum _{ {k}=1}^{ {K}} \pi _{ {k}}=1\). Introducing covariance matrix restrictions given by the parsimonious decomposition in the estimation of GMMs has become common practice among statisticians, and the corresponding methods are implemented in many R packages. In this paper we use for the comparison the results given by the package mclust (Fraley and Raftery 2002; Scrucca et al. 2016), although there exist many other well-known packages (Rmixmod: Lebret et al. (2015); mixtools: Benaglia et al. (2009)). The aim of this section is to explore how we can fit GMMs in different contexts with the intermediate parsimonious models explained in Sect. 2, allowing the common part of the covariance matrices in the decomposition to vary between G classes. That is, with the same notation as in Sect. 2, we want to study GMMs with density function

$$\begin{aligned} f(y) = \sum _{g=1}^G \sum _{k: u_k=g} \pi _k \phi \Bigl (y\big \vert \mu _k,\Sigma (V_k,C_g)\Bigr ) , \end{aligned}$$(10)

where \( {\pmb u}=( {u}_1,\ldots , {u}_{ {K}})\in {\mathscr {H}}\) is a fixed vector of indexes, \(\pmb V = (V_1,\ldots ,V_{ {K}})\) are the variable parameters, \(\pmb {C}=( C_1,\ldots , C_G)\) are the common parameters among classes and \(\Sigma (V_{ {k}},C_g)\) is the covariance matrix with the parameters given by \((V_{ {k}},C_g)\). The following subsections exploit the potential of these particular GMMs for cluster analysis and discriminant analysis. A more general situation where only part of the labels are known could also be considered, following the same line as in Dean et al. (2006), but it will not be discussed in this work.

As already noted in the Introduction, the criterion we are going to use for model selection between all the estimated models is BIC (Bayesian Information Criterion), choosing the model with a higher value of the BIC approximation given by

$$\begin{aligned} {\text {BIC}} = 2\,(\text {maximized log-likelihood}) - p\log (N) , \end{aligned}$$

where N is the number of observations and p is the number of independent parameters to be estimated in the model. This criterion is used for the comparison of the intermediate models G-CPC and G-PROP with the fourteen parsimonious models estimated in the software R with the functions in the mclust package. In addition, within the framework of discriminant analysis, the quality of the classification given by the best models, in terms of BIC, is also compared using cross validation techniques.
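
The selection rule can be written as a one-line function. The sketch below assumes the sign convention used by mclust, in which the BIC approximation is twice the maximized log-likelihood minus \(p\log (N)\), so that larger values are preferred; the function name `bic` and the numbers in the usage lines are purely illustrative.

```python
import math

def bic(loglik, n_params, n_obs):
    """BIC approximation in the 'larger is better' convention:
    2 * maximized log-likelihood - p * log(N)."""
    return 2.0 * loglik - n_params * math.log(n_obs)

# Illustrative values only: a model with a slightly lower log-likelihood can
# still be preferred if it uses enough fewer parameters.
richer = bic(loglik=-1000.0, n_params=35, n_obs=600)
leaner = bic(loglik=-1005.0, n_params=27, n_obs=600)
print(leaner > richer)
```

This is the trade-off exploited by the intermediate models: they give up a little likelihood with respect to the unrestricted fit in exchange for a smaller penalty term.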

3.1 Model-based clustering

Given \(y_1,\ldots ,y_N\) independent observations of a d-dimensional random vector, clustering methods based on fitting a GMM with K groups seek to maximize the log-likelihood function (2). From the fourteen possible restrictions considered in Celeux and Govaert (1995), we can compute fourteen different maximum likelihood solutions in which size, shape and orientation are common or not between the K covariance matrices. For a particular level \({\mathscr {M}}\) in Table 1, the fitting requires the maximization of the log-likelihood

$$\begin{aligned} L\bigl (\pmb {\pi },\pmb {\mu },\pmb {V},C\big \vert y_1,\ldots ,y_N\bigr ) = \sum _{i=1}^N \log \Bigl (\sum _{k=1}^K \pi _k \phi \bigl (y_i\big \vert \mu _k,\Sigma (V_k,C)\bigr )\Bigr ) , \end{aligned}$$

where \(\pmb {\pi }=(\pi _1,\ldots ,\pi _{ {K}})\) are the weights, with \(0\le \pi _{ {k}}\le 1,\) \( \sum _{ {k}=1}^{ {K}} \pi _{ {k}}=1\), \(\pmb {\mu }=(\mu _1,\ldots ,\mu _{ {K}})\) the means, \(\pmb {V}=(V_1,\ldots ,V_{ {K}})\) the variable parameters and C the common parameters. Estimation under the parsimonious restriction is performed via the EM algorithm. In the GMM context, we can see the complete data as pairs \((y_{ {i}},z_{ {i}})\), where \(z_{ {i}}\) is an unobserved random vector such that \(z_{ {i},{ {k}}}=1\) if the observation \(y_{ {i}}\) comes from distribution k, and \(z_{ {i},{ {k}}}=0\) otherwise.

With the ideas of Sect. 2, we are going to fit Gaussian Mixture Models with parsimonious restrictions, but allowing the common parameters to vary between different classes. Assuming a parsimonious level of decomposition \({\mathscr {M}}\) and a number \(G\in \lbrace 1,\ldots , { {K}}\rbrace \) of classes, we are supposing that our data are independent observations from a distribution with density function (10). The log-likelihood function given a fixed vector of indexes \( {\pmb u}\) is

$$\begin{aligned} L_{\pmb u}\bigl (\pmb {\pi },\pmb {\mu },\pmb {V},\pmb {C}\big \vert y_1,\ldots ,y_N\bigr ) = \sum _{i=1}^N \log \Bigl (\sum _{g=1}^G \sum _{k: u_k=g} \pi _k \phi \bigl (y_i\big \vert \mu _k,\Sigma (V_k,C_g)\bigr )\Bigr ) . \end{aligned}$$

For each \( {\pmb u}\in {\mathscr {H}}\), we can fit a model. In order to choose the best value for the vector of indexes \( {\pmb u}\), we should compare the BIC values given by the different models estimated. As the number of parameters is the same, the best value for \( {\pmb u}\) can be obtained by taking

$$\begin{aligned} \hat{\pmb u} = \underset{\pmb u \in {\mathscr {H}}}{{\text {argmax }}} \ \max _{\pmb {\pi },\pmb {\mu },\pmb {V},\pmb {C}} \ \sum _{i=1}^N \log \Bigl (\sum _{g=1}^G \sum _{k: u_k=g} \pi _k \phi \bigl (y_i\big \vert \mu _k,\Sigma (V_k,C_g)\bigr )\Bigr ) . \end{aligned}$$

In order to avoid the combinatorial problem of maximizing within \({\mathscr {H}}\), we can take \( {\pmb u}\) as if it were a parameter, and we are going to focus on the problem of maximizing

$$\begin{aligned} L\bigl (\pmb {\pi },\pmb {\mu },\pmb u,\pmb {V},\pmb {C}\big \vert y_1,\ldots ,y_N\bigr ) = \sum _{i=1}^N \log \Bigl (\sum _{g=1}^G \sum _{k: u_k=g} \pi _k \phi \bigl (y_i\big \vert \mu _k,\Sigma (V_k,C_g)\bigr )\Bigr ) , \end{aligned}$$(11)

that will be referred to as Clustering G-\(\pmb {{\mathscr {M}}}\). Therefore, given the unobserved variables \(z_{ {i},{ {k}}}\), for \( {k}=1,\ldots , {K}\) and \({ {i}}=1,\ldots ,N\), the complete log-likelihood is

$$\begin{aligned} CL\bigl (\pmb {\pi },\pmb {\mu },\pmb u,\pmb {V},\pmb {C}\big \vert y_1,\ldots ,y_N,z_{1,1},\ldots ,z_{N,K}\bigr ) = \sum _{i=1}^N \sum _{g=1}^G \sum _{k: u_k=g} z_{i,k} \log \Bigl (\pi _k \phi \bigl (y_i\big \vert \mu _k,\Sigma (V_k,C_g)\bigr )\Bigr ) . \end{aligned}$$(12)

The proposal of this section is to fit this model given a parsimonious level \({\mathscr {M}}\) and fixed values of K and \(G \in \lbrace 1,\ldots , { {K}}\rbrace \), introducing also constraints to avoid the unboundedness of the log-likelihood function (11). For this purpose, we introduce the determinant and shape constraints studied in García-Escudero et al. (2020). For \( {k}=1,\ldots , {K}\), denote by \((\lambda _{ {k},1},\ldots ,\lambda _{ {k},d})\) the diagonal elements of the shape matrix \(\Lambda _{ {k}}\) (which may be the same within classes). We impose K constraints controlling the shape of each group, in order to avoid solutions that are almost contained in a subspace of lower dimension, and a size constraint in order to avoid the presence of very small clusters. Given \(c_{sh},c_{vol}\ge 1\), we impose:

$$\begin{aligned} \frac{\max _{l=1,\ldots ,d} \lambda _{k,l}}{\min _{l=1,\ldots ,d} \lambda _{k,l}} \le c_{sh}, \quad k=1,\ldots ,K, \qquad \frac{\max _{k=1,\ldots ,K} \gamma _k}{\min _{k=1,\ldots ,K} \gamma _k} \le c_{vol} . \end{aligned}$$(13)

Remark 1

With these restrictions, the theoretical problem of maximizing (11) is well defined. If Y is a random vector following a distribution \({\mathbb {P}}\), the problem consists in maximizing

$$\begin{aligned} {\mathbb {E}}_{{\mathbb {P}}} \log \Bigl (\sum _{g=1}^G \sum _{k: u_k=g} \pi _k \phi \bigl (Y\big \vert \mu _k,\Sigma (V_k,C_g)\bigr )\Bigr ) \end{aligned}$$(14)

with respect to \(\pmb {\pi },\pmb {\mu }, {\pmb {u}},\pmb {V},\pmb {C}\), defined as above, and verifying (13). If \({{\mathbb {P}}} _N\) stands for the empirical measure \({{\mathbb {P}}} _N=(1/ N) \sum _{ {i}=1}^N \delta _{\left\{ y_{ {i}}\right\} }\), by replacing \({{\mathbb {P}}} \) by \({{\mathbb {P}}} _N\), we recover the original sample problem of maximizing (11) under the determinant and shape constraints (13). This approach guarantees that the objective function is bounded, allowing results to be stated in terms of existence and consistency of the solutions (see Section A in the Appendix).

Now, we are going to give a sketch of the EM algorithm used for the estimation of these intermediate parsimonious clustering models, for each of the fourteen levels.

Clustering G-\(\pmb {{\mathscr {M}}: }\) Starting from an initial solution of the parameters \(\pmb {\pi ^{0}},\pmb {\mu ^{0}},\pmb { {u}^{0}}\), \(\pmb {V^{0}},\pmb {C^{0}}\), we have to repeat the following steps until convergence:

-

E step: Given the current values of the parameters \(\pmb {\pi ^{m}},\pmb {\mu ^{m}},\pmb { {u}^{m}}\), \(\pmb {V^{m}},\pmb {C^{m}}\), we compute the posterior probabilities

$$\begin{aligned} z_{ {i},{ {k}}} = \frac{\pi _{ {k}}^m \phi \Bigl (y_{ {i}}\vert \mu _{ {k}}^m,\Sigma \bigl (V_{ {k}}^m,C_{ {u}_{ {k}}}^m\bigr )\Bigr )}{\sum _{l=1}^{ {K}} \pi _l^m \phi \Bigl (y_{ {i}}\vert \mu _l^m,\Sigma \bigl (V_l^m,C_{ {u}_l}^m\bigr )\Bigr )} \end{aligned}$$(15) for \( {k}=1,\ldots , {K}, \ { {i}}=1,\ldots ,N\).

-

M step: In this step, we have to maximize (12) given the expected values \(\lbrace z_{ {i},{ {k}}} \rbrace _{ {i},{ {k}}}\). The optimal values for \(\pmb {\pi ^{m+1}},\pmb {\mu ^{m+1}}\) are given by (3). With these optimal values, if we denote \(S_{ {k}}= (1/n_{ {k}})\sum _{{ {i}}=1}^N z_{ {i},{ {k}}} (y_{ {i}}-\mu _{ {k}}^{m+1})(y_{ {i}}-\mu _{ {k}}^{m+1})^T\), then we have to find the values \(\pmb { {u}^{m+1}}\), \(\pmb {V^{m+1}},\pmb {C^{m+1}}\) verifying the determinant and shape constraints (13) maximizing

$$\begin{aligned} (&{\pmb u}, \pmb {V},\pmb C) \longmapsto CL\Bigl (\pmb {\pi ^{ {m+1}}},\\&\quad \pmb {\mu ^{ {m+1}}} , {\pmb u},\pmb {V},\pmb C\Big \vert y_1,\ldots ,y_N,z_{1,1},\ldots ,z_{ {N,K}}\Bigr ) \ . \end{aligned}$$If we remove the determinant and shape constraints, the solution of this maximization coincides with the classification problem presented in Sect. 2 for the computed values of \(n_1,\ldots ,n_{ {K}}\) and \(S_1,\ldots ,S_{ {K}}\). A simple modification of that algorithm, computing on each step the optimal size and shape constrained parameters (instead of the unconstrained version) with the optimal truncation algorithm presented in García-Escudero et al. (2020) allows the maximization to be completed. Determinant and shape constraints can be incorporated in the algorithms together with the parsimonious constraints following the lines developed in García-Escudero et al. (2022).
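
The E step (15) can be sketched as follows. This is an illustrative NumPy version, not the authors' implementation: it assumes the covariance matrices \(\Sigma (V_k,C_{u_k})\) have already been assembled into an array `sigma`, and it works on the log scale to avoid numerical underflow. The function name `e_step` and the array layout are assumptions.

```python
import numpy as np

def e_step(y, pi, mu, sigma):
    """Posterior probabilities z[i, k] as in (15).

    y: (N, d) data; pi: (K,) weights; mu: (K, d) means;
    sigma: (K, d, d) with sigma[k] playing the role of Sigma(V_k, C_{u_k}).
    """
    N, d = y.shape
    K = len(pi)
    log_w = np.empty((N, K))
    for k in range(K):
        r = y - mu[k]
        _, logdet = np.linalg.slogdet(sigma[k])
        # Squared Mahalanobis distance of each observation to component k.
        maha = np.einsum("ij,ij->i", r, np.linalg.solve(sigma[k], r.T).T)
        log_w[:, k] = np.log(pi[k]) - 0.5 * (d * np.log(2 * np.pi) + logdet + maha)
    log_w -= log_w.max(axis=1, keepdims=True)   # stabilise before exponentiating
    z = np.exp(log_w)
    return z / z.sum(axis=1, keepdims=True)
```

The resulting matrix `z` supplies the soft labels from which \(n_k\), \(\mu _k\) and \(S_k\) are recomputed in the M step, before the constrained classification of the covariance matrices is carried out.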

As already noted in Sect. 2, we keep only the clustering models G-CPC and G-PROP, the most interesting in terms of parameter reduction and interpretability. For these models, explicit algorithms are explained in Section C.3 in the Appendix. Now, we are going to illustrate the results of the algorithms in two simulation experiments:

- Clustering G-CPC: In this example, we simulate \(n=100\) observations from each of 6 Gaussian distributions, with means \(\mu _1,\ldots ,\mu _6\) and covariance matrices verifying

$$\begin{aligned} \Sigma _{ {k}} =&{\gamma }_{ {k}}\beta _1\Lambda _{ {k}} \beta _1^T, \quad {k}=1,2,3, \\ \Sigma _{ {k}} =&{\gamma }_{ {k}}\beta _2\Lambda _{ {k}} \beta _2^T ,\quad {k}=4,5,6 \ . \end{aligned}$$

In Fig. 2, the first plot shows the 95% confidence ellipses of the six theoretical Gaussian distributions together with the 100 independent observations simulated from each of them. The second plot represents the clusters created by the maximum likelihood solution for the 2-CPC model, taking \(c_{sh}=c_{vol}=100\). The numbers labeling the ellipses represent the class of covariance matrices sharing the orientation. Finally, the third plot represents the best solution estimated by mclust for \( {K}=6\), corresponding to the parsimonious model VEV, with equal shape and variable size and orientation. The BIC value of the 2-CPC model (31 d.f.) is \(-\)3937.08, whereas the best model VEV (30 d.f.) estimated with mclust has BIC value \(-\)3960.07. Therefore, the GMM estimated with the 2-CPC restriction has a higher BIC than all the parsimonious models. Finally, the number of observations assigned to clusters different from their original groups is 82 for the 2-CPC model and 91 for the VEV model.

- Clustering G-PROP: In this example, we simulate \(n=100\) observations from each of 6 Gaussian distributions, with means \(\mu _1,\ldots ,\mu _6\) and covariance matrices verifying

$$\begin{aligned} \Sigma _{ {k}} =&{\gamma }_{ {k}} A_1, \quad {k}=1,2,3, \\ \Sigma _{ {k}} =&{\gamma }_{ {k}} A_2 ,\quad {k}=4,5,6 \ . \end{aligned}$$

Figure 3 is analogous to Fig. 2, but for the proportionality case. The BIC value for the 2-PROP model (27 d.f.) with \(c_{sh}=c_{vol}=100\) is \(-\)3873.127, whereas the BIC value for the best model fitted by mclust is \(-\)3919.796, which corresponds to the unrestricted model VVV (35 d.f.). Here, the number of observations assigned to clusters different from their source groups is 64 for the 2-PROP model, while it is 71 for the VVV model.
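The covariance structures of the two experiments can be generated along the following lines. This is a minimal sketch: the numerical values of the means, sizes \(\gamma _{k}\), orientations \(\beta _1,\beta _2\) and diagonal matrices \(\Lambda _{k}\) are hypothetical (the text does not list them), and all function names are illustrative.

```python
import numpy as np

def rotation(theta):
    """2x2 rotation matrix; its columns play the role of principal directions."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def cpc_covariances(gammas, betas, lambdas, classes):
    """Sigma_k = gamma_k * beta_{u_k} Lambda_k beta_{u_k}^T (orientation shared within a class)."""
    return [g * betas[u] @ np.diag(lam) @ betas[u].T
            for g, lam, u in zip(gammas, lambdas, classes)]

def prop_covariances(gammas, bases, classes):
    """Sigma_k = gamma_k * A_{u_k} (proportional matrices within a class)."""
    return [g * bases[u] for g, u in zip(gammas, classes)]

def simulate(mus, Sigmas, n, rng):
    """Draw n observations from each N(mu_k, Sigma_k); return data and source labels."""
    Y = np.vstack([rng.multivariate_normal(m, S, size=n) for m, S in zip(mus, Sigmas)])
    labels = np.repeat(np.arange(len(mus)), n)
    return Y, labels

# Hypothetical parameter values, chosen only for illustration.
rng = np.random.default_rng(0)
classes = [0, 0, 0, 1, 1, 1]                       # u_k: class of each component
betas = [rotation(np.pi / 6), rotation(2 * np.pi / 3)]
gammas = [1.0, 2.0, 0.5, 1.5, 1.0, 3.0]
lambdas = [(4.0, 0.25), (2.0, 0.5), (5.0, 0.2),
           (3.0, 1 / 3), (2.0, 0.5), (4.0, 0.25)]   # each pair has product 1
mus = [(0, 0), (4, 1), (-3, 3), (2, -4), (-4, -2), (6, 5)]

Sigmas_cpc = cpc_covariances(gammas, betas, lambdas, classes)
Y, labels = simulate(mus, Sigmas_cpc, n=100, rng=rng)
```

Replacing `cpc_covariances` with `prop_covariances` and two base matrices \(A_1, A_2\) gives the proportionality case.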

Remark 2

Note that, by imposing appropriate constraints in the clustering problem, we can significantly decrease the number of parameters while keeping a good fit of the data. Figure 3 shows this effect. However, constraints also have a clear interpretation in cluster analysis problems, since we are looking for groups that are forced to have a particular shape. Therefore, different constraints can lead to clusters with different shapes. This is what happens in Fig. 2, where by introducing the right constraints we have managed to make the clusters created more similar to the original ones. Of course, in the absence of prior information, it is not possible to know the appropriate constraints, and the most reasonable approach is to select a model according to a criterion that penalizes the fit with the number of parameters such as the BIC.
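The trade-off between fit and number of parameters can be made explicit with a small sketch. The counting rules below are an assumption: they are not stated in this section, but they reproduce the degrees of freedom reported in the two examples above (31 for 2-CPC, 27 for 2-PROP, 30 for VEV and 35 for VVV, with \(K=6\) and \(d=2\)). The BIC function follows the mclust convention, in which larger values are better; the function names are illustrative.

```python
import math

def df_clustering(model, K, d, G=None):
    """Free parameters: K*d means, K-1 weights, plus the covariance parameters."""
    orient = d * (d - 1) // 2             # free parameters of one orientation matrix
    base = K * d + (K - 1)
    if model == "G-CPC":                  # G orientations, K diagonal matrices with d entries
        return base + G * orient + K * d
    if model == "G-PROP":                 # K sizes, G normalised matrices A_g
        return base + K + G * (d * (d + 1) // 2 - 1)
    if model == "VEV":                    # K sizes, one shape, K orientations
        return base + K + (d - 1) + K * orient
    if model == "VVV":                    # K unrestricted covariance matrices
        return base + K * d * (d + 1) // 2
    raise ValueError(f"unknown model: {model}")

def bic(loglik, df, n_obs):
    """BIC in the mclust convention: 2 * log-likelihood minus df * log(n_obs)."""
    return 2.0 * loglik - df * math.log(n_obs)
```

With equal log-likelihoods, the model with fewer parameters gets the higher BIC, which is why an intermediate model can beat both a more restrictive and a more flexible parsimonious model.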

To evaluate the sensitivity of BIC for the detection of the true underlying model, we have used the models described in the two previous examples. Once a model and a particular sample size n (=50, 100, 200) have been chosen, the simulation plan produces a sample containing n random elements generated from each \(N(\mu _{ {k}},\Sigma _{ {k}}),\ {k}=1,\ldots ,6\). We repeated every simulation plan 1000 times, comparing for every sample the BIC obtained for the underlying clustering model with that of the best parsimonious model estimated by mclust. Table 3 reports the proportion of times in which the 2-CPC or 2-PROP model improves on the best mclust model in terms of BIC for each value of n. Of course, the accuracy of the approach should depend on the dimension, the number of groups, the overlap between groups, and so on. However, even in the case of a large overlap, as in the present examples, the proportions reported in Table 3 show that moderate values of n suffice to get very high proportions of success. Appendix B contains additional simulations supporting the suitability of BIC in this framework.

3.2 Discriminant analysis

The parsimonious model introduced in Bensmail and Celeux (1996) for discriminant analysis has been developed in conjunction with model-based clustering. The R package mclust (Fraley and Raftery 2002; Scrucca et al. 2016) also includes functions for fitting these models, denoted by EDDA (Eigenvalue Decomposition Discriminant Analysis). In this context, given a parsimonious level \({\mathscr {M}}\) and a number G of classes, we can also consider fitting an intermediate model for each fixed \( {\pmb u}\in {\mathscr {H}}\), by maximizing the complete log-likelihood

Model comparison is done through BIC, and consequently we could try to choose \( {\pmb u}\) maximizing the log-likelihood (11). However, given that in the model fitting we are maximizing the complete log-likelihood (16), it is not unreasonable to look for the value of \( {\pmb u}\) maximizing (16). Proceeding in this manner, we can think of \( {\pmb u}\) as a parameter, and the problem consists in maximizing (12). Model estimation follows easily from the model-based clustering algorithms: since the labels are known, a single iteration of the M step gives the values of the parameters. A new set of observations can be classified by computing the posterior probabilities with formula (15) of the E step and assigning each new observation to the group with the highest posterior probability. Since the groups are known, the complete log-likelihood (12) is bounded under mild conditions, and it is not necessary to impose eigenvalue constraints, although they may be useful in some examples with almost degenerate variables. To summarize the quality of the classification given by the best models (selected through BIC) in the different examples, other indicators based directly on classification errors are provided:

- MM: Model Misclassification, or training error. Proportion of observations misclassified by the model fitted with all observations.

- LOO: Leave One Out error.

- CV(R,p): Cross Validation error. Considering each observation as labeled or unlabeled with probability p and \(1-p\), we compute the proportion of unlabeled observations misclassified by the model fitted with the labeled observations. The indicator CV(R,p) represents the mean of the proportions obtained in R repetitions of the process. When several classification methods are compared, the same R random partitions are used to compute the values of this indicator.
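The MM and CV(R,p) indicators can be sketched as follows. This is an illustration under stated assumptions: `NearestMean` is a hypothetical stand-in classifier used only to keep the example self-contained (it is not one of the models of the paper), and any object with `fit` and `predict` methods could be plugged in instead. The partitions are drawn once and passed explicitly, so that several methods can be compared on the same R random splits.

```python
import numpy as np

class NearestMean:
    """Hypothetical stand-in classifier: assigns each point to the closest group mean."""

    def fit(self, Y, labels):
        self.classes_ = np.unique(labels)
        self.means_ = np.array([Y[labels == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, Y):
        dist = ((Y[:, None, :] - self.means_[None, :, :]) ** 2).sum(axis=2)
        return self.classes_[dist.argmin(axis=1)]

def random_partitions(N, R, p, rng):
    """R boolean masks; True marks an observation treated as labeled (probability p)."""
    return [rng.random(N) < p for _ in range(R)]

def mm_error(make_classifier, Y, labels):
    """MM: proportion misclassified by the model fitted with all observations."""
    clf = make_classifier().fit(Y, labels)
    return float(np.mean(clf.predict(Y) != labels))

def cv_error(make_classifier, Y, labels, partitions):
    """CV(R,p): mean proportion of unlabeled observations misclassified."""
    errors = []
    for labeled in partitions:
        if labeled.all() or not labeled.any():
            continue                      # skip degenerate splits with an empty side
        clf = make_classifier().fit(Y[labeled], labels[labeled])
        pred = clf.predict(Y[~labeled])
        errors.append(np.mean(pred != labels[~labeled]))
    return float(np.mean(errors))
```

Calling `cv_error` with the same `partitions` list for every competing classifier implements the requirement that all methods are evaluated on identical splits.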

In line with the previous section, only the discriminant analysis models G-CPC and G-PROP are considered. Tables 4 and 5 show the results of applying these models to the simulation examples of Figs. 2 and 3. In both situations, the classification obtained with our model slightly improves on that given by mclust.

As we did in the clustering setting, in order to evaluate the sensitivity of BIC for the detection of the true underlying model, simulations have been repeated 1000 times for each sample size n (=30, 50, 100, 200). Table 6 shows the proportion of times in which the 2-CPC or 2-PROP model improves on the best mclust model in terms of BIC for each value of n.

Remark 3

In discriminant analysis, the weights \(\pmb \pi =(\pi _1,\ldots ,\pi _{ {K}})\) might not be considered as parameters. Model-based methods assume that observations from the \( {k}^{th}\) group follow a distribution with density function \(f(\cdot ,\theta _{ {k}})\). If \(\pi _{ {k}}\) is the proportion of observations of group k, the classifier minimizing the expected misclassification rate is known as the Bayes classifier, and it assigns an observation y to the group with the highest posterior probability

$$\begin{aligned} \frac{\pi _{ {k}} f(y,\theta _{ {k}})}{\sum _{l=1}^{ {K}} \pi _l f(y,\theta _l)}, \quad {k}=1,\ldots , {K} \ . \end{aligned}$$

The values of \(\pmb \pi ,\theta _1,\ldots ,\theta _{ {K}}\) are usually unknown, and the classification is performed with estimations \(\pmb {{{\hat{\pi }}}},{\hat{\theta }}_1,\ldots ,{\hat{\theta }}_{ {K}}\). Whereas \({\hat{\theta }}_1,\ldots ,{\hat{\theta }}_{ {K}}\) are always parameters estimated from the sample, the values of \(\pmb {{{\hat{\pi }}}}\) may be seen as part of the classification rule, if we think that they represent a characteristic of a particular sample we are classifying, or real parameters, if we assume that the observations \((z_{ {i}},y_{ {i}})\) arise from a GMM such that

where mult() denotes the multinomial distribution, and the weights verify \(0\le \pi _{ {k}}\le 1\), \(\sum _{ {k}=1}^{ {K}} \pi _{ {k}} =1\). In accordance with mclust, for model comparison we are not considering \(\pmb {\pi }\) as parameters, although its consideration would only mean adding a constant to all BIC values computed. However, in order to define the theoretical problem, the situation where we are considering \(\pmb {\pi }\) as a parameter is more interesting. If (Z, Y) is a random vector following a distribution \({\mathbb {P}}\) in \(\lbrace 1,\ldots , { {K}}\rbrace \times {\mathbb {R}}^d\), the theoretical problem consists in maximizing

with respect to the parameters \(\pmb \pi , \pmb \mu , {\pmb u},\pmb V, \pmb C\). Given N observations \((z_{ {i}},y_{ {i}}), \ { {i}}=1,\ldots ,N\) of \({\mathbb {P}}\), the problem of maximizing (18) agrees with the sample problem presented above the remark when taking the empirical measure \({\mathbb {P}}_N\), with the obvious relation \(z_{ {i},{ {k}}}={\text {I}}(z_{ {i}}= {k})\). Arguments like those presented in Section A in the Appendix for the cluster analysis problem would give existence and consistency of solutions also in this setting.

4 Real data examples

To illustrate the usefulness of the G-CPC and G-PROP models in both settings, we show four real data examples in which our models outperform the best parsimonious models fitted by mclust, in terms of BIC. The two first examples are intended to illustrate the methods in simple and well-known data sets, while the latter involve greater complexity.

4.1 Cluster analysis: IRIS

Here we revisit the famous Iris data set, which consists of observations of four features (length and width of sepals and petals) of 50 samples of each of three species of Iris (setosa, versicolor and virginica), and is available in the base package of R. We apply the functions of package mclust for model-based clustering, setting the number of clusters to 3, to obtain the best parsimonious model in terms of BIC value. Table 7 compares this model with the models 2-CPC and 2-PROP, fitted with \(c_{sh}=c_{vol}=100\). With some abuse of notation, we include in the table the Model Misclassification (MM), representing here the number of observations assigned to clusters different from their original groups, after matching each cluster with an original group in the natural way.

Fig. 4 Clustering obtained from the 2-PROP model in the Iris data set. Color represents the clusters created. The ellipses are the contours of the estimated mixture densities, grouped into the classes given by indexes in black. Point shapes represent the original groups. Observations lying in clusters different from their original groups are marked with red circles.

From Table 7 we can see that the best clustering model in terms of BIC is the 2-PROP model. Figure 4 shows the clusters created by this model. These clusters coincide with the real groups, except for four observations. This example also illustrates the advantage of the intermediate models G-CPC and G-PROP in terms of interpretability. In the solution found with G-PROP, the covariance matrices associated with two of the three clusters are proportional. Each cluster represents a group of individuals with similar features, which, in the absence of labels, we could see as a subclassification within the Iris species. In this subclassification associated with the groups with proportional covariance matrices, both groups share not only the principal directions, but also the same proportion of variability between the directions. In many biological studies, principal components are of great importance. When working with phenotypic variables, principal components may be interpreted as “growing directions” (see e.g. Thorpe 1983). From the estimated model, we can conclude that in the Iris data it is reasonable to think that there are three groups, two of them with a similar “growing pattern”, since not only the principal components are the same, but also the shape is common. However, this biological interpretation will become even more evident in the following example.

4.2 Discriminant analysis: CRABS

The data set consists of measurements of 5 features on a set of 200 crabs from two species, orange and blue, and from both sexes, and it is available in the R package MASS (Venables and Ripley 2002). For each species and sex (labeled OF, OM, BF, BM) there are 50 observations. The variables are measurements in mm of the following features: frontal lobe (FL), rear width (RW), carapace length (CL), carapace width (CW) and body depth (BD). Applying the classification function of the mclust library, the best parsimonious model in terms of BIC is EEV. Table 8 shows the result for the EEV model, together with the discriminant analysis models 2-CPC and 2-PROP, with \(c_{sh}=c_{vol}=100000\) (with these values, the solutions agree with the unrestricted solutions).

The results show that the comparison given by BIC can differ from those obtained by cross validation techniques, partially because BIC mainly measures the fit of the data to the model. However, in the parsimonious context, model selection is usually performed via BIC, in order to avoid the very time-consuming process of evaluating every possible model with cross validation techniques.

Figure 1 in the Online Supplementary Figures represents the solution estimated by the 2-PROP model. The solution given by this model allows for a better biological interpretation than the one given by the parsimonious model EEV, where orientation varies across the 4 groups, making the comparison quite complex. In the 2-PROP model, the groups of males of both species share proportional matrices, and the same is true for the females. Returning to the biological interpretation of the previous example, under the 2-PROP model we can state that crabs of the same sex have the same “growing pattern”, despite being from different species.

4.3 Cluster analysis: gene expression cancer

In this example, we work with the Gene expression cancer RNA-Seq Data Set, which can be downloaded from the UCI Machine Learning Repository. This data set is part of the data collected by “The Cancer Genome Atlas Pan-Cancer analysis project” (Weinstein et al. 2013). The considered data set consists of a random extraction of gene expressions of patients having different types of tumor: BRCA (breast carcinoma), KIRC (kidney renal clear-cell carcinoma), COAD (colon adenocarcinoma), LUAD (lung adenocarcinoma) and PRAD (prostate adenocarcinoma). In total, the data set contains the information of 801 patients, and for each patient we have information on 20531 variables, which are the RNA sequencing values of 20531 genes. To reduce the dimensionality and to apply model-based clustering algorithms, we have removed the genes with almost zero sum of squares (\(< 10^{-5}\)) and applied PCA to the remaining genes. We have taken the first 14 principal components, the minimum number of components retaining more than 50\(\%\) of the total variance. Applying model-based clustering methods looking for 5 groups to this reduced data set, we have found that 3-CPC, fitted with \(c_{sh}=c_{vol}=1000\), improves the BIC value obtained by the best parsimonious model estimated by mclust. The results obtained from 3-CPC, presented in Table 9, significantly improve on the assignment error made by mclust. Figure 2 in the Online Supplementary Figures shows the projection of the solution obtained by 3-CPC onto the first six principal components computed in the preprocessing steps.
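The preprocessing described above can be sketched as follows. Several details are assumptions, since the text does not specify them: the sum of squares is taken about each gene's mean, the data are centered but not rescaled before PCA, and the function name and arguments are illustrative.

```python
import numpy as np

def reduce_by_pca(X, ss_tol=1e-5, var_fraction=0.5):
    """Drop near-constant columns, then keep the fewest principal components
    whose cumulative share of variance exceeds var_fraction."""
    Xc = X - X.mean(axis=0)
    keep = (Xc ** 2).sum(axis=0) >= ss_tol           # assumed: centered sum of squares
    Xc = Xc[:, keep]
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)              # variance share of each component
    cumulative = np.cumsum(explained)
    # index of the first component at which the cumulative share exceeds the threshold
    q = int(np.searchsorted(cumulative, var_fraction, side="right")) + 1
    scores = U[:, :q] * s[:q]                        # coordinates in the first q components
    return scores, q, keep
```

With the data of this example, such a routine would be applied to the 801 by 20531 expression matrix; the text reports that 14 components are retained.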

4.4 Discriminant analysis: Italian olive oil

The data set contains information about the composition in percentage of eight fatty acids (palmitic, palmitoleic, stearic, oleic, linoleic, linolenic, arachidic and eicosenoic) found in the lipid fraction of 572 Italian olive oils, and it is available in the R package pdfCluster (Azzalini and Menardi 2014). The olive oils are labeled according to a two level classification: 9 different areas that are grouped at the same time in three different regions.

- SOUTH: Apulia North, Calabria, Apulia South, Sicily.

- SARDINIA: Sardinia inland, Sardinia coast.

- CENTRE-NORTH: Umbria, Liguria east, Liguria west.

In this example, we have evaluated the performance of different discriminant analysis models for the problem of classifying the olive oils between areas. The best parsimonious model fitted with mclust is the VVE model, with variable size and shape and equal orientation. Note that due to the dimension \(d=8\), there is a significant difference in the number of parameters between models with common or variable orientation. Therefore, BIC selection will tend to choose models with common orientation, despite the fact that this hypothesis might not be very precise. This suggests that intermediate models could be of great interest also in this example. Given that the last variable, eicosenoic, is almost degenerate in some areas, we fit the models with \(c_{sh}=c_{vol}=10000\), and the shape constraints are effective in some groups. We have found 3 different intermediate models improving the BIC value obtained with mclust. Results are displayed in Table 10.
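A short count may help to see the size of this gap. It relies on the standard fact that a \(d\times d\) orthogonal matrix has \(d(d-1)/2\) free parameters, and it assumes that a G-CPC model estimates G orientation matrices, which is consistent with the degrees of freedom reported in Sect. 3.1. Only orientation parameters are counted, for the \(K=9\) areas:

$$\begin{aligned} \text {one orientation matrix } (d=8):&\quad \frac{d(d-1)}{2} = \frac{8\cdot 7}{2} = 28, \\ \text {variable orientation } (K=9):&\quad 9\cdot 28 = 252, \\ \text {common orientation}:&\quad 1\cdot 28 = 28, \\ \text {3-CPC } (G=3):&\quad 3\cdot 28 = 84 \ . \end{aligned}$$

Under these assumptions, an intermediate model such as 3-CPC pays for 56 additional orientation parameters with respect to common orientation, instead of the 224 required by fully variable orientation.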

The best solution found in terms of BIC is given by the 3-CPC model, which is also the solution with the best values for the other indicators. The classification of the areas in classes given in this solution is:

- CLASS 1: Umbria.

- CLASS 2: Apulia North, Calabria, Apulia South, Sicily.

- CLASS 3: Sardinia inland, Sardinia coast, Liguria east, Liguria west.

Note that the areas in class 2 exactly agree with the areas from the South region. This classification coincides with the separation in classes given by 3-PROP, whereas the 2-PROP model groups together class 1 and class 3. These facts suggest that our intermediate models have been able to take advantage of the apparent difference in the structure of the covariance matrices from the South region and the others. When we are looking for a three-class separation, instead of splitting the areas from the Centre-North and Sardinia into these two regions, all Centre-North and Sardinia areas are grouped together, except Umbria, which forms a group alone. Figure 3 in the Online Supplementary Figures represents the solution in the principal components of the group Umbria, and we can appreciate the characteristics of this area. The plot corresponding to the second and third variables allows us to see clear differences in some of its principal components. Additionally, we can see that it is also the area with the least variability in many directions. In conclusion, a different behavior of the variability in the olive oils from this area seems to be clear. This could be related to the geographical situation of Umbria (the only non-insular and non-coastal area under consideration).

5 Conclusions and further directions

Cluster analysis of structured data opens up interesting research prospects. This fact is widely known and used in applications where the data themselves share some common structure, and thus clustering techniques are a key tool in functional data analysis. More recently, the underlying structures of the data have increased in complexity, leading, for example, to considering probability distributions as data and to using innovative metrics, such as earth-mover or Wasserstein distances. This configuration has been used in cluster analysis, for example, in del Barrio et al. (2019), from a classical perspective, but also including new perspectives such as meta-analysis of procedures or aggregation facilities. Nevertheless, to the best of our knowledge, this is the first occasion in which a clustering procedure is used as a selection (of an intermediate model) step in an estimation problem. Our proposal allows improvements in the estimation process and, arguably, often a gain in the interpretability of the estimation thanks to the chosen framework: classification through the Gaussian Mixture Model.

The presented methodology enhances the so-called parsimonious model by including intermediate models. They are linked to geometrical considerations on the ellipsoids associated with the covariance matrices of the underlying populations that compose the mixture. These considerations are precisely the essence of the parsimonious model. The intermediate models arise from clustering covariance matrices, considered as structured data, using a similarity measure based on the likelihood. Clustering these objects through other similarities could be appropriate when looking for tools for different goals. In particular, we emphasize the possibility of clustering based on metrics like the Bures–Wasserstein distance. The role played here by the BIC would have to be tested in the corresponding configurations or, alternatively, replaced by appropriate penalties for choosing between other hierarchical models.
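For readers unfamiliar with the Bures–Wasserstein distance mentioned above, a minimal sketch of its computation between two covariance matrices follows. It uses the standard closed form \(d(A,B)^2 = {\text {tr}}A + {\text {tr}}B - 2\,{\text {tr}}\bigl (A^{1/2}BA^{1/2}\bigr )^{1/2}\); the function names are illustrative, and nothing here is part of the methodology of the paper.

```python
import numpy as np

def psd_sqrt(A):
    """Symmetric square root of a symmetric positive semidefinite matrix."""
    w, Q = np.linalg.eigh(A)
    return (Q * np.sqrt(np.clip(w, 0.0, None))) @ Q.T

def bures_wasserstein(A, B):
    """Bures-Wasserstein distance between covariance matrices A and B."""
    root_A = psd_sqrt(A)
    cross = psd_sqrt(root_A @ B @ root_A)
    d2 = np.trace(A) + np.trace(B) - 2.0 * np.trace(cross)
    return float(np.sqrt(max(d2, 0.0)))   # guard against tiny negative rounding errors
```

For matrices sharing their principal directions, the distance reduces to the Euclidean distance between the square roots of their eigenvalues, which gives a quick sanity check.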

Feasibility of the proposal is an essential requirement for a serious trial of a statistical tool. The algorithms considered in the paper are simple adaptations of the Classification Expectation Maximization algorithm, but we think that they could still be improved. We will pursue this challenge, looking also for feasible computations for similarities associated with new pre-established objectives.

In summary, throughout the paper we have used clustering to explore similarities between groups according to predetermined patterns. In this wider setup, clustering is not a goal in itself, but it can be an important tool for specialized analyses.

6 Supplementary material

Supplementary figures: Online document with additional graphs for the real data examples. Repository: Github repository containing the R scripts with the algorithms and workflow necessary to reproduce the results of this work. Simulation data of the examples are also included. (https://github.com/rvitores/ImprovingModelChoice).

Notes

The research leading to these results received funding from MCIN/AEI/10.13039/501100011033/FEDER under Grant Agreement No PID2021-128314NB-I00.

References

Azzalini, A., Menardi, G.: Clustering via nonparametric density estimation: the R package pdfCluster. J. Stat. Softw. 57(11), 1–26 (2014). https://doi.org/10.18637/jss.v057.i11

Banfield, J.D., Raftery, A.E.: Model-based Gaussian and non-Gaussian clustering. Biometrics 49(3), 803–821 (1993). https://doi.org/10.2307/2532201

Benaglia, T., Chauveau, D., Hunter, D., et al.: mixtools: an R package for analyzing finite mixture models. J. Stat. Softw. 32(6), 1–29 (2009). https://doi.org/10.18637/jss.v032.i06

Bensmail, H., Celeux, G.: Regularized Gaussian discriminant analysis through eigenvalue decomposition. J. Am. Stat. Assoc. 91(436), 1743–1748 (1996). https://doi.org/10.1080/01621459.1996.10476746

Bezdek, J.C., Hathaway, R.J., Howard, R.E., et al.: Local convergence analysis of a grouped variable version of coordinate descent. J. Opt. Theory Appl. 54(3), 471–477 (1987). https://doi.org/10.1007/bf00940196

Biernacki, C., Govaert, G.: Choosing models in model-based clustering and discriminant analysis. J. Stat. Comput. Simul. 64(1), 49–71 (1999). https://doi.org/10.1080/00949659908811966

Browne, R.P., McNicholas, P.D.: Estimating common principal components in high dimensions. Adv. Data Anal. Classif. 8(2), 217–226 (2014). https://doi.org/10.1007/s11634-013-0139-1

Browne, R.P., McNicholas, P.D.: Orthogonal Stiefel manifold optimization for eigen-decomposed covariance parameter estimation in mixture models. Stat. Comput. 24(2), 203–210 (2014). https://doi.org/10.1007/s11222-012-9364-2

Celeux, G., Govaert, G.: A classification EM algorithm for clustering and two stochastic versions. Comp. Stat. Data Anal. 14(3), 315–332 (1992). https://doi.org/10.1016/0167-9473(92)90042-E

Celeux, G., Govaert, G.: Gaussian parsimonious clustering models. Pattern Recognit. 28(5), 781–793 (1995). https://doi.org/10.1016/0031-3203(94)00125-6

Dean, N., Murphy, T.B., Downey, G.: Using unlabelled data to update classification rules with applications in food authenticity studies. J. R. Stat. Soc. Ser. C Appl. Stat. 55(1), 1–14 (2006). https://doi.org/10.1111/j.1467-9876.2005.00526.x

del Barrio, E., Cuesta-Albertos, J.A., Matrán, C., et al.: Robust clustering tools based on optimal transportation. Stat. Comput. 29(1), 139–160 (2019). https://doi.org/10.1007/s11222-018-9800-z

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B Stat Methodol. 39(1), 1–22 (1977). https://doi.org/10.1111/j.2517-6161.1977.tb01600.x

Flury, B.: Common principal components in k groups. J. Am. Stat. Assoc. 79(388), 892–898 (1984). https://doi.org/10.1080/01621459.1984.10477108

Flury, B.: Common Principal Components and Related Multivariate Models. Wiley, New York (1988)

Flury, B.N., Gautschi, W.: An algorithm for simultaneous orthogonal transformation of several positive definite symmetric matrices to nearly diagonal form. SIAM J. Sci. Comput. 7(1), 169–184 (1986). https://doi.org/10.1137/0907013

Flury, B.W., Schmid, M.J., Narayanan, A.: Error rates in quadratic discrimination with constraints on the covariance matrices. J. Classif. 11, 101–120 (1994). https://doi.org/10.1007/bf01201025

Fraley, C., Raftery, A.E.: Model-based clustering, discriminant analysis, and density estimation. J. Am. Stat. Assoc. 97(458), 611–631 (2002). https://doi.org/10.1198/016214502760047131

Friedman, H.P., Rubin, J.: On some invariant criteria for grouping data. J. Am. Stat. Assoc. 62(320), 1159–1178 (1967). https://doi.org/10.1080/01621459.1967.10500923

Fritz, H., García-Escudero, L.A., Mayo-Iscar, A.: tclust: an R package for a trimming approach to cluster analysis. J. Stat. Softw. 47(12), 1–26 (2012). https://doi.org/10.18637/jss.v047.i12

Fritz, H., García-Escudero, L.A., Mayo-Iscar, A.: A fast algorithm for robust constrained clustering. Comp. Stat. Data Anal. 61, 124–136 (2013). https://doi.org/10.1016/j.csda.2012.11.018

García-Escudero, L.A., Gordaliza, A., Matrán, C., et al.: A general trimming approach to robust cluster analysis. Ann. Stat. 36(3), 1324–1345 (2008). https://doi.org/10.1214/07-AOS515

García-Escudero, L., Gordaliza, A., Matrán, C., et al.: Avoiding spurious local maximizers in mixture modeling. Stat. Comput. 25, 619–633 (2015). https://doi.org/10.1007/s11222-014-9455-3

García-Escudero, L., Gordaliza, A., Greselin, F., et al.: Eigenvalues and constraints in mixture modeling: geometric and computational issues. Adv. Data Anal. Classif. 12, 203–233 (2017). https://doi.org/10.1007/s11634-017-0293-y

García-Escudero, L., Mayo, A., Riani, M.: Model-based clustering with determinant-and-shape constraint. Stat. Comput. 30, 1363–1380 (2020). https://doi.org/10.1007/s11222-020-09950-w

García-Escudero, L.A., Mayo-Iscar, A., Riani, M.: Constrained parsimonious model-based clustering. Stat. Comput. 32(1), 2 (2022). https://doi.org/10.1007/s11222-021-10061-3

Lebret, R., Iovleff, S., Langrognet, F., et al.: Rmixmod: the R package of the model-based unsupervised, supervised, and semi-supervised classification mixmod library. J. Stat. Softw. 67(6), 1–29 (2015). https://doi.org/10.18637/jss.v067.i06

Riani, M., Perrotta, D., Torti, F.: FSDA: a MATLAB toolbox for robust analysis and interactive data exploration. Chemom. Intell. Lab. Syst. 116, 17–32 (2012). https://doi.org/10.1016/j.chemolab.2012.03.017

Scrucca, L., Fop, M., Murphy, T., et al.: mclust 5: clustering, classification and density estimation using gaussian finite mixture models. R J. 8, 205–233 (2016). https://doi.org/10.32614/RJ-2016-021

Thorpe, R.: A review of the numerical methods for recognising and analysing racial differentiation. In: Felsenstein, J. (ed.) Numerical Taxonomy, pp. 404–423. Springer, Berlin (1983). https://doi.org/10.1007/978-3-642-69024-2_43

Venables, W.N., Ripley, B.D.: Modern Applied Statistics With S, 4th edn. Springer, New York (2002)

Weinstein, J.N., Collisson, E.A., Mills, G.B., et al.: The cancer genome atlas pan-cancer analysis project. Nat. Genet. 45, 1113–1120 (2013). https://doi.org/10.1038/ng.2764

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Appendices

Appendix A Theoretical results

In this section we elaborate on the comments of Remark 1. Given a parsimonious model \({\mathscr {M}}\) and fixed values of \( {K},G,c_{vol}\) and \(c_{sh}\), the problem consists in maximizing the function (14) in \(\Theta _{c_{vol},c_{sh}}^{{\mathscr {M}},G}\), the set of parameters \(\pmb {\pi },\pmb {\mu }, {\pmb {u}},\pmb {V},\pmb {C}\) associated with the clustering model G-\({\mathscr {M}}\) verifying the size and shape constraints (13). Using the same notation as in García-Escudero et al. (2020), denote

where \({\mathbb {S}}_{>0}^d\) is the set of positive definite symmetric real matrices. If we define the map

where \(\pmb {\Sigma }\bigl ( {\pmb {u}},\pmb {V},\pmb {C}\bigr )\) is the collection of K covariance matrices created from the parameters \( {\pmb {u}},\pmb {V},\pmb {C}\), it is obvious that \(T( \Theta _{c_{vol},c_{sh}}^{{\mathscr {M}},G} )\subset \Theta _{c_{vol},c_{sh}}\). This and Lemma 1 in García-Escudero et al. (2020) allow us to replicate the proofs of Proposition 1 and Proposition 2 in García-Escudero et al. (2015) to prove the following theorems on the existence and consistency of the solutions.

Theorem 1

If \({\mathbb {P}}\) is a probability that is not concentrated on K points, and \({\text {E}}_{{\mathbb {P}}}\vert \vert \cdot \vert \vert ^2<\infty \), the maximum of (14) is achieved at some \((\pmb {{{\hat{\pi }}}},\pmb {{{\hat{\mu }}}},\pmb { {{\hat{u}}}},\pmb {{\hat{V}}},\pmb {{\hat{C}}} )\in \Theta _{c_{vol},c_{sh}}^{{\mathscr {M}},G}\).

Given \(\lbrace y_{ {i}} \rbrace _{ {i}=1}^\infty \) independent observations of the distribution \({{\mathbb {P}}}\), for each N we can define the empirical distribution \({{\mathbb {P}}}_N=(1 / N)\sum _{ {i}=1}^N \delta _{\left\{ y_{ {i}}\right\} }\). The sample problem of maximizing (14) under the constraint (13) coincides with the distributional problem presented here, when we take the probability \({{\mathbb {P}}}_N\). Therefore, Theorem 1 also guarantees the existence of the solution of the empirical problem corresponding to large enough samples drawn from an absolutely continuous distribution.

We use the notation \(\theta _0\) for any constrained maximizer of the theoretical problem for the underlying distribution \({\mathbb {P}}\), and let

be a sequence of empirical solutions for the sequence of empirical sample distributions \(\lbrace {\mathbb {P}}_N \rbrace _{N=1}^\infty \). The following result states consistency under similar assumptions as in Theorem 1 if the maximizer of the theoretical problem is assumed to be unique.

Theorem 2

Let us assume that \({\mathbb {P}}\) is not concentrated on K points, \({\text {E}}_{{\mathbb {P}}}\vert \vert \cdot \vert \vert ^2<\infty \) and that \(\theta _0 \in \Theta _{c_{vol},c_{sh}}^{{\mathscr {M}},G} \) is the unique constrained maximizer of (14) for \( {\mathbb {P}}\). If \(\lbrace \theta _n \rbrace _{n=1}^\infty \) is a sequence of empirical maximizers of (14) with \(\theta _n \in \Theta _{c_{vol},c_{sh}}^{{\mathscr {M}},G}\), then \(\theta _n \longrightarrow \theta _0 \) almost surely as \(n\rightarrow \infty \).

Appendix B Additional simulations

At the suggestion of a reviewer, we present two additional simulation examples that reinforce the ideas presented in Sect. 3.1. For the sake of brevity, we only give the results for the more involved clustering problem. We point out two basic ideas. Since we have introduced a broader family of models, model selection will be more challenging than within the fourteen parsimonious models. This is clearly seen in the former example, but with a sufficiently large sample size, BIC is still able to select the true model. In the latter example, we emphasize that our extension of the parsimonious model is not redundant.

First, we repeat the two-dimensional simulation experiment described in Sect. 3.1, but assuming the VVE model:

This example allows us to deal with two different situations. The true underlying model satisfies the VVE (1-CPC) model, so it also satisfies the 2-CPC model, but it does not satisfy the 2-PROP model. For a sample with \(n=50\) observations from each group, we compute the VVE, 2-CPC and 2-PROP solutions for clustering. Results are shown in Fig. 5, where we can see that both the VVE and 2-CPC models fit the data perfectly, while the 2-PROP constraint does not allow a good fit. This is also reflected in Table 11, where the BIC values are computed. The best model in terms of BIC is VVE, but 2-CPC is also competitive. 2-PROP gives much worse BIC values.

Finally, as we did in Table 3, the simulations were repeated 1000 times for different sample sizes n. In each simulation, we compare the BIC values obtained for 2-CPC and 2-PROP with the BIC value obtained for the true underlying model VVE. Results are shown in Table 12.

The results are consistent with the ideas set out above. Since the 2-PROP model does not hold, the clustering models fitted under this constraint give a lower BIC value than VVE. The 2-CPC model does hold, but it is more flexible than VVE, and the difference in the number of parameters is only one. Thus, this is a rather complicated setting for model selection. Even in this case, if the sample size n is large enough, BIC is able to select the true model in almost all cases.

The second example is similar to the 2-CPC example in Sect. 3.1, but now in dimension \(d=10\). We consider \(K=6\) distributions, with \(G=2\) classes given by

Parameters were created so that we get a favorable but not trivial situation for applying clustering algorithms. Figure 4 in the Online Supplementary Figures shows a sample created with \(n=100\) observations from each group. For this sample, we fit the clustering model 2-CPC, and we compare it with the best model estimated by mclust. The results of this simulation are given in Table 13.

The main advantage of our intermediate models over the 14 parsimonious models estimated by mclust in this particular example is the following: mclust selects the VVE model, which does not hold exactly, because the VVV model involves a substantially larger number of parameters (395 for clustering, 390 for discriminant analysis). As a consequence, the 2-CPC model achieves a significantly better BIC value. When we repeated the simulation 1000 times with different sample sizes \(n=50,100,200\), our 2-CPC model improved, in terms of BIC, on the best model estimated by mclust in 100% of the simulations, for all the values of n considered.

Appendix C Algorithms

C.1 Optimal truncation

In the algorithms presented, we will repeatedly use the optimal truncation algorithm explained in Section 3.1 in García-Escudero et al. (2020), which was introduced in Fritz et al. (2013).

Given \(d \ge 0\) and a fixed restriction constant \(c \ge 1\), the m-truncated value is defined by

Given \(\left\{ n_{j}\right\} _{{j}=1}^J \in {\mathbb {R}}^J_{>0}\) and \(\left\{ d_{{j} 1}, \ldots , d_{{j} L}\right\} _{{j}=1}^J \in [0, \infty )^{J \times L}\), we define the operator

which returns \(\left\{ d_{{j} 1}^*, \ldots , d_{{j} L}^*\right\} _{{j}=1}^J \in [0, \infty )^{J \times L}\) with \(d^*_{{j} l}=d_{{j} l}^{m_{opt}}\) for \(m_{opt}\) being the optimal threshold value obtained as

Obtaining that optimal threshold value only requires the maximization of a real-valued function and \(m_{opt}\) can be efficiently obtained by performing only \(2 \cdot J \cdot L + 1\) evaluations through a procedure which can be fully vectorized (Fritz et al. 2013).
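The truncation step can be illustrated with a minimal Python sketch. It rests on assumptions that are not stated in this section: the m-truncated value is taken to be \(d\) clipped to the interval \([m, c\,m]\), and \(m_{opt}\) is taken to minimize \(\sum _j n_j \sum _l \bigl (\log d_{jl}^m + d_{jl}/d_{jl}^m\bigr )\) (equivalently, to maximize its negative). The function names `truncate`, `objective` and `optimal_truncation` are hypothetical, all entries are assumed strictly positive, and the threshold is found by a crude grid search rather than by the exact \(2 \cdot J \cdot L + 1\) evaluation procedure of Fritz et al. (2013).

```python
import math

def truncate(d, m, c):
    """Assumed m-truncated value of d: clip d to the interval [m, c*m]."""
    return min(max(d, m), c * m)

def objective(m, n, D, c):
    """Assumed criterion: sum_j n_j * sum_l (log(t) + d/t), t = truncated d."""
    total = 0.0
    for n_j, row in zip(n, D):
        for d in row:
            t = truncate(d, m, c)
            total += n_j * (math.log(t) + d / t)
    return total

def optimal_truncation(n, D, c, grid_size=2000):
    """Grid-search stand-in for OT_c; requires strictly positive entries in D.

    n : list of J positive weights
    D : list of J rows, each with L positive values
    """
    flat = [d for row in D for d in row]
    lo, hi = min(flat) / c, max(flat)
    # Log-spaced candidate thresholds between min(d)/c and max(d).
    grid = [lo * (hi / lo) ** (i / (grid_size - 1)) for i in range(grid_size)]
    m_opt = min(grid, key=lambda m: objective(m, n, D, c))
    return [[truncate(d, m_opt, c) for d in row] for row in D]
```

By construction every returned value lies in \([m_{opt}, c\,m_{opt}]\), so the ratio between the largest and the smallest output never exceeds \(c\); values that already satisfy the constraint are left unchanged whenever the grid contains an admissible threshold.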

In the algorithms of the following sections, when working with proportionality models, we will minimize in several situations a function of the type

where \(\beta \) is an orthogonal matrix with columns \(\beta _l,l=1,\ldots ,d\), \(\gamma _1,\ldots ,\gamma _r\) are size parameters verifying the size constraint for \(c_{vol}\), and \(\lambda _1, \ldots , \lambda _d\) are the common shape parameters verifying the shape constraint for \(c_{sh}\) and \(\prod _{l=1}^d \lambda _l=1\). In this situation, the minimization can be carried out iteratively, taking into account that:

- With the sizes and shapes fixed, the minimization with respect to \(\beta \) can be done with the algorithms proposed in Browne and McNicholas (2014b).

- With the orientation and shapes fixed, the optimal unconstrained values of the sizes are

$$\begin{aligned}\gamma _{ {k}}^{opt} = \frac{1}{d} \sum _{l=1}^d \frac{\beta _{l}^T S_{ {k}} \beta _l}{\lambda _{l}} \quad {k}=1,\ldots ,r.\end{aligned}$$

Therefore, the optimal restricted values for the sizes are \( {\text {OT}}_{c_{vol}} \Bigl ( \lbrace n_{ {k}} \rbrace _{ {k}=1}^r; \lbrace \gamma _{ {k}}^{opt} \rbrace _{ {k}=1}^r\Bigr )\).

- With the orientation and sizes fixed, the optimal unconstrained values of the shapes are

$$\begin{aligned}\lambda _l^{opt} = \frac{1}{N} \sum _{ {k}=1}^r n_{ {k}} \frac{\beta _{l}^T S_{ {k}} \beta _l}{\gamma _{ {k}}} \quad l=1,\ldots ,d.\end{aligned}$$

The optimal values verifying the constraint \(c_{sh}\) are \( {\text {OT}}_{c_{sh}} \Bigl ( \lbrace 1 \rbrace ; \lbrace \lambda _1^{opt},\ldots ,\lambda _d^{opt} \rbrace \Bigr )\), and, by the reasoning in Section 3.3 in García-Escudero et al. (2020), the optimal values also verifying \(\prod _{l=1}^d \lambda _l=1\) are obtained by normalizing the result of the optimal truncation operator.
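The size and shape updates above can be sketched in plain Python. This is only an illustration under stated assumptions: the constraints are ignored (which corresponds to \(c_{vol}=c_{sh}=\infty \)), \(N\) is taken to be \(\sum _k n_k\), matrices are stored as lists of rows, `beta_cols` holds the columns \(\beta _1,\ldots ,\beta _d\), and the function names are hypothetical.

```python
import math

def quad_form(b, S):
    """Return b^T S b for a vector b and a square matrix S (list of rows)."""
    d = len(b)
    return sum(b[i] * S[i][j] * b[j] for i in range(d) for j in range(d))

def update_sizes(S_list, beta_cols, lam):
    """Unconstrained sizes: gamma_k = (1/d) * sum_l beta_l^T S_k beta_l / lambda_l."""
    d = len(lam)
    return [
        sum(quad_form(b, S) / l for b, l in zip(beta_cols, lam)) / d
        for S in S_list
    ]

def update_shapes(S_list, n, beta_cols, gamma):
    """Unconstrained common shapes, rescaled so that their product equals 1."""
    N = sum(n)  # assumption: N is the total sample size
    lam = [
        sum(n_k * quad_form(b, S) / g for S, n_k, g in zip(S_list, n, gamma)) / N
        for b in beta_cols
    ]
    scale = math.prod(lam) ** (1.0 / len(lam))
    return [l / scale for l in lam]
```

When every \(S_k\) is exactly of the form \(\gamma _k \beta \,{\text {diag}}(\lambda _1,\ldots ,\lambda _d)\,\beta ^T\) with \(\prod _l \lambda _l=1\), both functions return the generating parameters, which gives a quick sanity check of the formulas.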

When working with CPC models, we will often need to minimize a slightly different type of function:

In this case, we can repeat analogous comments for the minimization with respect to the sizes and the orientation matrix. For the shape matrices:

- With the orientation and sizes fixed, the optimal unconstrained values of the shapes are

$$\begin{aligned}\lambda _{ {k},l}^{opt} = \frac{\beta _{l}^T S_{ {k}} \beta _l}{\gamma _{ {k}}} \quad {k}=1,\ldots ,r,\ l=1,\ldots ,d.\end{aligned}$$

For each \( {k}=1,\ldots ,r\), the optimal values verifying the constraint \(c_{sh}\) are the result of the operator \( {\text {OT}}_{c_{sh} } \Bigl ( \lbrace 1 \rbrace ; \lbrace \lambda _{ {k},1}^{opt},\ldots ,\lambda _{ {k},d}^{opt} \rbrace \Bigr )\), and the optimal values also verifying \(\prod _{l=1}^d \lambda _{ {k},l}=1\) are obtained by normalizing the result of that truncation.
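For the CPC case, the per-matrix shape update can be sketched as follows. The sketch again omits the truncation (that is, it assumes \(c_{sh}=\infty \)), and the function name `cpc_shapes` is hypothetical.

```python
import math

def cpc_shapes(S, beta_cols, gamma=1.0):
    """Shapes of one matrix S along common directions, normalized to product 1.

    S         : covariance matrix as a list of rows
    beta_cols : list of the d columns beta_1, ..., beta_d of the orientation
    gamma     : size parameter of this matrix
    """
    d = len(beta_cols)
    # lambda_l = beta_l^T S beta_l / gamma
    lam = [
        sum(b[i] * S[i][j] * b[j] for i in range(d) for j in range(d)) / gamma
        for b in beta_cols
    ]
    scale = math.prod(lam) ** (1.0 / d)
    return [l / scale for l in lam]
```

Without the truncation, the normalization cancels the factor \(1/\gamma _k\), so the normalized shapes do not depend on the size parameter.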

C.2 Classification G-CPC/G-PROP

In this section we are going to develop the algorithms for the covariance matrices classification models G-CPC and G-PROP minimizing (8) and (9). Since these algorithms are included in the algorithms for cluster analysis, determinant and shape constraints are also included. When focusing on the original problem of Sect. 2, these constraints should be omitted, which can be done taking \(c_{vol}=c_{sh}=\infty \). The input of the algorithm is

where \(S_1,\ldots ,S_{ {K}}\) are the sample covariance matrices, \(n_1,\ldots ,n_{ {K}}\) the sample sizes, G the number of classes, \(c_{vol},c_{sh}\) the values of the constants for the determinant and shape constraints and \(nstart_1\) the number of random initializations. The parameters of the minimization are \( {\pmb {u}}=( {u}_1,\ldots , {u}_{ {K}})\), \(\pmb {\gamma }=(\gamma _1,\ldots ,\gamma _{ {K}})\), \(\pmb {\beta }=(\beta _1,\ldots ,\beta _G)\) and \(\pmb {\Lambda }=(\Lambda _1,\ldots ,\Lambda _s)\), where \(s= {K}\) in G-CPC and \(s=G\) in G-PROP, and they are also the output of the algorithm. A detailed presentation of the algorithm is given as follows:

- 1.:

-

Initialization: We start by taking a random vector of indexes \(\pmb { {u}^0} \in {\mathscr {H}}\). Then we take:

- \(\pmb {\beta ^0:}\):

-

For each \(g = 1,\ldots ,G\), we take some k such that \( {u}_{ {k}}^0=g\), and we define \(\beta _g^0\) as the matrix of eigenvectors of \(S_{ {k}}\).

- \(\pmb {\Lambda ^0:}\):

-

\(\rightarrow \) G-PROP: For each \(g=1,\ldots ,G\), taking the same k as before,

$$\begin{aligned} \Lambda _g^0 =&{\text {OT}}_{c_{sh}}\Bigl ( \lbrace 1 \rbrace ;{\text {diag}}(\beta _{g}^TS_{ {k}}\beta _{g})\Bigr ), \\ \Lambda _g^0 =&\frac{\Lambda _g^0 }{{\text {prod}}(\Lambda _g^0 )^{1/d}} \ . \end{aligned}$$

\(\rightarrow \) G-CPC: For each \( {k}=1,\ldots , {K}\),

$$\begin{aligned} \Lambda _{ {k}}^0 =&{\text {OT}}_{c_{sh}}\Bigl ( \lbrace 1 \rbrace ;{\text {diag}}\bigl ( \beta _{ {u}_{ {k}}^0}^TS_{ {k}}\beta _{ {u}_{ {k}}^0}\bigr )\Bigr ), \\ \Lambda _{ {k}}^0 =&\frac{\Lambda _{ {k}}^0 }{{\text {prod}}(\Lambda _{ {k}}^0 )^{1/d}} \ . \end{aligned}$$

- \(\pmb {\gamma ^0:}\):

-

For each \( {k}=1,\ldots , {K}\),

$$\begin{aligned}&\rightarrow \text {G-PROP:} \ {}&\gamma _{ {k}}^0 = \frac{1}{d}{\text {tr}}\Bigl ((\Lambda _{ {u}_{ {k}}^0}^0)^{-1}\beta _{{ {u}_{ {k}}^0}}^TS_{ {k}}\beta _{{ {u}_{ {k}}^0}}\Bigr ) \\&\rightarrow \text {G-CPC:}\&\gamma _{ {k}}^0 = \frac{1}{d}{\text {tr}}\Bigl ((\Lambda _{ {k}}^0)^{-1}\beta _{{ {u}_{ {k}}^0}}^TS_{ {k}}\beta _{{ {u}_{ {k}}^0}}\Bigr ) \ . \end{aligned}$$

Constrained values:

$$\begin{aligned} (\gamma _1^0,\ldots ,\gamma _{ {K}}^0)={\text {OT}}_{c_{vol}} \Bigl ( \lbrace n_{ {k}} \rbrace _{ {k}=1}^{ {K}}; \lbrace \gamma _{ {k}}^{0} \rbrace _{ {k}=1}^{ {K}}\Bigr ).\end{aligned}$$

- 2.:

-

Iterations: The following steps are repeated until convergence:

-

u-V step: Based on the current parameters \(\pmb { {u}^m},\pmb {\gamma ^m},\pmb {\beta ^m},\pmb {\Lambda ^m}\), we optimize with respect to \(\pmb { {u}}\) and the variable parameters of each parsimonious model. The variable parameters are optimized again in the following step, so their values are not updated here. The size parameters \(\pmb {\gamma }\) do not affect the selection of the best \( {\pmb u}\), so it is enough to find, for each \( {k}=1,\ldots , {K}\), the value of \( {u}_{ {k}}\) whose common parameters \(C_{ {u_k}}\) give the lowest value in the minimization with respect to the variable parameters of

$$\begin{aligned} R(\beta ,\Lambda )=\sum _{l=1}^d \frac{\beta _{l}^T S_{ {k}} \beta _{l}}{\lambda _{l}}. \end{aligned}$$

\(\rightarrow \) G-PROP: The parameters \(\pmb \Lambda , \pmb \beta \) are common, we are only minimizing with respect to \( {\pmb u}\). For each \( {k} = 1,\ldots , {K}\),

$$\begin{aligned} {u}_{ {k}}^{m+1} = \underset{g\in \lbrace 1,\ldots ,G\rbrace }{{\text { {argmin}}}}\quad R(\beta _g^m,\Lambda _g^m) \ . \end{aligned}$$

\(\rightarrow \) G-CPC: The parameters \(\pmb \beta \) are common. For each \( {k}=1,\ldots , {K}\),

$$\begin{aligned} \tilde{\Lambda }_{ {k},g}=&{\text {OT}}_{c_{sh}}\Bigl ( \lbrace 1 \rbrace ;{\text {diag}}\bigl ( (\beta _{g}^m)^T S_{ {k}}\beta _{g}^m\bigr )\Bigr ) \ , \\ \tilde{\Lambda }_{ {k},g} =&\frac{\tilde{\Lambda }_{ {k},g} }{{\text {prod}}(\tilde{\Lambda }_{ {k},g} )^{1/d}} \ , \\ {u}_{ {k}}^{m+1} =&\underset{g\in \lbrace 1,\ldots ,G\rbrace }{{\text { {argmin}}}}\quad R(\beta _g^m,\tilde{\Lambda }_{ {k},g}) \ .\end{aligned}$$

-

V-C step: Based on the current parameters \(\pmb { {u}^{m+1}},\pmb {\gamma ^m},\pmb {\beta ^m},\pmb {\Lambda ^m}\), we are going to optimize with respect to \(\pmb {\gamma },\pmb {\beta },\pmb {\Lambda }\). This optimization requires iterations. Setting \(s=0\), and considering the initial solutions

$$\begin{aligned} \pmb {{{\bar{\gamma }}}^0 }= \pmb {\gamma ^{m}} \quad \quad \pmb {{{\bar{\beta }}}^0 }= \pmb {\beta ^{m}} \quad \quad \pmb {{{\bar{\Lambda }}}^0 }= \pmb {\Lambda ^{m}} \, \end{aligned}$$

the following steps are repeated until convergence:

- \(\pmb {s:}\):

-

\(s= s + 1\)

- \(\pmb {{{\bar{\gamma }}}^{s}:}\):

-

Update the size parameters. For each \( {k}=1,\ldots , {K}\),

\(\rightarrow \) G-PROP:

$$\begin{aligned} {{\bar{\gamma }}}_{ {k}}^{s} =\frac{1}{d}{\text {tr}}\Bigl (({{\bar{\Lambda }}}_{ {u}_{ {k}}^{m+1}}^{s-1})^{-1}({{\bar{\beta }}}_{ {u}_{ {k}}^{m+1}}^{s-1})^TS_{ {k}}{{\bar{\beta }}}_{ {u}_{ {k}}^{m+1}}^{s-1}\Bigr ) \ . \end{aligned}$$

\(\rightarrow \) G-CPC:

$$\begin{aligned} {{\bar{\gamma }}}_{ {k}}^{s} = \frac{1}{d}{\text {tr}}\Bigl (({{\bar{\Lambda }}}_{ {k}}^{s-1})^{-1}({{\bar{\beta }}}_{ {u}_{ {k}}^{m+1}}^{s-1})^T S_{ {k}}{{\bar{\beta }}}_{ {u}_{ {k}}^{m+1}}^{s-1}\Bigr ) \ . \end{aligned}$$

Then we apply the size constraint:

$$\begin{aligned} \pmb {{{\bar{\gamma }}}^{s}}={\text {OT}}_{c_{vol}} \Bigl ( \lbrace n_{ {k}} \rbrace _{ {k}=1}^{ {K}}; \lbrace {{\bar{\gamma }}}_{ {k}}^s \rbrace _{ {k}=1}^{ {K}}\Bigr ).\end{aligned}$$

- \(\pmb {{{\bar{\Lambda }}}^{s}:}\):

-

Update the shape parameters.

\(\rightarrow \) G-PROP: For each \(g=1,\ldots ,G\),

$$\begin{aligned} {{{\bar{\Lambda }}}_g^{s}}&={\text {OT}}_{c_{sh}}\Biggl ( \lbrace 1 \rbrace ; \\&{\text {diag}}\Biggl (\frac{1}{N} \sum _{ {k}: {u}_{ {k}}^{m+1}=g} n_{ {k}} \frac{({{\bar{\beta }}}_{g}^{s-1})^T S_{ {k}} {{\bar{\beta }}}_g^{s-1}}{{{\bar{\gamma }}}_{ {k}}^s}\Biggr ) \Biggr ), \\ {{{\bar{\Lambda }}}_g^{s}}&= \frac{{{{\bar{\Lambda }}}_g^{s}}}{{\text {prod}}({{{\bar{\Lambda }}}_g^{s}})^{1/d}} \ . \end{aligned}$$

\(\rightarrow \) G-CPC: For each \( {k}=1,\ldots , {K}\),