Abstract

Either intentionally or unintentionally, sub-sampling is a common occurrence in image processing and can lead to aliasing if the highest frequency in the underlying process is higher than the Nyquist frequency. Several techniques have already been suggested to prevent aliasing from occurring (for example, applying anti-aliasing filters); however, there is little work describing methods to detect it. Recently, Eckley and Nason (Biometrika 105(4), 833–848, 2018) developed a test for the absence of aliasing and/or white noise in locally stationary wavelet processes. Following Eckley and Nason (Biometrika 105(4), 833–848, 2018), we derive the corresponding theoretical consequences of sub-sampling a two-dimensional locally stationary wavelet process and develop a procedure to test for the absence of aliasing and/or white noise confounding at a fixed point, demonstrating its effectiveness and use through appropriate simulation studies and an example. In addition, we outline some possibilities for extending these methods further, from images to videos.

1 Introduction

When sampling a time series at a fixed rate, aliasing occurs if the Nyquist frequency is lower than the highest frequency in the process: high-frequency components cannot be distinguished from low-frequency ones because the sampling rate is not high enough. This can lead to unreliable analyses and distorted spectral and autocovariance estimates. For example [see Priestley (1983)], when sampling a real-valued stationary process X(t) with non-normalised spectral density function \(h_X(\omega )\) at intervals \(\Delta t\), we obtain a new process \(Y_t = X(t\cdot \Delta t)\) with non-normalised spectral density function

$$\begin{aligned} h_Y(\omega ) = \sum _{k=-\infty }^{\infty } h_X\left( \omega + \frac{2\pi k}{\Delta t}\right) , \qquad |\omega |\le \frac{\pi }{\Delta t}. \end{aligned}$$ (1)

Hence, if the sampling rate is too low, without prior knowledge, high frequency information will be indistinguishable from low frequency components in the spectrum.
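As a small numerical illustration of this folding (a sketch of our own, not part of the original analysis), the Python snippet below assumes a unit sampling interval, \(\Delta t = 1\), so that the Nyquist frequency is 0.5 cycles per sample. A cosine at 0.8 cycles per unit time then produces the same samples as a cosine at 0.2 cycles per unit time, and its discrete Fourier transform places all of its power at the folded frequency 0.2.

```python
import numpy as np

# Unit sampling interval (Delta t = 1): the Nyquist frequency is 0.5 cycles per sample.
t = np.arange(100)
f_high = 0.8             # above the Nyquist frequency
f_alias = 1.0 - f_high   # the frequency it folds onto: 0.2 cycles per sample

x_high = np.cos(2 * np.pi * f_high * t)
x_low = np.cos(2 * np.pi * f_alias * t)

# At the sample points the two cosines coincide (up to floating-point rounding).
print(np.max(np.abs(x_high - x_low)))

# The periodogram of the under-sampled 0.8 signal peaks at the folded frequency.
freqs = np.fft.rfftfreq(t.size)
peak = freqs[np.argmax(np.abs(np.fft.rfft(x_high)))]
print(peak)  # close to 0.2
```

Without knowledge of the original frequency, nothing in the sampled values distinguishes the two signals, which is exactly the ambiguity described above.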

Images may be intentionally down-sampled to save memory or processing time. For example, convolutional neural networks often down-sample images in various layers; the effects of this are explored in Ribeiro and Schön (2021) and Vasconcelos et al. (2020, 2021), with the latter two also describing the benefits of applying anti-aliasing filters at certain locations within the network architecture. Recently, Li et al. (2021) even suggest applying wavelets to convolutional neural networks in order to counteract the effects of aliasing. This suggests that aliasing can affect the performance of these models. As another example, consider autonomous vehicles. These systems often have to carry out many tasks simultaneously with a limited amount of memory and computing power (Liu et al. 2019, 2021). Due to these limitations, the sampling rate of a video feed might be set too low, or might be lowered deliberately to free computing power for other tasks. As a result, aliasing may be induced, which in turn may have a serious impact if critical high-frequency information is confounded. The ability to detect aliasing would allow such systems to adjust their sampling rate accordingly.

Aliasing can be prevented if one knows the highest frequency in the process prior to sampling it, but this is rare in practice. Alternatively, one could apply anti-aliasing (low-pass) filters before sampling, as in the convolutional neural network example above (Vasconcelos et al. 2021, 2020); these attenuate frequencies above the Nyquist frequency before the process is sampled. However, this is not always applicable and, since practical filters are not ideal, it is not guaranteed to prevent aliasing. Aliasing and possible methods to prevent it are described in more detail in Priestley (1983), Gonzalez and Woods (2018) and Burger and Burge (2010). Since it is not always possible to prevent aliasing, developing a test to detect it becomes crucial.

Some methods for detecting aliasing have already been developed. For example, Hinich and Wolinsky (1988) use the bispectrum (Hinich and Messer 1995) of a signal to formulate a test for aliasing, based on a modified version of the Hinich bispectrum test for Gaussianity. More recently, Coulange and Moisan (2010) use the Fourier domain of an image to develop an aliasing detection algorithm. Alternatively, Patney and Lefohn (2018) and Douglas (2012) propose using deep neural networks and non-linear factor analysis, respectively, to detect aliasing in a sequence of images. Our approach differs in that we use locally stationary wavelet processes and do not require a sequence of images to carry out our test.

Locally stationary wavelet processes were first introduced in Nason (2000) and were used in order to develop a test for the absence of aliasing and/or white noise confounding in locally stationary wavelet time series in Eckley and Nason (2018). In this paper, we extend these methods to two dimensions with a specific application to images.

The PhD thesis of Gott (2012) proposed extending the approach of Eckley and Nason (2018), but only did so for Shannon wavelet processes, assumed that identical dyadic sub-sampling was carried out in both directions, and used an invalid testing regime (which was subsequently fixed in Eckley and Nason (2018)). Using Shannon wavelets considerably simplifies the mathematics underlying the problem, but the assumption is probably far too strong in practice, as these wavelets are infinite in extent. Likewise, assuming identical sub-sampling in the horizontal and vertical image directions is strong, since one is usually uncertain of how the image has been processed before acquisition. Our work extends the methodology to Daubechies’ (Daubechies 1992) compactly supported wavelets, permits different dyadic sub-sampling in the horizontal and vertical directions, and provides a rigorous testing framework inherited from Eckley and Nason (2018).

This paper is organised as follows: Sect. 2 provides a review of the necessary background material on locally stationary wavelet processes, Sect. 3 derives the theoretical consequences of sub-sampling on two-dimensional locally stationary wavelet processes and the raw wavelet periodogram, Sect. 4 outlines the test procedure, Sect. 5 demonstrates the test using simulation studies, Sect. 6 gives an example and finally Sect. 7 outlines some possibilities for extending this procedure from images to videos.

2 Locally stationary wavelet processes

We review the main concepts from the theory of locally stationary wavelet processes as described by Nason (2000). These were later extended to two dimensions in Eckley (2001). For more information on locally stationary wavelet processes, please see Nason (2000), Eckley and Nason (2018), Eckley (2001) or the book by Nason (2008).

Definition 1

(One-dimensional discrete mother and father wavelets). Let \(\{h_n\}\) and \(\{g_n\}\) be the low- and high-pass quadrature mirror filters used in the construction of the Daubechies (1992) compactly supported orthogonal continuous time wavelets. The one-dimensional discrete mother and father wavelets at scale j, denoted by \(\psi _j=(\psi _{j,n})_{n=0, \ldots ,N_j-1}\) and \(\phi _j=(\phi _{j,n})_{n=0, \ldots ,N_j-1}\) respectively, are \(N_j\)-dimensional vectors obtained using the following formulae

$$\begin{aligned} \psi _{1,n}&= \sum _k g_{n-2k}\delta _{0,k} = g_n, \qquad \psi _{j+1,n} = \sum _k h_{n-2k}\psi _{j,k},\\ \phi _{1,n}&= \sum _k h_{n-2k}\delta _{0,k} = h_n, \qquad \phi _{j+1,n} = \sum _k h_{n-2k}\phi _{j,k}, \end{aligned}$$

where \(N_j = (2^j-1)(N_h-1)+1\) and \(N_h\) denotes the number of non-zero elements of \(\{h_k\}\).
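The recursion behind Definition 1 can be checked numerically. The Python sketch below is our own illustration; it assumes the standard construction \(\psi _{1,n} = g_n\), \(\phi _{1,n} = h_n\), \(\psi _{j+1,n} = \sum _k h_{n-2k}\psi _{j,k}\) and \(\phi _{j+1,n} = \sum _k h_{n-2k}\phi _{j,k}\), and the function name `discrete_wavelets` is hypothetical. Up-sampling the previous scale by inserting zeros and convolving with \(\{h_n\}\) implements the sum over k, and the resulting vectors have length \(N_j = (2^j-1)(N_h-1)+1\).

```python
import numpy as np

def discrete_wavelets(h, g, J):
    """Discrete mother (psi_j) and father (phi_j) wavelets for scales j = 1, ..., J."""
    h = np.asarray(h, dtype=float)
    psi = [np.asarray(g, dtype=float)]   # psi_1 = g
    phi = [h.copy()]                     # phi_1 = h
    for _ in range(J - 1):
        for seq in (psi, phi):
            prev = seq[-1]
            up = np.zeros(2 * prev.size - 1)
            up[::2] = prev                  # up[2k] = prev[k], zeros in between
            seq.append(np.convolve(h, up))  # entry n equals sum_k h_{n-2k} prev_k
    return psi, phi

# Haar filters (the Daubechies wavelet with one vanishing moment).
s = 1 / np.sqrt(2)
psi, phi = discrete_wavelets([s, s], [s, -s], J=3)
print(psi[1])                  # approximately [0.5, 0.5, -0.5, -0.5]
print([p.size for p in psi])   # [2, 4, 8], i.e. N_j = 2**j for Haar
```

For other Daubechies filters the same function applies unchanged; a convenient sanity check is that every \(\psi _j\) and \(\phi _j\) has unit Euclidean norm.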

Definition 2

(Two-dimensional discrete wavelets). The two-dimensional discrete mother wavelets at scale \(j\in {\mathbb {N}}\), denoted by \(\psi _j^\ell = (\psi _{j,u,v}^\ell )_{u,v\in {\mathbb {Z}}}\), for \(\ell =h,v,d\) corresponding to horizontal, vertical and diagonal directions respectively, are \(N_j\times N_j\) dimensional matrices obtained using the following formulae

where \(\psi _{j,n}\) and \(\phi _{j,n}\) are the one-dimensional mother and father wavelets respectively.
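The two-dimensional wavelets are tensor products of the one-dimensional mother and father wavelets. Which product is labelled horizontal and which vertical differs between references, so the Python sketch below (our own, with hypothetical function names) simply assumes \(h = \phi _j\psi _j^T\), \(v = \psi _j\phi _j^T\) and \(d = \psi _j\psi _j^T\). For Haar wavelets at scale \(j=2\), each resulting \(4\times 4\) matrix has unit Frobenius norm and sums to zero.

```python
import numpy as np

def discrete_wavelets(h, g, J):
    """Discrete wavelets psi_j, phi_j (j = 1..J) via psi_{j+1,n} = sum_k h_{n-2k} psi_{j,k}."""
    h = np.asarray(h, dtype=float)
    psi, phi = [np.asarray(g, dtype=float)], [h.copy()]
    for _ in range(J - 1):
        for seq in (psi, phi):
            up = np.zeros(2 * seq[-1].size - 1)
            up[::2] = seq[-1]              # insert a zero between consecutive coefficients
            seq.append(np.convolve(h, up))
    return psi, phi

def wavelets_2d(psi_j, phi_j):
    """Tensor-product 2-D wavelets at one scale.

    ASSUMED labelling (conventions differ between references):
    h = phi_j psi_j^T, v = psi_j phi_j^T, d = psi_j psi_j^T.
    """
    return {
        "h": np.outer(phi_j, psi_j),
        "v": np.outer(psi_j, phi_j),
        "d": np.outer(psi_j, psi_j),
    }

s = 1 / np.sqrt(2)
psi, phi = discrete_wavelets([s, s], [s, -s], J=2)
W2 = wavelets_2d(psi[1], phi[1])   # scale j = 2: three 4 x 4 matrices
for name, w in W2.items():
    print(name, w.shape, round(float(np.sum(w ** 2)), 6))   # each has unit norm
```

Because \(\phi _j\) and \(\psi _j\) are orthogonal, the horizontal and vertical wavelets at the same scale are also orthogonal to one another.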

Remark 1

Combining Definitions 1 and 2, we can obtain the following relations for the two-dimensional discrete wavelets

for \(n_1,n_2 = 0,\ldots , N_{j+1}-1\) for level \(j+1\) and \(N_j\) the same as in Definition 1.

Definition 3

(Two-dimensional locally stationary wavelet process). A two-dimensional locally stationary wavelet process is a sequence of stochastic processes \(\{X_{r,s}\}\), \(r=0,\ldots , R-1,\) \(s=0,\ldots , S-1\), with the representation

$$\begin{aligned} X_{r,s} = \sum _{\ell } \sum _{j=1}^\infty \sum _{u,v\in {\mathbb {Z}}} w_{j,u,v}^\ell \psi _{j,u-r,v-s}^\ell \xi _{j,u,v}^\ell , \end{aligned}$$

where \(\{\xi _{j,u,v}^\ell \}\) are uncorrelated mean zero random variables with unit variance, \(\{\psi _{j,u,v}^\ell \}\) are two-dimensional discrete mother wavelets and \(\{w_{j,u,v}^\ell \}\) are amplitudes satisfying the following conditions: for all \(j\in {\mathbb {N}}\) and \(\ell =h,v,d\), there exists a Lipschitz continuous function \(W_j^\ell :(0,1)\times (0,1)\to {\mathbb {R}}\) such that

(i) \(\sum _\ell \sum _{j=1}^\infty |W_j^\ell ({\varvec{z}})|^2 < \infty \) uniformly in \({\varvec{z}}\in (0,1)\times (0,1)\),

(ii) the Lipschitz constants \(L_j^\ell \), corresponding to the functions \(W_j^\ell \), are uniformly bounded in j and \(\ell \) and \(\sum _\ell \sum _{j=1}^\infty 2^j L_j^\ell <\infty \),

(iii) there exist \(\{C_j^\ell \}\) such that \(\sum _\ell \sum _{j=1}^\infty C_j^\ell <\infty \) and, for each R and S,

$$\begin{aligned} \sup _{u,v}\left| w_{j,u,v}^\ell - W_j^\ell \left( \frac{u}{R},\frac{v}{S}\right) \right| \le \frac{C_j^\ell }{\max (R,S)}, \end{aligned}$$ (12)

where, for each \(j\in {\mathbb {N}}\) and \(\ell =h,v,d\), the supremum is taken over \(u=0,\ldots , R-1\) and \(v = 0,\ldots , S-1\).

Definition 4

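(Evolutionary Wavelet Spectrum). The evolutionary wavelet spectrum of the locally stationary wavelet process \(X_{r,s}\) is defined as

$$\begin{aligned} S_j^\ell ({\varvec{z}}) = |W_j^\ell ({\varvec{z}})|^2, \end{aligned}$$

for \(j\in {\mathbb {N}}\), \(\ell =h,v,d\) and \(\varvec{z}\in (0,1)\times (0,1).\)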

Definition 5

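(One-dimensional discrete autocorrelation wavelets). The one-dimensional discrete autocorrelation wavelet and scaling functions are given by

$$\begin{aligned} \Psi _j(\tau ) = \sum _{n} \psi _{j,n}\psi _{j,n+\tau } \qquad \text {and}\qquad \Phi _j(\tau ) = \sum _{n} \phi _{j,n}\phi _{j,n+\tau }, \end{aligned}$$

respectively, for \(\tau \in {\mathbb {Z}}\) and \(j\in {\mathbb {N}}\).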
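The autocorrelation wavelets of Definition 5 are ordinary discrete autocorrelations, so NumPy's `np.correlate` with `mode="full"` computes them directly. The sketch below (our own illustration using Haar wavelets; the helper name `autocorrelation` is hypothetical) assumes the usual definition \(\Psi _j(\tau ) = \sum _n \psi _{j,n}\psi _{j,n+\tau }\) and returns the lags \(\tau = -(N_j-1),\ldots ,N_j-1\) alongside the values.

```python
import numpy as np

def autocorrelation(x):
    """Lags tau = -(N-1), ..., N-1 and values sum_n x_n x_{n+tau} for a length-N vector."""
    x = np.asarray(x, dtype=float)
    values = np.correlate(x, x, mode="full")   # symmetric in tau, so the lag direction is immaterial
    lags = np.arange(-(x.size - 1), x.size)
    return lags, values

s = 1 / np.sqrt(2)
psi_1 = np.array([s, -s])                  # Haar psi_1 = g
phi_1 = np.array([s, s])                   # Haar phi_1 = h
psi_2 = np.array([0.5, 0.5, -0.5, -0.5])   # Haar psi_2

lags, Psi_1 = autocorrelation(psi_1)
_, Phi_1 = autocorrelation(phi_1)
_, Psi_2 = autocorrelation(psi_2)
print(lags, Psi_1, Phi_1)   # lags [-1 0 1]; Psi_1 approx [-0.5 1 -0.5]; Phi_1 approx [0.5 1 0.5]
print(Psi_2)                # approx [-0.25 -0.5 0.25 1 0.25 -0.5 -0.25]
```

Each \(\Psi _j\) equals one at lag zero (unit-norm wavelets) and sums to zero over all lags, since \(\sum _n \psi _{j,n} = 0\).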

Definition 6

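(One-dimensional inner product operators). The inner product operators \(A=(A_{j,k})_{j,k=1,\ldots ,J}\), \(B=(B_{j,k})_{j,k=1,\ldots ,J}\) and \(C=(C_{j,k})_{j,k=1,\ldots ,J}\) are \(J\times J\) dimensional matrices constructed from the autocorrelation wavelets as follows

$$\begin{aligned} A_{j,k} = \sum _{\tau \in {\mathbb {Z}}} \Psi _j(\tau )\Psi _k(\tau ),\qquad B_{j,k} = \sum _{\tau \in {\mathbb {Z}}} \Phi _j(\tau )\Phi _k(\tau ),\qquad C_{j,k} = \sum _{\tau \in {\mathbb {Z}}} \Psi _j(\tau )\Phi _k(\tau ). \end{aligned}$$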

Although not strictly necessary for defining two-dimensional locally stationary wavelet processes, these inner product operators will be useful in Sect. 3 when studying the effects of dyadically sub-sampling such a process.

Remark 2

We note that, for real valued discrete wavelets, although \(A=A^T\) and \(B = B^T\), it is not generally true that \(C=C^T\).
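Remark 2 can be verified numerically. Assuming the inner product operators take the form \(A_{j,k} = \sum _\tau \Psi _j(\tau )\Psi _k(\tau )\), \(B_{j,k} = \sum _\tau \Phi _j(\tau )\Phi _k(\tau )\) and \(C_{j,k} = \sum _\tau \Psi _j(\tau )\Phi _k(\tau )\), the sketch below (our own Python illustration with Haar wavelets; all function names are hypothetical) builds the three matrices by aligning each autocorrelation at lag zero and taking Gram-type products. A and B come out symmetric, whereas C does not: for Haar, \(C_{1,2} = 1/4\) but \(C_{2,1} = 5/4\).

```python
import numpy as np

def discrete_wavelets(h, g, J):
    """Discrete wavelets psi_j, phi_j (j = 1..J) via psi_{j+1,n} = sum_k h_{n-2k} psi_{j,k}."""
    h = np.asarray(h, dtype=float)
    psi, phi = [np.asarray(g, dtype=float)], [h.copy()]
    for _ in range(J - 1):
        for seq in (psi, phi):
            up = np.zeros(2 * seq[-1].size - 1)
            up[::2] = seq[-1]
            seq.append(np.convolve(h, up))
    return psi, phi

def centred(x, length):
    """Zero-pad an odd-length autocorrelation so that lag 0 sits in the middle of `length` entries."""
    pad = (length - x.size) // 2
    return np.pad(x, (pad, pad))

def inner_product_matrices(h, g, J):
    """A_jk = sum Psi_j Psi_k, B_jk = sum Phi_j Phi_k, C_jk = sum Psi_j Phi_k (sums over lags)."""
    psi, phi = discrete_wavelets(h, g, J)
    Psi = [np.correlate(p, p, mode="full") for p in psi]
    Phi = [np.correlate(p, p, mode="full") for p in phi]
    L = Psi[-1].size                      # the coarsest scale has the longest support
    Psi = np.array([centred(x, L) for x in Psi])
    Phi = np.array([centred(x, L) for x in Phi])
    return Psi @ Psi.T, Phi @ Phi.T, Psi @ Phi.T

s = 1 / np.sqrt(2)
A, B, C = inner_product_matrices([s, s], [s, -s], J=4)
print(np.allclose(A, A.T), np.allclose(B, B.T), np.allclose(C, C.T))   # True True False
print(A[0, 0], C[0, 1], C[1, 0])                                        # approx 1.5 0.25 1.25
```

Because A is the Gram matrix of the linearly independent vectors \(\Psi _1,\ldots ,\Psi _J\), it is positive definite and hence invertible.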

Definition 7

(Two-dimensional raw wavelet periodogram). The raw wavelet periodogram of \(Y_{r,s}\) is defined as \(I^\ell _{j,u,v} = (d^\ell _{j,u,v})^2\), where \(d^\ell _{j,u,v}\) are the non-decimated wavelet coefficients of \(Y_{r,s}\) given by

$$\begin{aligned} d^\ell _{j,u,v} = \sum _{r,s} Y_{r,s}\psi ^\ell _{j,u-r,v-s}, \end{aligned}$$

for \(j\in {\mathbb {N}}\), \(u,v\in {\mathbb {Z}}\) and \(\ell =h,v,d\).
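The non-decimated coefficients are a correlation of the image with a two-dimensional wavelet, evaluated at every shift. The sketch below assumes the index convention \(d_{u,v} = \sum _{r,s} Y_{r,s}\psi _{u-r,v-s}\) and periodic (wrap-around) boundaries; both are our choices for illustration, and the function names are hypothetical. It accumulates one shifted copy of the image per wavelet coefficient, which is adequate for the short filters used here.

```python
import numpy as np

def ndwt_coefficients(Y, psi2d):
    """d_{u,v} = sum_{r,s} Y_{r,s} psi_{u-r,v-s}, computed with periodic (wrap-around) boundaries.

    Both the index convention and the periodic boundary are assumptions of this sketch.
    """
    d = np.zeros(Y.shape, dtype=float)
    for a in range(psi2d.shape[0]):
        for b in range(psi2d.shape[1]):
            # np.roll(Y, (a, b))[u, v] == Y[(u - a) % R, (v - b) % S]
            d += psi2d[a, b] * np.roll(Y, shift=(a, b), axis=(0, 1))
    return d

def raw_periodogram(Y, psi2d):
    """Raw wavelet periodogram I_{u,v} = d_{u,v}^2 at one scale and direction."""
    return ndwt_coefficients(Y, psi2d) ** 2

s = 1 / np.sqrt(2)
psi_d1 = np.outer([s, -s], [s, -s])   # Haar diagonal wavelet at scale 1
rng = np.random.default_rng(0)
Y = rng.standard_normal((64, 64))
I = raw_periodogram(Y, psi_d1)
print(I.shape, bool(I.min() >= 0))   # (64, 64) True
```

A constant image gives identically zero coefficients, since each two-dimensional wavelet sums to zero.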

Definition 8

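(Two-dimensional discrete autocorrelation wavelets). The two-dimensional autocorrelation wavelets are given by

$$\begin{aligned} \Psi ^\ell _j(\tau _1,\tau _2) = \sum _{u,v\in {\mathbb {Z}}}\psi ^\ell _{j,u,v}\psi ^\ell _{j,u+\tau _1,v+\tau _2}, \qquad \tau _1,\tau _2\in {\mathbb {Z}}, \end{aligned}$$

where \(j\in {\mathbb {N}}\) and \(\ell =h,v,d.\)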

Definition 9

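(Two-dimensional inner product operator). The two-dimensional inner product operator \(A^{(2)}\) is a \(3J\times 3J\) dimensional matrix constructed from the autocorrelation wavelets as follows

$$\begin{aligned} A^{(2)} = \begin{pmatrix} A^{hh} & A^{hv} & A^{hd}\\ A^{vh} & A^{vv} & A^{vd}\\ A^{dh} & A^{dv} & A^{dd} \end{pmatrix}, \end{aligned}$$

where

$$\begin{aligned} A^{\ell m}_{j,k} = \sum _{\tau _1,\tau _2}\Psi ^\ell _j(\tau _1,\tau _2)\Psi ^m_k(\tau _1,\tau _2), \qquad \ell ,m\in \{h,v,d\}, \end{aligned}$$

and \(1\le j,k\le J.\)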
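The two-dimensional autocorrelation wavelets and \(A^{(2)}\) can be computed along the same lines as in one dimension. The sketch below (our own, with hypothetical names) obtains each \(\Psi ^\ell _j\) as the inverse FFT of the squared modulus of its zero-padded FFT, with enough padding that the circular autocorrelation equals the linear one, centres all of them at lag (0, 0), and forms \(A^{(2)}\) as a Gram matrix. It assumes the tensor-product labelling \(h = \phi \psi ^T\), \(v = \psi \phi ^T\), \(d = \psi \psi ^T\) and orders rows and columns as h, v, d, each by scale. Because \(\psi ^d_j = \psi _j\psi _j^T\), the diagonal-diagonal block must equal the elementwise square of the one-dimensional A, which the final line checks.

```python
import numpy as np

def discrete_wavelets(h, g, J):
    """Discrete wavelets psi_j, phi_j (j = 1..J) via psi_{j+1,n} = sum_k h_{n-2k} psi_{j,k}."""
    h = np.asarray(h, dtype=float)
    psi, phi = [np.asarray(g, dtype=float)], [h.copy()]
    for _ in range(J - 1):
        for seq in (psi, phi):
            up = np.zeros(2 * seq[-1].size - 1)
            up[::2] = seq[-1]
            seq.append(np.convolve(h, up))
    return psi, phi

def autocorr2d(w):
    """Psi(tau1, tau2) = sum_{u,v} w_{u,v} w_{u+tau1, v+tau2}, with lag (0, 0) at the centre.

    Padding to (2*n1 - 1, 2*n2 - 1) makes the circular autocorrelation equal the linear one.
    """
    n1, n2 = w.shape
    F = np.fft.fft2(w, s=(2 * n1 - 1, 2 * n2 - 1))
    return np.fft.fftshift(np.fft.ifft2(np.abs(F) ** 2).real)

def inner_product_2d(h, g, J):
    """3J x 3J operator with entries sum_tau Psi^l_j(tau) Psi^m_k(tau), ordered (h, v, d) by scale."""
    psi, phi = discrete_wavelets(h, g, J)
    acs = []
    for direction in ("h", "v", "d"):   # ASSUMED labelling of the tensor products
        for p, f in zip(psi, phi):
            w = {"h": np.outer(f, p), "v": np.outer(p, f), "d": np.outer(p, p)}[direction]
            acs.append(autocorr2d(w))
    size = acs[J - 1].shape[0]          # scale J has the largest support
    M = np.array([np.pad(a, (size - a.shape[0]) // 2).ravel() for a in acs])
    return M @ M.T

s = 1 / np.sqrt(2)
J = 3
A2 = inner_product_2d([s, s], [s, -s], J)

# One-dimensional A for comparison: the d-d block should equal A squared elementwise.
psi, _ = discrete_wavelets([s, s], [s, -s], J)
Psi = [np.correlate(p, p, mode="full") for p in psi]
P = np.array([np.pad(x, (Psi[-1].size - x.size) // 2) for x in Psi])
A = P @ P.T
print(A2.shape, np.allclose(A2[2 * J:, 2 * J:], A ** 2))   # (9, 9) True
```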

Remark 3

Note that the one-dimensional inner product matrices A, B and C are related to the two-dimensional inner product matrix \(A^{(2)}\) as follows

where \(1\le j,k\le J\).

3 Theoretical consequences of dyadic sub-sampling

Following the work in Eckley and Nason (2018), we now examine the effects of dyadic sub-sampling on a two-dimensional locally stationary wavelet process. Some work on the two-dimensional case is presented in Gott (2012); however, it considers only processes represented using Shannon wavelets and dyadic sub-sampling that is the same in both directions. Hence, in this work we generalise these concepts by deriving results that are applicable to any choice of wavelets and to dyadic sub-sampling that is not necessarily the same in both dimensions. Analogously to the one-dimensional case (Eckley and Nason 2018), dyadic sub-sampling of a two-dimensional locally stationary wavelet process results in a new process, defined on a smaller grid, which is the sum of two separate components: a locally stationary wavelet process and a process which is asymptotically white noise. The variance of the latter depends on the finest-scale spectra \(S^\ell _1({\varvec{z}})\), which need not be constant, evaluated at the points that remain after sub-sampling; in essence, it captures the information at the finest scale that was lost. This is formalised in the following theorem:

Theorem 1

Let \(X_{r,s}\) be a two-dimensional locally stationary wavelet process with evolutionary wavelet spectrum given by \(\{S_j^\ell ({\varvec{z}})\}_{j=1}^\infty \), \(\ell =h,v,d\). If (a) \(Y_{r,s} = X_{r,2s}\), (b) \(Y_{r,s} = X_{2r,s}\) or (c) \(Y_{r,s} =X_{2r,2s}\), then \(\{Y_{r,s}\}_{r,s\in {\mathbb {Z}}}\) can be decomposed as \(Y_{r,s}=L_{r,s}+F_{r,s}\), where \(L_{r,s}\) is a two-dimensional locally stationary wavelet process with the same underlying wavelets as \(X_{r,s}\), with raw wavelet periodogram expectation given by (24), (25) and (26) for directions \(\ell =h\), \(\ell =v\) and \(\ell =d\) respectively, and \(F_{r,s}\) is a zero-mean process with autocovariance

(a) \(\text {cov}(F_{r,s}, F_{r+\tau _1, s+\tau _2}) = \Big \{\sum _\ell S^\ell _1\left( \frac{r}{R},\frac{2s}{S}\right) + O(\min (R,S)^{-1})\Big \}\delta _{0,\tau _1}\delta _{0,\tau _2}\),

(b) \(\text {cov}(F_{r,s}, F_{r+\tau _1, s+\tau _2}) = \Big \{\sum _\ell S^\ell _1\left( \frac{2r}{R},\frac{s}{S}\right) + O(\min (R,S)^{-1})\Big \}\delta _{0,\tau _1}\delta _{0,\tau _2}\),

(c) \(\text {cov}(F_{r,s}, F_{r+\tau _1, s+\tau _2}) = \Big \{\sum _\ell S^\ell _1\left( \frac{2r}{R},\frac{2s}{S}\right) + O(\min (R,S)^{-1})\Big \}\delta _{0,\tau _1}\delta _{0,\tau _2}\).

If \(S_1^\ell ({\varvec{z}})\), \(\ell =h,v,d\), is constant for \({\varvec{z}}\in (0,1)^2\), then \(F_{r,s}\) is stationary white noise with variance \(\sum _\ell S_1^\ell \).

By repeatedly applying the above theorem to a process, we obtain:

Corollary 1

Let \(X_{r,s}\) be a two-dimensional locally stationary wavelet process with evolutionary wavelet spectrum given by \(\{S_j^\ell ({\varvec{z}})\}_{j=1}^\infty \), \(\ell =h,v,d\). If \(Y_{r,s} = X_{2^pr,2^qs}\), then it can be decomposed as \(Y_{r,s} = L_{r,s}+F_{r,s}\), where \(L_{r,s}\) is a two-dimensional locally stationary wavelet process with the same underlying wavelets as \(X_{r,s}\), with raw wavelet periodogram expectation given by (24), (25) and (26) for directions \(\ell =h\), \(\ell =v\) and \(\ell =d\) respectively, and \(F_{r,s}\) is a zero-mean process with autocovariance

$$\begin{aligned} \text {cov}(F_{r,s}, F_{r+\tau _1, s+\tau _2}) = \Big \{\sum _\ell \sum _{j=1}^{p\vee q} S^\ell _j\left( \frac{2^pr}{R},\frac{2^qs}{S}\right) + O(\min (R,S)^{-1})\Big \}\delta _{0,\tau _1}\delta _{0,\tau _2}, \end{aligned}$$

where \(p\vee q = \max (p,q)\). If \(S_j^\ell ({\varvec{z}})\), \(\ell =h,v,d\), \(j=1,\ldots ,p\vee q\), is constant for \({\varvec{z}}\in (0,1)\times (0,1)\), then \(F_{r,s}\) is stationary white noise with variance \(\sum _\ell \sum _{j=1}^{p\vee q} S^\ell _j\).
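Theorem 1(c) can be illustrated with a deliberately simple toy field of our own devising (not one of the paper's simulations): a Haar-based process whose only non-zero spectrum is the finest-scale diagonal one, with constant amplitude w, so that \(S^d_1 = w^2\). Every coarser-scale spectrum vanishes, so after sub-sampling, \(Y_{r,s} = X_{2r,2s}\) should behave like white noise with variance \(S^d_1 = w^2\): the retained points draw on disjoint \(2\times 2\) blocks of the innovations \(\xi \). The Python sketch below checks this. X has a lag-one correlation of about \(-0.5\) along rows, whereas the sub-sampled field is essentially uncorrelated with variance close to \(w^2\). The function names are hypothetical.

```python
import numpy as np

def simulate_toy_process(n, w, rng):
    """Hypothetical toy field X_{r,s} = w * sum_{a,b} psi^d_{1,a,b} xi_{r+a,s+b}.

    Only the finest-scale diagonal Haar wavelet is present, with constant amplitude w,
    so S_1^d = w^2 and every other spectrum is zero.
    """
    xi = rng.standard_normal((n + 1, n + 1))
    psi_d = np.outer([1.0, -1.0], [1.0, -1.0]) / 2.0   # Haar psi^d_1 = g g^T
    x = np.zeros((n, n))
    for a in range(2):
        for b in range(2):
            x += w * psi_d[a, b] * xi[a:a + n, b:b + n]
    return x

def lag1_corr_rows(z):
    """Sample correlation between z_{r,s} and z_{r+1,s}."""
    z = z - z.mean()
    return np.sum(z[1:, :] * z[:-1, :]) / np.sum(z * z)

rng = np.random.default_rng(1)
w = 2.0
X = simulate_toy_process(512, w, rng)
Y = X[::2, ::2]                      # case (c): Y_{r,s} = X_{2r,2s}
print(lag1_corr_rows(X))             # close to -0.5
print(lag1_corr_rows(Y), Y.var())    # close to 0 and to w**2 = 4
```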

Our next result shows what happens to the expectation of the raw wavelet periodograms for the vertical, horizontal and diagonal wavelet directions.

Theorem 2

Let \(X_{r,s}\) be a two-dimensional locally stationary wavelet process with evolutionary wavelet spectrum given by \(\{S_j^\ell ({\varvec{z}})\}_{j=1}^\infty \), \(\ell =h,v,d\), with Daubechies compactly supported wavelets. Without loss of generality, let \(p \le q\) (the case \(p \ge q\) is the same but with the expectations of the horizontal and vertical raw wavelet periodograms switched). Then the expectations of the raw wavelet periodograms of \(Y_{r,s} = X_{2^pr, 2^qs}\) are given by

for \(\ell =h,v,d\), where \(j\in {\mathbb {N}}\), \(u,v\in {\mathbb {Z}}\), A, B, C are the inner product matrices from Definition 6, \(p\wedge q = \min (p,q)\) and \(p\vee q = \max (p,q)\).

Note that the first terms in the above expressions are equivalent to the folded spectrum for stationary processes as stated in equation (1). Due to the non-orthogonality of the autocorrelation wavelets, we obtain additional terms consisting of information at finer scales that has been lost due to sub-sampling and which reappears as confounded information at coarser scales.

Remark 4

Note that if \(p=q\) then the expectation of the raw wavelet periodogram is given by

for \(\ell =h,v,d\), which is analogous to the result for one-dimensional locally stationary wavelet processes derived in Eckley and Nason (2018).

To aid our comparison, we write the expectation of the raw wavelet periodogram for the original process using the inner product operators given in Definition 6:

where

Comparing these to the expectations of the raw wavelet periodograms for processes that have undergone dyadic sub-sampling, we note a few key differences. The first term on the right-hand side of (24), (25) and (26) appears at all scales and locations and is the same for all directions. This corresponds to power at finer scales from all wavelet directions reappearing at coarser scales due to dyadic sub-sampling in both dimensions at the same rate. The second term in each of (24), (25) and (26) is also surplus; however, in this case it differs between directions, as the terms in the sum are weighted by the one-dimensional inner product operators from Definition 6. Note also that these are shifted by p. This corresponds to power at finer scales being redistributed to coarser scales due to additional dyadic sub-sampling in only one dimension (in this case the second dimension, since \(p \le q\)). Finally, similarly to the one-dimensional case (Eckley and Nason 2018), the remaining terms on the right-hand sides of (24), (25) and (26) have their inner product matrices shifted by p in one dimension and q in the other, and now lie on the grid defined by \((2^pu/R,2^qv/S)\).

As described in Eckley and Nason (2018), a locally stationary wavelet process that is white noise with variance \(\sigma ^2\) has a raw wavelet periodogram expectation of \(\sigma ^2\) at all scales and locations, which means that we cannot distinguish between white noise and aliasing. In essence, if spectral power appears at all scales at a given location, then white noise confounding or aliasing may have occurred, although we cannot tell them apart. We formalise this in the next section.
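The flat periodogram of white noise is easy to check by simulation, because \(\text {Var}(d^\ell _{j,u,v}) = \sigma ^2\sum _{u,v}(\psi ^\ell _{j,u,v})^2 = \sigma ^2\) for unit-norm wavelets. The Python sketch below is our own; Haar wavelets, periodic boundaries, the tensor-product labelling \(h = \phi \psi ^T\), \(v = \psi \phi ^T\), \(d = \psi \psi ^T\) and the function names are all assumptions. It averages the raw wavelet periodogram of a white-noise image over all locations, for three scales and three directions, and every average lies close to \(\sigma ^2\).

```python
import numpy as np

def discrete_wavelets(h, g, J):
    """Discrete wavelets psi_j, phi_j (j = 1..J) via psi_{j+1,n} = sum_k h_{n-2k} psi_{j,k}."""
    h = np.asarray(h, dtype=float)
    psi, phi = [np.asarray(g, dtype=float)], [h.copy()]
    for _ in range(J - 1):
        for seq in (psi, phi):
            up = np.zeros(2 * seq[-1].size - 1)
            up[::2] = seq[-1]
            seq.append(np.convolve(h, up))
    return psi, phi

def raw_periodogram(Y, w):
    """d_{u,v}^2 with d_{u,v} = sum_{a,b} w_{a,b} Y_{u-a,v-b}, periodic boundaries (assumed)."""
    d = np.zeros(Y.shape)
    for a in range(w.shape[0]):
        for b in range(w.shape[1]):
            d += w[a, b] * np.roll(Y, shift=(a, b), axis=(0, 1))
    return d ** 2

s = 1 / np.sqrt(2)
J = 3
psi, phi = discrete_wavelets([s, s], [s, -s], J)
rng = np.random.default_rng(2)
sigma2 = 2.25
Y = np.sqrt(sigma2) * rng.standard_normal((256, 256))   # white noise with variance sigma2

means = {}
for j in range(J):
    directions = {"h": np.outer(phi[j], psi[j]),          # ASSUMED labelling
                  "v": np.outer(psi[j], phi[j]),
                  "d": np.outer(psi[j], psi[j])}
    for name, w in directions.items():
        means[(name, j + 1)] = raw_periodogram(Y, w).mean()

for key, value in means.items():
    print(key, round(float(value), 3))   # every average should be close to sigma2 = 2.25
```

The same flat profile across scales is what sub-sampling-induced aliasing produces, which is why the two cannot be separated by the periodogram alone.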

4 Test procedure

4.1 Testing for the absence of aliasing or white noise confounding

Suppose that we would like to test whether there is evidence of aliasing or white noise confounding at a point \({\varvec{z}}_0 = (r_0,s_0)\in [0,R]\times [0,S]\). We test whether aliasing occurred in each wavelet direction \(\ell = h,v,d\) independently. In an ideal situation, we would have access to the true underlying evolutionary wavelet spectrum \(S_j^\ell \) at all scales and so we would test the null hypothesis

$$\begin{aligned} H_0: \exists \, j\in {\mathbb {N}} \text { such that } S_j^\ell ({\varvec{z}}_0) = 0 \end{aligned}$$

against the alternative hypothesis

$$\begin{aligned} H_A: S_j^\ell ({\varvec{z}}_0) > 0 \text { for all } j\in {\mathbb {N}}, \end{aligned}$$

for \(\ell \in \{h,v,d\}\). However, as described by Eckley and Nason (2018), we have to estimate \(S_j^\ell \) from the realisation of the process and, due to boundary effects, we only obtain reliable estimates for a finite set of scales \(j\le J^\dagger < J\). Therefore, we have to test the null hypothesis

$$\begin{aligned} H_0^{(I)}: \exists \, j\in \{1,\ldots ,J^\dagger \} \text { such that } S_j^\ell ({\varvec{z}}_0) = 0 \end{aligned}$$

against the alternative hypothesis

$$\begin{aligned} H_A^{(I)}: S_j^\ell ({\varvec{z}}_0) > 0 \text { for } j=1,\ldots ,J^\dagger , \end{aligned}$$

where, in practice, \(S^\ell _j\) is replaced by the estimated wavelet spectrum of the process. As noted in Eckley and Nason (2018), if \(H_0^{(I)}\) is true then \(H_0\) is automatically true as well. The same cannot be said for the alternative hypothesis: \(H_A^{(I)}\) does not imply \(H_A\), since there could exist a scale \(j>J^\dagger \) such that \(S_j^\ell (\varvec{z_0}) = 0\). For this reason, the test becomes a test for the absence of aliasing or white noise confounding in a locally stationary wavelet process.

Following Eckley and Nason (2018), we now describe how the test can be carried out in practice. Let \(b\in {\mathbb {N}}_{>0}\) and define the set

and collect the sample \(\{{\hat{S}}_j^\ell ({\varvec{z}})\}_{{\varvec{z}}\in \Lambda }\), where \({\hat{S}}_j^\ell ({\varvec{z}})\) denotes the estimate of the evolutionary wavelet spectrum at the point \({\varvec{z}}\), computed as described in Eckley (2001). To obtain it, we first compute the raw wavelet periodogram \(\hat{I}_j^\ell (\varvec{z})\), which is a biased estimator of the evolutionary wavelet spectrum. We then use the inner product operator \(A^{(2)}\) from Definition 9 to correct for this bias, obtaining the unbiased estimator \({\hat{S}}_j^\ell ({\varvec{z}})\). Note that the above set corresponds to the set of spectral estimates lying on a \((2b+1)\times (2b+1)\) square grid centred at \(\varvec{z_0}\). From the Lipschitz continuity assumption on \(S_j^\ell ({\varvec{z}})\), we have that

and so under \(H_0\) we have that \(\exists \, j\in \{1,\ldots ,J^\dagger \}\) such that

which is approximately zero for small u and v and large R and S. Therefore, we would like to test whether the mean of the sample \(\{{\hat{S}}_j^\ell ({\varvec{z}})\}_{{\varvec{z}}\in \Lambda }\) is equal to zero for each scale \(j\in \{1,\ldots ,J^\dagger \}\). To do this, we can use a Student’s t-test on the sample \(\{{\hat{S}}_j^\ell ({\varvec{z}})\}_{{\varvec{z}}\in \Lambda }\) with test statistic

$$\begin{aligned} T_{j,\varvec{z_0}}^\ell = \frac{(2b+1)\,{\overline{S}}_{j,\varvec{z_0}}^\ell }{{\hat{\sigma }}_{j,\varvec{z_0}}^\ell }, \end{aligned}$$

where \({\overline{S}}_{j,\varvec{z_0}}^\ell \) and \({\hat{\sigma }}_{j,\varvec{z_0}}^\ell \) are the sample mean and standard deviation respectively, and \((2b+1)^2\) is the number of observations in the sample. Since we are testing multiple hypotheses simultaneously, we use Holm’s method (Holm 1979) to control the family-wise error rate.
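A minimal sketch of the per-scale test and of Holm's step-down adjustment follows. It assumes a one-sided alternative (mean greater than zero), since spectra are non-negative, uses the plain sample size \((2b+1)^2\) rather than an equivalent sample size, and relies on SciPy's `scipy.stats.t.sf` for the p-value. The spectral estimates are simulated stand-ins and the function names are hypothetical.

```python
import numpy as np
from scipy import stats

def window_t_test(S_hat_j, r0, s0, b):
    """One-sided t-test of mean = 0 against mean > 0 on the (2b+1) x (2b+1) window at (r0, s0).

    S_hat_j holds the spectral estimates at one scale and direction; (r0, s0) must lie at
    least b pixels from every edge.
    """
    window = S_hat_j[r0 - b:r0 + b + 1, s0 - b:s0 + b + 1].ravel()
    n = window.size
    t_stat = window.mean() / (window.std(ddof=1) / np.sqrt(n))
    return t_stat, stats.t.sf(t_stat, df=n - 1)

def holm_reject(pvals, alpha=0.05):
    """Holm's step-down procedure: boolean rejection decisions in the original order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    reject = np.zeros(m, dtype=bool)
    for i, idx in enumerate(np.argsort(p)):
        if p[idx] > alpha / (m - i):
            break                      # stop at the first p-value that fails its threshold
        reject[idx] = True
    return reject

# Hypothetical spectral estimates at J_dagger = 3 scales on a 64 x 64 grid:
# scales 1 and 2 have positive mean, scale 3 fluctuates around zero.
rng = np.random.default_rng(3)
S_hat = rng.normal(0.0, 0.1, size=(3, 64, 64))
S_hat[:2] += 1.0
pvals = [window_t_test(S_hat[j], 32, 32, b=4)[1] for j in range(3)]
print(holm_reject(pvals))   # scales 1 and 2 are rejected; scale 3 typically is not
```

Under our reading of the hypotheses, evidence against \(H_0^{(I)}\) at \(\varvec{z_0}\) in direction \(\ell \) requires every scale \(j = 1,\ldots ,J^\dagger \) to be rejected after the Holm adjustment; this decision rule is our interpretation rather than a prescription from the text.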

As the size of the image increases, we can estimate the evolutionary wavelet spectrum reliably for a larger number of scales, and so it is natural to increase the number of scales \(J^\dag \) considered in the test. The choice of b also varies with the size of the image. As the latter increases, a constant value of b means that the sample becomes increasingly localised around the point \(\varvec{z_0}\). In essence, there is a trade-off between the size and the localisation of the sample: a larger sample (and b) loses local information. Hence, with larger images one can choose a larger value of b if desired.

As previously noted, rejecting the null hypothesis does not guarantee that aliasing or white noise confounding definitely occurred, but rather suggests that this may have happened. Furthermore, the test does not distinguish between the two cases. Hence, further investigation would need to be carried out. For example, one could sample the process at a higher rate and check the finer scales of the corresponding wavelet spectrum, however, it is not always possible to carry this out in practice.

4.2 Dealing with correlation in the samples

As noted in Eckley and Nason (2018), due to the Lipschitz continuity assumption, the samples at each individual scale are likely to exhibit high autocorrelation between observations in close proximity to one another. In this case, the Student’s t-test will perform poorly, as its independence assumption is violated. Some possible solutions to this problem are discussed in Zwiers and Storch (1995) and Thiebaux and Zwiers (1984). A very simple solution is to sub-sample the data; Zwiers and Storch (1995) argue that, in the case of temperature data, observations separated by 5 days could be assumed to be independent. The Student’s t-test would then be carried out on this smaller sample. Since we are testing data that lie on a regularly spaced square grid, this method can easily be adapted by choosing observations that lie a certain distance from one another on this grid. However, this requires that a significant proportion of the data be discarded, resulting in a loss of information, a concern that was also raised in Zwiers and Storch (1995). Furthermore, one would have to determine an appropriate distance between the observations that are kept for the test.

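Another approach is to compute the equivalent sample size (ESS) (Zwiers and Storch 1995; Thiebaux and Zwiers 1984). This can be interpreted as the number of effectively independent observations in the sample. For spatial data lying on an \(n\times n\) grid, the equivalent sample size is defined as

$$\begin{aligned} n_e = \frac{n^2}{\sum _{\tau _1=-(n-1)}^{n-1}\sum _{\tau _2=-(n-1)}^{n-1}\left( 1-\frac{|\tau _1|}{n}\right) \left( 1-\frac{|\tau _2|}{n}\right) \rho (\tau _1,\tau _2)}, \end{aligned}$$ (40)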

where \(\rho (\tau _1,\tau _2)\) is the spatial autocorrelation function with lags \(\tau _1\) and \(\tau _2\) (a full derivation of this result, following the one-dimensional one in Thiebaux and Zwiers (1984), is available in the appendix). However, in most cases, this cannot be reliably estimated from the data. Some improvements can be obtained by truncating the sum in (40) after a certain number of terms (Zwiers and Storch 1995; Thiebaux and Zwiers 1984). Further improvements to the estimate can also be obtained by constraining the equivalent sample size to values that are deemed to be realistic, as suggested by Zwiers and Storch (1995), and so for an \(n\times n\) grid,

Since we are testing a small region of the spectrum at each scale, there are several possible ways of estimating the ESS. The natural way of doing so is to estimate the ESS directly from the sample we are testing. Alternatively, we could estimate the ESS for the entire spectrum at a given scale and then re-scale this to the number of observations contained in our sample. Since we are then using a significantly larger number of observations, our estimate of the spatial autocorrelation function (and hence the ESS) will be more robust. The cost of doing so is that, regardless of the region we are testing, the ESS will be the same, and hence we may lose some local information.
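The ESS calculation can be sketched as follows. The code (our own, with hypothetical names) estimates \(\rho (\tau _1,\tau _2)\) with the usual mean-removed sample autocorrelation, truncates the double sum at \(|\tau _1|, |\tau _2| \le \) `max_lag`, and assumes the weighted form \(n_e = n_1n_2/\sum _{\tau _1,\tau _2}(1-|\tau _1|/n_1)(1-|\tau _2|/n_2)\rho (\tau _1,\tau _2)\), the two-dimensional analogue of the one-dimensional formula of Thiebaux and Zwiers (1984). The clipping range \([2, n_1n_2]\) stands in for the constraint to realistic values and is likewise an assumption.

```python
import numpy as np

def sample_acf2d(z, t1, t2):
    """Sample autocorrelation rho(t1, t2) of a 2-D field (mean removed, biased normalisation)."""
    z = z - z.mean()
    n1, n2 = z.shape
    i0, i1 = max(0, -t1), n1 - max(0, t1)
    j0, j1 = max(0, -t2), n2 - max(0, t2)
    a = z[i0:i1, j0:j1]
    b = z[i0 + t1:i1 + t1, j0 + t2:j1 + t2]   # partner z_{r+t1, s+t2} of each entry of a
    return np.sum(a * b) / np.sum(z * z)

def equivalent_sample_size(z, max_lag):
    """ESS of an n1 x n2 field, truncating the double sum at |t1|, |t2| <= max_lag.

    The clipping to [2, n1 * n2] is an ASSUMED version of the 'realistic values' constraint.
    """
    n1, n2 = z.shape
    denom = 0.0
    for t1 in range(-max_lag, max_lag + 1):
        for t2 in range(-max_lag, max_lag + 1):
            weight = (1 - abs(t1) / n1) * (1 - abs(t2) / n2)
            denom += weight * sample_acf2d(z, t1, t2)
    ess = n1 * n2 / denom if denom > 0 else n1 * n2   # guard against a non-positive estimate
    return float(np.clip(ess, 2, n1 * n2))

rng = np.random.default_rng(4)
n = 64
iid = rng.standard_normal((n, n))
xi = rng.standard_normal((n + 3, n + 3))
smooth = sum(xi[a:a + n, b:b + n] for a in range(4) for b in range(4))   # 4 x 4 moving sum
ess_iid = equivalent_sample_size(iid, max_lag=5)
ess_smooth = equivalent_sample_size(smooth, max_lag=5)
print(ess_iid, ess_smooth)
```

For independent noise the estimate is close to the full sample size of 4096, whereas the \(4\times 4\) moving sum, whose autocorrelations sum to roughly 16, gives an ESS of roughly one sixteenth of it. Either the window \(\Lambda \) itself or the whole scale can be passed as `z`, corresponding to the two options discussed above.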

4.3 Computational feasibility, parallelisation and speed up

In this subsection, we first address the estimation of the evolutionary wavelet spectrum, focusing on the number of computations required to perform this operation and ways in which this can be parallelised. Consider the two-dimensional process \(\{X_{m,n}\}\), defined on the square grid \(m,n = 0,\ldots , N\). To compute an estimate of the evolutionary wavelet spectrum, several steps are involved. Firstly, the non-decimated wavelet transform of the data \(\{X_{m,n}\}\) needs to be computed, as explained in greater detail in Nason (2008) and Eckley (2001). Using the pyramid scheme due to Mallat (1989), this can be performed in \(O(N^3\log N)\) operations. The resulting transform is then squared to obtain the raw wavelet periodogram, requiring a further \(O(N^2\log N)\) operations. However, due to the inherent bias of the raw wavelet periodogram, it is necessary to correct it using the inner product operator from Definition 9. This correction yields an unbiased estimate of the evolutionary wavelet spectrum and has a computational complexity of \(O(N^3\log N)\), since we are performing a matrix multiplication at each scale. For further information, please refer to Nason (2000), Nason (2008) or Eckley (2001). Therefore, overall, the computational complexity of this procedure is \(O(N^3\log N)\).
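The correction step can be written as a single batched linear solve. Assuming the raw periodogram is stored as an array of shape (3J, R, S), with the (direction, scale) pairs ordered as in \(A^{(2)}\), the sketch below (our own, with a hypothetical function name) solves \(A^{(2)}{\hat{S}} = I\) at every location at once using `np.linalg.solve`. In this form the work consists of one factorisation of a \(3J\times 3J\) matrix followed by \(R\cdot S\) small solves, and blocks of columns could be distributed across processors. The matrix used in the check is a random symmetric positive definite stand-in, not the actual operator.

```python
import numpy as np

def correct_periodogram(I, A2):
    """Bias-correct a raw periodogram stack I of shape (3J, R, S).

    At every location (u, v) solve A2 @ s = I[:, u, v]; all R*S systems share the same
    matrix, so a single call with R*S right-hand sides is enough.
    """
    K, R, S = I.shape
    S_hat = np.linalg.solve(A2, I.reshape(K, R * S))
    return S_hat.reshape(K, R, S)

# Check on synthetic data: build I from a known spectrum and recover it.
rng = np.random.default_rng(5)
K = 9                                  # 3 directions x J = 3 scales
M = rng.standard_normal((K, K))
A2 = M @ M.T + K * np.eye(K)           # stand-in operator: symmetric and invertible
S_true = rng.uniform(0, 1, size=(K, 32, 32))
I = np.tensordot(A2, S_true, axes=1)   # I[:, u, v] = A2 @ S_true[:, u, v]
print(np.allclose(correct_periodogram(I, A2), S_true))   # True
```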

Now we turn to the possibilities for parallelisation. We can parallelise the non-decimated discrete wavelet transform. As the two-dimensional wavelet transform is composed of sequences of one-dimensional transforms performed on the rows and columns of the data, these sequences can be distributed across multiple processing units, allowing for simultaneous computation. A more comprehensive description of this approach can be found in Marino et al. (1999) and Chaver et al. (2002). Additionally, Nielsen (1998) provides insights into this approach and discusses methods for parallelising the one-dimensional wavelet transform as well.

Following this, parallelising the computation of the wavelet periodogram is quite simple. As it involves squaring the non-decimated wavelet coefficients, this operation can be easily divided among multiple processors.

We now turn our attention to the correction of the raw wavelet periodogram, which involves multiplying the raw wavelet periodogram by the inverse of the inner product operator. This is straightforward to parallelise, as matrix multiplications are simply sequences of inner products between the rows of the first matrix and the columns of the second, which can easily be distributed over multiple processors. Numerous existing procedures already address this step, and a detailed discussion is beyond the scope of this section; comprehensive coverage can be found in Schatz et al. (2016) and Gunnels et al. (1998), for example. By parallelising each step involved in the estimation of the evolutionary wavelet spectrum, substantial gains in computational speed can be achieved.

When testing for aliasing in a two-dimensional locally stationary wavelet process, the natural approach would be to apply the test at each point. However, in the case of images, conducting such tests on every individual pixel can be computationally infeasible, especially for large-scale data sets.

To mitigate this computational burden, an alternative approach is to test regions or specific pixels in the image instead of individually testing every pixel. This approach takes advantage of the fact that testing for the absence of aliasing and/or white noise confounding at a point necessitates analysing the entire region around it. By selecting representative regions or points, the computational workload can be significantly reduced while still providing reliable insights into the presence or absence of aliasing and/or white noise. This sampling strategy enables faster computation, making the test more feasible for practical applications involving large data sets. Additionally, parallelisation techniques can be applied to the testing process itself by distributing the test computations across multiple pixels at the same time.
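One way to realise this strategy is sketched below: a coarse grid of test points, each far enough from the boundary for its \((2b+1)\times (2b+1)\) window to fit, with the per-point statistics computed concurrently using Python's standard `concurrent.futures.ThreadPoolExecutor`. The grid spacing, the function names and the simulated spectral estimates are our assumptions, and only t-statistics are returned; p-values and the Holm adjustment would follow as in Sect. 4.1.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor

def coarse_grid(R, S, b, stride):
    """Hypothetical helper: test points `stride` pixels apart, at least b pixels from every edge."""
    return [(r, s) for r in range(b, R - b, stride) for s in range(b, S - b, stride)]

def window_t_stats(S_hat, point, b):
    """t-statistics (one per scale) for the (2b+1) x (2b+1) window around `point`."""
    r0, s0 = point
    w = S_hat[:, r0 - b:r0 + b + 1, s0 - b:s0 + b + 1].reshape(S_hat.shape[0], -1)
    n = w.shape[1]
    return w.mean(axis=1) / (w.std(axis=1, ddof=1) / np.sqrt(n))

def run_point_tests(S_hat, points, b, workers=4):
    """Distribute the per-point statistics over a thread pool; returns {point: t-statistics}."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda p: window_t_stats(S_hat, p, b), points)
        return dict(zip(points, results))

rng = np.random.default_rng(6)
S_hat = 1.0 + 0.1 * rng.standard_normal((3, 128, 128))   # hypothetical estimates, J_dagger = 3
points = coarse_grid(128, 128, b=8, stride=16)
tstats = run_point_tests(S_hat, points, b=8)
print(len(points), tstats[points[0]])
```

Threads suffice here because the heavy lifting happens inside NumPy; for larger workloads a process pool or a distributed scheduler would be the natural replacement.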

5 Simulation study

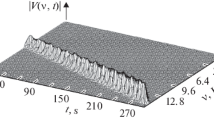

The test-bed spectrum used in our simulation study is defined in Eq. (42) and Fig. 1.

We begin by conducting the experiment on realisations without sub-sampling and test the points \({\varvec{z}}_0 = (1/2,1/2)\) and \({\varvec{z}}_0 = (1/4,1/4)\). For the true spectrum defined above, at these points we expect to accept \(H_0\) and to reject \(H_0\) in favour of \(H_A\), respectively, for all directions. We drew 1000 realisations of size \(1024\times 1024\) from the test-bed spectrum using Daubechies D5 wavelets and tested each wavelet direction \(\ell =h,v,d\) independently for scales \(j=1,\ldots ,4\) using the method described above and a nominal size of 5%. The results are displayed in Tables 1 and 2. As we can see, for appropriately chosen window widths b (\(b \ge 32\)), the tests accept and reject the null hypothesis as expected. For smaller values of b, the test is unreliable, as it never rejects the null hypothesis. We also note that in Table 2 our rejection rates for large window widths could be viewed as too high, and we attribute this to the difficulty of correcting for sample correlations when running the tests.

Our next experiment uses the same test-bed spectrum as before, except that the entire spectrum in the horizontal direction is set to zero, as is the spectrum at scale \(j=3\) in the diagonal direction. We again drew 1000 realisations of size \(1024 \times 1024\); however, in this case we sub-sampled in both dimensions, reducing these to processes of size \(512\times 512\). As before, we test the points \({\varvec{z}}_0 = (1/2,1/2)\) and \({\varvec{z}}_0 = (1/4,1/4)\) for each wavelet direction independently, the only difference being that we only test \(j=1,2,3\) (since a scale is lost due to sub-sampling). For the true underlying spectrum, we would reject \(H_0\) for \(\ell =v\) and accept \(H_0\) for \(\ell =h\) and d at \({\varvec{z}}_0 = (1/2,1/2)\), and reject \(H_0\) for all wavelet directions at \({\varvec{z}}_0 = (1/4,1/4)\). The results are summarised in Tables 3 and 4.

When testing the point \({\varvec{z}}_0 = (1/2,1/2)\), we see that in most cases our test does not reject the null hypothesis in the horizontal and diagonal directions. This is to be expected, as the true spectrum for \(\ell =h\) is set to zero for all scales j and for \(\ell =d\) it is set to zero for \(j=3\). Since there is no spectral power at \({\varvec{z}}_0 = (1/2,1/2)\) for \(j=1\) in any direction, we expect sub-sampling not to redistribute any power across scales there. On the other hand, since there is spectral power at \({\varvec{z}}_0 = (1/4,1/4)\) for \(\ell =v\) and d at the finest scale prior to sub-sampling, power is redistributed to all coarser scales, including all scales in the horizontal direction, which previously had no spectral power. Although for \(b=32\) and \(b=64\) we would reject \(H_0\), further investigation would be needed to conclude with certainty that aliasing occurred. Furthermore, our rejection rates for \(b=32\) in the horizontal and diagonal directions are significantly lower than in the vertical direction; we attribute this to power already being present in the vertical direction, as well as to difficulties in correcting the test for correlation in the samples.
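The redistribution of fine-scale power by sub-sampling has a simple Fourier-domain analogue, sketched below under our own toy assumptions (a single diagonal cosine wave, not a locally stationary wavelet process, and not the test itself). A wave at 0.375 cycles per pixel is correctly represented on the \(1024\times 1024\) grid, but after keeping every other row and column it becomes exactly indistinguishable from a wave at 0.25 cycles per pixel.

```python
import numpy as np

N = 1024
r, s = np.meshgrid(np.arange(N), np.arange(N), indexing="ij")
f0 = 0.375  # cycles per pixel along each axis: below the original Nyquist frequency 0.5
x = np.cos(2 * np.pi * f0 * (r + s))  # diagonal plane wave on the full 1024 x 1024 grid

# Keep every other row and column, as in the second experiment (512 x 512).
y = x[::2, ::2]

# On the coarse grid the wave completes 2 * f0 = 0.75 cycles per new pixel, which exceeds
# the new Nyquist frequency, so it coincides exactly with a wave of frequency 1 - 0.75 = 0.25.
m, n = np.meshgrid(np.arange(N // 2), np.arange(N // 2), indexing="ij")
assert np.allclose(y, np.cos(2 * np.pi * 0.25 * (m + n)))


def peak_frequency(img):
    """Dominant frequency (cycles/pixel) along axis 0, folded into [0, 0.5]."""
    spec = np.abs(np.fft.fft2(img))
    i, _ = np.unravel_index(np.argmax(spec), spec.shape)
    size = img.shape[0]
    return min(i, size - i) / size


print(peak_frequency(x), peak_frequency(y))  # 0.375 0.25
```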

6 A real-world example

In this section we demonstrate the use of the test on a real image of size \(2048\times 2048\). We tested for the absence of aliasing/white noise confounding at each pixel for \(J^\dag =5\) using Daubechies D6 wavelets. The overall computation was lengthy, taking approximately a week in total. However, a significant proportion of that time was spent computing the evolutionary wavelet spectrum (which we did only once), since the software we used was not parallelised. Efficient parallelisation of the software, for example following the suggestions outlined in Sect. 4.3, would therefore reduce computational times significantly.

The image and the results for the vertical, horizontal and diagonal directions, respectively, are displayed in the top row of Fig. 2. Pixels at which we would reject the null hypothesis \(H_0\) are displayed in gray. We note that \(H_0\) is rejected more frequently at pixels located in rougher regions of the image, for example the trees at the center, than in smoother regions such as parts of the sky and ground. If aliasing did occur, this is to be expected: rougher patches of the image contain more high-frequency information, making them susceptible to aliasing, whereas smooth regions are nearly constant in value and therefore lose little information when down-sampled.

As pointed out in previous sections, although we can conclude that aliasing/white noise confounding did not occur in the white regions of the image, we cannot conclude with certainty that it did occur in the gray regions. Further investigation would be required to reach such a conclusion and, if reached, to determine which of the two (aliasing or white noise confounding) occurred.

We now sub-sample the original image twice in both the horizontal and vertical dimensions, reducing it to an image of size \(512\times 512\). We carry out the test again using the same underlying wavelets and \(J^\dag =3\). Owing to the smaller size of the image, the computational time is significantly lower, only a few hours. The sub-sampled image and the corresponding results are displayed in the bottom row of Fig. 2, where again pixels for which the null hypothesis \(H_0\) was rejected are coloured in gray.

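The null hypothesis is rejected for a proportionally larger number of pixels, which again correspond to rougher patches containing more information. Since we have access to the original version of the image, in which the test found no evidence of aliasing or white noise confounding in the areas that were not coloured in gray, we can conclude that aliasing, introduced by our own sub-sampling, occurred wherever \(H_0\) is now rejected in those areas.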
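The comparison above can be made pixel by pixel. Below is a minimal sketch, assuming hypothetical boolean masks (True where \(H_0\) is rejected) for one wavelet direction at each resolution; the function and variable names are ours. Because the image was sub-sampled twice by a factor of 2, pixel \((m,n)\) of the \(512\times 512\) image corresponds to pixel \((4m,4n)\) of the original.

```python
import numpy as np


def newly_rejected(mask_original, mask_subsampled, factor=4):
    """Pixels where H0 is rejected after sub-sampling but not at the corresponding original pixel.

    Pixel (m, n) of an image sub-sampled by `factor` in each dimension corresponds to
    pixel (factor * m, factor * n) of the original; sub-sampling twice by 2 gives factor 4.
    """
    aligned = mask_original[::factor, ::factor]
    if aligned.shape != mask_subsampled.shape:
        raise ValueError("masks do not align for this sub-sampling factor")
    return mask_subsampled & ~aligned


# Toy example: an 8 x 8 "original" mask and its 2 x 2 sub-sampled counterpart.
orig = np.zeros((8, 8), dtype=bool)
orig[0, 0] = True
sub = np.array([[True, False], [False, True]])
print(newly_rejected(orig, sub))
```

The same function would be applied separately to the vertical, horizontal and diagonal masks.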

Fig. 2 Top left: original image. Bottom left: original image sub-sampled twice in both dimensions. For each pixel we test \(H_0\) against \(H_A\); pixels for which we would reject \(H_0\) are displayed in gray. Remaining columns, from left to right: results for the vertical, horizontal and diagonal wavelet directions. Top-left image borrowed from Koentjoro (2018).

7 Extension to videos

A natural extension of the work in this paper is to develop a similar procedure for testing for the absence of aliasing/white noise confounding in videos. We can distinguish between two scenarios: in the first, the camera and lighting are fixed; in the second, both may vary. In the former case, each pixel consistently represents the same object throughout the video, so no specific modifications are needed. In the latter case, each pixel may correspond to a different object in different frames, which makes determining the pixel-to-object correspondence more intricate, although adjustments may not be strictly necessary in all cases. One possible avenue is to explore methods that compensate for camera movements relative to the initial position and adapt to changes in lighting. However, such an approach would require precise information about camera movements and lighting conditions, which may not always be available, so alternative solutions will also need to be explored. Handling a moving camera with changing lighting therefore requires careful consideration.

We now briefly outline two possibilities for going from images to videos. The first is to treat videos as three-dimensional locally stationary wavelet processes. Testing for the absence of aliasing/white noise confounding would then require extending the methods outlined in Eckley and Nason (2018) and in this paper to three dimensions.

Unlike time series or images, the dimensions of a video are not all of the same kind: there is one temporal and two spatial dimensions. When dealing with spatio-temporal data, it is common practice to treat the spatial and temporal components as distinct, assuming that this separability is valid; see, for example, Cressie and Wikle (2011) for further insights into spatio-temporal statistics. This leads naturally to our second suggestion, which is to apply both the one-dimensional and the two-dimensional tests to a given pixel at a given time.

More formally, a point \(z_0\) in the video can be viewed both as a point in the time series of a pixel and as a pixel in the image corresponding to that frame. Hence, one could simultaneously apply the one-dimensional version of the test developed in Eckley and Nason (2018) to the point in the time series and the two-dimensional version of the test to the point in the image, obtaining results (i.e. whether to accept or reject the null hypothesis) in time and in the three wavelet directions. To determine whether there is evidence of aliasing or white noise confounding at that point, one could then apply a multiple hypothesis testing procedure. This and other possibilities are left for future work.
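As one concrete, purely illustrative choice, Holm's (1979) step-down procedure, which controls the family-wise error rate without assuming the tests are independent, could combine the four decisions at \(z_0\). The function below is our own sketch, and the p-values are made up for the example.

```python
def holm(p_values, alpha=0.05):
    """Holm (1979) step-down procedure; returns a list of booleans (True = reject)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda k: p_values[k])  # indices by increasing p-value
    reject = [False] * m
    for rank, k in enumerate(order):
        if p_values[k] <= alpha / (m - rank):
            reject[k] = True
        else:
            break  # once one hypothesis is retained, all larger p-values are retained too
    return reject


# Hypothetical p-values at one point z0: temporal test, then h, v, d directions.
p = {"time": 0.010, "h": 0.040, "v": 0.030, "d": 0.005}
decisions = dict(zip(p, holm(list(p.values()))))
print(decisions)  # {'time': True, 'h': False, 'v': False, 'd': True}
```

How the resulting decisions should be read as evidence of aliasing versus white noise confounding remains the open question noted in the text.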

8 Closing remarks

In this paper we have extended the test for the absence of aliasing or white noise confounding developed in Eckley and Nason (2018) to two-dimensional locally stationary wavelet processes and demonstrated its application to images. Additionally, we gave some suggestions for extending these methods further, in particular a procedure for applying the test to videos.

A clear avenue for future work would be to investigate methods for dealing with correlation in the samples in more detail. Although the methods outlined in this work are able to correct Student's t test for correlation, they are still unreliable at times. Further work could also address the choice of an appropriate window width in which to conduct the test. Finally, it would be interesting to see these methods applied to videos, as this is a natural extension of the work developed here.

References

Burger, W., Burge, M.: Principles of Digital Image Processing: Core Algorithms. Springer (2010)

Chaver, D., Prieto, M., Pinuel, L., Tirado, F.: Parallel wavelet transform for large scale image processing. In: Proceedings 16th International Parallel and Distributed Processing Symposium (2002)

Coulange, B., Moisan, L.: An aliasing detection algorithm based on suspicious colocalizations of Fourier coefficients. In: 2010 IEEE International Conference on Image Processing, pp. 2013–2016 (2010)

Cressie, N., Wikle, C.K.: Statistics for Spatio-Temporal Data. Wiley Series in Probability and Statistics, Wiley (2011)

Daubechies, I.: Ten Lectures on Wavelets. SIAM, Philadelphia (1992)

Douglas, S.C.: Detection of Aliasing in Image Sequences Using Nonlinear Factor Analysis. Springer (2012)

Eckley, I.A.: Wavelet methods for time series and spatial data. Ph.D. Thesis, University of Bristol (2001)

Eckley, I.A., Nason, G.P.: Efficient computation of the discrete autocorrelation wavelet inner product matrix. Stat. Comput. 15, 83–92 (2005)

Eckley, I.A., Nason, G.P.: A test for the absence of aliasing or local white noise in locally stationary wavelet time series. Biometrika 105(4), 833–848 (2018)

Gonzalez, R., Woods, R.: Digital Image Processing. Pearson (2018)

Gott, A.N.: Wavelet methods for locally stationary data. Ph.D. Thesis, Lancaster University (2012)

Gunnels, J., Lin, C., Morrow, G., van de Geijn, R.: A flexible class of parallel matrix multiplication algorithms. In: Proceedings of the First Merged International Parallel Processing Symposium and Symposium on Parallel and Distributed Processing, pp. 110–116 (1998)

Hinich, M.J., Messer, H.: On the principal domain of the discrete bispectrum of a stationary signal. IEEE Trans. Signal Process. 43(9), 2130–2134 (1995)

Hinich, M.J., Wolinsky, M.A.: A test for aliasing using bispectral analysis. J. Am. Stat. Assoc. 83(402), 499–502 (1988)

Holm, S.: A simple sequentially rejective multiple hypothesis test procedure. Scand. J. Stat. 6(2), 65–70 (1979)

Koentjoro, H.: Monochromatic Minimalism with the X1D. (2018)

Li, Q., Shen, L., Guo, S., Lai, Z.: WaveCNet: wavelet integrated CNNs to suppress aliasing effect for noise-robust image classification. IEEE Trans. Image Process. 30, 7074–7089 (2021)

Liu, S., Liu, L., Tang, J., Yu, B., Wang, Y., Shi, W.: Edge computing for autonomous driving: opportunities and challenges. Proc. IEEE 107(8), 1697–1716 (2019)

Liu, L., Lu, S., Zhong, R., Wu, B., Yao, Y., Zhang, Q., Shi, W.: Computing systems for autonomous driving: state of the art and challenges. IEEE Internet Things J. 8(8), 6469–6486 (2021)

Mallat, S.G.: A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 11(7), 674–693 (1989)

Marino, F., Piuri, V., Swartzlander, E.E.: A parallel implementation of the 2-d discrete wavelet transform without interprocessor communications. IEEE Trans. Signal Process. 47(11), 3179–3184 (1999)

Nason, G.P., et al.: Wavelet processes and adaptive estimation of the evolutionary wavelet spectrum. J. R. Stat. Soc. Ser. B 62(2), 271–292 (2000)

Nason, G.P.: Wavelet Methods in Statistics with R. Springer, New York (2008)

Nielsen, O.: Parallel wavelet transforms. pp. 385–389 (1998)

Patney, A., Lefohn, A.: Detecting aliasing artifacts in image sequences using deep neural networks. Association for Computing Machinery, New York (2018)

Priestley, M.B.: Spectral Analysis and Time Series, Volumes I and II. Academic Press, London (1983)

Ribeiro, A.H., Schön, T.B.: How convolutional neural networks deal with aliasing. In: 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2021), pp. 2755–2759 (2021)

Schatz, M.D., van de Geijn, R.A., Poulson, J.: Parallel matrix multiplication: a systematic journey. SIAM J. Sci. Comput. 38(6), C748–C781 (2016)

Thiebaux, H.J., Zwiers, F.W.: The interpretation and estimation of effective sample size. J. Clim. Appl. Meteorol. 23, 800–811 (1984)

Vasconcelos, C., et al.: An effective anti-aliasing approach for residual networks (2020)

Vasconcelos, C., et al.: Impact of aliasing on generalization in deep convolutional neural networks. In: International Conference on Computer Vision ICCV 2021. IEEE/CVF (2021)

Zwiers, F.W., von Storch, H.: Taking serial correlation into account in tests of the mean. J. Clim. 8, 336–351 (1995)

Author information


Contributions

HAP and GP wrote the main manuscript text. HAP prepared the figures and tables.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A - Proofs

Proof of Theorem 1

The proof is similar to the one-dimensional case in Eckley and Nason (2018), although it requires some adaptation to two dimensions.

(a) We begin by substituting s with 2s into Eq. (11) to obtain

where \(L_{r,s}\) corresponds to the \(j>1\) terms and \(F_{r,s}\) to the \(j=1\) term, i.e.

and

We focus first on the term \(L_{r,s}\), which we can decompose as

where (e, e) denotes terms that are evenly indexed in both components, (e, o) denotes terms that are evenly indexed in the first component and oddly indexed in the second, and so on. Consider

where we assume, without loss of generality, that r is even. Note that if r were odd, the expression on the right-hand side of (47) would simply equal \(L_{r,s}^{(o,e)}\). Now, using the definition of the two-dimensional mother wavelet, we have

where in (50) we summed over \(m=r+a\) and \(n=s+b\) instead of a and b and in (51) we summed over \(p=u-m\) and \(q=v-n\) instead of m and n. Substituting (51) back into (47), we obtain

where

Summing over \(a=u-p\) and \(b=v-q\) instead of p and q we have

Define \(\xi _{j-1,a+p,b+q}^{*\ell } = \xi _{j,2(a+p),2(b+q)}^\ell \), \(W^*_{j-1}({\varvec{z}}) = W_{j}({\varvec{z}})\) and \(w_{j-1,a+p,b+q}^{*\ell } = w_{j,2(a+p),2(b+q)}^\ell \) for \(j=2,3, \ldots \), \( a,b\in {\mathbb {Z}}\) and \({\varvec{z}}\in (0,1)^2\). Then

From assumption (iii) in Definition 3, we have

and so \(U_{r,s}^{(p,q)}\) is itself a two-dimensional locally stationary wavelet process. Since only a finite number of the \(h_{2p+r}\) and \(h_{2q}\) terms are non-zero, \(L^{(e,e)}_{r,s}\) is equivalent to the sum of a finite number of two-dimensional locally stationary wavelet processes with constant coefficients that do not depend on r or s, and so is itself a two-dimensional locally stationary wavelet process. We can proceed in a similar manner for \(L^{(e,o)}_{r,s}\), \(L^{(o,e)}_{r,s}\) and \(L^{(o,o)}_{r,s}\) to conclude that \(L_{r,s}\) is a two-dimensional locally stationary wavelet process with the same underlying wavelets as \(X_{r,s}\).
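The split into the four parity classes (e, e), (e, o), (o, e) and (o, o) is a two-dimensional polyphase decomposition of the index set. The short sketch below illustrates only this bookkeeping on a plain array indexed by \((u,v)\) (the function names are ours); it does not reproduce the wavelet algebra of the proof.

```python
import numpy as np

PARITIES = [("e", "e"), ("e", "o"), ("o", "e"), ("o", "o")]
START = {"e": 0, "o": 1}  # even indices start at 0, odd indices at 1


def parity_classes(a):
    """Split a 2D array a[u, v] into four classes by the parity of u and of v."""
    return {(pu, pv): a[START[pu]::2, START[pv]::2] for pu, pv in PARITIES}


def reassemble(parts, shape):
    """Inverse of parity_classes: interleave the four classes back into one array."""
    out = np.empty(shape, dtype=parts[("e", "e")].dtype)
    for (pu, pv), block in parts.items():
        out[START[pu]::2, START[pv]::2] = block
    return out


a = np.random.default_rng(0).normal(size=(7, 6))
parts = parity_classes(a)
assert np.isclose(a.sum(), sum(p.sum() for p in parts.values()))  # the sum splits into four
assert np.array_equal(reassemble(parts, a.shape), a)
```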

We now compute the covariance of \(F_{r,s}\). This process has mean zero since \(\xi _{j,u,v}^\ell \) has mean zero for all j, u, v and \(\ell \). Now,

We include the last step, namely (62), to show that we can consider each wavelet direction separately. We begin by considering the horizontal component \(\ell =h\) of the autocovariance of \(F_{r,s}\), and so

where in (64) we used the fact that \(\psi ^h_{1,u,v}=h_ug_v\). We can obtain similar expressions for the vertical \(\ell =v\) and diagonal \(\ell =d\) directions as well.
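The separable structure \(\psi ^h_{1,u,v}=h_ug_v\) can be made concrete with outer products. The sketch below uses Haar filters for brevity (the paper uses Daubechies wavelets), and the vertical (\(g_uh_v\)) and diagonal (\(g_ug_v\)) conventions are our assumption by analogy with the horizontal one. It checks that the finest-scale father and three mother filters are orthonormal, which is what allows each direction to be treated separately.

```python
import numpy as np

h = np.array([1.0, 1.0]) / np.sqrt(2)   # Haar low-pass (father) filter
g = np.array([1.0, -1.0]) / np.sqrt(2)  # Haar high-pass (mother) filter

# Finest-scale 2D filters as outer products: psi_h[u, v] = h[u] * g[v] as in the text;
# the vertical and diagonal conventions below are assumed by analogy.
psi = {
    "h": np.outer(h, g),
    "v": np.outer(g, h),
    "d": np.outer(g, g),
}
phi = np.outer(h, h)

filters = [phi, psi["h"], psi["v"], psi["d"]]
gram = np.array([[np.sum(a * b) for b in filters] for a in filters])
assert np.allclose(gram, np.eye(4))  # the four 2D filters are orthonormal
```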

We now consider two cases. Firstly, if \(w_{1,u,v}^\ell = w_1^\ell \) is constant, then, for \(\ell =h\), we have

We obtain analogous results for \(\ell =v\) and \(\ell =d\), and so

Secondly, if \(w_{1,u,v}^\ell \) is not constant, then

where in (70), we sum over \(m=u-r\) and \(n=v-2s\) instead of u and v. (71) follows from assumption (iii) in Definition 3 and in (72) we used the Lipschitz continuity of the process. Again, this is analogous for \(\ell =v\) and \(\ell =d\), and so

(b) Analogous to (a).

(c) Almost identical to (a) and (b) and to the proof of the one-dimensional case in Eckley and Nason (2018).

Proof of Corollary 1

Apply Theorem 1(c) \(\min (p,q)\) times and then apply either (a) or (b) a further \(\max (p,q)-\min (p,q)\) times (i.e. (a) if \(q>p\) and (b) if \(p>q\)).
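The order of operations in this proof can be checked on array indices. In the sketch below, sub-sampling in both dimensions stands for Theorem 1(c) and \(s\mapsto 2s\) for Theorem 1(a); we infer that (b) is the corresponding \(r\mapsto 2r\) case. The sketch confirms that the sequence yields \(Y_{r,s} = X_{2^pr, 2^qs}\) for small p and q. It checks only the indexing, not the distributional statements of the theorem.

```python
import numpy as np


def sub_second(x):   # Theorem 1(a): s -> 2s
    return x[:, ::2]


def sub_first(x):    # Theorem 1(b), as we read it: r -> 2r
    return x[::2, :]


def sub_both(x):     # Theorem 1(c): both dimensions
    return x[::2, ::2]


def subsample(x, p, q):
    """Y_{r,s} = X_{2^p r, 2^q s}, built in the order used in the proof of Corollary 1."""
    for _ in range(min(p, q)):
        x = sub_both(x)
    one_dim = sub_second if q > p else sub_first   # (a) if q > p, (b) if p > q
    for _ in range(max(p, q) - min(p, q)):
        x = one_dim(x)
    return x


X = np.arange(64 * 64).reshape(64, 64)
assert all(
    np.array_equal(subsample(X, p, q), X[:: 2**p, :: 2**q])
    for p in range(4) for q in range(4)
)
```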

Proof of Theorem 2

We begin by computing the expectation of the wavelet periodogram of the process \(Y_{r,s} = X_{2^pr, 2^qs}\), for \(i=h,v,d\).

where in (77) we substitute in the definition of the two-dimensional locally stationary wavelet process, in (80) we set

and in (81) we sum over \(m=a-2^pu\) and \(n=b-2^qv\) instead of a and b. In (82) we use assumption (iii) from Definition 3 and in (83) we make use of the Lipschitz continuity assumption. Now,

where in (89) we used the definition of the two-dimensional autocorrelation wavelet and in (90), we sum over \(a=x-r\) and \(b=y-s\) instead of x and y. Therefore

Assuming, without loss of generality, that \(p< q\) and considering the case \(i=h\), we have

Note that here we used the result derived in Eckley and Nason (2005) that \(\Psi _k(2^q b) = \Psi _{k-q}(b)\) for \(k >q\) and \(\Psi _k(2^q b) = \delta _{0,b}\) for \(k\le q\). An analogous property can be derived for the father autocorrelation wavelet \(\Phi _j\), which we also used above.
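The property \(\Psi _k(2^q b) = \Psi _{k-q}(b)\) for \(k>q\) and \(\Psi _k(2^q b) = \delta _{0,b}\) for \(k\le q\) can be checked numerically. The sketch below builds the discrete wavelets with the cascade \(\psi _{1,n}=g_n\), \(\psi _{j+1,n}=\sum _k h_{n-2k}\psi _{j,k}\). We assume this matches the construction of Nason et al. (2000) used here. One can verify that the identity then follows from the orthonormality of even shifts of h and g. The check uses the Haar and the four-tap Daubechies filters only, and is a numerical check rather than a proof.

```python
import numpy as np


def discrete_wavelets(h, g, J):
    """Discrete wavelets psi_1, ..., psi_J from psi_1 = g and
    psi_{j+1, n} = sum_k h_{n - 2k} psi_{j, k} (filter cascade)."""
    psis = [np.asarray(g, dtype=float)]
    for _ in range(J - 1):
        up = np.zeros(2 * len(psis[-1]) - 1)
        up[::2] = psis[-1]                 # upsample: insert zeros between coefficients
        psis.append(np.convolve(h, up))    # (h * up)_n = sum_k h_{n-2k} psi_{j,k}
    return psis


def acw(psi, tau):
    """Autocorrelation wavelet Psi(tau) = sum_n psi_n psi_{n+tau}; zero outside its support."""
    full = np.correlate(psi, psi, mode="full")  # lags -(L-1), ..., L-1
    idx = len(psi) - 1 + tau
    return full[idx] if 0 <= idx < len(full) else 0.0


def check_identity(h, g, J=5, bmax=4):
    """Check Psi_k(2^q b) = Psi_{k-q}(b) for k > q and = delta_{0,b} for k <= q."""
    psis = discrete_wavelets(h, g, J)
    for k in range(1, J + 1):
        for q in range(1, J + 1):
            for b in range(-bmax, bmax + 1):
                lhs = acw(psis[k - 1], 2**q * b)
                rhs = acw(psis[k - q - 1], b) if k > q else float(b == 0)
                if not np.isclose(lhs, rhs):
                    return False
    return True


r2, r3 = np.sqrt(2), np.sqrt(3)
haar_h, haar_g = np.array([1, 1]) / r2, np.array([1, -1]) / r2
d4_h = np.array([1 + r3, 3 + r3, 3 - r3, 1 - r3]) / (4 * r2)  # Daubechies, 2 vanishing moments
d4_g = np.array([d4_h[3], -d4_h[2], d4_h[1], -d4_h[0]])        # quadrature mirror filter
print(check_identity(haar_h, haar_g), check_identity(d4_h, d4_g))  # True True
```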

Furthermore, we can decompose the expectation as follows

where

and so

Using similar arguments, we can obtain corresponding expressions for \({\mathbb {E}} \left\{ \left( d^h_{j,u,v}\right) ^2\right\} ^v\) and \({\mathbb {E}} \left\{ \left( d^h_{j,u,v}\right) ^2\right\} ^d\) and so

Similarly, we can compute the expectations of \(\left( d^v_{j,u,v}\right) ^2\) and \(\left( d^d_{j,u,v}\right) ^2\). As we noted previously, the case where \(p>q\) simply results in the expectations for the vertical and horizontal directions being switched.

Derivation of the Spatial Equivalent Sample Size

Let \(X_{i,j}\), \(i,j = 1,\ldots ,n\) be observations on an \(n\times n\) equally spaced grid, with mean \(\mu \) and variance \(\sigma ^2\). Following the derivation in Thiebaux and Zwiers (1984),

where \(C(\tau _1,\tau _2)\) and \(\rho (\tau _1, \tau _2)\) are the symmetric spatial autocovariance and autocorrelation functions, respectively, at lags \(\tau _1\) and \(\tau _2\). If we had \(n_e\) independent observations, we would have \(\sigma _{{{\overline{X}}}}^2 = \sigma ^2/n_e\). Equating the two, we obtain the following expression for the equivalent sample size
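Written out under the assumption that the field is stationary, so that the correlation between \(X_{i,j}\) and \(X_{k,l}\) depends only on the lags, the standard calculation gives \(\sigma _{{\overline{X}}}^2 = \frac{\sigma ^2}{n^2}\sum _{\tau _1=-(n-1)}^{n-1}\sum _{\tau _2=-(n-1)}^{n-1}\left( 1-\frac{|\tau _1|}{n}\right) \left( 1-\frac{|\tau _2|}{n}\right) \rho (\tau _1,\tau _2)\). Hence \(n_e = n^2 \big/ \sum _{\tau _1}\sum _{\tau _2}\left( 1-|\tau _1|/n\right) \left( 1-|\tau _2|/n\right) \rho (\tau _1,\tau _2)\). This is our reconstruction and may differ in form from the authors' display. The sketch below evaluates it for a known correlation function and checks it against a brute-force sum over all pixel pairs. In practice \(\rho \) must be estimated from the data, which is itself delicate.

```python
import numpy as np


def equivalent_sample_size(rho, n):
    """n_e = n^2 / sum_{t1,t2} (1 - |t1|/n)(1 - |t2|/n) rho(t1, t2) for an n x n
    stationary field whose correlation rho depends only on the lags (t1, t2)."""
    lags = np.arange(-(n - 1), n)
    w = 1.0 - np.abs(lags) / n                      # weights (1 - |tau| / n)
    R = np.array([[rho(t1, t2) for t2 in lags] for t1 in lags])
    return n**2 / np.sum(np.outer(w, w) * R)


def equivalent_sample_size_brute(rho, n):
    """Same quantity from Var(mean) = (1/n^4) * sum over all pixel pairs of rho (sigma^2 = 1)."""
    pts = [(i, j) for i in range(n) for j in range(n)]
    total = sum(rho(i - k, j - l) for i, j in pts for k, l in pts)
    return n**4 / total


def ar1(t1, t2, phi=0.5):
    """Separable AR(1)-type correlation, used here only as a test case."""
    return phi ** (abs(t1) + abs(t2))


def iid(t1, t2):
    """No spatial correlation."""
    return 1.0 if t1 == 0 and t2 == 0 else 0.0


print(equivalent_sample_size(iid, 8))       # 64.0 (no correlation: n_e = n^2)
print(equivalent_sample_size(ar1, 8) < 64)  # True (positive correlation shrinks n_e)
```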

Appendix B - Additional tables of results

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Palasciano, H.A., Nason, G.P. A test for the absence of aliasing or white noise in two-dimensional locally stationary wavelet processes. Stat Comput 33, 108 (2023). https://doi.org/10.1007/s11222-023-10269-5


DOI: https://doi.org/10.1007/s11222-023-10269-5