Abstract

We consider the problem of estimating expectations with respect to a target distribution with an unknown normalizing constant, and where even the unnormalized target needs to be approximated at finite resolution. Under such an assumption, this work builds upon a recently introduced multi-index sequential Monte Carlo (SMC) ratio estimator, which provably enjoys the complexity improvements of multi-index Monte Carlo (MIMC) and the efficiency of SMC for inference. The present work leverages a randomization strategy to remove the discretization bias entirely, which simplifies estimation substantially, particularly in the MIMC context, where the choice of index set is otherwise important. Under reasonable assumptions, the proposed method provably achieves the same canonical complexity of MSE\(^{-1}\) as the original method (where MSE is mean squared error), but without discretization bias. The method is illustrated on examples of Bayesian inverse problems and spatial statistics problems.

1 Introduction

We consider the computational approach to Bayesian inverse problems (Stuart 2010), which has attracted considerable attention in recent years. One typically requires the expectation of a quantity of interest \(\varphi (x)\), where the unknown parameter \(x \in \mathsf X\subset \mathbb R^{d}\) has posterior probability distribution \(\pi (x)\), as given by Bayes' theorem (see Footnote 1). Assuming the target distribution cannot be computed analytically, we instead compute the expectation as

\[\mathbb {E}_{\pi }[\varphi (x)] = \int _{\mathsf X} \varphi (x)\, \pi (dx) = \frac{\int _{\mathsf X} \varphi (x) f(x)\, \pi _0(dx)}{Z},\]

where \(Z = \int _{\mathsf X}f(x)\pi _0(dx)\), f is prescribed up to a normalizing constant, \(\pi _0\) is the prior and \(\pi (x) \propto f(x) \pi _0(x)\). Markov chain Monte Carlo (MCMC) (Geyer 1992; Robert and Casella 1999; Bernardo et al. 1998; Cotter et al. 2013) and sequential Monte Carlo (SMC) (Chopin and Papaspiliopoulos 2020; Del Moral et al. 2006) are two methodologies which can be used to achieve this. In this paper, we use the notations \(d\pi (x)\), \(\pi (dx)\) and \(\pi (x)dx\) interchangeably, to mean the probability under \(\pi \) of an infinitesimal volume element dx (Lebesgue measure by default) centered at x.
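
The ratio structure \(\mathbb {E}_{\pi }[\varphi ] = \int \varphi f\, d\pi _0 / Z\) can be made concrete with plain importance sampling from the prior, a deliberately simple stand-in for the MCMC and SMC methods cited above. The sketch assumes that \(\pi _0\) can be sampled directly and that f can be evaluated pointwise; the toy target (prior U[-1,1], f(x) = 1 + x, \(\varphi (x) = x\), for which \(\mathbb {E}_{\pi }[\varphi ] = 1/3\) and Z = 1) and the name `ratio_estimate` are illustrative assumptions.

```python
import random


def ratio_estimate(phi, f, sample_prior, n, seed=0):
    """Estimate E_pi[phi] = (int phi f dpi_0) / (int f dpi_0) from i.i.d. prior draws.

    Numerator and denominator are plain Monte Carlo averages; their ratio is a
    consistent (slightly biased) estimator of E_pi[phi], and den / n estimates Z.
    """
    rng = random.Random(seed)
    num = 0.0  # running sum of phi(x) f(x)
    den = 0.0  # running sum of f(x)
    for _ in range(n):
        x = sample_prior(rng)
        fx = f(x)
        num += phi(x) * fx
        den += fx
    return num / den, den / n


# Toy example (assumed): prior U[-1, 1], f(x) = 1 + x, phi(x) = x.
est, z_hat = ratio_estimate(lambda x: x, lambda x: 1.0 + x,
                            lambda rng: rng.uniform(-1.0, 1.0), 200_000)
```

Only the unnormalized quantities (numerator and Z) are unbiased here; the ratio is not, which is the same structure the ratio estimators in this paper inherit.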

Standard Monte Carlo methods can be costly, particularly in the case where the problem involves approximation of an underlying continuum domain, a problem setting which is becoming progressively more prevalent (Tarantola 2005; Cotter et al. 2013; Stuart 2010; Law et al. 2015; Van Leeuwen et al. 2015; Oliver et al. 2008). The multilevel Monte Carlo (MLMC) method was developed to reduce the computational cost in this setting, by performing most simulations with low accuracy and low cost (Giles 2015), and successively refining the approximation with corrections that use fewer simulations with higher cost but lower variance. The MLMC approach has recently attracted a lot of interest from those working on inference problems, for example MLMCMC (Dodwell et al. 2015; Hoang et al. 2013) and MLSMC (Beskos et al. 2018, 2017; Moral et al. 2017). The related multi-fidelity Monte Carlo methods often focus on the case where the models lack structure and quantifiable convergence behaviour (Peherstorfer et al. 2018; Cai and Adams 2022), which is very common across science and engineering applications. It is worth noting that MLMC methods can also be applied to this class of problems, as they do not require structure or a priori convergence estimates in order to be implemented. However, convergence rates provide a convenient mechanism for delivering quantifiable theoretical results. This is the case for both multilevel and multi-fidelity approaches.

Recently, an extension of the MLMC method called multi-index Monte Carlo (MIMC) has been established (Haji-Ali et al. 2016). Instead of using first-order differences, MIMC uses high-order mixed differences to reduce the variance of the hierarchical differences dramatically. MIMC was first applied in the inference context in Cui et al. (2018) and Jasra et al. (2018b, 2021b). The state of the art for MIMC in inference is presented in Law et al. (2022), in which the MISMC ratio estimator for posterior inference is introduced and its theoretical convergence rate is established. Although the MISMC ratio estimator achieves a canonical complexity of MSE\(^{-1}\), it still suffers from discretization bias. This bias constrains the choice of index set, and estimators of the bias often suffer from high variance, which means that implementation can be cumbersome for challenging problems where the method is otherwise expected to be particularly advantageous.

Debiasing techniques were first introduced in Rhee and Glynn (2012, 2015), McLeish (2011) and Strathmann et al. (2015), with many more works using or developing them further (Agapiou et al. 2014; Glynn and Rhee 2014; Jacob and Thiery 2015; Lyne et al. 2015; Walter 2017). These debiasing techniques are based on an idea similar to MLMC, but in addition to reducing the estimator variance, they focus on building unbiased estimators. The connection between the debiasing technique and the MLMC method has been pointed out by Dereich and Mueller-Gronbach (2015), Giles (2015) and Rhee and Glynn (2015). Vihola (2018) has further clarified the connection within a general framework for unbiased estimators. The first work to combine the debiasing technique and MLMC in the context of inference is Chada et al. (2021). A recent breakthrough involves using double randomization strategies to remove the bias of the increment estimator (Heng et al. 2021; Jasra et al. 2021a, 2020).

The starting point of our current work is the MISMC ratio estimator introduced in Law et al. (2022). Our new randomized MISMC (rMISMC) ratio estimator will be reformulated in the framework of Rhee and Glynn (2015) to remove discretization bias entirely. Like the MISMC ratio estimator, our estimator provably enjoys the complexity improvements of MIMC and the efficiency of SMC for inference. Theoretical results will be given to show that it achieves the canonical complexity of MSE\(^{-1}\) under appropriate assumptions, but without any discretization bias and the consequent requirements for its estimation. From a practical perspective, estimating this bias, and balancing it along with the variance and cost in order to select the index set, comprises a significant overhead for existing multi-index methods. In addition to convenience and simplification, the particular formulation of our unnormalized estimators is novel, and may prove useful in other contexts where one cannot obtain i.i.d. samples from the increments. The unbiased estimators of the normalizing constant and the unnormalized integral can also be useful in their own right, for example within the Robbins-Monro algorithm (Robbins and Monro 1951) or other stochastic approximation algorithms (Kushner and Clark 2012; Law et al. 2019; Jasra et al. 2021a).

The paper is organized as follows. In Sect. 2, we present the motivating problems considered in the subsequent numerical experiments. In Sect. 3, the original MISMC ratio estimator is reviewed for convenience, and the rMISMC ratio estimator and its theoretical results are stated. In Sect. 4, we apply the MISMC and rMISMC methods to Bayesian inverse problems for elliptic PDEs and to log Gaussian process models.

2 Motivating problems

Here, we introduce Bayesian inference for a D-dimensional elliptic partial differential equation and for two statistical models, the log Gaussian Cox model and the log Gaussian process model. We will apply the methods presented in Sect. 3 to these motivating problems in order to show their efficacy.

2.1 Elliptic partial differential equation

We consider a D-dimensional elliptic partial differential equation defined over an open domain \(\Omega \subset \mathbb R^{D}\) with locally continuous boundary \(\partial \Omega \), i.e. the boundary is the graph of a continuous function in a neighbourhood of any point. Given a forcing function \(\textsf{f}(x): \Omega \rightarrow \mathbb R\) and a diffusion coefficient function \(a(x): \Omega \rightarrow \mathbb R\), depending on a random variable \(x \sim \pi \), the partial differential equation for \(u(x): {\bar{\Omega }} \rightarrow \mathbb R\) (where \({\bar{\Omega }}\) is the closure of \(\Omega \)) is given by

The dependence of the solution u of (2) on x arises from the dependence of a and \(\textsf{f}\) on x.

In particular, in the numerical experiments we take the prior distribution to be

\[x \sim U[-1,1]^{d}, \tag{3}\]

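and the diffusion coefficient a(x) as

\[a(x)(z) = a_0 + \sum _{i=1}^{d} x_i \psi _i(z), \tag{4}\]

where \(\psi _i\) are smooth functions with \(\left\Vert \psi _i\right\Vert _{\infty }:= \sup _{z\in \Omega } \vert \psi _i(z) \vert \le 1\) for \(i=1,...,d\), and \(a_0 > \sum _{i=1}^{d}\vert x_i\vert \), which guarantees \(a(x)(z) \ge a_0 - \sum _{i=1}^{d}\vert x_i\vert > 0\).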

2.1.1 Finite element method

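Consider the 1D piecewise linear nodal basis functions \(\phi _{j}^{K}\), \(j=1,2,...,K\), on the mesh \(\{ z_{i}^{K} = i/(K+1) \}_{i=0}^{K+1}\), which are defined as

\[\phi _{j}^{K}(z) = \begin{cases} (K+1)(z - z_{j-1}^{K}), & z \in [z_{j-1}^{K}, z_{j}^{K}],\\ (K+1)(z_{j+1}^{K} - z), & z \in [z_{j}^{K}, z_{j+1}^{K}],\\ 0, & \text{otherwise}. \end{cases}\]
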
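For an index \(\alpha = (\alpha _1,\alpha _2) \in \mathbb {N}^{2}\), we can form the tensor product grid over \(\Omega =[0,1]^2\) as

\[\left\{ (z_{i_1}^{K_{1,\alpha }}, z_{i_2}^{K_{2,\alpha }}) : i_1 = 0,\dots ,K_{1,\alpha }+1,\ i_2 = 0,\dots ,K_{2,\alpha }+1 \right\} ,\]

where \(K_{1,\alpha }=2^{\alpha _1}\) and \(K_{2,\alpha }=2^{\alpha _2}\), so that the mesh size in each direction is \((K_{1,\alpha }+1)^{-1}\) and \((K_{2,\alpha }+1)^{-1}\), respectively, i.e. of order \(2^{-\alpha _1}\) and \(2^{-\alpha _2}\). The bilinear basis functions are then constructed as products of the nodal basis functions in the two directions:

\[\phi _{\alpha }^{i}(z) = \phi _{i_1}^{K_{1,\alpha }}(z_1)\, \phi _{i_2}^{K_{2,\alpha }}(z_2), \quad z = (z_1,z_2) \in \Omega ,\]

where \(i=i_1+K_{1,\alpha }(i_2-1)\) for \(i_1=1,...,K_{1,\alpha }\) and \(i_2 = 1,...,K_{2,\alpha }\), so that i ranges over \(1,\dots ,K_{\alpha }\) with \(K_{\alpha } = K_{1,\alpha }K_{2,\alpha }\).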
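
In one dimension, the hat functions \(\phi _j^K\) lead to a tridiagonal Galerkin system, which is why a cost rate \(\gamma = 1\) is quoted for the 1D toy problem in Sect. 4.2. The sketch below assumes the sign convention \(-(a u')' = \textsf{f}\) with \(u(0) = u(1) = 0\) and constant a and \(\textsf{f}\) (assumptions made for illustration; `solve_poisson_1d` is a hypothetical name). It assembles the stiffness matrix \((a/h)\,\mathrm{tridiag}(-1, 2, -1)\) with \(h = 1/(K+1)\) and the load vector, then applies the Thomas algorithm, whose cost is linear in K.

```python
def solve_poisson_1d(K, a, f):
    """Piecewise-linear FEM for -(a u')' = f on (0, 1), u(0) = u(1) = 0.

    Assumes constant a > 0 and constant f on the uniform mesh z_i = i / (K + 1).
    Returns the K interior nodal values u^1, ..., u^K.
    """
    h = 1.0 / (K + 1)
    # Stiffness matrix (a / h) * tridiag(-1, 2, -1), stored as three diagonals.
    lower = [-a / h] * K  # lower[0] is unused
    diag = [2.0 * a / h] * K
    upper = [-a / h] * K  # upper[K - 1] is unused
    # Load vector: int f * phi_j dz = f * h for every interior hat function.
    rhs = [f * h] * K
    # Thomas algorithm: forward elimination ...
    c = [0.0] * K
    d = [0.0] * K
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, K):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    # ... followed by back substitution.
    u = [0.0] * K
    u[-1] = d[-1]
    for i in range(K - 2, -1, -1):
        u[i] = d[i] - c[i] * u[i + 1]
    return u
```

For this 1D problem, piecewise-linear Galerkin solutions are exact at the nodes, so the output can be compared directly with \(u(z) = \textsf{f}\, z(1-z)/(2a)\); the 2D tensor-product system above is no longer tridiagonal.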

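A Galerkin approximation can be written as

\[u_{\alpha }(x)(z) = \sum _{i=1}^{K_{\alpha }} u_{\alpha }^{i}(x)\, \phi _{\alpha }^{i}(z),\]

where \(u_{\alpha }^i\) for \(i=1,...,K_{\alpha }\) are the approximate values of the solution u(x) at the interior mesh points, which we want to obtain. Applying the Galerkin approximation to the weak formulation of PDE (2), we derive the corresponding Galerkin system:

\[\textbf{A}_{\alpha }(x)\, \textbf{u}_{\alpha }(x) = \textbf{f}_{\alpha }(x),\]
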
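where \(\textbf{A}_{\alpha }(x)\) is the stiffness matrix whose components are given by

\[[\textbf{A}_{\alpha }(x)]_{ij} = \int _{\Omega } a(x)(z)\, \nabla \phi _{\alpha }^{i}(z) \cdot \nabla \phi _{\alpha }^{j}(z)\, dz,\]

where \(j = j_1+(j_2-1) K_{1,\alpha }\) for \(j_1 = 1,...,K_{1,\alpha }\) and \(j_2 =1,...,K_{2,\alpha }\),

\[\textbf{u}_{\alpha }(x) = [u_{\alpha }^{1}(x),\dots ,u_{\alpha }^{K_{\alpha }}(x)]^{\top },\]

and

\[\textbf{f}_{\alpha }(x) = [\textsf{f}_{\alpha }^{1}(x),\dots ,\textsf{f}_{\alpha }^{K_{\alpha }}(x)]^{\top }, \quad \textsf{f}_{\alpha }^{j}(x) = \int _{\Omega } \textsf{f}(x)(z)\, \phi _{\alpha }^{j}(z)\, dz.\]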

2.1.2 The Bayesian inverse problem

Under an elliptic partial differential equation model, we wish to infer the unknown parameter value \(x \in \mathsf X\subset \mathbb R^{d}\) given n evaluations of the solution \(y \in \mathbb R^{n}\) (Stuart 2010). We aim to analyse the posterior distribution \(\mathbb {P}(x \vert y)\) with density \(\pi (x) = \pi (x \vert y)\). In practice, one can only expect to evaluate a discretized version of \(x\in \mathsf X\). The posterior \(\pi (dx)\) can then be obtained up to a constant of proportionality by applying Bayes' theorem:

\[\pi (dx) \propto L(x)\, \pi _0(dx),\]

where \(\pi _0(dx)\) is the prior distribution and L(x) is the likelihood, which is proportional to the probability density of the data y given the value x of the unknown parameter.

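Define the vector-valued function

\[\mathcal {G}(x) = [v_1(u(x)),\dots ,v_n(u(x))]^{\top },\]

where n is the number of data, \(v_i \in L^2\) and \(v_i(u(x)) = \int v_i(z)u(x)(z)dz\) for \(i=1,...,n\). Then the data can be modelled as

\[y = \mathcal {G}(x) + \nu , \quad \nu \sim \mathcal {N}(0,\Sigma ), \quad \nu \perp x,\]

where \(\mathcal {N}(0,\Sigma )\) denotes the Gaussian distribution with mean zero and variance-covariance matrix \(\Sigma \). Then the likelihood of the evaluations y can be derived as

\[L(x) = \exp \left( -\tfrac{1}{2}\vert y - \mathcal {G}(x) \vert _{\Sigma }^{2} \right) ,\]
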
where \(\vert w \vert _{\Sigma } = (w^{\top }\Sigma ^{-1}w)^{1/2}\).
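
Evaluating \(\vert w \vert _{\Sigma }^2 = w^{\top }\Sigma ^{-1}w\) does not require forming \(\Sigma ^{-1}\): with a Cholesky factor \(\Sigma = L_c L_c^{\top }\), one has \(\vert w \vert _{\Sigma }^2 = \vert L_c^{-1} w\vert ^2\), obtained by one forward substitution. A pure-Python sketch, assuming the Gaussian likelihood above and that the forward-model output \([v_1(u(x)),\dots ,v_n(u(x))]\) is already available as a list; the function names are hypothetical.

```python
import math


def cholesky(S):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    n = len(S)
    Lc = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(Lc[i][k] * Lc[j][k] for k in range(j))
            if i == j:
                Lc[i][i] = math.sqrt(s)
            else:
                Lc[i][j] = s / Lc[j][j]
    return Lc


def log_likelihood(y, Gx, Sigma):
    """log L(x) = -0.5 * |y - G(x)|_Sigma^2, omitting the normalizing constant."""
    w = [yi - gi for yi, gi in zip(y, Gx)]
    Lc = cholesky(Sigma)
    # Forward substitution solves Lc v = w, so that w^T Sigma^{-1} w = |v|^2.
    v = []
    for i in range(len(w)):
        v.append((w[i] - sum(Lc[i][k] * v[k] for k in range(i))) / Lc[i][i])
    return -0.5 * sum(vi * vi for vi in v)
```

For larger n one would use a linear algebra library instead; the structure of the computation is the same.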

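When the elliptic PDE can only be solved approximately, we denote the approximate solution at resolution multi-index \(\alpha \) by \(u_{\alpha }\), as described above, and the approximate likelihood is given by

\[L_{\alpha }(x) = \exp \left( -\tfrac{1}{2}\vert y - \mathcal {G}_{\alpha }(x) \vert _{\Sigma }^{2} \right) , \quad \mathcal {G}_{\alpha }(x) = [v_1(u_{\alpha }(x)),\dots ,v_n(u_{\alpha }(x))]^{\top },\]

and the posterior density is given by

\[\pi _{\alpha }(dx) \propto L_{\alpha }(x)\, \pi _0(dx).\]
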
2.2 Log Gaussian process models

Now, we consider the log Gaussian Cox model and the log Gaussian process model. A log Gaussian process (LGP) \(\Lambda (z)\) is given by

\[\Lambda (z) = \exp (x(z)),\]

where \(x = \{ x(z): z\in \Omega \subset \mathbb R^{D} \}\) is a real-valued Gaussian process (Rasmussen 2003; Stuart 2010). The log Gaussian process model provides a flexible approach to non-parametric density modelling with controllable smoothness properties. However, exact inference for the LGP is intractable. The LGP model for density estimation (Tokdar and Ghosh 2007) assumes data \(z_i \sim p\), where \(p(z) = \Lambda (z)/\int _{\Omega } \Lambda (z) dz\). As such, the likelihood of x associated to observations \({\textsf{Z}}=\{z_1,\dots ,z_n\}\) is given by

\[L(x) = \prod _{i=1}^{n} \frac{\Lambda (z_i)}{\int _{\Omega } \Lambda (z)\, dz}. \tag{18}\]

The log Gaussian Cox (LGC) model assumes the observations are distributed according to a spatially inhomogeneous Poisson point process with intensity function given by \(\Lambda \). The likelihood of observing \({\textsf{Z}}=\{z_1,\dots ,z_n\}\) under the LGC model is (Møller et al. 1998; Murray et al. 2010; Law et al. 2022; Cai and Adams 2022)

\[L(x) = \exp \left( -\int _{\Omega } \Lambda (z)\, dz \right) \prod _{i=1}^{n} \Lambda (z_i). \tag{19}\]

This construction has an elegant simplicity, which is flexible and convenient due to the underlying Gaussian process. Some example applications are presented in Diggle et al. (2013).
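
A grid-based evaluation of the two log-likelihoods, \(\sum _i x(z_i) - n\log \int _{\Omega }\Lambda \) for the LGP model and \(\sum _i x(z_i) - \int _{\Omega }\Lambda \) for the LGC model, with \(\Lambda = \exp (x)\), can be sketched as follows. Assumptions: \(\Omega = [0,1]^2\), the latent field is supplied as a Python callable, and the integral is approximated by a midpoint rule, which stands in for the quadrature rule Q of Sect. 2.2.1 and is not the FFT-based scheme used in the paper. The function name is hypothetical.

```python
import math


def lgc_lgp_loglik(x, points, m):
    """Grid-based log-likelihoods of the LGC and LGP models on [0, 1]^2.

    x      : callable z -> x(z), a realisation of the latent field (assumed given)
    points : observed locations z_1, ..., z_n in [0, 1]^2
    m      : number of midpoint-rule cells per direction for int exp(x(z)) dz
    """
    h = 1.0 / m
    # Midpoint quadrature of the intensity integral int_{[0,1]^2} Lambda(z) dz.
    integral = h * h * sum(math.exp(x(((i + 0.5) * h, (j + 0.5) * h)))
                           for i in range(m) for j in range(m))
    sum_x = sum(x(z) for z in points)  # sum_i log Lambda(z_i)
    log_lgc = sum_x - integral  # Poisson point process likelihood
    log_lgp = sum_x - len(points) * math.log(integral)  # density p = Lambda / int Lambda
    return log_lgc, log_lgp
```

For a constant field \(x \equiv c\), the LGP log-likelihood is exactly zero, since every location is then equally likely under p; the LGC log-likelihood is \(nc - e^{c}\).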

We consider a dataset comprised of the location of \(n=126\) Scots pine saplings in a natural forest in Finland (Møller et al. 1998), denoted \(z_1,...,z_n \in [0,1]^2\). This is modeled with both LGC, following Heng et al. (2020), and LGP, following Tokdar and Ghosh (2007). The prior is defined in terms of a KL-expansion with a suitable parameter \(\theta = (\theta _1,\theta _2,\theta _3)\) as follows, for \(z \in [0,2]^2\),

where \(\mathcal {C}\mathcal {N}(0,1)\) denotes a standard complex normal distribution, \(\xi _{k}^{*}\) is the complex conjugate of \(\xi _{k}\), \(\phi _{k}(z) \propto \exp [\pi i z \cdot k]\) are Fourier series basis functions (with \(i=\sqrt{-1}\)) and

The coefficient r controls the smoothness, and here we will choose \(r=1.6\). Note that the periodic prior measure is defined on \([0,2]^2\) so that no boundary conditions are imposed on the sub-domain \([0,1]^2\). Then, the posterior distribution is given by

\[\pi (dx) \propto L(x)\, \pi _0(dx),\]

where \(\pi _0\) is constructed in (20) and L(x) is constructed in (19) (or (18)).

2.2.1 The finite approximation problem

One typically uses a grid-based approximation for inference in LGC (Murray et al. 2010; Diggle et al. 2013; Teng et al. 2017; Cai and Adams 2022) and in LGP (Riihimäki and Vehtari 2014; Griebel and Hegland 2010; Tokdar 2007). We approximate the priors and likelihoods of the LGC and LGP models using the fast Fourier transform (FFT), as described below. First, we truncate the KL-expansion of the prior as follows, for an index \(\alpha = (\alpha _1,\alpha _2) \in \mathbb {N}^{2}\),

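where \(\mathcal {A}_{\alpha }:= \{ -2^{\alpha _1/2},-(2^{\alpha _1/2}-1),...,2^{\alpha _1/2}-1,2^{\alpha _1/2} \} \times \{ 1,2,...,2^{\alpha _2/2}-1,2^{\alpha _2/2} \} \cup \{ 1,2,...,2^{\alpha _2/2}-1,2^{\alpha _2/2} \} \times \{0\}\). The cost for approximating \(x_{\alpha }(z)\) over the grid is \(\mathcal {O}((\alpha _1+\alpha _2)2^{\alpha _1+\alpha _2})\). The finite approximations of the likelihood of LGC and LGP are then defined by

\[L_{\alpha }(x) = \exp \left( -Q(\exp (x_{\alpha })) \right) \prod _{i=1}^{n} \exp ({\hat{x}}_{\alpha }(z_i)), \tag{24}\]

\[L_{\alpha }(x) = \prod _{i=1}^{n} \frac{\exp ({\hat{x}}_{\alpha }(z_i))}{Q(\exp (x_{\alpha }))}, \tag{25}\]
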
where \({\hat{x}}_{\alpha }(z)\) is defined as an interpolant over the grid output from FFT and Q denotes a quadrature rule, such that \(Q(\exp (x_{\alpha })) \approx \int _{[0,1]^2} \exp (x(z)) dz\). Then, the finite approximations of the posterior distribution of LGC and LGP are defined by

The quantity of interest for these models will be \(\varphi (x) = \int _{[0,1]^2} \exp (x(z)) dz\), and we will estimate its expectation \(\pi (\varphi ) = \mathbb {E}( \varphi (x) \vert z_1,\dots , z_n )\).

3 Randomized multi-index sequential Monte Carlo

The original MISMC estimator has been considered in Cui et al. (2018) and Jasra et al. (2018b, 2021b). Convergence guarantees have been established in Law et al. (2022), which also demonstrates the importance of selecting a reasonable index set by comparing results obtained with the tensor product index set and the total degree index set. In this section we introduce an extension of multi-index sequential Monte Carlo, which we call randomized multi-index sequential Monte Carlo (rMISMC). The underlying randomization methodology was first introduced in Rhee and Glynn (2012, 2015). Instead of fixing an index set in advance, we draw the multi-indices \(\alpha \) at random from a probability distribution. Another advantage of this approach is that it yields unbiased estimators of the unnormalized quantities, free of discretization bias.

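Define the target distribution as \(\pi (x) = f(x)/Z\), where \(Z = \int _{\mathsf X} f(x) dx\) and \(f(x):= L(x) \pi _0(x)\). Given a quantity of interest \(\varphi : \mathsf X\rightarrow \mathbb R\), for simplicity, we define

\[f(\varphi ) := \int _{\mathsf X} \varphi (x) f(x)\, dx, \qquad \pi (\varphi ) := \int _{\mathsf X} \varphi (x) \pi (x)\, dx = \frac{f(\varphi )}{f(1)},\]
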
where \(f(1) = \int _{\mathsf X} f(x) dx = Z\). Define their approximations at finite resolution \(\alpha \in \mathbb {Z}_+^D\) by \(\pi _\alpha (x) = f_\alpha (x)/Z_\alpha \), where \(Z_\alpha = \int _{\mathsf X} f_\alpha (x) dx\) and \(f_\alpha (x):= L_\alpha (x) \pi _0(x)\), and \(\varphi _{\alpha }:\mathsf X\rightarrow \mathbb R\), where \(\lim _{\vert \alpha \vert \uparrow \infty } f_\alpha = f\) and \(\lim _{\vert \alpha \vert \uparrow \infty } \varphi _\alpha = \varphi \).

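Consider the ratio decomposition

\[\pi (\varphi ) = \frac{f(\varphi )}{f(1)} = \frac{\sum _{\alpha \in \mathbb {Z}_+^D} \Delta \left( f_{\alpha }(\varphi _{\alpha }) \right) }{\sum _{\alpha \in \mathbb {Z}_+^D} \Delta \left( f_{\alpha }(1) \right) }, \tag{28}\]
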
where \(\Delta \) is the first-order mixed difference operator

\[\Delta = \Delta _D \circ \cdots \circ \Delta _1,\]

which is defined recursively by the first-order difference operator \(\Delta _i\) along direction \(1 \le i \le D\). If \(\alpha _i>0\),

\[\Delta _i \varphi _{\alpha }(x_{\alpha }) = \varphi _{\alpha }(x_{\alpha }) - \varphi _{\alpha - e_i}(x_{\alpha - e_i}),\]

where \(e_i\) are the canonical unit vectors in \(\mathbb R^{D}\), i.e. \((e_i)_j=1\) for \(j=i\) and 0 otherwise. If \(\alpha _i=0\), \(\Delta _i \varphi _{\alpha }(x_{\alpha }) = \varphi _{\alpha }(x_{\alpha })\).

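For convenience, we denote the vector of multi-indices

\[\varvec{\alpha }(\alpha ) := \left( \varvec{\alpha }_1(\alpha ),\dots ,\varvec{\alpha }_{2^D}(\alpha ) \right) ,\]
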
where \(\varvec{\alpha }_1(\alpha ) = \alpha \), \(\varvec{\alpha }_{2^D}(\alpha ) = \alpha - \sum _{i=1}^{D}e_i\) and \(\varvec{\alpha }_i(\alpha )\) for \(1< i < 2^D\) correspond to the intermediate multi-indices while computing the mixed difference operator \(\Delta \).
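
The multi-indices \(\varvec{\alpha }_k(\alpha )\) and the signs of the mixed difference can be enumerated by switching each direction's backward shift on or off. The sketch below (hypothetical helper names) does this, drops shifts that would leave \(\mathbb {Z}_+^D\) so that \(\Delta _i\) acts as the identity when \(\alpha _i = 0\), and orders the terms so that the first is \(\alpha \) and, when all \(\alpha _i > 0\), the last is \(\alpha - \sum _i e_i\). Summing \(\Delta g_\alpha \) over a box \(\{0,\dots ,L_1\}\times \cdots \times \{0,\dots ,L_D\}\) telescopes to \(g_{(L_1,\dots ,L_D)}\), which is the identity underlying the ratio decomposition (28).

```python
from itertools import product


def mixed_difference_terms(alpha):
    """Multi-indices and signs entering the mixed difference at alpha.

    Direction i contributes a first-order difference only if alpha_i > 0,
    matching the convention Delta_i phi_alpha = phi_alpha when alpha_i = 0.
    """
    terms = []
    for shift in product((0, 1), repeat=len(alpha)):
        if any(s > a for s, a in zip(shift, alpha)):
            continue  # shifting below zero: this term is absent
        beta = tuple(a - s for a, s in zip(alpha, shift))
        sign = (-1) ** sum(shift)
        terms.append((beta, sign))
    return terms


def mixed_difference(g, alpha):
    """Delta g_alpha = sum_k sign_k * g(alpha_k) for a function g of the multi-index."""
    return sum(sign * g(beta) for beta, sign in mixed_difference_terms(alpha))
```

For \(\alpha = (1,1)\) the signs are \((1,-1,-1,1)\), as in Example 2; the order in which the two intermediate indices are listed is only a convention.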

Throughout this section \(C>0\) is a constant whose value may change from line to line.

3.1 Original MISMC ratio estimator

In order to make use of (28), we need to construct estimators of \(\Delta ( f_\alpha (\zeta _\alpha ) )\), both for our quantity of interest \(\zeta _\alpha = \varphi _\alpha \) and for \(\zeta _\alpha =1\). The natural and naive way to estimate \(\Delta ( f_\alpha (\zeta _\alpha ) )\) is based on sampling from a coupling of \((\pi _{\varvec{\alpha }_1(\alpha )},...,\pi _{\varvec{\alpha }_{2^D}(\alpha )})\). However, such a coupling is not trivial to construct, so instead we construct an approximate coupling \(\Pi _{\alpha }: \sigma (\mathsf X^{2^D}) \rightarrow [0,1]\) as follows. We first define the coupling prior distribution as

\[\Pi _0(d\varvec{x}) = \pi _0(d\varvec{x}_1) \prod _{j=2}^{2^D} \delta _{\varvec{x}_1}(d\varvec{x}_j), \tag{32}\]

where \(\varvec{x}= (\varvec{x}_1,...,\varvec{x}_{2^D}) \in \mathsf X^{2^D}\) and \(\delta _{\varvec{x}_1}\) denotes the Dirac measure at \(\varvec{x}_1\). Note that this is an exact coupling of the prior in the sense that for any \(j \in \{1,\dots , 2^D\}\)

\[\int _{\varvec{x}_{-j} \in \mathsf X^{2^D-1}} \Pi _0(d\varvec{x}) = \pi _0(d\varvec{x}_j). \tag{33}\]

Here we denote \(\varvec{x}_{-j} = (\varvec{x}_1,...,\varvec{x}_{j-1},\varvec{x}_{j+1},...,\varvec{x}_{2^D})\) which omits the jth coordinate. Indeed it is the same coupling used in MIMC (Haji-Ali et al. 2016).

To obtain variance rates analogous to those of MIMC (Haji-Ali et al. 2016), we use an SMC sampler (Chopin and Papaspiliopoulos 2020; Del Moral et al. 2006). We hence adapt Algorithm 1 to an extended target which is an approximate coupling of the actual target as in Jasra et al. (2018a, 2018b, 2021b), Cui et al. (2018) and Franks et al. (2018), and utilize a ratio of estimates, similar to Franks et al. (2018). To this end, we define a likelihood on the coupled space as

\[\textbf{L}_{\alpha }(\varvec{x}) = \max \left\{ L_{\varvec{\alpha }_1(\alpha )}(\varvec{x}_1),\dots ,L_{\varvec{\alpha }_{2^D}(\alpha )}(\varvec{x}_{2^D}) \right\} . \tag{34}\]

The approximate coupling is defined by

\[\Pi _{\alpha }(d\varvec{x}) \propto \textbf{L}_{\alpha }(\varvec{x})\, \Pi _0(d\varvec{x}). \tag{35}\]

Example 1

(Approximate Coupling) Let \(D=2\), \(d=1\) and \(\alpha =(1,1)\). An example of the approximate coupling constructed in (32), (34) and (35) is given by, for \(\varvec{x}=(\varvec{x}_1,\varvec{x}_2,\varvec{x}_3,\varvec{x}_4) \in \mathsf X^{4}\),

\[\Pi _{(1,1)}(\varvec{x}) \propto \textbf{L}_{(1,1)}(\varvec{x})\, \Pi _0(\varvec{x}),\]

where \(\textbf{L}_{(1,1)}(\varvec{x}_1,\varvec{x}_2,\varvec{x}_3,\varvec{x}_4) = \max \{ L_{00}(\varvec{x}_1),L_{01}(\varvec{x}_2),L_{10}(\varvec{x}_3),L_{11}(\varvec{x}_4) \}\) and \( \Pi _0(\varvec{x}_1,\varvec{x}_2,\varvec{x}_3,\varvec{x}_4) = \pi _0(\varvec{x}_1)\delta _{\varvec{x}_1}(\varvec{x}_2) \delta _{\varvec{x}_1}(\varvec{x}_3) \delta _{\varvec{x}_1}(\varvec{x}_4)\). For our choice of prior coupling (32), we effectively have a single distribution, for \(x \in \mathsf X\),

\[\Pi _{(1,1)}(x) \propto \max \{ L_{00}(x),L_{01}(x),L_{10}(x),L_{11}(x) \}\, \pi _0(x).\]

Note that any suitable prior which preserves the marginal as in (33) is admissible.

Let \(H_{\alpha ,j} = F_{\alpha , j+1}/F_{\alpha ,j}\) for some intermediate unnormalized distributions \(F_{\alpha ,1}, \dots , F_{\alpha ,J}=F_{\alpha }\), for example \(F_{\alpha ,j} = \textbf{L}_\alpha (\varvec{x})^{\tau _j} \Pi _0(\varvec{x})\), where the tempering parameters satisfy \(\tau _1=0\), \(\tau _j<\tau _{j+1}\) and \(\tau _J=1\) (for example \(\tau _j = (j-1)\tau _0\) with \(\tau _0 = 1/(J-1)\)). Denote by \(\Pi _{\alpha ,j}\) the normalized version of \(F_{\alpha ,j}\). Now let \(\varvec{\mathcal {M}}_{\alpha ,j}\) for \(j=2,\dots ,J\) be Markov transition kernels such that \((\Pi _{\alpha ,j} \varvec{\mathcal {M}}_{\alpha ,j})(d\varvec{x}) = \Pi _{\alpha ,j}(d\varvec{x})\), analogous to \(\mathcal {M}\), i.e. any suitable MCMC kernel with invariant distribution \(\Pi _{\alpha ,j}\) (Geyer 1992; Robert and Casella 1999; Cotter et al. 2013).
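
A bare-bones version of a tempered SMC sampler of this kind, written for a single one-dimensional target rather than the coupled space \(\mathsf X^{2^D}\), is sketched below. Assumptions: the schedule \(\tau _j = (j-1)/(J-1)\), multinomial resampling at every step, and random-walk Metropolis kernels that leave each tempered target invariant; the function name, tuning parameters and toy target are hypothetical. The product of the average incremental weights gives an unbiased estimate of the normalizing constant, in line with the unbiasedness stated in Proposition 1.

```python
import math
import random


def smc_tempering(log_lik, sample_prior, log_prior, n, J, step=0.5, n_mcmc=5, seed=1):
    """Tempered SMC estimates of Z = int L(x) pi_0(dx) and of the posterior mean.

    Intermediate targets F_j = L^{tau_j} pi_0 with tau_j = (j - 1) / (J - 1),
    multinomial resampling at every step, and random-walk Metropolis moves
    that leave the current tempered target invariant.
    """
    rng = random.Random(seed)
    d_tau = 1.0 / (J - 1)

    def log_target(x, tau):
        lp = log_prior(x)
        # Evaluate the likelihood only inside the prior support.
        return lp + tau * log_lik(x) if lp > -math.inf else -math.inf

    xs = [sample_prior(rng) for _ in range(n)]
    log_z = 0.0
    for j in range(1, J):
        tau = j * d_tau
        # Incremental weights H_j = F_{j+1} / F_j = L^{d_tau}.
        logw = [d_tau * log_lik(x) for x in xs]
        mx = max(logw)
        w = [math.exp(lw - mx) for lw in logw]
        log_z += mx + math.log(sum(w) / n)  # log of the mean incremental weight
        xs = rng.choices(xs, weights=w, k=n)  # multinomial resampling
        for _ in range(n_mcmc):
            for i, x in enumerate(xs):
                prop = x + step * rng.gauss(0.0, 1.0)
                log_acc = log_target(prop, tau) - log_target(x, tau)
                if math.log(1.0 - rng.random()) < log_acc:
                    xs[i] = prop
    return math.exp(log_z), sum(xs) / n


# Toy check (assumed example): prior U[-1, 1], L(x) = 1 + x, so Z = 1 and E_pi[x] = 1/3.
def log_prior_uniform(x):
    return -math.log(2.0) if -1.0 < x <= 1.0 else -math.inf


z_hat, mean_hat = smc_tempering(lambda x: math.log(1.0 + x),
                                lambda rng: rng.uniform(-1.0, 1.0),
                                log_prior_uniform, n=2000, J=11)
```

In the coupled setting, the same loop would run on \(\varvec{x} \in \mathsf X^{2^D}\) with \(\textbf{L}_\alpha \) in place of L.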

Algorithm 1 SMC sampler for coupled estimation of \(\Delta ( f_\alpha (\zeta _\alpha ) )\)

For \(j=1,\dots , J\) define

and then define

The following Assumption will be needed.

Assumption 1

Let \(J\in \mathbb {N}\) be given, and let \(\mathsf X\) be a Banach space. For each \(j\in \{1,\dots ,J\}\) there exists some \(C>0\) such that for all \((\alpha ,x)\in \mathbb {Z}_+^D\times \mathsf X\),

Then, we have the following convergence result (Del Moral 2004).

Proposition 1

Assume 1. Then for any \((J,p)\in \mathbb {N}\times (0,\infty )\) there exists a \(C>0\) such that for any \(N\in \mathbb {N}\), \(\psi : \mathsf X^{2^D} \rightarrow \mathbb R\) bounded and measurable, and \(\alpha \in \mathbb {Z}_+^D\),

In addition, the estimator is unbiased \(\mathbb {E}[F_{\alpha }^N(\psi )] = F_\alpha (\psi )\).

Now, we define the function \(\psi \) with respect to an arbitrary test function \(\zeta _\alpha \), as follows

\[\psi _{\zeta _{\alpha }}(\varvec{x}) = \frac{\sum _{k=1}^{2^D} \iota _k\, \zeta _{\varvec{\alpha }_k(\alpha )}(\varvec{x}_k)\, L_{\varvec{\alpha }_k(\alpha )}(\varvec{x}_k)}{\textbf{L}_{\alpha }(\varvec{x})}, \tag{38}\]

where \(\iota _k \in \{-1,1\}\) is the sign of the \(k^\textrm{th}\) term in \(\Delta f_\alpha \) (see Footnote 2). The function \(\psi _{\zeta _{\alpha }}\) gives the mixed difference of the quantity of interest \(\zeta _{\alpha }\) among \(2^D\) intermediate multi-indices. Of particular interest in our estimator are the functions \(\zeta _{\alpha } = \varphi _{\alpha }\), for arbitrary \(\varphi _\alpha \), and \(\zeta _{\alpha } = 1\).

Example 2

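(Mixed Difference) Following from Example 1, an example of the mixed difference of the quantity of interest \(\zeta _{(1,1)}\) constructed in (38) is given by

\[\psi _{\zeta _{(1,1)}}(\varvec{x}) = \frac{\zeta _{00}(\varvec{x}_1)L_{00}(\varvec{x}_1) - \zeta _{01}(\varvec{x}_2)L_{01}(\varvec{x}_2) - \zeta _{10}(\varvec{x}_3)L_{10}(\varvec{x}_3) + \zeta _{11}(\varvec{x}_4)L_{11}(\varvec{x}_4)}{\textbf{L}_{(1,1)}(\varvec{x})}.\]
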
Note that the signs of the terms in the mixed difference are \(\iota _1 = 1\), \(\iota _2 = -1\), \(\iota _3 = -1\) and \(\iota _4 = 1\).
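
Evaluating \(\psi _{\zeta _\alpha }\) at a coupled state only needs the \(2^D\) level likelihoods and the signs. The sketch assumes the weighted form \(\psi _{\zeta _\alpha }(\varvec{x}) = \sum _k \iota _k \zeta _{\varvec{\alpha }_k(\alpha )}(\varvec{x}_k) L_{\varvec{\alpha }_k(\alpha )}(\varvec{x}_k)/\textbf{L}_\alpha (\varvec{x})\), with \(\textbf{L}_\alpha \) the maximum of the level likelihoods as in Example 1. Under this form, integrating \(\psi \) against the unnormalized coupled target \(\textbf{L}_\alpha \Pi _0\) returns \(\Delta f_\alpha (\zeta _\alpha )\), because all coordinates coincide under \(\Pi _0\) and the factor \(\textbf{L}_\alpha \) cancels. The helper name and the likelihood values in the usage lines are hypothetical.

```python
def coupled_psi(zeta, lik, terms, xs):
    """psi(x) = sum_k sign_k * zeta(x_k) * L_{alpha_k}(x_k) / max_k L_{alpha_k}(x_k).

    terms : list of (multi-index alpha_k, sign iota_k)
    lik   : callable (alpha_k, x) -> L_{alpha_k}(x)
    xs    : coupled state (x_1, ..., x_{2^D}); under the prior coupling all x_k coincide
    """
    vals = [lik(beta, x) for (beta, _), x in zip(terms, xs)]
    big_l = max(vals)  # coupled likelihood: maximum over the levels
    num = sum(sign * zeta(x) * v for (_, sign), x, v in zip(terms, xs, vals))
    return num / big_l


# Ordering of Examples 1 and 2: x_1 <-> 00, x_2 <-> 01, x_3 <-> 10, x_4 <-> 11.
table = {(0, 0): 1.0, (0, 1): 2.0, (1, 0): 2.5, (1, 1): 4.0}  # hypothetical values
terms = [((0, 0), 1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
psi_one = coupled_psi(lambda x: 1.0, lambda beta, x: table[beta], terms, [0.5] * 4)
# psi_one = (1.0 - 2.0 - 2.5 + 4.0) / 4.0 = 0.125
```

Dividing by the maximum keeps \(\psi \) bounded whenever \(\zeta \) is, which is what Proposition 1 requires of its test functions.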

Following from Proposition 1 we have that

and there is a \(C>0\) such that

Now given \(\mathcal{I}\subset \mathbb {Z}_+^D\) and \(\{N_\alpha \}_{\alpha \in \mathcal{I}}\), and \(\varphi :\mathsf X\rightarrow \mathbb R\), for each \(\alpha \) run an independent SMC sampler as in Algorithm 1 with \(N_\alpha \) samples, and define the MIMC estimator as

where \(Z_\textrm{min}\) is a lower bound on Z.

A finer analysis than that provided in Proposition 1, which is needed to obtain rigorous MIMC complexity results, is given in Theorem 1, proven in Law et al. (2022).

Theorem 1

Assume 1. Then for any \(J\in \mathbb {N}\) there exists a \(C>0\) such that for any \(N\in \mathbb {N}\), \(\psi : \mathsf X^{2^D} \rightarrow \mathbb R\) bounded and measurable and \(\alpha \in \mathbb {Z}_+^D\)

where \(\psi _{\zeta _\alpha }(\varvec{x})\) is as (38).

Proof

The result is proven in Law et al. (2022). \(\square \)

3.2 Random sample size version

Consider drawing \(N/N_\textrm{min}\) i.i.d. samples \(\alpha _i \sim \textbf{p}\), where \(\textbf{p}\) is a probability distribution on \(\mathbb {Z}_+^D\) with \(\textbf{p}(\alpha ) =: p_{\alpha } > 0\), to be specified later. Define the allocations \(\textbf{A} \in \mathbb {Z}_+^{D \times N/N_\textrm{min}}\) by \(\textbf{A}_i = \alpha _i\), and the (scaled) counts for each \(\alpha \in \mathbb {Z}_+^D\) by \(N_\alpha = N_\textrm{min} \#\{ i; \alpha _i = \alpha \} \in \mathbb {Z}_+\), collectively denoted \(\textbf{N}\). Note that \(\mathbb {E}N_\alpha = N p_\alpha \) and, by the strong law of large numbers, \(N_\alpha /N \rightarrow p_\alpha \) almost surely as \(N \rightarrow \infty \).
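
The random allocation can be sketched as follows. As a hypothetical choice of \(\textbf{p}\), the sketch uses a product of geometric distributions, \(p_\alpha = \prod _i (1-q_i) q_i^{\alpha _i}\) with \(0 < q_i < 1\), which is positive for every \(\alpha \) as required; it also assumes N is an integer multiple of \(N_\textrm{min}\). The function name is illustrative.

```python
import random
from collections import Counter


def allocate(N, N_min, q, seed=0):
    """Draw N / N_min i.i.d. multi-indices alpha_i ~ p and return the scaled
    counts N_alpha = N_min * #{i : alpha_i = alpha} for the populated alpha.

    Hypothetical choice of p: p_alpha = prod_i (1 - q_i) q_i^{alpha_i}.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(N // N_min):
        alpha = []
        for qi in q:
            a = 0
            while rng.random() < qi:  # geometric: P(a = k) = (1 - qi) qi^k
                a += 1
            alpha.append(a)
        draws.append(tuple(alpha))
    counts = Counter(draws)
    return {alpha: N_min * c for alpha, c in counts.items()}
```

Only finitely many multi-indices are populated in any realization, so the infinite sum over \(\mathbb {Z}_+^D\) reduces to a finite one in practice.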

Now consider constructing a MISMC estimator of the type in (42) using a random number \(N_\alpha \) of samples, \(F_\alpha ^{N_\alpha }(\psi _{\zeta _\alpha })\), and recall the properties (40) and Theorem 1. Conditioned on \(\textbf{A}\), or equivalently conditioned on \(\textbf{N}\), these properties still hold. For \(\zeta : {\textsf{X}} \rightarrow \mathbb R\) define the estimator

3.3 Theoretical results

The following standard MISMC assumptions will be made.

Assumption 2

For any \(\zeta : \mathsf X\rightarrow \mathbb R\) bounded and Lipschitz, there exist \(C, \beta _i, s_i, \gamma _i >0\) for \(i=1,\dots , D\) such that for resolution vector \((2^{-\alpha _1},\dots , 2^{-\alpha _D})\), i.e. resolution \(2^{-\alpha _i}\) in the \(i^\textrm{th}\) direction, the following holds

- (B) \(\vert \Delta f_\alpha (\zeta ) \vert =: B_{\alpha } \le C \prod _{i=1}^D 2^{-\alpha _i s_i}\);
- (V) \(\int _\mathsf X(\Delta ( L_\alpha (x)\zeta _\alpha (x) ))^2 \pi _0(dx) =: V_{\alpha } \le C \prod _{i=1}^D 2^{-\alpha _i \beta _i}\);
- (C) \(\textrm{COST}(F_\alpha (\psi _{\zeta })) \propto \prod _{i=1}^D 2^{\alpha _i \gamma _i}\).

First, we need to examine the bias of the estimator (43).

Proposition 2

Assume 1, let \(\zeta : \mathsf X\rightarrow \mathbb R\), and suppose that \(p_{\alpha } >0\) for every multi-index \(\alpha \). Then the randomized MISMC estimator (43) is free from discretization bias, i.e.

Proof

The proof is given in Appendix A.1. \(\square \)
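
The mechanism behind Proposition 2 can be illustrated with a toy computation. The exact form of estimator (43) is not reproduced in this section; as an assumption, the sketch uses the weighting \(\sum _\alpha \frac{N_\alpha }{N p_\alpha } F_\alpha ^{N_\alpha }\) in the spirit of Rhee and Glynn, in one direction (D = 1, \(N_\textrm{min} = 1\)), with geometric \(p_\alpha \) and a hypothetical noisy but unbiased stand-in for each SMC estimate of \(\Delta f_\alpha \). Conditioned on the counts, the estimator has mean \(\sum _\alpha \frac{N_\alpha }{N p_\alpha }\Delta f_\alpha \), and since \(\mathbb {E}N_\alpha = N p_\alpha \) its overall mean is \(\sum _\alpha \Delta f_\alpha \), with no truncation.

```python
import random


def rmi_estimate(N, q, delta_f, noise, rng):
    """One draw of sum_alpha N_alpha / (N p_alpha) * Fhat_alpha in one direction (D = 1).

    alpha ~ p with p_alpha = (1 - q) q^alpha; Fhat_alpha is a hypothetical unbiased
    stand-in for an SMC estimate of Delta f_alpha built from N_alpha samples.
    """
    counts = {}
    for _ in range(N):  # N_min = 1 for simplicity
        a = 0
        while rng.random() < q:
            a += 1
        counts[a] = counts.get(a, 0) + 1
    total = 0.0
    for a, n_a in counts.items():
        p_a = (1.0 - q) * q ** a
        # Exact increment plus zero-mean noise whose variance decays like 1 / n_a.
        f_hat = delta_f(a) + noise(a) * rng.gauss(0.0, 1.0) / n_a ** 0.5
        total += n_a / (N * p_a) * f_hat
    return total


# Toy increments (assumed): Delta f_alpha = 2^{-alpha-1}, which sum to f = 1,
# so every finite truncation of the sum is biased but the randomized estimator is not.
rng = random.Random(42)
reps = [rmi_estimate(64, 0.6, lambda a: 2.0 ** (-a - 1), lambda a: 2.0 ** (-a), rng)
        for _ in range(2000)]
mean = sum(reps) / len(reps)
```

The average over replicates should be close to 1; the variance stays finite here because \(\sum _\alpha \Delta f_\alpha ^2/p_\alpha < \infty \) for this choice of q.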

Now that unbiasedness has been established, the next step is to examine the variance.

Proposition 3

Assume 1 and 2, let \(\zeta : \mathsf X\rightarrow \mathbb R\), and suppose that \(p_{\alpha } >0\) for every multi-index \(\alpha \). Then the variance of the randomized MISMC estimator (43) is given by

In particular, if

then one has the canonical convergence rate.

Proof

The proof is given in Appendix A.2. \(\square \)

The randomized MISMC ratio estimator is now defined for \(\varphi : \mathsf X\rightarrow \mathbb R\) by

where \({\widehat{F}}^\textrm{rMI}\) is defined in (43).

Before presenting the main result of the present work, we first recall the main results of Law et al. (2022), which are derived from Theorem 1. Law et al. (2022) considers two index sets for the original MISMC ratio estimator: the tensor product index set and the total degree index set. Compared to the tensor product index set, the total degree index set omits some expensive indices and consequently requires much looser conditions in the convergence theorem. The convergence result for the tensor product index set is given in Theorem 2 of Law et al. (2022), and that for the total degree index set is given in Theorem 3 of Law et al. (2022).

Theorem 2

Assume 1 and 2, with \(\sum _{j=1}^D \frac{\gamma _j}{s_j} \le 2\) and \(\beta _i>\gamma _i\) for \(i=1,\dots ,D\). Then for any \(\varepsilon >0\) and bounded and Lipschitz \(\varphi : \mathsf X\rightarrow \mathbb R\), it is possible to choose a tensor product index set \(\mathcal{I}_{L_1:L_D}:= \{\alpha \in \mathbb {Z}_{+}^{D}:\alpha _1\in \{0,...,L_1\},...,\alpha _D \in \{0,...,L_D\}\}\) and \(\{N_\alpha \}_{\alpha \in \mathcal{I}_{L_1:L_D}}\), such that for some \(C>0\) that is independent of \(\varepsilon \)

and \(\textrm{COST}({\widehat{\varphi }}^\textrm{MI}_{\mathcal{I}_{L_1:L_D}} ) \le C \varepsilon ^{-2}\), the canonical rate. The estimator \(\widehat{\varphi }^\textrm{MI}_{\mathcal{I}_{L_1:L_D}} \) is defined in equation (42).

Proof

The proof is given in Law et al. (2022). \(\square \)

Theorem 3

Assume 1 and 2, with \(\beta _i>\gamma _i\) for \(i=1,\dots ,D\). Then for any \(\varepsilon >0\) and bounded and Lipschitz \(\varphi : \mathsf X\rightarrow \mathbb R\), it is possible to choose a total degree index set \(\mathcal{I}_{L}:= \{\alpha \in \mathbb {Z}_{+}^{D}: \sum _{i=1}^{D} \delta _i\alpha _i \le L \}\), with \(\delta _i \in (0,1]\) and \(\sum _{i=1}^{D} \delta _i=1\), and \(\{N_\alpha \}_{\alpha \in \mathcal{I}_{L}}\), such that for some \(C>0\) that is independent of \(\varepsilon \)

and \(\textrm{COST}({\widehat{\varphi }}^\textrm{MI}_{\mathcal{I}_{L}} ) \le C \varepsilon ^{-2}\), the canonical rate. The estimator \(\widehat{\varphi }^\textrm{MI}_{\mathcal{I}_{L}} \) is defined in equation (42).

Proof

The proof is given in Law et al. (2022). \(\square \)

Theorem 4

Assume 1 and 2 (V,C), with \(\beta _i>\gamma _i\) for \(i=1,\dots ,D\). Then, for bounded and Lipschitz \(\varphi : \mathsf X\rightarrow \mathbb R\), it is possible to choose a probability distribution \(\textbf{p}\) on \(\mathbb {Z}_+^D\) such that for some \(C>0\) that is independent of N

and expected \(\textrm{COST}({\widehat{\varphi }}^\textrm{rMI}) \le C N\), i.e. the canonical rate. The estimator \({\widehat{\varphi }}^\textrm{rMI}\) is defined in equation (46).

Proof

The proof is given in Appendix A.3. \(\square \)

The notable differences between Theorem 4 and Theorems 2 and 3 are that (i) discretization bias does not appear, so neither the bias rates in Assumption 2 (B) nor the related constraint shown in Table 1 are required, and (ii) no index set \(\mathcal{I}\) needs to be selected, since the estimator sums over \(\alpha \in \mathbb {Z}_+^D\) (noting that only finitely many of these indices are populated in any realization).
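
A common way to choose \(\textbf{p}\) in randomized multilevel schemes is \(p_\alpha \propto \sqrt{V_\alpha /\textrm{COST}_\alpha }\), which, by the Cauchy-Schwarz inequality, minimizes the product of \(\sum _\alpha V_\alpha /p_\alpha \) (a proxy for the variance per sample) and \(\sum _\alpha p_\alpha \textrm{COST}_\alpha \) (the expected cost per sample). The sketch below assumes the rates of Assumption 2 (V, C) hold with equality and constant 1, which makes \(\textbf{p}\) a product of geometric distributions with ratios \(r_i = 2^{-(\beta _i+\gamma _i)/2}\), and evaluates both sums in closed form. It illustrates why \(\beta _i > \gamma _i\) appears in Theorem 4; it is not necessarily the distribution used in the paper's experiments, and the function name is hypothetical.

```python
import math


def geometric_p_summary(beta, gamma):
    """For p_alpha = prod_i (1 - r_i) r_i^{alpha_i}, r_i = 2^{-(beta_i + gamma_i)/2},
    V_alpha = prod_i 2^{-alpha_i beta_i} and COST_alpha = prod_i 2^{alpha_i gamma_i},
    return (sum_alpha V_alpha / p_alpha, sum_alpha p_alpha COST_alpha).

    Both sums factorize over directions into geometric series with common ratio
    2^{(gamma_i - beta_i)/2}, so each is finite exactly when beta_i > gamma_i.
    """
    var_sum, cost_sum = 1.0, 1.0
    for b, g in zip(beta, gamma):
        r = 2.0 ** (-(b + g) / 2.0)
        ratio = 2.0 ** ((g - b) / 2.0)  # common ratio of both series
        if ratio >= 1.0:
            return math.inf, math.inf
        var_sum *= 1.0 / ((1.0 - r) * (1.0 - ratio))
        cost_sum *= (1.0 - r) / (1.0 - ratio)
    return var_sum, cost_sum
```

With both sums finite, a sample budget N gives variance of order 1/N at expected cost of order N, i.e. MSE of order \(\textrm{COST}^{-1}\), the canonical rate in Theorem 4.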

4 Numerical results

The problems considered here are the same as in Law et al. (2022), and we intend to compare our rMISMC ratio estimator with the original MISMC ratio estimator.

The code used for the numerical results in this paper can be found at https://github.com/liangxinzhu/rMISMCRE.git.

4.1 Verification of assumption

We revisit here the verification of Assumption 2 for the 2D PDE and 2D LGP models. For the 1D PDE model, the assumption follows from the discussion of the 2D PDE model. Propositions 4, 5 and 6 and their proofs are given in Law et al. (2022).

We define the mixed Sobolev-like norms as

where \(A=\sum _{k\in \mathbb {Z}^2} a_k\phi _k \otimes \phi _k\), for the orthonormal basis \(\{\phi _k\}\) defined above (21), \(a_k = k_1^2k_2^2\), and \(\left\Vert \cdot \right\Vert \) is the \(L^2(\Omega )\) norm. Note that the approximation of the posteriors of the motivating problems have the form \(\exp (\Phi _{\alpha }(x))\) for some \(\Phi _{\alpha }:\mathsf X\rightarrow \mathbb R\).

Proposition 4

Let \(\mathsf X\) be a Banach space with \(D=2\) such that \(\pi _0(\mathsf X)=1\), with norm \(\left\Vert \cdot \right\Vert _{\mathsf X}\). For all \(\epsilon > 0\), there exists a \(C(\epsilon ) > 0\) such that the following holds for \(\Phi _{\alpha } = \log (L_{\alpha })\) given by (25) or (15), respectively:

The variance rates required in Assumption 2 (V) for the PDE and LGP models are verified using Propositions 5 and 6, respectively. However, it is difficult to verify the variance rate theoretically for the LGC model: since it involves double exponentials, such as \(\exp (\int \exp (x(z))dz)\), the Fernique theorem does not guarantee that such a term is finite. Instead we verify it numerically, as shown in Appendix B.

Proposition 5

Let \(u_{\alpha }\) be the solution of (2) at the resolution \(\alpha \), as described in Sect. 2.1.1, for a(x) given by (4) and uniformly over \(x \in [-1,1]^d\), and \(\textsf{f}(x) \in L^2(\Omega )\). Then there exists a \(C>0\) such that

Since \(L_{\alpha }(x) \le C < \infty \) in the PDE problem by Assumption 1, the constant in Proposition 4 can be made uniform over x, so the variance rate in Assumption 2 is obtained.

Proposition 6

Let \(x \sim \pi _0\), where \(\pi _0\) is a Gaussian process of the form (20) with spectral decay corresponding to (21), and let \(x_{\alpha }\) correspond to truncation on the index set \(\mathcal {A}_{\alpha } = \cap _{i=1}^2\{ \vert k_i \vert \le 2^{\alpha _i} \}\) as in (23). Then there is a \(C>0\) such that for all \(q<(\beta -1)/2\)

Furthermore, this rate is inherited by the likelihood with \(\beta _i=\beta \).

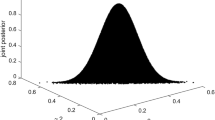

4.2 1D toy problem

We consider a 1D PDE toy problem that has previously been studied in Jasra et al. (2021a) and Law et al. (2022). Let the domain be \(\Omega =[0,1]\), with \(a = 1\) and \(\textsf{f}=x\) in PDE (2). This toy problem has the analytical solution \(u(x)(z) = \frac{x}{2}(z^2-z)\). Given the quantity of interest \(\varphi (x) = x^2\), we aim to compute the expectation \(\mathbb {E}[\varphi (x)]\). In the following implementation, we take observations at ten points in the interval [0, 1], namely 0.1, 0.2, ..., 0.9, 1, so the observations are generated by

\(y_i = u(x^*)(z_i) + \nu _i, \quad i = 1,\dots ,10,\)

where \([z_1,...,z_{10}] = [0.1, 0.2,...,0.9,1]\), \(x^*\) is sampled from \(U[-1,1]\) and \(\nu _i \sim \mathcal {N}(0,0.2^2)\). The reference solution is computed as in Jasra et al. (2021a) and Law et al. (2022). From Fig. 5, the weak rate is \(\alpha = 2\) and the variance (strong) rate is \(\beta = 4\). The cost rate is \(\gamma = 1\), because we use linear nodal basis functions in the FEM together with a tridiagonal solver, whose cost is linear in the number of mesh nodes.
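The data-generating process of this toy problem can be sketched as follows. This is a minimal sketch assuming the observation model \(y_i = u(x^*)(z_i) + \nu _i\) with the analytical solution above; it also computes \(\mathbb {E}[\varphi (x) \mid y]\) under the \(U[-1,1]\) prior by a simple self-normalized midpoint rule. The function names and the quadrature grid are hypothetical, and this is not necessarily how the reference solution of Jasra et al. (2021a) was obtained.

```python
import math
import random

# Observation locations z_1, ..., z_10 = 0.1, 0.2, ..., 0.9, 1.0
OBS_POINTS = [i / 10 for i in range(1, 11)]
NOISE_SD = 0.2  # nu_i ~ N(0, 0.2^2)


def solution(x, z):
    """Analytical solution u(x)(z) = (x / 2) * (z^2 - z) of the toy PDE."""
    return 0.5 * x * (z * z - z)


def generate_data(rng):
    """Draw x* ~ U[-1, 1] and y_i = u(x*)(z_i) + nu_i (assumed observation model)."""
    x_star = rng.uniform(-1.0, 1.0)
    y = [solution(x_star, z) + rng.gauss(0.0, NOISE_SD) for z in OBS_POINTS]
    return x_star, y


def log_likelihood(x, y):
    """Gaussian log-likelihood of the data y at parameter x, up to an additive constant."""
    return -sum((yi - solution(x, z)) ** 2 for yi, z in zip(y, OBS_POINTS)) / (2 * NOISE_SD ** 2)


def posterior_mean(phi, y, n_grid=20000):
    """Self-normalized midpoint-rule estimate of E[phi(x) | y] under the U[-1, 1] prior."""
    h = 2.0 / n_grid
    xs = [-1.0 + (j + 0.5) * h for j in range(n_grid)]
    log_w = [log_likelihood(x, y) for x in xs]
    shift = max(log_w)  # subtract the maximum to avoid underflow in exp
    w = [math.exp(lw - shift) for lw in log_w]
    # The unknown normalizing constant cancels in this ratio.
    return sum(wi * phi(x) for wi, x in zip(w, xs)) / sum(w)


rng = random.Random(0)
x_star, y = generate_data(rng)
reference = posterior_mean(lambda x: x * x, y)
```

The ratio form mirrors the structure of the ratio estimator: only the unnormalized posterior is ever evaluated.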

Comparison among three methods for the 1D inverse toy problem. We use 100 realisations to compute the MSE for each experiment and use the rate of convergence to compare the different methods. The red circle line is MLSMC with ratio estimator; the yellow diamond line is rMLSMC with ratio estimator; the purple square line is single-level sequential Monte Carlo. Fitted regression slopes: (1) MLSMC: \(-\)1.008; (2) rMLSMC: \(-\)1.016; (3) SMC: \(-\)0.812

From Fig. 1, the convergence behaviour of rMLSMC and MLSMC is nearly identical, and both converge at a rate of approximately \(-1\), which is the canonical rate. The difference in performance between (r)MLSMC and SMC lies in the rate of convergence: the rate for SMC is approximately \(-4/5\). For the same total computational cost, the MSE of (r)MLSMC is larger than that of SMC until the cost reaches \(10^4\). We conclude that, as expected, (r)MLSMC outperforms SMC in terms of the rate of convergence.
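The convergence rates quoted in the figure captions are, presumably, slopes of least-squares lines fitted to log MSE against log cost. A minimal sketch of such a fit is given below; the function name and the synthetic, noise-free data are illustrative only and are not the measurements reported in Fig. 1.

```python
import math


def fitted_rate(costs, mses):
    """Least-squares slope of log(MSE) against log(cost).

    A slope near -1 corresponds to the canonical complexity MSE^{-1};
    the base of the logarithm does not affect the slope.
    """
    xs = [math.log(c) for c in costs]
    ys = [math.log(m) for m in mses]
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


# Synthetic example: MSE = 3 * cost^(-4/5), the rate predicted for single-level SMC in 1D.
costs = [10.0 ** k for k in range(2, 7)]
print(fitted_rate(costs, [3.0 * c ** -0.8 for c in costs]))  # approximately -0.8
```

On real data the slope depends on which cost range is included, which is one reason the fitted values (e.g. \(-0.812\) for SMC) differ slightly from the theoretical ones.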

4.3 2D elliptic partial differential equation

The 1D analytical PDE problem serves only as a warm-up; we now apply rMISMC to higher-dimensional problems. We consider a 2D elliptic PDE without an analytical solution on the domain \(\Omega =[0,1]^2\), with \(\textsf{f} = 100\) and a(x) of the form

We take the prior to be the uniform distribution \(U[-1,1]^2\) and the quantity of interest to be \(\varphi (x) = x_1^2 + x_2^2\), which generalises the one-dimensional case. We take observations at the four points \(z_1,\dots ,z_4\) = (0.25, 0.25), (0.25, 0.75), (0.75, 0.25), (0.75, 0.75), and the corresponding observations are given by

\(y = \big [u_{\alpha }(x^{*})(z_1), \dots , u_{\alpha }(x^{*})(z_4)\big ]^{\top } + \nu ,\)

where \(u_{\alpha }(x^{*})\) is the approximate solution at \(\alpha = [10,10]\), \(x^*\) is sampled from \(U[-1,1]^2\) and \(\nu \sim \mathcal {N}(0,0.5^2\textbf{I}_{4})\). The 2D PDE solver used here is a modified version of the MATLAB toolbox IFISS (Elman et al. 2007).

Due to the zero Dirichlet boundary condition, the approximate solution is zero at \(\alpha _i=0\) and \(\alpha _i=1\) for \(i=1,2\), so we set the starting index to \(\alpha _1=\alpha _2 = 2\). From Fig. 6, the mixed rates are \(s_1=s_2=2\) and \(\beta _1=\beta _2=4\). Since we use bilinear basis functions and the MATLAB backslash solver, one has \(\gamma _1=\gamma _2=1\).

Comparison between two methods for the 2D non-analytical PDE. We use 200 realisations to compute the MSE for each experiment and use the rate of convergence to compare the different methods. The blue triangle line is MISMC with ratio estimator and tensor product set; the red circle line is MISMC with ratio estimator and total degree set; the yellow diamond line is rMISMC with ratio estimator. Fitted regression slopes: (1) MISMC_TP: \(-\)0.964; (2) MISMC_TD: \(-\)0.925; (3) rMISMC: \(-\)1.015

We consider two index sets for MISMC: the tensor product (TP) index set and the total degree (TD) index set. From Fig. 2, MISMC with either index set and rMISMC show similar convergence behaviour, with a rate of approximately \(-1\). Although single-level SMC is not shown in Fig. 2, its theoretical convergence rate drops from \(-4/5\) in 1D to \(-2/3\) in 2D, reflecting the curse of dimensionality. So far, the convergence behaviour of MISMC (with the TP or TD index set) and rMISMC is similar on the 1D and 2D PDE problems, with both achieving the canonical rate, but the following two statistical models reveal a difference.
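The single-level rates of \(-4/5\) in 1D and \(-2/3\) in 2D can be reproduced by balancing squared bias against variance. The worked sketch below assumes isotropic refinement, in which every coordinate is refined at the same level l, with squared bias \(\mathcal {O}(2^{-2al})\) for a weak rate a, \(\mathcal {O}(1)\) variance per sample, and cost \(\mathcal {O}(2^{gl})\) per sample, where g is the total cost exponent. Choosing \(N \approx 2^{2al}\) samples balances the two error terms, so the cost is \(2^{(2a+g)l}\) and \(\textrm{MSE} \propto \textrm{COST}^{-2a/(2a+g)}\). The function name is hypothetical.

```python
from fractions import Fraction


def single_level_rate(weak_rate, cost_rate):
    """Exponent r in MSE ~ COST^r for single-level (SMC) estimation.

    Assumes squared bias O(2^{-2 * weak_rate * l}), O(1) variance per sample
    and cost per sample O(2^{cost_rate * l}) at refinement level l.
    """
    a = Fraction(weak_rate)
    g = Fraction(cost_rate)
    return -2 * a / (2 * a + g)


# 1D toy problem: weak rate 2 (Fig. 5), gamma = 1.
print(single_level_rate(2, 1))      # -4/5
# 2D elliptic PDE, both coordinates refined together: s_i = 2, gamma_1 + gamma_2 = 2.
print(single_level_rate(2, 1 + 1))  # -2/3
```

The total cost exponent g grows with the dimension while the weak rate does not, which is why the single-level rate deteriorates, whereas (r)MISMC keeps the canonical rate \(-1\) under its assumptions.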

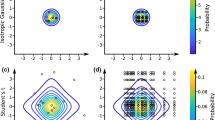

4.4 Log Gaussian Cox model

Now we consider the LGC model introduced in Sect. 2.2, with parameters \(\theta = (\theta _1,\theta _2,\theta _3) = (0,1,(33/\pi )^2)\). When applying rMISMC and MISMC to the 2D log Gaussian Cox model, we set the starting level to \(\alpha _1 = \alpha _2 = 5\), since the expected regularity only emerges for \(\alpha _1 \ge 5\) and \( \alpha _2 \ge 5\). Further, from Fig. 7, the mixed rates are \(s_1=s_2=0.8\) and \(\beta _1=\beta _2=1.6\). Since we use the FFT for the approximation, whose cost is \(\mathcal {O}(n \log n)\) in the number of grid points n, the asymptotic cost rate is \(\gamma _i=1 + \omega < \beta _i\) for arbitrarily small \(\omega >0\) and \(i=1,2\).

Comparison between two methods for the 2D LGC model. We use 200 realisations to compute the MSE for each experiment and use the rate of convergence to compare the different methods. The blue triangle line is MISMC with ratio estimator and tensor product set; the red circle line is MISMC with ratio estimator and total degree set; the yellow diamond line is rMISMC with ratio estimator. Fitted regression slopes: (1) MISMC_TP: \(-\)0.502; (2) MISMC_TD: \(-\)0.972; (3) rMISMC: \(-\)1.008

Comparison between two methods for the 2D LGP model. We use 200 realisations to compute the MSE for each experiment and use the rate of convergence to compare the different methods. The blue triangle line is MISMC with ratio estimator and tensor product set; the red circle line is MISMC with ratio estimator and total degree set; the yellow diamond line is rMISMC with ratio estimator. Fitted regression slopes: (1) MISMC_TP: \(-\)0.565; (2) MISMC_TD: \(-\)1.017; (3) rMISMC: \(-\)1.195

The convergence rates of MISMC TD and rMISMC are both approximately \(-1\), so both achieve the canonical complexity of MSE\(^{-1}\). However, the constant for MISMC TD is smaller than that for rMISMC. We set a relatively large minimum number of samples, \(N_0\), in the SMC sampler to mitigate the unexpectedly high variance caused by using too few samples. It is reasonable to expect a higher variance for the randomized method, however, since its estimator involves infinitely many terms rather than finitely many. MISMC TD achieves the canonical rate, but MISMC TP only achieves a sub-canonical rate. This is because the condition \( \sum _{i=1}^{2} \frac{\gamma _i}{s_i} \le 2\), which is needed for the tensor product index set but not for the total degree index set, is violated here: \(\sum _{i=1}^{2} \frac{\gamma _i}{s_i} \approx \frac{5}{2}\), with \(\gamma _i \approx 1\) and \(s_i = 0.8\). This shows that an unsuitable choice of index set in MISMC can reduce the canonical rate to a sub-canonical one, which highlights a benefit of rMISMC: it achieves the canonical rate without requiring an index set to be chosen in advance.
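The two index sets and the tensor-product condition can be made concrete with a short sketch. The definitions below are the standard unweighted ones from the MIMC literature, a tensor product set \(\{\alpha : \max _i \alpha _i \le L\}\) and a total degree set \(\{\alpha : \sum _i \alpha _i \le L\}\); the helper names and the unweighted form are simplifying assumptions, not necessarily the exact sets used in these experiments. The rates are those quoted in this section, with \(\gamma _i \approx 1\).

```python
from itertools import product


def tensor_product_set(level, dim=2):
    """Indices with max_i alpha_i <= level (hypothetical helper)."""
    return list(product(range(level + 1), repeat=dim))


def total_degree_set(level, dim=2):
    """Indices with sum_i alpha_i <= level (unweighted; hypothetical helper)."""
    return [a for a in product(range(level + 1), repeat=dim) if sum(a) <= level]


def tp_condition_holds(gammas, weak_rates):
    """Check sum_i gamma_i / s_i <= 2, the extra condition needed for the TP set."""
    return sum(g / s for g, s in zip(gammas, weak_rates)) <= 2


print(len(tensor_product_set(3)), len(total_degree_set(3)))  # 16 10
print(tp_condition_holds([1, 1], [2, 2]))      # 2D elliptic PDE: sum = 1, True
print(tp_condition_holds([1, 1], [0.8, 0.8]))  # LGC and LGP: sum = 2.5, False
```

The TD set discards the expensive "corner" indices where all \(\alpha _i\) are large simultaneously, which is what allows it to keep the canonical rate when the TP condition fails; rMISMC avoids the choice altogether by sampling indices from \(\textbf{p}\) on all of \(\mathbb {Z}_+^D\).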

4.5 Log Gaussian process model

We set the parameters to \(\theta = (\theta _1, \theta _2, \theta _3) = (0,1,(33/(2\pi ))^2)\) in the LGP model. As in the LGC setting, when applying rMISMC and MISMC to the 2D log Gaussian process model, we set the starting level to \(\alpha _1 = \alpha _2 = 5\), since the expected regularity only emerges for \(\alpha _1 \ge 5\) and \( \alpha _2 \ge 5\). Further, from Fig. 8, we set \(s_1=s_2=0.8\) and \(\beta _1=\beta _2=1.6\). As for the LGC model, the cost rate is \(\gamma _i=1 + \omega < \beta _i\) for arbitrarily small \(\omega >0\) and \(i=1,2\).

The LGP results can be interpreted in the same way as the LGC results: MISMC TP attains only a sub-canonical rate, and the constant for MISMC TD is smaller than that for rMISMC. However, the gap between the constants for rMISMC and MISMC TD is much larger for LGP than for LGC. There may be other, as yet unidentified, sources of high variance in rMISMC; this is the subject of ongoing investigation. It should also be noted that the LGP model is much more sensitive to the parameter values \(\theta =(\theta _1,\theta _2,\theta _3)\) than the LGC model.

References

Agapiou, S., Roberts, G.O., Vollmer, S.J.: Unbiased Monte Carlo: posterior estimation for intractable/infinite-dimensional models. arXiv preprint arXiv:1411.7713 (2014)

Bernardo, J., Berger, J., Dawid, A., et al.: Regression and classification using Gaussian process priors. Bayesian Stat. 6, 475 (1998)

Beskos, A., Jasra, A., Law, K.J.H., et al.: Multilevel sequential Monte Carlo samplers. Stoch. Process. Appl. 127(5), 1417–1440 (2017)

Beskos, A., Jasra, A., Law, K.J.H., et al.: Multilevel sequential Monte Carlo with dimension-independent likelihood-informed proposals. SIAM/ASA J. Uncertain. Quantif. 6(2), 762–786 (2018)

Cai, D., Adams, R.P.: Multi-fidelity Monte Carlo: a pseudo-marginal approach. arXiv preprint arXiv:2210.01534 (2022)

Chada, N.K., Franks, J., Jasra, A., et al.: Unbiased inference for discretely observed hidden Markov model diffusions. SIAM/ASA J. Uncertain. Quantif. 9(2), 763–787 (2021)

Chopin, N., Papaspiliopoulos, O.: An Introduction to Sequential Monte Carlo. Springer, Berlin (2020)

Cotter, S.L., Roberts, G.O., Stuart, A.M., et al.: MCMC methods for functions: modifying old algorithms to make them faster. Stat. Sci. 28(3), 424–446 (2013)

Cui, T., Jasra, A., Law, K.J.H.: Multi-index sequential Monte Carlo methods. Preprint (2018)

Del Moral, P.: Feynman-Kac formulae. In: Feynman-Kac Formulae. Springer, pp. 47–93 (2004)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 68(3), 411–436 (2006)

Dereich, S., Mueller-Gronbach, T.: General multilevel adaptations for stochastic approximation algorithms. arXiv preprint arXiv:1506.05482 (2015)

Diggle, P.J., Moraga, P., Rowlingson, B., et al.: Spatial and spatio-temporal log-Gaussian Cox processes: extending the geostatistical paradigm. Stat. Sci. 28(4), 542–563 (2013)

Dodwell, T.J., Ketelsen, C., Scheichl, R., et al.: A hierarchical multilevel Markov chain Monte Carlo algorithm with applications to uncertainty quantification in subsurface flow. SIAM/ASA J. Uncertain. Quantif. 3(1), 1075–1108 (2015)

Elman, H.C., Ramage, A., Silvester, D.J.: Algorithm 866: IFISS, a Matlab toolbox for modelling incompressible flow. ACM Trans. Math. Softw. (TOMS) 33(2), 14-es (2007)

Franks, J., Jasra, A., Law, K.J.H., et al.: Unbiased inference for discretely observed hidden Markov model diffusions. arXiv preprint arXiv:1807.10259 (2018)

Geyer, C.J.: Practical Markov chain Monte Carlo. Stat. Sci. 7(4), 473–483 (1992)

Giles, M.B.: Multilevel Monte Carlo methods. Acta Numer. 24, 259–328 (2015)

Glynn, P.W., Rhee, C.-H.: Exact estimation for Markov chain equilibrium expectations. J. Appl. Probab. 51(A), 377–389 (2014)

Griebel, M., Hegland, M.: A finite element method for density estimation with Gaussian process priors. SIAM J. Numer. Anal. 47(6), 4759–4792 (2010)

Haji-Ali, A.L., Nobile, F., Tempone, R.: Multi-index Monte Carlo: when sparsity meets sampling. Numer. Math. 132(4), 767–806 (2016)

Heng, J., Bishop, A.N., Deligiannidis, G., et al.: Controlled sequential Monte Carlo. Ann. Stat. 48(5), 2904–2929 (2020)

Heng, J., Jasra, A., Law, K.J.H., et al.: On unbiased estimation for discretized models. arXiv preprint arXiv:2102.12230 (2021)

Hoang, V.H., Schwab, C., Stuart, A.M.: Complexity analysis of accelerated MCMC methods for Bayesian inversion. Inverse Prob. 29(8), 085010 (2013)

Jacob, P.E., Thiery, A.H.: On nonnegative unbiased estimators. Ann. Stat. 43(2), 769–784 (2015)

Jasra, A., Kamatani, K., Law, K.J.H., et al.: Bayesian static parameter estimation for partially observed diffusions via multilevel Monte Carlo. SIAM J. Sci. Comput. 40(2), A887–A902 (2018)

Jasra, A., Kamatani, K., Law, K.J.H., et al.: A multi-index Markov chain Monte Carlo method. Int. J. Uncertain. Quantif. 8(1) (2018)

Jasra, A., Law, K.J.H., Yu, F.: Unbiased filtering of a class of partially observed diffusions. Adv. Appl. Probab. pp. 1–27 (2020)

Jasra, A., Law, K.J.H., Lu, D.: Unbiased estimation of the gradient of the log-likelihood in inverse problems. Stat. Comput. 31(3), 1–18 (2021)

Jasra, A., Law, K.J.H., Xu, Y.: Multi-index sequential Monte Carlo methods for partially observed stochastic partial differential equations. Int. J. Uncertain. Quantif. 11(3) (2021)

Kushner, H.J., Clark, D.S.: Stochastic Approximation Methods for Constrained and Unconstrained Systems, vol. 26. Springer, Berlin (2012)

Law, K., Stuart, A., Zygalakis, K.: Data Assimilation, p. 52. Springer, Cham (2015)

Law, K., Jasra, A., Bennink, R., et al.: Estimation and uncertainty quantification for the output from quantum simulators. Found. Data Sci. 1(2), 157–176 (2019)

Law, K.J.H., Walton, N., Yang, S., et al.: Multi-index sequential Monte Carlo ratio estimators for Bayesian inverse problems. arXiv preprint arXiv:2203.05351 (2022)

Lyne, A.M., Girolami, M., Atchadé, Y., et al.: On Russian roulette estimates for Bayesian inference with doubly-intractable likelihoods. Stat. Sci. 30(4), 443–467 (2015)

McLeish, D.: A general method for debiasing a Monte Carlo estimator. Monte Carlo Methods Appl. 17(4), 301–315 (2011)

Møller, J., Syversveen, A.R., Waagepetersen, R.P.: Log Gaussian Cox processes. Scand. J. Stat. 25(3), 451–482 (1998)

Del Moral, P., Jasra, A., Law, K.J.H., et al.: Multilevel sequential Monte Carlo samplers for normalizing constants. ACM Trans. Model. Comput. Simul. (TOMACS) 27(3), 1–22 (2017)

Murray, I., Adams, R., MacKay, D.: Elliptical slice sampling. In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, pp. 541–548 (2010)

Oliver, D.S., Reynolds, A.C., Liu, N.: Inverse Theory for Petroleum Reservoir Characterization and History Matching. Cambridge University Press, Cambridge (2008)

Peherstorfer, B., Willcox, K., Gunzburger, M.: Survey of multifidelity methods in uncertainty propagation, inference, and optimization. SIAM Rev. 60(3), 550–591 (2018)

Rasmussen, C.E.: Gaussian processes in machine learning. In: Summer School on Machine Learning, Springer, pp. 63–71 (2003)

Rhee, C.-H., Glynn, P.W.: A new approach to unbiased estimation for SDE’s. In: Proceedings of the 2012 Winter Simulation Conference (WSC), IEEE, pp. 1–7 (2012)

Rhee, C.-H., Glynn, P.W.: Unbiased estimation with square root convergence for SDE models. Oper. Res. 63(5), 1026–1043 (2015)

Riihimäki, J., Vehtari, A.: Laplace approximation for logistic Gaussian process density estimation and regression. Bayesian Anal. 9(2), 425–448 (2014)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951)

Robert, C.P., Casella, G.: Monte Carlo Statistical Methods, vol. 2. Springer, Berlin (1999)

Strathmann, H., Sejdinovic, D., Girolami, M.: Unbiased Bayes for big data: paths of partial posteriors. arXiv preprint arXiv:1501.03326 (2015)

Stuart, A.M.: Inverse problems: a Bayesian perspective. Acta Numer. 19, 451–559 (2010)

Tarantola, A.: Inverse Problem Theory and Methods for Model Parameter Estimation. SIAM, Philadelphia (2005)

Teng, M., Nathoo, F., Johnson, T.D.: Bayesian computation for log-Gaussian Cox processes: a comparative analysis of methods. J. Stat. Comput. Simul. 87(11), 2227–2252 (2017)

Tokdar, S.T.: Towards a faster implementation of density estimation with logistic Gaussian process priors. J. Comput. Graph. Stat. 16(3), 633–655 (2007)

Tokdar, S.T., Ghosh, J.K.: Posterior consistency of logistic Gaussian process priors in density estimation. J. Stat. Plan. Inference 137(1), 34–42 (2007)

Van Leeuwen, P.J., Cheng, Y., Reich, S.: Nonlinear Data Assimilation. Springer, Berlin (2015)

Vihola, M.: Unbiased estimators and multilevel Monte Carlo. Oper. Res. 66(2), 448–462 (2018)

Walter, C.: Point process-based Monte Carlo estimation. Stat. Comput. 27(1), 219–236 (2017)


Ethics declarations

Financial Interests

KJHL and XL gratefully acknowledge the support of IBM and EPSRC in the form of an Industrial Case Doctoral Studentship Award.


Appendices

Proofs

The proofs of the various results in the paper are presented here, along with restatements of the results.

1.1 Proof relating to Proposition 2

Proposition 2

Assume 1, let \(\zeta : \mathsf X\rightarrow \mathbb R\), and suppose that \(p_{\alpha } >0\) for every multi-index \(\alpha \). Then the randomized MISMC estimator (43) is free from discretization bias, i.e.

Proof

Using the law of iterated conditional expectations, and (40) conditioned on \(N_\alpha \), one has

\(\square \)
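The mechanism behind Proposition 2 can be illustrated on a toy problem. The sketch below assumes a product-form toy target \(f_\alpha = \prod _i (1-2^{-(\alpha _i+1)})\), whose mixed differences \(\Delta f_\alpha \) sum to the limit \(f = 1\), and replaces the SMC increment estimators by hypothetical unbiased noisy estimates. It draws N indices i.i.d. from a product-geometric \(\textbf{p}\), so the counts \(N_\alpha \) are multinomial, and forms \(\sum _\alpha \frac{N_\alpha }{N p_\alpha }\) times the average of the \(N_\alpha \) increment estimates. It is not the SMC-based estimator (43) itself, only an illustration of why the reweighting by \(1/(N p_\alpha )\) removes the discretization bias without truncating the index set.

```python
import math
import random
from collections import Counter

R = 0.6  # geometric parameter: p_alpha = prod_i (1 - R) * R**alpha_i (illustrative choice)


def increment_1d(k):
    """First difference g(k) - g(k - 1) of g(k) = 1 - 2^{-(k+1)}, with g(-1) = 0."""
    return 0.5 if k == 0 else 2.0 ** -(k + 1)


def mixed_increment(alpha):
    """Mixed difference Delta f_alpha of the product-form toy target (exact total: 1)."""
    return math.prod(increment_1d(k) for k in alpha)


def prob(alpha):
    """Probability p_alpha of alpha under independent geometric coordinates."""
    return math.prod((1 - R) * R ** k for k in alpha)


def draw_index(rng, dim=2):
    """Sample alpha in Z_+^dim with P(alpha_i = k) = (1 - R) * R**k."""
    alpha = []
    for _ in range(dim):
        k = 0
        while rng.random() < R:
            k += 1
        alpha.append(k)
    return tuple(alpha)


def randomized_estimate(n, rng):
    """Sum over alpha of N_alpha / (n p_alpha) times the mean of N_alpha increment estimates."""
    counts = Counter(draw_index(rng) for _ in range(n))  # multinomial counts N_alpha
    total = 0.0
    for alpha, n_alpha in counts.items():
        sd = 0.1 * 2.0 ** -sum(alpha)  # hypothetical noise level of the increment estimator
        noisy = [mixed_increment(alpha) + rng.gauss(0.0, sd) for _ in range(n_alpha)]
        total += n_alpha / (n * prob(alpha)) * (sum(noisy) / n_alpha)
    return total


rng = random.Random(1)
reps = [randomized_estimate(1000, rng) for _ in range(200)]
print(sum(reps) / len(reps))  # close to the exact limit 1
```

Taking the expectation conditionally on the counts first, and then using \(\mathbb {E}[N_\alpha ] = N p_\alpha \), recovers \(\sum _\alpha \Delta f_\alpha \), which is the same two-step argument as in the proof above.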

1.2 Proof relating to Proposition 3

Proposition 3

Assume 1 and 2, let \(\zeta : \mathsf X\rightarrow \mathbb R\), and suppose that \(p_{\alpha } >0\) for every multi-index \(\alpha \). Then the variance of the randomized MISMC estimator (43) is given by

In particular, if

then one has the canonical convergence rate.

Proof

One has

The diagonal and off-diagonal terms are treated separately. First, for the diagonal terms, we add and subtract \(\frac{N_\alpha }{N p_\alpha } \Delta f_\alpha (\zeta _\alpha )\) inside the square and use the triangle inequality to obtain

Since the counts \(N_\alpha \) are (proportional to) multinomial counts with probabilities \(\textbf{p}\), we have \(\mathbb {E}[N_{\alpha }^2] = Np_{\alpha }(1-p_{\alpha })+N^2p_{\alpha }^2\). Then, for the first term of (60), the same decomposition as in (56), together with Theorem 1 (conditioned on \(N_\alpha \)) and Assumption 2 (V), gives

For the second term of (60), applying Jensen’s inequality to \(\left( \Delta f_{\alpha }(\zeta _{\alpha })\right) ^2\), we have

Hence, the diagonal terms are bounded as follows

The off-diagonal terms are more subtle because of the correlation between \(N_\alpha \) and \(N_{\alpha '}\), which are (proportional to) counts from a multinomial distribution with \(N/N_\textrm{min}\) trials and probabilities \(\textbf{p}\), so that

Using the law of iterated conditional expectations

Line (65) follows from the conditional independence of \(F_\alpha ^{N_\alpha }(\psi _{\zeta _\alpha })\) and \(F_{\alpha '}^{N_{\alpha '}}(\psi _{\zeta _{\alpha '}})\) given \(\textbf{N} = \{N_\alpha \}_{\alpha \in \mathbb {Z}_+^D}\) and some simple algebra. Line (66) follows from (64) and Assumption 2 (V), along with Jensen’s inequality applied to \((\Delta f_\alpha (\zeta _\alpha ))^2\) and \((\Delta f_{\alpha '}(\zeta _{\alpha '}))^2\).

Furthermore,

Since \(\beta _i >0\), we have \(0< 1-2^{-\beta _i} <1\) and \(0< 1-2^{-\beta _i/2} <1\), so the geometric series over each \(\alpha _i\) converge, and we can find a constant C that bounds the last line. \(\square \)
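The two multinomial moment identities used in the proof, \(\mathbb {E}[N_\alpha ^2] = Np_\alpha (1-p_\alpha )+N^2p_\alpha ^2\) for the diagonal terms and \(\mathbb {E}[N_\alpha N_{\alpha '}] = N(N-1)p_\alpha p_{\alpha '}\) (equivalently \(\textrm{Cov}(N_\alpha ,N_{\alpha '}) = -Np_\alpha p_{\alpha '}\)) for \(\alpha \ne \alpha '\), can be checked by simulation. The sketch below takes \(N_\textrm{min} = 1\), so that the counts are exactly multinomial with N trials, and uses an arbitrary three-cell probability vector; both are illustrative choices, and the function names are hypothetical.

```python
import random
from collections import Counter


def multinomial_counts(n, probs, rng):
    """Counts N_0, ..., N_{m-1} of n i.i.d. categorical draws with cell probabilities probs."""
    draws = Counter(rng.choices(range(len(probs)), weights=probs, k=n))
    return [draws[i] for i in range(len(probs))]


def empirical_moments(n, probs, reps, rng):
    """Monte Carlo estimates of E[N_0^2] and E[N_0 N_1]."""
    sq = cross = 0.0
    for _ in range(reps):
        counts = multinomial_counts(n, probs, rng)
        sq += counts[0] ** 2
        cross += counts[0] * counts[1]
    return sq / reps, cross / reps


n, probs = 20, [0.5, 0.3, 0.2]
exact_sq = n * probs[0] * (1 - probs[0]) + n ** 2 * probs[0] ** 2  # N p(1 - p) + N^2 p^2
exact_cross = n * (n - 1) * probs[0] * probs[1]                    # N(N - 1) p p'
sq_hat, cross_hat = empirical_moments(n, probs, 20000, random.Random(2))
print(sq_hat, exact_sq, cross_hat, exact_cross)
```

The negative covariance is what makes the off-diagonal terms "more subtle": the counts are not independent, even though the SMC estimators are conditionally independent given the counts.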

1.3 Proof relating to Theorem 4

Theorem 4

Assume 1 and 2 (V,C), with \(\beta _i>\gamma _i\) for \(i=1,\dots ,D\). Then, for suitable \(\varphi : \mathsf X\rightarrow \mathbb R\), it is possible to choose a probability distribution \(\textbf{p}\) on \(\mathbb {Z}_+^D\) such that for some \(C>0\)

and expected \(\textrm{COST}({\widehat{\varphi }}^\textrm{rMI}) \le C N\), i.e. the canonical rate. The estimator \({\widehat{\varphi }}^\textrm{rMI}\) is defined in equation (46).

Proof

Since our unnormalized estimator is unbiased, we have

Then \(\mathbb {E}[({\hat{\varphi }}^{\text {rMI}} - \pi (\varphi ))^2]\) is bounded by C/N provided that \(\max _{\zeta \in \{\varphi ,1\}} \mathbb {E}\big [ ({\widehat{F}}^\textrm{rMI}(\zeta ) - f(\zeta ))^2 \big ]\) is \(\mathcal {O}(N^{-1})\).

Fitted convergence parameters for the 1D inverse toy problem. Empirical estimates of the strong and weak convergence parameters. All plots use 100 realizations with 1000 samples per level, up to the maximum level \(L_{\max }=6\), in each experiment. (Left) Base-2 logarithm of the mean of the increments, with a reference line of slope \(-2\); (Right) base-2 logarithm of the variance of the increments, with a reference line of slope \(-4\)

By Proposition 3, we need

for \(\mathbb {E}\big [ ({\widehat{F}}^\textrm{rMI}(\zeta ) - f(\zeta ))^2 \big ]\) to be \(\mathcal {O}(N^{-1})\). Additionally, we choose the distribution by a simple constrained minimization of the expected cost per sample

Since \(p_{\alpha }>0\), one finds that

Fitted convergence parameters for the 2D inverse elliptic PDE problem. Empirical estimates of the strong and weak convergence parameters. All plots use 20 realizations with 1000 samples per index, up to the maximum multi-index \(\alpha _1=\alpha _2=6\), in each experiment. (Left) Base-2 logarithm of the mean of the unnormalized mixed increments (increments of increments); (Right) base-2 logarithm of the variance of the unnormalized mixed increments

Fitted convergence parameters for the 2D LGC model. Empirical estimates of the strong and weak convergence parameters. All plots use 20 realizations with 1000 samples per index, from the minimum multi-index \(\alpha _1=\alpha _2=5\) to the maximum multi-index \(\alpha _1=\alpha _2=8\), in each experiment. (Left) Base-2 logarithm of the mean of the unnormalized mixed increments (increments of increments); (Right) base-2 logarithm of the variance of the unnormalized mixed increments

Fitted convergence parameters for the 2D LGP model. Empirical estimates of the strong and weak convergence parameters. All plots use 20 realizations with 1000 samples per index, from the minimum multi-index \(\alpha _1=\alpha _2=5\) to the maximum multi-index \(\alpha _1=\alpha _2=8\), in each experiment. (Left) Base-2 logarithm of the mean of the unnormalized mixed increments (increments of increments); (Right) base-2 logarithm of the variance of the unnormalized mixed increments

is one suitable choice of probabilities satisfying the two conditions stated in (70) and (71). The expected cost, \(\textrm{COST}({\widehat{\varphi }}^\textrm{rMI}) = \sum _{\alpha \in \mathbb {Z}_+^D} N p_\alpha \prod _{i=1}^D 2^{\alpha _i \gamma _i}\), and the quantity in (70) are then given, respectively, by

Since \(\gamma _i-\beta _i <0\) by assumption, the expected cost is bounded by CN and the quantity in (70) is finite. Hence, \(\mathbb {E}\big [ ({\widehat{F}}^\textrm{rMI}(\zeta ) - f(\zeta ))^2 \big ]\) is \(\mathcal {O}(N^{-1})\). \(\square \)
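The role of the condition \(\beta _i > \gamma _i\) can be seen numerically. The sketch below assumes the product-form choice \(p_\alpha \propto \prod _i 2^{-\alpha _i(\beta _i+\gamma _i)/2}\), which is what minimizing the expected cost per sample \(\sum _\alpha p_\alpha c_\alpha \) subject to a fixed variance term \(\sum _\alpha v_\alpha /p_\alpha \) gives by the Cauchy–Schwarz inequality, with \(c_\alpha = \prod _i 2^{\alpha _i\gamma _i}\) and \(v_\alpha = \prod _i 2^{-\alpha _i\beta _i}\). Since the displayed choice is missing from this version, treat this as an assumption rather than the paper's exact formula. Because this \(\textbf{p}\) factorizes into geometric distributions, both sums are products of geometric series with common ratio \(2^{(\gamma _i-\beta _i)/2}\), finite exactly when \(\gamma _i < \beta _i\). The function names and the value \(\omega = 0.05\) are illustrative.

```python
import math


def geometric_ratio(beta, gamma):
    """r = 2^{-(beta + gamma)/2}, so that p(k) = (1 - r) r^k on k = 0, 1, 2, ..."""
    return 2.0 ** (-(beta + gamma) / 2)


def expected_cost_and_variance_sum(betas, gammas, k_max=200):
    """Truncated sums of p_alpha * c_alpha and v_alpha / p_alpha over alpha in Z_+^D.

    Both sums factorize over coordinates, so each is a product of 1D series.
    """
    cost = var = 1.0
    for beta, gamma in zip(betas, gammas):
        r = geometric_ratio(beta, gamma)
        cost *= sum((1 - r) * r ** k * 2.0 ** (k * gamma) for k in range(k_max))
        var *= sum(2.0 ** (-k * beta) / ((1 - r) * r ** k) for k in range(k_max))
    return cost, var


def closed_form(betas, gammas):
    """Limits of the sums above; infinite unless gamma_i < beta_i for every i."""
    cost = var = 1.0
    for beta, gamma in zip(betas, gammas):
        r = geometric_ratio(beta, gamma)
        q = 2.0 ** ((gamma - beta) / 2)  # common ratio of both series
        if q >= 1:
            return math.inf, math.inf
        cost *= (1 - r) / (1 - q)
        var *= 1 / ((1 - r) * (1 - q))
    return cost, var


# LGC/LGP-type rates: beta_i = 1.6, gamma_i = 1 + omega with omega = 0.05 (illustrative).
print(expected_cost_and_variance_sum([1.6, 1.6], [1.05, 1.05]))
print(closed_form([1.6, 1.6], [1.05, 1.05]))
print(closed_form([1.6, 1.6], [1.7, 1.7]))  # gamma > beta: (inf, inf)
```

Because the same ratio \(2^{(\gamma _i-\beta _i)/2}\) governs both sums, no choice of this product form can keep both the cost and the variance finite once \(\gamma _i \ge \beta _i\) in some coordinate.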

Figures

The plots of the best log-linear fits of the convergence parameters for the various problems are shown in Figs. 5, 6, 7 and 8.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, X., Yang, S., Cotter, S.L. et al. A randomized multi-index sequential Monte Carlo method. Stat Comput 33, 97 (2023). https://doi.org/10.1007/s11222-023-10249-9


DOI: https://doi.org/10.1007/s11222-023-10249-9