Abstract

We discuss estimating the probability that the sum of nonnegative independent and identically distributed random variables falls below a given threshold, i.e., \(\mathbb {P}(\sum _{i=1}^{N}{X_i} \le \gamma )\), via importance sampling (IS). We are particularly interested in the rare event regime when N is large and/or \(\gamma \) is small. Exponential twisting is a popular technique for similar problems that, in most cases, compares favorably to other estimators. However, it has some limitations: (i) it assumes knowledge of the moment-generating function of \(X_i\), and (ii) sampling under the new IS probability density function (PDF) is not straightforward and might be expensive. The aim of this work is to propose an alternative IS PDF that approximately yields, for certain classes of distributions and in the rare event regime, at least the same performance as the exponential twisting technique while avoiding these limitations. The first class includes distributions whose PDFs are asymptotically equivalent, as \(x \rightarrow 0\), to \(bx^{p}\), for \(p>-1\) and \(b>0\). For this class, the Gamma IS PDF with appropriately chosen parameters approximately retrieves, in the rare event regime corresponding to small values of \(\gamma \) and/or large values of N, the same performance as the estimator based on exponential twisting. In the second class, we consider the Log-normal setting, whose PDF vanishes at zero faster than any polynomial, and we show numerically that a Gamma IS PDF with optimized parameters clearly outperforms the exponential twisting IS PDF. Numerical experiments validate the efficiency of the proposed estimator in delivering highly accurate estimates in the regime of large N and/or small \(\gamma \).

1 Introduction

Efficient estimation of rare event probabilities finds various applications in the performance evaluation/prediction of wireless communication systems operating over fading channels (Simon and Alouini 2005). In particular, the left tail of the cumulative distribution function (CDF) of sums of nonnegative independent and identically distributed (i.i.d.) random variables is an example of a rare event probability of practical importance. More specifically, the outage probability at the output of equal gain combining (EGC) and maximum ratio combining (MRC) receivers can be expressed as the CDF of the sum of fading channel envelopes (for EGC) or of fading channel gains (for MRC) (Ben Rached et al. 2016).

The accurate estimation of the left tail of the CDF of sums of random variables requires variance reduction techniques, because the naive Monte Carlo sampler needs a number of samples roughly inversely proportional to the target probability to reach a fixed relative accuracy (Kroese et al. 2011; Rubino and Tuffin 2009; Asmussen and Glynn 2007). Moreover, the existing closed-form approximations (Xiao et al. Aug. 2019; Zhu and Cheng Oct. 2019; Ermolova Jul. 2008; Hu and Beaulieu Feb. 2005; Lopez-Salcedo Mar. 2009; Da Costa and Yacoub 2009; Renzo et al. Apr. 2009) are inaccurate in the tail of the CDF. The literature is rich in variance reduction techniques developed to efficiently estimate rare event probabilities corresponding to the left tail of the CDF of sums of random variables, see Asmussen et al. (Sep. 2016), Ben Rached et al. (2016), Ben Rached et al. (2018), Botev et al. (2019), Gulisashvili and Tankov (Feb. 2016), Alouini et al. (2018) and Beaulieu and Luan (2019) and the references therein. For instance, the authors in Asmussen et al. (Sep. 2016) used exponential twisting, a popular importance sampling (IS) technique, to propose a logarithmically efficient estimator of the CDF of the sum of i.i.d. Log-normal random variables. Logarithmic efficiency is a widely used efficiency criterion in rare event simulation (Ben Rached et al. 2016). Let \(\hat{\alpha }\) be an unbiased estimator of \(\alpha \), i.e., \(\mathbb {E}[\hat{\alpha }]=\alpha \). We say that \(\hat{\alpha }\) is logarithmically efficient if \(\lim _{\alpha \rightarrow 0}\frac{\log (\mathbb {E}[\hat{\alpha }^2])}{\log (\alpha ^2)}=1\). In Gulisashvili and Tankov (Feb. 2016), the CDF of the sum of correlated Log-normal random variables was considered. The authors developed an IS estimator based on shifting the mean of the corresponding multivariate Gaussian distribution and proved that, under mild assumptions, it is logarithmically efficient.
Based on Gulisashvili and Tankov (Feb. 2016) and under the assumption that the left tail of the sum distribution is determined by only one dominant component, the authors in Alouini et al. (2018) combined IS with a control variate technique to construct an estimator with the asymptotically vanishing relative error property, which is the most desirable property in rare event simulation (Kroese et al. 2011). In Ben Rached et al. (2016), two unified IS approaches were developed using the hazard rate twisting concept (Juneja and Shahabuddin Apr. 2002; Ben Rached et al. 2016) to efficiently estimate the CDF of sums of independent random variables. The first estimator is shown to be logarithmically efficient, whereas the second achieves the bounded relative error property for sums of i.i.d. random variables under an assumption that was shown to hold for most of the practical distributions used to model the amplitude/power of fading channels. Bounded relative error is a stronger criterion than logarithmic efficiency. We say that an unbiased estimator \(\hat{\alpha }\) of \(\alpha \) achieves the bounded relative error property if \(\frac{\mathrm {var}[\hat{\alpha }]}{\alpha ^2}\) remains bounded as \(\alpha \) goes to 0, see Ben Rached et al. (2016).

The efficiency of the above-mentioned estimators was studied with the number of summands N kept fixed. Recall that the objective is to efficiently estimate the probability that the sum of nonnegative i.i.d. random variables falls below a given threshold, i.e., \(\mathbb {P}(\sum _{i=1}^{N}{X_i} \le \gamma )\). A close look at these estimators shows that their efficiency results were proved as the rarity parameter \(\gamma \) decreases with N fixed. However, in most cases, the efficiency of the existing estimators deteriorates considerably when N increases. This is the main motivation of the present work: we aim to introduce a highly accurate estimator of \(\mathbb {P}(\sum _{i=1}^{N}{X_i} \le \gamma )\) in the rare event regime when N is large and/or \(\gamma \) is small.

It is well acknowledged that the exponential twisting technique compares favorably, in most cases, to existing estimators. It is the optimal IS probability density function (PDF) in the sense that it minimizes the Kullback–Leibler (KL) divergence with respect to the underlying PDF under certain constraints (Ridder and Rubinstein 2007). However, it has some limitations. First, it requires the knowledge of the moment-generating function of \(X_i\), \(i=1,2,\cdots ,N\). Second, sampling according to the new IS PDF is not straightforward and might be expensive. Moreover, the twisting parameter is not available in a closed-form expression and needs to be estimated numerically. Motivated by the above limitations, we summarize the main contributions of the present work as follows:

-

We propose an alternative IS estimator that approximately yields, for certain classes of distributions and in the rare event regime, at least the same efficiency as the one given by the estimator based on exponential twisting and at the same time does not introduce the above limitations.

-

The first class includes distributions whose PDFs vanish at zero polynomially. For this class of distributions, the Gamma IS PDF with appropriately chosen parameters approximately retrieves, in the regime of rare events corresponding to small values of \(\gamma \) and/or large values of N, the same performance as the exponential twisting PDF.

-

The above result does not apply to the Log-normal setting, as the corresponding PDF approaches zero faster than any polynomial. We show numerically that in this setting, the Gamma IS PDF with optimized parameters achieves a substantial amount of variance reduction compared to the one given by exponential twisting.

-

Numerical comparisons with some of the existing estimators validate that the proposed estimator can deliver highly accurate estimates with low computational cost in the rare event regime corresponding to large N and/or small \(\gamma .\)

The paper is organized as follows. In Sect. 2, we define the problem setting and motivate the work. In Sect. 3, we introduce the exponential twisting approach and present its limitations. The main contribution of this work is presented in Sect. 4, where we show that the Gamma IS PDF with optimized parameters retrieves approximately, for certain classes of distributions and in the rare event regime, at least the same performance as the exponential twisting technique. Finally, numerical experiments are shown in Sect. 5 to compare the proposed estimator with various existing estimators.

2 Problem setting and motivation

Let \(X_1,X_2,\cdots ,X_N\) be i.i.d. nonnegative random variables with common PDF \(f_X(\cdot )\) and CDF \(F_{X}(\cdot )\). Let \(\varvec{x}=(x_1,\cdots ,x_N)^{t}\) and \(h_\mathbf{X }(\varvec{x})=\prod _{i=1}^{N}{f_X(x_i)}\) be the joint PDF of the random vector \((X_1,\cdots ,X_N)^{t}\). We consider the estimation of
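
\[ \alpha (\gamma ,N)=\mathbb {P}_{h_\mathbf{X }}\left( \sum _{i=1}^{N}{X_i}\le \gamma \right) , \tag{1} \]
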
where \(\mathbb {P}_{h_\mathbf{X }} (\cdot )\) is the probability under which the random vector \(\mathbf{X} =(X_1,\cdots ,X_N)^{t}\) is distributed according to \(h_\mathbf{X }(\cdot )\), i.e., for any Borel measurable set A in \(\mathbb {R}^N\), we have \(\mathbb {P}_{h_\mathbf{X }} \left( \mathbf{X} \in A\right) =\int _{A} h_\mathbf{X }(\varvec{x})d\varvec{x}\). As an application, the quantity of interest \(\alpha (\gamma ,N)\) could represent the outage probability at the output of EGC and MRC wireless receivers operating over fading channels. In fact, the instantaneous signal-to-noise ratio (SNR) at EGC or MRC diversity receivers is given as follows (Ben Rached et al. 2016)

where N is the number of diversity branches, \(\frac{E_s}{N_0}\) is the SNR per symbol at the transmitter, \(R_i\), \(i=1,2,...,N\), is the fading channel envelope, and

The outage probability is defined as the probability that the SNR falls below a given threshold. Using (2), it can easily be shown that the outage probability at the output of EGC and MRC receivers can be expressed as the CDF of the sum of fading channel envelopes (for EGC) or of fading channel gains (for MRC), and hence can be written as in (1).

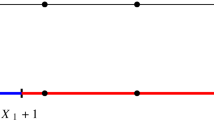

We focus on the estimation of \(\alpha (\gamma ,N)\) when N is large and/or \(\gamma \) is small. Before delving into the core of the paper, we illustrate via a simple example that the efficiency of an IS estimator that performs well as \(\gamma \) decreases with N fixed can deteriorate when N increases. We first write the quantity of interest as
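
\[ \alpha (\gamma ,N)=\left( F_X(\gamma )\right) ^{N}\,\mathbb {P}_{h_{\varvec{w}}}\left( \sum _{i=1}^{N}{w_i}\le 1\right) =\left( F_X(\gamma )\right) ^{N}\,\mathbb {E}_{h_{\varvec{w}}}\left[ \mathbf{1} _{\left( \sum _{i=1}^{N}{w_i}\le 1\right) }\right] , \tag{4} \]
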
where \(w_i\) is equal in distribution to \(\frac{X_i}{\gamma }\) conditional on the event \(\{X_i \le \gamma \}\), \(i=1,2,\cdots ,N\), and \(h_{\varvec{w}}(\varvec{w})=\prod _{i=1}^{N}{f_w(w_i)}\), where \(f_{w}(\cdot )\) is the PDF of \(w_i\), i.e., the conditional PDF of \(\frac{X_i}{\gamma }\) given the event \(\{ \frac{X_i}{\gamma } \le 1\}\), given by \(f_w(w)=\frac{\gamma f_X(\gamma w)}{F_X(\gamma )} \mathbf{1} _{(w\le 1)}\). This decomposition holds because, the \(X_i\) being nonnegative, the event \(\{\sum _{i=1}^{N}{X_i}\le \gamma \}\) implies \(X_i\le \gamma \) for every i. Here \(\mathbb {E}_{h_{\varvec{w}}}[\cdot ]\) denotes the expectation under \(h_{\varvec{w}}(\cdot )\). The estimator is then obtained by estimating the right-hand side of (4) with the naive Monte Carlo method
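
\[ \hat{\alpha }(\gamma ,N)=\frac{\left( F_X(\gamma )\right) ^{N}}{M}\sum _{k=1}^{M}{\mathbf{1} _{\left( \sum _{i=1}^{N}{w_i^{(k)}}\le 1\right) }}, \]
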
where \((w_1^{(k)},\cdots ,w_N^{(k)})\), \(k=1,\cdots ,M\), are independent realizations sampled according to \(h_{\varvec{w}}(\cdot )\). This estimator can be understood as IS whose IS PDF is the truncation of the underlying PDF to the hypercube \([0,\gamma ]^N\). For fixed N, it can be proved that this estimator achieves the bounded relative error property with respect to the rarity parameter \(\gamma \) for distributions satisfying \(f_{X}(x) \sim b x^{p}\) as x approaches zero, with \(p>-1\) and \(b>0\), see Ben Rached et al. (2018). This property means that the squared coefficient of variation, defined as the ratio between the variance of an estimator and its squared mean, remains bounded as \(\gamma \rightarrow 0\), see Kroese et al. (2011). In other words, the number of samples required to meet a fixed accuracy requirement remains bounded regardless of how small \(\alpha (\gamma ,N)\) is. The question now is what happens when N is large.
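A minimal Python sketch of the estimator based on (4), assuming for illustration only that the \(X_i\) are standard exponential (rate 1). Under this assumption the truncated variables can be drawn by inverting their CDF, and the exact value of \(\alpha (\gamma ,N)\) is an Erlang probability (equivalently a Poisson upper tail) available for checking. The function names are hypothetical.

```python
import numpy as np
from math import exp, factorial

def erlang_cdf(gamma_, n, terms=60):
    """Exact P(X_1 + ... + X_n <= gamma_) for i.i.d. Exp(1) variables.

    Equals the Poisson(gamma_) upper tail P(Poisson >= n); summing the tail directly
    avoids cancellation when gamma_ is small (assumes gamma_ is of order 1 or less).
    """
    return sum(exp(-gamma_) * gamma_**j / factorial(j) for j in range(n, n + terms))

def truncated_mc(gamma_, n, m, rng):
    """Estimate alpha(gamma, N) via (4): naive MC on the w_i, i.e. IS with the truncated PDF."""
    F = 1.0 - exp(-gamma_)                    # F_X(gamma) for Exp(1)
    u = rng.random((m, n))
    x = -np.log1p(-u * F)                     # inverse CDF of Exp(1) restricted to [0, gamma_]
    w = x / gamma_                            # w_i = X_i / gamma given X_i <= gamma
    p_hat = np.mean(w.sum(axis=1) <= 1.0)     # estimate of P_{h_w}(sum w_i <= 1)
    return F**n * p_hat, p_hat

rng = np.random.default_rng(0)
for n in (2, 6, 10):
    est, p_hat = truncated_mc(0.5, n, 100_000, rng)
    print(n, est, erlang_cdf(0.5, n), p_hat)
```

For \(\gamma =0.5\) and \(N=10\), the conditional probability \(\mathbb {P}_{h_{\varvec{w}}}(\sum _{i=1}^{N}{w_i}\le 1)\) is of order \(10^{-6}\) under this assumption, so \(10^5\) samples typically contain no hit at all, in line with the deterioration discussed in this section.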

Using the Chernoff bound, we obtain for all \(\eta >0\)
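
\[ \mathbb {P}_{h_{\varvec{w}}}\left( \sum _{i=1}^{N}{w_i}\le 1\right) =\mathbb {P}_{h_{\varvec{w}}}\left( \exp \left( -\eta \sum _{i=1}^{N}{w_i}\right) \ge \exp (-\eta )\right) \le \exp (\eta )\left( \mathbb {E}_{f_w}\left[ \exp (-\eta w)\right] \right) ^{N}, \]
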
where \(\mathbb {E}_{f_w}[\cdot ]\) denotes the expectation under \(f_{w}(\cdot )\). The squared coefficient of variation of the estimator \(\hat{\alpha }(\gamma ,N)\) based on (4), computed with a single sample (\(M=1\)), is given by
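
\[ \frac{\mathrm {var}[\hat{\alpha }(\gamma ,N)]}{\alpha (\gamma ,N)^2}=\frac{1-\mathbb {P}_{h_{\varvec{w}}}\left( \sum _{i=1}^{N}{w_i}\le 1\right) }{\mathbb {P}_{h_{\varvec{w}}}\left( \sum _{i=1}^{N}{w_i}\le 1\right) } \quad (M=1). \]
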
In particular, for \(\eta =1\), the squared coefficient of variation, which is asymptotically equal to \(1/ \mathbb {P}_{h_{\varvec{w}}}(\sum _{i=1}^{N}{w_i}\le 1) \) in the rare event regime, is asymptotically lower bounded by \(\exp \left( -1-N \log \left( \mathbb {E}_{f_w}[\exp (-w)]\right) \right) \). Since \(w\) is nonnegative and not almost surely zero, \(\mathbb {E}_{f_w}[\exp (-w)]<1\), so this lower bound grows exponentially in N, which shows that the efficiency of the estimator deteriorates when N is large.
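To make the growth rate concrete, the sketch below evaluates the \(\eta =1\) bound under the same illustrative assumption \(X_i\sim \) Exp(1). Then \(f_w(w)=\gamma e^{-\gamma w}/(1-e^{-\gamma })\) on \([0,1]\), the expectation \(\mathbb {E}_{f_w}[\exp (-w)]\) is explicit, and each additional summand multiplies the bound by \(1/\mathbb {E}_{f_w}[\exp (-w)]\) (about 1.5 for \(\gamma =0.5\)). The function names are hypothetical.

```python
from math import exp, log

def mgf_w_at_minus_one(gamma_):
    """E_{f_w}[exp(-w)] for X_i ~ Exp(1) (illustrative assumption).

    Here f_w(w) = gamma e^{-gamma w} / (1 - e^{-gamma}) on [0, 1], so the integral is explicit.
    """
    return gamma_ * (1.0 - exp(-(1.0 + gamma_))) / ((1.0 - exp(-gamma_)) * (1.0 + gamma_))

def scv_lower_bound(gamma_, n):
    """Chernoff bound with eta = 1: 1 / P_{h_w}(sum w_i <= 1) >= exp(-1 - N log E[exp(-w)])."""
    return exp(-1.0 - n * log(mgf_w_at_minus_one(gamma_)))

for n in (5, 10, 20, 40):
    print(n, scv_lower_bound(0.5, n))
```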

3 Exponential twisting

In this section, we review the popular exponential twisting IS approach and enumerate its limitations in estimating the quantity of interest. When applicable, it is well acknowledged that the exponential twisting technique is expected to produce a substantial amount of variance reduction and to compare favorably, in most cases, to other estimators (Asmussen et al. Sep. 2016). For distributions with light right tails and under the i.i.d. assumption, the estimator based on exponential twisting can be proved, under some regularity assumptions, to be logarithmically efficient when the probability of interest is either \(\mathbb {P}_{h_\mathbf{X }}(\sum _{i=1}^{N}{X_i}>\gamma )\) and \(\gamma \rightarrow +\infty \) or \(\mathbb {P}_{h_\mathbf{X }}(\sum _{i=1}^{N}{X_i}>\gamma N)\) and \(N \rightarrow +\infty \) (Asmussen and Glynn 2007). In the left-tail setting, which is the region of interest in the present work, the exponential twisting was shown in Asmussen et al. (Sep. 2016) to achieve the logarithmic efficiency property in the case of i.i.d. Log-normal random variables when the probability of interest is \(\mathbb {P}_{h_\mathbf{X }}(\sum _{i=1}^{N}{X_i} <N\gamma )\) and either \(N\rightarrow +\infty \) or \(\gamma \rightarrow 0\).

In Ridder and Rubinstein (2007), the exponential twisting technique was also shown to be optimal in the sense that it minimizes the KL divergence with respect to the underlying PDF under the constraint that the rare set \(\{\varvec{x} \in \mathbb {R}^{N}_{+}, \text { such that } \sum _{i=1}^{N}{x_i} \le \gamma \} \) is no longer rare. The IS PDF is selected to be the solution of the following optimization problem, see Ridder and Rubinstein (2007),

The solution of this problem is given as (see Ridder and Rubinstein (2007) for a more general setting)
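
\[ h_\mathbf{X }^*(\varvec{x})=\frac{\exp \left( \theta ^*\sum _{i=1}^{N}{x_i}\right) h_\mathbf{X }(\varvec{x})}{\mathbb {E}_{h_\mathbf{X }}\left[ \exp \left( \theta ^*\sum _{i=1}^{N}{X_i}\right) \right] }, \]
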
and \(\theta ^*\) solves

\[ \mathbb {E}_{h_\mathbf{X }^*}\left[ \sum _{i=1}^{N}{X_i}\right] =\gamma . \]

Hence, by writing \(h_\mathbf{X }^*(\varvec{x})=\prod _{i=1}^{N}{f_X^*(x_i)}\), we observe that the optimal density is obtained by exponentially twisting each univariate PDF \(f_X(\cdot )\)

\[ f_X^*(x)=\frac{\exp (\theta ^* x)\,f_X(x)}{M(\theta ^*)},\quad x\ge 0, \]

with \(M(\theta )=\mathbb {E}_{f_X}[\exp (\theta X)]\), where the optimal twisting parameter \(\theta ^*\) satisfies

\[ \frac{M'(\theta ^*)}{M(\theta ^*)}=\frac{\gamma }{N}. \]

Since the left-tail of sums of random variables is considered in this work, we have that \(\theta ^* \rightarrow -\infty \) as \(\gamma \rightarrow 0\) and/or \(N \rightarrow +\infty \) (Asmussen et al. Sep. 2016). Using the exponential twisting technique, the IS estimator of \(\alpha (\gamma ,N)\) using M i.i.d. samples of \(\mathbf{X} \) from \(h_\mathbf{X }^*(\cdot )\) is given as follows
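
\[ \hat{\alpha }_{M}^{*}(\gamma ,N)=\frac{1}{M}\sum _{k=1}^{M}{\mathbf{1} _{\left( \sum _{i=1}^{N}{X_i^{(k)}}\le \gamma \right) }\left( M(\theta ^*)\right) ^{N}\exp \left( -\theta ^*\sum _{i=1}^{N}{X_i^{(k)}}\right) }. \]
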
However, the exponential twisting technique has some restrictive limitations. The main one is that sampling according to \(f_X^*(\cdot )\) is not straightforward. One generally needs an acceptance–rejection technique, whose cost becomes prohibitive when the acceptance probability is small; in such a case, the overall computational cost can even exceed that of the naive Monte Carlo method. There are other, less critical, drawbacks. First, computations are much simpler if the moment-generating function \(M(\theta )\) is known in closed form, which is not the case in general. Second, the twisting parameter \(\theta ^*\) does not, in general, have a closed-form expression and must be approximated numerically.
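The exponential twisting estimator is easy to write down when everything is explicit. The sketch below assumes \(X_i\sim \) Exp(1), a case chosen precisely because it escapes the limitations listed above: \(M(\theta )=1/(1-\theta )\) for \(\theta <1\), the condition \(M'(\theta ^*)/M(\theta ^*)=1/(1-\theta ^*)=\gamma /N\) gives \(\theta ^*=1-N/\gamma \), and \(f_X^*(\cdot )\) is again exponential with rate \(N/\gamma \), so no acceptance–rejection step is needed. For a general \(f_X(\cdot )\) none of these simplifications is available. The function names are hypothetical.

```python
import numpy as np
from math import exp, factorial

def exp_twisting_exp1(gamma_, n, m, rng):
    """Exponential-twisting IS estimate of P(X_1+...+X_n <= gamma_) for i.i.d. Exp(1)."""
    theta = 1.0 - n / gamma_                        # M'(theta)/M(theta) = 1/(1 - theta) = gamma/N
    rate = 1.0 - theta                              # f_X^* is the Exp(N/gamma) PDF
    x = rng.exponential(scale=1.0 / rate, size=(m, n))
    s = x.sum(axis=1)
    log_lr = -n * np.log(1.0 - theta) - theta * s   # log[ M(theta)^N exp(-theta * s) ]
    z = np.where(s <= gamma_, np.exp(log_lr), 0.0)
    return z.mean(), z.var() / z.mean() ** 2        # estimate and empirical per-sample SCV

def erlang_cdf(gamma_, n, terms=60):
    """Exact value for the check: the Poisson(gamma_) upper tail P(Poisson >= n)."""
    return sum(exp(-gamma_) * gamma_**j / factorial(j) for j in range(n, n + terms))

rng = np.random.default_rng(0)
est, scv = exp_twisting_exp1(0.5, 10, 100_000, rng)
print(est, erlang_cdf(0.5, 10), scv)
```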

4 Gamma family as IS PDF

The objective of this paper is to propose an alternative IS PDF that approximately yields, for certain classes of distributions that include most of the common distributions and in the rare event regime corresponding to large N and/or small \(\gamma \), at least the same performance as the exponential twisting technique and at the same time does not introduce serious limitations. We distinguish three scenarios depending on how the PDF \(f_X(\cdot )\) approaches zero.

4.1 \(f_X(x) \sim b\) as x goes to 0 and \(b>0\) is a constant

Recall that the exponential twisting IS PDF satisfies

\[ f_X^*(x)=\frac{\exp (\theta ^* x)\,f_X(x)}{M(\theta ^*)},\quad x\ge 0, \]

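with \(\theta ^* \rightarrow -\infty \) as \(\gamma \rightarrow 0\) and/or \(N \rightarrow +\infty \). Therefore, since \(f_X(x)\sim b\) with \(b>0\), the factor \(f_X(x)\) is close to the constant b wherever \(\exp (\theta ^* x)\) carries non-negligible mass. Replacing \(f_X(x)\) by b and letting \(\tilde{M}(\theta )=\int _{0}^{\infty }{\exp (\theta x)dx}=-\frac{1}{\theta }\) for \(\theta <0\), we instead consider the following IS PDF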
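
\[ \tilde{f}_X(x)=\frac{\exp (\theta x)}{\tilde{M}(\theta )}=-\theta \exp (\theta x),\quad x\ge 0,\ \theta <0. \]
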
We choose \(\theta =\tilde{\theta }\) such that \(\frac{\tilde{M}^{'}(\tilde{\theta })}{\tilde{M}(\tilde{\theta })}=\frac{\gamma }{N}\). Since \(\frac{\tilde{M}^{'}(\theta )}{\tilde{M}(\theta )}=-\frac{1}{\theta }\), we obtain \(\tilde{\theta }=-\frac{N}{\gamma }\). To conclude, when \(f_X(x)\sim b\) with \(b>0\), we propose as IS PDF the exponential distribution with rate \(\frac{N}{\gamma }\).
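A quick numerical check of the approximation \(\theta ^*\approx \tilde{\theta }=-N/\gamma \), assuming for illustration the half-normal PDF \(f_X(x)=\sqrt{2/\pi }\,e^{-x^2/2}\), which satisfies \(f_X(x)\sim b\) with \(b=\sqrt{2/\pi }\). Its moment-generating function is \(M(\theta )=2e^{\theta ^2/2}\Phi (\theta )\), hence \(M'(\theta )/M(\theta )=\theta +\phi (\theta )/\Phi (\theta )\), which is increasing in \(\theta \). The sketch solves \(M'(\theta ^*)/M(\theta ^*)=\gamma /N\) by bisection using only the standard library; the function names and the bracket are our own choices.

```python
from math import erfc, exp, pi, sqrt

def halfnormal_mgf_slope(theta):
    """M'(theta)/M(theta) = theta + phi(theta)/Phi(theta) for the half-normal PDF."""
    phi = exp(-0.5 * theta * theta) / sqrt(2.0 * pi)
    big_phi = 0.5 * erfc(-theta / sqrt(2.0))      # standard normal CDF at theta
    return theta + phi / big_phi

def theta_star_halfnormal(gamma_, n, iters=200):
    """Bisection for M'(theta*)/M(theta*) = gamma/N; the slope increases with theta.

    The bracket [-1.5 N/gamma, 0] is adequate for moderate N/gamma (roughly below 25);
    beyond that, exp and erfc underflow in double precision at the lower end.
    """
    c = gamma_ / n
    lo, hi = -1.5 / c, 0.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if halfnormal_mgf_slope(mid) < c:
            lo = mid                              # root lies above mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for n in (2, 5, 10):
    theta_tilde = -n / 0.5
    print(n, theta_star_halfnormal(0.5, n), theta_tilde)
```

The relative gap between \(\theta ^*\) and \(\tilde{\theta }\) shrinks as \(N/\gamma \) grows, as expected from \(\theta ^*\rightarrow -\infty \).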

4.2 \(f_X(x)=x^{p} g(x)\) with \(g(x) \sim b\) as x goes to 0, \(p>-1\), and \(b>0\) is a constant

Using the same methodology as in Sect. 4.1, the IS PDF that we consider is
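
\[ \tilde{f}_X(x)=\frac{x^{p}\exp (\theta x)}{\tilde{M}(\theta )},\quad x\ge 0,\ \theta <0. \tag{7} \]
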
Therefore, the new PDF corresponds to the Gamma PDF with shape parameter \(p+1\) and scale parameter \(-1/\theta \). The normalizing constant is \(\tilde{M}(\theta )=\frac{\Gamma (p+1)}{(-\theta )^{p+1}}\). Hence, the value \(\theta \) is chosen to be equal to \(\tilde{\theta }\) such that \(\frac{\tilde{M}^{'}(\tilde{\theta })}{\tilde{M}(\tilde{\theta })}=\frac{\gamma }{N}\) and is given by

\[ \tilde{\theta }=-\frac{(p+1)N}{\gamma }. \tag{8} \]

Using the Gamma IS PDF in (7), the proposed IS estimator of \(\alpha (\gamma ,N)\) using M i.i.d. samples of \(\mathbf{X} \) from \(\tilde{h}_\mathbf{X }(\varvec{x})=\prod _{i=1}^{N}{\tilde{f}_{X}(x_i)}\) is
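
\[ \hat{\alpha }_{M}(\gamma ,N)=\frac{1}{M}\sum _{k=1}^{M}{\mathbf{1} _{\left( \sum _{i=1}^{N}{X_i^{(k)}}\le \gamma \right) }\prod _{i=1}^{N}{\frac{f_X\left( X_i^{(k)}\right) }{\tilde{f}_X\left( X_i^{(k)}\right) }}}. \]
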
In Table 1, we provide a non-exhaustive list of distributions that belong to Sect. 4.2. (Note that distributions in Sect. 4.2 include those in Sect. 4.1.) These distributions are among the most used distributions to model the amplitudes and powers of wireless communications fading channels.
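A sketch of the estimator with the Gamma IS PDF in (7) and (8), assuming Weibull summands with shape k and scale \(\lambda \), i.e., \(f_X(x)=\frac{k}{\lambda }\left( \frac{x}{\lambda }\right) ^{k-1}\exp (-(x/\lambda )^k)\) (this parameterization is our assumption; the one in Table 1 may differ). Near zero \(f_X(x)\approx \frac{k}{\lambda ^k}x^{k-1}\), so \(p=k-1\) and the IS PDF is Gamma with shape k and scale \(-1/\tilde{\theta }=\gamma /(kN)\). For \(k=1\), \(\lambda =1\) the summands are Exp(1), which gives an exact value to compare against. The function names are hypothetical.

```python
import numpy as np
from math import exp, factorial, lgamma, log

def weibull_logpdf(x, k, lam):
    """log f_X for the Weibull(k, lam) PDF (k/lam) (x/lam)^(k-1) exp(-(x/lam)^k)."""
    return log(k / lam) + (k - 1.0) * np.log(x / lam) - (x / lam) ** k

def gamma_is_weibull(gamma_, n, m, k, lam, rng):
    """Gamma IS estimate of P(X_1+...+X_n <= gamma_) with p = k - 1 in (7)-(8)."""
    shape = k                                 # p + 1
    scale = gamma_ / (n * shape)              # -1/theta_tilde = gamma / ((p + 1) N)
    x = rng.gamma(shape, scale, size=(m, n))
    log_g = (shape - 1.0) * np.log(x) - x / scale - lgamma(shape) - shape * log(scale)
    log_lr = (weibull_logpdf(x, k, lam) - log_g).sum(axis=1)
    z = np.where(x.sum(axis=1) <= gamma_, np.exp(log_lr), 0.0)
    return z.mean(), z.var() / z.mean() ** 2  # estimate and empirical per-sample SCV

rng = np.random.default_rng(0)
exact_exp1 = sum(exp(-0.5) * 0.5**j / factorial(j) for j in range(10, 70))  # k = 1: Exp(1)
print(gamma_is_weibull(0.5, 10, 100_000, 1.0, 1.0, rng)[0], exact_exp1)
print(gamma_is_weibull(0.5, 12, 100_000, 1.5, 1.0, rng))
```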

Remark 1

It is worth mentioning that for distributions satisfying \(f_X(x)=x^{p} g(x)\) with \(g(x) \sim b\) as x goes to 0, \(p>-1\), and \(b>0\) is a constant, the proposed approach with the Gamma IS PDF in (7) with parameters p and \(\tilde{\theta }\) in (8) achieves approximately, as \(\gamma \) decreases to 0 and/or N increases, the same performance as the one given by the exponential twisting without introducing serious limitations. Let \(A_1\) and \(A_2\) be the second moments of the proposed and the exponential twisting estimators, respectively. Then, the ratio between \(A_1\) and \(A_2\) has the following expression
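
\[ \frac{A_1}{A_2}=\frac{\left( \tilde{M}(\tilde{\theta })\right) ^{N}\int _{\{\sum _{i=1}^{N}x_i\le \gamma \}}\exp \left( -\tilde{\theta }\sum _{i=1}^{N}{x_i}\right) \prod _{i=1}^{N}{x_i^{p}g^{2}(x_i)}\,d\varvec{x}}{\left( M(\theta ^*)\right) ^{N}\int _{\{\sum _{i=1}^{N}x_i\le \gamma \}}\exp \left( -\theta ^*\sum _{i=1}^{N}{x_i}\right) \prod _{i=1}^{N}{x_i^{p}g(x_i)}\,d\varvec{x}}. \tag{9} \]
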
First observe that \(M(\theta )=\int _{0}^{\infty }{\exp (\theta x)x^p g(x)dx}\) is well approximated by \(b\tilde{M}(\theta )=b \int _{0}^{\infty }{\exp (\theta x)x^p dx}\) for negative values of \(\theta \) with sufficiently large magnitude, since the factor \(\exp (\theta x)\) then concentrates the integral near \(x=0\), where \(g(x)\approx b\). Moreover, recall that \(\theta ^*\) and \(\tilde{\theta }\) go to \(-\infty \) as either \(\gamma \rightarrow 0\) or \(N \rightarrow \infty \), and that \(\theta ^*\) and \(\tilde{\theta }\) satisfy \(\frac{M'(\theta ^*)}{M(\theta ^*)}=\frac{\gamma }{N}\) and \(\frac{\tilde{M}'(\tilde{\theta })}{\tilde{M}(\tilde{\theta })}=\frac{\gamma }{N}\), respectively. Thus, as \(\gamma \rightarrow 0\) and/or \(N \rightarrow \infty \), \(\theta ^*\) is well approximated by \(\tilde{\theta }\), and hence \(M(\theta ^*)\) is well approximated by \(b \tilde{M}(\tilde{\theta })\). Finally, using these two approximations and the fact that \(g(x) \sim b\) as x goes to 0, we conclude from (9) that \(A_1\) is approximately equal to \(A_2\) when \(\gamma \) goes to 0. For large values of N, the same conclusion follows from observing that \(\mathbb {E}_{f_{X}^*}[X_i]=\mathbb {E}_{\tilde{f}_X}[X_i]=\frac{\gamma }{N}\), \(i=1,2,\cdots ,N\): when sampled according to either IS PDF, the random variables \(X_1,X_2,\cdots ,X_N\) take small values for sufficiently large N, so that \(g(X_i)\approx b\).
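As a sanity check on Remark 1, the approximation becomes an identity in one special case, assumed here purely for illustration: \(X_i\) Gamma distributed with shape \(p+1\) and unit rate, i.e., \(f_X(x)=x^{p}e^{-x}/\Gamma (p+1)\), so that \(g(x)=e^{-x}/\Gamma (p+1)\sim b=1/\Gamma (p+1)\). Then

\[
\begin{aligned}
M(\theta )&=(1-\theta )^{-(p+1)},\qquad \frac{M'(\theta ^*)}{M(\theta ^*)}=\frac{p+1}{1-\theta ^*}=\frac{\gamma }{N}\ \Longrightarrow \ \theta ^*=1-\frac{(p+1)N}{\gamma }=\tilde{\theta }+1,\\
f_X^*(x)&\propto x^{p}\exp \left( -(1-\theta ^*)x\right) =x^{p}\exp \left( -\frac{(p+1)N}{\gamma }x\right) =x^{p}\exp (\tilde{\theta }x)\propto \tilde{f}_X(x).
\end{aligned}
\]

Both IS PDFs are the Gamma PDF with shape \(p+1\) and scale \(\gamma /((p+1)N)\), so \(A_1=A_2\) exactly; the exponential case \(p=0\) is included. The shift \(\theta ^*-\tilde{\theta }=1\) is negligible compared with \(|\tilde{\theta }|=(p+1)N/\gamma \) in the rare event regime.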

4.3 The Log-normal case

Distributions that do not approach 0 polynomially are much more difficult to handle and need to be tackled on a case-by-case basis. In this work, we consider the case of the sum of i.i.d. standard Log-normal random variables. The density decreases to 0 faster than any polynomial, and thus the Gamma distribution with fixed shape parameter will not recover the results given by the exponential twisting technique. Note that in Asmussen et al. (Sep. 2016), the exponential twisting technique was applied to the sum of i.i.d. standard Log-normals by (i) providing an unbiased estimator of the moment-generating function, (ii) approximating the value of \(\theta \), and (iii) using acceptance–rejection to sample from the IS PDF.

The main difficulty is that the PDF of the Log-normal distribution does not have a Taylor expansion at \(x=0\). The first estimator we propose is based on truncating the support \([0,+\infty )\) and working only on \([a,+\infty )\) with \(a=\delta \gamma /N\). This allows the use of a Taylor expansion at \(x=a\). This procedure, however, introduces a bias that needs to be controlled. We show numerically that this estimator performs better than the one based on exponential twisting. Moreover, we observe that, in the regime of rare events, the proposed estimator achieves approximately the same performance as the Gamma IS PDF with shape parameter equal to 2. This is the main motivation behind introducing a second estimator whose IS PDF is a Gamma PDF with optimized parameters. The numerical results show that the second estimator achieves substantial variance reduction with respect to the first estimator.

4.3.1 Biased estimator

We rewrite the quantity of interest as
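
\[ \alpha (\gamma ,N)=\left( 1-F_X\left( \tfrac{\delta \gamma }{N}\right) \right) ^N \mathbb {P}_{h_\mathbf{X }} \left( \sum _{i=1}^{N}{X_i}\le \gamma \,\Big |\,X_i>\tfrac{\delta \gamma }{N}, \forall i\right) +\mathbb {P}_{h_\mathbf{X }}\left( \sum _{i=1}^{N}{X_i}\le \gamma ,\ \min _{1\le i\le N}X_i\le \tfrac{\delta \gamma }{N}\right) , \tag{10} \]
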
where \(\delta \) is a fixed value belonging to [0, 1). The first factor on the right-hand side has a known closed-form expression. Let \(\bar{f}_X(\cdot )\) be the PDF of \(X_i |\{X_i >\frac{\delta \gamma }{N}\}\), \(i=1,2,\cdots ,N\), whose expression is given as follows:
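
\[ \bar{f}_X(x)=\frac{f_X(x)}{1-F_X\left( \frac{\delta \gamma }{N}\right) }\,\mathbf{1} _{\left( x>\frac{\delta \gamma }{N}\right) }. \]
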
Next, we write the second factor on the right-hand side of (10) as follows:
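
\[ \mathbb {P}_{h_\mathbf{X }} \left( \sum _{i=1}^{N}{X_i}\le \gamma \,\Big |\,X_i>\tfrac{\delta \gamma }{N}, \forall i\right) =\mathbb {P}_{\bar{h}_\mathbf{X }}\left( \sum _{i=1}^{N}{X_i}\le \gamma \right) , \]
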
with \(\bar{h}_\mathbf{X }(\varvec{x})=\prod _{i=1}^{N}{\bar{f}_{X}(x_i)}\). The exponential twisting IS PDF is then given by
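
\[ \bar{f}_X^{*}(x)=\frac{\exp (\theta x)\,\bar{f}_X(x)}{\mathbb {E}_{\bar{f}_X}\left[ \exp (\theta X)\right] }. \]
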
Now, by using the Taylor expansion of \(\bar{f}_{X}(\cdot )\) at the point \(x=\delta \gamma /N\), we write
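
\[ \bar{f}_X(x)=\bar{f}_X\left( \tfrac{\delta \gamma }{N}\right) +\bar{f}_X^{'}\left( \tfrac{\delta \gamma }{N}\right) \left( x-\tfrac{\delta \gamma }{N}\right) +\frac{1}{2}\bar{f}_X^{''}\left( \xi _{x,\delta ,N}\right) \left( x-\tfrac{\delta \gamma }{N}\right) ^{2},\quad x>\tfrac{\delta \gamma }{N}, \]
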
where \(\xi _{x,\delta ,N}\) is between \(\frac{\delta \gamma }{N}\) and x. Hence, the approximate exponential twisting IS PDF is given by
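
\[ \tilde{f}_X(x)=\frac{\exp (\theta x)\left( \bar{f}_X+\bar{f}_X^{'}\left( x-\frac{\delta \gamma }{N}\right) \right) }{\tilde{M}(\theta )},\quad x>\frac{\delta \gamma }{N}, \tag{11} \]
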
with the notation \(\bar{f}_X=\bar{f}_X(\frac{\delta \gamma }{N})\) and \(\bar{f}_X^{'}=\bar{f}_X^{'}(\frac{\delta \gamma }{N})\). We assume that \(\frac{\delta \gamma }{N}\) is strictly less than \(\exp (-1)\) to ensure that \(\bar{f}_X^{'}>0\). This assumption is not restrictive, as we are interested in the rare event regime corresponding to N large and/or \(\gamma \) small. Through a simple computation, we get
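
\[ \tilde{M}(\theta )=\exp \left( \frac{\theta \delta \gamma }{N}\right) \left( -\frac{\bar{f}_X}{\theta }+\frac{\bar{f}_X^{'}}{\theta ^{2}}\right) ,\quad \theta <0. \]
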
The value of \(\theta \) that solves \(\frac{\tilde{M}^{'}(\theta )}{\tilde{M}(\theta )}=\frac{\gamma }{N}\) is given by
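
\[ \theta =-\frac{\left( \bar{f}_X-c\bar{f}_X^{'}\right) +\sqrt{\left( \bar{f}_X-c\bar{f}_X^{'}\right) ^{2}+8c\bar{f}_X\bar{f}_X^{'}}}{2c\bar{f}_X}, \]
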
with \(c=\frac{\gamma }{N}(1-\delta )\). The remaining part is to sample from \(\tilde{f}_X(\cdot )\). To do this, we write
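
\[ \tilde{f}_X(x)=\frac{\exp (\theta \delta \gamma /N)}{\tilde{M}(\theta )}\left( -\frac{\bar{f}_X}{\theta }\,\tilde{f}_1(x)+\frac{\bar{f}_X^{'}}{\theta ^{2}}\,\tilde{f}_2(x)\right) , \]
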
where \(\tilde{f}_1(x)=-\frac{\theta \exp (\theta x)}{\exp (\theta \delta \gamma /N)}\) and \(\tilde{f}_2(x)=\frac{\theta ^2(x-\delta \gamma /N)\exp (\theta x)}{\exp (\theta \delta \gamma /N)}\) are two valid PDFs for \(x>\delta \gamma /N\).
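A sketch of the biased estimator of \(\alpha _1(\gamma ,N)\) for standard Log-normal summands, under the following stated assumptions. Writing \(a=\delta \gamma /N\) and \(\tilde{M}(\theta )=e^{\theta a}(-\bar{f}_X/\theta +\bar{f}_X^{'}/\theta ^2)\), the condition \(\tilde{M}'(\theta )/\tilde{M}(\theta )=\gamma /N\) reduces (our derivation) to the quadratic \(c\bar{f}_X\theta ^2+(\bar{f}_X-c\bar{f}_X^{'})\theta -2\bar{f}_X^{'}=0\), whose negative root is used. Because \(\bar{f}_X\) and \(\bar{f}_X^{'}\) share the factor \(1/(1-F_X(a))\), that factor cancels in \(\theta \) and in the mixture weights, so \(f_X(a)\) and \(f_X'(a)=-f_X(a)(1+\log a)/a\) are used directly; the latter is positive exactly when \(a<e^{-1}\). Samples come from \(\tilde{f}_1\) (a shifted exponential with rate \(-\theta \)) or \(\tilde{f}_2\) (a shifted Gamma with shape 2 and rate \(-\theta \)), and \(\delta >0\) is required so that the Taylor point is positive. Function names are hypothetical.

```python
import numpy as np
from math import exp, log, pi, sqrt

def lognormal_logpdf(x):
    """log of the standard Log-normal PDF exp(-(log x)^2 / 2) / (x sqrt(2 pi))."""
    lx = np.log(x)
    return -0.5 * lx**2 - lx - 0.5 * log(2.0 * pi)

def approx_twisted_mixture(gamma_, n, delta):
    """Return (a, lam, p1, p2): the IS PDF mixes a + Exp(lam) and a + Gamma(2, scale 1/lam)."""
    a = delta * gamma_ / n
    if not 0.0 < a < exp(-1.0):
        raise ValueError("need 0 < delta*gamma/N < exp(-1) so that f_X'(a) > 0")
    f = exp(-0.5 * log(a) ** 2) / (a * sqrt(2.0 * pi))    # f_X(a); 1/(1-F_X(a)) cancels
    fp = -f * (1.0 + log(a)) / a                          # f_X'(a) > 0 since a < e^{-1}
    c = gamma_ / n * (1.0 - delta)
    b = f - c * fp
    theta = -(b + sqrt(b * b + 8.0 * c * f * fp)) / (2.0 * c * f)  # negative root
    lam = -theta
    w1, w2 = f / lam, fp / lam**2                         # -f/theta and f'/theta^2
    return a, lam, w1 / (w1 + w2), w2 / (w1 + w2)

def biased_is_estimate(gamma_, n, delta, m, rng):
    """IS estimate of alpha_1(gamma, N), the part of alpha(gamma, N) with all X_i > a."""
    a, lam, p1, p2 = approx_twisted_mixture(gamma_, n, delta)
    from_f2 = rng.random((m, n)) < p2                     # mixture component per coordinate
    y = np.where(from_f2, rng.gamma(2.0, 1.0 / lam, (m, n)), rng.exponential(1.0 / lam, (m, n)))
    x = a + y
    log_mix = np.log(p1 * lam + p2 * lam**2 * y) - lam * y   # log of p1 f1(x) + p2 f2(x)
    log_lr = (lognormal_logpdf(x) - log_mix).sum(axis=1)    # (1-F_X(a))^N prod bar f = prod f
    z = np.where(x.sum(axis=1) <= gamma_, np.exp(log_lr), 0.0)
    return z.mean()

rng = np.random.default_rng(0)
print(biased_is_estimate(1.0, 8, 0.5, 100_000, rng))
```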

The question that remains is related to controlling the bias through a proper choice of the parameter \(\delta \). Let \(\alpha _1(\gamma ,N)=\left( 1-F_X(\frac{\delta \gamma }{N}) \right) ^N \mathbb {P}_{h_\mathbf{X }} \left( \sum _{i=1}^{N}{X_i}\le \gamma \Big {|}X_i>\frac{\delta \gamma }{N}, \forall i\right) \). Then, the global relative error can be upper bounded as follows:
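
\[ \frac{\left| \alpha (\gamma ,N)-\hat{\alpha }_{1,is,M}\right| }{\alpha (\gamma ,N)}\le \frac{\alpha (\gamma ,N)-\alpha _1(\gamma ,N)}{\alpha (\gamma ,N)}+\frac{\left| \alpha _1(\gamma ,N)-\hat{\alpha }_{1,is,M}\right| }{\alpha _1(\gamma ,N)}, \tag{12} \]
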
where \(\hat{\alpha }_{1,is,M}\) is the IS estimator of \(\alpha _1(\gamma ,N)\) based on M i.i.d. realizations sampled according to \(\tilde{h}_\mathbf{X }(\varvec{x})=\prod _{i=1}^{N}{\tilde{f}_X(x_i)}\), where the PDF \(\tilde{f}_X(\cdot )\) is given in (11).

The parameter \(\delta \) is then chosen to control the bias term in (12), that is the first term on the right-hand side of (12). The second term on the right-hand side is the statistical relative error of estimating \(\alpha _1(\gamma ,N)\) by \(\hat{\alpha }_{1,is,M}\). From the central limit theorem (CLT), this error term is approximately proportional to the coefficient of variation of \(\hat{\alpha }_{1,is,M}\).

To achieve a global relative error of order \(\epsilon \), it is sufficient to bound the two error terms, i.e., the statistical relative error and the relative bias, by \(\epsilon /2\). Hence, the value of \(\delta \) is selected such that the following inequality holds
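
\[ \frac{\alpha (\gamma ,N)-\alpha _1(\gamma ,N)}{\alpha (\gamma ,N)}\le \frac{\epsilon }{2}. \tag{13} \]
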
The following lemma provides the relation between \(\delta \) and \(\epsilon \) such that (13) is fulfilled.

Lemma 1

The following expression of \(\delta (\epsilon ,N,\gamma )\)

where \(\Phi (\cdot )\) is the CDF of the standard normal distribution, ensures that (13) holds.

Proof

We first write that

On the other hand, we have

Therefore, we get

By equating the right-hand side of the above inequality with \(\epsilon /2\), we obtain

and hence, the proof is concluded. \(\square \)

4.3.2 The Gamma family as an IS PDF

When we consider a sufficiently small value of \(\delta \) in the above analysis, we observe from the expression of the IS PDF in (11) that the proposed estimator with the IS PDF in (11) achieves approximately the same performance as the Gamma IS PDF with shape parameter equal to 2. This suggests investigating whether the Gamma family can achieve further variance reduction with respect to the approach in the previous subsection. Note that the advantage of using the Gamma family as IS PDFs compared to the approach in the previous subsection is that the estimator is unbiased. Recall that the Gamma PDF is given by

\[ \tilde{f}_X(x)=\frac{x^{k-1}\exp (-x/\theta )}{\Gamma (k)\,\theta ^{k}},\quad x>0, \]

where \(\theta >0\) and \(k>0\) are the scale and shape parameters, respectively (unlike in the previous subsections, \(\theta \) here denotes a positive scale parameter). The value of \(\theta \) is set to \(\theta =\frac{\gamma }{Nk}\) so that the expected value of each \(X_i\), \(i=1,2,\cdots ,N\), under the PDF \(\tilde{f}_{X}(\cdot )\) equals \(\frac{\gamma }{N}\). The likelihood ratio is then given by
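
\[ L(\varvec{x})=\prod _{i=1}^{N}{\frac{f_X(x_i)}{\tilde{f}_X(x_i)}}=\left( \frac{\Gamma (k)\,\theta ^{k}}{\sqrt{2\pi }}\right) ^{N}\prod _{i=1}^{N}{\exp \left( \frac{x_i}{\theta }-\frac{(\log x_i)^{2}}{2}-k\log x_i\right) }. \]
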
The second moment of the IS estimator is bounded by

The last upper bound is found by maximizing the function \(x \rightarrow -(\log (x))^2-2k \log (x)\) for \(x>0\). Next, using Stirling’s formula for the gamma function \(\Gamma (k)= \sqrt{2\pi }k^{k-\frac{1}{2}} \exp (-k)(1+\mathcal {O}(\frac{1}{k}))\), we get

where C is a constant. Next, the value of k is chosen such that it minimizes the above right-hand side term. The solution of this minimization problem is given as follows:

Note that when N is large and/or \(\gamma \) is small, the value of \(k^*\) satisfies \(k^* \sim \log (\frac{N}{\gamma })\).
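A sketch of the unbiased Gamma IS estimator for i.i.d. standard Log-normal summands, with scale \(\theta =\gamma /(Nk)\) as above and the shape k left as an input. The closed form of \(k^*\) is not reproduced here; the usage line simply takes \(k=\log (N/\gamma )\), the asymptotic equivalent stated above, which is only a heuristic stand-in for \(k^*\). The function name is hypothetical.

```python
import numpy as np
from math import lgamma, log, pi

def gamma_is_lognormal(gamma_, n, m, k, rng):
    """Unbiased Gamma IS estimate of P(X_1+...+X_n <= gamma_) for standard Log-normal X_i."""
    scale = gamma_ / (n * k)                  # makes E[X_i] = gamma/N under the IS PDF
    x = rng.gamma(k, scale, size=(m, n))
    lx = np.log(x)
    log_f = -0.5 * lx**2 - lx - 0.5 * log(2.0 * pi)                  # Log-normal log-PDF
    log_g = (k - 1.0) * lx - x / scale - lgamma(k) - k * log(scale)   # Gamma log-PDF
    log_lr = (log_f - log_g).sum(axis=1)
    z = np.where(x.sum(axis=1) <= gamma_, np.exp(log_lr), 0.0)
    return z.mean(), z.var() / z.mean() ** 2  # estimate and empirical per-sample SCV

rng = np.random.default_rng(0)
n, g = 20, 1.0
print(gamma_is_lognormal(g, n, 100_000, log(n / g), rng))   # k = log(N/gamma): heuristic only
```

Keeping k as a parameter makes it easy to compare shapes empirically, e.g., the shape 2 suggested by the biased estimator against larger values.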

5 Numerical results

In this section, we show selected numerical results comparing the performance of the proposed estimators with that of some existing estimators. We consider three scenarios depending on the distribution of \(X_i\), \(i=1,2,\cdots ,N\): the Weibull, the Gamma–Gamma, and the Log-normal distributions. Note that the proposed approach is not restricted to these three distributions (see Table 1 for a non-exhaustive list of distributions that can be handled).

We recall that the squared coefficient of variation of an unbiased estimator \(\hat{\alpha }(\gamma ,N)\) of \(\alpha (\gamma ,N)\) has the following expression
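
\[ \text {SCV}(\hat{\alpha }(\gamma ,N))=\frac{\mathrm {var}[\hat{\alpha }(\gamma ,N)]}{\alpha (\gamma ,N)^{2}}. \]
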
Note that, from the CLT, the number of samples required to meet a statistical relative error \(\epsilon \) with \(95\%\) confidence is approximately \((1.96)^2 \text {SCV}(\hat{\alpha }(\gamma ,N))/\epsilon ^2\). Therefore, when two estimators with comparable cost per sample are compared, the one with the smaller squared coefficient of variation performs better.
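The sample-size rule and the efficiency ratios quoted below amount to simple arithmetic. The helpers sketched here (names are hypothetical) compute the empirical SCV from i.i.d. per-sample IS outputs, the sample size \((1.96)^2\,\text {SCV}/\epsilon ^2\), and a work-normalized efficiency ratio that multiplies each SCV by the cost per sample. With equal costs this reduces to the SCV ratio; with a twofold cost difference, an SCV ratio of 2.5 becomes 5.

```python
import numpy as np
from math import ceil

def empirical_scv(z):
    """Per-sample squared coefficient of variation var[Z]/E[Z]^2 of i.i.d. IS outputs z."""
    z = np.asarray(z, dtype=float)
    return z.var(ddof=1) / z.mean() ** 2

def required_samples(scv, eps, z_quantile=1.96):
    """CLT sample size for relative error eps with 95% confidence: z^2 * SCV / eps^2."""
    return ceil(z_quantile**2 * scv / eps**2)

def efficiency_gain(scv_a, cost_a, scv_b, cost_b):
    """How many times more work estimator A needs than estimator B for the same accuracy."""
    return (scv_a * cost_a) / (scv_b * cost_b)

print(required_samples(100.0, 0.05))        # about 1.5e5 samples for SCV = 100 and 5% error
print(efficiency_gain(2.5, 2.0, 1.0, 1.0))  # 5.0: SCV ratio 2.5 times a twofold cost per sample
```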

5.1 Weibull case

In this section, we assume that \(X_i\), \(i=1,2,\cdots ,N\), are distributed according to the Weibull distribution whose PDF is given in Table 1. The comparison is made with respect to the second IS approach of Ben Rached et al. (2016), which is based on hazard rate twisting (HRT). In Figs. 1 and 2, we plot the squared coefficients of variation given by the HRT technique and by the proposed approach for two values of the shape parameter: \(k=1.5\) and \(k=0.5\), respectively. The value of \(\alpha (\gamma ,N)\) ranges approximately from \(10^{-20}\) to \(10^{-6}\) (respectively, from \(10^{-16}\) to \(10^{-6}\)) using the system parameters of Fig. 1 (respectively, of Fig. 2). These figures show that the proposed approach clearly outperforms the one based on HRT. For instance, when \(k=1.5\), \(\lambda =1\), \(\gamma =0.5\), and \(N=12\), the proposed approach is approximately 270 times more efficient than the one based on HRT; that is, to meet the same accuracy, the approach based on HRT needs approximately 270 times as many samples as the proposed approach.

In the next experiment, we compare the proposed approach with the HRT one when N is fixed and \(\gamma \) decreases. In Fig. 3, we compare the efficiency of both approaches in terms of squared coefficients of variation plotted as a function of \(\gamma \) for two values of N (\(N=8\) and \(N=10\)). In this case, the value of \(\alpha (\gamma ,N)\) ranges approximately from \(10^{-16}\) to \(10^{-6}\) for \(N=8\) and from \(10^{-22}\) to \(10^{-8}\) for \(N=10\). The proposed approach clearly outperforms the HRT one for both values of N. While the HRT approach was proved in Ben Rached et al. (2016) to achieve the bounded relative error property with respect to \(\gamma \) for a fixed value of N, Fig. 3 shows that the asymptotic bound increases substantially with N, and hence the performance of the HRT approach is dramatically affected by increasing N. On the other hand, increasing N has only a minor effect on the efficiency of the proposed approach: its squared coefficient of variation is approximately unchanged for both values of N over the considered range of \(\gamma \).

This numerical observation suggests that the proposed approach satisfies the bounded relative error property with an asymptotic bound that increases very slowly with N, compared to the one given by the HRT approach. For illustration, the proposed approach is approximately 18 (respectively, 64) times more efficient than the HRT one when \(N=8\) (respectively, \(N=10\)) and \(\gamma =0.2\). Note that these observations hold independently of the value of \(\alpha (\gamma ,N)\) (see Fig. 3, where the squared coefficient of variation is approximately constant for a fixed value of N over the considered range of \(\gamma \)). This experiment and the numerical results in Figs. 1 and 2 validate the ability of the proposed approach to deliver a very accurate and efficient estimate of \(\alpha (\gamma ,N)\) when N increases and/or \(\gamma \) decreases.

5.2 Gamma–Gamma case

The Gamma–Gamma distribution is used in various challenging applications in wireless communications. For instance, it has shown a good fit to experimental data and has been used to model wireless radio-frequency channels (Shankar 2004) and atmospheric turbulence in free-space optical communication systems (Hajji and Bouanani 2017). The PDF of \(X_i\) is given in Table 1.

In Fig. 4, we compare the proposed approach with the one in Ben Issaid et al. (2017) by plotting the corresponding squared coefficients of variation as a function of N for a fixed value of \(\gamma \). In Ben Issaid et al. (2017), the IS PDF is simply another Gamma–Gamma PDF with a shifted mean; we call this method the IS-based mean-shifted approach. The quantity of interest \(\alpha (\gamma ,N)\) ranges approximately from \(10^{-18}\) to \(10^{-5}\). The proposed estimator outperforms the one in Ben Issaid et al. (2017), and the gap widens as N increases. Moreover, the cost per sample (in terms of CPU time) of the approach in Ben Issaid et al. (2017) is twice that of the proposed approach, because a Gamma–Gamma random variable is generated as the product of two independent Gamma random variables (see Chatzidiamantis et al. 2009), whereas the Gamma IS PDF requires a single Gamma draw per \(X_i\). For illustration, Fig. 4 shows that when \(N=12\), the proposed approach is approximately 2.5 times (five times if the computing time is included in the comparison) more efficient than that of Ben Issaid et al. (2017).
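The factor of two in cost per sample can be seen directly from how Gamma–Gamma variates are generated. A minimal sketch, assuming the common unit-mean parameterization with shape parameters \(\alpha \) and \(\beta \) (Table 1 may use a different scaling): each Gamma–Gamma sample needs two Gamma draws, whereas sampling from a Gamma IS PDF needs one. The function name `sample_gamma_gamma` is hypothetical.

```python
import numpy as np


def sample_gamma_gamma(alpha, beta, size, rng):
    """Unit-mean Gamma-Gamma variates as the product of two independent Gamma
    variates: Y ~ Gamma(alpha, scale=1/alpha) and Z ~ Gamma(beta, scale=1/beta).
    Each returned sample therefore costs two Gamma draws."""
    y = rng.gamma(shape=alpha, scale=1.0 / alpha, size=size)
    z = rng.gamma(shape=beta, scale=1.0 / beta, size=size)
    return y * z


rng = np.random.default_rng(0)
x = sample_gamma_gamma(alpha=4.0, beta=2.0, size=1_000_000, rng=rng)
# Theory for unit-mean factors: mean 1, variance (1 + 1/alpha)(1 + 1/beta) - 1.
print(x.mean(), x.var(), (1 + 1 / 4.0) * (1 + 1 / 2.0) - 1)
```

With unit-mean factors, the product has mean 1 and variance \((1+1/\alpha )(1+1/\beta )-1\), which the printed sample moments should approximate.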

5.3 Log-normal case

The Log-normal distribution can be used to model several types of attenuation, including shadowing (Tjhung et al. 1997) and weak-to-moderate turbulence channels in free-space optical communications (Ansari et al. 2014). The standard Log-normal PDF (the associated Gaussian random variable has zero mean and unit variance) is given by

\(f_{X_i}(x)=\frac{1}{\sqrt{2\pi }\,x}\exp \left( -\frac{(\ln x)^2}{2}\right) ,\quad x>0.\)

Figure 5 shows the squared coefficient of variation given by the exponential twisting approach (Asmussen et al. 2016) and by the two proposed approaches, i.e., the one based on the biased estimator and the one based on using the Gamma distribution as an IS PDF. The value of \(\alpha (\gamma ,N)\) ranges approximately from \(10^{-20}\) to \(10^{-2}\). Over the considered range of N, the approach using the Gamma distribution as an IS PDF outperforms the other two. When \(N=9\), it is approximately 30 times more efficient than the one based on exponential twisting. In addition to its efficiency in terms of the number of samples, recall that the exponential twisting technique developed in Asmussen et al. (2016) is computationally expensive in terms of computing time compared to the proposed approaches. Fig. 5 also shows that the approach based on the biased estimator performs better than the one based on exponential twisting. Note, however, that for a fair comparison, the required number of samples of the biased estimator should be multiplied by 4. This follows from the error analysis in (12), in which the statistical relative error must be bounded by \(\epsilon /2\), where \(\epsilon \) is the required global relative error; since the required number of samples scales as the inverse square of the tolerated relative error, halving it multiplies the number of samples by 4.
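As a minimal sketch of how a Gamma IS PDF is used in the Log-normal case, the code below estimates \(\alpha (\gamma ,N)\) for i.i.d. standard Log-normal \(X_i\) by drawing each \(X_i\) from a Gamma\((k,\theta )\) proposal and weighting by the likelihood ratio. The shape k is a free input, and the default scale \(\theta =\gamma /(Nk)\), which makes the proposal mean of the sum equal to \(\gamma \), is a heuristic assumption of this sketch; it is not the optimized choice \(k^*\) from (17). All function names are hypothetical.

```python
import math
import numpy as np


def log_lognormal_pdf(x):
    """Log-density of the standard Log-normal law (log X ~ N(0, 1))."""
    lx = np.log(x)
    return -lx - 0.5 * math.log(2.0 * math.pi) - 0.5 * lx * lx


def log_gamma_pdf(x, k, theta):
    """Log-density of Gamma(shape=k, scale=theta), NumPy's parameterization."""
    return (k - 1.0) * np.log(x) - x / theta - math.lgamma(k) - k * math.log(theta)


def gamma_is_left_tail(gamma, n, k, m, rng, theta=None):
    """IS estimate of P(X_1 + ... + X_n <= gamma), X_i i.i.d. standard Log-normal,
    with each X_i drawn from a Gamma(k, theta) proposal.
    Returns (estimate, per-sample squared coefficient of variation)."""
    if theta is None:
        theta = gamma / (n * k)  # heuristic assumption: proposal mean of the sum = gamma
    x = rng.gamma(shape=k, scale=theta, size=(m, n))
    log_w = (log_lognormal_pdf(x) - log_gamma_pdf(x, k, theta)).sum(axis=1)
    hit = x.sum(axis=1) <= gamma
    z = np.zeros(m)
    z[hit] = np.exp(log_w[hit])  # indicator times likelihood ratio (exp only on hits)
    est = z.mean()
    return est, z.var(ddof=1) / est**2


rng = np.random.default_rng(1)
# Sanity check with N = 1, where alpha = Phi(log gamma) is known in closed form.
est, scv = gamma_is_left_tail(gamma=0.1, n=1, k=2.0, m=200_000, rng=rng)
exact = 0.5 * math.erfc(-math.log(0.1) / math.sqrt(2.0))
print(est, exact, scv)
# A rarer case: N = 10, gamma = 0.6 (no closed-form value available here).
print(gamma_is_left_tail(gamma=0.6, n=10, k=2.0, m=200_000, rng=rng))
```

Exponentiating the log-weights only on the rows inside the event avoids overflow: outside it, the Gamma log-density with a small scale can make the raw log-ratio very large, but those rows contribute zero anyway.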

In Fig. 6, we plot the squared coefficients of variation of the three approaches as a function of \(\gamma \) for two values of N (\(N=8\) and \(N=10\)). The quantity of interest \(\alpha (\gamma ,N)\) ranges approximately from \(10^{-15}\) to \(10^{-6}\) for \(N=8\) and from \(10^{-21}\) to \(10^{-9}\) for \(N=10\). The approach based on using the Gamma distribution as an IS PDF clearly outperforms the two other approaches asymptotically, and for both values of N the gap widens as \(\gamma \) decreases. The biased estimator performs better than the exponential twisting one for both values of N over the considered range of \(\gamma \). Furthermore, increasing N considerably degrades the performance of the exponential twisting and biased IS-based approaches, whereas Fig. 6 shows that it has little effect on the IS estimator based on the Gamma distribution. For illustration, when \(\gamma =0.6\), the approach based on using the Gamma distribution as an IS PDF is approximately 15 (respectively, 35) times more efficient than the exponential twisting one for \(N=8\) (respectively, \(N=10\)).

The outperformance of the estimator based on using the Gamma distribution as an IS PDF over the biased estimator is expected. As mentioned above, the latter gives approximately the same performance as the Gamma distribution with shape parameter equal to 2, whereas the former uses the Gamma distribution as an IS PDF with an optimized shape parameter. (The shape parameter was chosen to minimize an upper bound on the second moment of the proposed estimator; see the expression of \(k^*\) in (17).)
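The paper chooses the shape parameter analytically through \(k^*\). A cruder, purely empirical alternative, swapped in here for illustration and not the authors' method, is to run short pilot simulations on a grid of shape values and keep the one with the smallest estimated squared coefficient of variation. The sketch assumes standard Log-normal \(X_i\), the heuristic scale \(\theta =\gamma /(Nk)\) (proposal mean of the sum equal to \(\gamma \)), and hypothetical function names.

```python
import math
import numpy as np


def pilot_scv(gamma, n, k, m, rng):
    """Pilot estimate of the squared coefficient of variation (SCV) of the
    Gamma(k, theta) IS estimator of P(X_1 + ... + X_n <= gamma), where the X_i
    are i.i.d. standard Log-normal. Assumption: theta = gamma / (n * k)."""
    theta = gamma / (n * k)
    x = rng.gamma(shape=k, scale=theta, size=(m, n))
    lx = np.log(x)
    log_f = -lx - 0.5 * math.log(2.0 * math.pi) - 0.5 * lx**2  # Log-normal log-density
    log_g = (k - 1.0) * lx - x / theta - math.lgamma(k) - k * math.log(theta)  # Gamma
    hit = x.sum(axis=1) <= gamma  # rows inside the rare event
    z = np.zeros(m)
    z[hit] = np.exp((log_f - log_g)[hit].sum(axis=1))  # likelihood ratio on hits only
    return z.var(ddof=1) / z.mean() ** 2


def choose_shape(gamma, n, grid, m=20_000, seed=0):
    """Return the grid value of k with the smallest pilot SCV, plus all pilot SCVs.
    Each k gets a fresh generator seeded identically."""
    scvs = {k: pilot_scv(gamma, n, k, m, np.random.default_rng(seed)) for k in grid}
    best = min(scvs, key=scvs.get)
    return best, scvs


best_k, pilot = choose_shape(gamma=0.5, n=8, grid=[1.0, 1.5, 2.0, 3.0, 4.0])
print(best_k, pilot)
```

Pilot SCVs are themselves noisy, so a grid search of this kind only gives a rough choice; an analytic criterion such as \(k^*\) avoids that extra simulation cost.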

All of the above comparisons have been carried out in terms of the number of samples needed to meet a fixed accuracy requirement. To include the computing time in the comparison, we define the work normalized relative variance (WNRV) metric of an unbiased estimator \(\hat{\alpha }(\gamma ,N)\) of \(\alpha (\gamma ,N)\) as follows (see Ben Rached et al. 2018):

The computing time is the time in seconds needed to get an estimate of \(\alpha (\gamma ,N)\) using M i.i.d. samples of \(\hat{\alpha }(\gamma ,N)\). When comparing two estimators, the one with the smaller WNRV is the more efficient: it achieves a smaller relative error for a given computational budget or, equivalently, it needs less computing time to achieve a fixed relative error. Using the same setting as in Fig. 6, we plot in Fig. 7 the WNRV metric as a function of \(\gamma \) for two values of N (\(N=8\) and \(N=10\)).
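As a minimal sketch, assuming the WNRV is the relative variance of the M-sample estimate multiplied by the computing time needed to produce it, the metric is roughly the per-sample squared coefficient of variation times the per-sample cost, so it does not depend on M, and a ratio of WNRVs factors into a ratio of squared coefficients of variation times a ratio of per-sample times. The function `wnrv` and the crude Monte Carlo usage are hypothetical illustrations.

```python
import time
import numpy as np


def wnrv(samples, elapsed_seconds):
    """Work-normalized relative variance under the assumed definition
    (relative variance of the M-sample mean) x (computing time for M samples).
    The unknown alpha is replaced by the sample mean of the M samples."""
    samples = np.asarray(samples, dtype=float)
    m = samples.size
    rel_var_of_mean = samples.var(ddof=1) / (m * samples.mean() ** 2)
    return rel_var_of_mean * elapsed_seconds


# Hypothetical usage: crude Monte Carlo for P(X_1 + X_2 <= 1), X_i standard Log-normal.
rng = np.random.default_rng(0)
start = time.perf_counter()
z = (rng.lognormal(mean=0.0, sigma=1.0, size=(100_000, 2)).sum(axis=1) <= 1.0).astype(float)
print(wnrv(z, time.perf_counter() - start))
```

Timing the sampling together with the evaluation of each estimator, as done here, is what lets the metric penalize estimators that are cheap in samples but expensive per sample.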

As \(\alpha (\gamma ,N)\) gets smaller, the approach based on using the Gamma PDF as an IS PDF outperforms the two other approaches in terms of WNRV, and its advantage grows as the event becomes rarer. Recall that, for a fair comparison, the WNRV of the approach based on the biased estimator should be multiplied by 4 (this follows from the error analysis performed in Section 4.3.1). Moreover, Fig. 7 shows that, in addition to reducing the variance (Fig. 6), the approach based on the Gamma IS PDF also reduces the computing time compared to the one using the exponential twisting technique. For instance, for \(N=10\) and \(\gamma =0.6\), the approach based on the Gamma IS PDF is approximately 35 times (respectively, 340 times) more efficient than the one based on exponential twisting in terms of the squared coefficient of variation (respectively, the WNRV metric). Since the WNRV accounts for both the relative variance and the computing time, the ratio \(340/35\approx 10\) indicates that the Gamma-based IS approach reduces the computing time by a factor of approximately 10 relative to the exponential twisting approach.

6 Conclusion

We developed efficient importance sampling estimators for the rare event probabilities corresponding to the left tail of the cumulative distribution function of large sums of nonnegative independent and identically distributed random variables. In the rare event regime, and for certain classes of distributions that include most common distributions, the proposed estimators asymptotically achieve at least the same performance as the exponential twisting technique. The main conclusion is that the Gamma PDF with suitably chosen parameters achieves substantial variance reduction for most common distributions while avoiding the restrictive limitations of the exponential twisting technique. The numerical results validate the ability of the proposed approach to estimate the quantity of interest accurately and efficiently in the rare event regime corresponding to large N and/or small \(\gamma \). One possible extension of the present work is to connect it to Beskos et al. (2017) and Jasra et al. (2021) by constructing a sequence of approximate measures corresponding to increasing values of N.

References

Alouini, M.-S., Ben Rached, N., Kammoun, A., Tempone, R.: On the efficient simulation of the left-tail of the sum of correlated Log-normal variates. Monte Carlo Methods Appl. 24(2), 101–115 (2018)

Ansari, I.S., Alouini, M.-S., Cheng, J.: On the capacity of FSO links under Lognormal and Rician-Lognormal turbulences. In: Proc. of the IEEE 80th Vehicular Technology Conference (VTC2014-Fall), pp. 1–6 (2014)

Asmussen, S., Glynn, P.W.: Stochastic Simulation: Algorithms and Analysis. Stochastic Modelling and Applied Probability, Springer, New York (2007)

Asmussen, S., Jensen, J.L., Rojas-Nandayapa, L.: Exponential family techniques for the lognormal left tail. Scand. J. Stat. 43(3), 774–787 (2016)

Beaulieu, N.C., Luan, G.: Improving simulation of Lognormal sum distributions with hyperspace replication. In: Proc. of the IEEE Global Communications Conference (GLOBECOM), pp. 1–7 (2019)

Ben Issaid, C., Ben Rached, N., Kammoun, A., Alouini, M.-S., Tempone, R.: On the efficient simulation of the distribution of the sum of Gamma-Gamma variates with application to the outage probability evaluation over fading channels. IEEE Trans. Commun. 65(4), 1839–1848 (2017)

Ben Rached, N., Benkhelifa, F., Kammoun, A., Alouini, M.-S., Tempone, R.: On the generalization of the hazard rate twisting-based simulation approach. Stat. Comput. 28(1), 61–75 (2016)

Ben Rached, N., Botev, Z.I., Kammoun, A., Alouini, M.-S., Tempone, R.: On the sum of order statistics and applications to wireless communication systems performances. IEEE Trans. Wirel. Commun. 17(11), 7801–7813 (2018)

Ben Rached, N., Kammoun, A., Alouini, M.-S., Tempone, R.: Unified importance sampling schemes for efficient simulation of outage capacity over generalized fading channels. IEEE J. Sel. Top. Signal Process. 10(2), 376–388 (2016)

Beskos, A., Jasra, A., Law, K., Tempone, R., Zhou, Y.: Multilevel sequential Monte Carlo samplers. Stoch. Process. Appl. 127(5), 1417–1440 (2017)

Botev, Z.I., Salomone, R., Mackinlay, D.: Fast and accurate computation of the distribution of sums of dependent log-normals. Ann. Oper. Res. 280(1), 19–46 (2019)

Chatzidiamantis, N.D., Karagiannidis, G.K., Michalopoulos, D.S.: On the distribution of the sum of Gamma-Gamma variates and application in MIMO optical wireless systems. In: Proc. of the IEEE Global Telecommunications Conference, pp. 1–6 (2009)

Da Costa, D.B., Yacoub, M.D.: Accurate approximations to the sum of generalized random variables and applications in the performance analysis of diversity systems. IEEE Trans. Commun. 57(5), 1271–1274 (2009)

Ermolova, N.Y.: Moment generating functions of the generalized \(\eta -\mu \) and \(\kappa -\mu \) distributions and their applications to performance evaluations of communication systems. IEEE Commun. Lett. 12(7), 502–504 (2008)

Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products, 7th edn. Elsevier/Academic Press, Amsterdam (2007)

Gulisashvili, A., Tankov, P.: Tail behavior of sums and differences of Log-normal random variables. Bernoulli 22(1), 444–493 (2016)

Hajji, M., Bouanani, F.E.: Performance analysis of mixed Weibull and Gamma-Gamma dual-hop RF/FSO transmission systems. In: Proc. of the International Conference on Wireless Networks and Mobile Communications (WINCOM), pp. 1–5 (2017)

Hu, J., Beaulieu, N.C.: Accurate closed-form approximations to Ricean sum distributions and densities. IEEE Commun. Lett. 9(2), 133–135 (2005)

Jasra, A., Law, K.J.H., Lu, D.: Unbiased estimation of the gradient of the log-likelihood in inverse problems. Stat. Comput. 31(3) (2021)

Juneja, S., Shahabuddin, P.: Simulating heavy tailed processes using delayed hazard rate twisting. ACM Trans. Model. Comput. Simul. 12(2), 94–118 (2002)

Kroese, D.P., Taimre, T., Botev, Z.I.: Handbook of Monte Carlo Methods. Wiley, Hoboken (2011)

Lopez-Salcedo, J.A.: Simple closed-form approximation to Ricean sum distributions. IEEE Signal Process. Lett. 16(3), 153–155 (2009)

Renzo, M.D., Graziosi, F., Santucci, F.: Further results on the approximation of Log-normal power sum via Pearson type IV distribution: a general formula for log-moments computation. IEEE Trans. Commun. 57(4), 893–898 (2009)

Ridder, A., Rubinstein, R.: Minimum cross-entropy methods for rare-event simulation. Simulation 83(11), 769–784 (2007)

Rubino, G., Tuffin, B.: Rare Event Simulation Using Monte Carlo Methods. Wiley, Hoboken (2009)

Shankar, P.M.: Error rates in generalized shadowed fading channels. Wirel. Pers. Commun. 28, 233–238 (2004)

Simon, M.K., Alouini, M.-S.: Digital Communication over Fading Channels. Wiley Series in Telecommunications and Signal Processing, 2nd edn. Wiley-Interscience, Hoboken (2005)

Tjhung, T.T., Chai, C.C., Dong, X.: Outage probability for Lognormal-shadowed Rician channels. IEEE Trans. Veh. Technol. 46(2), 400–407 (1997)

Xiao, Z., Zhu, B., Cheng, J., Wang, Y.: Outage probability bounds of EGC over dual-branch non-identically distributed independent Lognormal fading channels with optimized parameters. IEEE Trans. Veh. Technol. 68(8), 8232–8237 (2019)

Zhu, B., Cheng, J.: Asymptotic outage analysis on dual-branch diversity receptions over non-identically distributed correlated Lognormal channels. IEEE Trans. Commun. 67(10), 7126–7138 (2019)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

This publication is based upon work supported by the King Abdullah University of Science and Technology (KAUST) Office of Sponsored Research (OSR) under Award No. OSR-2019-CRG8-4033 and the Alexander von Humboldt Foundation. A-L. Haji-Ali was supported by a Sabbatical Grant from the Royal Society of Edinburgh.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ben Rached, N., Haji-Ali, AL., Rubino, G. et al. Efficient importance sampling for large sums of independent and identically distributed random variables. Stat Comput 31, 79 (2021). https://doi.org/10.1007/s11222-021-10055-1

DOI: https://doi.org/10.1007/s11222-021-10055-1