Abstract

In the current work we present two generalizations of the Parallel Tempering algorithm in the context of discrete-time Markov chain Monte Carlo methods for Bayesian inverse problems. These generalizations use state-dependent swapping rates, inspired by the so-called continuous time Infinite Swapping algorithm presented in Plattner et al. (J Chem Phys 135(13):134111, 2011). We analyze the reversibility and ergodicity properties of our generalized PT algorithms. Numerical results on sampling from different target distributions, show that the proposed methods significantly improve sampling efficiency over more traditional sampling algorithms such as Random Walk Metropolis, preconditioned Crank–Nicolson, and (standard) Parallel Tempering.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Modern computational facilities and recent advances in computational techniques have made the use of Markov Chain Monte Carlo (MCMC) methods feasible for some large-scale Bayesian inverse problems (BIP), where the goal is to characterize the posterior distribution of a set of parameters \(\theta \) of a computational model which describes some physical phenomena, conditioned on some (usually indirectly) measured data y. However, some computational difficulties are prone to arise when dealing with difficult to explore posteriors, i.e., posterior distributions that are multi-modal, or that concentrate around a non-linear, lower-dimensional manifold, since some of the more commonly-used Markov transition kernels in MCMC algorithms, such as random walk Metropolis (RWM) or preconditioned Crank-Nicholson (pCN), are not well-suited in such situations. This in turn can make the computational time needed to properly explore these complicated target distributions arbitrarily long. Some recent works address these issues by employing Markov transitions kernels that use geometric information (Beskos et al. 2017); however, this requires efficient computation of the gradient of the posterior density, which might not always be feasible, particularly when the underlying computational model is a so-called “black-box”.

In recent years, there has been an active development of computational techniques and algorithms to overcome these issues using a tempering strategy (Dia 2019; Earl and Deem 2005; Latz et al. 2018; Miasojedow et al. 2013; Vrugt et al. 2009). Of particular importance for the work presented here is the Parallel Tempering (PT) algorithm (Earl and Deem 2005; Łącki and Miasojedow 2016; Miasojedow et al. 2013) (also known as replica exchange), which finds its origins in the physics and molecular dynamics community. The general idea behind such methods is to simultaneously run K independent MCMC chains, where each chain is invariant with respect to a flattened (referred to as tempered) version of the posterior of interest \(\mu \), while, at the same time, proposing to swap states between any two chains every so often. Such a swap is then accepted using the standard Metropolis-Hastings (MH) acceptance-rejection rule. Intuitively, chains with a larger smoothing parameter (referred to as temperature) will be able to better explore the parameter space. Thus, by proposing to exchange states between chains that target posteriors at different temperatures, it is possible for the chain of interest (i.e., the one targeting \(\mu \)) to mix faster, and to avoid the undesirable behavior of some MCMC samplers of getting “stuck” in a mode. Moreover, the fact that such an exchange of states is accepted with the typical MH acceptance-rejection rule, will guarantee that the chain targeting \(\mu \) remains invariant with respect to such probability measure (Earl and Deem 2005).

Tempering ideas have been successfully used to sample from posterior distributions arising in different fields of science, ranging from astrophysics to machine learning (Desjardins et al. 2010; Earl and Deem 2005; Miasojedow et al. 2013; Van Der Sluys et al. 2008). The works (Madras and Randall 2002; Woodard et al. 2009) have studied the convergence of the PT algorithm from a theoretical perspective and provided minimal conditions for its rapid mixing. Moreover, the idea of tempered distributions has not only been applied in combination with parallel chains. For example, the simulated tempering method (Marinari and Parisi 1992) uses a single chain and varies the temperature within this chain. In addition, tempering forms the basis of efficient particle filtering methods for stationary model parameters in Sequential Monte Carlo settings (Beskos et al. 2016, 2015; Kahle et al. 2019; Kantas et al. 2014; Latz et al. 2018) and Ensemble Kalman Inversion (Schillings and Stuart 2017).

A generalization over the PT approach, originating from the molecular dynamics community, is the so-called Infinite Swapping (IS) algorithm (Dupuis et al. 2012; Plattner et al. 2011). As opposed to PT, this IS paradigm is a continuous-time Markov process and considers the limit where states between chains are swapped infinitely often. It is shown in Dupuis et al. (2012) that such an approach can in turn be understood as a swap of dynamics, i.e., kernel and temperature (as opposed to states) between chains. We remark that once such a change in dynamics is considered, it is not possible to distinguish particles belonging to different chains. However, since the stationary distribution of each chain is known, importance sampling can be employed to compute posterior expectations with respect to the target measure of interest. Infinite Swapping has been successfully applied in the context of computational molecular dynamics and rare event simulation (Doll et al. 2012; Lu and Vanden-Eijnden 2013; Plattner et al. 2011); however, to the best of our knowledge, such methods have not been implemented in the context of Bayesian inverse problems.

In light of this, the current work aims at importing such ideas to the BIP setting, by presenting them in a discrete-time Metropolis-Hastings Markov chain Monte Carlo context. We will refer to these algorithms as Generalized Parallel Tempering (GPT). We emphasize, however, that these methods are not a time discretization of the continuous-time Infinite Swapping presented in Dupuis et al. (2012), but, in fact, a discrete-time Markov process inspired by the ideas presented therein with suitably defined state-dependent probabilities of swapping states or dynamics. We now summarize the main contributions of this work.

We begin by presenting a generalized framework for discrete time PT in the context of MCMC for BIP, and then proceed to propose, analyze and implement two novel state-dependent PT algorithms inspired by the ideas presented in Dupuis et al. (2012).

Furthermore, we prove that our GPT methods have the right invariant measure, by showing reversibility of the generated Markov chains, and prove their ergodicity. Finally, we implement the proposed GPT algorithms for an array of Bayesian inverse problems, comparing their efficiency to that of an un-tempered, (single temperature), version of the underlying MCMC algorithm, and standard PT. For the base method to sample at the cold temperature level, we use Random Walk Metropolis (RWM) (Sects. 5.3–5.6) or preconditioned Crank-Nicolson (Sect. 5.7), however, we emphasize that our methods can be used together with any other, more efficient base sampler. Experimental results show improvements in terms of computational efficiency of GPT over un-tempered RWM and standard PT, thus making the proposed methods attractive from a computational perspective. From an implementation perspective, the swapping component of our proposed methods is rejection-free, thus effectively eliminating some tuning parameters on the PT algorithm, such as swapping frequency.

We notice that a PT algorithm with state-dependent swapping probabilities has been proposed in Łącki and Miasojedow (2016), however, such a work only consider pairwise swapping of chains and a different construction of the swapping probabilities, resulting in a less-efficient sampler, at least for the BIPs addressed in this work.

Our ergodicity result relies on an \(L_2\) spectral gap analysis. It is known Rudolf (2012) that when a Markov chain is both reversible and has a positive \(L_2\)-spectral gap, one can in turn provide non-asymptotic error bounds on the mean square error of an ergodic estimator of the chain. Our bounds on the \(L_2\)-spectral gap, however, are far from being sharp and could possibly be improved using e.g., domain decomposition ideas as in Woodard et al. (2009). Such analysis is left for a future work.

The rest of this paper is organized as follows. Section 2 is devoted to the introduction of the notation, Bayesian inverse problems, and Markov chain Monte Carlo methods. In Sect. 3, we provide a brief review of (standard) PT (Sect. 3.2), and introduce the two versions of the GPT algorithm in Sects. 3.3 and 3.4, respectively. In fact, we present a general framework that accommodates both the standard PT algorithms and our generalized versions. In Sect. 4, we recall some of the standard theory of Markov chains in Sect. 4.1 and state the main theoretical results of the current work (Theorems 1 and 2) in Sect. 4.2. The proof of these Theorems is given by a series of Propositions in Sect. 4.2. We present some numerical experiments in Sect. 5, and draw some conclusions in Sect. 6.

2 Problem setting

2.1 Notation

Let \((W,\left\| \cdot \right\| )\) be a separable Banach space with associated Borel \(\sigma \)-algebra \({\mathcal {B}}(W)\), and let \(\nu _W\) be a \(\sigma \)-finite “reference” measure on W. For any measure \(\mu \) on \((W, {\mathcal {B}}(W))\) that is absolutely continuous with respect to \(\nu _W\) (in short \(\mu \ll \nu _W\)), we define the Radon-Nikodym derivative \(\pi _\mu {:}{=} \frac{\mathrm {d}\mu }{\mathrm {d}\nu _W}\). We denote by \(\overline{{\mathcal {M}}}(W)\) the set of real-valued signed measures on \((W,{\mathcal {B}(W)})\), and by \({\mathcal {M}}(W)\subset \overline{{\mathcal {M}}}(W)\) the set of probability measures on \((W,{\mathcal {B}(W)})\).

Let \(W_1, W_2\) be two separable Banach spaces with reference measures \(\nu _{W_1},\nu _{W_2}\), and let \(\mu _1 \ll \nu _{W_1}, \mu _{2} \ll \nu _{W_2}\) be two probability measures, with corresponding densities given by \(\pi _{\mu _1},\pi _{\mu _2}\). The product of these two measures is defined by

for all \(A\in {\mathcal {B}}(W_1 \times W_2).\) Joint measures on \((W_1\times W_2, {\mathcal {B}}(W_1,\times W_2))\) will always be written in boldface.

A Markov kernel on a Banach space W is a function \(p:W\times {\mathcal {B}}(W)\rightarrow [0,1]\) such that

-

1.

For each A in \({\mathcal {B}}(W)\), the mapping \( W\ni \theta \mapsto p(\theta ,A)\), is a \({\mathcal {B}}(W)\)-measurable real-valued function.

-

2.

For each \(\theta \) in W, the mapping \({\mathcal {B}}(W)\ni A\mapsto p(\theta ,A)\), is a probability measure on \((W,{\mathcal {B}}(W))\).

We denote by P the Markov transition operator associated to p, which acts on measures as \(\nu \mapsto \nu P\in {\mathcal {M}}(W),\) and on functions as \(f\mapsto Pf,\) with Pf measurable on \((W,{\mathcal {B}}(W)),\) such that

Let \(P_k,\ k=1,2,\) be Markov transition operators associated to kernels \(p_k:W_k\times {\mathcal {B}}(W_k)\mapsto [0,1].\) We define the tensor product Markov operator \({\mathbf {P}}{:}{=}P_1\otimes P_2\) as the Markov operator associated with the product measure \({\mathbf {p}}({\varvec{\theta }},\cdot )=p_1(\theta _1,\cdot )\times p_2(\theta _2,\cdot ), \ {\varvec{\theta }}=(\theta _1,\theta _2)\in W_1\times W_2\). In particular, \({\varvec{\nu }}{\mathbf {P}}\) is the measure on \((W_1 \times W_2, {\mathcal {B}}(W_1 \times W_2))\) that satisfies

for all \(A_1 \in {\mathcal {B}}(W_1)\) and \(A_2 \in {\mathcal {B}}(W_2)\). Moreover, \(({\mathbf {P}}f): W_1 \times W_2 \rightarrow {\mathbb {R}}\) is the function given by

for an appropriate \(f: W_1 \times W_2 \rightarrow {\mathbb {R}}\).

We say that a Markov operator P (resp. \({\mathbf {P}}\)) is invariant with respect to a measure \(\nu \) (resp. \({\varvec{\nu }}\)) if \(\nu P=\nu \) (resp. \({\varvec{\nu }}{\mathbf {P}}={\varvec{\nu }}\) ). A related concept to invariance is that of reversibility; a Markov kernel \(p:W\times {\mathcal {B}(W)}\mapsto [0,1]\) is said to be reversible (or \(\nu \)-reversible) with respect to a measure \(\nu \in {\mathcal {M}}(W)\) if

For two given \(\nu \)-invariant Markov operators \(P_1,P_2\), we say that \(P_1P_2\) is a composition of Markov operators, not to be confused with \( P_1\otimes P_2\). Furthermore, given a composition of K \(\nu \)-invariant Markov operators \(P_c{:}{=}P_1P_2\dots P_K\), we say that \(P_c\) is palindromic if \(P_1=P_K,\) \(P_2=P_{K-1},\) ..., \(P_k=P_{K-{k+1}}, \ k=1,2\dots ,K\). It is known (see, e.g., Brooks et al. (2011) section 1.12.17) that a palindromic, \(\nu \)-invariant Markov operator \(P_c\) has an associated Markov transition kernel \(p_c\) which is \(\nu \)-reversible.

2.2 Bayesian inverse problems

Let \((\varTheta ,\left\| \cdot \right\| _\varTheta )\) and \((Y,\left\| \cdot \right\| _Y)\) be separable Banach spaces with associated Borel \(\sigma \)-algebras \({\mathcal {B}}(\varTheta ),\ {\mathcal {B}}(Y)\). In Bayesian Inverse Problems we aim at characterizing the probability distribution of a set of parameters \(\theta \in \varTheta \) conditioned on some measured data \(y\in Y\), where the relation between \( \theta \) and y is given by:

Here \(\varepsilon \) is some random noise with known distribution \(\mu _\mathrm {noise}\) (assumed to have a density \(\pi _\text {noise}\) with respect to some reference measure \(\nu _Y\) on Y) and \({\mathcal {F}}:\varTheta \mapsto Y\) is the so-called forward mapping operator. Such an operator can model, e.g., the numerical solution of a possibly non-linear Partial Differential Equation (PDE) which takes \( \theta \) as a set of parameters. We will assume that the data y is finite dimensional, i.e., \(Y=\mathbb {R}^{d_y}\) for some \(d_y\ge 1\), and that \(\theta \sim \mu _\mathrm {pr}\). Furthermore, we define the potential function \(\varPhi (\theta ;y):\varTheta \times Y\mapsto \mathbb {R}\) as

where the function \(\varPhi (\theta ;y)\) is a measure of the misfit between the recorded data y and the predicted value \({\mathcal {F}}(\theta )\), and often depends only on \(\left\| y-{\mathcal {F}}(\theta )\right\| _Y\). The solution to the Bayesian inverse problem is given by (see, for example, Latz et al. (2019) Theorem 2.5)

where \(\mu \) (with corresponding \(\nu _\varTheta \)-density \(\pi \)) is referred to as the posterior measure and

The Bayesian approach to the inverse problem consists of updating our knowledge concerning the parameter \(\theta \), i.e., the prior, given the information that we observed in Eq. (2). One way of doing so is to generate samples from the posterior measure \(\mu ^y\). A common method for performing such a task is to use, for example, the Metropolis-Hastings algorithm, as detailed in the next section. Once samples \(\{\theta ^{(n)}\}_{n=0}^N\) have been obtained, the posterior expectation \(\mathbb {E}_{\mu ^y}[\mathcal {Q}]\) of some \(\mu ^y\)-integrable quantity of interest \(\mathcal {Q}:\varTheta \mapsto \mathbb {R}\) can be approximated by the following ergodic estimator

where \(b<N\) is the so-called burn-in period, used to reduce the bias typically associated to MCMC algorithms.

2.3 Metropolis-Hastings and tempering

We briefly recall the Metropolis-Hastings algorithm (Hastings 1970; Metropolis et al. 1953). Let \(q_\mathrm {prop}:\varTheta \times {\mathcal {B}}(\varTheta )\mapsto [0,1]\) be an auxiliary kernel. For \(n=1,2,\dots ,\) a candidate state \(\theta ^*\) is sampled from \(q_\mathrm {prop}(\theta ^n,\cdot )\), and proposed as the new state of the chain at step \({n+1}\). Such a state is then accepted (i.e., we set \(\theta ^{n+1}=\theta ^*)\), with probability \(\alpha _\text {MH}\),

otherwise the current state is retained, i.e., \(\theta ^{n+1}=\theta ^n\). Notice that, with a slight abuse of notation, we are denoting kernel and density by the same symbol \(q_\mathrm {prop}\). The Metropolis-Hastings algorithm induces the Markov transition kernel \(p:\varTheta \times {\mathcal {B}}(\varTheta )\mapsto [0,1]\)

for every \(\theta \in \varTheta \) and \(A\in {\mathcal {B}}(\varTheta )\). In most practical algorithms, the proposal state \(\theta ^*\) is sampled from a state-dependent auxiliary kernel \(q_\mathrm {prop}(\theta ^n,\cdot )\). Such is the case for random walk Metropolis or preconditioned Crank Nicolson, where \(q_\mathrm {prop}(\theta ^n,\cdot )={\mathcal {N}}(\theta ^n,\Sigma )\) or \(q_\mathrm {prop}(\theta ^n,\cdot )={\mathcal {N}}(\sqrt{1-\rho ^2}\theta ^n,\rho \Sigma ),\) \(0<\rho <1\), respectively. However, these types of localized proposals tend to present some undesirable behaviors when sampling from certain difficult measures, which are, for example, concentrated over a manifold or are multi-modal (Earl and Deem 2005). In the first case, in order to avoid a large rejection rate, the “step-size” \(\left\| \Sigma ^{1/2}\right\| \) of the proposal kernel must be quite small, which will in turn produce highly-correlated samples. In the second case, chains generated by these localized kernels tend to get stuck in one of the modes. In either of these cases, very long chains are required to properly explore the parameter space.

One way of overcoming such difficulties is to introduce tempering. Let \(\mu _k, \mu _\text {pr}\) be probability measures on \((\varTheta ,{\mathcal {B}}(\varTheta )),\) \(k=1,\dots ,K,\) such that all \(\mu _k\) are absolutely continuous with respect to \(\mu _\text {pr}\), and let \(\{T_k\}_{k=1}^K\) be a set of K temperatures such that \(1=T_1<T_2<\dots <T_K\le \infty \). In a Bayesian setting, \(\mu _\text {pr}\) corresponds to the prior measure and \(\mu _k, k=1,\dots ,K\) correspond to posterior measures associated to different temperatures. Denoting by \(\pi _k\) the \(\mu _\mathrm {pr}\)-density of \(\mu _k\), we set

where \(\quad Z_k{:}{=}\int _\varTheta e^{-\varPhi (\theta ;y)/T_k} \mu _\text {pr}(\mathrm {d}\theta ) ,\) and with \(\varPhi (\theta ;y)\) as the potential function defined in (3). In the case where \(T_K=\infty \), we set \(\mu _K=\mu _\text {pr}\). Notice that \(\mu _1\) corresponds to the target posterior measure.

We say that for \(k=2,\dots , K,\) each measure \(\mu _k\) is a tempered version of \(\mu _1\). In general, the \({1/T_k}\) term in (5) serves as a “smoothing”Footnote 1 factor, which in turn makes \(\mu _k\) easier to explore as \(T_k\rightarrow \infty \). In PT MCMC algorithms, we sample from all posterior measures \(\mu _k\) simultaneously. Here, we first use a \(\mu _k\)-reversible Markov transition kernel \(p_k\) on each chain, and then, we propose to exchange states between chains at two consecutive temperatures, i.e., chains targeting \(\mu _k,\mu _{k+1}, \ k\in \{1,\dots ,K-1\}\). Such a proposed swap is then accepted or rejected with a standard Metropolis-Hastings acceptance rejection step. This procedure is presented in Algorithm 1. We remark that such an algorithm can be modified to, for example, propose to swap states every \(N_s\) steps of the chain, or to swaps states between two chains \(\mu _i,\mu _j\), with i, j chosen randomly and uniformly from the index set \(\{1,2,\dots ,K\}\). In the next section we present the generalized PT algorithms which swap states according to a random permutation of the indices drawn from a state dependent probability.

3 Generalizing parallel tempering

Infinite Swapping was initially developed in the context of continuous-time MCMC algorithms, which were used for molecular dynamics simulations. In continuous-time PT, the swapping of the states is controlled by a Poisson process on the set \(\{1,\ldots ,K\}\). Infinite Swapping is the limiting algorithm obtained by letting the waiting times of this Poisson process go to zero. Hence, we swap the states of the chain infinitely often over a finite time interval. We refer to Dupuis et al. (2012) for a thorough introduction and review of Infinite Swapping in continuous-time. In Section 5 of the same article, the idea to use Infinite Swapping in time-discrete Markov chains was briefly discussed. Inspired by this discussion, we present two Generalizations of the (discrete-time) Parallel Tempering strategies. To that end, we propose to either (i) swap states in the chains at every iteration of the algorithm in such a way that the swap is accepted with probability one, which we will refer to as the Unweighted Generalized Parallel Tempering (UGPT), or (ii), swap dynamics (i.e., swap kernels and temperatures between chains) at every step of the algorithm. In this case, importance sampling must also be used when computing posterior expectations since this in turn provides a Markov chain whose invariant measure is not the desired one. We refer to this approach as Weighted Generalized Parallel Tempering (WGPT). We begin by introducing a common framework to both PT and the two versions of GPT.

Let \((\varTheta ,\left\| \cdot \right\| _{\varTheta })\) be a separable Banach space with associated Borel \(\sigma \)-algebra \({\mathcal {B}}(\varTheta )\). Let us define the K-fold product space \(\varTheta ^K {:}{=} {\times }^{K}_{k=1} \varTheta \), with associated product \(\sigma \)-algebra \({\mathcal {B}}^K{:}{=} \bigotimes ^K_{k=1} {\mathcal {B}}(\varTheta )\), as well as the product measures on \((\varTheta ^K,{\mathcal {B}}^K)\)

where \(\mu _k\) \(k=1,\dots , K\) are the tempered measures with temperatures \(1= T_1<T_2<T_3<\dots < T_K\le \infty \) introduced in the previous section. Similarly, we define the product prior measure \({\varvec{\mu }}_\mathrm {pr}{:}{=}\times _{k=1}^K\mu _\mathrm {pr}.\) Notice that \({\varvec{\mu }}\) has a density \({\varvec{\pi }}({\varvec{\theta }})\) with respect to \({\varvec{\mu }}_\text {prior}\) given by

with \(\pi _i(\theta )\) added subscript given as in (5). The idea behind the tempering methods presented herein is to sample from \({\varvec{\mu }}\) (as opposed to solely sampling from \(\mu _1\)) by creating a Markov chain obtained from the successive application of two \({\varvec{\mu }}\)-invariant Markov kernels \({\mathbf {p}}\) and \({\mathbf {q}}\), to some initial distribution \({\varvec{\nu }}\), usually chosen to be the prior \({\varvec{\mu }}_\mathrm {pr}\). Each kernel acts as follows. Given the current state \({\varvec{\theta }}^n=(\theta ^n_1,\dots ,\theta ^n_K),\) the kernel \({\mathbf {p}}\), which we will call the standard MCMC kernel, proposes a new, intermediate state \({\tilde{{\varvec{\theta }}}}^{n+1}=({\tilde{\theta }}^{n+1}_1,\dots ,{\tilde{\theta }}^{n+1}_K),\) possibly following the Metropolis-Hastings algorithm (or any other algorithm that generates a \({\varvec{\mu }}\)-invariant Markov operator). The Markov kernel \({\mathbf {p}}\) is a product kernel, meaning that each component \({\tilde{\theta }}_k^n\), \(k=1\dots ,K,\) is generated independently of the others. Then, the swapping kernel \({\mathbf {q}}\) proposes a new state \({\varvec{\theta }}^{n+1}=(\theta _1^{n+1},\dots ,\theta _K^{n+1})\) by introducing an “interaction” between the components of \({\tilde{{\varvec{\theta }}}}^{(n+1)}.\) This interaction step can be achieved, e.g., in the case of PT, by proposing to swap two components at two consecutive temperatures, i.e., components k and \(k+1\), and accepting this swap with a certain probability given by the usual Metropolis-Hastings acceptance-rejection rule. In general, the swapping kernel is applied every \(N_s\) steps of the chain, for some \(N_s\ge 1\). We will devote the following subsection to the construction of the swapping kernel \({\mathbf {q}}\).

3.1 The swapping kernel \({\mathbf {q}}\)

Define \({\mathscr {S}}_K\) as the collection of all the bijective maps from \(\{1,2,\dots ,K \}\) to itself, i.e., the set of all K! possible permutations of \(\mathrm {id}{:}{=}\{1,\dots ,K\}\). Let \(\sigma \in {\mathscr {S}}_K\) be a permutation, and define the swapped state \({\varvec{\theta }}_\sigma {:}{=}(\theta _{\sigma (1)},\dots ,\theta _{\sigma (K)}),\) and the inverse permutation \(\sigma ^{-1}\in {\mathscr {S}}_K\) such that \(\sigma \circ \sigma ^{-1}=\sigma ^{-1}\circ \sigma =\mathrm {id}\). In addition, let \(S_K\subseteq {\mathscr {S}}_K\) be any subset of \({\mathscr {S}}_K\) closed with respect to inversion, i.e., \(\sigma \in S_K\implies \sigma ^{-1}\in S_K\). We denote the cardinality of \(S_K\) by \(|S_K|.\)

Example 1

As a simple example, consider a Standard PT as in Algorithm 1 with \(K=4\). In this case, we attempt to swap two contiguous temperatures \(T_i\) and \(T_{i+1}, \ i=1,2,3\). Thus, \(S_K\) is the set of permutations \(\{\sigma _{1,2},\sigma _{2,3},\sigma _{3,4}\}\) with:

Notice that each permutation is its own inverse; for example:

To define the swapping kernel \({\mathbf {q}}\), we first need to define the swapping ratio and swapping acceptance probability.

Definition 1

(Swapping ratio) We say that a function \(r:\varTheta ^K\times S_K \mapsto [0,1]\) is a swapping ratio if it satisfies the following two conditions:

-

1.

\(\forall {\varvec{\theta }}\in \varTheta ^K\), \(r({\varvec{\theta }},\cdot )\) is a probability mass function on \(S_K\).

-

2.

\(\forall \sigma \in S_K\), \(r(\cdot ,\sigma )\) is measurable on \((\varTheta ^K,{\mathcal {B}}^K)\).

Definition 2

(Swapping acceptance probability)

Let \({\varvec{\theta }}\in \varTheta ^K\) and \(\sigma ,\ \sigma ^{-1}\in S_K\). We call swapping acceptance probability the function \(\alpha _{\mathrm {{swap}}}:\varTheta ^K\times S_K\mapsto [0,1] \) defined as

We can now define the swapping kernel \({\mathbf {q}}\).

Definition 3

(Swapping kernel) Given a swapping ratio \(r:\varTheta ^K\times S_K\mapsto [0,1]\) and its associated swapping acceptance probability \(\alpha _\mathrm {swap}:\varTheta ^K\times S_K\mapsto [0,1]\), we define the swapping Markov kernel \({\mathbf {q}}:\varTheta ^K\times {\mathcal {B}}^K\mapsto [0,1]\) as

where \(\delta _{{\varvec{\theta }}}(B)\) denotes the Dirac measure in \({\varvec{\theta }}\), i.e., \(\delta _{{\varvec{\theta }}}(B)=1\) if \({\varvec{\theta }}\in B\) and 0 otherwise.

The swapping mechanism should be understood in the following way: given a current state of the chain \({\varvec{\theta }}\in \varTheta ^K,\) the swapping kernel samples a permutation \(\sigma \) from \(S_K\) with probability \(r({\varvec{\theta }},\sigma )\) and generates \({\varvec{\theta }}_\sigma .\) This permuted state is then accepted as the new state of the chain with probability \(\alpha _{\mathrm {swap}}({\varvec{\theta }},\sigma )\). Notice that the swapping kernel follows a Metropolis-Hastings-like procedure with “proposal” distribution \(r({\varvec{\theta }},\sigma )\) and acceptance probability \(\alpha _{\mathrm {swap}}({\varvec{\theta }},\sigma )\).

Moreover, as detailed in the next proposition, such a kernel is reversible with respect to \({\varvec{\mu }}\), since it is a Metropolis-Hastings type kernel.

Proposition 1

The Markov kernel \({\mathbf {q}} \) defined in (7) is reversible with respect to the product measure \({\varvec{\mu }}\) defined in (6).

Proof

Let \(A,B\in {\mathcal {B}}^K\). We want to show that

Thus,

Let \(A_\sigma {:}{=}\{{\varvec{z}} \in \varTheta ^K : {\varvec{z}}_{\sigma ^{-1}}\in A\}\), and, for notational simplicity, write \(\min \{a,b\}=\{a\wedge b\}, \ a,b\in \mathbb {R}\). From I, we have:

Then, noticing that \({\varvec{\mu }}_\mathrm {pr}\) is permutation invariant, we get

For the second term II we simply have

\(\square \)

This generic form of the swapping kernel provides the foundation for both PT and GPT. We describe these algorithms in the following subsections.

3.2 The parallel tempering case

We first show how a PT algorithm that only swaps states between the \(i^\mathrm {th}\) and \(j^\mathrm {th}\) components of the chain can be cast in the general framework presented above. To that end, let \(\sigma _{i, j}\) be the permutation of \((1,2,\dots ,K),\) which only permutes the \(i^\mathrm {th}\) and \(j^\mathrm {th}\) components, while leaving the other components invariant (i.e., such that \(\sigma (i)=j\), \(\sigma (j)=i,\) and \(\sigma (k)=k\), \(k\ne i,k\ne j\)). We can take \(S_K=\{\sigma _{i, j},\ i,j=1,\dots ,K\}\) and define the PT swapping ratio between components i and j by \(r_{i,j}^\mathrm {(PT)}:\varTheta ^K\times S_K\mapsto [0,1]\) as

Notice that this implies that \(r_{i,j}^\mathrm {(PT)}({\varvec{\theta }}_\sigma ,\sigma ^{-1})=r_{i,j}^\mathrm {(PT)}({\varvec{\theta }},\sigma )\) since \(\sigma ^{-1}_{i,j}=\sigma _{i,j}\) and \(r_{i,j}^\mathrm {(PT)}\) does not depend on \({\varvec{\theta }}\), which in turn leads to the swapping acceptance probability \(\alpha _{\mathrm {swap}}^\mathrm {(PT)}:\varTheta ^K\times S_K\mapsto [0,1]\) defined as:

Thus, we can define the swapping kernel for the Parallel Tempering algorithm that swaps components i and j as follows:

Definition 4

(Pairwise Parallel Tempering swapping kernel) Let \({\varvec{\theta }}\in \varTheta ^K\), \(\sigma _{i, j} \in S_K\). We define the Parallel Tempering swapping kernel, which proposes to swap states between the \(i^\mathrm {th}\) and \(j^\mathrm {th}\) chains as \({\mathbf {q}}_{i, j}^\mathrm {{(PT)}}:\varTheta ^K\times {\mathcal {B}}^K\mapsto [0,1] \) given by

In practice, however, the PT algorithm considers various sequential swaps between chains, which can be understood by applying the composition of kernels \({\mathbf {q}}^\mathrm {(PT)}_{i, j}{\mathbf {q}}^\mathrm {(PT)}_{k, \ell }\dots \) at every swapping step. In its most common form Brooks et al. (2011); Earl and Deem (2005); Miasojedow et al. (2013), the PT algorithm, hereafter referred to as standard PT (which on a slight abuse of notation we will denote by PT), proposes to swap states between chains at two consecutive temperatures. Its swapping kernel \({\mathbf {q}}^\mathrm {(PT)}: \varTheta ^K\times {\mathcal {B}}^K\mapsto [0,1]\) is given by

Moreover, the algorithm described in Earl and Deem (2005), proposes to swap states every \(N_s\ge 1\) steps of MCMC. The complete kernel for the PT kernel is then given by Brooks et al. (2011); Earl and Deem (2005); Miasojedow et al. (2013)

where \({\mathbf {p}}\) is a standard reversible Markov transition kernel used to evolve the individual chains independently.

Remark 1

Although the kernel \({\mathbf {p}}\) as well as each of the \({\mathbf {q}}_{i,i+1}\) are \({\varvec{\mu }}\)-reversible, notice that (8) does not have a palindromic structure, and as such it is not necessarily \({\varvec{\mu }}\)-reversible. One way of making the PT algorithm reversible with respect to \({\varvec{\mu }}\) is to consider the palindromic form

where RPT stands for Reversible Parallel Tempering. In practice, there is not much difference between \({\mathbf {p}}^\mathrm {(RPT)}\) and \({\mathbf {p}}^\mathrm {(PT)}\), however, under the additional assumption of geometric ergodicity of the chain (c.f Sect. 4) having a reversible kernel is useful to compute explicit error bounds on the non-asymptotic mean square error of an ergodic estimator (Rudolf 2012).

3.3 Unweighted generalized parallel tempering

The idea behind the Unweighted Generalized Parallel Tempering algorithm is to generalize PT so that (i) \(N_s=1\) provides a proper mixing of the chains, (ii) the algorithm is reversible with respect to \({\varvec{\mu }}\), and (iii) the algorithm considers arbitrary sets \(S_K\) of swaps (always closed w.r.t inversion), instead of only pairwise swaps. We begin by constructing a kernel of the form (7). Let \(r^\mathrm {{(UW)}}:\varTheta ^K\times S_K\mapsto [0,1]\) be a function defined as

Clearly, (9) is a swapping ratio according to Definition 1. As such, given some state \({\varvec{\theta }}\in \varTheta ^K\), \(r^\mathrm {{(UW)}}({\varvec{\theta }},\sigma )\) assigns a state-dependent probability to each of the \({|S_K|}\) possible permutations in \(S_K\). A permutation \(\sigma \in S_K\) is then accepted with probability \(\alpha _{\mathrm {swap}}^\mathrm {{(UW)}}({\varvec{\theta }},\sigma )\), given by

Thus, we can define the swapping kernel for the UGPT algorithm, which takes the form of (7), with the particular choice of \(r({\varvec{\theta }},\sigma )=r^\mathrm {{(UW)}}({\varvec{\theta }},\sigma )\) and

Notice that \(\alpha ^\mathrm {{(UW)}}_\text {swap}({\varvec{\theta }},\sigma )=1, \forall \sigma \in S_K\). Indeed, if we further examine Eq. (10), we see that

In practice, this means that the proposed permuted state is always accepted with probability 1. The expression of the UGPT kernel then simplifies as follows.

Definition 5

(unweighted swapping kernel) The unweighted swapping kernel \({\mathbf {q}}^\mathrm {{(UW)}}:\varTheta ^K\times {\mathcal {B}}^K\mapsto [0,1] \) is defined as

\( \forall {\varvec{\theta }}\in \varTheta ^K,\ B\in {\mathcal {B}}^K. \) Applying this swapping kernel successively with the kernel \({\mathbf {p}}=p_1\times p_2\times \dots p_K \) in the order \({\mathbf {q}}^\mathrm {{(UW)}} {\mathbf {p}} {\mathbf {q}}^\mathrm {{(UW)}}=:{\mathbf {p}}^\mathrm {{(UW)}}\) gives what we call Unweighted Generalized Parallel Tempering kernel \({\mathbf {p}}^\mathrm {{(UW)}}\). Lastly, we write the UGPT in operator form as

where \({\mathbf {P}}\) and \({\mathbf {Q}}^\mathrm {{(UW)}}\) are the Markov operators corresponding to the kernels \({\mathbf {p}}\) and \({\mathbf {q}}^\mathrm {{(UW)}}\), respectively. We now investigate the reversibility of the UGPT kernel. We start with a rather straightforward result.

Proposition 2

Suppose that, for any \(k=1,2,\ldots , K,\) \(p_k\) is \(\mu _k\)-reversible. Then, \({\mathbf {p}}=p_1\times \dots \times p_K\) is reversible with respect to \({\varvec{\mu }}\).

Proof

We prove reversibility by confirming that Eq. (1) holds true. To that end, let \({\varvec{\theta }}\in \varTheta ^K, \ A,B\in {\mathcal {B}}^K\), where A and B tensorize, i.e., \(A\,{:}{=} \prod _{k=1}^K A_k\) and \(B\,{:}{=} \prod _{k=1}^K B_k\), with \(A_1,\ldots , A_K, B_1,\ldots ,B_K \in {\mathcal {B}}(\varTheta )\). Then,

Showing that the previous equality holds for sets A, B that tensorize is indeed sufficient to show that the claim holds for any \(A, B \in {\mathcal {B}}^K\). This follows from Carathéodory’s Extension Theorem applied as in the proof of uniqueness of product measures; see Ash (2000) section 1.3.10, 2.6.3, for details. \(\square \)

We can now prove the reversibility of the chain generated by \({\mathbf {p}} ^\mathrm {(UW)}\).

Proposition 3

(Reversibility of the UGPT chain) Suppose that, for any \(k=1,2,\dots , K,\) \(p_k\) is \(\mu _k\)-reversible. Then, the Markov chain generated by \({\mathbf {p}} ^(UW) \) is \({\varvec{\mu }}\)-reversible.

Proof

It follows from Propositions 1 and 2 that the kernels \({\mathbf {q}}^\mathrm {{(UW)}}\) and \({\mathbf {p}}\) are \({\varvec{\mu }}\)-reversible. Furthermore, since \({\mathbf {p}}^\mathrm {{(UW)}}\) is a palindromic composition of kernels, each of which is reversible with respect to \({\varvec{\mu }}\), then, \({\mathbf {p}}^\mathrm {{(UW)}}\) is reversible with respect to \({\varvec{\mu }}\) Brooks et al. (2011). \(\square \)

The UGPT algorithm proceeds by iteratively applying the kernel \({\mathbf {p}}^\mathrm {{(UW)}}\) to a predefined initial state. In particular, states are updated using the procedure outlined in Algorithm 2.

Remark 2

In practice, one does not need to perform \(|S_K|\) posterior evaluations when computing \(r^\mathrm {(UW)}({\varvec{\theta }}^{n},\cdot ),\) rather “just” K of them. Indeed, since \(\pi _j(\theta ^n_k)\propto \pi (\theta _k)^{T_j},\) \(k,j=1,2,\dots ,K\), we just need to store the values of \(\pi (\theta ^{n}_k),k=1,2,\dots ,K\), for a fixed n, and then permute over the temperature indices.

Let now \({\mathcal {Q}}:\varTheta \mapsto {\mathbb {R}}\) be a quantity of interest. The posterior mean of \({{\mathcal {Q}}}\) , \(\mu ({\mathcal {Q}}) {:}{=} \mu _1({\mathcal {Q}})\) is approximated using \(N \in {\mathbb {N}}\) samples by the following ergodic estimator \(\widehat{{\mathcal {Q}}}_{(\mathrm {UW})}\):

3.3.1 A comment on the pairwise state-dependent PT method of Łącki and Miasojedow (2016)

The work Łącki and Miasojedow (2016) presents a similar state-dependent swapping. We will refer to the method presented therein as Pairwise State Dependent Parallel Tempering (PSDPT). Such a method, however, differs from UGPT from the fact that (i) only pairwise swaps are considered and (ii) it is not rejection free. We summarize such a method for the sake of completeness. Let \(S_{K,\mathrm {pairwise}}\) denote the group of pairwise permutations of \((1,2,\dots ,K).\) Given a current state \({\varvec{\theta }}\in \varTheta ^K\), the PSDPT algorithm samples a pairwise permutation \({\varvec{\theta }}_{\sigma _{i, j}}\in S_{K,\mathrm {pairwise}}\) with probability \(r_{i,j}^\mathrm {(PSDPT)}({\varvec{\theta }},\sigma _{i,j})\) given by

and then accepts this swap with probability

This method is attractive from an implementation point of view in the sense that it promotes pairwise swaps that have a similar energy, and as such, are likely (yet not guaranteed) to get accepted. In contrast, UGPT always accepts the new proposed state, which in turn leads to a larger amount of global moves, thus providing a more efficient algorithm. This is verified on the numerical experiments.

3.4 Weighted generalized parallel tempering

Following the intuition of the continuous-time Infinite Swapping approach of Dupuis et al. (2012); Plattner et al. (2011), we propose a second discrete-time algorithm, which we will refer to as Weighted Generalized Parallel Tempering (WGPT). The idea behind this method is to swap the dynamics of the process, that is, the Markov kernels and temperatures, instead of swapping the states such that any given swap is accepted with probability 1. We will see that the Markov kernel obtained when swapping the dynamics is not invariant with respect to the product measure of interest \({\varvec{\mu }}\); therefore, an importance sampling step is needed when computing posterior expectations.

For a given permutation \(\sigma \in S_K\), we define the swapped Markov kernel \({\mathbf {p}}_\sigma : \varTheta ^K\times {\mathcal {B}}^K\mapsto [0,1]\) and the swapped product posterior measure \({\varvec{\mu }}_\sigma \) (on the measurable space \((\varTheta ^K,{\mathcal {B}}^K)\)) as:

where the swapped posterior measure has a density with respect to \({\varvec{\mu }}_\text {prior}\) given by

Moreover, we define the swapping weights

Note that, in general, \({\varvec{\pi }}_\sigma ({\varvec{\theta }})\ne {\varvec{\pi }}({\varvec{\theta }}_\sigma )\), and as such, \(w_\sigma ({\varvec{\theta }})\ne r^\mathrm {{(UW)}}({\varvec{\theta }},\sigma )\), with \(w_\sigma \) defined as in (12).

Definition 6

We define the Weighted Generalized Parallel Tempering kernel \({\mathbf {p}}^\mathrm {(W)} : \varTheta ^K\times B^K\mapsto [0,1]\) as the following state-dependent, convex combination of kernels:

Thus, the WGPT chain is obtained by iteratively applying \({\mathbf {p}}^{\mathrm {(W)}}\). We show in Proposition 4 that the resulting Markov chain has invariant measure

with \({\tilde{\mu }}=\frac{1}{|S_K|}\sum _\sigma \mu _\sigma ,\) i.e., the average with tensorization. Furthermore, \({\varvec{\mu }}_{\mathrm {W}}\) has a density (w.r.t the prior \({\varvec{\mu }}^0\)) given by

and a similar average and then tensorization representation applies to \({\varvec{\pi }}_{\mathrm {W}}.\) We now proceed to show that \({\mathbf {p}}^{(\mathrm {W})}({\varvec{\theta }},\cdot )\) is \({\varvec{\mu }}_{\mathrm {W}}\)-reversible (hence \({\varvec{\mu }}_{\mathrm {W}}\)-invariant).

Proposition 4

(Reversibility of the WGPT chain) Suppose that, for any \(k=1,2,\dots , K\) \(p_k\) is \(\mu _k\)-reversible. Then, the Markov chain generated by \({\mathbf {p}} ^(W) \) is \({\varvec{\mu }}_\text {W}\)-reversible.

Proof

We show reversibility by showing that (1) holds true. Thus, for \({\varvec{\theta }}\in \varTheta ^K,\ A,B\in {\mathcal {B}}^K\), with \(A{:}{=}A_1\times \dots \times A_K\), \(A_k\in {\mathcal {B}}(\varTheta )\), and with \(B_k\) defined in a similar way, we have that:

From proposition 2, and multiplying and dividing by

we obtain

where once again, in light of Carathéodory’s Extension Theorem, it is sufficient to show that reversibility holds for sets that tensorize. \(\square \)

We remark that the measure \({\varvec{\mu }}_{\mathrm {W}}\) is not of interest per se. However, we can use importance sampling to compute posterior expectations. Let \({\mathcal {Q}}({\varvec{\theta }}){:}{=}{\mathcal {Q}}(\theta _1)\) be a \({\varvec{\mu }}\)-integrable quantity of interest. We can write

The last equality can be justified since \({\varvec{\mu }}_{\mathrm {W}}\) is invariant by permutation of coordinates. Thus, we can define the following (weighted) ergodic estimator \(\widehat{{\mathcal {Q}}}_{(\mathrm {W})} \) of the posterior mean of a quantity of interest \({\mathcal {Q}} \) by

where we have denoted the importance sampling weights by \({\widehat{w}}({\varvec{\theta }},\sigma ){:}{=}\frac{{\varvec{\pi }}({\varvec{\theta }}_\sigma )}{{\varvec{\pi }}_\text {W}({\varvec{\theta }}_\sigma )}=\frac{\mathrm {d}{\varvec{\mu }}}{\mathrm {d}{\varvec{\mu }}_{\mathrm {W}}}({\varvec{\theta }}_\sigma )\) and where N is the number of samples in the chain. Notice that \(w({\varvec{\theta }},\sigma )={\widehat{w}}({\varvec{\theta }},\sigma ^{-1}).\) As a result, the WGPT algorithm produces an estimator based on NK weighted samples, rather than “just” N, at the same computational cost of UGPT. Thus, the previous estimator evaluates the quantity of interest \({\mathcal {Q}}\) not only in the points \({\mathcal {Q}}(\theta _1^{(n)})\), but also in all states of the parallel chains, \({\mathcal {Q}}(\theta ^{(n)}_{\sigma (1)})\) for all \(\sigma \in S_K\), namely \({\mathcal {Q}}(\theta ^{(n)}_k), \ k=1,2,\dots ,K\).

Remark 3

Although it is known that, in some cases, an importance sampling estimator can be negatively affected by the dimensionality of the parameter space \(\varTheta \) (see e.g., Asmussen and Glynn (2007) Remark 1.17 or Owen (AB) Examples 9.1-9.3), we argue that this is not the case for our estimator. Indeed, notice that the importance-sampling weights \({\widehat{w}}({\varvec{\theta }},\sigma )\) are always upper bounded by \(|S_K|\), and do not blow up when the dimension goes to infinity. In Sect. 5.7 we present a numerical example on a high-dimensional problem. The results on that section evidence the robustness of WGPT with respect to the dimension of \(\theta \).

The Weighted Generalized Parallel Tempering procedure is shown in Algorithm 3. To reiterate, we remark that sampling from \({\mathbf {p}}_\sigma ({\varvec{\theta }}^{(n)},\cdot )\) involves a swap of dynamics, i.e., kernels and temperatures.

Just as in Remark 2, one only needs to evaluate the posterior K times (instead of \(|S_K|\)) to compute \(w_{(\cdot )}({\varvec{\theta }}^n)\).

4 Ergodicity of generalized parallel tempering

4.1 Preliminaries

We assume that the chains generated by the MCMC kernels \(p_k\), for \(k=1,\dots ,K\), are aperiodic, \(\mu _k\)-irreducible (Asmussen and Glynn 2007), and have invariant measure \(\mu _k\) on the measurable space \((\varTheta ,{\mathcal {B}}(\varTheta ))\). Let \(r\in [1,\infty )\) and \(\mu \in {\mathcal {M}}(\varTheta )\) be a “reference” probability measure. On a BIP setting, this reference measure is considered to be the posterior. We define the following spaces

Moreover, when \(r=\infty \), we define

Notice that, clearly, \({L^0_r(\varTheta ,\mu )}\subset {L_r(\varTheta ,\mu )}\). In addition we define the spaces of measures

Notice that the definition of \(L_r\)-norm depends on the reference measure \(\mu ,\) and on \(\varTheta \).

A Markov operator \(P:L_r(\varTheta ,\mu )\mapsto L_r(\varTheta ,\mu )\) with invariant measure \(\mu \) is a bounded linear operator whose norm is given by

for \(\ f\in L_r(\varTheta ,\mu ).\) It is well-known (see, e.g., Rudolf (2012)) that any Markov operator P on \(L_r(\varTheta ,\mu )\) with invariant measure \(\mu \) can be understood as a weak contraction in \(L_r(\varTheta ,\mu ),\) i.e., \(\left\| P\right\| _{L_r(\varTheta ,\mu )\mapsto L_r(\varTheta ,\mu )}\le 1\). To quantify the convergence of a Markov chains generated by a Markov operator P, we define the concept of geometric ergodicity. Let \(r\in [1,\infty ]\). A Markov operator P with invariant measure \(\mu \in {\mathcal {M}}(\varTheta )\) is said to be \(L_r(\varTheta ,\mu )\)-geometrically ergodic if for all probability measures \(\nu \in {\mathcal {M}}_r(\varTheta ,\mu )\) there exists an \(\alpha \in (0,1)\) and \(C_\nu <\infty \) such that

A related concept to \(L_2\)-geometric ergodicity is that of \(L_2\)-spectral gap. A Markov operator \(P: {L_2(\varTheta ,\mu )}\mapsto L_2(\varTheta ,\mu )\) with invariant measure \(\mu \in {\mathcal {M}}(\varTheta )\) has an \(L_2(\varTheta ,\mu )\)-spectral gap \(1-\beta >0\), with \(\beta <1\), if the following holds

The next Proposition, whose proof can be found e.g., in Rudolf (2012), relates the existence of an \(L_2\)-spectral gap to the geometric ergodicity of the chain (with \(\beta \le \alpha \), in general).

Proposition 5

Let \(P:L_2(\varTheta ,\mu )\mapsto L_2(\varTheta ,\mu )\) be a \(\mu \) reversible Markov transition operator. The existence of an \(L_2(\varTheta ,\mu )\)-spectral gap implies \(L_r(\varTheta ,\mu )\)-geometric ergodicity for any \(r\in [1,\infty ]\).

Proof

The previous claim is shown in Rudolf (2012) Proposition 3.17 and Appendix A.4. It is also shown in Rudolf (2012) that, in general, \(\beta \le \alpha \), with \(\alpha ,\beta \) given as in Eqs. (14) and (15). \(\square \)

Our path to prove ergodicity of the GPT algorithms will be to show the existence of an \(L_2\)-spectral gap.

4.2 Geometric ergodicity and \(L_2\)-spectral gap for GPT

The main results of this section are presented in Theorems 1 and 2, which show the existence of an \(L_2\)-spectral gap for both the UGPT and WGPT algorithms, respectively.

We begin with the definition of overlap between two probability measures. Such a concept will later be used to bound the spectral gap of the GPT algorithms.

Definition 7

(Density overlap) Let \(\mu _k,\mu _j\) be two probability measures on the measurable space \((\varTheta ,{\mathcal {B}}(\varTheta ))\), each having respective densities \(\pi _k(\theta ),\pi _j(\theta ), \ \theta \in \varTheta ,\) with respect to some common reference measure \(\nu _\varTheta \) also on \((\varTheta ,{\mathcal {B}}(\varTheta ))\). We define the overlap between \( \pi _k(\theta )\) and \(\pi _j(\theta )\) as

An analogous definition holds for \({\varvec{\pi }}_\sigma ,{\varvec{\pi }}_\rho \), with \(\rho ,\sigma \in S_K\).

Assumption 1

For \(k=1,\dots ,K\), let \(\mu _k\in {\mathcal {M}}_1(\varTheta ,\mu _\mathrm {pr})\) be given as in (5), \(p_k:\varTheta \times {\mathcal {B}}(\varTheta )\mapsto [0,1]\) be the Markov kernel associated to the \(k^\text {th}\) dynamics and let \(P_k:L_r(\varTheta ,\mu _k)\mapsto L_r(\varTheta ,\mu _k)\) be its corresponding \(\mu _k\) invariant Markov operator. In addition, for \(\sigma ,\rho \in S_K\), define the measures \({\varvec{\mu }}_\sigma ,{\varvec{\mu }}_\rho \in {\mathcal {M}}(\varTheta ^K)\) as in Eq. (6). Throughout this work it is assumed that:

-

C1

The Markov kernel \(p_k\) is \(\mu _k\)-reversible.

-

C2

The Markov operator \(P_k\) has an \(L_2(\varTheta ,\mu _k)\) spectral gap.

-

C3

For any \(\sigma ,\rho \in S_K\), \(\varLambda _{\sigma ,\rho }{:}{=}\eta _{{\varvec{\mu }}_\mathrm {pr}}({\varvec{\pi }}_\sigma ,{\varvec{\pi }}_\rho )>0,\) with \({\varvec{\pi }}_\sigma ,{\varvec{\pi }}_\rho \) defined as in (11).

These assumptions are relatively mild. In particular, C1 and C2 are known to hold for many commonly-used Markov transition kernels, such as RWM, Metropolis-adjusted Langevin Algorithm, Hamiltonian Monte Carlo, (generalized) preconditioned Crank-Nicolson, among others, under mild regularity conditions on \(\pi \)Asmussen and Glynn (2007); Hairer et al. (2014). Assumption C3 holds true given the construction of the product measures in Sect. 3.

We now present an auxiliary result that we will use to bound the spectral gap of both the Weighted and Unweighted GPT algorithms.

Proposition 6

Suppose that Assumption 1 holds and let \({\mathbf {P}}{:}{=}\bigotimes _{k=1}^KP_k: L_2(\varTheta ^K,{\varvec{\mu }})\mapsto L_2(\varTheta ^K,{\varvec{\mu }}),\) with invariant measure \({\varvec{\mu }}=\mu _1\times \dots \times \mu _K\). Then, \({\mathbf {P}}\) has an \(L_2(\varTheta ^K,{\varvec{\mu }})\)-spectral gap, i.e., \(\left\| {\mathbf {P}} \right\| _{L^0_2(\varTheta ^K,{\varvec{\mu }})\mapsto L^0_2(\varTheta ^K,{\varvec{\mu }})}<1\). Moreover, the Markov chain obtained from \({\mathbf {P}}\) is \(L_r\) geometrically ergodic, for any \(r\in [1,\infty ]\).

Proof

We limit ourselves to the case \(K=2\), since the case for \(K>2\) follows by induction. Denote by \(I:L_2(\varTheta ,\mu _k)\mapsto L_2(\varTheta ,\mu _k), k=1,2\) the identity Markov transition operator, and let \(f\in L_2(\varTheta ^2,{\varvec{\mu }}).\) Notice that f admits a spectral representation in \(L_2(\varTheta ^2,{\varvec{\mu }})\) given by \(f({\varvec{\theta }})=\sum _{k,j}\phi _k(\theta _1)\psi _j(\theta _2)c_{k,j}\), with \(c_{k,j}\in \mathbb {R}\), and where \(\{ \phi _k \}_{i\in {\mathbb {N}}}\) is a complete orthonormal basis (CONB) of \(L_2(\varTheta ,\mu _1)\) and \(\{\psi _j\}_{j\in {\mathbb {N}}}\) is a CONB of \(L_2(\varTheta ,\mu _2)\), so that \(\{\phi _k\otimes \psi _j\}_{k,j\in {\mathbb {N}}}\) is a CONB of \(L_2(\varTheta ^2,{\varvec{\mu }})\). Moreover, we assume that \(\phi _0=\psi _0=1\), and write, for notational simplicity \(\left\| P_1 \right\| =\left\| P_1\right\| _{L_2(\varTheta ,\mu _1)\mapsto L_2(\varTheta ,\mu _1)}\), and \(\left\| {P_2}\right\| =\left\| P_2\right\| _{L_2(\varTheta ,\mu _2)\mapsto L_2(\varTheta ,\mu _2)}\). Lastly, denote \(f_0=f-c_{0,0}\), so that \(f_0\in L_2^0(\varTheta ^2,{\varvec{\mu }})\). Notice that

Splitting the sum, we get from the orthonormality of the basis that:

Proceeding similarly, we can obtain an equivalent bound for \(\left\| (I\otimes P_2)f_0\right\| _{L_2(\varTheta ^2,{\varvec{\mu }})} ^2\). We are now ready to bound \(\left\| {\mathbf {P}}\right\| ^2_{L_2(\varTheta ^2,{\varvec{\mu }})\mapsto L_2(\varTheta ^2,{\varvec{\mu }})}\):

Assuming without loss of generality that \(\left\| P_1\right\| \ge \left\| P_2\right\| \), we can use the inequality above to bound

Thus, we have that

The previous result can easily be extended to \(K>2\). Lastly, \(L_r(\varTheta ^K,{\varvec{\mu }})\)-geometric ergodicity \(\forall r\in [1,\infty ]\) follows from proposition 5. \(\square \)

We can use the previous result to prove the geometric ergodicity of the UGPT algorithm:

Theorem 1

(Ergodicity of UGPT ) Suppose Assumption 1 holds and denote by \({\varvec{\mu }}\) the invariant measure of the UGPT Markov operator \({\mathbf {P}}^{(UW) }\). Then, \({\mathbf {P}}^{(UW) }\) has an \(L_2(\varTheta ^K,{\varvec{\mu }})\)-spectral gap. Moreover, the chain generated by \({\mathbf {P}}^{(UW) }\) is \(L_r(\varTheta ^K,{\varvec{\mu }})\)-geometrically ergodic for any \(r\in [1,\infty ]\).

Proof

Recall that \({\mathbf {P}}^\mathrm {(UW)}{:}{=}{\mathbf {Q}}^\mathrm {(UW)} {\mathbf {P}} {\mathbf {Q}}^\mathrm {(UW)}\). From the definition of operator norm, we have that

where the previous line follows from Proposition 6 and the fact that \({\mathbf {Q}}^{\mathrm {(UW)}}\) is a weak contraction in \(L_2(\varTheta ^K,{\varvec{\mu }})\) (see, e.g., Baxter and Rosenthal (1995) Proposition 1). Lastly, \(L_r(\varTheta ^K,{\varvec{\mu }})\)-geometric ergodicity \(\forall r\in [1,\infty ]\) follows from Proposition 5 and the fact that \({\mathbf {P}}^\mathrm {(UW)}\) is \({\varvec{\mu }}\)-reversible by Proposition 3. \(\square \)

We now turn to proving geometric ergodicity for the WGPT algorithm. We begin with an auxiliary result, lower-bounding the variance of a \({\varvec{\mu }}_{\mathrm {W}}\)-integrable functional \(f\in L_2(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})\).

Proposition 7

Let \(f\in L^0_2(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})\) be a \({\varvec{\mu }}_{\mathrm {W}}\)-integrable function such that \(\left\| f \right\| _{L_2(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})}=1,\) and denote by \(\mathbb {V}_{{\varvec{\mu }}_{\mathrm {W}}}[f]\), \(\mathbb {V}_{{\varvec{\mu }}_\sigma }[f]\) the variance of f with respect to \({\varvec{\mu }}_{\mathrm {W}},{\varvec{\mu }}_\sigma \), respectively with \(\sigma \in S_K\). In addition, suppose Assumption 1 holds. Then, it can be shown that

with \(\varLambda _m=\underset{\sigma ,\rho \in S_K}{\min }\{\varLambda _{\sigma ,\rho }\}\) and \(\varLambda _{\sigma ,\rho }\) as in Assumption C3.

Proof

The proof is technical and tedious and is presented in Appendix A. \(\square \)

We are finally able to prove the ergodicity of the WGPT algorithm.

Theorem 2

(Ergodicity of WGPT) Suppose Assumption 1 holds for some \(r\in [1,\infty ]\) and denote by \({\varvec{\mu }}_W \) the invariant measure of the WGPT Markov operator \({\mathbf {P}}^{(W) }\). Then, \({\mathbf {P}}^{(W) }\) has an \(L_2(\varTheta ^K,{\varvec{\mu }}_W )\)-spectral gap. Moreover, the chain generated by \({\mathbf {P}}^{(W) }\) is \(L_r(\varTheta ^K,{\varvec{\mu }}_W )\) geometrically ergodic for any \(r\in [1,\infty ]\).

Proof

Let \({\mathcal {L}}{:}{=} \{f\in L_2^0(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}}) : \left\| f\right\| _{L_2^0(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})}=1\}\), and, for notational clarity, write

Then, from the definition of operator norm,

where the second to last line follows from the convexity of \((\cdot )^2\) and the last line follows from the definition of \(w_\sigma \) and \({\varvec{\mu }}_{\mathrm {W}}.\) Now, let \(\bar{f}_\sigma {:}{=}{\varvec{\mu }}_\sigma (f)\). Notice that we have

Thus, multiplying and dividing I by

we obtain from the definition of \(\left\| {\mathbf {P}}_\sigma \right\| _{L^0_2}^2\) that:

Replacing Eq. (19) into Eq. (17), we get

Thus, \(\mathbf {{{\varvec{P}}^\text {(w)}}}\) has an \(L_2(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})\) spectral gap. Once again, \(L_r(\varTheta ^K,{\varvec{\mu }}_{\mathrm {W}})\)-geometric ergodicity (with \(r\in [1,\infty ])\) follows from Proposition 5 and the fact that \({\mathbf {P}}^\mathrm {(W)}\) is \({\varvec{\mu }}_{\mathrm {W}}\)-reversible by Proposition 4. \(\square \)

4.2.1 Discussion and comparison to similar theoretical result

Theorems 1 and 2 state the existence of an \(L_2\)-spectral gap, hence \(L_r\)-geometric ergodicity for both the UGPT and the WGPT algorithm. Their proof provides also a quantification of the \(L_2\)-spectral gap in terms of the \(L_2\)-spectral gap of each individual Markov operator \(P_k\). Such a bound is, however, not satisfactory as it does not use any information on the temperature and it just states that the \(L_2\)-spectral gap of the UWPT and WGPT chain is not worse that the smallest \(L_2\)-spectral gap among the individual chains (without swapping). This result is not sharp, as it can be evidenced in the numerical section, where a substantial improvement in convergence is achieved by our methods.

Convergence results for the standard parallel tempering algorithm have been obtained in the works (Miasojedow et al. 2013) and (Woodard et al. 2009). In particular, the work (Miasojedow et al. 2013) has proved geometric ergodicity for the pairwise parallel tempering algorithm using the standard drift condition construction of Meyn and Tweedie (2012). It is unclear from that work which convergence rate is obtained for the whole algorithm. In comparison, our results are given in terms of spectral gaps. On the other hand, the work Woodard et al. (2009) presents conditions for rapid mixing of a particular type of parallel tempering algorithm, where the transition kernel is to be understood as a convex combination of such kernels, as opposed to our case, where it is to be understood as a tensorization. Their obtained results provide, for their setting, a better convergence rate that the one we obtained for the UGPT. We believe that their result can be extended to the UGPT algorithm, and this will be the focus of future work. On the other hand, the use of the ideas in Woodard et al. (2009) for the WGPT algorithm seems more problematic.

5 Numerical experiments

We now present four academic examples to illustrate the efficiency of both GPT algorithms discussed herein and compare them to the more traditional random walk Metropolis and standard PT algorithms. Notice that we compare the different algorithms in their simplest version that uses random walk Metropolis as a base transition kernel. The only exception is in Sect. 5.7, which presents a high-dimensional BIP for which the preconditioned Crank-Nicolson Cotter et al. (2013) is used as the base method in all algorithms instead of RWM. More advanced samplers, such as Adaptive metropolis (Haario et al. 2006, 2001), or other transition kernels, could be used as well to replace RWM or pCN. Experiments 5.3, 5.4 and 5.5 were run in a Dell (R) Precision (TM) T3620 workstation with Intel(R) Core(TM) i7-7700 CPU with 32 GB of RAM. Numerical simulations in Sects. 5.3 and 5.5 were run on a single thread, while the numerical simulations in Sect. 5.4 were run on an embarrassingly parallel fashion over 8 threads using the Message Passing Interface (MPI) and the Python package MPI4py (Dalcín et al. 2005). Lastly, Experiments 5.6 and 5.7 were run on the Fidis cluster of the EPFL. The scripts used to generate the results presented in this section were written in Python 3.6, and can be found in https://doi.org/10.5281/zenodo.3700048.

5.1 Implementation remarks

In most Bayesian inverse problems, particularly those dealing with large-scale computational models, the computational cost is dominated by the evaluation of the forward operator, which can be, for example, the numerical approximation of a possibly non-linear partial differential equation. In the case where all possible permutations are considered (i.e., \(S_K={\mathscr {S}}_K\)), there are K! possible permutations of the states, the computation of the swapping ratio in the GPT algorithms can become prohibitively expensive if one is to evaluate K! forward models, even for moderate values of K. This problem can be circumvented by storing the values \(\pi (\theta ^{(n)}_k)\), \(k=1,\dots ,K,\) \(n=1,\dots N\), since the swapping ratio for GPT consists of permutations of these values, divided by the temperature parameters. Thus, “only” K forward model evaluations need to be computed at each step and the swapping ratio can be computed at negligible cost for moderate values of K.

There is, however, a clear trade-off between the choice of K (which has a direct impact on the efficiency of the method), and the computational cost associated to (G)PT. Intuitively, a large K would provide a better mixing, however, it requires a larger number of forward model evaluations, which tends to be costly. We remark that such a trade-off between efficiency and number of function evaluations is also present in some advanced MCMC methods, such as Hamiltonian Monte Carlo, where one needs to choose a number of time steps for the time integration (see, e.g., Beskos et al. (2017)). Furthermore, there is an additional constraint when choosing \(S_K={\mathscr {S}}_K\), and it is the permutation cost associated to computing \(r^\mathrm {{(UW)}}({\varvec{\theta }},\sigma )\) and \(w_\sigma ({\varvec{\theta }})\). In particular, the computation of either of those quantities has a complexity of K! thus, this cost will eventually surpass the cost of evaluating the forward model K times. This is illustrated in Fig. 1, where we plot the cost per sample of two different posteriors vs K. These posteriors are taken from the numerical examples in Sects. 5.5 and 5.7. The posterior in Sect. 5.5 is rather inexpensive to evaluate, since one can compute the forward map \({\mathcal {F}}\) analytically (the difficulty associated to sampling from that posterior comes from its high multi-modality). On the contrary, evaluating the posterior in Sect. 5.7 requires numerically approximating the solution to a time-dependent, second-order PDE, and as such, evaluating such a posterior is costly. As we can see for \(K\le 6\), the computational cost in both cases is dominated by the forward model evaluation. Notice that for \(K\le 9\), the cost per sample from posterior (27) is dominated by the evaluation of the forward model.

Thus, for high values of K, it is advisable to only consider the union of properly chosen semi-groups A, B of \({\mathscr {S}}_K,\) with \( A\cap B\ne \emptyset ,\) such that A, B generates \({\mathscr {S}}_K\) (i.e., if the smallest semi-groups that contains A and B is \({\mathscr {S}}_K\) itself), and \(|A\cup B|<|{\mathscr {S}}_K|=K!,\) which is referred to as partial Infinite Swapping in the continuous case (Dupuis et al. 2012). One particular way of choosing A and B is to consider, for example, A to be the set of permutations that only permute the indices associated with relatively low temperatures while leaving the other indices unchanged, and B as the set of permutations for the indices of relatively high temperatures, while leaving the other indices unchanged. Intuitively, swaps between temperatures that are, in a sense, “close” to each other tend to be chosen with a higher probability. We refer the reader to Dupuis et al. (2012) section 6.2 for a further discussion on this approach in the continuous-time setting. One additional idea would be to consider swapping schemes that, for example, only permute states between \(\mu _i\) and \(\mu _{i+1}, \mu _{i+2}, \dots , \mu _{i+\ell }\) for some user-defined \(\ell \ge 1\) and any given \(i=1,2,\dots , K-1\). The intuition behind this choice also being that swaps between posteriors that are at close temperatures are more likely to occur than swaps between posteriors with a high temperature difference. We intend to explore this further in depth in future work.

We reiterate that the total number of temperatures K depends heavily on the problem and the computational budget available (Doll et al. 2012; Van Der Sluys et al. 2008; Yu et al. 2016) For the experiments considered in the work we chose \(K=4\) or \(K=5\), which provide an acceptable compromise between acceleration and cost.

5.2 Experimental setup

We now present an experimental setup common to all the numerical examples presented in the following subsections. In particular, all the experiments presented in this work utilize a base method given by either RWM (for Experiments 5.3 through 5.6) or pCN (used in Experiment 5.7) for the Markov transition kernels p. Furthermore, we take \(S_K={\mathscr {S}}_K\) for all experiments, where \(K=5\) for Experiment 5.5 and \(K=4\) for the other 4 experiments. In addition, we follow the rule of thumb of Earl and Deem (2005) for the choice of temperatures, setting, for each experiment, \(T_{k}=a^{k-1},\) \(k=1,\ldots ,K\), for some positive constant \(a>1\). The particular choice of a is problem-dependent and it is generally chosen so that \(\mu _K\) becomes sufficiently simple to explore. For each experiment we implement 5 MCMC algorithms to sample from a given posterior \(\mu =\mu _1\), namely, the base (untempered) method (either RWM or pCN), and such a method combined with the standard PT algorithm (PT) with \(N_s=1,\) the PSDPT algorithm of Łącki and Miasojedow (2016), and both versions of GPT. For our setting, the tempered algorithms have a cost (in terms of number of likelihood evaluations) that is K times larger than the base method. Thus, to obtain a fair comparison across all algorithms, we run the chain for the base method K times longer. Lastly, given some problem-dependent quantity of interest \({\mathcal {Q}}\), we assess the efficiency of our proposed algorithms to compute the posterior expectation of \({\mathcal {Q}}\) by comparing the mean square error (Experiments 5.3–5.5), for which the exact value of \(\mathbb {E}_{\mu }[{\mathcal {Q}}]\) is known, or the variance (Experiments 5.6–5.7) of the ergodic estimator \(\hat{{\mathcal {Q}}}\) obtained over \(N_\text {runs}\) independent runs of each algorithm.

5.3 Density concentrated over a quarter circle-shaped manifold

Let \(\mu \) be a probability measure that has density \(\pi \) with respect to the uniform Lebesgue measure on the unit square \(\mu _\mathrm {pr}={\mathcal {U}}([0,1]^2)\) given by

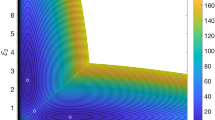

where \(\theta =(\theta _1,\theta _2),\) Z is the normalization constant, and \({\mathbf {1}}_{[0,1]^2}\) is the indicator function over the unit square. We remark that this example is not of particular interest per se; however, it can be used to illustrate some of the advantages of the algorithms discussed herein. The difficulty of sampling from such a distribution comes from the fact that its density is concentrated over a quarter circle-shaped manifold, as can be seen on the left-most plot in Fig. 2. This in turn will imply that a single level RWM chain would need to take very small steps in order to properly explore such density.

We aim at estimating \(\hat{{\mathcal {Q}}}_i=\mathbb {E}_{\mu }[\theta _i]\approx {\hat{\theta _i}}\), for \(i=1,2\). For the tempered algorithms (PT, PSDPT, UGPT, and WGPT), we consider \(K=4\) temperatures and choose \(T_4=5000\), so that the tempered density \(\pi _4\) becomes sufficiently simple to explore the target distribution. This gives \(T_1=1, T_2=17.1, T_3=292.4, T_4=5000\).

We compare the quality of our algorithms by examining the variance of the estimators \({\hat{\theta _i}},\) \(i=1,2\) computed over \(N_\text {runs}=100\) independent MCMC runs of each algorithm. For the tempered algorithms, each estimator is obtained by running the inversion experiment for \(N=25,000\) samples per run, discarding the first 20% of the samples (5000) as a burn-in. Accordingly, we run the single-chain random walk Metropolis algorithm for \(N_\mathrm {RWM}=K N=100, 000\) iterations, per run, and discard the first 20% of the samples obtained with the RWM algorithm (20,000) as a burn-in.

The untempered RWM algorithm uses Gaussian proposals with covariance matrix \(\Sigma _\mathrm {RWM}=\rho _1^2 I_{2\times 2},\) where \(I_{2\times 2}\) is the identity matrix in \(\mathbb {R}^{2\times 2}\), and \(\rho ^2_1=0.022\) is chosen in order to obtain an acceptance rate of around 0.23. For the tempered algorithms (i.e., PT, PSDPT, and both versions of GPT), we use \(K=4\) RWM kernels \(p_k\), \(k=1,2,3,4\), with proposal density \(q_{\mathrm {prop},k}(\theta ^{(n)}_k,\cdot )={\mathcal {N}}(\theta _k^{(n)},\rho _k^2I_{2\times 2})\), where \(\rho _k\) is shown in Table 1. This choice of \(\rho _k\) gives an acceptance rate for each chain of around 0.23. Notice that \(\rho _1\) corresponds to the “step-size” of the single-temperature RWM algorithm.

Experimental results for the ergodic run are shown in Table 2. We can see how both GPT algorithms provide a gain over RWM, PT and PSDPT algorithms, with the WGPT algorithm providing the largest gain. Scatter plots of the samples obtained with each method are presented in Fig. 3. Here, the subplot titled “WGPT” (bottom row, middle) corresponds to weighted samples from \({\varvec{\mu }}_{\text {W}}\), with weight \({\hat{w}}\) as in (13), while the one titled “WGPT (inv)” (bottom row, right) corresponds to samples from \({\varvec{\mu }}_{\mathrm {W}}\) without any post-processing. Notice how the samples from the latter concentrates over a thicker manifold, which in turn makes the target density easier to explore when using state-dependent Markov transition kernels.

5.4 Multiple source elliptic BIP

We now consider a slightly more challenging problem, for which we try to recover the probability distribution of the location of a source term in a Poisson equation (Eq. 20), based on some noisy measured data. Let \((\varTheta ,{\mathcal {B}}(\varTheta ),\mu _\mathrm {pr})\) be the measure space, set \(\varTheta ={\bar{D}}{:}{=}[0,1]^2\), with Lebesgue (uniform) measure \(\mu _\mathrm {pr},\) and consider the following Poisson’s equation with homogeneous boundary conditions:

Such equation can model, for example, the electrostatic potential \(u{:}{=}u( x,\theta )\) generated by a charge density \(f( x,\theta )\) depending on an uncertain location parameter \(\theta \in \varTheta \). Data y is recorded on an array of \(64\times 64\) equally-spaced points in D by solving (20) with a forcing term given by

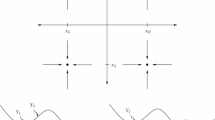

where the true source locations \(s^{(i)}, \ i=1,2,3,4,\) are given by \(s^{(1)}=(0.2,0.2),\ s^{(2)}=(0.2,0.8),\ s^{(3)}=(0.8,0.2),\) and \(s^{(4)}=(0.8,0.8)\). Such data is assumed to be polluted by an additive Gaussian noise with distribution \({\mathcal {N}}(0,\eta ^2 I_{64\times 64})\), with \(\eta =3.2\times 10^{-6}\), (which corresponds to a 1% noise) and where \(I_{64\times 64}\) is the 64-dimensional identity matrix. Thus, we set \((Y,\left\| \cdot \right\| _Y)=(\mathbb {R}^{64\times 64},\left\| \cdot \right\| )\), with \(\left\| A \right\| =(64\eta )^{-2}\left\| A \right\| ^2_F \), for some arbitrary matrix \(A\in \mathbb {R}^{64\times 64},\) where \(\left\| \cdot \right\| _F\) is the Frobenius norm. We assume a misspecified model where we only consider a single source in Eq. (21). That, is, we construct our forward operator \({\mathcal {F}}: \varTheta \mapsto Y\) by solving (20) with a source term given by

In this particular setting, this leads to a posterior distribution with four modes since the prior density is uniform in the domain and the likelihood has a local maximum whenever \((\theta _1,\theta _2)=(s_1^{(i)},s_2^{(i)}),\ i=1,2,3,4\). The Bayesian inverse problem at hand can be understood as sampling from the posterior measure \(\mu \), which has a density with respect to the prior \(\mu _\mathrm {pr}={\mathcal {U}}({\bar{D}})\) given by

for some (intractable) normalization constant Z as in (4). We remark that the solution to (20) with a forcing term of the form of (22) is approximated using a second-order accurate finite difference approximation with grid-size \(h=1/64\) on each spatial component.

The difficulty in sampling from the current BIP arises from the fact that the resulting posterior \(\mu \) is multi-modal and the number of modes is not known apriori (see Fig. 4).

We follow a similar experimental setup to the previous example, and aim at estimating \(\hat{{\mathcal {Q}}}_i=\mathbb {E}_{\mu }[\theta _i]\approx {\hat{\theta _i}}\), for \(i=1,2\). We use \(K=4\) temperatures and \(N_\text {runs}=100\). For the PT, PSDPT and GPT algorithms, four different temperatures are used, with \(T_1=1,\ \ T_2=7.36,\ \ T_3=54.28,\) and \(T_4=400\). For each run, we obtain \(N=25,000\) samples with the PT, PSDPT, and both GPT algorithms, and \(N=100,000\) samples with RWM, discarding the first 20% of the samples in both cases (5000, 20000, respectively) as a burn-in. On each of the tempered chains, we use RWM proposals, with step-sizes shown in Table 3. This choice of step size provides an acceptance rate of about 0.24 across all tempered chains and all tempered algorithms. For the single-temperature RWM run, we choose a larger step size (\(\rho _\mathrm {RWM}=0.16\)) so that the RWM algorithm is able to explore the whole distribution. Such a choice, however, provides a smaller acceptance rate of about 0.01 for the single-chain RWM.

Experimental results are shown in Table 4. Once again, we can see how both GPT algorithms provide a gain over RWM and both variations of the PT algorithm, with the WGPT algorithm providing a larger gain. Scatter-plots of the obtained samples are shown in Fig. 4.

Scatterplots of the samples from \(\mu \) obtained with different algorithms on a single run. Top, from left to right: random walk Metropolis, PT and PSDPT. Bottom, from left to right: UGPT, WGPT (after re-weighting the samples), and WGPT, before re-weighting the samples. As we can see, WGPT (before re-weighting) is able to “connect” the parameter space

5.5 1D wave source inversion

We consider a small variation of example 5.1 in Motamed and Appelo (2019). Let \((\varTheta ,{\mathcal {B}}(\varTheta ),\mu _\mathrm {pr})\) be a measure space, with \(\varTheta =[-5,5]\) and uniform (Lebesgue) measure \(\mu _\mathrm {pr}\), and let \(I=(0,T]\) be a time interval. Consider the following Cauchy problem for the 1D wave equation:

Here, \(h(x,\theta )\) acts as a source term generating a initial wave pulse. Notice that Eq. (23) can be easily solved using d’Alembert’s formula, namely

Synthetic data y is generated by solving Eq. (23) with initial data

with \(\theta _1=-3,\theta _2=3\) and observed at \(N_R=11\) equally-spaced receiver locations between \(R_1=-5\) and \(R_2=5\) on \(N_T=1000\) time instants between \(t=0\) and \(T=5\). The signal recorded by each receiver is assumed to be polluted by additive Gaussian noise \({\mathcal {N}}(0,\eta ^2 I_{1000\times 1000})\), with \(\eta =0.01\), which corresponds to roughly 1% noise. We set \((Y,\left\| \right\| _{Y})=(\mathbb {R}^{11\times 1000}, \left\| \cdot \right\| _{\Sigma }), \) with

\(A\in \mathbb {R}^{11\times 1000}\). Once again, we assume a misspecified model where we construct our forward operator \({\mathcal {F}}: \varTheta \mapsto Y\) by solving (23) with a source term given by

The Bayesian inverse problem at hand can be understood as sampling from the posterior measure \(\mu \), which has a density with respect to the prior \(\mu _\mathrm {pr}={\mathcal {U}}([-5,5])\) given by

for some (intractable) normalization constant Z as in (4). The difficulty in solving this BIP comes from the high multi-modality of the potential \(\varPhi (\theta ;y)\), as it can be seen in Fig. 6. This, shape of \(\varPhi (\theta ;y)\) makes the posterior difficult to explore using local proposals.

In this case, we consider \(K=5\), and set \(T_1=1,\ \ T_2=5,\ \ T_3=25,\) \(T_4=125\) and \(T_5=625\). Notice that from Fig. 1, the computational cost per sample is dominated by the evaluation of (24) for values of \(K\le 6\). Once again, we obtain \(N=25,000\) samples with the PT, PSDPT, and both GPT algorithms, and \(N=125,000\) samples with RWM, discarding the first 20% of the samples in both cases (5000, 25000, respectively) as a burn-in. On each of the tempered chains, we use RWM proposals, with step-sizes shown in Table 5. This choice of step size provides an acceptance rate of about 0.4 across all tempered chains and all tempered algorithms. The choice of step-size for the RWM algorithm is done in such a way that it can “jump” modes, which are at distance of roughly 1/2.

We consider \({\mathcal {Q}}=\theta \) as a quantity of interest. Experimental results are shown in Table 6. Once again, we can see how both GPT algorithms provide a gain over RWM and both variations of the PT algorithm, with the WGPT algorithm providing the largest gain. Notice that, given the high muti-modality of the posterior at hand, the simple RWM algorithm is not well-suited for this type of distribution, as it can be seen from its large variance; this suggests that the RWM usually gets “stuck” at one mode of the posterior. Notice that, intuitively, due to the symmetric nature of the potential, one would expect the true mean of \(\theta \) to be close to 0. This value was computed by means of numerical integration and is given by \(\mathbb {E}_\mu [\theta ]=0.08211\).

5.6 Acoustic wave source inversion

We consider a more challenging problem, for which we try to recover the probability distribution of the spatial location of a (point-like) source term, together with the material properties of the medium, on an acoustic wave equation (Eq. 25), based on some noisy measured data. We begin by describing the mathematical model of such wave phenomena. Let \((\varTheta ,{\mathcal {B}}(\varTheta ),\mu _\mathrm {pr})\) be the measure space , with Lebesgue (uniform) measure \(\mu _\mathrm {pr}\), set \({\bar{D}}{:}{=}[0,3]\times [0,2],\) \(\partial D={\bar{\varGamma }}_{N}\cup {\bar{\varGamma }}_{\mathrm {Abs}}, \mathring{\varGamma _{\mathrm {N}}}\cap \mathring{\varGamma _{\mathrm {Abs}}}=0,\) \(|{\varGamma _{\mathrm {N}}}|, |{\varGamma _{\mathrm {Abs}}}|>0,\) and define \(\varTheta =D\times \varTheta _\alpha \times \varTheta _\beta ,\) where \(\varTheta _\alpha =[6,14]\), \(\varTheta _\beta =[4500,5500]\). Here, we are considering a rectangular spatial domain D, with the top boundary denoted by \({\varGamma _{\mathrm {N}}}\) and the side and bottom boundaries denoted by \({\varGamma _{\mathrm {Abs}}}\). Lastly, let \(\theta {:}{=}(s_1,s_2,\alpha ,\beta )\in \varTheta \). Consider the following acoustic wave equation with absorbing boundary conditions:

where \(u=u(x,t,\theta )\), and \(f=f(x,t,\theta )\). Here the boundary condition on the top boundary \({\varGamma _{\mathrm {N}}}\) corresponds to a Neumann boundary condition, while the boundary condition on \({\varGamma _{\mathrm {Abs}}}\) corresponds to the so-called absorbing boundary condition, a type of artificial boundary condition used to minimize reflection of wave hitting the boundary. Data \(y\in Y\) is obtained by solving Eq. (25) with a force term given by