Abstract

Despite considerable progress in understanding the journal evaluation system in China, empirical evidence remains limited regarding the impact of changes in journal rank (CJR) on scientific output. By employing the difference-in-differences (DID) framework, we exploit panel data from 2015 to 2019 to examine the effect of changes in journal ranks on the number of publications by Chinese researchers. Our analysis involves comparing two groups—journals that experienced a change in ranking and journals that did not—before and after the change in ranking. Our analysis reveals a statistically significant negative effect. The results suggest that CJR has led to a 14.81% decrease in the number of publications per 100, relative to the sample mean value. The observed negative impact is consistently confirmed through robustness tests that involve excluding journals that do not adhere to best practices, removing retracted publications from the calculation of publication numbers, and randomly selecting journals with changed ranks for estimation. We also observed that CJR exhibits a widespread but unequal effect. The negative effect is particularly pronounced in the academic domains of life sciences and physical sciences, in journals that experience declines in rank, and in less-prestigious universities. We contribute to the literature on how changes in journal rankings affect researchers’ academic behavior and the determinants of scholarly publication productivity.

Introduction

For decades, China has demonstrated a steadfast dedication to promoting scientific innovation, positioning itself as one of the leading countries in terms of international scientific publications (Brainard & Normile, 2022). To guide researchers’ publishing behavior and establish criteria for academic impact evaluation, various journal lists and indexing services have been implemented (Kulczycki et al., 2022). Among these, the CAS Journal Ranking, an annual journal ranking system released by National Science Library of Chinese Academy of Sciences (CAS), holds a prominent position. This journal ranking has been widely embraced by universities and academic institutions to evaluate scientific endeavors and assess academic achievements. Though considerable progress has been made in understanding the journal evaluation system in China (Huang et al., 2021), there is still a lack of empirical evidence regarding the impact of the CAS Journal Ranking on researchers’ scientific output.

Understanding both the scope and the nature of the journal ranking system’s impact is crucial for two main reasons. First, within China’s academic ecosystem, the CAS Journal Ranking continues to hold immense importance in determining the allocation of national-level research funding and the academic promotion of researchers. Second, the CAS Journal Ranking classifies journals into hierarchical tiers that undergo annual variations, but it remains uncertain whether this system effectively guides researchers in making informed submission decisions. Thus, it is crucial to delve deeply into an evaluation of the impact caused by changes in journal rank (CJR), as documented in the CAS Journal Ranking, on scientific output. This endeavor would offer valuable empirical evidence to the academic community, facilitating the efficient assessment of research outputs.

To accomplish this objective, this analysis involves the construction of a unique dataset comprising 1024 journals from the CAS Journal Ranking spanning the years 2015–2019. The data include highly detailed information about each journal: not only its impact factor, publication cycle, and publisher, but also CJR potentially affecting researchers’ scientific output. For each journal and year, we measure the scientific output of Chinese researchers by considering the number of publications, which is then divided by 100 for ease of scaling. This comprehensive panel dataset enables us to examine the scientific output of Chinese scholars before and after the implementation of CJR. To conduct our analysis, we employ the difference-in-differences (DID) method (Goodman-Bacon, 2021; Sun et al., 2024). This approach involves comparing the publication output performance of Chinese researchers in journals before and after documented CJR with that of journals that did not undergo any rank changes during the same period.

In detail, we have formulated four research questions:

RQ1: Is there a statistically significant effect of changes in journal rank on the publication output of Chinese researchers?

RQ2: Does the effect vary across different academic fields?

RQ3: Does the effect vary in journals experiencing different changing trends?

RQ4: Does the effect vary for authors in different institutional affiliations?

This study fits into the existing literature on how policy implementation affects the academic behavior of researchers (Franzoni et al., 2011). Previous research has extensively examined the academic incentives that drive researchers (Hicks, 2012). Śpiewanowski and Talavera (2021) studied the publication behavior of a group of UK-based scholars after the 2015 ABS journal ranking change and found that they tended to publish less in downgraded journals and more frequently in upgraded journals. By comparing publication behaviors before and after the second national research assessment for Italian sociologists, Akbaritabar et al. (2021) revealed the potentially distorting impact of institutional signals on publication patterns: these sociologists tended to publish more in journals deemed influential for assessment purposes, even though some of these journals may be of questionable quality. A recent study by Fry et al. (2023) investigated the impact of a nationwide ranking system in Indonesia, which modestly improved publication rates in top-ranked journals. In China, a newly implemented research evaluation policy requires scholars to publish at least one-third of their work in Chinese domestic outlets while maintaining engagement with the international research community (Huang, 2020). This policy has resulted in academic economists with foreign qualifications publishing a greater number of high-quality papers in China’s journals than do their peers with Chinese degrees (Liang et al., 2022). Recently, the National Science Library of the Chinese Academy of Sciences officially announced its 2024 list of suspect journals; Chinese scholars have typically avoided submitting to these journals once they have been included in the list (Mallapaty, 2024).

Building upon this body of literature, our study focuses on a relatively understudied consequence of changes in China's research evaluation system, namely CJR documented in the CAS Journal Ranking. Li et al. (2022) measured the influence of the Journal Impact Factor (JIF) and two other journal ranking systems, Journal Citation Reports (JCR) and the CAS Journal Ranking, on scholars' manuscript submission behaviors across different countries. They found that, for Chinese scholars, both the JCR and the CAS Journal Ranking had a positive effect on submission behavior, but the impact of the CAS Journal Ranking was significantly greater. However, that study investigated only the static influence of the CAS Journal Ranking as a general ranking system; the effect of changes in the ranking was not explored. Our research provides empirical evidence of changes in researchers’ scientific publishing behavior in response to changes in this nationwide journal ranking system.

Our study also contributes to the literature on the determinants of scholarly publication productivity (Gaston et al., 2020; Wahid et al., 2022). Previous research has explored individual factors such as academic age (Gonzalez-Brambila & Veloso, 2007), strategic research agendas (Santos et al., 2022), and environmental factors including national funding (Jacob & Lefgren, 2011a, 2011b; Yu et al., 2022). In a recent study of 37 Romanian public universities, Kifor et al. (2023) showed that university category, as determined by institutional prestige and reputation, was strongly correlated with research productivity. Brito et al. (2023) analyzed the effect of prolific collaborations on scholars’ productivity and found a large positive effect in formal and applied disciplines. By examining researchers from the discipline of environmental science and engineering in Chinese universities, Peng et al. (2023) found a negative association between researchers’ geographic mobility and publication productivity. Our analysis enriches this branch of literature by providing empirical evidence that the publication productivity of scholars can be affected by the research evaluation system, which plays an essential role in determining their job offers and academic promotions. Furthermore, through a heterogeneity analysis, we are able to observe how scholars from prestigious and less-prestigious universities adopt different publication strategies in response to CJR. This distinction holds substantial significance in evaluating the influence of a journal assessment system on the scientific productivity of scholars in China.

From a methodology perspective, our study enriches empirical research applying the DID method in scientometrics. With the growing volume of large-scale scientific corpora, researchers have been exploring opportunities to apply DID methods to scientometrics problems, seeking clearer and more accurate answers. Makkonen and Mitze (2016) used the DID method to empirically test the effect of joining the European Union on scientific collaborations. Huang et al. (2023) conducted a DID estimation to investigate how winning a Nobel Prize influences the laureate’s future citation impact compared to coauthors of the same prizewinning paper and found no significant effect. Zhang and Ma (2023) applied a DID method to investigate the impact of open data policies on article-level citations and found a dual effect: citations were boosted in the short term, but their decay was also accelerated. Combining matching and DID methods, Zheng et al. (2024) empirically tested the causal relationship between gold open access and academic purification, finding that gold open access could speed up the detection and retraction of flawed articles. By employing a DID approach, Ali (2024) set up a quasi-experimental design to examine the effect of adopting scientometric evaluations on the intensification of international publications in Egyptian universities; a spillover effect on research centres that do not employ scientometric evaluations was also confirmed. These studies have demonstrated the usefulness of DID approaches in impact evaluation and science policy analysis. DID approaches have also been applied in an expanded setting in scientometrics. In a recent DID study on ACM Fellows, Jiang et al. (2024) found that moving from big tech corporations to elite universities tends to enhance a scientist’s collaborations and productivity, while the opposite mobility path had a negative effect. Zhang et al. (2024) compared awardees granted industrial PhD funding with their counterparts in terms of research performance in their early careers in a DID setting and found that academic engagement with industry enhances the quantity and quality of research output. Our current study contributes to the literature by providing a rigorous examination of how a change in a journal evaluation system influences scholars’ productivity.

The remainder of the study is organized as follows. The "Context and Background" section provides the context and background. The "Data and Methodology" section describes the data and identification strategy, and the "Results" section reports the main empirical results. The "Discussion" section discusses the findings, from which conclusions are drawn in the "Conclusions" section.

Context and Background

Overview of CAS

Established in 1949, the Chinese Academy of Sciences (CAS) is a leading research institution and holds considerable authority in the academic community in China. CAS plays a pivotal role in advancing scientific research and promoting innovation. It actively cultivates collaboration among esteemed scientists, facilitates interdisciplinary investigations, and serves as a platform for the exchange of scientific ideas and dissemination of knowledge. Committed to pushing the frontiers of scientific understanding, CAS addresses crucial societal challenges through its diverse research endeavors spanning various disciplines, including mathematics, physics, chemistry, biology, earth sciences, engineering, and information technology. Beyond its research pursuits, CAS also plays a crucial role in formulating national science and technology policies.

The CAS Journal Ranking

In 2004, the National Science Library of the Chinese Academy of Sciences (CAS) launched its own journal ranking, known as the CAS Journal Ranking (Tong et al., 2023), to provide a comprehensive and authoritative cross-disciplinary assessment of academic journals (Footnote 1). The research areas are grouped into 13 major categories, which cover 176 Journal Citation Reports (JCR) subject categories. The CAS Journal Ranking employs a tiered system in which Web of Science (WoS) journals are divided into four partitions based on the average of each journal's three most recent Journal Impact Factors (JIFs). It follows a roughly pyramidal distribution: Tier 1 includes the top 5% of journals with the highest three-year average JIF in their disciplines, while Tier 2, Tier 3, and Tier 4 contain the 6%–20%, 21%–50%, and remaining journals, respectively (Huang et al., 2021). This journal ranking serves as a prominent tool for assessing and categorizing journals within the Chinese academic community. It enables researchers and institutions to identify high-quality journals suitable for publishing their research while also enhancing their academic impact. It is widely utilized by universities, research institutions, funding agencies, and government bodies to make informed decisions regarding research funding allocation, academic promotions, and research evaluations.
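The tier cut-offs described above can be illustrated with a minimal sketch. It assumes a single discipline, ranks journals by their three-year average JIF, and applies the 5%/20%/50% thresholds; the function names, the handling of ties, and the rounding at the boundaries are illustrative assumptions rather than the procedure actually used by the National Science Library.

```python
def three_year_average(jifs):
    """Average of the three most recent JIF values (list ordered oldest to newest)."""
    return sum(jifs[-3:]) / 3


def assign_tiers(avg_jif_by_journal):
    """Assign Tier 1-4 within one discipline from three-year average JIFs.

    Assumed cut-offs (taken from the text): top 5% -> Tier 1, 6%-20% -> Tier 2,
    21%-50% -> Tier 3, remainder -> Tier 4. Tie-breaking is arbitrary here.
    """
    ranked = sorted(avg_jif_by_journal, key=avg_jif_by_journal.get, reverse=True)
    n = len(ranked)
    tiers = {}
    for position, journal in enumerate(ranked, start=1):
        # Integer comparison: position / n <= 5% is position * 100 <= 5 * n.
        if position * 100 <= 5 * n:
            tiers[journal] = 1
        elif position * 100 <= 20 * n:
            tiers[journal] = 2
        elif position * 100 <= 50 * n:
            tiers[journal] = 3
        else:
            tiers[journal] = 4
    return tiers


# Hypothetical discipline with 20 journals whose average JIFs run from 20 down to 1.
example = {f"J{k}": float(21 - k) for k in range(1, 21)}
example_tiers = assign_tiers(example)
```

With 20 journals, this sketch places one journal in Tier 1, three in Tier 2, six in Tier 3, and ten in Tier 4, which mirrors the pyramidal shape described in the text.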

The ranking is updated on a yearly basis to reflect alterations in the academic landscape and emerging trends in scientific research. Each journal can experience an upgrade, downgrade or no change in ranking tiers every year. The annual release of the CAS Journal Ranking provides the research community with an up-to-date and reliable resource for journal evaluation and facilitates informed decision-making in academia. Leveraging the updated information provided by the CAS Journal Ranking, we compile a panel dataset from the documented CJR within the CAS Journal Ranking. This allows for empirical estimation of the impact of CJR on the scholarly productivity of Chinese researchers.

Data and Methodology

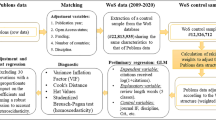

CAS Journal Ranking database

Our analysis begins by utilizing a sample from the yearly releases of the CAS Journal Ranking. To access this data, we collaborated with a librarian and obtained the relevant releases for the years 2015–2019. To mitigate the potential impact of two significant national policies implemented by the Chinese government in 2020 (Ministry of Education and Ministry of Science and Technology, 2020; Footnote 2), which introduced transformative guidelines for academic publishing and research evaluation, we did not incorporate data after 2019.

To ensure the reliability of our analysis, we excluded 2753 journals with incomplete records during the time span of our analysis: any that were either initially added or removed from the CAS Journal Ranking within the 2015–2019 window were not considered. Furthermore, we solely focused on journals that experienced a single change in the CAS Journal Ranking within this time frame. 2815 journals exhibiting inconsistent changes (e.g., falling in rank in 2016, then rising in 2017) were excluded from the analysis.
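The exclusion rules above can be sketched as a small classifier over each journal's tier history. This is an illustration under stated assumptions: the input layout (a mapping from year to tier), the label names, and the choice to treat any journal with more than one tier change as "inconsistent" are ours and are not taken from the original data pipeline.

```python
SAMPLE_YEARS = (2015, 2016, 2017, 2018, 2019)


def classify_trajectory(tiers_by_year, years=SAMPLE_YEARS):
    """Label a journal's tier history over the sample window.

    Returns one of:
      "incomplete"    - missing from the ranking in at least one year (excluded)
      "no_change"     - same tier in every year (candidate control journal)
      "single_change" - exactly one tier change (candidate treated journal)
      "inconsistent"  - more than one tier change (excluded; an assumption here)
    """
    if any(year not in tiers_by_year for year in years):
        return "incomplete"
    sequence = [tiers_by_year[year] for year in years]
    n_changes = sum(1 for before, after in zip(sequence, sequence[1:]) if before != after)
    if n_changes == 0:
        return "no_change"
    if n_changes == 1:
        return "single_change"
    return "inconsistent"


# Hypothetical journal that falls in 2016 and rises again in 2017.
label = classify_trajectory({2015: 2, 2016: 3, 2017: 2, 2018: 2, 2019: 2})
```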

As shown in Fig. 1A, the resultant comprehensive dataset comprises 4345 journals that experienced either no change (control group) or consistent changes (treatment group) during a specific year between 2015 and 2019. To accurately assess the effects and establish comparable treatment and control groups, we adopted the matched-pair design methodology employed by Li et al. (2019). This involved identifying control groups with similar citation network characteristics, on the premise that authors who submit to journals in the treatment group are likely to consider submitting to the control groups within that citation network. By systematically matching the treated journals with no more than three other representative journals, we attained a final dataset of 1024 journals: 348 in the treatment group and 676 in the control group. The sample selection is illustrated in Fig. 1. The final panel dataset includes 5120 journal-year records, covering the five-year period from 2015 to 2019.

Fig. 1 Process of journal selection. In A, 2753 journals that were added to or removed from the CAS Journal Ranking during the sample window, together with 2815 journals with inconsistent changes in journal rank, were excluded from further analysis. A subset of 4345 journals remained, which experienced either no change or consistent changes during the period from 2015 to 2019. In B, from this subset of 4345 journals, 676 journals were identified as the control group and 348 as the treatment group for empirical analysis

Variable Definition and Description Analysis

This study aims to examine the influence of CJR, as documented in the CAS Journal Ranking on the publication output of Chinese researchers. Specifically, we seek to shed light on how the national research assessment impacts the scientific productivity of scholars in China. To that end, we examine specific variables related to scholars’ scientific productivity.

Measuring Number of Publications of Chinese Researchers

One key measure of scientific productivity is the number of publications by Chinese researchers. This was obtained as an annual figure by extracting data from the Web of Science database, specifically focusing on the publication history within each of the selected 1024 journals. However, given the inherent inter-journal variations in the annual number of publications, direct comparisons of publication counts may be misleading. To address this issue and ensure comparability, the number of publications was normalized per 100 publications, thus accounting for potential disparities in overall publication volume (Paltridge, 2017).

Control Variables

In addition to the changes in journal rank, it is essential to consider other factors that may potentially influence the number of publications of Chinese scholars. Among these is the impact factor of journals, which can influence scholars’ submission behavior. Journals with higher impact factors are often perceived as having higher quality and are more likely to receive a greater number of submissions (Gaston et al., 2020). Another relevant indicator is the ratio of self-citations within a journal, which can enhance the visibility and citation impact of an article (Gazni & Didegah, 2021; Szomszor et al., 2020). This indicator also plays a role in scholars’ decision-making process when choosing a journal for manuscript submission.

The publication cycle of a journal also has a direct impact on scholars’ manuscript submission behavior (Chaitow, 2019). Journals with more issues per year tend to accommodate a greater number of publications, which can impact the likelihood of manuscript acceptance and publication. To account for this effect, we collected information on the publication frequency of each journal. Additionally, the initial years of a journal’s publication are crucial in attracting high-quality contributions and building a strong readership (Alexander & Mabry, 1994). Therefore, we also included the first year of publication as an additional control variable, which reflects the scientific impact of a journal and may influence scholars’ decision to submit their manuscripts.

The choice of publisher is another crucial factor influencing Chinese scholars’ manuscript submission decisions (Kim & Park, 2020). Journals issued by renowned publishers such as Elsevier, Springer, and Wiley are often favored due to their reputation and credibility. We therefore control for the journal’s publisher in our analysis. Also potentially impactful is the geographical region of a journal, as scholars may prioritize regional journals that are more relevant to their research area or target audience (Majzoub et al., 2016).

In our analysis, we considered the aforementioned six indicators as control variables. Table 1 summarizes the descriptive statistics for both the key variable and these six control variables.

Identification Strategy

To identify the effect of CJR on the publication output of Chinese researchers, we use the difference-in-differences (DID) approach. Specifically, DID estimation involves comparing the performance of Chinese researchers’ publication output in journals before and after documented CJR with that of journals which did not experience rank changes during a specific year between 2015 and 2019. The baseline DID estimation has the following specification:

\[Y_{it} = \beta \, Rank\_change_{it} + X_{it}^{\prime}\theta + \gamma_{i} + \mu_{t} + \varepsilon_{it} \quad (1)\]

where i and t indicate journal and year, respectively; \(Y_{it}\) represents the number of publications per hundred by Chinese scholars; \(X_{it}\) is the set of control variables mentioned above, with coefficient vector \(\theta\); \(\gamma_{i}\) is the journal fixed effects, capturing all the time-invariant characteristics of the journals that might influence the outcome of interest; \(\mu_{t}\) is the year fixed effects, controlling for common shocks in a particular year likely to have affected all journals in a similar manner; and \(\varepsilon_{it}\) is the error term.

\(Rank\_change_{it}\) denotes the regressor of interest, indicating the journal’s rank change status. Specifically, \(Rank\_change_{it} = Treatment_{i} \cdot Post_{it}\), where \(Treatment_{i}\) equals 1 if journal i experienced a rank change during the sample period, and 0 otherwise. \(Post_{it}\) is a post-treatment indicator, taking a value of 1 if \(t \ge t_{i0}\), where \(t_{i0}\) is the year journal i experienced the rank change, and 0 otherwise. The coefficient of interest is β, the coefficient on \(Rank\_change_{it}\). The null hypothesis (H0) states that CJR has no effect on publication output (β = 0). The alternative hypothesis (H1) states that CJR does affect publication output (β ≠ 0). Rejecting H0 provides evidence that CJR influences publication output. We expect β to be statistically significant.
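The construction of the regressor of interest can be sketched as follows. The sketch assumes that each journal is described by the year of its rank change, with `None` standing for journals that never changed rank; the function and variable names are illustrative.

```python
YEARS = range(2015, 2020)  # sample window 2015-2019


def rank_change(change_year, year):
    """Rank_change_it = Treatment_i * Post_it for one journal-year.

    change_year is the year t_i0 in which the journal changed rank,
    or None for a never-treated (control) journal.
    """
    treatment = 1 if change_year is not None else 0
    post = 1 if treatment and year >= change_year else 0
    return treatment * post


def build_indicator(change_years):
    """Return {(journal, year): Rank_change_it} for every journal-year in the window."""
    return {
        (journal, year): rank_change(change_year, year)
        for journal, change_year in change_years.items()
        for year in YEARS
    }


# Hypothetical example: one journal re-ranked in 2017 and one control journal.
indicator = build_indicator({"treated_journal": 2017, "control_journal": None})
```

For the journal re-ranked in 2017, the indicator is 0 in 2015 and 2016 and 1 from 2017 onward; for the control journal it is 0 throughout.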

To ensure the robustness of our findings based on the DID model specified in Eq. (1), we conducted several robustness checks. These included considerations related to sampling as well as a placebo test, namely a permutation test that involved randomly assigning CJR treatment.

Results

Changes in Journal Ranks and Publication Output

In this section, we establish a baseline estimate using the previously constructed model. The estimation results are reported in column 1 of Table 2, with journal and year fixed effects included in the model. The result indicates a negative and statistically significant relationship between the rank changes and the number of publications by Chinese researchers. This finding implies that the journal rank changes in China’s national research assessment may have suppressed the scientific productivity of scholars. In column 2, which includes control variables, we again observe a negative, statistically significant effect of CJR despite a slight drop in its estimated magnitude.
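To make the column 1 specification concrete (journal and year fixed effects, no controls), the following minimal sketch estimates the coefficient on the rank-change indicator by double demeaning a balanced panel. It is not the estimation code behind Table 2: the row layout, the helper `simulate_panel`, and the simulated numbers are assumptions made for illustration, and standard errors are omitted.

```python
def _mean(values):
    values = list(values)
    return sum(values) / len(values)


def twfe_did(panel):
    """Two-way fixed effects estimate of beta on a balanced panel.

    panel: list of (journal, year, y, d) rows, where d is the rank-change
    indicator. Journal and year fixed effects are removed by subtracting
    journal means and year means and adding back the overall mean; beta is
    then the slope of demeaned y on demeaned d.
    """
    journals = {row[0] for row in panel}
    years = {row[1] for row in panel}

    def demean(idx):
        overall = _mean(row[idx] for row in panel)
        by_journal = {j: _mean(r[idx] for r in panel if r[0] == j) for j in journals}
        by_year = {t: _mean(r[idx] for r in panel if r[1] == t) for t in years}
        return [r[idx] - by_journal[r[0]] - by_year[r[1]] + overall for r in panel]

    y_tilde = demean(2)
    d_tilde = demean(3)
    return sum(d * y for d, y in zip(d_tilde, y_tilde)) / sum(d * d for d in d_tilde)


def simulate_panel(beta):
    """Hypothetical noise-free panel: y = journal effect + year effect + beta * d."""
    change_years = {"A": 2017, "B": 2018, "C": None, "D": None}
    journal_effect = {"A": 0.5, "B": 0.7, "C": 0.6, "D": 0.4}
    rows = []
    for journal, change_year in change_years.items():
        for year in range(2015, 2020):
            d = 1 if change_year is not None and year >= change_year else 0
            y = journal_effect[journal] + 0.01 * (year - 2015) + beta * d
            rows.append((journal, year, y, d))
    return rows


estimate = twfe_did(simulate_panel(beta=-0.092))
```

Because the simulated outcome contains no noise, the estimator recovers the effect that was built into the data up to floating-point error. With real data, the controls in \(X_{it}\) and appropriate standard errors would have to be added.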

Using the estimate in column 2 (−0.092) to gauge the economic magnitude, we find that a rank change lowers the outcome variable by 0.092 on average. Relative to the sample mean of the outcome variable (0.621), this corresponds to a 14.81% decrease in the number of publications by Chinese researchers.
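The relative magnitude can be checked with a short calculation. It uses the benchmark coefficient of −0.092 and the sample mean of 0.621 reported in the text; the function name is illustrative.

```python
def relative_effect(coefficient, sample_mean):
    """Effect size expressed as a share of the sample mean of the outcome."""
    return coefficient / sample_mean


# -0.092 / 0.621 is approximately -0.1481, i.e. a 14.81% decrease.
share = relative_effect(-0.092, 0.621)
print(f"{share:.2%}")
```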

Robustness Check

To address potential concerns regarding the identifying assumptions and to further support the validity of our findings, we next report a comprehensive set of robustness checks.

Sample of Journals with Best Practices

Researchers must exercise caution when selecting journals in which to publish their work. Submitting manuscripts to predatory journals, or to journals that do not adhere to best practices, can negatively affect scientific output. To mitigate the potential influence of this situation, we employed Beall’s list and the DOAJ blacklist (Beall, 2013; da Silva & Tsigaris, 2018), widely accepted criteria for identifying predatory journals and those that do not follow best practices (Demir, 2018). After eliminating six journals lacking the requisite best practices from our sample (Footnote 3), the estimation results (Table 3, column 1) demonstrate a similar effect in terms of statistical significance and magnitude.

Sample Without Retracted Papers

In the academic realm, papers can occasionally undergo retraction after their publication, due to either erroneous conclusions or fraudulent research practices. Including these retracted papers in the publication count attributed to Chinese scholars can introduce estimation biases (Chen et al., 2018). To mitigate this concern, we have excluded a total of 734 retracted publications from all journals under consideration, of which 100 involve Chinese corresponding authors. The re-estimated result (Table 3, column 2) reflects a very similar estimate to those given in Table 2.

Randomly generated rank changes (placebo test)

To assess the potential impact of omitted variables on the results, a nonparametric permutation test is conducted by randomly assigning rank changes to journals (Ferrara et al., 2012). For the placebo test, a treatment group consisting of journals with randomly assigned rank changes is used to validate the estimates. Figure 2 illustrates the distribution of the estimates obtained from 500 iterations, alongside the benchmark estimate of −0.092 from column 2 of Table 2. The coefficients estimated in the placebo test are centered around zero, while the benchmark estimate (vertical dotted line) clearly falls outside the range of the placebo coefficients.

Fig. 2 Distribution of estimated coefficients of permutation test. The figure shows the cumulative distribution density of the estimated coefficients from 500 simulations of randomly assigned journal rank changes. The vertical line presents the estimated result of column 2 in Table 2
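The logic of the permutation test can be sketched in a few lines. For brevity, the sketch replaces the full fixed-effects regression with a simple two-group, before/after difference-in-differences and uses a single common cutoff year; both simplifications, as well as the function names and the data layout, are assumptions for illustration and do not reproduce the estimation behind Fig. 2.

```python
import random


def did_estimate(outcomes, treated, cutoff):
    """Mean post-minus-pre change of treated journals minus that of all other journals.

    outcomes: {journal: {year: y}}; treated: set of journal names;
    years >= cutoff count as the post period.
    """
    def mean_change(group):
        changes = []
        for journal in group:
            pre = [y for t, y in outcomes[journal].items() if t < cutoff]
            post = [y for t, y in outcomes[journal].items() if t >= cutoff]
            changes.append(sum(post) / len(post) - sum(pre) / len(pre))
        return sum(changes) / len(changes)

    controls = [journal for journal in outcomes if journal not in treated]
    return mean_change(treated) - mean_change(controls)


def permutation_test(outcomes, treated, cutoff, iterations=500, seed=0):
    """Re-estimate the effect under randomly assigned (placebo) treatment.

    Returns the benchmark estimate, the list of placebo estimates, and the
    share of placebo estimates at least as large in absolute value as the
    benchmark.
    """
    rng = random.Random(seed)
    benchmark = did_estimate(outcomes, treated, cutoff)
    journals = sorted(outcomes)
    placebo = []
    for _ in range(iterations):
        fake_treated = set(rng.sample(journals, len(treated)))
        placebo.append(did_estimate(outcomes, fake_treated, cutoff))
    share_extreme = sum(abs(b) >= abs(benchmark) for b in placebo) / iterations
    return benchmark, placebo, share_extreme
```

If the benchmark estimate reflected only chance or omitted factors unrelated to the actual rank changes, a sizeable share of the placebo estimates would be as extreme as the benchmark; a share close to zero points the other way.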

Widespread but Unequal Impacts of Journal Rank Changes

The impacts of CJR are widespread, affecting various aspects of scholarly publishing. However, these impacts have been observed to be unequal, with certain groups or disciplines experiencing greater effects than others. To identify differential impacts of CJR, we further divided and analyzed the sample by academic field, journal rank trend, and researchers’ institutional affiliations.

Impact of CJR on Scientific Output of Different Fields

The effect of CJR on scientific output may vary across academic fields. To examine potential heterogeneity concerns, we followed the same categorization utilized in our previous study (Sun et al., 2023; Footnote 4): “social sciences”, “physical sciences”, “life sciences”, and “health sciences.” The estimated results are reported in Table 4. Interestingly, our results reveal that CJR had a significant negative effect on the number of publications by Chinese researchers in the life sciences and physical sciences. However, no significant impact was observed in health sciences or social sciences.

Impact of CJR on Scientific Output of Different Journal Groups

In the CAS Journal Ranking system, changes in the ranking of journals, whether an upgrade or a downgrade, may potentially affect the publication behavior of Chinese researchers. Publishing in a high-ranked journal can benefit scholars in terms of academic promotion and reputation, whereas lower-ranked journals may not strongly encourage researchers to submit their work. To verify this possibility, we conducted separate estimations to examine the effects of CJR on two distinct groups: journals that experienced an upgrade in ranking and those that were downgraded. For example, since the journal Gastrointestinal Endoscopy changed from T2 to T1 during the sample period, we label it an “upgraded” journal. Likewise, since the rank of the British Medical Bulletin fell from T1 to T2, it was classified into the “downgraded” group.
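The grouping rule can be written down directly. In this sketch tiers are coded as integers, with 1 for T1 (the highest tier) through 4 for T4; the function name and the integer coding are illustrative assumptions.

```python
def classify_direction(tier_before, tier_after):
    """Label a rank change; a smaller tier number means a higher rank (T1 is the top)."""
    if tier_after < tier_before:
        return "upgraded"
    if tier_after > tier_before:
        return "downgraded"
    return "unchanged"


# The two examples from the text: T2 -> T1 and T1 -> T2.
gastrointestinal_endoscopy = classify_direction(2, 1)
british_medical_bulletin = classify_direction(1, 2)
```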

The estimation results, presented in Table 5, are consistent with our proposed scenario. Journals whose rank decreased exhibited a significant reduction in the number of publications by Chinese scholars. However, the upgraded group did not show a significant impact from the changes in journal rank.

Impact of CJR by Author Affiliation

We then set out to investigate the heterogeneity of CJR’s effect on the behavior of researchers at different universities. To conduct a comprehensive analysis of CJR’s influence on scholars’ submission decisions, we utilized the Soft Science Ranking of Chinese Universities to classify universities into two categories: prestigious and less-prestigious. Specifically, we designated the top 30 universities as prestigious and the remainder as less-prestigious. This allowed us to distinguish the two groups and examine their respective responses to changes in journal ranking.
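The classification rule amounts to a single threshold on a university's position in the ranking. A minimal sketch, in which the function name and the `cutoff` parameter are illustrative:

```python
def prestige_group(university_rank, cutoff=30):
    """Top `cutoff` universities are prestigious; all others are less-prestigious."""
    return "prestigious" if university_rank <= cutoff else "less-prestigious"


groups = [prestige_group(rank) for rank in (1, 30, 31, 200)]
```

Exposing the threshold as a parameter makes it easy to re-run the split with a different cut-off as a sensitivity check.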

Consistent with the findings presented in Table 5, researchers affiliated with prestigious universities and those from less-prestigious universities both showed a decrease in their scientific output in journals that experienced a decline in rank (columns 3 and 4 of Table 6). However, researchers from less-prestigious universities exhibited a more pronounced reduction in their submissions than their counterparts from prestigious universities. In the case of journals in the upgraded group, the impact was observed exclusively among researchers affiliated with less-prestigious universities (Table 6, column 2).

To check the robustness of our estimations, we used membership within Project 985 and Project 211 (Footnote 5) as an alternative measure of prestige. The results, presented in Tables 7 and 8 of the Appendix, corroborate those in Table 6.

Discussion

In contrast to previous research that used DID approaches and Before-After-Control-Impact methods to show the positive impact of scientometric evaluation on scientific activities in developing countries such as Egypt (Ali, 2024) and South Africa (Inglesi-Lotz & Pouris, 2011), our study delves deeper by exploiting a natural experiment in the context of the largest developing country, China, to examine the effect of national research assessment on scientific productivity. Specifically, employing the DID framework, we examine the effect of changes in journal rank as documented in a major journal list in China (the CAS Journal Ranking) on the number of publications of Chinese researchers. Through a more comprehensive setting that incorporates the heterogeneity of journals and universities, we were able to inspect the effect of both upgrading and downgrading rank changes of journals in different quality tiers for universities with varying levels of prestige. Our analysis reveals a statistically significant negative effect and suggests that CJR has led to a 14.81% decrease in the number of publications per 100 compared to the overall average in our sample. We conducted additional robustness tests by excluding journals that do not adhere to best practices and by excluding retractions, and our estimations consistently confirm the observed impact of CJR on the publication output of Chinese researchers.

Implications for Researchers

The observed widespread but unequal effect of changes in journal ranking on publication output highlights the need for a nuanced understanding of how journal evaluation systems can affect the publication strategies of researchers. The negative effect of changes in journal ranking is particularly pronounced in the academic domains of life sciences and physical sciences. This suggests that researchers in these fields may be more sensitive to changes in journal rankings. Moreover, the negative effect is more pronounced in less-prestigious universities, implying that researchers at these institutions might face greater pressure to publish in highly ranked journals, potentially at the expense of publishing in a wider range of outlets.

Implications for Universities and Institutions

Prestigious universities, which place more emphasis on the quality rather than the quantity of academic publications (Calcagno et al., 2012), may be less affected by changes in journal rankings. In contrast, less-prestigious universities, which often have fewer resources and less established reputations, may experience a more pronounced negative effect on their researchers’ publication outputs. This suggests that the pressure to publish in prestigious journals for career advancement can overshadow the pursuit of scientific excellence and innovation at these institutions.

Implications for Policy-Makers

Policy-makers should consider the unintended consequences of national research assessments, such as the CAS Journal Ranking, on researchers’ publication behaviors. The pressure to publish in highly ranked journals can lead to a decrease in the number of publications when journal rankings decrease, potentially undermining good science. This highlights the need for a comprehensive evaluation system that goes beyond journal rankings and considers the intrinsic value and impact of research outcomes. It is important for policy-makers to develop a balanced approach that encourages quality research while reducing the negative effects of overly rigid assessments.

Implications for Journal Publishers and Editors

This study could be a useful step toward better understanding trends in top journal publications (Franco et al., 2014) induced by journal evaluation systems in developing countries. Journal publishers and editors should be aware of the potential impact of changes in journal rankings on the submission behavior of researchers. The observed negative effect suggests that changes in rankings can lead to a decrease in submissions, particularly in journals experiencing a downward trend. We posit that this phenomenon arises because submitting research to journals deemed lower-ranked by the CAS system may contravene established academic evaluation criteria (Drivas & Kremmydas, 2020). Consequently, such submissions may not contribute positively to scholars’ academic reputation, career advancement prospects, and funding applications, which rely heavily on publications in highly prestigious journals (Bollen et al., 2006). Publishers and editors may need to adopt strategies to mitigate the negative effects of ranking changes, such as improving the quality and visibility of their journals, fostering international collaborations, and enhancing the transparency and fairness of the peer review process.

Although we interpret our results as evidence that CJR, as documented in the CAS Journal Ranking, influences research output, several limitations merit discussion. First, although the number of publications is commonly employed as a proxy for scientific output (Fry et al., 2023), accurately measuring the true impact of CJR on actual scientific output in China is challenging because data on rejected submissions are unavailable. In addition, the CAS Journal Ranking is updated only once a year, in October or November (CAS Journal Ranking, n.d.). Without submission data, a published paper may have been submitted before the updated journal ranking, and its corresponding ranking change, was released. Although the number of submissions has been shown to correlate strongly and positively with the number of publications in journals (Ausloos et al., 2019; Li et al., 2022), using publication data instead of submission data may introduce some bias. Because papers submitted before a ranking release cannot have responded to it, this bias works against finding an effect, so our DID estimate is best read as a lower bound on the effect of CJR on scientific output. In future work, we hope to collect more comprehensive submission information to reach more complete and robust conclusions. Second, our estimates are contingent upon the specific context of China and cannot be readily generalized to other countries. Extending the analysis to different national contexts could offer broader insights into the global impact of similar ranking systems. Third, despite our efforts to collect a comprehensive dataset via the CAS Journal Ranking, it is essential to acknowledge the temporal constraints of our analysis. Expanding the time frame in future studies could yield more nuanced results. Finally, Chinese researchers’ decisions to submit to certain journals may also be shaped by school journal lists, especially in fields such as management science and economics. However, Chinese scholars tend to have minimal representation in the journals on these lists. For instance, in Research Policy, a prestigious journal included in the FT 50 journal list, Chinese scholars typically contribute fewer than 10 papers annually. This influence is therefore marginal and is unlikely to alter the effect we estimated materially. Future research may delve into specific disciplines to precisely estimate such effects.
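The lower-bound reading of the DID estimate in the limitations above can be illustrated with a stylized attenuation argument. This is a sketch under assumptions that are not part of the original analysis: suppose a share $p$ of the publications counted in the post-change period were submitted before the new ranking was released and therefore show no response, while the remaining share $1-p$ responds with the true effect $\beta$. The symbols $p$, $\beta$, and $\hat{\beta}_{\text{obs}}$ are introduced here for illustration only.

```latex
\begin{align}
\hat{\beta}_{\text{obs}} &\approx (1-p)\,\beta + p \cdot 0 = (1-p)\,\beta, \qquad 0 \le p < 1, \\
\lvert \hat{\beta}_{\text{obs}} \rvert &\le \lvert \beta \rvert .
\end{align}
```

Under these assumptions the publication-based estimate keeps the sign of the true effect but shrinks toward zero as $p$ grows, which is one way to read the estimate as conservative.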

Conclusions

Employing the DID model, this study contributes to the empirical estimation of the effect of journal rank changes, as reflected in a nationally prominent list, on the publication output of Chinese researchers. A negative trend is found in the number of publications by researchers in journals that experience such changes. The main driver may be the publishing behavior of scholars from non-top-tier academic institutions, who are less likely to submit to journals undergoing a decrease in ranking. This study could be a useful step in evaluating how nationwide assessment systems influence scientific output in developing countries more generally. Given the importance of scientific evaluation across the range of global and national scientific activity, considerably more research is warranted.

Data Availability

Data will be made available on request.

Notes

Since the time span of our analysis is between 2015 and 2019, when the CAS Journal Ranking was the basic edition (2004–2019) (Li et al., 2024), we refer to this basic edition when discussing CAS Journal Ranking in this manuscript.

These policies explicitly emphasized the shift away from solely relying on international publications as a direct criterion for assessing research performance among scholars and institutions. Their goal was to break the conventions of “paper only” and traditional reliance on “quantity over quality” in research evaluation.

The six journals thus eliminated are: Proceedings of the Japan Academy, Series B Physical and Biological Sciences; Preventing Chronic Disease; Kybernetika; Geodiversitas; Geologia Croatica; and Memórias do Instituto Oswaldo Cruz.

The categorization is the one adopted by the Scopus and ScienceDirect databases, both owned by the publisher Elsevier.

Project 985 was an initiative launched by the Chinese central government in May 1998. Its goal was to develop and support a select group of universities to achieve world-class status in higher education. A total of 39 universities were chosen to participate in this program (“Project 985,” 2024). Project 211 was an initiative launched by the Chinese central government in November 1995. Its purpose was to prepare around 100 universities for the challenges of the twenty-first century through development and sponsorship. A total of 115 universities and colleges were selected to participate in this program (“Project 211,” 2024).

References

Akbaritabar, A., Bravo, G., & Squazzoni, F. (2021). The impact of a national research assessment on the publications of sociologists in Italy. Science and Public Policy, 48(5), 662–678. https://doi.org/10.1093/scipol/scab013

Alexander, J. C., Jr., & Mabry, R. H. (1994). Relative significance of journals, authors, and articles cited in financial research. The Journal of Finance, 49(2), 697–712. https://doi.org/10.1111/j.1540-6261.1994.tb05158.x

Ali, M. F. (2024). Is there a “difference-in-difference”? The impact of scientometric evaluation on the evolution of international publications in Egyptian universities and research centres. Scientometrics, 129(2), 1119–1154.

Ausloos, M., Nedič, O., & Dekanski, A. (2019). Correlations between submission and acceptance of papers in peer review journals. Scientometrics, 119(1), 279–302. https://doi.org/10.1007/s11192-019-03026-x

Beall, J. (2013). Beall’s List: Potential, possible, or probable predatory scholarly open-access publishers. Scholarly Open Access, 21.

Bollen, J., Rodriguez, M. A., & Van de Sompel, H. (2006). Journal status. Scientometrics, 69(3), 669–687. https://doi.org/10.1007/s11192-006-0176-z

Brainard, J., & Normile, D. (2022). China rises to first place in one key metric of research impact. Science, 377(6608), 799. https://doi.org/10.1126/science.ade4423

Brito, A. C. M., Silva, F. N., & Amancio, D. R. (2023). Analyzing the influence of prolific collaborations on authors productivity and visibility. Scientometrics, 128(4), 2471–2487. https://doi.org/10.1007/s11192-023-04669-7

Calcagno, V., Demoinet, E., Gollner, K., Guidi, L., Ruths, D., & de Mazancourt, C. (2012). Flows of research manuscripts among scientific journals reveal hidden submission patterns. Science, 338(6110), 1065–1069. https://doi.org/10.1126/science.1227833

CAS Journal Ranking. (n.d.). Online platform use instructions of CAS Journal Ranking. https://www.fenqubiao.com/User/Help.aspx (in Chinese)

Chaitow, S. (2019). The life-cycle of your manuscript: From submission to publication. Journal of Bodywork and Movement Therapies, 23, 683–689. https://doi.org/10.1016/j.jbmt.2019.09.007

Chen, W., Xing, Q.-R., Wang, H., & Wang, T. (2018). Retracted publications in the biomedical literature with authors from mainland China. Scientometrics, 114(1), 217–227. https://doi.org/10.1007/s11192-017-2565-x

da Silva, J. A. T., & Tsigaris, P. (2018). What value do journal whitelists and blacklists have in academia? The Journal of Academic Librarianship, 44(6), 781–792. https://doi.org/10.1016/j.acalib.2018.09.017

Demir, S. B. (2018). Predatory journals: Who publishes in them and why? Journal of Informetrics, 12(4), 1296–1311. https://doi.org/10.1016/j.joi.2018.10.008

Drivas, K., & Kremmydas, D. (2020). The Matthew effect of a journal’s ranking. Research Policy, 49(4), 103951. https://doi.org/10.1016/j.respol.2020.103951

Ferrara, E. L., Chong, A., & Duryea, S. (2012). Soap operas and fertility: Evidence from Brazil. American Economic Journal: Applied Economics, 4(4), 1–31.

Franco, A., Malhotra, N., & Simonovits, G. (2014). Publication bias in the social sciences: Unlocking the file drawer. Science, 345(6203), 1502–1505. https://doi.org/10.1126/science.1255484

Franzoni, C., Scellato, G., & Stephan, P. (2011). Changing incentives to publish. Science, 333(6043), 702–703.

Fry, C. V., Lynham, J., & Tran, S. (2023). Ranking researchers: Evidence from Indonesia. Research Policy, 52(5), 104753. https://doi.org/10.1016/j.respol.2023.104753

Gaston, T. E., Ounsworth, F., Senders, T., Ritchie, S., & Jones, E. (2020). Factors affecting journal submission numbers: Impact factor and peer review reputation. Learned Publishing, 33(2), 154–162. https://doi.org/10.1002/leap.1285

Gazni, A., & Didegah, F. (2021). Journal self-citation trends in 1975–2017 and the effect on journal impact and article citations. Learned Publishing, 34(2), 233–240. https://doi.org/10.1002/leap.1348

Gonzalez-Brambila, C., & Veloso, F. M. (2007). The determinants of research output and impact: A study of Mexican researchers. Research Policy, 36(7), 1035–1051. https://doi.org/10.1016/j.respol.2007.03.005

Goodman-Bacon, A. (2021). Difference-in-differences with variation in treatment timing. Journal of Econometrics, 225(2), 254–277.

Hicks, D. (2012). Performance-based university research funding systems. Research Policy, 41(2), 251–261. https://doi.org/10.1016/j.respol.2011.09.007

Huang, F. (2020). China is choosing its own path on academic evaluation. University World News, 26, 18.

Huang, Y., Li, R., Zhang, L., & Sivertsen, G. (2021). A comprehensive analysis of the journal evaluation system in China. Quantitative Science Studies, 2(1), 300–326. https://doi.org/10.1162/qss_a_00103

Huang, Y., Tian, C., & Ma, Y. (2023). Practical operation and theoretical basis of difference-in-difference regression in science of science: The comparative trial on the scientific performance of Nobel laureates versus their coauthors. Journal of Data and Information Science, 8(1), 29–46.

Inglesi-Lotz, R., & Pouris, A. (2011). Scientometric impact assessment of a research policy instrument: The case of rating researchers on scientific outputs in South Africa. Scientometrics, 88(3), 747–760. https://doi.org/10.1007/s11192-011-0440-8

Jacob, B. A., & Lefgren, L. (2011). The impact of NIH postdoctoral training grants on scientific productivity. Research Policy, 40(6), 864–874. https://doi.org/10.1016/j.respol.2011.04.003

Jacob, B. A., & Lefgren, L. (2011). The impact of research grant funding on scientific productivity. Journal of Public Economics, 95(9), 1168–1177. https://doi.org/10.1016/j.jpubeco.2011.05.005

Jiang, F., Pan, T., Wang, J., & Ma, Y. (2024). To academia or industry: Mobility and impact on ACM fellows’ scientific careers. Information Processing & Management, 61(4), 103736. https://doi.org/10.1016/j.ipm.2024.103736

Kifor, C. V., Benedek, A. M., Sîrbu, I., & Săvescu, R. F. (2023). Institutional drivers of research productivity: A canonical multivariate analysis of Romanian public universities. Scientometrics, 128(4), 2233–2258. https://doi.org/10.1007/s11192-023-04655-z

Kim, S. J., & Park, K. S. (2020). Influence of the top 10 journal publishers listed in journal citation reports based on six indicators. Science Editing, 7(2), 142–148. https://doi.org/10.6087/KCSE.209

Kulczycki, E., Huang, Y., Zuccala, A. A., Engels, T. C. E., Ferrara, A., Guns, R., Pölönen, J., Sivertsen, G., Taşkın, Z., & Zhang, L. (2022). Uses of the journal impact factor in national journal rankings in China and Europe. Journal of the Association for Information Science and Technology, 73(12), 1741–1754. https://doi.org/10.1002/asi.24706

Li, C. Y., Wang, Y., & Giacomin, A. J. (2024). Chinese Academy of Science journal ranking system (2015–2023). Physics of Fluids. https://doi.org/10.1063/5.0211100

Li, L., Liao, Y., Yang, M., Wang, M., Chen, F., Shen, Z., & Yang, L. (2022). Research on the influence of JIF and its ranking information on the submission behavior of scholars in different countries—based on the monthly accepted paper submission data of NPG journals. Data Analysis and Knowledge Discovery, 6(12), 43–52. (in Chinese).

Li, W., Aste, T., Caccioli, F., & Livan, G. (2019). Early coauthorship with top scientists predicts success in academic careers. Nature Communications, 10(1), 5170. https://doi.org/10.1038/s41467-019-13130-4

Liang, W., Gu, J., & Nyland, C. (2022). China’s new research evaluation policy: Evidence from economics faculty of Elite Chinese universities. Research Policy, 51(1), 104407. https://doi.org/10.1016/j.respol.2021.104407

Majzoub, A., Al Rumaihi, K., & Al Ansari, A. (2016). The world’s contribution to the field of urology in 2015: A bibliometric study. Arab Journal of Urology, 14(4), 241–247. https://doi.org/10.1016/j.aju.2016.09.004

Makkonen, T., & Mitze, T. (2016). Scientific collaboration between ‘old’ and ‘new’ member states: Did joining the European Union make a difference? Scientometrics, 106, 1193–1215.

Mallapaty, S. (2024). China has a list of suspect journals and it’s just been updated. Nature, 627(8003), 252–253. https://doi.org/10.1038/d41586-024-00629-0

Ministry of Education and Ministry of Science and Technology. (2020). Opinions on regulating the usage of SSCI paper indicators and building correct evaluation guidelines (in Chinese).

Paltridge, B. (2017). Pragmatics and reviewers’ reports. In B. Paltridge (Ed.), The discourse of peer review. London: Palgrave Macmillan.

Peng, C., Li, Z. (Lionel), & Wu, C. (2023). Researcher geographic mobility and publication productivity: An investigation into individual and institutional characteristics and the roles of academicians. Scientometrics, 128(1), 379–406. https://doi.org/10.1007/s11192-022-04546-9

Project 985. (2024, August 3). In Wikipedia. https://en.wikipedia.org/wiki/Project_985

Project 211. (2024, August 3). In Wikipedia. https://en.wikipedia.org/wiki/Project_211

Santos, J. M., Horta, H., & Li, H. (2022). Are the strategic research agendas of researchers in the social sciences determinants of research productivity? Scientometrics, 127(7), 3719–3747. https://doi.org/10.1007/s11192-022-04324-7

Śpiewanowski, P., & Talavera, O. (2021). Journal rankings and publication strategy. Scientometrics, 126(4), 3227–3242. https://doi.org/10.1007/s11192-021-03891-5

Sun, Z., Cao, C. C., Liu, S., Li, Y., & Ma, C. (2024). Behavioral consequences of second-person pronouns in written communications between authors and reviewers of scientific papers. Nature Communications, 15(1), 152. https://doi.org/10.1038/s41467-023-44515-1

Sun, Z., Liu, S., Li, Y., & Ma, C. (2023). Expedited editorial decision in COVID-19 pandemic. Journal of Informetrics, 17(1), 101382. https://doi.org/10.1016/j.joi.2023.101382

Szomszor, M., Pendlebury, D. A., & Adams, J. (2020). How much is too much? The difference between research influence and self-citation excess. Scientometrics, 123(2), 1119–1147. https://doi.org/10.1007/s11192-020-03417-5

Tong, S., Chen, F., Yang, L., & Shen, Z. (2023). Novel utilization of a paper-level classification system for the evaluation of journal impact: An update of the CAS Journal Ranking. Quantitative Science Studies, 4(4), 960–975.

Wahid, N., Warraich, N. F., & Tahira, M. (2022). Factors influencing scholarly publication productivity: A systematic review. Information Discovery and Delivery, 50(1), 22–33. https://doi.org/10.1108/IDD-04-2020-0036

Yu, N., Dong, Y., & de Jong, M. (2022). A helping hand from the government? How public research funding affects academic output in less-prestigious universities in China. Research Policy, 51(10), 104591. https://doi.org/10.1016/j.respol.2022.104591

Zhang, L., & Ma, L. (2023). Is open science a double-edged sword?: Data sharing and the changing citation pattern of Chinese economics articles. Scientometrics, 128(5), 2803–2818.

Zhang, X., Yin, D., Tang, L., & Zhao, H. (2024). Does academic engagement with industry come at a cost for early career scientists? Evidence from high-tech enterprises’ Ph.D. funding programs. Information Processing & Management, 61(3), 103669. https://doi.org/10.1016/j.ipm.2024.103669

Zheng, E. T., Fang, Z., & Fu, H. Z. (2024). Is gold open access helpful for academic purification? A causal inference analysis based on retracted articles in biochemistry. Information Processing & Management, 61(3), 103640.

Acknowledgements

This work is supported by Teachers Research Foundation Project of Nanjing University of Posts and Telecommunications (NYY222042), the Youth Program of National Natural Science Foundation of China (72404144), and the Faculty Research Grant (DB24A8) at Lingnan University.

Funding

Open Access Publishing Support Fund provided by Lingnan University.

Author information

Contributions

Zhuanlan Sun: Conceptualization, Methodology, Formal analysis, Writing–original draft, Writing–review & editing. Chenwei Zhang: Methodology, Formal analysis, Writing–review & editing. Ka Lok Pang: Methodology, Formal analysis, Writing–original draft. Ying Tang: Formal analysis. Yiwei Li: Conceptualization, Writing–review & editing, Project administration.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, Z., Zhang, C., Pang, K.L. et al. Do Changes in Journal Rank Influence Publication Output? Evidence from China. Scientometrics (2024). https://doi.org/10.1007/s11192-024-05167-0

DOI: https://doi.org/10.1007/s11192-024-05167-0