Abstract

In the evaluation of scientific publications’ impact, the interplay between intrinsic quality and non-scientific factors remains a subject of debate. While peer review traditionally assesses quality, bibliometric techniques gauge scholarly impact. This study investigates the role of non-scientific attributes alongside quality scores from peer review in determining scholarly impact. Leveraging data from the first Italian Research Assessment Exercise (VTR 2001–2003) and Web of Science citations, we analyse the relationship between quality scores, non-scientific factors, and the short- and long-term impact of publications. Our findings shed light on the significance of non-scientific elements overlooked in peer review, offering policymakers and research management insights for choosing evaluation methodologies. The sections that follow review the debate, identify non-scientific influences, detail the methodology, present the results, and discuss their implications.

Introduction

At equivalent cost, does the product of superior quality become the top seller among those fulfilling a specific need? Probably, but not necessarily. Is it solely product quality that influences consumer choices? Certainly not. The service integrated into or associated with the product, packaging design, distribution channel, promotion, and general marketing are factors that can enhance the product’s value and/or the consumer’s perception of it. The ultimate goal for a company seeking to maximise profits for its shareholders is not only to produce better goods or services than its competitors but also to sell more (at an equivalent cost). Therefore, the company also invests in activities complementary to those typically aimed at increasing the intrinsic quality of the product.

With the necessary modifications, the same reasoning applies to public research organizations (PROs): the taxpayer, i.e., their shareholder, and consequently the policymakers and top management overseeing them, should aim at maximising the socio-economic impact of research expenditures rather than solely focusing on the quality of research output. Notably, many of these institutions have established industrial liaison and technology licensing offices (equivalent to the marketing and sales functions of private companies operating in the market) to promote cross-sector knowledge transfer (social impact). Researchers increasingly turn to social media to increase the speed, reach, and significance of the dissemination of research results within the scientific community (intra-sector knowledge transfer, i.e., scholarly impact). Similar to consumer goods, empirical evidence for scientific research “products” indicates that, in addition to intrinsic quality, various non-scientific factors play a role in determining their value/impact (Mammola et al., 2022; Tahamtan et al., 2016; Xie et al., 2019a, 2019b).

If the impact of research is what PROs should maximise rather than quality, then why resort to evaluation methods and incentive systems based on the assessment of quality through peer review of scientific publications? The latest UK Research Excellence Framework (REF) 2021, the current descendant of the Research Assessment Exercise (RAE) and precursor to other RAEs adopted by an increasing number of countries under various names (e.g., ERA in Australia, PBRF in New Zealand, VQR in Italy, etc.), “is the system for assessing the quality of research in Higher Education Institutions (HEIs) in the UK,” where “the primary outcome of the (evaluation) panels’ work will be an overall quality profile awarded to each submission.” The quality of submitted research outputs in terms of their originality, significance, and rigour constitutes 60 per cent of the overall performance score. In comparison, the social impact “underpinned by excellent research conducted in the submitted unit” accounts for 25 per cent. Additionally, the “vitality and sustainability” of the research environment contribute 15 per cent. The introduction of social impact evaluation in RAEs is relatively recent and conducted through the analysis of a very limited number of case studies. In any case, the assessment of the quality of research output continues to play a primary role both in the final evaluation and in the performance-based allocation of resources to institutions. These exercises often use a combination of methods, but peer review plays a central role in determining the quality of research outputs and, consequently, the overall research performance of institutions and individuals.

The debate on which of the two approaches is preferable for research evaluation has recently been reignited by the Coalition for Advancing Research Assessment (CoARA) initiative (Abramo, 2024; Rushforth, 2023; Torres-Salinas et al., 2023). CoARA advocates that research assessment should be primarily based on qualitative judgment, with peer review playing a central role. It is unequivocal that both approaches have pros and cons. Still, it is important to acknowledge that the two methods measure different attributes of research, one being the quality of scientific output and the other its scholarly impact. The potential difference between the two should be determined by non-scientific factors associated with the publication, which will be the subject of the present study.

This study aims to ascertain to what extent non-scientific factors contribute to determining, in addition to intrinsic quality, the scholarly impact of a research product. To achieve this, we assume that peer review competently measures quality, and citation-based metrics measure the scholarly impact of research products despite the respective limitations of the two methodologies extensively dissected in the literature (Gingras, 2016; Lee et al., 2013).

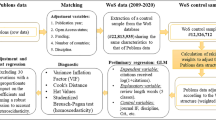

We leverage the knowledge of the quality scores attributed by reviewers to the publications submitted for evaluation in the first Italian RAE, named VTR 2001–2003. For each such publication indexed in the Web of Science (WoS), we measure its citation-based impact. Subsequently, we identify the non-scientific attributes of each publication. Finally, we fit a statistical model to analyse the relationship between the impact of the publication and the quality score assigned by reviewers, controlling for the non-scientific factors. This allows us to gain insight into the weight of non-scientific factors that reviewers may not capture in determining the scholarly impact of a publication.

The results should interest policymakers and research institution management, who must decide whether to measure the quality of research output through peer review or academic impact through bibliometric techniques.

The following section presents the main insights from the debate on peer review vs bibliometric approaches to assess research. In Sect. ‘Non-scientific factors affecting publications impact’, we examine the non-scientific factors that could influence the scholarly impact of publications. In Sect. ‘Data and methods’, we provide details on the data and methods used to measure their relative contribution to impact. In Sect. ‘Results’, we present the results, while in Sect. ‘Discussion and conclusions’, we discuss them and draw conclusions.

Quality or impact, peer review or bibliometrics?

For a research product to impact the scientific community, it must be utilised (OECD/Eurostat, 2018). To ensure this, it cannot remain tacit but must be encoded in written form beforehand to facilitate its dissemination across all potential users. The most commonly adopted written form by scholars is the article published in scientific journals. The utilisation of the knowledge embedded in the publication is primarily determined by its quality, encompassing originality, significance, and rigour (Jabbour et al., 2013; Patterson & Harris, 2009), as well as other non-scientific attributes (Mammola et al., 2022; Tahamtan et al., 2016; Xie et al., 2019a, 2019b). The contribution of these non-scientific factors may allow a publication of lower quality to have a greater impact than a qualitatively superior one. Therefore, if the intention is to measure impact, evaluating quality alone could lead to distorted results. While bibliometric techniques directly measure a publication’s scholarly impact, determined by its quality and non-scientific factors to some extent, the peer review process is intended to judge its quality.

The pros and cons of each approach have been extensively debated in the literature. The major limitation of peer evaluation is the subjectivity in assessments, as highlighted by the not infrequent disagreements among peers (Bertocchi et al., 2015; Kirman et al., 2019; Schroter et al., 2022), especially in the case of interdisciplinary works (Thelwall et al., 2023a). Subjectivity occurs not only in the evaluation of the research product but also in the upstream phase of selecting peers (Horrobin, 1990; Moxham & Anderson, 1992) and in the selection of products to be subjected to evaluation (Abramo et al., 2014). Other limitations include potential conflicts of interest, the natural tendency to evaluate more favourably authors with a higher reputation or affiliated with more prestigious institutions, the difficulty of contextualising the judgment on the work to be evaluated in the state of the art at the time of execution, the challenge of identifying quality reviewers as the number of works to be evaluated increases (Abramo et al., 2013), and last but not least, the costs and time involved in large-scale evaluations such as RAEs. Notably, RAEs that adopt peer evaluation base the comparative assessment of institutions on a limited number of total research products to reduce costs and time, albeit at the expense of inevitable distortions in rankings (Abramo et al., 2010).

Citation-based metrics of the scholarly impact of a publication presuppose that a citation represents a recognition of the influence of the cited publication on the citing one (normative theory of citing) (Bloor, 1976; Merton, 1973; Mulkay, 1976). This assumption is strongly opposed by social constructivists who, on the contrary, believe that persuasion is the primary reason for citing, and therefore, citation-based metrics are not suitable for measuring the impact of scientific work (Brooks, 1985; Knorr-Cetina, 1981; Latour & Woolgar, 1979; MacRoberts & MacRoberts, 1984). The literature has extensively discussed this opposition (Tahamtan & Bornmann, 2018). However, the fact that there is a significant correlation between quality as judged by peers and scholarly impact as measured by citation metrics, both perceived and empirically observed (Jabbour et al., 2013; Patterson & Harris, 2009), supports the normative theory of citing. This does not mean that citations as an impact indicator are free from limitations. In fact, publications are sometimes cited erroneously (e.g., based on a superficial read of the abstract and title). Citations do not always reflect quality, as a work can be cited to demonstrate its faults rather than its merits (negative citations). However, this rare event generally occurs soon after publication and should not disrupt the analyses (Pendlebury, 2009). Citations can also be manipulated by authors for their own benefit (excessive recourse to self-references and cross-citations) or by editors who, in some cases, exert pressure on authors to cite works already published in their journals to increase the journals’ impact indicators (Pichappan & Sarasvady, 2002). Another limitation is the “delayed recognition” phenomenon, which sometimes affects more mature works (Garfield, 1980; Ke et al., 2015; van Raan, 2004). 
Although it has been demonstrated that cases of delayed recognition are quite rare, their effects can still be mitigated by introducing other variables beyond early citations that improve the predictive power of the latter (Xia, Li, & Li, 2023; Abramo et al., 2019a, 2019b). Finally, the use of citations requires reliance on bibliographic repertories, which do not index all publications and, in disciplines such as arts and humanities, their coverage is insufficient to provide a robust representation of research output (Aksnes & Sivertsen, 2019; Archambault et al., 2006; Moed, 2005).

The debate on which of the two approaches is more appropriate is still open and will likely remain so for a long time. There is no definitive answer: the choice between the two, either individually or in combination, will depend on the measurement goal, context, measurement scale, data availability, and resources and time constraints.

In the next section, we will analyse the non-scientific attributes of a scientific publication that, together with its intrinsic quality, determine its scholarly impact.

Non-scientific factors affecting publications impact

A comprehensive body of literature has emerged on the non-scientific factors influencing publication impact, as evidenced in the review by Tahamtan et al. (2016). Xie et al. (2019a, 2019b) identified 66 factors possibly associated with impact.

These factors can be classified into two main categories: those external to the manuscript and those intrinsic to the manuscript. Among the former, notable elements include i) knowledge distribution channels such as the prestige level of the publishing journal (Mammola et al., 2021; Bornmann & Leydesdorff, 2015; Stegehuis et al., 2015) and the type of access (open access, or OA, vs non-open access, or non-OA) to the publication by a potential reader (Yu et al., 2022; Langham-Putrow, Bakker, & Riegelman, 2021; Piwowar et al., 2018; Wang et al., 2015a, 2015b; Gargouri et al., 2010; Antelman, 2004); and ii) communication initiatives in social media (i.e., blogs, Twitter, Facebook, pre-prints) undertaken by the authors to increase the visibility of the manuscript (Özkent, 2022). Intrinsic factors within the manuscript can be further categorised into three groups based on the part of the manuscript they pertain to: i) features of the byline, ii) features of the body of the manuscript, and iii) features of the reference list.

Regarding the features of the byline, factors associated with impact include i) the length of the author list (Abramo & D’Angelo, 2015; Didegah & Thelwall, 2013; Fox et al., 2016; Talaat & Gamel, 2022; Thelwall et al., 2023b; Wuchty et al., 2007); ii) the authors’ academic influence and collaboration network (Hurley et al., 2013; Mammola et al., 2022); iii) authors’ gender or other personal features (Abramo, Aksnes, & D’Angelo, 2021; Andersen et al., 2019; Duch et al., 2012; Aksnes et al., 2011; Larivière et al., 2011; Symonds et al., 2006); iv) the number of institutions collaborating (Sanfilippo, Hewitt, & Mackey, 2018; Narin & Whitlow, 1990); and v) the number of countries involved (Glänzel & De Lange, 2002).

Moving to the features of the body of the text, factors linked to impact include i) document types (e.g., articles, reviews, proceedings papers, books, etc.), which are differently associated with impact and speed of impact (Wang et al., 2013); ii) linguistic attributes of the manuscript (including title and abstract) such as readability (Ante, 2022; Heßler & Ziegler, 2022; Rossi & Brand, 2020; Stremersch et al., 2015; Didegah & Thelwall, 2013; Walters, 2006), (ab)use of jargon and acronyms (Barnett & Doubleday, 2020; Martínez & Mammola, 2021), and eye-catching titles (Heard et al., 2023); iii) the manuscript’s length (Ball, 2008; Elgendi, 2019; Fox et al., 2016; Xie et al., 2019a, 2019b); iv) the degree of interdisciplinarity (Chen et al., 2015; Yegros-Yegros et al., 2015); v) popularity and interest of the subject (Peng & Zhu, 2012); vi) the discipline the manuscript falls under (Larivière & Gingras, 2010; Levitt & Thelwall, 2008); and vii) research funding (Rigby, 2013).

Finally, concerning the reference list, factors associated with impact include i) the length of the reference list (Fox et al., 2016; Mammola et al., 2021); ii) the impact of the cited works (Jiang et al., 2013; Sivadas & Johnson, 2015); iii) the incidence of more recent cited publications (Liu et al., 2022; Mammola et al., 2021); iv) the number of cited fields and their cognitive distance (Wang, Thijs, & Glänzel, 2015).

The non-scientific traits of a manuscript associated with its future impact are numerous, but not all of them are easily measurable in large-scale analyses.

Data and methods

Data

To better understand the methodology employed to address our research question, it is essential to delve into the VTR 2001–2003, which adopted a peer review assessment. The primary objective of the VTR was to evaluate the research conducted by Italian universities and public research institutions during the specified timeframe. Each of the 102 institutions under scrutiny, encompassing 64,000 researchers, was tasked with submitting their research works published during this timeframe. Eligible products included articles, books and chapters, conference proceedings, patents, designs, performances, exhibitions, artifacts, and artworks. Excluded were purely editorial activities, teaching materials, congress abstracts, trials, routine analyses, and internal reports. A restriction was imposed to ensure that the number of products did not exceed 50% of the full-time-equivalent (FTE) research staff of each evaluated institution. Fourteen disciplinary panels, corresponding to 14 disciplinary areas (DAs), and consisting of 151 high-level peers (79 from Italian universities, 37 from abroad, 19 from domestic research institutions, and 16 from industry), assessed a total of 17,329 research products. External experts assisted in evaluations, with at least two experts per product, totalling 6,661 evaluations (Cuccurullo, 2006). Following the conclusion of the peer review process, each research product received a final judgment, expressed on a four-point rating scale: Excellent (E) = 1, denoting products that met the top 20% of international standards; Good (G) = 0.8 for those falling between 80 and 60%; Acceptable (A) = 0.6 for products scoring between 60 and 40%; and Limited (L) = 0.2 for products falling below the 40% threshold. Each product selected by institutions and sent to the agency in charge of the evaluation was classified into a particular subject area.
For the purposes of this paper, we have limited the analysis to the products (9,225 in all) classified in eight of these areas, namely: 1 (Mathematics and computer science); 2 (Physics); 3 (Chemistry); 4 (Earth sciences); 5 (Biology); 7 (Agricultural and veterinary sciences); 8 (Civil engineering); 9 (Industrial and information engineering). Notably, these cover all STEM areas.

These products are mainly journal articles (72%), but there are also books and book chapters (23%), patents (2%), and miscellaneous items (3%).

In certain instances, co-authors from distinct institutions submitted identical products (in a very limited number of cases, in distinct DAs). Among the submitted products, 8,086 were scientific publications indexed in the Web of Science (WoS). Some of them were excluded because they i) had publication dates outside the 2001–2003 period; ii) were hosted in a source lacking an impact factor (IF); or iii) were assigned to different DA panels (as anticipated above) and received different evaluation scores. After eliminating all the aforementioned cases, the finalised dataset comprised 7,305 publications, whose breakdown by DAs is shown in Table 1. This corresponds to 11.8% of the WoS-indexed scientific production of all Italian academics within those DAs, ranging from a minimum of 7.2% in Industrial and information engineering to a maximum of 27.3% in Earth sciences.

Methods

Variables

For the analysis, we focus on “journal articles” only, which are the vast majority of the research products in the dataset (6,889 articles out of 7,305, which represents 94% of the research products). To assess the impact of the articles, we use WoS bibliometric data. Specifically, we calculate the normalised scholarly impact of publication i as its citations accrued up to 31/12/2022, normalised to the reference distribution, i.e., divided by the average number of citations (counted at the same date) received by all WoS publications classified in the same subject category (SC), and indexed in the same year of publication i. Having considered a fixed citation count date, the citation window used to assess the impact of publications varies from a minimum of 19 years to a maximum of 21. In all cases, these are extremely long, which ensures a reliable measurement of long-term scholarly impact. For ease of reading, in the following, we call the scholarly impact simply “impact”, and, being our target variable, we will denote it by Y. In fact, as will become clearer below, we will also consider the “short-term impact” of each publication, measured exactly as indicated above, but taking into account citations received up to 31/12/2005, i.e., with the same citation time window available to the evaluators in the VTR.
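
The normalisation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record fields (`id`, `sc`, `year`, `citations`) and the function name are hypothetical, the input list stands in for the full WoS reference set of each subject category and year, each publication is assumed to belong to a single subject category, and the numbers are toy values rather than VTR data.

```python
from collections import defaultdict


def normalised_impact(publications):
    """Return {id: citations / mean citations of the same (SC, year) group}."""
    totals = defaultdict(lambda: [0, 0])  # (sc, year) -> [citation sum, count]
    for pub in publications:
        key = (pub["sc"], pub["year"])
        totals[key][0] += pub["citations"]
        totals[key][1] += 1

    impact = {}
    for pub in publications:
        total, count = totals[(pub["sc"], pub["year"])]
        mean = total / count
        # A group with no citations at all offers no meaningful reference value.
        impact[pub["id"]] = pub["citations"] / mean if mean > 0 else None
    return impact


# Toy reference set (hypothetical numbers).
toy = [
    {"id": "a", "sc": "Physics", "year": 2001, "citations": 30},
    {"id": "b", "sc": "Physics", "year": 2001, "citations": 10},
    {"id": "c", "sc": "Chemistry", "year": 2002, "citations": 5},
]
scores = normalised_impact(toy)
```

A value above 1 means the publication is cited more than the average publication of the same subject category and year. The short-term variant would use the same function with citation counts truncated at the earlier date.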

We assume that the impact depends mainly on “quality”, denoted with Z, for the measurement of which we rely on the final judgment expressed by reviewers; hence, quality is expressed through the four-point rating scale already described above (1 for “excellent products”; 0.8 for “good” ones; 0.6 for “acceptable” and 0.2 for “limited”).

On the other hand, as the literature suggests, we also assume that impact depends on several non-scientific factors (X1,…, Xp). We group them into three sets of features.

Features of the byline

- The number of authors of the publication.
- The average impact of their 2001–2003 publications (measured as the Y).
- A dummy variable for the presence of an English mother tongue author for modelling the possible “linguistic advantage”.
- The share of female co-authors.
- The number of institutions and countries in the affiliations list. The strong correlation between these two variables leads us to exclude both in favour of a single dummy (“foreign”) equal to 1 when the address list contains more than one country (0, otherwise).

Features related to the publication’s content and venue

- The open-access character, expressed by a single dummy equal to 1 for Green, Hybrid, and Gold OA-tagged publications.
- The length of the publication expressed by the number of pages.
- The impact factor of the hosting source, extracted from the Journal Citation Report 2004 edition and normalised to the reference distribution, i.e., divided by the average impact factor of all sources in the same subject category.
- The degree of interdisciplinarity of the publication measured by the share of cited papers in the bibliography falling in SCs other than the dominant one in the article reference list (Abramo et al., 2018).
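
As an illustration of the interdisciplinarity measure in the last item, the sketch below computes the share of cited papers falling outside the dominant subject category. It assumes, for simplicity, that each cited paper carries exactly one SC label; the function name and the sample reference list are hypothetical.

```python
from collections import Counter


def interdisciplinarity(cited_scs):
    """Share of cited papers whose SC differs from the most frequent (dominant) SC."""
    if not cited_scs:
        return None  # undefined for an empty (or unindexed) reference list
    counts = Counter(cited_scs)
    dominant_count = counts.most_common(1)[0][1]
    return 1 - dominant_count / len(cited_scs)


# Hypothetical reference list: three of five cited papers share the dominant SC.
refs = ["Physics", "Physics", "Physics", "Chemistry", "Mathematics"]
share = interdisciplinarity(refs)
```

A value of 0 indicates that all cited papers belong to a single subject category, and values closer to 1 indicate a more dispersed reference list.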

Features related to the publication’s bibliography

- The length of the reference list (number of cited references).
- The share of references indexed in WoS signalling the extent of recourse to “qualified” literature.
- The share of self-citations in the reference list.
- The average age of cited publications.
- Their average normalised impact (measured as the outcome Y).

Some additional explanations deserve to be given on how the features related to the byline were measured. Specifically, each author of the publications in the dataset has been “disambiguated” using the algorithm proposed by Caron and van Eck (2014). This enables us to attribute their gender and nationality, survey their past scientific production, and measure the average impact of their 2001–2003 publications.

We dropped 220 articles with missing values on the authors’ average impact and 223 with outlying values in the number of authors, pages, or references. Hence, the final dataset for the analysis includes 6,446 articles.

Table 2 shows summary statistics of the variables of the dataset. For better readability, we omit all variables found to be not statistically significant at p = 0.05 in terms of their effect on the response (Y), both here and in the following.

The statistical model

We exploit a linear random effects model to evaluate the extent of the association of the above-mentioned non-scientific features vis-à-vis the quality of a given publication with its scholarly impact (e.g., Rabe-Hesketh & Skrondal, 2022). Specifically, for an article i published in journal j, the model is:

\[{Y}_{ij}=\alpha +\beta {Z}_{ij}+{\gamma }_{1}{X}_{1ij}+\dots +{\gamma }_{p}{X}_{pij}+{u}_{j}+{e}_{ij} \qquad [1]\]

where \({Y}_{ij}\) is the outcome variable, i.e., the article’s (normalised) impact, \({Z}_{ij}\) is a measure of the article’s quality and \({X}_{1ij},\dots ,{X}_{pij}\) are the article’s non-scientific factors.

Model [1] includes a random effect \({u}_{j}\) representing the unobserved factors for journal j and a residual error \({e}_{ij}\). Both \({u}_{j}\) and \({e}_{ij}\) are assumed to be normal random variables with zero mean and unknown standard deviations denoted with \({\sigma }_{u}\) and \({\sigma }_{e}\), respectively. The model is fitted by a GLS algorithm using the xtreg command of Stata 18 with the re option (StataCorp., 2023). Note that the journal effects are assumed to be random; they cannot be fixed effects since this would prevent including the journal IF.

A preliminary analysis based on local polynomials showed that the relationships are linear on the logarithmic scale for all “impact” variables with right-skewed distributions. For this reason, those variables are log-transformed. Values equal to zero are replaced with the minimum positive value before computing the logarithm.
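
The transformation described above can be sketched as follows. The function name and sample values are hypothetical, and the sketch assumes that the minimum positive value is taken within the same variable, which the text does not state explicitly.

```python
import math


def log_transform(values):
    """Natural log of each value, with zeros replaced by the smallest positive value."""
    positives = [v for v in values if v > 0]
    if not positives:
        raise ValueError("at least one positive value is required")
    floor = min(positives)
    return [math.log(v if v > 0 else floor) for v in values]


# Hypothetical normalised impacts, including an uncited article.
impacts = [0.0, 0.5, 1.0, 4.0]
logged = log_transform(impacts)
```

The uncited article receives the same log value as the least-cited article with a positive impact, so no observation is lost to an undefined logarithm.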

The model is specified in two main steps: (i) the bivariate relationships between the outcome and the continuous explanatory variables were explored, as mentioned above, by local polynomials to select a suitable functional form; (ii) the model was fitted first with all explanatory variables, then again with only the statistically significant ones (p < 0.05). Compared to the variables considered for the analysis, listed in Sect. ‘Variables’, the final model excluded the indicator for an English mother tongue author and the interdisciplinarity index. Furthermore, the number of institutions and the number of countries, which are highly correlated and very skewed, have been summarised by an indicator for at least two countries (“Foreign”).

The predictive ability of the models is evaluated by the coefficient of determination R-squared, namely the square of the correlation between the observed outcome and the predicted outcome. To check the distributional assumption on the random effects, we computed their predictions and drew a normal probability plot, confirming the adequacy of the normality assumption.
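
For concreteness, the R-squared defined above, i.e., the squared Pearson correlation between observed and predicted outcomes, can be computed as in the sketch below. The function name and toy vectors are hypothetical; the predictions would in practice come from the fitted model.

```python
def r_squared(observed, predicted):
    """Squared Pearson correlation between observed and predicted values."""
    n = len(observed)
    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    cov = sum((o - mean_o) * (p - mean_p) for o, p in zip(observed, predicted))
    var_o = sum((o - mean_o) ** 2 for o in observed)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov ** 2 / (var_o * var_p)


# Toy example: predictions that rank two of three observations wrongly.
r2 = r_squared([1, 2, 3], [1, 3, 2])
```

Because it is based on a correlation, this measure is unaffected by any linear rescaling of the predictions.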

Results

We fitted three models, each including all the relevant non-scientific factors in Table 2. The response variable is the logarithm of the article’s impact. The explanatory variables based on a measure of impact are also logarithmic. The first model includes only non-scientific factors as predictors, the second model adds the “quality” of the article in terms of the score assigned by the peers, and the third model substitutes the quality scores with the short-term impact as measured by the early citations. The results are reported in Table 3. The R-squared, a measure of predictive ability, is 0.248 when considering only non-scientific factors. It increases slightly to 0.260 when including the score assigned by peers and substantially to 0.485 when adding early citations. Therefore, the value added by the peers is marginal and much smaller than the contribution given by an easily and cheaply calculated bibliometric indicator of short-term impact.

All explanatory variables are statistically significant at the 0.01 level, except for open access in all models and the number of authors in the third one. Moreover, in the second model, the categories 0.2 and 0.6 of the score assigned by peers do not achieve statistical significance (p-values equal to 0.8909 and 0.0650, respectively). This means that, when controlling for non-scientific factors, articles with scores of 0.2 and 0.6 do not exhibit statistically different impacts compared to those with a score of 0.8 (baseline). However, the top score, namely 1, has a highly significant effect (p-value < 0.0001), meaning that reviewers are better at identifying excellent papers than poor ones. Specifically, an article scored 1 by peers has, other things being equal, a long-term scholarly impact about 28% greater than an article scored 0.8. On the other hand, the coefficient of the short-term impact is an elasticity measure; thus, a 1% increase in the early citations is associated with a 0.446% increase in the long-term impact.
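
Since the response is on the logarithmic scale, coefficients translate into percentage effects in two different ways, depending on whether the regressor is a category indicator or is itself log-transformed. The sketch below shows both conversions. The function names are hypothetical, and the dummy coefficient of 0.25 is an illustrative value, not an estimate from Table 3; only the elasticity of 0.446 is taken from the text.

```python
import math


def pct_effect_of_dummy(beta):
    """Percentage change in Y when an indicator goes from 0 to 1 (log response)."""
    return 100 * (math.exp(beta) - 1)


def pct_effect_of_log_regressor(beta, pct_increase=1.0):
    """Percentage change in Y for a given percentage increase in a logged regressor."""
    return 100 * ((1 + pct_increase / 100) ** beta - 1)


# Illustrative coefficient for a category indicator (hypothetical value).
dummy_effect = pct_effect_of_dummy(0.25)

# Elasticity reported in the text for short-term impact.
elasticity_effect = pct_effect_of_log_regressor(0.446)
```

For small changes, the elasticity conversion is almost identical to the coefficient itself, which is why a coefficient of 0.446 can be read directly as a 0.446% increase per 1% increase in early citations.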

Non-scientific factors consistently influence the outcome in all models. Their effects tend to be smaller in magnitude in the model with the early citations. The reason is that the impact measured by early citations is a mediator for the long-term impact, absorbing part of the effects; nonetheless, it is remarkable that most non-scientific factors are relevant, even controlling for the early citations. Specifically, as for the byline, the number of authors is not statistically significant, whereas an increase of 1% in the average author’s impact is associated with a 0.246% increase in the long-term impact, and the presence of a foreign author is associated with a 7.2% increase. As for content and venue, open access is not statistically significant, whereas one additional page is associated with 1.1% more impact, and an increase of 1% in the journal IF corresponds to 0.267% more impact. As for features related to the publication’s bibliography, we found different patterns: the effect on the long-term impact is positive for the number of references (+0.4% for one additional reference) and the average impact of cited articles (+0.101% for 1% more average impact). On the other hand, the relationship is negative for the cited articles’ age (−2.3% for one additional year), the percentage of self-cites (−0.4% for one additional percentage point), and the percentage of cited articles in WoS (−0.4% for one additional point). The estimated coefficients generally align with expectations, except for the negative coefficient associated with the percentage of cited articles in WoS. This unexpected result may warrant further investigation. However, it should be noted that this effect is adjusted for the other explanatory variables, particularly the bibliometric ones.

Considering the residual variances, it is worth noting that inserting the peers’ score in the second model has negligible consequences. On the other hand, inserting the early impact in the third model leaves the journal-level variance unchanged while substantially reducing the article-level variance; thus, the fraction of residual variance due to journals rises from 19.6 to 27.3%. Note that in the third model, 27.3% of the residual variance is at the journal level, i.e., more than one-fourth of the variance due to unmeasured factors is attributable to the journal. This implies a residual correlation of 0.273 among articles published in the same journal, even controlling for the journal IF. Therefore, the journal plays an important role in determining the long-term impact beyond the value of its IF.
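
The journal-level fraction of residual variance discussed above is the ratio of the journal variance to the total residual variance, and it coincides with the residual correlation among articles in the same journal. The sketch below works through the reported shares. It assumes, as a simplification, that the journal-level variance is exactly the same in the second and third models and sets it to an arbitrary unit; the function names are hypothetical.

```python
def journal_share(var_journal, var_article):
    """Fraction of residual variance at the journal level (intraclass correlation)."""
    return var_journal / (var_journal + var_article)


def article_variance_for_share(var_journal, share):
    """Article-level variance implied by a journal variance and its share of the total."""
    return var_journal * (1 - share) / share


var_journal = 1.0  # arbitrary unit, assumed identical across the two models
var_article_m2 = article_variance_for_share(var_journal, 0.196)  # second model
var_article_m3 = article_variance_for_share(var_journal, 0.273)  # third model

# Relative drop in article-level variance implied by the two reported shares.
reduction = 1 - var_article_m3 / var_article_m2
```

Under this assumption, the rise in the journal share from 19.6% to 27.3% corresponds to a reduction of roughly 35% in the article-level residual variance once early citations are included.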

The analysis has been replicated at the disciplinary area level of the articles. Table 4 shows the R-squared for the three fitted models. The overall pattern is confirmed in all areas: once the non-scientific factors are considered, the score assigned by peers barely improves the prediction of the long-term impact, whereas the short-term impact provides a substantial improvement, especially in Physics and Biology.

Discussion and conclusions

The prevailing view among the majority of research assessment scholars and practitioners is that peer review evaluation stands as the gold standard, while citation-based evaluation serves as a quicker and more economical surrogate. Consequently, considerable scholarly attention has been directed towards assessing how effectively bibliometric evaluation approximates peer review, with potential for substitution or complementary use across various domains: i) individual scientific publications (Bertocchi et al., 2015; Bornmann & Leydesdorff, 2013); ii) individual researchers (Cabezas-Clavijo et al., 2013; Vieira & Gomes, 2018), and iii) research institutions (Franceschet & Costantini, 2011; Pride & Knoth, 2018). However, the underlying rationale for the presumption of peer review’s superiority over evaluative bibliometrics remains elusive.

On the empirical front, Abramo et al. (2019a, 2019b) presented evidence that early citations offer better predictions of the long-term scholarly impact of scientific publications than peer-review quality scores. The present study reinforces these findings, even after factoring in the influence of non-scientific factors on impact.

Similarly, theoretical foundations are also wanting. In recent years, policymakers have rightly shifted their focus towards assessing the social impact of research rather than merely its quality. While research output quality serves as a means to an end, the ultimate goal of research activity invariably remains its societal impact. This impact is influenced partly by research institutions and partly by the capacity of both the industrial and public sectors to incorporate research findings into enhanced products and processes. Concerning institutional responsibilities, a clear division between production and dissemination—between researchers and technology licensing offices—is essential. Researchers must not only generate quality results but also ensure their swift dissemination within the scholarly community, utilising non-scientific factors responsibly to enhance scholarly impact.

Recent national evaluation initiatives have partly embraced these new priorities, mandating institutions to demonstrate the societal impact of their research by submitting relevant case studies. However, by retaining peer review evaluation of publications as the primary metric, these assessments continue to prioritise research quality over scholarly impact. Peers, often experts in specific fields, face challenges in assessing the broader scientific implications of research beyond their expertise. Recent studies reveal that citations to publications predominantly originate from diverse domains (Abramo & D’Angelo, 2024).

While the quality of scientific products typically plays a significant role in scholars’ choices, other factors also exert influence. As demonstrated in this study concerning scientific articles, factors such as the reputation of authors and the publication venue, alongside quality, shape their utilisation by the scientific community for advancing knowledge. Additionally, factors like the average impact of cited works and multinational authorships contribute to a lesser extent. Embracing bibliometric methods, which effectively measure scholars’ citations as “consumer purchases,” could advance policymakers’ shared objectives. Replacing “evaluation of research quality” with “evaluation of research impact” in technical parlance would foster greater clarity among professionals and stakeholders regarding evaluation objectives.

Given these research findings and considerations, the recent initiative by CoARA towards predominantly peer-review evaluation systems raises several doubts and concerns that policymakers should carefully address.

Notes

Henceforth, we will use the acronym ‘RAEs’ to generically refer to national research assessment exercises.

The above phrases in quotes are extracted from REF 2021–Panel criteria and working methods, https://www.ref.ac.uk/publications-and-reports/panel-criteria-and-working-methods-201902/

To account for time devoted to teaching activities, 1 professor equals 0.5 FTE.

The WoS classification scheme involves 255 SCs in all.

The SC of a publication corresponds to that of the journal where it is published. For publications in multidisciplinary journals (multiple SCs) the scaling factor is calculated as the average of the standardized values for each subject category.

References

Abramo, G. (2024). The forced battle between peer-review and scientometric research assessment: Why the CoARA initiative is unsound. Research Evaluation. https://doi.org/10.1093/reseval/rvae021

Abramo, G., & D’Angelo, C. A. (2015). The relationship between the number of authors of a publication, its citations and the impact factor of the publishing journal: Evidence from Italy. Journal of Informetrics, 9(4), 746–761.

Abramo, G., D’Angelo, C. A., & Viel, F. (2010). Peer review research assessment: A sensitivity analysis of performance rankings to the share of research product evaluated. Scientometrics, 85(3), 705–720.

Abramo, G., D’Angelo, C. A., & Viel, F. (2013). Selecting competent referees to assess research projects proposals: A study of referees’ registers. Research Evaluation, 22(1), 41–51.

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2014). Inefficiency in selecting products for submission to national research assessment exercises. Scientometrics, 98(3), 2069–2086. https://doi.org/10.1007/s11192-013-1177-3

Abramo, G., D’Angelo, C. A., & Zhang, L. (2018). A comparison of two approaches for measuring interdisciplinary research output: The disciplinary diversity of authors vs the disciplinary diversity of the reference list. Journal of Informetrics, 12(4), 1182–1193.

Abramo, G., D’Angelo, C. A., & Felici, G. (2019a). Predicting long-term publication impact through a combination of early citations and journal impact factor. Journal of Informetrics, 13(1), 32–49.

Abramo, G., D’Angelo, C. A., & Reale, E. (2019b). Peer review vs bibliometrics: Which method better predicts the scholarly impact of publications? Scientometrics, 121(1), 537–554.

Abramo, G., Aksnes, D. W., & D’Angelo, C. A. (2021). Gender differences in research performance within and between countries: Italy vs Norway. Journal of Informetrics, 15(2), 101144. https://doi.org/10.1016/j.joi.2021.101144

Abramo, G., & D’Angelo, C. A. (2024). Analysing the inter-domain vs intra-domain knowledge flows. Working paper.

Aksnes, D. W., & Sivertsen, G. (2019). A criteria-based assessment of the coverage of Scopus and Web of Science. Journal of Data and Information Science, 4(1), 1–21.

Aksnes, D. W., Rorstad, K., Piro, F., & Sivertsen, G. (2011). Are female researchers less cited? A large-scale study of Norwegian scientists. Journal of the American Society for Information Science and Technology, 62(4), 628–636.

Andersen, J. P., Schneider, J. W., Jagsi, R., & Nielsen, M. W. (2019). Gender variations in citation distributions in medicine are very small and due to self-citation and journal prestige. eLife, 8, 1–17.

Ante, L. (2022). The relationship between readability and scientific impact: Evidence from emerging technology discourses. Journal of Informetrics, 16(1), 101252.

Antelman, K. (2004). Do open-access articles have a greater research impact? College & Research Libraries, 65(5), 372–382.

Archambault, É., Vignola-Gagné, É., Côté, G., Larivière, V., & Gingras, Y. (2006). Benchmarking scientific output in the social sciences and humanities: The limits of existing databases. Scientometrics, 68(3), 329–342.

Ball, P. (2008). A longer paper gathers more citations. Nature, 455(7211), 274.

Barnett, A., & Doubleday, Z. (2020). The growth of acronyms in the scientific literature. eLife. https://doi.org/10.7554/eLife.60080

Bertocchi, G., Gambardella, A., Jappelli, T., Nappi, C. A., & Peracchi, F. (2015). Bibliometric evaluation vs informed peer review: Evidence from Italy. Research Policy, 44(2), 451–466.

Bloor, D. (1976). Knowledge and social imagery. Routledge & Kegan Paul.

Bornmann, L., & Leydesdorff, L. (2013). The validation of (advanced) bibliometric indicators through peer assessments: A comparative study using data from InCites and F1000. Journal of Informetrics, 7(2), 286–291.

Bornmann, L., & Leydesdorff, L. (2015). Does quality and content matter for citedness? A comparison with para-textual factors and over time. Journal of Informetrics, 9(3), 419–429.

Brooks, T. A. (1985). Private acts and public objects: An investigation of citer motivations. Journal of the American Society for Information Science, 36(4), 223–229.

Cabezas-Clavijo, Á., Robinson-García, N., Escabias, M., & Jiménez-Contreras, E. (2013). Reviewers’ ratings and bibliometric indicators: Hand in hand when assessing over research proposals? PLoS ONE, 8(6), e68258.

Caron, E., & van Eck, N. J. (2014). Large scale author name disambiguation using rule-based scoring and clustering. In E. Noyons (Ed.), 19th International Conference on Science and Technology Indicators. “Context counts: Pathways to master big data and little data” (pp. 79–86). Leiden: CWTS-Leiden University.

Chen, S., Arsenault, C., & Larivière, V. (2015). Are top-cited papers more interdisciplinary? Journal of Informetrics, 9(4), 1034–1046.

Cuccurullo, F. (2006). La valutazione triennale della ricerca–VTR del CIVR. Analysis, 3(4), 5–7.

Didegah, F., & Thelwall, M. (2013). Which factors help authors produce the highest impact research? Collaboration, journal and document properties. Journal of Informetrics, 7(4), 861–873.

Duch, J., Zeng, X. H. T., Sales-Pardo, M., Radicchi, F., Otis, S., Woodruff, T. K., & Amaral, L. A. N. (2012). The possible role of resource requirements and academic career-choice risk on gender differences in publication rate and impact. PLoS ONE, 7(12), e51332.

Elgendi, M. (2019). Characteristics of a highly cited article: A machine learning perspective. IEEE Access, 7, 87977–87986.

Fox, C. W., Paine, C. T., & Sauterey, B. (2016). Citations increase with manuscript length, author number, and references cited in ecology journals. Ecology and Evolution, 6(21), 7717–7726.

Franceschet, M., & Costantini, A. (2011). The first Italian research assessment exercise: A bibliometric perspective. Journal of Informetrics, 5(2), 275–291.

Garfield, E. (1980). Premature discovery or delayed recognition–Why? Current Contents, 21, 5–10.

Gargouri, Y., Hajjem, C., Larivière, V., Gingras, Y., Carr, L., Brody, T., et al. (2010). Self-selected or mandated, open access increases citation impact for higher-quality research. PLoS ONE, 5(10), e13636.

Gingras, Y. (2016). Scientometrics and research evaluation: Uses and abuses. MIT Press.

Glänzel, W., & De Lange, C. (2002). A distributional approach to multinationality measures of international scientific collaboration. Scientometrics, 54, 75–89.

Heard, S. B., Cull, C. A., & White, E. R. (2023). If this title is funny, will you cite me? Citation impacts of humor and other features of article titles in ecology and evolution. FACETS, 8(1), 1–15.

Heßler, N., & Ziegler, A. (2022). Evidence-based recommendations for increasing the citation frequency of original articles. Scientometrics, 127, 3367–3381.

Horrobin, D. F. (1990). The philosophical basis of peer review and the suppression of innovation. Journal of the American Medical Association, 263(10), 1438–1441.

Hurley, L. A., Ogier, A. L., & Torvik, V. I. (2013). Deconstructing the collaborative impact: Article and author characteristics that influence citation count. Proceedings of the American Society for Information Science and Technology, 50(1), 1–10.

Jabbour, C. J. C., Jabbour, A. B. L. D. S., & de Oliveira, J. H. C. (2013). The perception of Brazilian researchers concerning the factors that influence the citation of their articles: A study in the field of sustainability. Serials Review, 39(2), 93–96.

Jiang, J., He, D., & Ni, C. (2013). The correlations between article citation and references’ impact measures: What can we learn? Proceedings of the American Society for Information Science and Technology, 50(1), 1–4.

Ke, Q., Ferrara, E., Radicchi, F., & Flammini, A. (2015). Defining and identifying sleeping beauties in science. Proceedings of the National Academy of Sciences, 112(24), 7426–7431.

Kirman, C. R., Simon, T. W., & Hays, S. M. (2019). Science peer review for the 21st century: Assessing scientific consensus for decision-making while managing conflict of interests, reviewer and process bias. Regulatory Toxicology and Pharmacology, 103, 73–85.

Knorr-Cetina, K. D. (1981). The manufacture of knowledge: An essay on the constructivist and contextual nature of science. Pergamon Press.

Langham-Putrow, A., Bakker, C., & Riegelman, A. (2021). Is the open access citation advantage real? A systematic review of the citation of open access and subscription-based articles. PLoS ONE. https://doi.org/10.1371/journal.pone.0253129

Larivière, V., & Gingras, Y. (2010). On the relationship between interdisciplinary and scientific impact. Journal of the American Society for Information Science and Technology, 61(1), 126–131.

Larivière, V., Vignola-Gagné, É., Villeneuve, C., Gelinas, P., & Gingras, Y. (2011). Sex differences in research funding, productivity and impact: An analysis of Quebec university professors. Scientometrics, 87(3), 483–498.

Latour, B., & Woolgar, S. (1979). Laboratory life: The social construction of scientific facts. Sage.

Lee, C. J., Sugimoto, C. R., Zhang, G., & Cronin, B. (2013). Bias in peer review. Journal of the American Society for Information Science and Technology, 64(1), 2–17.

Levitt, J. M., & Thelwall, M. (2008). Is multidisciplinary research more highly cited? A macro-level study. Journal of the American Society for Information Science and Technology, 59(12), 1973–1984.

Liu, J., Chen, H., Liu, Z., Bu, Y., & Gu, W. (2022). Non-linearity between referencing behavior and citation impact: A large-scale, discipline-level analysis. Journal of Informetrics, 16(3), 101318.

MacRoberts, M. H., & MacRoberts, B. R. (1984). The negational reference: Or the art of dissembling. Social Studies of Science, 14(1), 91–94.

Mammola, S., Fontaneto, D., Martínez, A., & Chichorro, F. (2021). Impact of the reference list features on the number of citations. Scientometrics, 126(1), 785–799.

Mammola, S., Piano, E., Doretto, A., Caprio, E., & Chamberlain, D. (2022). Measuring the influence of non-scientific features on citations. Scientometrics, 127(7), 4123–4137.

Martínez, A., & Mammola, S. (2021). Specialized terminology reduces the number of citations of scientific papers. Proceedings of the Royal Society B, 288(1948), 20202581.

Merton, R. K. (1973). Priorities in scientific discovery. In R. K. Merton (Ed.), The sociology of science: Theoretical and empirical investigations (pp. 286–324). University of Chicago Press.

Moed, H. F. (2005). Citation analysis in research evaluation. Springer.

Moxham, H., & Anderson, J. (1992). Peer review. A view from the inside. Science and Technology Policy, 7–15.

Mulkay, M. (1976). Norms and ideology in science. Social Science Information, 15(4–5), 637–656.

Narin, F., & Whitlow, E. S. (1990). Measurement of scientific co-operation and coauthorship in CEC-related areas of science. Report EUR 12900, Office for Official Publications of the European Communities.

OECD, & Eurostat. (2018). Oslo Manual 2018: Guidelines for collecting, reporting and using data on innovation activities. OECD Publishing. https://doi.org/10.1787/9789264304604-en

Özkent, Y. (2022). Social media usage to share information in communication journals: An analysis of social media activity and article citations. PLoS ONE, 17(2), e0263725. https://doi.org/10.1371/journal.pone.0263725

Patterson, M. S., & Harris, S. (2009). The relationship between reviewers’ quality-scores and number of citations for papers published in the journal Physics in Medicine and Biology from 2003–2005. Scientometrics, 80(2), 343–349.

Pendlebury, D. A. (2009). The use and misuse of journal metrics and other citation indicators. Archivum Immunologiae et Therapiae Experimentalis, 57(1), 1–11.

Peng, T. Q., & Zhu, J. J. H. (2012). Where you publish matters most: A multilevel analysis of factors affecting citations of internet studies. Journal of the American Society for Information Science and Technology, 63(9), 1789–1803.

Pichappan, P., & Sarasvady, S. (2002). The other side of the coin: The intricacies of author self-citations. Scientometrics, 54(2), 285–290.

Piwowar, H., Priem, J., Larivière, V., Alperin, J. P., Matthias, L., Norlander, B., & Haustein, S. (2018). The state of OA: A large-scale analysis of the prevalence and impact of open access articles. PeerJ. https://doi.org/10.7717/peerj.4375

Pride, D., & Knoth, P. (2018). Peer review and citation data in predicting university rankings, a large-scale analysis. International Conference on Theory and Practice of Digital Libraries, TPDL 2018: Digital libraries for open knowledge, 195–207. https://doi.org/10.1007/978-3-030-00066-0_17, last accessed 22 May 2024.

Rabe-Hesketh, S., & Skrondal, A. (2022). Multilevel and longitudinal modeling using Stata (4th ed.). Stata Press.

Rigby, J. (2013). Looking for the impact of peer review: Does count of funding acknowledgements really predict research impact? Scientometrics, 94, 57–73. https://doi.org/10.1007/s11192-012-0779-5

Rossi, M. J., & Brand, J. C. (2020). Journal article titles impact their citation rates. Arthroscopy, 36, 2025–2029.

Rushforth, A. (2023). Letter: Response to Torres-Salinas et al. on “bibliometric denialism”. Scientometrics, 128, 6781–6784. https://doi.org/10.1007/s11192-023-04842-y

Sanfilippo, P., Hewitt, A. W., & Mackey, D. A. (2018). Plurality in multi-disciplinary research: Multiple institutional affiliations are associated with increased citations. PeerJ, 6, e5664.

Schroter, S., Weber, W. E. J., Loder, E., Wilkinson, J., & Kirkham, J. J. (2022). Evaluation of editors’ abilities to predict the citation potential of research manuscripts submitted to the BMJ: A cohort study. British Medical Journal. https://doi.org/10.1136/bmj-2022-073880

Sivadas, E., & Johnson, M. S. (2015). Relationships between article references and subsequent citations of marketing journal articles. In Revolution in marketing: Market driving changes (pp. 199–205). Springer.

StataCorp. (2023). Stata 18 statistical software. StataCorp LLC.

Stegehuis, C., Litvak, N., & Waltman, L. (2015). Predicting the long-term citation impact of recent publications. Journal of Informetrics, 9(3), 642–657.

Stremersch, S., Camacho, N., Vanneste, S., & Verniers, I. (2015). Unraveling scientific impact: Citation types in marketing journals. International Journal of Research in Marketing, 32(1), 64–77.

Symonds, M. R., Gemmell, N. J., Braisher, T. L., Gorringe, K. L., & Elgar, M. A. (2006). Gender differences in publication output: Towards an unbiased metric of research performance. PLoS ONE, 1(1), e127.

Tahamtan, I., & Bornmann, L. (2018). Core elements in the process of citing publications: Conceptual overview of the literature. Journal of Informetrics, 12(1), 203–216.

Tahamtan, I., SafipourAfshar, A., & Ahamdzadeh, K. (2016). Factors affecting number of citations: A comprehensive review of the literature. Scientometrics, 107(3), 1195–1225.

Talaat, F. M., & Gamel, S. A. (2022). Predicting the impact of no. of authors on no. of citations of research publications based on neural networks. Journal of Ambient Intelligence and Humanized Computing. https://doi.org/10.1007/s12652-022-03882-1

Thelwall, M., Kousha, K., Abdoli, M., Stuart, E., Makita, M., Wilson, P., & Levitt, J. (2023a). Why are co-authored academic articles more cited: Higher quality or larger audience? Journal of the Association for Information Science and Technology, 74, 791–810.

Thelwall, M., Kousha, K., Stuart, E., Makita, M., Abdoli, M., Wilson, P., & Levitt, J. M. (2023b). Does the perceived quality of interdisciplinary research vary between fields? Journal of Documentation, 79(6), 1514–1531.

Torres-Salinas, D., Arroyo-Machado, W., & Robinson-Garcia, N. (2023). Bibliometric denialism. Scientometrics, 128, 5357–5359.

van Raan, A. F. J. (2004). Sleeping beauties in science. Scientometrics, 59(3), 461–466.

Vieira, E. S., & Gomes, J. A. N. F. (2018). The peer-review process: The most valued dimensions according to the researcher’s scientific career. Research Evaluation, 27(3), 246–261.

Walters, G. D. (2006). Predicting subsequent citations to articles published in twelve crime-psychology journals: Author impact versus journal impact. Scientometrics, 69(3), 499–510.

Wang, D., Song, C., & Barabási, A. (2013). Quantifying long-term scientific impact. Science, 342(6154), 127–132. https://doi.org/10.1126/science.1237825

Wang, J., Thijs, B., & Glänzel, W. (2015a). Interdisciplinarity and impact: Distinct effects of variety, balance, and disparity. PLoS ONE, 10(5), e0127298.

Wang, X., Liu, C., Mao, W., & Fang, Z. (2015b). The open access advantage considering citation, article usage and social media attention. Scientometrics, 103(2), 555–564. https://doi.org/10.1007/s11192-015-1547-0

Wuchty, S., Jones, B. F., & Uzzi, B. (2007). The increasing dominance of teams in production of knowledge. Science, 316(5827), 1036–1039.

Xia, W., Li, T., & Li, C. (2023). A review of scientific impact prediction: Tasks, features and methods. Scientometrics, 128(1), 543–585.

Xie, J., Gong, K., Cheng, Y., & Ke, Q. (2019a). The correlation between paper length and citations: A meta-analysis. Scientometrics, 118(3), 763–786.

Xie, J., Gong, K., Li, J., Ke, Q., Kang, H., & Cheng, Y. (2019b). A probe into 66 factors which are possibly associated with the number of citations an article received. Scientometrics, 119(3), 1429–1454.

Yegros-Yegros, A., Rafols, I., & D’Este, P. (2015). Does interdisciplinary research lead to higher citation impact? The different effect of proximal and distal interdisciplinarity. PLoS ONE, 10(8), e0135095. https://doi.org/10.1371/journal.pone.0135095

Yu, X., Meng, Z., Qin, D., Shen, C., & Hua, F. (2022). The long-term influence of open access on the scientific and social impact of dental journal articles: An updated analysis. Journal of Dentistry, 119, 104067. https://doi.org/10.1016/j.jdent.2022.104067

Funding

Open access funding provided by Università degli Studi di Roma Tor Vergata within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

Giovanni Abramo and Ciriaco Andrea D’Angelo are members of the Distinguished Reviewers Board of Scientometrics. The authors declare that they have no other known competing interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abramo, G., D’Angelo, C.A. & Grilli, L. The role of non-scientific factors vis-à-vis the quality of publications in determining their scholarly impact. Scientometrics (2024). https://doi.org/10.1007/s11192-024-05106-z

DOI: https://doi.org/10.1007/s11192-024-05106-z