Abstract

Social networks have become popular among researchers and scientists. Specialized platforms for researchers offer many metrics and indicators which are used to evaluate scientists and assess the strength of their impact. In this article the authors perform a systematic comparison between the main university level ResearchGate (RG) metrics (total RG Score, number of publications and number of affiliated profiles) and ARWU. A tool for acquiring the RG metrics of research units and a framework for calculating alternative university ranks were implemented and tested. As a point of reference the ranking system of the Academic Ranking of World Universities (ARWU, 2019) was used. The authors used a web scraping technique to acquire data. Data analysis was based on Spearman's rho and multiple linear regression (MLR). Ten additional ranks were developed and compared with the benchmark ranking. The k-means clustering method was used to identify the groups of ARWU universities. The research results show that the metrics provided by specialized social networks can be used for the assessment of universities; however, an in-depth evaluation requires a more advanced procedure and indicators to measure many areas of scholarly activity like research, integration, application, teaching, and co-creation. The clustering method also showed that the distances between the ARWU universities, measured in values of RG metrics, are larger at the top of the ranking. University authorities should encourage researchers to use specialized social networks, and train them how to do it, to promote not only their own achievements, but also to increase the impact and recognition of their respective research units. At the end of the article some limitations of the method used and some practical recommendations for university authorities are formulated.

Introduction

Web 2.0 tools have become increasingly important in the research community (Ahmad, 2011; Grabner-Kräuter, 2009; He et al., 2014). Social networks based on Web 2.0 not only allow for the distribution of entertainment, they may also facilitate effective scholarly communication (Chugh & Ruhi, 2018; Görögh et al., 2017; Greifeneder et al., 2018; Harris & Rea, 2019; Hung & Yuen, 2010; Zaidieh, 2012). The current international academic environment is facilitated by many academic social networks (ASN) that are used by both early career and experienced researchers (Mason, 2020), and their usage has increased significantly over the past decade (Jordan, 2019). ASNs are important tools for disseminating research outputs and interacting with other scientists (Kadriu, 2013; Van Noorden, 2014). Social networks have accelerated the process of sharing knowledge (Asnafi, 2017). Specialized social networking platforms have attracted the attention of researchers and scientists because such tools can be effectively used not only for building a portfolio, developing international scientific networks, sharing ideas and convenient communication (Al-Aufi & Fulton, 2014; Greifeneder et al., 2018; Manco, 2019; Meishar-Tal & Pieterse, 2017; Muscanell & Utz, 2017), but also for making comparisons, assessing research and researcher impact (Erdt et al., 2016; Hoffmann et al., 2016; Sugimoto et al., 2017; Yan & Zhang, 2019) and promoting research institutions and developing regions (Asmi & Margam, 2018; Meishar-Tal & Pieterse, 2017; McGillivray & Astell, 2019). Researchers have analysed ASNs, their usefulness and benefits, but have also occasionally criticized their disadvantages and defects (Jacsó, 2005; Jamali, 2017; Kraker & Lex, 2015; Thelwall & Kousha, 2017).
Many studies have confirmed the benefits of ASNs both for researchers and research institutions and indicate that these tools are necessary at present to improve the international visibility of emerging academic systems (Mason, 2020) and measure many areas of scholarly activity like research, integration, application, teaching, and co-creation (Nicholas et al., 2015). The influence of articles and research based on citations is a transparent but slow process and as a consequence, the articles and research teams have to wait before they are truly recognized, therefore altmetric tools are needed to measure their real time impact (Warren et al., 2017; Williams, 2017).

The major known international university rankings include QS World University Rankings, Times Higher Education World University Rankings, and Academic Ranking of World Universities (ARWU). ARWU is one of the most influential, classical world university rankings (Shanghairanking, 2021). The indicators of university performance are also commonly provided by academic social networking platforms. There are many specialized platforms for scientists: Academia.edu, ResearchGate, Google Scholar, Scopus, Web of Science, ResearcherID and ORCID. They offer various features such as convenient mechanisms for sharing data and provide useful indicators for researcher and research unit evaluation (Herman & Nicholas, 2019; Waltman, 2016). At present, one of the most popular ASNs available is ResearchGate (RG) with over 16 million users worldwide. It is used to share, discover, and discuss research (ResearchGate, 2020). RG is recognized by the vast majority of researchers (Van Noorden, 2014). It offers many metrics useful for researcher level evaluation: RG Score, Total Research Interest, citations, h-index, recommendations, reads, research items, projects, questions, answers, following, followers (Shrivastava & Mahajan, 2015; Wiechetek, 2019; Wiechetek et al., 2020). These metrics may be used for recruitment, promotion and a broad researcher evaluation (Orduna-Malea et al., 2017; Yu et al., 2016; Nicholas, Clark, et al., 2016; Martín-Martín, 2016; Thelwall & Kousha, 2017; Gumpenberger et al., 2016; Jordan, 2015). ResearchGate also provides university level metrics: the total RG Score, the number of publications and the number of researchers, which could be used to compare research units, faculties, or universities as a whole, and assist with the process of organizational level evaluation. It appears that RG metrics may be an effective altmetric indicator for active researchers, but they may also be used to measure the scientific outputs of research units.

Higher education units are complicated organizations with a “complex production process”, therefore it is difficult to find simple up to date metrics that reflect their performance in a holistic manner (Johnes, 2016). Institutional performance may be improved with the use of specialized social networking platforms. Research conducted by Ali et al. indicates that there is a tendency for lower-ranked institutions to obtain a lower RG score (Ali et al., 2017). The research also confirms that academic social networking sites provide useful metrics which realistically mirror the research activity of institutions (Yan & Zhang, 2018), therefore ASNs may serve as a source of indicators for the evaluation of research institutions. The visibility of institutions on ResearchGate is closely correlated with the number of academic staff, therefore some analyses indicate that the total RG indicators should not be interpreted as strong indicators of university productivity (Lepori et al., 2018). However, Thelwall and Kousha have shown that ResearchGate indicators correlate moderately well with major university rankings (Thelwall & Kousha, 2015). Many of these indicators are also stable across the different platforms (Martín-Martín et al., 2016), and in many cases universities which are included in the top university ranks occupy similar places in other rankings based on bibliometric indicators (Borrego, 2017). New metrics may be used to add richness and differentiation to the scientific impact assessment (Jamali et al., 2016). If we assume that universities are collections of researchers, we may also assume that the sum of the metrics of individual researchers is also useful for the evaluation of research units.

ResearchGate generates many benefits and added value for researchers and research units, but it has also been criticized at times. Thelwall and Kousha revealed that the RG portal is dominated by recent articles and that some disciplines like arts and humanities, health professions and decision sciences are underrepresented (Thelwall & Kousha, 2015). It has been alleged that specialized social networks have facilitated copyright infringement (Jamali, 2017). Some RG users have falsified data in their publications and the platforms do not have the tools required to fight this issue (Meier & Tunger, 2018a). Finally, researchers have addressed the shortcomings of the RG score: inconsistency with other metrics (Copiello & Bonifaci, 2019), a lack of transparency, incorporating the journal impact factor into single researcher evaluation, obscure indicators (Kraker & Lex, 2015; Meier & Tunger, 2018b), ignoring certain scholarly activities (Nicholas et al., 2016b) and reflecting the social networking environment, rather than the publication impact (Copiello & Bonifaci, 2018). These criticisms must also be considered, and therefore university ranks based on ASN metrics may have some drawbacks.

Scholarly social networks offer many metrics that could support the assembly of richer and more up to date frameworks for assessing article or researcher impact, which could be called scientometrics 2.0 (Priem & Hemminger, 2010). Altmetric impact measures, based mainly on social media activity, are the latest addition to the evaluation toolbox (Bar-Ilan et al., 2018). These methods will play an important role in the future and revolutionize the analysis of the impact of scholarly work (Galligan & Dyas-Correia, 2013; González-Valiente et al., 2016). However, at the present time there is an insufficient number of articles addressing the systematic comparison between RG scores and ARWU. It may be assumed that the reputation of a university is largely made up of the combined reputations of single employees, therefore in this paper an alternative university ranking system based on ResearchGate metrics was developed and compared with the Academic Ranking of World Universities.

In this article the authors present a systematic comparison between RG metrics and ARWU. The remaining parts of the paper are organized as follows. In Sect. 2, the authors describe the method that was used to collect the data and the alternative ranks that were developed. Section 3 presents the results and includes descriptive statistics of the total RG metrics for the analysed universities, an analysis of the developed rankings, and a multiple linear regression model. Finally, Sect. 4 presents the discussion and Sect. 5 the conclusions, limitations and implications.

Research

The research questions

The ResearchGate metrics of the best universities in the world (according to the ARWU ranking) were collected and analysed to answer the following research questions:

- Q1: Which parameters are comparable in the ARWU and ResearchGate based evaluation systems?
- Q2: What are the values of the university level RG metrics of the world's top universities?
- Q3: Are rankings based on the RG metrics positively correlated with ARWU, and can they be used for developing alternative university rankings?
- Q4: Can we predict the university's position in the ARWU ranking based on RG Score metrics?
- Q5: What are the differences between ARWU and RG based ranks by continent?
- Q6: What groups of ARWU universities can be identified using RG metrics?

To answer the research questions, the authors developed a web scraping tool. The collected data were then analysed using the IBM SPSS Statistics tool. Descriptive statistics, Spearman's rho correlation, k-means clustering, and multiple linear regression were used.

The tool

In order to establish whether the metrics provided by the ResearchGate platform could possibly be useful for the evaluation of research organizations, the authors collected the Total RG Score, number of publications and number of researchers for a thousand universities from ARWU 2019.

ResearchGate provides three metrics that can be used to characterize research units: total RG Score (Total RG), members (researchers) and publications. The RG score is one of the fundamental metrics used in our analysis. It is occasionally the subject of accusations of a lack of transparency; however, many publications and our previous research (Wiechetek & Mastalerz, 2019) indicate that the metric has a particularly close correlation with very transparent indicators like the number of citations and reads. The analysis and observations made by the authors also indicate that the biggest impact on the RG score value was made by publications (not only their quantity but also their quality), while the activity level on the portal has a smaller impact. Some research also indicates that researcher level rankings based on the RG score correlate moderately with other academic rankings and therefore also reflect the traditional academic capital (Thelwall & Kousha, 2015), as well as platform engagement and seniority (Hoffmann et al., 2016), and therefore it may be used as an indicator of research performance (Yu et al., 2016).

In order to download these metrics for all universities from ARWU, two R scripts were implemented. The authors used R version 3.6.1 together with RStudio, an integrated development environment. The web scraping technique was used to obtain data from the ARWU web site and the RG platform. At present, the automatic retrieval of data from the Web for academic and business research projects is a common practice (Krotov & Silva, 2018). Most internet search engines use this technique to find specific information on the Internet (Gold & Latonero, 2017). Specialized social network portals also use these tools for gathering information about the new publications of their users. Many scientific papers present different research cases based on data collected using web scraping. Google Scholar lists over 24 thousand articles addressing this technique that were published after 2016. Web scraping offers many new possibilities; however, it could also lead to ethical concerns that are still a “grey area” (Krotov & Silva, 2018; Sugiura et al., 2017).

The script was based on the rvest package for R (Wickham, 2016), which helps with the task of scraping information from a web page. The operation of the script may be described using the following steps:

1. Loading the names of the universities and their RG profiles from the CSV file.
2. Creating the output file for storing the downloaded metrics (if necessary).
3. Determining the new starting point of scraping if the previous collecting process was interrupted.
4. In the main loop of the script, for each university on the list:
   a. The hyperlink to the university profile on RG is created.
   b. The full html page with the university profile data is retrieved.
   c. Metrics values are extracted from the html page.
   d. If the metrics are not found, special information is prepared instead of the metric.
   e. A data line with the university name, metric values and timestamp is prepared and written to the output file.

The script contained a mechanism for resuming unfinished data collection. If the process was interrupted by the user or by the RG server (e.g. 429 Too Many Requests error), then after a restart the tool continued with the data that had not yet been collected. To avoid being blocked by the server, a sleep function at the end of the script paused the process for some time. The script was used both to collect the RG profile names of the ARWU universities and to collect the values of all university level metrics, in two iterations. The first iteration allowed for the collection of the university profile names if they were based on the full university name. In the second iteration, after manually completing the missing profile names, the metrics of the top 1000 ARWU universities were collected.
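The steps above, including the resume mechanism, can be sketched as follows. This is an illustrative Python sketch and not the authors' R script (which relied on rvest); the function names, the column names and the `fetch_metrics` callable are hypothetical, and pausing after every request is an assumption about where the sleep call sits. The retrieval step is passed in as a function so that the control flow can be shown without any claim about ResearchGate's page structure.

```python
import csv
import os
import time
from datetime import datetime, timezone


def count_done(out_path):
    """Return how many data rows the output file already holds (0 if absent)."""
    if not os.path.exists(out_path):
        return 0
    with open(out_path, newline="", encoding="utf-8") as f:
        return max(sum(1 for _ in csv.reader(f)) - 1, 0)  # minus the header row


def collect(universities, out_path, fetch_metrics, pause=0.0):
    """Append one row per university, skipping those written by an earlier run.

    universities  : list of (name, profile) pairs in a fixed order
    fetch_metrics : hypothetical callable, profile -> dict with the keys
                    'rg_score', 'members', 'publications', or None if not found
    pause         : seconds to sleep after each request (assumed placement)
    Returns the number of universities processed in this run.
    """
    start = count_done(out_path)  # step 3: resume point
    needs_header = not os.path.exists(out_path) or os.path.getsize(out_path) == 0
    with open(out_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if needs_header:  # step 2: create the output file if necessary
            writer.writerow(["name", "rg_score", "members", "publications", "timestamp"])
        for name, profile in universities[start:]:  # step 4: main loop
            metrics = fetch_metrics(profile)
            if metrics is None:
                row = [name, "NA", "NA", "NA"]  # placeholder when nothing is found
            else:
                row = [name, metrics["rg_score"], metrics["members"], metrics["publications"]]
            row.append(datetime.now(timezone.utc).isoformat())
            writer.writerow(row)
            f.flush()  # keep finished rows on disk if the run is interrupted
            time.sleep(pause)
    return len(universities) - start
```

Because the resume point is derived from the number of rows already written, the input list has to keep the same order between runs.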

The CSV file containing the acquired data was then imported to Microsoft Excel to be supplemented. For future analysis and visualization, additional columns labelled Country, Continent and Country Code were added. The spreadsheet was used to create alternative rankings for universities based on RG metrics and their combinations. The authors prepared several rankings based on the absolute and average values of RG metrics.

A Microsoft Excel file containing the created rankings was imported to IBM SPSS Statistics 25 to perform a statistical analysis and visualization. Descriptive statistics, a correlation analysis and a multiple linear regression were used to check if rankings based on the ResearchGate metrics are highly and positively correlated with the ARWU rank, and can therefore be used for real time university evaluation.

Variables

The collected dataset consists of the main ResearchGate metrics (Total RG Score, the number of profiles and the number of publications) for all universities presented in the Academic Ranking of World Universities 2019. The data were collected using a web scraping technique in December 2019. The rankings used in the analysis are presented in Table 1.

The first rankings developed were based on the absolute values of the Total RG Score, the number of members on the RG platform and the number of publications, but also on the average number of publications per employee and the average RG Score per researcher. Finally, the last five rankings were based on a combination of RG metrics.
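As a minimal sketch of how such rankings can be derived from the three metrics, the Python snippet below ranks universities by absolute values, by per-profile averages and by one combined indicator (the product of the Total RG Score and the number of publications, which the discussion mentions). The function and key names are hypothetical, the exact definitions in Table 1 are not reproduced here, and the tie rule (tied values share the best rank) is an assumption.

```python
def rank_desc(values):
    """Return 1-based ranks, largest value first; tied values share the best rank."""
    order = sorted(values, reverse=True)
    return [order.index(v) + 1 for v in values]


def alternative_ranks(rows):
    """rows: list of dicts with the keys 'rg_score', 'members', 'publications'."""
    score = [r["rg_score"] for r in rows]
    members = [r["members"] for r in rows]
    pubs = [r["publications"] for r in rows]
    return {
        # rankings on absolute values
        "total_rg_score": rank_desc(score),
        "members": rank_desc(members),
        "publications": rank_desc(pubs),
        # rankings on per-profile averages
        "rg_score_per_member": rank_desc([s / m for s, m in zip(score, members)]),
        "publications_per_member": rank_desc([p / m for p, m in zip(pubs, members)]),
        # one example of a combined indicator
        "score_times_publications": rank_desc([s * p for s, p in zip(score, pubs)]),
    }
```

A small university with few but productive profiles can rank low on the absolute values and high on the averages, which is the contrast the later sections examine.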

Results

ARWU 2019 universities and their RG metrics

To answer the research question about the university level metrics (Q2), descriptive statistics were calculated. The analysed metrics were diverse among the universities. The mean value for the Total RG Score was almost 33,567, the average number of profiles per university was slightly above 6484, while the average number of publications per university was near 48,481. The descriptive statistics of the RG metrics for the ARWU top 1000 universities are presented in Table 2.

The median is lower than the mean for all of the analysed metrics, so the distribution is asymmetrical with a positive skew. For the Total RG Score, the number of profiles and the number of publications, the skewness value is 2.169, 1.839 and 2.425 respectively. The minimal value of the Total RG Score was over three hundred times lower than the maximal value. The least well represented university on RG had only 82 profiles, while the most represented research organization had over 46,000 affiliated researchers on RG. The relative standard deviation (RSD) indicates that for all the metrics the values are widely spread around the mean (RSD > 86%).
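The quantities discussed above can be computed as in the following Python sketch. It is illustrative only: the function name is hypothetical, the skewness formula is the bias-adjusted sample skewness commonly reported by statistical packages (assumed here to match the reported values), and RSD is taken as the sample standard deviation divided by the mean.

```python
import statistics


def describe(values):
    """Mean, median, relative standard deviation (%) and adjusted sample skewness."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    # Adjusted Fisher-Pearson skewness; needs n >= 3 and a non-zero spread.
    skew = n / ((n - 1) * (n - 2)) * sum(((v - mean) / sd) ** 3 for v in values)
    return {
        "mean": mean,
        "median": statistics.median(values),
        "rsd_percent": 100 * sd / mean,
        "skewness": skew,
    }
```

For a right-skewed sample such as `[1, 2, 3, 4, 10]` the median (3) lies below the mean (4) and the skewness is positive, the same pattern reported for the three RG metrics.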

The positive correlations between the values of the three metrics should be noted. The total RG score is higher when the number of researchers (rho = 0.897) and the number of publications (rho = 0.897) are higher. Also, the number of researchers is positively associated with the number of publications (Fig. 1).

Comparison of alternative RG rankings with ARWU

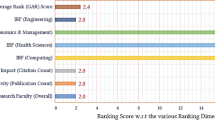

The dispersion charts (Fig. 2) indicate that the least related rankings are those based on the average RG score per university profile and the average number of publications per RG profile. The rankings based on the absolute values of the ResearchGate metrics (Total RG Score, Number of publications, Number of researchers) are more convergent.

Both for the rankings based on one metric and for those developed with a combination of more than one RG metric, matching is better for the first 300 universities. For the middle and the last universities in ARWU we may observe a large degree of scatter.

The Spearman's rho was used to find out if rankings based on the RG metrics are positively correlated with ARWU (Q3). The analysis shows a statistically significant positive correlation between ARWU 2019 and all of the rankings based on a summary of ResearchGate metrics for research units (Table 3).
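For reference, Spearman's rho is the Pearson correlation computed on ranks. The Python sketch below shows the calculation with average ranks for ties; the authors obtained their coefficients in SPSS, so this only illustrates the statistic, and the function names are hypothetical.

```python
def average_ranks(values):
    """1-based ascending ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))
```

When the inputs are already rank positions, as with ARWU and an RG based ranking, the ranking step leaves them unchanged apart from ties.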

Predicting the position of the university

Let x1, x2 and x3 denote the total RG score, the number of university RG profiles and the number of items published on RG by the profiles affiliated with the university, respectively. The position in the rank based on RG metrics (RG_ARWU) is then calculated as follows:

Multiple Linear Regression (MLR) was used to create a model for predicting the position of the university in the ARWU rank according to the summary metrics provided for research units by the ResearchGate portal (Q4). The results of the MLR model are shown in Table 4.

According to the MLR analysis, the final model allowing for an estimation of the position of the university in the world university ranking based on the RG university level metrics is as follows:

The developed model fits the analysed data well (F = 345.248, p < 0.001). The multiple correlation of all the predictors with the place in the ARWU is 0.714. According to the model, 51% of the variance of the position in the ranking is explained by the variance of the three ResearchGate metrics.
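As a consistency check on these figures, the general form of a three-predictor linear model and the relation between R, R² and F can be written out. The coefficients b_0 to b_3 are placeholders for the estimates in Table 4, which are not reproduced here, and the calculation assumes n = 1000 universities and k = 3 predictors.

```latex
\begin{align*}
\mathrm{RG\_ARWU} &= b_0 + b_1 x_1 + b_2 x_2 + b_3 x_3 \\
R^2 &= 0.714^2 \approx 0.51 \\
F &= \frac{R^2 / k}{(1 - R^2) / (n - k - 1)}
   \approx \frac{0.5098 / 3}{0.4902 / 996} \approx 345
\end{align*}
```

Under these assumptions the reported correlation of 0.714 corresponds to the stated 51% of explained variance and to an F value of about 345, in line with the reported F = 345.248; the small remaining gap comes from the rounding of R.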

ARWU members by country and continent

In the ARWU top 1000 rank, the majority were European (38.5%) and Asian (28.9%) universities. Oceania, South America and Africa were the least represented continents; each had ≤ 4.1% of the total number of universities. The very top positions were occupied by North American universities. The most represented countries were the United States (20.6%), China (13.2%), the United Kingdom (6.1%) and Germany (5.1%). All the rest of the countries (27.2%) had a representation of less than 5%.

Considering the continents, we should note that North America has the most universities in the top 400, Asia has the majority in the last 500, while Europe is equally dispersed from the first to the last position (Fig. 3). The South American universities look better when we consider the RG rankings as opposed to ARWU. Asian research units especially those from the lower 500 group on the list are better represented in ARWU than by the results from the absolute indicators provided by RG. However, when we investigate the average RG Score and the average number of publications per profile, they should be placed higher. North American universities especially from the top 200 group are more highly placed by the ARWU ranks than by the results from the average values of the RG metrics. Finally, research units from Oceania, according to the average RG score and the average number of publications should be placed lower in the ranking scheme.

The histogram analysis (Fig. 4) was used to check the differences between ARWU and RG based ranks by continent (Q5). The analysis indicates that according to the ARWU rank, the top positions are occupied mainly by universities from North America and Europe. The middle places are taken by both European and Asian academic centres. Africa, Oceania, and South America have few universities in the ranking list. However, while African and South American universities occupy the last positions, most universities from Oceania occupy positions in the top 400. When we take into account the ranking list based on the Total university RG score, we observe that European universities are better represented than in ARWU (in fact, most of them are placed in the first 500), while Asian institutions are poorly represented in the high and medium positions. Also, African units are moved to better positions.

The American and Oceanian universities occupy very similar places in both the ARWU and RG score-based rankings. When we take into account the total number of affiliated profiles, American and Asian universities decline in their rankings. Asian universities fell from the middle positions to the last places while the American ones declined from the top positions to the middle ones. The universities that benefited the most are located in Africa and South America. These universities rose from the last places to the top positions.

The third university level metric available on the RG platform is the total number of publications. The alternative ranking based on this metric looks very similar to ARWU with two exceptions. The North American universities rose from the top to the very top positions and European academic centres rose from the middle towards the top positions. A large difference may be noted between ARWU and the rankings based on the average value of the RG score and the average number of publications. When we consider the average RG score, African and Oceanian universities fell to the last positions, North American universities declined from the top to the middle and the European institutions fell from the middle to the last places. The only academic centres which gained according to this ranking are located in Asia. When considering the average number of publications, we may also take note of the significant differences between the ARWU and RG based rankings. African and Oceanian universities lost their positions in the middle of the ranking list. European academic centres fell from the top positions to the middle ones, and North American centres also declined from the top positions to those in the middle. South American universities occupied similar positions. The main beneficiaries were Asian academic centres, which rose from the last and middle places to the very top positions.

Groups of ARWU universities according to the ResearchGate metrics

The k-means clustering method was used to identify the groups of universities presented in the ARWU ranking (Q6). Before the clustering was performed the metrics were standardized. Based on the three university level RG metrics (RG Total Score, Number of affiliated researchers and Number of publications), the authors identified three groups of universities (Fig. 5).
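The two steps named here, standardization followed by k-means, can be sketched in Python as below. This is a plain Lloyd-style k-means and not the SPSS procedure the authors ran; the function names are hypothetical, and the deterministic farthest-point initialisation is an assumption of this sketch, chosen so that repeated runs give the same result.

```python
import statistics


def standardize(rows):
    """Z-score each column: subtract the mean, divide by the sample standard deviation."""
    cols = list(zip(*rows))
    means = [statistics.mean(c) for c in cols]
    sds = [statistics.stdev(c) for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, max_iter=100):
    """Return (labels, centroids) after alternating assignment and update steps."""
    # Farthest-point initialisation (assumed): start from the first point, then
    # repeatedly add the point farthest from its nearest chosen centroid.
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids
```

Standardizing first matters because the three metrics live on different scales; without it the metric with the largest raw values would dominate the distances.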

The F test indicated that all three analysed RG metrics were statistically significant for developing the clusters. The clustering procedure allowed for the identification of the following clusters. Cluster 1 can be labelled as “Leaders” (n = 66). It consists mostly of universities with high values of all three analysed metrics. It contains mostly universities from the top 100 of the ARWU rank. However, in this cluster we can also find four universities ranked at the end of the second hundred of the ranking. In this group we can notice a relatively large spread, with several universities with very high metric values. Cluster 2, “Middlers” (n = 304), can be characterized as a set of universities with medium values of all three metrics. The group is moderately consistent. Inside the group, the universities with lower (medium) values of all RG metrics are more similar. The members of this cluster occupy various positions in the ranking, from 6 to 900 in the ARWU rank. Finally, the third identified cluster is the most consistent. The cluster can be described as “Closing”. It contains the largest number of universities (n = 630). The values of the RG metrics of the universities assigned to the third cluster are the lowest, but the most similar. The members of this cluster mostly occupy places beyond the top hundred.

The descriptives of the three identified groups (Table 5) indicate the huge distance between the clusters. The median of Total RG score for the leaders is two and a half times bigger than for Middlers and seven times higher than in Closing cluster.

Leaders also have many more researchers and publications than Middlers and Closing. The clustering analysis indicates that university level metrics provided by RG can be used to identify and evaluate different types of research units. That analysis can be used to find the research unit that could serve as a benchmark, but also to evaluate the progress of the university and assess the distance between research units. The analysis indicates that according to the RG metrics it is not easy to move from the Closing cluster to the Middlers and Leaders groups; however, the differences between universities from the Closing cluster are not big, so changing places in the ranking inside this cluster is possible with a relatively small improvement in the value of the RG metrics.

It is also interesting to look at the cases where the developed clusters do not match the ARWU position. In the Leaders cluster we can notice thirteen universities that occupy places at the end of the ARWU (beyond the first 700). They are mostly Asian and European universities and were incorporated into the cluster because of their huge number of profiles on RG. In the Closing cluster, we can also observe nine universities that according to the ARWU should occupy places in the top 150. These cases are mostly universities from North America and Europe. They were assigned to the Closing cluster mostly because of the small number of ResearchGate profiles affiliated with them. They may be small universities, or only a small part of their employees may have an RG account.

Discussion

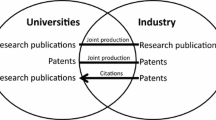

The ARWU methodology analysis indicates that the ranking is based mostly on staff and alumni performance. The ResearchGate portal provides three university level metrics: Total RG score, number of publications and number of researchers. The analysis and observations made by the authors indicate that the most significant impact on the RG score value comes from publications (not only their quantity, but also their quality), while activity on the portal has less influence. Some scientists also indicate that researcher level rankings based on the RG score correlate moderately with other academic rankings and therefore not only reflect the traditional academic capital (Thelwall & Kousha, 2015), but also the platform engagement and seniority (Hoffmann et al., 2016), and therefore it may be an indicator for measuring researcher performance (Yu et al., 2016). In our opinion, the most important and valuable asset of the university is people, particularly researchers, and therefore the aggregation of researcher level metrics may be used to build university level indicators useful for developing a new metric which can support traditional academic rankings. In this case the RG based ranking is similar to ARWU. Both use, among others, the number of citations and published papers (Q1).

The descriptive statistics showed that the RG metrics of the ARWU top 1000 universities are very diverse. In the ranking we have both big universities with thousands of researchers and hundreds of thousands of publications, and small units with only about a hundred employees active on ResearchGate. Therefore, we can conclude that not only big universities can be among the best. However, most of the top ARWU positions are occupied by big organizations with a large number of researchers and publications (Q2).

We may observe a strong positive correlation between ARWU and three rankings based on RG metrics, so they may be used for preparing alternative university rankings (Q3). The closest correlation is between ARWU and the alternative ranking (R_RG_Pub) based on the multiplied number of publications and the Total RG Score value (rho = 0.808), and also between ARWU and the number of publications (rho = 0.802). Surprisingly, the correlation between ARWU and the average number of publications per profile and the average RG score per profile is weak (rho ≤ 0.241). Considering all ARWU top 1000 universities we may conclude that the rankings based on absolute ResearchGate metrics values are more similar to the Academic Ranking of World Universities. According to the correlation analysis, universities that wish for more recognition on ResearchGate should motivate their employees to create and maintain RG profiles. One important factor is the requirement to possess a large number of publications and make them available through the platform. Finally, the quality of the publications is reflected in a high RG Score value, which is also an important factor for research unit evaluation. Taking into account the level of similarity between the ResearchGate metrics-based rankings and the reference ARWU, we can state that university level RG metrics reflect the impact of research organizations.

The developed model based on multiple linear regression shows that the position in the university ranking can be partially estimated by combining the total RG Score, the number of profiles and the number of affiliated publications provided by the ResearchGate portal (Q4). The least significant metric in the model is the number of affiliated ResearchGate profiles. However, 49% of the variability of the position in the ARWU rank must be explained by other factors; these factors are due to the “complex production process” of universities (Johnes, 2016). The RG metrics can only reflect a few areas of university activity related to research and international cooperation; a holistic university evaluation should also include integration, application, teaching, business cooperation, adaptation to market requirements and many other considerations.
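If 49% of the variability is left to other factors, the coefficient of determination of the model would be roughly R² ≈ 0.51. How such a value is obtained can be sketched with an ordinary least squares fit on three predictors. The sketch below is an assumption-laden illustration rather than the authors' implementation: it solves the normal equations directly, uses hypothetical rescaled toy values, and all function names are invented for this example.

```python
def solve_linear_system(a, b):
    """Solve a.x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def fit_ols(rows, y):
    """Return (coefficients, R^2); coefficients[0] is the intercept."""
    design = [[1.0] + list(r) for r in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(bj * dj for bj, dj in zip(beta, d)) for d in design]
    y_mean = sum(y) / len(y)
    ss_res = sum((t - f) ** 2 for t, f in zip(y, fitted))
    ss_tot = sum((t - y_mean) ** 2 for t in y)
    return beta, 1.0 - ss_res / ss_tot

# Hypothetical predictors per university: (total RG Score, profiles, publications),
# rescaled to small numbers; NOT values from the study.
rows = [(1.0, 2.0, 1.5), (2.0, 1.0, 3.0), (3.0, 4.0, 2.0),
        (4.0, 3.0, 5.0), (5.0, 6.0, 4.0), (6.0, 5.0, 7.0)]

# Sanity check: a response that is exactly linear in the predictors gives R^2 = 1.
exact_y = [2.0 + 3.0 * a - 1.0 * b + 0.5 * c for a, b, c in rows]
beta_exact, r2_exact = fit_ols(rows, exact_y)

# A hypothetical ranking position that the predictors explain only in part.
position = [620.0, 540.0, 410.0, 300.0, 260.0, 90.0]
beta_demo, r2_demo = fit_ols(rows, position)
print(round(r2_exact, 6), round(r2_demo, 3))
```

The share of variability attributed to other factors is then 1 − R². With real data the predictors would normally be checked for collinearity first, since total RG Score, profiles and publications tend to grow together with university size.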

Comparing the ARWU and RG based ranks by continent, we may state that alternative rankings based on absolute RG metrics are more similar to ARWU and more accurately reflect the impact of the university than rankings developed with the use of average university level RG indicators. According to these rankings, universities in developing countries, especially those located in Africa and South America, gain the most (compare Asmi & Margam, 2018; Meishar-Tal & Pieterse, 2017; McGillivray & Astell, 2019). The situation of the academic centres in Asia gets worse, while the positions of the North American and European academic centres look similar to those in ARWU. The Asian universities gain when we consider indicators per RG user (Q5).
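One simple way to express "gaining" or "losing" by continent is the mean difference between the ARWU position and the position in an alternative ranking. The following sketch assumes that definition (the article may have used a different aggregation) and works on hypothetical records; the field names and figures are illustrative only.

```python
from collections import defaultdict
from statistics import mean

def mean_rank_shift(universities):
    """Average (ARWU position - alternative position) per continent.

    A positive value means that universities of that continent are placed
    higher, on average, in the alternative ranking than in ARWU.
    """
    shifts = defaultdict(list)
    for u in universities:
        shifts[u["continent"]].append(u["arwu"] - u["alt"])
    return {continent: mean(values) for continent, values in shifts.items()}

# Hypothetical records (NOT data from the study)
sample = [
    {"name": "U1", "continent": "Africa", "arwu": 800, "alt": 650},
    {"name": "U2", "continent": "Africa", "arwu": 900, "alt": 850},
    {"name": "U3", "continent": "Asia", "arwu": 100, "alt": 180},
    {"name": "U4", "continent": "Asia", "arwu": 300, "alt": 340},
    {"name": "U5", "continent": "Europe", "arwu": 50, "alt": 55},
    {"name": "U6", "continent": "Europe", "arwu": 400, "alt": 395},
]

shift_by_continent = mean_rank_shift(sample)
print(shift_by_continent)
```

Running the same function once with positions derived from absolute metrics and once with positions derived from per-profile averages would show which continents benefit from each choice of indicator.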

The k-means clustering method allowed the ARWU Top 1000 universities to be divided into three clusters: Leaders, Middlers and Closing. The analysis indicates that the universities from the end of the ranking are more similar to each other according to the RG metrics, while in the Leaders cluster the differences are huge. The clustering also confirmed the previous analysis and indicated that universities from the top, middle and last positions in ARWU form coherent clusters (Leaders, Middlers and Closing) that consist of universities with similar metric values (Q6).
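The clustering step can be sketched with a plain implementation of Lloyd's k-means algorithm for k = 3. The initialisation used here (centroids spread evenly over the sorted data) is an assumption made to keep the example deterministic; the article does not state how the clustering was initialised or whether the metrics were standardised. The two-dimensional points are hypothetical (total RG Score, publications) pairs in thousands.

```python
def kmeans(points, k, iterations=100):
    """Lloyd's algorithm with deterministic initial centroids."""
    ordered = sorted(points)
    # Assumption: spread the initial centroids evenly across the sorted data.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    labels = None
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        converged = new_labels == labels
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
        if converged:
            break
    return labels, centroids

def mean_distance_to_centroid(points, labels, centroids, cluster):
    """Average Euclidean distance of a cluster's members to its centroid."""
    dists = [
        sum((a - b) ** 2 for a, b in zip(p, centroids[cluster])) ** 0.5
        for p, lab in zip(points, labels) if lab == cluster
    ]
    return sum(dists) / len(dists)

# Hypothetical (total RG Score, publications) pairs in thousands; NOT study data.
points = [
    (900.0, 800.0), (600.0, 500.0), (400.0, 450.0),            # top of the ranking
    (120.0, 100.0), (100.0, 90.0), (90.0, 110.0),              # middle
    (20.0, 15.0), (18.0, 20.0), (22.0, 18.0), (19.0, 17.0),    # end of the ranking
]

labels, centroids = kmeans(points, k=3)
spread = [mean_distance_to_centroid(points, labels, centroids, c) for c in range(3)]
print(labels, [round(s, 1) for s in spread])
```

Because the initial centroids are taken from the sorted data, cluster 0 collects the lowest metric values and cluster 2 the highest. In this toy data the within-cluster spread grows from cluster 0 to cluster 2, which is the pattern the paragraph above describes for the Closing and Leaders groups.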

The performed analysis has several practical implications. We may state that rankings based on RG metrics could be useful for university evaluation (Borrego, 2017; Priem & Hemminger, 2010; Thelwall & Kousha, 2015; Yan & Zhang, 2018), because they produce results similar to those of ARWU. Of course, the similarity is not perfect. The reason for this may be the stress placed on social network activity (Copiello & Bonifaci, 2018), which does not reflect all scholarly activities (Nicholas, Herman, et al., 2016). However, if university authorities wish to obtain a rapid and inexpensive analysis based on real time and stable data (Martín-Martín et al., 2016), they can use the metrics provided by ResearchGate. Of course, this solution also creates some opportunities for manipulation. Manipulation may be caused by researchers themselves, who infringe copyright, fake profile data or generate scores not through research but through social network activity (Jamali, 2017; Meier & Tunger, 2018a; Kraker & Lex, 2015; Thelwall & Kousha, 2015). It may also be caused by university authorities who use specially selected metrics. For example, to show universities from developing countries in a better light they could use the absolute values of the RG indicators, while to enhance the promotion of Asian universities they could use the average values of the university level metrics. To avoid manipulation, the evaluation process should have a clear methodology and be based on input data which is consistent with reality and describes the broad aspects of the functioning of the university.

Conclusions

ResearchGate is one of the leading social networks for researchers (Jamali et al., 2016); therefore it attracts the attention not only of researchers but also of research unit managers who want to build scientific networks and promote their achievements all over the world. The aim of the article was to perform a systematic comparison between RG metrics and ARWU. In some cases the RG based ranking is similar to ARWU. Both are based mostly on staff research outcomes.

The implemented web scraping tool was used to download the RG metrics of the ARWU top 1000 universities. The calculated alternative university ranks were compared with the Academic Ranking of World Universities (ARWU, 2019). Based on the analysis performed, we may conclude that the metrics provided by scholarly social networks can be used not only for single researcher evaluation but also for benchmarking research units and entire universities. Of course, specialized monitoring institutions offer more precise rankings that consider many areas of university operation, such as research, education, business cooperation, internationalization and many others. However, these rankings are prepared and published quite rarely, mainly once a year. The tools and evaluation procedure presented in the article confirm that the metrics provided by specialized social networks are easily accessible and provide up to date information about the impact of research conducted by the university staff; they may therefore be used to develop useful university ranks and new, internet-based evaluation systems.

RG metrics correlate with rankings, which is a fact known in the literature. The existing studies (e.g. Ali et al., 2017; Lepori et al., 2018; Ortega, 2015; Ravenscroft et al., 2017) present other points of view on the problem than our research. Additionally, we did not find published works on topics related directly to the ideas of our paper. We hope our research can complement the different perspectives on university rankings and on the presentation and evaluation of their scores. There is also a direct connection between our research and university practice, which may allow university managers and administrators to improve the activity of their universities in comparison to others. Our conclusions are based both on data analysis and on personal business and academic experience connected with university management, and we are aware of the possibility of using the research results in university practice to raise the competitive position of a university in the global network of academic entities.

Modern universities should look for new solutions to assist them in the task of monitoring and evaluating their performance and progress. New data sources (social media) and technologies (e.g. big data) as well as Web 2.0 and Web 3.0 tools provide a substantial opportunity for economical and rapid data collection and analysis. As stated in the introduction, we agree that the RG Score is not an ideal indicator (Jacsó, 2005; Jamali, 2017; Kraker & Lex, 2015; Thelwall & Kousha, 2017); however, many researchers have confirmed the benefits of specialized social networks both for researchers and research institutions and their usefulness in increasing international visibility, integration and knowledge co-creation (Orduna-Malea et al., 2017; Yu et al., 2016; Nicholas, Clark, et al., 2016; Martín-Martín et al., 2016; Thelwall & Kousha, 2017; Gumpenberger et al., 2016; Jordan, 2015).

The ideal tool for university evaluation does not exist; even traditional, well-known rankings like ARWU are sometimes criticized for relying mostly on awards and prizes, for their dependence on university size, and for problems with transparency and aggregation methodology. Furthermore, the education and research systems are evolving and therefore new sources of information and evaluation methods should be used and developed. The major concern is still the lack of transparency of the RG Score, which uses an unknown algorithm to calculate its value (Copiello, 2019; Kraker & Lex, 2015); this may discourage some parties from using it to build university rankings. Secondly, the authors assumed that the Academic Ranking of World Universities (ARWU) is a reliable and true measure of research quality, while in fact we can identify many problems with university rankings (Hazelkorn, 2009). The correlation analysis showed that rankings based on the total RG Score, the number of profiles and the number of affiliated publications available on ResearchGate are closely correlated with the ARWU rank; therefore university authorities (despite the lack of transparency of the RG Score) should motivate staff to create profiles on scholarly social networks like RG (Gaitán-Angulo et al., 2019), not only to widely disseminate their research results but also to improve the university metrics which can be used for global research unit evaluation.

The main contribution of this paper is to indicate that the traditional methods for solving the university ranking problem could be supplemented with rapid, economical and effective methods based on metrics provided by popular specialized social networking platforms. This may be the next step towards constructing new and more effective indicators for measuring university performance, based on both quantitative and qualitative digital data. Of course, more in-depth research in this area is required to find better solutions; however, the ResearchGate metrics seem to be a good starting point.

Availability of data and material

Data available.

References

Ahmad, A. (2011). Social network sites and its popularity. International Journal of Research and Reviews in Computer Science, 2(2), 522.

Al-Aufi, A. S., & Fulton, C. (2014). Use of social networking tools for informal scholarly communication in humanities and social sciences disciplines. Procedia-Social and Behavioral Sciences, 147, 436–445.

Ali, M. Y., Wolski, M., & Richardson, J. (2017). Strategies for using ResearchGate to improve institutional research outcomes. Library Review, 66(8/9), 726–739. https://doi.org/10.1108/LR-07-2017-0060

Asmi, N. A., & Margam, M. (2018). Academic social networking sites for researchers in Central Universities of Delhi. Global Knowledge, Memory and Communication, 67(1/2), 91–108.

Asnafi, A. R. (2017). Presence of the Iranian Library and the Information Science departments in ResearchGate. DESIDOC Journal of Library & Information Technology, 37(4), 259–263.

Bar‐Ilan, J., Haustein, S., Milojević, S., Peters, I., & Wolfram, D. (2018). Peer review, bibliometrics and altmetrics. Do we need them all? Proceedings of the Association for Information Science and Technology, 55(1), 653–656.

Borrego, Á. (2017). Institutional repositories versus ResearchGate: The depositing habits of Spanish researchers. Learned Publishing, 30(3), 185–192.

Chugh, R., & Ruhi, U. (2018). Social media in higher education: A literature review of Facebook. Education and Information Technologies, 23(2), 605–616.

Copiello, S. (2019). Research Interest: Another undisclosed (and redundant) algorithm by ResearchGate. Scientometrics, 120(1), 351–360.

Copiello, S., & Bonifaci, P. (2018). A few remarks on ResearchGate score and academic reputation. Scientometrics, 114(1), 301–306.

Copiello, S., & Bonifaci, P. (2019). ResearchGate Score, full-text research items, and full-text reads: A follow-up study. Scientometrics, 119(2), 1255–1262.

Empiricaldata. (2019). A guide to ethical web scraping. https://www.empiricaldata.org/dataladyblog/author/jami-empirical. Accessed 23 Oct 2020.

Erdt, M., Nagarajan, A., Sin, S. C. J., & Theng, Y. L. (2016). Altmetrics: An analysis of the state-of-the-art in measuring research impact on social media. Scientometrics, 109(2), 1117–1166.

Gaitán-Angulo, M., Vásquez, C., Torres, M., & Viloria, A. (2019). Determinants of ResearchGate (RG) Score for the Top100 of Latin American Universities at Webometrics. In Data mining and big data: 4th international conference, DMBD 2019, Chiang Mai, Thailand, July 26–30, 2019, Proceedings (Vol. 1071, p. 320). Springer.

Galligan, F., & Dyas-Correia, S. (2013). Altmetrics: Rethinking the way we measure. Serials Review, 39(1), 56–61.

Gold, Z., & Latonero, M. (2017). Robots welcome: Ethical and legal considerations for web crawling and scraping. Wash. JL Tech. & Arts, 13, 275.

González-Valiente, C. L., Pacheco-Mendoza, J., & Arencibia-Jorge, R. (2016). A review of altmetrics as an emerging discipline for research evaluation. Learned Publishing, 29(4), 229–238.

Görögh, E., Vignoli, M., Gauch, S., Blümel, C., Kraker, P., Hasani-Mavriqi, I., Luzi, D., Walker, M., Toli, E., & Sifacaki, E. (2017). Opening up new channels for scholarly review, dissemination, and assessment. In Proceedings of the 13th international symposium on open collaboration, 1–11.

Grabner-Kräuter, S. (2009). Web 2.0 social networks: The role of trust. Journal of Business Ethics, 90(4), 505–522.

Greifeneder, E., Pontis, S., Blandford, A., Attalla, H., Neal, D., & Schlebbe, K. (2018). Researchers’ attitudes towards the use of social networking sites. Journal of Documentation, 74(1), 119–136.

Gumpenberger, C., Glänzel, W., & Gorraiz, J. (2016). The ecstasy and the agony of the altmetric score. Scientometrics, 108(2), 977–982.

Harris, A. L., & Rea, A. (2019). Web 2.0 and virtual world technologies: A growing impact on IS education. Journal of Information Systems Education, 20(2), 3.

Hazelkorn, E. (2009). Rankings and the battle for world-class excellence: Institutional strategies and policy choices. Higher Education Management and Policy, 21(1), 1–22.

He, X., Gao, M., Kan, M. Y., Liu, Y., & Sugiyama, K. (2014). Predicting the popularity of web 2.0 items based on user comments. In Proceedings of the 37th international ACM SIGIR conference on Research & development in information retrieval (pp. 233–242).

Herman, E., & Nicholas, D. (2019). Scholarly reputation building in the digital age: An activity-specific approach. Review article. El Profesional de la Información (EPI), 28(1), 1–31.

Hoffmann, C. P., Lutz, C., & Meckel, M. (2016). A relational altmetric? Network centrality on Research Gate as an indicator of scientific impact. Journal of the Association for Information Science and Technology, 67(4), 765–775.

Hung, H. T., & Yuen, S. C. Y. (2010). Educational use of social networking technology in higher education. Teaching in Higher Education, 15(6), 703–714.

Jacsó, P. (2005). Google Scholar: The pros and the cons. Online Information Review, 29(2), 208–214.

Jamali, H. R. (2017). Copyright compliance and infringement in ResearchGate full-text journal articles. Scientometrics, 112(1), 241–254.

Jamali, H. R., Nicholas, D., & Herman, E. (2016). Scholarly reputation in the digital age and the role of emerging platforms and mechanisms. Research Evaluation, 25(1), 37–49.

Johnes, J. (2016). Performance indicators and rankings in higher education.

Jordan, K. (2015). Exploring the ResearchGate score as an academic metric: Reflections and implications for practice, 1–3.

Jordan, K. (2019). From social networks to publishing platforms: A review of the history and scholarship of academic social network sites. Frontiers in Digital Humanities, 6, 5.

Kadriu, A. (2013). Discovering value in academic social networks: A case study in ResearchGate. In Proceedings of the ITI 2013 35th international conference on information technology interfaces (pp. 57–62).

Kraker, P., & Lex, E. (2015). A critical look at the ResearchGate score as a measure of scientific reputation. In Proceedings of the quantifying and analysing scholarly communication on the web workshop (ASCW’15), Web Science conference (pp. 7–9).

Krotov, V., & Silva, L. (2018). Legality and ethics of web scraping, https://www.researchgate.net/profile/Vlad_Krotov/publication/324907302_Legality_and_Ethics_of_Web_Scraping/links/5aea622345851588dd8287dc/Legality-and-Ethics-of-Web-Scraping.pdf. Accessed 23 Oct 2020.

Kumar, R., Novak, J., & Tomkins, A. (2010). Structure and evolution of online social networks. In Link mining: Models, algorithms, and applications (pp. 337–357). New York, NY: Springer.

Lepori, B., Thelwall, M., & Hoorani, B. H. (2018). Which US and European Higher Education Institutions are visible in ResearchGate and what affects their RG score? Journal of Informetrics, 12(3), 806–818.

Manco, A. (2019). The use of social networking sites for scholarly communication by emergent social scientists: An affordances approach. Young Information Scientist, 4, 17–30.

Martín-Martín, A., Orduna-Malea, E., Ayllón, J. M., & Lopez-Cozar, E. D. (2016). The counting house: Measuring those who count. Presence of bibliometrics, scientometrics, informetrics, webometrics and altmetrics in the Google Scholar citations, Researcherid, ResearchGate, Mendeley & Twitter. arXiv preprint arXiv:1602.02412. https://arxiv.org/pdf/1602.02412.

Mason, S. (2020). Adoption and usage of Academic Social Networks: A Japan case study. Scientometrics, 122(3), 1751–1767.

McGillivray, B., & Astell, M. (2019). The relationship between usage and citations in an open access mega-journal. Scientometrics, 121(2), 817–838.

Meier, A., & Tunger, D. (2018a). Investigating the transparency and influenceability of altmetrics using the example of the RG score and the ResearchGate platform. Information Services & Use, 38(1–2), 99–110.

Meier, A., & Tunger, D. (2018b). Survey on opinions and usage patterns for the ResearchGate platform. PLoS ONE, 13(10), e0204945.

Meishar-Tal, H., & Pieterse, E. (2017). Why do academics use academic social networking sites? International Review of Research in Open and Distributed Learning, 18(1), 1–22.

Muscanell, N., & Utz, S. (2017). Social networking for scientists: an analysis on how and why academics use ResearchGate. Online information review.

Nicholas, D., Clark, D., & Herman, E. (2016). ResearchGate: Reputation uncovered. Learned Publishing, 29(3), 173–182.

Nicholas, D., Herman, E., & Clark, D. (2016). Scholarly reputation building: How does ResearchGate Fare? International Journal of Knowledge Content Development & Technology, 6(2), 67.

Nicholas, D., Herman, E., Jamali, H., Rodríguez-Bravo, B., Boukacem-Zeghmouri, C., Dobrowolski, T., & Pouchot, S. (2015). New ways of building, showcasing, and measuring scholarly reputation. Learned Publishing, 28(3), 169–183.

Orduna-Malea, E., Martín-Martín, A., Thelwall, M., & López-Cózar, E. D. (2017). Do ResearchGate Scores create ghost academic reputations? Scientometrics, 112(1), 443–460.

Ortega, J. L. (2015). Relationship between altmetric and bibliometric indicators across academic social sites: The case of CSIC’s members. Journal of Informetrics, 9(1), 39–49. https://doi.org/10.1016/j.joi.2014.11.004

Priem, J., & Hemminger, B. H. (2010). Scientometrics 2.0: New metrics of scholarly impact on the social Web. First Monday, 15(7).

Ravenscroft, J., Liakata, M., Clare, A., & Duma, D. (2017). Measuring scientific impact beyond academia: An assessment of existing impact metrics and proposed improvements. PLoS ONE, 12(3), Article e0173152. https://doi.org/10.1371/journal.pone.0173152

ResearchGate (2020). ResearchGate home page https://www.researchgate.net/about. Accessed 04 Mar 2020.

Shanghairanking (2021). Shanghairanking methodology http://www.shanghairanking.com/ARWU-Methodology-2020.html. Accessed 15 Apr 2021.

Shrivastava, R., & Mahajan, P. (2015). Relationship amongst ResearchGate altmetric indicators and Scopus bibliometric indicators. New Library World, 116(9/10), 564–577. https://doi.org/10.1108/NLW-03-2015-0017

Sugimoto, C. R., Work, S., Larivière, V., & Haustein, S. (2017). Scholarly use of social media and altmetrics: A review of the literature. Journal of the Association for Information Science and Technology, 68(9), 2037–2062.

Sugiura, L., Wiles, R., & Pope, C. (2017). Ethical challenges in online research: Public/private perceptions. Research Ethics, 13(3–4), 184–199.

Thelwall, M., & Kousha, K. (2015). ResearchGate: Disseminating, communicating, and measuring Scholarship? Journal of the Association for Information Science and Technology, 66(5), 876–889.

Thelwall, M., & Kousha, K. (2017). ResearchGate articles: Age, discipline, audience size, and impact. Journal of the Association for Information Science and Technology, 68(2), 468–479.

Van Noorden, R. (2014). Online collaboration: Scientists and the social network. Nature News, 512(7513), 126.

Vitak, J., Shilton, K., & Ashktorab, Z. (2016). Beyond the Belmont principles: ethical challenges, practices, and beliefs in the online data research community. In Proceedings of the 19th ACM conference on computer-supported cooperative work & social computing (pp. 941–953).

Waltman, L. (2016). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391.

Warren, H. R., Raison, N., & Dasgupta, P. (2017). The Rise of Altmetrics. JAMA, 317(2), 131–132.

Wickham, H. (2016). Package ‘Rvest’. https://cran.r-project.org/web/packages/rvest/rvest.pdf.

Wiechetek, Ł., & Mastalerz, M. W. (2019). Using specialized social media networks by academics employed in Polish business schools. A study on ResearchGate.

Wiechetek, Ł. (2019). Educators and academics in specialized social networks. Comparison of Google Scholar and ResearchGate usage by business researchers of MCSU. EDULEARN19 Proceedings, 8039–8051.

Wiechetek, Ł., Phusavat, K., & Pastuszak, Z. (2020). An analytical system for evaluating academia units based on metrics provided by academic social network. Expert Systems with Applications, 113608.

Williams, A. E. (2017). Altmetrics: an overview and evaluation. Online Information Review.

Yan, W., & Zhang, Y. (2018). Research universities on the ResearchGate social networking site: An examination of institutional differences, research activity level, and social networks formed. Journal of Informetrics, 12(1), 385–400.

Yan, W., & Zhang, Y. (2019). Disciplinary comparisons of academic social networking site use by Chinese researchers. Proceedings of the Association for Information Science and Technology, 56(1), 825–826.

Yu, M. C., Wu, Y. C. J., Alhalabi, W., Kao, H. Y., & Wu, W. H. (2016). ResearchGate: An effective altmetric indicator for active researchers? Computers in Human Behavior, 55, 1001–1006.

Zaidieh, A. J. Y. (2012). The use of social networking in education: Challenges and opportunities. World of Computer Science and Information Technology Journal (WCSIT), 2(1), 18–21.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wiechetek, Ł., Pastuszak, Z. Academic social networks metrics: an effective indicator for university performance?. Scientometrics 127, 1381–1401 (2022). https://doi.org/10.1007/s11192-021-04258-6

DOI: https://doi.org/10.1007/s11192-021-04258-6

Keywords

- ResearchGate

- Altmetrics

- Web scraping

- Academic social network

- University rank

- University evaluation

- Comparison