Abstract

Probability weighting functions relate objective probabilities and their subjective weights, and play a central role in modeling choices under risk within cumulative prospect theory. While several different parametric forms have been proposed, their qualitative similarities make it challenging to discriminate among them empirically. In this paper, we use both simulation and choice experiments to investigate the extent to which different parametric forms of the probability weighting function can be discriminated using adaptive design optimization, a computer-based methodology that identifies and exploits model differences for the purpose of model discrimination. The simulation experiments show that the correct (data-generating) form can be conclusively discriminated from its competitors. The results of an empirical experiment reveal heterogeneity between participants in terms of the functional form, with two models (Prelec-2, Linear-in-Log-Odds) emerging as the most common best-fitting models. The findings shed light on assumptions underlying these models.

Similar content being viewed by others

Notes

CPT allows for different decision weights for gains and losses. In this study, we focus only on gains in order to simplify the analysis and focus more precisely on probability weighting. The extension to the case of losses and mixed gambles is straightforward.

CPT always satisfies stochastic dominance, so presenting stochastically dominated stimuli would not help to discriminate between functional forms of CPT. However, in principle, this restriction of the choice-stimulus space could be relaxed to compare other models that do not satisfy stochastic dominance.

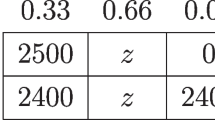

Discriminating stimuli were identified by computing the utility of each gamble, under each weighting function, with the specified parameters. The utilities can then be used to generate two vectors of predicted choices across all stimuli, one for each weighting function. Comparing the two vectors reveals the stimuli on which the predicted choices differ.

This estimate counts stimuli only in the MM-triangle with probabilities rounded to the nearest 0.1. Rounding to the nearest 0.05 instead of 0.1 yields a similar estimate, with 201 out of 5940 stimuli discriminating between the two weighting functions depicted in Fig. 2.

All parameter priors were uniform on their permissible ranges except for r in LinLog and s in both Prl2 and LinLog, which were uniform on 0, 2 to avoid using degenerate priors. The bounds are plausible given that the largest reported estimates of r and s that we can find in the literature are r = 1. 59 (Birnbaum and Chavez 1997), and s = 1. 40 (Stott 2006).

The parameter v is assumed to be a function of the three fixed outcome values, x 1, x 2, and x 3, which are set by the experimenter. By setting v = 0. 5 in the simulation, we are assuming that the outcome values were set such that \(\frac {v(x_{2})-v(x_{1})}{v(x_{3})-v(x_{1})}=0.5\). In an actual experiment, the experimenter would need to set x 1, x 2 and x 3without foreknowledge of a participant’s value function.

The flat-lining of the posterior probabilities in the HILO simulation may be related to the fact that the error rates on each trial were assumed to be iid. If a different form of stochastic error were assumed, in which the error rate on a given choice pair is tied to the utilities of the gambles in that pair (e.g., a “white noise” model), then repeating the same stimuli would help to estimate the error rates more precisely, which in turn would provide information about the utility values. However, implementing this formally would require additional assumptions about the functional form of the white noise (e.g., logit or probit transformation), as well as additional computation for estimating and updating the error parameters.

Jefferys gave rule-of-thumb guidelines for interpreting Bayes factors: 1 to 3.2 is “not worth more than a bare mention,” 3.2 to 10 is “substantial,” 10 to 100 is “strong,” and greater than 100 is decisive. These cutoffs can be converted to posterior probabilities by transforming the odds ratio into a probability, as \(p=\frac {BF}{1+BF}\). For example, a Bayes factor of 100 is equivalent to a posterior probability of \(\frac {100}{101}=0.9901\).

Posterior probabilities were also computed with the inclusion of a “null” model, in which choices are assumed to be made at random (e.g., choices are made based on the flip of a coin: A for heads, B for tails). Inclusion of the null model as a candidate only affected the final posterior probability for one participant (15), for whom the posterior probability of the null model was 0.47. This could be the result of the participant misunderstanding the instructions, failing to pay attention to the stimuli, choosing randomly, or somehow otherwise malingering.

10 Interestingly, for participants 9 and 13 the best fitting model was not the model with the highest posterior probability. This is because the posterior probability takes into account model complexity as well as model fit (Myung 2000). For 9 and 13, the relative simplicity of the EU functional form outweighed the superior fit of the more complex competitors.

References

Abdellaoui, M. (2000). Parameter-free elicitation of utilities and probability weighting functions. Management Science, 46, 1497–1512.

Abdellaoui, M., Bleichrodt, H., Kammoun, H. (2013). Do financial professionals behave according to prospect theory? An experimental study. Theory and Decision, 74(3), 411–429.

Aczél, J., & Luce, R. (2007). A behavioral condition for Prelec’s weighting function on the positive line without assuming w(1) = 1. Journal of Mathematical Psychology, 51(2), 126–129.

Akaike, H. (1973). Information theory and an extension of the maximum likelihood principle. In Petrov, B.N., & Csaki, F. (Eds.) Second international symposium on information theory, (pp. 267–281). Budapest: Academiai Kiado.

Al-Nowaihi, A., & Dhami, S. (2006). A simple derivation of Prelec’s probability weighting function. Journal of Mathematical Psychology, 50(6), 521–524.

Allais, M. (1953). Le comportement de l’homme rationnel devant le risque: Critique des postulats et axiomes de l’école Américaine. Econometrica: Journal of the Econometric Society, 21(4), 503–546.

Baucells, M., & Heukamp, F. (2009). Probability and time tradeoff. Theory and Decision.

Birnbaum, M. (2008). New paradoxes of risky decision making. Psychological Review, 115(2), 463–500.

Birnbaum, M. (2012). A statistical test of independence in choice data with small samples. Judgment and Decision Making, 7(1), 97–109.

Birnbaum, M., & Chavez, A. (1997). Tests of theories of decision making: violations of branch independence and distribution independence. Organizational Behavior and Human Decision Processes, 71(2), 161–194.

Birnbaum, M., & Gutierrez, R. (2007). Testing for intransitivity of preferences predicted by a lexicographic semi-order. Organizational Behavior and Human Decision Processes, 104(1), 96–112.

Blavatskyy, P.R. (2007). Stochastic expected utility. Journal of Risk and Uncertainty, 34, 259–286.

Bleichrodt, H., Pinto, J., Wakker, P. (2001). Making descriptive use of prospect theory to improve the prescriptive use of expected utility. Management Science, 47(11), 1498–1514.

Booij, A., & van de Kuilen, G. (2009). A parameter-free analysis of the utility of money for the general population under prospect theory. Journal of Economic Psychology, 30(4), 651–666.

Burns, Z., Chiu, A., Wu, G. (2010). Overweighting of small probabilities. Wiley Encyclopedia of Operations Research and Management Science.

Camerer, C. (2004a). Advances in behavioral economics. Princeton: Princeton University Press.

Camerer, C. (2004b). Prospect theory in the wild: evidence from the field In C. F. Camerer, G. Loewenstein, M. Rabin (Eds.), Advances in behavioral economics (pp. 148–161).

Camerer, C., & Ho, T. (1994). Violations of the betweenness axiom and nonlinearity in probability. Journal of Risk and Uncertainty, 8(2), 167–196.

Cavagnaro, D., Gonzalez, R., Myung, J., Pitt, M. (2013). Optimal decision stimuli for risky choice experiments: an adaptive approach. Management Science, 59(2), 358–375.

Cavagnaro, D., Myung, J., Pitt, M., Kujala, J. (2010). Adaptive design optimization: a mutual information-based approach to model discrimination in cognitive science. Neural Computation, 22(4), 887–905.

Cavagnaro, D., Pitt, M., Myung, J. (2011). Model discrimination through adaptive experimentation. Psychonomic Bulletin and Review, 18(1), 204–210.

Chaloner, K., & Verdinelli, I. (1995). Bayesian experimental design: a review. Statistical Science, 10(3), 273–304.

Chew, S., & Waller, W. (1986). Empirical tests of weighted utility theory. Journal of Mathematical Psychology, 30(1), 55–72.

Conte, A., Hey, J., Moffatt, P. (2011). Mixture models of choice under risk. Journal of Econometrics, 162(1), 79–88.

Daniels, R., & Keller, L. (1990). An experimental evaluation of the descriptive validity of lottery-dependent utility theory. Journal of Risk and Uncertainty, 3(2), 115–134.

Daniels, R., & Keller, L. (1992). Choice-based assessment of utility functions. Organizational Behavior and Human Decision Processes, 52(3), 524–543.

Diecidue, E., Schmidt, U., Zank, H. (2009). Parametric weighting functions. Journal of Economic Theory, 144(3), 1102–1118.

Donkers, B., Melenberg, B., van Soest, A. (2001). Estimating risk attitudes using lotteries: a large sample approach. Journal of Risk and Uncertainty, 22(2), 165–195.

Epper, T., Fehr-Duda, H., Bruhin, A. (2011). Viewing the future through a warped lens: why uncertainty generates hyperbolic discounting. Journal of Risk and Uncertainty, 43(3), 169–203.

Furlong, E., & Opfer, J. (2009). Cognitive constraints on how economic rewards affect cooperation. Psychological Science, 20(1), 11–16.

Gelman, A., Carlin, J.B., Stern, H.S., Rubin, D.B. (2004). Bayesian data analysis, 2nd Edn. Boca Raton: Chapman and Hall/CRC.

Goeree, J., Holt, C., Palfrey, T. (2002). Quantal response equilibrium and overbidding in private-value auctions. Journal of Economic Theory, 104(1), 247–272.

Goldstein, W., & Einhorn, H. (1987). Expression theory and the preference reversal phenomena. Psychological Review, 94(2), 236.

Gonzalez, R., & Wu, G. (1999). On the shape of the probability weighting function. Cognitive Psychology, 38(1), 129–166.

Grinblatt, M., & Han, B. (2005). Prospect theory, mental accounting, and momentum. Journal of Financial Economics, 78(2), 311–339.

Gurevich, G., Kliger, D., Levy, O. (2009). Decision-making under uncertainty-a field study of cumulative prospect theory. Journal of Banking and Finance, 33(7), 1221–1229.

Guthrie, C. (2003). Empirical legal realism: a new social scientific assessment of law and human behavior: prospect theory, risk preference, and the law. Northwestern University Law Review, 97, 1115–1891.

Hey, J. (2005). Why we should not be silent about noise. Experimental Economics, 8(4), 325–345.

Holmes, Jr., R., Bromiley, P., Devers, C., Holcomb, T., McGuire, J. (2011). Management theory applications of prospect theory: accomplishments, challenges, and opportunities. Journal of Management, 37(4), 1069–1107.

Ingersoll, J. (2008). Non-monotonicity of the Tversky-Kahneman probability-weighting function: a cautionary note. European Financial Management, 14(3), 385–390.

Jeffreys, H. (1961). Theory of probability. International series of monographs on physics.

Jullien, B., & Salanié, B. (2000). Estimating preferences under risk: the case of racetrack bettors. Journal of Political Economy, 108(3), 503–530.

Kahneman, D., & Tversky, A. (1979). Prospect theory: an analysis of decision under risk. Econometrica, 47, 263–291.

Karmarkar, U. (1978). Subjectively weighted utility: a descriptive extension of the expected utility model. Organizational Behavior and Human Performance, 21(1), 61–72.

Karmarkar, U. (1979). Subjectively weighted utility and the Allais paradox. Organizational Behavior and Human Performance, 24(1), 67–72.

Kass, R., & Raftery, A. (1995). Bayes factors. Journal of the American Statistical Association, 90(430), 773–795.

Kusev, P., van Schaik, P., Ayton, P., Dent, J., Chater, N. (2009). Exaggerated risk: prospect theory and probability weighting in risky choice. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(6), 1487.

Lattimore, P., Baker, J., Witte, A. (1992). The influence of probability on risky choice: a parametric examination. Technical report, National Bureau of Economic Research.

Levy, J. (2003). Applications of prospect theory to political science. Synthese, 135(2), 215–241.

Liu, C., & Aitkin, M. (2008). Bayes factors: prior sensitivity and model generalizability. Journal of Mathematical Psychology, 52(6), 362–375.

Liu, Y. (1998). Prospect theory: developments and applications in marketing. Technical report, Working Paper. New Brunswick: Rutgers University.

Luce, R. (2001). Reduction invariance and Prelec’s weighting functions. Journal of Mathematical Psychology, 45(1), 167–179.

Luce, R., & Fishburn, P. (1991). Rank- and sign-dependent linear utility models for finite first-order gambles. Journal of Risk and Uncertainty, 4(1), 29–59.

Luce, R., Mellers, B., Chang, S. (1993). Is choice the correct primitive? On using certainty equivalents and reference levels to predict choices among gambles. Journal of Risk and Uncertainty, 6(2), 115–143.

Machina, M. (1982). Expected utility theory without the independence axiom. Econometrica, 50, 277–323.

Marschak, J. (1950). Rational behavior, uncertain prospects, and measurable utility. Econometrica: Journal of the Econometric Society, 18(2), 111–141.

Myung, I. (2000). The importance of complexity in model selection. Journal of Mathematical Psychology, 44(1), 190–204.

Nilsson, H., Rieskamp, J., Wagenmakers, E. (2011). Hierarchical Bayesian parameter estimation for cumulative prospect theory. Journal of Mathematical Psychology, 55(1), 84–93.

Prelec, D. (1998). The probability weighting function. Econometrica, 66(3), 497–527.

Shiffrin, R., Lee, M., Kim, W., Wagenmakers, E. (2008). A survey of model evaluation approaches with a tutorial on hierarchical Bayesian methods. Cognitive Science, 32(8), 1248–1284.

Stott, H. (2006). Cumulative prospect theory’s functional menagerie. Journal of Risk and Uncertainty, 32(2), 101–130.

Tversky, A., & Fox, C. (1995). Weighing risk and uncertainty. Psychological Review, 102(2), 269.

Tversky, A., & Kahneman, D. (1992). Advances in prospect theory: cumulative representation of uncertainty. Journal of Risk and Uncertainty, 5(4), 297–323.

van de Kuilen, G., Wakker, P., Zou, L. (2009). A midpoint technique for easily measuring prospect theory’s probability weighting. Technical report, Working Paper.

Wu, G., & Gonzalez, R. (1996). Curvature of the probability weighting function. Management Science, 42(12), 1676–1690.

Wu, G., & Gonzalez, R. (1998). Common consequence conditions in decision making under risk. Journal of Risk and Uncertainty, 16(1), 115–139.

Zeisberger, S., Vrecko, D., Langer, T. (2012). Measuring the time stability of prospect theory preferences. Theory and Decision, 72(3), 359–386.

Zhang, H., & Maloney, L. (2012). Ubiquitous log odds: a common representation of probability and frequency distortion in perception, action, and cognition. Frontiers in Neuroscience, 6, 1–14. article 1.

Author information

Authors and Affiliations

Corresponding author

Additional information

This research is supported by National Institute of Health Grant R01-MH093838 to J.I.M and M.A.P.

Appendix

Appendix

1.1 Preliminary simulations

The purpose of the following set of simulations is to illustrate the logic of ADO in a simple case, i.e., a case in which it is easy to see why some stimuli are more diagnostic than others. The simple case is discriminating just EU and TK. This case is an ideal starting point because there is already an established body of knowledge about which designs work well for discriminating between these models, which provides a natural benchmark against which to compare the results of the simulations using ADO.

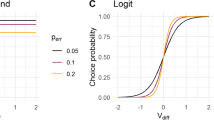

We will present the results of three simulations. In the first two, the data will be generated without stochastic error. This will allow us to focus on the logic of ADO’s selection of stimuli, and to compare the stimuli selected by ADO to those that have been identified in the literature as being diagnostic between EU and TK. In the third simulation, the data will be generated with stochastic error, so we can see how errors in the choice process affect ADO’s selection of stimuli, and its identification of the data-generating model.

1.1.1 Simulation 1: Data generated from TK without stochastic error

In the first simulation, data were generated from TK with v = 0. 5 and r = 0. 71. The TK probability weighting function with r = 0. 71 is depicted on the left side of Fig. 14. The level curves of the CPT utility function in the Triangle, with this particular probability weighting function and v = 0. 5, are depicted on the right side of Fig. 14.

The posterior probabilities of EU and TK across 30 trials of the experiment, and the gamble pairs selected by ADO, are depicted in Fig. 15. Figure 16 shows why the stimuli selected by ADO are optimal for discriminating the generating model (TK) from its competitor (EU). Essentially, ADO has automatically identified stimuli that force the indifference curves to “fan out,” increasing in steepness from right to left.

1.1.2 Data generated from EU without stochastic error

In the second simulation, data were generated from EU with v = 0. 5. The posterior probabilities of EU and TK across 30 trials of the experiment, and the gamble pairs selected by ADO, are depicted in Fig. 17. These stimuli are different than those identified in Simulation 1, which shows that the optimal stimuli depend on the data generating model. In this case, ADO is essentially testing to see if the indifference curves are really parallel across the entire MM-Triangle.

1.1.3 Simulation 2: Data generated from TK with stochastic error

In the third simulation, data were generated from TK with v = 0. 5 and r = 0. 71 and a constant stochastic error rate of 0.25. That is, on each choice instance, there was a 25% chance that the generated choice would be the opposite of that predicted by the true model. The posterior probabilities of EU and TK across 30 trials of the experiment, and the gamble pairs selected by ADO, are depicted in Fig. 18. We see the same pattern of stimuli, but with more variation. The posterior model probability still converges, but not as quickly, and not monotonically.

Results of Simulation 3, in which the generating model was TK(0.5, 0.71) with a stochastic error rate of 0.25. Posterior model probabilities (left) are noisy but strongly favor TK by stage 30. Stimuli selected by ADO (right) resemble those selected in the noiseless case (simulation 1, Fig. 15), with more variation, corresponding to the longer “feeling out” period resulting from the noisy data stream

1.1.4 Summary of preliminary simulations

In the preceding three simulations, ADO successfully discriminated between EU and TK forms of the probability weighting function. But what about the other functional forms: Prl1, Prl2, and LinLog? Would the choice data from these simulations also identify the generating model from among this larger class of candidates? To answer that question, we can restart the simulations with equal prior probabilities of each of those five candidate models, and uniform parameter priors for each model, and then update them based on the same data stream from the preceding simulations (i.e., the same choices at the same stimuli). The resulting progression of posterior probabilities from simulation 3 is shown in Fig. 19. Even after all 30 trials are complete, the posterior probability of TK (the true generating model) is only 0.29, indicating that the generating model has not been identified. Figure 19 suggests that a more refined set of stimuli may be required to discriminate among a larger set of possible functional forms.

Posterior probabilities of EU, TK, Prl1, Prl2, and LinLog based on the data from Simulation 3. Stimuli were optimized to discriminate only EU and TK. The data clearly discriminate TK from EU, but not from the other models, suggesting that a more specialized set of stimuli is required to discriminate among the larger set of models

Rights and permissions

About this article

Cite this article

Cavagnaro, D.R., Pitt, M.A., Gonzalez, R. et al. Discriminating among probability weighting functions using adaptive design optimization. J Risk Uncertain 47, 255–289 (2013). https://doi.org/10.1007/s11166-013-9179-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11166-013-9179-3