Abstract

In the digital age, handwriting literacy has declined to a worrying degree, especially in non-alphabetic writing systems. In particular, Chinese (and also Japanese) handwriters have suffered from character amnesia (提笔忘字), where people cannot correctly produce a character though they can recognize it. Though character amnesia is widespread, there is no diagnostic test for it. In this study, we developed a fast and practical test for an individual’s character amnesia rate, calibrated for adult native speakers of Mandarin. We made use of a large-scale handwriting database, in which 42 native Mandarin speakers each handwrote 1200 characters from dictation prompts (e.g., 水稻的稻, read shui3 dao4 de1 dao4, meaning “rice from the word rice-plant”). After handwriting, participants were presented with the target character and reported whether their handwriting was correct, whether they knew the character but could not fully handwrite it (i.e., character amnesia), or whether they did not understand the dictation phrase. We fitted a two-parameter Item Response Theory model to correct handwriting and character amnesia responses, after excluding the don’t-know responses. Using item characteristics estimated from this model, we investigated the performance of short-form tests constructed with random, maximum discrimination, and diverse difficulty subsetting strategies. We constructed a 30-item test that can be completed in about 15 min and, by repeatedly holding out subsets of participants, estimated that character amnesia assessments from it can be expected to correlate between r = 0.82 and r = 0.89 with amnesia rates in a comprehensive 1200-item test. We suggest that our short test can be used to provide quick assessment of character amnesia for adult Chinese handwriters and can be straightforwardly re-calibrated to prescreen for developmental dysgraphia in children and neurodegenerative diseases in elderly people.

Introduction

Handwriting has been a cornerstone of human civilization (Harari, 2014), and even in the digital age still plays an important role in daily learning and communication. It is still used in many daily activities, for instance writing a birthday card or filling in a banking form. More importantly, it is still the dominant way of learning and assessment for students (Cutler & Graham, 2008; Jones & Hall, 2013). In fact, with the availability of digital pens and handwriting input methods, handwriting now plays an increasingly important role in information processing and communication (e.g., note-taking, annotations and handwritten graphics using digital pens, or handwriting input using fingers on smartphones). However, handwriting is a skill that many students struggle with (McBride, 2019) and may be in danger of being lost (e.g., Jones & Hall, 2013; Medwell & Wray, 2008; Wollscheid et al., 2016), especially in languages with a logographic script such as Chinese. In Chinese-speaking regions, there has been a significant decline in people’s handwriting literacy due to the dominant use of typing, resulting in many Chinese handwriters suffering from what is known as character amnesia (提笔忘字 in Chinese, see for example Almog, 2019; Hilburger, 2016; Huang et al., 2021; Huang, Zhou et al., 2021).

In this paper, we draw on a large-scale Chinese character handwriting database (Cai, Xu & Yu, in prep-b), and use an Item Response Theory model to select 30 characters for use in a test of character amnesia rate. This short diagnostic test can be used in a pencil-and-paper assessment of character amnesia in educational and clinical settings.

Character amnesia in Chinese handwriting

The dominance of digital typing in recent decades has diminished the opportunity for people to handwrite. With increasing use of digital communication, people now rarely use a pen other than a stylus, and keyboard-based input systems dominate Chinese character production. While digital typing resembles handwriting in its sequential spelling of phonemes into graphemes in transparent scripts (e.g., letters in Greek or English), this is not true in logographic writing systems such as Chinese. In the Chinese character-based system, phonemes in the spoken language do not have transparent mappings onto graphemes in writing. Instead, each meaning-carrying Chinese syllable is mapped onto a character. Chinese has thousands of characters, which are in turn combinations of more than 600 orthographic components (i.e., radicals and strokes, documented in the GB13 Character Component Standard, 1997). For example, the character 语 (meaning “speech”) is composed of three radicals (讠, 五, and 口). A radical is further composed of two or more strokes arranged in a two-dimensional space with a particular stroke order (e.g., 讠 decomposes into upper and lower components, which are re-used in other contexts). In addition, there is no one-to-one mapping between phonology and orthography in Chinese. A syllable such as yu3 (the numeral indicates tone) in Mandarin can be realized as different characters, depending on the intended meaning (e.g., 语 meaning “language”, 羽 meaning “feather”, 雨 meaning “rain”, 宇 meaning “eaves”). As a result, handwriting literacy in Chinese requires explicit orthographic-motor knowledge of thousands of characters, which is typically accumulated in childhood through years, if not decades, of rote learning and needs to be constantly consolidated against attrition in adulthood (McBride-Chang & Ho, 2000; Tong & McBride-Chang, 2010).
To make things worse, the dominant form of digital typing of Chinese (at least in mainland China) makes use of a Pinyin romanization system, which generates lists of candidate characters from a phonological transcription entered without tones. For example, the input “yu” will generate candidates such as 鱼 (“fish”), 语 (“language”), 雨 (“rain”), and 玉 (“jade”), from which the intended target can be selected. Such phonology-based input methods render it unnecessary for people to access the radicals and strokes to write a character.
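
As a toy illustration of this point (not a description of any real input method), the sketch below maps a typed Pinyin syllable to a list of candidate characters. The candidate table is hypothetical and holds only the example characters from the text; tone numerals, if typed, are simply ignored. Nothing in the lookup requires knowledge of radicals or strokes, only recognition of the intended character in the list.

```python
import re

# Hypothetical candidate table: toneless Pinyin -> homophonous characters.
# Only the example from the text is included; real input methods use large
# lexicons and rank candidates by frequency and context.
CANDIDATES = {
    "yu": ["鱼", "语", "雨", "玉"],
}


def strip_tone(syllable: str) -> str:
    """Normalize input and drop a trailing tone numeral, e.g. 'yu3' -> 'yu'."""
    return re.sub(r"[1-5]$", "", syllable.strip().lower())


def candidates(typed: str) -> list:
    """Return candidate characters for a typed syllable, ignoring tone."""
    return CANDIDATES.get(strip_tone(typed), [])


# The writer types only the sound and picks 雨 ("rain") by recognition alone.
options = candidates("yu")
chosen = options[options.index("雨")]
```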

A negative consequence of digital typing, especially the use of Pinyin input methods, is character amnesia (Pyle, 2016). As mentioned above, character amnesia refers to the phenomenon whereby Chinese speakers fail to handwrite a character even though they know what character they are supposed to write (e.g., Almog, 2019; Hilburger, 2016; Huang, Zhou et al., 2021). For instance, when asked to handwrite a character according to a dictation prompt (e.g., shui3 dao4 de1 dao4, 水稻的稻, meaning “rice from the word rice-plant”), people have access to both the phonology (i.e., dao4) and the meaning (i.e., rice), but may produce no strokes or only some strokes of the target character. Character amnesia has been a societal concern widely reported in the news. A survey by the China Youth Daily (Xu & Zhou, 2013), for instance, revealed that 98.9% of 2517 survey respondents reported experiencing character amnesia, with about 30% of them saying that character amnesia was quite common for them. A recent survey by Guang Ming Daily (Guangming Wang Public Opinion Center, 2019) of internet users in China found that 60% reported regularly experiencing character amnesia. A recent analysis (Huang, Zhou et al., 2021) of a large-scale handwriting database (developed by Wang et al., 2020) showed that college students in mainland China suffered from character amnesia about 5.6% of the time on average.

Assessing handwriting literacy in Chinese

A range of assessment tools have been developed for measuring handwriting literacy. Many of these focus on penmanship, the fine motor skills involved in producing legible characters. Typically, tests of penmanship involve test-takers (normally children) being asked to copy a number of words on a sheet (Phelps et al., 1985; Reisman, 1993), or to produce highly familiar characters such as their own names (Chan & Louie, 1992; Chan et al., 2008; Tse et al., 2019; Yin & Treiman, 2013). Tests of penmanship provide an opportunity for a trained professional to evaluate important aspects of handwriting legibility such as alignment, spacing and size. Other assessments place emphasis on fluency. Copied characters-per-minute is commonly used as a measure of handwriting fluency (Chow et al., 2003; Tseng & Hsueh, 1997; Wallen et al., 1997). Recent work has explored more multifaceted measurements of handwriting properties in copying tasks, including legibility and pen-pressure (Li-Tsang et al., 2019), and other handwriting features made available in a standardized format by computerized handwriting input (Falk et al., 2011; Li-Tsang et al., 2022).

By using highly familiar or provided characters, tests of penmanship or fluency typically minimize or eliminate the need to retrieve orthographic representations. Thus, these tests do not evaluate orthographic retrieval, the process involved in character amnesia. By design, most existing Chinese handwriting assessments do not assess how good (or bad) a test-taker is at accessing the orthographic components of characters. A better solution for assessing character amnesia is to use writing to dictation, where test-takers handwrite a character according to a spoken dictation prompt (see for example Huang, Lin et al., 2021; Huang, Zhou et al., 2021; Wang et al., 2020).

A key issue in the design of dictation tests is the selection of test characters. Chinese has approximately 12,500 characters according to the Modern Chinese Language Dictionary (The Commercial Press, 2016) and about 4500 characters that are commonly used in daily life according to the Modern Chinese Frequency Dictionary (Institute of Language Teaching & Research, 1986). Since an exhaustive test is impossible, a practical assessment of character amnesia must select a reasonably small number of test characters. The selected characters should be neither too easy nor too difficult to write, as such characters cannot discriminate finely between test-takers with different degrees of character amnesia. In this paper, we aim to develop an assessment of character amnesia on the basis of a large-scale handwriting database (Cai, Xu & Yu, in prep-b), using Item Response Theory to select a set of characters that discriminate well between test-takers with different amnesia rates. Below, we describe the construction of the short-form test and quantify how well it predicts amnesia rates on a comprehensive long-form test.

Item response theory

Item response theory (IRT) departs from classical test theory in trying to jointly infer properties of both the test items and the test-takers (Bock, 1997). The core idea is that item properties such as difficulty can and should inform the interpretation of test-takers’ answers. For example, a correct answer to a difficult item should ‘count more’ towards an estimate of ability than a correct answer to an easy item. Jointly inferring item difficulty alongside test-taker ability is an evidence-based way to set the appropriate weights. Difficulty is just one commonly inferred item characteristic: other possibilities include diagnosticity or discrimination (how quickly the probability of success changes with increases in ability), guess rates (for multiple-choice questions) and factor loadings (if multiple distinct factors are thought to influence responses). IRT models form a large and diverse family of approaches (Chalmers, 2012; Van der Linden & Hambleton, 1997), differing in the item characteristics they aim to estimate, the assumptions they make about the structure of the target ability being tested, and the inferential framework used. Particularly common variants include the Rasch model, which estimates difficulty only, the 2-parameter IRT model, which estimates difficulty and discriminability, and the 3-parameter IRT model, which additionally considers guess rate (Reise et al., 2005). In this paper we take a hierarchical Bayesian approach (Bürkner & Vuorre, 2019), applying a hierarchical 2-parameter IRT model (closely following an example implementation by the Stan development team, 2021) to our domain of interest in handwriting assessment.
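
The three variants named above differ only in how the probability of a correct response depends on the item parameters. The sketch below writes them in the same \(\gamma(\alpha - \beta)\) parameterization used in the model description later in this paper; the guess-rate symbol \(c\) and the function names are introduced here for illustration only.

```python
import math


def inv_logit(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def p_rasch(alpha: float, beta: float) -> float:
    """Rasch model: only item difficulty beta is estimated (slope fixed at 1)."""
    return inv_logit(alpha - beta)


def p_2pl(alpha: float, beta: float, gamma: float) -> float:
    """2PL: difficulty beta plus discrimination gamma, the steepness of the curve."""
    return inv_logit(gamma * (alpha - beta))


def p_3pl(alpha: float, beta: float, gamma: float, c: float) -> float:
    """3PL: adds a lower asymptote c (guess rate), e.g. for multiple-choice items."""
    return c + (1.0 - c) * p_2pl(alpha, beta, gamma)
```

In each case the probability of success is 0.5 (or halfway above the guess rate, for the 3PL) when ability equals difficulty; the 2PL reduces to the Rasch model when \(\gamma = 1\).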

In addition to supporting inferences about test-taker abilities, estimates of item characteristics can inform test design. Test designers typically aim to achieve maximum information within time and resource constraints. Information gain is highest when all outcomes for a test item are equally likely, and zero if a particular response is guaranteed ahead of time. Test information gain is therefore a function of both the item characteristics and the ability of the test-taker (Lord, 1977; Schmidt & Embretson, 2012). In particular, for the 2-parameter model considered here, the information of item \(j\) is given by \(I_{j}(\alpha) = \gamma_{j}^{2} P_{j}(\alpha) Q_{j}(\alpha)\), where \(\gamma_{j}\) is the estimated discriminability of the item, \(\alpha\) is participant ability, \(P_{j}(\alpha)\) is the probability of writing the character correctly, and \(Q_{j}(\alpha) = 1 - P_{j}(\alpha)\) (Tsompanaki & Caroni, 2021).
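
The information formula can be checked numerically. The sketch below evaluates \(I_j(\alpha)\) over a grid of abilities for a single hypothetical item (difficulty 0, discrimination 2). Information peaks where \(\alpha = \beta_j\), at which point \(P_j = Q_j = 0.5\) and the information equals \(\gamma_j^2/4\), and it falls off symmetrically on either side.

```python
import math


def p_correct(alpha: float, beta: float, gamma: float) -> float:
    """2PL probability of correct handwriting (numerically stable logistic)."""
    x = gamma * (alpha - beta)
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def item_information(alpha: float, beta: float, gamma: float) -> float:
    """Fisher information gamma^2 * P * Q of one 2PL item at ability alpha."""
    p = p_correct(alpha, beta, gamma)
    return gamma ** 2 * p * (1.0 - p)


# Hypothetical item with difficulty 0 and discrimination 2, on an ability grid.
abilities = [k / 2 for k in range(-8, 9)]
curve = {a: item_information(a, beta=0.0, gamma=2.0) for a in abilities}
peak_alpha = max(curve, key=curve.get)
```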

The main barrier to directly optimizing for information gain is its dependence on the test-taker’s ability, which implies that different combinations of items will be most sensitive in different ability ranges. The optimal distribution of sensitivity over ability levels depends on the goals of the test: if the goal is highest overall accuracy, better results will be achieved by concentrating sensitivity in the most common ability ranges. However, in some applications it may be desirable to sacrifice some precision in the most commonly occurring ability ranges in order to better distinguish the extremes.

In the absence of a uniquely optimal way to create subsets of test items for all applications, we focus on subsets maximizing the correlation between predicted amnesia rates and observed amnesia rates in held-out observations. In the following, we distinguish between holding out responses, which examines the model’s ability to predict amnesia/success on unseen characters given only responses to a tested subset, and holding out participants, which examines the subsetting procedure’s ability to select characters appropriate for new test-takers from the same population. To the best of our knowledge, analytical results giving optimal subsets for these measures of performance are not available, so we turn instead to empirical cross-validation of heuristic subsetting schemes.

One reason analytical results are hard to come by is that high discrimination and a diversity of difficulties are both highly desirable, and these factors interact with each other to determine the overall quality of the test (An & Yung, 2014). Low discrimination items can attract both correct and incorrect answers from participants with a wide range of ability scores, so observing one response from a low-discrimination item is weakly informative about where on the ability scale any one participant lies. High discriminability items come closer to ruling out all ability levels not consistent with the observed response, and are therefore typically more informative. However, high discriminability does not give a straightforward guarantee of high information gain. High discrimination items can give poor information gain if they cluster together in a small difficulty range, with the restricted coverage making the information from each item redundant. They may also be uninformative if they take on extreme values such as very high or very low difficulty, leaving them discriminating sharply between participant ability values that are rarely observed.
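
The point about clustering can be made concrete with test information, the sum of item informations (assuming local independence). In the sketch below, two hypothetical five-item tests share the same high discrimination (\(\gamma = 3\)): in one, all difficulties are identical; in the other, they are spread from -2 to 2. The clustered test is far more informative near its common difficulty, but much less informative for abilities away from it.

```python
import math


def item_information(alpha: float, beta: float, gamma: float) -> float:
    """Fisher information gamma^2 * P * Q of one 2PL item at ability alpha."""
    x = gamma * (alpha - beta)
    p = 1.0 / (1.0 + math.exp(-x)) if x >= 0 else math.exp(x) / (1.0 + math.exp(x))
    return gamma ** 2 * p * (1.0 - p)


def total_information(alpha: float, items) -> float:
    """Test information: sum of item informations at ability alpha."""
    return sum(item_information(alpha, b, g) for b, g in items)


# Hypothetical five-item tests as (difficulty, discrimination) pairs.
clustered = [(0.0, 3.0)] * 5
spread = [(b, 3.0) for b in (-2.0, -1.0, 0.0, 1.0, 2.0)]

for alpha in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"alpha={alpha:+.0f}  clustered={total_information(alpha, clustered):.2f}  "
          f"spread={total_information(alpha, spread):.2f}")
```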

Below, we propose two heuristic schemes for selecting items from a large pool of candidates to construct a short-form test of character amnesia rates, with a third random selection scheme considered as a baseline. The two heuristic schemes are subsetting based on high discriminability only, or on high discriminability after first ensuring a diversity of difficulties. We make no claim to optimality, and investigate the quality of these item selection schemes empirically with the expectation that their performance will depend on the specific distribution of participant ability and item characteristics particular to our application domain. Our primary measure of test performance is the prediction of amnesia/correct handwriting responses in the 1200 item test using participant ability parameters estimated from responses to the short form test, for held-out participants not involved in any aspect of the test construction or item characteristic estimation. We find the correlation between predicted and observed character amnesia rates to be typically around 0.89 in repeated tests of the best-performing selection procedure (maximum discrimination) using different sets of held-out participants. We also discuss the stability of the item selection procedures given sampling variation in the responses used to calibrate our estimates of the item characteristics, and describe how prediction performance changes with the number of items included in the short-form test.

The handwriting database

Our work here draws on an existing large-scale handwriting database (Cai, Xu & Yu, in prep), which served as input to our IRT analysis for the construction of the diagnostic test. The database contains handwriting outcomes for 1200 Chinese characters from 42 adult native speakers of Mandarin (32 female; age range 19–22 years, mean 20.50) in a written-dictation task. All participants were right-handed, had normal or corrected-to-normal vision and hearing, and reported no neurological or psychiatric disorders and no ongoing medical treatment.

The characters used originate from SUBTLEX-CH (Cai & Brysbaert, 2010), a corpus of Chinese film subtitles, with several filters applied to extract a subset suitable for a dictation task (Wang et al., 2020). The first filter is on character frequency, which was constrained to a log frequency between 1.5 and 5.0 (corresponding to roughly 32 to 100,000 occurrences in the corpus). Cai et al. (in prep) also discarded characters with four or fewer strokes, on which most handwriters could be expected to perform at ceiling. These filters resulted in a candidate set of 2095 characters, which were further filtered by familiarity rating. For each of these characters, Cai et al. (in prep) took the most common two-character word context in which the character appeared (e.g., 水稻, shui3 dao4, for the target character 稻, dao4) and asked 15 participants (university undergraduates from the same universities as the participants in the current study) to rate its familiarity on a 1–7 scale. Two-character words with an average familiarity rating of less than 4 were excluded. Dictation phrases were converted to audio using Google’s text-to-speech reader (https://cloud.google.com/text-to-speech) and further filtered for clarity, resulting in 1600 candidate dictation recordings. In order to keep participation times within a 4 × 1 h timeframe, Cai et al. (in prep) selected 1200 items uniformly at random from this candidate pool. The mean log frequency of the selected characters in the original corpus was 3.44. The number of strokes per character ranged from 5 to 21, with a mean of 9.72, while the number of constituent radicals ranged from 1 to 7, with a mean of 2.98.
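
The frequency window can be checked with a little arithmetic. Assuming the log frequencies are base-10 logarithms of raw corpus counts (an assumption, but one that matches the correspondence stated above), \(10^{1.5} \approx 31.6\) and \(10^{5.0} = 100{,}000\). A minimal sketch of such a filter, with hypothetical function and constant names:

```python
import math

# Assumption: "log frequency" = log10(raw count in SUBTLEX-CH).
LOG_FREQ_MIN, LOG_FREQ_MAX = 1.5, 5.0


def count_bounds(lo: float = LOG_FREQ_MIN, hi: float = LOG_FREQ_MAX):
    """Raw-count equivalents of the log10 frequency window."""
    return 10 ** lo, 10 ** hi


def passes_frequency_filter(count: int, lo: float = LOG_FREQ_MIN,
                            hi: float = LOG_FREQ_MAX) -> bool:
    """True if a character's raw corpus count lies inside the window."""
    return count > 0 and lo <= math.log10(count) <= hi


low, high = count_bounds()
```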

In the experiment, participants heard a dictation prompt indicating the to-be-written character in a one-word context (e.g., prompts of the form “水稻的稻”, meaning “the character ‘稻’ in the word 水稻”) and wrote the target character on completion of the prompt. When they had finished handwriting, participants were shown the target character and self-reported whether they had written the character correctly (a success response), knew which character they were supposed to write but forgot how to write it (a character amnesia response), or did not know which character to write (a don’t-know response) (see Fig. 1). Self-reports were checked by three checkers, each examining all responses to 400 of the characters. This process identified 297 self-reported handwriting outcomes (0.6%) as questionable; on this basis we accept the accuracy of self-reported response status and use it throughout. In all, there were 45,737 success responses (90.7%), 2679 character amnesia responses (5.3%) and 1984 don’t-know responses (3.9%). Here we consider only success and character amnesia responses, and interpret results as handwriting success conditional on knowing the character (Fig. 2).
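
The reported counts are internally consistent, and the conditional framing can be made explicit. The short sketch below uses only the numbers given above: the three response types sum to 42 × 1200 = 50,400 trials, and dropping don't-know trials gives a pooled amnesia rate of about 0.055 among known characters.

```python
# Counts reported for the database: 42 participants x 1200 characters.
counts = {"success": 45_737, "amnesia": 2_679, "dont_know": 1_984}
total = sum(counts.values())

# Share of all trials in each response category.
shares = {kind: n / total for kind, n in counts.items()}

# Conditioning on knowing the character: drop don't-know trials.
known = counts["success"] + counts["amnesia"]
pooled_amnesia_given_known = counts["amnesia"] / known

# Share of trials whose self-report was flagged as questionable.
questionable_share = 297 / total
```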

Presentation procedure for the dictation task. Participants heard a start-of-trial ding, followed by a 500 ms pause and a dictation prompt (e.g., 水稻的稻/shui3 dao4 de1 dao4/, meaning ‘rice’ (dao4) from the word ‘rice-plant’ (shui3 dao4)). After they finished handwriting, the target character was shown on screen and they self-evaluated their response using the options “0: Correct writing”, “1: I knew the character to write but forgot how to write it” and “2: I did not know the character to write”.

The mean amnesia rate for participants in this study was 0.055 (median 0.053), with a maximum observed amnesia rate of 0.11. The smallest number of amnesia responses for any one participant was one and the largest was 137 (see Fig. 3 for the proportion of each response type for each participant). The mean amnesia rate for characters was 0.061, but the distribution of amnesia rates is strongly right-skewed, with a median of 0.024 and a third quartile of 0.071. There were 553 characters that never attracted an amnesia response, while a small minority of characters produced very many amnesia responses; the most-forgotten character, 瘪 (bie3, deflated), had an amnesia rate of 0.852.

Analysis

In this section, we provide the model definition and item selection procedures which together constitute our handwriting test. The IRT model follows the 2-parameter IRT implementation given in the Stan user’s guide (Stan development team, 2021) and estimates participant ability (α), item difficulty (β), and item discriminability (γ). The item selection procedures are aimed at high discriminability or diverse difficulty, with random item selection also considered as a baseline. We then aim to answer the following three questions. First, how stable are the item selection procedures, given that there is some sampling variability in the estimates of item characteristics? Second, given our best estimates of the item characteristics, how does prediction performance change with the number of items included in the subset? Finally, at our chosen subset size of 30 items, what is the expected performance when predicting character amnesia rates in future test-takers?

Brief model description

Full implementation details appear in Appendix 1. In brief, each trial is considered to be a Bernoulli random variable with probability of success given by \(\Pr(\text{success}_{ij}) = \operatorname{logit}^{-1}\left(\gamma_{j}(\alpha_{i} - \beta_{j})\right)\) for participant \(i\) responding to item \(j\), where participant \(i\) has ability \(\alpha_{i}\) and item \(j\) has difficulty \(\beta_{j}\) and discrimination \(\gamma_{j}\). The inverse logit transforms the combined parameters into an S-shaped curve between zero and one, interpretable as a probability of success. The difference between participant ability and item difficulty determines the location of the ‘step’ from likely failure to likely success, while the discrimination parameter determines how steep the step is. Subscripts indicate that distinct participant abilities and item characteristics are estimated for each participant and item. A plate diagram and details of the prior specification appear in Appendix 1, along with some further discussion of the modelling choices. Code is available at https://osf.io/nersu/?view_only=80343b61798d4eac8f9912de0b9c1ec9.
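
Read generatively, the model says that each response is a biased coin flip whose bias depends on the participant and the item. The sketch below simulates a data set with the same shape as the database (42 participants × 1200 items). The parameter distributions are illustrative assumptions chosen so that success is common; they are not the priors or estimates from Appendix 1. Simulated data of this kind are mainly useful for checking that a fitting or item-selection pipeline recovers known parameters.

```python
import math
import random


def inv_logit(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def simulate_responses(n_participants: int, n_items: int, seed: int = 0):
    """Simulate 0/1 outcomes (1 = correct, 0 = amnesia) from a 2PL model.

    The parameter distributions below are illustrative assumptions only.
    """
    rng = random.Random(seed)
    alpha = [rng.gauss(0.0, 1.0) for _ in range(n_participants)]     # ability
    beta = [rng.gauss(-3.0, 1.5) for _ in range(n_items)]            # difficulty (mostly easy)
    gamma = [math.exp(rng.gauss(0.0, 0.5)) for _ in range(n_items)]  # discrimination > 0
    y = [
        [int(rng.random() < inv_logit(gamma[j] * (alpha[i] - beta[j])))
         for j in range(n_items)]
        for i in range(n_participants)
    ]
    return alpha, beta, gamma, y


alpha, beta, gamma, y = simulate_responses(42, 1200)
# Each simulated participant's amnesia rate over the full item set.
amnesia_rates = [1.0 - sum(row) / len(row) for row in y]
```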

Item selection procedures

The three item selection procedures described below draw from the 1200 characters for which item characteristic estimates are available, and attempt to select subsets that will support accurate inference of amnesia rates in the whole set.

Random

The random selection procedure chooses characters uniformly at random without replacement from the available set of 1200. It is presented here as a baseline procedure.

Maximum discrimination

The maximum discrimination selection procedure excludes from consideration any characters with an amnesia rate of less than 1%. Although items with no variation in responses are uninformative, they might otherwise be selected by this procedure. This is because lack of variation is most consistent with an item having high discrimination and floor or ceiling difficulty, since low discrimination items produce the minority response more readily over a broader range of abilities. We therefore exclude very low difficulty items from consideration before sorting on mean estimated discrimination (γ) and selecting the highest n items to produce candidate test-sets of size n.
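
A minimal sketch of the maximum discrimination rule, assuming each calibrated item is stored as a dictionary with hypothetical keys `id`, `amnesia_rate` and `gamma` (mean estimated discrimination). The random baseline is included for comparison. All example values are invented for illustration.

```python
import random


def select_max_discrimination(items, n, min_amnesia_rate=0.01):
    """Return ids of the n most discriminating eligible items.

    Items with an observed amnesia rate below min_amnesia_rate are excluded
    first, since near-ceiling items tend to receive high but uninformative
    discrimination estimates.
    """
    eligible = [it for it in items if it["amnesia_rate"] >= min_amnesia_rate]
    ranked = sorted(eligible, key=lambda it: it["gamma"], reverse=True)
    return [it["id"] for it in ranked[:n]]


def select_random(items, n, rng=None):
    """Baseline: n items uniformly at random without replacement."""
    rng = rng if rng is not None else random.Random()
    return [it["id"] for it in rng.sample(items, n)]


# Invented example values, for illustration only.
pool = [
    {"id": "item_a", "amnesia_rate": 0.05, "gamma": 1.8},
    {"id": "item_b", "amnesia_rate": 0.40, "gamma": 1.2},
    {"id": "item_c", "amnesia_rate": 0.00, "gamma": 3.5},  # never forgotten: excluded
    {"id": "item_d", "amnesia_rate": 0.02, "gamma": 2.4},
]
chosen = select_max_discrimination(pool, n=2)
baseline = select_random(pool, n=2, rng=random.Random(0))
```

Note that `item_c` has the highest discrimination estimate but is excluded because it never attracted an amnesia response.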

Diverse difficulty

The diverse difficulty selection procedure first excludes characters with amnesia rates below 1%, as in maximum discrimination. The remaining items are sorted by mean estimated difficulty (β) and divided into n equally sized bins, where n is the desired subset size, with the last bin ‘short’ if the available characters cannot be evenly divided. Within each difficulty bin, the highest-discrimination character is selected. While this procedure attempts to select items from the full range of difficulties spanned by the items showing amnesia responses, note that the β scores from test sets selected in this way preserve the strong right skew of difficulty scores present in the original full set of items. That is to say, the easiest items are very easy, and difficulty increases quickly over the first few bins and more slowly over subsequent bins.
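
A corresponding sketch of the diverse difficulty rule, using the same hypothetical item records plus a `beta` key for mean estimated difficulty. One detail differs from the description above and is an assumption of this sketch: rather than making the last bin short, it splits the sorted items into n contiguous bins whose sizes differ by at most one, which guarantees exactly n non-empty bins whenever at least n items are eligible.

```python
def select_diverse_difficulty(items, n, min_amnesia_rate=0.01):
    """Return ids of the most discriminating item in each of n difficulty bins."""
    eligible = [it for it in items if it["amnesia_rate"] >= min_amnesia_rate]
    eligible.sort(key=lambda it: it["beta"])  # easiest first
    m = len(eligible)
    if m < n:
        raise ValueError("fewer eligible items than requested")
    chosen = []
    for k in range(n):
        # Contiguous bin k; bin sizes differ by at most one (sketch assumption).
        bin_items = eligible[(k * m) // n:((k + 1) * m) // n]
        best = max(bin_items, key=lambda it: it["gamma"])
        chosen.append(best["id"])
    return chosen


# Invented example values, for illustration only.
pool = [
    {"id": "item_a", "amnesia_rate": 0.02, "beta": -2.0, "gamma": 1.0},
    {"id": "item_b", "amnesia_rate": 0.03, "beta": -1.5, "gamma": 2.0},
    {"id": "item_c", "amnesia_rate": 0.00, "beta": -1.0, "gamma": 5.0},  # excluded
    {"id": "item_d", "amnesia_rate": 0.10, "beta": 0.0, "gamma": 1.5},
    {"id": "item_e", "amnesia_rate": 0.20, "beta": 0.5, "gamma": 0.8},
    {"id": "item_f", "amnesia_rate": 0.40, "beta": 1.0, "gamma": 2.2},
    {"id": "item_g", "amnesia_rate": 0.60, "beta": 2.0, "gamma": 1.1},
]
chosen = select_diverse_difficulty(pool, n=3)
```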

Stability of the calibration estimates

We investigated the stability of the item selection procedures defined above by repeatedly removing 10 participants, estimating item characteristics from the remaining observations, and selecting items according to these item characteristics. Over 30 repetitions of this procedure, we asked how often items were selected for inclusion in a 30-item test. The random procedure is not sensitive to the number of calibration observations, but the maximum discrimination and diverse difficulty procedures will produce subsets deterministically in the limit of perfectly stable β and γ estimates. How variable the character ‘inclusion rate’ is when repeatedly dropping a number of participants gives some indication of how sensitive the item selection procedure is to sampling variability in the calibration sample.

Thirty repetitions selecting item sets of size 30 give 900 opportunities for item selection. With only 1200 items available, there will be some collisions due to chance: random item selection would, in 95% of cases, result in between 632 and 658 unique characters being used. In contrast, the diverse difficulty item selection procedure used only 290 distinct characters across the 30 tests, with 6 characters appearing in more than half the tests. The maximum discrimination procedure used only 153 distinct characters in the test lists it selected, with 19 characters appearing in over half the tests. These large overlaps lead us to conclude that increasing the number of calibration participants further is unlikely to result in substantial changes to the subsets selected by maximum discrimination and diverse difficulty, particularly since the substitutions are for items estimated to have similar characteristics.
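
The chance baseline can be approximated by simulation. The sketch below assumes that each of the 30 tests is an independent uniform draw of 30 distinct characters from 1200, and counts how many distinct characters are used overall and how many appear in more than half of the tests. Under this assumption the expected number of distinct characters is \(1200\,(1 - (1 - 30/1200)^{30})\), about 638.5; the simulated interval varies slightly with the number of runs and the random seed.

```python
import random
from collections import Counter

N_ITEMS, TEST_SIZE, N_TESTS = 1200, 30, 30


def random_selection_overlap(rng):
    """Draw N_TESTS random tests; return (distinct items used, items in > half)."""
    inclusion = Counter()
    for _ in range(N_TESTS):
        inclusion.update(rng.sample(range(N_ITEMS), TEST_SIZE))
    n_distinct = len(inclusion)
    n_majority = sum(1 for c in inclusion.values() if c > N_TESTS / 2)
    return n_distinct, n_majority


def distinct_count_interval(n_sims=1000, seed=1):
    """Simulate many rounds; return the mean and a central 95% interval."""
    rng = random.Random(seed)
    distinct = sorted(random_selection_overlap(rng)[0] for _ in range(n_sims))
    mean = sum(distinct) / n_sims
    return mean, distinct[int(0.025 * n_sims)], distinct[int(0.975 * n_sims) - 1]


# Analytic expectation: a single test misses a given item with probability 1 - 30/1200.
expected_distinct = N_ITEMS * (1 - (1 - TEST_SIZE / N_ITEMS) ** N_TESTS)
mean_distinct, lo, hi = distinct_count_interval()
```

The same `Counter`-based tally can be applied to the item lists produced by the heuristic procedures to obtain their distinct-item and majority-inclusion counts.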

Test set size

To examine the impact of test set size, we take participant amnesia rates on the full set of 1200 tested characters as the ‘ground truth’ and ask to what extent amnesia rate on the full set can be predicted by the model when fit only to participant responses to a subset. In these analyses the item characteristics used were those estimated from all available data. Because these item characteristics are estimated using the same participants whose responses are being predicted, prediction performance may be somewhat optimistic. Here our focus is on the relative improvement in prediction accuracy for held-out items as the number of items in the test increases. In the next analysis, we assess prediction performance for held-out participants who were not involved in estimates of item characteristics.
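
Correlation between predicted and observed amnesia rates is the accuracy measure used throughout this section. A minimal Pearson correlation in plain Python is sketched below. One property is worth keeping in mind when reading the results: the correlation is unchanged by any increasing linear rescaling of the predictions, so systematic over- or under-estimation of amnesia rates is not penalized as long as the linear relationship holds. The example values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical observed amnesia rates and systematically inflated predictions.
observed = [0.02, 0.04, 0.055, 0.07, 0.11]
inflated = [1.5 * r - 0.01 for r in observed]
r = pearson_r(observed, inflated)  # perfect correlation despite miscalibration
```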

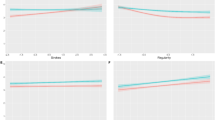

We find that as test-set size increases, correlations between predicted and observed amnesia rates on the full set of items approach roughly 0.9, with diminishing improvements for additional test items (Fig. 4). The maximum discrimination subsetting scheme reaches this level of performance fastest, at around 20 items, followed by diverse difficulty, which requires around 50 items for similar performance. The quality of random subsets varies widely depending on the characters selected, but appears to approach a somewhat lower correlation of 0.8 at the largest set size tested, 60 items. We conclude that a test-set size of 30 items is adequate for predicting the amnesia rates that would have been observed in a comprehensive long-form test.

Correlations between predicted and observed character amnesia rates using test subsets chosen randomly (left), by diverse difficulty (center), or by maximum discrimination (right). Random subsets perform poorly and are highly variable, while maximum discrimination and diverse difficulty subsets converge relatively quickly to predictions correlating around 0.9 with observed rates on all items. Reference lines are loess-smoothed trends. A proposed test-set size of 30 items is highlighted with a dashed vertical line. We conclude that tests of this size can be adequately predictive of amnesia rates seen in the full 1200-item set.

Figure 5 ‘unpacks’ one of the correlations plotted in Fig. 4 to show predicted vs observed amnesia rates for all participants. In this example, with a test size of 30 items, the correlation between predicted and observed rates was 0.89 for maximum discrimination, 0.79 for diverse difficulty, and 0.63 for random item selection. We present it here as a typical result at this test-set size. Note that high amnesia rates appear to be systematically overestimated by this model when using only the subset responses, although rank ordering among participants is still well preserved.

A single prediction check at the target test size of 30 items. In this test, the subset chosen by maximum discrimination (right) produces predicted amnesia rates that correlate at r = 0.89 with those observed over all responses. Correlations from diverse difficulty (center) and random (left) were 0.79 and 0.62 respectively. The reference line shows the identity line representing the ideal outcome: estimates from the high-discriminability subsets appear to over-estimate very high amnesia rates, although rank ordering is well-preserved

Performance with new test-takers

We sought to evaluate out-of-sample performance of the procedure as a whole, including item selection and subsequent prediction of amnesia rates after fitting to the selected short-form test. Over 30 repetitions, we randomly held out 10 participants and fit the model using the responses of the remaining 32. This calibration fit provided estimates of item characteristics for all 1200 characters. Item subsets were then selected according to each of the three selection procedures using these estimates. The model was then re-fit to all the data that would have been visible had the hold-out participants been new test-takers: all responses from participants involved in the calibration and item selection plus responses to the selected subset only from hold-out participants who were not involved in calibration or item selection. Overall amnesia rates were estimated for all participants, including the newly introduced ones who supplied only a subset of responses, by predicting success or amnesia on each item in the full item set using the item characteristics estimated from the 32-participant calibration data.
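
The key step for a new test-taker is estimating ability from the short test alone while holding the calibrated item characteristics fixed, and then predicting responses to the full item set. The sketch below is a simplified stand-in for the Bayesian refit described above, not the procedure used in this paper: it finds a single maximum a posteriori ability on a grid, assuming a standard normal prior on ability, and plugs that point estimate into the 2-parameter model to predict the full-set amnesia rate. Item identifiers and parameter values are hypothetical.

```python
import math


def log_inv_logit(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def inv_logit(x: float) -> float:
    return math.exp(log_inv_logit(x))


def estimate_ability(responses, item_params, lo=-6.0, hi=6.0, step=0.01):
    """Grid MAP estimate of ability alpha from a subset of responses.

    responses:   dict item_id -> 1 (correct) or 0 (amnesia), tested items only
    item_params: dict item_id -> (beta, gamma) from the calibration sample
    Assumes a Normal(0, 1) prior on alpha (sketch assumption).
    """
    n_steps = int(round((hi - lo) / step))
    best_alpha, best_lp = lo, -math.inf
    for k in range(n_steps + 1):
        alpha = lo + k * step
        lp = -0.5 * alpha ** 2  # log prior, up to a constant
        for item, y in responses.items():
            beta, gamma = item_params[item]
            x = gamma * (alpha - beta)
            # log P(correct) = log_inv_logit(x); log P(amnesia) = log_inv_logit(-x)
            lp += log_inv_logit(x) if y == 1 else log_inv_logit(-x)
        if lp > best_lp:
            best_alpha, best_lp = alpha, lp
    return best_alpha


def predicted_amnesia_rate(alpha, item_params):
    """Mean predicted probability of amnesia over all calibrated items."""
    probs = [1.0 - inv_logit(g * (alpha - b)) for b, g in item_params.values()]
    return sum(probs) / len(probs)


# Hypothetical calibrated items: id -> (difficulty beta, discrimination gamma).
full_items = {"c1": (-3.0, 1.2), "c2": (-2.0, 1.8), "c3": (-1.0, 1.0),
              "c4": (0.0, 2.0), "c5": (1.0, 1.5), "c6": (-2.5, 0.9)}
strong = estimate_ability({"c2": 1, "c4": 1, "c5": 1}, full_items)
weak = estimate_ability({"c2": 0, "c4": 0, "c5": 0}, full_items)
```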

Our main outcome of interest was the correlation between observed and predicted amnesia rates for the held-out participants, who provided observations only for the selected subset of items (Fig. 6). Over 30 repetitions, these correlations ranged from 0.49 to 0.96 for maximum discrimination item sets, from 0.46 to 0.94 for diverse difficulty item sets, and from 0.04 to 0.91 for random item sets. The mean correlations were 0.82, 0.74, and 0.62, respectively. Because correlations are bounded above by 1, these distributions are all left-skewed: the modal correlation for the best-performing selection procedure (maximum discrimination) is 0.89.

Fig. 6 Over 30 repetitions, 10 participants were held out and item characteristics were estimated from the remaining 32. Item subsets were then selected according to these estimates, and the held-out participants' responses to the selected items only were added to the observation pool. Histograms show the 30 correlations between (a) the 10 held-out participants' amnesia rates as estimated from the selected subset of items and (b) their observed amnesia rates across all items.

Discussion

We set out to construct a short-form test that can assess a Mandarin Chinese speaker’s character amnesia rate in handwriting. We approached this by applying IRT to an existing large-scale handwriting database. Different applications will have different requirements, but we find that a 30-item test is probably adequate for many of them, with the best results given by the maximum discrimination item selection procedure. We estimate that, in this case, the correlation between predicted and actual amnesia rates is likely to be between 0.82 and 0.89 for new participants who were not involved in calibrating the item characteristics. We further show that increasing the test size beyond 30 items gives no substantial improvement, at least for tests of fewer than 60 items.
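The three selection strategies compared here can be sketched as simple functions over per-item estimates (β, γ). Random and maximum discrimination selection follow directly from their names. The diverse difficulty rule shown here is an assumed variant for illustration and may not match the authors' exact definition. It splits items into k roughly equal-sized bins by difficulty and takes the most discriminating item from each bin. Function names are hypothetical.

```python
import random


def select_random(items, k, seed=0):
    """k distinct item indices drawn uniformly at random."""
    return sorted(random.Random(seed).sample(range(len(items)), k))


def select_max_discrimination(items, k):
    """The k items with the largest discrimination gamma."""
    order = sorted(range(len(items)), key=lambda j: items[j][1], reverse=True)
    return sorted(order[:k])


def select_diverse_difficulty(items, k):
    """Assumed variant: the most discriminating item from each of k
    roughly equal-sized bins of items ordered by difficulty beta."""
    if not 0 < k <= len(items):
        raise ValueError("k must be between 1 and the number of items")
    by_difficulty = sorted(range(len(items)), key=lambda j: items[j][0])
    n = len(by_difficulty)
    chosen = []
    for b in range(k):
        bin_items = by_difficulty[b * n // k:(b + 1) * n // k]
        chosen.append(max(bin_items, key=lambda j: items[j][1]))
    return sorted(chosen)
```

Here `items` is a list of `(beta, gamma)` pairs, for example posterior means from a calibration fit. Maximum discrimination concentrates the test on the items whose success probability changes most steeply with ability. The binned rule trades some of that steepness for coverage of the difficulty range.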

The selected 30 characters can easily be compiled into a quick (about 15 min) diagnostic test of handwriting literacy that follows the dictation format used in the calibration data (Fig. 1). In this format, test-takers listen to a dictation prompt specifying a target character and write that character down. In addition to measuring a test-taker’s character amnesia rate, such a test can also measure handwriting fluency if handwriting times are recorded (see Wang et al., 2020, for one such implementation). The resulting handwritten images can also be used for penmanship evaluation (Xu et al., in prep).

We anticipate a range of uses for this test in both research and applied settings. First, easily administered and validated assessments of character amnesia and other handwriting problems (e.g., disfluency or poor penmanship) have been lacking. As a result, the identification of factors that lead to the decline of handwriting literacy has been largely speculative. Digital typing has been argued to be the main cause of handwriting problems (e.g., Almog, 2019), and there is indeed some empirical evidence that people with more digital exposure (e.g., using smartphones or computers) tend to be worse at handwriting (Huang, Lin et al., 2021). However, it remains largely unclear exactly how digital typing damages handwriting ability. Digital typing may impair handwriting simply because it reduces the time people spend handwriting. Alternatively, specific features of digital input methods may be at work. For instance, phonology-based input methods such as Pinyin allow users to generate candidate characters without entering any orthographic components. This could lead to decay of the orthographic-motor representations that are critical for Chinese character handwriting. If so, people who use orthography-based input methods such as Cangjie should be less susceptible to character amnesia (and indeed other handwriting difficulties). Addressing these issues will require broad assays of handwriting literacy across many individuals, and our short diagnostic test can be used for this purpose.

Second, our diagnostic test may have applications in prescreening Chinese-speaking children for developmental dysgraphia, which manifests as a specific difficulty in acquiring handwriting skills (Berninger et al., 2008; Gosse & Van Reybroeck, 2020; Kandel et al., 2017; McCloskey & Rapp, 2017). These children have highly inflated character amnesia rates compared to normally developing children (Cheng-Lai et al., 2013; Lam et al., 2011). As such, they could be expected to receive high amnesia estimates in an appropriately calibrated test. The test would certainly need to be re-calibrated to estimate the item characteristics as experienced by a school-age population. Its sensitivity and specificity would depend on two factors. The first is the degree of variation in handwriting ability among normally developing students, which may be somewhat larger than in the undergraduate cohort studied here. The second is the degree of separation between the groups.

Another related application might be to prescreen elderly people for very early signs of neurodegenerative diseases such as Alzheimer’s disease or Parkinson’s disease. Alzheimer’s disease, for example, is initially associated with linguistic symptoms such as semantic memory decay and word-finding difficulty (Bartzokis, 2004). This suggests that people with early Alzheimer’s disease may also show increased character amnesia in handwriting. The mild phase of the disease is also associated with deterioration in fine motor control and coordination (Yan et al., 2008). A handwriting task may therefore be well placed to test for both cognitive and motor deficits (Garre-Olmo et al., 2017). As in the developmental dysgraphia case, however, the practical performance of such a test would depend on the yet-to-be-determined variability in the healthy group and on the degree of contrast shown by the target group.

Of course, there are caveats in these applications. The most important limitation is that the test procedure needs to be calibrated for each target population. Inferences from so few characters are possible only because we have preexisting information about the item characteristics, and these characteristics certainly differ between populations. Easy and difficult characters for university undergraduates are not the same as easy and difficult characters for primary school students. Test size, predictive performance, and even which subsetting procedure works best may vary by target population, since these all depend on the shapes of the full distributions of participant abilities and item difficulties. However, estimating these quantities appears feasible from realistic amounts of calibration data. For the case examined here, adequate calibration data were collected from a relatively small number of participants. More variable populations may require more calibration data. Still, the current results suggest that 40–50 calibration participants is a reasonable starting point for this method, with exact numbers to be assessed case by case.

It is worth noting that the interpretation of failures to handwrite may also be different in these different target populations. The test given here estimates handwriting failures from all causes, whether orthographic retrieval, attention, motor planning and execution, or other factors. It may be that different factors dominate in different cohorts. Although ‘character amnesia’ has been used as a convenient catch-all term in previous literature (e.g., Hilburger, 2016), and the overall rate of handwriting failures may in many cases be directly of interest, if the target of investigation is a specific sub-process, the test described here would need to be supplemented with additional construct-specific testing.

A second important caveat is the tendency of the model to over-estimate high amnesia rates when only given access to a selected subset of items. The amnesia response is a relatively rare outcome across all 1200 characters, so it is necessarily more common in the highly informative subsets than in general. Test-takers who produce amnesia responses are then unable to ‘dilute’ this response with subsequent successes as much as they otherwise might in a longer test. How serious a limitation this is depends on the application. For applications concerned mainly with rank-ordering, such as identifying students who may benefit from a particular writing exercise, or applications making between-groups comparisons on the same test within the same population, it is not a major problem, as ranks are reasonably well-preserved. It does, however, limit potential comparisons between tests calibrated for different populations, or absolute claims about amnesia rates in natural writing outside the test context. There are applications where such claims might be desirable, for example in cross-sectional analysis of age cohort effects; the test presented here does not support such analyses.

Future work could examine richer modelling of response types. This could mean moving from the binary amnesia/success outcomes used here to ternary amnesia/success/don’t-know outcomes. It could also mean distinguishing between types of failure-to-write, depending on whether many, some, or no elements of the target character were produced. Since the handwriting test is administered via a computer interface, adaptive designs may also be desirable. Estimates of item difficulty would allow adaptive staircase designs on character difficulty. Because test-taker ability is estimated on a common scale (α), people who saw different items could still be meaningfully compared.
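The sketch below gives a rough idea of what an adaptive design on character difficulty might look like. It is a hypothetical 1-up/1-down staircase. Items are ordered by estimated difficulty β. A success moves the next item up the ordering by a fixed step, and an amnesia response moves it down. The step size, starting point, and function name are assumptions for illustration, not part of the study.

```python
def staircase(items, respond, n_trials=30, step=50):
    """Hypothetical 1-up/1-down staircase over items ordered by difficulty.

    items: list of (beta, gamma) estimates.
    respond: callable taking an item index and returning True (success)
    or False (amnesia).
    Returns the administered (item_index, success) pairs in order.
    """
    order = sorted(range(len(items)), key=lambda j: items[j][0])  # easiest first
    used = set()
    pos = len(order) // 2  # start near the median difficulty
    history = []
    for _ in range(min(n_trials, len(order))):
        # Nearest not-yet-administered item to the target position.
        r = min((c for c in range(len(order)) if order[c] not in used),
                key=lambda c: abs(c - pos))
        j = order[r]
        used.add(j)
        success = respond(j)
        history.append((j, success))
        # Success: move towards harder items; amnesia: towards easier ones.
        pos = min(len(order) - 1, r + step) if success else max(0, r - step)
    return history
```

In principle, the administered items and responses could then be scored on the same ability scale α as a fixed short form, because the item parameters come from the same calibration.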

Conclusion

The causes of character amnesia are the subject of frequent speculation, often invoking the declining use of handwriting in the face of the dominance of digital typing (Guangming Wang Public Opinion Center, 2019; Xu & Zhou, 2013). However, an understanding of handwriting failures is also part of the broader study of writing systems (Morin et al., 2020). The work presented above provides one part of the toolkit needed to answer such questions by supplying a quick, easily administered test of character amnesia rate calibrated for adult native speakers of Mandarin. The example test developed here is calibrated for university undergraduates. It demonstrates that quick testing for character amnesia rates with reasonable reliability is feasible using calibration data from the target population on the order of 40 × 4 participation hours. The example test described above and scripts to select items using new calibration data are available under a Creative Commons Attribution-NonCommercial 4.0 International Public License at https://osf.io/nersu/?view_only=80343b61798d4eac8f9912de0b9c1ec9.

References

Almog, G. (2019). Reassessing the evidence of Chinese "character amnesia". The China Quarterly, 238, 524–533. https://doi.org/10.1017/s0305741018001418

An, X., & Yung, Y.-F. (2014). Item response theory: What it is and how you can use the IRT procedure to apply it. SAS Institute Inc. SAS364–2014, 10(4)

Bartzokis, G. (2004). Age-related myelin breakdown: A developmental model of cognitive decline and Alzheimer’s disease. Neurobiology of Aging, 25(1), 5–18. https://doi.org/10.1016/j.neurobiolaging.2003.03.001

Berninger, V. W., Nielsen, K. H., Abbott, R. D., Wijsman, E., & Raskind, W. (2008). Writing problems in developmental dyslexia: Under-recognized and under-treated. Journal of School Psychology, 46(1), 1–21. https://doi.org/10.1016/j.jsp.2006.11.008

Bock, R. D. (1997). A brief history of item response theory. Educational Measurement: Issues and Practice, 16(4), 21–33. https://doi.org/10.1111/j.1745-3992.1997.tb00605.x

Bürkner, P.-C., & Vuorre, M. (2019). Ordinal regression models in psychology: A tutorial. Advances in Methods and Practices in Psychological Science, 2(1), 77–101. https://doi.org/10.31234/osf.io/x8swp

Cai, Z. G., Xu, Z., & Yu, S. (in prep). A stroke-level database of Chinese handwriting: Using OpenHandWrite with PsychoPy Builder GUI for capturing handwriting processes. https://osf.io/c9m35/

Cai, Q., & Brysbaert, M. (2010). SUBTLEX-CH: Chinese word and character frequencies based on film subtitles. PLoS ONE, 5(6), e10729. https://doi.org/10.1371/journal.pone.0010729

Chalmers, R. P. (2012). mirt: A multidimensional item response theory package for the R environment. Journal of Statistical Software, 48(6), 1–29. https://doi.org/10.18637/jss.v048.i06

Chan, L., & Lobo, L. (1992). Developmental trend of Chinese preschool children in drawing and writing. Journal of Research in Childhood Education, 6(2), 93–99. https://doi.org/10.1080/02568549209594826

Chan, L., Cheng, Z. J., & Chan, L. F. (2008). Chinese preschool children’s literacy development: From emergent to conventional writing. Early Years, 28(2), 135–148. https://doi.org/10.1080/09575140801945304

Cheng-Lai, A., Li-Tsang, C. W., Chan, A. H., & Lo, A. G. (2013). Writing to dictation and handwriting performance among Chinese children with dyslexia: Relationships with orthographic knowledge and perceptual-motor skills. Research in Developmental Disabilities, 34(10), 3372–3383. https://doi.org/10.1016/j.ridd.2013.06.039

Chow, S. M., Choy, S.-W., & Mui, S.-K. (2003). Assessing handwriting speed of children biliterate in English and Chinese. Perceptual and Motor Skills, 96(2), 685–694. https://doi.org/10.2466/pms.2003.96.2.685

Cutler, L., & Graham, S. (2008). Primary grade writing instruction: A national survey. Journal of Educational Psychology, 100(4), 907. https://doi.org/10.1037/a0012656

Falk, T. H., Tam, C., Schellnus, H., & Chau, T. (2011). On the development of a computer-based handwriting assessment tool to objectively quantify handwriting proficiency in children. Computer Methods and Programs in Biomedicine, 104(3), e102–e111. https://doi.org/10.1016/j.cmpb.2010.12.010

Garre-Olmo, J., Faúndez-Zanuy, M., López-de-Ipiña, K., Calvó-Perxas, L., & Turró-Garriga, O. (2017). Kinematic and pressure features of handwriting and drawing: Preliminary results between patients with mild cognitive impairment, Alzheimer disease and healthy controls. Current Alzheimer Research, 14(9), 960–968. https://doi.org/10.2174/1567205014666170309120708

National Language and Writing Committee. (1997). Chinese character component standard (信息处理用 GB13000 字符集汉字部件规范). Accessed: 19 Sep 2022

Gelman, A., Vehtari, A., Simpson, D., Margossian, C. C., Carpenter, B., Yao, Y., Kennedy, L., Gabry, J., Bürkner, P.-C., & Modrák, M. (2020). Bayesian workflow. arXiv preprint arXiv:2011.01808.

Gosse, C., & Van Reybroeck, M. (2020). Do children with dyslexia present a handwriting deficit? Impact of word orthographic and graphic complexity on handwriting and spelling performance. Research in Developmental Disabilities, 97, 103553. https://doi.org/10.1016/j.ridd.2019.103553

Guangming Wang Public Opinion Center. (2019). Nearly 60% of netizens experienced character amnesia and neglected writing, leading to reflection (近六成网友称 “提笔忘字” 忽视书写引反思). https://news.gmw.cn/2019-04/09/content_32725020.htm. Accessed 06 Mar 2020

Harari, Y. N. (2014). Sapiens: A brief history of humankind. Random House

Hilburger, C. (2016). Character amnesia: An overview. Sino-Platonic Papers, 264, 51–70.

Huang, S., Lin, W., Xu, M., Wang, R., & Cai, Z. G. (2021). On the tip of the pen: Effects of character-level lexical variables and handwriter-level individual differences on orthographic retrieval difficulties in Chinese handwriting. Quarterly Journal of Experimental Psychology, 74(9), 1497–1511. https://doi.org/10.1177/17470218211004385

Huang, S., Zhou, Y., Du, M., Wang, R., & Cai, Z. G. (2021). Character amnesia in Chinese handwriting: a mega-study analysis. Language Sciences, 85, 101383. https://doi.org/10.1016/j.langsci.2021.101383

Institute of Language Teaching and Research. (1986). A frequency dictionary of modern Chinese. Beijing Language Institute Press, Beijing

Jones, C., & Hall, T. (2013). The importance of handwriting: Why it was added to the Utah Core Standards for English language arts. The Utah Journal of Literacy, 16(2), 28–36.

Kandel, S., Lassus-Sangosse, D., Grosjacques, G., & Perret, C. (2017). The impact of developmental dyslexia and dysgraphia on movement production during word writing. Cognitive Neuropsychology, 34(3–4), 219–251. https://doi.org/10.1080/02643294.2017.1389706

Lam, S. S., Au, R. K., Leung, H. W., & Li-Tsang, C. W. (2011). Chinese handwriting performance of primary school children with dyslexia. Research in Developmental Disabilities, 32(5), 1745–1756. https://doi.org/10.1016/j.ridd.2011.03.001

van der Linden, W. J., & Hambleton, R. K. (Eds.). (1997). Handbook of modern item response theory. Springer New York. https://doi.org/10.1007/978-1-4757-2691-6

Li-Tsang, C. W., Li, T. M., Lau, M. S., Lo, A. G., Ho, C. H., & Leung, H. W. (2019). Computerised Handwriting Speed Test System (CHSTS): Validation of a handwriting assessment for Chinese secondary students. Australian Occupational Therapy Journal, 66(1), 91–99. https://doi.org/10.1111/1440-1630.12526

Li-Tsang, C. W., Li, T. M., Yang, C., Leung, H. W., & Zhang, E. W. (2022). Evaluating Chinese handwriting performance of primary school students using the smart handwriting analysis and recognition platform (SHARP). MedRxiv. https://doi.org/10.1101/2022.02.19.22270984

Lord, F. M. (1977). Practical applications of item characteristic curve theory. Journal of Educational Measurement. https://doi.org/10.1002/j.2333-8504.1977.tb01128.x

McBride, C. (2019). Coping with dyslexia, dysgraphia and ADHD: A global perspective. Routledge. https://doi.org/10.4324/9781315115566

McBride-Chang, C., & Ho, C.S.-H. (2000). Developmental issues in Chinese children’s character acquisition. Journal of Educational Psychology, 92(1), 50. https://doi.org/10.1037/0022-0663.92.1.50

McCloskey, M., & Rapp, B. (2017). Developmental dysgraphia: An overview and framework for research. Cognitive Neuropsychology, 34(3–4), 65–82. https://doi.org/10.1080/02643294.2017.1369016

Medwell, J., & Wray, D. (2008). Handwriting–a forgotten language skill? Language and Education, 22(1), 34–47. https://doi.org/10.2167/le722.0

Morin, O., Kelly, P., & Winters, J. (2020). Writing, graphic codes, and asynchronous communication. Topics in Cognitive Science, 12(2), 727–743. https://doi.org/10.1111/tops.12386

Phelps, J., Stempel, L., & Speck, G. (1985). The children’s handwriting scale: A new diagnostic tool. The Journal of Educational Research, 79(1), 46–50. https://doi.org/10.1080/00220671.1985.10885646

Pyle, C. (2016). Effect of technology on Chinese character amnesia and evolution. Sino-Platonic Papers, 264, 27–49

Reise, S. P., Ainsworth, A. T., & Haviland, M. G. (2005). Item response theory: Fundamentals, applications, and promise in psychological research. Current Directions in Psychological Science, 14(2), 95–101. https://doi.org/10.1111/j.0963-7214.2005.00342.x

Reisman, J. E. (1993). Development and reliability of the research version of the Minnesota handwriting test. Physical & Occupational Therapy in Pediatrics, 13(2), 41–55. https://doi.org/10.1300/j006v13n02_03

Schmidt, K. M., & Embretson, S. E. (2012). Item response theory and measuring abilities. Handbook of Psychology, Second Edition, 2. https://doi.org/10.1002/0471264385.wei0217

Stan Development Team. (2021). Stan modeling language users guide and reference manual, version 2.28. https://mc-stan.org/users/documentation/. Accessed October 2021

Tong, X., & McBride-Chang, C. (2010). Developmental models of learning to read Chinese words. Developmental Psychology, 46(6), 1662. https://doi.org/10.1037/a0020611

Tse, L. F. L., Siu, A. M. H., & Li-Tsang, C. W. P. (2019). Assessment of early handwriting skill in kindergarten children using a Chinese name writing test. Reading and Writing, 32(2), 265–284. https://doi.org/10.1007/s11145-018-9861-6

Tseng, M. H., & Hsueh, I.-P. (1997). Performance of school-aged children on a Chinese handwriting speed test. Occupational Therapy International, 4(4), 294–303. https://doi.org/10.1002/oti.61

Tsompanaki, E., & Caroni, C. (2021). Local influence analysis of the 2PL IRT model for binary responses. Journal of Statistical Computation and Simulation, 91(7), 1455–1477. https://doi.org/10.1080/00949655.2020.1858298

Wallen, M., Bonney, M.-A., & Lennox, L. (1997). Interrater reliability of the handwriting speed test. The Occupational Therapy Journal of Research, 17(4), 280–287. https://doi.org/10.1177/153944929701700404

Wang, R., Huang, S., Zhou, Y., & Cai, Z. G. (2020). Chinese character handwriting: A large-scale behavioral study and a database. Behavior Research Methods, 52(1), 82–96. https://doi.org/10.3758/s13428-019-01206-4

Wollscheid, S., Sjaastad, J., & Tømte, C. (2016). The impact of digital devices vs. pen(cil) and paper on primary school students’ writing skills–a research review. Computers & Education, 95, 19–35. https://doi.org/10.1016/j.compedu.2015.12.001

Xu, J., & Zhou, Y. (2013). 98.8% of respondents experienced character amnesia (98.8% 受访者曾提笔忘字). http://zqb.cyol.com/html/2013-08/27/nw.D110000zgqnb_20130827_2-07.htm. Accessed 06 May 2020

Xu, Z., Mittal, P. S., Ahmed, M. M., Adak, C., & Cai, Z. G. (in prep). Assessing penmanship of Chinese handwriting: A deep learning-based approach. https://osf.io/cm53u/

Yan, J. H., Rountree, S., Massman, P., Doody, R. S., & Li, H. (2008). Alzheimer’s disease and mild cognitive impairment deteriorate fine movement control. Journal of Psychiatric Research, 42(14), 1203–1212. https://doi.org/10.1016/j.jpsychires.2008.01.006

Yin, L., & Treiman, R. (2013). Name writing in Mandarin-speaking children. Journal of Experimental Child Psychology, 116(2), 199–215. https://doi.org/10.1016/j.jecp.2013.05.010

Funding

This research was supported by a General Research Fund (GRF) grant (Project number: 14613722) from the Research Grants Council (RGC) of Hong Kong. All the analytical scripts and data can be accessed from Open Science Framework (https://osf.io/nersu/?view_only=80343b61798d4eac8f9912de0b9c1ec9).


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Model implementation

Model definition

The 2-parameter IRT model treats the outcome of each dictation trial as a Bernoulli random variable whose probability of success depends on participant ability α, item difficulty β, and item discrimination γ. For each response by participant i to item j,

\(\Pr(\text{success}) = \operatorname{logit}^{-1}\left(\gamma_j(\alpha_i - \beta_j)\right)\)

We place a standard normal prior on the ability scores α and half-Cauchy priors on the scales of γ and β, and we constrain γ to be positive (Fig. 7).
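For concreteness, the model can be written as an unnormalized log posterior. This sketch assumes the hierarchical form of the 2PL example in the Stan User’s Guide, from which the notes below say the implementation was adapted. Under that assumption, β_j ~ Normal(μ_β, σ_β) and γ_j ~ LogNormal(0, σ_γ), with a Cauchy(0, 5) prior on μ_β and half-Cauchy(0, 5) priors on σ_β and σ_γ. The lognormal form for γ and the scale of 5 are assumptions taken from that example; the specification actually used is the one shown in Fig. 7. Function names are hypothetical.

```python
import math

# Assumed hyperprior scale, following the Stan User's Guide 2PL example.
CAUCHY_SCALE = 5.0


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pr_success(alpha, beta, gamma):
    """Pr(success) = logit^-1(gamma * (alpha - beta))."""
    return math.exp(-softplus(-gamma * (alpha - beta)))


def normal_lpdf(x, mu, sigma):
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma) - 0.5 * math.log(2 * math.pi)


def cauchy_lpdf(x, loc, scale):
    return -math.log(math.pi * scale * (1.0 + ((x - loc) / scale) ** 2))


def log_posterior(alpha, beta, gamma, mu_beta, sigma_beta, sigma_gamma, data):
    """Unnormalized log posterior of the (assumed) hierarchical 2PL.

    alpha: one ability per participant; beta, gamma: one difficulty and one
    discrimination per item; data: iterable of (i, j, y), y = 1 for success
    and 0 for amnesia.
    """
    # Support constraints: positive scales and positive discriminations.
    if sigma_beta <= 0 or sigma_gamma <= 0 or any(g <= 0 for g in gamma):
        return -math.inf
    lp = sum(normal_lpdf(a, 0.0, 1.0) for a in alpha)             # alpha ~ N(0, 1)
    lp += sum(normal_lpdf(b, mu_beta, sigma_beta) for b in beta)  # beta ~ N(mu, sigma_beta)
    # gamma ~ LogNormal(0, sigma_gamma): normal density of log(gamma) plus Jacobian.
    lp += sum(normal_lpdf(math.log(g), 0.0, sigma_gamma) - math.log(g) for g in gamma)
    lp += cauchy_lpdf(mu_beta, 0.0, CAUCHY_SCALE)
    # Half-Cauchy on the scales: Cauchy density doubled on the positive half-line.
    for s in (sigma_beta, sigma_gamma):
        lp += cauchy_lpdf(s, 0.0, CAUCHY_SCALE) + math.log(2.0)
    # Bernoulli likelihood on the logit scale.
    for i, j, y in data:
        z = gamma[j] * (alpha[i] - beta[j])
        lp += -softplus(-z) if y == 1 else -softplus(z)
    return lp
```

A sampler such as Stan's NUTS explores this density directly. Writing it out makes plain where each prior enters and how the positivity constraint on γ removes part of the parameter space.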

Notes on modelling choices

The likelihood structure is motivated by an extensive IRT literature (see, for example, Van der Linden & Hambleton, 1997). The particular hierarchical Bayesian implementation used here is adapted from https://mc-stan.org/docs/stan-users-guide/item-response-models.html and represents just one member of this broad family. We do not attempt a systematic survey of possible implementations. We note, however, that one attractive feature of this Stan implementation is the ease of fitting a hierarchical version of the model. A hierarchical model can be expected to improve inferences if items and participants can reasonably be considered draws from some larger population of interest.

Like many cognitive models, the IRT model is in principle capable of fitting the same data with different combinations of parameter values, with changes in one ‘trading off’ against changes in another. For this reason, at least one of α, β, and γ must be restricted to a relatively narrow range for the model to be identifiable. Following the Stan User’s Guide, we restrict α with a relatively tight prior while allowing β and γ to vary over a broader range. Identifiability could also be achieved by restricting the other parameters. This particular choice, however, leverages the a priori plausible assumption that handwriting ability is approximately normally distributed. The identifying constraint comes mainly from the relatively light tails of the normal distribution rather than from the particular mean and variance used in the prior. The shape of the distribution is a consequential modeling choice. Once it is fixed, however, the prior mean and variance can be read as setting the units in which ability is measured. Since these units are arbitrary, we are free to choose numerically convenient values, in this case mean zero and standard deviation one. Together, these considerations result in a standard normal prior on α.
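The trade-off can be written out explicitly. For any constants c ≠ 0 and d, the following transformation leaves the linear predictor, and hence every success probability, unchanged: scale abilities and difficulties by c, shift both by d, and divide discriminations by c.

\( \gamma_j(\alpha_i - \beta_j) = \frac{\gamma_j}{c}\left((c\,\alpha_i + d) - (c\,\beta_j + d)\right), \quad c \neq 0,\ d \in \mathbb{R} \)

A prior that concentrates α at a fixed location and scale effectively selects one member of this family of equivalent solutions, pinning down d and |c|. The positivity constraint on γ discussed next excludes the reflected solutions with c < 0.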

The other main theory-laden restriction imposed by the priors is on γ. It is constrained to be positive to avoid placing credence on items with “inverse difficulty”, i.e., items that are more likely to be produced correctly by participants with higher overall amnesia rates. Such “trick” items have been attested in other fields (such as physics exams). We consider them unlikely in the character amnesia context, however, and excluding them substantially improves model performance.

The Cauchy priors used for the remaining parameters were chosen for their flexibility. The heavy-tailed Cauchy readily accommodates values far from its center and is therefore a popular choice for parameters not subject to strong a priori theoretical constraints.

For reporting purposes, we have focused here mainly on the model adequacy portion of the Bayesian workflow (Gelman et al., 2020). Regarding computational adequacy, parameter recovery from simulated data is good for this model only if responding is sufficiently non-uniform. The priors imply that very uniform responding is moderately plausible; however, the model fails to converge when fitted to such scenarios. There were no divergent transitions in the model fits used in these analyses, and effective sample sizes were adequate. However, when the model is fitted to all available responses, one R-hat value exceeds 1.1, indicating that \(\sigma_\gamma\) (the scale of the discrimination parameters) may not be well explored. The model is certainly open to further improvement, which we defer to future work.
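To make the R-hat criterion concrete, the sketch below computes the classic split-R̂ for a single scalar parameter, in the form given in Gelman et al.'s Bayesian Data Analysis. Each chain is split in half. Between-half and within-half variances are then compared, and values well above 1 indicate that the chains have not mixed. Some recent Stan interfaces report a rank-normalized variant instead, so their values can differ slightly. The function name is hypothetical.

```python
import math
from statistics import mean, variance


def split_rhat(chains):
    """Classic split-R-hat for one scalar parameter.

    chains: list of equal-length lists of post-warmup draws (at least 4 each).
    """
    halves = []
    for chain in chains:
        n = len(chain) // 2
        if n < 2:
            raise ValueError("each chain needs at least 4 draws")
        halves.append(chain[:n])   # first half
        halves.append(chain[-n:])  # second half (middle draw dropped if odd)
    n = len(halves[0])
    between = n * variance([mean(h) for h in halves])  # B, with m - 1 denominator
    within = mean([variance(h) for h in halves])        # W
    var_plus = (n - 1) / n * within + between / n       # pooled variance estimate
    return math.sqrt(var_plus / within)
```

Chains that agree give values close to 1. Shifting one chain away from the others, as can happen when a scale parameter such as \(\sigma_\gamma\) is poorly explored, pushes the value above the conventional 1.1 threshold.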

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Langsford, S., Xu, Z. & Cai, Z.G. Constructing a 30-item test for character amnesia in Chinese. Read Writ (2024). https://doi.org/10.1007/s11145-023-10506-3


DOI: https://doi.org/10.1007/s11145-023-10506-3