Abstract

The mental lexicon plays a central role in reading comprehension (Perfetti & Stafura, 2014). It encompasses the number of lexical entries in spoken and written language (vocabulary breadth), the semantic quality of these entries (vocabulary depth), and the connection strength between lexical representations (semantic relatedness); as such, it serves as an output for the decoding process and as an input for comprehension processes. Although different aspects of the lexicon can be distinguished, research on the role of the mental lexicon in reading comprehension often does not take these individual aspects of the lexicon into account. The current study used a multicomponent approach to examine whether measures of spoken and written vocabulary breadth, vocabulary depth, and semantic relatedness were differentially predictive of individual differences in reading comprehension skills in fourth-grade students. The results indicated that, in addition to nonverbal reasoning, short-term memory, and word decoding, the four measures of lexical quality substantially added (30 %) to the proportion of explained variance of reading comprehension (adding up to a total proportion of 65 %). Moreover, each individual measure of lexical quality added significantly to the prediction of reading comprehension after all other measures were taken into account, with written lexical breadth and lexical depth showing the greatest increase in explained variance. It can thus be concluded that multiple components of lexical quality play a role in children’s reading comprehension.

Introduction

Reading comprehension has been defined as the process of extracting and constructing meaning from written text. The reader has to create a mental representation of the text, or in other words, a situation model integrating text information with the reader’s prior knowledge (Kintsch, 1988, 2012; Van Dijk & Kintsch, 1983). In creating this representation, different higher- and lower-order processes (e.g., word decoding, inference making, meaning retrieval, monitoring) play a role (e.g., Nation, 2005; Ouellette & Beers, 2010; Perfetti, Landi, & Oakhill, 2005; Van den Broek, 1994). A great deal of research on reading comprehension has focused on listening comprehension and word decoding in explaining individual differences (Cutting & Scarborough, 2006; Gough & Tunmer, 1986; Hoover & Gough, 1990; Tilstra, McMaster, Van den Broek, Kendeou, & Rapp, 2009), leaving the impact of lexical or vocabulary processes underexplored. Therefore, the present study examines the role of differential lexical predictors of fourth graders’ reading comprehension.

To understand the complex process of reading comprehension, a general framework highlighting differential components is necessary. Perfetti and Stafura’s (2014) Reading Systems Framework (RSF) provides such a framework in which the mental lexicon plays a central role, being a connection point between word identification and text comprehension processes. When reading a text, the lexicon serves as an output source for the word identification process in which orthographic and phonological pieces of information are combined into single words. In addition, information from the lexicon also serves as an input source for comprehension-related processes in which single words are combined into comprehensive sentences and passages. Consequently, problems with the mental lexicon often result in comprehension difficulties. Although numerous studies have shown that children with more semantic knowledge are better able to understand written texts (e.g., Verhoeven & Van Leeuwe, 2008; Verhoeven, Van Leeuwe, & Vermeer, 2011), little is known about the relationship between specific dimensions of semantic knowledge and text comprehension. The current study used a multicomponent approach to examine how the mental lexicon modulates reading comprehension in fourth graders. Different aspects of the mental lexicon were examined in order to explain individual differences in reading comprehension.

The RSF distinguishes three sources of knowledge: linguistic knowledge, orthographic knowledge, and general background knowledge (Perfetti & Stafura, 2014). Different processes enable us to use and combine these sources of knowledge in order to understand written texts. Word decoding and word identification processes are necessary to make sense of the written units. Meaning retrieval, sentence building, inference, and monitoring processes, in turn, are required to combine the single words into a meaningful passage. The RSF is consistent with Hagoort’s (2005, 2007) Memory, Unification, and Control (MUC) model of language, a neurobiological model of language processing. Based on neurological evidence, three functional components can be distinguished (for a review, see Hagoort, 2013). First, the memory component is involved in retrieving information from long-term memory. By reading every single word, the knowledge of word meanings, connections to other words, and prior knowledge are retrieved from long-term memory. After retrieving this information, the unification component facilitates processes resulting in the combination of these pieces of information into larger units, such as sentences and passages. Finally, the control component ensures that the intended actions are carried out. As a case in point, executive control processes are needed to read multiple sources of text or to relate different parts of a text to one another.

As previously mentioned, the mental lexicon plays a central role in reading comprehension, serving as both an input and an output source (Perfetti & Stafura, 2014). It can be defined as the place where word representations are stored in long-term memory. Each representation corresponds to a word known to a greater or lesser extent, resulting in individual vocabularies. Two dimensions are often distinguished: vocabulary breadth and vocabulary depth (e.g., Cain, 2010; Ouellette, 2006; Vermeer, 2001). Vocabulary breadth refers to the number or quantity of spoken and written word representations stored in the lexicon. Vocabulary depth refers to the quality of the representations stored in the lexicon. Previous research has indicated that skilled comprehenders differ from less skilled comprehenders in both quality and quantity of these lexical representations (Braze, Tabor, Shankweiler, & Mencl, 2007; Cain & Oakhill, 2014; Kendeou, Savage, & Van den Broek, 2009; Ouellette, 2006; Ouellette & Beers, 2010; Ricketts, Nation, & Bishop, 2007; Tannenbaum, Torgesen, & Wagner, 2006; Tilstra et al., 2009; Verhoeven & Van Leeuwe, 2008). Skilled comprehenders tend to know more words, and their knowledge of these words is more extensive than that of less skilled comprehenders, demonstrating the importance of a well-developed lexicon for reading comprehension skills.

Whereas the RSF is a general framework bringing together comprehension-related processes, other theories, such as the Triangle model (Plaut, McClelland, Seidenberg, & Patterson, 1996) and the Lexical Quality Hypothesis (LQH; Perfetti & Hart, 2002), describe in more detail the relationship between word representations stored in the mental lexicon and reading comprehension. According to these models, each word representation or entry in the mental lexicon consists of three constituents or chunks of information: orthographic information, phonological information, and semantic information. Individual differences in reading comprehension can be deduced from individual differences in the quantity and quality of these lexical representations (Perfetti, 2007). The quality of a representation is high when orthographic, phonological, and semantic knowledge are well developed; in other words, when someone knows how to spell and pronounce the word and knows what its meaning is. Readers with a rich lexicon with many high-quality representations comprehend written texts better than those with fewer representations that are usually of lower quality. In addition to the quality of the individual constituents, connection strength between the constituents is predictive of reading comprehension skill. In a factor analysis with various lexical quality tasks, Perfetti and Hart (2002) found that, for skilled adult readers, two factors could be distinguished: one factor for the orthographic and phonological tasks and one factor for the semantic tasks. Meanwhile, for less skilled adult readers, three factors could be extracted, one for each aspect of lexical quality. These results indicate that, in less skilled adult readers, the constituents are not tightly bound together, which might result in reading comprehension difficulties. Research involving primary school students has also demonstrated the importance of well-developed semantic knowledge in reading comprehension. Richter, Isberner, Naumann, and Neeb (2013) argued that grade-level differences in reading comprehension can be fully explained by individual differences in lexical quality. The authors concluded that deficits in the semantic constituent (not knowing the meaning of a word) can result in reading comprehension difficulties, even when orthographic and phonological constituents are of high quality.

The organization of the mental lexicon can be compared to a web of interconnected elements (e.g., Bock & Levelt, 1994). Representations can be connected based on, for example, semantic information. According to spreading activation models, the activation of one representation leads to the parallel activation of connected representations (Collins & Loftus, 1975). These connections can be based on different types of relationships and include antonyms, synonyms, super- and subordinate relations, category members, and functional relationships. In developing a strong network, connections start out weak, and parallel activation is not always strong enough to activate related representations. However, when connections are more often encountered in both written and spoken language, connections become stronger and the parallel activation of related representations more often succeeds. Evidence from priming studies suggests that strong comprehenders show signs of stronger word connections compared to poor comprehenders (e.g., Betjemann & Keenan, 2008; Cronin, 2002; Nation & Snowling, 1999). Therefore, having strong connections between word representations might also aid the comprehension process.

To summarize, the mental lexicon plays a central role in reading comprehension processes. Comprehension skills are related to both the quantity and quality of the representations stored in the lexicon. Previous research has shown that, to become skilled comprehenders, readers need to develop a strong lexicon, with many high quality word representations and strong connections between these representations. However, most studies on vocabulary and reading comprehension have included only one aspect of the lexicon in their design, under-exposing the multidimensional nature of the mental lexicon. Therefore, the current paper attempts to examine the relationship between different aspects of the lexicon and reading comprehension skills of children in the fourth grade of primary school. To this end, we used a multicomponent approach to measure three aspects of the lexicon: breadth, depth, and strength of connections between words. The present study provides more knowledge about the role of the mental lexicon in reading comprehension. Our research question was: How are individual differences in differential components of the mental lexicon related to individual differences in reading comprehension skills?

Previous research has indicated that reading comprehension is related to vocabulary breadth (e.g., Verhoeven et al., 2011), vocabulary depth (e.g., Ricketts et al., 2007), and connection strength (e.g., Betjemann & Keenan, 2008; Cronin, 2002). Thus, the current study measured these three dimensions of the mental lexicon. Size of the lexicon (or vocabulary breadth) was measured using two tests: an oral and a written vocabulary breadth test. The quality of semantic knowledge (or vocabulary depth) was measured using a word definition task. Finally, connection strength between representations was measured using a word association task. To be able to examine the individual contributions of these lexical predictors, we controlled for some other well-known predictors of reading comprehension, namely decoding (e.g., Verhoeven & Van Leeuwe, 2008), short-term memory (e.g., Nation, Adams, Bowyer-Crane, & Snowling, 1999), and general reasoning (e.g., Fuchs et al., 2012). We hypothesized that quantity and quality of semantic knowledge and the strength of the word connections would be positively related to reading comprehension skills, in that children who know more words, have deeper word knowledge, and have stronger word connections would also have better developed reading comprehension skills. Because the reading comprehension and written vocabulary breadth tasks both depend on word decoding skills, it was expected that these two would have the strongest relationship.

Methods

Participants

In total, 292 children (147 boys, 145 girls) from 11 different primary schools were tested at the start of the fourth grade (M age = 9 years, 7 months; SD age = 5.73 months). Schools were located in both urban and suburban regions of the Netherlands. Prior to testing, informed consent was obtained from the parents of the participating children. Of the 292 children, 258 children spoke Dutch with both parents. The remaining 34 children indicated that they spoke Dutch and another language at home. Participants in the current study took part in a larger longitudinal study on reading comprehension development.

Materials

Thirteen tests were used to measure reading comprehension skills, vocabulary, decoding skills, short-term memory, and nonverbal cognitive reasoning.

Reading comprehension

Reading comprehension is a complex process, and different tests might vary in the underlying skills they assess (e.g., Keenan, Betjemann, & Olson, 2008; Kintsch, 2012; Nation & Snowling, 1997). Therefore, four tests, differing in passage length, text type, and question type, were used to measure reading comprehension skills. By including diverse tasks, we aimed to capture the complex nature of reading comprehension skills. Test results were combined in order to get a single component score reflecting comprehension skills.

Short passage comprehension

A standardized test for students in the final grades of primary school was used to measure short passage reading comprehension skills (Begrijpend lezen 678 [Reading comprehension grade 4, 5, 6]; Aarnoutse & Kapinga, 2005). The test consisted of three narrative and four expository passages, containing 123–288 words (mean: 192 words per text). For each passage, students had to answer six or seven questions, resulting in 44 questions total (22 multiple choice with four options and 22 true/false). Questions related to the knowledge and strategies necessary to determine the meaning of words (e.g., what is the meaning of the word?), single sentences (e.g., is this sentence true or false with respect to what you have read in the text?), complete passages (e.g., what is the message the author wants to convey?), and relationships between sentences (e.g., who is referred to by the word “she”?). For each correct answer, students received one point, bringing the maximum score to 44 points. The developers of the test reported a Cronbach’s alpha of .86 as a measure of reliability.

Cito reading comprehension scale

Participating schools provided the researchers with results from a standardized test battery used by primary schools throughout the Netherlands to monitor reading comprehension development from first to sixth grade. Results from the test administered halfway through third grade were used in the present study (Cito, 2007). The test is divided into two parts and is partly adaptive to the student’s reading level. Each part consists of a mix of short and medium-long passages (131–634 words; mean number of words per text is 268) and a number of multiple choice questions per text. Both narrative and expository passages were included. Two types of questions were present: passage-based questions, whose answers could be derived from information literally stated in the passage, and questions requiring children to combine information explicitly stated in the passage with information not stated explicitly in the passage. Standardized scores were used. As indicated in the testing manual, the accuracy of measurement (a measure of test reliability) was >.89.

Narrative text reading comprehension

Narrative text comprehension was measured with one text from the Progress in International Reading Literacy Study (PIRLS) Reading Literacy Test 2011 (Mullis, Martin, Kennedy, Trong, & Sainsbury, 2009): De vijandentaart [Enemy Pie]. The narrative text was relatively long and consisted of 832 words. In total, students answered 16 questions: seven multiple choice questions with four options and nine open-ended questions. Questions were literal (to assess understanding of information explicitly stated in the text), inferential (to assess inference skills), or evaluative (to examine how well students were able to evaluate information stated in the text). For the open-ended questions, students received a maximum of one, two, or three points, depending on the difficulty of the question and the quality of the answer. One-point questions required students to answer in single words or a short sentence. For questions worth two or three points, students had to answer in multiple sentences. The maximum test score was 19 points. Responses were scored by four trained research assistants based solely on the completeness of the answer, not on any spelling or grammatical mistakes. Training provided to the scorers included discussing the correct answers provided in the original scoring guide together with some example answers with the first or second author. After training, each research assistant scored the answers of 10 students participating in the study. A high degree of agreement was reached (ICC = .97). Disagreements were resolved by the first and second authors and discussed with the research assistants to reach full agreement. The Cronbach’s alpha, indicating test reliability, was .77.

Expository text reading comprehension

Expository text comprehension was measured using a different text from the PIRLS tests (Mullis et al., 2009), namely Het mysterie van de reuzentand [The mystery of the giant tooth]. The text was relatively long (884 words). Students answered 14 questions, again with one point for each correct multiple choice question (eight in total) and one, two, or three points for every open-ended question (six in total). Questions tapped into literal understanding of the text, inferential abilities, and evaluative skills. Responses to the open-ended questions were scored by the same research assistants who scored the open-ended questions for the narrative reading comprehension test. The same training procedure used for scoring the narrative text was followed. Inter-rater reliability was established by calculating the intraclass correlation. Again, a high degree of agreement was reached (ICC = .90), and disagreements were resolved by the first and second authors and discussed with the research assistants. The Cronbach’s alpha was .75.

Vocabulary breadth

Two tests were used to assess vocabulary breadth: an oral and a written test. The choice for both an oral and written task originated from the fact that written tests depend on decoding skills whereas oral tests do not.

Oral vocabulary breadth

The present study used a version of the Peabody Picture Vocabulary Test (PPVT; Dunn, Dunn, & Schlichting, 2005) that was adapted for group administration. A booklet presented the items from sets eight to thirteen of the original PPVT (72 items total). For each item, the four answer options (pictures) were printed next to each other. The experimenter presented the target word orally, and students had to underline the picture in their booklet that best matched the target word. The test score was equal to the number of correct items. The Cronbach’s alpha, indicating test reliability for the self-adapted version, was .69.

Written vocabulary breadth

The reading vocabulary subtest from the Taaltoets Allochtone Kinderen [Language Test for Foreign Children] (Verhoeven & Vermeer, 1986) was used to measure the vocabulary knowledge of written words. Although the test name might imply otherwise, the task was used for all children. The task consisted of 50 multiple-choice items. In each item, students read a sentence and had to indicate what the underlined word meant by choosing one of the four options listed below the sentence. Test scores were equal to the number of items correct. The Cronbach’s alpha was .82.

Vocabulary depth

Measures of vocabulary depth generally ask children to give word definitions. To gain insights into the vocabulary depth, students completed the vocabulary subtest of the Dutch version of the Wechsler Intelligence Scale for Children, third edition (WISC-III NL; Kort et al., 2005). The subtest consisted of 35 items for which children had to give a definition. Answers were scored using the original WISC-III scoring guide. The test score was the total number of points received. The Cronbach’s alpha was .82.

Connection strength

Word association tasks can be used to examine lexical-semantic organization (e.g., Aitchison, 2012; Entwisle, 1966). In the current study, the students were asked to write down at least two and no more than five associations for each of the 20 target nouns (e.g., dog, finger, and spoon). Selected target nouns all had a high frequency to ensure that all students knew the words. For each association-target pair, a score for the association strength was calculated. These scores were based on the existing word association norm list of De Deyne, Navarro, and Storms (2013). In this norm list, 100 students’ associations for 1424 Dutch words were presented. For each association-target pair in the current study, we calculated how often it occurred in De Deyne and colleagues’ norm list. This procedure resulted in a percentage score for each association-target pair, with a maximum of 100 percentage scores per student (20 targets × 5 associations). These percentage scores reflected the association strength between the association and the target words, with high scores reflecting strong associations and low scores reflecting weak associations. For each student, the mean of all these percentage scores was calculated and used as a measure of the strength of the semantic network. The Cronbach’s alpha was .63.
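
The scoring procedure described above can be sketched as follows. This is a minimal illustration and not the authors' scoring script: the function name `association_strength`, the `norms` dictionary, and all counts in it are hypothetical, and the sketch assumes that each norm entry records how many of the 100 norm respondents produced a given association, so that a count converts directly into a percentage.

```python
def association_strength(responses, norms, norm_respondents=100):
    """Mean percentage score over all association-target pairs of one student.

    responses: dict mapping target word -> list of associations written down
    norms: dict mapping (target, association) -> count in the norm list
    norm_respondents: assumed number of respondents behind each norm count
    """
    scores = []
    for target, associations in responses.items():
        for association in associations:
            # Pairs that never occur in the norm list score 0 (an assumption).
            count = norms.get((target, association), 0)
            scores.append(100.0 * count / norm_respondents)
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical norm counts and one student's responses (illustrative only).
norms = {("dog", "cat"): 40, ("dog", "bark"): 20, ("spoon", "fork"): 30}
responses = {"dog": ["cat", "bark"], "spoon": ["fork", "soup"]}
mean_strength = association_strength(responses, norms)
```

In this toy case the four pairs score 40, 20, 30, and 0, so the student's mean association strength is 22.5.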

Decoding skills

To measure decoding skills, word and non-word decoding tasks were administered. Test results of both word and non-word reading tasks were combined in order to get one component score reflecting decoding skills.

Word reading

The Een Minuut Test (EMT) [One-Minute Test] (Brus & Voeten, 1999) was used to measure word decoding. The test consisted of a card with 116 words increasing in length, starting with simple CVC words and finishing with multi-syllable words. Students were instructed to read the words as quickly and accurately as possible, resulting in a combined score of both reading rate and reading accuracy. The score on this test was the number of words read correctly within 1 min. The Cronbach’s alpha, as established by the developers of the test, was .89.

Non-word reading

To measure non-word decoding skills, De Klepel (Van den Bos, Lutje Spelberg, Scheepstra, & de Vries, 1994) was administered. The test consisted of a card with 116 non-words increasing in length and difficulty (as in the word reading task). Students were instructed to read the non-words as quickly and accurately as possible. The score on this test was the number of non-words read correctly within 2 min. The Cronbach’s alpha was .93.

Short-term memory

Students completed both digit span and word span tasks. In both span tasks, children were orally presented with a sequence of units and were asked to remember the sequence and reproduce it. For the digit span task, the units to be remembered were single-digit units; for the word span task, the units to be remembered were simple high frequency one-syllable words (e.g., ball, bike, door). Both tasks started out relatively easy, with only two units that had to be remembered. For both tasks, difficulty increased gradually to nine units. The digits and words were read to the children by the experimenter at a pace of one unit per second, with a pause of one second between each unit. For each level of difficulty, children received three attempts, and testing was terminated when all three attempts for one difficulty level were incorrect. The number of correctly recalled sequences comprised the scores for both tasks. Test results of both tasks were combined in order to get one component score reflecting short-term memory.

Nonverbal cognitive reasoning

To measure nonverbal cognitive reasoning, children completed the Raven Standard Progressive Matrices (SPM; Raven, 1960). Children were presented with 60 visual patterns divided over five sets of increasing difficulty. In each pattern, a piece of the puzzle was missing, and children were asked to indicate which of the six (for the first two sets) or eight (for sets three, four, and five) presented puzzle pieces would complete the pattern. Test scores were the sum of the number of correct answers. The Cronbach’s alpha was .89.

Procedure

All tests, except the Cito reading comprehension scale, were administered by the researchers at the start of fourth grade. The vocabulary subtest of the WISC-III NL, the word reading task, the non-word reading task, and the short-term memory tasks were completed during two individual test sessions, each taking approximately 20 min. Individual testing took place in a quiet room in the school. All other tests were administered group-wise during three separate sessions. During the first session, students completed both PIRLS texts. This session took approximately 90 min, with a short break between the two tests. In the second session, students completed the short passage reading comprehension task, the word association task, and the adapted version of the PPVT. This second session took approximately 2 h, with short breaks between the tests. On a third morning, students completed the Raven SPM. The Cito reading comprehension scale test was administered in class by the teacher halfway through third grade.

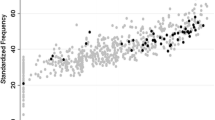

Data analyses

A principal component analysis (PCA) was conducted on the four reading comprehension tests to examine the factor structure. The PCA was conducted with R (R Core Team, 2015), an open source statistical program, using the principal function from the psych package (Revelle, 2014). The Kaiser–Meyer–Olkin measure of sampling adequacy, as an indicator of appropriateness of performing a factor analysis, was great (KMO = .84), indicating that performing a factor analysis should yield a distinct and reliable factor (Field, Miles, & Field, 2012). Bartlett’s test of sphericity indicated that correlations were large enough to perform a PCA (χ²(6) = 681.83, p < .001). The PCA, using Kaiser’s criterion to retain only factors with an eigenvalue >1, yielded a one-factor solution that explained 76 % of the variance. All four reading comprehension tests loaded highly on the reading comprehension skills component (eigenvalue = 3.05). The factor loadings were as follows: short passage comprehension = 0.85; Cito reading comprehension scale = 0.89; narrative text reading comprehension = 0.88; and expository text reading comprehension = 0.88. Standardized test scores were combined to generate one composite score for reading comprehension. In addition, the same procedure was followed with the word reading and non-word reading task to create a composite score for decoding skills as well as with the two short-term memory tasks to create a composite score for short-term memory skills.
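
The compositing step and the reported proportion of explained variance can be illustrated with a short sketch. It is written in Python rather than R for illustration and does not reproduce the original analysis: the helper names, the toy scores, the use of the population standard deviation, and the choice to average z-scores are all assumptions, since the text only states that standardized scores were combined.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize raw scores to mean 0 and SD 1 (population SD, an assumption)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def composite(*tests):
    """Average each student's z-scores across tests (one list of scores per test)."""
    standardized = [z_scores(t) for t in tests]
    return [mean(student) for student in zip(*standardized)]


def proportion_variance(eigenvalue, n_variables):
    """Share of total variance captured by one component of a correlation-matrix PCA."""
    return eigenvalue / n_variables


# Hypothetical raw scores for three students on two tests (illustrative only).
test_a = [10, 20, 30]
test_b = [1, 2, 3]
reading_composite = composite(test_a, test_b)

# The reported eigenvalue of 3.05 over four tests gives roughly 76 % of the variance.
explained = proportion_variance(3.05, 4)
```

Because a PCA on a correlation matrix has total variance equal to the number of variables, an eigenvalue of 3.05 for four tests corresponds to 3.05 / 4 ≈ .76, in line with the reported 76 %.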

To assess the unique impact of the different dimensions of vocabulary on fourth graders’ reading comprehension skills, a complete regression model including all predictors was compared to four reduced regression models. In each reduced model, one of the four predictors of semantic quality was left out to calculate variance explained in predicting reading comprehension by each predictor. In the first reduced model, the measure of written vocabulary breadth was left out; in the second reduced model, the measure of oral vocabulary breadth was left out; in the third reduced model, the measure of vocabulary depth was left out; and in the fourth reduced model, the measure of semantic relatedness was left out.
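
The comparison of a complete and a reduced regression model is commonly tested with an F statistic on the change in R². The sketch below shows that standard formula under stated assumptions: the function names are hypothetical, the R² values in the example are invented for illustration, and the formula uses unadjusted R², whereas the article reports adjusted values, so the reported statistics cannot be recomputed from it.

```python
def residual_df(n_obs, n_predictors):
    """Residual degrees of freedom of a linear model with an intercept."""
    return n_obs - n_predictors - 1


def nested_f(r2_full, r2_reduced, n_obs, k_full, k_dropped=1):
    """F statistic for dropping k_dropped predictors from the full model.

    Returns (F, numerator df, denominator df).
    """
    df2 = residual_df(n_obs, k_full)
    f_value = ((r2_full - r2_reduced) / k_dropped) / ((1.0 - r2_full) / df2)
    return f_value, k_dropped, df2


# Sample size and number of predictors as described in the text:
# 292 children, 7 predictors in the complete model, 1 predictor dropped.
# The two R-squared values are hypothetical.
f_value, df1, df2 = nested_f(r2_full=0.66, r2_reduced=0.58, n_obs=292, k_full=7)
```

With 292 children and seven predictors in the complete model, each single-predictor comparison has 1 and 292 − 7 − 1 = 284 degrees of freedom.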

Results

Descriptive statistics and correlations among the 13 measures are presented in Table 1. Strong correlations were found between the four reading comprehension measures (all r’s > .66, p < .001), between word and non-word reading tasks (r = .85, p < .001), and between the two measures of short-term memory (r = .55, p < .001). As previously described, composite scores were calculated for reading comprehension skills, decoding skills, and short-term memory skills. Correlations between the vocabulary tasks were medium to high.

Predictors of reading comprehension

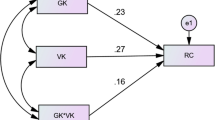

A hierarchical regression analysis (HRA), using the lm function in R (R Core Team, 2015), was carried out to gain insight into the impact of vocabulary on reading comprehension skills. Table 2 shows the results of the HRA. In the first step, two general cognitive control measures (nonverbal reasoning and short-term memory) were included to ensure that subsequent effects were not due to differences in these measures: F(2,289) = 58.16, p < .001, R²adj = .28. In the second step, decoding was included to control for the well-established effect of decoding skills on reading comprehension skills: F(3,288) = 52.27, p < .001, ∆R²adj = .06. In the third step, all four vocabulary variables were included: F(7,284) = 76.64, p < .001, ∆R²adj = .30. The results of the final step indicated significant effects for all predictors (nonverbal reasoning, short-term memory, decoding, written vocabulary breadth, oral vocabulary breadth, vocabulary depth, and connection strength); higher scores on each of these predictors were associated with higher scores on the reading comprehension tests. Relatively large standardized coefficients were found for nonverbal reasoning (β = .24) and written vocabulary breadth (β = .38), indicating stronger effects for these variables compared to the other predictors. All four measures of vocabulary were significantly related to reading comprehension skills (all p’s ≤ .04), with written vocabulary breadth having the largest standardized coefficient (β = .38) and oral vocabulary breadth the smallest (β = .10). Together, all predictors explained 65 % of the variance in reading comprehension skill. Additional analyses were conducted to examine the relative individual contribution of each of these vocabulary tasks.

Individual effect of vocabulary measures on reading comprehension scores

To gain insight into the unique contribution of each lexical predictor in explaining individual differences in reading comprehension, additional analyses were conducted. To understand the predictive quality of a single predictor, two regression models have to be compared: a complete model in which all predictors are present and a reduced model in which the predictor of interest is left out. The complete model was tested in the previous part of this results section. Four reduced models were fitted, one for each of the lexical predictors, and each was compared to the complete model.

The results indicated that each lexical predictor explained unique variance in predicting reading comprehension. Written vocabulary breadth explained 8 % of the variance in reading comprehension, after controlling for nonverbal reasoning, short-term memory, decoding, and the other three vocabulary measures: F(1,284) = 60.95, p < .001, ∆R²adj = .08. In addition, vocabulary depth explained an extra 2 % of the variance in reading comprehension, after controlling for the other variables: F(1,284) = 15.81, p < .001, ∆R²adj = .02. Furthermore, after controlling for the other variables, differences in connection strength explained an extra 1 % of variance in reading comprehension: F(1,284) = 12.08, p < .001, ∆R²adj = .01. Finally, a small portion of the variance in reading comprehension (0.4 %) was explained by differences in oral vocabulary breadth, after controlling for the other variables: F(1,284) = 4.38, p = .04, ∆R²adj = .004.

Taken together, these results indicated that reading comprehension skills were better developed in children who knew more words, had a deeper understanding of word meanings, and had stronger connections between words. Together, the four measures predicted 30 % of the variance in reading comprehension. Of this 30 %, 18.6 % was shared between the four indicators of semantic quality. Each measure also uniquely explained some additional variance in predicting reading comprehension. Although these effects were relatively small, all effects were (highly) significant. Of the four lexical measures, written vocabulary breadth was the strongest lexical predictor of individual differences in reading comprehension skill.
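The split of the 30 % into shared and unique portions follows from subtracting the reported unique contributions. The short check below reproduces that arithmetic from the percentages given in the text; the variable names are chosen here for illustration, and the calculation assumes the rounded values as reported.

```python
# Percentages of variance in reading comprehension, as reported in the text.
total_lexical = 30.0
unique = {
    "written vocabulary breadth": 8.0,
    "vocabulary depth": 2.0,
    "connection strength": 1.0,
    "oral vocabulary breadth": 0.4,
}

unique_sum = sum(unique.values())
shared = total_lexical - unique_sum        # variance common to the four measures
shared_fraction = shared / total_lexical   # share of the lexical contribution

print(f"unique: {unique_sum:.1f} %")              # unique: 11.4 %
print(f"shared: {shared:.1f} %")                  # shared: 18.6 %
print(f"shared / total: {shared_fraction:.0%}")   # shared / total: 62%
```

The resulting 62 % corresponds to the "almost two-thirds" of lexically explained variance that the Discussion describes as shared.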

Discussion

The general aim of the present study was to examine more closely the relationship between the mental lexicon and reading comprehension skills in fourth graders. The question we answered with the current study was: How are individual differences in the mental lexicon related to individual differences in reading comprehension skills? Two tests were used to assess the number of lexical entries (vocabulary breadth): one based on oral language and one based on written language. The quality of semantic knowledge (vocabulary depth) was measured with a definition task. Finally, the strength of connections between representations was determined with a word association task. We hypothesized that individual differences in each of these lexical aspects would be related to individual differences in reading comprehension skills. The results supported our hypotheses; all four measures explained unique variance in reading comprehension skills.

Lexical knowledge was a significant predictor of reading comprehension skills while controlling for general reasoning, short-term memory, and decoding. These results line up with previous research (e.g., Cutting & Scarborough, 2006; Ricketts et al., 2007; Verhoeven et al., 2011). Of the total 65 % of explained variance in the current study, the four vocabulary measures combined explained 30 % of the variance in reading comprehension skills. Compared to previous studies, this is a relatively large amount. Ouellette (2006), for example, concluded that vocabulary explained 15 % of the variance in reading comprehension among fourth-grade students. Our design might be the reason why, when compared to other studies, a relatively large amount of variance is explained by the vocabulary measures. Although it has been suggested that the mental lexicon plays an important role in reading comprehension (e.g., Perfetti & Stafura, 2014), the complex nature of this storage component is not often considered when examining the role of vocabulary in reading comprehension. In the present study, we used a multicomponent approach in which different aspects of the lexicon were measured. The current results imply that testing only one dimension of the lexicon might bias the interpretation of the relationship between vocabulary and reading comprehension. The size of this relationship could be underestimated when using one or a limited number of measures.

Previous research has suggested that a relationship exists between size of the lexicon, depth of lexical knowledge, and strength of connections between representations, on the one hand, and reading comprehension, on the other hand (Betjemann & Keenan, 2008; Braze et al., 2007; Hall, Greenberg, Laures-Gore, & Pae, 2014; Landi, 2010; Ouellette & Beers, 2010; Ricketts et al., 2007; Tilstra et al., 2009; Van Steensel, Oostdam, Van Gelderen, & Van Schooten, 2014; Veenendaal, Groen, & Verhoeven, 2015). The current study, however, was one of the first to combine these different lexical aspects into one design and examine each individual contribution in explaining individual differences in reading comprehension. As hypothesized, individual differences in reading comprehension skill could be predicted by individual differences in size of the lexicon, depth of lexical knowledge, and strength of connections while controlling for decoding skills, short-term memory capacity, and general reasoning. The results from the multiple regression analyses indicated that all lexical aspects contributed uniquely in explaining individual differences in reading comprehension skills. These results are in line with the complexity and centrality of the lexicon as proposed by Perfetti and Stafura (2014). Of the four semantic quality measures, the written vocabulary breadth test explained the most unique variance in predicting fourth graders’ reading comprehension skills (8 %). According to the lexical quality hypothesis (LQH; Perfetti & Hart, 2002), representations consist of three chunks of information (i.e., orthographic, phonological, and semantic). The task measuring written vocabulary breadth and the reading comprehension tasks rely on all three constituents: the orthographic and phonological constituents in decoding and the semantic constituent for retrieving meaning. The overlap between the two tasks might be why the written vocabulary breadth task, of the four semantic quality measures, was the best predictor of reading comprehension.

Although all lexical predictors explained unique variance in predicting reading comprehension, it should be noted that almost two-thirds of the total variance in predicting reading comprehension explained by the lexical measures was shared. Based on these results, we conclude that the various lexical components measured in the present study show overlap and can be considered to be part of the same construct. However, the additional unique variance explained in reading comprehension by each individual predictor suggests that, in addition to this shared construct, each component also has something unique to offer.

The results of the current study need to be interpreted with some caution. In the current study no measure of listening comprehension was included. It has been very well established that listening comprehension is strongly related to reading comprehension (simple view of reading, SVR; Hoover & Gough, 1990). Previous work has indicated that, in contrast to the original theory, the listening comprehension component of the SVR should be regarded as a more general linguistic component including vocabulary knowledge (e.g., Tilstra et al., 2009; Verhoeven & Van Leeuwe, 2008). Therefore, listening comprehension and vocabulary might share variance in predicting reading comprehension skills. Future research on the unique contributions of different dimensions of vocabulary in explaining differences in reading comprehension could benefit from including a measure of listening comprehension.

As suggested by previous research, reading comprehension tests differ in the underlying skills they assess (e.g., Keenan et al., 2008; Kintsch, 2012; Nation & Snowling, 1997). Therefore, in the current study, we used four different tests covering different text types, different text lengths, and different types of questions. The purpose of the current study was to examine the relative contributions of the various aspects of semantic quality to reading comprehension in general. Future research exploring whether the relative contribution of the various aspects of semantic quality differs across dimensions of reading comprehension (e.g., text length and text type) would be highly interesting, and the results might have important implications for both education and research.

The combination of findings presented in this paper offers important implications. Given that lexical measures related to lexical size, lexical depth, and strength of connections each explained unique variance in reading comprehension skill, future research on the role of vocabulary in reading comprehension should consider the complex nature of this relationship and include measures of these different dimensions of vocabulary. For educational purposes, the current results indicate that difficulties in reading comprehension might originate from a variety of lexical problems. Accordingly, vocabulary instruction in education should focus not only on increasing the number of lexical entries in the lexicon, but also on depth of knowledge and on the connections between words. Enhancing all these aspects of the lexicon may lead to an increase in reading comprehension skills. However, intervention studies on these different aspects of the lexicon and their relationship to reading comprehension are warranted. Finally, in order to make causal claims, future research could benefit from adopting a longitudinal perspective in which developmental trajectories are examined.

Conclusion

The results of the current study suggest that various aspects of lexical knowledge might be related to reading comprehension skills in different ways. Individual differences in fourth-grade reading comprehension skills can be explained by differences in the number of lexical entries (vocabulary breadth), semantic quality of these entries (vocabulary depth), and connection strength between lexical representations. In research, this complex relationship between the lexicon and reading comprehension should be taken into account. For education, vocabulary instruction should focus on enhancing the lexicon by increasing the number of representations stored in the lexicon and enhancing the quality and connection strengths of these representations.

References

Aarnoutse, C., & Kapinga, T. (2005). Begrijpend lezen 345678 [Reading comprehension 345678]. Ridderkerk: Uitgever 678 Onderwijs Advisering.

Aitchison, J. (2012). Words in the mind: An introduction to the mental lexicon (4th ed.). West Sussex: Wiley.

Betjemann, R. S., & Keenan, J. M. (2008). Phonological and semantic priming in children with reading disability. Child Development, 79, 1086–1102. doi:10.1111/j.1467-8624.2008.01177.x.

Bock, K., & Levelt, W. (1994). Language production: Grammatical encoding. In M. A. Gernsbacher (Ed.), Handbook of psycholinguistics (pp. 945–984). San Diego, CA: Academic Press.

Braze, D., Tabor, W., Shankweiler, D. P., & Mencl, W. E. (2007). Speaking up for vocabulary: Reading skill differences in young adults. Journal of Learning Disabilities, 40, 226–243. doi:10.1177/00222194070400030401.

Brus, B. T., & Voeten, M. M. (1999). Eén-Minuut Test [One Minute Test]. Lisse: Swets.

Cain, K. (2010). Reading development and difficulties. West Sussex, UK: Wiley.

Cain, K., & Oakhill, J. (2014). Reading comprehension and vocabulary: Is vocabulary more important for some aspects of comprehension? L’Année Psychologique, 114, 647–662. doi:10.4074/S0003503314004035.

Cito. (2007). LOVS Begrijpend lezen groep 5 [LOVS Reading comprehension grade 3]. Arnhem: Cito.

Collins, A. M., & Loftus, E. F. (1975). A spreading-activation theory of semantic processing. Psychological Review, 82, 407–428. doi:10.1037/0033-295X.82.6.407.

Cronin, V. S. (2002). The syntagmatic–paradigmatic shift and reading development. Journal of Child Language, 29, 189–204. doi:10.1017/S0305000901004998.

Cutting, L. E., & Scarborough, H. S. (2006). Prediction of reading comprehension: Relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading, 10, 277–299. doi:10.1207/s1532799xssr1003_5.

De Deyne, S., Navarro, D. J., & Storms, G. (2013). Better explanations of lexical and semantic cognition using networks derived from continued rather than single-word associations. Behavior Research Methods, 45, 480–498. doi:10.3758/s13428-012-0260-7.

Dunn, L. M., Dunn, L. M., & Schlichting, J. E. P. T. (2005). Peabody picture vocabulary test-III-NL. Amsterdam: Harcourt Test Publishers.

Entwisle, D. R. (1966). Word associations of young children. Baltimore, MD: Johns Hopkins Press.

Field, A., Miles, J., & Field, Z. (2012). Discovering statistics using R. London: SAGE.

Fuchs, D., Compton, D. L., Fuchs, L. S., Bryant, V. J., Hamlett, C. L., & Lambert, W. (2012). First-grade cognitive abilities as long-term predictors of reading comprehension and disability status. Journal of Learning Disabilities, 45, 217–231. doi:10.1177/0022219412442154.

Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education, 7, 6–10. doi:10.1177/074193258600700104.

Hagoort, P. (2005). On Broca, brain, and binding: A new framework. Trends in Cognitive Sciences, 9, 416–423. doi:10.1016/j.tics.2005.07.004.

Hagoort, P. (2007). The memory, unification, and control (MUC) model of language. In A. S. Meyer, L. R. Wheeldon, & A. Krott (Eds.), Automaticity and control in language processing (pp. 243–270). New York, NY: Psychology Press.

Hagoort, P. (2013). MUC (memory, unification, control) and beyond. Frontiers in Psychology, 4, 416. doi:10.3389/fpsyg.2013.00416.

Hall, R., Greenberg, D., Laures-Gore, J., & Pae, H. K. (2014). The relationship between expressive vocabulary knowledge and reading skills for adult struggling readers. Journal of Research in Reading, 37, S87–S100. doi:10.1111/j.1467-9817.2012.01537.x.

Hoover, W. A., & Gough, P. B. (1990). The simple view of reading. Reading and Writing: An Interdisciplinary Journal, 2, 127–160. doi:10.1007/BF00401799.

Keenan, J. M., Betjemann, R. S., & Olson, R. K. (2008). Reading comprehension tests vary in the skills they assess: Differential dependence on decoding and oral comprehension. Scientific Studies of Reading, 12, 281–300. doi:10.1080/10888430802132279.

Kendeou, P., Savage, R., & Van den Broek, P. (2009). Revisiting the simple view of reading. British Journal of Educational Psychology, 79, 353–370. doi:10.1348/978185408X369020.

Kintsch, W. (1988). The role of knowledge in discourse comprehension: A construction-integration model. Psychological Review, 95, 163–182. doi:10.1037/0033-295X.95.2.163.

Kintsch, W. (2012). Psychological models of reading comprehension and their implications for assessment. In J. P. Sabatini, E. R. Albro, & T. O’Reilly (Eds.), Measuring up: Advances in how we assess reading ability (pp. 21–38). Plymouth: Rowman & Littlefield Education.

Kort, W., Schittekatte, M., Dekker, P. H., Verhaeghe, P., Compaan, E. L., Bosmans, M., et al. (2005). WISC-III NL. Handleiding en verantwoording [WISC-III NL. Manual]. Amsterdam: Harcourt Test Publishers.

Landi, N. (2010). An examination of the relationship between reading comprehension, higher-level and lower-level reading sub-skills in adults. Reading and Writing: An Interdisciplinary Journal, 23, 701–717. doi:10.1007/s11145-009-9180-z.

Mullis, I. V. S., Martin, M. O., Kennedy, A. M., Trong, K. L., & Sainsbury, M. (2009). PIRLS 2011 assessment framework. Chestnut Hill, MA: Boston College.

Nation, K. (2005). Children’s reading comprehension difficulties. In M. J. Snowling & C. Hulme (Eds.), The science of reading: A handbook (pp. 248–265). Oxford: Blackwell Publishing.

Nation, K., Adams, J. W., Bowyer-Crane, C. A., & Snowling, M. J. (1999). Working memory deficits in poor comprehenders reflect underlying language impairments. Journal of Experimental Child Psychology, 73, 139–158. doi:10.1006/jecp.1999.2498.

Nation, K., & Snowling, M. J. (1997). Assessing reading difficulties: The validity and utility of current measures of reading skill. British Journal of Educational Psychology, 67, 359–370. doi:10.1111/j.2044-8279.1997.tb01250.x.

Nation, K., & Snowling, M. J. (1999). Developmental differences in sensitivity to semantic relations among good and poor comprehenders: Evidence from semantic priming. Cognition, 70, B1–B13. doi:10.1016/S0010-0277(99)00004-9.

Ouellette, G. P. (2006). What’s meaning got to do with it: The role of vocabulary in word reading and reading comprehension. Journal of Educational Psychology, 98, 554–566. doi:10.1037/0022-0663.98.3.554.

Ouellette, G., & Beers, A. (2010). A not-so-simple view of reading: How oral vocabulary and visual-word recognition complicate the story. Reading and Writing: An Interdisciplinary Journal, 23, 189–208. doi:10.1007/s11145-008-9159-1.

Perfetti, C. (2007). Reading ability: Lexical quality to comprehension. Scientific Studies of Reading, 11, 357–383. doi:10.1080/10888430701530730.

Perfetti, C. A., & Hart, L. (2002). The lexical quality hypothesis. In L. Verhoeven, C. Elbro, & P. Reitsma (Eds.), Precursors of functional literacy (pp. 67–86). Amsterdam: John Benjamins Publishing Co.

Perfetti, C. A., Landi, N., & Oakhill, J. (2005). The acquisition of reading comprehension skill. In M. J. Snowling & C. Hulme (Eds.), The science of reading: A handbook (pp. 227–247). Oxford: Blackwell Publishing.

Perfetti, C., & Stafura, J. (2014). Word knowledge in a theory of reading comprehension. Scientific Studies of Reading, 18, 22–37. doi:10.1080/10888438.2013.827687.

Plaut, D. C., McClelland, J. L., Seidenberg, M. S., & Patterson, K. (1996). Understanding normal and impaired word reading: Computational principles in quasi-regular domains. Psychological Review, 103, 56–115. doi:10.1037/0033-295X.103.1.56.

R Core Team. (2015). R: A language and environment for statistical computing. R Foundation for Statistical Computing. http://www.R-project.org/.

Raven, J. C. (1960). Guide to the standard progressive matrices. London: H.K. Lewis & Co., Ltd.

Revelle, W. (2014). psych: Procedures for Personality and Psychological Research (Version 1.4.8). http://CRAN.R-project.org/package=psych.

Richter, T., Isberner, M. B., Naumann, J., & Neeb, Y. (2013). Lexical quality and reading comprehension in primary school children. Scientific Studies of Reading, 17, 415–434. doi:10.1080/10888438.2013.764879.

Ricketts, J., Nation, K., & Bishop, D. V. (2007). Vocabulary is important for some, but not all reading skills. Scientific Studies of Reading, 11, 235–257. doi:10.1080/10888430701344306.

Tannenbaum, K. R., Torgesen, J. K., & Wagner, R. K. (2006). Relationships between word knowledge and reading comprehension in third-grade children. Scientific Studies of Reading, 10, 381–398. doi:10.1207/s1532799xssr1004_3.

Tilstra, J., McMaster, K., Van den Broek, P., Kendeou, P., & Rapp, D. (2009). Simple but complex: Components of the simple view of reading across grade levels. Journal of Research in Reading, 32, 383–401. doi:10.1111/j.1467-9817.2009.01401.x.

Van den Bos, K. P., Lutje Spelberg, H. C., Scheepstra, A. J. M., & De Vries, J. R. (1994). De Klepel, een Test voor de Leesvaardigheid van Pseudowoorden. Verantwoording, Diagnostiek en Behandeling [De Klepel, a test for Reading Skills of Pseudowords. Manual, Diagnostics, and Treatment]. Nijmegen: Berkhout.

Van den Broek, P. (1994). Comprehension and memory of narrative texts: Inferences and coherence. In M. A. Gernsbacher (Ed.), Handbook of psycholinguistics (pp. 539–588). San Diego, CA: Academic Press.

Van Dijk, T. A., & Kintsch, W. (1983). Strategies of discourse comprehension. New York, NY: Academic Press.

Van Steensel, R., Oostdam, R., Van Gelderen, A., & Van Schooten, E. (2014). The role of word decoding, vocabulary knowledge and meta-cognitive knowledge in monolingual and bilingual low-achieving adolescents’ reading comprehension. Journal of Research in Reading. doi:10.1111/1467-9817.12042.

Veenendaal, N. J., Groen, M. A., & Verhoeven, L. (2015). What oral text reading fluency can reveal about reading comprehension. Journal of Research in Reading, 38, 213–225. doi:10.1111/1467-9817.12024.

Verhoeven, L., & Van Leeuwe, J. (2008). Prediction of the development of reading comprehension: A longitudinal study. Applied Cognitive Psychology, 22, 407–423. doi:10.1002/acp.1414.

Verhoeven, L., van Leeuwe, J., & Vermeer, A. (2011). Vocabulary growth and reading development across the elementary school years. Scientific Studies of Reading, 15, 8–25. doi:10.1080/10888438.2011.536125.

Verhoeven, L. T. W., & Vermeer, A. R. (1986). Taaltoets allochtone kinderen [Language Test for Foreign Children]. Tilburg: Zwijsen.

Vermeer, A. (2001). Breadth and depth of vocabulary in relation to L1/L2 acquisition and frequency of input. Applied Psycholinguistics, 22, 217–234.

Acknowledgments

This research was supported by a Grant (411.10.925) from the Netherlands Organisation for Scientific Research (NWO).

Ethics declarations

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Swart, N.M., Muijselaar, M.M.L., Steenbeek-Planting, E.G. et al. Differential lexical predictors of reading comprehension in fourth graders. Read Writ 30, 489–507 (2017). https://doi.org/10.1007/s11145-016-9686-0

DOI: https://doi.org/10.1007/s11145-016-9686-0