Abstract

We present a formula that expresses the Hankel determinants of a linear combination of length \(d+1\) of moments of orthogonal polynomials in terms of a \(d\times d\) determinant of the orthogonal polynomials. This formula exists, somehow hidden, in the folklore of the theory of orthogonal polynomials, but it deserves to be better known and to be presented correctly and with a full proof. We present four fundamentally different proofs, one that uses classical formulae from the theory of orthogonal polynomials, one that uses a vanishing argument and is due to Elouafi (J Math Anal Appl 431:1253–1274, 2015) (but given in an incomplete form there), one that is inspired by random matrix theory and is due to Brézin and Hikami (Commun Math Phys 214:111–135, 2000), and one that uses (Dodgson) condensation. We give two applications of the formula. In the first application, we explain how to compute such Hankel determinants in a singular case. The second application concerns the linear recurrence of such Hankel determinants for a certain class of moments that covers numerous classical combinatorial sequences, including Catalan numbers, Motzkin numbers, central binomial coefficients, central trinomial coefficients, central Delannoy numbers, Schröder numbers, Riordan numbers, and Fine numbers.



1 Introduction

The purpose of this article is to put to the fore a fundamental formula for orthogonal polynomials that is implicitly hidden in the classical literature on orthogonal polynomials. It is so well hidden that seemingly even top experts in the theory of orthogonal polynomials are not aware of it. How and why this is possible is explained in greater detail in Sect. 2. My literature search led me to discover that the formula is stated in Lascoux’s book [11], albeit incorrectly, but with a correct proof. Subsequently, I realised that the formula is stated correctly by Elouafi in [6], however with an incomplete proof. Finally, in reaction to [10], Arno Kuijlaars pointed out to me that the formula appears in [1], in which a result due to Brézin and Hikami [2] is cited. Both papers contain a correct statement and (different) proofs; however, they use random matrix language. Again, see Sect. 2 for more details.

So, let me present this formula without further ado. Let \(\big (p_n(x)\big )_{n\ge 0}\) be a sequence of monic polynomials over a field K of characteristic zero (Footnote 1) with \(\deg p_n(x)=n\), and assume that they are orthogonal with respect to the linear functional L, i.e., they satisfy \(L(p_m(x)p_n(x))=\omega _n\delta _{m,n}\) with \(\omega _n\ne 0\) for all n, where \(\delta _{m,n}\) is the Kronecker delta. Furthermore, we write \(\mu _n\) for the n-th moment \(L(x^n)\) of the functional L, for which we also use the umbral notation \(\mu ^n\equiv \mu _n\) (Footnote 2).

Theorem 1

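Let n and d be non-negative integers. Given variables \(x_1,x_2,\dots ,x_d\), and using the umbral notation explained above, we have

\[
\frac{\det_{0\le i,j\le n-1}\Big(\mu^{i+j}\prod_{\ell=1}^{d}(x_\ell+\mu)\Big)}{\det_{0\le i,j\le n-1}\big(\mu_{i+j}\big)}
=(-1)^{nd}\,\frac{\det_{1\le i,j\le d}\big(p_{n+i-1}(-x_j)\big)}{\prod_{1\le i<j\le d}(x_i-x_j)}.\tag{1.1}
\]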

Here, determinants of empty matrices and empty products are understood to equal 1.

Remark. The theory of orthogonal polynomials guarantees that in our setting (namely due to the condition \(\omega _n\ne 0\) in the orthogonality) the Hankel determinant of moments in the denominator on the left-hand side of (1.1) is non-zero.
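Theorem 1 is easy to test in exact rational arithmetic. The following Python sketch assumes that (1.1) reads \(\det_{0\le i,j\le n-1}\big(\mu^{i+j}\prod_{\ell=1}^{d}(x_\ell+\mu)\big)/\det_{0\le i,j\le n-1}(\mu_{i+j})=(-1)^{nd}\det_{1\le i,j\le d}\big(p_{n+i-1}(-x_j)\big)/\prod_{1\le i<j\le d}(x_i-x_j)\), the form consistent with the constant \(C=(-1)^{nd}\det_{0\le i,j\le n-1}(\mu_{i+j})\) obtained in Sects. 4 and 5. To produce a concrete orthogonal family it uses a hypothetical parametrisation of the three-term recurrence, \(p_{k+1}(x)=(x-b_k)p_k(x)-\lambda_k p_{k-1}(x)\) with \(\lambda_k\ne 0\) and \(\mu_0=1\); the names `b`, `lam` and all function names are ours, not the paper's. The moments are read off as the \(p_0\)-coefficient of \(x^m\) expanded in the basis \((p_k)\).

```python
from fractions import Fraction
from itertools import combinations


def det(m):
    """Exact determinant by Gaussian elimination; the 0x0 determinant is 1."""
    a = [[Fraction(v) for v in row] for row in m]
    result = Fraction(1)
    for c in range(len(a)):
        piv = next((r for r in range(c, len(a)) if a[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            result = -result  # a row swap flips the sign
        result *= a[c][c]
        for r in range(c + 1, len(a)):
            f = a[r][c] / a[c][c]
            for k in range(c, len(a)):
                a[r][k] -= f * a[c][k]
    return result


def eval_p(k, x, b, lam):
    """p_k(x) from p_{m+1} = (x - b[m]) p_m - lam[m] p_{m-1}, p_{-1} = 0, p_0 = 1."""
    prev, cur = Fraction(0), Fraction(1)
    for m in range(k):
        prev, cur = cur, (x - b[m]) * cur - lam[m] * prev
    return cur


def moments(count, b, lam):
    """mu_0, ..., mu_{count-1} with mu_0 = 1: the p_0-coefficient of x^m."""
    coeffs, mus = [Fraction(1)], []
    for _ in range(count):
        mus.append(coeffs[0])
        new = [Fraction(0)] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            # x p_k = p_{k+1} + b_k p_k + lam_k p_{k-1}
            new[k + 1] += c
            new[k] += b[k] * c
            if k >= 1:
                new[k - 1] += lam[k] * c
        coeffs = new
    return mus


def lhs(n, xs, mus):
    """Left side of (1.1): prod_l (x_l + mu) is expanded umbrally in mu."""
    c = [Fraction(1)]  # coefficients of prod_l (mu + x_l), lowest power first
    for x in xs:
        c = [x * u + v for u, v in zip(c + [0], [0] + c)]
    num = det([[sum(ck * mus[i + j + k] for k, ck in enumerate(c))
                for j in range(n)] for i in range(n)])
    den = det([[mus[i + j] for j in range(n)] for i in range(n)])
    return num / den


def rhs(n, xs, b, lam):
    """Right side of (1.1); row i (0-based) holds p_{n+i}(-x_j)."""
    d = len(xs)
    num = det([[eval_p(n + i, -x, b, lam) for x in xs] for i in range(d)])
    vdm = Fraction(1)
    for i, j in combinations(range(d), 2):
        vdm *= xs[i] - xs[j]  # prod_{i<j} (x_i - x_j)
    return (-1) ** (n * d) * num / vdm
```

With \(b_k=1\) and \(\lambda_k=1\) the function `moments` returns the Motzkin numbers 1, 1, 2, 4, 9, 21, ..., and with \(b_k=0\), \(\lambda_k=1\) the aerated Catalan numbers 1, 0, 1, 0, 2, 0, 5, ..., two of the sequences mentioned in the abstract.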

We may rewrite (1.1) using quantities that appear in the three-term recurrence

with initial values \(p_{-1} (x)= 0\) and \(p_0 (x)=1\), that is satisfied by the polynomials according to Favard’s theorem (see e.g. [8, Theorems 11–13]) for some sequences \((s_n)_{n\ge 0}\) and \((t_n)_{n\ge 0}\) of elements of K with \(t_n\ne 0\) for all n. Namely, using the well-known fact (see e.g. [15, Ch. IV, Cor. 6])

the formula (1.1) becomes

This form reveals that we may regard the formula as a polynomial formula in the \(x_i\)’s and the \(s_i\)’s and \(t_i\)’s. Indeed, the determinant on the right-hand side, being a skew-symmetric polynomial in the \(x_i\)’s, is divisible by the Vandermonde product in the denominator.
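The well-known fact quoted from [15, Ch. IV, Cor. 6] expresses the Hankel determinants of the moments as a product of recurrence coefficients. Since index conventions for \(t_n\) vary, the sketch below works with a hypothetical parametrisation \(p_{k+1}(x)=(x-b_k)p_k(x)-\lambda_k p_{k-1}(x)\) and \(\mu_0=1\) (names ours, possibly shifted in index against the recurrence above); in these terms the fact reads \(\det_{0\le i,j\le n-1}(\mu_{i+j})=\lambda_1^{n-1}\lambda_2^{n-2}\cdots\lambda_{n-1}\), and the code checks it exactly.

```python
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination; the 0x0 determinant is 1."""
    a = [[Fraction(v) for v in row] for row in m]
    result = Fraction(1)
    for c in range(len(a)):
        piv = next((r for r in range(c, len(a)) if a[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, len(a)):
            f = a[r][c] / a[c][c]
            for k in range(c, len(a)):
                a[r][k] -= f * a[c][k]
    return result


def moments(count, b, lam):
    """mu_0..mu_{count-1} (mu_0 = 1) via x p_k = p_{k+1} + b_k p_k + lam_k p_{k-1}."""
    coeffs, mus = [Fraction(1)], []
    for _ in range(count):
        mus.append(coeffs[0])  # L(p_0) = 1 and L(p_k) = 0 for k >= 1
        new = [Fraction(0)] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            new[k + 1] += c
            new[k] += b[k] * c
            if k >= 1:
                new[k - 1] += lam[k] * c
        coeffs = new
    return mus


def hankel(n, mus):
    return det([[mus[i + j] for j in range(n)] for i in range(n)])


def product_formula(n, lam):
    """lam_1^(n-1) * lam_2^(n-2) * ... * lam_(n-1); equals 1 for n <= 1."""
    out = Fraction(1)
    for k in range(1, n):
        out *= Fraction(lam[k]) ** (n - k)
    return out
```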

In the next section, I will present the history of Theorem 1, from a strongly biased (personal) view. As I explain there, I discovered the formula on my own while thinking about Conjecture 8 in [4], and also came up with a proof, presented here in Sect. 3. Later I found the earlier mentioned occurrences of the formula in [1, 6, 11] and [2]. Lascoux’s argument (the one in [1] is essentially the same), which follows the classical literature of orthogonal polynomials (but is presented in [11] in his very personal language), is presented in Sect. 4 (in “standard” language). Section 5 brings the completion of Elouafi’s vanishing argument. The random matrix-inspired proof due to Brézin and Hikami is the subject of Sect. 6.

Sections 7 and 8 address issues that come from my initial motivation (and Elouafi’s) that in the end led to the discovery of Theorem 1: Hankel determinants of linear combinations of combinatorial sequences. Section 7 addresses the case in which in (1.1) the \(x_i\)’s are all equal to each other. In that case, it is the limit formula in Proposition 5 in Sect. 5 that has to be applied. We show in Sect. 7 that Elouafi’s recurrence approach for that case can be replaced by an approach yielding completely explicit expressions. Finally, in Sect. 8 we show that the theory of linear recurrent sequences with constant coefficients implies that, in the case where the coefficients \(s_i\) and \(t_i\) in the three-term recurrence (1.2) are constant for large i, the scaled Hankel determinants of linear combinations of moments on the left-hand side of (1.1) satisfy a linear recurrence with constant coefficients of order \(2^d\), plus some more specific assertions about the coefficients in this linear recurrence, see Corollary 9. This proves conjectures from [5], vastly generalising them.

2 History of Theorem 1—a (very) personal view

I discovered Theorem 1 on my own, in a very roundabout way. It started with an email from Johann Cigler in which he asked me for a proof of a special case of

We quickly realised that we can actually prove the above identity, which became the first main result in [4] (see Theorem 1 there; the reader should notice that the left-hand side of (2.1) agrees with the left-hand side of (1.1), while the right-hand sides do not agree; in retrospect, the equality of the right-hand sides is equivalent to the Christoffel–Darboux identity, cf. [14, Theorem 3.2.2]). We then proceeded to derive a (more complicated) triple-sum expression for the “next” case (see [4, Theorem 5]),

In the special case where the \(s_i\)’s and the \(t_i\)’s are constant for \(i\ge 1\), the orthogonal polynomial \(p_n(x)\) can be expressed in terms of a linear combination of Chebyshev polynomials (see [4, Eq. (4.2)]). This allowed us to evaluate the sum on the right-hand side of (2.1) and the afore-mentioned triple sum. We recognised a pattern, and this led us to conjecture a precise formula for

(see [4, Conj. 8]), again expressed in terms of Chebyshev polynomials. Subsequently, I realised that this conjectural expression could be simplified (by means of [4, Eq. (4.2)]). The result was the right-hand side of (1.1), in the special case where the \(s_i\)’s and \(t_i\)’s are constant for \(i\ge 1\). The obvious question at that point then was: does Formula (1.1) also hold if the \(s_i\)’s and \(t_i\)’s are generic? Computer experiments said “yes”.

At this point I told myself: this identity, being a completely general identity of fundamental nature connecting orthogonal polynomials and their moments, must be known. Naturally, I consulted standard books on orthogonal polynomials, such as Szegő’s classic [14], but I could not find it. After a while I then started to think about a proof. I figured out the proof of (1.1) that can be found in Sect. 3.

Still, I had the strong feeling that this identity must be known. So, if I could not find it in classical sources, what about “non-classical” sources? I remembered that Alain Lascoux had devoted one chapter of his book [11] on symmetric functions to orthogonal polynomials, revealing there that orthogonal polynomials can be seen as Schur functions of square shapes, and demonstrating that formal identities for orthogonal polynomials can be conveniently established by adopting this point of view. So I consulted [11], and I quickly realised that Proposition 8.4.1 in the book addresses (1.1); it took some more work to see what exactly was contained in that proposition (Footnote 3).

Lascoux attributes his proposition to Christoffel, without any specific reference. With this information in hand, I returned to Szegő’s book [14] and made a text search for “Christoffel”. I finally found the relevant theorem: Theorem 2.5. I believe that the reader, after looking at that theorem, will forgive me for not recognizing its relevance for our Theorem 1 on my first attempt (Footnote 4). In particular, [14, Theorem 2.5] does not say anything about the proportionality factor between the two sides in (1.1) (as opposed to Lascoux, even if the expression he gives is not correct; he does, however, provide an argument, see Footnote 5). Szegő states that [14, Theorem 2.5] is due to Christoffel [3], but only in the special case of Legendre polynomials (indeed, Theorem 1 in that special case appears at the end of [3]), a fact that also seems to have escaped many researchers in the theory of orthogonal polynomials.

Eventually, I found that Theorem 1 appears, correctly stated, as Theorem 1 in the relatively recent article [6] by Elouafi. However, the proof given there is incomplete (Footnote 6). I present a completion of this proof in Sect. 5.

With all this knowledge, I consulted Mourad Ismail and asked him whether he knew the formula or could refer me to a source in the literature. He immediately pointed out that the determinant on the right-hand side of (1.1) features in “Christoffel’s theorem” about the orthogonal polynomials with respect to the measure defined by the density \(\prod _{i=1} ^{d-1}(x+x_i)\,\mathrm{{d}}\mu (x)\) (with \(\mathrm{{d}}\mu (x)\) the density of the original orthogonality measure), that is, in [14, Theorem 2.5] or [7, Theorem 2.7.1]. However, the conclusion of a longer discussion was that he had not seen this formula before.

Finally, when I posted [10] (containing the extension of (1.1) to a rational deformation of the density \(\mathrm{{d}}\mu (x)\)) on the ar\(\chi \)iv, Arno Kuijlaars brought the article [1] to my attention. Indeed, Equation (2.6) in [1] is equivalent to (1.1), and it is pointed out there that this result had been obtained earlier by Brézin and Hikami in [2, Eq. (14)]. It requires some translational work to see this, though; see Sect. 2.3.

In the next subsection, I provide a translation, into “standard English”, of Lascoux’s rendering of Theorem 1. Then, in Sect. 2.2, I present “Christoffel’s theorem” and explain its connection to Theorem 1. Finally, in Sect. 2.3 I translate the random matrix result [2, Eq. (14)] into the language that we use here to see that it is indeed equivalent to (1.1).

2.1 Lascoux’s Proposition 8.4.1 in [11]

This proposition says that, given alphabets \({\mathbb {A}}\) and \({\mathbb {B}}=\{b_1,b_2,\dots ,b_{k+1}\}\) (Footnote 7),

is proportional, up to a factor independent of \({\mathbb {B}}\), to

The proportionality factor is given in the proof of [11, Prop. 8.4.1] (except for the overall sign, see Footnote 8), but it has not been correctly worked out.

In order to understand the connection with Theorem 1, let me translate Lascoux’s language to the notation that I use here in this paper. First of all, as mentioned in Footnote 7, the symbol \(\Delta ({\mathbb {B}})\) denotes the Vandermonde product (see [11, bottom of p. 11])

Next, \(S_{r^s}({\mathbb {C}})\) is the Schur function of rectangular shape \(r^s=(r,r,\dots ,r)\) (with s occurrences of r) in the alphabet \({\mathbb {C}}\) (not to be confused with the complex numbers!), defined by (see [11, Eq. (1.4.3)])

where \(S^a({\mathbb {C}})\) is the complete homogeneous symmetric function of degree a in the alphabet \({\mathbb {C}}\). Thus,

Here (this is implicit on page 6 of [11]),

where \(\Lambda ^{a}({\mathbb {B}})\) is the elementary symmetric function of degree a in \(b_1,b_2,\dots ,b_{k+1}\). Furthermore (cf. [11, second display on p. 115]), we have \(S^m({\mathbb {A}})=\mu _m\), with \(\mu _m\) being “our” m-th moment of the linear functional L. Thus, we recognise that \(S_{(n+k)^n}({\mathbb {A}}-{\mathbb {B}})\) is, up to the sign \((-1)^{\left( {\begin{array}{c}n\\ 2\end{array}}\right) }\), in our notation the Hankel determinant

on the left-hand side of (1.1) (with \(x_\ell \) replaced by \(-b_\ell \) and d replaced by \(k+1\)).

On the other hand, Lascoux’s polynomials \(P_n(x)\) are orthonormal with respect to the linear functional L with moments \(\mu _m\), \(m=0,1,\dots \), and are given by (cf. [11, Theorem 8.1.1])

while “our” monic orthogonal polynomials \(p_n(x)\) are given by (cf. [11, Theorem 8.1.1 and second display on p. 116])

Thus, we see that Lascoux’s determinant \(\det _{1\le i,j\le k+1}\big (P_{n-1+i}(b_j)\big )\) is, up to some overall factor, “our” determinant \(\det _{1\le i,j\le k+1}\big (p_{n-1+i}(b_j)\big )\) on the right-hand side of (1.1) (with \(x_\ell \) replaced by \(-b_\ell \) and d replaced by \(k+1\)).

It should now be clear to the reader that Lascoux’s Proposition 8.4.1 in [11] is equivalent to Theorem 1, except that he did not bother to figure out the correct sign, and that he did not get the proportionality factor right [both of which being very understandable given the complexity of the task ...; in fact, in order to not risk similar failure, I do not attempt to present the correct proportionality factor or sign in Lascoux’s notation—in “standard” notation, the correct identity is given in (1.1)].

2.2 “Christoffel’s Theorem”

This theorem (cf. [14, Theorem 2.5] or [7, Theorem 2.7.1]) says: in the setting of Section 1, the polynomials (in x)

form a sequence of orthogonal polynomials with respect to the linear functional with moments

The reader may now understand why I did not notice that Theorem 1 is hidden in the above assertion on my first attempt to find it in Szegő’s book [14]. I did of course see that the determinant in (2.2) is our determinant on the right-hand side of (1.1) (with \(x_d=x\)), and that the moments in (2.3) are “almost” the entries in the Hankel determinant on the left-hand side of (1.1). There are, however, two obstacles to overcome in order to “extract” (1.1) from the above assertion: first, one has to recall a certain determinant formula for the orthogonal polynomials with respect to a given moment sequence, namely Lemma 4 in Sect. 4. Applied to the moments in (2.3), it indeed produces the Hankel determinant on the left-hand side of (1.1). The uniqueness of orthogonal polynomials up to scaling then implies that the determinants on the left-hand side of (1.1) (with \(x_d=x\)) and the expression (2.2) agree up to a multiplicative constant (meaning: a factor independent of \(x=x_d\)). So, second, this constant has to be computed. A researcher in the theory of orthogonal polynomials does not really care about the normalisation of the orthogonal polynomials, and this seems to be the reason why apparently nobody in that field has carried out this computation, although it is not really difficult; see Sects. 4 and 5 for two slightly different arguments.

2.3 Expectation of a product of characteristic polynomials of random Hermitian matrices

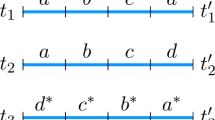

Let \(\mathrm{{d}}\mu (u)\) be the density of some positive measure with infinite support all of whose moments exist. Equation (14) in [2] (cf. also [1, Eq. (2.6)]) reads

(I have changed notation so that it is in line with our notation.) Here, the left-hand side is an expectation for products of characteristic polynomials of random Hermitian matrices. However, this fact does not need to concern us. According to [2, Eq. (5)] (see also [1, Eq. (1.3)]) it can be expressed as

where

Now, by Heine’s formula (cf. [14, Eq. (2.2.11)], [7, Cor. 2.1.3], or Lemma 8), we have

and

Since

(again using umbral notation), the equivalence of (2.4) and (1.1) now becomes obvious.

3 First proof of Theorem 1—condensation

In this section, we present the author’s proof of Theorem 1, which uses the method of condensation (frequently referred to as “Dodgson condensation”). This method provides inductive proofs that are based on a determinant identity due to Jacobi (see Proposition 2 below).

For convenience, we change notation slightly. Instead of the polynomials \(p_n(x)\), let us consider the polynomials \(f_n(x)\) defined by

with \(f_0(x) = 1\) and \(f_{-1}(x)=0\). (It should be noted that the only difference between the recurrences (1.2) and (3.1) is the sign in front of \(s_{n-1}\).) Using these polynomials, the formula (1.1) can be rewritten as

(That is, we got rid of the signs on the right-hand side of (1.1).) Our proof of (3.2) will be based on the method of condensation (see [8, Sect. 2.3]). The “backbone” of this method is the following determinant identity due to Jacobi.

Proposition 2

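Let A be an \(N\times N\) matrix. Denote the submatrix of A in which rows \(i_1,i_2,\dots ,i_k\) and columns \(j_1,j_2,\dots ,j_k\) are omitted by \(A_{i_1,i_2,\dots ,i_k}^{j_1,j_2,\dots ,j_k}\). Then we have

\[
\det A\cdot \det A_{i_1,i_2}^{j_1,j_2}=\det A_{i_1}^{j_1}\cdot \det A_{i_2}^{j_2}-\det A_{i_1}^{j_2}\cdot \det A_{i_2}^{j_1}\tag{3.3}
\]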

for all integers \(i_1,i_2,j_1,j_2\) with \(1\le i_1<i_2\le N\) and \(1\le j_1<j_2\le N\).
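Jacobi's identity, \(\det A\cdot\det A_{i_1,i_2}^{j_1,j_2}=\det A_{i_1}^{j_1}\det A_{i_2}^{j_2}-\det A_{i_1}^{j_2}\det A_{i_2}^{j_1}\) (subscripts: deleted rows, superscripts: deleted columns), can be checked mechanically on random integer matrices. The sketch below is only an illustration; the function names are ours, and rows and columns are indexed from 0 instead of 1, which does not matter since only the relative orders \(i_1<i_2\) and \(j_1<j_2\) enter.

```python
import random
from fractions import Fraction
from itertools import combinations


def det(m):
    """Exact determinant by Gaussian elimination; the 0x0 determinant is 1."""
    a = [[Fraction(v) for v in row] for row in m]
    result = Fraction(1)
    for c in range(len(a)):
        piv = next((r for r in range(c, len(a)) if a[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, len(a)):
            f = a[r][c] / a[c][c]
            for k in range(c, len(a)):
                a[r][k] -= f * a[c][k]
    return result


def minor(a, rows, cols):
    """Submatrix of a with the given rows and columns deleted."""
    return [[v for j, v in enumerate(row) if j not in cols]
            for i, row in enumerate(a) if i not in rows]


def jacobi_holds(a, i1, i2, j1, j2):
    """Compare both sides of Jacobi's identity for one choice of i1<i2, j1<j2."""
    left = det(a) * det(minor(a, {i1, i2}, {j1, j2}))
    right = (det(minor(a, {i1}, {j1})) * det(minor(a, {i2}, {j2}))
             - det(minor(a, {i1}, {j2})) * det(minor(a, {i2}, {j1})))
    return left == right
```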

The second ingredient of the proof of (3.2) will be the Hankel determinant identity below, which, as its proof will reveal, is actually a consequence of the condensation formula in (3.3).

Lemma 3

Let \((c_n)_{n\ge 0}\) be a given sequence, and \(\alpha \) and \(\beta \) be variables. Then, for all positive integers n, we have

Proof

By using multilinearity in the rows, it is easy to see (cf. also [9, Lemma 4]) that

where \(\chi ({\mathcal {S}})=1\) if \({\mathcal {S}}\) is true and \(\chi ({\mathcal {S}})=0\) otherwise. If we apply this identity to the first determinant on the left-hand side of (3.4), then we obtain

Now we use multilinearity in rows \(0,1,\dots ,r-1\) and in rows \(r,r+1,\dots ,n-1\) separately. This leads to

We substitute this as well as (3.5) (wherever it can be applied) in (3.4). The factor \(\beta -\alpha \) cancels. Subsequently, we compare coefficients of \(\alpha ^s\beta ^{t+1}\), respectively of \(\alpha ^{t+1}\beta ^s\), for \(0\le s\le t\le n\). Thus we see that we need to show

and this would moreover be sufficient for the proof of (3.4). As it turns out, the choice of

and \(i_1=s\), \(i_2=t+1\), \(j_1=n\), \(j_2=n+1\) in Proposition 2 yields exactly (3.6). \(\square \)

We are now ready for the proof of Theorem 1, which, as we have seen, is equivalent to (3.2).

Proof of (3.2)

We prove (3.2), in the form

by induction on d. For \(d=0\), the formula is trivially true. For \(d=1\), the formula has been proven by Mu, Wang and Yeh in [12, Theorem 1.3] in a different but equivalent form (see also [4, Eq. (3.2)]).

Let \({\text {LHS}}_{d,n}(x_1,\dots ,x_d)\) denote the left-hand side of (3.7). For the induction step, we observe that, according to (3.3) with \(N=d\), \(A=\big (f_{n+i-1}(x_j)\big )_{1\le i,j\le d}\), \(i_1=j_1=1\) and \(i_2=j_2=d\), we have

This can be seen as a recurrence formula for \({\text {LHS}}_{d,n}(x_1,\dots ,x_d)\), as one can use it to express \({\text {LHS}}_{d,n}(x_1,\dots ,x_d)\) in terms of expressions of the form \({\text {LHS}}_{e,m}(x_a,\dots ,x_b)\) with e smaller than d. Hence, for the proof of (3.7) it suffices to verify that the right-hand side of (3.7) satisfies the same recurrence. Consequently, we substitute this right-hand side in (3.8). After cancellation of factors that are common to both sides, we arrive at

This is the special case of Lemma 3 where \(c_{i+j}=\mu ^{i+j}\prod _{\ell =2} ^{d-1}(x_\ell +\mu )\), \(\alpha =x_1\) and \(\beta =x_d\). \(\square \)

4 Second proof of Theorem 1—theory of orthogonal polynomials

Here we describe a proof of Theorem 1 that is based on facts from the theory of orthogonal polynomials. We largely follow Lascoux’s arguments in the proof of Proposition 8.4.1 in [11]. They show that, using the uniqueness up to scaling of orthogonal polynomials with respect to a given linear functional, the right-hand side and the left-hand side in (1.1) agree up to a multiplicative constant. For the determination of this constant we provide a simpler argument than the one given in [11].

We prove (1.1) in the form

It should be recalled that, with L denoting the functional of orthogonality for the polynomials \(\big (p_n(x))_{n\ge 0}\), we have \(L(x^n)=\mu _n\), where we still use the umbral notation \(\mu ^n\equiv \mu _n\).

We start with a classical fact from the theory of orthogonal polynomials (cf. [14, Eq. (2.2.9)] or [7, Eq. (2.1.10)]).

Lemma 4

Let M be a linear functional on polynomials in x with moments \(\nu _n\), \(n=0,1,\dots \), such that all Hankel determinants \(\det _{0\le i,j\le n}(\nu _{i+j})\), \(n=0,1,\dots \), are non-zero. Then the determinants

are a sequence of orthogonal polynomials with respect to M.

Proof

We have

It is straightforward to check that the determinant in the last line is orthogonal to \(1,x,x^2,\dots ,x^{n-1}\) with respect to M. Moreover, the coefficient of \(x^n\) is \(\pm \det _{0\le i,j\le n-1}(\nu _{i+j})\), which by assumption is non-zero, so that the determinant in the assertion of the lemma is a polynomial of degree n, as desired. \(\square \)

Remark. The determinant in the last line of (4.2) represents another classical determinantal formula for orthogonal polynomials expressed in terms of the moments of the corresponding linear functional of orthogonality, see [14, Eq. (2.2.6)] or [7, Eq. (2.1.11)].
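Lemma 4 can be illustrated numerically. Since its display is not reproduced above, the sketch assumes that the determinants in the lemma are the classical ones, \(D_n(x)=\det_{0\le i,j\le n-1}(x\nu_{i+j}-\nu_{i+j+1})\) (up to the sign \((-1)^n\), which does not affect orthogonality). As a test functional it takes the moments \(\nu_s=1/(s+1)\) of Lebesgue measure on [0,1] (our choice), recovers the coefficients of \(D_n\) by Lagrange interpolation at \(x=0,1,\dots,n\), and checks that \(M(x^sD_n(x))=0\) for \(s<n\), while the coefficient of \(x^n\) equals \(\det_{0\le i,j\le n-1}(\nu_{i+j})\) with this sign convention.

```python
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination; the 0x0 determinant is 1."""
    a = [[Fraction(v) for v in row] for row in m]
    result = Fraction(1)
    for c in range(len(a)):
        piv = next((r for r in range(c, len(a)) if a[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, len(a)):
            f = a[r][c] / a[c][c]
            for k in range(c, len(a)):
                a[r][k] -= f * a[c][k]
    return result


def interpolate(ts, vs):
    """Coefficients (lowest first) of the polynomial of degree < len(ts) through (ts, vs)."""
    size = len(ts)
    coeffs = [Fraction(0)] * size
    for i in range(size):
        basis, denom = [Fraction(1)], Fraction(1)
        for j in range(size):
            if j != i:
                basis = [Fraction(0)] + basis  # multiply by x ...
                for k in range(len(basis) - 1):
                    basis[k] -= ts[j] * basis[k + 1]  # ... then subtract ts[j] * old
                denom *= ts[i] - ts[j]
        for k in range(size):
            coeffs[k] += vs[i] * basis[k] / denom
    return coeffs


def lemma4_poly(n, nu):
    """Coefficients of D_n(x) = det(x nu_{i+j} - nu_{i+j+1}), degree n."""
    ts = [Fraction(t) for t in range(n + 1)]
    vs = [det([[t * nu[i + j] - nu[i + j + 1] for j in range(n)] for i in range(n)])
          for t in ts]
    return interpolate(ts, vs)


def apply_functional(poly, nu, shift=0):
    """M(x^shift * poly(x)) for the functional M with moments nu."""
    return sum(c * nu[k + shift] for k, c in enumerate(poly))
```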

Proof of (4.1)

Using Lemma 4 with \(\nu _n=\mu ^{n}\prod _{\ell =1} ^{d-1}(\mu -x_\ell )\), we see that the determinants in the numerator of the left-hand side of (4.1),

seen as polynomials in \(x_d\), are a sequence of orthogonal polynomials for the linear functional with moments

Clearly, in terms of the functional L (now acting on polynomials in \(x_d\)) of orthogonality for the polynomials \(\big (p_n(x_d)\big )_{n\ge 0}\), this linear functional with moments (4.3) can be expressed as

We claim that also the right-hand side of (4.1) gives a sequence of orthogonal polynomials (in \(x_d\)) with respect to the linear functional (4.4). The first (and easy) observation is that the right-hand side of (4.1) has indeed degree n in \(x_d\).

Let us denote the right-hand side of (4.1) by \(q_n(x_d)\). When we apply the functional (4.4) to \(x_d^sq_n(x_d)\), for \(0\le s\le n-1\), then, up to factors which are independent of \(x_d\), we obtain

By expanding the determinant with respect to the last column, this becomes a linear combination of terms of the form \(L(x_d^sp_{n+i-1}(x_d))\), \(1\le i\le d\). Since \(n+i-1\ge n>s\), all of them vanish by orthogonality, proving our claim.

By symmetry, the same argument can also be made for any \(x_\ell \) with \(1\le \ell \le d-1\).

The fact that orthogonal polynomials with respect to a particular linear functional are unique up to multiplicative constants then implies that

where C is independent of the variables \(x_1,x_2,\dots ,x_d\). In order to compute C, we divide both sides by \(x_1^nx_2^n\cdots x_d^n\), and then compute the limits as \(x_d\rightarrow \infty \), \(\dots \), \(x_2\rightarrow \infty \), \(x_1\rightarrow \infty \), in this order. It is not difficult to see that in this manner the above equation reduces to

where A is a lower triangular matrix with ones on the diagonal. Hence, we get \(C=(-1)^{nd}\det _{0\le i,j\le n-1}(\mu _{i+j})\), as desired. \(\square \)

5 Third proof of Theorem 1—vanishing of polynomials

The purpose of this section is to present a completed version of Elouafi’s proof of Theorem 1 in [6]. It is based on a vanishing argument: it is shown that the left-hand side of (1.1) vanishes if and only if the right-hand side does. Since both sides have the same leading monomial as polynomials in the \(x_i\)’s, it follows that they must be the same up to a multiplicative constant. This constant is then determined in the last step.

To begin with, we need some preparations. Let us write

for the expression on the right-hand side of (1.1), forgetting the sign. Since the numerator is skew-symmetric in the \(x_i\)’s, it is divisible by the Vandermonde product \(\prod _{1\le i<j\le d} ^{}(x_i-x_j)\) in the denominator, so that \(R(x_1,x_2,\dots ,x_d)\) is actually a (symmetric) polynomial in the \(x_i\)’s. Thus, while in its definition it seems problematic to substitute the same value for two different \(x_i\)’s in \(R(x_1,x_2,\dots ,x_d)\), this is actually not a problem. Nevertheless, it is useful to have an explicit form available for such a case. This is afforded by the proposition below.

Proposition 5

Let \(y_1,y_2,\dots ,y_e\) be variables and \(m_1,m_2,\dots ,m_e\) be non-negative integers with \(m_1+m_2+\dots +m_e=d\). Then we have

where \(y_i\) is repeated \(m_i\) times in the argument of R on the left-hand side. The matrix \(M_{m_1,m_2,\dots ,m_e}(y_1,y_2,\dots ,y_e)\) is defined by

where \(M_m(y)\) is the \(d\times m\) matrix

with \(p^{(j)}_n(y)\) denoting the j-th derivative of \(p_n(y)\) with respect to y.

Proof

We have to compute the limit

Since we know that \(R(x_1,x_2,\dots ,x_d)\) is in fact a polynomial in the \(x_i\)’s, we have great flexibility in how to compute this limit. We choose to do it as follows: we put \(x_i=y_1+ih\) for \(i=1,2,\dots ,m_1\), \(x_i=y_2+ih\) for \(i=m_1+1,m_1+2,\dots ,m_1+m_2\), etc. In the end we let h tend to zero.

We describe how this works for the first group of variables; for the other groups the procedure is completely analogous. In the matrix appearing in the numerator of the definition of \(R(x_1,x_2,\dots ,x_d)\), we replace column j by

Clearly, this modification of the matrix can be achieved by elementary column operations, so that the determinant is not changed. Thus, we obtain

where

(Here, the terms describe the columns of the matrix \(N_1\).) We now perform the earlier described assignments for \(x_1,x_2,\dots ,x_{m_1}\). Under these assignments, we have

and

Therefore we get

where

To finish the argument, one has to proceed analogously for the remaining groups of \(x_i\)’s and finally put the arising factorials into the columns of the determinant. \(\square \)

The following auxiliary result is [6, Lemma 3].

Lemma 6

Let \(q(x)= \prod _{i=1} ^{d}(x+x_i)\). Furthermore, as before, we write L for the linear functional with respect to which the sequence \(\big (p_n(x)\big )_{n\ge 0}\) is orthogonal. Then

Proof

We first rewrite the determinant on the left-hand side,

Setting \(p_m(x)=\sum _{a=0}^mc_{ma}x^a\), for some coefficients \(c_{ma}\), we may rewrite the right-hand side of (5.2) as

Consequently, letting \(c_{ma}=0\) whenever \(m<a\), we have

where

Since A and C are triangular matrices with ones on the main diagonal (the latter follows from our assumption that the polynomials \(p_m(x)\) are monic), we conclude that \(\det A=\det C=1\). The combination of (5.3) and (5.4) then establishes the desired assertion. \(\square \)
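Lemma 6 can likewise be tested in exact arithmetic. The sketch below assumes that the lemma asserts \(\det_{0\le i,j\le n-1}\big(L(x^{i+j}q(x))\big)=\det_{0\le i,j\le n-1}\big(L(q(x)p_i(x)p_j(x))\big)\), which is what the proof above establishes. The orthogonal family comes from a hypothetical parametrisation \(p_{k+1}(x)=(x-b_k)p_k(x)-\lambda_k p_{k-1}(x)\) with \(\mu_0=1\) (names ours). As the proof shows, only the monicity of the \(p_m\) is actually used, so the check does not really exercise orthogonality.

```python
from fractions import Fraction


def det(m):
    """Exact determinant by Gaussian elimination; the 0x0 determinant is 1."""
    a = [[Fraction(v) for v in row] for row in m]
    result = Fraction(1)
    for c in range(len(a)):
        piv = next((r for r in range(c, len(a)) if a[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, len(a)):
            f = a[r][c] / a[c][c]
            for k in range(c, len(a)):
                a[r][k] -= f * a[c][k]
    return result


def polymul(p, q):
    """Product of coefficient lists (lowest degree first)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, u in enumerate(p):
        for j, v in enumerate(q):
            out[i + j] += u * v
    return out


def monic_ops(count, b, lam):
    """Coefficient lists of p_0, ..., p_{count-1}."""
    ps, prev = [[Fraction(1)]], [Fraction(0)]  # p_0 = 1, p_{-1} = 0
    for k in range(count - 1):
        cur = ps[-1]
        nxt = polymul([-Fraction(b[k]), Fraction(1)], cur)  # (x - b_k) p_k
        for i, c in enumerate(prev):
            nxt[i] -= lam[k] * c
        prev = cur
        ps.append(nxt)
    return ps


def moments(count, b, lam):
    """mu_0..mu_{count-1} (mu_0 = 1) via x p_k = p_{k+1} + b_k p_k + lam_k p_{k-1}."""
    coeffs, mus = [Fraction(1)], []
    for _ in range(count):
        mus.append(coeffs[0])
        new = [Fraction(0)] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            new[k + 1] += c
            new[k] += b[k] * c
            if k >= 1:
                new[k - 1] += lam[k] * c
        coeffs = new
    return mus


def lemma6_sides(n, xs, b, lam):
    """Both determinants of Lemma 6 for q(x) = prod_l (x + x_l)."""
    mus = moments(2 * n + len(xs) + 1, b, lam)
    functional = lambda poly: sum(c * mus[k] for k, c in enumerate(poly))
    ps = monic_ops(n, b, lam)
    q = [Fraction(1)]
    for x in xs:
        q = polymul(q, [Fraction(x), Fraction(1)])  # multiply by (x + x_l)
    left = det([[functional([0] * (i + j) + q) for j in range(n)] for i in range(n)])
    right = det([[functional(polymul(q, polymul(ps[i], ps[j]))) for j in range(n)]
                 for i in range(n)])
    return left, right
```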

The following lemma is [6, Lemma 4], which appears there with an incomplete proof (cf. Footnote 6).

Lemma 7

As polynomial functions in the variables \(x_1,x_2,\dots ,x_d\), the expression

vanishes at a point \((x_1,x_2,\dots ,x_d)\in {{\widehat{K}}}^d\), where \({{\widehat{K}}}\) is an extension field of the ground field K, if and only if the determinant

vanishes.

Proof

Let \(R(x_1,x_2,\dots ,x_d)=0\), for some choice of elements \(x_i\) in \({{\widehat{K}}}\). We assume that

with the \(y_i\)’s being pairwise distinct, and where \(y_i\) appears with multiplicity \(m_i\) among the \(x_j\)’s, \(i=1,2,\dots ,e\). Since both \(R(x_1,x_2,\dots ,x_d)\) and \(S(x_1,x_2,\dots ,x_d)\) are symmetric polynomials in the \(x_j\)’s, without loss of generality we may assume that \(x_1=\dots =x_{m_1}=y_1\), \(x_{m_1+1}=\dots =x_{m_1+m_2}=y_2\), ..., \(x_{m_1+\dots +m_{e-1}+1}=\dots =x_{d}=y_e\). Thus, in view of Proposition 5, the vanishing of

where the \(y_i\)’s are pairwise distinct and \(y_i\) is repeated \(m_i\) times, is equivalent to the rows of the matrix \(M_{m_1,m_2,\dots ,m_e}(y_1,y_2,\dots ,y_e)\) being linearly dependent. In other words, there exist constants \(c_i\in {{\widehat{K}}}\), \(i=1,2,\dots ,d\), not all of them zero, such that

Define \(g(x):=\sum _{i=1}^{d}c_ip_{n+i-1}(x)\). Since the \(y_i\)’s are pairwise distinct, Identity (5.7) implies that

divides g(x) as a polynomial in x. Hence, there exists another polynomial h(x) such that

Now, by inspection, g(x) is a polynomial of degree at most \(n+d-1\), while q(x) is a polynomial of degree d. Hence, h(x) is a polynomial of degree at most \(n-1\), which therefore can be written as a linear combination

for some constants \(r_i\in {{\widehat{K}}}\), \(i=0,1,\dots ,n-1\). By its definition, g(x) is orthogonal to all \(p_j(x)\) with \(0\le j\le n-1\). In other words, we have

Equivalently, the rows of the matrix \(\big (L\big (q(x)p_i(x)p_j(x)\big )\big )_{0\le i,j\le n-1}\) are linearly dependent (the \(r_i\)’s are not all zero, since g(x), being a non-trivial linear combination of polynomials of distinct degrees, is non-zero), and consequently the determinant on the right-hand side of (5.2) vanishes, which in its turn implies that the determinant on the left-hand side of (5.2), which is equal to the determinant in (5.6), vanishes, as desired.

Conversely, let the determinant in (5.6) be equal to zero, for some choice of \(x_i\)’s in \({{\widehat{K}}}\). Again, without loss of generality we assume that the first \(m_1\) of the \(x_i\)’s are equal to \(y_1\), the next \(m_2\) of the \(x_i\)’s are equal to \(y_2\), ..., and the last \(m_e\) of the \(x_i\)’s are equal to \(y_e\), the \(y_j\)’s being pairwise distinct. These assumptions imply again that

Using the equality of Lemma 6, we see that the determinant on the right-hand side of (5.2) must vanish. Thus, the rows of the matrix on this right-hand side must be linearly dependent so that there exist constants \(r_i\in {{\widehat{K}}}\), \(i=0,1,\dots ,n-1\), not all of them zero, such that

Now consider the polynomial \(g(x)=\sum _{i=0}^{n-1}r_iq(x)p_i(x)\). This is a non-zero polynomial of degree at most \(n+d-1\) which, by the last identity, is orthogonal to \(p_j(x)\), for \(j=0,1,\dots ,n-1\). Hence there must exist constants \(c_i\in {{\widehat{K}}}\) such that

On the other hand, by the definition of g(x), we have

We conclude that

This means that the rows of the matrix \(M_{m_1,m_2,\dots ,m_e}(y_1,y_2,\dots ,y_e)\) are linearly dependent, so that, by Proposition 5, the polynomial \(R(x_1,x_2,\dots ,x_d)\) in (5.5) vanishes. \(\square \)

We can now complete the proof of Theorem 1.

Proof of Theorem 1

By Lemma 7 we know that the symmetric polynomials \(R(x_1,x_2,\dots ,x_d)\) and \(S(x_1,x_2,\dots ,x_d)\) vanish only jointly. If we are able to show that in addition both have the same highest degree term then they must be the same up to a multiplicative constant. Indeed, the highest degree term in \(R(x_1,x_2,\dots ,x_d)\) is obtained by selecting the highest degree term in each entry of the matrix in the numerator of the fraction on the right-hand side of (5.5). Explicitly, this highest degree term is

by the evaluation of the Vandermonde determinant.

On the other hand, the highest degree term in \(S(x_1,x_2,\dots ,x_d)\) is obtained by selecting the highest degree term in each entry of the matrix in the numerator of the fraction on the right-hand side of (5.6). Explicitly, this highest degree term is

Both observations combined, we see that

with \(C=(-1)^{nd}\det _{0\le i,j\le n-1}(\mu _{i+j})\). This finishes the proof of the theorem. \(\square \)

6 Fourth proof of Theorem 1—Heine’s formula and Vandermonde determinants

The subject of this section is the random matrix-inspired proof of Theorem 1 due to Brézin and Hikami [2]. Among all the proofs of Theorem 1 that I present in this paper, it is the only one that does not require knowing the formula beforehand. Rather, starting from the left-hand side, by the clever use of Heine’s formula (given in Lemma 8 below) and some obvious manipulations, one arrives almost effortlessly at the right-hand side. The meaning of the formula in the context of random matrices has been indicated in Sect. 2.3, and more specifically in (2.4), which shows that the right-hand side of (1.1) can be interpreted as an expectation of products of characteristic polynomials of random Hermitian matrices. The random matrix flavour of the calculations below is seen in the ubiquitous multivariate density

which is, up to scaling, the density function for the eigenvalues of random Hermitian matrices.

We prove (1.1) in the form

As indicated, we need Heine’s integral formula (cf. [14, Eq. (2.2.11)] or [7, Cor. 2.1.3]). Its proof is short enough that we provide it here for the sake of completeness. The integral that appears in the formula can equally well be understood in the analytic or in the formal sense.

Lemma 8

Let \(\mathrm{{d}}\nu (u)\) be a density with moments \(\nu _s=\int u^s\,\mathrm{{d}}\nu (u)\), \(s=0,1,\dots \). For all non-negative integers n, we have

Proof

We start with the left-hand side,

If in this expression we permute the \(u_i\)’s, then it remains invariant, except for a sign that is created by the determinant. Let \({\mathfrak {S}}_n\) denote the group of permutations of \(\{0,1,\dots ,n-1\}\). If we average the above multiple integral over all possible permutations of the \(u_i\)’s, then we obtain

In view of the evaluation of the Vandermonde determinant, up to a shift in the indices of the \(u_i\)’s, this is the right-hand side of (6.2). \(\square \)
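Heine's formula can be tried out on a discrete measure \(\mathrm{d}\nu=\sum_k w_k\,\delta_{a_k}\), for which the n-fold integral becomes a finite sum over n-tuples of atoms. The sketch below assumes that (6.2) has the standard form \(\det_{0\le i,j\le n-1}(\nu_{i+j})=\frac{1}{n!}\int\cdots\int\prod_{1\le i<j\le n}(u_j-u_i)^2\,\mathrm{d}\nu(u_1)\cdots\mathrm{d}\nu(u_n)\); the atoms, the weights and the function names are illustrative choices of ours.

```python
from fractions import Fraction
from itertools import product
from math import factorial

# Sketch with hypothetical helper names: Heine's formula for a discrete measure.


def det(rows):
    """Exact determinant by Gaussian elimination (1 for the empty matrix)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n, result = len(m), Fraction(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if m[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            m[c], m[piv] = m[piv], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= f * m[c][k]
    return result


def hankel_det(nu, n):
    """det_{0<=i,j<=n-1}(nu_{i+j})."""
    return det([[nu[i + j] for j in range(n)] for i in range(n)])


def heine_rhs(points, weights, n):
    """(1/n!) * n-fold integral of prod_{i<j} (u_j - u_i)^2 for sum_k w_k delta_{a_k}."""
    total = Fraction(0)
    for idx in product(range(len(points)), repeat=n):  # one atom per variable u_i
        term = Fraction(1)
        for k in idx:
            term *= weights[k]
        for a in range(n):
            for b in range(a + 1, n):
                term *= (points[idx[b]] - points[idx[a]]) ** 2
        total += term
    return total / factorial(n)


points = [Fraction(0), Fraction(1), Fraction(3), Fraction(-2)]
weights = [Fraction(1, 2), Fraction(1), Fraction(2), Fraction(1, 3)]
nu = [sum(w * a ** s for a, w in zip(points, weights)) for s in range(12)]
for n in range(6):
    print(n, hankel_det(nu, n) == heine_rhs(points, weights, n))
```

Each line should print `True`. With four atoms, both sides vanish for \(n\ge 5\): the moment matrix then has rank at most 4, and every 5-tuple of atoms repeats one, so the squared Vandermonde factor is 0.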

Proof of (6.1)

Let \(\mathrm{{d}}\mu (u)\) be a density with moments \(\mu _s=\int u^s\,\mathrm{{d}}\mu (u)\), \(s=0,1,\dots \). We apply Lemma 8 with

This yields

Let \(\big (p_m(y)\big )_{m\ge 0}\) be the sequence of monic orthogonal polynomials with respect to the linear functional with moments \(\mu _s=\int u^s\,\mathrm{{d}}\mu (u)\), \(s=0,1,\dots .\) It is easy to see that

We use this observation with \(N=n+d\), where the role of the \(y_i\)’s is taken by \(u_1,u_2,\dots ,u_n, x_1,x_2,\dots ,x_d\). Then (6.3) may be rewritten as

By orthogonality of the \(p_j(x)\)’s, we have \(\int u^sp_{j-1}(u)\,\mathrm{{d}}\mu (u)=0\) for \(0\le s<n\le j-1\). Since the expansion of the Vandermonde determinant \(\prod _{1\le i<j\le n}(u_j-u_i)\) consists entirely of monomials \(\pm u_1^{s_1}u_2^{s_2}\cdots u_n^{s_n}\) with \(s_i<n\) for all i, we see that in the above expression we may replace the determinant in the integrand by

If we substitute this above and use (6.4) again, we get

This gives indeed (6.1) once we apply Lemma 8 another time, now with \(\mathrm{{d}}\nu (u)=\mathrm{{d}}\mu (u)\). \(\square \)
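Two ingredients of this proof can be checked mechanically for a concrete moment sequence. First, replacing the monomials \(y^{j-1}\) by monic polynomials \(p_{j-1}(y)\) leaves the Vandermonde determinant unchanged (we take this to be the content of the observation that is used with \(N=n+d\)). Second, \(\int u^sp_m(u)\,\mathrm{d}\mu(u)=0\) for \(0\le s<m\), while \(\int u^mp_m(u)\,\mathrm{d}\mu(u)=\mu_0t_1\cdots t_m\). The sketch uses the Motzkin coefficients \(s_i=t_i=1\) and assumes the recurrence convention \(p_{n+1}(x)=(x-s_n)p_n(x)-t_np_{n-1}(x)\) for (1.2); its function names are hypothetical.

```python
from fractions import Fraction

# Sketch with hypothetical helper names: orthogonality and the "monic Vandermonde" step.


def moments(s, t, count):
    """mu_0..mu_{count-1} (mu_0 = 1) from x p_k = p_{k+1} + s(k) p_k + t(k) p_{k-1}."""
    row, mus = [Fraction(1)], [Fraction(1)]
    for _ in range(1, count):
        new = [Fraction(0)] * (len(row) + 1)
        for k, a in enumerate(row):
            new[k + 1] += a
            new[k] += s(k) * a
            if k >= 1:
                new[k - 1] += t(k) * a
        row = new
        mus.append(row[0])
    return mus[:count]


def ops(s, t, top):
    """Coefficient lists (lowest degree first) of p_0, ..., p_top (top >= 1)."""
    ps = [[Fraction(1)], [Fraction(-s(0)), Fraction(1)]]
    for k in range(1, top):
        nxt = [Fraction(0)] + ps[k]  # x * p_k
        for i, a in enumerate(ps[k]):
            nxt[i] -= s(k) * a  # - s_k p_k
        for i, a in enumerate(ps[k - 1]):
            nxt[i] -= t(k) * a  # - t_k p_{k-1}
        ps.append(nxt)
    return ps[: top + 1]


def apply_functional(poly, mu):
    """L(sum_k c_k x^k) = sum_k c_k mu_k."""
    return sum(c * mu[k] for k, c in enumerate(poly))


def evaluate(poly, y):
    return sum(c * y ** k for k, c in enumerate(poly))


def det(rows):
    """Exact determinant by Gaussian elimination (1 for the empty matrix)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n, result = len(m), Fraction(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if m[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            m[c], m[piv] = m[piv], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= f * m[c][k]
    return result


motzkin = lambda k: 1  # s_i = t_i = 1: Motzkin numbers as moments
mu = moments(motzkin, motzkin, 20)
ps = ops(motzkin, motzkin, 8)
# L(u^s p_m(u)) for s = 0..m: zero except the last value, which is t_1...t_m = 1
for m in range(1, 5):
    print(m, [apply_functional([Fraction(0)] * s + ps[m], mu) for s in range(m + 1)])
ys = [Fraction(2), Fraction(-1), Fraction(1, 3), Fraction(5)]
vand = Fraction(1)
for i in range(len(ys)):
    for j in range(i + 1, len(ys)):
        vand *= ys[j] - ys[i]
print(det([[evaluate(ps[j], y) for j in range(len(ys))] for y in ys]) == vand)
```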

7 Hankel determinants of linear combinations of Motzkin and Schröder numbers

As described in Sect. 2, the author’s discovery of Theorem 1 originated in an interest in the evaluation of Hankel determinants of linear combinations of combinatorial sequences. Elouafi [6] had the same motivation. The point of (1.1) in this context is that it provides a compact formula for \(n\times n\) Hankel determinants of a fixed linear combination of \(d+1\) successive elements of a (moment) sequence (the left-hand side of (1.1)) that does not “grow” with n. (For fixed d, the right-hand side is a “fixed-size” formula; the dependence on n lies only in the indices of the orthogonal polynomials.) Elouafi provides numerous applications of Theorem 1 to the evaluation of Hankel determinants of linear combinations of Catalan, Motzkin, and Schröder numbers in [6, Sect. 3]. However, his treatment of Motzkin and Schröder numbers can be replaced by a more explicit one.

Recall that, if some of the \(x_i\)’s in (1.1) are equal to each other, then Proposition 5 is needed to make sense of the right-hand side of (1.1). In order to apply the proposition, we must evaluate derivatives of the orthogonal polynomials at \(-x_j\). For the orthogonal polynomials that have Motzkin or Schröder numbers as moments, a recursive approach to this is described in [6, Sects. 3.2 and 3.3]. Here we show that one can be completely explicit. We do not attempt to treat the most general case, but rather restrict ourselves to illustrating the main ideas with two examples.

By Theorem 1 with \(x_i=0\) for all i and Proposition 5 with \(y_1=0\) and \(m_1=d\), we have (see also [6, Eq. (1.3)])
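For all \(x_i=0\), the confluent limit of the right-hand side of (1.1) can also be taken directly: dividing \(\det_{1\le i,j\le d}\big(p_{n+i-1}(-x_j)\big)\) by \(\prod_{1\le i<j\le d}(x_i-x_j)\) and letting all \(x_j\to 0\) produces Taylor coefficients. Assuming (1.1) in the form \(\det(\mu^{i+j}(x_1+\mu)\cdots(x_d+\mu))/\det(\mu_{i+j})=(-1)^{nd}\det(p_{n+i-1}(-x_j))/\prod_{i<j}(x_i-x_j)\) and (1.2) as \(p_{n+1}(x)=(x-s_n)p_n(x)-t_np_{n-1}(x)\), this gives (our own derivation, which may be arranged differently from the display intended here)

\[\frac{\det_{0\le i,j\le n-1}(\mu_{i+j+d})}{\det_{0\le i,j\le n-1}(\mu_{i+j})}=(-1)^{nd}\det_{1\le i,j\le d}\left(\frac{p^{(j-1)}_{n+i-1}(0)}{(j-1)!}\right),\]

where the entries are simply the coefficients of \(x^{j-1}\) in \(p_{n+i-1}(x)\). The sketch checks this for Motzkin moments; function names are hypothetical.

```python
from fractions import Fraction

# Sketch with hypothetical helper names: the x_i = 0 specialisation derived above.


def moments(s, t, count):
    """mu_0..mu_{count-1} (mu_0 = 1) from x p_k = p_{k+1} + s(k) p_k + t(k) p_{k-1}."""
    row, mus = [Fraction(1)], [Fraction(1)]
    for _ in range(1, count):
        new = [Fraction(0)] * (len(row) + 1)
        for k, a in enumerate(row):
            new[k + 1] += a
            new[k] += s(k) * a
            if k >= 1:
                new[k - 1] += t(k) * a
        row = new
        mus.append(row[0])
    return mus[:count]


def ops(s, t, top):
    """Coefficient lists (lowest degree first) of p_0, ..., p_top (top >= 1)."""
    ps = [[Fraction(1)], [Fraction(-s(0)), Fraction(1)]]
    for k in range(1, top):
        nxt = [Fraction(0)] + ps[k]  # x * p_k
        for i, a in enumerate(ps[k]):
            nxt[i] -= s(k) * a
        for i, a in enumerate(ps[k - 1]):
            nxt[i] -= t(k) * a
        ps.append(nxt)
    return ps[: top + 1]


def det(rows):
    """Exact determinant by Gaussian elimination (1 for the empty matrix)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n, result = len(m), Fraction(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if m[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            m[c], m[piv] = m[piv], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= f * m[c][k]
    return result


def confluent_sides(s, t, d, n):
    """Both sides of our derived x_i = 0 specialisation of (1.1)."""
    mu = moments(s, t, 2 * n + d)
    lhs = det([[mu[i + j + d] for j in range(n)] for i in range(n)])
    lhs /= det([[mu[i + j] for j in range(n)] for i in range(n)])
    ps = ops(s, t, n + d)

    def taylor(p, j):  # p^{(j)}(0) / j! is the coefficient of x^j
        return p[j] if j < len(p) else Fraction(0)

    rhs = (-1) ** (n * d) * det([[taylor(ps[n + i], j) for j in range(d)]
                                 for i in range(d)])
    return lhs, rhs


motzkin = lambda k: 1  # s_i = t_i = 1
print(confluent_sides(motzkin, motzkin, 2, 1))  # (Fraction(2, 1), Fraction(2, 1))
```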

First, we consider the special case where \(\mu _n=M_n\), the n-th Motzkin number, defined by \(\sum _{n\ge 0}M_n\,z^n=\frac{1-z-\sqrt{1-2z-3z^2}}{2z^2}\) (cf. [13, Ex. 6.37]). It is well known (see [15] or [6, p. 1265]) that the associated orthogonal polynomials satisfy the three-term recurrence (1.2) with \(s_i=t_i=1\) for all i. More explicitly, they are \(p_n(x)=U_n\left( \frac{x-1}{2}\right) \), where \(U_n(x)\) is the n-th Chebyshev polynomial of the second kind, which is defined by

\[U_n(\cos \theta )=\frac{\sin \big ((n+1)\theta \big )}{\sin \theta },\]
with generating function

\[\sum _{n\ge 0}U_n(x)\,z^n=\frac{1}{1-2xz+z^2}.\]

From this generating function, we may now easily obtain an explicit expression for \(p^{(j)}_n(0)=\frac{\mathrm{{d}}^j}{\mathrm{{d}}x^j}U_n\left( \frac{x-1}{2}\right) \big \vert _{x=0}\). Namely, we have

Consequently, we get

Comparison of coefficients of \(z^n\) then yields

If we recall that \(\det _{0\le i,j\le n-1}\left( M_{i+j}\right) =1\) (by (1.3) and the choice \(t_i=1\) for all i), then we arrive at the identity

where
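Since \(p_n(x)=U_n\big(\frac{x-1}{2}\big)\) has generating function \(1/(1-(x-1)z+z^2)\), differentiating j times with respect to x and setting \(x=0\) gives \(\sum_{n\ge 0}p^{(j)}_n(0)\,z^n=j!\,z^j/(1+z+z^2)^{j+1}\). This intermediate step is our own and may be organised differently from the displays intended in this derivation. The sketch compares the resulting power-series coefficients with \(j!\) times the coefficient of \(x^j\) in \(p_n(x)\), computed from the three-term recurrence; function names are hypothetical.

```python
from fractions import Fraction
from math import factorial

# Sketch with hypothetical helper names: Taylor coefficients at x = 0 of the Motzkin
# orthogonal polynomials versus the series j! z^j / (1 + z + z^2)^(j+1).


def ops(s, t, top):
    """Coefficient lists (lowest degree first) of p_0, ..., p_top (top >= 1)."""
    ps = [[Fraction(1)], [Fraction(-s(0)), Fraction(1)]]
    for k in range(1, top):
        nxt = [Fraction(0)] + ps[k]  # x * p_k
        for i, a in enumerate(ps[k]):
            nxt[i] -= s(k) * a  # - s_k p_k
        for i, a in enumerate(ps[k - 1]):
            nxt[i] -= t(k) * a  # - t_k p_{k-1}
        ps.append(nxt)
    return ps[: top + 1]


def series_inverse(q, order):
    """Coefficients of 1/q(z) up to z^order, assuming q[0] == 1."""
    inv = [Fraction(1)] + [Fraction(0)] * order
    for m in range(1, order + 1):
        inv[m] = -sum(q[k] * inv[m - k] for k in range(1, min(m, len(q) - 1) + 1))
    return inv


def series_mul(a, b, order):
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(order + 1)]


def derivative_series(j, order):
    """Coefficients of j! z^j / (1 + z + z^2)^(j+1) up to z^order (j <= order)."""
    base = series_inverse([1, 1, 1], order)
    power = [Fraction(1)] + [Fraction(0)] * order
    for _ in range(j + 1):
        power = series_mul(power, base, order)
    return [Fraction(0)] * j + [factorial(j) * c for c in power[: order + 1 - j]]


motzkin = lambda k: 1
order = 11
ps = ops(motzkin, motzkin, order)  # p_n(x) = U_n((x - 1) / 2)
for j in range(4):
    direct = [factorial(j) * (p[j] if j < len(p) else 0) for p in ps]  # p_n^{(j)}(0)
    print(j, direct == derivative_series(j, order))
```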

Second, we consider here the special case where \(\mu _n=r_n\), the n-th (large) Schröder number, defined by \(\sum _{n\ge 0}r_n\,z^n=\frac{1-z-\sqrt{1-6z+z^2}}{2z}\) (cf. [13, Second Problem on page 178]). Here, the associated orthogonal polynomials satisfy the three-term recurrence (1.2) with \(s_0=2\), \(s_i=3\) for \(i\ge 1\), and \(t_i=2\) for all i (see [4, Case (vii) in Sect. 4]). More explicitly, their generating function satisfies

\[\sum _{n\ge 0}p_n(x)\,z^n=\frac{1+z}{1-(x-3)z+2z^2}.\]

From this generating function, we may now obtain an explicit expression for \(p^{(j)}_n(0)\). Namely, we have

Consequently, we get

where

and

Then, by comparing coefficients of \(z^n\), we obtain

If we recall that \(\det _{0\le i,j\le n-1}\left( r_{i+j}\right) =2^{\left( {\begin{array}{c}n\\ 2\end{array}}\right) }\) (by (1.3) and the choice \(t_i=2\) for all i), then we arrive at the identity

where
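The moment-side facts used in this section can be cross-checked: the recurrence coefficients quoted above (\(s_i=t_i=1\), respectively \(s_0=2\), \(s_i=3\) for \(i\ge 1\), \(t_i=2\)) should produce the Motzkin and large Schröder numbers as moments, with \(\det(M_{i+j})=1\) and \(\det(r_{i+j})=2^{\binom n2}\). The sketch generates the two sequences from the functional equations \(F=1+zF+z^2F^2\) and \(F=1+zF+zF^2\), which are the quadratic equations solved by the two generating functions given above. It assumes the convention \(p_{n+1}(x)=(x-s_n)p_n(x)-t_np_{n-1}(x)\) for (1.2); function names are hypothetical.

```python
from fractions import Fraction

# Sketch with hypothetical helper names: moments and Hankel determinants for the
# Motzkin and large Schröder cases.


def moments(s, t, count):
    """mu_0..mu_{count-1} (mu_0 = 1) from x p_k = p_{k+1} + s(k) p_k + t(k) p_{k-1}."""
    row, mus = [Fraction(1)], [Fraction(1)]
    for _ in range(1, count):
        new = [Fraction(0)] * (len(row) + 1)
        for k, a in enumerate(row):
            new[k + 1] += a
            new[k] += s(k) * a
            if k >= 1:
                new[k - 1] += t(k) * a
        row = new
        mus.append(row[0])
    return mus[:count]


def det(rows):
    """Exact determinant by Gaussian elimination (1 for the empty matrix)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n, result = len(m), Fraction(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if m[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            m[c], m[piv] = m[piv], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= f * m[c][k]
    return result


def from_functional_equation(count, shift):
    """Coefficients of F = 1 + z F + z^shift F^2 (shift 2: Motzkin, 1: large Schröder)."""
    f = [1]
    for n in range(1, count):
        conv = sum(f[k] * f[n - shift - k] for k in range(n - shift + 1))
        f.append(f[n - 1] + conv)
    return f[:count]


motzkin = lambda k: 1  # s_i = t_i = 1
schroeder_s = lambda k: 2 if k == 0 else 3  # s_0 = 2, s_i = 3 (i >= 1)
two = lambda k: 2  # t_i = 2
M = moments(motzkin, motzkin, 12)
r = moments(schroeder_s, two, 12)
print(M == from_functional_equation(12, 2), r == from_functional_equation(12, 1))
for n in range(6):
    hankel_M = det([[M[i + j] for j in range(n)] for i in range(n)])
    hankel_r = det([[r[i + j] for j in range(n)] for i in range(n)])
    print(n, hankel_M, hankel_r, 2 ** (n * (n - 1) // 2))
```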

As a final remark, we point out that the above treatment of the “Motzkin case” applies only in a specific situation, whereas the above treatment of the “Schröder case” works for all moment sequences of orthogonal polynomials generated by a three-term recurrence (1.2) whose coefficient sequences \((s_i)_{i\ge 0}\) and \((t_i)_{i\ge 0}\) eventually become constant, that is, where \(s_i\equiv s\) and \(t_i\equiv t\) for all sufficiently large i. Indeed, under this assumption, the generating function \(\sum _{n\ge 0}p_n(x)z^n\) for the orthogonal polynomials \(\big (p_n(x)\big )_{n\ge 0}\) is a rational function with denominator \(1-(x-s)z+tz^2\), a quadratic polynomial in z, as in the special case of Schröder numbers, where we had \(s=3\) and \(t=2\).
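When the coefficients are constant from index 1 on (\(s_i\equiv s\), \(t_i\equiv t\) for \(i\ge 1\), with \(s_0\) arbitrary), multiplying the three-term recurrence by \(z^{n+1}\) and summing gives \(\sum_{n\ge 0}p_n(x)z^n=\frac{1+(s-s_0)z}{1-(x-s)z+tz^2}\); in the Schröder case (\(s_0=2\), \(s=3\), \(t=2\)), the numerator is \(1+z\). If constancy only sets in at index N, the same computation leaves a numerator of degree at most N in z. The sketch multiplies the series by the quadratic denominator and inspects the resulting coefficients at a few rational values of x. It assumes the convention \(p_{n+1}(x)=(x-s_n)p_n(x)-t_np_{n-1}(x)\) for (1.2); function names and the "late" coefficient sequences are hypothetical.

```python
from fractions import Fraction

# Sketch with hypothetical helper names: numerator of the rational generating function.


def p_values(s, t, top, y):
    """[p_0(y), ..., p_top(y)] via p_{k+1} = (y - s(k)) p_k - t(k) p_{k-1}, top >= 1."""
    y = Fraction(y)
    vals = [Fraction(1), y - s(0)]
    for k in range(1, top):
        vals.append((y - s(k)) * vals[k] - t(k) * vals[k - 1])
    return vals[: top + 1]


def gf_numerator(s, t, x, order, s_inf, t_inf):
    """Coefficients of (1 - (x - s_inf) z + t_inf z^2) * sum_n p_n(x) z^n up to z^order."""
    p = p_values(s, t, order, x)
    den = [Fraction(1), -(Fraction(x) - s_inf), Fraction(t_inf)]
    return [sum(den[k] * p[m - k] for k in range(3) if m >= k) for m in range(order + 1)]


schroeder_s = lambda k: 2 if k == 0 else 3  # s_0 = 2, s_i = 3 (i >= 1)
two = lambda k: 2  # t_i = 2
for x in (Fraction(0), Fraction(1, 2), Fraction(-7, 3)):
    print(gf_numerator(schroeder_s, two, x, 8, 3, 2))  # 1, 1, then zeros: numerator 1 + z
# constancy only from index N = 2 on: numerator of degree at most 2
late_s = lambda k: (5, -1)[k] if k < 2 else 3
late_t = lambda k: 4 if k == 1 else 2
print(gf_numerator(late_s, late_t, Fraction(1, 2), 8, 3, 2))
```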

8 Recursiveness of Hankel determinants of linear combinations of moments

In [5, Conj. 5], Dougherty, French, Saderholm and Qian conjectured that the Hankel determinants of a linear combination of Catalan numbers \(C_n=\frac{1}{n+1}\left( {\begin{array}{c}2n\\ n\end{array}}\right) \),

satisfy a linear recurrence with constant coefficients of order \(2^d\). This conjecture was proved by Elouafi in [6, Theorem 6] using Theorem 1 and special properties of the orthogonal polynomials that have the Catalan numbers as moments. However, Theorem 1 implies a much more general result, which we make explicit in the corollary below. For the statement of the corollary, we need to recall the definition of the elementary symmetric polynomials

\[e_m(x_1,x_2,\dots ,x_d)=\sum _{1\le i_1<i_2<\dots <i_m\le d}x_{i_1}x_{i_2}\cdots x_{i_m},\qquad m=0,1,\dots ,d.\]

Corollary 9

Within the setup in Sect. 1, let \(s_i\equiv s\) and \(t_i\equiv t\) for \(i\ge 1\). Furthermore, let

where the \(\lambda _k\)’s, \(k=0,1,\dots ,d-1\), are constants in the ground field K (see Footnote 9), and \(\lambda _d=1\). Then the sequence \((H_n)_{n\ge 0}\) of (scaled) Hankel determinants of linear combinations of moments satisfies a linear recurrence of the form

for some constants \(c_i\in K\), normalised by \(c_0=1\). Explicitly, these constants can be computed as the coefficients of the characteristic polynomial (in x) \(\sum _{i=0}^{2^d}c_ix^{2^d-i}\) of the tensor product of \(2\times 2\) matrices

where \(\lambda _k=e_{d-k}(x_1,x_2,\dots ,x_d)\). In particular, we have

and

Remarks. (1) The reader should note that, by (1.3), the scaling in (8.1) is exactly the value of the determinant

in the denominator on the left-hand side of (1.1).

(2) As a minor point, the proof of Corollary 9 given below shows that, if \(t_i\equiv t\) for all i, then the recurrence (8.2) holds even for \(n\ge 2^d\).

Furthermore, an inspection of the proof of the corollary shows that, if \(s_i\equiv s\) for \(i>N\) and \(t_i\equiv t\) for \(i\ge N\), where N is some positive integer, then the recurrence (8.2) still holds, but only for \(n\ge 2^d+N\).

(3) The formula (8.4) for the coefficient \(c_1\) is a far-reaching generalisation of Conjecture 6 in [5], while the symmetry relation (8.5) is a far-reaching generalisation of Conjecture 7 in [5].

(4) In view of the specialisations listed in items (i)–(xi) and (xiv)–(xviii) of [4, Sect. 4], Corollary 9 implies that the (properly scaled) Hankel determinants of linear combinations of numerous combinatorial sequences satisfy linear recurrences with constant coefficients. Aside from the already mentioned Catalan numbers, these sequences include Motzkin numbers, central binomial coefficients, central trinomial coefficients, central Delannoy numbers, Schröder numbers, Riordan numbers, and Fine numbers.

Proof of Corollary 9

We use Theorem 1 in the equivalent form (3.2). In order to apply this identity, we write

with the \(x_i\)’s in the algebraic closure of our ground field K. Equivalently,

For the moment, we assume that the \(x_i\)’s are pairwise distinct in order to avoid a zero denominator in (3.2). We will remove this restriction at the end by a limiting argument. (Alternatively, one could base the arguments on Proposition 5; this would, however, be more complicated.)

Identity (3.2) expresses \(H_n\) in terms of a \(d\times d\) determinant with entries \(f_{n+i-1}(x_j)\), \(1\le i,j\le d\). If we expand the determinant on the right-hand side of (3.2) according to its definition, then we obtain a linear combination of products of the form \( \prod _{i=1} ^{d}f_{n+\tau _i}(x_i)\) with constant coefficients, where \(\tau _i\in \{0,1,\dots ,d-1\}\) for \(i=1,2,\dots ,d\). Now, by (3.1), for each fixed i and \(\tau _i\) the sequence \(\big (f_{n+\tau _i}(x_i)\big )_{n\ge 0}\) satisfies the recurrence relation

Hence, each product sequence \(\left( \prod _{i=1} ^{d}f_{n+\tau _i}(x_i)\right) _{n\ge 0}\) satisfies the same recurrence relation, namely the one resulting from the (Hadamard) product of the recurrences (8.6) over \(i=1,2,\dots ,d\). From the proof of [13, Theorem 6.4.9] (which is actually a much more general theorem), it follows immediately that the order of this “product” recurrence is at most \(2^d\).

In order to obtain the explicit description of the “product” recurrence in the statement of the corollary, we have to recall the basics of the solution theory of (homogeneous) linear recurrences with constant coefficients. This theory says that one has to determine the zeroes of the characteristic polynomial of the recurrence: the sequences \(\big (n^e\alpha ^n\big )_{n\ge 0}\), where \(\alpha \) ranges over the zeroes of the characteristic polynomial and the exponent e is less than the multiplicity of \(\alpha \), form a basis of the solution space of the recurrence.
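The solution theory just recalled can be illustrated on a small example: build the characteristic polynomial from prescribed zeroes and test which sequences \(n^e\alpha^n\) it annihilates. In the sketch (our own example; function names hypothetical), the zero 3 has multiplicity 3, so \(n^e3^n\) is a solution for \(e=0,1,2\) but not for \(e=3\), and the simple zero 5 contributes \(5^n\).

```python
# Sketch with hypothetical helper names: solutions n^e * alpha^n of a linear recurrence.


def poly_from_roots(roots):
    """Coefficients c_0 = 1, c_1, ... of prod (y - root), highest degree first."""
    c = [1]
    for r in roots:
        c = [a - r * b for a, b in zip(c + [0], [0] + c)]
    return c


def solves(c, u):
    """True if sum_i c_i u_{n-i} = 0 for every n at which all terms are defined."""
    order = len(c) - 1
    return all(sum(c[i] * u[n - i] for i in range(order + 1)) == 0
               for n in range(order, len(u)))


c = poly_from_roots([3, 3, 3, 5])  # zero 3 with multiplicity 3, zero 5 simple
for e in range(4):
    print(e, solves(c, [n ** e * 3 ** n for n in range(20)]))  # True for e = 0, 1, 2 only
print("5^n:", solves(c, [5 ** n for n in range(20)]))
```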

The characteristic polynomial of the recurrence (8.6) is

which is also equal to the characteristic polynomial of the \(2\times 2\) matrix

Let \(y_{i,1}\) and \(y_{i,2}\) be the zeroes of the polynomial (8.7). Then the powers \(\big (y_{i,1}^n\big )_{n\ge 0}\) and \(\big (y_{i,2}^n\big )_{n\ge 0}\) form a basis of solutions to the recurrence (8.6) if \(y_{i,1}\) and \(y_{i,2}\) are distinct, while otherwise the sequences \(\big (y_{i,1}^n\big )_{n\ge 0}\) and \(\big (ny_{i,1}^n\big )_{n\ge 0}\) form a basis. The product recurrence that we want to find is one for which all products \(\left( n^{e-d}\prod _{i=1} ^{d}y_{i,\varepsilon _i}^n\right) _{n\ge 0}\) are solutions, for \(\varepsilon _i\in \{1,2\}\) and e bounded above by the sum of the multiplicities of the \(y_{i,1}\)’s. Equivalently, the characteristic polynomial of the desired product recurrence is one which has all products \(\prod _{i=1} ^{d}y_{i,\varepsilon _i}\), where \(\varepsilon _i\in \{1,2\}\), as zeroes, with multiplicities equal to the sum of the multiplicities of the \(y_{i,1}\)’s minus \(d-1\). It is a simple fact of linear algebra that such a polynomial is the characteristic polynomial of the tensor product of all matrices (8.8), that is, the matrix in (8.3). (Indeed, the eigenvalues of a tensor product of matrices are precisely the products of eigenvalues of the individual factors, counted with multiplicity.) This proves the assertion about the explicit form of the recurrence (8.2) in the case of pairwise distinct \(x_i\)’s.
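The linear-algebra fact invoked here, namely that the eigenvalues of a tensor (Kronecker) product are the products of the eigenvalues of the factors, can be checked with exact characteristic polynomials. The sketch computes them with the Faddeev–LeVerrier algorithm, which is our choice and not part of the paper, and returns the coefficients in the normalisation \(\sum_i c_ix^{N-i}\), \(c_0=1\), used in Corollary 9; function names are hypothetical.

```python
from fractions import Fraction

# Sketch with hypothetical helper names: eigenvalues of a Kronecker product.


def kron(a, b):
    """Kronecker (tensor) product of two matrices given as lists of rows."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def charpoly(a):
    """[1, c_1, ..., c_N] with det(yI - A) = sum_i c_i y^(N-i) (Faddeev-LeVerrier)."""
    n = len(a)
    a = [[Fraction(v) for v in row] for row in a]
    coeffs = [Fraction(1)]
    m = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]  # M_1 = I
    for k in range(1, n + 1):
        am = matmul(a, m)
        ck = -sum(am[i][i] for i in range(n)) / k
        coeffs.append(ck)
        m = [[am[i][j] + (ck if i == j else 0) for j in range(n)] for i in range(n)]
    return coeffs


A = [[3, -2], [1, 0]]  # eigenvalues 1, 2
B = [[5, -6], [1, 0]]  # eigenvalues 2, 3
print(charpoly(kron(A, B)))  # coefficients 1, -15, 80, -180, 144: zeroes 2, 3, 4, 6
```

A Jordan block in one factor is handled the same way, since the statement concerns algebraic multiplicities.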

Since everything—the expressions in (3.2), the coefficients of the recurrence (8.2)—is polynomial in the \(x_i\)’s, we may finally drop that restriction.

The coefficient \(c_1\) is the coefficient of \(x^{2^d-1}\) in the characteristic polynomial of (8.3). It is easy to see that this is

proving (8.4).

Finally, the symmetry relation (8.5) is a consequence of the inherent symmetry of a linear recurrence of order 2. In order to make this visible, let \({{\widehat{f}}}_n(x)=t^{-n/2}f_n(x)\). Then, from (3.1) we see that

Now, a recurrence can be read in the forward direction, in which the n-th term of the sequence is computed from terms with smaller indices, or in the backward direction, in which the n-th term is computed from terms with larger indices. In this sense, the recurrence (8.9) is the same regardless of whether it is read in the forward or the backward direction. Consequently, the recurrence relation for the Hadamard product \( \prod _{i=1} ^{d}{{\widehat{f}}}_n(x_i)\) must also be symmetric, that is, the same regardless of whether it is read in the forward or the backward direction. Substituting \(\widehat{f}_n(x)=t^{-n/2}f_n(x)\) back into that symmetric recurrence then yields the relation (8.5). \(\square \)
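Corollary 9 can be tested end to end in exact arithmetic. In the sketch below (our own; function names hypothetical), \(H_n\) is computed directly as \(\det_{0\le i,j\le n-1}\big(\sum_{k=0}^d\lambda_k\mu_{i+j+k}\big)/\det_{0\le i,j\le n-1}(\mu_{i+j})\) with \(\lambda_k=e_{d-k}(x_1,\dots,x_d)\). Since the matrices in (8.3) are not reproduced in this section, we use instead, for each \(x_i\), the companion matrix \(\begin{pmatrix}x_i+s&-t\\1&0\end{pmatrix}\) of \(y^2-(x_i+s)y+t\). Under the convention \(p_{n+1}(x)=(x-s_n)p_n(x)-t_np_{n-1}(x)\), this is the characteristic polynomial of the sequence \((-1)^np_n(-x_i)\) once \(s_n=s\) and \(t_n=t\); if the \(f_n\) of (3.1) carry different signs, the matrices in (8.3) may differ from ours by the substitution \(y\mapsto -y\). The sketch checks \(\sum_{i=0}^{2^d}c_iH_{n-i}=0\) for \(n\ge 2^d\), together with the symmetry \(c_{2^d-k}=t^{d(2^{d-1}-k)}c_k\), which follows because the zeroes come in pairs \(r\), \(t^d/r\) (the algebraic counterpart of the forward/backward argument above); this is our reading of (8.5).

```python
from fractions import Fraction

# Sketch with hypothetical helper names; the 2x2 factors are our own choice (see above).


def moments(s, t, count):
    """mu_0..mu_{count-1} (mu_0 = 1) from x p_k = p_{k+1} + s(k) p_k + t(k) p_{k-1}."""
    row, mus = [Fraction(1)], [Fraction(1)]
    for _ in range(1, count):
        new = [Fraction(0)] * (len(row) + 1)
        for k, a in enumerate(row):
            new[k + 1] += a
            new[k] += s(k) * a
            if k >= 1:
                new[k - 1] += t(k) * a
        row = new
        mus.append(row[0])
    return mus[:count]


def det(rows):
    """Exact determinant by Gaussian elimination (1 for the empty matrix)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n, result = len(m), Fraction(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if m[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            m[c], m[piv] = m[piv], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= f * m[c][k]
    return result


def kron(a, b):
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def charpoly(a):
    """[1, c_1, ..., c_N] with det(yI - A) = sum_i c_i y^(N-i) (Faddeev-LeVerrier)."""
    n = len(a)
    a = [[Fraction(v) for v in row] for row in a]
    coeffs, m = [Fraction(1)], [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(1, n + 1):
        am = matmul(a, m)
        ck = -sum(am[i][i] for i in range(n)) / k
        coeffs.append(ck)
        m = [[am[i][j] + (ck if i == j else 0) for j in range(n)] for i in range(n)]
    return coeffs


def hankel_ratio(s, t, xs, n):
    """H_n = det(sum_k lam_k mu_{i+j+k}) / det(mu_{i+j}) with lam_k = e_{d-k}(xs)."""
    d = len(xs)
    lam = [Fraction(1)]
    for x in xs:
        lam = [x * a + b for a, b in zip(lam + [0], [0] + lam)]
    mu = moments(s, t, 2 * n + d)
    num = det([[sum(lam[k] * mu[i + j + k] for k in range(d + 1))
                for j in range(n)] for i in range(n)])
    return num / det([[mu[i + j] for j in range(n)] for i in range(n)])


def recurrence_coeffs(s_inf, t_inf, xs):
    """c_0..c_{2^d}: char. polynomial of the tensor product of [[x + s, -t], [1, 0]]."""
    mat = [[1]]
    for x in xs:
        mat = kron(mat, [[x + s_inf, -t_inf], [1, 0]])
    return charpoly(mat)


def check(s, t, s_inf, t_inf, xs, extra=4):
    """True if sum_i c_i H_{n-i} = 0 for all n with 2^d <= n < 2^d + extra."""
    c = recurrence_coeffs(s_inf, t_inf, xs)
    order = len(c) - 1
    h = [hankel_ratio(s, t, xs, n) for n in range(order + extra)]
    return all(sum(c[i] * h[n - i] for i in range(order + 1)) == 0
               for n in range(order, len(h)))


schroeder_s = lambda k: 2 if k == 0 else 3  # large Schröder numbers: s = 3, t = 2
two = lambda k: 2
print(check(schroeder_s, two, 3, 2, [1, 2]), check(schroeder_s, two, 3, 2, [1, 1]))
c = recurrence_coeffs(3, 2, [1, 2])
print([c[4 - k] == Fraction(2) ** (2 * (2 - k)) * c[k] for k in range(5)])
```

Repeated values such as \(x=(1,1)\) need no special treatment here, because \(H_n\) is computed directly rather than through the quotient in (3.2).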

Notes

For the analyst, this field is usually the field of real numbers, and a further restriction is that the linear functional L is defined by a measure with non-negative density. However, the formulae in this paper do not need these restrictions and are valid in the wider context of “formal orthogonality”.

“Umbral notation” means that an expression that is a polynomial in \(\mu \) is expanded out, and then every occurrence of \(\mu ^n\) is replaced by \(\mu _n\). So, for example, the umbral expression \(\mu ^2(x_1+\mu )(x_2+\mu )\) means \(x_1x_2\mu _2+(x_1+x_2)\mu _3+\mu _4\).
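The umbral convention is mechanical enough to code directly: expand the polynomial in \(\mu\), then replace each power \(\mu^k\) by the moment \(\mu_k\). A minimal sketch with numeric \(x_1,x_2\) and the Catalan numbers as moments (our illustrative choices; function names hypothetical):

```python
# Sketch with hypothetical helper names: evaluating an umbral expression.


def poly_mul(a, b):
    """Product of two polynomials in mu (coefficient lists, lowest degree first)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def umbral(coeffs, mu):
    """Replace every mu^k in sum_k coeffs[k] mu^k by the moment mu_k."""
    return sum(c * mu[k] for k, c in enumerate(coeffs))


x1, x2 = 2, 5
catalan = [1, 1, 2, 5, 14, 42]
expr = poly_mul(poly_mul([0, 0, 1], [x1, 1]), [x2, 1])  # mu^2 (x1 + mu)(x2 + mu)
print(expr)  # [0, 0, 10, 7, 1]
print(umbral(expr, catalan))  # 10*2 + 7*5 + 14 = 69
```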

The reader may judge for her/himself: I present the theorem further below in Sect. 2.2, together with an explanation of how it connects to our discussion.

In the statement of [11, Prop. 8.4.1], the resultant \(R(x,{\mathbb {B}})\) must be replaced by the Vandermonde product \(\Delta (x+{\mathbb {B}})\), as is done in the proof of that proposition in [11]. I have made this correction here. Furthermore, I simplified the statement by incorporating the variable x into the alphabet \({\mathbb {B}}\), which amounts to replacing “\(-{\mathbb {B}}-x\)” by “\(-{\mathbb {B}}\)” and “\(x+{\mathbb {B}}\)” by “\({\mathbb {B}}\)”. Lascoux was clearly aware of this simplification. However, he needed to formulate the statement in that particular way in order to relate it to the classical result [14, Theorem 2.5] (see also [7, Theorem 2.7.1]) in the theory of orthogonal polynomials.

Lascoux’s attitude towards signs is best described by himself: “... with signs that specialists will know how to write.” [11, comment added below Eq. (3.1.5)]

We may also think of the \(\lambda _k\)’s as variables.

References

[1] Baik, J., Deift, P., Strahov, E.: Products and ratios of characteristic polynomials of random Hermitian matrices. J. Math. Phys. 44, 3657–3670 (2003)

[2] Brézin, E., Hikami, S.: Characteristic polynomials of random matrices. Commun. Math. Phys. 214, 111–135 (2000)

[3] Christoffel, E.B.: Über die Gaußische Quadratur und eine Verallgemeinerung derselben. J. Reine Angew. Math. 55, 61–82 (1858)

[4] Cigler, J., Krattenthaler, C.: Hankel determinants of linear combinations of moments of orthogonal polynomials. Int. J. Number Theory 17, 341–369 (2021)

[5] Dougherty, M., French, C., Saderholm, B., Qian, W.: Hankel transforms of linear combinations of Catalan numbers. J. Integer Seq. 14, Article 11.5.1, 20 pp. (2011)

[6] Elouafi, M.: A unified approach for the Hankel determinants of classical combinatorial numbers. J. Math. Anal. Appl. 431, 1253–1274 (2015)

[7] Ismail, M.E.H.: Classical and Quantum Orthogonal Polynomials in One Variable. Encyclopedia of Mathematics and its Applications, vol. 98. Cambridge University Press, Cambridge (2009)

[8] Krattenthaler, C.: Advanced determinant calculus. Sém. Lothar. Combin. 42 (“The Andrews Festschrift”), Article B42q, 67 pp. (1999)

[9] Krattenthaler, C.: Determinants of (generalised) Catalan numbers. J. Stat. Plann. Inference 140, 2260–2270 (2010)

[10] Krattenthaler, C.: A determinant identity for moments of orthogonal polynomials that implies Uvarov’s formula for the orthogonal polynomials of rationally related densities. Preprint, arXiv:2103.03969v1

[11] Lascoux, A.: Symmetric Functions and Combinatorial Operators on Polynomials. CBMS Regional Conference Series in Mathematics, vol. 99. Amer. Math. Soc., Providence (2003)

[12] Mu, L., Wang, Y., Yeh, Y.-N.: Hankel determinants of linear combinations of consecutive Catalan-like numbers. Discrete Math. 340, 3097–3103 (2017)

[13] Stanley, R.P.: Enumerative Combinatorics, vol. 2. Cambridge University Press, Cambridge (1999)

[14] Szegő, G.: Orthogonal Polynomials. Amer. Math. Soc., Providence (1939)

[15] Viennot, X.: Une théorie combinatoire des polynômes orthogonaux généraux. UQAM, Montréal, Québec (1983); available at http://www.xavierviennot.org/xavier/polynomes_orthogonaux.html

Acknowledgements

I thank Mourad Ismail for insightful discussions and Arno Kuijlaars for bringing [1] to my attention.

Funding

Open access funding provided by University of Vienna.


Additional information

Dedicated to the memory of Richard Askey.


Research partially supported by the Austrian Science Foundation FWF (Grant S50-N15) in the framework of the Special Research Program “Algorithmic and Enumerative Combinatorics”

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Krattenthaler, C. Hankel determinants of linear combinations of moments of orthogonal polynomials, II. Ramanujan J 61, 597–627 (2023). https://doi.org/10.1007/s11139-021-00514-8


DOI: https://doi.org/10.1007/s11139-021-00514-8

Keywords

- Hankel determinants

- Moments of orthogonal polynomials

- Catalan numbers

- Motzkin numbers

- Schröder numbers

- Riordan numbers

- Fine numbers

- Central binomial coefficients

- Central trinomial numbers

- Delannoy numbers

- Chebyshev polynomials

- Dodgson condensation