Abstract

Purpose

In Australian adults diagnosed with a sleep disorder(s), this cross-sectional study compares the empirical relationships between two generic QoL instruments, the EuroQoL 5-dimension 5-level (EQ-5D-5L) and ICEpop CAPability measure for Adults (ICECAP-A), and three sleep-specific metrics, the Epworth Sleepiness Scale (ESS), 10-item Functional Outcomes of Sleep Questionnaire (FOSQ-10), and Pittsburgh Sleep Quality Index (PSQI).

Methods

Convergent and divergent validity between item/dimension scores was examined using Kendall’s Tau-B correlation, with correlations below 0.30 considered weak, between 0.30 and 0.50 moderate and those above 0.50 strong (indicating that instruments were measuring similar constructs). Exploratory factor analysis (EFA) was conducted to identify shared underlying constructs.

Results

A total of 1509 participants (aged 18–86 years) were included in the analysis. Convergent validity between dimensions/items of different instruments was weak to moderate. A 5-factor EFA solution, representing ‘daytime dysfunction’, ‘fatigue’, ‘wellbeing’, ‘physical health’, and ‘perceived sleep quality’, was simplest with close fit and fewest cross-loadings. Each instrument’s dimensions/items primarily loaded onto their own factor, except for the EQ-5D-5L and PSQI. Nearly two-thirds of salient loadings were of excellent magnitude (0.72 to 0.91).

Conclusion

Moderate overlap between the constructs assessed by generic and sleep-specific instruments indicates that neither type alone can fully capture the complexity of QoL in general populations with disordered sleep. Therefore, both are required within economic evaluations. A combination of the EQ-5D-5L and, depending on context, ESS or PSQI offers the broadest measurement of QoL in evaluating sleep health interventions.

Plain English summary

Sleep disorders are common in the general population, and reduced quality of life (QoL) is one of their consequences, contributing $25.5 billion in associated costs in Australia in 2019–20. No single instrument is available to measure all aspects of QoL or sleep outcomes relevant to people with sleep disorders in an economic evaluation (comparing the costs versus outcomes of interventions). Currently, a combination of generic and sleep-specific questionnaires is commonly used to measure the benefits of sleep disorder interventions, because using one or the other alone does not measure QoL with enough breadth. We compared two generic and three sleep-specific instruments to identify which are appropriate for economic evaluations and whether generic and sleep-specific questionnaires share an underlying relationship in their measurement of QoL or sleep outcomes. In the absence of a single ‘gold-standard’ questionnaire, our results support the combined use of a generic and a sleep-specific instrument to measure QoL and sleep outcomes in patients with sleep disorders. Therefore, depending on the research context, we recommend combining the generic EuroQoL 5-dimension 5-level with either the sleep-specific Epworth Sleepiness Scale (for the broadest measurement of sleep outcomes) or the Pittsburgh Sleep Quality Index (where bodily function is more important).

Introduction

Sleep disorders are prevalent in the general population despite being underdiagnosed and undertreated [1]. The prevalence of the two most common sleep disorders in adults, obstructive sleep apnea (OSA) and insomnia, is estimated at approximately 1 in 7 [2] and 1 in 10 [3], respectively. The health consequences of sleep disorders include increased risk of cardiovascular disease, metabolic syndrome [4], reduced quality of life (QoL) [5], and mortality [6, 7]. Beyond the substantial burden of illness and loss of wellbeing, which place high demand on healthcare resources, sleep disorders impose a large, quantifiable economic burden [1] borne by patients, payers, and society [8]. In 2019–20, the cost of sleep disorders in Australia was AUD $35.4 billion, with 72% attributed to the non-financial reduction in wellbeing [1]. Costs of this magnitude put pressure on the distribution of limited public funds among competing healthcare interventions, making knowledge of the health economic aspects of interventions crucial for payers and decision-makers.

Economic evaluation is used by decision-makers to guide the cost-effective allocation of public funds and is recommended by decision-making bodies, including the Medical Services Advisory Committee (MSAC) in Australia and the National Institute for Health and Care Excellence (NICE) in the United Kingdom [9, 10]. In addition to direct and indirect costs, outcomes such as QoL are a key health economic aspect of evaluating intervention benefits. Several generic preference-based and non-preference-based questionnaires have been used within sleep economic evaluations to measure QoL and other sleep outcomes. The EuroQoL 5-dimension 5-level (EQ-5D-5L) is amongst the most popular generic preference-based instruments used in such evaluations [13, 14]. An alternative instrument that, to our knowledge, has not been used in sleep economic evaluations is the ICEpop CAPability measure for Adults (ICECAP-A) [15], which focuses on capability-based QoL gains with a broader scope. It is, however, being used in other, non-economic sleep studies [16, 17]. Additionally, the ICECAP-A is considered a promising tool for future economic evaluations that extend beyond health benefits alone [18]. Reimer and Flemons [19] have argued in support of using generic instruments to measure QoL in sleep-disordered populations, with consideration of physical, mental, and social function, symptom burden, and wellbeing. It is well documented that sleep disturbance significantly affects all aspects of QoL, often with a more pronounced effect on mental health [5], supporting the use of generic instruments that measure the concept broadly [19,20,21]. Measuring QoL with a broad scope is important, but capturing QoL attributes relevant to patients with sleep disorders, such as sleep quality, fatigue, and energy, makes for a more effective measurement of intervention outcomes in sleep economic evaluations [11, 12].
Therefore, clinicians and researchers often select condition-specific instruments with well-established validity, because they focus on the unique aspects of a particular condition and can therefore detect subtle changes that generic instruments might miss [23]. While none of these instruments was developed to generate the quality-adjusted life years (QALYs) used in cost-utility analyses (CUA), and few measure QoL, they do account for important factors relevant to people living with sleep disorders and may have a place in sleep economic evaluations.

Our recent systematic review showed that a combination of generic and sleep-specific instruments has been used in nearly half of the economic evaluations of sleep health interventions [14]. It is, however, unclear whether generic and sleep-specific instruments share an underlying, strong relationship in their measurement of QoL and other sleep outcomes. Therefore, this cross-sectional study explored the convergent and divergent validity between the generic EQ-5D-5L and ICECAP-A and the sleep-specific 10-item Functional Outcomes of Sleep Questionnaire (FOSQ-10), Epworth Sleepiness Scale (ESS), and Pittsburgh Sleep Quality Index (PSQI), and their latent constructs, in Australians who self-reported having a sleep disorder. While the ESS and PSQI do not strictly measure QoL, they are commonly used screening tools, measuring daytime sleep propensity and sleep quality, respectively. The conceptual overlap between instruments was also assessed using the International Classification of Functioning, Disability and Health (ICF) framework [24].

Methods

Sample

In this study, participants completed a structured questionnaire comprising a series of socio-demographic questions and five contemporaneously administered QoL instruments. Two instruments were generic (the EQ-5D-5L and ICECAP-A) and three were sleep-specific (the ESS, FOSQ-10, and PSQI). The questionnaire was accessible via ‘PureProfile’, an online survey platform, on users’ dashboards from May 27th to August 9th 2022. After the study was advertised to PureProfile’s users, informed consent was obtained from all potential participants before they proceeded to the questionnaire, which gathered data from adults (aged 18 and above) who self-reported being diagnosed with a sleep disorder (participants were asked to specify which). Eligibility was based on predetermined quotas for traits such as age, sleep disorder history, ethnicity, income, education, and employment status. Authentication criteria were applied to ensure the legitimacy of respondents, including a CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) test, open ends connectivity, internet protocol (IP) geolocation, and speeder limits. The goal was to secure a nationally representative sample of the Australian population. The target sample size was 1500, well exceeding the minimum recommended number of 50 participants for this kind of survey [25].

Quality of life instruments

The EQ-5D-5L is a widely used generic measure of health-related QoL. Although not validated in sleep-disordered populations, the EQ-5D-5L has shown excellent psychometric properties across a range of other disease populations and settings [26]. The EQ-5D (both the 3- and 5-level versions) is the most frequently used generic instrument in economic evaluations within sleep disorders [14], although the EQ-5D-5L has reduced ceiling effects compared with its three-level counterpart [27]. It measures five dimensions (mobility, self-care, usual activities, pain/discomfort, and anxiety/depression), each with five levels of impairment ranging from ‘no problem’ to ‘unable’, defining 3125 possible health states. Using Australian general population preference weights, a utility score ranging from −0.30 to 1 was attached to each health state [28].

The ICECAP-A is a generic measure of adult capability wellbeing covering five attributes: stability, attachment, achievement, autonomy, and enjoyment. Each attribute has four levels, ranging from full to no capability, giving 1024 possible capability states [15]. The ICECAP-A was scored using an algorithm based on a best–worst scaling method with UK population tariffs, yielding overall scores from 0 (no capability) to 1 (full capability) [29].
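The state counts above follow directly from the two descriptive systems: five dimensions with five levels each give 5^5 = 3125 EQ-5D-5L profiles, and five attributes with four levels each give 4^5 = 1024 ICECAP-A profiles. The Python sketch below enumerates both. The 1-to-n level codes are an illustrative convention, and the sketch deliberately attaches no values, since utility or capability scores require the Australian EQ-5D-5L value set [28] and the UK ICECAP-A tariff [29], which are not reproduced here.

```python
from itertools import product
from typing import List

# Descriptive systems as described in the text.
EQ5D5L_DIMENSIONS = ["mobility", "self-care", "usual activities",
                     "pain/discomfort", "anxiety/depression"]
ICECAPA_ATTRIBUTES = ["stability", "attachment", "achievement",
                      "autonomy", "enjoyment"]


def enumerate_states(n_dimensions: int, n_levels: int) -> List[str]:
    """Return every profile as a string of level codes, e.g. '11111'."""
    codes = [str(level) for level in range(1, n_levels + 1)]
    return ["".join(profile) for profile in product(codes, repeat=n_dimensions)]


eq5d_states = enumerate_states(len(EQ5D5L_DIMENSIONS), 5)    # 5 levels per dimension
icecap_states = enumerate_states(len(ICECAPA_ATTRIBUTES), 4)  # 4 levels per attribute

print(len(eq5d_states))    # 3125
print(len(icecap_states))  # 1024
print(eq5d_states[0], eq5d_states[-1])  # 11111 55555
```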

The FOSQ-10 is a self-administered questionnaire assessing the impact of excessive somnolence on QoL in adults that is often applied in primary sleep disorders, particularly OSA. Shorter than its predecessor, the FOSQ-30 [30], the FOSQ-10 has performed well in recent studies [31, 32] and a newly established minimally important difference [33] improves its interpretability and applicability in practice. Due to the limited number of items per subscale (1 to 3), only the total score (ranging from 5 to 20) is recommended for use [34].
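A minimal sketch of FOSQ-10 total scoring, assuming the convention that each subscale score is the mean of its items (responses coded 1 to 4) and that the total is five times the mean of the subscale scores, which yields the 5 to 20 range quoted above. The item-to-subscale grouping is supplied by the caller; the grouping in the example is hypothetical and is not the official FOSQ-10 scoring key.

```python
from statistics import mean
from typing import Dict, List


def fosq10_total(responses: Dict[int, int], subscales: Dict[str, List[int]]) -> float:
    """Assumed scoring: total = 5 x mean of subscale means (range 5-20).

    responses: item number -> response coded 1-4 (assumption).
    subscales: subscale name -> item numbers (caller-supplied).
    """
    for item, value in responses.items():
        if not 1 <= value <= 4:
            raise ValueError(f"item {item}: response {value} outside 1-4")
    subscale_means = [mean(responses[i] for i in items) for items in subscales.values()]
    return 5 * mean(subscale_means)


# Hypothetical grouping for illustration only: five subscales of one to
# three items each. Consult the official scoring key in practice.
EXAMPLE_SUBSCALES = {
    "subscale_A": [1, 2],
    "subscale_B": [3],
    "subscale_C": [4, 5, 6],
    "subscale_D": [7, 8],
    "subscale_E": [9, 10],
}

best = {i: 4 for i in range(1, 11)}
worst = {i: 1 for i in range(1, 11)}
print(fosq10_total(best, EXAMPLE_SUBSCALES))   # 20
print(fosq10_total(worst, EXAMPLE_SUBSCALES))  # 5
```

Averaging within subscales first means a one-item subscale carries as much weight as a three-item subscale, which is one reason only the total is recommended when subscales are this short.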

Applied across many sleep disorders and settings, the ESS is the most commonly used instrument for assessing excessive daytime sleepiness, a major consideration in clinical decision-making concerning the diagnosis and management of OSA [35, 36]. It was also the most commonly applied sleep-specific instrument in economic evaluations [14]. Participants rate their chance of dozing or falling asleep in eight different low-activity situations on a four-point scale from 0 (never) to 3 (high chance). Item scores are summed to give a total from 0 to 24, and a total greater than 10 indicates excessive daytime sleepiness [37].
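The ESS scoring rule described above (the sum of eight items scored 0 to 3, with a total above 10 indicating excessive daytime sleepiness) can be sketched in Python as follows. The respondent’s answers are hypothetical and chosen to sit exactly on the threshold.

```python
from typing import Sequence

ESS_EDS_THRESHOLD = 10  # a total > 10 indicates excessive daytime sleepiness


def ess_total(item_scores: Sequence[int]) -> int:
    """Sum eight ESS item scores, each 0 (never) to 3 (high chance of dozing)."""
    if len(item_scores) != 8:
        raise ValueError("the ESS has eight items")
    if any(not 0 <= s <= 3 for s in item_scores):
        raise ValueError("ESS items are scored 0-3")
    return sum(item_scores)


def has_excessive_daytime_sleepiness(item_scores: Sequence[int]) -> bool:
    """Strictly greater than the threshold, as stated in the text."""
    return ess_total(item_scores) > ESS_EDS_THRESHOLD


example = [2, 1, 1, 0, 3, 0, 2, 1]  # hypothetical respondent
print(ess_total(example))                         # 10
print(has_excessive_daytime_sleepiness(example))  # False: 10 is not > 10
```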

The PSQI is a self-report questionnaire used to discriminate between ‘poor’ and ‘good’ sleepers in clinical and non-clinical populations. Its application within sleep disorders is broad, including sleep initiation and maintenance disorders and disorders of excessive somnolence [38]. Nineteen self-rated items generate seven component scores, each scored from 0 to 3, which are summed into a ‘global’ score ranging from 0 to 21. Five additional items answered by a bed partner are excluded from scoring.
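Similarly, the PSQI global score is the sum of the seven component scores. In the Python sketch below the component names follow the original PSQI labels, the example answers are hypothetical, and the poor-sleeper cut-off (global score greater than 5) is an assumption taken from the conventional threshold proposed by the PSQI’s developers rather than a value stated in this study.

```python
from typing import Dict

PSQI_COMPONENTS = (
    "subjective sleep quality", "sleep latency", "sleep duration",
    "habitual sleep efficiency", "sleep disturbances",
    "use of sleeping medication", "daytime dysfunction",
)
POOR_SLEEPER_CUTOFF = 5  # assumption: conventional "global > 5" rule, not from this study


def psqi_global(components: Dict[str, int]) -> int:
    """Sum the seven component scores (each 0-3) into a global score (0-21)."""
    missing = set(PSQI_COMPONENTS) - set(components)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    for name in PSQI_COMPONENTS:
        if not 0 <= components[name] <= 3:
            raise ValueError(f"{name} must be scored 0-3")
    return sum(components[name] for name in PSQI_COMPONENTS)


example = dict(zip(PSQI_COMPONENTS, [2, 1, 1, 2, 1, 0, 2]))  # hypothetical answers
score = psqi_global(example)
print(score, score > POOR_SLEEPER_CUTOFF)  # 9 True
```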

Higher scores for the EQ-5D-5L, ICECAP-A and FOSQ-10 indicated better outcomes, whereas the converse was true for the ESS and the PSQI.

Conceptual overlap between instruments

The dimensions/items of all instruments were compared using the ICF to assess the conceptual overlap between the instruments [24]. The ICF is a multipurpose and comprehensive framework used to classify health and disability concepts within a biopsychosocial model of health, functioning, and disability [24]. Using the ICF framework, the instrument dimensions were categorised into two of four possible domains: (i) ‘body functions’, measuring impairments of (a) physiological and psychological functioning and (b) anatomical functioning of body systems; and (ii) ‘activities and participation’, measuring the full range of life areas, including the execution of tasks and the management of life situations [24]. Both domains are broken down into chapters, which are further broken down into categories.

Statistical analysis

Descriptive statistics (means, standard deviations, medians, interquartile ranges, frequencies) were generated. The distributions of the dimension/total scores of all QoL instruments were tested for normality using the Shapiro-Francia test [39]. All instrument dimension/total scores followed a non-normal distribution (Shapiro-Francia test, p < 0.05); therefore, non-parametric methods were applied (Wilcoxon-Mann-Whitney and Kruskal-Wallis tests, and Spearman’s correlation). Differences in dimension/total scores by demographics (age band, gender, living arrangements, educational attainment) and self-rated QoL were tested using the Kruskal-Wallis test.

Convergent validity between the dimension/total scores of all instruments was assessed using Kendall’s Tau-B rank correlation, which is recommended for ordinal categorical data [40], with significance set at the 5% level. Correlations below 0.30 were considered weak, those between 0.30 and 0.50 moderate, and those above 0.50 strong. Strong absolute correlations between instruments, indicative of convergent validity, suggested that they were measuring similar constructs [40]. We hypothesised that strong convergent validity would exist between items or dimensions belonging to the same ICF domains. Divergent validity was also assessed using Kendall’s Tau-B correlation. We hypothesised that divergent validity would be confirmed if items/dimensions belonging to different ICF domains had lower (or no statistically significant) correlations compared with those belonging to the same ICF chapter.
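Kendall’s Tau-B and the strength bands used in this study can be sketched in Python as follows. This is a plain O(n²) pair count rather than an optimised implementation: concordant minus discordant pairs, divided by the geometric mean of the numbers of pairs untied on each variable. Because the ESS and PSQI are scored so that higher means worse, their correlations with higher-is-better scores are expected to be negative, so the bands are applied to the absolute value. The example responses are hypothetical; in practice `scipy.stats.kendalltau` computes tau-b by default and also returns a p-value.

```python
from math import sqrt
from typing import Sequence


def _sign(v: float) -> int:
    return (v > 0) - (v < 0)


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-b by direct pair counting; ties shrink the denominator."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    s = 0         # concordant minus discordant pairs
    untied_x = 0  # pairs not tied on x
    untied_y = 0  # pairs not tied on y
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = _sign(x[i] - x[j]), _sign(y[i] - y[j])
            s += dx * dy
            untied_x += dx != 0
            untied_y += dy != 0
    return s / sqrt(untied_x * untied_y)


def strength(tau: float) -> str:
    """Bands from this study: <0.30 weak, 0.30-0.50 moderate, >0.50 strong."""
    a = abs(tau)
    if a < 0.30:
        return "weak"
    if a <= 0.50:
        return "moderate"
    return "strong"


# Hypothetical ESS totals (higher = worse) and FOSQ-10 totals (higher = better)
ess = [3, 12, 7, 15, 5, 9, 2, 11]
fosq = [19, 12, 16, 10, 17, 15, 19, 13]
tau = kendall_tau_b(ess, fosq)
print(round(tau, 2), strength(tau))
```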

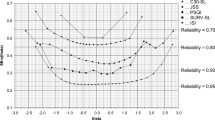

Exploratory factor analysis (EFA) was conducted using polychoric correlation matrices and iterative principal factor extraction to accommodate the ordinal and multivariate non-normal nature of the data (Mardia’s kurtosis p < 0.001 [41]). Minimum average partials (MAP) [42] and Horn’s parallel analysis (PA) [43] were used to determine the optimal number of factors to retain. Promax oblique rotation with a kappa value of 3 was used to ensure realistic and statistically sound factor structures [44, 45]. The threshold for salient loadings was set at 0.32, as recommended in the literature [46]. Loading magnitudes were interpreted as follows: ≥ 0.32 to < 0.45 poor, ≥ 0.45 to < 0.55 fair, ≥ 0.55 to < 0.63 good, ≥ 0.63 to < 0.70 very good, and ≥ 0.70 excellent [47]. All candidate models were judged on their interpretability and theoretical sense to identify the most acceptable solution [48, 49]. Four sensitivity analyses tested the robustness of the results by comparing the correlation matrix used (polychoric vs Pearson’s), the factor extraction method (iterative principal factor vs maximum likelihood), the rotation method (promax vs oblimin), and the influence of outliers (identified using Mahalanobis distance, D² [50]).
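Horn’s parallel analysis retains factors whose observed eigenvalues exceed those expected from random data of the same size. The numpy sketch below is a simplified variant under stated assumptions: it uses Pearson correlations and unreduced eigenvalues (principal-components style) with a 95th-percentile criterion, whereas this study worked from polychoric correlations in Stata, and MAP is not shown. The data are synthetic, built with two known factors, so the expected answer is 2.

```python
import numpy as np


def parallel_analysis(data: np.ndarray, n_iter: int = 100,
                      percentile: float = 95.0, seed: int = 0) -> int:
    """Count leading eigenvalues of the observed correlation matrix that
    exceed the chosen percentile of eigenvalues from uncorrelated normal
    data with the same number of rows and columns."""
    n_obs, n_vars = data.shape
    observed = np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))[::-1]
    rng = np.random.default_rng(seed)
    random_eigs = np.empty((n_iter, n_vars))
    for k in range(n_iter):
        noise = rng.standard_normal((n_obs, n_vars))
        random_eigs[k] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)
    retained = 0
    for obs, thr in zip(observed, threshold):
        if obs <= thr:
            break  # stop at the first eigenvalue that does not beat chance
        retained += 1
    return retained


# Synthetic check: two latent factors, each driving five of ten indicators.
rng = np.random.default_rng(42)
factors = rng.standard_normal((500, 2))
loadings = np.zeros((2, 10))
loadings[0, :5] = 0.8
loadings[1, 5:] = 0.8
X = factors @ loadings + 0.6 * rng.standard_normal((500, 10))
print(parallel_analysis(X))  # expected: 2
```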

There was no missing data to account for in our analyses. The assumed threshold for statistical significance in all analyses was 5% [51], and Stata version 15.1 (StataCorp, TX) was used to conduct all analyses.

Ethical approval

This study adhered to the ethics guidelines set forth by the 1964 Helsinki Declaration and received approval from the Flinders University Social and Behavioural Ethics Committee.

Consent

Informed consent was obtained from all individuals included in this study.

Results

Cohort selection is depicted in Fig. 1. Of the initial PureProfile panellist population (n = 5666), 1737 were eligible. The primary reason for exclusion was not having had a sleep disorder (n = 3488). After pre-determined quotas were reached, 1509 eligible panellists completed the survey. There were no partial completions or missing data.

Sample characteristics

Participant characteristics are presented in Table 1. Participants’ ages ranged from 18 to 86 years (mean 46 and median 45). The sample consisted of nearly equal numbers of males and females. Self-rated QoL was indicated as average, good, or very good by 80.7% of participants. The frequency of self-reported sleep disorders is reported in Table 2. Bruxism was most commonly reported (n = 569, 37.7%), followed by insomnia (n = 553, 36.7%) and OSA (n = 467, 31.0%). Narcolepsy was least frequently reported (n = 41, 2.7%).

Quality of life instrument scores

The distribution of total (ESS, FOSQ-10, PSQI) and utility (EQ-5D-5L, ICECAP-A) scores across participant characteristics is shown in Table 1. Median (IQR) EQ-5D-5L and ICECAP-A utility scores were 0.89 (0.78–0.96) and 0.85 (0.69–0.94), respectively. Median (IQR) total scores on the ESS, FOSQ-10, and PSQI were 6 (3–9), 35 (28–38), and 9 (6–12), respectively. For all five instruments, there was a statistically significant relationship between utility/total score and self-rated QoL, suggesting that all instruments were able to discriminate according to self-rated QoL (Kruskal-Wallis test, p < 0.01). The distributions of utility/total scores for all instruments across all dimension levels of the EQ-5D-5L and ICECAP-A are presented in supplementary Tables S1 and S2.

Conceptual overlap between instruments

Table 3 presents the conceptual overlap, according to the ICF framework, between all instrument items/dimensions. When the generic instruments were compared with the sleep-specific instruments, the most overlap between the two types of instrument was seen in their capture of the ICF ‘activities and participation’ domain. Three of the five (60%) EQ-5D-5L dimensions (‘usual activities’, ‘mobility’, and ‘self-care’) and all the ICECAP-A dimensions captured this domain. In comparison, all the ESS items, eight of the 10 (80%) FOSQ-10 items (i.e., all except ‘concentration’ and ‘remembering’), and two of the seven (29%) PSQI components (‘daytime dysfunction’ and ‘use of sleep medication’) also captured this domain. There was, however, less overlap in the way the two types of instrument captured the ICF ‘body functions’ domain. Only two of five (40%) EQ-5D-5L dimensions (‘anxiety/depression’ and ‘pain/discomfort’) and none of the ICECAP-A dimensions captured this domain. In contrast, all the ESS and FOSQ-10 items, and six of the seven (86%) PSQI components (i.e., all except ‘use of sleep medication’), captured this domain.

Convergent and divergent validity

Results of the validity assessment between the dimension and item scores of all instruments are presented in supplementary Table S4. Although correlations between items/dimensions of different instruments hypothesised to measure similar constructs were in the expected direction and statistically significant in most cases, they were weak to moderate at best. Between the EQ-5D-5L or ICECAP-A and all sleep-specific instruments, statistically significant absolute correlations ranged from 0.06 (‘car passenger’ [ESS] vs ‘pain’ [EQ-5D-5L]) to 0.35 (‘daytime dysfunction’ [PSQI] vs ‘anxiety/depression’ [EQ-5D-5L]). The EQ-5D-5L and FOSQ-10 shared the largest number of moderate correlations. Indicative of poor divergent validity, correlation coefficients between items/dimensions of generic versus sleep-specific instruments hypothesised to belong to different ICF domains ranged from 0.05 (‘sleep duration’ [PSQI] vs ‘usual activities’ [EQ-5D-5L]) to 0.33 (‘sleep quality’ [PSQI] vs ‘enjoyment’ [ICECAP-A]) (Kendall’s Tau-B, p < 0.05).

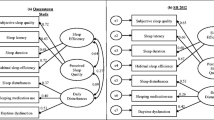

Exploratory factor analysis

EFA results are shown in Table 4, depicting the loadings of instrument items/dimensions onto the latent factors. Parallel analysis suggested retaining 5 factors, whereas MAP suggested retaining 6 (supplementary Tables S5 and S6). The root mean squared residual (RMSR) indicated a close model fit (RMSR = 0.041). Comparison of all candidate models showed that a 5-factor solution had fewer cross-loadings than a 6-factor solution, and removing Factor 6 did not change the RMSR, suggesting that the 5-factor solution was simpler. Before rotation, Factors 1 and 2 explained 37.1% and 11.4% of total variance, respectively, while Factors 3, 4, and 5 collectively explained 14.5%. Nearly two-thirds (64.9%) of salient loadings were excellent in magnitude. All FOSQ-10 items had salient loadings of excellent magnitude on Factor 1. In contrast, PSQI ‘daytime dysfunction’ loaded on Factor 1 with fair magnitude, and ESS items ‘sitting and talking’ and ‘in a car’ with poor magnitude. Only ESS items loaded on Factor 2, with 5 of 8 items of excellent magnitude. Factor 3 was loaded on by all ICECAP-A dimensions (4 of 5 with excellent magnitude) and by one EQ-5D-5L dimension (‘anxiety/depression’) with fair magnitude. The ICECAP-A dimension ‘autonomy’ cross-loaded on Factors 3 and 4 with poor and fair magnitude. All EQ-5D-5L dimensions except ‘anxiety/depression’ loaded saliently on Factor 4 with good or excellent magnitude. All PSQI components except ‘use of sleep medication’ and ‘daytime dysfunction’ loaded saliently on Factor 5, with ‘sleep quality’, ‘sleep duration’, and ‘sleep efficiency’ loading with very good to excellent magnitude. PSQI ‘use of sleep medication’ was the only dimension/item not meeting the salient loading threshold.
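The salient-loading threshold and magnitude bands from the Methods can be applied to a pattern-matrix row as in the following Python sketch, which also flags cross-loadings (salient loadings on more than one factor). The loadings in the example are hypothetical values chosen to mimic the poor/fair cross-loading pattern described for ‘autonomy’; they are not the study’s estimates.

```python
from typing import Dict, Optional

SALIENT = 0.32
# (lower bound, label) pairs from the Methods, checked from the top down
BANDS = [(0.70, "excellent"), (0.63, "very good"), (0.55, "good"),
         (0.45, "fair"), (0.32, "poor")]


def loading_magnitude(loading: float) -> Optional[str]:
    """Label |loading| using the study's bands; None if not salient."""
    a = abs(loading)
    for lower, label in BANDS:
        if a >= lower:
            return label
    return None


def salient_factors(item_loadings: Dict[str, float]) -> Dict[str, str]:
    """Factors on which an item loads saliently; more than one = cross-loading."""
    return {factor: loading_magnitude(value)
            for factor, value in item_loadings.items() if abs(value) >= SALIENT}


# Hypothetical pattern-matrix row (not the study's estimates)
row = {"F1": 0.05, "F2": -0.02, "F3": 0.41, "F4": 0.47, "F5": 0.10}
print(salient_factors(row))  # {'F3': 'poor', 'F4': 'fair'}: a cross-loading item
```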

Sensitivity analysis

Comparing the polychoric and Pearson’s correlation matrices used for EFA, the determinant, Bartlett’s test of sphericity, and Kaiser-Meyer-Olkin (KMO) results (Tables 4 and S7) show that either correlation matrix was similarly appropriate. Factor extraction using maximum likelihood was similarly parsimonious to iterative principal factor extraction: before rotation, Factors 1 and 2 together explained 48.2% and 48.5% of total variance under maximum likelihood and iterative principal factor extraction, respectively, with similar uniqueness values. Promax and oblimin rotated solutions shared the same factor loadings and loading magnitudes. Based on the critical value (D² = 59.7) with 30 degrees of freedom and the conservative p < 0.001 significance level, 146 observations were identified as potential outliers. EFA excluding outliers showed the same close fit (RMSR = 0.041) and the same pattern of salient loadings, of similar magnitude (supplementary Table S8), and was also robust to extraction and rotation methods. Therefore, our EFA results were robust across correlation matrices, extraction methods, rotation methods, and outliers.
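The outlier screen can be sketched with numpy as below, assuming D² is measured from the sample mean using the inverse of the sample covariance matrix. The cut-off of 59.7 is the critical value reported above, which corresponds to the 0.999 quantile of a chi-squared distribution with 30 degrees of freedom. The data are synthetic: 500 standard-normal rows plus one injected extreme row.

```python
import numpy as np

D2_CRITICAL = 59.7  # from the text: 30 degrees of freedom, p < 0.001


def mahalanobis_d2(X: np.ndarray) -> np.ndarray:
    """Squared Mahalanobis distance of each row from the sample mean."""
    centred = X - X.mean(axis=0)
    inv_cov = np.linalg.pinv(np.cov(X, rowvar=False))
    # Row-wise quadratic form: centred[i] @ inv_cov @ centred[i]
    return np.einsum("ij,jk,ik->i", centred, inv_cov, centred)


rng = np.random.default_rng(1)
X = rng.standard_normal((500, 30))
X = np.vstack([X, np.full((1, 30), 4.0)])  # injected extreme row at index 500
d2 = mahalanobis_d2(X)
flagged = np.flatnonzero(d2 > D2_CRITICAL)
print(500 in flagged, len(flagged))
```

Re-running the EFA on the rows not in `flagged` is then the comparison described in the paragraph above.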

Discussion

This study is the first to report an empirical comparison of two generic and three sleep-specific metrics using data from a sample of the Australian general population previously diagnosed with a sleep disorder(s). The weak to moderate convergence between generic and sleep-specific instruments supports using them in parallel, rather than as alternatives, within an economic evaluation. Our results suggest that, owing to their wider coverage of QoL concepts as summarised by the ICF framework, the EQ-5D-5L combined with either the ESS or the PSQI is the most appropriate choice among the instruments studied for economic evaluations and similar sleep evaluations, with the choice between the ESS and PSQI depending on context, content coverage, and latent constructs.

Based on the ICF chapters on which instrument items/dimensions were hypothesised to load, statistically significant correlations between items/dimensions measuring the same constructs were moderate at best. The weak to moderate convergence observed between items/dimensions of generic and sleep-specific instruments supports the practice of using both types of instrument in parallel [14] to adequately measure QoL in sleep disorders in general within economic evaluation. One explanation for the weak to moderate correlations is that the ICF framework may not adequately capture unaccounted-for, unrecognised, and/or unmeasured latent constructs shared between items/dimensions [52]. For example, ‘sleep quality’ (PSQI) is related not only to ‘anxiety/depression’ (EQ-5D-5L) but also to several confounding external factors, including the physical and social environment [53, 54] and comorbid chronic conditions [55]. Consequently, the strength of association between two items/dimensions hypothesised to measure the same construct is reduced by confounding from unaccounted-for variables. Similar findings have been reported elsewhere [56, 57].

The robust 5-factor EFA solution showed that each instrument loaded primarily onto its own factor, with the exceptions of ‘anxiety/depression’ (EQ-5D-5L) and ‘daytime dysfunction’ (PSQI). Factor 1 was loaded on by all FOSQ-10 items, 2 ESS items, and 1 PSQI component. Given that these items primarily measure daytime functional limitations arising from somnolence or poor sleep, Factor 1 can be characterised as ‘daytime dysfunction’. Loading onto Factor 2 was exclusive to ESS items, which Johns [37] posited represent ‘somnificity’. However, the cross-loadings of items 6 (‘sitting and talking’) and 8 (‘in a car’) suggest that a single-factor solution is inadequate to describe the latent constructs of the ESS, as also shown elsewhere [58]. In fact, Smith et al. [58] found items 6 and 8 of the ESS to be poor measures of the single ‘somnificity’ construct, with few participants reporting ‘a high chance of dozing’ in these situations. Therefore, an alternative characterisation of Factor 2 is required. Evidence suggests that the subjective daytime symptoms of OSA, as measured by the ESS [37], are better conceptualised as ‘fatigue’ than as ‘somnificity’ or sleep propensity [58, 59]; hence ‘fatigue’ is a better descriptor of Factor 2. Factor 3 can be characterised as measuring ‘wellbeing’, a broader concept capturing attributes of QoL that reflects the item loadings [60] and is in line with the development approach of the ICECAP-A [15]. The cross-loading of ‘autonomy’ (ICECAP-A) onto Factors 3 and 4 supports the position [60] that not all aspects of Factor 3 are ‘psychosocial’, as posited by Davis et al. [61]. Given the dominance of items assessing physical functioning among those loading onto Factor 4, it can be characterised as measuring ‘physical health’. These findings and characterisations are commensurate with previous EFAs comparing the EQ-5D-3L with the ICECAP-A [60] and ICECAP-O [61].
Further, the factor loadings of the EQ-5D-5L in this study were the same as those reported for the EQ-5D-3L [60, 61], suggesting that the additional dimension levels do not change the instrument’s measurement of latent constructs. All PSQI components requiring a subjective assessment of sleep loaded onto Factor 5, so it is plausible to characterise this factor as ‘perceived sleep quality’. The failure of ‘use of sleep medication’ to load saliently onto any factor is consistent with another EFA [62].

In the absence of a ‘gold standard’ instrument capturing relevant attributes of QoL in sleep disorders, the combination of instruments used within economic evaluations in general sleep disorder populations should be determined by study aim(s), content coverage, and latent constructs. Economic evaluation results should enable standardised comparisons with non-sleep disorders, as in a CUA or cost-effectiveness analysis (CEA). Where utility scores are required, as in a CUA, the EQ-5D-5L is one of the instruments recommended by decision-making bodies such as NICE and MSAC [9, 10]. While the EQ-5D-5L remains the most applied instrument in economic evaluations of sleep interventions, the ICECAP-A would be more appropriate where there is a need to reflect changes in wellbeing-related capability. However, some measurement properties of generic QoL instruments in sleep disorder populations have yet to be demonstrated. Therefore, a complementary sleep-specific instrument should be used in parallel, and ICF framework coverage and latent constructs are additional considerations informing instrument choice. The ICF ‘activities and participation’ domain was captured more often than the ‘body functions’ domain. Of the generic EQ-5D-5L and ICECAP-A, the former covers more of the ICF framework (measuring two ICF domains) and two latent constructs (Factors 3 [‘wellbeing’] and 4 [‘physical health’]), suggesting broader coverage of QoL aspects. Of the sleep-specific instruments, the ESS covered the ICF framework most broadly, but the PSQI better measured the ‘body functions’ domain. The latent constructs show that ‘daytime dysfunction’ (Factor 1) is common to all sleep-specific instruments, whereas the ESS and PSQI each measure one additional factor, ‘fatigue’ (Factor 2) and ‘perceived sleep quality’ (Factor 5), respectively.
Within sleep disorder cohorts in general, the combination of generic and sleep-specific instruments should be guided by the context in which they will be applied. From the selection of instruments used in this study, a full economic evaluation should employ the EQ-5D-5L as the generic instrument to allow QALY calculation. The accompanying sleep-specific instrument depends on the aspects of QoL that need to be measured, with the ESS offering the broadest content coverage and measurement of latent constructs. However, the PSQI would be more appropriate where measuring ‘body functions’ is important.

Several limitations of this study merit note. The data were collected using a cross-sectional design; future research should consider a longitudinal design. Such a design would allow evaluation of which instruments are most responsive and best capture clinically important changes over time, supporting the assessment of incremental effectiveness in economic evaluation [63, 64]. Further, comparisons should be expanded to include other generic and/or sleep-specific instruments that may be more applicable in the assessment of sleep health interventions. For example, the newly developed SF-6Dv2 [65] has shown validity comparable to that of the EQ-5D-5L [66] but is more sensitive to clinical improvements in patients with OSA [67], and a recently derived value set enables the calculation of QALYs for health technology assessment [68]. Because our results are based on a nationally representative sample of Australians who self-reported having a sleep disorder, they should be extrapolated to other jurisdictions with caution, and replication of this study in other countries is warranted.

Conclusion

In the absence of a gold standard, neither a generic nor a sleep-specific instrument alone is sufficient for measuring outcomes in general populations diagnosed with a sleep disorder(s) within economic or other evaluations. Therefore, a combination of both is required, and the context of the research should guide the choice of instruments.

Data availability

The dataset analysed in support of the conclusions of this study is not publicly available but, upon reasonable request to the corresponding author, may be made available in accordance with the ethical approval provided by Flinders University Social and Behavioural Ethics Committee.

References

Streatfeild, J., Smith, J., Mansfield, D., Pezzullo, L., & Hillman, D. (2021). The social and economic cost of sleep disorders. Sleep, 44(11), zsab132. https://doi.org/10.1093/sleep/zsab132

Benjafield, A. V., Ayas, N. T., Eastwood, P. R., Heinzer, R., Ip, M. S. M., Morrell, M. J., Nunez, C. M., Patel, S. R., Penzel, T., Pépin, J.-L., Peppard, P. E., Sinha, S., Tufik, S., Valentine, K., & Malhotra, A. (2019). Estimation of the global prevalence and burden of obstructive sleep apnoea: A literature-based analysis. The Lancet Respiratory Medicine, 7(8), 687–698. https://doi.org/10.1016/S2213-2600(19)30198-5

Morin, C. M., Jarrin, D. C., Ivers, H., Mérette, C., LeBlanc, M., & Savard, J. (2020). Incidence, persistence, and remission rates of insomnia over 5 years. JAMA Network Open, 3(11), e2018782. https://doi.org/10.1001/jamanetworkopen.2020.18782

Kim, D. H., Kim, B., Han, K., & Kim, S. W. (2021). The relationship between metabolic syndrome and obstructive sleep apnea syndrome: A nationwide population-based study. Scientific Reports, 11(1), 8751. https://doi.org/10.1038/s41598-021-88233-4

Darchia, N., Oniani, N., Sakhelashvili, I., Supatashvili, M., Basishvili, T., Eliozishvili, M., Maisuradze, L., & Cervena, K. (2018). Relationship between sleep disorders and health-related quality of life: Results from the Georgia SOMNUS study. International Journal of Environmental Research and Public Health, 15(8), 1588. https://doi.org/10.3390/ijerph15081588

von Schantz, M., Ong, J. C., & Knutson, K. L. (2021). Associations between sleep disturbances, diabetes and mortality in the UK Biobank cohort: A prospective population-based study. Journal of Sleep Research, 30(6), e13392. https://doi.org/10.1111/jsr.13392

Huyett, P., Siegel, N., & Bhattacharyya, N. (2021). Prevalence of sleep disorders and association with mortality: Results from the NHANES 2009–2010. The Laryngoscope, 131(3), 686–689. https://doi.org/10.1002/lary.28900

Wickwire, E. M., Albrecht, J. S., Towe, M. M., Abariga, S. A., Diaz-Abad, M., Shipper, A. G., Cooper, L. M., Assefa, S. Z., Tom, S. E., & Scharf, S. M. (2019). The impact of treatments for OSA on monetized health economic outcomes: A systematic review. Chest, 155(5), 947–961. https://doi.org/10.1016/j.chest.2019.01.009

Harris, A. H., & Bulfone, L. (2005). Getting value for money: The Australian experience. In T. S. Jost (Ed.), Health care coverage determinations: An international comparative study (1st ed., pp. 25–56). Open University Press.

National Institute for Clinical Excellence. (2004). Guide to the methods of technology appraisal.

Sforza, E., Janssens, J. P., Rochat, T., & Ibanez, V. (2003). Determinants of altered quality of life in patients with sleep-related breathing disorders. European Respiratory Journal, 21(4), 682. https://doi.org/10.1183/09031936.03.00087303

Moyer, C. A., Sonnad, S. S., Garetz, S. L., Helman, J. I., & Chervin, R. D. (2001). Quality of life in obstructive sleep apnea: A systematic review of the literature. Sleep Medicine, 2(6), 477–491. https://doi.org/10.1016/S1389-9457(01)00072-7

Herdman, M., Gudex, C., Lloyd, A., Janssen, M., Kind, P., Parkin, D., Bonsel, G., & Badia, X. (2011). Development and preliminary testing of the new five-level version of EQ-5D (EQ-5D-5L). Quality of Life Research, 20(10), 1727–1736. https://doi.org/10.1007/s11136-011-9903-x

Kaambwa, B., Woods, T.-J., Natsky, A., Bulamu, N., Mpundu-Kaambwa, C., Loffler, K. A., Sweetman, A., Catcheside, P. G., Reynolds, A. C., Adams, R., & Eckert, D. J. (2024). Content comparison of quality-of-life instruments used in economic evaluations of sleep disorder interventions: A systematic review. PharmacoEconomics. https://doi.org/10.1007/s40273-023-01349-5

Al-Janabi, H., Flynn, T. N., & Coast, J. (2012). Development of a self-report measure of capability wellbeing for adults: The ICECAP-A. Quality of Life Research, 21(1), 167–176. https://doi.org/10.1007/s11136-011-9927-2

Bertram, W., Penfold, C., Glynn, J., Johnson, E., Burston, A., Rayment, D., Howells, N., White, S., Wylde, V., Gooberman-Hill, R., Blom, A., & Whale, K. (2024). REST: A preoperative tailored sleep intervention for patients undergoing total knee replacement - feasibility study for a randomised controlled trial. BMJ Open, 14(3), e078785. https://doi.org/10.1136/bmjopen-2023-078785

O’Toole, S., Moazzez, R., Wojewodka, G., Zeki, S., Jafari, J., Hope, K., Brand, A., Hoare, Z., Scott, S., Doungsong, K., Ezeofor, V., Edwards, R. T., Drakatos, P., & Steier, J. (2023). Single-centre, single-blinded, randomised, parallel group, feasibility study protocol investigating if mandibular advancement device treatment for obstructive sleep apnoea can reduce nocturnal gastro-oesophageal reflux (MAD-Reflux trial). BMJ Open, 13(8), e076661. https://doi.org/10.1136/bmjopen-2023-076661

Brazier, J. E., Rowen, D., Lloyd, A., & Karimi, M. (2019). Future directions in valuing benefits for estimating QALYs: Is time up for the EQ-5D? Value in Health, 22(1), 62–68. https://doi.org/10.1016/j.jval.2018.12.001

Reimer, M. A., & Flemons, W. W. (2003). Quality of life in sleep disorders. Sleep Medicine Reviews, 7(4), 335–349. https://doi.org/10.1053/smrv.2001.0220

Lee, S., Kim, J. H., & Chung, J. H. (2021). The association between sleep quality and quality of life: A population-based study. Sleep Medicine, 84, 121–126. https://doi.org/10.1016/j.sleep.2021.05.022

Uchmanowicz, I., Markiewicz, K., Uchmanowicz, B., Kołtuniuk, A., & Rosińczuk, J. (2019). The relationship between sleep disturbances and quality of life in elderly patients with hypertension. Clinical Interventions in Aging, 14, 155–165. https://doi.org/10.2147/CIA.S188499

Brazier, J., Deverill, M., & Green, C. (1999). A review of the use of health status measures in economic evaluation. Journal of Health Services Research & Policy, 4(3), 174–184.

Wiebe, S., Guyatt, G., Weaver, B., Matijevic, S., & Sidwell, C. (2003). Comparative responsiveness of generic and specific quality-of-life instruments. Journal of Clinical Epidemiology, 56(1), 52–60. https://doi.org/10.1016/S0895-4356(02)00537-1

World Health Organization. (2002). Towards a common language for functioning, disability and health: ICF. The International Classification of Functioning. World Health Organization.

Streiner, D. L., & Norman, G. R. (2008). Health Measurement Scales: A practical guide to their development and use. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199231881.001.0001

Feng, Y.-S., Kohlmann, T., Janssen, M. F., & Buchholz, I. (2021). Psychometric properties of the EQ-5D-5L: A systematic review of the literature. Quality of Life Research, 30(3), 647–673. https://doi.org/10.1007/s11136-020-02688-y

Janssen, M. F., Pickard, A. S., Golicki, D., Gudex, C., Niewada, M., Scalone, L., Swinburn, P., & Busschbach, J. (2013). Measurement properties of the EQ-5D-5L compared to the EQ-5D-3L across eight patient groups: A multi-country study. Quality of Life Research, 22(7), 1717–1727. https://doi.org/10.1007/s11136-012-0322-4

Norman, R., Mulhern, B., Lancsar, E., Lorgelly, P., Ratcliffe, J., Street, D., & Viney, R. (2023). The Use of a Discrete Choice Experiment Including Both Duration and Dead for the Development of an EQ-5D-5L Value Set for Australia. PharmacoEconomics, 41(4), 427–438. https://doi.org/10.1007/s40273-023-01243-0

Flynn, T. N., Huynh, E., Peters, T. J., Al-Janabi, H., Clemens, S., Moody, A., & Coast, J. (2015). Scoring the ICECAP-A capability instrument: Estimation of a UK general population tariff. Health Economics, 24(3), 258–269. https://doi.org/10.1002/hec.3014

Weaver, T. E., Laizner, A. M., Evans, L. K., Maislin, G., Chugh, D. K., Lyon, K., Smith, P. L., Schwartz, A. R., Redline, S., Pack, A. I., & Dinges, D. F. (1997). An instrument to measure functional status outcomes for disorders of excessive sleepiness. Sleep, 20(10), 835–843.

Alessi, C. A., Fung, C. H., Dzierzewski, J. M., Fiorentino, L., Stepnowsky, C., Rodriguez Tapia, J. C., Song, Y., Zeidler, M. R., Josephson, K., Mitchell, M. N., Jouldjian, S., & Martin, J. L. (2021). Randomized controlled trial of an integrated approach to treating insomnia and improving the use of positive airway pressure therapy in veterans with comorbid insomnia disorder and obstructive sleep apnea. Sleep, 44(4), zsaa235. https://doi.org/10.1093/sleep/zsaa235

Weaver, T. E., Drake, C. L., Benes, H., Stern, T., Maynard, J., Thein, S. G., Andry, J. M., Sr., Hudson, J. D., Chen, D., Carter, L. P., Bron, M., Lee, L., Black, J., & Bogan, R. K. (2020). Effects of Solriamfetol on quality-of-life measures from a 12-week phase 3 randomized controlled trial. Annals of the American Thoracic Society, 17(8), 998–1007. https://doi.org/10.1513/AnnalsATS.202002-136OC

Weaver, T. E., Menno, D. M., Bron, M., Crosby, R. D., Morris, S., & Mathias, S. D. (2021). Determination of thresholds for minimally important difference and clinically important response on the functional outcomes of sleep questionnaire short version in adults with narcolepsy or obstructive sleep apnea. Sleep and Breathing, 25(3), 1707–1715. https://doi.org/10.1007/s11325-020-02270-3

Chasens, E. R., Ratcliffe, S. J., & Weaver, T. E. (2009). Development of the FOSQ-10: A short version of the Functional Outcomes of Sleep Questionnaire. Sleep, 32(7), 915–919. https://doi.org/10.1093/sleep/32.7.915

Kendzerska, T. B., Smith, P. M., Brignardello-Petersen, R., Leung, R. S., & Tomlinson, G. A. (2014). Evaluation of the measurement properties of the Epworth sleepiness scale: A systematic review. Sleep Medicine Reviews, 18(4), 321–331. https://doi.org/10.1016/j.smrv.2013.08.002

Qaseem, A., Holty, J. E., Owens, D. K., Dallas, P., Starkey, M., & Shekelle, P. (2013). Management of obstructive sleep apnea in adults: A clinical practice guideline from the American College of Physicians. Annals of Internal Medicine, 159(7), 471–483. https://doi.org/10.7326/0003-4819-159-7-201310010-00704

Johns, M. W. (1991). A new method for measuring daytime sleepiness: The Epworth sleepiness scale. Sleep, 14(6), 540–545. https://doi.org/10.1093/sleep/14.6.540

Buysse, D. J., Reynolds, C. F., Monk, T. H., Berman, S. R., & Kupfer, D. J. (1989). The Pittsburgh sleep quality index: A new instrument for psychiatric practice and research. Psychiatry Research, 28(2), 193–213. https://doi.org/10.1016/0165-1781(89)90047-4

Shapiro, S. S., & Francia, R. S. (1972). An approximate analysis of variance test for normality. Journal of the American Statistical Association, 67(337), 215–216. https://doi.org/10.1080/01621459.1972.10481232

Tinetti, M. E., Doucette, J., Claus, E., & Marottoli, R. (1995). Risk factors for serious injury during falls by older persons in the community. Journal of the American Geriatrics Society, 43(11), 1214–1221. https://doi.org/10.1111/j.1532-5415.1995.tb07396.x

Flora, D. B., & Curran, P. J. (2004). An empirical evaluation of alternative methods of estimation for confirmatory factor analysis with ordinal data. Psychological Methods, 9(4), 466–491. https://doi.org/10.1037/1082-989x.9.4.466

Velicer, W. F. (1976). Determining the number of components from the matrix of partial correlations. Psychometrika, 41(3), 321–327. https://doi.org/10.1007/BF02293557

Horn, J. L. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika, 30(2), 179–185. https://doi.org/10.1007/BF02289447

Gorsuch, R. L. (1983). Factor analysis (2nd ed.). Psychology Press.

Schmitt, T. A. (2011). Current methodological considerations in exploratory and confirmatory factor analysis. Journal of Psychoeducational Assessment, 29(4), 304–321. https://doi.org/10.1177/0734282911406653

Norman, G. R., & Streiner, D. L. (2014). Biostatistics: The bare essentials (4th ed.). BC Decker.

Comrey, A. L., & Lee, H. B. (1992). A first course in factor analysis (2nd ed.). Lawrence Erlbaum Associates Inc.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299. https://doi.org/10.1037/1082-989X.4.3.272

Flora, D. B. (2018). Statistical methods for the social and behavioural sciences: A model-based approach (1st ed.). Sage.

Mahalanobis, P. C. (1936). On the generalized distance in statistics. Proceedings of the National Institute of Sciences of India, 2(1), 49–55.

Tabachnick, B. G., & Fidell, L. S. (2007). Using multivariate statistics (5th ed.). Allyn & Bacon.

Lu, Y., & Fang, J. (2003). Advanced medical statistics. World Scientific Publishing Co. Pte. Ltd.

Alhasan, D. M., Gaston, S. A., Jackson, W. B., II, Williams, P. C., Kawachi, I., & Jackson, C. L. (2020). Neighborhood social cohesion and sleep health by age, sex/gender, and race/ethnicity in the United States. International Journal of Environmental Research and Public Health, 17(24), 9475. https://doi.org/10.3390/ijerph17249475

Billings, M. E., Hale, L., & Johnson, D. A. (2020). Physical and social environment relationship with sleep health and disorders. Chest, 157(5), 1304–1312. https://doi.org/10.1016/j.chest.2019.12.002

Koyanagi, A., Garin, N., Olaya, B., Ayuso-Mateos, J. L., Chatterji, S., Leonardi, M., Koskinen, S., Tobiasz-Adamczyk, B., & Haro, J. M. (2014). Chronic conditions and sleep problems among adults aged 50 years or over in nine countries: A multi-country study. PLoS ONE, 9(12), e114742. https://doi.org/10.1371/journal.pone.0114742

Coast, J., Peters, T. J., Natarajan, L., Sproston, K., & Flynn, T. (2008). An assessment of the construct validity of the descriptive system for the ICECAP capability measure for older people. Quality of Life Research, 17(7), 967–976. https://doi.org/10.1007/s11136-008-9372-z

Kaambwa, B., Gill, L., McCaffrey, N., Lancsar, E., Cameron, I. D., Crotty, M., Gray, L., & Ratcliffe, J. (2015). An empirical comparison of the OPQoL-Brief, EQ-5D-3L and ASCOT in a community dwelling population of older people. Health and Quality of Life Outcomes, 13(1), 164. https://doi.org/10.1186/s12955-015-0357-7

Smith, S. S., Oei, T. P. S., Douglas, J. A., Brown, I., Jorgensen, G., & Andrews, J. (2008). Confirmatory factor analysis of the Epworth Sleepiness Scale (ESS) in patients with obstructive sleep apnoea. Sleep Medicine, 9(7), 739–744. https://doi.org/10.1016/j.sleep.2007.08.004

Hossain, J. L., Ahmad, P., Reinish, L. W., Kayumov, L., Hossain, N. K., & Shapiro, C. M. (2005). Subjective fatigue and subjective sleepiness: Two independent consequences of sleep disorders? Journal of Sleep Research, 14(3), 245–253. https://doi.org/10.1111/j.1365-2869.2005.00466.x

Keeley, T., Coast, J., Nicholls, E., Foster, N. E., Jowett, S., & Al-Janabi, H. (2016). An analysis of the complementarity of ICECAP-A and EQ-5D-3L in an adult population of patients with knee pain. Health and Quality of Life Outcomes, 14, 36. https://doi.org/10.1186/s12955-016-0430-x

Davis, J. C., Liu-Ambrose, T., Richardson, C. G., & Bryan, S. (2013). A comparison of the ICECAP-O with EQ-5D in a falls prevention clinical setting: Are they complements or substitutes? Quality of Life Research, 22(5), 969–977. https://doi.org/10.1007/s11136-012-0225-4

Otte, J. L., Rand, K. L., Landis, C. A., Paudel, M. L., Newton, K. M., Woods, N., & Carpenter, J. S. (2015). Confirmatory factor analysis of the Pittsburgh Sleep Quality Index in women with hot flashes. Menopause, 22(11), 1190–1196. https://doi.org/10.1097/gme.0000000000000459

Drummond, M. F., Sculpher, M., O’Brien, B., Stoddart, G. L., & Torrance, G. W. (2005). Methods for the economic evaluation of health care programmes. Oxford University Press.

Guyatt, G. H., Deyo, R. A., Charlson, M., Levine, M. N., & Mitchell, A. (1989). Responsiveness and validity in health status measurement: A clarification. Journal of Clinical Epidemiology, 42(5), 403–408. https://doi.org/10.1016/0895-4356(89)90128-5

Brazier, J. E., Mulhern, B. J., Bjorner, J. B., Gandek, B., Rowen, D., Alonso, J., Vilagut, G., Ware, J. E., & SF-6D International Project Group. (2020). Developing a new version of the SF-6D health state classification system from the SF-36v2: SF-6Dv2. Medical Care, 58(6), 557–565. https://doi.org/10.1097/MLR.0000000000001325

Xie, S., Wang, D., Wu, J., Liu, C., & Jiang, W. (2022). Comparison of the measurement properties of SF-6Dv2 and EQ-5D-5L in a Chinese population health survey. Health and Quality of Life Outcomes, 20(1), 96. https://doi.org/10.1186/s12955-022-02003-y

Huber, F. L., Furian, M., Kohler, M., Latshang, T. D., Nussbaumer-Ochsner, Y., Turk, A., Schoch, O. D., Laube, I., Thurnheer, R., & Bloch, K. E. (2021). Health preference measures in patients with obstructive sleep apnea syndrome undergoing continuous positive airway pressure therapy: Data from a randomised trial. Respiration, 100(4), 328–338. https://doi.org/10.1159/000513306

Mulhern, B. J., Bansback, N., Norman, R., & Brazier, J. (2020). Valuing the SF-6Dv2 classification system in the United Kingdom using a discrete-choice experiment with duration. Medical Care, 58(6), 566–573. https://doi.org/10.1097/mlr.0000000000001324

Acknowledgements

The authors extend their heartfelt appreciation to the members of Flinders Health and Medical Research Institute (FHMRI) Sleep Health (formerly Adelaide Institute for Sleep Health, AISH) for their invaluable contribution in developing the quality of life survey employed for data collection in this study. Additionally, sincere appreciation is extended to the respondents who took the time to provide valuable responses to these questions, contributing significantly to the research effort.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

Billingsley Kaambwa contributed to study conception and study design. All authors contributed to material preparation and data collection. Taylor-Jade Woods contributed to data analysis and wrote the first draft of the manuscript. All authors reviewed and commented on, read, and approved all iterations of the manuscript.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to declare.

Ethics approval

The methods used in this study adhered to the ethics guidelines set forth by the institutional and/or national research committee, as well as the 1964 Helsinki Declaration and its subsequent revisions, or equivalent ethical standards. Approval for this study was granted by the Flinders University Social and Behavioural Ethics Committee.

Consent to participate

Informed consent was obtained from all individuals included in this study.

Consent to publish

Informed consent to publish was obtained from all individuals included in this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material accompanies the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Woods, TJ., Kaambwa, B. An empirical comparison of sleep-specific versus generic quality of life instruments among Australians with sleep disorders. Qual Life Res 33, 2261–2274 (2024). https://doi.org/10.1007/s11136-024-03686-0

DOI: https://doi.org/10.1007/s11136-024-03686-0