Abstract

We propose a valuation of a real option in the telecommunications industry. Following the probabilistic present worth approach, we estimate the value of a contract between a television network and a company willing to advertise its business on this network. We assume that the value of the contract depends on a time-dependent variable, the number of viewers tuned into the network, which behaves like a Markov process. After discretizing this number and converting it into a monetary value through a specific function, we compute the nth-order moment of the total discounted earnings. Knowledge of the moments, combined with the maximum-entropy approach, allows us to find the probability distribution of the payoff function and, consequently, the price of the real option. Finally, we apply the proposed model to real television audience data.

1 Introduction

The advertising industry plays a prominent role in driving consumer behaviour in several fields, e.g., food advertising (Harris et al. 2009) and public service advertising (O’Keefe and Reid 2020). In general, the literature is rich in studies analysing the relationship between advertising and sales, brand purchase choices, and consumer psychology (see, e.g., Raj 1982; Krishnamurthi and Raj 1985; Guadagni and Little 1983; Snyder and DeBono 1985; Putrevu 2001).

However, there are few quantitative studies specifically on advertising costs. For instance, Danaher and Rust (1996) treat advertising as an investment and find the level of expenditure that maximises the return on investment; this maximisation is constrained to a fixed budget and does not address the timing of an advertising campaign. Martín-Oliver and Salas-Fumás (2008) study how investment in advertising, together with other inputs such as labour, physical capital, and IT capital, affects the demand for deposit and loan bank services through a static model of profit maximisation. In contrast, we aim to estimate the value of a contract between a television network and a company willing to advertise its business on this network, without budget constraints and by valuing advertising airtime. To this end, we employ a real options approach, which is widely discussed in the literature (see, e.g., Lo and Lan 2008).

A key aspect of real option pricing is that replicating portfolios cannot be built, because the underlying assets are not tradable. However, it may be possible to relate the valuation of real projects to quoted assets with the same level of risk as the non-traded ones (see Borison 2005; Smith and Nau 1995). We can identify four main pricing methods. The first is the Black and Scholes (B&S) option pricing model (Black and Scholes 1973), applied to real options by McDonald and Siegel (1986). The second is the Binomial Option Pricing Model (BOPM), which restricts each movement of the underlying asset to two outcomes: up by a factor u or down by a factor d. The BOPM was introduced for financial options by Cox et al. (1979); in the real options setting, Kellogg and Charnes (2000) used decision trees and binomial lattice methods to value biotech companies as the sum of the values of their drug development programs, and Di Bari et al. (2023) use a binomial approach to study the impact of polarity score on real option valuation. The third approach is the Monte Carlo method for valuing options (see, e.g., Boyle et al. 1997; Glasserman 2004).Footnote 1 For the evaluation of real options with the Monte Carlo method, see in particular Abdel Sabour and Poulin (2006). Finally, the probabilistic present worth analysis, developed by Carmichael et al. (2011), computes the present value as the sum of all the discounted cash flows at each period using only expected values and variances. Moreover, Carmichael (2016) presented a complete collection of plain and compound real options.

Each of these pricing methods has strengths and weaknesses. For example, the B&S model has the following limitations: the arbitrage principle does not apply to real options, since real assets are not traded; geometric Brownian motion may be a suitable model for stock price movements, but its applicability to real assets is not straightforward (Damodaran 1999; Newton et al. 2001); volatility is difficult to compute in real options analysis (Amram and Kulatilaka 1998; Kodukula and Papudesu 2006; Lewis et al. 2008); unlike real options, financial options are usually exercised instantaneously (Damodaran 1999; Lewis et al. 2008); and while the decision pertaining to a financial option cannot change the value of the underlying asset, the same is not true for real options (Newton et al. 2001). The BOPM is useful for pricing vanilla options with early exercise opportunities because of its accuracy and rapid convergence, but it can be difficult to adapt to complex situations (Fadugba et al. 2012). Unlike the previous methods, the Monte Carlo method can handle options of any type and complexity; its weakness lies in the required computational effort, which can be substantial in complex situations. Finally, the probabilistic present worth approach can be used with any distribution of the asset value. However, it has been applied using only the first two moments, which can lead to biased estimates.

In our work, we adopt the present worth method to compute the value of a contract between a television network and a company willing to advertise on this network. Unlike the option valuation in Carmichael (2016), we employ a Markov reward process to model the number of viewers of the advertisement, thus introducing time dependence into the computation of the present value. Moreover, we do not limit the analysis to the expected values and variances of the cash flows, but also include higher moments. We then obtain the probability distribution with the maximum entropy approach of Mead and Papanicolaou (1984).

Specifically, we consider an option that, in exchange for a price paid today, gives its holder the right to decide at a future time whether or not to air the advertisement.

Our methodology is based on Markov chains because of their versatility and robustness in describing a variety of real-world problems (see, e.g., D’Amico and Villani 2021; Petronio et al. 2014; Kalligeris et al. 2021; De Blasis 2020). Other works that use Markov chains in the context of options are D’Amico (2006), D’Amico (2008), and Duan et al. (2003).

The contribution of this work is twofold. First, we propose a new methodology to price an option whose underlying asset is a television advertisement. Pricing this real option requires the expected value of the payoff function of a European call option written on the sum of discounted cash flows. To this end, we compute the moments of a general Markov reward process through new recursive equations. Second, by employing these moments as constraints in an entropy optimization problem, we derive the density function of the underlying asset.

The methodology is applied to advertising data freely provided on the Italian Auditel website. The dataset comprises aggregated data for eight time slots in each month. The application to real data shows that the results behave consistently under variations of the general parameters of the model. Moreover, the results become more accurate as higher-order moments are used, in contrast with the classical B&S model, which uses only two moments.

The paper is organised as follows. Section 2 presents the mathematical model for option pricing, Sect. 3 applies the model to a case study, and Sect. 4 concludes.

2 The model

We assume that company A wants to advertise its business and company B is a television network. To this end, they sign an option contract that gives company A the right to advertise its business on a television channel owned by company B. At time \(t=0\) the option has price P, and it can be exercised at the strike price K at a specific time \(t_0\). Exercising the option allows company A to air multiple advertisements at future times \(t_0,t_1,\ldots ,t_n\). Each airing generates a cash flow that depends on the number of viewers \(N(t_i)\) tuned in at time \(t_i\), through a function \(G:{\mathbb {N}}_0 \rightarrow {\mathbb {R}}^+\), so that the cash flow at \(t_i\) is \(G(N(t_i))\). The cash flows related to the option are illustrated in Fig. 1; note that the times \(t_i\) are not necessarily equally spaced.

The value of \(G(N(t_i))\), i.e., the monetary value attached to a given audience, depends on company A's own estimate.

Let r be a fixed discount rate.Footnote 2 We compute the sum of all the discounted cash flows as

and, since the considered option is a European call option, the payoff function is

\[ \max (Z-K,\,0). \]

Finally, we can compute the option price as

where \(f_Z\) is the density function of the random variable Z.
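A minimal numerical sketch of the three quantities just defined, under assumptions of our own that the text does not confirm: continuous discounting back to the exercise time \(t_0\), i.e. \(Z=\sum_i G(N(t_i))\,e^{-r(t_i-t_0)}\); the call payoff \(\max(Z-K,0)\); and a price equal to the expected payoff under a given density \(f_Z\) on a bounded support [a, b], with no further discounting from \(t_0\) back to time 0. The function names are hypothetical.

```python
import numpy as np

def discounted_sum(cash_flows, times, r):
    """Z = sum_i G(N(t_i)) * exp(-r (t_i - t_0)).
    Continuous discounting to the first time t_0 is an assumption of this sketch."""
    cash_flows = np.asarray(cash_flows, dtype=float)
    times = np.asarray(times, dtype=float)
    return float(np.sum(cash_flows * np.exp(-r * (times - times[0]))))

def call_payoff(z, strike):
    """European call payoff max(Z - K, 0), elementwise."""
    return np.maximum(np.asarray(z, dtype=float) - strike, 0.0)

def option_price(density, strike, a, b, n_points=20001):
    """Approximate int_a^b max(z - K, 0) f_Z(z) dz with the trapezoidal rule.
    `density` is any vectorised function returning f_Z on a grid."""
    z = np.linspace(a, b, n_points)
    y = call_payoff(z, strike) * density(z)
    # Trapezoidal rule written out explicitly.
    return float(np.sum((y[1:] + y[:-1]) * np.diff(z)) / 2.0)
```

For example, with a uniform density on [0, 1] and K = 0.5, `option_price` gives approximately 0.125, the exact value of \(\int_{0.5}^{1}(z-0.5)\,dz\).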

The density function \(f_Z\) is unknown. One way to recover it is the maximum entropy approach of Mead and Papanicolaou (1984), which requires knowledge of the moments \({\mathbb {E}}[Z^k]\), \(k \in {\mathbb {N}}\).

2.1 Method of moments

In the classical moment problem, a positive density \(f_Z(z)\) is sought from knowledge of its first \(N+1\) power moments. However, infinitely many densities share the same \(N+1\) moments. To overcome this issue, Mead and Papanicolaou (1984) proposed the maximum-entropy approach, which leads to the construction of a sequence of approximations. The solution to the problem coincides with the solution of the constrained maximization of the following function:

where a and b are the endpoints of the distribution support, \(\mu _k\), with \(0\le k\le N\), are the first \(N+1\) true moments, and \(\lambda _n\) are the Lagrange multipliers. Setting the partial derivatives equal to zero, \(\frac{\partial S}{\partial f_Z(z)}=0\) and \(\frac{\partial S}{\partial \lambda _k}=0\), the authors obtain the maximum-entropy density, with general solution

Now, to find the maximum entropy solution, they solved the following system of \(N+1\) equations,
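This system is solved numerically in practice. The following is a minimal sketch of the idea, not the authors' implementation and not PyMaxEnt's API: we assume the maximum-entropy density has the exponential-polynomial form \(f_Z(z)=\exp(-\sum_{k=0}^{N}\lambda_k z^k)\) on [a, b], approximate the integrals with Gauss-Legendre quadrature, and find the multipliers with a damped Newton iteration on the convex dual function \(\int_a^b \exp(-\sum_k\lambda_k z^k)\,dz+\sum_k\lambda_k\mu_k\), whose stationary point is exactly the moment-matching condition. The function names are hypothetical.

```python
import numpy as np

def quadrature(a, b, n_nodes=200):
    """Gauss-Legendre nodes and weights mapped from [-1, 1] to [a, b]."""
    x, w = np.polynomial.legendre.leggauss(n_nodes)
    return 0.5 * (b - a) * x + 0.5 * (a + b), 0.5 * (b - a) * w

def maxent_density(mu, a, b, tol=1e-10, max_iter=200):
    """Find f(z) = exp(-sum_k lam_k z^k) on [a, b] whose power moments
    int_a^b z^k f(z) dz, k = 0..N, equal mu[0..N] (mu[0] = 1 for a density)."""
    mu = np.asarray(mu, dtype=float)
    z, w = quadrature(a, b)
    V = np.vander(z, mu.size, increasing=True)      # V[:, k] = z^k
    lam = np.zeros(mu.size)

    def dual(l):
        # Convex dual function; its gradient vanishes exactly when the moments match.
        return w @ np.exp(-V @ l) + l @ mu

    for _ in range(max_iter):
        f = np.exp(-V @ lam)
        grad = mu - V.T @ (w * f)                   # target moments minus current moments
        if np.max(np.abs(grad)) < tol:
            break
        hess = V.T @ (V * (w * f)[:, None])         # int z^(j+k) f(z) dz, positive definite
        step = np.linalg.solve(hess, grad)
        t = 1.0
        while dual(lam - t * step) > dual(lam) + 1e-12 and t > 1e-8:
            t *= 0.5                                # backtracking line search
        lam = lam - t * step

    def density(x):
        return np.exp(-np.vander(np.atleast_1d(x), mu.size, increasing=True) @ lam)

    return lam, density
```

The monomial basis becomes badly conditioned for wide supports or many moments, so with audience values in the millions one would first map the support to a unit interval and transform the moments accordingly.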

2.2 Moments computation

To compute the moments of the random variable Z, we assume that the number of viewers behaves like a Markov reward process. The underlying Markov chain \(\{J_{n}\}_{n\in {\mathbb {N}}}\) has state space \(E=\left\{ 1, 2,\ldots ,s\right\} \), whose states represent the audience regimes. The chain satisfies the following Markov property:

where \(p_{j_i,j_{i+1}}\) represents the probability of moving to state \(j_{i+1}\) from state \(j_i\). These probabilities, which make up the transition probability matrix \({\textbf{P}}\), are estimated by counting how many times the chain moves from state \(j_i\) to state \(j_{i+1}\) and dividing by the total number of transitions out of state \(j_i\). At any time, the state of the Markov chain indicates the audience regime. For instance, if \(s=3\), \(J_i=1\) could be associated with a low audience level, \(J_i=2\) with a medium level, and \(J_i=3\) with a high level.
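The counting rule above is the maximum likelihood estimator \(\hat p_{ij}=n_{ij}/\sum_{j} n_{ij}\). A minimal sketch, assuming the states are coded 0, ..., s-1 in code rather than 1, ..., s as in the text; the helper names are ours. The second function returns multi-step probabilities \(p^{(m)}_{ij}\), which appear later as entries of a power of \({\textbf{P}}\).

```python
import numpy as np

def estimate_transition_matrix(states, s):
    """MLE of P from a state sequence coded 0..s-1:
    p_ij = (transitions i -> j) / (all transitions out of i)."""
    counts = np.zeros((s, s))
    for i, j in zip(states[:-1], states[1:]):
        counts[i, j] += 1
    row_totals = counts.sum(axis=1, keepdims=True)
    # States that are never left keep a zero row instead of a division by zero.
    return np.divide(counts, row_totals, out=np.zeros_like(counts), where=row_totals > 0)

def n_step(P, steps):
    """p_ij^(steps): entry (i, j) of the steps-th power of P."""
    return np.linalg.matrix_power(P, steps)
```

Usage: `estimate_transition_matrix([0, 0, 1, 1, 0], 2)` observes the transitions 0→0, 0→1, 1→1, 1→0 and returns a matrix with every entry equal to 0.5.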

Conditional on the regime \(J_i=l\), with \(l\in E\), the random number of viewers has a state-specific cumulative distribution function:

We define the k-th moment of Z, conditional on the starting state \(J_{t_{0}}=j_0\) and referred to the vector \({\textbf{t}}_0^n =(t_0,t_1,\ldots ,t_n)\) of times at which the advertisement will be aired, as follows:

We split the previous equation into two addends as follows,

Then, applying Newton’s binomial formula, we obtain

and we can consider the following three expected values,

We now compute these expected values. For the first addend, the tower property of conditional expectation gives

We proceed to compute the internal expected value as,

This means that the value assumed by the first addend of Eq. (9) is

The quantity \(p_{j_0,j_1}^{(t_1-t_0)}\) is the probability of reaching state \(j_1\) at time \(t_1\) starting from state \(j_0\) at time \(t_0\); it is given by the \((j_0,j_1)\) entry of the \((t_1-t_0)\)-th power of the transition matrix \({\textbf{P}}\). The expected value of the second addend of Eq. (9) is computed similarly, but this time we condition on both the chain state and the number of viewers tuned in,

The deterministic part comes out of the innermost expected value,

Now, the internal expected value is computed as follows:

By substituting the previous value into the second addend of Eq. (9), we obtain

After performing the necessary calculations, we arrive at the following form,

The final step is to determine the expected value of the third addend of Eq. (9) that is equal to

The moment equation results from replacing the addends in (9),

At this point, it is possible to find the first moment by setting \(k=1\) and we obtain the following result,

After some arrangements, we have

To solve the previous equation, we set \(t_0=t_{n-1}\), which is equivalent to saying that \(t_1=t_{n}\). Thus, we obtain

The moment \(M_{j_n}^{(1)}({\textbf{t}}_n^n)\), which corresponds to \(t_0=t_n\), is zero by definition. Therefore, the formula becomes

We note that all terms on the right-hand side are known and that this calculation must be done for every \(j_{n-1}\in E\). Subsequently, we set \(t_0=t_{n-2}\), and we have

The moment \(M_{j_{n-1}}^{(1)}({\textbf{t}}_{n-1}^n)\) is known from the previous step, hence the formula becomes,

We then continue recursively with \(t_0=t_{n-3}, t_{n-4},\ldots \), back to the original \(t_0\), to finally obtain the first moment.

To compute the second moment, we set \(k=2\) in (16) and we obtain

thus,

We set \(t_0=t_{n-1}\), which is identical to saying \(t_1=t_{n}\), to find the solution,

The moments \(M_{j_n}^{(1)}({\textbf{t}}_n^n)\) and \(M_{j_n}^{(2)}({\textbf{t}}_{n}^n)\), which correspond to \(t_0=t_n\), are zero by definition. Therefore, the formula becomes

We note that all terms on the right-hand side are known. Subsequently, we set \(t_0=t_{n-2}\) and \(t_1=t_{n-1}\), and obtain

Now, we substitute the previously calculated \(M_{j_{n-1}}^{(1)}({\textbf{t}}_{n-1}^n)\) and \(M_{j_{n-1}}^{(2)}({\textbf{t}}_{n-1}^n)\), and we have

Finally, we proceed recursively with \(t_0=t_{n-3}, t_{n-4},\ldots \), back to the original \(t_0\), to obtain the second moment.

Similarly to the computation of the first and second moments, we can compute higher moments.
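The backward recursion can be sketched for any order k at once. This is our own reconstruction under stated assumptions, not the paper's Eq. (16): we assume \(Z({\textbf{t}}_i^n)=e^{-r(t_{i+1}-t_i)}\,[G(N(t_{i+1}))+Z({\textbf{t}}_{i+1}^n)]\) with \(Z({\textbf{t}}_n^n)=0\), which matches the terminal condition stated above; that, given the state at \(t_{i+1}\), the current reward is independent of the future rewards; and that airing times are integers so that \({\textbf{P}}^{t_{i+1}-t_i}\) applies. The binomial theorem then gives \(M^{(k)}_j({\textbf{t}}_i^n)=e^{-rk\Delta_i}\sum_l p^{(\Delta_i)}_{jl}\sum_{m=0}^{k}\binom{k}{m}g^{(m)}_l M^{(k-m)}_l({\textbf{t}}_{i+1}^n)\), where \(g^{(m)}_l={\mathbb {E}}[G(N)^m\mid J=l]\). The function name is hypothetical.

```python
import numpy as np
from math import comb

def conditional_moments(times, P, g, r, K):
    """Backward recursion for M[k, j] = E[Z^k | J_{t_0} = j], k = 0..K.

    times : increasing integer airing times t_0 < t_1 < ... < t_n
    P     : one-step transition matrix, shape (s, s)
    g     : array (K+1, s), g[m, l] = E[G(N)^m | J = l], with g[0, :] = 1
    r     : discount rate (continuous discounting assumed)
    """
    s = P.shape[0]
    M = np.zeros((K + 1, s))
    M[0] = 1.0                                   # terminal condition Z(t_n^n) = 0
    for i in range(len(times) - 2, -1, -1):      # i = n-1, ..., 0
        dt = times[i + 1] - times[i]
        P_dt = np.linalg.matrix_power(P, dt)     # p^(dt)_{jl}
        new = np.zeros_like(M)
        new[0] = 1.0
        for k in range(1, K + 1):
            # E[(G + Z')^k | J_{t_{i+1}} = l] expanded with the binomial theorem
            inner = sum(comb(k, m) * g[m] * M[k - m] for m in range(k + 1))
            new[k] = np.exp(-r * k * dt) * (P_dt @ inner)
        M = new
    return M
```

As a sanity check, with one state, a constant reward c, and times (0, 1, 2), the recursion returns \(Z=c e^{-r}+c e^{-2r}\) as the first moment and \(Z^2\) as the second. If a cash flow is also received at \(t_0\), it would be added separately.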

3 Case study

We apply the method described above to advertising data from Italian television. In particular, we employ the audience data provided by Auditel and available at https://www.auditel.it/en/data/. We obtain the monthly Average Minute Rating (AMR) data, which represent the average number of viewers in each of the following time slotsFootnote 3: 2:00 AM–7:00 AM, 7:00 AM–9:00 AM, 9:00 AM–12:00 PM, 12:00 PM–3:00 PM, 3:00 PM–6:00 PM, 6:00 PM–8:30 PM, 8:30 PM–10:30 PM and 10:30 PM–2:00 AM. As an example, let “Canale 5” be the channel on which the advertisement will be broadcast. We collected the data for this channel from January 2017 to December 2021.

The AMR time series therefore consists of 8 time slots × 12 months × 5 years = 480 observations.

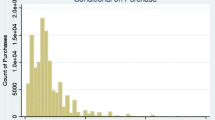

Table 1 reports summary statistics: the AMR series ranges from 359,774 to 4,756,853 viewers, with a mean of 1,945,300 and a standard deviation of 1,002,336.

In addition, Fig. 2 plots the full dataset, which shows both monthly and yearly seasonality. To remove the periodic component, we deseasonalize the series with a moving average of period eight, the number of time slots in each month.
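A minimal sketch of the deseasonalization step, assuming a simple (equally weighted) moving average over eight consecutive observations with no padding at the ends; the text does not state whether the window is centred or trailing, so the alignment of the output is our choice. The function name is hypothetical.

```python
import numpy as np

def moving_average(x, period=8):
    """Simple moving average over `period` consecutive observations
    (one month of eight time slots); returns len(x) - period + 1 values."""
    x = np.asarray(x, dtype=float)
    kernel = np.full(period, 1.0 / period)
    return np.convolve(x, kernel, mode="valid")
```

Applied to the 480 AMR observations, this yields 473 averaged values.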

To apply the model and estimate its parameters, we discretize the moving average series into three states. Specifically, denoting by \(\mu \) and \(\sigma \) the mean and standard deviation of the series, we assign to the lower state all values between the minimum and \(\mu -\frac{\sigma }{2}\), to the middle state the values between \(\mu -\frac{\sigma }{2}\) and \(\mu +\frac{\sigma }{2}\), and to the higher state the values between \(\mu +\frac{\sigma }{2}\) and the maximum.

To give an overview of the data distribution, Fig. 3 shows the histogram together with the frequencies of the discretized observations. The central dashed vertical line marks the mean of the distribution.

Using maximum likelihood estimation (see, e.g., Billingsley 1961; Bharucha-Reid 1962), we estimate the transition probability matrix as follows

The largest entries lie on the main diagonal, so the chain is very likely to remain in its current state from one period to the next. From this matrix, we obtain the following stationary distribution

that is, the probability of each of the three audience levels in the stationary regime. Note that it is approximately uniform.
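The stationary distribution \(\pi\) solves \(\pi{\textbf{P}}=\pi\) with \(\sum_j\pi_j=1\). A minimal sketch, assuming the estimated chain is irreducible so that the solution is unique; the function name is hypothetical.

```python
import numpy as np

def stationary_distribution(P):
    """Solve pi P = pi, sum(pi) = 1, as an (overdetermined, consistent) linear system."""
    P = np.asarray(P, dtype=float)
    s = P.shape[0]
    A = np.vstack([P.T - np.eye(s), np.ones(s)])   # (P^T - I) pi = 0 plus normalisation
    b = np.zeros(s + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi
```

For instance, for \({\textbf{P}}=\begin{pmatrix}0.9&0.1\\0.2&0.8\end{pmatrix}\) the balance condition \(0.1\,\pi_1=0.2\,\pi_2\) gives \(\pi=(2/3,1/3)\).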

Since the state-conditional distributions are needed to compute the moments, Fig. 4 plots the Empirical Cumulative Distribution Functions (ECDFs) of State 1, State 2, and State 3 together with the global ECDF. The ECDFs of States 2 and 3 grow faster than that of State 1.
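A minimal sketch of the state-conditional ECDFs and of the empirical conditional moments \(g^{(m)}_l={\mathbb {E}}[G(N)^m\mid J=l]\), estimated as sample means within each state. It assumes states are coded 1, ..., s and that G defaults to the identity, as in the linear gain function used below; the function names are hypothetical.

```python
import numpy as np

def ecdf(sample):
    """Return F(x) = (number of observations <= x) / n."""
    data = np.sort(np.asarray(sample, dtype=float))
    return lambda x: np.searchsorted(data, x, side="right") / data.size

def conditional_ecdfs(values, states, s=3):
    """One ECDF per state, built from the observations recorded in that state."""
    values, states = np.asarray(values, dtype=float), np.asarray(states)
    return {l: ecdf(values[states == l]) for l in range(1, s + 1)}

def conditional_power_moments(values, states, K, s=3, G=lambda n: n):
    """g[m, l-1] = sample mean of G(N)^m over observations in state l, m = 0..K."""
    values, states = np.asarray(values, dtype=float), np.asarray(states)
    g = np.ones((K + 1, s))
    for l in range(1, s + 1):
        x = G(values[states == l])
        for m in range(1, K + 1):
            g[m, l - 1] = np.mean(x ** m)
    return g
```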

To check whether the Markov model is an appropriate choice for these data, we perform the independence test of Basawa and Rao (1980):

where \(\phi _j\) is the j-th component of the vector

where \(n_{ij}\) is the number of times the chain moves from state i to state j.

The null hypothesis states that the probability of moving from state i to state j does not depend on the starting state i. If this holds, the moving average sequence does not depend on the previous state, and the observations, instead of following a Markov chain, would form a sequence of independent and identically distributed random variables. We test the independence hypothesis with the statistic proposed in Basawa and Rao (1980):

where \(n_{i}=\sum _{j\in E}n_{ij}\). Under the null hypothesis, S has a limiting \(\chi ^2\) distribution with \((s-1)^2=4\) degrees of freedom. The value of the statistic is 785.252, so we reject the null hypothesis at the \(1\%\) significance level. Therefore, the current state depends on the previous one, and Markov chains are a suitable modelling tool.
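A minimal sketch of the test statistic, assuming the usual Pearson form \(S=\sum_{i,j}(n_{ij}-n_i\phi_j)^2/(n_i\phi_j)\) with \(\phi_j=\sum_i n_{ij}/\sum_{i,j}n_{ij}\), which is consistent with the definitions of \(n_{ij}\) and \(n_i\) above and with the \((s-1)^2\) degrees of freedom; the function name is hypothetical.

```python
import numpy as np

def independence_statistic(counts):
    """Chi-square statistic for H0: p_ij = phi_j for every starting state i.

    counts[i, j] = n_ij (observed transitions i -> j); all expected counts
    n_i * phi_j are assumed positive. Returns (S, degrees of freedom).
    """
    counts = np.asarray(counts, dtype=float)
    n_i = counts.sum(axis=1, keepdims=True)       # transitions out of each state
    phi = counts.sum(axis=0) / counts.sum()       # pooled arrival frequencies phi_j
    expected = n_i * phi
    S = np.sum((counts - expected) ** 2 / expected)
    s = counts.shape[0]
    # A p-value can be obtained with scipy.stats.chi2.sf(S, df) if SciPy is available.
    return float(S), (s - 1) ** 2
```

With s = 3 the 1% critical value of the \(\chi^2_4\) distribution is about 13.28, far below the observed 785.252.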

For our case study, we assume that the advertisement is aired at the times \({\textbf{t}}_0^2=[4,12,20]\). According to the model, the option is exercised at time \(t_0\), although a different evaluation time could also have been chosen.Footnote 4 For simplicity, let the gain function be linear in the number of viewers tuned in at each time, \(G(N(t_i))=N(t_i)\). Since the risk level of the investment is unknown, we set the discount rate to \(r=3.873\%\), the rate of 12-month Italian Treasury Bonds.Footnote 5

First, we compute the first ten moments, conditional on each starting state, using Eq. (16). The results are shown in Table 2.

We then apply the method of moments with the maximum entropy approach to determine the density functions obtained with different numbers of moments. For the implementation, we employ the Python package PyMaxEnt by Saad and Ruai (2019). Figures 5, 6, and 7 show the density functions obtained from 2, 3, 4, 5, and 10 moments, conditional on each state. For instance, in State 3 the shape of the distribution changes as the number of moments increases, meaning that more moments yield a more detailed density function. This behaviour is less evident in State 2.

Using the density function derived from the first ten moments, we calculate the option price with Eq. (3). The results are summarised in Table 3: the option price decreases as the strike price increases, and increases with the starting state.

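As a robustness test for our model, we compare the results with those obtained from a parametric lognormal distribution, which is consistent with the B&S model. Recall that a random variable X follows the lognormal distribution \(\log {\mathcal {N}}(\mu ,\sigma ^{2})\) if \(Y=\log X\) follows the normal distribution \({\mathcal {N}}(\mu ,\sigma ^{2})\), so that \(X=e^{Y}\). Its probability density function is

\[ f_X(x)=\frac{1}{x\sigma \sqrt{2\pi }}\exp \left( -\frac{(\log x-\mu )^{2}}{2\sigma ^{2}}\right) ,\qquad x>0, \]

with expected value and variance

\[ {\mathbb {E}}[X]=e^{\mu +\sigma ^{2}/2},\qquad \mathrm {Var}(X)=\left( e^{\sigma ^{2}}-1\right) e^{2\mu +\sigma ^{2}}. \]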

Matching a lognormal distribution to the first two moments of our model, conditional on each state, we obtain the prices in Table 4. As in Table 3, prices decrease as the strike price increases. Comparing Tables 3 and 4 shows that the values are qualitatively similar. For the first state, the values from our model are lower than those computed under the lognormality assumption, while for states 2 and 3 they are higher, at least up to \(K=\) 3,500,000. Since the values in Table 3 are based on ten moments, they incorporate more information about the distribution of Z.
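The lognormal benchmark can be sketched in closed form. Inverting the mean and variance formulas above gives \(\sigma^2=\log(1+\mathrm{Var}/{\mathbb {E}}^2)\) and \(\mu=\log{\mathbb {E}}-\sigma^2/2\), and the expected call payoff is \({\mathbb {E}}[(X-K)^+]={\mathbb {E}}[X]\,\Phi(d_1)-K\,\Phi(d_2)\) with \(d_1=(\mu+\sigma^2-\log K)/\sigma\) and \(d_2=d_1-\sigma\). As in the earlier sketch, we assume no extra discounting from \(t_0\) to time 0; the variance would come from the first two moments as \({\mathbb {E}}[Z^2]-{\mathbb {E}}[Z]^2\). The function names are hypothetical.

```python
import math

def lognormal_params(mean, var):
    """Moment matching: sigma^2 = ln(1 + var / mean^2), mu = ln(mean) - sigma^2 / 2."""
    sigma2 = math.log(1.0 + var / mean ** 2)
    return math.log(mean) - sigma2 / 2.0, math.sqrt(sigma2)

def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def lognormal_call(mean, var, strike):
    """E[max(X - K, 0)] for a lognormal X with the given mean and variance."""
    mu, sigma = lognormal_params(mean, var)
    d1 = (mu + sigma ** 2 - math.log(strike)) / sigma
    d2 = d1 - sigma
    return mean * normal_cdf(d1) - strike * normal_cdf(d2)
```

As the strike tends to zero the price tends to the mean, and it decreases as the strike grows, matching the qualitative pattern of Tables 3 and 4.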

4 Conclusion

In the modern economy, the advertising sector has attracted growing interest, as it plays a key role in the sale of products and services. Advertising today is understood as a commercial vehicle for generating profit. In this work, we investigated how to price an option that allows an advertisement to be aired at given times in exchange for a strike price. First, we assumed that the number of viewers of the advertisement behaves as a Markov chain. Then, we adopted the present worth method, computing the moments of the sum of all the discounted cash flows generated by the audience reached by the advertisement.

To get the fair price of the option, we found the density function of the discounted cash flows via the method of moments with the maximum entropy approach by Mead and Papanicolaou (1984).

Applying this model to a concrete case, we found that the option price depends on the number of moments used in the calculation. The price is also influenced by the way in which the number of viewers is converted into monetary amounts, the rate at which these amounts are discounted over time, the vector of airing times, and the strike price.

The accuracy of the results also depends on the accuracy of the available data; since our data are monthly averages, we leave a sensitivity analysis to future work.

This paper leaves several open questions for future studies. Specifically, the model could be compared with further models or applied to other case studies. Moreover, it would be interesting to extend the analysis from a television network to a mobile platform with apps and software, to reach different viewers through on-demand requests. Finally, the business could be valued as a compound option involving n investment phases.

Notes

1 For a comprehensive review of the Monte Carlo method, see Glasserman (2004).

2 For stochastic discount interest rates, see Zhao and Zhang (2010).

3 In addition to the audience averages over the time slots, Auditel also holds average-minute audience data, but these are not freely accessible; using them could improve the results.

4 In this case, it would simply be necessary to discount the incoming and outgoing monetary amounts to that time.

5 Auction results for 12-month Treasury Bonds of 12 September 2023, published by the Italian Ministry of Economy and Finance.

References

Abdel Sabour, S.A., Poulin, R.: Valuing real capital investments using the least-squares Monte Carlo method. Eng. Econ. 51, 141–160 (2006). https://doi.org/10.1080/00137910600705210

Amram, M., Kulatilaka, N.: Real options: managing strategic investment in an uncertain world. OUP Catalogue (1998)

Basawa, I., Rao, B.: Statistical inference for stochastic processes. Probability and mathematical statistics. Academic Press (1980) https://books.google.it/books?id=qNxQAAAAMAAJ

Bharucha-Reid, A.: Statistical inference for Markov processes (P. Billingsley). SIAM Rev. 4(3), 262 (1962)

Billingsley, P.: Statistical methods in Markov chains. Ann. Math. Stat. 32(1), 12–40 (1961)

Black, F., Scholes, M.: The pricing of options and corporate liabilities. J. Polit. Econ. 81(3), 637–654 (1973)

Borison, A.: Real options analysis: where are the emperor’s clothes? J. Appl. Corp. Finance 17(2), 17–31 (2005). https://doi.org/10.1111/j.1745-6622.2005.00029.x

Boyle, P., Broadie, M., Glasserman, P.: Monte Carlo methods for security pricing. J. Econ. Dynam. Control 21(8–9), 1267–1321 (1997)

Carmichael, D.G.: A cash flow view of real options. Eng. Econ. 61(4), 265–288 (2016)

Carmichael, D.G., Hersh, A.M., Parasu, P.: Real options estimate using probabilistic present worth analysis. Eng. Econ. 56(4), 295–320 (2011)

Cox, J.C., Ross, S.A., Rubinstein, M.: Option pricing: A simplified approach. J. Financ. Econ. 7(3), 229–263 (1979)

Damodaran, A.: Financing innovations and capital structure choices. J. Appl. Corp. Finance 12(1), 28–39 (1999)

Danaher, P.J., Rust, R.T.: Determining the optimal return on investment for an advertising campaign. Eur. J. Oper. Res. 95(3), 511–521 (1996)

De Blasis, R.: The price leadership share: a new measure of price discovery in financial markets. Ann. Finance 16(3), 381–405 (2020)

Di Bari, A., Santoro, D., Tarrazon-Rodon, M.A., et al.: The impact of polarity score on real option valuation for multistage projects. Qual. Quant. pp. 1–20 (2023)

Duan, J., Dudley, E., Gauthier, G., et al.: Pricing discretely monitored barrier options by a Markov chain. J. Deriv. 10(4), 9–31 (2003). https://doi.org/10.3905/jod.2003.319203

D’Amico, G.: Statistical inference for Markov chain European option: estimating the price, the bare risk and the theta by historical distributions of Markov chain. J. Stat. Manag. Syst. 9(3), 737–754 (2006)

D’Amico, G.: A convergence result in the estimation of Markov chains with application to compound options. J. Stat. Theory Pract. 2, 693–705 (2008)

D’Amico, G., Villani, G.: Valuation of R&D compound option using Markov chain approach. Ann. Finance 17(3), 379–404 (2021)

Fadugba, S.E., Okunlola, J.T., Adeyemo, O.A.: On the strength and weakness of binomial model for pricing vanilla options. Int. J. Adv. Res. Eng Appl. Sci. 1(1), 1–10 (2012)

Glasserman, P.: Monte Carlo methods in financial engineering, vol. 53. Springer, New York (2004)

Guadagni, P.M., Little, J.D.: A logit model of brand choice calibrated on scanner data. Mark. Sci. 2(3), 203–238 (1983)

Harris, J.L., Bargh, J.A., Brownell, K.D.: Priming effects of television food advertising on eating behavior. Health Psychol. 28(4), 404 (2009)

Kalligeris, E.N., Karagrigoriou, A., Parpoula, C.: An advanced Markov switching approach for the modelling of consultation rate data. Eng. Proc. 5(1), 2 (2021)

Kellogg, D., Charnes, J.M.: Real-options valuation for a biotechnology company. Finan. Anal. J. 56(3), 76–84 (2000)

Kodukula, P., Papudesu, C.: Project valuation using real options: a practitioner’s guide. J. Ross Publishing (2006)

Krishnamurthi, L., Raj, S.P.: The effect of advertising on consumer price sensitivity. J. Mark. Res. 22(2), 119–129 (1985)

Lewis, N.A., Eschenbach, T.G., Hartman, J.C.: Can we capture the value of option volatility? Eng. Econ. 53(3), 230–258 (2008)

Lo, K.H., Lan, Y.W.: An approach to the R&D value based upon real option method. Qual. Quant. 44(3), 509–527 (2008). https://doi.org/10.1007/s11135-008-9208-5

Martín-Oliver, A., Salas-Fumás, V.: The output and profit contribution of information technology and advertising investments in banks. J. Finan. Intermed. 17(2), 229–255 (2008)

McDonald, R., Siegel, D.: The value of waiting to invest. Q. J. Econ. 101(4), 707–727 (1986)

Mead, L.R., Papanicolaou, N.: Maximum entropy in the problem of moments. J. Math. Phys. 25(8), 2404–2417 (1984)

Newton, D., Paxon, D., Howell, S., et al.: Real options: evaluating corporate investment opportunities in a dynamic world. Prentice Hall, London (2001)

O’Keefe, G.J., Reid, K.: The uses and effects of public service advertising. In: Public relations research annual. Routledge, pp. 67–92 (2020)

Petronio, F., Tamborini, L., Lando, T., et al.: Portfolio selection in the BRICS stocks markets using Markov processes. Int. J. Math. Models Methods Appl. Sci. 8, 311–318 (2014)

Putrevu, S.: Exploring the origins and information processing differences between men and women: Implications for advertisers. Acad. Mark. Sci. Rev. 10(1), 1–14 (2001)

Raj, S.P.: The effects of advertising on high and low loyalty consumer segments. J. Consum. Res. 9(1), 77–89 (1982)

Saad, T., Ruai, G.: PyMaxEnt: a Python software for maximum entropy moment reconstruction. SoftwareX 10, 100353 (2019)

Smith, J.E., Nau, R.F.: Valuing risky projects: option pricing theory and decision analysis. Manag. Sci. 41(5), 795–816 (1995). https://doi.org/10.1287/mnsc.41.5.795

Snyder, M., DeBono, K.G.: Appeals to image and claims about quality: understanding the psychology of advertising. J. Pers. Soc. Psychol. 49(3), 586 (1985)

Zhao, X., Zhang, B.: Pricing perpetual options with stochastic discount interest rates. Qual. Quant. 46(1), 341–349 (2010). https://doi.org/10.1007/s11135-010-9358-0

Acknowledgements

Guglielmo D'Amico and Riccardo De Blasis are members of the Gruppo Nazionale Calcolo Scientifico-Istituto Nazionale di Alta Matematica (GNCS-INdAM).

Funding

Open access funding provided by Università degli Studi G. D'Annunzio Chieti Pescara within the CRUI-CARE Agreement. The authors did not receive support from any organization for the submitted work. The authors have no relevant financial or non-financial interests to disclose. All authors equally contributed to the study conception, design, material preparation, data collection and analysis. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have not disclosed any competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

D’Amico, G., De Blasis, R. & Vigna, V. Advertising investments on television: real option estimation through Markov chains. Qual Quant 58, 4661–4678 (2024). https://doi.org/10.1007/s11135-024-01874-1
