Abstract

We study critical GI/G/1 queues under finite second-moment assumptions. We show that the busy-period distribution is regularly varying with index 1/2. We also review previously known M/G/1 and M/M/1 derivations, yielding exact asymptotics, as well as a similar derivation for GI/M/1. The busy-period asymptotics determine the growth rate of moments of the renewal process counting busy cycles. We further use this to demonstrate a Balancing Reduces Asymptotic Variance of Outputs (BRAVO) phenomenon for the work-output process (namely the busy time). This yields new insight on the BRAVO effect. A second contribution of the paper is in settling previously conjectured results about GI/G/1 and GI/G/s BRAVO. Previously, infinite-buffer BRAVO was generally only settled under fourth-moment assumptions together with an assumption about the tail of the busy period. In the current paper, we strengthen the previous results by reducing the assumptions to the existence of \(2+\epsilon \) moments.

1 Introduction

This paper deals with the well-studied GI/G/1 queue. In many ways, this stochastic model lives at the centre of queueing theory and applied probability, as it marks the border between tractable explicit models (e.g. M/G/1) and models for which asymptotic approximations are needed. Living on the explicitly intractable side of the border, GI/G/1 has motivated many early works in asymptotic approximate queueing theory, such as [5, 13, 15]. Indeed, GI/G/1 is an extensively studied model (see [2, Chapter X] for an overview). Nevertheless, in this paper, we add new results to the body of knowledge about GI/G/1.

There are several stochastic processes and random variables associated with GI/G/1. Of key interest to us are the queue length process (including the customer in service), \(\{Q(t),~t\ge 0\}\), the departure counting process (indicating the number of service completions during [0, t]), \(\{D(t),~t \ge 0\}\), and the busy-period random variable, B, as well as several other processes which we describe in the sequel. The processes \(Q(\cdot )\), \(D(\cdot )\) and the random variable B are constructed in a standard way (see, e.g. [2, Chapter X]) on a probability space supporting two independent i.i.d. sequences of strictly positive random variables. Namely, \(\{U_i\}_{i=1}^\infty \) denotes the inter-arrival times and \(\{V_i\}_{i=1}^\infty \) denotes the service times. Note that in this paper we assume that an arrival to an empty system occurs at time 0, yielding service duration \(V_1\); then, after time \(U_1\), the next arrival occurs with service duration \(V_2\), and so forth.

As in many, but not all, GI/G/1 studies, our focus is on the very special critical case, i.e. we assume

\[ \mathbb {E}[U_1] = \mathbb {E}[V_1]. \tag{1} \]

We also assume that \(\mathbb {E}[U_1^2],\, \mathbb {E}[V_1^2] < \infty \), that is, we are in the case where the inter-arrival and service sequences obey a Gaussian central limit law.

Critical GI/G/1 has been an exciting topic for research for many reasons. It lies on the border of stability (\(\mathbb {E}[U_1] > \mathbb {E}[V_1]\)) and instability (\(\mathbb {E}[U_1] < \mathbb {E}[V_1]\)). In the near-critical but stable case, sojourn time random variables are approximately exponentially distributed (under some regularity assumptions). Further the queue length and/or workload processes converge to reflected Brownian motion when viewed through the lens of diffusion scaling. Such scaling can be done both in the near-critical case or exactly at criticality. This is then a much more tractable object for further analysis, in comparison with the associated random walks that are used in the non-critical case. See, for example, [20] for a comprehensive treatment of diffusion scaling limits. A useful survey is also in [11]. So, in general, analysis in the critical case has attracted much attention.

A further specialty arising in the critical case is the well-known fact that, while the busy-period random variable is finite w.p. 1, it has infinite expectation. One of the contributions of this paper is that we further establish (under the aforementioned finite second-moment assumptions) that the busy period is a regularly varying random variable with index 1/2, where the associated slowly varying function is bounded from below. This property of the critical busy period is well known and essentially elementary to show for the M/M/1 case. Further, for the M/G/1 case it was established as a side result in [21] with exact tail asymptotics. One of the main results of the current paper is that we find similar exact asymptotics for the GI/G/1 case. Tails and related properties of the busy period for GI/G/1 and related models have received much attention during the past 15 years. In addition to [21], the busy period has been studied under various conditions in [3, 4, 6, 7, 10, 14, 16, 18]. Our contribution adds to this body of knowledge in the critical case.

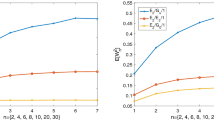

One of the important reasons for studying the tail behaviour of the critical busy-period is in relation to the BRAVO effect (Balancing Reduces Asymptotic Variance of Outputs). This is a phenomenon occurring in a variety of queueing systems (see, for example, [17]), and specifically for the critical GI/G/1. For systems without buffer limitations such as GI/G/1, it was first presented in [1]. The (somewhat counterintuitive) BRAVO effect is that the long-term variability of the output counts is less than the average variability of the arrival and service process. This is with respect to the asymptotic variance,

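where \(c_a^2\) and \(c_s^2\) are the squared coefficients of variation of the building-block random variables, i.e.

\[ c_a^2 = \frac{\mathrm{var}(U_1)}{\mathbb {E}[U_1]^2}, \qquad c_s^2 = \frac{\mathrm{var}(V_1)}{\mathbb {E}[V_1]^2}. \]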

It thus follows that the variability function \(\lim _{t\rightarrow \infty } \mathrm{var} D(t)/\mathbb {E} D(t)\), when considered as a function of the system load, has a singular point at system load equal to 1, which can be regarded as a manifestation of the BRAVO phenomenon. More specifically, \(\overline{v}\) is essentially determined by either the arrival or the service process when the system load is not equal to 1, whereas for system load equal to 1 it is determined by both the arrival and service processes.

In [1], the BRAVO effect was established for M/M/1 from first principles, and for GI/G/1 it was derived via classic diffusion limits for \(D(\cdot )\) [13]. Following the development of [1], a key technical component for a complete proof of GI/G/1 BRAVO is uniform integrability (UI) of the sequence,

for some non-negative \(t_0\). This UI property was only established in [1] under the simplifying assumption that \(\mathbb {E}[U_1^4],~ \mathbb {E}[V_1^4] < \infty \) and further under the assumption that the tail behaviour of the busy period was regularly varying with index half (as we establish in the current paper).

It was further conjectured in [1] that GI/G/1 BRAVO persists under the more relaxed assumptions that we consider here. By using new tail behaviour results for the busy period and further considering properties of renewal processes that generalise some results in [1], we settle this conjecture in the current paper under the weaker assumption of existence of \(2+\epsilon \) moments of the generic inter-arrival and service times.

The structure of the remainder of the paper is as follows: In Sect. 2, we study the busy-period tail behaviour and compare it with the M/M/1 and M/G/1 cases. In Sect. 3, we establish GI/G/1 BRAVO under the relaxed assumptions.

2 Busy period

For analysis of the busy period, it is useful to denote \(\xi _i:= V_i-U_{i}\) for \(i=1,2,\ldots \) and

\[ S_0 := 0, \qquad S_n := \sum _{i=1}^n \xi _i, \quad n \ge 1. \tag{2} \]

The random walk \(S_n\) is embedded within the well-known workload process. Within the first busy period, \(S_n\) denotes the workload of the system immediately after the arrival of customer n. Then the number of customers served during this busy period is \(N:= \inf \{ n \ge 1: S_n \le 0\}\). With N at hand, we can then define the busy-period (duration) random variable as

\[ B := \sum _{i=1}^N V_i. \tag{3} \]

Note, of course, that N and the sequence \(\{V_i\}\) are generally dependent, and further note that N is a stopping time with infinite expectation. We will also need the generic idle period that follows the busy period, which equals

\[ I := -S_N. \]

We recall that in this paper we assume the critical case, that is, (1) holds. We will write \(f(x)\sim g(x)\) when \(\lim _{x\rightarrow \infty } f(x)/g(x)=1\). The main result of this section is given by the following theorem.

Theorem 2.1

Consider the case where \(\mathbb {E}[U_1] = \mathbb {E}[V_1]\) and \(\mathbb {E}[U_1^2],~ \mathbb {E}[V_1^2]< \infty \). Then,

Proof

From [9, Thm. XVIII.5.1, p. 612], we know that the series

converges to some \(b>0\) and

where

Moreover, from [9, Thm. XII.7.1a, p. 415], we have

Combining above facts gives

In the last step we apply [19, Thm. 3.1] together with the representation (3). Namely, observe that, by (6), the tail of N is regularly varying and hence of consistent variation. Moreover, \(\mathbb {E}V_1^2<\infty \) and \(x\mathbb {P}(V_1>x)=o(\mathbb {P}(N>x))\). Thus

which completes the proof in view of (3). \(\square \)
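The quantities entering Theorem 2.1 can be illustrated with a minimal Python sketch. It assumes the definitions suggested by the text of this section: \(S_0=0\), \(S_n=\xi _1+\cdots +\xi _n\) with \(\xi _i=V_i-U_i\), the busy period as the sum of the first N service times, and the idle period as \(-S_N\). The function name `first_busy_period`, the truncation parameter `horizon` and the choice of a critical M/M/1 example are hypothetical and serve the illustration only.

```python
import random


def first_busy_period(inter_arrivals, services):
    """Return (N, B, I) for the first busy cycle.

    Returns None if the walk S_n has not reached (-inf, 0] within the
    supplied samples (a truncation introduced for this sketch only).
    """
    s = 0.0      # S_0 = 0
    busy = 0.0   # running sum V_1 + ... + V_n
    for n, (u, v) in enumerate(zip(inter_arrivals, services), start=1):
        s += v - u           # S_n = S_{n-1} + xi_n, with xi_n = V_n - U_n
        busy += v
        if s <= 0:           # N = inf{n >= 1 : S_n <= 0}
            return n, busy, -s   # B = V_1 + ... + V_N and I = -S_N
    return None


if __name__ == "__main__":
    rng = random.Random(0)
    lam = 1.0        # critical M/M/1: arrival rate equals service rate
    horizon = 10_000  # maximal number of customers per sample path
    results = []
    for _ in range(1_000):
        # Lazy generators: samples are only drawn until the busy period ends.
        u = (rng.expovariate(lam) for _ in range(horizon))
        v = (rng.expovariate(lam) for _ in range(horizon))
        results.append(first_busy_period(u, v))
    finished = [r for r in results if r is not None]
    print(f"{len(finished)} of {len(results)} busy periods ended "
          f"within {horizon} customers")
```

Because N has infinite expectation in the critical case, some sample paths will typically exceed any fixed `horizon`; an empirical tail estimate built from such truncated runs should therefore only be read for values of x well below the truncation level.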

From Theorem 2.1, one can recover the known result for the M/G/1 queue. Indeed, in this case the idle period has an exponential distribution with parameter \(\lambda >0\) and therefore \(\mathbb {E}I=\frac{1}{\lambda }\). Furthermore, \(c_a=1\) and hence

The M/G/1 result appeared in [21], but with a small typo for the constant in front of \(x^{-1/2}\).

In the case of the GI/M/1 queue, we have \(c_s=1\), and the first increasing ladder height \(H_1\) of the random walk (2) has an exponential distribution with parameter \(\lambda >0\), so that \(\mathbb {E}H_1=\frac{1}{\lambda }\). Moreover, by [8, eq. (4c)] we have

and therefore

Theorem 2.1 then gives

Finally, we mention that the above agrees with the M/M/1 case, where \(\mathbb {P}(B > x ) \sim \lambda ^{-1/2} {(\pi x)}^{-1/2}\), which may also be obtained via asymptotics of Bessel functions, as the distribution of B is known exactly.
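The Bessel-function route can be sketched as follows. This is a sketch under an assumption not stated in the text: we take the standard closed form of the M/M/1 busy-period density with arrival rate \(\lambda \) equal to the service rate, and write \(I_1\) for the modified Bessel function of the first kind (not to be confused with the idle period I):

\[ f_B(x) = \frac{1}{x}\, e^{-2\lambda x}\, I_1(2\lambda x), \qquad x>0. \]

Using the classical expansion \(I_1(z)\sim e^{z}/\sqrt{2\pi z}\) as \(z\rightarrow \infty \) with \(z=2\lambda x\), the exponential factors cancel and

\[ f_B(x) \sim \frac{1}{x}\cdot \frac{1}{\sqrt{4\pi \lambda x}} = \frac{1}{2\sqrt{\pi \lambda }}\, x^{-3/2}. \]

Integrating the tail of this regularly varying density then gives

\[ \mathbb {P}(B>x) = \int _x^\infty f_B(y)\,\mathrm{d}y \sim \frac{1}{2\sqrt{\pi \lambda }}\cdot 2x^{-1/2} = \lambda ^{-1/2}(\pi x)^{-1/2}, \]

in agreement with the M/M/1 asymptotics quoted above.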

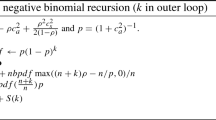

3 BRAVO

We now establish BRAVO for the GI/G/1 with what we believe to be the minimal possible set of assumptions. The result below unifies Theorem 2.1 and Theorem 2.2 of [1] and also settles Conjecture 2.2 of that paper for the single-server case.

Theorem 3.1

Consider the GI/G/1 queue with \(\mathbb {E}[U_1] = \mathbb {E}[V_1]\) and \(\mathbb {E}[U_1^{2+\epsilon }],~ \mathbb {E}[V_1^{2+\epsilon }]< \infty \) for some \(\epsilon >0\). Then,

Proof

Following Theorem 2.1 of [1], we need to show uniform integrability (UI) of

for some \(t_0>0\). Once we establish this UI, the result follows.

We can follow the same idea as in the proof of [1, Thm. 2.2]. Namely, we can apply [1, Lem. 2.1] with \(r=2+\epsilon \) for \(\epsilon >0\) and [1, Prop. 4.1, Thm. 4.1, Thm. 4.2(ii) and Thm. 4.3], replacing fourth moments by \(2+\epsilon \) moments and \(8=2^3\) by \(2^{1+\epsilon }\). There is one crucial difference, though, in the proof of [1, Thm. 4.1]. Namely, without the Wald identity used in [1], we have to show there that \(\mathbb {E}[M_{V(t)}^{2+\epsilon }] = O(t^{1+\epsilon /2})\), where \(M_n = \sum _{i=1}^n \frac{1}{\mathbb {E}[\zeta _i]} \zeta _i - n\) is a mean-zero random walk with \(\zeta _i\) being i.i.d. random variables with finite \(2+\epsilon \) moments and \(V(t) = \inf \{n: \sum _{i=1}^n \zeta _i \ge t \}\) is the first passage time.
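To make the objects \(V(t)\) and \(M_{V(t)}\) concrete, here is a minimal Python sketch that evaluates them on a given sample path. It only illustrates the definitions above and is not part of the proof. The function name `stopped_walk`, the explicit `mean` argument (standing for \(\mathbb {E}[\zeta _i]\)) and the exponential example in the usage part are assumptions made for the illustration.

```python
import random


def stopped_walk(zetas, t, mean):
    """Return (V, M_V) for one sample path.

    V   = inf{n : zeta_1 + ... + zeta_n >= t}   (first passage time)
    M_V = (zeta_1 + ... + zeta_V) / mean - V    (centred walk, stopped at V)
    """
    total = 0.0
    for n, z in enumerate(zetas, start=1):
        total += z
        if total >= t:
            return n, total / mean - n
    raise ValueError("not enough samples to cross level t")


if __name__ == "__main__":
    rng = random.Random(1)
    eps, mean = 0.5, 1.0   # illustrative values only
    for t in (10.0, 100.0, 1000.0):
        acc = 0.0
        runs = 2_000
        for _ in range(runs):
            # Generous sample budget of about 10*t draws, consumed lazily.
            path = (rng.expovariate(1.0 / mean) for _ in range(int(10 * t) + 100))
            _, m = stopped_walk(path, t, mean)
            acc += abs(m) ** (2 + eps)
        # Monte Carlo estimate of E|M_{V(t)}|^{2+eps} / t^{1+eps/2}
        print(t, acc / runs / t ** (1 + eps / 2))
```

The printed ratio is a Monte Carlo estimate of \(\mathbb {E}|M_{V(t)}|^{2+\epsilon }/t^{1+\epsilon /2}\); the claimed \(O(t^{1+\epsilon /2})\) bound corresponds to this ratio staying bounded as t grows.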

From the Marcinkiewicz–Zygmund inequality for a stopped random walk given in [12, Thm. I.5.1(iii), p. 22] with \(r=2+\epsilon \), we can conclude that

for some constant K. Moreover, from [12, Thm. III.8.1, p. 98] we know that \(\lim _{t\rightarrow \infty } \mathbb {E}V(t)^{1+\epsilon /2}/t^{1+\epsilon /2} <\infty \). Hence

which completes the proof. \(\square \)

References

1. Al Hanbali, A., Mandjes, M., Nazarathy, Y., Whitt, W.: The asymptotic variance of departures in critically loaded queues. Adv. Appl. Probab. 43(1), 243–263 (2011)

2. Asmussen, S.: Applied Probability and Queues. Springer, Berlin (2003)

3. Baltrūnas, A.: Second-order tail behavior of the busy period distribution of certain GI/G/1 queues. Lith. Math. J. 42(3), 243–254 (2002)

4. Baltrūnas, A., Daley, D.J., Klüppelberg, C.: Tail behaviour of the busy period of a GI/GI/1 queue with subexponential service times. Stoch. Process. Appl. 111(2), 237–258 (2004)

5. Borovkov, A.A.: Some limit theorems in the theory of mass service. Theory Probab. Appl. 9(4), 550–565 (1964)

6. Denisov, D., Shneer, S.: Global and local asymptotics for the busy period of an M/G/1 queue. Queueing Syst. 64(4), 383–393 (2010)

7. Denisov, D., Shneer, V.: Asymptotics for the first passage times of Lévy processes and random walks. J. Appl. Probab. 50(1), 64–84 (2013)

8. Doney, R.A.: Moments of ladder heights in random walks. J. Appl. Probab. 17(1), 248–252 (1980)

9. Feller, W.: An Introduction to Probability Theory and its Applications, vol. 2 (1966)

10. Foss, S., Sapozhnikov, A.: On the existence of moments for the busy period in a single-server queue. Math. Oper. Res. 29(3), 592–601 (2004)

11. Glynn, P.W.: Diffusion approximations. In: Heyman, D.P., Sobel, M.J. (eds.) Handbooks in Operations Research, vol. 2, pp. 145–198. North-Holland, Amsterdam (1990)

12. Gut, A.: Stopped Random Walks: Limit Theorems and Applications, 2nd edn. Springer (2009)

13. Iglehart, D.L., Whitt, W.: Multiple channel queues in heavy traffic: I. Adv. Appl. Probab. 2(1), 150–177 (1970)

14. Kim, B., Lee, J., Wee, I.: Tail asymptotics for the fundamental period in the MAP/G/1 queue. Queueing Syst. 57(1), 1–18 (2007)

15. Kingman, J.F.C.: On queues in heavy traffic. J. Roy. Stat. Soc. Ser. B 24, 383–392 (1962)

16. Liu, B., Wang, J., Zhao, Y.Q.: Tail asymptotics of the waiting time and the busy period for the M/G/1/K queues with subexponential service times. Queueing Syst. 76(1), 1–19 (2014)

17. Nazarathy, Y.: The variance of departure processes: puzzling behavior and open problems. Queueing Syst. 68(3–4), 385–394 (2011)

18. Palmowski, Z., Rolski, T.: On the exact asymptotics of the busy period in GI/G/1 queues. Adv. Appl. Probab. 38(3), 792–803 (2006)

19. Robert, C.Y., Segers, J.: Tails of random sums of a heavy-tailed number of light-tailed terms. Insur. Math. Econom. 43(1), 85–92 (2008)

20. Whitt, W.: Stochastic Process Limits. Springer, New York (2002)

21. Zwart, A.P.: Tail asymptotics for the busy period in the GI/G/1 queue. Math. Oper. Res. 26(3), 485–493 (2001)

Acknowledgements

The authors are very thankful to Peter Taylor, who was involved in early discussions on this paper.

Additional information

This paper is dedicated to Masakiyo Miyazawa in appreciation of his fundamental contributions to applied probability.

The research of Zbigniew Palmowski is partially supported by Polish National Science Centre Grant No. 2018/29/B/ST1/00756 (2019–2022).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nazarathy, Y., Palmowski, Z. On busy periods of the critical GI/G/1 queue and BRAVO. Queueing Syst 102, 219–225 (2022). https://doi.org/10.1007/s11134-022-09858-4

DOI: https://doi.org/10.1007/s11134-022-09858-4