Abstract

Redundancy scheduling has emerged as a powerful strategy for improving response times in parallel-server systems. The key feature in redundancy scheduling is replication of a job upon arrival by dispatching replicas to different servers. Redundant copies are abandoned as soon as the first of these replicas finishes service. By creating multiple service opportunities, redundancy scheduling increases the chance of a fast response from a server that is quick to provide service and mitigates the risk of a long delay incurred when a single selected server turns out to be slow. The diversity enabled by redundant requests has been found to strongly improve the response time performance, especially in the case of highly variable service requirements. Analytical results for redundancy scheduling are unfortunately scarce, however, and even the stability condition has largely remained elusive so far, except for exponentially distributed service requirements. In order to gain further insight into the role of the service requirement distribution, we explore the behavior of redundancy scheduling for scaled Bernoulli service requirements. We establish a sufficient stability condition for generally distributed service requirements, and we show that, for scaled Bernoulli service requirements, this condition is also asymptotically nearly necessary. This stability condition differs drastically from the exponential case, indicating that the stability condition depends on the service requirements in a sensitive and intricate manner.



1 Introduction

Redundancy scheduling has recently attracted strong interest as a strategy for significantly reducing response times in parallel-server systems [1,2,3, 5,6,7,8,9,10, 13,14,18]. The key feature in redundancy scheduling is replication of a job upon arrival, allowing replicas to be assigned to, say, \(d\) different servers, chosen uniformly at random (without replacement). Redundant replicas are abandoned as soon as the first of these replicas either starts service (‘cancel-on-start’ or c.o.s.) or completes service (‘cancel-on-completion’ or c.o.c.). By creating multiple service opportunities, redundancy scheduling boosts the chance of a fast response from a server that is swift to provide service and alleviates the risk of a long delay incurred when a job is assigned to a single server that may be slow. Note that the c.o.c. and c.o.s. policies both ensure that the first replica starts service at the server with the smallest real workload among the \(d\) selected servers, where the real workload of a server is the amount of work it needs to complete to become idle in the absence of any arrivals. The possibly concurrent service of multiple replicas under the c.o.c. policy provides a further hedge against potentially slow execution of the first replica in the case where replicas are independent (although it may also waste service effort).

The diversity offered by redundant requests has been shown to strongly improve the response time performance, especially in the case of highly variable service requirements. Analytical results for redundancy scheduling are unfortunately scarce, however, and have largely remained limited to exponentially distributed service requirements. Specifically, Gardner et al. [8] extensively analyzed the c.o.c. redundancy policy with exponentially distributed service requirements. They established the stability condition and showed that it does not depend on the number of replicas \(d\), and thus coincides with the nominal condition without any redundancy. This may be explained by the memoryless property of the exponential distribution: even with concurrent service, the expected aggregate amount of time invested in the service of a job remains equal to the mean service requirement of a single instance. In [3], the stability condition is analyzed for redundancy scheduling with exponentially distributed service requirements under non-FCFS service disciplines, such as processor sharing and random order of service, for both identical and i.i.d. replicas.
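The memoryless argument can be illustrated numerically. The following Python sketch is our own illustration, not part of the analysis in [8], and the function name and parameters are hypothetical. It assumes that \(i\) replicas start service simultaneously at rate \(\mu \) each, so the first completion occurs after an \(\text {Exp}(i\mu )\) time and the aggregate server time \(i \min \{E_{1},\dots ,E_{i}\}\) should have mean \(1/\mu \) for every \(i\).

```python
import random

def mean_aggregate_service(num_replicas, mu=1.0, samples=100_000, seed=1):
    """Monte Carlo estimate of E[i * min(E_1, ..., E_i)] with E_j ~ Exp(mu) i.i.d.

    All i replicas occupy a server until the first one completes, so the total
    server time spent on the job is i times the minimum (assumption: the
    replicas start service at the same moment).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        first_completion = min(rng.expovariate(mu) for _ in range(num_replicas))
        total += num_replicas * first_completion
    return total / samples

for i in (1, 2, 4):
    print(i, round(mean_aggregate_service(i), 3))  # each close to 1/mu = 1.0
```

Under the same simultaneous-start assumption, deterministic unit service requirements would instead give an aggregate server time of \(i\), which indicates why the exponential case is special.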

Gardner et al. [8] also derived an explicit expression for the expected latency and proved that the latency is decreasing in the number of replicas \(d\). Another approach to deriving these expressions, which can also be applied to other models such as the M / M / K queue with heterogeneous service rates or the MSCCC queue, is given in [5]. Simulation experiments additionally demonstrated greater latency improvements for highly variable service requirements, particularly for heavy-tailed distributions.

We are not aware of any analytical results for the c.o.c. redundancy policy with independent replicas and nonexponential service requirements. Hellemans & Van Houdt [12] consider the c.o.c. policy with identical replicas and derive a differential equation for the marginal workload distribution at each of the servers in a limiting regime where the number of servers grows large. While the differential equation implicitly captures the stability condition, it does not yield an explicit expression, and the derivations for identical replicas rely on highly specific arguments that do not extend to independent replicas. It is also worth observing that the c.o.s. redundancy policy is equivalent to a power-of-d version of the Join-the-Smallest-Workload (JSW) policy; see [6]. Under this policy, polling just two servers suffices to achieve most of the performance gain. Moreover, [6] provides an exact analysis of c.o.s. redundancy in the case of exponential service requirements. While the workload and waiting-time distributions under these policies do not appear analytically tractable for general service requirements, the stability condition is simple and coincides with the nominal condition without any redundancy, since no concurrent service takes place.
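To make the equivalence with power-of-d JSW concrete, here is a minimal sketch of a single c.o.s. arrival. It assumes (our choice) that workloads are kept in a Python list indexed from 0 and that ties go to the server listed first; `cos_arrival` is a hypothetical helper. Since only the replica that starts service is ever worked on, each job adds exactly one service requirement to one server, so no capacity is wasted.

```python
def cos_arrival(workloads, sampled, service):
    """One arrival under cancel-on-start (c.o.s.) redundancy.

    The replica at the sampled server with the smallest workload starts first,
    and the other replicas are cancelled at that moment, so the job simply joins
    that server: power-of-d Join-the-Smallest-Workload. Ties go to the server
    listed first in `sampled`.
    """
    target = min(sampled, key=lambda s: workloads[s])
    new = list(workloads)
    new[target] += service  # exactly one replica is ever served
    return new

# Servers 2 and 4 (indices 1 and 3) are sampled; server 4 has the smaller workload.
print(cos_arrival([4.1, 4.1, 3.5, 2.3], [1, 3], 1.5))  # -> [4.1, 4.1, 3.5, 3.8]
```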

In order to gain further insight into the role of the service requirement distribution, we focus in the present paper on the behavior of the c.o.c. redundancy policy for scaled Bernoulli service requirements. While this is admittedly a rather special case, it provides a typical instance of highly variable service requirements, for which redundancy scheduling is particularly relevant, and it is also of intrinsic merit given the paucity of analytical results for general service requirement distributions.

First of all, we establish a simple sufficient stability condition in terms of a lower bound for the system capacity, i.e., the maximum aggregate load that can be supported. The lower bound is obtained from a stochastic coupling between the maximum workload across all the servers and the workload in a related single-server queue with the same arrival process and a service requirement that corresponds to the minimum service requirement across d replicas. The lower bound for the system capacity grows without bound with (a) the ‘scale’ of the service requirement and (b) the number of replicas d, but remarkably enough (c) does not depend on the number of servers at all (assuming that number to be at least equal to the number of replicas d). The ‘scale’ of the service requirement here refers to its nonzero value relative to its mean and provides a proxy for the degree of variability. The growth in the system capacity with (a) and (b) reflects the huge benefits provided by redundancy scheduling for highly variable service requirements.

In view of (c), the lower bound may at first sight seem loose for a larger number of servers, but we will use a further stochastic comparison argument to prove that it is in fact asymptotically tight when the scale of the service requirement grows suitably large. This implies that increasing the number of replicas significantly increases the system capacity, whereas, asymptotically, adding servers does not. Stated differently, given the number of replicas d, redundancy scheduling ensures that asymptotically just d servers suffice to achieve the capacity achievable with any number of servers, which further highlights the great gains provided by redundancy scheduling for highly variable service requirements.

The remainder of the paper is organized as follows: In Sect. 2, we present a detailed model description and state a sufficient stability condition for generally distributed service requirements. In Sect. 3, we prove that this condition is also asymptotically nearly necessary for scaled Bernoulli service requirements. An upper bound for the expected waiting time is derived in Sect. 4 and in Sect. 5 we provide a conclusion.

2 Workload model and sufficient stability condition

We consider a system with \(N\) parallel servers. Jobs arrive according to a Poisson process with rate \(\lambda \). Each arriving job is replicated and immediately allocated to \(d\) servers chosen uniformly at random (without replacement). The replicas at each server are served in order of arrival (FCFS), and the job is completed as soon as the first replica finishes service, whereafter the other \(d-1\) replicas are instantaneously abandoned. The service requirements of the \(d\) replicas are assumed to be independent and identically distributed (i.i.d.) copies of some random variable \(B\). Note that this model corresponds to the independent runtime (IR) model described in [8].

Let \({\varvec{\omega }}= (\omega _{1},\dots ,\omega _{N})\) denote the workload of the system, where \(\omega _{i}\) is the workload at server i, for \(i=1,\dots ,N\). Here we define workload as the real amount of work, i.e., the amount of work a server needs to complete to become idle in the absence of any arrivals. This may be smaller than the sum of the service requirements of all the replicas at the server, since some replicas may be partly or entirely abandoned; see Example 1. For an arriving job, let \(s_{j}\) and \(b_{j}\) denote the sampled server and the realized service requirement of the jth replica, respectively, for \(j=1,\dots ,d\). The first replica will finish service on server \(s_{j^{*}}\), where \(j^{*} = {{\,\mathrm{arg\,min}\,}}_{j \in \{1,\dots ,d\}}(\omega _{s_{j}} + b_{j} )\). The new workload of server \(s_{j}\) is then \(\max \{\omega _{s_{j^{*}}} + b_{j^{*}}, \omega _{s_{j}}\}\), for \(j=1,\dots ,d\), while the workloads of the other servers are not affected by the arrival.

Example 1

Consider a system with \(N=4\), \(d=2\) and workload state \({\varvec{\omega }}=(4.1,4.1,3.5,2.3)\). Then, after an arrival with service requirements (2.2, 1.5) on servers 2 and 4, the new workload state is \({\varvec{\omega }}_{\text {new}}=(4.1,4.1,3.5,3.8)\).
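The update rule translates directly into code. In the sketch below, the helper names `coc_arrival` and `drain` are hypothetical, servers are indexed from 0, and between arrivals every server is assumed to work at unit rate, in line with the definition of the real workload. Applied to Example 1, it reproduces \({\varvec{\omega }}_{\text {new}}\).

```python
def coc_arrival(workloads, sampled, services):
    """Workload update under c.o.c. redundancy for one arrival.

    workloads: current real workloads (omega_1, ..., omega_N), 0-indexed.
    sampled:   indices s_1, ..., s_d of the d distinct sampled servers.
    services:  realized replica service requirements b_1, ..., b_d.
    """
    # Epoch at which the first replica finishes: min_j (omega_{s_j} + b_j).
    finish = min(workloads[s] + b for s, b in zip(sampled, services))
    new = list(workloads)
    for s in sampled:
        # A sampled server works until that completion or until its own backlog clears.
        new[s] = max(finish, workloads[s])
    return new

def drain(workloads, elapsed):
    """Between arrivals each server works at unit rate until it is idle."""
    return [max(w - elapsed, 0.0) for w in workloads]

# Example 1: N = 4, d = 2, replicas (2.2, 1.5) on servers 2 and 4 (indices 1 and 3).
print(coc_arrival([4.1, 4.1, 3.5, 2.3], [1, 3], [2.2, 1.5]))  # -> [4.1, 4.1, 3.5, 3.8]
```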

Let \({\varvec{\omega }}_{(\cdot )}\) denote the workloads arranged in descending order, thus \({\varvec{\omega }}_{(\cdot )} = \{ {\varvec{\omega }}\in {\mathbb {R}}_{+}^{N}: \omega _{(1)} \ge \omega _{(2)} \ge \cdots \ge \omega _{(N)} \}\). Throughout this paper, we refer to synchronicity as the situation in which all workloads are equal, i.e., \(\omega _{1}=\cdots =\omega _{N}\). Moreover, let \({\mathcal {S}}_{\text {trun}}\) denote the truncated state space of the ordered workload vectors with \({\mathcal {S}}_{\text {trun}} = \{ {\varvec{\omega }}\in {\mathbb {R}}_{+}^{N}: \omega _{(1)} = \cdots = \omega _{(d)} \ge \omega _{(d+1)} \ge \cdots \ge \omega _{(N)} \}\).

The next property states that the \(d\) largest workloads will always be equal from some point onward. We will later see that under certain conditions the system will in fact be in full synchronicity nearly all the time.

Property 1

If \({\varvec{\omega }}\in {\mathcal {S}}_{\text {trun}}\), then \({\varvec{\omega }}_{\text {new}} \in {\mathcal {S}}_{\text {trun}}\), where \({\varvec{\omega }}_{\text {new}}\) is any future workload. In other words, once the largest \(d\) workloads are equal, they will always remain equal.

Proof

Consider the two possible cases. Either (i) \(\min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j}) \le \omega _{(1)}=\dots =\omega _{(d)}\), in which case \(\omega _{\text {new},s_{l}} = \max \{\min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j}),\omega _{s_{l}}\}\le \omega _{(1)}\), for \(l=1,\dots ,d\), so that \(\omega _{(1)}=\dots =\omega _{(d)}=\omega _{\text {new},(1)}=\dots =\omega _{\text {new},(d)}\); or (ii) \( \min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j} ) > \omega _{(1)} = \dots = \omega _{(d)}\), in which case \(\omega _{\text {new},s_{l}}=\max \{\min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j}),\omega _{s_{l}}\} = \min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j})\), for \(l=1,\dots ,d\), so that \(\omega _{\text {new},s_{1}}=\dots =\omega _{\text {new},s_{d}}=\omega _{\text {new},(1)} =\dots = \omega _{\text {new},(d)}\). In both cases \({\varvec{\omega }}_{\text {new}} \in {\mathcal {S}}_{\text {trun}}\). Moreover, between arrivals all workloads decrease at unit rate until they reach 0, which preserves the equality of the \(d\) largest workloads. Thus, by a simple induction argument, there are always \(d\) servers with the same maximum workload. \(\square \)
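Property 1 can also be checked empirically. The sketch below is a hypothetical test harness, not code from the paper: it starts from the empty system (which lies in \({\mathcal {S}}_{\text {trun}}\)), alternates unit-rate draining over exponential inter-arrival times with c.o.c. arrivals, and verifies after every arrival that the \(d\) largest workloads are still equal. The replica distribution (0 with probability 1/2, otherwise uniform on [0, 5)) is an arbitrary assumption chosen only to exercise both cases of the proof.

```python
import random

def coc_arrival(workloads, sampled, services):
    """c.o.c. arrival: sampled servers work until the first replica completes."""
    finish = min(workloads[s] + b for s, b in zip(sampled, services))
    new = list(workloads)
    for s in sampled:
        new[s] = max(finish, workloads[s])
    return new

def top_d_equal(workloads, d):
    """True if the d largest workloads coincide, i.e. the state lies in S_trun."""
    top = sorted(workloads, reverse=True)[:d]
    return top[0] == top[-1]

def check_property_1(n=6, d=2, arrivals=10_000, seed=7):
    rng = random.Random(seed)
    w = [0.0] * n  # empty system: all workloads equal, so it lies in S_trun
    for _ in range(arrivals):
        t = rng.expovariate(1.0)          # one inter-arrival time for all servers
        w = [max(x - t, 0.0) for x in w]  # unit-rate draining
        sampled = rng.sample(range(n), d)
        services = [rng.choice([0.0, 5.0 * rng.random()]) for _ in range(d)]
        w = coc_arrival(w, sampled, services)
        if not top_d_equal(w, d):
            return False
    return True

print(check_property_1(), check_property_1(n=5, d=3, seed=3))  # -> True True
```

Exact floating-point equality is safe here: in case (ii) all sampled servers are set to the same value, and draining applies the same operation to equal values.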

Before stating and proving a sufficient stability condition, we prove the following lemma for generally distributed service requirements.

Lemma 1

The sequence of maximum workloads \(\omega _{(1)}\) at arbitrary epochs is stochastically upper bounded by the sequence of workloads \(\omega _{M/G/1}\) in a corresponding M / G / 1 queue with arrival rate \(\lambda _{M/G/1} = \lambda \) and generic service requirement \(B_{M/G/1}=\min \{ B_{1},\dots ,B_{d}\}\), provided that the initial maximum workload \(\omega _{(1)}\) does not exceed the initial workload in the M / G / 1 queue.

Proof

The proof follows by induction over the arrival epochs, coupling both systems through the same arrival times and the same replica service requirements. By assumption, the statement holds in the initial state. Between arrivals, both \(\omega _{(1)}\) and \(\omega _{M/G/1}\) decrease at unit rate until they reach 0, so the inequality is preserved. Now assume that \(\omega _{(1)} \le \omega _{M/G/1}\) just before the \((k+1)\)th arrival. Then, after this arrival, the new workload at each sampled server is \(\omega _{\text {new},s_{l}} = \max \{\min _{j \in \{1,\dots ,d\}} (\omega _{s_{j}} + b_{j}),\omega _{s_{l}}\} \le \max \{\min _{j \in \{1,\dots ,d\}} (\omega _{(1)} + b_{j}),\omega _{(1)}\} = \omega _{(1)} + \min _{j \in \{1,\dots ,d\}} b_{j}\), for \(l=1,\dots ,d\), since \(\omega _{i} \le \omega _{(1)}\) for all \(i=1,\dots ,N\), while the workloads at the other servers do not change. Thus the increase in the maximum workload is bounded by \(\min _{j \in \{1,\dots ,d\}} b_{j}\), which is exactly the increase in workload in the corresponding M / G / 1 queue. \(\square \)
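The coupling in this proof can be reproduced in a short simulation. In the sketch below (hypothetical helper `max_excess`, with Exp(1) replica requirements assumed purely for illustration), the c.o.c. system and the dominating M / G / 1 queue are driven by the same inter-arrival times and the same replica requirements \(b_{1},\dots ,b_{d}\), the M / G / 1 job receiving \(\min _{j} b_{j}\). Both start empty, so the initial condition of Lemma 1 holds, and the largest observed value of \(\omega _{(1)} - \omega _{M/G/1}\) just after arrivals should never be positive.

```python
import random

def max_excess(n=5, d=2, lam=0.5, arrivals=20_000, seed=11):
    """Largest value of max_i(omega_i) - V_{M/G/1} observed just after arrivals."""
    rng = random.Random(seed)
    w = [0.0] * n  # c.o.c. system, initially empty
    v = 0.0        # coupled M/G/1 workload, initially empty
    worst = float("-inf")
    for _ in range(arrivals):
        t = rng.expovariate(lam)
        w = [max(x - t, 0.0) for x in w]  # both systems drain at unit rate
        v = max(v - t, 0.0)
        sampled = rng.sample(range(n), d)
        b = [rng.expovariate(1.0) for _ in range(d)]  # assumed Exp(1) replicas
        finish = min(w[s] + bj for s, bj in zip(sampled, b))
        for s in sampled:
            w[s] = max(finish, w[s])
        v += min(b)  # M/G/1 job size min(b_1, ..., b_d)
        worst = max(worst, max(w) - v)
    return worst

print(max_excess() <= 0.0)  # -> True
```

With `n = d = 2` the excess is exactly 0 at every arrival, in line with the observation below that for \(N=d\) the system behaves exactly as the corresponding M / G / 1 queue.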

Remark 1

Observe that in synchronicity, in which all servers have the maximum workload, the bound \(\min _{j \in \{1,\dots ,d\}} b_{j}\) is tight, since here every arrival adds exactly \(\min _{j \in \{1,\dots ,d\}} b_{j}\) work to each of the \(d\) sampled servers.

Proposition 1

A sufficient stability condition is

\[ \lambda \, {\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] < 1. \tag{1} \]

Proof

By Lemma 1, we know that the maximum workload in the system is bounded by the workload in a corresponding M / G / 1 queue with arrival rate \(\lambda _{M/G/1} = \lambda \) and generic service requirement \(B_{M/G/1} = \min \{ B_{1},\dots ,B_{d}\}\). The (necessary and sufficient) stability condition for the latter M / G / 1 queue is given by

\[ \lambda \, {\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] < 1, \]

which is the condition stated in the proposition.

\(\square \)

Remark 2

Note that Property 1, Lemma 1 and Proposition 1 have analogous versions in the case of identical replicas, with \({\mathbb {E}} [\min \{ B_{1},\dots ,B_{d}\}]\) replaced by \({\mathbb {E}}[B]\).

In the case \(N= d\), the above condition is not only sufficient but in fact also necessary, since the system then behaves exactly as the corresponding M / G / 1 queue, as also becomes apparent from [15]. In the case \(N> d\), the above condition is no longer necessary in general; see also the stability condition for exponential service requirements in [8]. However, we will show that, surprisingly, it is asymptotically nearly necessary for independent scaled Bernoulli service requirements, which are defined as

\[ B = \begin{cases} XK & \text{with probability } 1-p, \\ 0 & \text{with probability } p, \end{cases} \]

where K is a fixed positive real number, and X is a general strictly positive random variable with \({\mathbb {E}}[X] = 1\). Moreover, we assume that \({\mathbb {E}}[B]=1\), which implies that \(p = 1 - 1/K\).

For notational convenience, we label jobs for which none of the \(d\) replicas have service requirement 0 as type-A jobs. For a type-A job \((X_{1}K,\dots ,X_{d}K)\) are the service requirements of the replicas at the \(d\) sampled servers, where the random variables \(X_{1},\dots ,X_{d}\) are i.i.d. copies of X. Jobs for which at least one replica but at most \(d-1\) replicas have service requirement equal to 0 are called type-B jobs, and jobs for which all \(d\) replicas have service requirement equal to 0 are called type-C jobs.

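From Proposition 1, it follows that for independent scaled Bernoulli service requirements the sufficient stability condition reduces to

\[ (1-p)^{d}\lambda K\, {\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}] < 1, \quad \text{i.e.,} \quad \lambda < \frac{K^{d-1}}{{\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}]}, \tag{2} \]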

since type-A jobs, which arrive at rate \((1-p)^{d} \lambda = \lambda /K^{d}\), have service requirement \(\min \{ X_{1}K,\dots , X_{d}K \}\), whereas all other jobs have service requirements for which \(\min \{ B_{1},\dots , B_{d} \} = 0\).
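For concreteness, the following sketch samples the scaled Bernoulli distribution and evaluates the sufficient capacity bound \(K^{d-1}/{\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}]\), obtained from \((1-p)^{d}\lambda K\,{\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}] < 1\) with \(1-p = 1/K\). The function names are hypothetical. For the two choices of X used here, \({\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}]\) is known exactly: 1 for \(X \equiv 1\) and \(1/d\) for \(X \sim \text {Exp}(1)\). Note that the number of servers \(N\) does not appear, in line with observation (c) in the introduction.

```python
import random

def scaled_bernoulli(K, sample_x, rng):
    """One draw of B: X*K with probability 1 - p = 1/K, and 0 otherwise."""
    return K * sample_x(rng) if rng.random() < 1.0 / K else 0.0

def sufficient_capacity(K, d, mean_min_x):
    """Largest lambda allowed by the sufficient condition:
    lambda < K**(d - 1) / E[min(X_1, ..., X_d)]. N plays no role."""
    return K ** (d - 1) / mean_min_x

rng = random.Random(0)
draws = [scaled_bernoulli(10.0, lambda r: 1.0, rng) for _ in range(200_000)]
print(sum(draws) / len(draws))           # close to E[B] = 1
print(sufficient_capacity(100, 2, 1.0))  # X = 1: 100.0
print(sufficient_capacity(100, 2, 0.5))  # X ~ Exp(1), E[min] = 1/2: 200.0
```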

3 Asymptotically necessary stability condition

In this section, we shall prove that the sufficient stability condition (2) is in fact also asymptotically nearly necessary. The proof relies on the property that the system is most of the time in synchronicity as K grows large.

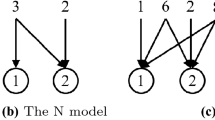

In preparation for the proof let us first define a measure for synchronicity. Let the surplus workload, denoted by \(\omega ^{+}\), be the sum of the (element-wise) differences between the maximum workload and the workload at server i for \(i=1,\dots ,N\), i.e., \(\omega ^{+} = \sum _{i=1}^{N} \left( \omega _{(1)} - \omega _{i} \right) \); see Fig. 1 for a visual representation. Note that \(\omega ^{+} = 0\) if and only if the system is in synchronicity.
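As a small illustration (hypothetical helper name), the surplus workload is a one-line computation; for the initial state \({\varvec{\omega }}=(4.1,4.1,3.5,2.3)\) of Example 1 it equals \(0.6 + 1.8 = 2.4\).

```python
def surplus_workload(workloads):
    """omega^+ = sum_i (omega_(1) - omega_i); it is 0 exactly in synchronicity."""
    top = max(workloads)
    return sum(top - w for w in workloads)

print(surplus_workload([4.1, 4.1, 3.5, 2.3]))  # approximately 2.4 (floating point)
print(surplus_workload([3.0, 3.0, 3.0]))       # 0.0: synchronicity
```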

In order to prove that the system is in synchronicity nearly all the time, we introduce an auxiliary system which is the same as our system except for three differences. In the auxiliary system (i) the workload at each server only decreases over time when in synchronicity, (ii) all type-A jobs are allocated to the first \(d\) ordered servers and (iii) only specific type-B jobs, so-called type-\(B_{1}\) jobs, are considered and the other type-B jobs are omitted. We define type-\(B_{1}\) jobs as ones for which \(d-1\) replicas, with at least one replica with service requirement equal to 0, are allocated to the first \(d-1\) ordered servers and one replica with service requirement \(X_{d} K\) to the \(N\)th ordered server, i.e., the server with the lowest current workload.

Below we comment on the properties of the surplus workload \({\tilde{\omega }}^{+}\) in the auxiliary system.

Property 2

The surplus workload in the auxiliary system, \({\tilde{\omega }}^{+}\), experiences downward jumps at the instants of a Poisson process of rate \(\frac{(N-d)!}{N!} (1-p)p^{d-1} \lambda \), which is exactly the arrival rate of type-\(B_{1}\) jobs. The sizes of the downward jumps are equal to \(\min \{ {\tilde{\omega }}_{(1)} - {\tilde{\omega }}_{(N)}, X_{d} K \}\).

Note that the surplus workload in the original system, \(\omega ^{+}\), experiences downward jumps at a higher rate than \({\tilde{\omega }}^{+}\), since in the original system not only type-\(B_{1}\) jobs decrease the surplus workload. Moreover, the sizes of the downward jumps in the two systems can differ, since they depend on the respective workloads, which are not necessarily equal.

Property 3

The surplus workload in the auxiliary system, \({\tilde{\omega }}^{+}\), experiences upward jumps of size exactly \((N-d) \min \{ X_{1},\dots ,X_{d}\} K\) at the epochs of a Poisson process of rate \((1-p)^{d}\lambda \), which is the arrival rate of type-A jobs.

Note that the surplus workload in the original system, \(\omega ^{+}\), experiences upward jumps of smaller or equal size, since type-A jobs add at most \(\min \{ X_{1},\dots ,X_{d}\} K\) work to the current maximum workload; see Remark 1.
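The two rates in Properties 2 and 3 can be evaluated directly with \(p = 1-1/K\). The sketch below (hypothetical function name) does so. For \(d \ge 2\) the type-\(B_{1}\) rate is of order \(\lambda /K\), while the type-A rate is \(\lambda /K^{d}\), so for large K downward jumps become far more frequent than upward jumps, which is the intuition behind Lemma 3. The reciprocal of the type-A rate, \(K^{d}/\lambda \), is the mean time between type-A arrivals.

```python
from math import factorial

def auxiliary_jump_rates(lam, K, d, N):
    """Poisson rates of upward (type-A) and downward (type-B1) jumps of the
    auxiliary surplus workload, with p = 1 - 1/K (Properties 2 and 3)."""
    p = 1.0 - 1.0 / K
    up = (1.0 - p) ** d * lam
    down = factorial(N - d) / factorial(N) * (1.0 - p) * p ** (d - 1) * lam
    return up, down

for K in (10.0, 100.0, 1000.0):
    # lam / K = 0.5 is kept fixed (our choice); for N = 4 and d = 2 the ratio
    # down / up equals (1 - 1/K) * K / 12, so it grows roughly linearly in K.
    up, down = auxiliary_jump_rates(lam=0.5 * K, K=K, d=2, N=4)
    print(K, up, down, down / up)
```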

The number of jumps, denoted by Z, to reach synchronicity in the auxiliary system when only considering downward jumps is equal to the total number of type-\(B_{1}\) jobs that are needed at each server to bridge the difference between the maximum workload and the workload at this server. Thus, the expectation of the number of jumps to reach synchronicity, when only considering type-\(B_{1}\) jobs and starting in the initial workload state \({\varvec{\tilde{\omega }}}\), where \({\varvec{\tilde{\omega }}}\in {\mathcal {S}}_{\text {trun}}\), is

where \(S_{n} = \sum _{j=1}^{n} X_{j}\) and the renewal function m (cf. [11, Definition 10.1.6]) is given by \(m(t) = {\mathbb {E}}[ N(t)]\), with \(N(t) = \max \{n: S_{n} \le t \}\). Note that the third line holds with equality if \(\frac{{\tilde{\omega }}^{+}}{K} \not \in {\mathbb {N}}\).
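The count of type-\(B_{1}\) jumps needed at a single server can be simulated to see the renewal function at work. In the hypothetical sketch below, a gap g at one server is closed by successive jumps of size \(\min \{\text {remaining gap}, X K\}\), so the number of jumps is \(\min \{n: S_{n} \ge g/K\}\), which equals \(N(g/K)+1\) whenever no partial sum hits \(g/K\) exactly. For \(X \sim \text {Exp}(1)\) its mean is therefore \(m(g/K)+1 = g/K + 1\); for \(X \equiv 1\) and integer \(g/K\) it is one less than \(m(g/K)+1\), which illustrates why integrality matters in the equality condition above.

```python
import random

def jumps_to_close_gap(gap_over_k, sample_x, rng):
    """Jumps of size min(remaining gap, X*K) needed to close a gap g, with the gap
    measured in units of K: returns min{n : S_n >= g/K}."""
    n, s = 0, 0.0
    while s < gap_over_k:
        s += sample_x(rng)
        n += 1
    return n

def mean_jumps(gap_over_k, sample_x, samples=100_000, seed=5):
    """Monte Carlo estimate of the expected number of jumps for one gap."""
    rng = random.Random(seed)
    return sum(jumps_to_close_gap(gap_over_k, sample_x, rng)
               for _ in range(samples)) / samples

print(mean_jumps(2.5, lambda r: r.expovariate(1.0)))  # close to m(2.5) + 1 = 3.5
print(mean_jumps(2.5, lambda r: 1.0, samples=10))     # 3.0 = floor(2.5) + 1
print(mean_jumps(2.0, lambda r: 1.0, samples=10))     # 2.0 < m(2) + 1 = 3
```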

To prove an asymptotically necessary stability condition, we first need the following two lemmas. Lemma 2 states that the surplus workload in the auxiliary system stochastically dominates the surplus workload in the original system, and Lemma 3 states that, as K grows large, the surplus workload in the auxiliary system equals 0 for a large fraction of the time in the long run. Together, Lemmas 2 and 3 imply that, as K grows large, the original system is also in synchronicity for a large fraction of the time in the long run. This in turn implies that almost every arriving job adds \(B_{M/G/1} = \min \{B_{1},\dots ,B_{d} \}\) to the maximum workload. Observe that this is exactly the upper bound from Lemma 1, which resulted in the sufficient stability condition.

Let \(\{ {\varvec{\omega }}(t) , \omega ^{+}(t) \}_{t \ge 0}\) denote the stochastic process that describes the evolution of the workload vector \({\varvec{\omega }}= (\omega _{1},\dots ,\omega _{N})\) and the surplus workload \(\omega ^{+}\) over time. We further introduce a stochastic process \(\{ {\tilde{{\varvec{\omega }}}}(t) , {\tilde{\omega }}^{+}(t) \}_{t \ge 0}\) that describes the evolution of the workload vector \({\varvec{\tilde{\omega }}}= ({\tilde{\omega }}_{1},\dots ,{\tilde{\omega }}_{N})\) and the surplus workload \({\tilde{\omega }}^{+}\) of the auxiliary system over time, with \({\tilde{\omega }}^{+}(t) = \sum _{i=1}^{N} \left( {\tilde{\omega }}_{(1)}(t) - {\tilde{\omega }}_{i}(t) \right) \).

Lemma 2

The workload vectors of the auxiliary system and the original system satisfy the inequality \({\tilde{\omega }}_{(1)}(t)-{\tilde{\omega }}_{(i)}(t) \ge \omega _{(1)}(t) - \omega _{(i)}(t)\) for all \(t \ge 0\) and \(i=1,\dots ,N\) when both systems experience the same arrivals and the same generic service requirements, and start in the same initial workload state \({\varvec{\tilde{\omega }}}\in {\mathcal {S}}_{\text {trun}}\), i.e., \({\varvec{\tilde{\omega }}}(0)={\varvec{\omega }}(0)={\varvec{\tilde{\omega }}}\) and \({\tilde{\omega }}_{(1)}=\dots ={\tilde{\omega }}_{(d)}\).

Proof

Since both systems start in the same initial workload state it follows that \({\tilde{\omega }}_{(1)}(0)-{\tilde{\omega }}_{(i)}(0) = \omega _{(1)}(0) - \omega _{(i)}(0)\), for \(i=1,\dots ,N\). Moreover, by Property 1, it follows that \({\tilde{\omega }}_{(1)}(t)-{\tilde{\omega }}_{(i)}(t) = \omega _{(1)}(t) - \omega _{(i)}(t) = 0\) for \(t \ge 0\) and \(i=1,\dots , d\). We prove the statement for \(i=d+1,\dots ,N\) by induction in time. Assume that \({\tilde{\omega }}_{(1)}(t_{1})-{\tilde{\omega }}_{(i)}(t_{1}) \ge \omega _{(1)}(t_{1}) - \omega _{(i)}(t_{1})\), then it should hold that \({\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2}) \ge \omega _{(1)}(t_{2}) - \omega _{(i)}(t_{2})\) for \(t_{2} > t_{1}\), when considering all the events that can occur between times \(t_{1}\) and \(t_{2}\):

-

When no arrivals occur only the value of \(\omega ^{+}(t)\) can decrease over time, since the workload at each server in the auxiliary system only decreases over time in synchronicity. Thus, it follows that \(\omega _{(1)}(t_{1}) - \omega _{(i)}(t_{1}) \ge \omega _{(1)}(t_{2}) - \omega _{(i)}(t_{2})\) (which is a strict inequality in the case \(\omega _{(i)}(t_{2})=0\)), whereas \({\tilde{\omega }}_{(1)}(t_{1})-{\tilde{\omega }}_{(i)}(t_{1}) = {\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2})\).

-

In the case of an arrival of a type-A job, the value of \({\tilde{\omega }}^{+}(t)\) increases by exactly \((N-d) \min \{ X_{1},\dots ,X_{d}\} K\), whereas the value of \(\omega ^{+}(t)\) increases by at most \((N-d) \min \{ X_{1},\dots ,X_{d}\} K\); see the proof of Lemma 1 and Property 3. Also, note that a type-A job in the auxiliary system is always allocated to the first \(d\) ordered servers, instead of \(d\) servers sampled uniformly at random. Thus, it follows that \(\min \{ X_{1},\dots ,X_{d}\} K = {\tilde{\omega }}_{(1)}(t_{2}) - {\tilde{\omega }}_{(1)}(t_{1}) \ge \omega _{(1)}(t_{2}) - \omega _{(1)}(t_{1})\) and \(0 ={\tilde{\omega }}_{(i)}(t_{2}) - {\tilde{\omega }}_{(i)}(t_{1}) \le \omega _{(i)}(t_{2}) - \omega _{(i)}(t_{1})\). Combining the latter two inequalities yields \({\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2}) \ge \omega _{(1)}(t_{2})-\omega _{(i)}(t_{2})\).

-

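In the case of an arrival of a type-B job other than a type-\(B_{1}\) job, which is omitted in the auxiliary system, only the value of \(\omega ^{+}(t)\) can decrease, since at least one replica has service requirement 0, so that no workload can be raised above \(\omega _{(1)}(t_{1})\). Thus, it follows that \(\omega _{(1)}(t_{1}) - \omega _{(i)}(t_{1}) \ge \omega _{(1)}(t_{2}) - \omega _{(i)}(t_{2})\) (which is a strict inequality in the case of a type-B job that adds workload to server i), whereas \({\tilde{\omega }}_{(1)}(t_{1})-{\tilde{\omega }}_{(i)}(t_{1}) = {\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2})\). An arrival of a type-C job adds no work and leaves both systems unchanged.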

-

In the case of an arrival of a type-\(B_{1}\) job, the value of \({\tilde{\omega }}^{+}(t)\) decreases by \(\min \{ {\tilde{\omega }}_{(1)}(t_{1}) - {\tilde{\omega }}_{(N)}(t_{1}), X_{d} K \}\), whereas the value of \(\omega ^{+}(t)\) decreases by \(\min \{ \omega _{(1)}(t_{1}) - \omega _{(N)}(t_{1}), X_{d} K \}\). Observe that the decrement in the value of \({\tilde{\omega }}^{+}(t)\) can be greater than the decrement in the value of \(\omega ^{+}(t)\), see Property 2, but only if \(\omega _{(1)}(t_{2}) - \omega _{N*}(t_{2}) = 0\), where \(N*\) is the server at time \(t_{2}\) that had the minimum workload at time \(t_{1}\) (which is not necessarily the server with minimum workload at time \(t_{2}\)). Therefore, it follows that \({\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2}) \ge \omega _{(1)}(t_{2})-\omega _{(i)}(t_{2})\).

We conclude that in all scenarios it still holds that \({\tilde{\omega }}_{(1)}(t_{2})-{\tilde{\omega }}_{(i)}(t_{2}) \ge \omega _{(1)}(t_{2}) - \omega _{(i)}(t_{2})\). \(\square \)

Summing the inequality of Lemma 2 over \(i=1,\dots ,N\) shows that \({\tilde{\omega }}^{+}(t) \ge \omega ^{+}(t)\) for all \(t \ge 0\); hence the surplus workload in the auxiliary system, \({\tilde{\omega }}^{+}(t)\), stochastically dominates the surplus workload \(\omega ^{+}(t)\) when starting in the same initial workload state; see Fig. 2. We now prove that, as K grows large, \({\tilde{\omega }}^{+}(t)\) equals 0 for a large fraction of the time in the long run.

Lemma 3

For every \(\epsilon >0\) there exists \(K_{\epsilon }(d,N)\) such that, for all \(K > K_{\epsilon }(d,N)\), \({\tilde{\omega }}^{+}(t)\) equals 0 for at least a fraction \((1-\epsilon )\) of the time in the long run.

Proof

Starting in synchronicity, let \(\tau _{1} :=\inf \{t \ge 0 | {\tilde{\omega }}^{+}(t)>0 \}\) denote the time during which \({\tilde{\omega }}^{+}(t)\) remains equal to 0. Note that \(\tau _{1}\) is the time until the next upward jump, i.e., until the next arrival of a type-A job; see Property 3. Therefore \(\tau _{1}\) is exponentially distributed, and its expectation is given by

\[ {\mathbb {E}}[\tau _{1}] = \frac{1}{(1-p)^{d}\lambda } = \frac{K^{d}}{\lambda }. \]

Denote the time that the workload in the auxiliary system remains in non-synchronicity, i.e., the time that \({\tilde{\omega }}^{+}(t) > 0\) when starting in initial workload state \({\varvec{\tilde{\omega }}}(0) = {\varvec{\tilde{\omega }}}\), where \({\varvec{\tilde{\omega }}}\in {\mathcal {S}}_{\text {trun}}\), by \(\tau _{2} := \inf \{t \ge 0 | {\tilde{\omega }}^{+}(t)=0 \}\). Moreover, let \(\{ Y | {\varvec{\tilde{\omega }}}(0) = {\varvec{\tilde{\omega }}}\}\) denote the number of increments in the value of \({\tilde{\omega }}^{+}(t)\) before reaching synchronicity when starting in \({\varvec{\tilde{\omega }}}\in {\mathcal {S}}_{\text {trun}}\). Then the expectation of \(\tau _{2}\) is

with \({\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}] \le {\mathbb {E}}[X] = 1\). The second equality results from Wald’s equation, i.e., the equality between the expected time to reach synchronicity (given the number of upward jumps) and the expected number of downward jumps (given the number of upward jumps) multiplied by the expected time between such downward jumps. The inequality in the next step follows from the proof of Lemma 1, which implies that the surplus workload increases by at most \((N-d) \min \{ X_{1},\dots ,X_{d}\} K\) per upward jump, together with the bound on the expected number of downward jumps (given the number of upward jumps), i.e., Eq. (3).

Together with Wald’s equation

we can bound the expected time in non-synchronicity, namely

under the assumption that \(\frac{(N-d-1)!}{N!}(1-\frac{1}{K})^{d-1} > \frac{ m({\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}])+1}{K^{d-1}}\). Moreover, \(m(\frac{{\tilde{\omega }}^{+}}{K}) \downarrow 0\) as K grows large, and by renewal theory (cf. [11]) we know that \(m({\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}] ) < \infty \) since \({\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}] \le 1\).

Now if we choose \(K_{\epsilon }(d,N)\) such that for \(K=K_{\epsilon }(d,N)\) one has

then for all \(K > K_{\epsilon }(d,N)\) it follows that

This completes the proof that, in the long run, the auxiliary surplus workload equals 0 for at least a fraction \((1-\epsilon )\) of the time. \(\square \)

Remark 3

So far it has been assumed that in the initial state the first \(d\) ordered workloads are equal, but this assumption is not necessary. One can show via an approach analogous to Lemma 3, but with the bound \({\mathbb {E}}[Z ] < N\big ( m(\frac{{\tilde{\omega }}^{+}}{K}) + 1 \big )\), that the expected time to reach synchronicity when starting in an arbitrary initial workload state is still finite. Note that the assumption holds once synchronicity has been reached, and that directly after synchronicity ends, \({\tilde{\omega }}^{+}(t)=\omega ^{+}(t) = (N-d) \min \{ X_{1},\dots ,X_{d}\}K\).

Now we are ready to prove the main theorem of the paper.

Theorem 1

For every \(\epsilon >0\) there exists \(K_{\epsilon }(d,N)\) such that, for all \(K > K_{\epsilon }(d,N)\), a necessary stability condition for independent scaled Bernoulli service requirements is

\[ \lambda < \frac{K^{d-1}}{(1-\epsilon )\, {\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}]}. \]

Proof

From Lemma 2, we know that \({\tilde{\omega }}^{+}(t)\) stochastically dominates \(\omega ^{+}(t)\), and Lemma 3 states that for every \(\epsilon >0\) there exists \(K_{\epsilon }(d,N)\) such that for all \(K > K_{\epsilon }(d,N)\) the value of \({\tilde{\omega }}^{+}(t)\) is at least a fraction \((1-\epsilon )\) of the time equal to 0 in the long term. Hence, this latter statement also holds for the value of \(\omega ^{+}(t)\). Moreover, by definition, if \(\omega ^{+}(t) = 0\), then the system is in synchronicity. In synchronicity, type-A jobs add exactly \(\min \{ X_{1},\dots ,X_{d}\} K\) work to the sampled servers. We conclude that, independent of the behavior in non-synchronicity, in the long term at least a fraction \((1-\epsilon )\) of the type-A jobs adds exactly \(\min \{ X_{1},\dots ,X_{d}\} K\) work to the current maximum workload. Thus, for the system to be stable it should at least be able to handle these latter type-A jobs. \(\square \)

Remark 4

The expected time in non-synchronicity depends on the renewal function m(t); see Lemma 3. This function in turn depends on the distribution of the X component in the service requirement distribution \(B\). For some distributions an explicit expression for m(t) is known (cf. [11]):

-

\(X \equiv 1\): \(m(t) = \lfloor t \rfloor \),

-

\(X \sim \text {Exp}(1)\): \(m(t) = t\),

-

\(X \sim \text {Unif}[0,2]\): \(m(t)+1 = \sum _{i=0}^{\lfloor t/2 \rfloor } (-1)^{i} \frac{(t/2-i)^{i}}{i!} e^{t/2-i}\).
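The closed forms above can be cross-checked against a direct simulation of \(N(t) = \max \{n: S_{n} \le t\}\). The sketch below is an illustrative check with hypothetical function names; the Unif[0,2] formula is evaluated exactly as stated in this remark.

```python
import math
import random

def m_uniform_0_2(t):
    """Remark 4 for X ~ Unif[0, 2]:
    m(t) + 1 = sum_{i=0}^{floor(t/2)} (-1)^i (t/2 - i)^i / i! * exp(t/2 - i)."""
    u = t / 2.0
    total = sum((-1) ** i * (u - i) ** i / math.factorial(i) * math.exp(u - i)
                for i in range(math.floor(u) + 1))
    return total - 1.0

def m_monte_carlo(t, sample_x, samples=100_000, seed=3):
    """Estimate m(t) = E[N(t)], where N(t) = max{n : S_n <= t}."""
    rng = random.Random(seed)
    count = 0
    for _ in range(samples):
        s = sample_x(rng)
        while s <= t:  # S_n <= t: the nth renewal has occurred by time t
            count += 1
            s += sample_x(rng)
    return count / samples

t = 3.0
print(m_uniform_0_2(t), m_monte_carlo(t, lambda r: r.uniform(0.0, 2.0)))
print(t, m_monte_carlo(t, lambda r: r.expovariate(1.0)))           # Exp(1): m(t) = t
print(math.floor(t), m_monte_carlo(t, lambda r: 1.0, samples=10))  # X = 1: floor(t)
```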

4 Numerical results

In Sect. 3 it is proven that, for scaled Bernoulli distributed service requirements and K large enough, the system is in synchronicity for a large fraction of the time in the long run. In this section, we use simulation to quantify this statement for various values of \(N\) and K, with \(d=2\) fixed.

In Fig. 3, the long-term fraction of time in synchronicity is depicted as a function of K for various values of \(N\). The ratio \(\frac{\lambda }{K}\) is kept constant to ensure that the workload in the system is approximately equal for all K. The system with \(N=d\) is always in synchronicity, as follows from Property 1. Moreover, the long-term fraction of time in synchronicity is higher for lower values of \(\frac{\lambda }{K}\), because the empty state is included in the definition of synchronicity and a lower load makes the empty state more likely. Another observation is that, for fixed \(\lambda \) and K, increasing \(N\) decreases the long-term fraction of time in synchronicity. This is related to the fact that \(K_{\epsilon }(d, N)\) defined in Lemma 3 depends on \(N\).

Figure 4 shows both the sufficient and the nearly necessary stability conditions as functions of K for \(N=10\) and \(d=2\). Note that the lines in the figure are not parallel: for \(K=5000\) the difference is 1000, whereas for \(K=10{,}000\) it is 850. In fact, the difference vanishes as K increases. Another observation, obtained by simulation and not depicted in the figure, is that the actual stability condition of this system is (almost) equal to the sufficient stability condition. This suggests that the nearly necessary stability condition tends to be a loose bound for finite values of K.

In Lemma 1 we proved that the maximum workload is bounded by the workload in a corresponding M / G / 1 queue. For independent scaled Bernoulli service requirements, it follows that the maximum workload is bounded by the workload in an M / G / 1 queue with arrival rate \(\lambda _{M/G/1}(K) = (1-p)^{d}\lambda \) and service requirement \(B_{M/G/1}(K) =\min \{ X_{1},\dots ,X_{d}\}K\), since every job other than a type-A job has \(\min \{ B_{1},\dots , B_{d} \} = 0\). This yields an upper bound on the expected waiting time: an arriving job waits at most for the current maximum workload, which is bounded by the workload \(V_{M/G/1}\) in the corresponding M / G / 1 queue. From M / G / 1 theory (cf. [4, Section X.3]) we get
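
\[ {\mathbb {E}}[W] \le {\mathbb {E}}[V_{M/G/1}] = \frac{(1-p)^{d}\lambda K^{2}\, {\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}^{2}]}{2\left( 1-(1-p)^{d}\lambda K\, {\mathbb {E}}[\min \{ X_{1},\dots ,X_{d}\}]\right) }. \]
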
Note that this bound is tight for \( N= d\), since the system then behaves exactly as the corresponding M / G / 1 queue, and it is asymptotically tight in K for \(N> d\). For constant \(X \equiv 1\) and \(d=2\), the normalization \({\mathbb {E}}[B_{i}] = 1\) gives \(1-p = 1/K\), and we get

\[ {\mathbb {E}}[W] \le \frac{\lambda }{2\left( 1-\frac{\lambda }{K}\right) }, \qquad (5) \]

which is linear in K if we assume that \( \frac{\lambda }{K}\) is fixed. Notice that this upper bound does not depend on the number of servers.
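
The bound can be evaluated directly from the Pollaczek-Khinchine mean-workload formula. The sketch below assumes \(1-p = 1/(K\,{\mathbb {E}}[X])\) (so that \({\mathbb {E}}[B_i]=1\)) and takes the first two moments of \(\min \{X_1,\dots ,X_d\}\) as inputs; the function name is hypothetical. For \(X \equiv 1\) and \(d=2\) it should reproduce \(\lambda /(2(1-\lambda /K))\), and note that N does not appear anywhere.

```python
def waiting_time_bound(lam, K, d, m1_minX, m2_minX, mean_X=1.0):
    """E[V_{M/G/1}], an upper bound on the expected waiting time.

    m1_minX, m2_minX: first and second moments of min{X_1, ..., X_d}.
    """
    q = 1.0 / (K * mean_X)           # q = 1 - p, chosen so that E[B_i] = 1
    lam_mg1 = q ** d * lam           # arrival rate of type-A jobs
    eb = K * m1_minX                 # E[B_{M/G/1}] with B_{M/G/1} = K min{X_i}
    eb2 = K ** 2 * m2_minX           # E[B_{M/G/1}^2]
    rho = lam_mg1 * eb
    if rho >= 1.0:
        return float("inf")          # sufficient stability condition violated
    return lam_mg1 * eb2 / (2.0 * (1.0 - rho))   # Pollaczek-Khinchine


if __name__ == "__main__":
    # X == 1, d = 2, lambda / K = 0.5: compare with the closed form
    for K in (10, 100, 1000):
        lam = 0.5 * K
        print(K, waiting_time_bound(lam, K, 2, 1.0, 1.0), lam / (2 * (1 - lam / K)))
```

With \(\lambda /K\) fixed, doubling K doubles the bound, in line with the linear growth noted above.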

Fig. 5 Expected waiting time (obtained by simulation) for the setting \(d=2\), \(X \equiv 1\) and \(\frac{\lambda }{K} = 0.5\) (top) and \(\frac{\lambda }{K} = 0.9\) (bottom). Note that \(N=2\) corresponds to the upper bound given in (5).

Figure 5 shows the expected waiting time as a function of K for various values of \(N\); again we kept the ratio \(\frac{\lambda }{K}\) constant to ensure that the workload is approximately equal for all K. Comparing the two panels, we conclude that, for finite K, the number of servers \(N\) influences the expected waiting time more than the value of \(\frac{\lambda }{K}\) does. Moreover, for larger \(N\), a larger K is needed for the upper bound to be accurate.
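
A simulation of this kind can be sketched with a simple workload recursion. This is our own sketch, not the authors' simulator: it assumes FCFS service at every server, \(X \equiv 1\) with \(1-p = 1/K\), and interprets the waiting time as the time until the first replica starts, i.e., the minimum workload over the sampled servers. On the same sample path it tracks an M / G / 1 workload that jumps by \(\min \{B_1,\dots ,B_d\}\) at every arrival; since a job completes within the current maximum workload plus \(\min \{B_1,\dots ,B_d\}\), the maximum workload never exceeds this coupled workload. The function `simulate_coc` is hypothetical.

```python
import random


def simulate_coc(N, d, lam, K, n_jobs, seed=0):
    """Sketch: c.o.c. redundancy, FCFS servers, B_i = K w.p. 1/K else 0 (X == 1).

    Returns (mean waiting time seen by arrivals,
             mean workload seen by arrivals in the coupled M/G/1 queue).
    """
    rng = random.Random(seed)
    V = [0.0] * N            # current workload at each server
    v_mg1 = 0.0              # coupled M/G/1 workload (same arrivals, same B's)
    sum_w = sum_mg1 = 0.0
    for _ in range(n_jobs):
        tau = rng.expovariate(lam)                # Poisson inter-arrival time
        V = [max(v - tau, 0.0) for v in V]        # servers work at unit rate
        v_mg1 = max(v_mg1 - tau, 0.0)
        S = rng.sample(range(N), d)               # d distinct servers, uniformly
        B = [float(K) if rng.random() < 1.0 / K else 0.0 for _ in S]
        sum_w += min(V[j] for j in S)             # wait until first replica starts
        sum_mg1 += v_mg1
        T = min(V[j] + b for j, b in zip(S, B))   # first replica completion time
        for j in S:
            # replica j occupies server j until T, or is cancelled before starting
            V[j] = max(V[j], T)
        v_mg1 += min(B)
    return sum_w / n_jobs, sum_mg1 / n_jobs


if __name__ == "__main__":
    K, lam = 4, 2.0                        # lambda / K = 0.5
    bound = lam / (2 * (1 - lam / K))      # bound (5)
    for N in (2, 5, 10):
        w, _ = simulate_coc(N, 2, lam, K, 100_000)
        print(N, round(w, 3), bound)
```

For \(N=d\) the recursion keeps both workloads equal to the coupled M / G / 1 workload on every sample path, which is why the bound is tight in that case.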

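Observe that the upper bound for the expected waiting time also yields an upper bound for the expected latency \(T\), i.e., the time until the first replica of a job completes service. Each replica starts service once the work ahead of it, which is at most the current maximum workload, has been processed, so the job completes within the maximum workload plus \(\min \{ B_{1},\dots ,B_{d}\}\). Hence

\[ {\mathbb {E}}[T] \le {\mathbb {E}}[V_{M/G/1}] + {\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] \le {\mathbb {E}}[V_{M/G/1}] + 1, \]

where \({\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] \le 1\) since, by assumption, \({\mathbb {E}}[B_{i}] = 1\), for \(i=1,\dots ,d\).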

Note that the upper bound \({\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] \le 1\) is not (asymptotically) tight in K, since \({\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] \downarrow 0\) as K grows large. Figure 6 depicts the difference between the expected latency and the expected waiting time as a function of K for various values of \(N\); indeed, this difference vanishes as K grows large.
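
To see how quickly \({\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}]\) vanishes, take \(X \equiv 1\) and \(1-p = 1/K\) (an illustrative assumption consistent with \({\mathbb {E}}[B_i]=1\)). Then \(\min \{ B_{1},\dots ,B_{d}\}\) is nonzero only if all d replicas equal K, which happens with probability \(K^{-d}\), so \({\mathbb {E}}[\min \{ B_{1},\dots ,B_{d}\}] = K^{1-d}\). The sketch below, with hypothetical helper names, compares this closed form with a Monte Carlo estimate.

```python
import random


def mean_min_B_exact(K: float, d: int) -> float:
    """E[min{B_1,...,B_d}] for X == 1: K * P(all d replicas nonzero) = K^(1-d)."""
    return K * (1.0 / K) ** d


def mean_min_B_mc(K: float, d: int, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate with B_i = K w.p. 1/K and 0 otherwise."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        total += min(float(K) if rng.random() < 1.0 / K else 0.0 for _ in range(d))
    return total / n


if __name__ == "__main__":
    for K in (2, 4, 8, 16):
        print(K, mean_min_B_exact(K, 2), mean_min_B_mc(K, 2))
```

For \(d \ge 2\) the expectation decays like \(K^{1-d}\), which is why the additive term separating the latency bound from the waiting-time bound becomes negligible for large K.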

5 Conclusion

In this paper, we have proven that the maximum workload in a parallel-server system with c.o.c. redundancy is upper bounded by the workload in a related M / G / 1 queue. This directly yields a sufficient stability condition. Moreover, we proved that, in the case of independent scaled Bernoulli service requirements, the system asymptotically spends a large fraction of time in so-called synchronicity in the long run. In synchronicity, the upper bound of the related M / G / 1 queue is in fact tight, and this resulted in an asymptotically nearly necessary stability condition. Interestingly, both the sufficient and the asymptotically nearly necessary conditions are independent of the number of servers, but do depend on the number of replicas \(d\). In contrast, in the case of exponentially distributed service requirements, the stability condition depends linearly on the number of servers and not on the number of replicas. This indicates that the stability condition in a c.o.c. redundancy system with i.i.d. service requirements is highly sensitive to the distribution of these service requirements.

The bound on the maximum workload also resulted in an upper bound for the expected waiting time, which is again asymptotically tight as the scale of the service requirements grows large. This bound in turn directly yields an upper bound for the expected latency.

We assumed that jobs arrive according to a Poisson process, but it may be possible to relax this assumption. In particular, the proof of the sufficient stability condition does not rely on Poisson arrivals and could be extended to a general arrival process, whereas extending the proof of the asymptotically necessary condition is more involved. Another interesting topic for further research is to extend the developed framework to obtain the stability condition for more general service requirements. Finally, it might be possible to prove the stability condition in a c.o.c. redundancy system with i.i.d. service requirements and the additional feature of fork-join service. In such a system, replicas are created on \(d\) servers and k out of the \(d\) replicas must be served completely. We expect that the notion of synchronicity continues to hold. More specifically, we expect that Proposition 1 (the sufficient stability condition) still holds when \({\mathbb {E}}[\min \{B_{1},\dots ,B_{d}\}]\) is replaced by the expectation of the kth order statistic, i.e., the expectation of the kth smallest value. However, the proof of the necessary stability condition will require significantly more involved arguments.

References

[1] Aktas, M.F., Peng, P., Soljanin, E.: Effective straggler mitigation: Which clones should attack and when? ACM SIGMETRICS Perf. Eval. Rev. 45(2), 12–14 (2017)

[2] Aktas, M.F., Peng, P., Soljanin, E.: Straggler mitigation by delayed relaunch of tasks. ACM SIGMETRICS Perf. Eval. Rev. 45(3), 324–331 (2017)

[3] Anton, E., Ayesta, U., Jonckheere, M., Verloop, I.M.: On the stability of redundancy models. arXiv:1903.04414 (2019)

[4] Asmussen, S.: Applied Probability and Queues, 2nd edn. Springer, New York (2003)

[5] Ayesta, U., Bodas, T., Dorsman, J.L., Verloop, I.M.: A token-based central queue with order-independent service rates. arXiv:1902.02137 (2019)

[6] Ayesta, U., Bodas, T., Verloop, I.M.: On a unifying product form framework for redundancy models. Perf. Eval. 127–128, 93–119 (2018)

[7] Gardner, K., Harchol-Balter, M., Scheller-Wolf, A., Van Houdt, B.: A better model for job redundancy: decoupling server slowdown and job size. IEEE/ACM Trans. Netw. 25(6), 3353–3367 (2017)

[8] Gardner, K., Harchol-Balter, M., Scheller-Wolf, A., Velednitsky, M., Zbarsky, S.: Redundancy-\(d\): the power of \(d\) choices for redundancy. Oper. Res. 65(4), 1078–1094 (2017)

[9] Gardner, K.S., Zbarsky, S., Doroudi, S., Harchol-Balter, M., Hyytiä, E., Scheller-Wolf, A.: Reducing latency via redundant requests: exact analysis. ACM SIGMETRICS Perf. Eval. Rev. 43(1), 347–360 (2015)

[10] Gardner, K.S., Zbarsky, S., Doroudi, S., Harchol-Balter, M., Hyytiä, E., Scheller-Wolf, A.: Queueing with redundant requests: exact analysis. Queueing Syst. 83(3–4), 227–259 (2016)

[11] Grimmett, G., Stirzaker, D.: Probability and Random Processes, 3rd edn. Oxford University Press, Oxford (2001)

[12] Hellemans, T., Van Houdt, B.: Analysis of redundancy(\(d\)) with identical replicas. ACM SIGMETRICS Perf. Eval. Rev. 46(3), 74–79 (2018)

[13] Joshi, G.: Synergy via redundancy: boosting service capacity with adaptive replication. ACM SIGMETRICS Perf. Eval. Rev. 45(3), 21–28 (2017)

[14] Joshi, G., Soljanin, E., Wornell, G.: Efficient replication of queued tasks for latency reduction in cloud systems. In: Proc. of the Allerton Conf. (2015)

[15] Poloczek, F., Ciucu, F.: Contrasting effects of replication in parallel systems: from overload to underload and back. ACM SIGMETRICS Perf. Eval. Rev. 44(1), 375–376 (2016)

[16] Shah, N.B., Lee, K., Ramchandran, K.: When do redundant requests reduce latency? IEEE Trans. Commun. 64(2), 715–722 (2016)

[17] Squillante, M.S., Xia, C.H., Yao, D.D., Zhang, L.: Threshold-based priority policies for parallel-server systems with affinity scheduling. Proc. Am. Control Conf. 4, 2992–2999 (2001)

[18] Vulimiri, A., Godfrey, P.B., Mittal, R., Sherry, J., Ratnasamy, S., Shenker, S.: Low latency via redundancy. Proc. ACM CoNEXT 2013, 283–294 (2013)

Additional information

This research was partly funded by the NWO Gravitation Programme NETWORKS, Grant Number 024.002.003.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Raaijmakers, Y., Borst, S. & Boxma, O. Redundancy scheduling with scaled Bernoulli service requirements. Queueing Syst 93, 67–82 (2019). https://doi.org/10.1007/s11134-019-09621-2

DOI: https://doi.org/10.1007/s11134-019-09621-2

Keywords

- Queueing

- Redundancy

- Parallel-server systems

- Dispatching

- Scaled Bernoulli service requirements

- Stability condition