Abstract

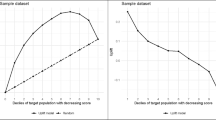

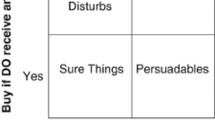

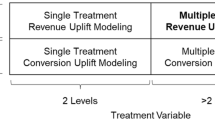

We present a general framework to target customers using optimal targeting policies, and we document the profit differences from alternative estimates of the optimal targeting policies. Two foundations of the framework are conditional average treatment effects (CATEs) and off-policy evaluation using data with randomized targeting. This policy evaluation approach allows us to evaluate an arbitrary number of different targeting policies using only one randomized data set and thus provides large cost advantages over conducting a corresponding number of field experiments. We use different CATE estimation methods to construct and compare alternative targeting policies. Our particular focus is on the distinction between indirect and direct methods. The indirect methods predict the CATEs using a conditional expectation function estimated on outcome levels, whereas the direct methods specifically predict the treatment effects of targeting. We introduce a new direct estimation method called treatment effect projection (TEP). The TEP is a non-parametric CATE estimator that we regularize using a transformed outcome loss which, in expectation, is identical to a loss that we could construct if the individual treatment effects were observed. The empirical application is to a catalog mailing with a high-dimensional set of customer features. We document the profits of the estimated policies using data from two campaigns conducted one year apart, which allows us to assess the transportability of the predictions to a campaign implemented one year after collecting the training data. All estimates of the optimal targeting policies yield larger profits than uniform policies that target none or all customers. Further, there are significant profit differences across the methods, with the direct estimation methods yielding substantially larger economic value than the indirect methods.

Similar content being viewed by others

Notes

Chapter 10, “The Predictive Modeling Process”

The uplift random forest is a precursor of the causal forest of Wager and Athey (2018).

See Imbens and Rubin (2015) for a comprehensive introduction.

It is straightforward to allow for heterogeneous profit margins and targeting costs.

The causal forest extends previous work on causal trees by Athey and Imbens (2016).

Unlike when using OLS, estimating Eq. 7 using the Lasso is not generally equivalent to estimating two separate regressions for the treated and untreated units.

We could include interactions of the features or other basis function expansions in the model to allow for non-linearities. In our application, this would increase the number of covariates from 128 to 4160. Hence, for computational ease and because we also use several non-parametric methods that allow for complicated interactions of covariates, we only employed the basic, linear specification.

Assuming normally distributed error terms, we can use the formula for the mean of a log-normally distributed random variable to predict expected spending based on the estimated expectation of the log of spending.

We implement the first architecture in the empirical application of Farrell et al. (2021) with one hidden layer, including 60 ReLU nodes and a 0.5 dropout rate. We train the network using the Adam optimizer with a 0.003 learning rate using mini-batches of the data and utilizing early stopping.

We provide an R package, causalKNN, for both the causal KNN and TEP methods: https://github.com/walterwzhang/causalKNN.

We standardize the features and measure the distance between two feature vectors using the Euclidean metric. For discrete variables, the Euclidean metric is a special case of the more general Mahalanobis distance metric. Our companion R package allows users to select either of these distance metrics.

In some regions, the company also sells through its retail stores.

The usual caveats apply: Page views and clicks are not recorded if they cannot be linked to a customer ID.

The company we collaborated with had a long history of evaluating its targeting tactics using A/B tests, and the data science team assured us that the treatment assignment in 2015 was completely randomized.

Four pairs of the selected variables have a correlation coefficient larger than 0.975. In each pair, we keep the variable that is more highly correlated with the outcome.

For instance, the universal approximation property of neural networks is guaranteed without screening or scaling the data. However, in practice, scaling the data and tweaking the architecture typically improves the estimator’s performance.

Because the words “training” and “test” have the same initial letter, we use the letter \(\mathcal {E}\), as in “estimation,” to refer to the training set.

Alternatively, we could split the sample into a training, validation, and test set. Such a splitting procedure would allow us to construct valid confidence intervals for the target parameters of interest (Chernozhukov et al., 2018; Athey and Imbens, 2019; Farrell et al., 2021). We, however, quantify the uncertainty around the expected profits for the different targeting methods using the described bootstrap algorithm. Further, using a validation set to tune hyperparameters and reduce overfitting would only apply to the Causal KNN and TEP methods. Because we pre-select the K value using the approach described in Section 7.4, overfitting is of little concern. If there were a substantial degree of overfitting, it would handicap the Causal KNN and TEP methods compared to the other targeting approaches.

We predict \(\hat{\tau }_{K}(X_{i})\) using the K nearest treated and untreated neighbors of observation i, not including i itself.

We randomly split the data into ten folds, \(k=1,\dots ,10\), and predict \(\hat{\tau }(X_{i})\) for the customers in fold k using all other folds as a training set. Because the sample size in the training sets is larger than in the bootstrap algorithm described in Section 7.3, the MSE minimizing K is also larger, \(K=2225\).

The direct estimation methods predict the CATEs but not the outcome levels. Hence, we cannot show standard fit statistics such as the MSE for outcome levels. However, Smith et al. (2023) can compare out-of-sample profit predictions and the corresponding fit statistics (hit rates and log-likelihood values) based on outcome levels. They find virtually no correlation between profits and the fit measures.

Our non-disclosure agreement with the company does not permit us to reveal the values of m and c.

The difference in the profits between the random forest and the two-forest methods suggests that forcing each tree to split on the treatment indicator at the root node, which is what the two-forest method implicitly does, affects the ability to predict CATEs accurately.

We show the expected profit estimates’ mean and 95% range over all 1000 bootstrap test sets.

The difference in profits compared to the treatment effect projections indicates that the causal forest is better at pinpointing the top customers with the largest CATEs, which accounts for the initial spikes in the profit differences.

The random forest, which generally performs poorly, is an outlier with a 15.8 targeting percentage.

The company decomposes \(\mathbb {E}[Y_{i}|X_{i}=x,W_{i}=1]\) into the purchase incidence, estimated using logistic regression, and the conditional expectation of (log) spending conditional on purchase, estimated using linear regression. The company uses only a small subset of all features chosen based on experience and experimentation with different model variants.

The predictions were obtained using ten-fold cross-validation in the 2015 data. The 95% confidence interval for the correlation with the causal forest predictions is (0.245, 0.293), and the confidence interval for the correlation with the TEP-Lasso predictions is (0.684, 0.711).

The two exceptions are the logit/OLS and two-forest models.

Quoting from Simon’s Nobel Memorial Lecture (Simon, 1979): “... by the middle 1950’s, a theory of bounded rationality had been proposed as an alternative to classical omniscient rationality, a significant number of empirical studies had been carried out that showed actual business decision making to conform reasonably well with the assumptions of bounded rationality but not with the assumptions of perfect rationality....”

The optimal K in the causal KNN regression is now larger, \(K=2475\), because we use training sets equal in size to the entire 2015 data.

Significant differences in the CATE distributions could occur if the distributions of the customer features changed substantially between 2015 and 2016.

One exception is the random forest, which has the second smallest MSE in 2016.

The transformed outcome regressions perform worse than many indirect methods. As discussed in Section 5.3.3, this is expected ex ante because the variance of the error term is dominated by the large scale of the outcome level relative to the treatment effect.

Let us state the obvious: Generalizability relies on replications and, hence, the willingness of editors and reviewers to accept replications instead of dismissing them as “already known” results.

References

Ascarza, E. (2018). Retention Futility: Targeting High Risk Customers Might be Ineffective. Journal of Marketing Research, 55, 80–98.

Ascarza, E., Ebbes, P., Netzer, O., & Danielson, M. (2017). Beyond the Target Customer: Social Effects of Customer Relationship Management Campaigns. Journal of Marketing Research, 54

Athey, S., & Imbens, G. (2016). Recursive partitioning for heterogeneous causal effects. Proceedings of the National Academy of Sciences, 113, 7353–7360.

Athey, S., & Imbens, G. W. (2019). Machine Learning Methods That Economists Should Know About. Annual Review of Economics, 11, 685–725.

Blattberg, R. C., Kim, B.-D., & Neslin, S. A. (2008). Database Marketing. Springer.

Breiman, L. (2001). Random Forests. Machine Learning, 45, 5–32.

Chernozhukov, V., Chetverikov, D., Demirer, M., Duflo, E., Hansen, C., Newey, W., & Robins, J. (2018). Double/debiased machine learning for treatment and structural parameters. Econometrics Journal, 21, C1–C68.

Dubé, J.-P., & Misra, S. (2023). Personalized Pricing and Consumer Welfare. Journal of Political Economy, 131, 131–189.

Ellickson, P. B., Kar, W., Reeder, J. C., & III. (2023). Estimating Marketing Component Effects: Double Machine Learning from Targeted Digital Promotions. Marketing Science, 42, 704–728.

Fan, J., & Lv, J. (2008). Sure Independence Screening for Ultrahigh Dimensional Feature Space. Journal of the Royal Statistical Society, Series B, 70, 849–911.

Farrell, M. H., Liang, T., & Misra, S. (2021). Deep Neural Networks for Estimation and Inference. Econometrica, 89, 181–213.

Feit, E. M., & Berman, R. (2019). Test & Roll: Profit-Maximizing A/B Tests. Marketing Science, 38, 1038–1058.

Goli, A., Reiley, D.H., & Zhang, H. (2022). Personalized Versioning: Product Strategies Constructed from Experiments on Pandora. manuscript.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. Cambridge, MA, USA: MIT Press.

Guelman, L., Guillén, M., & Pérez-Marín, A. M. (2015). Uplift Random Forests, Cybernetics and Systems: An. International Journal, 46, 230–248.

Hastie, T., Tibshirani, R., & Mainwright, M. (2015). Statistical Learning with Sparsity: The Lasso and Generalizations. CRC Press.

Hitsch, G. J., Hortaçsu, A., & Lin, X. (2021). Prices and Promotions in U.S. Retail Markets, Quantitative Marketing and Economics, 19, 289–368.

Hortaçsu, A., Natan, O. R., Parsley, H., Schwieg, T., & Williams, K. R. (2023). Organizational Structure and Pricing: Evidence From a Large U.S. Airline. Quarterly Journal of Economics (forthcoming)

Horvitz, D. G., & Thompson, D. J. (1952). A Generalization of Sampling Without Replacement from a Finite Universe. Journal of the American Statistical Association, 47, 663–685.

Imbens, G. W., & Rubin, D. B. (2015). Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction. Cambridge University Press.

Künzel, S. R., Sekhon, J. S., Bickel, P. J., & Yua, B. (2019). Meta-learners for Estimating Heterogeneous Treatment Effects using. Machine Learning

Lemmens, A., & Gupta, S. (2020). Managing Churn to Maximize Profits. Marketing Science, 39, 956–973.

Lewis, R. A., & Rao, J. M. (2015). The Unfavorable Economics of Measuring the Returns to Advertising. Quarterly Journal of Economics, 130, 1941–1973.

Misra, S., & Nair, H. S. (2011). A Structural Model of Sales-Force Compensation Dynamics: Estimation and Field Implementation. Quantitative Marketing and Economics, 9, 211–257.

Murphy, K. P. (2012). Machine Learning: A Probabilistic Perspective. MIT Press.

Nair, H. S., Misra, S., Hornbuckle, W. J., & IV., Mishra, R., & Acharya, A. (2017). Big Data and Marketing Analytics in Gaming: Combining Empirical Models and Field Experimentation. Marketing Science, 36, 699–725.

Rafieian, O., & Yoganarasimhan, H. (2022). AI and Personalization. manuscript.

Rzepakowski, P., & Jaroszewicz, S. (2012). Decision trees for uplift modeling with single and multiple treatments. Knowledge and Information Systems, 32, 303–327.

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding Machine Learning. Cambridge University Press.

Shapiro, B. T., Hitsch, G. J., & Tuchman, A. E. (2021). TV Advertising Effectiveness and Profitability: Generalizable Results from 288 Brands. Econometrica, 89, 1855–1879.

Simester, D., Timoshenko, A., & Zoumpoulis, S. I. (2020). Efficiently Evaluating Targeting Policies: Improving on Champion vs. Challenger Experiments, Management Science, 66, 3412–3424.

Simester, D., Timoshenko, A., & Zoumpoulis, S. I. (2020). Targeting Prospective Customers: Robustness of Machine-Learning Methods to Typical Data Challenges. Management Science, 66, 2495–2522.

Simon, H. A. (1979). Rational Decision Making in Business Organizations. American Economic Review, 69, 493–513.

Smith, A. N., Seiler, S., & Aggarwal, I. (2023). Optimal Price Targeting. Marketing Science, 42, 476–499.

Tibshirani, R. (1996). Regression Shrinkage and Selection via the Lasso, Journal of the Royal Statistical Society. Series B (Methodological), 58, 267–288.

Wager, S., & Athey, S. (2018). Estimation and Inference of Heterogeneous Treatment Effects using Random Forests. Journal of the American Statistical Association, 113, 1228–1242.

Yang, J., Eckles, D., Dhillon, P., & Aral, S. (2023). Targeting for Long-Term Outcomes. Management Science (forthcoming).

Yoganarasimhan, H., Barzegary, E., & Panib, A. (2023). Design and Evaluation of Optimal Free Trials. Management Science, 69, 3220–3240.

Zantedeschi, D., McDonnell Feit, E., & Bradlow, E. T. (2017). Measuring Multichannel Advertising Response. Management Science, 63, 2706–2728.

Acknowledgements

We thank Susan Athey, Sridhar Narayanan, Thomas Otter, and Bernd Skiera for their helpful comments and suggestions. We also benefited from the comments of seminar participants at the Berlin Applied Micro Seminar, Goethe University Frankfurt, the 2018 SICS Conference at Berkeley, the 2017 Marketing Science Conference, and Vienna University of Economics and Business (WU). We are particularly grateful to the data science team at the company that is the source of our data for their help and numerous insights.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

A Proof that \(\hat{\Pi }(d)\) is an unbiased profit estimator

In Section 3.3 we defined the inverse probability-weighted profit estimator,

\(\hat{\Pi }(d)\) is an unbiased estimator for the expected profit \(\mathbb {E}[\Pi (d)|X_{1},\dots ,X_{N}]\):

The second line follows because \(\pi _{i}(0)\) and \(\pi _{i}(1)\) are independent of \(W_{i}\) conditional on \(X_{i}\), which holds because each \(W_{i}\) is a Bernoulli draw with success probability e(x), \(x=X_{i}\). Further, because the N customers in the calculation of Eq. 3 are randomly drawn from the customer population, \(\hat{\Pi }(d)\) is also an unbiased estimator of the expected profit of d in the whole customer population,

B Approach to grow a causal tree

To understand how causal trees are grown using the approach proposed by Athey and Imbens (2016), we first review the approach to grow standard trees. Standard trees are trained to predict outcome levels. The algorithm to grow a tree employs recursive binary splits to obtain a partition of the feature space into regions (leaves) \(R_{1},R_{2},\dots \) Let \(\mathcal {R}_{k}=\{i:X_{i}\in R_{k}\}\) be the set of observations in leaf \(R_{k}\), and let \(N_{k}\) be the corresponding number of observations. For any feature \(x\in R_{k}\), the predicted outcome is the average over all observations in \(R_{k}\):

The algorithm adds a split to a current terminal node, \(R_{k}\), based on a feature, \(X_{l}\), and a cutoff, \(\kappa \), such that \(R_{k}\) is divided into the regions \(R_{k1}=\{x\in R_{k}:x_{l}<\kappa \}\) and \(R_{k2}=\{x\in R_{k}:x_{l}\ge \kappa \}\). The residual sum of squares that results from such a split is given by

The splitting rule chooses a feature and a cutoff such that the resulting split yields the smallest RSS among all possible binary splits. The residual sum of squares Eq. 16 can be written as

Here, \(\hat{\mu }(X_{i})\) is the outcome prediction after splitting \(R_{k}\) into \(R_{k1}\) and \(R_{k2}\). Hence, finding a split that minimizes the residual sum of squares Eq. 16 is equivalent to finding a split that maximizes the sum of the squared predictions, \(\sum _{i\in \mathcal {R}_{k}}\hat{\mu }(X_{i})^{2}\). Furthermore, maximizing the sum of the squared predictions is equivalent to finding a split that maximizes the variance of the predictions \(\hat{\mu }(X_{i})\) across the observations in the two new leaves, \(i\in \mathcal {R}_{k1}\cup \mathcal {R}_{k2}\).

Like standard trees, causal trees are also grown using binary splitting rules. For a feature vector \(x\in R_{k}\) we predict the CATE based on the mean difference between the outcome levels of the treated and untreated units,

Here, \(\mathcal {R}_{k}(w)\) is the set of observations i such that \(X_{i}\in R_{k}\) and \(W_{i}=w\), and \(N_{k}(w)\) is the corresponding number of observations.

Adding a split to a causal tree based on an analog of the residual sum of squares Eq. 16 appears infeasible, because the treatment effects are unobserved. However, for standard trees we can see from the equivalence between Eq. 16 and Eq. 17 that any split affects the RSS only through its impact on the sum of squared predictions, \(\sum _{i\in \mathcal {R}_{k}}\hat{\mu }(X_{i})^{2}\). Hence, analogous to the approach to grow a standard tree, Athey and Imbens (2016) propose a splitting rule that maximizes \(\sum _{i\in \mathcal {R}_{k}}\hat{\tau }(X_{i})^{2}\), which maximizes the variance of the predicted treatment effects \(\hat{\tau }(X_{i})\) across the observations in the two new leaves. This splitting rule is feasible given the data and mimics the approach that would be used if the treatment effect was observed.

Causal trees differ from regression trees that add splits using the transformed outcome loss (Equation 10). For the latter, the treatment indicator only enters through the loss function. In contrast, causal trees use information about the treatment indicator at each split and will be more efficient. See Athey and Imbens (2016) for a discussion contrasting the two alternative tree methods.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hitsch, G.J., Misra, S. & Zhang, W.W. Heterogeneous treatment effects and optimal targeting policy evaluation. Quant Mark Econ 22, 115–168 (2024). https://doi.org/10.1007/s11129-023-09278-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11129-023-09278-5

Keywords

- Targeting

- Customer relationship management (CRM)

- Causal inference

- Heterogeneous treatment effects

- Machine learning

- Field experiments