Abstract

One of the main important features of the noisy intermediate-scale quantum (NISQ) era is the correct evaluation and consideration of errors. In this paper, we analyse the main sources of errors in current (IBM) quantum computers and we present a useful tool (TED-qc) designed to facilitate the total error probability expected for any quantum circuit. We propose this total error probability as the best way to estimate a lower bound for the fidelity in the NISQ era, avoiding the necessity of comparing the quantum calculations with any classical one. In order to contrast the robustness of our tool we compute the total error probability that may occur in three different quantum models: 1) the Ising model, 2) the Quantum-Phase Estimation (QPE), and 3) the Grover’s algorithm. For each model, the main quantities of interest are computed and benchmarked against the reference simulator’s results as a function of the error probability for a representative and statistically significant sample size. The analysis is satisfactory in more than the \(99\%\) of the cases. In addition, we study how error mitigation techniques are able to eliminate the noise induced during the measurement. These results have been calculated for the IBM quantum computers, but both the tool and the analysis can be easily extended to any other quantum computer.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum computing, i.e. the possibility to access real quantum states to realize complex calculations, has passed from a possibility to a reality [1]. Feynman’s idea [2] of using real quantum systems to simulate quantum mechanics is nowadays not a dream or an idea anymore. In the last 20 years, the capabilities of state-of-the-art quantum computers have improved a lot. As an example, this year IBM was able to implement the first 433 qubit computerFootnote 1 and it has foreseen to present a computer with more than 1000 qubits in the near futureFootnote 2 Ideal quantum computers are supposed to be able to realize calculations not possible for classical computers with great accuracy. To do so, these computers require at least thousands of qubits in order to use many of them for quantum error corrections [3,4,5]. Unfortunately, we are still far from this situation, and in the noisy intermediate-scale quantum (NISQ) era, the scientific and technological efforts focus on evaluation, control, and reduction of the physical errors [6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22].

The NISQ devices are composed of a couple of tens of qubits and presumably a couple of hundreds in the near future. One of their most important characteristics is their imperfect nature, as their name indicates they are noisy. Nowadays, the best-performing quantum computers are based on transmon qubits [23]; however, they suffer from four main limitations. First of all, in these devices, the qubit’s state stability is known to be of the order of hundreds of microseconds, so we cannot perform very long calculations. In the second order, the gates acting on the qubits are noisy, so every time we perform any calculation we are losing accuracy. In the third place, the measurement process of the qubit state is subject to errors. The last thing to take into account is the limited number of available physical qubits.

Normally, the errors induced by these limitations are analysed by comparing the noisy quantum calculations against the classical noiseless results. This method is really precise, but it cannot be used for calculations that exceed the capacity of classical computers. As quantum supremacy is expected in the NISQ era, we propose to use the total error probability as the best way to measure the role of errors in this period. Our main purpose in this paper is to analyse these limitations by considering representative statistical samples in different quantum algorithms as a function of the total error probability. By doing it, we will be able to ensure that this total error probability obtained before any quantum calculation correctly represents an upper bound for the error induced in the actual calculation. Several approaches are described in the literature to estimate the circuit error of a quantum program, which either need the use of a quantum devices [24, 25] or just take into account the gate operation errors [26,27,28]. Our method not only introduces the errors induced by the instability of the qubits, which as we will see is one of the main sources of the total error, but it can be used to set properly the qubit map before executing in a real quantum device.

To do so, we first produce a tool that connects with IBM’s application programming interface (API) to calculate the total error probability expected during the run of a given quantum circuit. Then, we estimate the effect of the total error probability by studying three different and representative quantum algorithms: the Ising model, the quantum phase estimation (QPE), and the Grover’s algorithm. In addition, the fidelity provides a good measure of similarity between the ideal and real quantum states/calculations. In order to contrast the difference between the ideal result (simulator) and the noisy one (physical quantum computer) we calculate the magnetization for the Ising model and phase for the QPE, which are the most important outputs of these models.

The effect of the error mitigation technique developed in Qiskit [29] is also evaluated for all these cases. The error mitigation techniques are post-processing routines that try to minimize the errors occurring in quantum computers [30,31,32,33,34,35,36,37]. The one developed in Qiskit consists in the elimination of the error induced during the measurement processes of the physical qubits. Although this technique may succeed in the elimination of measurement errors, it is important to notice that it scales exponentially with the number of qubits, so there might be situations in which its use is computationally really demanding.

The paper is structured as follows: in Sect. 2 we describe the proposed tool to calculate the total error probability. In Sect. 3 and 4 we analyse the main quantities of interest and the fidelity (respectively) of the three quantum circuits for six different IBM computers and for different qubit chains. In Sect. 5 we comment on the error mitigation routine of Qiskit. Finally, the conclusions are exposed in Sect. 6.

2 Calculating the total error probability

As we pointed out in the previous section, the estimation of errors in the NISQ era is essential in the field of quantum computing. These errors come basically from three different sources [7]:

-

The error induced by the instability of the qubits. In order to have non-trivial states we have to excite our physical qubits. The probability of finding the qubit in the excited state decays exponentially with time [29, 38], so the larger the quantum circuit the less likely is to find the qubit in the expected state. There are two possible decaying mechanisms, the decay of an excited state to the ground state, i.e. the probability of a state \(|1\rangle \) to decay to a \(|0\rangle \), and the change of the phase of an excited state, for example passing from the state \(1/\sqrt{2}(|0\rangle +|1\rangle )\) to the state \(1/\sqrt{2}(|0\rangle -|1\rangle )\).

-

Through the gates applied in the quantum circuit. The evaluation of any quantum gate carries an error with it. Single-qubit gates usually carry an error probability of the order of \(10^{-4}\) to \(10^{-3}\), while two-qubit gates usually carry an error of the order of \(10^{-3}\) to \(10^{-2}\).

-

Each qubit measurement induces an error due to the lack of precision in the physical act of measure which carries an error probability of the order of \(10^{-2}\).

All the errors depend on the physical hardware where the circuit is run. They depend not only on the specific machine, but also on the specific qubits and connections that are used. For this reason, we have developed a pre-processing computational tool that facilitates the total error probability expected for a given quantum circuit on an IBM quantum machine. We named the project “Tool for Error Description in quantum circuits” (TED-qc). The code is open source and available at the GitLab repository (https://gitlab.com/qjornet/ted-qc.git).

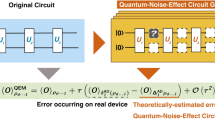

We have written our quantum circuits in Qiskit making use of more than one set of universal quantum gates. Once the quantum circuit is written on the chosen basis, it has to be sent to a particular quantum computer. This quantum computer transforms the provided gates to the universal set of quantum gates it operates. In Fig. 1 we can see how the ibmq\(\_\)belem transforms the QPE operator \(\sigma _x\) for the \(|+\rangle \) state circuit described in the Qiskit’s basis into its own gates.

All the information needed to calculate the total error probability can be extracted through IBM’s API. First of all, it gives us each gate error probability \(P^{\text {gate}}\), which takes into account which qubits have been used. The same quantum gate has different error probability depending on the qubit that is acting on. It also gives us the error probability committed during the measurement \(P^{\text {meas}}\). We will see that this error can be treated and completely reduced using the error mitigation technique. Finally, IBM’s API also provides the relaxation (\(\tau _1\)) and dephasing (\(\tau _2\)) times of the working qubits.

In order to calculate the total error probability we define the success probability, i.e. the possibility of non-committing any error, \(S_{T}\), as

where \(P_{i}\) represents the error probability from any source: single- and double-qubit gates, measurement, and qubit instabilities, and m is the total number of error sources. The relation between the error probability and success probability is defined as \(P+S=1\). The total error probability is therefore computed as

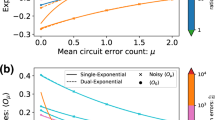

The first product in Eq. (2) accounts for all gate-related errors \(P_i^{\text {gate}}\) (both single and double), whereas the second product encompasses all explicit qubit-dependent sources of error. These include the measurement error, denoted as \(P_{\alpha }^{\text {meas}}\), and the errors arising from qubit instability, represented by \(P_{\alpha }^{\tau _1}\) and \(P_{\alpha }^{\tau _2}\). While the gate and measurement errors are directly obtained through the IBM’S API, to account for errors caused by instability qubits \(P_{\alpha }^{\tau _i}\), we consider an exponential decay of the form [29]

where \(i=1,2\) are the two instability mechanism explained before, \(t_{\alpha }\) is the total circuit time of qubit \(\alpha \) and \(\tau _{i,\alpha }\) are the relaxation and dephasing times of qubit \(\alpha \). The evaluation of Eq. (3) requires the knowledge of the time it takes for any qubit between the initialization of the circuit and the measurement \(t_{\alpha }\). This is done by adding up how long it takes for qubit \(\alpha \) to perform any single gate. The situation gets more complex when dealing with two-qubit gates. The process of executing a two-qubit gate necessitates the completion of all preceding gate operations on both qubits before the joint operation can be executed. Consequently, after incorporating the time needed for the two-qubit gate into the total time calculation, we are faced with the task of comparing the cumulative times of the two qubits involved. The crucial point here is that we do not simply add these times together. Instead, we analyse the total times for each qubit and then adopt the longer of the two as the updated qubit time. This approach ensures that we are accounting for the maximum time it could take, thus capturing the full potential for instability-induced error.

3 The error probability in three representative quantum circuits

The tool we have developed computes the total error probability of any quantum circuit for any physical qubit chain. In order to see if the computed total error probability corresponds to the real error induced by the physical qubits, we will perform calculations in three representative quantum circuits: the one-dimensional Ising model, the QPE for the \(\sigma _x\) operator, and the Grover’s algorithm, in many different qubit chains and several IBM quantum computers.

Before evaluating the effect of the total error probability in our results it is important to remind how a quantum computer works. Any time we send a job to a quantum computer it makes an important number of repetitions of the same quantum circuit, given by the number of shots, and it extracts the average between all these repetitions. So, if we say that the total error probability is, for example, of the 20\(\%\), we are saying that 80\(\%\) of the repetitions will give the correct result, but 20\(\%\) may be wrong, so the final result will be the linear combination of the 80\(\%\) correct wave functions and of the 20\(\%\) possibly wrong ones.

In the three mentioned circuits we will compare the “noisy” results, i.e. the ones obtained in a real quantum computer, with the ones obtained in the simulator. We will compare the magnetization for the Ising model, the phase for the QPE, and the probability of finding the target number for the Grover algorithm.

We will now comment on the results concerning the three different quantum circuits.

3.1 The one-dimensional Ising model

The Ising model is one of the most studied models in Physics. It explains ferro and antiferromagnetism, but it is also used to describe strongly correlated systems. The Ising model is a great example of the many advantages of quantum computing, as any electron spin can be easily mapped with a qubit, reducing a \(2^n\) problem to a linear one.

Our aim is to diagonalize the one-dimensional Ising Hamiltonian for a \(n = 4\) antiferromagnetic interaction in the presence of an external magnetic field through the unitary transformation U [39, 40].

where \(H_d\) is the diagonalized Hamiltonian, and H the Ising Hamiltonian that reads

If we apply the unitary transformation U to the eigenstates of the diagonalized Hamiltonian, we will obtain the eigenstates in our original Hamiltonian basis

The details of the construction of U are supplied in [39, 40].

We will calculate the ground state in the Hamiltonian basis for the case of a large external magnetic field, 2.5 times larger than the antiferromagnetic exchange field (\(\lambda =2.5\)), and later on, we will calculate the magnetization for this ground state. The magnetization is just the difference between spin ups and downs, or in this case between zeros and ones.

We have chosen a big external magnetic field in order to have a ground state which induces a magnetization close to the maximum solution; therefore, our ground state will be similar to the state \(|1111\rangle \). Due to this reason, any possible type of error in the calculation of the circuit will pop up with a high probability in the calculation of the magnetization. If we would have chosen other values for the external magnetic field closer to the antiferromagnetic exchange field, or even smaller, these would induce magnetization values around 0 and some of the errors could compensate with the others (the \(|0\rangle \) states which become \(|1\rangle \) may be compensated by the \(|1\rangle \) states becoming \(|0\rangle \)).

Ratio between the magnetization obtained in the physical qubits in different IBM quantum computers and the exact magnetization for the n = 4 Ising model in the large external magnetic field (\(\lambda =2.5\)) case as a function of the total error probability (\(P_{T}\)). Each point corresponds with a different qubit chain and each colour with a different IBM quantum computer

The results of the ratio between the measured and the simulated magnetizations for different physical qubit chains are presented in Fig. 2. The purple line represents the minimum value of the magnetization if the error provokes the maximum magnetization change due to the total error probability. For this value of \(\lambda \), it represents the possibility to switch from a 1 (down) to a 0 (up) for each qubit so the total change can be up to modulus 2. Therefore, the purple line changes linearly from 1 to −1 as the error probability goes from 0 to 1. We can see that all points for all different configurations stand above this line, which indicates that the total error induced by the imperfection of the physical qubits is compatible with the one that is induced taking into account the total error probability we have calculated using our tool. These results can be compared with the ones calculated by Cervera in Ref. [39] which were calculated in 2018 in the IBM quantum computers. The smallest error that we obtain for the calculation of the magnetization is smaller than 7\(\%\), while the ones in Ref. [39] for large values of the external magnetic field are in the best scenarios of the 50\(\%\). This is an impressive improvement of the performance of the current quantum computers; their error has been reduced more than 7 times in just 4 years.

3.2 The quantum phase estimation

The QPE is an algorithm that permits the calculation of the eigenvalue of any unitary matrix [1, 41] given the eigenstate or eigenvector. The eigenvalues of any unitary matrix U have modulus 1; therefore, its eigenvalue equation can be written as

where \(\theta \in \mathbb {R}\hspace{0.1cm}:\hspace{0.1cm}\theta \in [0,1)\). The QPE algorithm is crucial in quantum computing because all quantum circuits are unitary matrices. Its role is very important in more complex algorithms like Shor’s algorithm [42].

We will calculate the QPE for the \(\sigma _x\) operator and for the \(|+\rangle =(1/\sqrt{2})(|0\rangle +|1\rangle )\) eigenstate, using a total of 4 qubits, one as a register for preparing the eigenstate and other 3 to calculate the phase. Details of the quantum circuit are shown in A.1.

Results of the phase of different qubit chains in different IBM quantum computers for QPE \(\sigma _x\) circuit for the \(|+\rangle \) state as a function of the total error probability (\(P_{T}\)). Each point corresponds with a different qubit chain and each colour with a different IBM quantum computer

The phase results for different qubit chains are shown in Fig. 3. In this case, the expected result for \(\theta \) is 0 (the eigenvalue of the \(|+\rangle \) state is 1) and the purple line represents the maximum error we can generate as a function of the error probability. As in the previous case the worst scenario is to obtain \(|111\rangle \) instead of \(|000\rangle \). For the QPE circuit with 4 qubits this implies that the eigenvalue scales linearly from 0 to 7/8 as a function of the error probability. In this case, all the points stay below this line which indicates that the calculation of the total error probability matches perfectly with the errors induced by the imperfections of the physical qubits.

3.3 The Grover’s algorithm

The Grover’s algorithm, developed by Lov Grover in 1996, is a quantum search algorithm that can greatly improve the efficiency of searching through a large dataset. In classical algorithms, searching for an element that satisfies a certain property typically requires O(N) searches, where N is the size of the dataset. The Grover’s algorithm, on the other hand, can perform this search in \(O(N^{1/2})\) iterations, making it exactly (and not only asymptotically) optimal [43].

The Grover’s algorithm can be used to find elements that satisfy a wide range of properties, not just simple ones. It can be applied to search for a specific number in a list of numbers, for example. The algorithm will output the target number with a high probability if it is present in the list, and a low probability if it is not. To determine the algorithm’s performance, the probability of finding the target element can be used as a measure.

It is also worth noting that the Grover’s algorithm can only be used on unstructured databases and it is more efficient than classical algorithms when the number of solutions is smaller than the size of the data set. The algorithm is also known as the quantum search algorithm with quadratic speedup. Details of the quantum circuit are provided in A.2.

Probability of finding the target state of the Grover’s algorithm as a function of the total error probability (\(P_{T}\)). The total sample size is \(2^n=4\) for the triangles and \(2^n=8\) for the circles. Each point corresponds with a different qubit chain and each colour with a different IBM quantum computer

We can see in Fig. 4 the results of the target probability for 2 different sizes of the search list. In the first one, we have \(2^n=4\) elements, and we can see that the error probability is, in general, very small, leading to high probabilities of finding the target element. However, if we increase the size of our search list up to \(2^n=8\) elements, the total error probability increases dramatically and the probability of finding the target element decreases accordingly. The dashed (\(n=2\)) and solid (n=3) lines represent the minimum target probability [44, 45].

After evaluating the three different quantum circuits, we are able to conclude that both the information given by the API of IBM and the total error probability estimation tool are fully reliable.

In order to conclude the section, in Fig. 5 we present an error bar plot of our studied circuits highlighting the three main error sources: time-related, measurement-related, and gate operation-related errors, the latter being further differentiated into single- and double-gate operations. The error bars represent the mean value of all independently derived qubit errors for different qubit chains and quantum computers, with the corresponding standard deviation also presented. In our assessment of the three representative algorithms, the most substantial error source stems from the time factor, with gate operation errors—particularly from double gate operations—being the secondary contributor. Naturally, single-gate operations contribute negligible errors. Lastly, the error magnitude derived from measurement is solely reliant on the considered number of qubits n, thereby being independent of the circuit length.

Error contributions in the three different studied quantum circuits. The error contributions are coming from three main sources: time, measurement, and gate operations (single and double). The error bars correspond to the mean value of all independently derived qubit errors, and the standard deviations are presented

4 Fidelity

The fidelity is defined as a measure of similarity between two quantum states. In particular

where \(\Psi _{\text {sim}}\) is the state obtained in the simulator and \(\Psi _{\text {phys}}\) is the state obtained in the physical qubits. The fidelity calculation is far from trivial. In quantum computing, we do not have access to the full quantum state, but to the probabilities, therefore, methods such as the quantum state tomography [46,47,48] must be used. Even though the fidelity estimation is an active research field, the methods to calculate it are inefficient and the computation becomes unpractical even for small systems of a few qubits. In this work we consider the exact expression to compute the fidelity [48]

where \(\rho \) is the simulated state and \(\sigma \) is the physical state, \(d=2^{n}\), being n the number of qubits of the quantum circuit and k has \(4^{n}\) values, one for each operator that can be created combining n Pauli matrices. The terms \(\langle W_{k}\rangle _{\rho }\) correspond to quantum averages of combinations of Pauli matrices (\(W_{k}\)) in the \(\rho \) state. Even if the formula is exact, we can see from Eq. (9) that the number of terms increases exponentially with the number of qubits. A 4-qubit system already contains 256 terms. As every term is computed in a real quantum machine, the computed fidelity will contain errors. In order to estimate these errors we may differentiate two things. On the one hand, any single \(\langle W_{k}\rangle _{\sigma }\) calculation will carry an error that can be related as follows

where the total error probability \(P_{T\sigma }\) will be considered constant for every k. On the other hand, Eq. (9) contains a sum of a high number of circuits (\(4^n\)). We may assume that all of them are independent, so in order to estimate the total error in the fidelity we can consider the average error and not the maximum error. By doing so the obtained error for the confidence bound (purple line) is exactly \(P_{T\sigma }\), and, therefore, by using Eq. (2) we can get a value for the success probability, or what is the same, a lower bound for the fidelity (purple line). In this case, the purple line does not represent a strict lower bound because we have considered the average error and not the maximum one.

The results for the confidence bound (purple line) for the fidelity and the computed values are shown in Fig. 6. As we can see our results stay above the confidence line up to the \(90\%\) of the cases.

5 Measurement error mitigation

In this section, we evaluate the effect of the error mitigation techniques in correcting the error induced by the measurement. One way of achieving this is by using the Qiskit measurement error mitigation function, which generates a matrix M consisting of measurements over all the basis states of our system.

It is worth noting that the size of this basis grows exponentially as \(2^n\), where n is the total number of qubits. This means that for small circuits, the number of jobs is also small, but as the size of the circuit increases, the number of jobs increases dramatically. For example, for \(n=10\), it has to perform 1024 jobs, but for bigger numbers like \(n=20\), the number jumps to a million jobs. Due to this, this technique is only suitable for small circuits and its cost can be quite high.

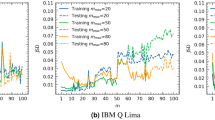

To test the effectiveness of this error mitigation routine, we repeated previous calculations using this function. The results are presented in Fig. 7, where we compare the raw and mitigated results for the Ising model, the QPE, and the Grover’s algorithm (Fig. 7a–c, respectively). The raw points have been calculated taking into account the error probability induced by the measurement in Eq. (1), while the mitigated ones have been calculated without taking it into account.

The mitigation error technique significantly improves the raw results, as all the points remain above the success probability line (purple line), Fig. 7. This means that the technique is able to eliminate all the errors induced by measurement. However, there are a few points that fall below the purple line, but since they represent less than \(1\%\), we can still assume that the mitigation error technique is successful in eliminating measurement errors.

Overall, measurement error mitigation is a crucial aspect of quantum computing and the results presented in this section demonstrate the effectiveness of using the Qiskit measurement error mitigation function.

6 Conclusions

In this work, we have developed a tool (TED-qc) that enables the calculation of the total error probability of any quantum circuit performed in an IBM quantum computer. This is a crucial result in the NISQ era because it permits us to advance the reliability of the result one may obtain in any real quantum computer. The algorithm can be run easily on any personal computer, which may help to reduce the unnecessary use of real quantum computers. It is important to remark that it permits us to estimate the error in any quantum calculation without comparing it with the classical one. Hence, the TED-qc provides a general and extensible framework designed to facilitate further progress in the field. In addition, it can be used as a pre-processing estimator for the lower bound of the fidelity.

In order to prove the robustness of this tool we have realized a big number of different calculations on three representative quantum circuits, the one-dimensional Ising model, the QPE, and the Grover’s algorithm. In these cases, we have compared the results of the physical qubits with the ones obtained in the simulator (which is noiseless) and we have printed them as a function of the total error probability. The results are very satisfactory because more than \(99\%\) of the errors were smaller than the maximum that could be predicted through the total error probability. Taking into account the statistical nature of the way this error probability is calculated we can assure that this concept as a measure of the error is both robust and reliable.

We have also studied the effect of the measurement error mitigation routine. This technique eliminates the noise produced during the measurement. In order to do so it has to perform \(2^n\) quantum jobs, being n the number of qubits we use in our quantum circuit. We have proven that this technique may be able to eliminate all the errors induced by the measurement. To do so we have studied the results obtained through the mitigation error as a function of the error probability which does not include the noise induced by the measurement. We have seen that the mitigated results are compatible with a total error probability which excludes the noise that occurs during the measurement. Nevertheless, this technique needs a very high number of evaluations and if the number of qubits we may use is high enough (more than 20), its cost may be too high to be used.

These results have been calculated for the IBM quantum computers, and in order to calculate the total error probability we have used the API of the company. Nevertheless, both the tool and the analysis can be easily extended to any other quantum computer following the same lines we have presented here.

Notes

IBM Unveils 400 Qubit-Plus Quantum Processor and Next-Generation IBM Quantum System Two, https://newsroom.ibm.com/2022-11-09-IBM-Unveils-400-Qubit-Plus-Quantum-Processor-and-Next-Generation-IBM-Quantum-System-Two.

IBM promises 1000-qubit quantum computer-a milestone-by 2023, https://www.science.org/content/article/ibm-promises-1000-qubit-quantum-computer-milestone-2023.

References

Nielsen, M.A., Chuang, I.L.: Quantum Computation and Quantum Information: 10th Anniversary Edition, 10th edn. Cambridge University Press, Cambridge (2011)

Feynman, R.P.: Simulating physics with computers. Int. J. Theoret. Phys. 21(6/7), 467–488 (1982)

Lidar, D.A., Brun, T.A.: Quantum Error Correction. Cambridge University Press, Cambridge (2013)

Terhal, B.M.: Quantum error correction for quantum memories. Rev. Mod. Phys. 87, 307–346 (2015). https://doi.org/10.1103/RevModPhys.87.307

Wendin, G.: Quantum information processing with superconducting circuits: a review. Rep. Progr. Phys. 80(10), 106001 (2017). https://doi.org/10.1088/1361-6633/aa7e1a

Bharti, K., Cervera-Lierta, A., Kyaw, T.H., Haug, T., Alperin-Lea, S., Anand, A., Degroote, M., Heimonen, H., Kottmann, J.S., Menke, T., Mok, W.-K., Sim, S., Kwek, L.-C., Aspuru-Guzik, A.: Noisy intermediate-scale quantum algorithms. Rev. Mod. Phys. 94, 015004 (2022). https://doi.org/10.1103/RevModPhys.94.015004

Leymann, F., Barzen, J.: The bitter truth about gate-based quantum algorithms in the nisq era. Quantum Sci. Technol. 5(4), 044007 (2020). https://doi.org/10.1088/2058-9565/abae7d

Porter, M.D., Joseph, I.: Observability of fidelity decay at the Lyapunov rate in few-qubit quantum simulations. Quantum 6, 799 (2022). https://doi.org/10.22331/q-2022-09-08-799

Kandala, A., Mezzacapo, A., Temme, K., Takita, M., Brink, M., Chow, J.M., Gambetta, J.M.: Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549(7671), 242–246 (2017). https://doi.org/10.1038/nature23879

Aspuru-Guzik, A., Dutoi, A.D., Love, P.J., Head-Gordon, M.: Simulated quantum computation of molecular energies. Science 309(5741), 1704–1707 (2005). https://doi.org/10.1126/science.1113479

Cerezo, M., Arrasmith, A., Babbush, R., Benjamin, S.C., Endo, S., Fujii, K., McClean, J.R., Mitarai, K., Yuan, X., Cincio, L., Coles, P.J.: Variational quantum algorithms. Nat. Rev. Phys. 3(9), 625–644 (2021). https://doi.org/10.1038/s42254-021-00348-9

Arute, F., Arya, K., Babbush, R., Bacon, D., Bardin, J.C., Barends, R., Biswas, R., Boixo, S., Brandao, F.G.S.L., Buell, D.A., Burkett, B., Chen, Y., Chen, Z., Chiaro, B., Collins, R., Courtney, W., Dunsworth, A., Farhi, E., Foxen, B., Fowler, A., Gidney, C., Giustina, M., Graff, R., Guerin, K., Habegger, S., Harrigan, M.P., Hartmann, M.J., Ho, A., Hoffmann, M., Huang, T., Humble, T.S., Isakov, S.V., Jeffrey, E., Jiang, Z., Kafri, D., Kechedzhi, K., Kelly, J., Klimov, P.V., Knysh, S., Korotkov, A., Kostritsa, F., Landhuis, D., Lindmark, M., Lucero, E., Lyakh, D., Mandrà, S., McClean, J.R., McEwen, M., Megrant, A., Mi, X., Michielsen, K., Mohseni, M., Mutus, J., Naaman, O., Neeley, M., Neill, C., Niu, M.Y., Ostby, E., Petukhov, A., Platt, J.C., Quintana, C., Rieffel, E.G., Roushan, P., Rubin, N.C., Sank, D., Satzinger, K.J., Smelyanskiy, V., Sung, K.J., Trevithick, M.D., Vainsencher, A., Villalonga, B., White, T., Yao, Z.J., Yeh, P., Zalcman, A., Neven, H., Martinis, J.M.: Quantum supremacy using a programmable superconducting processor. Nature 574(7779), 505–510 (2019). https://doi.org/10.1038/s41586-019-1666-5

Preskill, J.: Quantum Computing in the NISQ era and beyond. Quantum 2, 79 (2018). https://doi.org/10.22331/q-2018-08-06-79

Xiao, X., Freericks, J.K., Kemper, A.F.: Determining quantum phase diagrams of topological Kitaev-inspired models on NISQ quantum hardware. Quantum 5, 553 (2021). https://doi.org/10.22331/q-2021-09-28-553

Dalzell, A.M., Harrow, A.W., Koh, D.E., La Placa, R.L.: How many qubits are needed for quantum computational supremacy? Quantum 4, 264 (2020). https://doi.org/10.22331/q-2020-05-11-264

Georgopoulos, K., Emary, C., Zuliani, P.: Modeling and simulating the noisy behavior of near-term quantum computers. Phys. Rev. A 104(6), 062432 (2021)

Patel, T., Potharaju, A., Li, B., Roy, R.B., Tiwari, D.: Experimental evaluation of nisq quantum computers: error measurement, characterization, and implications. In: SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, pp. 1–15. IEEE (2020)

Nation, P.D., Kang, H., Sundaresan, N., Gambetta, J.M.: Scalable mitigation of measurement errors on quantum computers. PRX Quantum 2(4), 040326 (2021)

Weidenfeller, J., Valor, L.C., Gacon, J., Tornow, C., Bello, L., Woerner, S., Egger, D.J.: Scaling of the quantum approximate optimization algorithm on superconducting qubit based hardware. Quantum 6, 870 (2022). https://doi.org/10.22331/q-2022-12-07-870

Setiawan, F., Groszkowski, P., Ribeiro, H., Clerk, A.A.: Analytic design of accelerated adiabatic gates in realistic qubits: General theory and applications to superconducting circuits. PRX Quantum 2, 030306 (2021). https://doi.org/10.1103/PRXQuantum.2.030306

...Wu, Y., Bao, W.-S., Cao, S., Chen, F., Chen, M.-C., Chen, X., Chung, T.-H., Deng, H., Du, Y., Fan, D., Gong, M., Guo, C., Guo, C., Guo, S., Han, L., Hong, L., Huang, H.-L., Huo, Y.-H., Li, L., Li, N., Li, S., Li, Y., Liang, F., Lin, C., Lin, J., Qian, H., Qiao, D., Rong, H., Su, H., Sun, L., Wang, L., Wang, S., Wu, D., Xu, Y., Yan, K., Yang, W., Yang, Y., Ye, Y., Yin, J., Ying, C., Yu, J., Zha, C., Zhang, C., Zhang, H., Zhang, K., Zhang, Y., Zhao, H., Zhao, Y., Zhou, L., Zhu, Q., Lu, C.-Y., Peng, C.-Z., Zhu, X., Pan, J.-W.: Strong quantum computational advantage using a superconducting quantum processor. Phys. Rev. Lett. 127, 180501 (2021). https://doi.org/10.1103/PhysRevLett.127.180501

Headley, D., Müller, T., Martin, A., Solano, E., Sanz, M., Wilhelm, F.K.: Approximating the quantum approximate optimization algorithm with digital-analog interactions. Phys. Rev. A 106, 042446 (2022). https://doi.org/10.1103/PhysRevA.106.042446

Koch, J., Yu, T.M., Gambetta, J., Houck, A.A., Schuster, D.I., Majer, J., Blais, A., Devoret, M.H., Girvin, S.M., Schoelkopf, R.J.: Charge-insensitive qubit design derived from the cooper pair box. Phys. Rev. A 76, 042319 (2007). https://doi.org/10.1103/PhysRevA.76.042319

Proctor, T., Rudinger, K., Young, K., Nielsen, E., Blume-Kohout, R.: Measuring the capabilities of quantum computers. Nat. Phys. 18, 75–79 (2022). https://doi.org/10.1038/s41567-021-01409-7

Cross, A.W., Bishop, L.S., Sheldon, S., Nation, P.D., Gambetta, J.M.: Validating quantum computers using randomized model circuits. Phys. Rev. A 100, 032328 (2019). https://doi.org/10.1103/PhysRevA.100.032328

Nishio, S., Pan, Y., Satoh, T., Amano, H., Meter, R.V.: Extracting success from ibm’s 20-qubit machines using error-aware compilation. J. Emerg. Technol. Comput. Syst. (2020). https://doi.org/10.1145/3386162

Quetschlich, N., Burgholzer, L., Wille, R.: Predicting Good Quantum Circuit Compilation Options (2023)

Vadali, A., Kshirsagar, R., Shyamsundar, P., Perdue, G.N.: Quantum circuit fidelity estimation using machine learning. Quantum Mach. Intell. 6, 1 (2023). https://doi.org/10.1007/s42484-023-00121-4

Aleksandrowicz, G., Alexander, T., Barkoutsos, P., Bello, L., Ben-Haim, Y., Bucher, D., Cabrera-Hernández, F.J., Carballo-Franquis, J., Chen, A., Chen, C.-F., Chow, J.M., Córcoles-Gonzales, A.D., Cross, A.J., Cross, A., Cruz-Benito, J., Culver, C., González, S.D.L.P., Torre, E.D.L., Ding, D., Dumitrescu, E., Duran, I., Eendebak, P., Everitt, M., Sertage, I.F., Frisch, A., Fuhrer, A., Gambetta, J., Gago, B.G., Gomez-Mosquera, J., Greenberg, D., Hamamura, I., Havlicek, V., Hellmers, J., Herok, Horii, H., Hu, S., Imamichi, T., Itoko, T., Javadi-Abhari, A., Kanazawa, N., Karazeev, A., Krsulich, K., Liu, P., Luh, Y., Maeng, Y., Marques, M., Martín-Fernández, F.J., McClure, D.T., McKay, D., Meesala, S., Mezzacapo, A., Moll, N., Rodríguez, D.M., Nannicini, G., Nation, P., Ollitrault, P., O’Riordan, L.J., Paik, H., Pérez, J., Phan, A., Pistoia, M., Prutyanov, V., Reuter, M., Rice, J., Davila, A.R., Rudy, R.H.P., Ryu, M., Sathaye, N., Schnabel, C., Schoute, E., Setia, K., Shi, Y., Silva, A., Siraichi, Y., Sivarajah, S., Smolin, J.A., Soeken, M., Takahashi, H., Tavernelli, I., Taylor, C., Taylour, P., Trabing, K., Treinish, M., Turner, W., Vogt-Lee, D., Vuillot, C., Wildstrom, J.A., Wilson, J., Winston, E., Wood, C., Wood, S., Wörner, S., Akhalwaya, I.Y., Zoufal, C.: Qiskit:An Open-source Framework for Quantum Computing. Zenodo (2019). https://doi.org/10.5281/zenodo.2562111

Kandala, A., Temme, K., Córcoles, A.D., Mezzacapo, A., Chow, J.M., Gambetta, J.M.: Error mitigation extends the computational reach of a noisy quantum processor. Nature 567(7749), 491–495 (2019). https://doi.org/10.1038/s41586-019-1040-7

Berg, E.v.d., Minev, Z.K., Kandala, A., Temme, K.: Probabilistic error cancellation with sparse Pauli-Lindblad models on noisy quantum processors. arXiv (2022). https://doi.org/10.48550/ARXIV.2201.09866arXiv:2201.09866

Temme, K., Bravyi, S., Gambetta, J.M.: Error mitigation for short-depth quantum circuits. Phys. Rev. Lett. 119, 180509 (2017). https://doi.org/10.1103/PhysRevLett.119.180509

Czarnik, P., Arrasmith, A., Coles, P.J., Cincio, L.: Error mitigation with Clifford quantum-circuit data. Quantum 5, 592 (2021). https://doi.org/10.22331/q-2021-11-26-592

Cai, Z.: Quantum error mitigation using symmetry expansion. Quantum 5, 548 (2021). https://doi.org/10.22331/q-2021-09-21-548

LaRose, R., Mari, A., Kaiser, S., Karalekas, P.J., Alves, A.A., Czarnik, P., El Mandouh, M., Gordon, M.H., Hindy, Y., Robertson, A., Thakre, P., Wahl, M., Samuel, D., Mistri, R., Tremblay, M., Gardner, N., Stemen, N.T., Shammah, N., Zeng, W.J.: Mitiq: A software package for error mitigation on noisy quantum computers. Quantum 6, 774 (2022). https://doi.org/10.22331/q-2022-08-11-774

Suchsland, P., Tacchino, F., Fischer, M.H., Neupert, T., Barkoutsos, P.K., Tavernelli, I.: Algorithmic Error Mitigation Scheme for Current Quantum Processors. Quantum 5, 492 (2021). https://doi.org/10.22331/q-2021-07-01-492

Funcke, L., Hartung, T., Jansen, K., Kühn, S., Stornati, P., Wang, X.: Measurement error mitigation in quantum computers through classical bit-flip correction. Phys. Rev. A 105, 062404 (2022). https://doi.org/10.1103/PhysRevA.105.062404

McKay, D.C., Alexander, T., Bello, L., Biercuk, M.J., Bishop, L., Chen, J., Chow, J.M., Córcoles, A.D., Egger, D., Filipp, S., et al.: Qiskit backend specifications for openqasm and openpulse experiments. arXiv preprint arXiv:1809.03452 (2018)

Cervera-Lierta, A.: Exact Ising model simulation on a quantum computer. Quantum 2, 114 (2018). https://doi.org/10.22331/q-2018-12-21-114

Verstraete, F., Cirac, J.I., Latorre, J.I.: Quantum circuits for strongly correlated quantum systems. Phys. Rev. A 79, 032316 (2009). https://doi.org/10.1103/PhysRevA.79.032316

Dutkiewicz, A., Terhal, B.M., O’Brien, T.E.: Heisenberg-limited quantum phase estimation of multiple eigenvalues with few control qubits. Quantum 6, 830 (2022). https://doi.org/10.22331/q-2022-10-06-830

Shor, P.W.: Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM J. Comput. 26(5), 1484–1509 (1997). https://doi.org/10.1137/S0097539795293172

Zalka, C.: Grover’s quantum searching algorithm is optimal. Phys. Rev. A 60(4), 2746 (1999)

In a noisless evaluation of the Grover’s algorithm, the probability of finding the target state after k iterations is \(P = \sin ^2\left((2k+1)\theta \right)\) with \(\theta =\arcsin (2^{-n/2})\). Since we consider \(k\approx \frac{\pi }{4}\sqrt{2^{n}}-\frac{1}{2}\) we can write \(P = \sin ^2\left(\left(\pi 2^{\frac{n}{2}-1}+1\right)\arcsin \left(2^{-\frac{n}{2}}\right)\right)\)

Watrous, J.: Lecture Notes on Quantum Computation. https://cs.uwaterloo.ca/~watrous/QC-notes/QC-notes.13.pdf (2006)

Elben, A., Vermersch, B., Bijnen, R., Kokail, C., Brydges, T., Maier, C., Joshi, M.K., Blatt, R., Roos, C.F., Zoller, P.: Cross-platform verification of intermediate scale quantum devices. Phys. Rev. Lett. 124(1), 010504 (2020)

Lanyon, B., Maier, C., Holzäpfel, M., Baumgratz, T., Hempel, C., Jurcevic, P., Dhand, I., Buyskikh, A., Daley, A., Cramer, M., et al.: Efficient tomography of a quantum many-body system. Nat. Phys. 13(12), 1158–1162 (2017)

Flammia, S.T., Liu, Y.-K.: Direct fidelity estimation from few pauli measurements. Phys. Rev. Lett. 106(23), 230501 (2011)

Acknowledgements

We acknowledge the use of IBM Quantum services for this work. The views expressed are those of the authors and do not reflect the official policy or position of IBM or the IBM Quantum team. We thank the support of the Government of Biscay (Bizkaiko Foru Aldundia - Diputación Foral de Bizkaia) through Lantik and its Industry Focused Quantum Ecosystem initiative which provided access to the IBM quantum computers. This work was supported by Programa de Red Guipuzcoana de Ciencia, Tecnología e Innovación Proyectos de I+D, Convocatoria 2023.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Authors and Affiliations

Contributions

JB designed the project; UA, NS, JJ, and GS performed the calculations. All the authors contributed to the preparation of the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest. The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: The quantum circuits

Appendix: The quantum circuits

1.1 Quantum phase estimation

The QPE algorithm calculates the phase of the eigenvalue of a unitary matrix U for a proper eigenstate \(\psi \). The QPE uses two registers. The first one is composed of t qubits, the bigger is t the bigger is the precision of the estimation. The second register contains the \(\psi \) state. The circuit is described in Fig. 1, and it consists of applying t Hadamard gates to the t first register qubits and then controlled-U (\(U=\sigma _x\) in this case, represented with a \(+\) sign in the figure) operations in the way shown in Fig. 1. Being \(\psi \) (the eigenstate stored in the second register) an eigenstate of U (\(\sigma _x\)) it will not change during the execution of the quantum circuit as the only gates applied to it are U (\(\sigma _{x}\)) gates. After the appliance of the H and controlled-U (\(\sigma _x\)) gates the first register will read

If \(\theta \) can be expressed exactly by t bits using the binary fraction \(\theta =0._{\theta _{1}\dots \theta _{t}}=\frac{\theta _{1}}{2^{1}}+\dots +\frac{\theta _{t}}{2^{t}}\hspace{0.1cm}:\hspace{0.1cm}\theta _{1},\dots ,\theta _{t}=0,1\), then Eq. (11) may be rewritten

By taking the inverse Fourier transform of Eq. (11) the output is \(|\theta _{1}\dots \theta _{t}\rangle \) and, therefore, a measurement in the computational basis will give exactly \(\theta \) in its binary fraction form. It can be proven [1] that this method provides a good approximation of \(\theta \) even if it cannot be written as a binary fraction of t bits.

1.2 The Grover’s algorithm

The Grover algorithm is used to solve search problems. Let us understand a search problem as: Given a set \(S = \{0, 1,\ldots , 2^{n-1}\}\) of possible solutions, find x belonging to S such that \(f(x) = 1\) for a certain function f. In addition, we will assume that the element x that satisfies \(f(x) = 1\) is unique in S, and we will denote it as w. Let n be the number of qubits in the circuit.

To solve the search problem, we need to repeatedly apply the Oracle and Amplifier, where the Oracle is a unitary operator \(U_w\) that satisfies

and the Amplifier is another unitary operator that performs the inversion about the mean of amplitudes. That is, it modifies the amplitude of each state with respect to the mean of all amplitudes.

Therefore, by iterating the Oracle + Amplifier k times, where k is the integer closest to \(\frac{\pi }{4}\cdot \sqrt{2^n}\), the probability of not returning the desired element is of O(1/N), being negligible for sufficiently large N, with \(N = 2^n\).

The construction of the Oracle depends on the element that we are searching \(|w\rangle = |b_{n-1}.. b_1 b_0\rangle \). First, the Oracle applies an X gate to the i-th qubit if the element \(b_i=0\), for all \(i=0,\ldots ,n-1\). Then a multi-control-Z gate is applied on all qubits, and finally, an X gate is applied to the i-th qubit if the element \(b_i=0\). See Fig. 8 (left) for the Oracle’s implementation in the particular case for \(n = 3\) qubits and \(w = |3\rangle = |011\rangle \).

The Amplifier is constructed by applying a column of Hadamard gates on all qubits, a column of X gates on all qubits, a multi-control-Z gate on all qubits, and symmetrically a column of X gates followed by a column of Hadamard gates on all qubits, as can be appreciated in Fig. 8 (right) for \(n=3\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aseguinolaza, U., Sobrino, N., Sobrino, G. et al. Error estimation in current noisy quantum computers. Quantum Inf Process 23, 181 (2024). https://doi.org/10.1007/s11128-024-04384-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11128-024-04384-z