Abstract

With noisy intermediate-scale quantum (NISQ) computers becoming larger in scale and more reliable, quantum circuits are growing in size and complexity. To face the challenge of achieving optimal circuits, design automation approaches for improving and mapping quantum circuits on different architectures have been proposed, each characterized by a specific optimization strategy. In this article, the use of a template-based approach for quantum circuit optimization is explored, and a modular compilation toolchain supporting three quantum technologies (nuclear magnetic resonance, trapped ions and superconducting qubits) is presented. The toolchain implements logic synthesis for single-qubit and multi-qubit gates in the compilation process, and it is structured in multiple steps with modular libraries. The toolchain was tested through a benchmarking procedure, and the results for a subset of complex quantum circuits are reported here, alongside a comparison with those provided by the compilers of IBM’s Qiskit and Cambridge Quantum Computing’s \( \mathrm{\left. {t|ket} \right\rangle } \). The current toolchain prototype was designed as an easily expandable and reliable core for future developments, which could lead it to support more quantum technologies and a fully fledged layout synthesis. Even at this prototype stage, the obtained results are encouraging: they show that under certain conditions the toolchain can be competitive in quantum circuit optimization, especially when dealing with single-qubit gates.

1 Introduction

In an application scenario where quantum computing is employed to tackle concrete problems, in domains such as machine learning, optimization, image processing or chemical simulation, manual quantum circuit design has become impractical. To address the problem, the industry took inspiration from the design automation tools widely employed for the synthesis of digital circuits in classical computing, in order to produce refined, reliable quantum circuits adapted to the target devices. The goal is to produce an optimized quantum circuit, equivalent to that of a given quantum algorithm. The extent of the applied improvements and their focus may vary, depending on the desired performance, the target device and other degrees of freedom. These optimizations are usually performed by compilers [1], which take as input the high-level, abstract description of a quantum algorithm or of a quantum circuit and automatically “translate” and adapt it according to the given specifications. The resulting output is a quantum circuit built to work smoothly with the target device and tailored to optimize specific performance parameters.

Different figures of merit have been proposed for evaluating quantum circuit design and compilation. Among these, it is worth recalling:

- The circuit depth [2, 3], which is the length of the longest sequence of quantum gates from qubit initialization to measurement. It can be seen as a quantum equivalent of the critical path of digital circuits.

- The total gate count, which is the total number of quantum gates in a circuit. In some contexts, single-qubit and two-qubit (usually CX) gates are counted separately [4], or only some application-specific critical gates are considered, such as T and \(T^{\dagger }\) in the context of fault-tolerant quantum computation [5, 6].

The quantum circuit shown in Fig. 1 has a circuit depth equal to 5 (or 6, depending on whether measurement is counted), associated with all the gates involving the lowest and the intermediate qubits. Its total gate count is equal to 5 when no distinction between single-qubit and two-qubit gates is made; otherwise it is given by the pair (2, 3), where the two values are the occurrences of single-qubit and two-qubit gates, respectively. These metrics make it possible to estimate the complexity of a quantum circuit in a very simple way, but in some circumstances they can be quite limiting, since they do not assign different weights to different quantum gates, as would be expected from the features of current quantum computing hardware. For example, evaluating the effect of quantum gate durations on the circuit latency, i.e., the total time required for executing all the gates of a quantum circuit, is fundamental for estimating the reliability of circuit execution on a quantum computer (Fig. 1 shows an example of circuit latency calculation, assuming that all quantum gates are executed in a strictly sequential way). It is worth recalling that all quantum technologies characterized by a qubit encoding derived from a two-level/spin-\(\frac{1}{2}\) formalism [7, 8] are affected by dynamic non-ideality phenomena, such as relaxation and decoherence [9], and that the duration of quantum gates is not fixed. For example, single-qubit gates \(R_{\{X,Y\}}(\theta )\) are implemented by exciting a two-level/spin-\(\frac{1}{2}\) system with a resonant electromagnetic field of finite duration, and this duration is proportional to the gate rotation angle \(\theta \) [9, 10], as shown in Fig. 2. To keep the effects of these undesired phenomena negligible, the circuit latency should be minimized; this can be done at compilation level by finding an equivalent circuit whose quantum gates have minimum duration.
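The three metrics discussed above can be illustrated with a minimal Python sketch. The gate-list representation, the function names and the duration model (a rotation lasting `abs(angle) / omega`, a fixed assumed time for two-qubit gates, zero time for `rz`) are illustrative assumptions; the circuit is a hypothetical one, not a transcription of Fig. 1.

```python
import math

# Hypothetical circuit: (name, qubits, rotation angle or None), in time order.
circuit = [
    ("rx", (1,), math.pi / 2),
    ("cx", (1, 2), None),
    ("ry", (2,), math.pi),
    ("cx", (1, 2), None),
    ("cx", (0, 1), None),
]

def depth(gates):
    """Longest gate chain: a gate sits one level above the deepest qubit it touches."""
    level = {}
    for _, qubits, _ in gates:
        new_level = 1 + max(level.get(q, 0) for q in qubits)
        for q in qubits:
            level[q] = new_level
    return max(level.values(), default=0)

def gate_counts(gates):
    """Return the pair (single-qubit gates, two-qubit gates)."""
    single = sum(1 for _, qubits, _ in gates if len(qubits) == 1)
    return single, len(gates) - single

def sequential_latency(gates, omega=1.0, t_two_qubit=10.0):
    """Total time if gates run strictly one after another (assumed duration model)."""
    total = 0.0
    for name, qubits, angle in gates:
        if len(qubits) == 2:
            total += t_two_qubit          # assumed fixed two-qubit gate time
        elif name != "rz":                # rz assumed to cost no time
            total += abs(angle) / omega   # duration proportional to the angle
    return total

print(depth(circuit), gate_counts(circuit), sequential_latency(circuit))
```

With these assumptions the unweighted metrics treat every gate alike, whereas the latency changes as soon as a rotation angle or the two-qubit gate time changes.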

The case of circuit latency optimization highlights the need for figures of merit in quantum compilation that take the features of the hardware into account. In classical digital design and synthesis, dimensionless normalized quantities are often employed as cost functions. For example, the maximum delay of a circuit can be evaluated from the normalized execution times of its modules [11], which are estimated considering the logic gates constituting each module. A similar approach can be exploited for quantum circuits; figures of merit assigning different weights to different gates according to their duration and implementation complexity have already been proposed. An example is the cost function of Exercise 4 of the IBM Quantum Challenge 2020 [12], related to the construction of the optimal quantum circuit of a unitary matrix:

\[ \text {Cost} = n_{\text {U3}} + 10\, n_{\text {CX}} \]

Parameters \(n_{\text {U3}}\) and \(n_{\text {CX}}\) are the total numbers of U3 (single-qubit) and CX (two-qubit) gates, respectively, each of which has a different weight (1 and 10, respectively). Another example is the cost function discussed in Sect. 4.3 and used in this article, which takes into account the circuit depth and the fidelities of quantum gates; its definition is given in that section.
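As a small worked example of the weighted count described above (weight 1 per U3 gate, weight 10 per CX gate), the following sketch compares two hypothetical circuits. The function name and the gate counts are assumptions chosen only for illustration.

```python
def challenge_cost(n_u3: int, n_cx: int) -> int:
    """Weighted gate count: each U3 counts 1, each CX counts 10."""
    return n_u3 + 10 * n_cx

# Two hypothetical compilations of the same unitary.
many_single_qubit = challenge_cost(n_u3=20, n_cx=3)   # 23 gates in total
few_gates = challenge_cost(n_u3=5, n_cx=5)            # 10 gates in total

print(many_single_qubit, few_gates)
```

The circuit with fewer gates overall is the more expensive one here, which is exactly the kind of distinction an unweighted gate count cannot express.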

Current state-of-the-art compilers adopt different approaches for building the circuit, most of which exploit heuristic methods at their core. In this work, none of the mainstream optimization strategies was chosen. Instead of resorting to algebraic manipulations or graph theory, a more straightforward method was explored to evaluate whether a simpler local optimization could favour technology-specific compilation: a template-based approach. This method consists of exploiting circuit equivalences to rearrange the structure of the quantum circuit and thereby obtain optimizations, as represented by the example in Fig. 4, drawn using IBM’s Quantum Experience [13]. Applying the circuit equivalence of Fig. 4a to the quantum circuit in the left part of Fig. 4b creates a redundancy that can be exploited to optimize the circuit as a whole. The result is represented in the right part of Fig. 4b.
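The idea of using an equivalence to create a redundancy can be checked numerically. The sketch below is an assumption-laden illustration: it does not reproduce the template of Fig. 4a, but uses the standard identity that an \(R_Z\) gate on the control qubit commutes with CX, so that two CX gates separated only by that \(R_Z\) end up adjacent and cancel. The qubit ordering (control as the first Kronecker factor) and all names are choices made for this example.

```python
import numpy as np

def rz(theta):
    """Single-qubit Z rotation, exp(-i * theta * Z / 2)."""
    return np.diag([np.exp(-1j * theta / 2), np.exp(1j * theta / 2)])

I2 = np.eye(2)
# CX with the control as the first Kronecker factor.
CX = np.array([[1, 0, 0, 0],
               [0, 1, 0, 0],
               [0, 0, 0, 1],
               [0, 0, 1, 0]], dtype=complex)

theta = 0.37
rz_on_control = np.kron(rz(theta), I2)

# Original circuit (time order): CX, RZ on control, CX  -> three gates.
original = CX @ rz_on_control @ CX
# After commuting RZ past one CX, the two CX gates are adjacent and cancel.
optimized = rz_on_control

print(np.allclose(original, optimized))
```

The three-gate sequence collapses to a single rotation, which is the kind of local gain a template scan looks for.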

The goal of this article is twofold. Firstly, to determine whether a local compilation strategy based on templates can achieve real-world results similar to (or even better than) those provided by a significantly more complex global compilation approach. Secondly, to provide a prototype of a core for a modular quantum circuit compilation toolchain, to be used as an easily expandable and reliable base for future, more competitive developments. All the equivalences are reported in the supplementary document Avitabile et al., Supplementary Information. The compilation strategy has been tested on circuits to be executed on the three supported quantum computing technologies: nuclear magnetic resonance (NMR) [14], trapped ions [15] and superconducting qubits [16]. These technologies receive the same level of attention in the proposed compiler. This equal treatment can be considered a distinctive feature of the toolchain, since it differentiates it from other state-of-the-art compilers which, even when they support the native gates of different technologies, are mainly focused on the more easily accessible superconducting backends (for instance, the freely accessible few-qubit superconducting quantum computers from IBM).

After a brief overview of the state of the art in quantum Design Automation methods and a summary description of the compilers used in the testing phase in Sect. 2, the in-depth optimization approach, structure and technology-related optimizations of the proposed toolchain are discussed in Sect. 3. In Sect. 4 the benchmark procedures used to test the toolchain performance, in terms of compilation time and complexity of the final circuit, are explained and the obtained results are presented and compared with those of two state-of-the-art compilers, in terms of different weighted and non-weighted figures of merit. Evaluation of the toolchain competitiveness and future perspectives are then discussed in Sect. 5.

State-of-the-art quantum architecture and design automation toolchain. The logic synthesis step, on which the current version of the proposed compiler is focused, is highlighted. Image arranged from [17]

2 Background

Taking inspiration from classical computing, the quantum computing community rapidly adopted the philosophy of design automation to produce reliable architectures. Defining an optimal workflow to design and optimize a quantum processor is still partly a matter of trial and error, but in the last decade the understanding of quantum algorithms and quantum circuits has steadily improved in both industry and academia. Nowadays, the most crucial steps to efficiently optimize a quantum circuit are known, defined and implemented in several state-of-the-art design processes, and this gave birth to a somewhat canonical structure for the architecture design toolchain, such as the one described in [17] and represented in Fig. 3. As for the design methods adopted in the workflow, as stated before, many philosophies are employed in the current state of the art. Some prefer to construct optimal realizations of reversible circuit structures defined by a given number of inputs whenever possible [18], but in the more general case other strategies are commonly devised. Some of the most common approaches are based on heuristic methods that rely on a variety of logic mechanisms (transformations, Binary Decision Diagrams or BDDs, evaluation of unitary matrices, or search algorithms such as A*) [1, 18, 19] to produce reasonable solutions within a fixed amount of available computational resources [20]. These methods are also among the few feasible ways to solve NP-hard problems in practice [21]. Other well-known optimization strategies employ more advanced meta-heuristic algorithms to implement more specialized and complex solutions. Furthermore, there is a niche of rare or experimental approaches, such as the template-based strategy [22], which is discussed in more detail in Sect. 3.

Both the state-of-the-art quantum design flow and the improvement strategies play a behind-the-scenes role and are implemented in the inner mechanisms of quantum software platforms [23]. The purpose of these software environments is to give the user a set of tools for manipulating quantum programs, and they are usually in charge of managing the compilation and optimization of quantum circuits in technology-agnostic and technology-specific contexts alike. Several big players in the quantum research field have developed their own software platforms, using different programming languages or quantum intermediate representation languages. As references for the comparisons made to evaluate the performance of the proposed toolchain, two particularly successful state-of-the-art compilers were chosen: IBM’s Qiskit [24,25,26] and Cambridge Quantum Computing’s \( \left. {{\mathbf {t|ket}}} \right\rangle \) [27, 28]. Before introducing these compilers, it is important to specify that, like the toolchain described in this work, they both handle quantum circuits described in OpenQASM 2.0 [29], an intermediate representation language proposed by IBM in 2017 for formalizing the design of circuits to be executed on its hardware through the Quantum Experience.

Qiskit is an open-source framework for quantum computing developed by IBM Research and implemented in Python. The tool set specifically used to generate the comparison circuits analyzed in Sect. 4 was the Qiskit Terra Transpiler, which transpiles and optimizes quantum circuits using a user-defined set of gates. The Transpiler supports four optimization levels, named 0, 1, 2 and 3, where a higher level corresponds to a finer optimization. Qiskit has been widely used in the quantum research community for years. Indeed, IBM’s policy of open-sourcing it and of making tools like Quantum Experience available for free made it one of the most widely used quantum compilers, especially when dealing with superconducting devices, which are IBM’s flagship quantum technology.

The second reference compiler, \( \left. {{\mathbf {t|ket}}} \right\rangle \), is an architecture-agnostic quantum software developed by Cambridge Quantum Computing, implemented in C++ language. Originally this was a closed-source software, but Cambridge Quantum Computing decided to make it open-source recently [30]. This compiler manages the transpiling of machine-independent quantum algorithms into optimized quantum circuits, and it supports multiple intermediate languages (including OpenQASM 2.0). It also allows two optimization levels: standard and maximum. \( \left. \mathrm{{t|ket}} \right\rangle \) was closed-source at the time of development of the work described in this article and a Python module called pytket-qiskit was employed to access it and interface it with Qiskit for generating the reference circuits. In the past few years, \( \left. {{\mathrm{t|ket}}} \right\rangle \) proved to be an efficient platform in the state of the art, outperforming most competitors in several published benchmarks. It is especially renowned for its capability to smartly adapt a circuit to a given target device and to manage multi-qubit gates.

These two state-of-the-art compilers employ different optimization strategies. Qiskit uses a custom, internally defined sequence of algorithms and converters that rely on DoCPLEX [31], an IBM optimization library based on decision optimization through prescriptive analytics [32]. The optimization strategy of \({\textrm{t}|\textrm{ket}} \rangle \), as reported in [33], consists of a combination of “Peephole optimizations” (based on the identification of certain patterns in small windows inside the circuit), of algebraic Euler and KAK [34] decompositions (a known compaction method for quantum circuits) and of mathematical optimizations of high-level macroscopic structures. Most of these optimization methods are on average more complex than the template-based scan performed by the toolchain.

Example of application of a template as described in [35] to perform a circuital optimization

3 The proposed toolchain

3.1 The template-based approach

As introduced in Sect. 1, during the development of the toolchain the more “circuital” template-based approach was explored, instead of resorting to a complex optimization strategy based on algebraic evaluations or branching diagrams. According to this technique, the input quantum circuit is scanned to identify a series of circuit equivalences and identities, denoted as templates and described in the toolchain’s libraries. Once a template is detected, the quantum gates adjacent to it are identified and, if deemed convenient, the template structure is “switched” to its equivalent form to obtain a compaction or optimization of the circuit (as represented in Fig. 4). This approach is similar to the “Peephole optimizations” employed by \( \left. {\mathrm{{t|ket}}} \right\rangle \) [33], and it is based on the simple and intuitive concept of circuit equivalences, which does not require complex mathematics or algebraic evaluation tools to be implemented. The intrinsic flaw of this philosophy is its limited foresight: it is based on case-by-case applications performed while scanning the circuit, and it cannot look multiple steps ahead. Moreover, since the quantum circuits are optimized through a purely circuit-level remodeling, without analyzing the exact state of the qubits before or after each operation, the template-based approach does not consider optimizations or equivalences that rely on knowing the state of certain qubits, and favours general optimizations instead. The only state-dependent optimizations employed are the elimination of any \(R_Z\) gates at the beginning of a qubit line, which is assumed to be initialized to \(\left| 0\right\rangle \) (since \(R_Z \left| 0\right\rangle = \left| 0\right\rangle \) up to a global phase), or immediately before a measurement (since they do not affect the probability distribution of the measured eigenstates).
It should also be noted that, in applying this core philosophy in the toolchain’s workflow, many of the implemented optimizations prefer \(R_Z\) gates over other quantum gates. This is based on the principle that \(R_Z\) gates can be implemented virtually [10, 36], in an advantageous way that does not increase the circuit latency.
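The two state-dependent eliminations of \(R_Z\) gates mentioned above can be verified with a few lines of NumPy. This is only a numerical check under the usual convention \(R_Z(\theta ) = e^{-i\theta Z/2}\); the variable names and the random test state are assumptions made for the example.

```python
import numpy as np

def rz(theta):
    """Single-qubit Z rotation, exp(-i * theta * Z / 2)."""
    return np.diag([np.exp(-1j * theta / 2), np.exp(1j * theta / 2)])

theta = 0.83

# Case 1: RZ acting on a qubit initialized to |0> only adds a global phase.
ket0 = np.array([1, 0], dtype=complex)
overlap = abs(np.vdot(ket0, rz(theta) @ ket0))

# Case 2: RZ right before a computational-basis measurement leaves the
# outcome probabilities unchanged, whatever the state is.
rng = np.random.default_rng(0)
psi = rng.normal(size=2) + 1j * rng.normal(size=2)
psi /= np.linalg.norm(psi)
p_before = np.abs(psi) ** 2
p_after = np.abs(rz(theta) @ psi) ** 2

print(overlap, np.allclose(p_before, p_after))
```

Both checks hold for any angle, because \(R_Z\) is diagonal in the computational basis and only multiplies each amplitude by a phase.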

Currently, the toolchain’s libraries contain a total of 27 templates belonging to three families (all reported in Avitabile et al., Supplementary Information). These templates are far more numerous than the 7 “Peephole” identities of \( \left. {\mathrm{{t|ket}}} \right\rangle \) [33], but fewer than Qiskit’s 71 template circuits [37]. However, a direct comparison between the toolchain’s templates and Qiskit’s cannot properly be made.

First of all, Qiskit employs a broader definition of “template”: a sequence of gates whose global evolution is the identity. This implies that simple sequences of two identical gates with \(U^{\dagger } = U\) (such as H, X, Y, Z, CX and CZ) are treated as templates. On the other hand, the templates of the presented toolchain are equivalent sequences of quantum gates, “topologically” different from each other (in the sense that \(U^{\dagger } \ne U\)), which can be exploited to reduce the total number of gates in a quantum circuit. This definition is somewhat stricter and does not include the previously mentioned sequences with \(U^{\dagger } = U\), which are in any case removed from a circuit by the toolchain because of their intrinsic simplicity.

Another difficulty in a quantitative comparison between the templates of Qiskit and those of the toolchain is that the former include forty-seven templates with Toffoli gates. These gates are currently too hard to implement directly in hardware, and for this reason such templates are not included in the toolchain, which operates with gates involving at most two qubits, more suitable for experimental execution.
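The self-inverse pairs that Qiskit's broader definition counts as templates, as discussed above, can be identified numerically: a gate with \(U^{\dagger } = U\) applied twice gives the identity. The sketch below is only a check of that property on standard gate matrices; the dictionary and variable names are assumptions for this example, and S and T are included as counterexamples.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.diag([1, -1]).astype(complex)
S = np.diag([1, 1j])
T = np.diag([1, np.exp(1j * np.pi / 4)])
CX = np.array([[1, 0, 0, 0], [0, 1, 0, 0],
               [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)
CZ = np.diag([1, 1, 1, -1]).astype(complex)

gates = {"H": H, "X": X, "Y": Y, "Z": Z, "CX": CX, "CZ": CZ, "S": S, "T": T}

# A pair "U U" is an identity sequence exactly when U @ U equals the identity.
pair_is_identity = {
    name: bool(np.allclose(U @ U, np.eye(U.shape[0])))
    for name, U in gates.items()
}
print(pair_is_identity)
```

Pairs of S or T gates do not cancel (two S gates give Z), so they fall outside this trivial class and need a genuine equivalence to be simplified.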

The described prototype classifies template structures into three groups:

1. Simple gate equivalences (SGE), 15 templates reported in Section 2.1 of the supplementary file. They involve only single-qubit gates. Figure 7b shows equivalences belonging to this family.

2. Templates that involve single-qubit gates and One Two-Qubit gate (T1TQ), 8 templates reported in Section 2.2 of the supplementary file. Some of these templates involve two different forms to be exploited as circuit equivalences but, for naming and counting purposes, each of them was still considered a single instance rather than two different templates. In all these templates, the two-qubit gates are CX or CZ. A template belonging to this family is represented in Fig. 4a.

3. Templates acting on clusters of multiple two-qubit gates (TCTQ), 4 templates reported in Section 2.3 of the supplementary file. Circuits with multiple two-qubit gates, such as the one represented in Fig. 11a, belong to this family.

There are also a few special templates that apply to the trapped ion technology’s own two-qubit gate \(\mathbf {R_{XX}}\) and to NMR technology’s own two-qubit gate \(\mathbf {R_{ZZ}}\) (reported in Section 3.2 of Avitabile et al., Supplementary Information) that for counting and nomenclature purposes were treated as an independent category.

The templates and identities contained in the toolchain’s libraries were extrapolated and validated from [8, 38,39,40,41,42] or obtained through calculation and matching of mathematical identities. These equivalences can be either technology-agnostic, i.e., applicable to all circuit structures and not employing any quantum gate specific to a given target technology, or technology-specific, i.e., focused on creating more advantageous circuit structures for a given target technology, taking into account its native gates. The focus on the latter kind of optimization sets the toolchain apart from most state-of-the-art compilers. Instead of adopting an optimization philosophy that revolves around a single technology (usually the simpler superconducting one) and then performing a final translation and post-processing to adapt the output circuit to other target technologies, the toolchain evaluates at run time specific optimizations based on each supported technology’s own library of native gates. This is the case, for example, when it manages CZ gates while optimizing the circuit for a technology that does not directly support them, or when it considers the application of Special Templates right after CX gates have been translated into technology-specific two-qubit gates (as described in Sect. 3.2).
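To give a feeling for what a compaction based on simple single-qubit equivalences might look like, the following Python sketch merges neighbouring rotations about the same axis on one qubit line and drops rotations that reduce to the identity up to a global phase. It is a hypothetical illustration: the actual SGE templates are listed in the supplementary file and are not reproduced here, and the function name and data layout are assumptions.

```python
import math

TWO_PI = 2 * math.pi

def compact_line(gates, tol=1e-9):
    """Merge adjacent same-axis rotations on a single qubit line.

    gates: list of (axis, angle) with axis in {"rx", "ry", "rz"}, in time order.
    """
    out = []
    for axis, angle in gates:
        if out and out[-1][0] == axis:
            # R(a) followed by R(b) about the same axis equals R(a + b).
            angle += out.pop()[1]
        # A rotation by a multiple of 2*pi is the identity up to a global phase.
        reduced = math.remainder(angle, TWO_PI)
        if abs(reduced) > tol:
            out.append((axis, reduced))
    return out

line = [("rx", math.pi / 2), ("rx", math.pi / 2),
        ("rz", 0.4), ("rz", -0.4),
        ("rx", math.pi)]
print(compact_line(line))
```

In this example the cancelling \(R_Z\) pair disappears first, which makes the two \(R_X(\pi )\) rotations adjacent and lets the whole line vanish; a single left-to-right scan suffices because every new gate is compared with the last gate kept.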

3.2 The toolchain’s structure

The proposed quantum toolchain takes as input a quantum circuit described in OpenQASM 2.0 and produces as output an optimized circuit, also described in OpenQASM 2.0 and decomposed using the gate set of a target technology. Referring to the state-of-the-art toolchain described in [17] and represented in Fig. 3, the toolchain implements the optimizations belonging to the Logic Synthesis stage through a sequence of three different scripts labeled as “Steps”. The overall workflow is represented in Fig. 5.

The optimizations performed by the toolchain are applied step by step on an incrementally more specific target. In Step 1, the whole Clifford + T gate set is targeted by technology-agnostic optimizations with a focus on single-qubit gates, while the application of specific templates for multi-qubit gates is delegated to a later step. In Step 2, only the technology-dependent gate set is taken into account, so all single-qubit gates are translated into their counterparts in the target technology’s native set and then optimized accordingly; to perform these operations, Step 2 gives up the technology-agnosticism of Step 1 in favour of a technology-specific approach, considering only the chosen target technology and its inherent optimization process. Finally, in Step 3 an ad hoc set of optimizations is applied to all multi-qubit gates through the application of appropriate templates and decompositions based on the target technology. Some of the Steps feature inner loops (e.g., those associated with the parameters IT1 and IT2 in Fig. 6) to allow a tunable degree of circuit compaction through the repeated application of a certain subset of templates.

3.2.1 Single-qubit gates synthesis block

As observable in Fig. 5, the single-qubit gates synthesis block involves the first two steps of the toolchain:

- Step 1: QASM template-based optimization (Fig. 6). The aim of this step is to apply the main bulk of the circuit equivalences and template-based substitutions described in the toolchain’s libraries and to compact the input circuit as much as possible, as represented in Fig. 7a. This is achieved through the repeated application of a series of “coarse” compactions (performed in the simple preoptimizer and simple postoptimizer blocks in Fig. 6 and based on the templates of the SGE family) followed by specific, fine-grained remodelings (performed in the template-based optimizer block in Fig. 6 and based on the T1TQ templates, which are applied to generate as many null operations as possible without altering the logic of the circuit). When a straightforward elimination of redundant gates is not feasible, this Step applies transformations that maximize the use of preferable kinds of gates, such as the virtually implementable \(R_Z\) gates (in this case, all the equivalences of the SGE family that involve \(R_Z\) gates). Step 1 is designed to be completely technology-agnostic, and its optimizations are particularly efficient in reducing the number of single-qubit gates in the circuit. It exploits templates belonging not only to the SGE family but also to the T1TQ one, such as the one represented in Fig. 4a, thus allowing a situational yet efficient set of circuit improvements. As of now, Step 1 is compatible with an extended Clifford + T gate set, and it supports the usage of \(\varvec{R_X}\), \(\varvec{R_Y}\), \(\varvec{R_Z}\), X, Y, Z, S, T, \(\varvec{S^\dagger }\), \(\varvec{T^\dagger }\), H, CX, CZ and CCX (or Toffoli) gates in the input quantum circuit. IBM’s gate set comprising \({\textbf {U1}}(\lambda ) = R_Z(\lambda )\), \({\textbf {U2}}(\phi , \lambda ) = R_Z(\phi ) R_Y \left( \frac{\pi }{2} \right) R_Z(\lambda )\) and \({\textbf {U3}}(\theta , \phi , \lambda ) = R_Z(\phi ) R_X \left( -\frac{\pi }{2} \right) R_Z(\theta ) R_X \left( \frac{\pi }{2} \right) R_Z(\lambda )\) gates is also passively supported, but no optimizations are performed on such gates until Step 2, since it is assumed that the original input circuit only employs non-technology-specific quantum gates. Generally speaking, the output of this Step is an optimized OpenQASM circuit in which all gates have been decomposed into the \(\varvec{R_X}\), \(\varvec{R_Y}\), \(\varvec{R_Z}\), CX, CZ subset of gates, and in which each Pauli gate is converted to its rotational form using floating-point notation. Step 1 accepts as input a Subcircuit parameter, a boolean flag that specifies whether the circuit is a subcircuit to be used in conjunction with other QASM-described entities, and thus whether the optimizations regarding \(R_Z\) gates at the beginning or the end of a circuit can be applied.

As for the Step 1 matching order, simple identities are always applied before more complex templates. During this process, the toolchain identifies all gates that could be part of a complex template and leaves them “untouched”, while compacting the others. The complex templates are matched in a custom but interchangeable order, since no two templates overlap. The only exception is a template concerning H gates (reported as Template H1 in Section 2.2 of Avitabile et al., Supplementary Information) which is a particularly convenient subcase of a more general template (reported as Template H2 in Section 2.2 of Avitabile et al., Supplementary Information); Template H1 is applied before Template H2 to ease the detection of the subcase structure and to speed up its substitution. Overall, Step 1 makes extensive use of repeated, customizable loops to ensure that the maximum degree of circuit compaction is achieved, as represented in Fig. 6.
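The rotation-based expressions quoted in Step 1 for IBM's U1, U2 and U3 gates can be cross-checked numerically against the usual U3 matrix of OpenQASM 2.0. The sketch below assumes the conventions \(R_A(\theta ) = e^{-i\theta A/2}\) and the standard U3 matrix; equality is tested up to a global phase, and the helper names are assumptions for this example.

```python
import numpy as np

def rx(t):
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def ry(t):
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(t):
    return np.diag([np.exp(-1j * t / 2), np.exp(1j * t / 2)])

def u3(theta, phi, lam):
    """Standard U3 matrix; U2(phi, lam) = u3(pi/2, phi, lam), U1(lam) = u3(0, 0, lam)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -np.exp(1j * lam) * s],
                     [np.exp(1j * phi) * s, np.exp(1j * (phi + lam)) * c]])

def same_up_to_phase(a, b, tol=1e-9):
    """True if a = exp(i * alpha) * b for some real alpha."""
    idx = np.unravel_index(np.argmax(np.abs(b)), b.shape)
    phase = a[idx] / b[idx]
    return bool(np.isclose(abs(phase), 1.0, atol=tol)
                and np.allclose(a, phase * b, atol=tol))

theta, phi, lam = 0.7, -1.3, 2.1
u1_ok = same_up_to_phase(u3(0, 0, lam), rz(lam))
u2_ok = same_up_to_phase(u3(np.pi / 2, phi, lam),
                         rz(phi) @ ry(np.pi / 2) @ rz(lam))
u3_ok = same_up_to_phase(
    u3(theta, phi, lam),
    rz(phi) @ rx(-np.pi / 2) @ rz(theta) @ rx(np.pi / 2) @ rz(lam))
print(u1_ok, u2_ok, u3_ok)
```

The U3 check works because \(R_X(-\pi /2) R_Z(\theta ) R_X(\pi /2) = R_Y(\theta )\), so the five-gate product reduces to the familiar Z-Y-Z form.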

- Step 2: Technology-dependent gates compaction (Fig. 8). The aim of this step is to translate the output circuit of Step 1 into the gate set of the target technology, which is specified as input and chosen among nuclear magnetic resonance (NMR), trapped ions and superconducting technologies. The translation process is applied to all single-qubit gates but does not decompose two-qubit gates such as CX, which are left untouched so that they can be exploited in Step 3, where they are eventually decomposed into their basic constituent gates. This deferral is due to the fact that all the templates involving multiple two-qubit gates can be detected and applied much more easily with non-decomposed CX and CZ gates. This step is designed to take as input the optimized .qasm circuit files generated by Step 1 in order to work at maximum efficiency, but it can also be used on custom, unoptimized .qasm files. Along with this translation, Step 2 manipulates triplets of adjacent rotation gates of different types to achieve a further compaction of the circuit and to increase the number of \(R_Z\) gates when possible, based on the toolchain’s assumption that they can be implemented with null duration and are thus advantageous to maximize.

This manipulation, named Eulercombo, is quite powerful, and it is based on coordinate transformations using Euler angles [43], as represented in Fig. 9. It scans the circuit and identifies triplets of consecutive single-qubit gates; once a triplet is detected, it identifies the gates adjacent to it and evaluates the most convenient coordinate transformation to rewrite the triplet so that its external gates become of the same type as their adjacent gates, thus allowing a compaction. When multi-qubit gates are involved, Eulercombo tries to generate a favourable template with them and, if it succeeds, exploits it locally on that circuit subsection. As shown in Fig. 8, Step 2 also features:

- A smart handling of CZ gates for the technologies that do not support them: the gates are rearranged to exploit the symmetry property of CZ and maximize the null operations, and then translated ad hoc into CX gates using the equivalences reported in Figure 2 in Section 1 of Avitabile et al., Supplementary Information.

- The optimized merging scheme for IBM’s U gate set proposed in [44], which uses the same core philosophy as Eulercombo to maximize the compaction of U3 gates.

- A series of further circuit compactions based on simple translations into preferable gates, such as \(R_Z\) gates for NMR and trapped ions technologies and U2 for the superconducting technology.
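The two properties of CZ that the first item of this list relies on can be checked with NumPy. The sketch assumes the textbook translation of CZ into CX with a Hadamard on the target before and after; the equivalences actually used by the toolchain are those of the supplementary file and may differ. The qubit ordering (first Kronecker factor is qubit 0) and the names are assumptions.

```python
import numpy as np

I2 = np.eye(2)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
CX = np.array([[1, 0, 0, 0], [0, 1, 0, 0],
               [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)   # control q0, target q1
CZ = np.diag([1, 1, 1, -1]).astype(complex)
SWAP = np.array([[1, 0, 0, 0], [0, 0, 1, 0],
                 [0, 1, 0, 0], [0, 0, 0, 1]], dtype=complex)

# Symmetry: exchanging the roles of the two qubits leaves CZ unchanged,
# so its "control" can be placed on whichever qubit is more convenient.
cz_symmetric = bool(np.allclose(SWAP @ CZ @ SWAP, CZ))
cx_symmetric = bool(np.allclose(SWAP @ CX @ SWAP, CX))

# Translation: CZ equals CX conjugated by Hadamards on the target qubit.
cz_from_cx = bool(np.allclose(np.kron(I2, H) @ CX @ np.kron(I2, H), CZ))

print(cz_symmetric, cx_symmetric, cz_from_cx)
```

Because CZ is symmetric while CX is not, the orientation of the translated CX can be chosen so that the surrounding Hadamards land next to gates they can merge or cancel with.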

While Step 2’s role in the optimization process is fundamental to obtain quantum circuits tailored to a specific implementation technology, its optimizations are mostly situational compared to Step 1’s powerful set of circuit improvements. In terms of circuit optimization, Step 2 consolidates the reduction of the total number of single-qubit gates, and it is particularly effective in dealing with long streaks of this kind of gate uninterrupted by two-qubit gates. As of now, Step 2 supports \(\varvec{R_X}\), \(\varvec{R_Y}\), \(\varvec{R_Z}\), CX and CZ gates in the input quantum circuit and requires every single-qubit gate to be adjacent to gates of a different type. The U gate set is supported for the superconducting target technology but not for the others, where an extra step of decomposition into \(R_X\), \(R_Y\) and \(R_Z\) gates (not implemented in the current toolchain) is required. This is due to the core assumption that input circuits do not employ technology-specific gate sets, just as in Step 1. All the manipulations involving Euler angles are performed using the SciPy and NumPy Python libraries. Step 2 also accepts as inputs both the Subcircuit parameter and a parameter defining the target technology. The toolchain supports the following gate sets depending on the target technology:

-

NMR technology: \(\varvec{R_X}\), \(\varvec{R_Y}\), \(\varvec{R_Z}\), CX and CZ gates.

-

Trapped ions technology: \(\varvec{R_X}\), \(\varvec{R_Y}\) or R(\(\varvec{\theta , \phi }\)) gates, \(\varvec{R_Z}\), CX gates.

-

Superconducting technology: U1, U2, U3 and CX gates.

-

Example of an Eulercombo application performed in Step 2. The triplet of gates in the middle is rearranged using Euler angles in order to allow a recombination of both gates on the sides and thus a compaction of the circuit. The usage of a central \(R_Z\) gate is preferred since none of the other two external gates is of the same type, as explained in Sect. 3.1
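As a rough illustration of the kind of Euler-angle manipulation described in this caption, the following sketch uses SciPy's `Rotation` class to re-express a central triplet of rotations so that its outer gates can merge with their neighbours. The gate sequence, the angle values and the choice of the `xzx` convention are illustrative assumptions and do not reproduce the toolchain's actual Eulercombo routine. The gates are treated as rotations of the Bloch sphere, so the equivalence holds only up to a global phase.

```python
import numpy as np
from scipy.spatial.transform import Rotation

# Hypothetical gate sequence in circuit (time) order:
# R_X(0.5), [R_Z(0.3), R_Y(1.1), R_Z(-0.7)], R_X(0.2)
before = Rotation.from_euler("x", 0.5)
# Lowercase axis strings denote rotations about fixed (extrinsic) axes in SciPy,
# so the first angle is applied first, as in a circuit read left to right.
triplet = Rotation.from_euler("zyz", [0.3, 1.1, -0.7])
after = Rotation.from_euler("x", 0.2)

# Re-express the central triplet as R_X(a), R_Z(b), R_X(c).
a, b, c = triplet.as_euler("xzx")

# The outer R_X gates now merge with their neighbours: five gates become three.
merged = Rotation.from_euler("xzx", [0.5 + a, b, c + 0.2])

# In SciPy, p * q applies q first, so the last gate is the leftmost factor.
reference = after * triplet * before
is_equivalent = np.allclose(merged.as_matrix(), reference.as_matrix())
print(is_equivalent)
```

The merged sequence keeps a central \(R_Z\) gate, which is the situation the caption describes as preferable when the neighbouring gates are not \(R_Z\) gates.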

3.2.2 Two-qubit gates synthesis block

-

Step 3: Distribution/mirroring-based optimizations and CX gates decomposition (Fig. 10). The aim of this step is twofold: the exploitation of a subset of templates that can ensure a steady reduction of the number of employed CX gates (using the TCTQ templates, reported in Section 2.3 of Avitabile et al., Supplementary Information), and the decomposition of each two-qubit gate by using the target technology’s own native library. This step currently does not cover the operations required by the layout synthesis block described in the state-of-the-art toolchain in [17], since it lacks a routing mechanism capable of taking into account a given device’s connectivity and of mapping a circuit accordingly. For this first prototype of the toolchain, in fact, it was preferred to focus on general-purpose optimizations and on the adaptation to theoretically fully connected technologies. Moreover, inserting SWAP gates to match the circuit’s usage of multi-qubit gates with a non-fully connected target device is left as a potential future evolution of the project. This step is designed to take as inputs the optimized circuit .qasm files generated by Step 2 to work at maximum efficiency, but it can also be used on custom, unoptimized .qasm files. This step is technology-specific exactly like Step 2, and the performed manipulations of the circuit differ greatly depending on the target technology. Step 3 has the important task of handling the complex templates that involve clusters of CX gates, such as the one represented in Fig. 11a, and is the toolchain’s primary source of multi-qubit gate optimizations. This task is followed by another important one: a smart decomposition of two-qubit gates (referred to as Special Templates in Fig. 10) and reported in Section 3.2 of Avitabile et al., Supplementary Information, aimed at minimizing the rotation angles of the translated and newly inserted single-qubit gates and thus the overall circuit latency, followed by some reiterated minor optimizations (including Eulercombo calls), all in order to fully adapt the circuit to the target technology with the least impact on the circuit’s gates, as represented in Fig. 11b. In the superconducting case, where CX gates are not decomposed, this task focuses on ensuring the optimal compaction scheme proposed in [44] on all existing U gates (this is performed in the U gates merger block in Fig. 10). Both roles performed by Step 3 are essential to complete the toolchain’s proposed compilation process. This step requires all single-qubit gates to be adjacent to gates of different type. Step 3 also accepts as inputs both the subcircuit parameter and a parameter used to define the target technology. When processing an input file generated by Step 2, it is capable of automatically recognizing the target technology without needing an input. As represented in Fig. 10, the technology-specific templates are always applied after the technology-agnostic ones, which are also applied in a reiterated manner to achieve a higher degree of circuit compaction.

Overview of operations executed in Step 3

At the end of the compilation phase, the circuits compiled for each of the examined technologies are characterized by the following gates:

-

NMR technology: \(\varvec{R_X}\), \(\varvec{R_Y}\), \(\varvec{R_Z}\) and \(\varvec{R_{ZZ}}\).

-

Trapped ions technology: \(\varvec{R_X}\), \(\varvec{R_Y}\) or R(\(\varvec{\theta , \phi }\)) gates, \(\varvec{R_Z}\) and \(\varvec{R_{XX}}\).

-

Superconducting technology: U1, U2, U3 and CX gates.

While in the compilation of circuits for trapped ion and NMR technologies the effective native unitary evolutions are employed, the circuit compilation for superconducting qubits employs the CX, which is not native to this technology, but can be built from its effective two-qubit unitary evolutions [45]. Depending on how the qubits operate, the characteristic gate can be either the cross-resonance (substantially an \(\mathbf {R_{ZX}}\), exploited by devices with fixed resonance frequency) or the Controlled-phase or the iSWAP, typical of flux-tunable qubits.

In the proposed toolchain, the abstract gate set for IBM superconducting qubits, available in the Qiskit transpiler at the time of development of the toolchain itself, is employed. This choice was made for two reasons. The first is that the Qiskit transpiler was expected to be, even before the development of the toolchain, one of the references for the comparative evaluation of the presented work. The second is that, in the compilations for all available technologies, gates fully supported by the QASM simulator available in Qiskit were chosen in order to facilitate the functional verification of the compiled circuits, and CX belonged to this gate set.

3.3 Implementation overview

The toolchain was implemented as a sequence of Python 3.x scripts. Python was chosen for the current version of the prototype because of the need to interface it with existing quantum computing frameworks, and in particular with their quantum circuit simulators, which makes it easy to benchmark and validate template-based compilation. Each of the three main scripts implements one of the three Steps and takes as input an OpenQASM 2.0-described .qasm file. To ensure the complete application of the toolchain as intended, each step must take as input the file produced as output by the previous step or, in Step 1’s case, the original quantum circuit file to be optimized. It is also possible to apply the specific optimization implemented in one of the three main scripts to an ad-hoc, custom file. In fact, even though a one-step technology-specific approach might lead to slightly better results for a single target technology, the whole toolchain was intentionally designed in a completely modular way through a libraries-based implementation. This design makes it possible to deal with several technologies, gives total control over which steps are applied to a certain circuit, offers high flexibility in the functions’ usage, allows some specific parameters to be set by editing specific files, and leaves room for future modifications and expansions, such as the integration of novel technologies for quantum computers, like semiconductor quantum dots [46], defects in diamond [47], et cetera. Following the philosophy of total modularity, several libraries in the form of scripts were created, each containing a subset of functions designed to tackle a specific part of the optimization process. This allows the main script for each Step to remain well-ordered and easily customizable, and it also facilitates the nested usage of the functions in multiple occurrences, while making each library easily readable. In order to allow the editing of certain parameters that are particularly inconvenient to pass as shell inputs, each step supports the reading of .cfg files to determine such parameters and to act accordingly. These parameters include the grade of approximation of \(\pi \) when dealing with rotational gates, the threshold of rotation value under which a gate is considered a null operation, the iterative parameters used to arrange the function loops in the workflow and other technology-related flags.
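A minimal sketch of how such a .cfg file could be read is given below, using Python's standard `configparser` module. The section names, key names and the helper `is_null_rotation` are hypothetical and are not taken from the toolchain; the two numerical values correspond to the average-precision thresholds used in the benchmarks.

```python
import configparser

# Hypothetical .cfg content: section and key names are assumptions.
EXAMPLE_CFG = """
[precision]
pi_approximation = 1e-8
null_rotation_threshold = 1e-10

[workflow]
max_iterations = 5
"""

parser = configparser.ConfigParser()
parser.read_string(EXAMPLE_CFG)

pi_tol = parser.getfloat("precision", "pi_approximation")
null_thr = parser.getfloat("precision", "null_rotation_threshold")
max_iter = parser.getint("workflow", "max_iterations")


def is_null_rotation(theta, threshold=null_thr):
    """Return True when a rotation angle is small enough to be dropped."""
    return abs(theta) < threshold


print(pi_tol, null_thr, max_iter)
```

Keeping such thresholds in a file rather than on the command line makes it easier to reuse the same settings across the three steps.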

4 Benchmarks

4.1 Testing methodology

To test the circuits generated by the toolchain’s steps, some benchmark scripts were written in Python. The first, mandatory aim of these testing scripts was to verify that the introduced optimizations were actually correct, i.e., that the outcome of each circuit was the same as that of the reference quantum circuit. To do so, both the .qasm file describing the reference quantum circuit and the .qasm files generated by the toolchain were used to create quantum circuits in IBM’s Qiskit and to simulate them with the QASM Simulator available in the Aer Library, thus permitting the verification of their equivalence.

QASM Simulator is the most consolidated simulator in Qiskit for simulating noisy or large and deep quantum circuits. One of its peculiarities is that, when a measurement operation is called for the circuit to be simulated, it returns a measurement counts distribution for the eigenstates, depending on the number of shots \(N_{\text {shots}}\), instead of the exact eigenstates probability distribution. In other words, QASM Simulator provides an \(N_{\text {shots}}\) “finite estimation” of the probability distribution, which would be obtained in the limit \(N_{\text {shots}} \rightarrow \infty \). From a practical point of view, \(N_{\text {shots}}\) should be higher for non-uniform distributions with most eigenstates having non-null probabilities of being measured, such as in the ISING_N10 circuit, reported in Tables 2, 3 and 4, with a mean probability value slightly lower than \(1 \cdot 10^{-3}\) and a maximum one equal to \(42 \cdot 10^{-3}\).
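The effect of a finite number of shots can be illustrated without any quantum framework by sampling from a known distribution. The sketch below is not the QASM Simulator itself: the four-state distribution and the helper `estimate` are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical exact distribution over the four eigenstates of a two-qubit circuit.
exact = np.array([0.5, 0.25, 0.125, 0.125])


def estimate(n_shots):
    """Return the relative frequencies observed after n_shots measurements."""
    counts = rng.multinomial(n_shots, exact)
    return counts / n_shots


# Largest deviation from the exact probabilities for few and for many shots.
err_small = np.abs(estimate(100) - exact).max()
err_large = np.abs(estimate(500000) - exact).max()
print(err_small, err_large)
```

The statistical error shrinks roughly as the inverse square root of the number of shots, which is why distributions with many low-probability eigenstates call for a larger \(N_{\text {shots}}\).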

By using the simulator’s standard settings, with \(N_{\text {shots}}\) depending on the circuit to be simulated, and by ensuring that every qubit line in each tested circuit had a final measurement performed, it was possible to simulate each circuit and to visualize the corresponding eigenstates measurement counts in the form of a histogram. For circuits where a single output state was expected with a probability of 100%, the optimized circuit was deemed “correct” if its output matched that state, also with a probability of 100%. For circuits in which multiple output states with different probabilities were expected, the Kullback–Leibler deviation [48] between the obtained results was computed using the following formula, in which N is the total number of output states with non-null probability, opt(i) and ref(i) are the occurrences of the i-th eigenstate in the optimized and reference circuit, respectively, and \(N_{\text {shots}}\) is the total number of measurement shots associated with the employed simulator:

\(N_{\text {shots}} = 8192\) was deemed sufficient in all tests, except for ISING_N10, where it was set equal to 500000. The obtained results were then marked as “correct” only if the deviation did not exceed a value of \(5 \cdot 10^{-3}\). Once the optimized circuits were verified as “correct”, the scripts’ aim became to evaluate the effective capabilities of the toolchain. The evaluation covered both the complexity of the compilation algorithm, estimated in terms of execution time, and the comparison of its compiled circuits with those obtained with state-of-the-art compilers, in terms of different figures of merit. All the results are available in Sects. 4.2 and 4.3.
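The correctness check described above can be sketched as follows. The function assumes the standard Kullback–Leibler form, \(\sum _i p_{\text {opt}}(i) \log \left( p_{\text {opt}}(i) / p_{\text {ref}}(i) \right) \) with probabilities estimated as counts divided by \(N_{\text {shots}}\); the exact expression and logarithm base used in the benchmark scripts may differ. The counts in the example are invented.

```python
import math


def kl_deviation(opt_counts, ref_counts, n_shots):
    """Kullback-Leibler deviation between two measurement-count dictionaries.

    Assumes the standard definition with natural logarithm; states observed in
    the optimized circuit but never in the reference give an infinite deviation.
    """
    total = 0.0
    for state, opt in opt_counts.items():
        if opt == 0:
            continue
        ref = ref_counts.get(state, 0)
        if ref == 0:
            return math.inf
        p, q = opt / n_shots, ref / n_shots
        total += p * math.log(p / q)
    return total


N_SHOTS = 8192
# Invented counts for a circuit with two expected output states.
ref_counts = {"00": 4100, "11": 4092}
opt_counts = {"00": 4080, "11": 4112}

deviation = kl_deviation(opt_counts, ref_counts, N_SHOTS)
is_correct = deviation <= 5e-3  # acceptance threshold stated in the text
print(deviation, is_correct)
```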

4.2 Estimation of complexity of the compilation algorithm

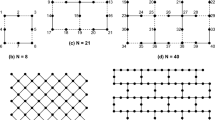

In order to estimate the complexity of the template-based compilation algorithm, a random-tests-based evaluation of the compilation time has been performed by changing the number of involved qubits and by considering all the target technologies currently supported by the toolchain.

First of all, for each qubits parallelism, 100 dense random unitary matrices were generated; then the equivalent quantum circuit of each matrix, based on the extended Clifford + T gate set discussed in Sect. 3.2.1, was obtained with Qiskit and subsequently compiled with the toolchain for superconducting, trapped ions and NMR technologies. Repeated tests made it possible to compute the mean compilation time for each qubits parallelism and each target technology.

These tests were done to stress the toolchain in a worst-case scenario, in terms of both total number of executed operations and compilation time. In fact, random matrices were chosen because of the need to verify the possible efficiency limits of the toolchain in an unpredictable operating regime, where the presence of template-based optimizations is not known a priori. Moreover, considering that quantum circuits generated from random matrices have a total number of gates that is definitely higher than that of application-specific quantum circuits (such as those in Tables 2, 3 and 4), the compilation times are expected to be definitely longer for the same number of qubits. It has been observed that, for each qubits parallelism, Qiskit generates quantum circuits from random unitary matrices with the same number of gates and order of single and two-qubit gates, thus implying that the same decomposition strategy is adopted. The numbers of gates for qubits parallelism from 2 to 6 are reported in Table 1. Another option forcing a worst-case computational scenario is the choice of Low precision, since its “looser” criteria lead to a higher number of allowed recombinations and, thus, to more performed evaluations and operations and to a higher computation time.

The left part (white background) of Fig. 12 shows the obtained results for a number of qubits between two and six. A similar exponential trend is visible in the three cases, in particular for a number of qubits between four and six. This implies that the compiler behaves in a coherent way for all the examined technologies, at least for what concerns the number of operations to be done, which is reflected in the total compilation time. In order to further investigate this similarity, fitting calculations were done, for estimating for each technology a relationship between compilation time \(\Delta t\) and number of qubits N of type

and in particular the characteristic multiplicative coefficient of the exponential \(\alpha \). Considering that \(\log (\Delta t) = \log (\alpha ) + \beta N\), linear fitting was executed for \(N \in [4,6]\) and the following \(\beta \) coefficients were obtained for the three technologies:

The obtained values can be reasonably considered a satisfactory proof of similarity of the compilation procedure in the three cases. In fact, \(\beta _{\text {M}}\), associated with NMR compilation, is slightly higher (6.4% and 4.1%) than \(\beta _{\text {S}}\) and \(\beta _{\text {I}}\), characteristic of the plots of superconducting and trapped ions, respectively. Moreover, \(\beta _{\text {I}}\) is about 0.7% lower than the mean value of \(\beta \), that is equal to 2.91, thus further proving that the exponential coefficient of the current implementation of the compiler is expected to be close to 2.90. This value is in any case quite high, as it is possible to see in the right part (red background) of the plot in Fig. 12. In fact, with \(\beta \simeq 2.90\), the compilation timescales of seven-qubit and eight-qubit circuits are \(\sim {1\times 10^{4}}\hbox {s}\) and \(\sim {1\times 10^{5}}\hbox {s}\), respectively.
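The linear fit of \(\log (\Delta t)\) against N can be sketched with NumPy as shown below. The timing data are synthetic, generated from an exponential law with an arbitrary prefactor, and the natural logarithm is assumed; only the value \(\beta \simeq 2.90\) is taken from the text.

```python
import numpy as np

# Synthetic compilation times in seconds, for illustration only:
# alpha_true is an arbitrary prefactor, beta_true is the value quoted in the text.
alpha_true, beta_true = 1e-4, 2.9
qubits = np.array([4, 5, 6])
times = alpha_true * np.exp(beta_true * qubits)

# Linear fit of log(dt) = log(alpha) + beta * N over N in [4, 6].
beta_fit, log_alpha_fit = np.polyfit(qubits, np.log(times), deg=1)
alpha_fit = np.exp(log_alpha_fit)

# Under this model, each additional qubit multiplies the time by exp(beta).
growth_per_qubit = np.exp(beta_fit)
print(beta_fit, alpha_fit, growth_per_qubit)
```

With \(\beta \simeq 2.90\), the growth factor per added qubit is about 18, which is consistent with the order-of-magnitude jump between the seven-qubit and eight-qubit timescales mentioned above.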

In order to understand why superconducting and NMR technologies provided the best and worst compilation time results, respectively, it is important to point out that Qiskit almost always preferred the use of CX gates for the creation of quantum circuits from random unitary matrices. This two-qubit gate is native to the superconducting technology, so two-qubit-gate decompositions, according to the decomposition schemes reported in Avitabile et al., Supplementary Information, are not required. Hence, new single-qubit gates are not inserted and no additional circuit re-arrangement is required. On the other hand, in NMR’s case, all CX gates must be translated into CZ (refer to Figure 2 in Avitabile et al., Supplementary Information), with the consequent insertion of extra single-qubit gates and additional circuital manipulations. It is expected that NMR could provide lower compilation times with an input circuit containing more CZ gates than CX gates.

In conclusion, according to the obtained results, it is possible to roughly write the total compilation time as:

where \(\beta _{\text {intrinsic}}\) is the exponential coefficient of an “intrinsic” compilation time, associated with all operations done before the eventual two-qubit-gate decomposition, while \(\delta _{\text {two-qubit}}\) and \(\delta _{\text {re-arrange}}\) are the coefficients of the overhead contributions due to two-qubit gates decomposition and final re-arrangement, respectively.

The exponential increase of the compilation time over the number of qubits casts some doubts on the potential scalability of the present prototype of the toolchain, i.e., on its capability of handling quantum circuits with a high number of qubits. Even though this is undoubtedly an important aspect to take into account in the development of classical software assisting quantum computation, it must be remarked that the optimization of the compilation time was not the leading concern of the proposed work. The latter mainly aims to assess whether a local optimization strategy could assist technology-specific compilation, leading to a reduction of the number of quantum gates, which is a critical task of the current NISQ era. Indeed, this makes the difference between a circuit that can actually run on real hardware and one that cannot, because of the poor resulting fidelity associated with the emergence of dynamic non-ideality phenomena.

In this regard, it must also be highlighted that the current implementation of the compiler is limited, at least for what concerns the execution time, by the employed programming language (Python, which is intrinsically slower than compiled languages such as C/C++ [49]) and by the strictly sequential execution of the operations. The latter is a drawback of the prototype, in which no effort was made to overcome an intrinsic computational limitation of template-based optimizations. As already noted, these revolve around gate-by-gate analysis and operations, often nested in recursive procedures, to be called whenever an improvement can be performed to maximize circuital compaction. It is clear that larger circuits require more recursions and longer compilation times, which cannot be compensated by a strictly sequential execution. Parallelizing the compilation can represent a valuable tool to achieve a reduction of the compilation time. This could be done by partitioning the circuit into smaller “independent” regions through barriers (such as those in Figs. 4a and 7a) to be optimized in parallel, e.g., by exploiting multi-threading.
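The barrier-based partitioning suggested above can be sketched as follows. This is not part of the current toolchain: the helpers `split_at_barriers` and `cancel_adjacent_pairs` are hypothetical, and the per-region optimization is a toy rule (removal of adjacent identical self-inverse gates) standing in for the template-based routines.

```python
from concurrent.futures import ThreadPoolExecutor


def split_at_barriers(gate_lines):
    """Split a list of OpenQASM gate lines into regions separated by barriers."""
    regions, current = [], []
    for line in gate_lines:
        if line.startswith("barrier"):
            regions.append(current)
            current = []
        else:
            current.append(line)
    regions.append(current)
    return regions


def cancel_adjacent_pairs(region):
    """Toy optimization: drop adjacent identical self-inverse gates (H, X, CX)."""
    out = []
    for line in region:
        name = line.split()[0]
        if out and out[-1] == line and name in {"h", "x", "cx"}:
            out.pop()  # the two identical gates cancel each other
        else:
            out.append(line)
    return out


gates = [
    "h q[0];", "h q[0];", "cx q[0],q[1];",
    "barrier q[0],q[1];",
    "x q[1];", "cx q[0],q[1];", "cx q[0],q[1];", "x q[1];",
]

# Each region is optimized independently; map() preserves the region order.
with ThreadPoolExecutor() as pool:
    optimized_regions = list(pool.map(cancel_adjacent_pairs, split_at_barriers(gates)))

optimized = [line for region in optimized_regions for line in region]
print(optimized)
```

In CPython, threads do not run CPU-bound Python code in parallel because of the global interpreter lock, so a process pool (`ProcessPoolExecutor`) would likely be needed to obtain an actual speed-up with this structure.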

4.3 Comparison with other compilers

The comparison of the results provided by the proposed compilation toolchain and the ones generated by the other state-of-the-art compilers chosen as reference was done in terms of different figures of merit, which are reported in the following (each one with a label reported in parentheses):

-

The total number of single-qubit gates (1Q).

-

The total number of non-\(\varvec{R_Z}\)/U1 single-qubit gates (1QNZ), which are the de facto relevant single-qubit gates, since they have a non-null duration, in accordance with the assumption of a virtual implementation of \(R_Z\) gates.

-

The total number of multi-qubit gates (MQ).

-

The normalized weighted circuit latency for single-qubit gates (L), given by:

$$\begin{aligned} \text {L} = \sum _{R \in \text {1QNZ}} \frac{\vert \theta _{R} \vert }{\frac{\pi }{2}} = \sum _{R \in \text {1QNZ}} 2 \frac{\vert \theta _R \vert }{\pi } \, , \end{aligned}$$ (6)

i.e., the sum of the normalized durations of the single-qubit gates R other than the \(R_Z\) ones, which are assumed to be implemented virtually. Since the latency introduced by an \(R_{\{X,Y\}}\) gate is proportional to the rotation angle \(\varvec{\theta _R}\), a normalized duration \(\varvec{2 \frac{\vert \theta _R \vert }{\pi }}\) was chosen, so that gates with rotation angles equal to \(\frac{\pi }{2}\) and \(\pi \) provide circuit latency contributions equal to 1 and 2, respectively. In the case of the quantum circuit in Fig. 1, since H and X gates are both usually translated into one non-virtual single gate with \(\vert \theta _R \vert \) equal to \(\frac{\pi }{2}\) and \(\pi \), respectively [10], \(\text {L} = 1 + 2 = 3\). These weights can be used not only for technologies based on \(R_{\{X,Y\}}\) gates, but also with IBM’s U gates. In fact, considering the U-R gates relations reported in Sect. 3.2.1, U2 and U3 gates are characterized by one and two non-virtual gates, respectively (all with \(\vert \theta _R \vert = \frac{\pi }{2}\)), so their corresponding circuit latency contributions are equal to 1 and 2.

-

The circuit cost C, that, according to [50], can be computed as follows:

$$\begin{aligned} \textrm{C} = - D\log K - \sum _i \log {\mathcal {F}}_i^{1q} - \sum _j \log {\mathcal {F}}_j^{2q}\,, \end{aligned}$$ (7)

where D is the circuit depth, K is a constant that increases the cost of deep circuits, \({\mathcal {F}}_i^{1q}\) is the average fidelity of the i-th single-qubit gate, \({\mathcal {F}}_j^{2q}\) is the average fidelity of the j-th two-qubit gate and the two summation operators run over all the quantum gates of the target quantum circuit. Defining as \({\mathcal {F}}^{1q}\) and \({\mathcal {F}}^{2q}\) the single and two-qubit native fidelities of the target hardware (i.e., the average fidelities of the native two-qubit gate and of a reference single-qubit gate, usually the \(R_X(\pi /2)\) gate, customarily reported in open-source calibration data repositories of real quantum computers), the reference article recommends selecting K such that \({\mathcal {F}}^{1q}< K < {\mathcal {F}}^{2q}\). Accordingly, here the value of K is computed as

$$\begin{aligned} K = \frac{{\mathcal {F}}^{1q} + {\mathcal {F}}^{2q}}{2}\,. \end{aligned}$$ (8)

For the target technologies of the proposed toolchain, the values of \({\mathcal {F}}^{1q}\) and \({\mathcal {F}}^{2q}\) are determined as discussed in the following:

-

Superconductors: the fidelities are defined as

$$\begin{aligned} \begin{aligned}&{\mathcal {F}}^{1q} \triangleq {\mathcal {F}}(R_X(\pi /2)) \sim 1 - e_{1q} = 0.99926 \\&{\mathcal {F}}^{2q} \triangleq {\mathcal {F}}(\text {CX}) \sim 1 - e_{2q} = 0.97917\,, \end{aligned} \end{aligned}$$ (9)

where \(e_{1q}\) and \(e_{2q}\) are the average single (\(\frac{\pi }{2}\)-pulse) and two-qubit native gate error rates, respectively, retrieved from the calibration data of the mock backend FakeToronto [51] available in Qiskit Terra, which is substantially a simplified model for classical simulations of the twenty-seven-qubit IBMQ Toronto quantum computer. According to the previous formula, fidelity is assumed to be the complement of the error rate with respect to the unit.

-

Trapped ions: the fidelities are

$$\begin{aligned} \begin{aligned}&{\mathcal {F}}^{1q} \triangleq {\mathcal {F}}(R_X(\pi /2)) = 0.99717 \\&{\mathcal {F}}^{2q} \triangleq {\mathcal {F}}(R_{XX}) = 0.96960\,, \end{aligned} \end{aligned}$$ (10)

where \({\mathcal {F}}(R_X(\pi /2))\) and \({\mathcal {F}}(R_{XX})\) are the single and two-qubit native gate fidelities, respectively, and they are retrieved from [52].

-

NMR: the fidelities are

$$\begin{aligned} \begin{aligned}&{\mathcal {F}}^{1q} \triangleq {\mathcal {F}}(R_X(\pi /2)) = 0.99895 \\&{\mathcal {F}}^{2q} \triangleq {\mathcal {F}}(R_{ZZ}) = 0.97977\,, \end{aligned} \end{aligned}$$ (11)

where \({\mathcal {F}}(R_X(\pi /2))\) and \({\mathcal {F}}(R_{ZZ})\) are the single and two-qubit native gate fidelities, respectively, and they are computed exploiting the simulator proposed in [9]. In more detail, the physical parameters (J-couplings, resonance frequencies, decoherence and relaxation time constants [53,54,55]) of a four-qubit fully connected quantum computer based on a crotonic acid molecule are chosen as inputs to the compact model simulation infrastructure. Then, the fidelities resulting from the application of \(R_X(\pi /2)\) pulses on each qubit and of \(R_{ZZ}\) gates on each pair of qubits are computed. Finally, the average fidelities are determined as follows:

$$\begin{aligned} \begin{aligned}&{\mathcal {F}}(R_X(\pi /2)) = \frac{1}{4} \sum _{i=0}^3 {\mathcal {F}}_i(R_X(\pi /2))\\&{\mathcal {F}}(R_{ZZ}) = \frac{1}{6} \sum _{i=0}^2 \sum _{j = i+1}^3 {\mathcal {F}}_{i,j}(R_{ZZ})\,. \end{aligned} \end{aligned}$$(12)

-

Since the toolchain’s output file exclusively contains the native gates of the target technology, it is reasonable to assume, as a first-order approximation, that all two-qubit gates show the same average fidelity. Therefore,

$$\begin{aligned} \begin{aligned} {\mathcal {F}}_i^{2q} = {\mathcal {F}}^{2q}\,,\, \forall i\,. \end{aligned}\end{aligned}$$ (13)

Conversely, since the execution time of single-qubit gates depends on the rotation angle, larger angles will lead to longer gate executions and, therefore, to higher error rates and lower fidelities. Hence, it is not acceptable to use the same single-qubit average fidelity for all single-qubit gates. It can be shown (see Appendix A) that there exists an approximated relation between the gate fidelity of an arbitrary single-qubit gate (\( {\mathcal {F}}^{1q}(R(\theta ))\)) describing a rotation of an angle \(\theta \) and the average fidelity of a \(R_X(\pi /2)\) pulse (\({\mathcal {F}}(R_X(\pi /2))\)):

$$\begin{aligned} {\mathcal {F}}^{1q}(R(\theta )) \sim 1 - \frac{2\left| \theta \right| }{\pi } \left( 1 - {\mathcal {F}}(R_X(\pi /2))\right) \,. \end{aligned}$$ (14)

-

The elapsed computation time (T) for completing the whole compilation, in seconds.
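The latency of Eq. (6), the angle-dependent fidelity of Eq. (14) and the cost of Eqs. (7) and (8) can be combined in a short sketch. The fidelity values are the trapped-ions ones of Eq. (10); the gate list, the circuit depth and the function names are hypothetical, the natural logarithm is assumed, and virtual \(R_Z\) gates are assumed to contribute unit fidelity to the cost.

```python
import math

F1Q = 0.99717  # R_X(pi/2) fidelity, trapped-ions value of Eq. (10)
F2Q = 0.96960  # R_XX fidelity, trapped-ions value of Eq. (10)
K = (F1Q + F2Q) / 2  # Eq. (8)

NON_VIRTUAL = ("rx", "ry")  # R_Z gates are assumed to be implemented virtually


def latency(gates):
    """Eq. (6): sum of 2|theta|/pi over the non-R_Z single-qubit gates."""
    return sum(2 * abs(theta) / math.pi for name, theta in gates if name in NON_VIRTUAL)


def single_qubit_fidelity(theta):
    """Eq. (14): approximate fidelity of a single-qubit rotation by theta."""
    return 1 - 2 * abs(theta) / math.pi * (1 - F1Q)


def circuit_cost(depth, gates, n_two_qubit):
    """Eq. (7), with all two-qubit gates sharing the same fidelity (Eq. (13))."""
    cost = -depth * math.log(K)
    cost -= sum(
        math.log(single_qubit_fidelity(theta))
        for name, theta in gates
        if name in NON_VIRTUAL
    )
    cost -= n_two_qubit * math.log(F2Q)
    return cost


# Hypothetical circuit: a pi/2 rotation, a pi rotation and a virtual R_Z gate.
gates = [("ry", math.pi / 2), ("rx", math.pi), ("rz", 0.3)]
L = latency(gates)
C = circuit_cost(depth=3, gates=gates, n_two_qubit=1)
print(L, C)
```

For this gate list the latency is 1 + 2 = 3, matching the contributions assigned in the text to rotations by \(\frac{\pi }{2}\) and \(\pi \).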

As for the choice of quantum circuits to be tested, it was preferred to use general, different-sized quantum circuits. Most of them are available on two GitHub repositories:

1. The QASMBench repository.

2. A repository of testing circuits belonging to Prof. Dr. R. Wille’s IIC Group from the Johannes Kepler University of Linz [58]. These quantum circuits implement classical Boolean functions, such as addition.

The OpenQASM 2.0 descriptions of SHOR and EDGE_DETECT, reported in Tables 2, 3 and 4 and associated with Shor’s algorithm and horizontal edge detection in image processing, were obtained using the Qiskit transpiler on the original circuits available in [41]. The set of circuits chosen for the benchmark was composed with the idea of involving small-to-large-scaled circuits. Only the latest version of each chosen circuit in the repositories was taken into consideration. The circuits were mostly left untouched: the only actions performed on them were adding a measurement on each involved qubit line at the end and, in a few circuits taken from QASMBench’s repository, manually decomposing custom user-defined gates, which the toolchain does not currently recognize or support. Each circuital compilation executed by the toolchain was performed with three different sets of parameters: low precision (\(\varvec{\text {thr}}_1 = 10^{-4}\), \(\varvec{\text {thr}}_2 = 10^{-6}\)), average precision (\(\varvec{\text {thr}}_1 = 10^{-8}\), \(\varvec{\text {thr}}_2 = 10^{-10}\)) and high precision (\(\varvec{\text {thr}}_1 = 10^{-10}\), \(\varvec{\text {thr}}_2 = 10^{-12}\)), in which \(\varvec{\text {thr}}_1\) is the grade of approximation of \(\pi \) and \(\varvec{\text {thr}}_2\) is the threshold of rotation value under which a gate is considered a null operation. All the compilations were performed on a single-process Intel(R) Xeon(R) Gold 6134 CPU @ 3.20GHz octa-core, Model 85 [59] with a memory of 10296102+ KiB.

4.4 Comparison with the state of the art

The benchmarks were performed using Qiskit version 0.28, with Qiskit Aer Libraries version 0.8.2 and Qiskit Terra Libraries version 0.18.0 (the latest releases at the time of writing), and pytket-qiskit version 0.16.1. For both compilers and the toolchain, each quantum architecture was considered as perfectly fully connected, so the mapping capabilities were not taken into account in the benchmarks.

It is important to clarify that Qiskit compilation for NMR employed a slightly different basis set of quantum gates. In fact, the set \(R_X\), \(R_Y\), \(R_Z\), \(R_{ZZ}\) is not supported by the employed version of this library, so the \(R_{ZZ}\) was replaced by the CZ. Recalling that \(\text {CZ} = R_{ZZ} \left( \frac{\pi }{2} \right) \left[ R_{Z} \left( -\frac{\pi }{2} \right) \otimes R_{Z} \left( -\frac{\pi }{2} \right) \right] \) [10], this compilation strategy did not affect the calculation of the total number of two-qubit gates and of non-\(R_Z\) single-qubit gates, but it could slightly modify the circuit depth, which is generally expected to be a bit lower, considering that a single CZ requires two single-qubit gates and one two-qubit gate. In any case, this different basis set was employed to compare the NMR toolchain capabilities with a potentially better basis set in terms of circuit depth and cost.
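The CZ identity quoted above can be checked numerically with NumPy. The sketch assumes the common conventions \(R_Z(\theta ) = e^{-i \theta Z / 2}\) and \(R_{ZZ}(\theta ) = e^{-i \theta Z \otimes Z / 2}\), under which the identity holds up to a global phase; the function names are illustrative.

```python
import numpy as np


def rz(theta):
    """Single-qubit rotation exp(-i * theta * Z / 2)."""
    return np.diag([np.exp(-1j * theta / 2), np.exp(1j * theta / 2)])


def rzz(theta):
    """Two-qubit rotation exp(-i * theta * (Z x Z) / 2)."""
    zz_eigenvalues = np.array([1, -1, -1, 1])
    return np.diag(np.exp(-1j * theta / 2 * zz_eigenvalues))


cz = np.diag([1, 1, 1, -1]).astype(complex)

# Right-hand side of the identity: R_ZZ(pi/2) [R_Z(-pi/2) x R_Z(-pi/2)].
product = rzz(np.pi / 2) @ np.kron(rz(-np.pi / 2), rz(-np.pi / 2))

# Factor out the global phase before comparing with CZ.
phase = product[0, 0]
equal_up_to_phase = np.allclose(product, phase * cz)
print(equal_up_to_phase)
```

The check makes explicit that one CZ corresponds to one \(R_{ZZ}\) gate plus two virtual \(R_Z\) gates, which is why the counts of two-qubit and non-\(R_Z\) single-qubit gates are unaffected by the change of basis set.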

The general trend noted in the benchmarks is that Qiskit features a versatile management of single-qubit gates, being able to optimize them well using the sets of gates of multiple target technologies. \( \left. {{\mathbf {t|ket}}} \right\rangle \), on the other hand, proved to be more unpredictable when dealing with single-qubit gates, because it alternates between very good optimizations and clearly suboptimal handling. At the same time, \( \left. {\mathrm{{t|ket}}} \right\rangle \) proved to be the best in the reduction of two-qubit gates, as it was able to reduce their number substantially by accepting some tradeoffs on the number of single-qubit gates.

4.5 Obtained results

A subset of the obtained results is reported in Tables 2, 3, 4. It is important to note that, in the calculation of the normalized circuit latency (L), all non-integer results were rounded up to stay in a worst-case scenario. All the toolchain-produced circuits proved to be functionally equivalent to the reference input circuits. Among the evaluated KL deviations, none exceeded the value of \(10^{-5}\), thus proving that, even when multiple output states were expected, the obtained results were remarkably similar to the ones of the original circuits. Moreover, the toolchain proved capable of efficiently optimizing the single-qubit gates in most circuits. Some potentially powerful tools, like the Eulercombo optimization, proved to be very situational, while other processes, such as the IBM’s U gates merging scheme, proved to be competitive even when compared to the results of IBM’s own software platform. The toolchain also proved capable of handling two-qubit gates quite well, introducing optimizations capable of occasionally outperforming Qiskit and, most importantly, of competing in some cases with \( \left. {{\mathbf {t|ket}}} \right\rangle \), whose strong point is precisely its proficiency in reducing the number of multi-qubit gates involved in the circuit. This went against the expectation that the template-based approach would be unsuited to efficiently managing clusters of CX gates, as the templates that involve them are not numerous and as other methods, such as the heuristic-based ones, seem theoretically more prone to detect advantageous circuit remodelings. As expected, the number of single-qubit gates dramatically increases across the tables for some technologies, since each decomposition of two-qubit gates introduces several single-qubit gates in the circuit and can bring a lot of newly inserted gates in large-sized circuits.

The specific results obtained for each technology are commented in the following:

-

NMR technology. Of all the supported technologies, the circuits generated with NMR as target technology proved to be the ones in which the toolchain’s optimization is least competitive. As shown in Table 2, when compared to Qiskit, the toolchain performs quite well, in particular with larger circuits, since it can involve fewer physical quantum gates (both single-qubit and two-qubit). Moreover, in most of the examined circuits, the advantage in the management of single-qubit gates implies a lower weighted latency. On the other hand, \( \left. {{\mathbf {t|ket}}} \right\rangle \) obtains better performance than the other compilers. In fact, considering both optimization levels, it always finds the solution with the lowest number of two-qubit gates and in most of the cases it achieves the best results in managing single-qubit gates, in terms of both the total number of non-\(R_Z\) gates and circuit latency. However, for what concerns the circuit cost, \( \left. {\mathrm{{t|ket}}} \right\rangle \) is sometimes beaten by Qiskit and by the toolchain, especially with medium-large circuits, because of a generally higher circuit depth. In any case, even though the circuit depth and cost are sometimes the lowest, the benchmarks showed that the currently implemented toolchain does not outperform the other state-of-the-art compilers.

- Trapped ions technology. The benchmarks showed that the trapped ions technology is the one most successfully managed by the toolchain. As shown in Table 3, even though the total number of two-qubit gates is not the lowest among all compilation strategies, the toolchain often achieves a consistent reduction in the number of all single-qubit gates and of the non-\(R_Z\) ones, especially in medium-large circuits (lower half of the table). Moreover, the obtained equivalent circuits are generally characterized by low circuit depths, which yield circuit costs that are the lowest or competitive with those obtained with other compilation strategies. Qiskit is capable of holding its ground quite well, while the same cannot be said for \( \left. {{\mathbf{t|ket}}} \right\rangle \), which produces less efficient implementations, except for small quantum circuits; even though it manages two-qubit gates well, it is usually unable to substantially reduce single-qubit gates. Moreover, it also employs non-\(R_Z\) gates, with a very high associated weighted latency. The results obtained by the toolchain are particularly satisfying in the context of this technology, all the more so considering that chains of trapped ions are intrinsically fully connected. This means that the toolchain, even in this prototype form, is already capable of efficiently handling most scenarios involving quantum circuits implemented with trapped ions.

- Superconducting technology. The toolchain shows quite good performance with this target technology. As shown in Table 4, \( \left. {{\mathbf {t|ket}}} \right\rangle \) is usually able to minimize the total number of two-qubit gates, while managing the single-qubit U gates at the same time. Moreover, the maximum optimization level does not always yield better results, since in some cases the lower optimization level generates an equivalent circuit with fewer two-qubit gates or a lower circuit latency. Qiskit handles IBM’s gate set really well in terms of the number of single-qubit gates, but it is often beaten by \( \left. {\mathrm{{t|ket}}} \right\rangle \) in CX optimization. In most cases the toolchain achieves weighted latencies that are competitive with those of both other compilers. Much the same can be said for circuit depth, which implies quite good costs, to the point that in two cases (ADDER_LARGE and SYM10) the cost is the lowest. Generally speaking, the advantages over the results of the other compilers are not very strong, but the overall performance can be considered satisfactory, given the current state of the presented work. However, it has to be noted that, for this specific technology, the critical layout mapping phase was not taken into consideration, and that the smart management of SWAP gates to adapt circuits to target devices is one of Qiskit’s and \( \left. {\mathrm{{t|ket}}} \right\rangle \)’s strong points: with such a feature implemented, the toolchain might prove less effective than Qiskit, \( \left. {\mathrm{{t|ket}}} \right\rangle \) or both.

As for the differences between the toolchain compilations using different sets of parameters, in the NMR and superconducting technologies the circuits obtained with the high precision set proved to be slightly more optimized, although the differences between sets of parameters were minimal. In the trapped ions case, a trade-off emerged: in most circuits, the higher the precision used for the parameters, the stronger the optimization of single-qubit gates, but also the worse the weighted latency. The computation time also proved to be a critical parameter. In the superconducting case, the compilation time of the toolchain was non-negligible for large circuits, but not excessively long (even lower than the corresponding one for \( \left. {\mathrm{{t|ket}}} \right\rangle \) with the highest optimization level). Combining the running times of all the steps, the toolchain usually takes longer than both Qiskit and \( \left. {\mathrm{{t|ket}}} \right\rangle \), but this difference is negligible for small to medium-size circuits and still acceptable for large ones. In the NMR and trapped ions cases, the compilation time of the toolchain was in general significant, and prohibitive when dealing with large circuits. This is probably due to the use of the Eulercombo mechanism on circuits whose number of gates increased dramatically after the decomposition of CX and CZ gates.

When considering the overall running time of the toolchain, it is clear that the template-based approach can be fast enough for small to medium-size circuits, but it is also far slower than heuristic methods when dealing with very large circuits. This is mostly due to the issue of gimbal locks [60] in the Eulercombo process, which drastically slow down the compilation, especially when using highly precise approximations of \(\pi \).
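To illustrate why gimbal locks are troublesome when single-qubit gates are merged into Euler rotations, the sketch below extracts ZYZ Euler angles from a \(2\times 2\) unitary and flags the degenerate case in which the middle angle is numerically 0 or \(\pi \), so that only the sum or the difference of the two outer angles is defined. It is a generic textbook-style decomposition, written under the assumption \(U = e^{i\alpha } R_Z(\phi ) R_Y(\theta ) R_Z(\lambda )\); it is not the Eulercombo implementation, and the function name, return convention and tolerance are hypothetical.

```python
import cmath
import math


def zyz_angles(u, tol=1e-9):
    """Return (theta, phi, lam, locked) for a 2x2 unitary u, up to a global phase.

    Assumes u = exp(i*alpha) * Rz(phi) @ Ry(theta) @ Rz(lam).
    locked is True in the gimbal-lock case, where phi and lam are not
    individually defined and lam is arbitrarily set to 0.
    """
    u00, u01, u10, u11 = (complex(x) for row in u for x in row)
    theta = 2.0 * math.atan2(abs(u10), abs(u00))
    if abs(u10) < tol:
        # theta ~ 0: only phi + lam is defined
        return theta, cmath.phase(u11 * u00.conjugate()), 0.0, True
    if abs(u00) < tol:
        # theta ~ pi: only phi - lam is defined
        return theta, cmath.phase(-u10 * u01.conjugate()), 0.0, True
    phi = cmath.phase(u10 * u00.conjugate())
    lam = cmath.phase(u11 * u10.conjugate())
    return theta, phi, lam, False


s = 1.0 / math.sqrt(2.0)
hadamard = ((s, s), (s, -s))  # generic case
rz = ((cmath.exp(-0.15j), 0.0), (0.0, cmath.exp(0.15j)))  # Rz(0.3), degenerate case

theta_h, phi_h, lam_h, locked_h = zyz_angles(hadamard)
theta_z, phi_z, lam_z, locked_z = zyz_angles(rz)
```

In the degenerate case, the choice of setting the last angle to zero is arbitrary. How close to 0 or \(\pi \) the middle angle must be before it counts as degenerate depends on the tolerance, which is one plausible reason why the numerical precision of the angles affects the procedure.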

4.5.1 Summary of the toolchain’s most notable highlights

A brief summary is reported here of the benchmark cases in which the toolchain’s performance stood out, compared with the other state-of-the-art compilers, in terms of number of non-\(R_Z\) quantum gates, weighted latency, circuit depth and circuit cost. Compilation times and scalability issues were not considered in the following highlights.

- In NMR technology, the toolchain achieves the lowest circuit cost in the URF5 and SYM10 cases, because it has the lowest circuit depth. However, it cannot be said to perform generally better than the other two compilers, especially \( \left. {\mathrm{{t|ket}}} \right\rangle \), because of the latter’s good management of quantum gates with this technology. On the other hand, when compared solely to Qiskit, the toolchain produced circuits with a total number of single-qubit gates and a weighted latency equal to or lower than Qiskit’s in all the tested cases, except for DNN, ISING and EDGE_DETECT.

- In trapped ions technology, the proposed toolchain yielded the overall best results, in terms of both single-qubit latency and cost, for ISING, ADDER_LARGE, SYM10, SHOR, SYS6 and URF5. In the first two cases, the compiled circuits have the lowest number of non-\(R_Z\) single-qubit gates and of two-qubit gates and the lowest circuit depth, so the overall performance can be considered the best. In the other cases, the cost function is minimized thanks to a lower circuit depth, number of non-\(R_Z\) single-qubit gates and weighted latency, which compensate for a worse management of two-qubit gates. ADDER_SMALL has the lowest single-qubit weighted latency, but the circuit cost is slightly higher than the one obtained by Qiskit with maximum optimization level, even though the total number of two-qubit gates is equal in both cases. This is explained by the slightly higher circuit depth (21 instead of 20). Finally, EDGE_DETECT has the lowest circuit cost, given by the lowest circuit depth (45665, with the second lowest equal to 46874), together with the lowest total number of single-qubit gates (31971). On the other hand, the solution provided by the toolchain has a higher non-\(R_Z\) gate count (12687, instead of 11846) and a higher single-qubit-gate weighted latency (29216, instead of 24409) than Qiskit with maximum optimization level. In general, the obtained results can be considered competitive with those provided by the other compilers.

- In superconducting technology, the toolchain achieved the minimum cost among all the considered compilation strategies with the ADDER_LARGE and SYM10 circuits, thanks to its lowest circuit depth. In the ADDER_SMALL case, it performed on par with all the other compilers, especially with Qiskit. Finally, QPE, EDGE_DETECT and SYS6 can be mentioned: they show the best weighted latency obtained, but they do not achieve the minimum cost because of a higher circuit depth and a number of two-qubit gates equal to or greater than those of the Qiskit and \( \left. {\mathrm{{t|ket}}} \right\rangle \) compilers with maximum optimization level.

5 Conclusions and future perspectives

The obtained results show that the toolchain and its core philosophy can be considered competitive with the state of the art in the compilation of quantum circuits targeting specific technologies. The designed optimization process can introduce fine-grained, technology-dependent optimizations that allow the toolchain to compete with well-established compilers in some cases, especially when dealing with trapped ions. In general, the toolchain is capable of steadily reducing the number of single-qubit gates and of prioritizing an abundant use of advantageous, virtual \(R_Z\) gates, thus obtaining in some circumstances circuits with low depth and cost. However, it is important to note that the toolchain’s potential advantages would be offset by compilation times that are usually very long. The largest circuits used in the benchmarks are probably too large for current quantum computers to execute: they contain tens of thousands of gates, a number that increases dramatically once the gates are decomposed. The obtained results thus expose a limitation of some of the proposed optimizations, with compilations requiring up to several hours to complete. As reported throughout this paper, the toolchain is, however, still a prototype. Its structure could allow it to support even more quantum technologies, enhancing its already good versatility.

Regarding future improvements, a first step would be to extend the currently supported selection of quantum technologies. For instance, spin-based technologies are characterized by sets of native single-qubit and two-qubit gates similar to those of the NMR technology. Therefore, the routines and functions developed to optimize the compilation for NMR backends are expected to be easily adaptable to other spin-based technologies, such as quantum dots in semiconductors [61]. Moreover, the native gate sets of the technologies already available could be updated and extended. This is, in particular, the case for superconducting qubits, where \(R_{ZX}\), controlled phase (cu1, according to OpenQASM 2.0 syntax) and iSWAP could be taken into account in compilation, making it possible on the one hand to operate at a gate level closer to the physical one, and on the other to employ the toolchain with backends from providers other than IBM. Clearly, considering new native two-qubit gates for superconducting qubits could also require the identification of new templates associated with these gates, to be integrated into the toolchain.

The most relevant part currently missing from the toolchain is the capability of handling the whole layout synthesis (placement and routing) process for non-fully connected technologies, such as the superconducting one, and of optimizing the strengths of couplings for fully connected technologies, such as the NMR one. This feature would require implementing a tool capable of adapting the compilation to a given device’s layout and logically mapping each qubit line with a smart insertion of SWAP gates. In this regard, it should be noted that the compilation toolchain discussed in this article belongs to a broader project currently under development. The latter will include two parts. The first is the compilation part proposed in this manuscript, dedicated to compiling the input quantum circuit by reducing the quantum gates as much as possible, regardless of the error rate of each gate. The second is a placement and routing part, still missing at the time of writing, which aims to implement a hardware-driven, noise-and-fidelity-aware mapping of logical qubits to physical qubits, minimizing the execution time and maximizing the fidelity.

Moreover, to improve the compilation, further steps could be implemented in the toolchain, existing steps could be modified to accommodate new features, and currently implemented functions could be moved within the workflow to improve the overall process.