Introduction

Detecting and monitoring crop stress is crucial for ensuring sufficient and sustainable crop production. Recent advances in unoccupied aerial vehicle (UAV) technology offer a promising approach to mapping key crop traits indicative of stress. While single optical sensors mounted on UAVs may be sufficient to monitor crop status in a general sense, multiple sensors covering different optical spectral domains allow a more precise characterization of the interactions between crops and biotic or abiotic stressors. Given the novelty of synergistic sensor technology for crop stress detection, standardized procedures outlining its optimal use are currently lacking.

Materials and methods

This study explores the key aspects of acquiring high-quality multi-sensor data, including the importance of mission planning, sensor characteristics, and ancillary data. It also details essential data pre-processing steps like atmospheric correction and highlights best practices for data fusion and quality control.

Results

Successful multi-sensor data acquisition depends on optimal timing, appropriate sensor calibration and the use of ancillary data such as ground control points and weather station information. Data from different sensors should be fused at the level of physical units, with quality flags used to exclude unstable or biased measurements. The paper highlights the importance of using checklists, considering illumination conditions and conducting test flights to detect potential pitfalls.

Conclusion

Multi-sensor campaigns require careful planning so as not to jeopardise their success. This paper provides practical information on how to combine different UAV-mounted optical sensors and discusses proven scientific practices for image data acquisition and post-processing in the context of crop stress monitoring.

Introduction

Climate change is expected to increase crop abiotic (heat, drought, etc.) and biotic (pests, diseases, etc.) stresses, putting crop productivity at risk (Bevacqua et al., 2021; Fahad et al., 2017). Therefore, detecting and identifying crop stress prior to irreversible damage is essential to support management decisions and minimize stress impacts on crop growth and yield. While well-planned studies and experiments are essential for detecting and identifying crop stress, these endeavors are not exempt from technical challenges.

Optical remote sensing (RS) is an established method for detecting the impact of different stressors on agricultural crops (Berger et al., 2022). Several optical sensors based on imaging spectroscopy, fluorescence spectroscopy and thermal imaging have been developed and are used on unoccupied aerial vehicles (UAVs) and in airborne science campaigns (Adão et al., 2017; Bandopadhyay et al., 2020; Messina & Modica, 2020). Over the past decade, combinations of different spectral domains have been used in agriculture to estimate plant traits and productivity (Damm et al., 2022; Weiss et al., 2020). These spectral domains include the visible (VIS; 400–700 nm) and near-infrared (NIR; 700–1300 nm) regions, both of which are also used for sun-induced fluorescence (SIF) retrieval, as well as the shortwave infrared (SWIR; 1300–2500 nm) and thermal infrared (TIR; 8–14 µm).

The spectral signature of vegetation in the optical region is primarily determined by the plant’s biochemical and structural properties, which are related to vegetative growth and canopy development (Jacquemoud, 2000). Consequently, various plant traits are often estimated from empirical relations with different spectral bands (Kaplan & Rozenstein, 2021) or retrieved with radiative transfer models (RTMs). Another promising approach for monitoring vegetation dynamics is to combine RTMs with plant physiological models (Gastellu-Etchegorry et al., 2015; Pacheco-Labrador et al., 2021; Van der Tol et al., 2009; Yang et al., 2021). The assimilation of multi-sensor data from complementary spectral domains into crop models has the potential to facilitate decision-making by identifying specific stressors and even predicting stress before it occurs (Berger et al., 2022).

While single optical sensors may suffice to monitor crop status, integrating multi-sensor data acquired from airborne/UAV platforms is more powerful for understanding plant responses to different biotic or abiotic stressors (Aasen et al., 2018; Gerhards et al., 2019; Mohammed et al., 2019; Panigada et al., 2014). For example, combining multispectral (MS; discrete spectral bands of a predetermined wavelength range) or hyperspectral (HS; continuous spectral coverage across a spectral domain) sensors with TIR sensors provides broad spectral coverage for detecting and quantifying crop stress at an early stage. Leaf or canopy temperature measurements can reveal changes in plant traits such as stomatal conductance within minutes to hours, providing an early (and pre-visual) indication of plant responses to stress (Gräf et al., 2021) (Fig. 1). Additionally, VIS-NIR information enables the analysis of stress responses related to pigment contents, such as chlorophylls, carotenoids (Gitelson & Merzlyak, 1998) and anthocyanins (Gitelson et al., 2006), which are critical for photosynthesis and net primary productivity. In this context, combining different spectral resolutions and sensor types may complement the combination of spectral domains to increase spectral coverage.

The synergistic use of sensors from different spectral domains also improves the capacity to observe early to long-term crop responses to external stressors that affect growth and productivity (Raddi et al., 2022) (Fig. 1). The integrated use of MS VIS-NIR together with a broadband SWIR sensor or an NIR+SWIR multi-camera array has been shown to be an efficient way to obtain information on crop leaf water status or nitrogen concentration (Jenal et al., 2021; Kandylakis et al., 2020). The VIS-NIR+SWIR synergy is particularly useful for detecting medium-term but also long-term stress responses (Fig. 1). The simultaneous collection of HS and TIR images in synergy with SIF data provides valuable insights into the response of SIF to crop stress (Camino et al., 2019; Gerhards et al., 2019). Additionally, RGB, MS, HS and TIR image data can be used to derive biophysical traits and to assist in the quantification and interpretation of plant physiological changes reflected in SIF measurements (Bendig et al., 2020; Siegmann et al., 2021; Wang et al., 2023b; Yang et al., 2020; Zeng et al., 2021).

Fig. 1 Optical sensor synergies for stress detection: different sensor combinations are useful for estimating different plant traits depending on the time elapsed since the plants started experiencing stress. Faded colors indicate that a plant trait can still be measured but is less relevant than earlier. ETR electron transport rate, ALIA average leaf inclination angle, Cab leaf chlorophyll a and b content, Car leaf carotenoids content, LWC leaf water content, LAI leaf area index, RGB red, green, blue, VIS visible, NIR near infrared, MS multispectral, HS hyperspectral, SWIR shortwave infrared, SIF sun-induced fluorescence

Currently, few studies are available that make synergistic use of UAV-mounted sensors covering different spectral domains and from which guidelines or best-practice protocols could be derived. One exception is the use of MS or HS in combination with TIR for assessing crop water status and estimating crop evapotranspiration (ET). This approach, which has a history in airborne science research (Bastiaanssen et al., 1998), has been successfully adapted to UAV VIS-NIR and TIR sensors (Bellvert et al., 2021; Nieto et al., 2019). By leveraging the higher spatial resolution of UAV sensors, researchers have improved the accuracy of ET estimates by extracting separate surface temperatures for soil and crops. In another example, Zarco-Tejada et al. (2018) combined high-resolution (40 cm) HS and TIR images with radiative transfer modelling to detect traits related to Xylella fastidiosa (XF) in olive trees, achieving over 80 percent detection accuracy. Although the methods sections of these studies describe various aspects, including leaf physiological measurements, image data collection and processing, RTM inversion methods and statistical analysis, detailed protocols and guidelines for combining multiple sensors from different spectral domains are scarce. An integrated guideline is therefore deemed beneficial for less experienced researchers conducting similar experiments.

The guidelines that we present here are based on the collective expertise of a diverse group of remote sensing specialists, represented in the working group (WG3) of the COST Action SENSECO CA17134. They are a result of years of experience and professional collaboration within our group. Our aim is to enhance the synergistic use of sensors by offering recommendations and advice in situations where single-sensor protocols prove to be insufficient. In addition to best practice recommendations, we have gathered bad practice examples from our group’s experiences (see "Lessons learnt/bad examples" section), to help users avoid repeating them. However, guidelines, by their nature, are suggestive, not compulsory; they are intended to help practitioners follow some rules while allowing for flexibility and common sense in different scenarios.

The paper is structured as follows: data acquisition ("Pre-considerations on synergistic sensor use" section), data analysis ("Data analysis" section) and lessons learnt/bad examples ("Lessons learnt/bad examples" section). In the "Lessons learnt/bad examples" section, we discuss selected examples that illustrate the loss of data or deterioration in data quality that can occur if certain preparations, installations or rules are not followed.

Pre-considerations on synergistic sensor use

Acquiring image data with multiple sensors poses challenges that do not arise, or are handled differently, when only a single optical sensor is used. Without adequate preparation, users may face difficulties that cannot easily be resolved on site, jeopardising the field campaign or research project. This section outlines pre-considerations for data acquisition with multiple sensors and briefly reviews important aspects of the process. These recommendations are summarized in Fig. 2 and Table 1.

Mission planning

Mission planning for any airborne science campaign depends on factors such as atmospheric conditions, illumination conditions and the optimal time of day, and must take into account detailed sensor properties, the targeted spatial resolution and the associated ground components of the field campaign. The first step in planning any UAV or airborne campaign should be a comprehensive assessment of atmospheric conditions. While the suitability of flight conditions is of paramount importance, several factors that affect flight, such as wind, rain, clouds, humidity and air temperature, can also significantly influence crop conditions at the time of measurement. Conditions that are acceptable for UAV flight are often not adequate for image data acquisition; weather conditions for both flight and data capture should be conceptualized as a range from optimal to adequate. Suboptimal conditions may require additional calibration measures, whereas in other cases the risk of gathering unsalvageable data is not worth taking. Furthermore, when acquiring images from aerial platforms with multiple sensors, mission planning becomes crucial and requires careful consideration of the diverse sensor specifications. While recent reviews have addressed this issue (Tu et al., 2020), current mission planning applications do not support multi-sensor campaigns. Most UAV manufacturers provide flight planning applications that consider only basic camera characteristics such as field of view, resolution and pixel size. Other aspects, such as sensor stabilisation and illumination conditions, require extra attention, particularly in windy conditions. This section briefly reviews the mission planning aspects that need to be taken into account when conducting multi-sensor campaigns.
Finally, it is strongly recommended to conduct a test flight with all sensors and sensor combinations before the experimental flights, in order to check aeronautical stability and flight times and to identify any unexpected issues.

Optimal timing for data acquisition (#1 in Fig. 2). The best time for UAV data acquisition depends on the nature of the trait of interest as well as on the technical demands of the optical sensors. For MS, HS, SIF or TIR sensors, it is generally recommended to fly under clear skies and around solar noon to minimize the effects of the bidirectional reflectance distribution function (BRDF) and of changes in irradiance (or temperature). Near solar noon, not only are BRDF effects smaller, but the solar angles also change more slowly, which reduces changes in light conditions between the start and the end of a flight. As crops often experience diurnal temperature cycles, it is important to consider the exact time of day when assessing the level of stress (Fig. 4). However, image data targeting structural traits (Bendig et al., 2015) (i.e., 3D point clouds built using structure-from-motion (SfM) workflows) or texture-based object identification (Fernandez-Gallego et al., 2020) (e.g., counting yield components or other crop organs) may be collected under overcast skies to avoid shadows. Additionally, dynamic traits, for example photosynthetic parameters such as stomatal conductance or SIF, may require repeated sampling on the same day to determine diurnal patterns (Siegmann et al., 2021). Since some sensors, such as TIR, are influenced by shadows, image acquisition needs to account for shadowing, which changes over the course of the day and the season. For the TIR signal, wind conditions may have a higher priority than a clear sky. For all sensors, it is important to be aware that solar azimuth and elevation change during the season, and protocols need to be adapted accordingly. The timing of image acquisition can be crucial for measuring certain phenomena. For instance, for crop water stress detection, the typical recommendation is to acquire TIR data in the early afternoon, around solar noon (Fig. 3).
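
To translate "around solar noon" into clock times for a given site and date, the short Python sketch below uses common textbook approximations for the equation of time and the solar declination (accurate to roughly a minute and a fraction of a degree, respectively). The function names and the ±1 h window width are illustrative assumptions rather than values prescribed by these guidelines; a dedicated solar position library is preferable for precise work.

```python
import math


def equation_of_time_min(day_of_year: int) -> float:
    """Approximate equation of time (minutes); textbook fit, about 1 min accuracy."""
    b = math.radians(360.0 / 365.0 * (day_of_year - 81))
    return 9.87 * math.sin(2 * b) - 7.53 * math.cos(b) - 1.5 * math.sin(b)


def solar_noon_utc_h(longitude_deg: float, day_of_year: int) -> float:
    """Approximate solar noon in decimal hours UTC (longitude positive east)."""
    return 12.0 - longitude_deg / 15.0 - equation_of_time_min(day_of_year) / 60.0


def noon_solar_elevation_deg(latitude_deg: float, day_of_year: int) -> float:
    """Approximate solar elevation at solar noon (Cooper's declination formula)."""
    declination = 23.44 * math.sin(math.radians(360.0 / 365.0 * (284 + day_of_year)))
    return 90.0 - abs(latitude_deg - declination)


def flight_window_utc_h(longitude_deg: float, day_of_year: int, half_width_h: float = 1.0):
    """Hypothetical acquisition window centred on solar noon."""
    noon = solar_noon_utc_h(longitude_deg, day_of_year)
    return noon - half_width_h, noon + half_width_h


# Hamerstorf, Germany (Lat 52.91°, Long 10.46°), DOY 181.
start, end = flight_window_utc_h(10.46, 181)
print(f"solar noon {solar_noon_utc_h(10.46, 181):.2f} h UTC, window {start:.2f}-{end:.2f} h UTC")
print(f"noon solar elevation {noon_solar_elevation_deg(52.91, 181):.1f} deg")
```

For the Hamerstorf site on DOY 181, the sketch places solar noon at roughly 11:21 UTC (about 13:21 local summer time), which is why "solar noon" and "12:00 local time" should not be used interchangeably in flight protocols.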

If not all sensors fit on one platform, they need to be flown separately, which requires defining an acceptable time gap between flights and prioritizing among optimal flight times. In such cases, the order of flights is vital, as certain sensors favor specific environmental conditions. For example, photosynthesis-related traits obtained from SIF sensors are time-critical and can change within minutes, as can TIR information used for crop evapotranspiration estimates. In contrast, plant pigment content and biomass are less time-critical than flux-related traits but depend critically on stable, clear-sky illumination. In general, flux-related traits such as photosynthesis rates derived from SIF or evapotranspiration derived from TIR are highly variable in time and should be prioritized over data collection on plant pigments and/or biomass. Flights for the latter still require stable illumination conditions (i.e., clear sky) but, depending on the phenological stage and stress intensity, can also be postponed for a few days.
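
The prioritization logic above can be expressed as a small scheduling helper. This is a hypothetical sketch: the `PlannedFlight` fields, the fixed turnaround time between flights and the maximum acceptable gap are assumptions for illustration. It simply flies time-critical (flux-related) sensors first and flags flights that would start too long after the first take-off.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PlannedFlight:
    name: str
    time_critical: bool   # True for flux-related traits (e.g. SIF, TIR-based ET)
    duration_min: float   # including take-off, warm-up and landing


def schedule(flights, first_takeoff: datetime, turnaround_min: float = 10.0):
    """Return (name, start time) pairs with time-critical flights first.

    sorted() is stable, so the user's order is kept within each priority class.
    """
    ordered = sorted(flights, key=lambda f: not f.time_critical)
    plan, start = [], first_takeoff
    for flight in ordered:
        plan.append((flight.name, start))
        start += timedelta(minutes=flight.duration_min + turnaround_min)
    return plan


def beyond_max_gap(plan, max_gap_min: float):
    """Names of flights starting later than `max_gap_min` after the first take-off."""
    first = plan[0][1]
    return [name for name, start in plan
            if start - first > timedelta(minutes=max_gap_min)]


flights = [
    PlannedFlight("HS pigments", time_critical=False, duration_min=25),
    PlannedFlight("TIR evapotranspiration", time_critical=True, duration_min=20),
    PlannedFlight("SIF", time_critical=True, duration_min=30),
]
plan = schedule(flights, datetime(2019, 7, 1, 11, 0))
for name, start in plan:
    print(f"{start:%H:%M}  {name}")
print("Outside acceptable gap:", beyond_max_gap(plan, max_gap_min=45))
```

A flagged pigment or biomass flight is not necessarily a problem: as noted above, such flights can often be moved to another clear-sky day.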

Ground sampling distance (GSD; #2 in Fig. 2). GSD is a function of flight altitude, focal length, sensor resolution and pixel size, so it differs between sensors as well as between flights. In some cases, adjustments in focal length can offset minor differences in sensor resolution; in others, the differences will be too large and require separate flights. Therefore, users targeting a specific GSD in a multi-sensor campaign should consider all of the above attributes when calculating GSD, most importantly flight altitude. HS, MS and TIR sensors generally have different spatial resolutions (Fig. 5) as well as different flight acquisition requirements, so if plant-level information is needed at a specific spatial resolution, the flight altitude must be set according to the sensor with the lowest resolution. This may lead to long flight times, which can be limited by UAV battery capacity. If multiple flights are planned, the number of available batteries and the charging options in the field (including cables and multi-plugs) must also be taken into account in flight planning. Variations in terrain elevation across the study area also affect GSD. If these variations are large, e.g., more than 10 m, the flight altitude needs to be adjusted accordingly; in such cases, the terrain-following option of the flight planning software should be used to ensure a consistent GSD.
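
For nadir-looking frame cameras, GSD follows from the pinhole relation GSD = H × p / f (H: flight height above ground, p: pixel pitch, f: focal length). The sketch below uses this relation to find the highest altitude at which every sensor in a payload still reaches a target GSD. The pixel pitches and focal lengths in the example are hypothetical values chosen for illustration, not specifications of particular cameras.

```python
def gsd_m(altitude_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Ground sampling distance of a nadir-looking frame camera (pinhole model)."""
    return altitude_m * pixel_pitch_m / focal_length_m


def altitude_for_gsd_m(target_gsd_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Flight height above ground that yields the target GSD for one sensor."""
    return target_gsd_m * focal_length_m / pixel_pitch_m


def common_altitude_m(target_gsd_m: float, sensors: dict) -> float:
    """Highest altitude at which all sensors reach the target GSD or finer.

    `sensors` maps a name to (pixel_pitch_m, focal_length_m); the coarsest
    sensor (largest p / f) limits the altitude.
    """
    return min(altitude_for_gsd_m(target_gsd_m, p, f) for p, f in sensors.values())


# Hypothetical payload: values are illustrative only.
payload = {
    "TIR": (17e-6, 13e-3),
    "MS": (3.75e-6, 5.5e-3),
}
altitude = common_altitude_m(0.05, payload)  # target GSD of 5 cm
for name, (p, f) in payload.items():
    print(f"{name}: GSD {gsd_m(altitude, p, f) * 100:.1f} cm at {altitude:.1f} m")
```

Because GSD scales linearly with height above ground, a 10 m rise in terrain under a flight at about 40 m reduces the GSD by roughly a quarter, which illustrates why terrain following matters at low altitudes.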

Image overlap and flight patterns. The choice of image overlap depends on many factors, including but not limited to the type of targeted crop traits (morphological, physiological or biochemical), the complexity of the terrain (homogeneous or heterogeneous), UAV battery capacity, sensor type and resolution, and data storage capacity (Eskandari et al., 2020). When flying frame (camera array) imaging sensors over homogeneous agricultural fields with few distinguishing features, a high lateral (between flight lines) and longitudinal (along a flight line) overlap of >70% helps ensure correct stitching of the images during mosaicking. It is also important to optimize the placement of the different sensors on the UAV to ensure a high degree of overlap between their images. During a multi-sensor mission, image overlap has to be computed for the sensor with the narrowest field of view, so that all sensors achieve at least the planned overlap over the same area. Planning flight patterns with sufficient overlap, so that every section of the ground is imaged close to the nadir direction, helps mitigate BRDF effects. Many SfM software packages help minimize BRDF effects by using the full overlapping regions to align the images (Aasen & Bolten, 2018) but then using only the central, most nadir portion of each image for the final mosaic. High image overlap (>70%) substantially increases the generated data volume and flight duration. Since the timing and duration of acquisition are critical for certain sensors, the overlap settings and flight altitude should be reviewed (e.g., lowering the lateral overlap towards the recommended minimum of 60% and increasing flight altitude) and sensors should be prioritised during a multi-sensor campaign. For more details refer to Eskandari et al. (2020).
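
The rule of computing overlap for the sensor with the narrowest field of view can be sketched as follows, assuming nadir-looking frame cameras over flat terrain. The field-of-view values and flight speed in the example are hypothetical; pushbroom HS sensors, which have no along-track frame footprint, would need a different calculation.

```python
import math


def footprint_m(altitude_m: float, fov_deg: float) -> float:
    """Ground footprint of one image dimension for a nadir frame camera over flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def line_spacing_m(altitude_m: float, cross_track_fovs_deg, side_overlap: float) -> float:
    """Distance between flight lines so that the narrowest sensor still gets `side_overlap`."""
    narrowest = min(cross_track_fovs_deg)
    return footprint_m(altitude_m, narrowest) * (1.0 - side_overlap)


def trigger_interval_s(altitude_m: float, along_track_fovs_deg, front_overlap: float,
                       speed_m_s: float) -> float:
    """Time between exposures so that the narrowest sensor still gets `front_overlap`."""
    narrowest = min(along_track_fovs_deg)
    return footprint_m(altitude_m, narrowest) * (1.0 - front_overlap) / speed_m_s


# Hypothetical payload flown at 50 m and 5 m/s: an MS camera (cross/along FOV 48°/37°)
# and a TIR camera (45°/37°).
spacing = line_spacing_m(50.0, [48.0, 45.0], side_overlap=0.75)
interval = trigger_interval_s(50.0, [37.0, 37.0], front_overlap=0.80, speed_m_s=5.0)
print(f"line spacing {spacing:.1f} m, trigger every {interval:.2f} s")
```

In practice, the computed trigger interval must also not be shorter than the slowest camera's minimum capture interval; if it is, flight speed has to be reduced.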

Sensor stabilisation (#2 in Fig. 2). Specific instruments, such as uncooled TIR cameras, require thermal stabilisation after take-off to minimize the temperature drift that occurs as the camera temperature changes over time. Warming up on the ground, using an external heated shutter (Virtue et al., 2021), or hovering or loitering for 5–10 minutes can minimise these effects. However, this extra flight time has to be considered, as it reduces the time available for the actual mission (Kelly et al., 2019). Field spectrometer systems for SIF retrieval theoretically require less warming-up in the field; however, during laboratory calibration, a warm-up time of around 15–20 minutes has often yielded the most stable results. We therefore recommend powering up the instrument approximately 20 minutes prior to critical measurements. When fusing information across different sensors, biases due to the drift of individual sensors should be minimized by giving each sensor sufficient time to stabilize at the ambient air temperature. The stabilization time may vary depending on sensor type and environmental conditions. Thermal expansion or contraction of lens materials and mechanical components can alter the physical distance between the lens elements, thereby affecting the focal length. Temperature variations can also affect the properties of electronic components within the camera, such as the sensor, circuit boards and calibration materials. These effects degrade imaging performance and accuracy and need to be taken into account.
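
One way to decide when a sensor has stabilised is to log its internal temperature (or the signal of a stable reference) after power-up and wait until the rate of change stays below a tolerance for several consecutive samples. The sketch below assumes such a log is available; the 0.1 K/min tolerance and the three-sample window are hypothetical values that would need to be set per sensor, and the warm-up curve in the example is synthetic rather than measured.

```python
import numpy as np


def stabilisation_time(t_min, temp_c, max_rate_k_per_min=0.1, window=3):
    """Return the first time (min) from which the absolute rate of change stays
    below `max_rate_k_per_min` for `window` consecutive sample intervals, or None."""
    t = np.asarray(t_min, dtype=float)
    y = np.asarray(temp_c, dtype=float)
    rate = np.abs(np.diff(y) / np.diff(t))  # K per minute between consecutive samples
    stable = rate < max_rate_k_per_min
    for i in range(len(stable) - window + 1):
        if stable[i:i + window].all():
            return float(t[i])
    return None


# Synthetic warm-up curve (not measured data): 1-min samples approaching 30 °C.
t = np.arange(0, 40)
temp = 30.0 - 10.0 * np.exp(-t / 5.0)
print(stabilisation_time(t, temp))
```

Requiring several consecutive stable intervals, rather than a single one, makes the check less sensitive to noise in the temperature log.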

Fig. 3 Daytime change of the Pearson correlation coefficient (r) and of the slope and intercept of the linear model between the Crop Water Stress Index and soil moisture in Hamerstorf, Germany (Lat: 52.91°, Long: 10.46°). The data stem from ground measurements of canopy temperature and soil moisture over an experimental potato field in 2019 during periods of water stress (DOY 158–160, 181–185, 208–210 and 224–225; 13 days in total; n = 39 for the statistical measures). In the early to mid-afternoon (12–15 h), the correlation is strongest and the linear model parameters reach relatively stable values, indicating a robust relation between the thermal infrared measurements and soil water availability. This illustrates the typical recommendation to acquire TIR measurements in the early afternoon for crop stress applications. Details on the computation of the CWSI can be found in Ekinzog et al. (2022) [Figure generated using data from the project “Sensor-based irrigation management in potatoes” funded by the program “European Innovation Partnership Productivity and Sustainability in Agriculture”, as described in Ekinzog et al. (2022)]

Relative uncertainties associated with diurnal measurements of SIF at 760 nm during the June 2019 HyPlant campaign. The study area (Lat: 50.6°, Long: 6.9°) covered summer and winter varieties of wheat and barley, as well as sugar beet and winter rapeseed. The relative uncertainty combines the uncertainty of the SIF retrieval with the uncertainties associated with data pre-processing and the sensor measurement itself. For a detailed description of the uncertainty calculation, please refer to the FLEXSense 2019 report (Rascher et al., 2022)

Ancillary data

Calibration targets (#3 in Fig. 2). Proper radiometric calibration with calibration targets is required for accurate crop trait mapping from imaging spectroscopy data. Different sensor types have specific requirements, and when multiple sensors are used, each of these must be met to acquire high-quality data. A standard approach for VIS-NIR sensors is the empirical line correction method (ELM) (Smith & Milton, 1999), for which it is recommended to use at least two gray targets with different reflectance factors and near-Lambertian surfaces (Chakhvashvili et al., 2021; Lucieer et al., 2014). Targets for VIS-NIR sensors should be placed on a non-vegetated surface, far enough apart to minimise adjacency effects, and should cover several pixels at the planned flight altitude. This is especially important in multi-sensor campaigns with varying spatial resolutions. For more detailed information on best practices for calibration targets refer to Aasen and Bolten (2018). TIR sensors require temperature calibration plates (TCPs) that span a large temperature range and have built-in insulation to avoid temperature fluctuations (Maes et al., 2017; Messina & Modica, 2020). Standard practice is to place contrasting TCPs at different locations in the field. TCP material should have high emissivity, be stable over time, have a uniform surface and be durable. Materials such as painted black and white polypropylene or aluminium plated panels have proven to satisfy these requirements (Kelly et al., 2019; Messina & Modica, 2020). As with VIS-NIR sensors, TCPs are used for radiometric calibration with the ELM.
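
A minimal sketch of the ELM, assuming at least two targets with known (e.g., laboratory-measured) reflectance factors and that the mean DN of pure target pixels has already been extracted for each band. The same linear fit applies to TIR data when TCP temperatures replace reflectance. The array layout and the synthetic gains and offsets in the example are assumptions for illustration.

```python
import numpy as np


def fit_empirical_line(target_dn, target_values):
    """Per-band least-squares fit value = gain * DN + offset.

    target_dn, target_values: arrays of shape (n_targets, n_bands) holding the mean
    DN of pure target pixels and the reference reflectance (or TCP temperature).
    """
    dn = np.asarray(target_dn, dtype=float)
    ref = np.asarray(target_values, dtype=float)
    if dn.shape != ref.shape or dn.shape[0] < 2:
        raise ValueError("need matching arrays with at least two targets")
    gains = np.empty(dn.shape[1])
    offsets = np.empty(dn.shape[1])
    for band in range(dn.shape[1]):
        gains[band], offsets[band] = np.polyfit(dn[:, band], ref[:, band], 1)
    return gains, offsets


def apply_empirical_line(image_dn, gains, offsets):
    """Apply per-band gains/offsets to an image of shape (rows, cols, n_bands)."""
    return np.asarray(image_dn, dtype=float) * gains + offsets


# Synthetic example: three gray targets, two bands with different gains and offsets.
reflectance = np.array([[0.05, 0.05], [0.20, 0.20], [0.50, 0.50]])
dn = reflectance * np.array([4000.0, 3000.0]) + np.array([100.0, 200.0])
gains, offsets = fit_empirical_line(dn, reflectance)
image = np.full((2, 2, 2), [2100.0, 1700.0])
print(apply_empirical_line(image, gains, offsets)[0, 0])
```

With more than two targets, the residuals of the fit offer a simple check of sensor linearity and of target contamination.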

Reference targets to verify SIF retrievals require mimicking the double peak emission of chlorophyll fluorescence, ideally against a spectral signature background that is close to that of vegetation. Active SIF reference targets with LED panels are recommended for signal intensity validation and correction, but they require a time-consuming setup and management (Burkart et al., 2015). Passive SIF reference targets based on a dye that has a similar spectral shape as chlorophyll fluorescence are easier to set up but they rapidly age under sunlight and require continuous monitoring (Rascher et al., 2022). Both types have spectral differences compared to SIF in natural vegetation. It is recommended to characterize both types of reference targets on the ground and continuously monitor them during the experiment to consider ambient influences.

Ground control points (GCPs; #4 in Fig. 2). The installation of GCPs is strongly recommended, even if the UAV is equipped with real-time kinematic (RTK) positioning. This is particularly important for wavelength ranges with less reflected or emitted energy, such as SWIR or TIR, where spatial contrasts are less sharp and targets may be hard to identify in the image. SIF sensors typically require the same GCP characteristics as other hyperspectral sensors. Aluminium panels are suitable GCPs, as they are clearly visible in the thermal domain as well as in all other spectral domains. In multi-sensor operations, GCP dimensions are of high importance: GCPs should be large enough to be discernible by sensors with different spatial resolutions. Covering panels (e.g., thin wood) with standard household aluminium foil is an economical solution, and such panels are easy to carry and install in the field. Note, however, that aluminium foil is highly reflective in the visible spectrum. To mitigate specular reflection, the panels should be positioned in shaded areas, oriented to minimize direct exposure to sunlight, or covered with crinkled aluminium foil. Furthermore, GCPs should have a distinct shape, such as a square rather than a circle, to facilitate clear identification of positions, and a visible mark in the center to allow exact coordinate identification in post-processing. Black and white GCPs may also be discernible for VIS-NIR and TIR; if placed in the field 1–2 hours before UAV flights, the black and white panels should reach an adequate temperature difference relative to soil and vegetation. For more detailed information on GCPs refer to Aasen and Bolten (2018) and Cunliffe and Anderson (2019).
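
Because the edge pixels of a panel mix with the background, a square GCP of side s guarantees only floor(s / GSD) - 1 fully covered (pure) pixels across in the worst grid alignment. The sketch below uses this to size GCPs for the coarsest sensor in a payload; the required number of pure pixels and the GSD values are hypothetical choices, not recommendations from these guidelines.

```python
import math


def min_gcp_side_m(gsds_m, pure_pixels=3):
    """Side length (m) guaranteeing `pure_pixels` fully covered pixels across
    the panel in every sensor, whatever the pixel-grid alignment."""
    return (pure_pixels + 1) * max(gsds_m)


def guaranteed_pure_pixels(side_m, gsd_m):
    """Worst-case number of pixels across that lie entirely inside the panel."""
    return max(math.floor(side_m / gsd_m + 1e-9) - 1, 0)


# Hypothetical GSDs (m) for one flight altitude.
gsds = {"RGB": 0.01, "MS": 0.03, "TIR": 0.12}
side = min_gcp_side_m(gsds.values(), pure_pixels=3)
print(f"GCP side: {side:.2f} m")
for name, g in gsds.items():
    print(name, guaranteed_pure_pixels(side, g))
```

The same reasoning applies to calibration targets, which should also contain pure pixels in the coarsest sensor.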

Weather data (#5 in Fig. 2). Local weather data are important for many applications, especially for TIR and SIF. TIR data are often normalised using air temperature, solar irradiance and wind speed. Normalising SIF by solar irradiance provides more insight into the crops’ physiological status. For optical sensors, a sun photometer can provide aerosol optical depth (AOD) and atmospheric water content for calculating irradiance and determining reflectance. Alternatively, irradiance can be measured in the field (Hakala et al., 2018) using spectroradiometers or cosine irradiance sensors mounted on the UAVs. Monitoring environmental conditions is also essential for comparing measurements across multiple days or environments. If a local weather station is nearby, its data can be used to characterize weather conditions during the flight. However, because parameters such as wind speed or irradiance can vary significantly over short distances and are typically recorded at limited temporal resolution, portable weather stations are generally recommended to characterize instantaneous weather conditions more accurately.
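
Matching weather records to image acquisitions usually means interpolating the station log to the image timestamps before normalising. The sketch below shows this with linear interpolation, a canopy-minus-air temperature difference for TIR and a simple SIF-to-irradiance ratio. The function names are hypothetical, the station log is invented for illustration, and the SIF ratio is a relative quantity whose meaning depends on the units and wavelengths of both inputs.

```python
import numpy as np


def interp_to_images(station_t_s, station_values, image_t_s):
    """Linearly interpolate a weather series to image timestamps.

    All times must be on the same clock and time zone (e.g. seconds since
    midnight UTC) and station_t_s must be increasing. np.interp holds the
    end values constant outside the logged period, so check coverage first.
    """
    return np.interp(image_t_s, station_t_s, station_values)


def canopy_air_difference(tc_c, image_t_s, station_t_s, air_temp_c):
    """Canopy minus air temperature (K), a simple TIR normalisation."""
    return np.asarray(tc_c, dtype=float) - interp_to_images(station_t_s, air_temp_c, image_t_s)


def irradiance_normalised_sif(sif, image_t_s, station_t_s, irradiance):
    """SIF divided by concurrent irradiance (relative, unit-dependent quantity)."""
    return np.asarray(sif, dtype=float) / interp_to_images(station_t_s, irradiance, image_t_s)


# Hypothetical 1-min portable station log and two image timestamps.
station_t = np.array([0.0, 60.0, 120.0])
air_temp = np.array([20.0, 22.0, 24.0])
image_t = np.array([30.0, 90.0])
print(canopy_air_difference([25.0, 27.0], image_t, station_t, air_temp))
```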

Annotation. Acquired data must be adequately annotated to make the fusion of data with various spatial, spectral or temporal resolutions possible. Particular attention should be paid to geocoding, including the geographic coordinate system used, and to the time of acquisition. Annotating measurements with meta-data provides the first level of data products and is crucial for data quality control and for the potential usefulness of the data in further studies. Annotation is also needed to combine data from different sensors without errors such as mixed-up acquisition times or locations.
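
A simple safeguard against time mix-ups is to store every acquisition time as a timezone-aware UTC timestamp next to the coordinate reference system. The record layout below is a hypothetical example, not a metadata standard; the EPSG code shown (WGS 84 / UTM zone 32N) is only an example value.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AcquisitionRecord:
    sensor: str
    processing_level: str   # e.g. "L0", "L1c", "L3"
    crs: str                # coordinate reference system, e.g. "EPSG:32632"
    time_utc: str           # ISO 8601, always stored in UTC
    flight_altitude_m: float
    fov_deg: float


def make_record(sensor, level, crs, acquisition_time, altitude_m, fov_deg):
    """Create a record, refusing naive timestamps to avoid time-zone mix-ups."""
    if acquisition_time.tzinfo is None:
        raise ValueError("acquisition_time must be timezone-aware")
    time_utc = acquisition_time.astimezone(timezone.utc).isoformat()
    return AcquisitionRecord(sensor, level, crs, time_utc, altitude_m, fov_deg)


cest = timezone(timedelta(hours=2))  # Central European Summer Time
record = make_record("TIR", "L1c", "EPSG:32632",
                     datetime(2019, 7, 1, 13, 20, tzinfo=cest), 40.0, 45.0)
print(json.dumps(asdict(record), indent=2))
```

Rejecting naive timestamps forces the time zone to be stated at the point of capture, which is where mix-ups are easiest to prevent.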

Data analysis

Data analysis encompasses the pre- and post-processing of the imagery, from georectification to the retrieval of crop traits using parametric and non-parametric methods (Fig. 6). In this process, data products are classified into levels according to the extent of processing they have undergone. These levels, denoted Level 0, Level 1c and Level 3, represent distinct grades of pre-processing: Level 0 refers to raw data as digital numbers (DNs) recorded by the sensor, together with metadata; Level 1c comprises radiometrically processed and calibrated data in absolute SI units; and Level 3 corresponds to higher-level information such as SIF and vegetation indices (VIs).

The level of pre-processing determines the usability and generality of data products and depends largely on the desired application. Synergistic use of multiple sensors requires standardized data products to enable valid fusion of information. Fusion of raw data (Level 0) from multiple sensors is possible in principle if proper annotation is provided along with complete meta-data. However, Level 0 information is sensor-specific and therefore cannot easily be generalized. We recommend pre-processing data to Level 1c, which provides comparable data in absolute physical SI units together with relevant meta-data, enabling transferable results. It is advisable to record the sensor calibration used in the meta-data after processing to Level 1c. Furthermore, we recommend using data quality flags, together with uncertainties and error budgets in the meta-data, to filter out unstable or biased measurements (Cogliati et al., 2015; Hueni et al., 2017).
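
Quality flags are often stored as bit masks so that several conditions can be recorded per measurement and filtered in one step. The flag names and the 10% relative-uncertainty threshold below are hypothetical; the sketch only shows the mechanics of setting a flag from an uncertainty estimate and masking out flagged measurements before fusion.

```python
from enum import IntFlag

import numpy as np


class QualityFlag(IntFlag):
    """Hypothetical bit flags stored alongside each Level 1c pixel or spectrum."""
    OK = 0
    SATURATED = 1
    UNSTABLE_ILLUMINATION = 2
    SENSOR_NOT_STABILISED = 4
    HIGH_UNCERTAINTY = 8


def add_uncertainty_flag(flags, relative_uncertainty, max_relative_uncertainty=0.1):
    """Set HIGH_UNCERTAINTY where the relative uncertainty exceeds the threshold."""
    flags = np.asarray(flags, dtype=np.uint8)
    too_uncertain = np.asarray(relative_uncertainty) > max_relative_uncertainty
    flagged = flags | int(QualityFlag.HIGH_UNCERTAINTY)
    return np.where(too_uncertain, flagged, flags).astype(np.uint8)


def usable(flags, reject):
    """Boolean mask of measurements carrying none of the flags in `reject`."""
    return (np.asarray(flags) & int(reject)) == 0


flags = np.array([0, 1, 0, 0], dtype=np.uint8)      # second value saturated
rel_unc = np.array([0.02, 0.02, 0.30, 0.05])         # relative uncertainties
flags = add_uncertainty_flag(flags, rel_unc)
mask = usable(flags, QualityFlag.SATURATED | QualityFlag.HIGH_UNCERTAINTY)
print(flags.tolist(), mask.tolist())
```

Keeping the flags rather than deleting rejected values preserves the option of applying a stricter or looser filter later.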

Prerequisites for fusing hyperspectral and multispectral data across different sensors are spectral resampling and convolution (Aasen et al., 2018). Temporal and spatial aspects also need careful consideration and may require aggregation methods to combine data of different temporal resolutions and spatial extents. For example, comparing datasets pixel by pixel requires spatial resampling to a matching GSD as well as pixel-to-pixel co-registration of the orthomosaics. Temporal mismatches may be addressed by normalisation (e.g., using vegetation indices) or by applying corrections, e.g., for changes in PAR, which is relevant for data in radiance units such as SIF. Researchers should always record the appropriate time stamp, time zone, field of view, sensor elevation and location with each measurement to ease the later combination of information from sub-plots and experiments. When combining higher-level information (Level 3), such as SIF or VIs, from multiple sensors, ensure that the retrieval methods or band combinations used were identical or at least as similar as possible.
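
The sketch below illustrates both operations mentioned above: simulating a broad MS band from a finely sampled HS spectrum by weighting it with a spectral response function (SRF), and aggregating a fine raster to a coarser GSD by block averaging. It assumes a Gaussian SRF and uniform spectral sampling; real sensors publish measured SRFs, which should be used instead, and the band centre and width in the example are hypothetical.

```python
import numpy as np


def gaussian_srf(wavelengths_nm, center_nm, fwhm_nm):
    """Gaussian spectral response function (peak-normalised)."""
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((np.asarray(wavelengths_nm, dtype=float) - center_nm) / sigma) ** 2)


def convolve_to_band(wavelengths_nm, spectrum, center_nm, fwhm_nm):
    """SRF-weighted mean of a finely sampled spectrum; assumes uniform spectral sampling."""
    weights = gaussian_srf(wavelengths_nm, center_nm, fwhm_nm)
    return float(np.sum(weights * np.asarray(spectrum, dtype=float)) / np.sum(weights))


def block_mean(image, factor):
    """Aggregate a raster to a GSD `factor` times coarser by block averaging.

    Rows/columns that do not fill a whole block are cropped.
    """
    img = np.asarray(image, dtype=float)
    rows = (img.shape[0] // factor) * factor
    cols = (img.shape[1] // factor) * factor
    img = img[:rows, :cols]
    return img.reshape(rows // factor, factor, cols // factor, factor).mean(axis=(1, 3))


# Hypothetical 1 nm HS spectrum resampled to a red-edge band (717 nm, 10 nm FWHM).
wl = np.arange(400.0, 1001.0, 1.0)
spectrum = np.interp(wl, [400, 680, 750, 1000], [0.05, 0.04, 0.45, 0.50])
print(round(convolve_to_band(wl, spectrum, 717.0, 10.0), 3))
print(block_mean(np.arange(16).reshape(4, 4), 2))
```

Block averaging only works for integer GSD ratios on aligned grids; otherwise the rasters must first be co-registered and resampled.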

Pre-processing: level 0 to level 1c

Georeferencing. The synergistic use of image data from multiple sensors in different spectral domains requires careful geometric processing. Different sensors often have different spatial resolutions and geometric accuracies, and data may have been acquired at different times or on different days. Two approaches for georeferencing the image data are image-to-image georeferencing and absolute georectification. Image-to-image georeferencing is suitable for fusing data from sensors with significantly different spatial resolutions or acquisition times, whereas georectification is necessary when the data are to be combined with other georeferenced imagery, such as satellite scenes.
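
As one possible building block for image-to-image georeferencing, phase correlation estimates the translation between two co-registered-to-be rasters from the phase of their cross-power spectrum. This is a swapped-in illustration rather than the method used by the authors: it recovers integer-pixel translations only (no rotation or scale), assumes both single-band inputs have already been resampled to the same GSD and size, and can fail between spectral domains with very different contrast (e.g., VIS vs TIR), where GCP-based or feature-based approaches may be more reliable.

```python
import numpy as np


def phase_correlation_shift(moving, reference):
    """Estimate the integer (row, col) translation such that
    moving ≈ np.roll(reference, shift, axis=(0, 1)).

    Both inputs must be single-band arrays of the same shape and GSD.
    """
    f_moving = np.fft.fft2(np.asarray(moving, dtype=float))
    f_reference = np.fft.fft2(np.asarray(reference, dtype=float))
    cross_power = f_moving * np.conj(f_reference)
    cross_power /= np.abs(cross_power) + 1e-12      # keep phase only
    correlation = np.real(np.fft.ifft2(cross_power))
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks beyond half the image size correspond to negative shifts.
    return tuple(int(p) - n if p > n // 2 else int(p)
                 for p, n in zip(peak, correlation.shape))


rng = np.random.default_rng(0)
reference = rng.random((64, 64))                     # e.g. a resampled VIS band
moving = np.roll(reference, (3, -5), axis=(0, 1))    # synthetic misregistration
print(phase_correlation_shift(moving, reference))
```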

Radiometric calibration. To perform accurate multi-temporal crop stress analysis and to correct for minor atmospheric influences in UAV data, raw DN pixel values are not sufficient (Song et al., 2001); they need to be converted to reflectance. Reflectance calibration differs slightly for visible (RGB), multispectral (Poncet et al., 2019), hyperspectral (Hruska et al., 2012; Lucieer et al., 2014) and thermal sensors (Kelly et al., 2019), owing to differing atmospheric attenuation effects. A common and easy-to-use approach is one-point calibration, which uses a single calibration panel for empirical line correction (Wang & Myint, 2015). However, using multiple panels yields more accurate results (Chakhvashvili et al., 2021). For more information on calibration targets, refer to the "Ancillary data" section. In recent years, standalone physically based atmospheric correction software, such as DROACOR (Schläpfer et al., 2022), has emerged, offering a more streamlined approach to atmospheric correction that is particularly beneficial for newcomers to the field.
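The empirical line correction can be sketched per band as a least-squares line between the mean DN over each panel and the panel's certified reflectance. The panel DNs and reflectances below are hypothetical, and the one-point variant assumes a zero offset (i.e., dark-current-corrected DNs), which is our assumption rather than a general rule.

```python
import numpy as np


def fit_empirical_line(panel_dn, panel_reflectance):
    """Least-squares gain and offset so that reflectance ~ gain * DN + offset (needs >= 2 panels)."""
    gain, offset = np.polyfit(np.asarray(panel_dn, float), np.asarray(panel_reflectance, float), deg=1)
    return float(gain), float(offset)


def fit_one_point(panel_dn, panel_reflectance):
    """One-point calibration: assumes the calibration line passes through the origin."""
    return float(panel_reflectance) / float(panel_dn), 0.0


def dn_to_reflectance(dn, gain, offset):
    """Apply the calibration line to a DN image of the same band."""
    return gain * np.asarray(dn, dtype=float) + offset


if __name__ == "__main__":
    # Hypothetical mean DNs over three panels with certified reflectances of 5, 25 and 45 %
    gain, offset = fit_empirical_line([1000, 3000, 5000], [0.05, 0.25, 0.45])
    print(gain, offset, dn_to_reflectance(np.array([[2000, 4000]]), gain, offset))
```

With several panels spanning dark to bright targets, the fitted offset absorbs path radiance and sensor bias that a single-panel fit cannot separate from the gain.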

More precise reflectance calibration approaches monitor incoming irradiance throughout the flight using incoming light sensors (ILS) and correct for short-term changes in the atmosphere (Olsson et al., 2021). Some multispectral (MS) sensors have a single broadband ILS, while others have dedicated ILS band filters for the separate calibration of each band, the latter providing improved accuracy. However, under certain conditions, UAV-mounted ILS are biased by the in-flight yaw and pitch angles of the platform. When using ILS in a synergistic multi-sensor setup, ensure that the correction is valid and consistent for all sensors used.
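A minimal sketch of how ILS readings enter the calibration: a calibration factor is derived from the panel image and its simultaneous ILS reading, and each subsequent image is rescaled by its own ILS irradiance. This assumes a linear sensor with zero offset and an ILS band that matches the camera band; the function and parameter names are hypothetical.

```python
import numpy as np


def ils_reflectance(dn, irradiance_image, dn_panel, irradiance_panel, panel_reflectance):
    """Reflectance from DN, rescaled by the ILS irradiance ratio between panel and image capture.

    Assumes a linear sensor with zero offset and an ILS band matching the camera band.
    """
    k = panel_reflectance * irradiance_panel / dn_panel  # calibration factor at panel time
    return k * np.asarray(dn, dtype=float) / np.asarray(irradiance_image, dtype=float)


if __name__ == "__main__":
    # Illumination halves (e.g. a passing cloud): DN and ILS reading halve, reflectance stays constant.
    print(ils_reflectance(4000, 1.0, 4000, 1.0, 0.5), ils_reflectance(2000, 0.5, 4000, 1.0, 0.5))
```

If the ILS reading is biased by platform attitude, the bias propagates one-to-one into reflectance, which is why consistent correction across all sensors matters.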

For thermal data, it is essential to understand the relationship between the acquired DN values and the targeted surface temperature (Kelly et al., 2019; Messina & Modica, 2020). Radiometric correction in the field is performed by empirical line calibration, regressing the temperatures measured at the TCPs against the DN values extracted at the TCPs. This approach assumes high emissivities of the TCPs (> 0.98). Validation may be done by linear regression against defined homogeneous regions measured with infrared thermometers. Although absolute temperature is desirable, it is rather challenging to obtain (Heinemann et al., 2020). In some cases, relative differences are sufficient, or a simple correction with the ambient temperature from the closest weather station might be suitable. Calculating a simplified, image-based version of the crop water stress index (CWSI) (Jackson et al., 1981) can also be an option to combine TIR data with further sensor information (see "Post-processing: level 1c to level 3" section).
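Both steps can be sketched briefly: an empirical line that maps thermal DNs to temperature using the TCPs, and a simplified image-based CWSI, (Tc - Twet) / (Tdry - Twet). When no wet and dry reference temperatures are available, the sketch approximates them by low and high percentiles of the canopy temperatures; this percentile shortcut and its default values are assumptions, and measured reference surfaces are preferable.

```python
import numpy as np


def calibrate_thermal(dn, tcp_dn, tcp_temp_c):
    """Empirical line: regress TCP temperatures (deg C) on TCP DNs and apply the line to `dn`."""
    gain, offset = np.polyfit(np.asarray(tcp_dn, float), np.asarray(tcp_temp_c, float), deg=1)
    return gain * np.asarray(dn, dtype=float) + offset


def simplified_cwsi(canopy_temp_c, t_wet=None, t_dry=None, wet_pct=5.0, dry_pct=95.0):
    """Image-based CWSI = (Tc - Twet) / (Tdry - Twet), clipped to [0, 1].

    Missing wet/dry references are approximated by percentiles of the canopy
    temperatures (an assumption; reference surfaces are preferable).
    """
    t = np.asarray(canopy_temp_c, dtype=float)
    if t_wet is None:
        t_wet = np.nanpercentile(t, wet_pct)
    if t_dry is None:
        t_dry = np.nanpercentile(t, dry_pct)
    return np.clip((t - t_wet) / (t_dry - t_wet), 0.0, 1.0)


if __name__ == "__main__":
    canopy = calibrate_thermal([7400, 7600, 7800], tcp_dn=[7000, 8000], tcp_temp_c=[20.0, 30.0])
    print(canopy, simplified_cwsi(canopy, t_wet=23.0, t_dry=29.0))
```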

Post-processing: level 1c to level 3

VIs

This section shows how UAV-acquired data from different spectral domains have been used together in vegetation studies. The synergies described are VIS-NIR, VIS-NIR-SWIR and VIS-NIR-TIR.

The most common example of sensor synergy is the use of VIS-NIR for vegetation-soil separation, for example with NDVI, followed by further processing of the vegetated pixels. After masking soil pixels with NDVI, Kandylakis et al. (2020) retrieved water stress as stomatal conductance from SWIR, and Bahat et al. (2021) retrieved water stress from TIR. The SWIR region is affected by water availability in the vegetated canopy, which is exploited in synergy with NIR in the normalized difference water index (NDWI) for vegetation water content (Gao, 1996) and the normalized difference infrared index (NDII) for canopy water stress (Hardisky et al., 1984). NIR/SWIR indices were applied for biomass mapping of grass (Jenal et al., 2020; Barber et al., 2021) and wheat (Jenal et al., 2021) with a UAV NIR/SWIR imaging system (Jenal et al., 2019). Several attempts have been made to reconstruct SWIR regions from VIS-NIR images with machine learning to estimate fuel moisture content (FMC) (Arevalo-Ramirez et al., 2020, 2021; Villacrés & Cheein, 2022; Villacrés et al., 2021).
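The masking pattern described above can be sketched as follows: NDVI from the VIS-NIR sensor separates vegetation from soil, and a NIR/SWIR normalized difference is evaluated only on the vegetated pixels. Whether the result corresponds to NDWI or NDII depends on the SWIR band used (around 1240 nm for Gao's NDWI, around 1650 nm for NDII); the NDVI threshold of 0.5 is an assumption and is scene dependent. The bands are assumed to be co-registered reflectance images at the same GSD.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), with NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        return (a - b) / (a + b)


def water_index_on_vegetation(red, nir, swir, ndvi_threshold=0.5):
    """NIR/SWIR normalized difference on vegetated pixels only; soil pixels become NaN."""
    ndvi = normalized_difference(nir, red)
    water_index = normalized_difference(nir, swir)
    return np.where(ndvi >= ndvi_threshold, water_index, np.nan)


if __name__ == "__main__":
    red = np.array([0.05, 0.20])   # illustrative: vegetated pixel, bare soil pixel
    nir = np.array([0.45, 0.25])
    swir = np.array([0.25, 0.20])
    print(water_index_on_vegetation(red, nir, swir))
```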

ET (TIR)

For VIS-NIR-TIR synergy, the group of trapezoid (also known as triangle) approaches relates the variation in land surface temperature (LST) to the variation in a vegetation index, usually NDVI, to estimate vegetation water stress (Lambin & Ehrlich, 1996). The first application was the Water Deficit Index (WDI) (Moran et al., 1994). More recently, the method has been used with UAV-borne data for crop water stress mapping (Hoffmann et al., 2016) and ET computation (Mokhtari et al., 2021). Many ET models [TSEB (Norman et al., 1995), SEBS (Su, 2002), METRIC (Allen et al., 2007)] develop this LST-NDVI relation further by including the shortwave albedo.
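The trapezoid/triangle idea can be sketched with empirically estimated edges: the maximum and minimum LST per NDVI bin are fitted with straight lines (dry and wet edge), and each pixel's relative position between them is used as a dryness index, similar in spirit to the temperature-vegetation dryness index (TVDI). This is not the WDI of Moran et al. (1994), which derives the trapezoid vertices from energy-balance calculations; the number of bins, the NDVI cut-off and the minimum bin size are assumptions.

```python
import numpy as np


def fit_edges(ndvi, lst, n_bins=10, ndvi_min=0.2, min_pixels=3):
    """Fit linear dry (max LST) and wet (min LST) edges over NDVI bins; returns polyfit coefficients."""
    ndvi = np.asarray(ndvi, dtype=float).ravel()
    lst = np.asarray(lst, dtype=float).ravel()
    ok = np.isfinite(ndvi) & np.isfinite(lst) & (ndvi >= ndvi_min)
    ndvi, lst = ndvi[ok], lst[ok]
    bin_edges = np.linspace(ndvi.min(), ndvi.max(), n_bins + 1)
    centers, t_max, t_min = [], [], []
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        in_bin = (ndvi >= lo) & (ndvi <= hi)
        if in_bin.sum() < min_pixels:
            continue  # skip sparsely populated bins
        centers.append(0.5 * (lo + hi))
        t_max.append(lst[in_bin].max())
        t_min.append(lst[in_bin].min())
    if len(centers) < 2:
        raise ValueError("not enough populated NDVI bins to fit the edges")
    return np.polyfit(centers, t_max, 1), np.polyfit(centers, t_min, 1)


def dryness_index(ndvi, lst, dry_edge, wet_edge):
    """0 on the wet edge, 1 on the dry edge, clipped to [0, 1]."""
    t_dry = np.polyval(dry_edge, ndvi)
    t_wet = np.polyval(wet_edge, ndvi)
    return np.clip((np.asarray(lst, dtype=float) - t_wet) / (t_dry - t_wet), 0.0, 1.0)


if __name__ == "__main__":
    nd = np.repeat(np.linspace(0.2, 0.9, 50), 3)      # synthetic scene for illustration only
    u = np.tile([0.0, 0.5, 1.0], 50)                  # position between wet and dry edge
    lst = 25.0 + u * ((45.0 - 15.0 * nd) - 25.0)
    dry, wet = fit_edges(nd, lst)
    print(dry, wet, dryness_index(0.5, 31.25, dry, wet))
```

Because the edges are scene-derived, the index is only meaningful when the scene contains both well-watered and stressed vegetation across the NDVI range.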

RTMs

One of the advanced methods for exploiting VIS-NIR-SWIR synergy is the inversion of RTMs, most of which operate in the shortwave radiation range (400-2500 nm). Models such as SAIL (Verhoef, 1984), INFORM (Schlerf & Atzberger, 2006) and DART (Gastellu-Etchegorry et al., 1995) upscale leaf reflectance, usually simulated with the PROSPECT model (Jacquemoud et al., 2009), to the canopy level. The inversion of RTMs enables the retrieval of leaf (chlorophyll and water content) and canopy (LAI) parameters, which can be further correlated with stress levels (Verrelst et al., 2019). As mentioned earlier, UAV systems carrying SWIR sensors were developed only relatively recently (Jenal et al., 2019; Arroyo-Mora et al., 2021); therefore, UAV studies that use RTM inversion are limited to the VIS-NIR domain, sampled either hyperspectrally (Abdelbaki et al., 2021; Duan et al., 2014; Lin et al., 2019; Wan et al., 2021; Wang et al., 2021; Yin et al., 2022) or multispectrally (Antonucci et al., 2023; Chakhvashvili et al., 2022; Jay et al., 2019; Roosjen et al., 2018; Singh et al., 2023; Sun et al., 2021; Verger et al., 2014). As a guideline, we advise degrading the spatial resolution of a UAV image to satisfy the assumptions of turbid-medium models such as SAIL, or masking shaded and bare soil pixels before the inversion (Chakhvashvili et al., 2022).
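Both guidelines, and the look-up-table (LUT) inversion commonly used with RTMs, can be sketched briefly. Block averaging degrades a high-resolution image (shaded or soil pixels can be set to NaN beforehand so they are ignored), and the LUT inversion returns the mean parameters of the simulated spectra closest to an observation. The forward model below is a deliberately simple, hypothetical stand-in for PROSAIL (a gap-fraction mixture of soil and leaf spectra); in a real application the LUT would be generated with an RTM, and the band values, LUT range and number of best matches are assumptions.

```python
import numpy as np


def aggregate_blocks(image, factor):
    """Average non-overlapping factor x factor blocks; NaN pixels (e.g. masked soil) are ignored."""
    h, w = image.shape
    h2, w2 = h // factor * factor, w // factor * factor
    blocks = image[:h2, :w2].reshape(h2 // factor, factor, w2 // factor, factor)
    return np.nanmean(blocks, axis=(1, 3))  # all-NaN blocks give NaN with a RuntimeWarning


def toy_forward_model(lai, soil, leaf, k=0.5):
    """Hypothetical RTM stand-in: reflectance as a gap-fraction mix of soil and leaf spectra."""
    cover = 1.0 - np.exp(-k * np.atleast_1d(lai))[:, None]
    return soil * (1.0 - cover) + leaf * cover


def invert_lut(observed, lut_params, lut_spectra, n_best=5):
    """Mean parameters of the n_best LUT entries with the lowest RMSE to the observed spectrum."""
    rmse = np.sqrt(np.mean((lut_spectra - observed) ** 2, axis=1))
    best = np.argsort(rmse)[:n_best]
    return lut_params[best].mean(axis=0)


if __name__ == "__main__":
    soil = np.array([0.10, 0.15, 0.20, 0.25])  # illustrative 4-band soil spectrum
    leaf = np.array([0.03, 0.08, 0.04, 0.45])  # illustrative 4-band leaf spectrum
    lai_grid = np.arange(0.0, 6.01, 0.01)
    lut_spectra = toy_forward_model(lai_grid, soil, leaf)
    observed = toy_forward_model(3.0, soil, leaf)[0]
    print(invert_lut(observed, lai_grid[:, None], lut_spectra))
```

Averaging several best-matching entries rather than taking the single best one is a common way to stabilise LUT inversions against noise and model ambiguity.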

The incorporation of the TIR domain in RTMs remains less studied due to the challenges of temperature-emissivity separation. Some attempts have been made with models such as SAIL-Thermique (Olioso, 1995) and 4SAIL (Verhoef et al., 2007). Although it was shown that the SCOPE model has the potential to use TIR to retrieve leaf biophysical traits (maximum carboxylation capacity of RuBisCO, Vcmax25, related to the photosynthesis rate, and the Ball-Berry slope, BBS, related to stomatal conductance, photosynthesis and transpiration) (Prikaziuk & Van der Tol, 2019), practical applications required additional constraints from flux data (GPP, ET) (Dutta et al., 2019; Prikaziuk et al., 2023) or look-up tables (Bayat et al., 2018). The main challenge for RTM applications with UAV-borne TIR data is the accurate absolute radiometric calibration of UAV-TIR data, because RTMs work with absolute physical quantities.

Although SIF is temporally more dynamic than TIR, it has been successfully introduced into leaf [FLUSPECT (Vilfan et al., 2016)] and canopy [SCOPE, CliMA (Wang et al., 2021), DART (Malenovskỳ et al., 2021)] RTMs. Wang et al. (2023a) used UAV-borne VIS-NIR-SIF data for the synergistic retrieval of leaf and canopy traits and fluorescence quantum yield (fqe) with the SCOPE model for sugar beet under water stress. An additional TIR constraint was needed as a proxy of stomatal closure. UAV-borne systems suitable for SIF retrievals are being actively developed (Bendig et al., 2020; Chang et al., 2020; Vargas et al., 2020; Wang et al., n.d.; Zhang et al., 2022), and we expect more studies to use RTMs for SIF data processing.

Overall, the inversion of RTMs in the VIS-NIR-SWIR domains sampled hyperspectrally can be considered a routine operation. For multispectral UAV sensors, success is limited by the number of bands (constraints) of the UAV system, plant phenological stage, time of day, spatial resolution, viewing angle, etc. The application of RTMs to SIF and TIR data is an emerging field and currently requires a two-step procedure (Wang et al., 2023a). In the first step, the biophysical parameters retrievable from multispectral or hyperspectral data are obtained. In the second step, plant physiological parameters such as Vcmax25 and fqe are retrieved from SIF or TIR.

Integration with crop models

Berger et al. (2022) described in detail the advantages and potential of combining crop growth models (CGMs) with remotely sensed data obtained by several types of imaging instruments. Crop models focus on simulating the complex processes of crop development and biomass accumulation. Some only output plant and soil properties (Huang et al., 2019), while other, more complex CGMs account for the interaction of soil and plant with radiation by modeling the energy balance of the soil-plant-atmosphere system, but still do not produce output variables directly related to remote sensing measurements (e.g., surface reflectance). Thus, several important aspects should be considered when assimilating remote sensing data into crop models, in particular data from multiple sensor types.

Several crop models are commonly used in the literature; they vary in aspects such as the mechanism driving crop growth, the simulated state variables, and the model parameters (Jin et al., 2018). These differences may determine how well a given crop model can benefit from the integration of RS data from different domains. For example, canopy cover, used in AquaCrop to describe canopy development (Steduto et al., 2009), is a property that can be evaluated either from plain RGB images or from VIS-NIR data (Zhang et al., 2019). More complex crop models, such as DSSAT (Jones et al., 2003) or APSIM (Holzworth et al., 2014), differ from AquaCrop in two major aspects: (a) plant growth is driven by the amount of intercepted radiation, and (b) they simulate more processes, including the soil-plant nitrogen balance (Boote et al., 2018). Continuing the example of canopy development, these two crop models use LAI to describe canopy expansion, which can be obtained, e.g., from empirical relations to vegetation indices (Masjedi et al., 2018). This approach of using data from one spectral domain to evaluate a single property is straightforward, but remains limited to very specific simulated state variables and data types.
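As an illustration of deriving canopy cover from plain RGB images, the sketch below classifies pixels as green vegetation with the excess green index (ExG = 2g - r - b, computed on chromatic coordinates) and a fixed threshold. This is one common approach among several (e.g., Otsu thresholding or machine-learning classifiers); the threshold value is an assumption that should be tuned per camera and scene.

```python
import numpy as np


def canopy_cover_exg(rgb, threshold=0.05):
    """Fraction of pixels whose excess green index exceeds `threshold` (assumed value)."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1, keepdims=True)
    with np.errstate(divide="ignore", invalid="ignore"):
        chrom = rgb / total  # chromatic coordinates r, g, b (NaN for black pixels)
    exg = 2.0 * chrom[..., 1] - chrom[..., 0] - chrom[..., 2]
    green = np.nan_to_num(exg) > threshold  # black pixels count as non-vegetation
    return float(green.mean())


if __name__ == "__main__":
    # 2 x 2 toy image: two green canopy pixels, one soil pixel, one black pixel
    img = np.array([[[50, 150, 50], [120, 100, 80]], [[50, 150, 50], [0, 0, 0]]], dtype=float)
    print(canopy_cover_exg(img))
```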

To use data from several spectral domains with varying spectral resolution synergistically, and to exploit more crop-related properties, the crop model can be coupled with a radiative transfer model. This involves choosing mathematical relations that link the crop model's state variables to the RTM input parameters. Since crop models are dynamic and usually run at daily time steps, while RTMs are instantaneous, the rate of change of the simulated properties should be considered. Most previous studies that followed this approach used different versions of SAIL (Verhoef, 1984) as the RTM (Huang et al., 2019; Machwitz et al., 2014; Weiss et al., 2001), but this group of models, despite its diverse capabilities, is limited to the optical domain of the electromagnetic spectrum. Thus, to facilitate the use of SIF and thermal data, a more comprehensive model such as SCOPE is recommended, and the coupling relations should be extended to link crop state variables with the RTM parameters that affect thermal emission and SIF.

In previous studies, this framework was mainly used to improve predictions of yield and other crop traits (Guo et al., 2018). In the context of this paper, however, it might also be used for crop stress detection, since CGMs (e.g., APSIM, DSSAT) dynamically simulate the daily stresses (water and nitrogen) that affect photosynthesis and growth processes (e.g., expansive growth). For this purpose, the method for integrating the RS data into the coupled model should be chosen carefully. Due to the dynamic nature of crop models, either calibration or sequential updating is commonly used. Calibration methods consider the course of a full season and determine the values of the crop model parameters, which do not directly relate to stress indicators and are therefore less suitable for our purpose. In contrast, sequential updating methods (e.g., the Kalman filter or particle filter) are usually used to update the state variables and are thus preferable for stress detection. Following this approach, by assimilating RS data, both RTM parameters and stress-related crop model state variables can be evaluated dynamically. Finally, note that although most previous studies used space-borne RS data, because yield prediction does not require very high spatial resolution (Huang et al., 2019), stress detection requires UAVs to obtain much higher spatial resolution.
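A sequential update can be sketched with a scalar Kalman filter that corrects a single crop-model state, here LAI, whenever a UAV-derived estimate becomes available. The daily `forecast` step is a hypothetical linear placeholder for one crop-model time step; in practice APSIM, DSSAT or another CGM would propagate the state, often combined with an ensemble Kalman filter or a particle filter because these models are nonlinear. All growth rates and variances are illustrative.

```python
def forecast(x, p, growth_rate=0.05, process_var=0.01):
    """Hypothetical linear crop-model step x' = (1 + r) x, propagating the error variance."""
    a = 1.0 + growth_rate
    return a * x, a * a * p + process_var


def kalman_update(x_f, p_f, obs, obs_var, h=1.0):
    """Scalar Kalman analysis step for the observation model obs = h * x + noise."""
    gain = p_f * h / (h * h * p_f + obs_var)
    x_a = x_f + gain * (obs - h * x_f)
    p_a = (1.0 - gain * h) * p_f
    return x_a, p_a


if __name__ == "__main__":
    lai, var = 1.0, 0.1
    uav_lai = {5: 1.6, 10: 1.7}  # hypothetical UAV LAI retrievals by day (e.g. from RTM inversion)
    for day in range(1, 13):
        lai, var = forecast(lai, var)
        if day in uav_lai:
            lai, var = kalman_update(lai, var, uav_lai[day], obs_var=0.05)
        print(day, round(lai, 3), round(var, 4))
```

The gain weights the UAV observation against the model forecast according to their variances, so an uncertain retrieval nudges the state only slightly, while a precise one pulls it strongly.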

Lessons learnt/bad examples

Sensor synergies can be challenging, above all because of inconsistencies arising from differences in overpass time and resolution (spatial, temporal and spectral). To help new users avoid pitfalls and frustration, we compiled recommendations derived from real-world cases in which data acquisition by experienced UAV operators using multiple sensors did not go as planned. These recommendations are not strictly limited to multi-sensor synergies and apply to UAV field campaigns in general.

Checklist, protocols, fieldbook

First and foremost, we advise every operator to design or adapt checklists and standardized protocols suited to their respective field campaigns. Besides preventing rather obvious mistakes, such as leaving critical parts of the equipment in the lab, this minimizes variations due to changing operators and allows the procedure to be reproduced at a later time (Tmušić et al., 2020; European Commission, 2018; TERN, 2023). Similarly, we recommend precisely documenting metadata such as weather conditions, the person handling the equipment, time, and any other information that seems useful while in the field, to help understand any issues that arise when processing the data. Short and precise checklists and metadata documentation are most effective. They should focus on essential information to prevent user frustration, as lengthy checklists are less likely to be thoroughly reviewed by users. Noting metadata is often overlooked during UAV operations, as field campaigns typically require multitasking. To address this, we suggest taking photos of screens, the sky, instruments and watches, and recording voice messages as reminders. Additionally, a pre-populated text on a mobile phone or tablet, or printed on paper, can help in remembering crucial information during fieldwork. This allows quick data capture on-site, with more comprehensive documentation completed later in a quieter setting.
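A pre-populated field-book entry can also be kept in machine-readable form. The sketch below is a hypothetical record structure (all field names are our assumptions) that captures the metadata mentioned above, including a timezone-aware timestamp, field of view and sensor elevation, and refuses timestamps without a time zone.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class FlightRecord:
    """Minimal, hypothetical field-book entry for one flight with one sensor."""
    sensor: str
    operator: str
    takeoff: datetime        # must be timezone-aware so the time zone is never ambiguous
    altitude_agl_m: float    # sensor elevation above ground
    fov_deg: float           # sensor field of view
    latitude: float
    longitude: float
    weather_notes: str = ""
    issues: list = field(default_factory=list)

    def __post_init__(self):
        if self.takeoff.tzinfo is None:
            raise ValueError("takeoff time must include a time zone")

    def to_json(self):
        record = asdict(self)
        record["takeoff"] = self.takeoff.isoformat()
        return json.dumps(record)


if __name__ == "__main__":
    rec = FlightRecord("thermal", "operator A", datetime(2020, 7, 1, 10, 30, tzinfo=timezone.utc),
                       50.0, 45.0, 50.0, 6.0, "clear sky, light wind")
    rec.issues.append("GCP 3 partly covered by dust")
    print(rec.to_json())
```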

Solar illumination

We advise users to critically check their protocols against the daily and seasonal cycles of solar azimuth and zenith angles. Variations in these angles potentially induce uncertainties and change the distribution of direct and diffuse illumination of vegetation. Although this effect cannot be completely avoided, it is advisable to minimize it, quantify the related uncertainties, or consider strategies such as BRDF modeling where appropriate. Relatedly, variation in illumination leads to variation in shading, which can hinder successful analysis but can also facilitate object detection (e.g., trunks that are not visible in nadir images). Especially for thermal sensors, shaded objects can induce variations in radiometric temperature. These should be treated adequately, as they might significantly affect derived variables (e.g., ET).
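To check a protocol against solar geometry, the solar zenith and azimuth angles can be computed for planned flight times. The sketch below uses the NOAA general solar position approximation (fractional-year series for declination and the equation of time), which is adequate for planning; atmospheric refraction is ignored, and dedicated libraries such as pvlib are preferable for precise work. The site coordinates and date in the usage example are hypothetical.

```python
import math
from datetime import datetime, timezone


def solar_angles(when, lat_deg, lon_deg):
    """Approximate solar zenith and azimuth in degrees (azimuth clockwise from north).

    `when` must be timezone-aware; longitude is positive east.
    """
    t = when.astimezone(timezone.utc)
    doy = t.timetuple().tm_yday
    hour = t.hour + t.minute / 60 + t.second / 3600
    g = 2 * math.pi / 365 * (doy - 1 + (hour - 12) / 24)  # fractional year (rad)
    eqtime = 229.18 * (0.000075 + 0.001868 * math.cos(g) - 0.032077 * math.sin(g)
                       - 0.014615 * math.cos(2 * g) - 0.040849 * math.sin(2 * g))  # minutes
    decl = (0.006918 - 0.399912 * math.cos(g) + 0.070257 * math.sin(g)
            - 0.006758 * math.cos(2 * g) + 0.000907 * math.sin(2 * g)
            - 0.002697 * math.cos(3 * g) + 0.00148 * math.sin(3 * g))  # declination (rad)
    true_solar_min = hour * 60 + eqtime + 4 * lon_deg
    ha = math.radians(true_solar_min / 4 - 180)  # hour angle
    lat = math.radians(lat_deg)
    cos_zen = math.sin(lat) * math.sin(decl) + math.cos(lat) * math.cos(decl) * math.cos(ha)
    zenith = math.degrees(math.acos(max(-1.0, min(1.0, cos_zen))))
    azimuth = math.degrees(math.atan2(math.sin(ha),
                                      math.cos(ha) * math.sin(lat) - math.tan(decl) * math.cos(lat))) + 180
    return zenith, azimuth % 360


if __name__ == "__main__":
    # Hypothetical site at 50.9 N, 6.4 E: hourly solar angles on 21 June (UTC)
    for h in range(6, 19):
        z, a = solar_angles(datetime(2023, 6, 21, h, tzinfo=timezone.utc), 50.9, 6.4)
        print(f"{h:02d}:00 UTC  zenith {z:5.1f}  azimuth {a:5.1f}")
```

Tabulating the angles for each campaign date makes it easy to keep flights within a fixed window around solar noon, which reduces shading differences between dates.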

Test flights, GCP visibility, ROI markers

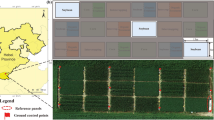

We also advise performing test flights and quickly analyzing the corresponding data, because we encountered cases of insufficient image overlap even though the image acquisition protocols provided by the manufacturer were followed. During these test flights, we further recommend checking that GCPs are placed correctly and that the markers are clearly visible in all spectral domains, with special emphasis on the thermal domain. We encountered problems with permanently placed markers that had poor visibility in certain spectral domains due to dirt accumulation and/or wear from exposure to UV radiation (see Fig. 7). Additionally, we recommend using extra markers (again, visible in all spectral domains) to delineate regions of interest, as differences in field of view and spatial resolution can hinder their identification, especially in homogeneous environments such as crop rows, as shown in Fig. 5. In our experience, investing in high-quality reference panels can significantly improve radiometric quality, especially for narrowband hyperspectral sensors. We therefore recommend allocating a budget for reference panels suited to the sensors used. Lastly, make sure reference targets are cleaned regularly, especially in dry summer conditions when dust accumulates quickly.

Fig. 7 Visibility of markers in different spectral domains. Yellow circles show panels made from aluminium foil, while blue circles mark panels with printed markers (i.e. white squares with geometric patterns). In the RGB image (left), both marker types are easy to spot, while the visibility of the standard (printed) panels is clearly restricted in the thermal image (right).
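Insufficient overlap can often be anticipated before the test flight by computing forward and side overlap for every sensor on the shared flight plan; the sensor with the narrowest field of view sets the limit. The sketch below assumes nadir viewing, flat terrain and constant altitude, and the sensor FOV values and flight parameters in the example are hypothetical. A negative overlap indicates gaps between images.

```python
import math


def footprint_m(altitude_m, fov_deg):
    """Ground footprint of a nadir-looking sensor over flat terrain (pinhole geometry)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def overlaps(altitude_m, fov_along_deg, fov_across_deg, speed_ms, trigger_interval_s, line_spacing_m):
    """Forward and side overlap fractions for one sensor on a given flight plan."""
    forward = 1.0 - speed_ms * trigger_interval_s / footprint_m(altitude_m, fov_along_deg)
    side = 1.0 - line_spacing_m / footprint_m(altitude_m, fov_across_deg)
    return forward, side


if __name__ == "__main__":
    # Hypothetical sensors sharing one plan: 50 m AGL, 5 m/s, 2 s trigger, 25 m line spacing
    sensors = {"rgb": (62.0, 84.0), "multispectral": (37.0, 48.0), "thermal": (34.0, 45.0)}
    for name, (fov_along, fov_across) in sensors.items():
        fwd, side = overlaps(50.0, fov_along, fov_across, 5.0, 2.0, 25.0)
        print(f"{name:>13}: forward {fwd:.0%}, side {side:.0%}")
```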

Instantaneous weather conditions

Most of us have had to deal with weather-related problems during image acquisition on many occasions. As the majority of flights are performed on sunny days, we encountered equipment overheating (e.g., tablets for UAV operation, UAV batteries, spectrometers) that prevented successful data acquisition. Recommended practices are scheduling breaks to let the equipment cool down, avoiding direct sunlight exposure (e.g., with a parasol), or using active cooling systems in extreme environments. We also advise allocating resources carefully: cancelling or delaying flights due to unfavorable weather conditions is regrettable, but often more prudent than collecting inaccurate data, which could result in even greater waste of resources. In our experience, decision-making is often not straightforward, and discussions within teams are common. We therefore recommend defining clear criteria beforehand (e.g., maximum wind and gust speed thresholds), which are monitored during the campaign and allow an informed decision on whether to cancel it.
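Criteria defined beforehand can be encoded as a simple go/no-go check that also reports why a flight should be postponed. All threshold values below are hypothetical defaults and must be set per platform, sensor and protocol before the campaign.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeatherLimits:
    """Hypothetical go/no-go thresholds, agreed on before the campaign."""
    max_wind_ms: float = 8.0
    max_gust_ms: float = 12.0
    max_air_temp_c: float = 38.0
    max_cloud_cover: float = 0.1  # fraction of sky


def go_no_go(wind_ms, gust_ms, air_temp_c, cloud_cover, limits=WeatherLimits()):
    """Return (go, reasons) by comparing current conditions with the predefined limits."""
    checks = [
        (wind_ms > limits.max_wind_ms, f"wind {wind_ms} m/s > {limits.max_wind_ms}"),
        (gust_ms > limits.max_gust_ms, f"gusts {gust_ms} m/s > {limits.max_gust_ms}"),
        (air_temp_c > limits.max_air_temp_c, f"air temperature {air_temp_c} C > {limits.max_air_temp_c}"),
        (cloud_cover > limits.max_cloud_cover, f"cloud cover {cloud_cover:.0%} > {limits.max_cloud_cover:.0%}"),
    ]
    reasons = [msg for failed, msg in checks if failed]
    return not reasons, reasons


if __name__ == "__main__":
    print(go_no_go(wind_ms=6.5, gust_ms=13.0, air_temp_c=31.0, cloud_cover=0.05))
```

Logging the returned reasons in the field book documents why a flight was cancelled, which helps when reviewing the campaign later.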

Flight and irrigation timing

Crop water status depends on the timing of irrigation, not only on the irrigation dose. When measuring the Crop Water Stress Index (CWSI) from thermal imaging, the time of the last irrigation must be taken into account: if irrigation is applied just before or during imaging, differences between irrigation treatments may be less pronounced than in a thermal measurement taken before irrigation. Whenever possible, we recommend scheduling irrigation after the measurement flight. An example is given in Fig. 8, where the visibility of the different treatments is strongly reduced in the left panel.

Fig. 8 Surface temperature and NDVI from UAV observations on two different days of an experiment in processing tomatoes in Israel in summer 2020. The figure shows experimental blocks with two different treatments: the right block received full irrigation (i.e., best-practice irrigation, the control treatment), while the left block received 50% of full irrigation. Each block comprises three crop rows (outer boxes), but the irrigation effect was measured only in the middle row (inner boxes), as the outer rows serve as buffers from adjacent treatments. If irrigation is applied just before or during imaging, differences between irrigation treatments may be less pronounced than in a thermal measurement taken before irrigation.

Conclusion and outlook

This manuscript summarises the aspects to consider when exploiting multiple sensors onboard UAVs for crop stress and trait detection. The need for such guidelines arises from the increased occurrence of crop stress caused by climate change, the growing availability of optical sensors onboard UAVs, and the current lack of protocols on how to collect and analyze such data.

The first part of the manuscript covered the acquisition of high-quality multi-sensor imagery. The most relevant points to consider were: i) Optimal timing of data acquisition is crucial; the order of the flights is decisive, as some sensors have very specific timing requirements. ii) Sensor characteristics need to be taken into account in mission planning. iii) Sensors from different optical domains require calibration targets with different properties. iv) Ground control points that are discernible by every sensor are strongly recommended to ensure accurate image co-registration. v) Additional measurements (e.g., weather data) are advisable for atmospheric correction.

The second part of this guideline dealt with data analysis. The most relevant points to consider were: vi) Data fusion for synergistic use should be done at data level 1c (physical units). vii) Data quality flags should be used to filter out unstable or biased measurements. We presented straightforward methods, such as vegetation indices, to combine information from different spectral domains, along with more complex processing, such as retrieving biophysical variables by RTM inversion. The latter requires further research, and we hope that numerous studies will be conducted in line with the recommendations made by Berger et al. (2022).

Finally, the recommendations are rounded off with bad examples that scientists experienced during their research. This collection of best practices and lessons learnt can help scientists and practitioners make informed decisions regarding sensor selection, mission planning, and the challenges associated with multi-sensor data acquisition and processing, to advance crop stress monitoring with UAVs.

References

Aasen, H., & Bolten, A. (2018). Multi-temporal high-resolution imaging spectroscopy with hyperspectral 2D imagers—From theory to application. Remote Sensing of Environment, 205, 374–389. https://doi.org/10.1016/j.rse.2017.10.043

Aasen, H., Honkavaara, E., Lucieer, A., & Zarco-Tejada, P. (2018). Quantitative remote sensing at ultra-high resolution with UAV spectroscopy: A review of sensor technology, measurement procedures, and data correction workflows. Remote Sensing, 10(7), 1091. https://doi.org/10.3390/rs10071091

Abdelbaki, A., Schlerf, M., Retzlaff, R., Machwitz, M., Verrelst, J., & Udelhoven, T. (2021). Comparison of crop trait retrieval strategies using UAV-based VNIR hyperspectral imaging. Remote Sensing, 13, 1748. https://doi.org/10.3390/rs13091748

Adão, T., Hruška, J., Pádua, L., Bessa, J., Peres, E., Morais, R., & Sousa, J. J. (2017). Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sensing, 9(11), 1110. https://doi.org/10.3390/RS9111110

Allen, R. G., Tasumi, M., & Trezza, R. (2007). Satellite-based energy balance for mapping evapotranspiration with internalized calibration (METRIC)-Model. Journal of Irrigation and Drainage Engineering, 133(4), 380–394. https://doi.org/10.1061/(ASCE)0733-9437(2007)133:4(380)

Antonucci, G., Impollonia, G., Croci, M., Potenza, E., Marcone, A., & Amaducci, S. (2023). Evaluating biostimulants via high-throughput field phenotyping: Biophysical traits retrieval through PROSAIL inversion. Smart Agricultural Technology, 3, 100067. https://doi.org/10.1016/j.atech.2022.100067

Arevalo-Ramirez, T. A., Castillo, A. H. F., Cabello, P. S. R., & Cheein, F. A. A. (2021). Single bands leaf reflectance prediction based on fuel moisture content for forestry applications. Biosystems Engineering, 202, 79–95. https://doi.org/10.1016/j.biosystemseng.2020.12.003

Arevalo-Ramirez, T., Villacrés, J., Fuentes, A., Reszka, P., & Cheein, F. A. A. (2020). Moisture content estimation of Pinus radiata and Eucalyptus globulus from reconstructed leaf reflectance in the SWIR region. Biosystems Engineering, 193, 187–205. https://doi.org/10.1016/j.biosystemseng.2020.03.004

Arroyo-Mora, J. P., Kalacska, M., Løke, T., Schläpfer, D., Coops, N. C., Lucanus, O., & Leblanc, G. (2021). Assessing the impact of illumination on UAV pushbroom hyperspectral imagery collected under various cloud cover conditions. Remote Sensing of Environment, 258, 112396. https://doi.org/10.1016/j.rse.2021.112396

Bahat, I., Netzer, Y., Grünzweig, J. M., Alchanatis, V., Peeters, A., Goldshtein, E., & Cohen, Y. (2021). In-season interactions between vine vigor, water status and wine quality in terrain-based management-zones in a ‘Cabernet Sauvignon’ vineyard. Remote Sensing, 13(9), 1636. https://doi.org/10.3390/rs13091636

Bandopadhyay, S., Rastogi, A., & Juszczak, R. (2020). Review of top-of-canopy sun-induced fluorescence (SIF) studies from ground, UAV, airborne to spaceborne observations. Sensors, 20(4), 1144. https://doi.org/10.3390/S20041144

Barber, N., Alvarado, E., Kane, V. R., Mell, W. E., & Moskal, L. M. (2021). Estimating fuel moisture in grasslands using UAV-mounted infrared and visible light sensors. Sensors, 21, 6350. https://doi.org/10.3390/s21196350

Bastiaanssen, W., Menenti, M., Feddes, R., & Holtslag, A. (1998). A remote sensing surface energy balance algorithm for land (SEBAL). 1. Formulation. Journal of Hydrology, 212–213, 198–212. https://doi.org/10.1016/S0022-1694(98)00253-4

Bayat, B., van der Tol, C., & Verhoef, W. (2018). Integrating satellite optical and thermal infrared observations for improving daily ecosystem functioning estimations during a drought episode. Remote Sensing of Environment, 209, 375–394. https://doi.org/10.1016/j.rse.2018.02.027

Bellvert, J., Nieto, H., Pelechá, A., Jofre-Čekalović, C., Zazurca, L., & Miarnau, X. (2021). Remote sensing energy balance model for the assessment of crop evapotranspiration and water status in an almond rootstock collection. Frontiers in Plant Science, 12, 608967.

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., & Bareth, G. (2015). Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. International Journal of Applied Earth Observation and Geoinformation, 39, 79–87.

Bendig, J., Malenovský, Z., Gautam, D., & Lucieer, A. (2020). Solar-induced chlorophyll fluorescence measured from an unmanned aircraft system: Sensor etaloning and platform motion correction. IEEE Transactions on Geoscience and Remote Sensing, 58(5), 3437–3444.

Berger, K., Machwitz, M., Kycko, M., Kefauver, S. C., Van Wittenberghe, S., Gerhards, M., & Schlerf, M. (2022). Multi-sensor spectral synergies for crop stress detection and monitoring in the optical domain: A review. Remote Sensing of Environment, 280, 113198. https://doi.org/10.1016/j.rse.2022.113198

Bevacqua, E., De Michele, C., Manning, C., Couasnon, A., Ribeiro, A. F. S., Ramos, A. M., & Zscheischler, J. (2021). Guidelines for studying diverse types of compound weather and climate events. Earth’s Future, 9(11), e2021EF002340. https://doi.org/10.1029/2021EF002340

Boote, K. J., Jones, J. W., & Hoogenboom, G. (2018). Simulation of crop growth: CROPGRO model. CRC Press.

Burkart, A., Schickling, A., Mateo, M. P. C., Wrobel, T. J., Rossini, M., Cogliati, S., & Rascher, U. (2015). A method for uncertainty assessment of passive sun-induced chlorophyll fluorescence retrieval using an infrared reference light. IEEE Sensors Journal, 15(8), 4603–4611. https://doi.org/10.1109/JSEN.2015.2422894

COST Action SENSECO CA17134 (n.d.). Optical synergies for spatiotemporal sensing of scalable ecophysiological traits (SENSECO), European cooperation in science and technology (COST) action. Retrieved July 21, 2019 from https://www.senseco.eu/

Camino, C., Gonzalez-Dugo, V., Hernandez, P., & Zarco-Tejada, P. J. (2019). Radiative transfer Vcmax estimation from hyperspectral imagery and SIF retrievals to assess photosynthetic performance in rainfed and irrigated plant phenotyping trials. Remote Sensing of Environment, 231, 111186. https://doi.org/10.1016/j.rse.2019.05.005

Chakhvashvili, E., Siegmann, B., Muller, O., Verrelst, J., Bendig, J., Kraska, T., & Rascher, U. (2022). Retrieval of crop variables from proximal multispectral UAV image data using PROSAIL in maize canopy. Remote Sensing, 14, 1247. https://doi.org/10.3390/rs14051247

Chakhvashvili, E., Siegmann, B., Bendig, J., & Rascher, U. (2021). Comparison of reflectance calibration workflows for a UAV-mounted multi-camera array system. In 2021 IEEE international geoscience and remote sensing symposium, IGARSS (pp. 8225–8228).

Chang, C. Y., Zhou, R., Kira, O., Marri, S., Skovira, J., Gu, L., & Sun, Y. (2020). An unmanned aerial system (UAS) for concurrent measurements of solar-induced chlorophyll fluorescence and hyperspectral reflectance toward improving crop monitoring. Agricultural and Forest Meteorology, 294, 108145. https://doi.org/10.1016/j.agrformet.2020.108145

Cogliati, S., Rossini, M., Julitta, T., Meroni, M., Schickling, A., Burkart, A., & Colombo, R. (2015). Continuous and long-term measurements of reflectance and sun-induced chlorophyll fluorescence by using novel automated field spectroscopy systems. Remote Sensing of Environment, 164, 270–281. https://doi.org/10.1016/j.rse.2015.03.027

Cunliffe, A., & Anderson, K. (2019). Measuring above-ground biomass with drone photogrammetry: data collection protocol.

Damm, A., Cogliati, S., Colombo, R., Fritsche, L., Genangeli, A., Genesio, L., & Miglietta, F. (2022). Response times of remote sensing measured sun-induced chlorophyll fluorescence, surface temperature and vegetation indices to evolving soil water limitation in a crop canopy. Remote Sensing of Environment, 273, 112957. https://doi.org/10.1016/j.rse.2022.112957

Duan, S.-B., Li, Z.-L., Wu, H., Tang, B.-H., Ma, L., Zhao, E., & Li, C. (2014). Inversion of the PROSAIL model to estimate leaf area index of maize, potato, and sunflower fields from unmanned aerial vehicle hyperspectral data. International Journal of Applied Earth Observation and Geoinformation, 26, 12–20. https://doi.org/10.1016/j.jag.2013.05.007

Dutta, D., Schimel, D. S., Sun, Y., van der Tol, C., & Frankenberg, C. (2019). Optimal inverse estimation of ecosystem parameters from observations of carbon and energy fluxes. Biogeosciences, 16(1), 77–103. https://doi.org/10.5194/bg-16-77-2019

Ekinzog, E. K., Schlerf, M., Kraft, M., Werner, F., Riedel, A., Rock, G., & Mallick, K. (2022). Revisiting crop water stress index based on potato field experiments in Northern Germany. Agricultural Water Management, 269, 107664. https://doi.org/10.1016/j.agwat.2022.107664

Eskandari, R., Mahdianpari, M., Mohammadimanesh, F., Salehi, B., Brisco, B., & Homayouni, S. (2020). Meta-analysis of unmanned aerial vehicle (UAV) imagery for agro-environmental monitoring using machine learning and statistical models. Remote Sensing, 12(21), 3511.

European Commission. (2018). Standardized protocol for UAV data acquisition. Retrieved September 1, 2023 from https://ec.europa.eu/research/participants/documents/downloadPublic?documentIds=080166e5bd3dfa4f&appId=PPGMS

Fahad, S., Bajwa, A. A., Nazir, U., Anjum, S. A., Farooq, A., Zohaib, A., & Huang, J. (2017). Crop production under drought and heat stress: Plant responses and management options. Frontiers in Plant Science. https://doi.org/10.3389/fpls.2017.01147

Fernandez-Gallego, J. A., Lootens, P., Borra-Serrano, I., Derycke, V., Haesaert, G., Roldán-Ruiz, I., & Kefauver, S. C. (2020). Automatic wheat ear counting using machine learning based on RGB UAV imagery. The Plant Journal, 103(4), 1603–1613.

Gao, B.-C. (1996). NDWI: A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sensing of Environment, 58(3), 257–266. https://doi.org/10.1016/S0034-4257(96)00067-3

Gastellu-Etchegorry, J.-P., Yin, T., Lauret, N., Cajgfinger, T., Gregoire, T., Grau, E., & Ristorcelli, T. (2015). Discrete anisotropic radiative transfer (DART 5) for modeling airborne and satellite spectroradiometer and LIDAR acquisitions of natural and urban landscapes. Remote Sensing, 7(2), 1667–1701. https://doi.org/10.3390/rs70201667

Gastellu-Etchegorry, J. P., Demarez, V., Pinel, V., & Zagolski, F. (1995). Modeling radiative transfer in heterogeneous 3-D vegetation canopies (Vol. 58). Elsevier.

Gerhards, M., Schlerf, M., Mallick, K., & Udelhoven, T. (2019). Challenges and future perspectives of multi-/hyperspectral thermal infrared remote sensing for crop water-stress detection: A review. Remote Sensing, 11(10), 1240. https://doi.org/10.3390/rs11101240

Gitelson, A. A., & Merzlyak, M. N. (1998). Remote sensing of chlorophyll concentration in higher plant leaves. Advances in Space Research, 22(5), 689–692. https://doi.org/10.1016/s0273-1177(97)01133-2

Gitelson, A. A., Keydan, G. P., & Merzlyak, M. N. (2006). Three-band model for noninvasive estimation of chlorophyll, carotenoids, and anthocyanin contents in higher plant leaves. Geophysical Research Letters. https://doi.org/10.1029/2006gl026457

Gräf, M., Immitzer, M., Hietz, P., & Stangl, R. (2021). Water-stressed plants do not cool: Leaf surface temperature of living wall plants under drought stress. Sustainability, 13(7), 3910. https://doi.org/10.3390/SU13073910

Guo, C., Zhang, L., Zhou, X., Zhu, Y., Cao, W., Qiu, X., & Tian, Y. (2018). Integrating remote sensing information with crop model to monitor wheat growth and yield based on simulation zone partitioning. Precision Agriculture, 19, 55–78.

Hakala, T., Markelin, L., Honkavaara, E., Scott, B., Theocharous, T., Nevalainen, O., & Fox, N. (2018). Direct reflectance measurements from drones: Sensor absolute radiometric calibration and system tests for forest reflectance characterization. Sensors, 18(5), 1417. https://doi.org/10.3390/s18051417

Hardisky, M. A., Daiber, F. C., Roman, C. T., & Klemas, V. (1984). Remote sensing of biomass and annual net aerial primary productivity of a salt marsh. Remote Sensing of Environment, 16(2), 91–106. https://doi.org/10.1016/0034-4257(84)90055-5

Heinemann, S., Siegmann, B., Thonfeld, F., Muro, J., Jedmowski, C., Kemna, A., Kraska, T., Muller, O., Schultz, J., Udelhoven, T., & Wilke, N. (2020). Land surface temperature retrieval for agricultural areas using a novel UAV platform equipped with a thermal infrared and multispectral sensor. Remote Sensing, 12(7), 1075.

Hoffmann, H., Jensen, R., Thomsen, A., Nieto, H., Rasmussen, J., & Friborg, T. (2016). Crop water stress maps for an entire growing season from visible and thermal UAV imagery. Biogeosciences, 13(24), 6545–6563. https://doi.org/10.5194/bg-13-6545-2016

Holzworth, D. P., Huth, N. I., deVoil, P. G., Zurcher, E. J., Herrmann, N. I., McLean, G., & Keating, B. A. (2014). APSIM—Evolution towards a new generation of agricultural systems simulation. Environmental Modelling & Software, 62, 327–350. https://doi.org/10.1016/j.envsoft.2014.07.009

Hruska, R., Mitchell, J., Anderson, M., & Glenn, N. F. (2012). Radiometric and geometric analysis of hyperspectral imagery acquired from an unmanned aerial vehicle. Remote Sensing, 4(9), 2736–2752. https://doi.org/10.3390/RS4092736

Huang, J., Gómez-Dans, J. L., Huang, H., Ma, H., Wu, Q., Lewis, P. E., Liang, S., Chen, Z., Xue, J.-H., Wu, Y., & Zhao, F. (2019). Assimilation of remote sensing into crop growth models: Current status and perspectives. Agricultural and Forest Meteorology, 276, 107609.

Huang, J., Ma, H., Sedano, F., Lewis, P., Liang, S., Wu, Q., & Zhu, D. (2019). Evaluation of regional estimates of winter wheat yield by assimilating three remotely sensed reflectance datasets into the coupled WOFOST-PROSAIL model. European Journal of Agronomy, 102, 1–13. https://doi.org/10.1016/j.eja.2018.10.008

Hueni, A., Damm, A., Kneubuehler, M., Schlapfer, D., & Schaepman, M. E. (2017). Field and airborne spectroscopy cross validation: Some considerations. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 10(3), 1117–1135. https://doi.org/10.1109/JSTARS.2016.2593984

Jackson, R. D., Idso, S., Reginato, R., & Pinter, P., Jr. (1981). Canopy temperature as a crop water stress indicator. Water Resources Research, 17(4), 1133–1138.

Jacquemoud, S., Verhoef, W., Baret, F., Bacour, C., Zarco-Tejada, P. J., Asner, G. P., & Ustin, S. L. (2009). PROSPECT + SAIL models: A review of use for vegetation characterization. Remote Sensing of Environment, 113, S56–S66. https://doi.org/10.1016/j.rse.2008.01.026

Jacquemoud, S. (2000). Comparison of four radiative transfer models to simulate plant canopies reflectance: Direct and inverse mode. Remote Sensing of Environment, 74(3), 471–481. https://doi.org/10.1016/s0034-4257(00)00139-5

Jay, S., Baret, F., Dutartre, D., Malatesta, G., Héno, S., Comar, A., & Maupas, F. (2019). Exploiting the centimeter resolution of UAV multispectral imagery to improve remote-sensing estimates of canopy structure and biochemistry in sugar beet crops. Remote Sensing of Environment, 231, 110898. https://doi.org/10.1016/j.rse.2018.09.011

Jenal, A., Bareth, G., Bolten, A., Kneer, C., Weber, I., & Bongartz, J. (2019). Development of a VNIR/SWIR multispectral imaging system for vegetation monitoring with unmanned aerial vehicles. Sensors, 19(24), 5507. https://doi.org/10.3390/s19245507

Jenal, A., Lussem, U., Bolten, A., Gnyp, M. L., Schellberg, J., Jasper, J., & Bareth, G. (2020). Investigating the potential of a newly developed UAV-based VNIR/SWIR imaging system for forage mass monitoring. PFG—Journal of Photogrammetry, Remote Sensing and Geoinformation Science, 88(6), 493–507. https://doi.org/10.1007/s41064-020-00128-7

Jenal, A., Hüging, H., Ahrends, H. E., Bolten, A., Bongartz, J., & Bareth, G. (2021). Investigating the potential of a newly developed UAV-mounted VNIR/SWIR imaging system for monitoring crop traits—A case study for winter wheat. Remote Sensing, 13(9), 1697. https://doi.org/10.3390/rs13091697

Jin, X., Kumar, L., Li, Z., Feng, H., Xu, X., Yang, G., & Wang, J. (2018). A review of data assimilation of remote sensing and crop models. European Journal of Agronomy, 92, 141–152.

Jones, J., Hoogenboom, G., Porter, C., Boote, K., Batchelor, W., Hunt, L., & Ritchie, J. (2003). The DSSAT cropping system model. European Journal of Agronomy, 18, 235–265. https://doi.org/10.1016/S1161-0301(02)00107-7

Kandylakis, Z., Falagas, A., Karakizi, C., & Karantzalos, K. (2020). Water stress estimation in vineyards from aerial SWIR and multispectral UAV data. Remote Sensing, 12(15), 2499. https://doi.org/10.3390/rs12152499

Kaplan, G., & Rozenstein, O. (2021). Spaceborne estimation of leaf area index in cotton, tomato, and wheat using Sentinel-2. Land, 10(5), 505. https://doi.org/10.3390/land10050505

Kelly, J., Kljun, N., Olsson, P. O., Mihai, L., Liljeblad, B., Weslien, P., & Eklundh, L. (2019). Challenges and best practices for deriving temperature data from an uncalibrated UAV thermal infrared camera. Remote Sensing, 11(5), 567. https://doi.org/10.3390/RS11050567

Lambin, E. F., & Ehrlich, D. (1996). The surface temperature-vegetation index space for land cover and land-cover change analysis. International Journal of Remote Sensing, 17(3), 463–487.

Lin, Q., Huang, H., Wang, J., Huang, K., & Liu, Y. (2019). Detection of pine shoot beetle (PSB) stress on pine forests at individual tree level using UAV-based hyperspectral imagery and LiDAR. Remote Sensing, 11(21), 2540. https://doi.org/10.3390/rs11212540

Lucieer, A., Malenovský, Z., Veness, T., & Wallace, L. (2014). HyperUAS: Imaging spectroscopy from a multirotor unmanned aircraft system. Journal of Field Robotics, 31(4), 571–590. https://doi.org/10.1002/rob.21508

Machwitz, M., Giustarini, L., Bossung, C., Frantz, D., Schlerf, M., Lilienthal, H., & Udelhoven, T. (2014). Enhanced biomass prediction by assimilating satellite data into a crop growth model. Environmental Modelling & Software, 62, 437–453. https://doi.org/10.1016/j.envsoft.2014.08.010

Maes, W. H., Huete, A. R., & Steppe, K. (2017). Optimizing the processing of UAV-based thermal imagery. Remote Sensing, 9(5), 476.

Malenovský, Z., Regaieg, O., Yin, T., Lauret, N., Guilleux, J., Chavanon, E., Duran, N., Janoutová, R., Delavois, A., Meynier, J., & Medjdoub, G. (2021). Discrete anisotropic radiative transfer modelling of solar-induced chlorophyll fluorescence: Structural impacts in geometrically explicit vegetation canopies. Remote Sensing of Environment, 263, 112564.

Masjedi, A., Zhao, J., Thompson, A. M., Yang, K.-W., Flatt, J. E., Crawford, M. M., & Chapman, S. (2018). Sorghum biomass prediction using UAV-based remote sensing data and crop model simulation (pp. 7719–7722). IEEE.

Messina, G., & Modica, G. (2020). Applications of UAV thermal imagery in precision agriculture: State of the art and future research outlook. Remote Sensing, 12(9), 1491. https://doi.org/10.3390/RS12091491

Mohammed, G. H., Colombo, R., Middleton, E. M., Rascher, U., van der Tol, C., Nedbal, L., & Zarco-Tejada, P. J. (2019). Remote sensing of solar-induced chlorophyll fluorescence (SIF) in vegetation: 50 years of progress. Remote Sensing of Environment, 231, 111177. https://doi.org/10.1016/j.rse.2019.04.030

Mokhtari, A., Ahmadi, A., Daccache, A., & Drechsler, K. (2021). Actual evapotranspiration from UAV images: A multi-sensor data fusion approach. Remote Sensing, 13(12), 2315. https://doi.org/10.3390/rs13122315

Moran, M., Clarke, T., Inoue, Y., & Vidal, A. (1994). Estimating crop water deficit using the relation between surface-air temperature and spectral vegetation index. Remote Sensing of Environment, 49(3), 246–263. https://doi.org/10.1016/0034-4257(94)90020-5

Nieto, H., Kustas, W. P., Torres-Rúa, A., Alfieri, J. G., Gao, F., Anderson, M. C., & McKee, L. G. (2019). Evaluation of TSEB turbulent fluxes using different methods for the retrieval of soil and canopy component temperatures from UAV thermal and multispectral imagery. Irrigation Science, 37(3), 389–406. https://doi.org/10.1007/s00271-018-0585-9

Norman, J., Kustas, W., & Humes, K. (1995). Source approach for estimating soil and vegetation energy fluxes in observations of directional radiometric surface temperature. Agricultural and Forest Meteorology, 77, 263–293. https://doi.org/10.1016/0168-1923(95)02265-Y

Olioso, A. (1995). Simulating the relationship between thermal emissivity and the normalized difference vegetation index. International Journal of Remote Sensing, 16(16), 3211–3216. https://doi.org/10.1080/01431169508954625

Olsson, P.-O., Vivekar, A., Adler, K., Garcia Millan, V. E., Koc, A., Alamrani, M., & Eklundh, L. (2021). Radiometric correction of multispectral UAS images: Evaluating the accuracy of the Parrot Sequoia camera and sunshine sensor. Remote Sensing, 13(4), 577.

Pacheco-Labrador, J., El-Madany, T. S., van der Tol, C., Martin, M. P., Gonzalez-Cascon, R., Perez-Priego, O., & Migliavacca, M. (2021). senSCOPE: Modeling mixed canopies combining green and brown senesced leaves. Evaluation in a Mediterranean grassland. Remote Sensing of Environment, 257, 112352. https://doi.org/10.1016/j.rse.2021.112352

Panigada, C., Rossini, M., Meroni, M., Cilia, C., Busetto, L., Amaducci, S., & Colombo, R. (2014). Fluorescence, PRI and canopy temperature for water stress detection in cereal crops. International Journal of Applied Earth Observation and Geoinformation, 30, 167–178. https://doi.org/10.1016/j.jag.2014.02.002

Poncet, A. M., Knappenberger, T., Brodbeck, C., Fogle, M., Shaw, J. N., & Ortiz, B. V. (2019). Multispectral UAS data accuracy for different radiometric calibration methods. Remote Sensing, 11(16), 1917. https://doi.org/10.3390/RS11161917

Prikaziuk, E., Migliavacca, M., Su, Z. B., & van der Tol, C. (2023). Simulation of ecosystem fluxes with the SCOPE model: Sensitivity to parametrization and evaluation with flux tower observations. Remote Sensing of Environment, 284, 113324. https://doi.org/10.1016/j.rse.2022.113324

Prikaziuk, E., & van der Tol, C. (2019). Global sensitivity analysis of the SCOPE model in Sentinel-3 bands: Thermal domain focus. Remote Sensing, 11(20), 2424. https://doi.org/10.3390/rs11202424

Raddi, S., Giannetti, F., Martini, S., Farinella, F., Chirici, G., Tani, A., & Mariotti, B. (2022). Monitoring drought response and chlorophyll content in Quercus by consumer-grade, near-infrared (NIR) camera: A comparison with reflectance spectroscopy. New Forests, 53, 241–265. https://doi.org/10.1007/S11056-021-09848-Z

Rascher, U., Baum, S., Siegmann, B., Buffat, J., Burkart, A., Cogliati, S., & Quiros, J. (2022). FlexSense: Technical assistance for airborne measurement during the FLEX Sentinel tandem experiment.

Roosjen, P. P., Brede, B., Suomalainen, J. M., Bartholomeus, H. M., Kooistra, L., & Clevers, J. G. (2018). Improved estimation of leaf area index and leaf chlorophyll content of a potato crop using multi-angle spectral data—Potential of unmanned aerial vehicle imagery. International Journal of Applied Earth Observation and Geoinformation, 66, 14–26. https://doi.org/10.1016/j.jag.2017.10.012

Schlerf, M., & Atzberger, C. (2006). Inversion of a forest reflectance model to estimate structural canopy variables from hyperspectral remote sensing data. Remote Sensing of Environment, 100, 281–294. https://doi.org/10.1016/j.rse.2005.10.006

Schläpfer, D., Richter, R., Popp, C., & Nygren, P. (2022). DROACOR®-thermal: Automated temperature/emissivity retrieval for drone based hyperspectral imaging data. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 43, 429–434.

Siegmann, B., Cendrero-Mateo, M. P., Cogliati, S., Damm, A., Gamon, J., Herrera, D., & Rascher, U. (2021). Downscaling of far-red solar-induced chlorophyll fluorescence of different crops from canopy to leaf level using a diurnal data set acquired by the airborne imaging spectrometer HyPlant. Remote Sensing of Environment, 264, 112609. https://doi.org/10.1016/j.rse.2021.112609

Singh, P., Srivastava, P. K., Verrelst, J., Mall, R., Rivera, J. P., Dugesar, V., & Prasad, R. (2023). High resolution retrieval of leaf chlorophyll content over Himalayan pine forest using visible/IR sensors mounted on UAV and radiative transfer model. Ecological Informatics, 75, 102099. https://doi.org/10.1016/j.ecoinf.2023.102099