Abstract

Volunteer potato is an increasing problem in crop rotations where winter temperatures are often not cold enough to kill tubers left over from harvest. Poor control, resulting from high labor demands, allows diseases such as Phytophthora infestans to spread to neighboring fields. Therefore, automatic detection and removal of volunteer plants is required. In this research, an adaptive Bayesian classification method was developed for classifying volunteer potato plants within a sugar beet crop. The classification accuracy of the plants was determined using ground truth images. In the non-adaptive scheme, the classification accuracy was 84.6 and 34.9% for the constant and changing natural light conditions, respectively. In the adaptive scheme, the classification accuracy increased to 89.8 and 67.7% for the constant and changing natural light conditions, respectively. Crop row information was successfully used to train the adaptive classifier, without having to choose training data in advance.


Introduction

Volunteer potato is a serious weed in many potato-growing regions where winter temperatures are often not cold enough to kill tubers left in the ground after harvest (Lutman and Cussans 1977; Boydston 2001). Plants sprouting from overwintered tubers are difficult to control in sugar beet, for which no selective herbicides are available (Cleal 1993). As a result of poor control, volunteer potatoes affect the other crops in the rotation: they harbor diseases like Phytophthora infestans, as well as insect and nematode pests of potato, negating the benefits of crop rotation (Turkensteen et al. 2000). Volunteer potatoes therefore have to be removed to increase the benefit of crop rotation. Applying glyphosate to volunteer potato plants is the most effective control method, but it is very labour intensive (Paauw and Molendijk 2000) and therefore has to be automated. Because glyphosate is a non-specific systemic herbicide, precise application is required to prevent crop damage (Giles et al. 2004): the herbicide must reach the volunteer potato plants and not the sugar beets. Automating the application of glyphosate to volunteer potato plants is the objective of the current research. Essentially, an automated volunteer potato removal device consists of a system that detects unwanted plants and a system that removes or kills them. A system analysis revealed that the vision system for such a precise control system should satisfy the following design criteria: (1) square centimeter precision within the sugar beet row seed line, to ensure that only volunteer potato is targeted, (2) robustness to daylight and weather variability, (3) robustness to within-field and between-field variability of crop and volunteer potato plant features such as growth stage, occlusion and colour, and (4) online training, without the use of offline training data.

Weed detection systems have evolved from large-scale remote sensing techniques to high-resolution machine vision detection systems (Thorp and Tian 2004). Nevertheless, machine vision systems for precise weed control at the square centimeter level have hardly been researched; exceptions include a tomato seedling weed detection and removal application by Lee et al. (1999) and sugar beet and weed detection by Astrand (2005). Nieuwenhuizen et al. (2005) showed that colour-based detection of volunteer potatoes is feasible to some extent, but problems occurred with occluded plants, square centimeter precision could not be attained, and variations between fields and in daylight could not be handled. Marchant and Onyango (2001) presented methods for daylight-invariant classification of vegetation against the soil background, but they did not investigate crop and weed classification with their invariant image maps. For crop and weed classification, Astrand (2005) developed an Integrated Plant Classifier (IPC) that combined a priori geometric planting data with a posteriori plant features. This combination of a priori and a posteriori information improved the classification of sugar beet seedlings and weeds in the field. Tillett et al. (2002) gave a promising example of how a priori geometric cropping information, such as row position, can be gathered in the field. All algorithms proposed for machine vision detection of weeds and crops derive a decision function from features of the reflectance of the vegetation. These features are generally grouped into colour, shape and texture. El Faki et al. (2000) and Woebbecke et al. (1995b) applied colour features for weed detection. Shape features were applied by, for example, Guyer et al. (1986) and Woebbecke et al. (1995a). Woebbecke et al. (1995a) described problems with shape features, such as occluded leaves, which could be related to the growth stage of the measured crops. Burks et al. (2000) used texture features for crop and weed detection. To derive classification functions from the features, most methods described in the literature need separate labelled training datasets for each class. However, such training data are not always available. Moreover, training images do not always give consistent classification results (Nieuwenhuizen et al. 2007): the results depend on the actual properties of the weeds and crops in the training images, which are not known beforehand and probably do not sufficiently represent the crop and weed population within the field.

The objective of this research was to extend weed detection methods to meet the design criteria mentioned above: (1) use row recognition information to train the classifier automatically, (2) include more features to increase correct classification rates, and (3) classify vegetation adaptively at square centimeter precision to handle variability and occlusion of plants.

Materials and methods

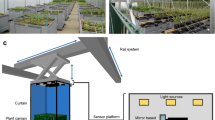

In spring 2006, data were gathered with a color camera for row recognition (VGA resolution, 640 × 480 pixels) and a color camera for crop recognition (SXGA resolution, 1392 × 1040 pixels). The row recognition camera was mounted at a 45° angle looking forward and fitted with a 4.8 mm focal length lens, giving a field of view containing three or more crop rows, as shown in Fig. 1. Automatic shutter time and white balance, based on the complete imaged scene, were enabled for the row recognition camera.

The crop recognition camera was mounted perpendicular to the soil surface. A 6 mm focal length lens gave a field of view of 1.0 m length and 0.7 m width containing one sugar beet row. The camera angles and fields of view were calibrated before the field experiments started. Shutter time and white balance of the crop recognition camera were adjusted automatically in the camera hardware, based on a grey reference board with 50% reflectance (Fotowand, Sudwalde, Germany) mounted in the camera's field of view; the adjustment was made after each image was captured. An optical wheel encoder triggered both cameras every 500 mm, so consecutive crop recognition images overlapped by 50%. The images were recorded at walking speed, between 0.5 and 1.0 m s−1. Images were recorded online and analyzed offline.
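The acquisition geometry above fixes both the image overlap and the image rate. The short Python sketch below reproduces that arithmetic from the stated values (1.0 m field of view along the row, one trigger every 500 mm); the function names are illustrative only.

```python
# Values stated in the text.
FOV_LENGTH_M = 1.0        # crop recognition field of view along the row
TRIGGER_SPACING_M = 0.5   # encoder triggers one image every 500 mm


def image_overlap(fov_length_m: float, spacing_m: float) -> float:
    """Fraction of each image that is also covered by the next image."""
    return max(0.0, 1.0 - spacing_m / fov_length_m)


def frame_rate_hz(speed_m_s: float, spacing_m: float) -> float:
    """Images per second when images are triggered by distance travelled."""
    return speed_m_s / spacing_m


overlap = image_overlap(FOV_LENGTH_M, TRIGGER_SPACING_M)
for speed in (0.5, 1.0):
    print(f"{speed} m/s -> {frame_rate_hz(speed, TRIGGER_SPACING_M):.1f} images/s")
print(f"overlap = {overlap:.0%}")
```

At the upper walking speed of 1.0 m s−1 this gives two images per second, and therefore at most two shutter-time corrections per second for the crop recognition camera.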

The classification procedure of the images was:

For image = 1 to N

{

1. Determine crop row position in row recognition image
2. Create vegetation grid cells of 100 mm² in crop recognition image
3. Determine crop row width
4. Determine feature values for each vegetation grid cell
5. Update a priori training data for classification
6. Normalize the feature values
7. Classify each grid cell and show decision

}

Vegetation was separated from the soil background in both the row and crop recognition images using the excessive green transformation defined by Woebbecke et al. (1995b): \( EG = 2G - R - B \). In the crop recognition images, the threshold on the excessive green values was set at 10 based on inspection of histograms of the data. Images from the row recognition camera gave information on the crop row position using an algorithm based on Tillett et al. (2002). Our row recognition algorithm used a template of three Gaussian bell-shaped curves that was convolved with the actual crop row image. The row position from the convolution procedure was used as a priori information to determine which plants grow within and which grow between the crop rows in the crop recognition image. After the convolution procedure, the calculated crop row position was overlaid on the corresponding crop recognition image, and the width of the sugar beet crop row was determined with a histogram approach. Each histogram bin holds the number of vegetation pixels counted along the driving direction at one position across the row in the current image, which results in a peak at the position of the sugar beet plants. The width of this peak represents the width of the sugar beet crop row. Subsequently, the crop recognition image was split into grid cells that represented about 100 mm² at the soil surface. If a grid cell contained vegetation, based on the excessive green value, six features were determined for that grid cell: (1) distance to the crop row, (2) mean red value, (3) mean green value, (4) mean EG value, (5) mean red–blue (RB) value, and (6) texture in terms of the length of edge segments. The distance to the crop row is the perpendicular distance, in mm, from the grid cell to the detected crop row position. The mean red and mean green values were determined from histograms of the grid cell. The mean EG and mean RB values were calculated with the EGRBI transformation matrix (Eq. 2).
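The vegetation segmentation, row width histogram and grid cell steps can be sketched in a few lines of Python with NumPy. This is a minimal sketch, not the authors' implementation: it assumes that the crop row runs vertically in the crop recognition image (so image columns lie across the row and summing over rows counts pixels along the driving direction), it takes the row width as the run of histogram columns at or above half the peak height (a hypothetical criterion), and the cell size in pixels is a free parameter. The three-Gaussian row template is not reproduced.

```python
import numpy as np

EG_THRESHOLD = 10  # EG threshold used in the text for crop recognition images


def excess_green(rgb: np.ndarray) -> np.ndarray:
    """EG = 2G - R - B for every pixel of an (H, W, 3) RGB image."""
    rgb = rgb.astype(np.int32)  # avoid uint8 wrap-around in the subtraction
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 2 * g - r - b


def vegetation_mask(rgb: np.ndarray, threshold: int = EG_THRESHOLD) -> np.ndarray:
    """Boolean mask that is True where EG exceeds the threshold."""
    return excess_green(rgb) > threshold


def row_width_px(mask: np.ndarray, rel_height: float = 0.5) -> int:
    """Width, in pixel columns, of the crop row peak in the column histogram.

    Summing over axis 0 counts vegetation pixels along the driving direction
    for each column. The width is the contiguous run of columns around the
    peak whose count stays at or above rel_height * peak (hypothetical rule).
    """
    hist = mask.sum(axis=0)
    peak = int(np.argmax(hist))
    level = rel_height * hist[peak]
    left = right = peak
    while left > 0 and hist[left - 1] >= level:
        left -= 1
    while right < len(hist) - 1 and hist[right + 1] >= level:
        right += 1
    return right - left + 1


def grid_cells(mask: np.ndarray, cell_px: int):
    """Yield (cell_row, cell_col, cell_mask) for square cells containing vegetation."""
    h, w = mask.shape
    for i in range(0, h - cell_px + 1, cell_px):
        for j in range(0, w - cell_px + 1, cell_px):
            cell = mask[i:i + cell_px, j:j + cell_px]
            if cell.any():
                yield i // cell_px, j // cell_px, cell
```

In practice `cell_px` would be chosen so that one cell covers roughly 10 mm × 10 mm at the soil surface, and the width in pixels would be converted to mm with the calibrated image scale.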

According to Steward and Tian (1998), the EGRBI transformation is a rotation of the RGB co-ordinate system such that the resulting I co-ordinate is collinear with the intensity axis. The EG and RB co-ordinates span a color plane in which one direction represents greenness and the perpendicular direction ranges from blue to red.
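The matrix of Eq. 2 is not reproduced in this text. A rotation with the properties just described (orthonormal, I along the grey axis R = G = B, EG pointing towards green, RB running from blue to red) can be written as below. The unit-length scaling of each axis is an assumption and may differ from the published matrix; with this scaling, the EG co-ordinate equals the excessive green value 2G − R − B divided by √6.

```python
import numpy as np

# Assumed orthonormal EGRBI rotation: each row is one unit axis in RGB space.
EGRBI = np.array([
    [-1 / np.sqrt(6), 2 / np.sqrt(6), -1 / np.sqrt(6)],  # EG: greenness
    [1 / np.sqrt(2), 0.0, -1 / np.sqrt(2)],              # RB: blue (-) to red (+)
    [1 / np.sqrt(3), 1 / np.sqrt(3), 1 / np.sqrt(3)],    # I: along the grey axis
])


def to_egrbi(rgb) -> np.ndarray:
    """Map RGB values of shape (..., 3) to (EG, RB, I) values of shape (..., 3)."""
    return np.asarray(rgb, dtype=float) @ EGRBI.T
```

Because the matrix is a rotation, its inverse is its transpose, and grey pixels map to EG = RB = 0 with all their brightness in I.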

The texture measure was calculated as the length of the edge segments within a grid cell, after applying a Canny edge detection algorithm (Canny 1986; National-Instruments 2005). The first feature, the distance to the crop row (a), was used for context-adaptive training of the classifier. The other five features were used for the multivariate Bayesian classification. Two classes were trained: volunteer potato and sugar beet.
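The per-cell feature vector can be sketched as follows. The text used NI Vision's Canny detector and the length of edge segments; to keep this example free of image-processing dependencies, it swaps in a simpler texture proxy, the number of pixels whose gradient magnitude exceeds a threshold. That substitution, the threshold of 20, the optional vegetation mask and the unit-length EG and RB axes, (2G − R − B)/√6 and (R − B)/√2, are all assumptions.

```python
import numpy as np


def edge_pixel_count(gray: np.ndarray, threshold: float) -> int:
    """Texture proxy: count pixels whose gradient magnitude exceeds threshold.

    Stand-in for the Canny edge segment length used in the text.
    """
    gy, gx = np.gradient(gray.astype(float))  # derivatives along rows, columns
    return int(np.count_nonzero(np.hypot(gx, gy) > threshold))


def cell_features(cell_rgb, distance_mm, mask=None, edge_threshold=20.0):
    """Feature vector (a, mean R, mean G, mean EG, mean RB, texture) for one cell.

    cell_rgb: (h, w, 3) RGB values of the grid cell.
    distance_mm: perpendicular distance a from the cell to the detected row.
    mask: optional (h, w) boolean vegetation mask; when given, the colour means
          use only those pixels (an assumption), otherwise all pixels.
    """
    cell_rgb = np.asarray(cell_rgb)
    pixels = cell_rgb[mask] if mask is not None else cell_rgb.reshape(-1, 3)
    pixels = pixels.astype(float)
    r, g, b = pixels[:, 0], pixels[:, 1], pixels[:, 2]
    eg = (2 * g - r - b) / np.sqrt(6)  # assumed unit-length EG axis
    rb = (r - b) / np.sqrt(2)          # assumed unit-length RB axis (blue -, red +)
    texture = edge_pixel_count(cell_rgb.astype(float).mean(axis=2), edge_threshold)
    return np.array([distance_mm, r.mean(), g.mean(), eg.mean(), rb.mean(), texture])
```

The first entry, a, is used only to select training candidates; the remaining five entries are the inputs of the Bayes classifier.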

For both the sugar beet class and the volunteer potato class, training feature vectors were stored in a buffer of 100 grid cells. Training candidate grid cells were determined with the following function (Eq. 3), where σ is the variance of the crop row width and a is the distance to the center of the crop row:

Two first-in-first-out (fifo) buffers were used: one for the sugar beet training data and one for the volunteer potato training data. With a fifo buffer, the oldest information is pushed out when newer vegetation training data become available. The buffers were implemented as follows:

where B is a buffer of 100 grid cell feature vectors, i is the position in buffer B, and m is the number of grid cell feature vectors added to buffer B. When both buffers were filled with training data of 100 grid cells, the a priori training data were available. The feature values were then normalized by subtracting the mean and dividing by the standard deviation. Subsequently, covariance matrices and mean vectors were calculated from the buffers as a priori data for classification. A multivariate Bayesian statistical classifier was used as described in Gonzalez and Woods (1992) (Eq. 4):

\[ d_{j}(x) = \ln P\left( \omega_{j} \right) - \frac{1}{2} \ln \left| C_{j} \right| - \frac{1}{2} \left[ \left( x - m_{j} \right)^{T} C_{j}^{-1} \left( x - m_{j} \right) \right] \]

where \( d_{j}(x) \) is the value of the decision function for class j, \( P\left( \omega_{j} \right) \) is the prior probability of class j, \( C_{j} \) is the covariance matrix for class j, x is the feature vector, \( m_{j} \) is the mean feature vector for class j, and j is 1 (volunteer potato and weeds) or 2 (sugar beet).
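Eq. 4 and the normalization step translate directly into NumPy. In this sketch the normalization statistics are pooled over both training buffers, the classes are indexed from 0 (for example 0 = volunteer potato, 1 = sugar beet), and the priors are passed in by the caller; these choices are assumptions, since the text does not state how the means, standard deviations and \( P(\omega_{j}) \) were obtained.

```python
import numpy as np


def fit_class(train: np.ndarray):
    """Mean vector m_j and covariance matrix C_j from an (n, d) training array."""
    return train.mean(axis=0), np.cov(train, rowvar=False)


def decision(x, mean, cov, prior):
    """Eq. 4: ln P(w_j) - 0.5 ln|C_j| - 0.5 (x - m_j)^T C_j^-1 (x - m_j).

    x may be a single (d,) feature vector or an (n, d) array of them.
    """
    diff = np.asarray(x, dtype=float) - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("...i,ij,...j->...", diff, np.linalg.inv(cov), diff)
    return np.log(prior) - 0.5 * logdet - 0.5 * maha


def classify(x, buffers, priors):
    """Return the index j of the class with the highest d_j(x).

    buffers: list of (n_j, d) training arrays, e.g. [volunteer potato, sugar beet].
    Features are z-score normalized with statistics pooled over all buffers.
    """
    pooled = np.vstack(buffers)
    mu, sd = pooled.mean(axis=0), pooled.std(axis=0)
    z = (np.asarray(x, dtype=float) - mu) / sd
    scores = []
    for buf, prior in zip(buffers, priors):
        m, c = fit_class((buf - mu) / sd)
        scores.append(decision(z, m, c, prior))
    return np.argmax(np.stack(scores, axis=-1), axis=-1)
```

Because each class uses its full covariance matrix and both classes are normalized with the same statistics, the normalization mainly improves numerical conditioning; it does not change which class has the highest \( d_{j}(x) \).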

Then, the values of the \( d_{j}(x) \) functions were determined for all grid cells in the image, and each grid cell was assigned to the class with the highest value of \( d_{j}(x) \). Finally, the images with classified grid cells were filtered with a low-pass filter of 4 cm² to remove small objects, as these are too small to be sprayed in our research project.
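The text does not specify the low-pass filter beyond its 4 cm² size. As a stand-in, this sketch applies a morphological opening with a 2 × 2 structuring element to the grid of cells classified as volunteer potato: with 100 mm² cells, every classified cell that is not part of a fully classified 2 × 2 block (4 cm²) is removed. This is a different operation from the authors' low-pass filter and only illustrates the idea of discarding objects too small to spray.

```python
import numpy as np


def open_2x2(mask) -> np.ndarray:
    """Morphological opening of a boolean cell grid with a 2 x 2 element.

    A cell survives only if it lies inside at least one 2 x 2 window
    whose four cells are all set.
    """
    m = np.asarray(mask, dtype=bool)
    h, w = m.shape
    # Erosion: window anchored at (i, j) covers cells (i..i+1, j..j+1).
    er = np.zeros((h, w), dtype=bool)
    er[:-1, :-1] = m[:-1, :-1] & m[1:, :-1] & m[:-1, 1:] & m[1:, 1:]
    # Dilation: mark every cell covered by a fully set window.
    out = er.copy()
    out[1:, :] |= er[:-1, :]     # window anchored one row up
    out[:, 1:] |= er[:, :-1]     # window anchored one column left
    out[1:, 1:] |= er[:-1, :-1]  # window anchored up and left
    return out
```

An isolated misclassified cell or a thin line of cells disappears, while a volunteer potato region of at least 2 × 2 cells is kept.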

We applied the Bayes classification in both an adaptive and a non-adaptive scheme. The non-adaptive classifier was trained only at the start of the field, until 100 vegetation grid cells were available for both the sugar beet and the volunteer potato class. Training was then stopped, and the rest of the field was classified with the information gathered from these 100 vegetation grid cells. In the non-adaptive case, the crop row width was kept at the mean crop row width recorded until the 100 grid cells of training data were available.

In the adaptive classification, the training data were continuously updated according to Eq. 3 in the fifo buffers of 100 grid cells of sugar beet and volunteer potato while traveling through the field. In both classification schemes, the calculated crop row position was always taken into account, as this also compensated for operator or driver inaccuracy between the measurement days.
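The fifo training buffers and the difference between the two schemes can be sketched with Python's `collections.deque`, whose `maxlen` argument discards the oldest entry when a new one is appended. Eq. 3 is not reproduced in this text, so `candidate_class` below is a hypothetical rule: cells within half the detected row width of the row center become sugar beet candidates, cells beyond an extra margin become volunteer potato candidates, and cells in between are ignored. The margin parameter and all names are illustrative.

```python
from collections import deque

import numpy as np

BUFFER_SIZE = 100  # grid cells per class, as stated in the text


def candidate_class(a_mm, half_width_mm, margin_mm):
    """Hypothetical stand-in for Eq. 3, based on the distance a to the row center.

    Returns "SB" (sugar beet), "VP" (volunteer potato) or None (not used).
    """
    if abs(a_mm) <= half_width_mm:
        return "SB"  # inside the detected crop row
    if abs(a_mm) >= half_width_mm + margin_mm:
        return "VP"  # clearly between the rows
    return None      # ambiguous band next to the row: skipped for training


class TrainingBuffers:
    """Two fifo buffers of per-cell feature vectors, one per class."""

    def __init__(self, adaptive=True, size=BUFFER_SIZE):
        self.adaptive = adaptive
        self.buffers = {"SB": deque(maxlen=size), "VP": deque(maxlen=size)}
        self.frozen = False

    def ready(self):
        """True once both buffers hold `size` feature vectors."""
        return all(len(b) == b.maxlen for b in self.buffers.values())

    def update(self, cells, half_width_mm, margin_mm):
        """cells: iterable of feature vectors whose first entry is a (mm)."""
        if self.frozen:
            return  # non-adaptive scheme: training has stopped
        for features in cells:
            label = candidate_class(features[0], half_width_mm, margin_mm)
            if label is not None:
                # Keep only the five classification features; deque drops the oldest.
                self.buffers[label].append(np.asarray(features[1:], dtype=float))
        if not self.adaptive and self.ready():
            self.frozen = True

    def as_arrays(self):
        """(volunteer potato, sugar beet) training arrays of shape (n, 5)."""
        return (np.vstack(list(self.buffers["VP"])),
                np.vstack(list(self.buffers["SB"])))
```

In the non-adaptive scheme the buffers are frozen as soon as both are full; in the adaptive scheme they keep rolling, so the class statistics always describe the most recent cells of each class along the row.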

Classification results were obtained on two measurement days covering the same 50 m crop row section. On 18 May 2006 (Day 1), the measurements were done under an overcast sky with constant natural lighting conditions. On 24 May 2006 (Day 2), the measurements were done under changing natural lighting conditions, with sunlit and overcast periods.

Ground truth images were created manually for the crop recognition images recorded on the two measurement days. One by one, grid cells were manually identified as volunteer potato or sugar beet. The plants were also separated from each other by hand, so that the number of sugar beet and volunteer potato plants in the images could be counted. The creation of ground truth images is a crucial step in the evaluation of machine vision algorithms (Thacker et al. 2008); it is, however, not part of the final implementation of an algorithm in a practical situation. Using the ground truth images, we first evaluated the validity of the features chosen for discrimination. This was done with a linear discriminant analysis (SAS 1999), as this analysis shows the contribution of the individual features to the discrimination between the two classes. Second, confusion tables and the classification accuracy of our algorithm are reported for the adaptive and non-adaptive classification schemes, and the causes of the differences in performance are discussed.

Results

Image quality

The quality of the row and crop recognition images was good, in the sense that a constant threshold value of 10 on the excessive green value could be used to separate vegetation from the soil background. For the crop recognition camera, the image intensity at the grey reference board was measured; it is displayed in Fig. 2. The camera hardware was programmed to keep the intensity of the grey reference in the field of view constant. Nevertheless, the camera could not always correct immediately for quick changes in lighting intensity, as shown by the peaks in the reference intensity on day 2. Natural light conditions sometimes changed faster than the rate at which the camera grabbed images and could correct for them. This was probably due to the hardware trigger used to grab images: the image recording rate was tied to the travel velocity, a walking speed close to 1.0 m s−1. Approximately two images per second were recorded, so the camera hardware algorithm could change its shutter time only twice per second to compensate for changing light conditions. On day 1, the natural lighting conditions were constant, and the camera was able to keep a constant intensity of 128 at the reference board.

Crop row detection and crop row width

Once vegetation had been thresholded from the soil background, the crop row position and the crop row width were determined. The detected crop row position corresponded well to the actual crop row position, based on visual assessment, as shown in Fig. 3. The crop row was not always exactly in the middle of the images, due to operator driving inaccuracy during the measurements, but it was found at the correct position relative to the real crop rows. More important for the training stage of the classifier was the crop row width, shown in Fig. 4. The crop row width was larger on day 2, because the plants were 1 week older and therefore larger. Hence the inter-row area available for obtaining volunteer potato training data decreased with increasing growth stage. Considerable variation in crop row width was also observed within a single measurement day: on day 1, the crop row width varied between 70 and 160 mm, and on day 2 between 80 and 190 mm. The crop row width therefore changed by up to 90 mm on day 1 and up to 110 mm on day 2 while the volunteer plants had to be detected. Adapting the crop row width used in Eq. 3 to the actual crop row width maximized the inter-row area available for obtaining training data for volunteer plants.

Feature quality/linear discriminant analysis

The discriminative power of the features was assessed with a discriminant analysis, which was possible because ground truth images of the sugar beet and volunteer potato grid cells were available. The features selected by the linear discriminant analysis are listed in Table 1, in order of the variance they explained. Of the features red, green, EG, RB and texture, only EG, green and RB were selected as discriminative for day 1. For the images of day 2, EG, green, texture and RB were selected. When the natural light conditions were constant, texture was not needed as a feature. In both situations, the red feature was not selected by the stepwise selection method, as it did not reach the F value of 3.84 required to enter the discriminant analysis.

The feature mean values and variances for the experiments are given in Table 2. The variances of the feature values were larger on the second measurement day, when the natural lighting conditions were more variable. The variance of the reference board intensity, which was the constant factor between experiments, increased from 1.97 to 147.26 between the two measurement days, which shows that the camera could not maintain the desired intensity value of the grey reference board.

The color and texture features of the plants showed an increased variance on the second measurement day, with changing natural light conditions. On the first measurement day, when natural light conditions were constant, the variances of the volunteer potato plants were larger than those of the sugar beet plants.

Fig. 5 shows the EG feature value in more detail. EG explained the largest amount of variance and was therefore chosen to demonstrate how the feature values evolved over a travel distance of 50 m through the field. On the first measurement day, under constant natural light conditions, the EG feature value varied considerably. As the natural light conditions were constant, this indicates an intrinsic color change of the plants within the field.

On day 2, when the natural light varied during the experiment, the EG feature value shown in Fig. 6 changed in the same way as the reference intensity in Fig. 2, with a peak at 50 m travel distance. Changes in the EG feature value on day 2 can therefore not be attributed completely to intrinsic changes in crop color, as the recording conditions were not constant. Because the image intensity was not constant on day 2, the EG feature values of the two plant classes were not separated but overlapped along the travel distance through the field, as shown in Fig. 6.

Classification accuracy

The classification results were judged against the ground truth images and the percentages of classified plants are shown in Table 3.

Classification accuracy is the percentage of correctly classified sugar beet and volunteer potato plants combined. For the data of day 1, the static, non-adaptive Bayes classification gave a classification accuracy of 84.6%, whereas the adaptive Bayes classification gave 89.8%. On day 1, the SB misclassification (sugar beet plants classified as volunteer potato) was reduced from 20.2 to 13.6% by the adaptive classification scheme. The data from day 2 gave 34.9% classification accuracy for the non-adaptive Bayes classification, and the adaptive Bayes classification had an accuracy of 69.7%. On day 2, the SB misclassification was reduced from 85.4 to 36.7% by the adaptive classification scheme. On both measurement days the adaptive algorithm had a better classification accuracy, indicating that adapting to local plant color and texture features increased classifier performance.
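The two performance measures used here can be written out explicitly. In this sketch, classification accuracy is the share of all plants (sugar beet and volunteer potato) whose predicted class equals the ground truth class, and SB misclassification is the share of sugar beet plants classified as volunteer potato. The counts in the example are invented for illustration and are not the data of Table 3.

```python
def accuracy(confusion):
    """Percentage of all plants whose predicted class equals the true class.

    confusion[true][pred] holds plant counts for the labels "SB" and "VP".
    """
    correct = sum(confusion[c][c] for c in confusion)
    total = sum(sum(row.values()) for row in confusion.values())
    return 100.0 * correct / total


def sb_misclassification(confusion):
    """Percentage of sugar beet plants classified as volunteer potato."""
    row = confusion["SB"]
    return 100.0 * row["VP"] / sum(row.values())


# Invented counts, for illustration only (not the data of Table 3).
example = {"SB": {"SB": 80, "VP": 20}, "VP": {"SB": 5, "VP": 15}}
print(f"accuracy = {accuracy(example):.1f}%")  # 95 of 120 plants correct
print(f"SB misclassification = {sb_misclassification(example):.1f}%")  # 20 of 100
```

Keeping the SB misclassification low matters for spraying, because every sugar beet classified as volunteer potato would receive herbicide.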

An example of a classified volunteer potato plant within a sugar beet crop row is given in Fig. 7. The classification result in Fig. 7b shows that classification with centimeter precision was feasible within the crop row; red represents a volunteer potato plant and green represents a sugar beet plant.

Discussion

Image quality and vegetation separation

Apparently our triggered acquisition frame rate of 2 Hz was too low for the camera to adjust its shutter time to changing natural light conditions in the field, as shown by the changing grey reference board value in Fig. 2. Tillett and Hague (1999) reported an acquisition frame rate of 25 Hz, which means that their setup could adjust to changing conditions 12.5 times as often as ours. However, if the frame rate of our camera were increased, redundant data would be recorded, because the scene would not have changed completely before the next image was taken. On the other hand, a higher frame rate would also provide data for faster adaptation to intensity changes and could improve the classification results, by reducing the overlap of EG values between sugar beet and volunteer potato plants seen in Fig. 6. Our vegetation separation was based on a constant threshold on the excessive green value. No problems occurred with this constant threshold during the data analysis; however, Meyer and Camargo Neto (2008) showed that excessive green minus excessive red outperformed the traditional excessive green index for vegetation detection. This could be an improvement if problems with vegetation separation arise in future research. No problems with shadows appeared in our images, but under natural light conditions shadows could become a problem for correct color classification. Marchant and Onyango (2000) reported a shadow-invariant transformation for images with vegetation that could also be implemented in our work. Another solution to shading and intensity interference could be a covered measurement setup with controlled lighting.
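For reference, the excess green minus excess red index of Meyer and Camargo Neto (2008) works on chromatic coordinates r = R/(R + G + B), g and b: ExG = 2g − r − b, ExR = 1.4r − g, and pixels with ExG − ExR above zero are taken as vegetation. The sketch below is a minimal NumPy version of that index; it is not part of the method evaluated in this research, and the function names are illustrative.

```python
import numpy as np


def exg_minus_exr(rgb):
    """ExG - ExR per pixel from chromatic coordinates r, g, b.

    ExG = 2g - r - b and ExR = 1.4r - g, with r = R / (R + G + B), etc.
    """
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1, keepdims=True)
    total[total == 0] = 1.0  # black pixels: avoid division by zero
    r, g, b = np.moveaxis(rgb / total, -1, 0)
    exg = 2 * g - r - b
    exr = 1.4 * r - g
    return exg - exr


def vegetation_mask_exgr(rgb):
    """Vegetation where ExG - ExR is positive (zero threshold)."""
    return exg_minus_exr(rgb) > 0.0
```

Unlike the fixed EG threshold of 10, the zero threshold on chromatic coordinates does not depend on overall image brightness, which is the property that makes the index attractive under changing daylight.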

Crop row recognition and crop row width

The crop row recognition was not validated with ground truth images. However, the crop row positions determine which data are used to train the classifier, so crop row recognition is a crucial step for the performance of the classifier, whether adaptive or static. Since our algorithm is based on Tillett and Hague (1999), the row recognition errors are expected to be in the same range of 13 to 18 mm. Bakker et al. (2008) reported row recognition errors between 5 and 11 mm with a Hough transform algorithm. Neither Tillett and Hague (1999) nor Bakker et al. (2008) report on the crop row width, although crop row width and growth stage are important parameters for weed detection and weed control systems. Tellaeche et al. (2008) reported a vision-based weed detection algorithm that also takes the crop growth stage into account. The precision of their algorithm was not reported, but it was estimated to be coarser, at about 0.1 m². In this respect, our algorithm for square centimeter precision weed detection, which takes both crop row position and width into account, is an improvement over existing algorithms reported in the literature.

Feature quality

The linear discriminant analysis used for evaluating the features applied a stepwise selection procedure to identify the valuable features. The Bayes classifier itself, however, was multivariate and took all five color and texture features into account. Its efficiency could be improved with automatic feature selection, for example principal component based classifiers or the feature selection processes explained in Maenpaa et al. (2003). The linear discriminant analysis was done on the complete data set available from the ground truth images, whereas the Bayes classifier used only the local features stored in the buffer of 100 vegetation grid cells. Locally, some features may therefore be more discriminative than others. This may also explain some of the differences between the features selected on days 1 and 2. The lighting conditions were different on day 2, so the recorded colors differed, and the texture feature was needed as well to discriminate between volunteer potato and sugar beet. Another feature that could further increase the discriminative power between the volunteer potato and sugar beet classes is the near-infrared reflectance of the vegetation: Gerhards et al. (2002) mention that the near-infrared reflection properties of weeds and crops can help in distinguishing between them.

Adaptive and non-adaptive classification

Local multivariate normal distributions were used to discriminate between volunteer potato and sugar beet plants. When the classifier was applied non-adaptively, the training data gathered at the start of the field determined the classification accuracy further on in the field. Our data showed that crop features such as crop row width, color and texture change throughout the field, and therefore adaptive classification outperformed non-adaptive classification: it took into account the changing crop row width and the actual crop color and texture, and could adapt to changing natural light conditions. This adaptive behavior was made possible by the stored buffer of vegetation grid cell features. A buffer size of 100 grid cells was chosen because it represented about five sugar beet plants. Increasing the buffer size would reduce the adaptive behavior and would typically increase the standard deviations of the multivariate normal distributions represented by the covariance matrices in Eq. 4, because the buffer would then span more of the variation within the field. The classification results in Table 3 showed that the adaptive scheme resulted in lower percentages of misclassified sugar beet. This is even more important than an increase in classification accuracy, as the actuator will eventually spray a non-specific herbicide on all grid cells identified as volunteer potato.

General

For a static classifier, training data have to be chosen in advance, although choosing representative training candidates for changing and different field situations can be difficult (Nieuwenhuizen et al. 2007). Compared with earlier research, the adaptive Bayes classification used here showed that using actual context information improves classification results, in line with the context-based methods proposed by Astrand (2005). Furthermore, the drawback of having to choose training data in advance no longer applies, as online training data are always available when the crop row position is used to select training data progressively.

Conclusion

In this research, an adaptive Bayesian classification method has been developed for classification of volunteer potato plants within a sugar beet crop. Crop row information was successfully used to train the adaptive classifier without having to choose training data in advance. Adaptive classification, taking into account the crop growth stage, and the local crop and volunteer potato color and texture features, increased classification accuracy.

1. Automatic classifier training is feasible. The only requirements are that a crop row can be detected and its row width determined. The crop row width changes within a field for many agronomic reasons, e.g., growth conditions, pests and diseases. Therefore, adapting to the crop growth stage increases the availability and quality of training data for the classifier.

2. The features needed for detection were EG, green, RB and texture. These features were selected with a stepwise selection method followed by a linear discriminant analysis. Changing light conditions required one extra feature, in this case texture, which was not needed under constant natural light conditions.

3. The classification accuracy of the plants was determined using ground truth images. In the non-adaptive scheme, the classification accuracy was 84.6 and 34.9% for the constant and changing natural light conditions, respectively. In the adaptive scheme, the classification accuracy increased to 89.8 and 67.7% for the constant and changing natural light conditions, respectively, which supports the adaptive classification scheme.

References

Astrand, B. (2005). Vision based perception for mechatronic weed control. PhD thesis, Chalmers University of Technology, Goteborg, Sweden, 106 pp.

Bakker, T., Wouters, H., van Asselt, C. J., Bontsema, J., Tang, L., Müller, J., et al. (2008). A vision based row detection system for sugar beet. Computers and Electronics in Agriculture, 60, 87–95.

Boydston, R. A. (2001). Volunteer potato (Solanum tuberosum) control with herbicides and cultivation in field corn (Zea mays). Weed Technology, 15(3), 461–466.

Burks, T. F., Shearer, S. A., Gates, R. S., & Donohue, K. D. (2000). Backpropagation neural network design and evaluation for classifying weed species using color image texture. Transactions of the ASAE, 43(4), 1029–1037.

Canny, J. (1986). A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 8(6), 679–698.

Cleal, R. A. E. (1993). Integrated control of volunteer potatoes in cereals and sugar beet. Aspects of Applied Biology, 35, 139–148.

El Faki, M. S., Zhang, N., & Peterson, D. E. (2000). Factors affecting color-based weed detection. Transactions of the ASAE, 43(4), 1001–1009.

Gerhards, R., Sokefeld, M., Nabout, A., Therburg, R. D., & Kuhbach, W. (2002). Online weed control using digital image analysis. Journal of Plant Diseases and Protection, special issue XVIII, 421–427.

Giles, D. K., Downey, D., Slaughter, D. C., Brevis-Acuna, J. C., & Lanini, W. T. (2004). Herbicide micro-dosing for weed control in field-grown processing tomatoes. Applied Engineering in Agriculture, 20(6), 735–743.

Gonzalez, R. C., & Woods, R. E. (1992). Digital image processing (p. 716). Upper Saddle River, New Jersey, USA: Addison-Wesley Publishing Company, Inc.

Guyer, D. E., Miles, G. E., Schreiber, M. M., Mitchell, O. R., & Vanderbilt, V. C. (1986). Machine vision and image processing for plant identification. Transactions of the ASAE, 29(6), 1500–1507.

Lee, W. S., Slaughter, D. C., & Giles, D. K. (1999). Robotic weed control system for tomatoes. Precision Agriculture, 1, 95–113.

Lutman, P. J. W., & Cussans, G. W. (1977). Groundkeeper potatoes—much effort and a little progress. Arable Farming, 4(2), 23–24.

Mäenpää, T., Viertola, J., & Pietikäinen, M. (2003). Optimizing color and texture features for real-time visual inspection. Pattern Analysis and Applications, 6(3), 169–175.

Marchant, J. A., & Onyango, C. M. (2000). Shadow-invariant classification for scenes illuminated by daylight. Journal of the Optical Society of America A, 17(11), 1952–1961.

Marchant, J. A., & Onyango, C. M. (2001). Color invariant for daylight changes: relaxing the constraints on illuminants. Journal of the Optical Society of America A, 18(11), 2704–2706.

Meyer, G. E., & Camargo Neto, J. (2008). Verification of color vegetation indices for automated crop imaging applications. Computers and Electronics in Agriculture, 63, 282–293.

National Instruments. (2005). NI Vision Concepts Manual (p. 399). Austin, Texas, USA: National Instruments.

Nieuwenhuizen, A. T., Tang, L., Hofstee, J. W., Müller, J., & van Henten, E. J. (2007). Colour based detection of volunteer potatoes as weeds in sugar beet fields using machine vision. Precision Agriculture, 8(6), 267–278.

Nieuwenhuizen, A. T., van den Oever, J. H. W., Tang, L., Hofstee, J. W., & Müller, J. (2005). Vision based detection of volunteer potatoes as weeds in sugar beet and cereal fields. In J. V. Stafford (Ed.), Precision agriculture ’05, proceedings of the 5th European conference on precision agriculture (pp. 175–182). The Netherlands: Wageningen Academic Publishers.

Paauw, J. G. M., & Molendijk, L. P. G. (2000). Aardappelopslag in wintertarwe vermeerdert aardappelcystenaaltjes (Volunteer potato plants in winter wheat multiply potato cyst nematodes). Praktijkonderzoek Akkerbouw Vollegrondsgroenteteelt bulletin Akkerbouw, pp. 4.

SAS Institute Inc. (1999). SAS/STAT User’s Guide, Version 8 (pp. 3155–3180). Cary, NC: SAS Institute Inc.

Steward, B. L., & Tian, L. F. (1998). Real-time weed detection in outdoor field conditions. In G. E. Meyer & J. A. DeShazer (Eds.), SPIE 3543, Precision agriculture and biological quality (pp. 266–278).

Tellaeche, A., Burgos-Artizzu, X. P., Pajares, G., Ribeiro, A., & Fernandez-Quintanilla, C. (2008). A new vision-based approach to differential spraying in precision agriculture. Computers and Electronics in Agriculture, 60, 144–155.

Thacker, N. A., Clark, A. F., Barron, J. L., Beveridge, J. R., Courtney, P., Crum, W. R., et al. (2008). Performance characterization in computer vision: A guide to best practices. Computer Vision and Image Understanding, 109, 305–334.

Thorp, K. R., & Tian, L. F. (2004). A review on remote sensing of weeds in agriculture. Precision Agriculture, 5(5), 477–508.

Tillett, N. D., & Hague, T. (1999). Computer-vision-based hoe guidance for cereals – an initial trial. Journal of Agricultural Engineering Research, 74, 225–236.

Tillett, N. D., Hague, T., & Miles, S. J. (2002). Inter-row vision guidance for mechanical weed control in sugar beet. Computers and Electronics in Agriculture, 33(3), 163–177.

Turkensteen, L. J., Flier, W. G., Wanningen, R., & Mulder, A. (2000). Production, survival and infectivity of oospores of Phytophthora infestans. Plant Pathology, 49(6), 688–696.

Woebbecke, D. M., Meyer, G. E., von Bargen, K., & Mortensen, D. A. (1995a). Shape features for identifying young weeds using image analysis. Transactions of the ASAE, 38(1), 271–281.

Woebbecke, D. M., Meyer, G. E., von Bargen, K., & Mortensen, D. A. (1995b). Color indices for weed identification under various soil, residue, and lighting conditions. Transactions of the ASAE, 38(1), 259–269.

Acknowledgments

The authors would like to thank J.P. Stols for his BSc thesis work and S. van der Steen for programming assistance that supported this research. This research was primarily supported by the Dutch Technology Foundation STW, applied science division of NWO, and the Technology Program of the Ministry of Economic Affairs. Additional support was provided by the Dutch Ministry of Agriculture, Nature and Food Quality. The research is part of research programme LNV-427: “Reduction disease pressure Phytophthora infestans”.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Nieuwenhuizen, A.T., Hofstee, J.W. & van Henten, E.J. Adaptive detection of volunteer potato plants in sugar beet fields. Precision Agric 11, 433–447 (2010). https://doi.org/10.1007/s11119-009-9138-9
