Abstract

This paper considers the iterative solution of finite element discretizations of second-order elliptic boundary value problems. Mesh independent estimations are given for the rate of superlinear convergence of preconditioned Krylov methods, involving the connection between the convergence rate and the Lebesgue exponent of the data. Numerical examples demonstrate the theoretical results.

1 Introduction

The preconditioned conjugate gradient (PCG) method is a widely used tool for the iterative solution of discretized elliptic partial differential equations. It can be efficiently coupled with multigrid methods and, under certain conditions, operator preconditioning can provide mesh-independent convergence. Hence, its convergence has been widely analyzed, see, e.g., [1, 3] and references therein.

In particular, superlinear convergence is often a characteristic second stage in the convergence history: this notion expresses, roughly speaking, that the number of iterations required to achieve a new correct digit decreases in the course of the iteration. This phenomenon is also favorable when the PCG method is used as an inner iteration for an outer process. Such results were already obtained in [11, 15] on the operator level.

This paper considers some types of second-order elliptic boundary value problems with variable zeroth order coefficients and their finite element discretizations. Our goal is to find relevant estimations for the rate of superlinear convergence of the PCG method for this type of problem; furthermore, we are interested in robust, that is, mesh independent rates, which can be given independently of the finite element mesh size. This means that the favorable behavior does not deteriorate as the mesh is refined.

This mesh-independence property of superlinear convergence was studied in various joint papers of the second author, see, e.g., [2] for a general result, [3] for a survey in this journal, and [5] for some recent applications. The starting point of the present paper is [10], where a superlinear rate was found in a particular situation with continuous zeroth order coefficient. Our goal is to extend this result to a family of estimations for general zeroth order (“linearized reaction”) coefficients, that is, coefficients which may be unbounded and belong to some Lebesgue space. Furthermore, we would like to explore the connection between the convergence rate and the Lebesgue exponent. A practical motivation for such situations is, among other things, the Newton linearization arising in reaction-diffusion models where the nonlinear rate of reaction is typically of polynomial order, thus leading to linearized coefficients with given Lebesgue exponent.

We present eigenvalue-based estimations of the rate of superlinear convergence for such problems, first for single equations, then we show that similar estimations can be obtained in the case of proper systems of PDEs, involving GMRES in the nonsymmetric case. Finally, some numerical examples are shown, which properly demonstrate our theoretical results.

2 Theoretical background

2.1 The abstract problem and its discretization

Let H be a real Hilbert space and let us consider a linear operator equation

$$\begin{aligned} Au=g \end{aligned} \tag{1}$$

with some \(g \in H\), under the following

Assumption 2.1

(i) The operator A is decomposed as

$$\begin{aligned} A=S+Q \end{aligned} \tag{2}$$

where S is a symmetric operator in H with dense domain D and Q is a compact self-adjoint operator defined on the domain H.

(ii) There exists \(k>0\) such that \(\langle Su,u \rangle \ge k \Vert u\Vert ^2\) (\(\forall u \in D\)).

(iii) \(\langle Qu,u \rangle \ge 0\) (\(\forall u \in H\)).

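We recall that the energy space \(H_S\) is the completion of D under the energy inner product

$$\begin{aligned} \langle u,v \rangle _S=\langle Su,v \rangle \qquad (u,v \in D), \end{aligned}$$

and the corresponding norm is denoted by \(\Vert \cdot \Vert _S\). Assumption (ii) implies \(H_S \subset H\). Then, there exists a unique bounded linear operator, denoted by \(Q_S: H_S \rightarrow H_S\), such that

$$\begin{aligned} \langle Q_S u,v \rangle _S=\langle Qu,v \rangle \qquad (\forall u,v \in H_S). \end{aligned}$$

We replace (1) by its formally preconditioned form

$$\begin{aligned} S^{-1}Au=S^{-1}g, \end{aligned}$$

that is, \((I+S^{-1}Q)u=S^{-1}g\) in \(H_S\). This gives the weak formulation

$$\begin{aligned} \langle (I+Q_S)u,v \rangle _S=\langle g,v \rangle \qquad (\forall v \in H_S). \end{aligned} \tag{4}$$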

Since by assumption (iii) the bilinear form on the left is coercive on \(H_S\), by the Lax-Milgram theorem, there exists a unique solution \(u \in H_S\) of (4).

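Now (4) is solved numerically using a Galerkin discretization. Consider a given finite-dimensional subspace \(V=\text {span}\{\varphi _1,\dots ,\varphi _n\}\subset H_S\), and let

$$\begin{aligned} \varvec{\textrm{S}}_h=\left\{ \langle \varphi _i,\varphi _j \rangle _S\right\} ^n_{i,j=1}, \qquad \varvec{\textrm{Q}}_h=\left\{ \langle Q_S\varphi _i,\varphi _j \rangle _S\right\} ^n_{i,j=1} \end{aligned}$$

be the Gram matrices corresponding to S and Q. We look for the numerical solution \(u_V \in V\) of (4) in V, i.e., for which

$$\begin{aligned} \langle u_V,v \rangle _S+\langle Q_S u_V,v \rangle _S=\langle g,v \rangle \qquad (\forall v \in V). \end{aligned} \tag{5}$$

Then, \(u_V=\sum ^n_{j=1} c_j\varphi _j\), where \(\varvec{\textrm{c}}=(c_1,\dots ,c_n) \in \mathbb {R}^n\) is the solution of the system

$$\begin{aligned} (\varvec{\textrm{S}}_h+\varvec{\textrm{Q}}_h)\varvec{\textrm{c}}=\varvec{\textrm{b}} \end{aligned} \tag{6}$$

with \(\varvec{\textrm{b}}=\{\langle g,\varphi _j \rangle \}^n_{j=1}\). The matrix \(\varvec{\textrm{A}}_h:=\varvec{\textrm{S}}_h+\varvec{\textrm{Q}}_h\) is SPD.

By using matrix \(\varvec{\textrm{S}}_h\) as the preconditioner for the system (6), we shall work with the preconditioned system

$$\begin{aligned} (\varvec{\textrm{I}}+\varvec{\textrm{S}}_h^{-1}\varvec{\textrm{Q}}_h)\varvec{\textrm{c}}=\tilde{\varvec{\textrm{b}}} \end{aligned} \tag{7}$$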

where \(\varvec{\textrm{I}}\) is the identity matrix in \(\mathbb {R}^n\) and \(\tilde{\varvec{\textrm{b}}}=\varvec{\textrm{S}}_h^{-1}\varvec{\textrm{b}}\). We apply the CGM for the solution of this system.
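As a minimal sketch of how such a preconditioned system can be solved in practice, the following Python code applies a standard textbook PCG iteration to \((\varvec{\textrm{S}}_h+\varvec{\textrm{Q}}_h)\varvec{\textrm{c}}=\varvec{\textrm{b}}\) with \(\varvec{\textrm{S}}_h\) as preconditioner. The 1D piecewise linear model problem, the singular coefficient `eta`, the lumped mass matrix, and all function names are illustrative assumptions; they are not the setting or the code used in this paper.

```python
import numpy as np
import scipy.sparse as sp
import scipy.sparse.linalg as spla


def model_matrices(n, eta):
    """Illustrative 1D P1 matrices on (0,1) with n interior nodes.

    S: stiffness matrix of -u''; Q: lumped mass matrix weighted by eta(x).
    """
    h = 1.0 / (n + 1)
    x = np.linspace(h, 1.0 - h, n)
    S = sp.diags([-np.ones(n - 1), 2.0 * np.ones(n), -np.ones(n - 1)],
                 [-1, 0, 1], format="csc") / h
    Q = sp.diags(h * eta(x), format="csc")
    return S, Q, x


def pcg(A, b, solve_S, tol=1e-10, maxit=200):
    """Textbook PCG; solve_S(r) returns S^{-1} r.

    Returns the solution and the history of sqrt(r . S^{-1} r).
    """
    u = np.zeros_like(b)
    r = b - A @ u
    z = solve_S(r)
    p = z.copy()
    rz = r @ z
    history = [np.sqrt(rz)]
    for _ in range(maxit):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        u += alpha * p
        r -= alpha * Ap
        z = solve_S(r)                    # auxiliary problem with S only
        rz_new = max(r @ z, 0.0)          # guard against rounding below zero
        history.append(np.sqrt(rz_new))
        if history[-1] <= tol * history[0]:
            break
        p = z + (rz_new / rz) * p
        rz = rz_new
    return u, history


S, Q, x = model_matrices(200, lambda t: t ** -0.5)  # unbounded but integrable eta
A = (S + Q).tocsc()
b = np.ones(200) / 201.0
solve_S = spla.factorized(S)                        # factorize S once, reuse in every step
c, hist = pcg(A, b, solve_S)
```

Each iteration needs only one solve with the preconditioner matrix, which is the design point of the approach: the coupling through \(\varvec{\textrm{Q}}_h\) enters via matrix-vector products only.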

2.2 The preconditioned conjugate gradient method and superlinear convergence

Let us consider a general linear system \( \varvec{\textrm{A}}_h \varvec{\textrm{u}}= \varvec{\textrm{g}}\) and its preconditioned form

$$\begin{aligned} \varvec{\textrm{B}}_h \varvec{\textrm{u}}= \tilde{\varvec{\textrm{g}}} \end{aligned} \tag{8}$$

where \(\varvec{\textrm{B}}_h= \varvec{\textrm{S}}_h^{-1}\varvec{\textrm{A}}_h\) and \(\tilde{\varvec{ \textrm{g}}}= \varvec{\textrm{S}}^{-1}_h \varvec{ \textrm{g}}\). The preconditioner \(\textbf{S}_h\) induces the energy inner product \(\langle \textbf{c}, \textbf{d}\rangle _{\textbf{S}_h}:= \textbf{S}_h\, \textbf{c}\cdot \textbf{d}\), where \(\cdot \) denotes the standard Euclidean inner product.

Then, the PCG method is given by the following algorithm. Let \(\varvec{\textrm{u}}_0\) be arbitrary, \(\varvec{\mathrm {\rho }}_0= \varvec{\textrm{A}_h}\varvec{\textrm{u}}_0-\varvec{\textrm{g}}\), \(\varvec{\textrm{S}}_h \varvec{\textrm{p}}_0=\varvec{\mathrm {\rho }}_0\), \(\varvec{\textrm{r}}_0=\varvec{\mathrm {\rho }}_0\) and for \(k \in \mathbb {N}\)

with

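In fact, the vector \(\varvec{\textrm{z}}_k:=\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{A}}_h \varvec{\textrm{p}}_k\) is computed by solving the auxiliary problem

$$\begin{aligned} \varvec{\textrm{S}}_h \varvec{\textrm{z}}_k=\varvec{\textrm{A}}_h \varvec{\textrm{p}}_k. \end{aligned}$$

Moreover, setting \( \varvec{\textrm{w}}_k=\varvec{\textrm{z}}_k-\varvec{\textrm{p}}_k\), this problem is equivalent to

$$\begin{aligned} \varvec{\textrm{S}}_h \varvec{\textrm{w}}_k=\varvec{\textrm{Q}}_h \varvec{\textrm{p}}_k. \end{aligned} \tag{9}$$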

We are interested in the superlinear convergence rates for the CGM, and now recall the corresponding well-known estimation. Let \(\varvec{\textrm{A}}_h=\varvec{\textrm{S}}_h+ \varvec{\textrm{Q}}_h\). Then, \(\varvec{\textrm{B}}_h\) in (8) has the compact perturbation form \(\varvec{\textrm{B}}_h=\varvec{\textrm{I}}_h+ \varvec{\textrm{E}}_h\) with \(\varvec{\textrm{E}}_h:=\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h.\) Let us order the eigenvalues of the latter according to \(|\lambda _1(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h)|\ge |\lambda _2(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h)| \ge \dots \ge |\lambda _n(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h)|\). Then, the error vectors \(\varvec{\textrm{e}}_k:=\varvec{\textrm{c}}_k-\varvec{\textrm{c}}\) are measured by \(\left< \varvec{\textrm{B}}_h \varvec{\textrm{e}}_k, \varvec{\textrm{e}}_k\right>_{\varvec{\textrm{S}}_h}^{1/2}= \left<\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{A}}_h \varvec{\textrm{e}}_k, \varvec{\textrm{e}}_k\right>_{\varvec{\textrm{S}}_h}^{1/2}= \left<\varvec{\textrm{A}}_h \varvec{\textrm{e}}_k, \varvec{\textrm{e}}_k\right>^{1/2} =\left\| \varvec{\textrm{e}}_k\right\| _{\varvec{\textrm{A}}_h}\), and they are known to satisfy

$$\begin{aligned} \left( \frac{\Vert \varvec{\textrm{e}}_k\Vert _{\varvec{\textrm{A}}_h}}{\Vert \varvec{\textrm{e}}_0\Vert _{\varvec{\textrm{A}}_h}}\right) ^{1/k} \le \frac{2\Vert \varvec{\textrm{B}}^{-1}_h\Vert _{\varvec{\textrm{S}}_h}}{k} \sum ^{k}_{j=1} \left| \lambda _j(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h)\right| \qquad (k=1,2,\dots ,n). \end{aligned} \tag{10}$$

This follows, e.g., from formula (13.13) in [1], see also (2.16) in [3].

For the discretized problem described in subsection 2.1, the following result allows us to give a convergence rate for the upper bound of (10) through the eigenvalues of the operator \(Q_S\). This is a modification of Theorem 1 in [10] where the square of eigenvalues was considered.

Lemma 1

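Let Assumption 2.1 hold. Then, for any \(k=1,2,\dots ,n\)

$$\begin{aligned} \sum ^{k}_{j=1} \lambda _j(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h) \le \sum ^{k}_{j=1} \lambda _j(Q_S). \end{aligned} \tag{11}$$

Proof

We have in fact

$$\begin{aligned} \sum ^{k}_{j=1} \sigma _j(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h) \le \sum ^{k}_{j=1} \sigma _j(Q_S), \end{aligned} \tag{12}$$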

where the \(\sigma _j\) denote the singular values of the given matrix or operator, see [4]. Now both the matrix \(\varvec{\textrm{S}}_h^{-1}\varvec{\textrm{Q}}_h\) (with respect to the \(\varvec{\textrm{S}}_h\)-inner product) and the operator \(Q_S\) (in \(H_S\)) are self-adjoint, hence their singular values coincide with the modulus of the eigenvalues. Since \(Q_S\) is a positive operator from assumption (iii), the modulus can be omitted.\(\square \)

An immediate consequence of this lemma is the following mesh-independent bound.

Corollary 1

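For any \(k=1,2,\dots ,n\)

$$\begin{aligned} \left( \frac{\Vert \varvec{\textrm{e}}_k\Vert _{\varvec{\textrm{A}}_h}}{\Vert \varvec{\textrm{e}}_0\Vert _{\varvec{\textrm{A}}_h}}\right) ^{1/k} \le \frac{2\Vert B^{-1}\Vert _S}{k} \sum ^{k}_{j=1} \lambda _j(Q_S). \end{aligned} \tag{13}$$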

Proof

By [2, Prop. 4.1], we are able to estimate \(\Vert \varvec{\textrm{B}}^{-1}_h\Vert _{\varvec{\textrm{S}}_h} \le \Vert B^{-1}\Vert _S\). This, together with (10) and (11), completes the proof. \(\square \)

Since \(|\lambda _1(Q_S)|\ge |\lambda _2(Q_S)| \ge \dots \ge 0\) and the eigenvalues tend to 0, the convergence factor is less than 1 for k sufficiently large. Hence, the upper bound decreases as \(k \rightarrow \infty \) and we obtain a superlinear convergence rate.
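The mechanism can be illustrated numerically. The sketch below assumes, as in the standard estimate of [3], that the bound is proportional to the arithmetic mean of the first k eigenvalues of \(\varvec{\textrm{S}}^{-1}_h\varvec{\textrm{Q}}_h\); it computes these means for a small 1D model problem. The model matrices and the coefficient \(x^{-1/2}\) are illustrative assumptions, and the constant factor in front of the mean is omitted.

```python
import numpy as np
from scipy.linalg import eigh

n = 100
h = 1.0 / (n + 1)
x = np.linspace(h, 1.0 - h, n)

# illustrative 1D P1 stiffness matrix and lumped weighted mass matrix
S = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
Q = np.diag(h * x ** -0.5)

# generalized symmetric eigenproblem Q v = lambda S v,
# whose eigenvalues are those of S^{-1} Q
lam = eigh(Q, S, eigvals_only=True)[::-1]      # sorted in descending order

# arithmetic means (1/k) * sum_{j<=k} lambda_j for k = 1, ..., n
means = np.cumsum(lam) / np.arange(1, n + 1)
```

Since the eigenvalues are sorted in descending order, the sequence `means` is nonincreasing, and it tends to zero exactly when the eigenvalues do.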

3 Estimation of the rates of superlinear convergence

We present the rate estimates in the following stages. First, we develop the results in detail for single equations. The studied preconditioners have the advantage that the original PDE is reduced to simpler PDEs whose discretizations can be solved by proper optimal fast solvers. Then, we extend the estimates to systems of PDEs, first for the symmetric and then for the nonsymmetric case. This situation shows the real strength of the idea of preconditioning operators, since one can reduce large coupled systems of PDEs to independent single PDEs, hence the numerical solution of the latter can be parallelized. In each case, we provide an estimation of the rate of mesh-independent superlinear convergence such that the dependence of the rate on the integrability exponent of the reaction coefficient is determined.

3.1 Elliptic equations

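Let \(d\ge 2\) and \(\Omega \subset \mathbb {R}^{d}\) be a bounded domain. We consider the elliptic problem

$$\begin{aligned} \begin{cases} -\textrm{div}(G \nabla u)+\eta u=g &amp; \text {in } \Omega , \\ u=0 &amp; \text {on } \partial \Omega \end{cases} \end{aligned} \tag{14}$$

in the following situation.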

Assumption 3.1

(i) The symmetric matrix-valued function \(G \in {L}^{\infty } (\overline{\Omega },\mathbb {R}^{d} \times \mathbb {R}^{d})\) satisfies

$$ G(x) \xi \cdot \xi \ge m |\xi |^2 \qquad (\forall \xi \in \mathbb {R}^{d}) $$

for some \(m>0\) independent of \(\xi \).

(ii) We have \(\eta \ge 0\); furthermore, there exists \(2< p < \frac{2d}{d-2}\) such that

$$\begin{aligned} \eta \in {L}^{p/(p-2)}(\Omega ) . \end{aligned} \tag{15}$$

(iii) \(\partial \Omega \) is a Lipschitz boundary.

(iv) \(g \in {L}^{2}(\Omega )\).

Then, problem (14) has a unique weak solution in \({H}^1_{0}(\Omega )\). The relevance of the condition on p in (ii) is that the continuous embedding \({H}^1_{0}(\Omega )\subset L^p(\Omega )\) holds, which ensures the boundedness of the corresponding bilinear form.

In practice, we are mostly interested in the case when the principal part has constant or separable coefficients, whereas \(\eta =\eta (x)\) is a general variable (i.e., nonconstant) coefficient. In this case, the principal part will be an efficient preconditioning operator, see Remark 1 for background and extensions.

Let \(V_h \subset {H}^1_{0}(\Omega )\) be a given FEM subspace. We look for the numerical solution \(u_h\) of (14) in \(V_h\):

The corresponding linear algebraic system has the form

where \(\varvec{\textrm{G}}_h\) and \(\varvec{\textrm{D}}_h\) are the corresponding weighted stiffness and mass matrices, respectively. We apply the matrix \(\varvec{\textrm{G}}_h\) as preconditioner, thus the preconditioned form of (16) is given by

with \(\tilde{\varvec{\textrm{g}}}_h=\varvec{\textrm{G}}^{-1}_h\varvec{\textrm{g}}_h\). Then, we apply the CGM to (17). The auxiliary systems with \(\varvec{\textrm{G}}_h\) can be solved efficiently with fast solvers, see Remark 1.

Such equations for \(\eta \in C(\overline{\Omega })\) were considered in [10]. That was a rather restrictive assumption, see also Remark 2 for the motivations of the more general case (15).

Theorem 1

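Let Assumption 3.1 hold. Then, there exists \(C>0\) such that for all \(k \in \mathbb {N}\)

$$\begin{aligned} \left( \frac{\Vert \varvec{\textrm{e}}_k\Vert _{\varvec{\textrm{A}}_h}}{\Vert \varvec{\textrm{e}}_0\Vert _{\varvec{\textrm{A}}_h}}\right) ^{1/k} \le C k^{-\alpha } \end{aligned} \tag{18}$$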

where \(\alpha =\frac{1}{d}-\frac{1}{2}+\frac{1}{p}\).
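To make the dependence of the exponent on d and p concrete, the following small helper evaluates \(\alpha \) in exact rational arithmetic and checks the admissible range of p from Assumption 3.1 (ii). The function name `rate_exponent` is introduced here only for illustration.

```python
from fractions import Fraction


def rate_exponent(d, p):
    """Return alpha = 1/d - 1/2 + 1/p for 2 < p < 2d/(d-2).

    For d = 2 the upper bound on p is infinite, so only p > 2 is checked.
    """
    d, p = Fraction(d), Fraction(p)
    if p <= 2 or (d > 2 and p >= 2 * d / (d - 2)):
        raise ValueError("p must satisfy 2 < p < 2d/(d-2)")
    return 1 / d - Fraction(1, 2) + 1 / p
```

For instance, `rate_exponent(2, 4)` gives 1/4 (here \(\eta \in L^{2}(\Omega )\)), whereas `rate_exponent(3, 4)` gives 1/12 and `rate_exponent(3, 3)` gives 1/6. As p decreases towards 2, the Lebesgue exponent \(p/(p-2)\) of \(\eta \) grows and \(\alpha \) increases towards \(1/d\).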

Proof

Let us consider the real Hilbert space \({L}^{2}(\Omega )\) endowed with the usual inner product. Let \(D=H^1_{0,G}:=\{u \in {H}_{0}^{1}(\Omega ) \cap {H}^{2}(\Omega ):\ \ G \nabla u \in H(\textrm{div}, \Omega ) \}\). We define the operators

Then,

where \(C_\Omega \) is the Poincaré–Friedrichs constant and m is the lower spectral bound of G given by assumption (i). Hence the energy space \(H_S\) is a well-defined Hilbert space with \(\langle u,v \rangle _S=\int _{\Omega }G \nabla u \cdot \nabla v\). It is easy to see that \(H_S={H}_{0}^{1}(\Omega )\) and that the following inequality holds:

Since \(p<\frac{2d}{d-2}\), the embedding \(\mathcal {I}:{H}_{0}^{1}(\Omega ) \rightarrow {L}^{p}(\Omega )\) is compact, in particular, there exists \(\hat{c}>0\) such that for all \(u \in {H}_{0}^{1}(\Omega )\)

Then,

where \(M=\Vert \eta \Vert _{{L}^{p/(p-2)}(\Omega )}\). Here, we applied the extension of Hölder’s inequality ([6, Th. 4.6]) with

Hence, \(Q_{S}\) is compact in \(H_{S}\). Altogether, \(Q_{S}\) is a compact self-adjoint operator in \(H_{S}\), hence, by [9, Ch.6, Th.1.5], we have the following characterization of the eigenvalues of \(Q_{S}\):

By taking the minimum over a smaller subset of finite rank operators, we obtain

This, together with (25) yields

where \(a_n(\mathcal {I})\) denotes the approximation numbers of the compact embedding \(\mathcal {I} :{H}_{0}^{1}(\Omega ) \mapsto {L}^{p}(\Omega )\), see [14]. Furthermore, we have the estimation from [8]:

for some constant \(\hat{C}>0\). Therefore, we arrive at the inequality

Now, taking the arithmetic mean on both sides and estimating the sum from above by an integral, we obtain

Then, by (13), we conclude. \(\square \)
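The last step of the proof can be spelled out as follows. This is a sketch under the assumption that the preceding eigenvalue estimate has the form \(\lambda _j(Q_S)\le C_1 j^{-\alpha }\), where the name \(C_1\) of the constant is chosen here for illustration. The condition \(2<p<\frac{2d}{d-2}\) gives \(0<\alpha <\frac{1}{d}\le \frac{1}{2}\), and since \(x\mapsto x^{-\alpha }\) is decreasing, \(j^{-\alpha }\le \int _{j-1}^{j} x^{-\alpha }\,dx\). Hence

$$\begin{aligned} \frac{1}{k}\sum ^{k}_{j=1} \lambda _j(Q_S) \le \frac{C_1}{k}\sum ^{k}_{j=1} j^{-\alpha } \le \frac{C_1}{k}\int _{0}^{k} x^{-\alpha }\,dx = \frac{C_1}{1-\alpha }\, k^{-\alpha }, \end{aligned}$$

so the arithmetic mean of the first k eigenvalues decays like \(k^{-\alpha }\), which is the rate stated in the theorem.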

Remark 1

The PCG method requires the solution of auxiliary problems \(\varvec{\textrm{S}}w_k=\varvec{\textrm{Q}}p_k\), see (9). In the main case, when the principal part has constant or separable coefficients, these problems can be solved easily with fast solvers due to the special structure of the operator \(Su \equiv -\textrm{div}(G\nabla u)\) (in particular, when \(Su=-\Delta u\)), see, e.g., [7, 12].

Moreover, to generalize the above, one may also incorporate a constant lower order term in S, i.e., (in the case of Laplacian principal part) define \(Su=-\Delta u + cu\) for some constant \(c>0\). This gives a better approximation of \(Lu=-\Delta u + \eta u\) and, since S has constant coefficients, the auxiliary problems can still be solved by the mentioned fast solvers. Theorem 1 remains true, since \(Qu=(\eta -c)u\) is still compact; it may no longer be a positive operator, but the only arising difference is that in Corollary 1 we replace \(\lambda _j(Q_S)\) by \(|\lambda _j(Q_S)|\).
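As an illustration of what a fast solver for such constant-coefficient auxiliary problems may look like, the sketch below solves \((-\Delta _h + c)U=F\) on the unit square with the type-I discrete sine transform. It is written for the five-point finite difference Laplacian on a uniform grid, which is an assumption made for this example; it is not the solver of [7, 12] and not the FEM code used in this paper. The function names are hypothetical.

```python
import numpy as np
from scipy.fft import dstn, idstn


def fast_poisson_solve(F, h, c=0.0):
    """Solve (-Delta_h + c) U = F on a uniform N x N interior grid of the
    unit square with zero Dirichlet data, via the type-I sine transform."""
    N = F.shape[0]
    j = np.arange(1, N + 1)
    # eigenvalues of the 1D operator h^{-2} * tridiag(-1, 2, -1)
    lam = (2.0 - 2.0 * np.cos(j * np.pi / (N + 1))) / h**2
    denom = lam[:, None] + lam[None, :] + c
    # the sine basis diagonalizes the operator: transform, divide, transform back
    return idstn(dstn(F, type=1) / denom, type=1)


def apply_operator(U, h, c=0.0):
    """Apply the five-point stencil of -Delta_h + c with zero boundary values."""
    P = np.pad(U, 1)
    lap = (4.0 * P[1:-1, 1:-1] - P[:-2, 1:-1] - P[2:, 1:-1]
           - P[1:-1, :-2] - P[1:-1, 2:]) / h**2
    return lap + c * U
```

The cost is that of a few fast transforms per solve, and the shift `c` only changes the denominators, which reflects the observation above that adding a constant lower order term to S does not make the auxiliary problems harder.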

Remark 2

The relevance of the extension of the results of [10] on \(\eta \in C(\overline{\Omega })\) to our more general case (15) is motivated, e.g., by the following model. Consider a reaction-diffusion equation

where \(q\in C^1(\mathbb {R})\) and there exists \(2< p < \frac{2d}{d-2}\) such that

Here, q describes the rate of reaction, which is typically of polynomial order as required in (30). The restriction on p means that the continuous embedding \({H}^1_{0}(\Omega )\subset L^p(\Omega )\) holds, hence the above problem is well-posed in \({H}_{0}^{1}(\Omega )\). Then, the Newton linearization around some \(z_n\) leads to the linear problem of the form

where

due to the above assumptions. That is, we obtain a problem of the type (14).

Remark 3

Owing to the equality \(\Vert e_k\Vert _{\varvec{\textrm{A}}_h} = \Vert A^{-1/2} r_k\Vert \), the estimate (18) implies a similar one for the residuals:

where \(C_1=C\, cond(A)\).

3.2 Elliptic systems

In this section, we prove that the previous results can be extended to certain systems of elliptic PDEs. For simplicity and also due to practical occurrence, we only include Laplacian principal parts; however, the results remain similar when the principal parts have the form (19).

3.2.1 Symmetric systems

First let us consider systems of the form

where \(\varvec{H}=\{\eta _{ij}\}^{s}_{i,j=1}\) is a symmetric positive semidefinite variable coefficient matrix such that

Such systems arise, e.g., in the Newton linearization of gradient systems: if a nonlinear reaction-diffusion system corresponds to a potential

then the linearized problems have the form (33), which extend (31)–(32) to systems and the gradient structure implies the symmetry of the Jacobians \(\varvec{H}=F'(u_1,...,u_s)\).

We work with the space \({L}^{p}(\Omega )^s\) with the norm

Let \(H={L}^{2}(\Omega )^{s}\); furthermore, \(D:= (H^1_{0,G})^s\), where \(H^1_{0,G}\) was defined in subsection 3.1 before (19). Using notation \(u=(u_1 \dots u_s)\), we define the operators

Clearly, S is a uniformly positive symmetric operator in H. In fact, from (20),

Then, the energy space \(H_S\) is well defined with

and so \(H_S={H}_{0}^{1}(\Omega )^s\). Furthermore, by (22), we have that

Then, there exists a unique bounded linear operator \(Q_S :{H}_{0}^{1}(\Omega )^s \rightarrow {H}_{0}^{1}(\Omega )^s\) such that

It is easy to see that \(Q_S\) is self-adjoint in \(H_S\). Analogously to (23), by (36), (35) and Hölder’s inequality, we get

where \(M=\max _{i,j}\Vert \eta _{ij}\Vert _{{L}^{p/(p-2)}(\Omega )}\). Hence, we have proved that \(Q_S\) is a compact self-adjoint operator in \(H_S\). Then, the characterization (24) of the eigenvalues of \(Q_S\) holds. The rest of the proof follows by modifying the scalar case. Now, instead of (25), we take the minimum in the following way over a smaller subset of finite rank operators:

where we define \(L_{n-1} \in \mathcal {L}_{\textrm{diag}}(H_S)\) by requiring

where [.] denotes the lower integer part. Furthermore, we shall use the approximation numbers

Note that if \(n\le s\) then we can use the bound \(\lambda _n(Q_S)\le \Vert Q_S\Vert \). For \(n\ge s+1\), from (27), the above numbers are estimated by

with \(\alpha =\frac{1}{d}-\frac{1}{2}+\frac{1}{p}.\) Then,

Therefore,

Hence, by (39), we obtain the estimations

Note that there exist \(k_0,k_1 >0\) such that

(in fact, \(k_0=1/2\) and \(k_1=1\)). Thus, for \(n\ge s+1\),

Hence, (40) becomes

and by taking arithmetic means on both sides and splitting the sum, we get

where \(C_2=\max \{s\Vert Q_S\Vert ,C_1(1-\alpha )^{-1}\}\). Finally, by Corollary 1, we obtain that there exists \(C>0\) such that for all \(k \in \mathbb {N}\)

with \(\alpha =\frac{1}{d}-\frac{1}{2}+\frac{1}{p}\), that is, Theorem 1 holds in exactly the same form for the above systems of PDEs as well.

3.2.2 Extension to non-symmetric systems

Let us now study (33) for \(\varvec{H}=\{\eta _{i,j}\}^{s}_{i,j=1}\) non-symmetric. We apply the generalized minimal residual (GMRES) method to the corresponding discretized system. This method is the most widespread Krylov type iteration for non-symmetric systems, see, e.g., [13].

By [5], we have an analog of Corollary 1 when A is non-Hermitian. In this case the GMRES method provides superlinear convergence estimates for the residuals \( r_k\), and (11) is replaced by the more general case (12). Altogether, we have

To show that Theorem 1 still holds in this case, we follow the same steps as we did previously. We define the operators \(S,Q,Q_S\) as before in (34), (37). Here \(Q_S\) is no longer self-adjoint and its eigenvalues do not coincide with its singular values. Nonetheless, by [9, Ch.6, Th.1.5], we have the following characterization of the singular values of \(Q_S\):

Then, similarly to the proof for symmetric systems, we can see that there exists \(C_1>0\) such that

Therefore, by (43), we obtain that there exists \(C_2>0\) such that

3.2.3 The efficiency of the preconditioners

For elliptic systems, the auxiliary problem \(\varvec{\textrm{S}}w_k=\varvec{\textrm{Q}}p_k\) is the FEM discretization of the elliptic system

where \(w_k = ( (w_k)_1, \dots , (w_k)_s )\) is the unknown function and the right-hand side arises from the known functions \((p_k)_1, \dots , (p_k)_s \). The main point is that (in contrast to the original one) this system is uncoupled, i.e., the above equations are independent of one another. Hence, they can be solved in parallel.

We note that the idea of Remark 1 can also be used here: one may include constant lower order terms in S, which is especially useful if \(\varvec{H}\) has large entries. Then, \(-\Delta (w_k)_i\) above is replaced by \(-\Delta (w_k)_i + c_i (w_k)_i\). For instance, we may set \(c_i= 1/2 \Vert \varvec{H}\Vert \) or \(c_i= 1/2 \sum ^{s}_{j=1} \eta _{ij}\).

In practice, these types of systems can be very large, e.g., in [16], models of long-range transport of air pollution are described by a system of PDEs with \(s=30\). That is, whereas the original problem is a coupled PDE system of several components, the preconditioner leads to uncoupled problems corresponding to the FEM discretization of single PDEs, which is considerably cheaper. This shows the efficiency of the proposed preconditioners.
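The uncoupled structure of the preconditioner can be sketched as follows: the single-PDE matrix is factorized once and then reused for each of the s components. The 1D stiffness matrix serves only as a stand-in for \(\varvec{\textrm{S}}_h\), and the sizes and the random right-hand sides are illustrative assumptions.

```python
import numpy as np
import scipy.sparse as sp
import scipy.sparse.linalg as spla

n, s = 50, 3                                   # illustrative sizes
h = 1.0 / (n + 1)
# 1D stiffness matrix as a stand-in for the single-PDE matrix S_h
S = sp.diags([-np.ones(n - 1), 2.0 * np.ones(n), -np.ones(n - 1)],
             [-1, 0, 1], format="csc") / h

lu = spla.splu(S)                              # factorize once
rng = np.random.default_rng(0)
R = rng.standard_normal((s, n))                # row i: right-hand side of component i

# s independent solves with the same factorization; the components do not
# interact, so each solve could be handed to a separate worker
W = np.vstack([lu.solve(R[i]) for i in range(s)])
```

The result coincides with the solution of the block diagonal system with s copies of the matrix on the diagonal, but no matrix of the coupled size is ever formed or factorized.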

4 Numerical tests

Let us solve the following PDEs numerically:

with \(i=1,2\), and

where \(p>2\), and \(\eta _1,\eta _2 \in {L}^{\frac{p}{p-2}}(\Omega )\) are defined as

and

for some \(0<\beta < \frac{p-2}{p}\). Furthermore,

Applying the finite element method to (47) and (48) with stepsize \(h=1/(N+1)\), we obtain the algebraic system

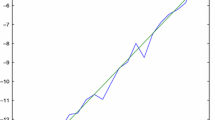

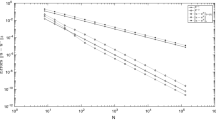

The cases \(i=1,2\) and \(i=3\) refer to the FEM discretization of (47) and (48), respectively. Then, we apply \(\varvec{\textrm{G}}_h\) as a preconditioner and we solve the preconditioned system using the CGM. We used Courant elements and the computations were executed in Matlab (Fig. 1).

To measure the error of the PCGM, we use the energy norm

where \(\varvec{\textrm{A}}_h=\varvec{\textrm{G}}_h+\varvec{\textrm{D}}_h\). Table 1 shows the residual error obtained at each iteration \(k\le 10\) of the method applied to (49) for \(i=1,2,3\), respectively.

To test Theorem 1, note that \(d=2\) and so \(\alpha =\frac{1}{p}\). Furthermore, recall that

That is, if \(p > \frac{2}{1-\beta }\), we get that the theorem holds when \(\alpha <\frac{1-\beta }{2}.\) Table 2 shows the values of

for \(i=1,2,3\), respectively, with different choices of \(\beta \) and \(\alpha \) while fixing a mesh size. The value of \(\hat{\delta }^i_k\) (\(i=1,2\)) corresponds to the system (47) with right-hand side \(f_i\) and the case \(i=3\) corresponds to the system (48). Note that residuals can be used when the exact solution is not known. In the symmetric case the bound (46) follows from the bound (18) owing to the equivalence of \(\Vert e_k\Vert _{\varvec{\textrm{A}}_h}\) and \(\Vert r_k\Vert \), see Remark 3. Altogether, the estimate (46) is equivalent to requiring that \(\hat{\delta }_k\) is bounded by some constant as k increases, and this is indeed demonstrated by Table 2 and Fig. 2.
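A quantity of this kind can be computed from a recorded residual history as sketched below. The sketch assumes that \(\hat{\delta }_k\) denotes \(\left( \Vert r_k\Vert /\Vert r_0\Vert \right) ^{1/k} k^{\alpha }\), which is consistent with the boundedness requirement stated above but is an assumption about the definition; the function name `delta_hat` and the choice of the residual norm are left to the user.

```python
import numpy as np


def delta_hat(res_norms, alpha):
    """Return (||r_k|| / ||r_0||)^(1/k) * k^alpha for k = 1, ..., len(res_norms) - 1.

    res_norms[0] is the norm of the initial residual r_0.
    """
    r = np.asarray(res_norms, dtype=float)
    k = np.arange(1, len(r))
    return (r[1:] / r[0]) ** (1.0 / k) * k ** alpha
```

If the residuals decay exactly like \(\Vert r_k\Vert =\Vert r_0\Vert (C k^{-\alpha })^k\), the function returns the constant C for every k, so a bounded output indicates a rate of at least \(k^{-\alpha }\).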

Finally, Table 3 and Fig. 3 show the values of \(\hat{\delta }_k\) for different mesh sizes while fixing the values of \(\beta \). The numbers demonstrate that the results of Theorem 1 are not sensitive to the size of the mesh.

Fig. 2 Graphical representation of Table 2

Fig. 3 Graphical representation of Table 3

5 Summary and conclusions

We have studied the mesh independent superlinear convergence of preconditioned Krylov methods for the iterative solution of finite element discretizations of second-order elliptic boundary value problems. We have proved mesh independent estimations for proper operator preconditioners for single equations and for systems, setting up a connection between the convergence rate and the Lebesgue exponent of the data. We have run numerical tests for equations with singular coefficients using different parameters. The tests have demonstrated the theoretical results.

Data availability

Not applicable

References

[1] Axelsson, O.: Iterative solution methods. Cambridge University Press (1994)

[2] Axelsson, O., Karátson, J.: Mesh independent superlinear PCG rates via compact-equivalent operators. SIAM J. Numer. Anal. 45(4), 1495–1516 (2007)

[3] Axelsson, O., Karátson, J.: Equivalent operator preconditioning for elliptic problems. Numer. Algorithms 50(3), 297–380 (2009)

[4] Axelsson, O., Karátson, J.: Superlinear convergence of the GMRES for PDE-constrained optimization problems. Numer. Funct. Anal. Optim. 39(9), 921–936 (2018)

[5] Axelsson, O., Karátson, J., Magoules, F.: Robust superlinear Krylov convergence for complex non-coercive compact-equivalent operator preconditioners. SIAM J. Numer. Anal. 61(2), 1057–1079 (2023)

[6] Brézis, H.: Functional analysis, Sobolev spaces and partial differential equations, vol. 2. Springer (2011)

[7] Chávez, G., Turkiyyah, G., Zampini, S., Ltaief, H., Keyes, D.: Accelerated cyclic reduction: a distributed-memory fast solver for structured linear systems. Parallel Comput. 74, 65–83 (2018)

[8] Edmunds, D.E., Triebel, H.: Entropy numbers and approximation numbers in function spaces. Proceedings of the London Mathematical Society 3(1), 137–152 (1989)

[9] Gohberg, I., Goldberg, S., Kaashoek, M.A.: Operator theory: advances and applications. Classes of Linear Operators pp. 49 (1992)

[10] Karátson, J.: Mesh independent superlinear convergence estimates of the conjugate gradient method for some equivalent self-adjoint operators. Appl. Math. 50(3), 277–290 (2005)

[11] Moret, I.: A note on the superlinear convergence of GMRES. SIAM J. Numer. Anal. 34(2), 513–516 (1997)

[12] Rossi, T., Toivanen, T.: A parallel fast direct solver for the discrete solution of separable elliptic equations. In: PPSC. Citeseer (1997)

[13] Saad, Y., Schultz, M.H.: GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comp. 7(3), 856–869 (1986)

[14] Vybíral, J.: Widths of embeddings in function spaces. J. Complex. 24(4), 545–570 (2008)

[15] Winther, R.: Some superlinear convergence results for the conjugate gradient method. SIAM J. Numer. Anal. 17(1), 14–17 (1980)

[16] Zlatev, Z.: Numerical treatment of large air pollution models. In: Computer treatment of large air pollution models, pp. 69–109. Springer (1995)

Funding

Open access funding provided by Eötvös Loránd University. This research has been supported by the Hungarian National Research, Development and Innovation Fund (NKFIH), under the funding scheme ELTE TKP 2021-NKTA-62 and the grants no. K137699 and SNN125119.

Author information

Contributions

S.C. and J.K. derived the theoretical results and wrote the main manuscript text. S.C. wrote the codes.

Ethics declarations

Ethical approval

Not applicable

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Castillo, S. ., Karátson, J. Rates of robust superlinear convergence of preconditioned Krylov methods for elliptic FEM problems. Numer Algor 96, 719–738 (2024). https://doi.org/10.1007/s11075-023-01663-1

DOI: https://doi.org/10.1007/s11075-023-01663-1